Abstract

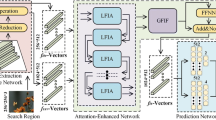

Siamese-like trackers have recently achieved strong performance. Most of them combine classification scores with quality assessment scores to estimate the target. However, classification scores correlate poorly with target localization accuracy, and quality scores produced by a simple strategy improve this correlation only to a limited extent, which degrades tracking ability. To alleviate this problem, we propose a simple Siamese target estimation method for object tracking that uses image contents (luminance, contrast, and structure). Specifically, we first use image contents involving the target to generate similarity scores with SSIM (Structural Similarity Index Measure) in a similarity branch, which aids the classification branch in target estimation by taking the whole target context into account. Second, we assign different weights to the classification branch and the similarity branch during inference to ease the low correlation, which gives more flexibility in target localization. Our tracker achieves competitive performance on three challenging benchmarks (OTB100, GOT-10k, and TrackingNet) at real-time speed, demonstrating the effectiveness of our method. In particular, our tracker outperforms the leading baseline by over 6.0% in SR\(_{0.75}\) score on the GOT-10k benchmark while still running at 67 FPS.
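The two ideas in the abstract, an SSIM-based similarity score and a weighted combination of that score with the classification score, can be illustrated with a minimal sketch. This is not the paper's implementation. It assumes a single-window (global) SSIM over grayscale patches rather than the locally windowed form, and the names `global_ssim` and `fuse_scores` and the weight `w_cls` are hypothetical. The constants `k1 = 0.01` and `k2 = 0.03` are the usual SSIM defaults.

```python
import numpy as np


def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized grayscale patches.

    Simplification (assumption): statistics are taken over the whole patch
    instead of over sliding local windows.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("patches must have the same shape")

    # Stabilizing constants for the luminance and contrast/structure terms.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()                # luminance statistics
    var_x, var_y = x.var(), y.var()                # contrast statistics
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()      # structure statistic

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def fuse_scores(cls_scores, sim_scores, w_cls=0.6):
    """Weighted sum of classification and similarity scores.

    w_cls is a hypothetical inference-time weight; the similarity branch
    receives the remaining 1 - w_cls.
    """
    cls_scores = np.asarray(cls_scores, dtype=np.float64)
    sim_scores = np.asarray(sim_scores, dtype=np.float64)
    return w_cls * cls_scores + (1.0 - w_cls) * sim_scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    template = rng.uniform(0, 255, size=(16, 16))
    # Two hypothetical candidates: a lightly perturbed copy and an unrelated patch.
    candidates = [template + rng.normal(0, 5, size=(16, 16)),
                  rng.uniform(0, 255, size=(16, 16))]
    sim = [global_ssim(template, c) for c in candidates]
    cls = [0.55, 0.60]  # made-up classification scores for illustration
    fused = fuse_scores(cls, sim)
    print("selected candidate:", int(np.argmax(fused)))
```

In this toy setting the similarity term can change which candidate is selected when the classification scores alone are close, which is the kind of flexibility the weighting is meant to provide.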

Data availability

All the training data sets and test data sets used in our experiment are public and can be downloaded from their official websites. The results of all published algorithms can also be obtained from the websites provided by their respective authors.

Ethics declarations

Conflicts of interest

We declare that we have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Wang, S., Chen, X. & Yan, J. Accurate target estimation with image contents for visual tracking. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-18869-7

DOI: https://doi.org/10.1007/s11042-024-18869-7