Abstract

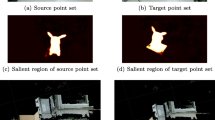

Point cloud registration is fundamental to generating a complete 3D scene from partial scenes captured by 3D scanners. The task succeeds only if descriptor values remain consistent under arbitrary rotations (that is, they are rotation-robust) and if salient descriptors can be selected from among discriminative and noisy regions. However, extracting rotation-invariant features and defining a criterion for selecting salient descriptors are both difficult. In this study, we present a new method for building salient and rotation-robust 3D local descriptors for point cloud registration tasks. For each interest point, we first extracted its neighboring points (a patch) and aligned the patch with its local reference frame. Next, we encoded the aligned patches using cylindrical kernels to obtain rotation-invariant descriptors. Then, we estimated dissimilarities between the descriptors to exclude those extracted from monotonous and repeating areas and to select discriminative ones, and we performed registration using only the salient descriptors. We evaluated our method on indoor and outdoor datasets (3DMatch and ETH). The performance of our method is comparable to that of state-of-the-art methods using discriminative rotation-invariant descriptors. By selecting salient descriptors, we improved our feature-matching recall scores by up to 3%.
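
The two ideas in the abstract, aligning a patch with a local reference frame and keeping only descriptors that are dissimilar from the rest, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the PCA-based frame with skew-based sign disambiguation, the mean distance to the `k` nearest descriptors as a dissimilarity score, and the names `align_patch`, `select_salient`, `k`, and `keep_ratio` are all assumptions chosen for illustration, and the cylindrical-kernel encoder is omitted entirely.

```python
import numpy as np


def align_patch(patch, center):
    """Express a patch in a PCA-based local reference frame (illustrative only)."""
    q = patch - center                        # coordinates relative to the interest point
    cov = q.T @ q / len(q)                    # 3x3 scatter matrix about the interest point
    _, vecs = np.linalg.eigh(cov)             # eigenvalues ascending, eigenvectors in columns
    x, y = vecs[:, 2].copy(), vecs[:, 1].copy()  # two dominant directions
    # Resolve each axis's sign ambiguity from the skew of the projections
    # (an assumed heuristic, not taken from the paper).
    if np.sum((q @ x) ** 3) < 0:
        x = -x
    if np.sum((q @ y) ** 3) < 0:
        y = -y
    z = np.cross(x, y)                        # third axis completes a right-handed frame
    return q @ np.stack([x, y, z], axis=1)    # (n, 3) coordinates in the local frame


def select_salient(descriptors, k=5, keep_ratio=0.5):
    """Return indices of descriptors that are far from their k nearest neighbours."""
    diff = descriptors[:, None, :] - descriptors[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)      # pairwise distances in descriptor space
    np.fill_diagonal(dist, np.inf)            # ignore each descriptor's distance to itself
    score = np.sort(dist, axis=1)[:, :k].mean(axis=1)  # assumed dissimilarity score
    n_keep = max(1, int(round(keep_ratio * len(descriptors))))
    return np.argsort(-score)[:n_keep]        # most dissimilar descriptors first
```

Because the frame is derived from the patch itself, rotating the whole patch rotates the frame with it, so the aligned coordinates stay the same as long as the eigenvalues are distinct and the skew terms are nonzero. Descriptors from repeating areas have close neighbours in descriptor space, receive a low score, and are dropped by `select_salient`.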

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Funding

This research was partly supported by the MSIT (Ministry of Science and ICT), Korea, under the Innovative Human Resource Development for Local Intellectualization support program (IITP-2024-RS-2022-00156360) supervised by the IITP (Institute for Information & communications Technology Planning & Evaluation). This work was also partly supported by the Material&parts technology development project (20024280, Development of a Focused Ultrasound Therapeutic Device for BBB Opening and Neuromodulation) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea).

Ethics declarations

Conflict of interest

None declared.

About this article

Cite this article

Jung, S., Shin, YG. & Chung, M. Point cloud registration with rotation-invariant and dissimilarity-based salient descriptor. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-18577-2

DOI: https://doi.org/10.1007/s11042-024-18577-2