Abstract

Electroencephalography (EEG)-based Brain-Computer Interface (BCI) systems for emotion recognition have the potential to enrich human–computer interaction with implicit information, since they enable an understanding of the cognitive and emotional activities of humans. These systems have therefore become an important research topic. This study presents trends and gaps on this topic through a systematic literature review of 216 published studies gathered from several databases, including ACM, IEEE Xplore, PubMed, Science Direct, and Web of Science, covering 2016 to 2020. The review gives an overview of all components of an EEG-based BCI system for emotion recognition, from the signal stimulus module, which includes the employed device, signal stimuli, and data processing modality, to the signal processing module, which includes signal acquisition, pre-processing, feature extraction, feature selection, classification algorithms, and performance evaluation, and it examines the available evidence in a clear, concise, and systematic way. The findings are intended to inform researchers about the research trends and gaps in this field and to guide their research directions.

1 Introduction

Emotions have a great impact on people's basic cognitive processes and are also highly influential in decision-making [1]. They play a crucial role in personal communication and many aspects of daily life [2]. If emotions, which are an indispensable part of human life, can be fully recognized and predicted by computers, progress in fields such as artificial intelligence, affective computing, and brain-computer interfaces will be considerably accelerated. Automatic human emotion recognition can therefore be regarded as a key technology for natural human–computer interaction (HCI) [3].

HCI is an interdisciplinary field of study that deals with the design, evaluation, and application of interactive technology [4] and mainly refers to the interaction and relationship between humans and computers. Human interaction with computers has developed considerably over time, and innovative interaction modalities have emerged. Brain-Computer Interface (BCI) systems are among the technologies that have recently emerged in HCI research. A BCI is a type of user interface that allows users to interact with computational systems by using only the electrical signals generated in the brain, without any body movement [5].

The term BCI was first introduced by Jacques Vidal [6, 7]. BCI technology was primarily intended to enable users, especially physically impaired ones, to interact with computational systems by using only electrical signals gathered directly from the brain rather than body movements [8]. However, owing to the release of low-cost consumer-grade devices that make BCI systems easier to develop, the technology is now employed in various domains and has begun to target healthy users as well [9]. Examples include applications in entertainment [10], games [11], education [12], and communication [13]. Owing to its interdisciplinary nature, BCI provides new research opportunities in HCI as well as in other fields such as neuroscience, medicine, signal processing, and artificial intelligence.

In recent years, studies have been conducted on conveying users' emotions to the computer as part of the interaction. Various methods are used to sense and recognize users’ emotional states. Non-physiological methods such as facial gestures or voice tones are not considered reliable, since they can be voluntarily manipulated [14]. On the other hand, thanks to advances in BCI systems and in Affective Computing [15], physiological methods such as electroencephalography (EEG)-based BCIs appear to be more efficient and reliable to a certain extent, because signals gathered from the nervous system are hard to control willfully [2].

Affective computing is a computing domain that relates to, arises from, or deliberately influences human emotion; it can be performed by observing and analyzing behavioral indicators such as physiological changes in a person's body, facial expressions, tone of voice, and word use [1]. BCIs convert the signals created by the electrical activity inside the brain into direct commands without relying on any peripheral nerves or muscles [16], so they are also used for affective computing. Together, these two fields give rise to affective BCI (aBCI) systems [17], which detect emotional state signals and then use them to support HCI. Thus, BCIs can also be used to sense the user’s emotional states in addition to their primary purpose of control.

For aBCI systems, electroencephalogram (EEG) data have been among the most widely used signals, since EEG is noninvasive and provides an intuitive method for measuring emotion [2]. EEG is often used in the diagnosis of neurological conditions such as epilepsy, brain tumors, and sleep disorders [18]. However, it can also be used to determine a person's mental and emotional states. Thus, in situations where people's responses need to be reliably evaluated, EEG-based BCI technology stands out as a tool for identifying emotional states because it can provide more accurate results [19, 20].

The main components for emotion recognition with EEG-based BCIs are identified as the signal stimulus module, which includes the employed device, signal stimuli, and data processing modality, and the signal processing module, which includes signal acquisition, pre-processing, feature extraction, feature selection, classification algorithms, and performance evaluation [21]. The literature contains many studies that focus on computational methods for individual components [22,23,24,25,26,27,28,29,30], as well as reviews [31,32,33,34,35] of emotion recognition. However, no study focuses on all components of EEG-based emotion recognition in the context of HCI and affective BCIs. Therefore, this study aims to conduct a comprehensive systematic review of studies on emotion recognition with EEG-based BCIs in order to determine the major trends regarding all the defined components. The systematic review method is adopted because it aims to identify all empirical evidence that meets predetermined inclusion criteria to answer a particular research question or hypothesis [36]. It also minimizes bias in the gathered findings, so that more reliable conclusions can be drawn and decisions can be made. Possible information gaps are thus revealed based on what has been done so far on this subject. In addition, insights into future developments are provided for researchers who would like to contribute to the field.

2 Background of the study

2.1 EEG

The human brain is the source of all emotion, and emotions arise as brain waves generated by electrical potentials based on spontaneous and rhythmic impulses of brain neurons [37]. Various methods can be used to detect this activity, such as magnetoencephalography (MEG), electrocorticography (ECoG), near-infrared spectroscopy (NIRS), functional magnetic resonance imaging (fMRI), electromyography (EMG), and electroencephalography (EEG). Among these techniques, EEG is one of the most accessible, portable, low-cost, and practical. In 1924, Hans Berger, a German psychiatrist, first succeeded in recording the electrical activity of the cerebral cortex with EEG and showed that it is possible to observe how the brain works [38].

EEG is a non-invasive method that measures the electrical potential changes in brain cells [39]. EEG does not record the activity of a single neuron; rather, it reflects the joint activity of millions of neurons extending along a few centimeters of cortical tissue [40]. Since the captured signals are very weak and of poor quality, several electrodes are needed to provide a higher spatial resolution and a more precise system [5]. Neuronal activity is measured and recorded by electrodes placed on pre-determined reference points on the scalp. There are various standards for the placement of electrodes for EEG measurements. The most widely used is the international 10/20 system, which is standardized by the International Federation of Societies for Electroencephalography and Clinical Neurophysiology [41]. As can be seen in Fig. 1, in this placement system, letters and numbers are used to identify the electrodes. Each letter indicates the relevant lobe of the brain, and the number indicates its position on the cerebral hemisphere. F is used for Frontal, T for Temporal, C for Central (although there is no central lobe, the letter C is used for identification), P for Parietal, and O for Occipital lobes. Even numbers indicate electrode positions in the right hemisphere, and odd numbers indicate positions in the left hemisphere. Z is used to denote the electrodes placed on the vertical midline.

The international 10/20 system [41]
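The naming rule described above can be sketched as a small lookup. This is only an illustration: the function name `describe_electrode` is hypothetical, and the sketch covers just the five region letters listed in the text, so labels from extended montages are rejected rather than interpreted.

```python
# Region letters as listed in the text. Labels outside this set (for example
# sites used in extended montages) are deliberately not handled in this sketch.
REGIONS = {
    "F": "frontal",
    "T": "temporal",
    "C": "central",
    "P": "parietal",
    "O": "occipital",
}


def describe_electrode(label: str) -> tuple[str, str]:
    """Return (region, side) for a 10/20 label such as 'F3', 'O2', or 'Cz'."""
    if len(label) < 2 or label[0].upper() not in REGIONS:
        raise ValueError(f"unsupported electrode label: {label!r}")
    region = REGIONS[label[0].upper()]
    suffix = label[1:]
    if suffix.lower() == "z":
        side = "midline"
    elif suffix.isdigit():
        # Odd numbers lie over the left hemisphere, even numbers over the right.
        side = "left" if int(suffix) % 2 == 1 else "right"
    else:
        raise ValueError(f"unsupported position suffix in {label!r}")
    return region, side


for name in ("F3", "T4", "Cz", "O2"):
    print(name, describe_electrode(name))
```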

EEG records signals from the brain through electrodes placed on the scalp. It thus creates an image of electrical activity characterized by waves of varying frequency, amplitude, and shape [42]. Brain waves are classified into five bands according to their frequencies: delta, theta, alpha, beta, and gamma [43]. These frequency bands are associated with different mental states, and the definition of each band varies among studies [5, 35, 37, 44]. The associations and potential localization on the brain areas of these bands, as determined in various studies, are summarized in Table 1.
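As a rough illustration of how a frequency is assigned to a band, the sketch below uses one commonly quoted set of boundaries. These limits are an assumption made for the example, not values taken from this review, and, as noted above, the definitions differ from study to study; `BANDS` and `band_of` are hypothetical names.

```python
# One commonly quoted set of band limits in Hz (lower bound inclusive, upper
# bound exclusive). These numbers are an assumption for illustration only.
BANDS = [
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 8.0),
    ("alpha", 8.0, 13.0),
    ("beta", 13.0, 30.0),
    ("gamma", 30.0, 100.0),
]


def band_of(frequency_hz):
    """Return the name of the band containing frequency_hz, or None."""
    for name, low, high in BANDS:
        if low <= frequency_hz < high:
            return name
    return None


for f in (2, 6, 10, 20, 40):
    print(f, "Hz ->", band_of(f))
```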

EEG has several benefits compared to other brain imaging techniques or physiological recordings (such as GSR) [44]. It offers good temporal resolution and acceptable spatial resolution, which makes it well suited to studying the precise timing of the cognitive and emotional processing underlying behavior. Its non-invasiveness and portability are further advantages that have made it the most frequently used physiological method for emotion recognition. Its drawbacks are that the data cannot be collected easily and that the data obtained are often noisy.

2.2 Emotion and emotion models

Emotion is a phenomenon rooted in human cognition that is difficult to define. It is considered a general term for a series of subjective cognitive experiences, but there is no scientific agreement on its definition. William James (1884, cited in [14]) defines it as “the bodily changes directly follow the perception of the exciting fact, and that our feelings of the changes as they occur”, while Ekman et al. [45] define it as a “human’s state of mind and feelings, including psychological, physical and social aspects”. Researchers more commonly use the term affective state for emotional states [1].

Various models have been proposed in the literature to classify and represent emotions and, as with the definition of emotion, there is no consensus on a general model [42]. The models fall into two categories: categorical models, in which a discrete definition is determined for each emotion, and dimensional models, in which emotions are classified along dimensional scales [46, 47]. One model from each category stands out in current research [14]. The discrete emotional model proposed by Ekman [45] describes discrete emotions as universal, in other words as commonly accepted across cultures, and identifies six basic emotions: anger, fear, sadness, joy, surprise, and disgust. The dimensional model, on the other hand, defines emotions on two- or three-dimensional planes. One widely known dimensional model is Russell’s circumplex model [48], in which the range from pleasant to unpleasant feelings is represented on a valence scale, while the range from active to inactive feelings is represented on an arousal scale. Emotions are then interpreted according to their values on each scale. This type of dimensional model enables researchers to focus on emotion recognition tasks without being bound to a particular emotion category [42].
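The two-dimensional reading of the circumplex model can be illustrated by splitting the valence-arousal plane into four quadrants. The sketch assumes ratings on a 1 to 9 scale with a midpoint of 5, which is an illustrative choice rather than something specified in the text; `circumplex_quadrant` is a hypothetical helper.

```python
def circumplex_quadrant(valence, arousal, midpoint=5.0):
    """Place a (valence, arousal) rating in one of four quadrants.

    The 1-9 rating scale and the midpoint of 5 are assumptions made for this
    sketch; studies may use other scales or thresholds.
    """
    valence_level = "high" if valence >= midpoint else "low"
    arousal_level = "high" if arousal >= midpoint else "low"
    return f"{valence_level} valence / {arousal_level} arousal"


print(circumplex_quadrant(8, 7))  # pleasant and active
print(circumplex_quadrant(2, 8))  # unpleasant and active
print(circumplex_quadrant(7, 2))  # pleasant and inactive
```

Thresholding at the midpoint turns the continuous scales into a four-class labelling problem, which is one simple way a dimensional model can be used as a classification target.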

2.3 Emotion recognition with EEG-based brain-computer interfaces

BCIs help to understand the cognitive and emotional activities of humans and therefore have the potential to enrich human–computer interaction with implicit information [49, 50]. The authors of [5] relate the classification criteria of a BCI system to several factors: the nature of the employed device (invasive or noninvasive), the nature of the data processing modality (synchronous or asynchronous), the nature of the signal stimuli (exogenous or endogenous) [51], and how the control signal is applied (active, passive, or reactive). EEG has been the primary non-invasive technique for developing BCI systems in current research [19, 20].

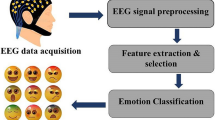

As emotion is considered a mental state, it can be recognized by a BCI system that uses specific techniques to classify emotional states based on EEG data [5]. The basic procedure of an EEG-based BCI system for emotion recognition can be seen in Fig. 2, adapted from previous studies [17, 35, 52,53,54]. The procedure consists of the signal stimulus module, which includes the signal acquisition step, and the signal processing module, which includes the computational methods required to perform an accurate emotion recognition process [55]. The data acquisition step involves selecting the signal stimuli and recording EEG signals to create an EEG dataset. Signal processing involves the stages of preprocessing, feature extraction, feature selection, classification, regression, and performance evaluation.

Emotion recognition procedure for EEG-based BCI systems (adapted from [35])

2.3.1 Data acquisition

To conduct an EEG-based emotion recognition study, emotions must first be induced in the participants, and the resulting EEG signals must then be detected and recorded with an EEG device. However, many studies instead use pre-defined EEG datasets published in the literature, which were themselves created by the same signal acquisition procedure.

Powerful stimuli are required for emotion elicitation. There are two general approaches to inducing emotions: subject-induced and event-induced [56]. The subject-induced approach requires participants to recall a previous feeling, whereas the event-induced approach uses modalities such as audio or visual stimuli to evoke specific emotions [42]. Audio and visual stimuli are sometimes used in combination. In many studies, researchers use their own stimulus set, for instance by selecting movie clips [57] or by giving cognitive tasks to the participants [58]. However, some publicly available standard databases provide validated, ready-to-use stimulus sets for EEG data collection, such as the International Affective Picture System (IAPS) [59], the International Affective Digitized Sounds (IADS) [60], and the Geneva Affective Picture Database (GAPED) [61].

In the signal acquisition step, participants wear EEG devices that gather and record their brain signals. Although EEG devices were originally intended for medical purposes, many low-cost and easy-to-use commercial wireless headsets have emerged recently [5]. Some commonly used EEG devices and their features are presented in Table 2.

Several publicly available datasets have been developed by researchers for use in EEG-based emotion recognition studies. With these datasets, researchers can carry out their studies without needing a laboratory, participants, or special recording systems. These datasets are academically accepted as reliable, and they are freely accessible with the permission of the publisher. Some commonly used datasets and their features are summarized in Table 3. As can be seen, in many of the datasets video clips were used for the induction of emotions, the number of participants ranged from 5 to 32, various EEG headsets with different numbers of channels were used to record EEG data, and some other signal types were also included. The target emotions recognized with these datasets are generally based on dimensional models.

2.3.2 Signal processing

An EEG-based BCI system for emotion recognition continues by processing the signals gathered during the signal acquisition step. Signal processing is conducted in several sub-stages: pre-processing, feature extraction, feature selection, classification, and performance evaluation.

EEG data are generally contaminated by muscle activity, eye blinks, or movements. Pre-processing is therefore conducted as an initial step to clean the EEG data of these internal and external artifacts and interference. Three filter types are commonly used for EEG data: low-frequency (high-pass), high-frequency (low-pass), and notch filters [35]. The most widely used pre-processing methods for EEG signals are Principal Component Analysis (PCA), Independent Component Analysis (ICA), and Common Spatial Patterns (CSP) [35], while some regression methods are used especially for muscle artifact removal [75]. However, care must be taken when applying these filters, because the components they remove may also carry emotional state information that could improve emotion recognition algorithms [35].
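To make the notch filter concrete, the following sketch implements a standard second-order (biquad) notch in plain Python and applies it to two synthetic sinusoids. The 250 Hz sampling rate, the 50 Hz interference frequency, the quality factor, and all function names are assumptions chosen for illustration; practical pipelines normally rely on a signal processing library rather than a hand-written filter.

```python
import math


def notch_coefficients(notch_hz, sample_rate_hz, q=30.0):
    """Biquad notch coefficients, normalised so that the leading a0 equals 1."""
    w0 = 2.0 * math.pi * notch_hz / sample_rate_hz
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0)
    a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a


def apply_biquad(samples, b, a):
    """y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def rms(values):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(v * v for v in values) / len(values))


FS = 250.0  # hypothetical sampling rate in Hz
t = [n / FS for n in range(1000)]
mains = [math.sin(2 * math.pi * 50.0 * ti) for ti in t]       # interference
alpha_wave = [math.sin(2 * math.pi * 10.0 * ti) for ti in t]  # EEG-like rhythm

b, a = notch_coefficients(50.0, FS)
mains_out = apply_biquad(mains, b, a)
alpha_out = apply_biquad(alpha_wave, b, a)

# Compare RMS over the last second, after the filter transient has died out.
print("50 Hz after notch:", rms(mains_out[-250:]))
print("10 Hz after notch:", rms(alpha_out[-250:]))
```

The 50 Hz component is suppressed while the 10 Hz component passes almost unchanged, which is the behaviour wanted from a notch filter: remove a narrow interference band while leaving the rest of the spectrum intact.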

The data cleaned in the pre-processing stage are then passed to feature extraction, which is one of the most crucial steps in an EEG-based BCI system for emotion recognition [32]. Features can be computed in the time, frequency, time–frequency, or spatial domain, and the most used algorithms are listed as WT (Wavelet Transform), PCA, Hjorth, ICA, and statistical measures [35]. No single feature extraction technique is optimal for all applications, and existing signals may not be adequate, so introducing more features from different analysis domains is an accepted strategy [76]. In addition, it is not possible to determine precisely which feature represents which emotion [77]. Power features from different frequency bands, such as differential entropy, fractal dimension values, and power spectral density, are accepted as the features most closely related to emotional states.
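Two of the features named above can be written down in a few lines. The sketch below computes the Hjorth parameters (activity, mobility, complexity) and the differential entropy under a Gaussian assumption, in which case it reduces to 0.5 ln(2πeσ²). The function names and the synthetic 10 Hz test signal are hypothetical, and in practice differential entropy would typically be computed on a band-filtered segment.

```python
import math
from statistics import pvariance


def first_difference(signal):
    """Discrete approximation of the first derivative."""
    return [b - a for a, b in zip(signal, signal[1:])]


def hjorth_parameters(signal):
    """Return (activity, mobility, complexity) of a one-dimensional signal."""
    d1 = first_difference(signal)
    d2 = first_difference(d1)
    activity = pvariance(signal)
    mobility = math.sqrt(pvariance(d1) / activity)
    complexity = math.sqrt(pvariance(d2) / pvariance(d1)) / mobility
    return activity, mobility, complexity


def differential_entropy(signal):
    """Differential entropy in nats, assuming Gaussian-distributed samples."""
    return 0.5 * math.log(2.0 * math.pi * math.e * pvariance(signal))


# Synthetic 10 Hz sinusoid sampled at a hypothetical 250 Hz for four seconds.
FS = 250.0
sine = [math.sin(2 * math.pi * 10.0 * n / FS) for n in range(1000)]

activity, mobility, complexity = hjorth_parameters(sine)
print(activity, mobility, complexity)
print(differential_entropy(sine))
```

For a pure sinusoid the complexity is close to 1, since the parameter measures how far a signal's shape deviates from a sine wave.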

Finally, a machine learning classifier is trained on the EEG data and used to interpret it. The features extracted in the feature extraction process are fed to the classifier. Several machine learning algorithms have been used as emotion classifiers. The most frequently implemented are SVM (Support Vector Machine) and LDA (Linear Discriminant Analysis) from the linear classifier category, kNN (k-Nearest Neighbors) from the nearest neighbor category, CNN (Convolutional Neural Network) from the neural network category, and AdaBoost from the ensemble classifier category [35, 75, 76]. In addition, deep learning methods have begun to be implemented in EEG-based BCI systems for emotion recognition [35].
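Of the classifiers listed, kNN is the simplest to show in full. The sketch below is a minimal pure-Python version that uses Euclidean distance and majority voting; the two-dimensional feature vectors and labels are invented purely for illustration and do not come from any EEG dataset.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Label a query vector by majority vote among its k nearest neighbours."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Synthetic feature vectors and labels, invented for this example only.
X = [(0.9, 0.2), (1.0, 0.3), (0.8, 0.1), (0.2, 0.9), (0.1, 1.0), (0.3, 0.8)]
y = ["low arousal", "low arousal", "low arousal",
     "high arousal", "high arousal", "high arousal"]

print(knn_predict(X, y, (0.85, 0.25)))
print(knn_predict(X, y, (0.15, 0.95)))
```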

The results of the classification should be evaluated with performance measures so that researchers can understand and compare their studies. The most used performance evaluation measures are the confusion matrix, accuracy, error rate, and other measures derived from the confusion matrix, such as recall, specificity, precision, Area Under the Curve (AUC), and F-measure [35].
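Most of the measures listed above follow directly from the four cells of a binary confusion matrix. The sketch below shows the standard definitions; the counts are made up for the example, `binary_metrics` is a hypothetical helper, and degenerate cases with a zero denominator are not handled. AUC is omitted because it requires ranked scores rather than a single confusion matrix.

```python
def binary_metrics(tp, fp, fn, tn):
    """Derive common measures from the cells of a binary confusion matrix."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "error_rate": (fp + fn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f_measure": 2 * precision * recall / (precision + recall),
    }


# Made-up counts for illustration only.
metrics = binary_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```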

3 Related works

Several previous review studies in the literature focus on EEG-based emotion recognition. These studies can be grouped into two main categories, namely narrative reviews and systematic literature reviews (SLRs) [78]. Although the two types of review share the general aim of summarizing the results of previous literature on a topic, they also have clear differences. For instance, narrative reviews are broad, whereas SLRs are more specific and follow a defined study selection mechanism regarding the source, selection, and evaluation of the included studies. SLRs are therefore evidence-based [78]. The review studies published between 2016 and 2021 are summarized in Table 4 with regard to the type of review, the source databases used, the number of studies included, the timespan of the primary studies included, and the focus of the review.

A study is categorized as an SLR only if the steps of an SLR process similar to the one defined by Kitchenham [79] are reported clearly in the study; otherwise, it is categorized as a narrative review. As can be seen from Table 4, many of the studies were conducted as narrative reviews, so the number of primary studies, the source databases, and the timespan of the studies are ambiguous. The number of primary studies reviewed is generally low or not mentioned. Many of the reviews focus on the signal processing aspects of EEG-based emotion recognition, while a few focus on specific topics: specific emotional states such as human stress [80] or mental states [81]; specific computational methods such as entropy-based methods [14] or transfer learning [82]; and applications of BCI such as BCI-based robots [83]. The SLR studies gathered their articles from the databases of Springer, MDPI (Multidisciplinary Digital Publishing Institute), ScienceDirect, Google Scholar, IEEE Xplore, or WOS (Web of Science).

This study differs from all the narrative reviews in the method that it implements. Although the scope of the three SLRs reported in Table 4 [31, 32, 35] is somewhat similar to that of this study, this study distinguishes itself from them by investigating EEG-based BCI systems for emotion recognition from a broader and more detailed affective BCI perspective. For instance, Torres et al. [35] reported the related issues based on only 31 articles selected from the pool of articles gathered by an SLR process, so their results are limited. The SLRs conducted by Hamada et al. [32] and Al-Nafjan et al. [31], on the other hand, covered articles published before 2016. In addition, the study by Hamada et al. [32] also focused on the effect of music on emotion recognition.

4 Method

Within the scope of the study, a systematic literature review is conducted to examine the publications on EEG-based BCI systems for emotion recognition. The steps of the evidence-based software engineering approach proposed by Kitchenham et al. [90] are followed to conduct this systematic review. Systematic literature reviews are distinguished from other types of review in that they have more specific research questions, their search process is defined by the research questions, their search strategy requirements are extremely stringent so that all relevant studies can be found, and they must include a quality evaluation to provide results based on the best-quality evidence [79].

The research steps proposed by Kitchenham [79] are adapted to the requirements of the study. The study is conducted in three phases: planning, investigation, and reporting. The study phases can be seen in Fig. 3. In the planning phase, the research questions are determined first, and the article gathering procedure is then determined by defining the boundaries of the study. The search keywords, the article inclusion and exclusion criteria, the databases to be searched, and the year span for article inclusion are decided in order to set the boundaries of the review. Finally, a systematic review form is prepared for use by all reviewers who take part in the study, so that consolidated findings can be obtained. In the investigation phase, the search is conducted, the studies are gathered, the quality evaluations are performed, the pilot review is conducted, and all the articles are reviewed. In the reporting phase, all the findings are gathered and reported.

4.1 Research questions

The research questions of this study can be grouped under the main goal of determining the recent trends in EEG-based BCI systems for emotion recognition. The detailed research questions and sub-questions are as follows.

- What are the orientation characteristics of the studies?
- What are the data acquisition details?
  - What are the number and characteristics of the participants in the studies?
  - Which emotion models are used?
  - What is used for emotion elicitation?
  - What benchmarking EEG emotional databases are used?
  - Which emotion types are recognized?
  - What physiological records are used?
  - What are the EEG devices used?
  - What are the characteristics of EEG devices used? (Electrode placement, number of electrodes/channels, etc.)
  - Which recognition model is used?
- What computational methods are used for signal preprocessing?
  - What are the filtering methods implemented for pre-processing?
  - What type of EEG correlates of emotion (signals) are used?
  - What kind of normalization is used?
- Which methods are used for feature extraction and selection?
- Which methods are used for classification and regression?
- What type of performance evaluation is conducted?

4.2 Systematic review form

In the planning phase of the study, a review protocol is created based on the research questions. The goal of this protocol is to ease the review process for the researchers who participate in this study, so that they can rapidly reach consolidated findings during the analysis of the results. The review form consists of seven sections. The sub-items in the form are adapted from previous review studies and are described in detail as follows.

1. Study selection: This section records information about the articles, including their title, publication type (conference or journal), publication year, publication venue (journal or conference name), and researchers’ country.

2. Study orientation: The articles are classified according to their methods and types of application domains, as adapted from the study by Al-Nafjan et al. [31]. The methods are defined as a review or an experimental study. Since review articles are not included in the review process, only the application domains of the experimental studies are examined. The domains are grouped as medical and non-medical, and the types of applications are then examined under these domains. Application types are defined as entertainment, marketing, education, assistance, and games in the non-medical domain, and as assessment, assistance, diagnosis, and monitoring in the medical domain.

3. Data acquisition: This section covers three groups of items. Participants’ characteristics: the number and characteristics of the participants from whom EEG data are gathered in the studies are examined. Emotion-related characteristics: these are determined as the emotion models [31, 34, 81], emotion elicitation methods [31, 35], EEG-based emotion databases [31, 35, 81], and emotion types. BCI system components: these are defined as the physiological records, EEG devices, number of electrodes and electrode placements, channels used, and recognition model.

4. Signal preprocessing: This section includes the examination of all preprocessing steps, as in most systematic or narrative reviews. It covers steps such as filtering and normalization.

5. Feature extraction and selection: This section includes the details of the feature extraction and selection methods implemented.

6. Classification and regression: This section includes the details of the classification and regression methods implemented.

7. Performance evaluation: This section includes the details of the performance evaluation methods implemented.

4.3 Boundaries of the study

Some criteria are also defined in line with the research questions to limit the number of articles to be examined in the systematic review. These criteria are related to the time span of the articles, the search keywords regarding research questions, the source databases to be queried for the related articles, inclusion and exclusion criteria, and the quality evaluation procedure for the articles.

Several previous review studies with similar research questions cover the literature up to 2016. It is therefore decided that this review covers the years 2016 to 2020, so that it can also provide follow-up results to those studies [31, 32].

Several search keywords are determined in line with the goal of the study: “Emotion Detection”, “Emotion Recognition”, “EEG”, “Electroencephalography”, “Brain-Computer Interface”, “BCI”, “Affective”, “Emotion”, and “Affective Computing”. The source databases to be queried are then determined, namely the ACM, IEEE Xplore, PubMed, Science Direct, and Web of Science digital libraries, as these databases provide cross-disciplinary research papers and articles from highly reliable journals and conferences [32]. The keywords are combined with Boolean operators in alternative ways in each database so that no related study is missed.
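One way such Boolean combinations could be assembled is sketched below. The exact query strings used in the review are not reported, so the grouping of keywords into signal-related and emotion-related terms is a hypothetical illustration, as is the `build_query` helper; each database also has its own query syntax.

```python
def build_query(*groups):
    """Join terms with OR inside each group and join the groups with AND."""
    clauses = []
    for terms in groups:
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


# Hypothetical grouping of some of the listed keywords.
signal_terms = ["EEG", "Electroencephalography"]
emotion_terms = ["Emotion Recognition", "Emotion Detection", "Affective Computing"]

print(build_query(signal_terms, emotion_terms))
```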

After the queries are run in each database, the studies are downloaded and then filtered and examined. During this process, each study is manually examined in four rounds to decide whether it should be included in the review. In the first round, duplicate articles and articles whose full text could not be accessed are excluded. In the second round, out-of-scope articles and review articles are filtered out by reading the title, abstract, and conclusion sections. In the third round, the articles are subjected to quality evaluation. The quality evaluation is conducted according to the checklist items defined by Ekman [91], which can be seen in Table 5. In the fourth round, the articles are evaluated again against the following inclusion criteria: (1) the article is written in English and reports a study with human subjects; (2) it is a conference or journal article; (3) it focuses on EEG-based emotion recognition; and (4) it is assessed with a quality score above 10. An article that fails any of these criteria is excluded. The quality assessment shows that the quality degree of every article is either A or B, since all the articles are assessed with a quality score above 10, as can be seen in Fig. 4. Therefore, no articles are excluded in the quality evaluation step. Fig. 5 presents the number of articles obtained at the end of each step.
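The four inclusion criteria can be read as a simple predicate over article records. The sketch below is a hypothetical encoding: the `Article` fields and the example records are invented for illustration, and only the criteria themselves, including the quality threshold of 10, come from the text.

```python
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical record layout; the review form itself is not reproduced here.
    title: str
    language: str
    human_subjects: bool
    venue_type: str          # for example "journal" or "conference"
    eeg_emotion_focus: bool
    quality_score: float


def meets_inclusion_criteria(article, min_quality=10):
    """Apply inclusion criteria (1) to (4) to a single article record."""
    return (
        article.language == "English"
        and article.human_subjects
        and article.venue_type in {"journal", "conference"}
        and article.eeg_emotion_focus
        and article.quality_score > min_quality
    )


# Invented example records.
candidates = [
    Article("Study A", "English", True, "journal", True, 12),
    Article("Study B", "English", True, "book chapter", True, 14),
    Article("Study C", "English", True, "conference", True, 9),
]
included = [a for a in candidates if meets_inclusion_criteria(a)]
print([a.title for a in included])
```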

A total of 216 conference and journal articles published between 2016 and 2020 are included and reviewed in the study, based on the study selection process presented in Fig. 5. Table 6 shows the distribution of the articles by year and publication type: there are 84 conference papers and 132 journal articles. The journals and conferences that most frequently published the reviewed studies are reported in Tables 7 and 8. In general, these studies appear in journals on neuroscience, signal processing, and biomedical informatics and in conferences on neuroscience and human–computer interaction. In addition, Table 9 presents the five countries that have published the most articles, with China ranking first.

Once all the articles to be reviewed are determined, five articles are randomly selected and reviewed as a pilot review. All the authors review these articles individually by using the review protocol and then come together to consolidate their findings. The inadequacies of the review protocol are revealed and corrected during this pilot study. Next, the articles are distributed among the authors for review, and each article is reviewed by at least two authors to reduce bias. During the review, the authors assign each article to at least one category in each section of the review protocol. However, an article can be assigned to more than one category where applicable. Therefore, the total number of articles mapped to each category shows some variance.

5 Results

Within the scope of this study, a systematic literature review of the studies published in scientific databases about EEG-based aBCI systems for emotion recognition is carried out. Research studies published in the 5-year period between 2016 and 2020 are reviewed according to the research questions defined in Sect. 4.1. The findings based on 216 articles are reported under six sub-headings: study orientation, data acquisition, preprocessing, feature extraction and selection, classification and regression, and performance evaluation. The data acquisition sub-heading reports the detailed characteristics of the participants and the concept of emotion, including emotion models, emotion elicitation methods, EEG datasets, and types of emotions, as well as information about the BCI devices used in the studies. The preprocessing sub-heading reports the types of EEG signals and the filtering and normalization methods. The remaining sub-headings report the computational methods used for feature extraction, feature selection, classification, and regression, as well as the performance evaluation metrics.

5.1 Study orientation

At the beginning of the study, the articles are classified according to their methods, and only the experimental studies are included in the review. Next, they are categorized according to their application domains [31] as medical (N = 10, 5%) and non-medical (N = 206, 95%), as can be seen in Fig. 6. The medical domain articles are then grouped as assistance (N = 5, 50%), monitoring (N = 3, 30%), and diagnosis (N = 2, 20%). The non-medical domain articles are categorized as new method proposal (N = 148, 68%), monitoring (N = 32, 15%), assistance (N = 11, 5%), entertainment (N = 4, 2%), and other (N = 19, 9%). The details of these distributions can be seen in Figs. 7 and 8.

5.2 Data acquisition

5.2.1 Participants’ characteristics

The studies are reviewed regarding the characteristics of the participants. Participant characteristics vary among the studies in terms of number, age, gender, and health status. Figure 9 shows the distribution of the number of participants. In the majority of the experimental studies, 32 or fewer participants take part. The numbers of participants in the EEG databases listed in Table 3 are similar. On the other hand, some studies (N = 13, 9%) are carried out with more than 32 participants to assure statistical significance. 12% of the studies (N = 18) are conducted with 21 to 32 participants, while 31% (N = 46) are conducted with 11 to 20 participants. A further 17% of the studies (N = 25) are conducted with 10 or fewer participants, and 31% (N = 47) do not provide any information about the participants who took part in the experiments. It is worth noting that the participants of studies that used existing EEG datasets are not included in this figure.

The stages of life are defined as baby (0–2 years), young adults (3–39 years), middle adults (40–59 years) and old (60–99 years) [92]. In this study, they are recategorized as baby (0–2 years), children (2–17 years), adults (18–60 years) and old (60+ years). Figure 10 presents the age distribution of the participants that took part in the studies. Half of the studies (N = 109, 50%) are carried out with adult participants. Few studies (N = 6, 3%) include children as participants. The participant age group is not specified in 47% of the studies (N = 101).

Figure 11 shows the gender distribution. 40% of the studies (N = 86) are carried out with both female and male participants. There are also single-gender studies: 4 articles (2%) include only female participants, while 5 articles (2%) include only male participants. More than half of the studies (N = 121, 56%) do not specify any gender information.

Figure 12 shows the distribution of the health status of the participants. The health status of the participants is not specified in half of the studies (N = 79, 50%). Almost the other half of the studies (N = 69, 43%) are conducted with healthy participants. Only 8 studies (5%) are conducted with unhealthy participants, while 3 studies (2%) are conducted with both healthy and unhealthy participants.

5.2.2 Emotion-related characteristics

In this section, emotion models, emotion elicitation methods, EEG-based emotion databases, and triggered emotions commonly used in the studies are reported.

Emotion models

Dimensional and discrete models are the commonly used approaches to modeling emotions. The dimensional emotion model is used in about half of the articles (N = 110, 51%). On the other hand, 15% of the articles (N = 33) use the discrete emotion model, and the rest (N = 73, 34%) do not explicitly mention any emotion model (NM), as can be seen in Fig. 13. Figure 14 shows the distribution of the types of discrete model. Of the articles that use a discrete model, 39% (N = 13) implement Ekman’s six basic emotions model, while the rest (N = 20, 61%) use other discrete models such as Plutchik's wheel of emotions. Finally, different types of dimensional models are also used, as seen in Fig. 15. The two-dimensional valence and arousal model is used in most of these articles (N = 95, 86%). Some articles (N = 11, 10%) use the three-dimensional valence, arousal, and dominance model. Other dimensional models (e.g. Waku-Waku, a three-axial model of emotion) are used in 4% of the articles (N = 4).

Emotion elicitation methods

Many emotion elicitation methods have been developed to elicit target emotions. These methods can be divided into two categories, passive and active [93]. Passive methods use stimuli such as images, film clips, and music, while active methods use social or dyadic interactions with a realistic scenario in a naturalistic context [93]. Some studies use a single stimulus to trigger emotion, while others use a social scenario rather than a single stimulus. Some researchers also report that using more than one stimulus is more effective [31]. Figure 16 shows the numbers and percentages of the emotion elicitation methods used in the articles. Audio-visual elicitation using short film clips is the most used method (N = 67, 31%), while 12% of the studies (N = 26) use audio-based elicitation with music. Visual-based elicitation using images (N = 22, 10%) is used less frequently. Tasks prepared by the researchers (e.g. DX-Ball game, trading in a competitive stock market) are also used as stimuli in a few papers (N = 17, 8%). Some articles (N = 6, 3%) use other emotion elicitation methods such as game play, imagination techniques/memory recall, movie clips and binaural beats. A very small number of papers (N = 5, 3%) combine multiple techniques. Some studies use existing EEG datasets directly; these studies, together with the studies that do not mention any specific method, are categorized as not mentioned (NM) (N = 71, 33%).

Publicly available standard databases are also used to elicit emotions. The distribution of these databases among the reviewed studies can be seen in Fig. 17. Some articles (N = 19, 10%) use the International Affective Picture System (IAPS) [59] dataset containing pictures, while some (N = 4, 2%) use the International Affective Digital Sound library (IADS) [60] dataset containing sounds. A few articles (N = 3, 2%) use the Geneva Affective Picture Database (GAPED) [61] as a stimulus. On the other hand, in almost a quarter of the articles (N = 41, 23%), the researchers create their own stimuli datasets. Finally, 6% of the articles (N = 10) use other datasets, and more than half of the articles (N = 103, 57%) do not explicitly specify the type of stimulus used.

EEG datasets

There are various publicly available datasets developed by researchers for use in EEG-based emotion recognition studies. Figure 18 shows the distribution of their usage in the articles. While some studies use only one dataset, others use multiple datasets. DEAP is used in more than half of the articles (N = 68, 57%), followed by the SEED (N = 27, 23%), MAHNOB-HCI (N = 7, 6%) and DREAMER (N = 3, 2%) databases. In addition, 12% of the articles (N = 15) use other databases such as eNTERFACE, SEED-VI or self-generated datasets.

Emotions

Figure 19 presents the distribution of target emotions determined in the studies. The valence and arousal model is widely used in the reviewed studies. Therefore, valence (N = 72), arousal (N = 69), negative (N = 54), positive (N = 53) and neutral (N = 49) feelings are determined as target emotions in many of the articles. Dominance (N = 11) is elicited in the few articles that use the valence, arousal, and dominance model. Some articles also report discrete emotions such as sadness (N = 52), happiness (N = 49), fear (N = 30), and anger (N = 26). In addition, relax, calm, excited, surprise, disgust, joy, bored, and liking feelings are each reported in fewer than 14 studies.

5.2.3 BCI system components

Physiological records

Emotion recognition can be performed by using multimodal physiological records in addition to EEG signals. As can be seen in Fig. 20, EEG signals are used alone in many of the reviewed studies (N = 151, 70%). The other physiological records most frequently used with EEG are Electrooculogram (EOG) (N = 12, 6%), eye-tracking (N = 11, 5%), Electromyogram (EMG) (N = 9, 4%), Functional Magnetic Resonance Imaging (fMRI) (N = 7, 3%), and Electrocorticography (ECoG) (N = 5, 2%). The less used records are facial expression, skin temperature, Blood Volume Pulse (BVP), Electrodermal Activity, Functional Near-Infrared Spectroscopy (fNIRS), and Galvanic Skin Response (GSR), each of which is used in fewer than 4 studies.

BCI devices

Various EEG-based BCI devices are used in the studies, as can be seen in Fig. 21. The Emotiv EPOC EEG headset (N = 33, 15%) is the most widely used device. A few articles use the NeuroSky MindWave (N = 11, 5%), Biosemi Active-electrodes (N = 7, 3%), the Muse headband (N = 3, 2%), and the ESI NeuroScan system (N = 3, 1%). Other BCI devices (Ultracortex Mark IV EEG headset, g.Nautilus, Electro-Cap, CGX Quick-30 EEG, Quik-cap for NuAmps, etc.) are used in 11% (N = 24) of the studies, but since each is used in fewer than 3 studies, they are not shown separately in the figure. 63% (N = 135) of the studies do not report any information about the BCI device used.

Since various EEG-based BCI devices are used in the studies, the number of channels also varies. Figure 22 presents the distribution of the number of channels in the studies that report it explicitly. Devices with 16 (N = 33, 15%) and 32 (N = 19, 9%) channels are the most preferred. 64-channel devices (N = 11, 5%) are used in a small number of studies, and single-channel devices are used in 7 studies (3%). Fewer studies use devices with 21 (N = 5, 2%), 62 (N = 4, 2%), 24 (N = 4, 2%), and 19 (N = 3, 2%) channels. The other category comprises the studies that report devices with 3, 8, 30, or 128 channels, a total of 7% (N = 16) of the studies.

The electrode types used in the studies are directly related to the BCI devices used. The BCI devices in Fig. 21 use similar or different electrode types. Among the reviewed articles, the traditional wet Ag/AgCl electrode (N = 39, 18%) is the most used because of the widespread use of the Emotiv EPOC headset. Other electrodes such as passive/active dry electrodes (N = 26, 12%) (NeuroSky MindWave and the Muse headband) and gel-based electrodes (N = 12, 6%) (Biosemi ActiveTwo and ESI NeuroScan system) are also used. Other electrode types (foam-based, pure tin electrodes, etc.) are used in 2% (N = 4) of the studies, and no information is given about the electrode type in 62% (N = 135) of the studies.

There are various international positioning systems such as 10–5, 10–10, and 10–20 to position electrodes on the head. In this study, it is observed that the 10–20 positioning system (N = 100, 46%) is commonly used while 10–10 positioning system (2 articles, 1%) is rarely used. Also, 53% (114 articles) of the studies do not provide information about the system.

EEG-based emotion recognition can be implemented either offline or online, in other words in real-time mode. Emotion recognition is performed in offline mode in the great majority of the articles (N = 143, 67%), while a few studies (N = 32, 15%) are carried out online. The remaining 18% (N = 38) do not mention the recognition mode.

5.3 Preprocessing

5.3.1 Type of EEG correlates of emotion (signals)

More than half of the studies (N = 129, 58%) do not explicitly mention any EEG correlates of emotion. However, 33% of the studies (N = 73) report using frontal EEG asymmetry and 6% (N = 14) report using Event-Related Potentials. Other types, such as Steady-State Visually Evoked Potentials, are mentioned in only 3% of the studies (N = 8). The distribution of EEG correlates is presented in Fig. 23.
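Frontal EEG asymmetry is commonly quantified as the difference between the natural logarithms of alpha-band power at a right and a left frontal electrode. The sketch below illustrates that common index only; the reviewed articles may define it differently, and the function name and the choice of an F4/F3 electrode pair are assumptions made for illustration.

```python
import math


def frontal_alpha_asymmetry(alpha_power_left, alpha_power_right):
    """Return ln(right alpha power) - ln(left alpha power).

    The inputs are assumed to be alpha-band powers from a homologous
    electrode pair such as F3 (left) and F4 (right).
    """
    if alpha_power_left <= 0 or alpha_power_right <= 0:
        raise ValueError("band power must be positive")
    return math.log(alpha_power_right) - math.log(alpha_power_left)
```

Because alpha power is generally taken to be inversely related to cortical activity, a positive value of this index is conventionally read as relatively greater left frontal activity.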

5.3.2 Filtering

The filtering techniques mentioned in the studies are investigated and reported. Some studies mention applying more than one filtering approach. According to the results presented in Fig. 24, the bandpass filter is the technique most frequently applied (N = 72, 26%) to raw EEG signals to eliminate frequencies below and above the band of interest. A smaller number of articles apply high-pass and low-pass filters, 12% (N = 33) and 11% (N = 30) respectively. Furthermore, a few studies mention the Butterworth filter (N = 19, 7%), the notch filter (N = 17, 6%), Independent Component Analysis (N = 3, 1%), or other filtering techniques (N = 21, 8%) such as the Savitzky-Golay filter, Kalman filter, elliptic filter, and Chebyshev Infinite Impulse Response (IIR) filter. On the other hand, 29% (N = 81) of the studies do not report any filtering technique.
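To make the idea of band-pass filtering concrete, the following sketch removes all frequency components outside a chosen band by masking the discrete Fourier transform. This is an idealized illustration, not the filter design of any reviewed study: practical pipelines usually rely on designed filters such as the Butterworth filter, and the function names and the 4 to 45 Hz band used in the usage note are assumptions.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (O(n^2)), fine for short segments."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse transform; returns the real part of each sample."""
    n = len(spectrum)
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
             for k in range(n)) / n).real
        for t in range(n)
    ]


def bandpass(x, fs, low_hz, high_hz):
    """Zero every frequency bin outside [low_hz, high_hz] and invert."""
    n = len(x)
    spectrum = dft(x)
    for k in range(n):
        # Bins above n/2 are the mirror images of the lower bins.
        freq = min(k, n - k) * fs / n
        if not (low_hz <= freq <= high_hz):
            spectrum[k] = 0
    return idft(spectrum)
```

For example, applying `bandpass(signal, 128, 4, 45)` to a two-second segment sampled at 128 Hz that contains a constant offset, a 10 Hz component, and a 60 Hz component keeps only the 10 Hz component.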

5.3.3 Normalization

The studies are examined according to the normalization methods implemented. As can be seen in Fig. 25, the studies use various normalization methods such as standardized normal distribution (N = 11, 5%), Z-score (N = 6, 3%), Min–Max (N = 4, 2%), and Rectified Linear Unit (N = 3, 1%). Other techniques, reported in a small number of studies with a total of 5% (N = 12), are global normalization, local normalization, and L1/2 normalization. In addition, 84% of the studies do not explicitly mention any technique.
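Two of the normalization methods named above have simple closed forms, sketched here for a single feature vector. The function names are illustrative, and the use of the population standard deviation in the Z-score is an assumption, since the reviewed studies do not state which estimator they use.

```python
import statistics


def z_score(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("cannot standardize a constant sequence")
    return [(v - mean) / std for v in values]


def min_max(values, new_min=0.0, new_max=1.0):
    """Linearly map values onto the interval [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot rescale a constant sequence")
    scale = (new_max - new_min) / (hi - lo)
    return [new_min + (v - lo) * scale for v in values]
```

Min–Max scaling is sensitive to single extreme samples, which are common in EEG because of artifacts, whereas the Z-score is less affected by one outlier but does not bound the output range.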

5.4 Feature extraction and selection

5.4.1 Feature extraction

Features are commonly extracted from the frequency, time, time–frequency, and wavelet domains in EEG-based emotion recognition studies. Figure 26 shows the feature extraction domains used in the reviewed studies. Features are extracted from the frequency domain in 44% (N = 147) of the reported cases, from the time domain in 29% (N = 96), from the wavelet domain in 12% (N = 42), and from the time–frequency domain in 10% (N = 34). In addition, some studies (N = 18, 5%) do not explicitly mention any method. Some studies use only one domain, while others use multiple domains for feature extraction.

Figure 27 shows the features extracted from the frequency domain. Multiple features are extracted in many of the studies. The Beta (N = 107, 19%), Alpha (N = 106, 19%), Theta (N = 92, 16%), Gamma (N = 83, 15%) and Delta (N = 58, 10%) frequency bands are the most frequently extracted features. In addition, Power Spectral Density (N = 42, 8%), band power (FT, DFT, STFT, Welch) (N = 28, 5%), Independent Component Analysis (N = 17, 3%), Differential Entropy (N = 3, 1%) and Principal Component Analysis (N = 3, 1%) features are also extracted. In some studies (N = 18, 3%), other frequency-domain features are extracted, such as average frequency band division, rational asymmetry, and differential asymmetry.
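As an illustration of how band features are obtained from the frequency domain, the sketch below computes the power in each classical EEG band from a plain periodogram. It is not Welch's method or any specific study's pipeline, and the band edges in `BANDS_HZ` are assumptions, since the exact limits vary between studies.

```python
import cmath
import math

# Assumed band edges in Hz; studies differ on the exact limits.
BANDS_HZ = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def one_sided_power_spectrum(x, fs):
    """Return (frequency, power) pairs from a naive DFT periodogram."""
    n = len(x)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        power = abs(coeff) ** 2 / n ** 2
        if 0 < k < n / 2:
            power *= 2  # fold in the mirrored negative-frequency bin
        spectrum.append((k * fs / n, power))
    return spectrum


def band_powers(x, fs, bands=BANDS_HZ):
    """Sum the periodogram power that falls inside each band."""
    spectrum = one_sided_power_spectrum(x, fs)
    return {
        name: sum(p for f, p in spectrum if lo <= f < hi)
        for name, (lo, hi) in bands.items()
    }
```

With this scaling, a unit-amplitude 10 Hz sinusoid that spans a whole number of periods contributes a power of 0.5 to the alpha band and essentially nothing to the others.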

Figure 28 shows the distribution of the features extracted in the time domain. Statistical features are used in most of the articles (N = 67, 54%), while Hjorth features are extracted in some articles (N = 29, 23%). Moreover, Fractal Dimension (N = 6, 5%), Event-Related Potentials (N = 6, 5%), Common Spatial Pattern (N = 4, 3%), and other time-domain features (N = 9, 7%) such as Cross-Correlation, Hurst Exponent, Zero Crossing Rate, and Peak Signal to Noise Ratio are mentioned in fewer studies.

Figure 29 shows the usage percentages of the statistical features among the time-domain features. Mean (N = 46, 27%), standard deviation (N = 42, 24%), and variance (N = 25, 14%) are widely used in the studies. In addition, Kurtosis symmetry (N = 9, 5%), median (N = 7, 4%), power (N = 7, 4%), skewness (N = 6, 4%), the first difference (N = 4, 2%), the second difference (N = 4, 2%), and average (N = 3, 2%) are used as statistical features in the time domain in fewer studies. In some studies (N = 20, 12%), other statistical features such as maximum, minimum, and root mean square are extracted.
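Several of these statistical features can be computed directly from a signal segment, which helps explain their low computational cost. The sketch below is illustrative only: the function name is hypothetical, population statistics are assumed, and the first and second differences follow one common convention (the mean absolute difference between samples one and two steps apart), which individual studies may define differently.

```python
import math
import statistics


def time_domain_features(x):
    """Compute simple statistical features of one signal segment."""
    # Assumed convention: differences between samples 1 and 2 steps apart.
    first_diff = [abs(b - a) for a, b in zip(x, x[1:])]
    second_diff = [abs(b - a) for a, b in zip(x, x[2:])]
    return {
        "mean": statistics.fmean(x),
        "std": statistics.pstdev(x),
        "variance": statistics.pvariance(x),
        "median": statistics.median(x),
        "first_difference": statistics.fmean(first_diff),
        "second_difference": statistics.fmean(second_diff),
        "rms": math.sqrt(statistics.fmean([v * v for v in x])),
        "max": max(x),
        "min": min(x),
    }
```

Each feature needs only one or two passes over the segment, so the whole set scales linearly with the segment length.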

Figure 30 presents the number of articles that used Hjorth features in the time domain. It is seen that complexity (N = 23, 42%), activity (N = 21, 38%), and mobility (N = 11, 20%) are extracted in the studies in which Hjorth features were implemented.
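The three Hjorth parameters are defined from the variances of a signal and its successive differences: activity is the variance of the signal, mobility is the square root of the ratio between the variance of the first difference and the variance of the signal, and complexity is the mobility of the first difference divided by the mobility of the signal. A minimal sketch follows; the function name is illustrative and population variance is an assumption.

```python
import math
import statistics


def hjorth_parameters(x):
    """Return (activity, mobility, complexity) of a signal segment."""
    dx = [b - a for a, b in zip(x, x[1:])]      # first difference
    ddx = [b - a for a, b in zip(dx, dx[1:])]   # second difference
    var_x = statistics.pvariance(x)
    var_dx = statistics.pvariance(dx)
    var_ddx = statistics.pvariance(ddx)
    activity = var_x
    mobility = math.sqrt(var_dx / var_x)
    # Complexity compares the mobility of the derivative with that of x.
    complexity = math.sqrt(var_ddx / var_dx) / mobility
    return activity, mobility, complexity
```

For a pure sinusoid the complexity is close to 1, its minimum, and it grows as the waveform departs from a sine shape.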

Figure 31 shows the number of articles that use time–frequency domain features. The discrete wavelet transform is used in almost half of the studies (N = 16, 43%) that use time–frequency domain features. In almost the other half (N = 18, 49%), other features such as the Wigner-Ville distribution, Hilbert-Huang spectrum, higher-order spectra, permutation phase transfer entropy, and analytic wavelet transform are extracted. Finally, a few studies (N = 3, 8%) do not mention which time–frequency domain features are extracted.

The features extracted in the articles that use the wavelet domain are shown in Fig. 32. Entropy (N = 27, 43%) and energy (N = 14, 26%) features are extracted from the wavelet domain in many of the studies. On the other hand, a few studies (N = 9, 17%) extract features based on, for example, the Coiflet wavelet of order 1 or the Stationary Wavelet Transform.
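Wavelet energy and wavelet entropy can be illustrated with a multi-level discrete wavelet transform. The sketch below uses the Haar wavelet purely because it is the simplest to write down; this choice is an assumption, and the reviewed studies use various wavelet families. Entropy is taken here as the Shannon entropy of the relative sub-band energies, which is one common definition among several.

```python
import math


def haar_step(x):
    """One level of the orthonormal Haar transform."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x) - 1, 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x) - 1, 2)]
    return approx, detail


def haar_dwt(x, levels):
    """Return [detail_1, ..., detail_L, approx_L] coefficient lists."""
    if len(x) % (2 ** levels) != 0:
        raise ValueError("length must be divisible by 2**levels")
    bands, approx = [], list(x)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        bands.append(detail)
    bands.append(approx)
    return bands


def wavelet_energy_and_entropy(x, levels):
    """Sub-band energies and the Shannon entropy of their proportions."""
    energies = [sum(c * c for c in band) for band in haar_dwt(x, levels)]
    total = sum(energies)
    if total == 0:
        raise ValueError("signal has no energy")
    shares = [e / total for e in energies]
    entropy = -sum(p * math.log(p) for p in shares if p > 0)
    return energies, entropy
```

Because the Haar transform is orthonormal, the sub-band energies add up to the energy of the original segment, so the relative energies form a proper distribution. A low entropy means the energy is concentrated in few sub-bands.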

5.4.2 Feature selection

The studies are reviewed regarding the feature selection methods used, and it is revealed that many of the studies do not use any feature selection method. Very few articles report using Pearson Correlation Analysis (N = 3, 1%) or Minimum Redundancy Maximum Relevance (N = 3, 1%) as a feature selection method, as can be seen in Fig. 33. Other methods such as Elephant Herding Optimization, Principal Component Analysis, Unsupervised Discriminative Feature Selection, Forward Feature Selection, Linear Model Feature Ranking, Recursive Feature Elimination, Random Forest Feature Ranking, Multidimensional Scaling (MDS), Locally Linear Embedding, and Laplacian Eigenmaps are each used in fewer than 3 articles, with a total of 15% (N = 35).
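One simple way to use Pearson correlation for feature selection is to rank each feature by the absolute value of its correlation with the label and keep the top few. The sketch below shows this filter-style approach under that assumption; the reviewed articles may apply the correlation analysis differently, and the function and feature names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        return 0.0
    return cov / (norm_x * norm_y)


def rank_features(features, labels, top_k):
    """Return the names of the top_k features by |r| with the labels.

    features maps a feature name to its values, one value per trial.
    """
    scores = {name: abs(pearson_r(values, labels))
              for name, values in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_k]
```

This ranking scores each feature in isolation, so it ignores redundancy between features, which is the gap that methods such as Minimum Redundancy Maximum Relevance aim to address.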

5.5 Classification and regression

5.5.1 Classification

The distribution of the classification methods used in the reviewed studies is presented in Fig. 34. Support Vector Machine (N = 87, 27%) and Artificial Neural Network algorithms (such as Convolutional Neural Network, Extreme Learning Machine, and Recurrent Neural Network) (N = 75, 23%) are the two most widely used classifiers. Other articles use K-nearest neighbor (N = 35, 11%), Random Forest (N = 20, 6%), Linear Discriminant Analysis (N = 19, 6%), Naïve Bayes (N = 12, 4%), Decision Tree (N = 9, 3%), and Logistic Regression (N = 8, 3%) as a classifier. A few studies use Bayesian Classifier (N = 4, 1%), Quadratic Discriminant Classifier (N = 4, 1%), Gradient Boosting (N = 3, 1%), and Multi-Layer Perceptron (N = 3, 1%). Other methods such as Extra Trees (ET), K-Means, and Fuzzy C-Means clustering are each used in fewer than 3 articles (N = 42, 13%).
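Of the classifiers listed, K-nearest neighbor is the simplest to state in full: a new feature vector receives the majority label of its k closest training vectors. The sketch below is a generic illustration with Euclidean distance, not the configuration of any reviewed study; the function name, the value of k, and the toy feature values in the usage note are assumptions.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Predict the label of query by majority vote among k neighbours."""
    # Sort training points by Euclidean distance to the query.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]
```

For instance, with two-dimensional feature vectors labelled "low" and "high" valence, a query point is assigned whichever label dominates among its three nearest training points.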

5.5.2 Regression

Figure 35 shows the distribution of the regression methods explicitly reported in the studies. The regression methods used are Support Vector Regression (N = 6, 23%), Random Forest (N = 4, 15%), Neural Network Regression (N = 4, 15%), Linear Regression (N = 3, 12%), and others (N = 9, 35%) such as Logistic Regression and Nested-LOOCV regression.

5.6 Performance evaluation

The distribution of the evaluation techniques used in the studies is presented in Fig. 36. Accuracy is used for performance evaluation in the majority of the studies (N = 166, 61%). In addition, F-score (N = 20, 7%), Sensitivity (N = 14, 5%), Specificity (N = 12, 4%), ANOVA (N = 11, 4%), and Mean Square Error (N = 8, 3%) are calculated in some of the studies. In a few studies, Precision (N = 4, 2%), Recall (N = 3, 1%), and T-Test (N = 3, 1%) values are also calculated to evaluate performance. Other performance evaluation techniques (N = 32, 12%), such as Mean Absolute Percentage Error, the Pearson correlation coefficient, and Root Mean Square Error, are used in some of the studies.

6 Discussion

In the scope of this study, a systematic literature review is conducted regarding the EEG-based aBCI systems for emotion recognition studies in the context of human–computer interaction and affective BCI to determine the major trends regarding the defined components [35]. These components are listed as the signal stimulus module which includes the employed device, signal stimuli, data processing modality, and the signal processing module which comprises signal acquisition, pre-processing, feature extraction, feature selection, classification algorithms, and performance evaluation. The review initially presents these components in detail and then reports the research trends in 216 scientific papers published between 2016 and 2020 gathered from various databases including ACM, IEEE Xplore, PubMed, Science Direct, and Web of Science. The findings are intended to inform researchers from various disciplines who work or will work in this field about its research trends and gaps, and to guide them in their research directions.

EEG-based emotion recognition studies attract various research domains including computer science, neuroscience, engineering, health, and psychology [53, 75]. Therefore, the articles are published in various journals and conferences in these disciplines, and there is an increasing trend in the number of articles published every year. Although EEG devices were primarily developed for medical purposes, most of the articles reviewed in this study are published in the non-medical domain, covering new method proposals, monitoring, assistance, entertainment, and other fields such as neuromarketing.

One of the most important steps of data acquisition is to determine the number and profile of participants, and the reviewed studies show that these vary according to the experiment type. However, the majority of the studies are performed with 32 or fewer participants, and the same holds for the studies that create EEG datasets [22, 23, 25, 29, 30, 94, 95]. The studies in non-medical domains are generally conducted with healthy adult participants, while participants with epilepsy [96], mental disorders [94, 97], or Parkinson's disease [98] are included in medical research.

Other important issues related to data acquisition include the determination of the emotion model, the emotion elicitation methods, and the type of triggered emotions to be used in the studies. Regarding emotion-related characteristics, many of the studies implement dimensional models [21, 24, 26, 95] rather than discrete emotion models [23, 27]. This is because the dimensional models enable researchers to focus on emotion recognition tasks without being bound to a particular emotion category [42]. They are also more popular because they reduce the problem of dimensionality [83]. Among the implemented emotion models, Russell’s 2D or 3D dimensional models and Ekman’s six basic emotions stand out. Audio-visual elicitation using short video clips is the most common emotion elicitation method, but there is no standard, commonly used database of video clips. Similarly, when researchers use visual-based elicitation methods, they prefer to use their own stimuli sets rather than standard databases such as IAPS or GAPED. Since the authors generally do not share their stimuli sets in their publications, the results of these studies cannot be compared with others. Therefore, it can be concluded that there is a need for standard datasets to induce emotions in the studies, because it is believed that determining favorable tasks to characterize the participants' emotional state will help to improve the user performance and validity of the system in the long term [83]. It is also revealed that some researchers conduct their emotion recognition studies by directly using previously created EEG datasets rather than creating their own datasets through experiments. The DEAP dataset stands out as the preferred ready-made dataset in many of the studies, and these studies generally focus on the signal processing issues of emotion recognition. Since dimensional models are the most used emotion models, valence and arousal are the most frequently recognized emotions in the reviewed studies.

The final important stage of data acquisition is to determine the types of signals to be recorded and the BCI device to record these signals. When the BCI system components are investigated in terms of physiological records and EEG devices and their characteristics, it is seen that EEG is the only recording modality in many of the studies, since it is believed to give better feature extraction results than other signals [37, 80]. However, other modalities such as EOG, eye tracking, or EMG are also used in some studies, since using multiple modalities can provide more comprehensive and reliable findings [42, 76, 83]. As BCI devices, the Emotiv EPOC and NeuroSky MindWave are mainly used since they are lower in cost, more accessible, and easier to use, although they have fewer channels than expensive headsets.

In terms of the signal processing module, methods implemented in filtering, feature extraction, classification, and performance evaluation are all reviewed. It is seen that many of the studies do not mention all the stages of the signal processing they have implemented. Many of them test and compare different algorithms while some others suggest a new method for the steps such as feature extraction or classification [42].

In the preprocessing step of EEG signals, filters are used to remove the artifacts in the signals. However, these filters may corrupt the signals' waveform and structure in the time domain. Therefore, it is essential to be careful in choosing and applying the filters to be used [21]. The bandpass filter is revealed to be the most implemented filtering method in the reviewed studies [99, 100].

In general, the alpha, beta, theta, gamma and delta frequency bands and Power Spectral Density from the frequency domain are the commonly extracted features in the studies [30, 101,102,103]. However, due to their low computational cost, mean, standard deviation and variance [29, 102, 104, 105] are also frequently used for feature extraction in the time domain. In the reviewed studies [29, 102, 104, 105], the discrete wavelet transform (DWT) is the most preferred feature in the time–frequency domain. Although DWT is useful for analyzing irregular data patterns, it has the disadvantage of high computation and memory costs [35].

In addition, the most implemented classification methods are, in order, SVM [22,23,24,25,26,27,28,29,30], ANN [21, 24, 25, 101], K-NN [22, 25,26,27,28] and RF [21, 22, 24, 102]. EEG data consist of high-dimensional vectors. Thus, SVM is often preferred since it works well with high-dimensional features and provides high accuracy [35]. Moreover, although regression methods are mentioned in only a few studies, logistic regression [106, 107] is commonly used.

Finally, accuracy is the most reported performance metric in the reviewed studies [24, 30, 35, 84, 86, 101,102,103]. While accuracy is a suitable performance measure for balanced data, its potential disadvantage is that it can be misleadingly high even when classification is not performed correctly. In this context, inaccurate classification covers various scenarios such as imbalanced datasets with uneven class distribution, model errors, ambiguity in labels leading to uncertainty, and misclassification during the classification process. Improper execution of classification tasks can significantly increase the risk of inaccuracies in performance evaluations. To address this concern, using diverse performance evaluation methods and including multiple metrics [35, 84, 86], such as the confusion matrix, precision, recall, F1 score, specificity, sensitivity, and Cohen's kappa, in addition to accuracy can substantially improve the robustness and reliability of research results. Thus, based on these findings, it is not possible to determine the best method for all stages of signal processing, as each method has its own advantages and limitations [35, 42, 99].
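The weakness of accuracy on imbalanced data can be shown with a small worked example. The sketch below computes several of the metrics named above from the binary confusion matrix; the function name is illustrative, and returning 0.0 for a ratio with a zero denominator is a convention chosen here, not a universal rule.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute common metrics from the binary confusion matrix."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    n = tp + tn + fp + fn

    def ratio(num, den):
        # Assumed convention: an undefined ratio is reported as 0.0.
        return num / den if den else 0.0

    accuracy = ratio(tp + tn, n)
    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)          # also called sensitivity
    specificity = ratio(tn, tn + fp)
    f1 = ratio(2 * precision * recall, precision + recall)
    # Agreement expected by chance, from the marginal label counts.
    expected = ratio((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp), n * n)
    kappa = ratio(accuracy - expected, 1 - expected)
    return {
        "accuracy": accuracy, "precision": precision, "recall": recall,
        "specificity": specificity, "f1": f1, "kappa": kappa,
    }
```

If 90 of 100 trials belong to the negative class and a classifier always predicts the negative class, `binary_metrics([0] * 90 + [1] * 10, [0] * 100)` gives an accuracy of 0.9 while recall, F1 score, and Cohen's kappa are all 0, which exposes that the positive class is never recognized.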

7 Conclusion

In recent years, with the development of technology and research, progress has been made in the field of emotion recognition using BCI systems. Currently, low-cost commercial EEG headsets are being used in various domains such as communication, education, health, and entertainment. This study presents a systematic literature review on EEG-based emotion recognition in the context of HCI and aBCI.

In the scope of the study, a total of 216 studies published between 2016 and 2020, comprising 84 conference papers and 132 journal publications, were identified by using certain keywords and then gathered and reviewed. Since EEG-based brain-computer interface systems for emotion recognition comprise certain components, these components are used as the primary basis for the systematic review, and the results are reported accordingly. The results can be summarized in the following order. First, during the data acquisition phase, the valence and arousal dimensions of emotions are most frequently triggered by audio-visual stimuli and recorded with the Emotiv Epoc EEG headset, mostly from adult female and male participants. Next, in signal processing, the bandpass filter is commonly used for preprocessing EEG data, while standard normal distribution normalization is used to normalize it. Besides, features are generally extracted from the frequency domain, while feature selection methods are rarely used in the studies. Furthermore, SVM, ANN, and LR are found to be the most prominently applied methods in the classification and regression stages. Finally, accuracy stands out as the performance metric used to measure the success of the developed systems. The significant findings of this study are in line with the results of many previous SLR studies [34, 35, 42, 99, 108, 109].

EEG-based BCIs hold significant importance in the context of HCI research because these systems have the potential to revolutionize the way we interact with technology and understand human behavior. In addition to enhancing the user experience, they can open up new possibilities in various domains, from healthcare and education to entertainment and market research. Therefore, the aim of this review was to provide comprehensive insights and guidance to researchers who are studying, or just starting to research, EEG-based emotion recognition by giving a thorough overview of the current state of the field and identifying recent trends. The results of the review present all the necessary components of an EEG-based BCI system and provide a comprehensive report on the practices most commonly implemented in the development of such a system. The topics to be considered can be listed as emotion models, emotion elicitation tools and techniques, and BCI system components including recording devices and signal processing methods. This review also contributes to the HCI context in that researchers need to know which robust emotion recognition approaches to use, be familiar with common machine learning techniques, and compare their performance, especially when developing real-world applications. In addition, this review assesses which areas have received more or less attention, highlights research gaps that require further investigation, and raises awareness of the limitations associated with EEG-based BCI technologies, especially in user-centered design, where considerations such as user emotion are critical.

EEG-based BCIs can be considered effective as they have shown significant potential in identifying emotions. One of their key strengths lies in their ability to provide real-time, non-invasive access to neural activity, which can be correlated with emotional states. EEG-based BCIs can be considered relatively safe, user friendly [14, 85], cost-effective and portable compared to some other neuroimaging techniques, making them accessible for various applications. EEG-based BCI systems have several advantages. These include enabling automatic/intelligent emotion recognition [14, 42, 85], real-time emotion recognition with wearable technologies [31, 82, 87], and creating an implicit communication channel between humans and computers in medical and non-medical settings [2, 83, 85]. Finally, with the increasing use of AI and machine learning along with the development of advanced body sensors, cameras, and neuroimaging techniques, the use of such applications is becoming more widespread [31].

However, there are also notable challenges associated with EEG-based emotion recognition. One significant challenge is the need for robust feature extraction and classification methods to accurately interpret EEG signals related to emotions. Additionally, inter-subject variability in brain activities [31, 82, 85,86,87] and the limited spatial resolution of EEG can pose difficulties in achieving high accuracy. Noise interference, such as muscle artifacts and environmental factors, can further complicate the task of recognizing emotion using EEG [2, 42, 82, 83, 85]. Addressing these challenges requires ongoing research and advancements in signal processing, machine learning, and the development of standardized protocols for emotion elicitation and data collection. This study also aims to provide a comprehensive understanding of EEG-based BCI systems for emotion recognition in all these relevant aspects.

One of the most important limitations of this type of SLR study is reliability. Therefore, the study was conducted according to an iterative process. Initially, the review protocol was formed, and in the first iteration all researchers used it to review the same five randomly selected articles. The researchers then met and compared their review results, which ensured that all of them interpreted the protocol in the same way. This step also revealed gaps in some of the classification categories used in the review protocol, and new items were added to address them. Afterwards, in each following iteration, each article was reviewed by at least two authors, working in pairs so that one author checked the other's review findings. In this way, an attempt was made to reduce author bias. Another limitation is the possibility that some influential articles were missed because of the database search. However, an attempt was made to minimize this limitation as well by searching various databases that index cross-disciplinary research papers and articles in highly reliable journals or conferences.

References

Picard RW (1995) Affective Computing. MIT Press, Cambridge, MA, USA

He Z et al (2020) Advances in multimodal emotion recognition based on brain–computer interfaces. Brain Sci 10:687

Spezialetti M, Placidi G, Rossi S (2020) Emotion Recognition for Human-Robot Interaction: Recent Advances and Future Perspectives. Front Robot AI 7:532279

Dix A, Finlay J, Abowd GD, Beale R (2003) Human-computer interaction (3rd ed). Harlow, England. New York: Pearson/Prentice-Hall

Vasiljevic GAM, de Miranda LC (2020) Brain–computer interface games based on consumer-grade EEG Devices: A systematic literature review. Int J Human-Computer Interact 36:105–142

Vidal JJ (1973) Toward direct brain-computer communication. Annu Rev Biophys Bioeng 2:157–180

Vidal JJ (1977) Real-time detection of brain events in EEG. Proc IEEE 65:633–641

Darwin C (1872) The expression of emotion in man and animals (1st ed). London: John Murray

Ahn M, Lee M, Choi J, Jun S (2014) A Review of Brain-Computer Interface Games and an Opinion Survey from Researchers, Developers and Users. Sensors 14:14601–14633

Folgieri R, Lucchiari C, Granato M, Grechi D (2014) Brain, Technology and Creativity. BrainArt: A BCI-Based Entertainment Tool to Enact Creativity and Create Drawing from Cerebral Rhythms. In: Lee N (ed) Digital Da Vinci. Springer, New York, pp 65–97. https://doi.org/10.1007/978-1-4939-0965-0_4

Nijholt A, van Erp J, Heylen DKJ (2008) BrainGain: BCI for HCI and Games. In: Proceedings AISB Symposium Brain Computer Interfaces and Human Computer Interaction: A Convergence of Ideas, The Society for the Study of Artificial Intelligence and Simulation of Behaviour, Aberdeen, pp 32–35

Serrhini M, Dargham A (2017) Toward Incorporating Bio-signals in Online Education Case of Assessing Student Attention with BCI. In: Rocha Á, Serrhini M, Felgueiras C (eds) Europe and MENA Cooperation Advances in Information and Communication Technologies, vol 520. Springer International Publishing, pp 135–146

Birbaumer N (2006) Breaking the silence: Brain–computer interfaces (BCI) for communication and motor control. Psychophysiology 43:517–532

Patel P, R R, Annavarapu RN (2021) EEG-based human emotion recognition using entropy as a feature extraction measure. Brain Inform 8:20

Inkpen D, Strapparava C (2013) Computational Approaches to the Analysis of Emotion in Text. Comput Intell 29:389–389

Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM (2002) Brain–computer interfaces for communication and control. Clin Neurophysiol 113:767–791

Mühl C, Allison B, Nijholt A, Chanel G (2014) A survey of affective brain computer interfaces: principles, state-of-the-art, and challenges. Brain-Comput Interfaces 1:66–84

Niedermeyer E, da Silva FL (2005) Electroencephalography: basic principles, clinical applications, and related fields (5th ed). Philadelphia: Lippincott Williams & Wilkins

Kim M-K, Kim M, Oh E, Kim S-P (2013) A Review on the Computational Methods for Emotional State Estimation from the Human EEG. Comput Math Methods Med 2013:1–13

Marshall D, Coyle D, Wilson S, Callaghan M (2013) Games, gameplay, and BCI: the state of the art. IEEE Trans Comput Intell AI Games 5:82–99

Torres EP, Torres EA, Hernández-Álvarez M, Yoo SG (2020) Emotion recognition related to stock trading using machine learning algorithms with feature selection. IEEE Access 8:199719–199732

da Silva Junior M et al (2019) Exploratory study of the effect of binaural beat stimulation on the EEG activity pattern in resting state using artificial neural networks. Cogn Syst Res 54:1–20

Gao Z et al (2020) A GPSO-optimized convolutional neural networks for EEG-based emotion recognition. Neurocomputing 380:225–235

Halim Z, Rehan M (2020) On identification of driving-induced stress using electroencephalogram signals: A framework based on wearable safety-critical scheme and machine learning. Inf Fusion 53:66–79

Hou H-R, Zhang X-N, Meng Q-H (2020) Odor-induced emotion recognition based on average frequency band division of EEG signals. J Neurosci Methods 334:108599

Kim S-K, Kang H-B (2018) An analysis of smartphone overuse recognition in terms of emotions using brainwaves and deep learning. Neurocomputing 275:1393–1406

Kurbalija V et al (2018) Emotion perception and recognition: an exploration of cultural differences and similarities. Cogn Syst Res 52:103–116

Li X et al (2020) VR motion sickness recognition by using EEG rhythm energy ratio based on wavelet packet transform. Comput Methods Programs Biomed 188:105266

Li Z et al (2020) Enhancing BCI-based emotion recognition using an improved particle swarm optimization for feature selection. Sensors 20:3028

Sun Y, Ayaz H, Akansu AN (2020) Multimodal affective state assessment using fNIRS+ EEG and spontaneous facial expression. Brain Sci 10:85

Al-Nafjan A, Hosny M, Al-Ohali Y, Al-Wabil A (2017) Review and classification of emotion recognition based on EEG brain-computer interface system research: A systematic review. Appl Sci 7(12):12

Hamada M, Zaidan BB, Zaidan AA (2018) A Systematic Review for Human EEG Brain Signals Based Emotion Classification, Feature Extraction, Brain Condition, Group Comparison. J Med Syst 42:162

Rawnaque FS et al (2020) Technological advancements and opportunities in Neuromarketing: a systematic review. Brain Inform 7:10

Soroush MZ, Maghooli K, Setarehdan SK, Motie Nasrabadi A (2017) A Review on EEG Signals Based Emotion Recognition. Int Clin Neurosci J 4:118–129

Torres EP, Torres EA, Hernández-Álvarez M, Yoo SG (2020) EEG-Based BCI Emotion Recognition: A Survey. Sensors 20:5083

Snyder H (2019) Literature review as a research methodology: An overview and guidelines. J Bus Res 104:333–339

Wagh KP, Vasanth K (2019) Electroencephalograph (EEG) Based Emotion Recognition System: A Review. In: Saini HS, Singh RK, Patel VM, Santhi K, Ranganayakulu SV (eds) Innovations in Electronics and Communication Engineering, vol 33. Springer, Singapore, pp 37–59

Berger H (1929) Über das Elektrenkephalogramm des Menschen. Arch Für Psychiatr Nervenkrankh 87:527–570

Berka C et al (2004) Real-Time Analysis of EEG Indexes of Alertness, Cognition, and Memory Acquired With a Wireless EEG Headset. Int J Hum-Comput Interact 17:151–170

Pfurtscheller G, Neuper C, Birbaumer N (2004) Human Brain—Computer Interface. In: Riehle A, Vaadia E (eds) Motor Cortex in Voluntary Movements, vol 20044553. CRC Press

Jasper HH (1958) Report of the committee on methods of clinical examination in electroencephalography. Electroencephalogr Clin Neurophysiol 10:370–375

Dadebayev D, Goh WW, Tan EX (2022) EEG-based emotion recognition: Review of commercial EEG devices and machine learning techniques. J King Saud Univ-Comput Inf Sci 34:4385–4401

De Munck JC, Gonçalves SI, Mammoliti R, Heethaar RM, Lopes da Silva FH (2009) Interactions between different EEG frequency bands and their effect on alpha–fMRI correlations. Neuroimage 47:69–76

Farnsworth B (2019) EEG (Electroencephalography): The Complete Pocket Guide - iMotions, Global HQ: Copenhagen, Denmark. https://imotions.com/blog/learning/best-practice/eeg/

Ekman P et al (1987) Universals and cultural differences in the judgments of facial expressions of emotion. J Pers Soc Psychol 53:712–717

Scherer KR (2005) What are emotions? And how can they be measured? Soc Sci Inf 44:695–729

Sreeja PS, Mahalakshmi G (2017) Emotion models: a review. Int J Control Theory Appl 10(8):651–657

Russell JA (1980) A circumplex model of affect. J Pers Soc Psychol 39:1161–1178

Cao Z (2020) A review of artificial intelligence for EEG-based brain−computer interfaces and applications. Brain Sci Adv 6:162–170

Zander TO, Kothe C (2011) Towards passive brain–computer interfaces: applying brain–computer interface technology to human–machine systems in general. J Neural Eng 8:025005

Nicolas-Alonso LF, Gomez-Gil J (2012) Brain Computer Interfaces, a Review. Sensors 12:1211–1279

Bhise PR, Kulkarni SB, Aldhaheri TA (2020) Brain Computer Interface based EEG for Emotion Recognition System: A Systematic Review. In: 2nd International Conference on Innovative Mechanisms for Industry Applications (ICIMIA). IEEE, pp 327–334. https://doi.org/10.1109/ICIMIA48430.2020.9074921