Abstract

This paper presents an evaluation of the latest MPEG-5 Part 2 Low Complexity Enhancement Video Coding (LCEVC) for video streaming applications using best effort protocols. LCEVC is a new video standard by MPEG, which enhances any base codec through an additional low bitrate stream, improving both video compression efficiency and and transmission. However, there is an interplay between packetization, packet loss visibility, choice of codec and video quality, which implies that prior studies with other codecs may be not as relevant. The contributions of this paper is, therefore in twofold: It evaluates the compression performance of LCEVC and then the impact of packet loss on its video quality when compared to H.264 and HEVC.The results from this evaluation suggest that, regarding compression, LCEVC outperformed its base codecs, overall in terms average encoding bitrate savings when using the constant rate factor (CRF) rate control. For example at a CRF of 19, the average encoding bitrate was reduced by 18.7% and 15.8% when compared with the base H.264 and HEVC codecs respectively. Furthermore, LCEVC produced better visual quality across the packet loss range compared to its base codecs and the quality only started to decrease once packet loss exceeded 0.8-1%, and decreases at a slower pace compared to its equivalent base codecs. This suggests that the LCEVC enhancement layer also provides error concealment. The results presented in this paper will be of interest to those considering the LCEVC standard and expected video quality in error-prone environments

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Recent advances in high-bandwidth storage devices, high-speed networks, computing, and compression technology have made it possible for Over-the-Top streaming, providing real-time multimedia services over unmanaged networks via the internet [1]. Many broadcast studios are embracing internet protocol (IP) based solutions for transporting real-time live video due to the flexibility IP encapsulation can by lowering the amount of cabling by aggregating multiple signals onto ethernet connections [2]. Due to the lack of bandwidth availability these videos are usually compression [1] and then transmitted using either protocols that are layered over the connection oriented transmission control protocol (TCP) or the best effort user datagram protocol (UDP). While the user expects to receive high visual quality videos, the reality is video is still streamed to such devices over error-prone wireless networks, where it is impossible to make ’less error-prone’ by altering the physical channel. It is true that a TCP-based form of HTTP Adaptive Streaming (HAS) [3] can be applied to error-prone communications to allow for lost or damaged packets to be re-transmitted. However, if such video streams are transmitted using best effort protocols (UDP), network impairments such as packet loss can have a significant effect on the resulting video quality for users [4].

The recently standardized MPEG-5 Part 2 Low Complexity Enhancement Video Coding (LCEVC) enhances any base codec through coding tools specialised for residual data sub-layers, a hierarchical image representation and parallel processing. The core concept of LCEVC consists of using a conventional video codec standards, such as H.264/Advanced Video Coding (AVC) and High Efficiency Video Coding (HEVC), as base codecs at a lower resolution and recreating a full resolution video by combining up to two enhancement residual sub-layers with the decoded low-resolution video. As a result, LCEVC reduces the overall encoding complexity whilst improving the video compression efficiency [5, 6]. LCEVC is codec agnostic and has a royalty free layer, with its design enabling it to be backwards- compatible and implemented purely in software, further simplifying deployment [5, 7]. Therefore, allowing the deployment of LCEVC with no hardware support, accomplishing all enhancement layer processing in software [7].

However, a gap in the literature exists on the performance of best-effort transmission of LCEVC-coded video and in particular the impact of packet loss on the resulting visual quality. For example it was found that when packet loss is taken into account older video codecs achieve better receiver quality when compared with newer ones [8, 9]. Furthermore, previous literature on LCEVC video compression has focused the use of constant bitrate (CBR) and constant quantization parameters (CQP), with minimal references to the use of constant rate factor (CRF). CRF has been shown to be the best rate control method in terms visual quality using the same target bitrate [10]. Therefore, the contribution of this paper is to evaluate the performance of LCEVC enhancements when compared to H.264 and HEVC base codecs, in terms of video quality, compression efficiency and the relative impact of packet loss when it’s streamed over an error-prone network.

The remainder of this paper is organized as follows. Section 2 mentions related work in addition to that in this introductory Section or describes already mentioned work in a little more depth. Section 3 describes the methodology, video configurations, and other aspects of the video quality evaluations in the two sets of experiments reported on in this paper. Section 4 presents the video quality evaluations for the two sets of experiments and in doing so analyzes the results. Finally, Section 5 discusses the conclusions arising from the evaluations and makes recommendations for further research.

2 Related work

Over the past decades there have been many efforts in providing a better understanding of Quality-of-Experience (QoE) [11] for video streaming applications, with visual quality being a key metric. Resource constraints in the transportation and storage of video have resulted in the development of video compression standards to reduce high video data rates that exist by removing redundancies, enabling one to provide high quality video services under the limited capabilities of storage and transmission networks [7, 12]. Numerous studies have evaluated the performance of codecs using video quality (after video decoding) and encoding bitrates as key metrics. Video quality is usually conducted using objective metrics such as Video Multi-Method Assessment Fusion (VMAF) [13], Structural Similarity Index (SSIM) [14], and Peak Signal-to-Noise Ratio (PSNR), and subjective quality assessments [15].

While video compression significantly reduces the amount of video data for either storage or transmission, perceptual quality degradation caused either directly by video compression, such as blocking edge artefacts or indirectly by packet loss when transmitted over an error-prone network. Since video compression removes both temporal and spatial redundancy of the original video, each packet is significant to enable the receiver to reconstruct the video [16]. The authors in [17] performed subjective evaluations on six HEVC test sequences, encoded at two different resolutions of 416 x 240 and 832 x 480. They found that on average, at 1% packet loss the MOS began to fall significantly, from 9.16 to 8.38, indicating the impact of packet loss is becoming perceptible, with 3% packet loss found to be the threshold of user tolerance. In [18] packet loss and bitrate were identified to be more important than frame rate. The authors in [19] broadly examined two factors: the loss pattern and content characteristics. Packet losses were mainly in I-frames, which reduces the generality of the tests. When scene changes occurred, an additional I-frame was to be inserted within a Group-of-Pictures (GoP), provided the error burst was not long enough to affect both frames, scene changes actually halted the usual temporal error propagation. This was similar to the results of [20], which also noted that the presence of camera motion (zoom and pan) increased the subsequent distortion resulting from packet loss. Otherwise, the extent of I frame packet loss visibility depended on the burst length in [19]. The impact of data loss was found to be dependent on the data loss pattern, especially the number of packets affected, which is consistent with [21]. Other works, as well as [22, 23], have tried to study the impact upon subjective video quality.

While it is ideal to use subjective assessment to assess video quality managers of video streaming normally do not have access to a panel of viewers [24, 25], owing to either time restrictions and/or difficulty of assembling a suitable set of viewers. In addition, subjective testing also does not allow a real-time response to changes in packet loss rate (PLR) or packet structures and is not repeatable. However, objective subjective ratings can approximate the results of subjective testing with a high degree of correlation especially with newer metrics such as VMAF that has shown to exhibit a strong correlation with subjective Mean Opinion Score (MOS) of 0.948 [26].

The impact of packet loss on 4K H.264 and HEVC was investigated in [27] using video sequences with different amounts of spatial and temporal information. They found all video sequences, for both H.264 and HEVC, had poor to unacceptable video quality above 0.6% packet loss, with higher motion sequences suffering more. Similar to this, the authors in [28] found a packet loss rate 0.6% was the threshold above which the objective video quality began to significantly degraded. they studied the impact of different packet loss rates (0.05-1.5%), on ten 30 fps 1920 x 1080 H.264 encoded video streams, finding that overall, as the percentage of packet loss increases, the VMAF, SSIM and PSNR scores decreased.

The authors in [29] evaluated the impact of packet loss up to 1.5% when streaming two 720 x 576 30 fps H.264 video sequences over RTP/UDP. The author found that as packet loss increased the SSIM and PSNR scores decreased. However, the SSIM measurements changed very gradually from 0-1.5%, with the average maximum SSIM of one sequence reducing by only 0.09. Hence, the author concluded SSIM to be less affected by packet loss than PSNR.While in [9] the impact of packet loss on both H.264/AVC and HEVC 4K video sequences, of varying motion and temporal complexities was investigated. They found that overall, H.264 appears more resilient to packet loss than HEVC, producing higher video quality ratings, suggesting HEVC’s more efficient encoding makes it more sensitive to packet loss. They also found that lower motion sequences for both codecs were less affected by packet loss when a fixed CBR was used, producing higher resultant video quality. However, as the bitrate was increased, the author found the impact of packet loss reduced, resulting in improved video quality for both codecs. The aforementioned studies have evaluated the impact of network factors on the base codecs (H.264, HEVC and VVC), however, there is little to no information on the impact of network factors on enhancement codecs such as LCEVC.

In [5] the performance of LCEVC to H.264, HEVC and VVC using eight 4K video sequences of different frame rates encoded using constant quality profile (CQP) was evaluated. They found that LCEVC produced overall bit-rate savings of 39% and 21%, (VMAF), and provided higher video quality ratings, when compared with H.264/AVC and HEVC base codecs respectively. This pattern of results was further observed in [30] where the performance of LCEVC to both H.264/AVC and HEVC for 14 full HD 60 frames per second (fps) gaming videos with different spatial and temporal complexities, using constant bitrate (CBR) rate control. Although, these studies provide us with some insights into the evaluation of LCEVC in comparison with the base codecs H.264/AVC and HEVC, they do not evaluate the impact of network factors on the real-time transmission of LCEVC in comparison with the base codecs H.264/AVC and HEVC.

Therefore in this paper, we provide a comprehensive evaluation of LCEVC vs H.264/AVC and HEVC in terms of video quality, compression efficiency and the relative impact of packet loss when it’s streamed over an error-prone network.

3 Evaluation methodology

To evaluate the performance of LCEVC compared to H.264/AVC and HEVC base codecs, two sets of experiments were conducted. The first set concentrated on compression efficiency and the impact of compression on visual quality, while the second set performed a live streaming experiment to judge the effect of packet scheduling when packet losses occurred for each video compression standard. In both cases we used the objective metrics: VMAF, SSIM and PSNR, to measure video quality.

3.1 Source video content

In both sets of experiments, the authors used seven open-source uncompressed 1080 x 1920 4:2:0 video test sequences of varying characteristics. Their specifications are outlined in Table 1 and the thumbnails for each sequence shown in Fig. 1. To confirm the sequences exhibited varying amounts of motion and detail, the Spatial Information (SI) and Temporal Information (TI) was calculated according to ITU-T P.910 [31] for each test sequence as shown in Fig. 2.

This classifier establishes a spatial Information (SI) from a video sequence by taking the luminance magnitude of a Sobel fillter’s output and forms a temporal index (TI), based upon successive frame differences using the luminance values. The advantage of this method of classification is that it can be performed in real-time, possibly using the software tool mentioned in [32]. TI can range from 0 to 130, with 0 meaning very limited motion and SI can vary from 0 to 130, with 0 implying very little spatial detail.

3.2 Video compression comparison (experiment 1)

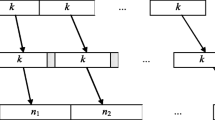

The test sequences from Section 3.1 were compressed using the software implementations of the video standards H.264/AVC, HEVC, LCEVC/H.264 and LCEVC/HEVC. For the H264/AVC and HEVC video compression, the implementations by the open-source and popular FFMPEG [33] (x264 and x265), while the LCEVC implementation was an extension of the FFMPEG libraries to allow for its enhancements to be applied to the chosen base codecs H.264/AVC and HEVC as shown in Fig. 3.

V-Nova LCEVC implementation [6]

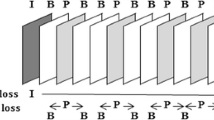

The coding parameters are summarized in Table 2. The GOP structure has one intra-coded I-frame and the remainder frames being predictely coded P-frames, while the GOP length is 120 which is in line with GoP length used in previous studies. Context Adaptive Variable-Length Coding (CAVLC) entropy coding was adopted to speed-up tasting, and the CRF rate control which has been shown to provided optimal compression over CBR and CQP rate control mechanisms [10, 34]. According to the official FFmpeg encoding guide a “subjective sane range” of CRF values is 17-28, and has been used in prior studies. However, other studies extended their testing to include the value of 35. Hence, for this experiment CRF values of 19, 27 and 35 similar to what were used in [35, 36]. The resulting datarates can be found in Appendix A.

3.3 Impact of packet loss(experiment 2)

To understand the impact of packet loss on the video sequences in Fig. 1,each encoded video sequence based on the parameters in Table 2 was streamed using the modified FFMPEG build discussed in Section 3.3 over an isolated network. Two computers (PC) were connected to a router using Cat-5e cables, which meet the standards defined in ANSI/TIA-568-C.2 [37]. For this experiment, the Linux PC behaved as the transmitter, sending video streams, via a router, to the Gigabyte PC, which acted as the receiver. The topology of the experimental set-up is illustrated in Fig. 4 and the logical setup in Fig. 5.

To evaluate the effects of packet loss on video quality, UDP was used in order to provide best effort conditions for the video.The encoded videos were first encapsulated in an MPEG-transmission stream (MPEG2-TS) packet and this was further encapsulated in UDP packet. Therefore the protocol stack used was MPEG-2 TS/UDP/IP and the maximum transmission unit (MTU) was 1500B. Consequently, Table 3 reports the transmission parameters for 4kUHD video transmission over a Wide Area Network (WAN). The open source WAN emulator, network emulation (NetEM) software [38] using bridging mode was attached to the outbound link of the sender, which added MPEG-2 TS/UDP/IP headers to the bit-stream payload. Packet loss was applied to the outgoing stream on the transmitter PC as a percentage with a defined correlation of 10% used for each packet loss value similar to the authors in [29]. For example, if packet loss was set to 1% and the correlation was defined as 10%, then this caused 1% of packets to be lost, with each successive probability depending by a tenth on the last one, as shown in (1). it should be noted that there are normally seven MPEG-2 TS packets per one UDP transport layer packet dropped by the WAN emulator and no error concealment strategy was used during these experiments.

4 Results and discussion

4.1 Findings from experiment one

4.1.1 Average resultant bitrate

As outlined in Section 3, each video test sequence was encoded using three different CRF levels with the the resultant bitrates for each sequence given in Appendix A. The average resultant bitrate for all sequences at each CRF level was calculated per codec can be seen in Table 4, Firstly, from the results in Table 5.1, LCEVC/H.264 reduces the average encoding bitrate of H.264 by 18.7% at a CRF level of 19. However, for CRF levels of 27 and 35, LCEVC/H.264 requires a 2.85% and 12.4% higher bitrate than H.264 respectively. Therefore, suggesting that LCEVC/H.264 outperforms H.264, with respect to the encoding bitrate, when only a CRF level of 19 is implemented, with its performance subsequently degrading as the CRF level increases.

Secondly, the results also show that LCEVC/HEVC produces lower resultant bitrates for all three CRF levels than its base codec, with the overall bitrate 15.8% lower than HEVC at a CRF level of 19. However, this bitrate reduction decreases as the CRF level rises. Hence, suggesting that LCEVC/HEVC outperforms HEVC, illustrating greater efficiency, in terms of the encoding bitrate at all CRF levels, although this outperformance is most significant at lower CRF levels.

4.1.2 Video Quality

Firstly, when comparing H.264 with LCEVC/H.264 (Table 5),and HEVC with LCEVC/HEVC (Table 6), it was observed that for all CRF levels LCEVC outperformed its base codecs in terms of VMAF, producing higher scores for both high and low SI/TI sequences, supporting the VMAF results in [30] and [5], even though they used CBR and CQP respectively for encoding. However, for SSIM and PSNR, both base codecs appear to outperform LCEVC for the majority of CRF levels for both high and low SI/TI sequences, supporting some but not all results in [5] regarding HEVC and LCEVC/HEVC. These differences in results for PSNR compared to VMAF can potentially be explained by the fact that current LCEVC encoder implementations available do not include any Mean Square Error (MSE)-specific rate-distortion optimisations for PSNR, resulting in LCEVC having a different behavioural pattern with PSNR compared to VMAF. Hence, affirming the suggestion by [5] that PSNR is not a reliable metric for assessing the performance of LCEVC.

From Tables 5 and 6, one can also compare the correlations in visual quality to SI and TI for LCEVC and its respective base codecs. All four codecs produce higher VMAF scores for content with a high TI for CRF levels of 19 and 27 except for H.264 which only produced higher scores for a CRF level of 19. However, when regarding SSIM and PSNR, all four codecs provided higher objective visual quality when the content exhibits a lower TI across the majority of CRF levels. Furthermore, all four codecs produce higher VMAF scores for all three CRF levels for higher SI content. Although, the opposite is seen for PSNR and SSIM. while these results regarding VMAF do go against the results from [30] and similar prior studies in [9], this is due to CRF encoding being used, resulting in sequences with higher spatial and temporal complexities being encoded at higher bitrates. Therefore, due to these higher bitrates, high SI and TI sequences will exhibit better video quality.

4.2 Findings from experiment two

4.2.1 Impact of Packet Loss

Figures 6, 7, 8, 9, 10 and 11 show the graphical representation of impact of packet loss on the video streams using the objective metrics (VMAF, SSIM and PSNR) for the individual sequnces. Overall, it was observed that, for all three metrics, once packet loss exceeds approximately 0.8-1%, there was a general reduction in the objective video quality seen across all four codecs, similar to the results in [17] for HEVC, [28] for H.264 and [27] for both codecs. However, for the latter two, the threshold was found to be slightly lower at 0.6%, although for [28], 30 fps content was used with no indications on how the content was encoded nor what protocol was used for streaming, whilst for [27], 4K video was under consideration with CBR encoding used. Furthermore, overall, the video quality decreases as the CRF level increases for all three metrics for the majority of packet loss values.

4.2.2 Packet loss vs si and ti

From Figs. 6 - 11, an analysis on the correlation between the SI and TI of the content and the resultant objective video quality for LCEVC can be made when subject to packet loss. As shown in Fig. 2, sequence B has the lowest SI and TI, whilst sequences E and G have the highest SI and TI. Hence, these sequences were used to discuss any possible correlations.

It was observed that in some cases the video quality of both LCEVC/H.264 and LCEVC/HEVC is affected less as packet loss increases to 1.4% for content with a high SI. For example, for LCEVC/H.264 at a CRF level of 35, the VMAF and PSNR score is reduced by 1.895 and 0.762 points going from 0-1.4% packet loss for sequence E with a high SI compared to 8.312 and 7.162 points for sequence B with a low SI, respectively. Whilst for example, for LCEVC/HEVC, at a CRF level of 19, the VMAF and PSNR score is reduced by 36.91 and 13.794 points from 0-1.4% packet loss for sequence E compared to 54.485 and 22.599 points for sequence B, respectively. A similar trend is seen when regarding TI for both LCEVC/H.264 and LCEVC/HEVC. For example, at a CRF level of 19, the VMAF scores are reduced by 4.151 and 13.354 points for sequence G, with a high TI, compared to 11.871 and 54.485 points for sequence B, with a low TI, respectively. However, these correlations to SI and TI for both LCEVC codecs are not completely clear for all metrics and CRF levels.

Furthermore, these correlations go against the general trend found in [9] and [27] where content was encoded using CBR. The reason is CRF encoding resulted in the sequences with a high SI or TI having higher bitrates, resulting in the amount of coded information being distributed amongst more packets, each with a fixed size of 1316 bytes. For example, a 25 Mbps video stream will have more packets over time than a stream at a lower rate of 13 Mbps. However, the amount of coded information per packet is lower for the higher bitrate stream; the coded data in a single packet is split into two or more packets. Therefore, one packet lost for high bitrate videos will contain less information than a packet lost for a lower bitrate video. Hence, having a smaller impact on the video quality, supporting the findings from [12] regarding increasing bitrates. Therefore, these results illustrate the advantage of CRF encoding when streaming content with high spatial and motion complexities, despite the downside of a higher throughput.

5 Conclusion

This paper has presented a performance comparison of LCEVC enhancements with that of its base codecs H.264/AVC and HEVC for live streaming applications using best effort protocols.These results will be of interest to those considering LCEVC codec and expected quality in error-prone environments. We found that when using the CRF rate control, LCEVC appeared to outperform its base codecs in terms video quality, producing higher VMAF scores while reducing average encoding bitrate by of 18.7% and 15.8% over base codecs H.264 and HEVC respectively. However, When using the SSIM and PSNR metrics we found that LCEVC generally underperformed due the non availability of MSE-specific rate-distortion optimisations in current implementations. In terms of packet loss, we found that this had less of an impact on LCEVC/H.264, LCEVC/HEVC compared with its base codecs and overall video quality started to decrease once the packet loss rate exceeded 0.8-1% for all three CRF levels. Furthermore LCEVC was found to produce better video quality after encoding and greater protection against packet loss for content with higher spatial and temporal complexity due to CRF encoding being used. it can therefore be suggested that the LCEVC enhancement layer also provides error concealment.

While this paper provides promising results there are a few limitations. Although VMAF is considered to have high correlation to human perception, it currently cannot be used as a substitute for subjective assessments. Furthermore, only one protocol stack was used for video transmission so it is unclear how packet loss affects LCEVC when transported with other best effort protocols.

LCEVC is still a very new standard with a handful of studies. Therefore, future work could include extending this paper’s research to include subjective tests. Secondly, one could make a comparison on LCEVC’s performance when streamed over UDP and SRT; only UDP was considered in this paper.Also, error concealment was not considered in this investigation. Hence, one could investigate where error concealment would fit in best - whether at the base codec or within the enhancement layer of LCEVC, when impacted by packet loss. Finally, this experiment only considered the effect of packet loss. Therefore, this experiment could be expanded to investigate the effect latency and jitter, as well as multiple impairments combined, have on the video quality of LCEVC

Data Availibility Statement

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Wu D, Hou YT, Zhu W, Zhang YQ, Peha JM (2001) Streaming video over the internet: approaches and directions. IEEE Trans Circuits Syst Video Technol 11(3):282–300

Han L, Yan J, Cai Y (2018) An implementation of capture and playback for ip-encapsulated video in professional media production. In: International Forum on Digital TV and Wireless Multimedia Communications, pp. 346–355. Springer

Sodagar I (2011) The mpeg-dash standard for multimedia streaming over the internet. IEEE Multimedia 18(4):62–67

Bing B (2010) 3D and HD Broadband Video Networking. Artech House

Meardi G, Ferrara S, Ciccarelli L, Cobianchi G, Poularakis S, Maurer F, Battista S, Byagowi A (2020) Mpeg-5 part 2: Low complexity enhancement video coding (lcevc): Overview and performance evaluation. Applications of Digital Image Processing XLIII 11510:238–257

V-NOVA (2022) FFMPEG with LCEVC. https://docs.v-nova.com/v-nova/lcevc/reference-applications/ffmpeg Accessed 2022-03-18

Punchihewa A, Bailey D (2020) A review of emerging video codecs: Challenges and opportunities. In: 2020 35th International Conference on Image and Vision Computing New Zealand (IVCNZ), pp. 1–6. IEEE

Pinson MH, Wolf S, Gregory Cermak H (2010) Subjective quality of h. 264 vs. mpeg-2, with and without packet loss. broadcasting. IEEE Transactions on Issue Date 56(1)

Adeyemi-Ejeye AO, Alreshoodi M, Al-Jobouri L, Fleury M, Woods J (2017) Packet loss visibility across sd, hd, 3d, and uhd video streams. J Vis Commun Image Represent 45:95–106

Merritt L, Vanam R (2007) Improved rate control and motion estimation for h. 264 encoder. In: 2007 IEEE International Conference on Image Processing, vol. 5, p. 309. IEEE

Jain R (2004) Quality of experience. IEEE multimedia 11(1):96–95

Ma S, Zhang X, Jia C, Zhao Z, Wang S, Wang S (2019) Image and video compression with neural networks: A review. IEEE Trans Circuits Syst Video Technol 30(6):1683–1698

Li Z, Bampis C, Novak J, Aaron A, Swanson K, Moorthy A, Cock J (2018) Vmaf: The journey continues. Netflix Technology Blog 25

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

ITU-T Recommendation P (1999) Subjective video quality assessment methods for multimedia applications

Hx Rui, Cr Li, Sk Qiu (2006) Evaluation of packet loss impairment on streaming video. Journal of Zhejiang University-SCIENCE A 7(1):131–136

Nightingale J, Wang Q, Grecos C, Goma S (2013) Subjective evaluation of the effects of packet loss on hevc encoded video streams. In: 2013 IEEE Third International Conference on Consumer Electronics, Berlin (ICCE Berlin), pp. 358–359. IEEE

Cermak GW, Laboratories V (2009) Subjective video quality as a function of bit rate frame rate, packet loss, and codec. In: 2009 International Workshop on Quality of Multimedia Experience, pp. 41–46. IEEE

Boulos F, Parrein B, Le Callet P, Hands D (2009) Perceptual effects of packet loss on h. 264/avc encoded videos. In: Fourth International Workshop on Video Processing and Quality Metrics for Consumer Electronics VPQM–09

Reibman AR, Poole D (2007) Predicting packet-loss visibility using scene characteristics. In: Packet Video 2007, pp. 308–317. IEEE

Liang YJ, Apostolopoulos JG, Girod B (2003) Analysis of packet loss for compressed video: Does burst-length matter? In: 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2003. Proceedings.(ICASSP’03)., vol. 5, p. 684 (2003). IEEE

Kanumuri S, Cosman PC, Reibman AR, Vaishampayan VA (2006) Modeling packet-loss visibility in mpeg-2 video. IEEE Trans Multimedia 8(2):341–355

Kanumuri S, Subramanian SG, Cosman PC, Reibman AR (2006) Predicting h. 264 packet loss visibility using a generalized linear model. In: 2006 International conference on image processing, pp. 2245–2248. IEEE

Mohamed S, Rubino G (2002) A study of real-time packet video quality using random neural networks. IEEE Trans Circuits Syst Video Technol 12(12):1071–1083

Sullivan GJ, Ohm JR, Han WJ, Wiegand T (2012) Overview of the high efficiency video coding (hevc) standard. IEEE Trans Circuits Syst Video Technol 22(12):1649–1668

Rassool R (2017) Vmaf reproducibility: Validating a perceptual practical video quality metric. In: 2017 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), pp. 1–2. IEEE

Adeyemi-Ejeye AO, Alreshoodi M, Al-Jobouri L, Fleury M (2019) Impact of packet loss on 4k uhd video for portable devices. Multimedia Tools and Applications 78(22):31733–31755

Ahmad N, Wahab A, Schormans J (2020) Importance of cross-correlation of qos metrics in network emulators to evaluate qoe of video streaming applications. In: 2020 11th International Conference on Network of the Future (NoF), pp. 43–47. IEEE

Tommasi F, De Luca V, Melle C (2015) Packet losses and objective video quality metrics in h. 264 video streaming. J Vis Commun Image Represent 27:7–27

Barman N, Schmidt S, Zadtootaghaj S, Martini MG (2022) Evaluation of mpeg-5 part 2 (lcevc) for live gaming video streaming applications. In: Proceedings of the 1st Mile-High Video Conference, pp. 108–109

Installations T, Line L (2023) Itu-tp. 910. Subjective video quality assessment methods for multimedia applications, Recommendation ITU-T, 910

Robitza W (2022) SITI: Spatial Information / Temporal Information. https://pypi.org/project/siti/ Accessed 2022-03-20

Bellard, F., Bingham, B.: FFmpeg. https://ffmpeg.org/ Accessed 2021-11-20

Asan A, Mkwawa IH, Sun L, Robitza W, Begen AC (2018) Optimum encoding approaches on video resolution changes: A comparative study. In: 2018 25th IEEE International conference on image processing (ICIP), pp. 1003–1007. IEEE

Münzer B, Schoeffmann K, Böszörmenyi L (2013) Improving encoding efficiency of endoscopic videos by using circle detection based border overlays. In: 2013 IEEE International Conference on Multimedia and Expo-Workshops (ICMEW), pp. 1–4. IEEE

Khani M, Sivaraman V, Alizadeh M (2021) Efficient video compression via content-adaptive super-resolution. In: Proceedings of the IEEE/CVF international conference on computer vision, pp. 4521–4530

STANDDARD T (2009) Balanced Twisted-Pair Telecommunications Cabling and Components Standards. Telecommunications Industry Association. ANSI/TIA-568-C

Hemminger S et al (2005) Network emulation with netem. In: Linux Conf Au, vol. 5, p. 2005 (2005)

Acknowledgements

The authors would like to thank V-NOVA for providing the LCEVC software implementation and technical support. In particular we are grateful to Florian Maurer, Lorenzon Ciccarelli and Simone Ferrara for their insightful comments.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

The authors received research support from V-NOVA. V-NOVA provided the LCEVC software implementation and technical support.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Average bitrates of Video seqeuences

Appendix A Average bitrates of Video seqeuences

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Roberts, G., Adeyemi-Ejeye, A. Performance evaluation of mpeg-5 part 2 (lcevc): impact of packet loss. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-023-17931-0

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11042-023-17931-0