Abstract

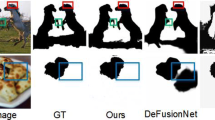

Defocus blur detection (DBD), which classifies each pixel of a single image as in-focus or defocused, is widely used in various fields. Although deep learning-based DBD methods outperform traditional methods that rely on hand-crafted features, they still fail to distinguish many fine details in complex images. To address this issue, this work proposes TCRL, a hybrid CNN-Transformer architecture based on complementary residual learning, which uses the global information captured by the Transformer and hierarchical complementary information from the network to optimize DBD. Specifically, to enhance global target detection, the backbone network adopts a CNN-Transformer architecture in which the Transformer drives the network to focus on the global context and thus achieve precise localization. To better detect fine details, each convolutional neural network layer is combined with layered complementary information from the network module. In contrast to current schemes that only output a binary mask, this layered feature-guided learning better exploits both low- and high-level information and drives the network to refocus on boundaries and on sparse, easily overlooked parts. Additionally, this work considers the features of both the in-focus and the defocused pixels within the image. In this complementary model, information ignored by one side may be learned by the other, which enhances both global target detection and local boundary refinement. Experimental results on three datasets validate the effectiveness and superiority of the developed method.
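The complementary idea described above can be illustrated with a small sketch. It is not the authors' TCRL implementation: the function names, the logit-averaging fusion rule, and the additive residual step are all assumptions chosen only to show how one binary mask yields two complementary targets, how a finer stage might correct a coarser map, and how evidence from an in-focus branch could count against a defocus prediction.

```python
import math


def sigmoid(x):
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def complementary_targets(defocus_mask):
    """Split one binary mask (1 = defocused) into two complementary targets.

    Hypothetical helper: returns (defocus_target, in_focus_target), which
    sum to 1 at every pixel.
    """
    defocus = [[float(v) for v in row] for row in defocus_mask]
    in_focus = [[1.0 - v for v in row] for row in defocus]
    return defocus, in_focus


def refine(coarse_logits, residual_logits):
    """Residual step (assumed additive): a finer stage corrects a coarser map."""
    return [[c + r for c, r in zip(c_row, r_row)]
            for c_row, r_row in zip(coarse_logits, residual_logits)]


def fuse_complementary(defocus_logits, focus_logits):
    """Fuse two branches into one defocus probability map.

    Assumed rule: average in logit space, with in-focus evidence counted
    against defocus, then apply the sigmoid.
    """
    return [[sigmoid(0.5 * (d - f)) for d, f in zip(d_row, f_row)]
            for d_row, f_row in zip(defocus_logits, focus_logits)]


# Toy 2x2 example with made-up values.
mask = [[1, 0], [0, 1]]
defocus_t, in_focus_t = complementary_targets(mask)
coarse = [[0.5, -0.5], [-1.0, 1.0]]
residual = [[1.0, -1.0], [0.0, 0.5]]
defocus_logits = refine(coarse, residual)
focus_logits = [[-1.5, 1.5], [1.0, -1.5]]
probabilities = fuse_complementary(defocus_logits, focus_logits)
```

When the two branches agree (the in-focus logits are the negation of the defocus logits), the fused map equals the sigmoid of the defocus logits; when they carry equal evidence for both classes, the fused probability is 0.5, which marks the pixel as uncertain.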

Data availability

The datasets used or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported by the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2022A1515110024) and the Fundamental Research Funds for the Central Universities (No. BLX202018).

Author information

Contributions

Xixuan Zhao contributed to the conception of the study and acquired financial support. Shuyao Chai performed the data analyses, wrote the manuscript, and prepared the figures and tables. Jiaming Zhang prepared the software section. Jiangming Kan contributed constructive discussions and acquired financial support. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chai, S., Zhao, X., Zhang, J. et al. Defocus blur detection based on transformer and complementary residual learning. Multimed Tools Appl 83, 53095–53118 (2024). https://doi.org/10.1007/s11042-023-17560-7

DOI: https://doi.org/10.1007/s11042-023-17560-7