Abstract

The proliferation of fake news on social media platforms poses significant challenges to individuals and society. As the tactics employed by purveyors of fake news continue to evolve, there is an urgent need for automatic fake news detection (FND) to mitigate its adverse social consequences. Machine learning (ML) and deep learning (DL) techniques have emerged as promising approaches for characterising and identifying fake news content. This paper presents an extensive review of previous studies aiming to understand and combat the dissemination of fake news. The review begins by exploring the definitions of fake news proposed in the literature and delves into related terms and psychological and scientific theories that shed light on why people believe and disseminate fake news. Subsequently, advanced ML and DL techniques for FND are discussed in detail, focusing on three main feature categories: content-based, context-based, and hybrid-based features. Additionally, the review summarises the characteristics of fake news, commonly used datasets, and the methodologies employed in existing studies. Furthermore, the review identifies the challenges current FND studies encounter and highlights areas that require further investigation in future research. By offering a comprehensive overview of the field, this survey aims to serve as a guide for researchers working on FND, providing valuable insights for developing effective FND mechanisms in the era of technological advancements.


1 Introduction

Traditionally, people consumed news and information mainly through newspapers and TV channels; however, with the advent of the Internet and its growing place in everyday life, these outlets have become less prominent [31]. Today, online social networks (OSNs) and lifestreaming platforms play a fundamental role alongside television as one of the major news sources: in 2016, 62% of people in the U.S. got news from social media, compared with 49% in 2012 [209]. Figure 1 illustrates the level of interest in the term ‘fake news’ over the last decade, as extracted from Google Trends [257].

Recently, the role of OSNs has grown significantly owing to their convenient access. They are no longer merely a window for communication between individuals; they have become an important tool for exchanging information and for influencing and shaping public opinion, since individuals can release data in all its forms on various OSNs. The other side of the coin is fake news dissemination, specifically on OSNs, which poses a great concern to individuals and society: the lack of control, supervision and automatic fact-checking leads to the generation of low-quality and fake content. As such, users are exposed to countless pieces of disinformation and misinformation on OSNs, including fake news, i.e., news stories with intentionally false information [12, 235]. Although OSNs were developed primarily to connect individuals, misinformation spreaders exploit the connectivity and global reach of such networks [134]. According to [60], fake news spreads six times faster than true news on OSNs, resulting in fear, panic, and financial loss to society [299]. It is not surprising to see such false information disseminated rapidly, as OSNs and the Internet in general give people some degree of anonymity that fake news spreaders can harness to achieve their intent. This, in turn, is bound to result in more severe consequences if not combated. For example, fake news has had a significant impact on real-world events: in one case, a piece of fake news from Reddit led to a real shooting [247].

By the end of the 2016 U.S. presidential election, for instance, over 1 million tweets were found to be related to the piece of fake news known as Pizzagate (Footnote 1). Furthermore, during this period, the top-20 fake news pieces were reported to have attracted more engagement than the top-20 most-discussed true stories (Footnote 2). Research on fake news velocity states that tweets including falsified information on Twitter reach people six times faster than trustworthy tweets [138]. This indicates how quickly fake news disseminates and how it can have adverse social effects. A matter of concern is the quick reactions, such as retweets, likes, and shares, that a tweet carrying a fake news story receives on Twitter without prior reflection, which aggravates the problem even more. According to [268], false news on Twitter, particularly political news, is usually retweeted by more users and spreads extremely rapidly. A further problem is that some popular sources of information that are considered genuine, such as Wikipedia, are also prone to fake news or false information [132]. According to a report in China, fake information constitutes more than one-third of trending events on microblogs [291]. Therefore, without news verification, fake news would spread rapidly through OSNs, resulting in real consequences [73]. Fake news is not a new phenomenon [119] (e.g., in 1835 the New York Sun published a series of articles, known as the Great Moon Hoax, that described the discovery of life on the moon [12]); however, the question of why this phenomenon has attracted more attention and emerged as a hot topic is especially relevant nowadays [296]. The primary cause is that fake news online, as opposed to traditional news media (e.g., newspapers and TV), can be created and published faster and more cheaply [235], which destroys the authenticity balance of the entire news ecosystem. The popularity and growth of OSNs also contribute to this rise in interest [181, 286, 296].

Figure 1: Fake news trends (2010-2022) [257]

OSNs have fostered false news, that is, intentionally false information transmitted to the public for various purposes, including political or financial manipulation [196]. Furthermore, substantial political and economic benefits can be earned from the intense activity around these online social media platforms, which often motivates malicious entities to create and spread false information [297]. Indeed, the ulterior motive of these (intentional) fake news creators is not necessarily “closely connected to the content of the claims they are manufacturing” [80, p. 107], where “the deception lies not in getting an audience to believe a false claim, but in getting them to believe it is worth sharing” [80, p. 108]. For example, during the U.S. presidential election, a dozen “well-known” teenagers in the Macedonian town of Veles became wealthy by creating fake news for millions of social media users; according to NBC, each of them “has earned at least $60,000 in the past six months - far outstripping their parents’ income and transforming his prospects in a town where the average annual wage is $4,800” [297]. This example shows that fake news is not necessarily created and published to manipulate others. The intent may be malicious, such as deliberately defaming others or instilling malicious goals and beliefs in people, since “purveyors of fake news deliberately engage in practices that they know, or can reasonably foresee, to lead to the likely formation of false beliefs on the part of their audience” [80, p. 107], or it may be purely financial, without malicious intent [80]. Moreover, as the valence effect theory indicates, individuals tend to overestimate the benefits of spreading fake news relative to its costs [117], which might be one reason for its alarmingly wide spread.
Therefore, the rise of falsified information (i.e., disinformation websites) is attributed to two main reasons: (i) the promotion of viral news articles generates significant advertising revenue, and (ii) false news providers usually aim to influence public opinion on particular topics [12]. Another major factor contributing to the spread of misinformation is the presence of malicious agents such as bots and trolls [131, 229] (see Section 2.4.1 for more information). The widespread dissemination of fake news poses severe threats across society and is challenging to detect [13, 17]. It has damaged many aspects of society, including the economy, politics, health and peace. Regarding the economy, fake news has affected financial markets (i.e., stock markets), causing financial loss. One example is the false bankruptcy story about UAL’s parent company in 2008, which led to a 76% drop in its stock price [36]. In another case, a fake tweet released from an Associated Press account claimed that President Barack Obama had been injured in an explosion; stocks dropped immediately, with the Dow plunging over 140 points and an estimated loss of 136.5 billion dollars in S&P 500 market capitalisation [158].

Extensive research has demonstrated that fake political news exhibits greater speed, reach, and popularity than fake news in domains such as terrorism, business, science, and entertainment [269]. This phenomenon was notably exemplified during the 2016 U.S. presidential election, when an overwhelming amount of fake news favouring either Hillary Clinton or Donald Trump proliferated on Facebook, amassing over 37 million shares in the three months before the election. Notably, the top twenty most-discussed fake election news stories on OSNs, particularly Facebook, received 8,711,000 comments, reactions, and shares, surpassing the combined engagement of the top twenty most-discussed election stories posted by 19 major news websites [240]. Furthermore, a fake news story claiming that Pope Francis had endorsed Donald Trump garnered millions of views, shares, and likes, perpetuating its deceptive narrative [59].

The deleterious impact of fake news dissemination extends beyond politics, encompassing health-related consequences of grave magnitude. Tragic incidents have occurred where online advertisements for experimental cancer treatments, mistakenly perceived as reliable medical information, have resulted in the untimely death of cancer patients [174]. Likewise, false or misleading claims regarding the COVID-19 virus have threatened public health, as individuals are swayed to take risks by consuming harmful substances or disregarding social distancing guidelines. In recent years, the COVID-19 pandemic has witnessed the proliferation of fake news that presents attention-grabbing content, deceiving individuals into believing its purported usefulness. Shockingly, within two months, the International Fact-Checking Network (IFCN) uncovered over 3,500 false claims related to COVID-19 [201]. For instance, disseminating fake news suggesting unproven remedies or attributing the virus to 5G towers has resulted in physical harm [176]. Tragically, it is estimated that at least 800 individuals worldwide may have lost their lives during the first three months of 2020 due to coronavirus-related false claims [175]. Consequently, disseminating fake news poses a significant threat to both individuals and society, with OSNs amplifying this peril. The erosion of social confidence, credibility, and integrity within the news system, coupled with political polarization, has contributed to the rampant prevalence of fake news on OSNs [298]. The inherent structure of these networks facilitates the rapid propagation of fake news, rendering OSNs increasingly popular platforms for its dissemination. In fact, research by Gartner [34] predicts that by 2022, individuals in mature economies will consume more false information than true information, underscoring the urgency for automated FND methods to combat the exponential rise in false news. 
Researchers specializing in natural language processing (NLP) have developed a diverse array of ML and DL algorithms for detecting fake news (for further details, refer to Section 3). In this survey, we extensively review previous studies to comprehensively understand fake news dissemination and to devise strategies to mitigate its impact.

The primary contributions of this paper are as follows:

- In-depth review and exploration of fake news definitions, related terms, and their manifestation in both traditional and modern online media. By delving into the underlying reasons why individuals tend to believe and disseminate fake news, we aim to enhance our comprehension of this phenomenon.

- Comprehensive survey encompassing a wide range of feature-based methods, diverse ML and DL techniques, and state-of-the-art transformer-based models employed in FND. This survey offers valuable insights into the effectiveness of these approaches and equips researchers with the necessary knowledge to navigate the evolving landscape of FND.

- Discussion of the challenges that must be addressed to effectively curb the dissemination of fake news. By highlighting these challenges, we seek to inspire further research and innovation in the field, fostering a community of dedicated scholars committed to mitigating the adverse effects of fake news dissemination.

This paper is structured as follows. Table 9 lists all the acronyms used in this paper. Section 2 reviews some preliminaries on the topic. Section 3 presents a comprehensive review of FND approaches. In Section 4, we review the commonly used methods, and in Section 5, we shed light on the current challenges. The limitations and the recommended directions for future research are presented in Sections 6 and 7, respectively. Finally, Section 8 concludes the paper. Figure 2 shows the outline of this paper.

2 Preliminaries

2.1 Fake news definition

The fake news epidemic, a growing issue affecting the world, has existed since news started to spread widely after the invention of the printing press in 1439 [157]. Research efforts are dedicated primarily to identifying and detecting fake news in users’ social media content. However, despite these efforts, the term fake news (which covers a variety of terms in the literature, including misinformation, disinformation, rumour, hoax, etc.) is still vague. The leading cause of such ambiguity might be the existence of related terms (discussed in detail in Section 2.2). As Axel Gelfert [80] stated, the plethora of (tentative) definitions that have been proposed have led some to worry that the term fake news, as a result of its heterogeneity, may become “a catch-all term with multiple definitions” [145, p. 1]. Facebook produced a whitepaper in 2017 that discussed potential threats to online communication and its responsibility as one of the most popular OSNs today [276]. When exploring the growing issue of utilising the vague term fake news, the authors stated that “the overuse and misuse of the term ‘fake news’ can be problematic because, without common definitions, we cannot understand or fully address these issues” [276, p. 4]. Fake news typically mimics trustworthy news in order to gain credibility; as Axel Gelfert [80] puts it, “fakes derive their value entirely from the originals they successfully mimic, specifically from the scarcity of the latter”. We acknowledge that the definition of fake news is a highly debated topic. Before listing the definitions of the term proposed in the literature (in their context), let us remind the reader that there is an explicit definition of fake news as “the online publication of intentionally or knowingly false statements of fact” [125, p. 6], since “it is widely circulated online” [19, p. 1], which, indeed, justifies “the recognition that the medium of the internet (and social media, in particular) has been especially conducive to the creation and proliferation of fake news” [80, p. 96]. Hence, why has fake news become such a powerful force in the online world? Regina Rini stated:

“A fake news story is one that purports to describe events in the real world, typically by mimicking the conventions of traditional media reportage, yet is known by its creators to be significantly false and is transmitted with the two goals of being widely re-transmitted and of deceiving at least some of its audience” [212, p. E45].

Similarly, Darren Lilleker [145, p. 2], a professor in political communication, argues that “fake news is the deliberate spread of misinformation, be it via traditional news media or through social media”. Roger Plothow argues, “Fake news should be defined as a story invented entirely from thin air to entertain or mislead on purpose” [198, p. A5], which is then echoed by economists Hunt Allcott and Matthew Gentzkow as “news stories that have no factual basis but are presented as news” [12, p. 5] (Footnote 3).

A definition by [12], later adopted by most existing studies, including [48, 125, 170, 200], describes fake news as a “news article that is intentionally and verifiably false and could mislead readers”. This leaves out rumours, conspiracy theories, unintentional reporting mistakes, and reports that are misleading but not necessarily false, while including intentionally fabricated pieces and satire sites [12, 125]. However, this conceptualisation excludes mainstream media misreporting from scrutiny [167]. Considering authenticity and intention as two key factors, a 2017 survey paper [235] introduces a similar definition of fake news as a news article that is intentionally and verifiably false. Based on those two key factors, fake news contains information that is verified to be false and is created to intentionally mislead readers. The term can also be defined as “a news article or message published and propagated through media, carrying false information regardless of the means and motives behind it” [230], which, according to [296], overlaps with false news, misinformation [129], disinformation [128], satire news [218], or even improper stories [83]. Defining fake news simply as false or inaccurate news is not fruitful, since such a definition would count occasional reporting errors as fake news. Axel Gelfert argued that “being likely to mislead its target audience by bringing about false beliefs in them - are not yet sufficient to demarcate fake news from, say, merely accidentally false reports” [80, p. 105], indicating that such merely accidentally false reports should not be considered fake news. This is because these “reports do mislead their audiences by instilling false beliefs in them, but they do so as the result of an unforeseen defect in the usually reliable process of news production” [80, p. 105], while “fake news, by contrast, is misleading its target audience in a non-accidental way” [80, p. 105]; and even if a putative report is misleading in a non-accidental way, it must also be deliberate in order to count as fake news [80]. Vian Bakir and Andrew McStay proposed to define fake news “as either wholly false or containing deliberately misleading elements incorporated within its content or context” [19, p. 1].

A widely adopted definition belongs to Cohen et al. [46], who define fake news as everything ranging from malignant news to political propaganda. According to another definition by Lazer et al. [140], fake news is “fabricated information that mimics news media content in form but not in organizational process or intent”. Furthermore, the term “fake news” is defined by the Collins English dictionary [58] as “false, often sensational, information disseminated under the guise of news reporting”. A definition that captures most of the distinctive features of fake news (Footnote 4) is proposed by Axel Gelfert: “(FN) Fake news is the deliberate presentation of (typically) false or misleading claims as news, where the claims are misleading by design” [80, p. 108]. Thus far, fake news refers to any kind of content whose main purpose is to deliberately deceive and mislead readers (by instilling false beliefs in them). Nevertheless, the definition, perception and conceptualization of the term fake news have recently been a point of discussion [50, 235].

2.2 Related terms

Journalists and others have urged us to “stop calling everything ‘fake news’” [182]. Thus, it becomes necessary to introduce related concepts and definitions to differentiate fake news from related terms such as misinformation, rumours, spam, etc. Several related terms reported in the literature often co-occur or overlap with the term fake news, namely, false news [268], deceptive news [12, 139, 235], disinformation [128], satire news [119], misinformation [129, 275], clickbait [42], rumour [300] and others. A 2020 survey [296] reported that these terms could be differentiated based on three characteristics: (i) authenticity, which emphasizes the falsity of the information; (ii) intention, which emphasizes the intention to mislead readers; and (iii) whether the information is news. Propaganda, conspiracy theories, hoaxes, biased or one-sided stories, clickbait, and satire news are other types of potentially false information found on OSNs that contribute to information pollution [162].

However, whether satirical publications should be covered by the definition of fake news has sparked controversy among researchers. Some scholars argue that satire should be excluded since it is “unlikely to be misconstrued as factual” and is not necessarily meant to inform audiences [12, p. 214], while others argue that it should be included since it could be misconstrued as telling the truth, even though it is legally protected speech [125]. For instance, in 2017 a satirical site run by hoaxer Christopher Blair issued an apology for making a story “too real”, since many readers were unable to detect its satirical nature [75, 167]. According to [289], false information can be spread by bots, activist or political organizations, governments, journalists, criminal/terrorist organizations, conspiracy theorists, hidden paid posters, state-sponsored trolls, and individuals who benefit from false information. These actors have many possible motivations: to manipulate public opinion, to create disorder and confusion, to hurt or discredit, to obtain financial gain by increasing site views, to promote ideological biases, or even to entertain [231]. Several topics related to FND have been studied in the existing literature, including misinformation [109, 203, 290], rumour detection [152, 224], and spammer detection [105, 142, 159]. Following previous research [279], we adopt a series of key terms (i.e., the concept of misinformation and a list of subconcepts) related to fake news, defined as follows.
Misinformation is “defined as false, mistaken, or misleading information” that is unintentionally spread due to honest reporting mistakes or incorrect interpretations [66, 98], while disinformation can be understood as “the distribution, assertion, or dissemination of false, mistaken, or misleading information in an intentional, deliberate, or purposeful effort to mislead, deceive, or confuse” [70, p. 231] or to promote a biased agenda [265]. The differences between misinformation and disinformation have been identified conceptually in [246]. While both terms refer to false (incorrect) information, they differ in intention: misinformation is spread without the intent to deceive, whereas disinformation is spread with the intent to deceive [67, 98, 133]. Depending on the intent of the source, rumours can fall into either of these two types (misinformation or disinformation), although rumours are not necessarily false and may turn out to be true [300]. A rumour is an unverified or doubtful story, circulating from one person to another, that may or may not be true. The term has recently been used in ways that overlap with fake news and other disinformation terms. Indeed, media scholars and some social epistemologists have long been concerned with demarcating such phenomena as gossip, rumour, hoaxes, and urban legends [79].

Gossip “possesses relevance only for a specific group” and “is disseminated in a highly selective manner within a fixed social network”, while rumours are “unauthorized messages that are always of universal interest and accordingly are disseminated diffusely” [27, p. 70]. Hoaxes, on the other hand, are “deliberately fabricated falsehoods that masquerade as the truth” and, unlike fake news, “serve quite different purposes and typically intended to be found out eventually” [80]. Spam is defined as unwanted messages containing irrelevant or inappropriate information sent to a large number of recipients. We remind the reader that the terms fake news and rumour, specifically, are often used interchangeably in the literature. Readers are referred to [163] for the difference between the two: there, fake news is defined as false information spread through the Internet or news outlets to intentionally gain political or financial benefits, and a rumour is defined as an unverified piece of information that can be true or false; when this information is false, it can be considered fake news. The conceptual differences and similarities between these terms and many other terms associated with “fake news” have been discussed in previous research; we refer interested readers to [167] for more information.
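As a rough illustration of how the three characteristics listed at the start of this subsection (authenticity, intention, and whether the information is news) can separate the terms defined above, the following Python sketch may help. The `Item` class and the `label` function are hypothetical names introduced here for illustration only, and the rules are a deliberate simplification of the definitions quoted in this section rather than a taxonomy taken from [296] or any other cited work. Contested cases such as satire are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Item:
    """One piece of information, described by the three characteristics."""
    is_false: Optional[bool]           # authenticity; None means unverified
    intent_to_mislead: Optional[bool]  # intention; None means unknown
    is_news: bool                      # is it presented as news?


def label(item: Item) -> str:
    """Map an item to one of the terms defined above (simplified rules)."""
    if item.is_false is None:
        # Unverified information: may turn out to be true or false.
        return "rumour"
    if not item.is_false:
        return "true information"
    if item.intent_to_mislead is None:
        return "false information (intent unknown)"
    if not item.intent_to_mislead:
        # False, but spread without the intent to deceive.
        return "misinformation"
    # False and intentional. Assumption: the narrow definition of fake news
    # additionally requires that the item is presented as news.
    return "fake news" if item.is_news else "disinformation"


if __name__ == "__main__":
    print(label(Item(is_false=True, intent_to_mislead=True, is_news=True)))
    print(label(Item(is_false=True, intent_to_mislead=False, is_news=True)))
    print(label(Item(is_false=None, intent_to_mislead=None, is_news=False)))
```

Under these assumptions, the three calls print "fake news", "misinformation", and "rumour", respectively. Treating fake news as the news-formatted subset of disinformation follows the narrow definitions based on authenticity and intention; the broader definitions discussed in Section 2.1 would need different rules.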

2.3 Fake news on traditional news media

Fake news, as a growing issue affecting the world, has long existed and has typically been disseminated via the traditional media ecology, such as newspapers and television. Nevertheless, compared with traditional newspapers, fake news is far more prevalent on OSNs. As Alvin Goldman stated on the advantages of traditional newspapers over online blogs:

“Newspapers employ fact checkers to vet a reporter’s article before it is published. They often require more than a single source before publishing an article and limit reporters’ reliance on anonymous sources. These practices seem likely to raise the veristic quality of the reports newspapers publish and hence the veristic quality of their readers’ resultant beliefs” [87, p. 117].

Fake news is spreading quickly owing to the rise and development of information environments such as OSNs: we no longer rely solely on traditional news environments, in which people could not easily express their opinions or share falsified information with others. Today, one does not need to be a journalist or work for a publication to create and circulate content online [167]; individuals can freely participate, share and interact with others on OSNs, which exacerbates the problem of fake news dissemination and its devastating effects on society. Furthermore, studies show that such sources may even be preferred over traditional professional sources [248]. This is particularly problematic given that individuals find information that matches their prior beliefs more credible and reliable, and because credible information appears together with personal opinions, creating an environment that aggravates misinformation [30]. This subsection discusses several psychological and social science theories describing why people believe fake news, why they participate in spreading it, and the negative influence of fake news on individuals and society.

2.3.1 Psychological theories

In reality, fake news has the power to influence people, particularly those we refer to as vulnerable users: users who become involved in fake news dissemination without recognizing the falsehood. By exchanging uninformed knowledge over networks, vulnerable users are considered major contributors to its dissemination. On digital platforms, different users can react differently to a specific piece of (fake) information: some may believe and repost it blindly, based on their preexisting beliefs or because it came from a source they consider credible, while others may search external sources for evidence in order to verify or dismiss it. Two major psychological and cognitive factors explain why people are prone to fake news and cannot, by nature, readily discriminate between fake and real news. (i) Naive Realism: individuals tend to believe that their perceptions of reality are the only accurate views, while others who disagree are regarded as uninformed, irrational, or biased [213]; (ii) Confirmation Bias: people tend to trust and prefer to receive information that confirms their existing views or beliefs [177]. Indeed, “citizens will rely on their beliefs when they are unable to believe alternative accounts” [145, p. 1]. According to Pennycook and Rand [192], individuals fail to think analytically when encountering misinformation, and thus they easily fall for it. This is especially true of information that agrees with their prior knowledge and beliefs [30, 210]. Owing to such cognitive biases, vulnerable users often perceive fake news as real. A matter of concern is that once such a misperception is formed, it is very challenging to change.
Psychology studies show that presenting true information is not only unhelpful in correcting false information (e.g., fake news) but may sometimes even increase misperceptions [178]. Closely related to confirmation bias are (iii) Selective Exposure: users tend to prefer information that confirms their preexisting attitudes; as the art historian Mark Jones stated, with some hyperbole, “Each society, each generation, fakes the thing it covets most” [118, p. 13]; and (iv) Desirability Bias: users are more likely to accept information that pleases them [297]. In summary, fake news has evident power to sway individuals’ beliefs and behaviours. Vulnerable users unknowingly spread fake news due to cognitive biases like naive realism and confirmation bias. These biases hinder discernment between real and fake news, with confirmation bias reinforcing preexisting beliefs. Additionally, encountering misinformation often curbs analytical thinking, amplifying the challenge of correcting false beliefs.

2.3.2 Social theories

Under this heading, we discuss social science theories that explain people’s tendency to spread fake news. For example, Prospect Theory describes decision-making as a process through which people make choices to promote relative gains or diminish relative losses compared to their current state [121, 259]. According to Social Identity Theory [252, 253] and Normative Influence Theory [15, 122], social acceptance and affirmation are essential to a person’s identity and self-esteem. Consequently, people tend to choose “socially safe” options; that is, when a social group of users consumes fake news, its members are likely to disseminate that news on the assumption that doing so increases social gain. Some of these theories are categorised in Table 1; for detailed descriptions, readers are referred to [297].

2.4 Fake news on OSNs

Under this heading, we discuss some key features of the spread of fake news on OSNs. Unlike in the traditional media ecology (e.g., television), fake news spreads very quickly on OSNs, and here we explain the characteristics that cause this phenomenon.

2.4.1 Malicious accounts on OSNs

The use of social media has led to an increase in the creation of fake accounts, which drives the daily spread of fake news for specific purposes. Malicious accounts are not necessarily managed by real humans; they can also be bots. Research has shown that fake news pieces are likely to be created and spread by non-human accounts, such as social bots or cyborgs [228, 235], or by trolls. (i) A social bot is a social media account managed by a computer algorithm to automatically produce content and interact with humans (or other bot users) on OSNs [69]. Driven by benefits, social bots can distort a large amount of information on OSNs and are often used with the intention of spreading falsified information. For example, it was found that a considerable number of social bots distorted online discussions of the 2016 U.S. presidential election [28]. In the week before election day, a massive number of social bot accounts on Twitter (i.e., roughly 19 million) published tweets supporting either Clinton or Trump [108]. Moreover, other work has discussed how social bots coordinated misinformation campaigns during the 2017 French presidential election [68]. As Howard et al. [104, p. 1] clarify, “both fake news websites and political bots are crucial tools in digital propaganda attacks - they aim to influence conversations, demobilise opposition and generate false support”. A recent study, for instance, showed that social bots act as a catalyst for fake news spreading on social media platforms, where they amplify the diffusion of content coming from low-credibility sources, suggesting that “curbing social bots may be an effective strategy for mitigating the spread of low credibility content” [229, p. 5-6]. Another group of users likely to spread fake news are so-called (ii) trolls: real human users who publish inflammatory messages designed to provoke emotional responses on social media platforms or other newsgroups in order to manipulate the public.
Evidence, for instance, indicates that 1,000 paid Russian trolls were disseminating fake news on Hillary Clinton [106], suggesting that these malicious users are often paid to spread false information. The trolling effect sparks people’s inner negative emotions (e.g., anger and fear), leading to mistrust and irrational behaviour [235]. (iii) Cyborg account is another type of malicious accounts. Cyborg users are human users rather than bots who often utilise automation to spread fake news. Cyborg’s account usually is registered by a human as camouflage and set automated programs to perform activities on social networks [180].

These malicious accounts promote fake news dissemination on OSNs; thus, precautionary measures are required to mitigate the effects of such accounts. Twitter, for example, deleted up to 6 per cent of all its registered suspicious accounts [107]. As social media emerges as the modern battleground for information warfare, the intricate interplay between human and non-human agents comes into focus. This subsection delves into the diverse range of actors responsible for propagating fake news, including social bots, trolls, and cyborg accounts. This exploration illuminates the multifaceted nature of fake news dissemination by scrutinising their motivations and tactics.

2.4.2 Echo chamber effect

OSNs are among the most powerful sources of information through which users can communicate and share their opinions. However, a disruptive new phenomenon in the news ecosystem has arisen from OSNs: the so-called echo chambers [1].

Studies have revealed that users on Facebook are more likely to form polarised groups, i.e., echo chambers, and to choose information that fits their belief system [53]. Users on Facebook tend to follow like-minded users and therefore consume news that supports their preferred existing narratives [207], leading to an echo chamber effect. The echo chamber effect eases the dissemination of fake news owing to two psychological factors [189]. The first factor is (i) Social Credibility: users frequently believe that a source is reliable if others believe it to be reliable, even when there is not enough evidence to determine whether the source is telling the truth. The second factor is (ii) the Frequency Heuristic: users naturally prefer information they hear frequently, regardless of its truthfulness. In an echo chamber, consumers tend to share the same information, and research has shown that increased exposure to an idea is sufficient to generate a positive opinion of it [287, 288]. With the flood of information on OSNs, the echo chamber effect amplifies and reinforces biased information [110]. As a result, the echo chamber effect forms segmented and homogeneous communities with a relatively limited information ecology, which, as studies have stated, becomes the major driver of information dissemination and further promotes polarisation [52].

To some extent, all the factors mentioned in the previous subsections are related to the echo chamber. In turn, this leads to the emergence of homogeneous groups in which individuals share and discuss similar ideas. Groups such as these usually have polarised views since they are insulated from opposing perspectives [185, 249, 250]. This type of close-knit community is responsible for the major dissemination of misinformation [52]. Indeed, several possible interventions for preventing the spread of falsified information on OSNs have been proposed, ranging from (1) curtailing the most active (and presumably bot) users [229] to (2) harnessing the flagging activities of users in collaboration with fact-checking groups. In [264], the second intervention strategy is proposed as the first viable mitigation tool to reduce misinformation dissemination by utilising users’ Facebook reporting activities. As a result, many popular organisations are now tackling the dissemination of fake news. In addition, in certain countries, Facebook works with third-party fact-checking organisations to review, rate, and identify information’s accuracy [38]. On the other hand, Twitter introduced new labels and warning messages as an initiative in May 2020 to curtail the misinformation around COVID-19 [214] and to notify people about the falsified tweets, facilitating the process of fact-checking such tweets in order to make informed decisions. For instance, in January 2021, Twitter launched BirdWatch [47], a community-driven strategy enabling users to identify tweets they perceive to be misleading. In summary, individuals are increasingly drawn to communities that share similar viewpoints, resulting in isolated information environments that strengthen their pre-existing convictions. These divided echo chambers enable the effortless spread of false information, accentuated by psychological aspects like social credibility and the frequency heuristic. 
Researchers and society at large need to recognise the potential consequences of these echo chambers and work towards fostering more open and diverse information ecosystems to mitigate the perpetuation of biased narratives.

3 Existing FND approaches

FND is a critical task in the field of information processing, aiming to distinguish between true and false information circulating in various media sources, particularly OSNs. It involves the development of computational methods and techniques to automatically identify and classify news articles, headlines, or social media posts that contain deceptive, misleading, or fabricated information. The proliferation of fake news has raised concerns about its detrimental impact on individuals, societies, and democratic processes, as it can influence public opinion, incite polarization, and even contribute to real-world consequences. FND involves the application of various approaches, including NLP, ML, and DL, to analyze textual content, contextual information, source credibility, and other features to discern the veracity of news items.

Trustworthiness and veracity analytics of online statements has recently become a hot research topic [215]. This includes predicting the credibility of information shared on social media [166], stance classification [301] and contradiction detection [141]. Previous related studies rely heavily on textual content features to detect news veracity. Under this heading, we summarise classic and recent work on FND. Given how fast fake news is disseminated through OSNs and other websites, it can lead to real consequences if it is not given enough attention. It is difficult for a human being to distinguish fake from real news. In one study, by a rough comparison scale, human judges achieved a success rate of only 50-63% in identifying fake news [217]. Another study found that respondents found it “‘somewhat’ or ‘very’ accurate 75% of the time” when shown a fake news article, and another discovered that 80% of high school students had a hard time determining whether an article was fake [61, 63]. Human efforts to combat the spread of false information have been intensified. Two of the most common examples of fact-checking websites developed to reduce the effect of growing misinformation are Snopes (Footnote 5) and PolitiFact (Footnote 6). With more and more user-generated content (UGC) on OSNs, fact-checking websites and tools are vital for validating the integrity of information [183] and reducing the effect of falsified information. These websites have been developed to verify the truthfulness of news, where annotations must be made manually by journalists and other experts who examine an article’s content to determine its authenticity. These websites perform a fact-checking task (i.e., the assessment of the truthfulness of a news story or claim [262]) to verify the veracity of information by comparing it with one or more reliable sources [171]. One of the shortcomings of such sites is that they require extensive expert analysis, which is labour-intensive and time-consuming and results in a late response. Furthermore, due to the volume of newly generated information, particularly on OSNs, these websites do not scale well [286]. Research by Gartner [34] predicts that “By 2022, most people in mature economies will consume more false information than true information”. Considering how much manual detection costs in both time and human effort, the value of automating the FND process becomes clear. It is not an easy task, given that the proposed models need to accurately understand the natural language nuances of fake and real content. Table 2 presents some of these manual fact-checking websites and tools designed for information veracity detection: PolitiFact (Footnote 7), Fiskkit (Footnote 8), Snopes (Footnote 9), TruthOrFiction (Footnote 10), HoaxSlayer (Footnote 11), GossipCop (Footnote 12), FullFact (Footnote 13), and social media sites (Footnote 14).

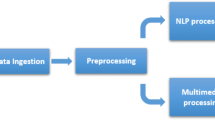

This section aims to provide a comprehensive overview of the related work on FND that utilised an assortment of ML and DL approaches based on three main categories of FND methods: content-based, context-based, and hybrid-based methods. It also provides a comprehensive review of the commonly used FND datasets. We summarise the existing approaches in Table 3. Additionally, we describe these existing representative works in the following subsections.

3.1 Content-based

Earlier research on FND mainly relied on hand-engineered features that exploited linguistic cues. Several ML and DL methods are employed to solve the classification problem, ranging from logistic regression to convolutional and recurrent neural networks. Text classification has traditionally used statistical ML methods such as Logistic Regression (LR), Support Vector Machines (SVM), K-Nearest Neighbour (K-NN), Naive Bayes (NB), Decision Trees (DT), Random Forest (RF), Gradient Boost (GB), and XGBoost (XGB). Many studies have applied the above-mentioned algorithms to detect fake news content, achieving high accuracy. For example, a study by Bali et al. [20] extracted a set of features, such as n-gram counts, from both news headlines and news contents for automatic FND. Their study, which used seven different classification algorithms on three different datasets, found the Gradient Boosting (XGB) classification algorithm to be the best, yielding the highest accuracy across all datasets. Perez-Rosas et al. [193] present a dataset of false and true news articles and analyse various features of the news articles (including n-grams, punctuation, grammar, and readability). A linear SVM classifier is then trained on these features, with results varying depending on the feature set. In this study, computational linguistic features were shown to be useful in detecting false news automatically.

Potthast et al. [200] attempted to assess the stylistic similarity of several categories of news: hyper-partisan, mainstream, satirical, and false. The proposed methodology employs a meta-learning approach originally intended for authorship verification. After comparing topic- and style-based features using RF classifiers, the researchers concluded that while hyper-partisan, satire and mainstream news are distinguishable, style-based analyses alone cannot detect false news. Fuller et al. [74] developed a linguistic-based method for deception detection consisting of thirty-one linguistic features, which three classifiers were used to refine to only eight cues. These cues were based on different cue sets proposed earlier in the linguistic field [190, 292]. However, a disadvantage of their work is that the cues relied heavily on the text’s topic or domain, so the model could not generalise well when tested on content from different domains [11]. A relatively simple approach based on term frequency (TF) and term frequency-inverse document frequency (TF-IDF) has proven effective in some previous studies. For example, Riedel et al. [211] applied a multi-layer perceptron (MLP) to the fake news challenge dataset and showed that TF and TF-IDF features yielded a good accuracy of 88.5%. In a study conducted by Ahmed et al. [4], a linear SVM classifier was tested on a fake news dataset called ISOT and achieved a promising accuracy of 92%. Furthermore, Ahmed et al. [3] tested the performance of various classifiers, including but not limited to SVM, LR, and RF, to detect fake news. In their study, which uses several fake news datasets, the RF classifier also provides promising results on almost all of the datasets.
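As an illustration of the TF and TF-IDF features mentioned above, the following is a minimal sketch written in plain Python. It uses the textbook, unsmoothed weighting (relative term frequency multiplied by the logarithm of the inverse document frequency); this is an assumption for illustration and is not necessarily the variant used in the cited studies, and library implementations typically apply smoothing and normalisation. The function names `tokenize` and `tf_idf` are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it on whitespace (deliberately simplistic)."""
    return text.lower().split()


def tf_idf(docs):
    """Return one {term: weight} dictionary per document.

    tf(t, d)  = count of t in d / number of tokens in d
    idf(t)    = log(N / number of documents containing t)
    weight    = tf * idf
    """
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


if __name__ == "__main__":
    corpus = ["breaking news shocking claim", "official news report"]
    for vec in tf_idf(corpus):
        print(vec)
```

A term that occurs in every document (here, "news") receives a weight of zero, which is why TF-IDF tends to emphasise vocabulary that separates documents from one another. The resulting vectors would then be passed to a classifier such as an MLP or a linear SVM.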

Bharadwaj [29] experimented with different features such as TF and TF-IDF with n-gram features, and the results show that RF with bigram features achieves the best accuracy of 95.66%. Wynne et al. [281] experimented with character n-grams and word n-grams to study their effect on detecting fake news and concluded that character n-grams contribute more to FND performance than word n-grams. TF-IDF and Count Vectorizer were used by [89] as feature extraction techniques. They reported that their approach was more accurate than state-of-the-art approaches like Bidirectional Encoder Representations from Transformers (BERT). Linguistic approaches for FND have been applied in both supervised and unsupervised settings. Prospective deceivers use certain language patterns, such as many phrasal verbs, certain tenses, and short sentences, which have been revealed by experiments performed by psychologists in cooperation with linguistics experts and computer scientists [33, 96, 172, 255, 292]. For example, 16 language variables were investigated by Burgoon et al. [33] to see whether they could help distinguish between deceptive and truthful communications. They conducted two experiments to construct a database, in which they set up face-to-face or computer-based discussions, with one volunteer acting as the deceiver and the other acting genuinely. These discussions were then transcribed for further processing, and the authors derived linguistic cue classes that could expose the deceiver; refer to Table 4. They employed the C4.5 Decision Tree technique with 15-fold cross-validation to cluster and produce a hierarchical tree structure of the proposed features. On a small sample of 72 cases, their method’s overall accuracy was 60.72 per cent. They concluded that noncontent words (e.g., function words) should be included when studying social and personality processes. According to the authors, linguistic style markers like articles, pronouns, and prepositions are as important as specific nouns and verbs in revealing what individuals are thinking and feeling, and liars tend to tell stories that are less complicated, less self-relevant and more negative.
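To make the distinction between the word n-grams and character n-grams discussed earlier in this paragraph concrete, the sketch below extracts both from a short string. It is an illustrative example only: the helper names `word_ngrams` and `char_ngrams` are hypothetical, tokenisation is plain whitespace splitting, and the cited studies may have used different pre-processing.

```python
def word_ngrams(text, n):
    """Return all contiguous sequences of n whitespace-separated tokens."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def char_ngrams(text, n):
    """Return all contiguous sequences of n characters (spaces included)."""
    s = text.lower()
    return [s[i:i + n] for i in range(len(s) - n + 1)]


if __name__ == "__main__":
    headline = "You will not believe this"
    print(word_ngrams(headline, 2))  # word bigrams
    print(char_ngrams("fake", 3))    # character trigrams
```

Character n-grams capture sub-word patterns such as unusual spellings, punctuation runs, and affixes, which word n-grams miss; this is one plausible reason they can be more informative for short, noisy social media text.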

Liars were more likely than truth-tellers to use negative emotion terms [173]. Liars may feel guilty, either about lying or about the topic they lied about [270]. Knapp et al. [126] observed that liars were far more likely than truth-tellers to make disparaging statements about their communication partners. They also observed that liars typically use more other-references than truth-tellers; however, this is inconsistent with [173], who observed that liars used third-person pronouns at a lower rate than truth-tellers. According to [173], (i) liars employed fewer “exclusive” words than truth-tellers, implying a lower level of cognitive complexity, since someone who employs words like but, unless, and without is distinguishing between what belongs in a category and what does not; and (ii) liars employed more “motion” verbs than truth-tellers, since motion verbs (e.g., walk, go, carry) provide simple, tangible descriptions and are more easily accessible than words that focus on evaluations and judgments (e.g., think, believe). Horne et al. [100] applied an SVM classifier with a linear kernel using several linguistic cues. The authors cast the problem as a multi-class classification, attempting to determine whether an article is real, fake, or satire, with the classes equally distributed. After 5-fold cross-validation on BuzzFeed news (see Section 3.5) enriched with satire articles, they obtained 78% accuracy. The feature set they employed consisted mostly of POS tags and certain Linguistic Inquiry and Word Count (LIWC) word categories. They concluded that real news and false news differ substantially in their titles, while the content of satire and false news is somewhat similar.
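The word-category cues described above (pronoun use, negative emotion terms, exclusive words, and motion verbs) can be turned into numeric features by counting how often each category occurs in a text. The following is a minimal sketch under loud assumptions: the tiny word lists in `CUE_LEXICONS` are hypothetical placeholders chosen for illustration (seeded with the examples given in the text) and are not the LIWC dictionaries or the lexicons used in the cited studies.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny lexicons for illustration only.
CUE_LEXICONS = {
    "first_person_singular": {"i", "me", "my", "mine", "myself"},
    "third_person": {"he", "she", "they", "him", "her", "them"},
    "negative_emotion": {"hate", "angry", "afraid", "sad", "worthless"},
    "exclusive": {"but", "unless", "without", "except"},
    "motion": {"walk", "go", "carry", "move", "run"},
}


def cue_rates(text):
    """Return, for each cue category, the share of tokens that belong to it."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = len(tokens)
    counts = Counter()
    for token in tokens:
        for cue, lexicon in CUE_LEXICONS.items():
            if token in lexicon:
                counts[cue] += 1
    # Normalise by text length so long and short texts are comparable.
    return {cue: (counts[cue] / total if total else 0.0) for cue in CUE_LEXICONS}


if __name__ == "__main__":
    print(cue_rates("I go home but they walk"))
```

Rates rather than raw counts are used so that the features do not simply reflect document length. Such a feature vector could then be fed to a classifier such as LR or a linear SVM.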

In the same vein, Newman et al. [172] thoroughly examined five experimental case studies, each with a different number of participants who were asked to be deceptive or genuine. They identified five of twenty-nine linguistic cues (first-person singular pronouns, third-person pronouns, negative emotion words, exclusive words, and motion verbs) as the most significant predictors of deception. The authors used LR to evaluate the features and obtained better results than human assessors (67% vs 52% accuracy). Table 5 shows the feature set proposed in their study. A study examining fake COVID-19-related news was conducted by Bandyopadhyay et al. [22], where the authors analysed data from 150 users by extracting information from their social media accounts, such as Twitter and Facebook, as well as their email and mobile data, for the period from March 2020 to June 2020. During the pre-processing phase, unrelated and incomplete news was removed. Using K-NN as a classifier, the best prediction result was for June, with an F1-score of 0.91, and the worst was for March, with an F1-score of 0.79. Several ML baseline models, such as DT, LR, GB and SVM, are used in [187] to detect COVID-19-related fake news. By constructing an ensemble of bidirectional Long Short-Term Memory (BiLSTM), SVM, LR, NB, and NB combined with LR, the researchers in [239] achieved an F1 score of 0.94.
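Since several of the results in this section are reported as F1 scores, it may help to recall the standard definition. Writing TP, FP, and FN for the numbers of true positives, false positives, and false negatives for the class of interest (for example, "fake"), precision P, recall R, and F1 are defined as follows. The surveyed studies may report binary, macro-averaged, or weighted variants; this is not specified in the text above.

```latex
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2\,P\,R}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
```

Unlike accuracy, F1 ignores true negatives, which makes it more informative when the fake and real classes are imbalanced.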

In addition, Zhou et al. [292] presented a collection of twenty-seven linguistic characteristics divided into nine groups. They conducted an experiment in which the players in that scenario communicated using a web-based messaging system. Participants were split into pairs, one acting as the deceiver and the other acting honestly. The authors then used statistical analysis to evaluate the features, demonstrating the feasibility of using linguistic-based cues to differentiate between true and false texts. Table 6 shows the feature set proposed in their study.

A wide range of stylistic, morphological, grammatical, and punctuation features of text could serve as useful cues to detect news veracity. These sets of features were adopted by Papadopoulou et al. [184] using a two-level text-based classifier to detect clickbait. Similarly, Rubin et al. [219] used some of these features, such as punctuation and grammatical features. Using supervised learning, Castillo et al. [37] assessed the credibility of content on Twitter. Topics categorised as news or chat by human annotators are extracted, and a model is built that determines which topics are newsworthy based on their credibility labels. Popat et al. [199] proposed an explainable attention-based neural network framework for classifying true and false claims and providing self-evidence for the credibility assessment. The goal of Hosseini et al. [102] is to use news content to detect six different categories of false news (from satire to junk news). They analysed documents using tensor decomposition to capture latent relationships between articles and terms, as well as spatial and contextual relations between them. They then employed an ensemble method to combine different decompositions to identify classes with higher homogeneity and lower outlier diversity, yielding results superior to state-of-the-art techniques. Using a Convolutional Neural Network (CNN) and pre-trained word embeddings, Goldani et al. [84] propose a capsule network model for binary and multi-class fake news classification on the ISOT and LIAR datasets. Their results showed that the best accuracy obtained using binary classification on ISOT is 99.8%, while multi-class classification on the LIAR dataset yielded 39.5%. Similarly, Girgis et al. [82] performed fake news classification using the LIAR dataset. The authors employed three different models: a vanilla recurrent neural network (RNN), a gated recurrent unit (GRU) and an LSTM. In terms of accuracy, the GRU model achieved 21.7%, slightly higher than the other two models (LSTM with 21.6% and RNN with 21.5%).

The technique of learning how to transfer knowledge is a concept in ML known as transfer learning, which stores and applies the knowledge gained from performing a specific task to another problem. Learning this way is useful for training and evaluating models with relatively small amounts of data. In recent years, pre-trained language models (PLMs) have become mainstream for downstream text classification [56], thanks to transformer-based structures. Major advances have been driven by the use of PLMs, such as ELMo [194], GPT [208], or BERT [56]. BERT and RoBERTa, as the most commonly utilised PLMs, were trained on exceptionally large corpora, such as those containing over three billion words for BERT [56]. The success of such approaches raises the question of how such models can be used for downstream text classification tasks. Over the PLMs, task-specific layers are added for each downstream task, and then the new model is trained with only those layers from scratch [56, 147, 169] in a supervised manner. Specifically, these models use a two-step learning approach. In a self-supervised manner, they learn language representations by analysing a huge amount of text. This process is commonly called pre-training. Feature-based and fine-tuning approaches can then be used to apply these pre-trained language representations to downstream NLP tasks. The former uses pre-trained representations and includes them as additional features for learning a given task. The latter introduces minimal task-specific parameters, and all pre-trained parameters are fine-tuned on the downstream tasks. These models are advantageous in that they can learn deep context-aware word representations from large unannotated text corpora—large-scale self-supervised pre-training. This is especially useful when learning a domain-specific language with insufficient available labelled data.

Besides the fact that surface-level features cannot effectively capture semantic patterns in text, the lack of sufficient data constitutes a bottleneck for DL models. Thus, the power of BERT and its variants can be leveraged to build robust fake news predictive models. Relatively little research has been done to detect fake news using recent pre-trained transformer-based models. The few studies that have used such models, despite different methodologies and scenarios, have shown promising results. One recent example is the study by Kula et al. [130], which presents a hybrid architecture based on a combination of BERT and RNN. Aggarwal et al. [2] showed that BERT, even with minimal text pre-processing, performed better than LSTM and gradient-boosted tree models. Jwa et al. [120] adopted BERT for FND by analysing the relationship between the headline and the body text of news using the FNC dataset, where they achieved an F1 score of 0.746. In an attempt to automatically detect fake news spreaders, Baruah et al. [23] proposed BERT for the classification task, achieving an accuracy of 0.690. Although the BERT model has made great breakthroughs in text classification, it is computationally expensive as it contains millions of parameters (BERT base contains 110 million parameters, while BERT large has 340 million) [56]. Although BERT is costly to train, a variant called DistilBERT [225] has considerably fewer parameters (reducing the size of BERT by 40% while retaining 97% of its language understanding abilities) and is about 60% faster. With a larger dataset, larger batches, and more training iterations, a robustly optimised BERT variant, RoBERTa [147], was developed. A benchmark study of ML models for FND has been provided by [123], where the authors formulated FND as a binary classification problem on three different datasets, including the LIAR dataset. Their experimental results showed the power of advanced PLMs such as BERT and RoBERTa.
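The model sizes quoted above can be related with simple arithmetic. The short sketch below uses only the figures stated in the text (110 million and 340 million parameters for BERT base and BERT large, and a 40% size reduction for DistilBERT); applying the reduction to BERT base is an assumption made for illustration, and the helper name `reduced_param_count` is hypothetical.

```python
# Parameter counts quoted in the text for BERT [56].
BERT_BASE_PARAMS = 110_000_000
BERT_LARGE_PARAMS = 340_000_000


def reduced_param_count(n_params, reduction):
    """Number of parameters left after shrinking a model by `reduction` (0 to 1)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return round(n_params * (1.0 - reduction))


if __name__ == "__main__":
    # Assumption: the 40% reduction is applied to BERT base.
    distilled = reduced_param_count(BERT_BASE_PARAMS, 0.40)
    print(f"Approximate DistilBERT size: {distilled / 1e6:.0f}M parameters")
    print(f"BERT large vs BERT base: {BERT_LARGE_PARAMS / BERT_BASE_PARAMS:.1f}x")
```

Under this assumption, the distilled model has roughly 66 million parameters, which gives a sense of why it is attractive when compute is limited.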

In a study conducted by Gautam et al. [78], the authors applied a pre-trained transformer model, XLNet, combined with Latent Dirichlet Allocation (LDA), integrating the contextualised representations generated by the former with the topical distributions produced by the latter. Their model achieved an F1 score of 0.967. In the same vein, a fine-tuned transformer-based ensemble model has been proposed by [232]. The proposed model achieved an F1 score of 0.979 on the Constraint@AAAI2021-COVID19 fake news dataset. Similarly, the authors in [261] carried out several experiments on the same dataset and proposed a framework for detecting fake news using the BERT language model by considering content information, prior knowledge, and the credibility of the source. According to the results, the highest F1 scores obtained ranged from 97.57 to 98.13. By applying several supervised learning models such as CNN, LSTM, and BERT to detect COVID-19 fake news, the authors in [274] achieved the best accuracy of 98.41% using the cased version of BERT. Alghamdi et al. [5] conducted a comprehensive benchmark study to evaluate the effectiveness of various ML and DL techniques in detecting fake news. The study involved the use of classical ML algorithms, advanced ML algorithms, and DL transformer-based models. The experiments were performed on four real-world fake news datasets, namely LIAR, PolitiFact, GossipCop, and COVID-19. The authors utilised different pre-trained word embedding methods and compared the performance of different techniques across the datasets. Specifically, they compared context-independent embedding methods, such as GloVe, with BERT, which provides contextualised representations for FND. The results showed that the proposed approach, relying solely on news text, achieved better results than the state-of-the-art methods across the datasets used. 
In a study conducted by [9], the authors focused on detecting COVID-19 fake news, considering the risks associated with disseminating false information during the pandemic. For this task, they investigated the effectiveness of various ML algorithms and transformer-based models, specifically BERT and COVID-Twitter-BERT (CT-BERT). To assess the performance of different neural network structures, the authors experimented with incorporating CNN and BiGRU layers on top of the BERT and CT-BERT models. They explored variations such as frozen or unfrozen parameters to determine the optimal configuration. The evaluation was conducted using a real-world COVID-19 fake news dataset. The experimental results revealed that incorporating BiGRU on top of the CT-BERT model yielded outstanding performance, achieving a state-of-the-art F1 score of 98%.

We believe that choosing effective features plays a crucial role in obtaining good performance on news verification. Exploiting visual content to examine the truthfulness of social news events on OSNs is essential [113]; incorporating visual cues, specifically images, alongside textual features is therefore expected to improve the detection of fake news. The justification is that, according to several studies, visual information is easier to engage with and retain than text, implying the importance of its use. Posts with images are more interesting than 140 characters of text alone, attract more interaction, and thus spread more widely. According to Twitter statistics, tweets with images receive roughly 35% more retweets than text-only tweets. Exploiting such cues in detecting fake news events on OSNs is therefore a valuable opportunity. Although the studies mentioned above, which relied largely on content-derived features, perform well in identifying fake content, their major limitation is that content-based features alone cannot properly characterise fake news. Fakers use different tactics to mimic trustworthy content. Therefore, considering other auxiliary information besides content-based features would provide a more comprehensive understanding of the phenomenon, resulting in higher detection performance.

3.2 Context-based

With the continued growth of social media platforms and the rise in falsified information that mimics trustworthy information, people worldwide face a significant challenge in discriminating fake claims from real ones. Detecting fake news is inherently challenging because fake news is usually written intentionally to mislead readers. One solution currently being explored is to use, in addition to the linguistic and lexical cues of the article, auxiliary contextual information, including user engagements and activities on social media platforms. If effective, such a solution could boost detection performance. However, obtaining high-quality data poses another challenge, since online social media data in particular is noisy, containing misspellings and other errors. Adding to the problem are the access restrictions imposed by the Twitter API. As such, developing an automated solution with high accuracy is challenging. Besides content-based information, contextual features derived from users’ social engagements on microblogging platforms can be used for successful FND. User social engagements represent the diffusion of news over time, giving useful auxiliary information to infer the news article’s veracity [235]. Researchers relied heavily on using news content to model user behaviour and interests to detect fake news based on the assumption that users’ posts and activities on microblogs and social networks often reflect their behaviour. Context-based features refer to users’ interactions and engagements through social media platforms. This includes social context features such as the number of friends (followers), number of posts, replies [136, 302], and others.
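As a rough illustration of how the social context features listed above might be represented, the sketch below aggregates per-user engagement records into a small feature dictionary for one news item. The `Engagement` record, its field names, and the chosen aggregates are all assumptions made for illustration; they are not taken from any of the cited studies.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Engagement:
    """One user interaction with a news item (hypothetical schema)."""
    user_followers: int  # follower count of the engaging user
    user_posts: int      # number of posts the user has published
    is_reply: bool       # True if the engagement is a reply


def social_context_features(engagements):
    """Summarise a list of engagements as simple numeric features."""
    if not engagements:
        return {"n_engagements": 0, "mean_followers": 0.0,
                "mean_posts": 0.0, "reply_ratio": 0.0}
    n = len(engagements)
    return {
        "n_engagements": n,
        "mean_followers": mean(e.user_followers for e in engagements),
        "mean_posts": mean(e.user_posts for e in engagements),
        "reply_ratio": sum(e.is_reply for e in engagements) / n,
    }


if __name__ == "__main__":
    sample = [Engagement(100, 10, False), Engagement(300, 30, True)]
    print(social_context_features(sample))
```

Features of this kind can be concatenated with content-based features or used on their own, although they only become available once users have started interacting with a news item.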

These features have been investigated in existing work to characterise fake content. For example, Tacchini et al. [251] present a framework in which conspiracy-theory and scientific pages are used as sources to build a dataset of posts and users, with the goal of detecting fake news based on the users who liked the posts on Facebook. They compare LR with a harmonic method and show that their model is effective even when using only a small percentage of the data for training.

Volkova et al. [266] proposed a fusion neural-based model using a combination of tweet text, linguistic cues such as moral foundations, and user interactions to detect four types of suspicious news: satire, hoaxes, clickbait, and propaganda. They compared their approach with state-of-the-art techniques. The authors discovered that adding syntax and grammar features does not affect performance, whereas incorporating linguistic features improves classification results, with social interaction features being the most informative for finer-grained separation of such suspicious news posts. The propagation of news items on OSNs has proven effective in uncovering useful patterns that help discriminate fake content from real content. For example, Yu et al. [149] performed early detection of false news by modelling diffusion pathways as multivariate time series using a hybrid model of CNN and GRU. Their approach was tested on two real-world datasets from Twitter and Sina Weibo, outperforming other state-of-the-art algorithms.
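The idea of treating a diffusion pathway as a multivariate time series can be sketched as follows: engagements are ordered by time, each one contributes a vector of user characteristics, and the sequence is cut or padded to a fixed length so that it can be fed to a sequence model such as a CNN or GRU. This is a minimal sketch of that general idea only; the function name `propagation_path`, the input format, and the zero-padding choice are assumptions for illustration and are not taken from the cited work.

```python
def propagation_path(engagements, length):
    """Build a fixed-length multivariate time series from engagements.

    `engagements` is a list of (timestamp, feature_vector) pairs, where the
    feature vector describes the engaging user (e.g., followers, posts).
    The earliest `length` engagements are kept; shorter paths are padded
    with zero vectors (an assumption made here for simplicity).
    """
    ordered = sorted(engagements, key=lambda item: item[0])
    path = [list(vector) for _, vector in ordered[:length]]
    dim = len(path[0]) if path else 0
    while len(path) < length:
        path.append([0.0] * dim)
    return path


if __name__ == "__main__":
    events = [(5, [2.0, 1.0]), (1, [9.0, 0.0])]
    print(propagation_path(events, 3))
```

Keeping only the earliest engagements reflects the goal of early detection: the classifier must decide using the first few interactions rather than the full cascade.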

The goal of Wu et al. [278] is to investigate the propagation of falsified messages in social networks. As a result, they used the Twitter API and the fact-checking website Snopes to create a custom dataset that included both genuine and fake news. Furthermore, they employ a neural network model to classify news after inferring embeddings for users from the social graph. To this end, they developed a new model for embedding a social network graph in a low-dimensional space and built a sequence classifier by analysing message propagation pathways using Long Short-Term Memory (LSTM) networks. Another study conducted by Wu et al. [277] detected fake news/rumours by examining high-order propagation patterns using a graph kernel-based model. Similarly, Ma et al. [153] evaluated the similarities between propagation tree structures of news by applying the same classifier and features for their verification. Later, they suggested a top-down/bottom-up tree-structured neural network for rumour detection; in other words, they made use of a non-sequential propagation structure for identifying different types of rumours [155]. Kwon et al. [135] used the network and temporal features to detect rumours, and their findings demonstrated the importance of such features in detecting and identifying rumours over longer periods.

Finally, based on the assumption that fake news has a different propagation pattern than other types of news, one study examined the propagation pattern among news publishers and subscribers using a propagation network [295]. However, the downside of approaches that rely solely on context-based features is that they cannot effectively detect fake news at an early stage, i.e., early fake news detection. This is because such information is insufficient or often unavailable at the early stage of fake news dissemination. This calls for approaches that can effectively harness news content to detect fake content as quickly as possible, before such content goes viral.

3.3 Hybrid-based features

Incorporating heterogeneous features, often called hybrid features, has demonstrated favourable results across a range of tasks in diverse domains [202]. By combining multiple types of information or characteristics, such as textual, visual, or contextual features, the integration of hybrid features allows for a more comprehensive representation of the underlying data. Several related studies exploit both content- and context-based features for FND. For example, Shu et al. [237] detected fake news by considering the tri-relationship between publishers, news items, and users. Non-negative matrix factorisation [188] and users’ credibility scores were used to analyse user-news interactions and publisher-news relations. The performance of several classifiers was evaluated on the FakeNewsNet dataset, and the findings suggested that the social context could be leveraged effectively to improve the detection of fake news. Sadeghi et al. [222] proposed a natural language inference (NLI) approach (i.e., inferring the veracity of the news item) using BiLSTM and BERT embeddings on the PolitiFact dataset. The authors aimed to use NLI methods to improve several classical ML and DL models, such as DT, NB, RF, LR, k-NN, SVM, BiGRU and BiLSTM, using various word embedding methods, including context-independent methods such as Word2vec, GloVe, and fastText, and context-aware embedding models such as BERT. The experimental results show the effectiveness of such methods for FND, achieving an accuracy of 85.58% on the PolitiFact dataset.
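For readers unfamiliar with non-negative matrix factorisation, the sketch below factorises a small non-negative matrix V into W and H with the standard multiplicative update rules for the squared-error objective. It is a generic, pure-Python illustration and not the formulation used in [237]; the function names, the toy matrix, and the choice of rank are assumptions.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]


def frobenius_error(V: Matrix, W: Matrix, H: Matrix) -> float:
    """Squared Frobenius norm of V - WH."""
    WH = matmul(W, H)
    return sum((v - wh) ** 2
               for rv, rwh in zip(V, WH) for v, wh in zip(rv, rwh))


def nmf(V: Matrix, rank: int, n_iter: int = 200,
        seed: int = 0, eps: float = 1e-9) -> Tuple[Matrix, Matrix]:
    """Factorise V (n x m, non-negative) as W (n x rank) times H (rank x m)."""
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    # Strictly positive random initialisation keeps all entries non-negative.
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    H = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(n_iter):
        # H <- H * (W^T V) / (W^T W H)
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * a / (b + eps) for h, a, b in zip(rh, ra, rb)]
             for rh, ra, rb in zip(H, num, den)]
        # W <- W * (V H^T) / (W H H^T)
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * a / (b + eps) for w, a, b in zip(rw, ra, rb)]
             for rw, ra, rb in zip(W, num, den)]
    return W, H
```

If V is read as a toy user-news interaction matrix (rows as users, columns as news items), the rows of W and the columns of H can be interpreted as low-dimensional user and news representations, respectively.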

To classify suspicious and trusted news, Volkova et al. [265] built models based on linguistic features. The authors aimed to classify 130 thousand news posts as either verified or suspicious and to predict four sub-types of suspicious news: hoaxes, satire, propaganda, and clickbait, using different predictive models. Using tweet content and social network interactions, the authors demonstrated that neural network-based models surpass lexicon-based models. Additionally, in contrast to earlier studies on deception detection, they discovered that incorporating grammar and syntax features into the models does not affect performance.

Although including linguistic features showed promising classification results, a finer-grained separation between the classes is achieved with social interaction features. According to O’Donovan et al. [179], various features can be used to predict content credibility. To begin, they defined a set of features that included content-based features, user profile features, and others that reflected the dynamics of information flow based on Twitter data. Then, after examining the distribution of each feature category across Twitter topics, they concluded that the efficacy of these features varies greatly with context, both in terms of the occurrence of a particular feature and how it is used. Considering tweet-based textual features and Twitter account features, Gupta et al. [92] applied decision tree-based classification models that achieved 97 per cent accuracy in distinguishing fake from real images shared on Twitter, using the Hurricane Sandy dataset. Conroy et al. [48], among the first researchers to apply network analysis in FND, reviewed linguistic and network approaches as two major categories of methods for uncovering fake news characteristics. The former reveals language patterns such as n-grams and syntactic structures, semantic similarity, and rhetorical relations between linguistic elements and their associations with deception. As the name implies, the latter deals with network information and propagation patterns that can be harnessed to measure mass deception.

Elhadad et al. [64] experimented with an assortment of ML algorithms such as DT, LR, SVM, multinomial NB, and neural networks using hybrid sets of features extracted from online news content and textual metadata on three publicly available datasets (ISOT, FA-KES and LIAR). In their study, the SVM and LR classifiers were the best-performing models on the LIAR dataset, while the best accuracy of 100% was obtained with Decision Trees on the ISOT dataset, and the best result of 58% was obtained with the Multinomial Naive Bayes model on the FA-KES dataset. Ruchansky et al. [220] proposed a hybrid DL model using two real-world datasets of Twitter and Weibo for FND. Their approach comprised three modules, namely capture, score and integrate. Given an article, the first module adopted an LSTM-based RNN to capture the temporal pattern of user activity based on the response and text, while the second module learned source characteristics based on the behaviour of users. The two modules were then integrated to classify articles as fake or real. Their experimental results demonstrated the importance of capturing the articles’ temporal behaviour and the users’ behaviour for FND, where they achieved high accuracies of 89% and 95% on the Twitter and Weibo datasets, respectively.

Alghamdi et al. [6] developed a deep 6-way multi-class classifier using the BERT model to classify statements in the LIAR dataset into fine-grained categories of fake news. The framework employed three main components: BERT\(_{base}\) was utilised for encoding and representing the text data, followed by passing it through a CNN and a max-pooling layer for feature map reduction. The metadata associated with the statements was encoded using another CNN to capture local patterns. The output from this CNN was then passed through a BiLSTM network to extract contextual features. The outputs from the two components were concatenated and fed into a fully connected layer for classification. The authors emphasised the importance of feature selection, particularly in the pre-processing stage, to improve the classifier’s performance. They found that selecting relevant features, such as credit history, was crucial, as some other features were found to confuse the classifier and degrade its performance. The authors in [8] focused on detecting fake news by examining both the news content and users’ posting behaviour. They employed DL techniques, specifically BERT, CNN and a BiGRU with a self-attention mechanism, to capture rich and contextual representations of news texts. By combining natural language understanding with transfer learning and context-based features, the proposed architectures aimed to enhance the detection of fake news. The experiments were conducted using the FakeNewsNet dataset. The results demonstrated that incorporating information about users’ posting behaviours, in addition to textual news data, improved the performance of the models in detecting fake news.

Incorporating visual cues has also been investigated. For example, Wang et al. [273] proposed an event adversarial neural network (EANN) approach using textual and visual features from multi-modal data for FND. Their architecture comprises three modules: (1) multi-modal feature extractor (given a news article, this module extracts both textual and visual features using neural networks); (2) event discriminator (given a news article, this component uses a min-max game to further capture event-invariant features); and (3) fake news detector (which classifies news as either true or false). In addition, deep neural network (DNN)-based models, including Convolutional Neural Networks (CNN), BiLSTM, and ubiquitous transformers, have increasingly been the focus of research over the past few years. For example, Yang et al. [283] proposed a hybrid CNN-based model that includes textual and visual (image) features for FND. While their model was found to be effective, relying on the CNN model alone would neglect the contextual semantic relations in the news content. Thus, when fusing CNN with other state-of-the-art techniques, such as the pre-trained BERT model, we expect the local and global contextual and semantic relations to be effectively captured, leading to improved performance.
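The min-max game played between the feature extractor and the event discriminator can be written in a generic adversarial form. The notation below is assumed for illustration and may differ from that of [273]: \(\theta_f\), \(\theta_d\) and \(\theta_e\) denote the parameters of the feature extractor, the fake news detector and the event discriminator, \(L_d\) is the detection loss, \(L_e\) is the event classification loss, and \(\lambda\) is a trade-off weight.

\[
L_{final}(\theta_f, \theta_d, \theta_e) = L_d(\theta_f, \theta_d) - \lambda \, L_e(\theta_f, \theta_e)
\]

\[
(\hat{\theta}_f, \hat{\theta}_d) = \arg\min_{\theta_f, \theta_d} L_{final}(\theta_f, \theta_d, \hat{\theta}_e), \qquad \hat{\theta}_e = \arg\max_{\theta_e} L_{final}(\hat{\theta}_f, \theta_e)
\]

Under this reading, the event discriminator minimises \(L_e\) in order to recognise the event a post belongs to, while the feature extractor is rewarded for increasing \(L_e\), which pushes the learned features to be event-invariant.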

Using the attention mechanism, Jin et al. [111] built a hybrid framework incorporating text, images, and context-based features. Their framework used a pre-trained VGG-19 network to extract visual features and an LSTM network to concatenate text- and context-based information. These components were then fused using an attention mechanism. To detect fake news, Khattar et al. [124] utilised text- and visual-based information in a variational autoencoder model coupled with a binary classifier. More recently, Singhal et al. [244] designed an architecture called SpotFake to detect fake news based on text and images, and also conducted a survey. Text feature representations are obtained using BERT, while image feature representations are obtained using a pre-trained VGG-19 network. Predictions are then made by combining the two modalities. Despite the success of their model, the architecture failed to take advantage of the correlation between modalities, which is crucial when detecting fake news. In the survey, they found that 81.4% of people could discriminate fake news from real news when using the multimodal approach, whereas with the unimodal approach (only text or only images) the discrimination rates were 38.4% and 32.6%, respectively.

Researchers have also focused on using auxiliary information beyond visual and textual information to detect fake news. As part of their deep framework, Cui et al. [49] incorporated user sentiment extracted from comments into the multimodal framework. According to their experimental results on the PolitiFact and GossipCop datasets, they achieved better F1 scores than baseline methods (77% and 80%, respectively). The similarity between the text and image features has also been measured to evaluate whether the news is credible [294]. Fusing the relevant information between different modalities while maintaining the unique properties of each is a challenge. In addition, for some news, fusing different modalities may introduce noisy information that has a negative impact on the performance of the model. To handle these challenges, a multimodal FND framework based on Crossmodal Attention Residual and Multichannel convolutional neural Networks (CARMN) [245] was proposed. While maintaining the unique information about the target modality, this framework can selectively extract relevant information about a target modality from another source modality.
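One simple way to measure the similarity between text and image features mentioned above is cosine similarity between the two feature vectors. The sketch below assumes that both modalities have already been projected into a shared space of equal dimension. The choice of cosine similarity and the function name are assumptions for illustration, not the measure reported in [294].

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length feature vectors."""
    if len(u) != len(v):
        raise ValueError("feature vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        # Convention chosen here: an all-zero vector is similar to nothing.
        return 0.0
    return dot / (norm_u * norm_v)
```

A low similarity between the text and image representations of the same post could then be treated as one signal, among others, that the image does not match the claim made in the text.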