Abstract

The importance of early prediction in reducing the impact of crime cannot be overstated. Machine learning algorithms have proven to be effective in this regard, but their inability to capture key features automatically can be a hindrance. To overcome this challenge, we propose a deep neural network model that is capable of extracting salient features automatically for predicting crime categories using real-world crime data sourced from the Chicago open data portal. To ensure the robustness of our proposed model, we carried out an extensive exploratory data analysis to determine the impact of socioeconomic indicators on crime occurrences. Additionally, we implemented a data upsampling technique to handle class imbalance issues, and we leveraged hyperparameter optimization algorithms to fine-tune the model. The results of our study were impressive. Our proposed model outperformed the baseline model and other algorithms, with an average improvement of 6% in macro F1 score. This suggests that our model is highly effective, if not superior, in predicting crime categories accurately. Overall, our study provides a solid framework for using deep neural network models in crime prediction, while highlighting the importance of automatic feature extraction in enhancing the accuracy of predictions. By reducing the impact of crime through early prediction, we can help to create a safer and more secure society.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Crime is a highly chaotic and randomly occurring pattern. Crime prediction is crucial for building resilience to crime by proactively managing and minimizing its effects on our lives. This work attempts to build a model that can accurately and efficiently predict crime at a specified location within a specific time in the future. Data mining requires a systematic study of large datasets to (dis)prove established hypotheses or ideas or to discover new or previously unknown (but valuable) knowledge using machine learning methods [25]. As one of the Artificial Intelligence methods, machine learning may provide highly cost-effective solutions in the prediction area. Crime prediction analysis is the process of identifying crime patterns to prevent potential crime before it happens.

Due to the massive amount of continuously generated crime data, machine learning and data mining techniques are urgently needed. Analyzing such a massive amount of data could assist in identifying crime trends and extracting some interesting patterns, which in turn can be utilized in such a way as to mitigate the indisputable impacts and provide information to assist operational personnel in the arrest of criminal offenders. Most of the existing studies into crime type prediction relied on classical machine learning algorithms to detect crime events automatically. The inability to capture more deep semantical features is one limitation of these algorithms.

This presented work differs from previous studies by applying state-of-the-art techniques such as deep neural networks to predict the crime occurrence w.r.t the main elements of crime data, namely, crime type, location and crime occurrence time. In particular, it goes beyond the work of [24] by increasing the prediction performance accuracy. This study proposes a deep neural network (DNN) with hyperparameters optimized using Keras Tuner to build a model that accurately and efficiently predicts the crime type given some spatiotemporal attributes. More specifically, the framework consists of three dense layers with 160, 64, and 64 units, activated using ReLU as an activation function. Finally, the resultant output is passed to a classification layer with four neurons activated using the softmax function. Exploratory data analysis is conducted, and imbalanced class distribution is handled using the synthetic minority oversampling technique (SMOTE). The study found that the DNN model is significantly effective for crime category prediction. It achieves, on average, about 6% better considering macro F1 score compared to the baseline model.

The rest of this paper is organized as follows. First, the literature related to the prediction of crime trends is summarized in Section 2. Then, Section 3 describes the methods used in this work, and an extensive exploratory analysis is presented. Then, the experimental results and discussion are presented in Section 4. Finally, Section 5 concludes the paper.

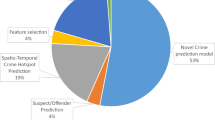

2 Related work

Many studies proved several factors that may lead to crime. A considerable amount of literature has been published on predicting crime occurrence and crime rates [21, 33, 37, 41]. Drawing on an extensive range of sources, the authors explored the relationship between particular factors of crime (e.g., the level of income, poverty, and unemployment) and criminal activity [7, 19, 30].The literature highlights a relationship between crime and the following three dimensions: time, place, and population, which makes the researcher’s task more complicated [10]. Following the line of research, numerous studies have been carried out to detect the patterns of specific types of crime across a particular region or time for prediction [18, 20, 22, 39].

2.1 Time-centric paradigm

Temporal patterns indicate when the crime occurs. Mining frequently occurring patterns within particular time sequences is in urgent need. Crime rates or crime occurrences can be investigated for different hours of the day, days of the week, months of the year, years, and others. A bulk of studies attempt to investigate and predict crime incidents based on the temporal dimension. Mohler et al. [27] examined the extent to which crime occurrences spread from a certain place to its neighbourhoods by using a self-exciting point processes model to identify the crime patterns. Later, this study extended to a marked point process [26], where some years of crime data, and different types of crime, have been used by hotspot maps. Unlike the non-parametric model introduced in [27], the new model is a parametric version. In [3], the authors applied the seq2seq based encoder-decoder LSTM model to model the crime events prediction framed as a univariate time series task.

2.2 Place-centric paradigm

Spatial patterns refer to where the crime occurs. Detecting criminal hotspot patterns would contribute to lowering crime. The criminologist David Weisburd, 2008, suggested the principle of switching a people-centric paradigm to a place-centric one [38], which indicates that paying more attention to geographical topology is crucial for crime detection. Under this heading, several studies often focus on investigating and detecting crime hotspots in order to predict spatial patterns considered to pose considerable risk to society, including [8, 11]. Wang et al. [37] utilized Point-of-Interest (POI) data and geographical features to study the crime rate inference problem for Chicago community areas. Other studies [2, 4, 29] utilized Kernel Density Estimation (KDE), Localised Kernel Density Estimation (LKDE) and Apriori algorithm, respectively, for the aim of identifying the spatial patterns and crime hotspots for crime prediction. Similarly, Chainey et al. [11] applied Kernal Density Estimation (KDE) utilising criminal historical data of geographic areas. In [9], the authors, for the objective of crime analysis and prediction, have developed an application named Regional Crime Analysis Program (ReCAP) in order to assist local police forces such as Albemarle Country, University of Virginia(UVA) as well as Charlottesville City. This system integrates geographic information system (GIS), database, and data mining techniques, which in turn analyze the crime hotspots to generate spatial mining results.

2.3 Spatio-temporal paradigm

In crime analysis, temporal patterns and spatial patterns often come hand in hand [23]. According to [40], considering spatial and temporal data can augment prediction performance. Several earlier studies have been conducted on the Spatio-temporal aspect. In [4], the authors analyzed two different real crime datasets in order to find spatial and temporal criminal hotspots to determine the most likely places where crimes occur within a specific time. Social media networks and other types of data have also been utilized as criminal incident sources. Several studies, for instance, considered various forms of data to predict crimes, including [13, 15, 36], where they utilized Twitter data to predict the crimes. Other studies utilized cellphone data, including [7, 35] in order to investigate and evaluate the crime theories at scale. In [7], the authors combined demographic data and mobile phone data (human behavioural data) to forecast whether a particular area will be considered a criminal hotspot or not in the subsequent month in the European metropolis. They implemented various classifiers, including logistic regression, support vector machine, neural network, decision trees, and ensemble methods with different parameters. They observed that decision tree-based Random Forest (RF) outperforms all other classifiers. This study’s limitation, which the authors acknowledged, is the only three weeks of collected data being used (between Dec. 2012 and the first week of Jan. 2013). Utilizing a huge dataset would highly enable a granular prediction performance. Another limitation is that this study did not consider different times granularity, such as a week, day, hour, and minute; that is, such prediction was monthly based. Moreover, they focused generally on predicting the crime level to determine the likelihood of crime occurring in a particular geographic location. However, they did not consider predicting the crime type that may arise in such criminal areas. Another recent study [37] has incorporated data about taxi flow and point of interest (POI) data. This study aimed to predict the crime in community areas where the authors have utilized POI data collected from the Foursquare database and taxi flow obtained from the Freedom of information act (FOIA). To make the prediction, linear regression and negative binomial have been applied, analyzed and compared. Their experimental results showed that negative binomial outperforms linear regression. However, the study generally focused on crime inference and did not consider different elements of crime data such as the time of crime which has been proven as an essential factor to consider when studying crimes [27]. A recent study [5] aims to build models to predict future crime incidents in a smaller city based on social media and Internet of Things (IoT) datasets. In this study, they combined multiple sources of data in order to enhance prediction accuracy. They explored population-based features along with streetlight and FourSquare-centric features. They also consider the temporal factor of the crime. Consequently, their findings showed that demographic and streetlight features positively correlate with crime, and they significantly enhance the performance of crime prediction. Moreover, their outcomes showed that these features outperform FourSquare features. This study is similar to ours; however, our study area will consider a mega-city such as Chicago rather than a smaller city. Moreover, they did not consider the type of crime that might happen in the near future, which is crucial. In an attempt to build a model that can effectively predict the crime category, this work leverages the soctiotemporal attributes and other auxiliary information using deep neural network. The experimental results show the superiority of the proposed approach.

3 Methodology

Data collection, data preparation, and data analysis using some statistical analysis are discussed in this section. Thereafter, the process of constructing the predictive model to achieve the objectives of this research is presented.

3.1 Data Collection

In order to build the predictive models, historical crime data were collected from Chicago open data portal [1], which involves all reported criminal incidents from 2001 to the present. The dataset consists of more than 7 million records and twenty-two features. The features include the exact criminal incident location (longitude and latitude), the type of crime committed, community area, district, the ward where the incidents occurred and other features. Initially, more than 7 M data were collected from the Chicago data portal. However, this study experiment was conducted using the data spanning from 2014 to 2016 to make a fair comparison with the baseline model.

3.2 Data pre-processing

Before feeding the data into the proposed model, a pre-processing step is performed. The first process is to select the relevant features to decrease the dimensionality and avoid the overfitting problem. The independent variables are year, month, day, location description, community area, day period, domestic, and arrest. The dependent variable is the types of crime categorized into four major categories (violent, property, drug, and other crime). After the feature selection phase, categorical data is converted into numerical data. DNNs algorithm works better with numerical inputs. There are many ways to convert categorical values into numerical values. Each approach has its trade-offs and impact on the feature set [31]. First, the string values of the feature set are converted to integer values using the label encoder. To prepare data for DNN, one-hot encoding applies to labelled data and generates one-hot-encoded (a.k.a binarization) data. This is because DNN is more accurate with one-hot encoded input [31] and used to prevent misinterpretation and the errors that models could make using data pre-processed by label encoding only. Moreover, there were 36 crime types in the Chicago dataset regarding the target variable, which showed an imbalanced class distribution. In order to reduce the skewness of the class distribution, these crime types can be categorized into four major categories (violent, property, drug, and other crime), following the same way as in [24] using Chicago data that spans from 2014 to 2016 in order to make a fair comparison. This way, we reduced the number of target variable categories, sped up the modelling process, and generated a more manageable set [32].

3.2.1 Imbalanced class distribution

Learning from skewed multi-class datasets can be problematic, and this is an important topic that arises very often in practice in classification problems [17]. The classification approaches based on imbalanced data have recently attracted a great deal of attention from researchers in data mining over the past few decades. Imbalanced class distribution can be problematic since it leads to performance degradation, leading to misclassification problems, i.e., machine learning techniques would perform poorly on the minority classes. Therefore, the classification approaches to imbalanced data have significantly improved the algorithm’s performance. Machine learning, and more specifically, classification of crime types occurrence, is a practical approach to predict the crime type occurrence that will occur in the future. However, the imbalanced class distribution problem is prevalent in criminal records. These records have different kinds of crimes; some frequently occur, whereas others are rare. Since the accuracy may perform poorly using imbalanced data and add inadequate information about the classifier’s effectiveness, an upsampling technique is applied, which will be discussed further next.

There are two main approaches to address the class imbalance problem: an oversampling strategy used to over-sample the minority examples and an under-sampling strategy designed for the majority examples. The former uses some techniques, including duplicating samples in the minority class and creating artificial samples from the existing examples. The former is the so-called SMOTE (Synthetic Minority Oversampling)—one of the common approaches for handling imbalanced datasets, which aims at balancing class distribution through generating synthetic minority samples (examples) to over-sample the minority class [12]. Chawla [12] introduced the SMOTE algorithm, which used a linear interpolation method to synthesize the minority class samples. Later, the SMOTE algorithm was improved by other algorithms, such as the Borderline-SMOTE algorithm and the Random-SMOTE algorithm proposed by Han et al. [16] and Dong [14], respectively. As the name suggests, oversampling is a technique where we increase the number of samples in the minority class to match the total length of the majority class labels. As remarked previously, some classes occur most of the time while other types occur very infrequently, and this variation causes a class imbalance issue. One way to solve this problem is to oversample the examples in the minority classes. This can be achieved by simply synthesizing examples from the minority classes in the training dataset before fitting the models. This type of data augmentation for tabular data can be beneficial. For this study, Synthetic Minority Oversampling Technique (SMOTE) [12] was applied to balance class distribution and avoid the overfitting problem. Figure 1 shows the categories before and after applying SMOTE.

3.2.2 Data normalization

Normalization has become one of the most important steps in order to scale the features to fit a certain range [28]. There are different types of normalization techniques. Among them, we utilized Min-Max normalization, which is given by the following formula:

where \(x = (x_1,...,x_n)\) and \(z_i\) is the \(i^{th}\) normalized data.

3.3 XGBoost algorithm

XGBoost is a decision-tree-based ensemble machine learning algorithm that sequentially adds a learner to the top of the previous tree structure. In prediction problems, neural networks tend to outperform all other algorithms or frameworks. However, decision tree-based algorithms can be a good option for the prediction problem of tabular data. Furthermore, gradient boosting algorithms like XGBoost have many hyperparameters, and hyperparameter tuning is an important part of using them.

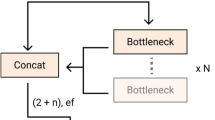

3.4 Proposed model architecture

The proposed DNN structure includes five layers, as shown in Fig. 2. The input layer receives the training data from the previous pre-processing step passing through the following dense layers-3 hidden layers with 160, 64, and 64 units at the output, activated by an activation function called Rectified Linear Unit (ReLU), followed by a ’softmax’ as an activation function with four nodes, with each node representing one class to predict the output. In order to reduce overfitting, dropout layers are used between the network layers. Some activations (nodes) dropped out randomly in those layers, which drastically helps in speeding up the training process [34]. The dataset was divided into 80% for training and 20% for testing purposes.

The obtained result from the hyperparameter optimization stage suggests that 10% of dropout probabilities were the best values for all dropout layers. Then, the model compilation is defined. We used categorical cross-entropy as a loss function and categorical accuracy as a metric. Finally, the model was trained for ten epochs in each hyperparameter combination and evaluated using the validation set at the end of each epoch. The model is trained with a batch size of 32. All the work for the experiments was carried out using an Intel Core i5 2.3 GHz, 8 GB RAM system running macOS. The model is constructed using Tensorflow and Keras libraries.

3.4.1 Hyperparameter optimization

In order to ensure good performance, hyperparameter optimization is applied. For the proposed algorithm, slightly more care must be taken to set the learning rate value to fit the models to training data appropriately, and as a result, the model can generalize well on unseen data. Keras tuner makes it easy to define a search space, and leverage included algorithms to find the best hyper-parameter values. It comes with Bayesian Optimization, Hyperband, and Random Search algorithms built-in. For using the Keras tuner, first, a tuner is defined; its role is to determine which hyper-parameter combinations should be tested. Next, the library search function performs the iteration loop, which evaluates a certain number of hyperparameter combinations. Then, evaluation is performed by computing the trained model’s metric on a validation set. Finally, the best hyperparameter combination in terms of validation accuracy can be tested on the test set. Therefore, to perform the hyperparameter tuning process, we need to define the search space, that is to say, which hyperparameters need to be optimized and in what range. We first define six hyperparameters that can be tuned. These hyperparameters and their search space are shown in Table 1. The optimized DNN parameters are shown in Table 2 and Table 3.

3.5 Exploratory data analysis (EDA)

We conduct EDA in order to understand crime trends. Figure 3 shows the number of crimes committed during the day hours. It can be seen that from 12 afternoon onwards, the number of crimes is increased dramatically until midnight, then it decreased. A possible reason for this might be because the criminals planned to exploit the times when people are at work to commit crimes, implying that more attention should be paid during this interval. It can be observed from the right plot (b) that the period from 2 pm-10 pm has a large number of criminal incidents compared to the period from 10 pm-6 am. Note that the colour indicates the arrest percentage-the darker the colour, the higher the arrest percentage. Figure 4 demonstrates interesting insights into the monthly and yearly crime density statistics. As can be seen from (a), the month of July has the maximum crime occurrence across all years in Chicago. In contrast, an interesting observation is that February has the least number of crimes across all the years (2001 - 2020). A possible reason behind this could be that people in February in the USA spend most of their time in their homes, reducing the likelihood of criminal events. Regarding the right plot(b), the crime counts over the years from 2001 until 2020 are highlighted; crime has reached its maximum from 2001 to 2008 and has gradually declined over the years. Figure 5 shows the top three safest and most dangerous community areas in Chicago; such analysis would help law enforcement agencies allocate more resources to the areas with more crime incidents. For example, Austin has the highest crime rate, implying that proper measures need to be taken for this area, such as increasing police officers or any other appropriate resources.

To investigate the relationship between the crime rate and socioeconomic factors, demographic data extracted from Chicago open data portal and a scatter plot has been created, highlighting the impact of these indicators on the crime rate from 2008 to 2012. The community crime rate is associated with unemployment, low income, and poverty, at least at this analysis level. Figure 6 (a) shows the percentage of people aged +16 who were unemployed against the number of crimes w.r.t the community area. Figure 6 (a) provides a closer look at such a relationship where it shows that as the percentage of unemployed people aged +16 increases, so does the number of crimes for that specific community area. So, the community areas with more unemployed people aged 16 or older have higher crime rates. The regression line in this figure has an RS equal to 0.14 and a p-value equal to 0.0007, which implies a positive correlation between the number of crimes and the percentage of unemployment. This is because the obtained p-value is less than 0.05, which indicates a significant relationship. Therefore, we agree with [19] that there is a correlation between the crime rate and unemployment, which implies that the crime rate will increase as the unemployment percentage increases. Similarly, Fig. 6 (b) provides a closer look at the relationship between the crime rate and the percentage of households below the poverty factor, where it shows that as this percentage increases, so does the number of crimes for that specific community area. The regression line in this figure has an RS equal to 0.14 and a p-value equal to 0.2, which implies a weak relationship between the number of crimes and the percentage of households below the poverty factor. This is because the obtained p-value is less than 0.05, indicating a weak relationship. Likewise, Fig. 6 (c) highlights the per capita income level against the number of crimes w.r.t the community area. This indicates that as the income level decreases, the number of crimes for that specific community area increases. So, the community areas that have less income are more likely to have higher crime rates. The regression line in this Figure has an RS equal to 0.14 and a p-value equal to 0.0003, implying a positive correlation between the number of crimes and the income level. This is because the obtained p-value is less than 0.05, which indicates a significant relationship.

4 Results and discussion

We applied the model described above with SMOTE strategy. The evaluation metrics used are micro F1_score, macro F1_score, precision and recall. When a set of classes is imbalanced, the macro F1_score is an important metric to validate the classifier’s ability to perform well in smaller categories [6].

Table 4 provides a comparison of the performance metrics between the proposed DNN model and the baseline XGBoost model. The DNN model demonstrates superior performance across all evaluation metrics, indicating its effectiveness in predicting crime types. Notably, the DNN model achieves a higher micro F1 score of 64.72% compared to the baseline model’s score of 52.62%, representing an improvement in overall prediction accuracy. The macro F1 score, which considers the performance across different classes, is a crucial metric for assessing the classifier’s ability to perform well in smaller categories. The DNN model achieves a macro F1 score of 56.89%, outperforming the baseline model’s score of 48.48%. This indicates that the DNN model exhibits better generalization and performs well in predicting crime types, particularly in the less frequent categories. Precision measures the model’s ability to correctly identify positive instances, while recall measures the model’s ability to capture all positive instances. The DNN model demonstrates higher precision (68.84%) and recall (64.72%) compared to the baseline model, indicating its effectiveness in correctly identifying and capturing crime types. Overall, the quantitative findings from Table 4 support the claim that the proposed DNN model outperforms the baseline XGBoost model in predicting crime types. The improvements in micro F1 score, macro F1 score, precision, and recall highlight the superior performance and accuracy of the DNN model. Figure 7 shows the confusion matrix of the proposed model.

4.1 Potential application domains

Some potential applications of the proposed approach include:

-

Urban Planning and Resource Allocation: The predictive model can be used by city planners and law enforcement agencies to allocate resources effectively. By identifying high-risk areas and predicting crime types, they can allocate resources such as police presence, surveillance, and lighting infrastructure accordingly.

-

Public Safety and Crime Prevention: The model can aid in developing proactive crime prevention strategies. Law enforcement agencies can use the predictions to prioritize patrols, increase surveillance, and implement targeted intervention programs to prevent specific types of crimes in specific areas.

-

Emergency Services Optimization: Emergency services, such as ambulances and fire departments, can benefit from the predictive model. By identifying areas with a higher likelihood of specific types of crimes, emergency services can optimize their response routes and readiness, ensuring timely and efficient emergency assistance.

-

Community Engagement and Awareness: The model’s predictions can be shared with the public to raise awareness and encourage community participation in crime prevention. Community members can be informed about the prevalent crime types in their neighborhoods and take proactive measures to enhance their safety and security.

5 Conclusion

We present a predictive model for crime type occurrence prediction based on spatio-temporal information. We use a real-world crime dataset of Chicago city. We propose a deep neural network model to predict crime categories. To improve the prediction quality, hyperparameter optimization was applied using Keras Tuner. After conducting data pre-processing, classification-based prediction is applied. The effectiveness of the proposed DNN model has shown good accuracy by optimizing its hyperparameters. Moreover, the experiment is conducted to handle class imbalance using SMOTE strategy. As feeding the original data to the classifiers does not express the desired accuracy and shows biased results (i.e., the model is biased towards those categories with fewer examples and gives the most frequent categories as a prediction result each time), the oversampling approach builds the model with higher accuracy. While XGBoost as a tree boosting helps reduce the overfitting of imbalanced data and thus handles the class imbalance in skewed datasets, a deep neural network approach shows superior results compared to XGBoost on imbalanced data. The experimental results demonstrate that the proposed model is highly effective, if not superior, compared to its counterparts and other algorithms. The proposed model performed, on average, around 6% better considering the macro F1 score compared to the baseline model.

Data Availability

The data is available at https://data.cityofchicago.org/Public-Safety/Crimes-2001-to-Present/ijzp-q8t2

References

Chicago Data Portal. Retrieved Feb 2023, from https://data.cityofchicago.org/Public-Safety/Crimes-2001-to-present-Dashboard/5cd6-ry5g

Al Boni M, Gerber MS (2016) Automatic optimization of localized kernel density estimation for hotspot policing. In: 2016 15th IEEE international conference on machine learning and applications (ICMLA). pp 32–38

Alghamdi J, Huang Z (2021) Modeling daily crime events prediction using seq2seq architecture. In: Qiao M, Vossen G, Wang S, Li L (eds) Databases theory and applications. Springer International Publishing, Cham, pp 192–203

Almanie T, Mirza R, Lor E (2015) Crime prediction based on crime types and using spatial and temporal criminal hotspots. Int J Data Mining Knowl Manag Process 5(4):01–19. https://doi.org/10.5121/ijdkp.2015.5401

Bappee FK, Petry LM, Soares A, Matwin S (2020) Analyzing the impact of foursquare and streetlight data with human demographics on future crime prediction

Benevenuto F, Magno G, Rodrigues T, Almeida V (2010) Detecting spammers on twitter. In: Collaboration, electronic messaging, anti-abuse and spam conference (CEAS). vol 6, p 12

Bogomolov A, Lepri B, Staiano J, Oliver N, Pianesi F, Pentland A (2014) Once upon a crime: Towards crime prediction from demographics and mobile data. arXiv.org http://search.proquest.com/docview/2084601394/

Braga AA (2001) The effects of hot spots policing on crime. The Annals Amer Academy Political Social Sci 578(1):104–125. https://doi.org/10.1177/000271620157800107

Brown D (1998) Regional crime analysis program (recap): A framework for mining data to catch criminals. vol 3, pp 2848–2853. http://search.proquest.com/docview/26742508/

Buczak AL, Gifford CM (2010) Fuzzy association rule mining for community crime pattern discovery. In: ACM SIGKDD workshop on intelligence and security informatics. ISI-KDD ’10, Association for Computing Machinery, New York, NY, USA . https://doi.org/10.1145/1938606.1938608

Chainey S, Tompson L, Uhlig S (2008) The utility of hotspot mapping for predicting spatial patterns of crime. Sec J 21(1–2):4

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) Smote: synthetic minority over-sampling technique. J Artif Intell Res 16:321–357

Chen X, Cho Y, Jang SY (2015) Crime prediction using twitter sentiment and weather. In: 2015 systems and information engineering design symposium. pp 63–68. IEEE

Dong Y (2009) The study on random-smote for the classification of imbalanced data sets. Dalian University of Technology

Gerber MS (2014) Predicting crime using twitter and kernel density estimation. Decision Support Syst 61(1):115–125

Han H, Wang WY, Mao BH (2005) Borderline-smote: a new over-sampling method in imbalanced data sets learning. In: International conference on intelligent computing. pp 878–887. Springer

He H, Ma Y (2013) Imbalanced learning: foundations, algorithms, and applications. John Wiley & Sons

Huang Y, Li C, Jeng S (2015) Mining location-based social networks for criminal activity prediction. In: 2015 24th wireless and optical communication conference (WOCC). pp 185–189

Hu T, Zhu X, Duan L, Guo W (2018) Urban crime prediction based on spatio-temporal bayesian model.(research article). PLoS ONE 13(10):e0206215

Iqbal R, Murad M, Mustapha A, Hassany Shariat Panahy P, Khanahmadliravi N (03 2013) An experimental study of classification algorithms for crime prediction. Indian J Sci Technol 6:4219–4225. https://doi.org/10.17485/ijst/2013/v6i3.6

Kang HW, Kang HB, Choo KKR (2017) Prediction of crime occurrence from multi-modal data using deep learning. PLoS ONE 12(4)

Kumar R, Nagpal B (2019) Analysis and prediction of crime patterns using big data. In J Inf Technol 11(4):799–805

Leong K, Sung A (2015) A review of spatio-temporal pattern analysis approaches on crime analysis. Int E-J Criminal Sci (9). https://dialnet.unirioja.es/servlet/oaiart?codigo=4948370

Mansour AL, H, Lundy M, (2019) Crime types prediction. In: Alfaries A, Mengash H, Yasar A, Shakshuki E (eds) Advances in data science, cyber security and IT applications. Springer International Publishing, Cham, pp 260–274

McCue C (2014) Data mining and predictive analysis: Intelligence gathering and crime analysis. Butterworth-Heinemann

Mohler G (2014) Marked point process hotspot maps for homicide and gun crime prediction in Chicago. Int J Forecast 30(3):491–497. https://doi.org/10.1016/j.ijforecast.2014.01.004, http://www.sciencedirect.com/science/article/pii/S0169207014000284

Mohler GO, Short MB, Brantingham PJ, Schoenberg FP, Tita GE (2011) Self-exciting point process modeling of crime. J Amer Stat Assoc 106(493):100–108. https://doi.org/10.1198/jasa.2011.ap09546

Mustaffa Z, Yusof Y (2011) A comparison of normalization techniques in predicting dengue outbreak. International conference on business and economics research. 1:345–349

Nakaya T, Yano K (2010) Visualising crime clusters in a space-time cube: An exploratory data-analysis approach using space-time kernel density estimation and scan statistics. Trans GIS 14(3):223–239

Patterson EB (1991) Poverty, income inequality, and community crime rates. Criminology 29(4):755–776

Potdar K, Pardawala TS, Pai CD (2017) A comparative study of categorical variable encoding techniques for neural network classifiers. Int J Comput Appl 175(4):7–9

Sengupta A, Kumar M, Upadhyay S (2014) Crime analyses using r

Soares A, Matwin S (2020) Analyzing the impact of foursquare and streetlight data with human demographics on future crime prediction. arXiv.org, http://search.proquest.com/docview/2413788144/

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. Journal Machine Learn Res 15(1):1929–1958

Traunmueller M, Quattrone G, Capra L (2014) Mining mobile phone data to investigate urban crime theories at scale. vol 8851, pp 396–411. Springer Verlag

Wang X, Gerber M (2012) Brown D. Automatic crime prediction using events extracted from twitter posts. 7227:231–238

Wang H, Kifer D, Graif C, Li Z (2016) Crime rate inference with big data. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. KDD ’16, vol 13-17-, pp 635–644. ACM

Weisburd D (2008) Place-based policing. In: Ideas in American policing. Citeseer

Yu C, Ding W, Morabito M, Chen P (2016) Hierarchical spatio-temporal pattern discovery and predictive modeling. IEEE Trans Knowl Data Eng 28(4):979–993

Yu L, Sun X, Huang Z (2016) Robust spatial-temporal deep model for multimedia event detection. Neurocomputing 213:48 – 53. binary Representation Learning in Computer Vision. https://doi.org/10.1016/j.neucom.2016.03.102, http://www.sciencedirect.com/science/article/pii/S0925231216307275,

Zhao X, Tang J (2017) Modeling temporal-spatial correlations for crime prediction. In: Proceedings of the 2017 ACM on conference on information and knowledge management. CIKM ’17, vol 131841, pp 497–506. ACM

Acknowledgements

I would like to thank Dr Helen Huang and Dr Yadan Luo for their invaluable guidance throughout this research

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

No conflict of interest

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alghamdi, J., Al-Dala’in, T. Towards spatio-temporal crime events prediction. Multimed Tools Appl 83, 18721–18737 (2024). https://doi.org/10.1007/s11042-023-16188-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-16188-x