Abstract

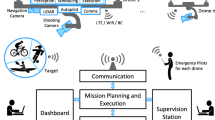

One of the most important aesthetic concepts in autonomous Unmanned Aerial Vehicle (UAV) cinematography is the UAV/Camera Motion Type (CMT), which describes the desired UAV trajectory relative to a (still or moving) physical target/subject being filmed. Usually, for the drone to autonomously execute such a CMT and capture the desired shot, the 3D states (positions/poses within the world) of both the UAV/camera and the target are required as input. However, the target’s 3D state is not typically known in non-staged settings. This paper proposes a novel framework for reformulating each desired CMT as a set of requirements that interrelate 2D visual information, UAV trajectory and camera orientation. A set of CMT-specific, vision-driven Proportional-Integral-Derivative (PID) UAV controllers can then be implemented by exploiting these requirements to form suitable error signals. These signals drive continuous adjustments to the instantaneous UAV motion parameters, separately at each captured video frame/time instance. The only inputs required for computing each error value are the current 2D pixel coordinates of the target’s on-frame bounding box, which are provided by an independent, off-the-shelf, real-time deep neural 2D object detector/tracker vision subsystem. Importantly, the 3D states of neither the UAV nor the target ever need to be known or estimated, and no depth maps, target 3D models or camera intrinsic parameters are necessary. The method was implemented and successfully evaluated in a robotics simulator by suitably reformulating a set of standard, formalized UAV CMTs.
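The abstract states only that each error signal is computed from the target's on-frame bounding box and fed to a PID controller. As an illustration of that idea, here is a minimal Python sketch written under our own assumptions; it is not the paper's CMT reformulation. The "chase"-style shot, the three normalized errors (horizontal offset, vertical offset, box-height ratio), the gains, the class names `PID` and `FollowController`, and the command names `yaw_rate`, `camera_tilt_rate` and `forward_speed` are all hypothetical. In keeping with the abstract, it uses only pixel coordinates, with no depth, 3D pose or camera intrinsics.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical bounding-box format: (x_min, y_min, x_max, y_max) in pixels.
BBox = Tuple[float, float, float, float]


@dataclass
class PID:
    """Discrete-time PID controller acting on one scalar error signal."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative term on the very first sample (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def bbox_errors(bbox: BBox, width: int, height: int,
                target_height_frac: float) -> Tuple[float, float, float]:
    """Normalized error signals computed from pixel coordinates only."""
    x_min, y_min, x_max, y_max = bbox
    cx = 0.5 * (x_min + x_max)
    cy = 0.5 * (y_min + y_max)
    e_x = (cx - width / 2) / (width / 2)     # > 0: target right of frame centre
    e_y = (cy - height / 2) / (height / 2)   # > 0: target below frame centre
    # Box-height ratio used as a depth-free proxy for shot scale.
    e_s = target_height_frac - (y_max - y_min) / height  # > 0: target too small
    return e_x, e_y, e_s


class FollowController:
    """Hypothetical vision-driven controller for a chase-style shot."""

    def __init__(self, width: int, height: int, target_height_frac: float = 0.3):
        self.width, self.height = width, height
        self.target_height_frac = target_height_frac
        # Illustrative gains only; real values would need tuning per UAV/CMT.
        self.yaw_pid = PID(kp=1.0, ki=0.0, kd=0.1)
        self.tilt_pid = PID(kp=0.8, ki=0.0, kd=0.05)
        self.speed_pid = PID(kp=4.0, ki=0.2, kd=0.0)

    def update(self, bbox: BBox, dt: float) -> Dict[str, float]:
        """Map one frame's detection to motion commands (one call per frame)."""
        e_x, e_y, e_s = bbox_errors(bbox, self.width, self.height,
                                    self.target_height_frac)
        return {
            "yaw_rate": self.yaw_pid.step(e_x, dt),
            "camera_tilt_rate": self.tilt_pid.step(e_y, dt),
            "forward_speed": self.speed_pid.step(e_s, dt),
        }


if __name__ == "__main__":
    ctrl = FollowController(width=1280, height=720)
    # One detection at 30 fps: box right of centre, slightly below, a bit small.
    print(ctrl.update((700, 300, 780, 480), dt=1 / 30))
```

Normalizing each error by the frame dimensions keeps the gains independent of video resolution, and using the box-height ratio instead of an estimated distance avoids any depth or intrinsics requirement. Other shot types would call for different error definitions and command mappings.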

Data Availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

The Benchmark_RAI and Annotations_Bicycles_Raw datasets were downloaded from https://aiia.csd.auth.gr/open-multidrone-datasets/

A default level of noise is already inserted by the simulator.

Funding

The research leading to these results has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 731667 (MULTIDRONE). This publication reflects only the authors’ views. The European Union is not liable for any use that may be made of the information contained therein.

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mademlis, I., Symeonidis, C., Tefas, A. et al. Vision-based drone control for autonomous UAV cinematography. Multimed Tools Appl 83, 25055–25083 (2024). https://doi.org/10.1007/s11042-023-15336-7

DOI: https://doi.org/10.1007/s11042-023-15336-7