Abstract

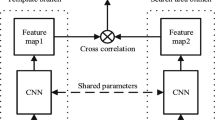

Single object tracking (SOT) is one of the most important tasks in computer vision. With the development of deep neural networks and the release of a series of large-scale single object tracking datasets, Siamese networks have been proposed, and they outperform most traditional methods. However, recent Siamese networks have grown deeper to obtain better performance, and they have become slower as a result. Most of these networks meet real-time requirements only in ideal environments. To achieve a better balance between efficiency and accuracy, we propose a simpler Siamese network for single object tracking that runs fast on low-end hardware while maintaining acceptable accuracy. The proposed method consists of three parts: sample generation, SE-Siamese, and regression localization. In the sample generation stage, the template patch and the detection patch are cropped from selected video frames using a new cropping strategy. The SE-Siamese subnetwork combines a Siamese network with a Squeeze-and-Excitation (SE) network as the feature extractor, which effectively speeds up the training phase. The regression localization network computes the location of the tracked object more efficiently without losing much precision. To validate the effectiveness of the proposed approach, we conduct extensive experiments on several challenging tracking benchmarks, including VOT2015, VOT2016, VOT2017, and OTB-100. The experimental results show that our approach achieves significant speed improvements over several strong baseline trackers (19.5 FPS to 44.4 FPS).
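The abstract does not spell out how the template and detection patches are cropped, so the sketch below is not the authors' strategy. It illustrates the widely used SiamFC convention (Bertinetto et al., 2016) that Siamese trackers typically start from. The target box is enlarged by a context margin, turned into an area-preserving square for the template, and a proportionally larger square is taken for the search region. Out-of-frame pixels are filled with the mean colour. The function names `crop_sizes` and `crop_and_pad`, the 127/255 patch sizes, and the nearest-neighbour resampling (SiamFC itself resizes bilinearly) are illustrative assumptions.

```python
import math
import numpy as np

def crop_sizes(w, h, context=0.5, exemplar=127, instance=255):
    """Return the side lengths (in frame pixels) of the square template and
    search regions around a w x h target box (SiamFC-style, assumed here)."""
    wc = w + context * (w + h)            # box width plus context margin
    hc = h + context * (w + h)            # box height plus context margin
    s_z = math.sqrt(wc * hc)              # template side: area-preserving square
    s_x = s_z * instance / exemplar       # search side at the same scale
    return s_z, s_x

def crop_and_pad(frame, cx, cy, side, out_size):
    """Sample an out_size x out_size patch covering a side x side square centred
    at (cx, cy) in an (H, W, C) frame. Pixels outside the frame take the mean
    colour; resampling is nearest-neighbour for brevity."""
    H, W = frame.shape[:2]
    mean = frame.reshape(-1, frame.shape[2]).mean(axis=0)
    # Offsets of out_size evenly spaced sample points across the square window.
    offsets = (np.arange(out_size) + 0.5) * side / out_size - side / 2
    xs = np.floor(cx + offsets).astype(int)
    ys = np.floor(cy + offsets).astype(int)
    patch = np.empty((out_size, out_size, frame.shape[2]))
    patch[:] = mean                       # start from the padding colour
    in_y = (ys >= 0) & (ys < H)           # sample rows that fall inside the frame
    in_x = (xs >= 0) & (xs < W)           # sample columns that fall inside the frame
    patch[np.ix_(in_y, in_x)] = frame[np.ix_(ys[in_y], xs[in_x])]
    return patch

# Usage: a synthetic 100 x 100 frame with a bright 40 x 20 target centred at (50, 50).
frame = np.zeros((100, 100, 3))
frame[40:60, 30:70] = 255.0
s_z, s_x = crop_sizes(40, 20)
z = crop_and_pad(frame, 50, 50, s_z, 127)   # template patch
x = crop_and_pad(frame, 50, 50, s_x, 255)   # search patch, padded near the borders
```

Because the search square is about twice the template side here, its corners extend past the frame and take the padding colour. The template stays fully inside the frame.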
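The SE-Siamese idea pairs a weight-shared Siamese feature extractor with Squeeze-and-Excitation channel reweighting. The sketch below is a generic NumPy illustration of those two ingredients, not the authors' network: the abstract does not specify where the SE block sits, the backbone depth, or the reduction ratio. It shows the standard SE operation of Hu et al. (2018) (global average pooling, FC + ReLU, FC + sigmoid, channel scaling) and a SiamFC-style dense cross-correlation that turns template and search features into a response map. The function names `se_block`, `cross_correlation`, and `siamese_response`, the reduction ratio `r = 4`, and the random weights are hypothetical.

```python
import numpy as np

def se_block(feat, w1, w2):
    """Squeeze-and-Excitation recalibration of a (C, H, W) feature map.
    w1 has shape (C // r, C) and w2 has shape (C, C // r); biases omitted."""
    z = feat.mean(axis=(1, 2))             # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z, 0.0)            # excitation, step 1: FC + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s)))    # excitation, step 2: FC + sigmoid -> (C,)
    return feat * a[:, None, None]         # scale: reweight each channel by a_c in (0, 1)

def cross_correlation(template, search):
    """Dense 'valid' cross-correlation of a (C, h, w) template over a (C, H, W)
    search map, summed over channels -> response map of shape (H-h+1, W-w+1)."""
    _, h, w = template.shape
    _, H, W = search.shape
    out = np.empty((H - h + 1, W - w + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(template * search[:, i:i + h, j:j + w])
    return out

def siamese_response(template_feat, search_feat, w1, w2):
    """Apply the same SE weights to both branches (weight sharing), then correlate."""
    return cross_correlation(se_block(template_feat, w1, w2),
                             se_block(search_feat, w1, w2))

# Usage with random features standing in for backbone outputs (assumed shapes).
rng = np.random.default_rng(0)
C, r = 8, 4
w1 = rng.standard_normal((C // r, C))
w2 = rng.standard_normal((C, C // r))
resp = siamese_response(rng.standard_normal((C, 6, 6)),
                        rng.standard_normal((C, 22, 22)), w1, w2)
peak = np.unravel_index(np.argmax(resp), resp.shape)  # best-matching offset in the search map
```

In practice the correlation is computed as a convolution on the GPU rather than with Python loops. The loops are kept here only to make the sliding-window sum explicit.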

Change history

28 March 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11042-023-15159-6

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant No. 62276127.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: The affiliations of Jianmin Wu were incorrect in the original publication of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Han, F., Jiang, S., Wu, J. et al. Real-time object tracking in the wild with Siamese network. Multimed Tools Appl 82, 24327–24343 (2023). https://doi.org/10.1007/s11042-023-14519-6

DOI: https://doi.org/10.1007/s11042-023-14519-6