Abstracts

The study analyses user awareness of multimodal biometrics and its acceptability for online transactions in the current dynamic world. The study was performed on the five underlying perspectives: User Acceptability, Cognizant Factors towards Biometrics, Technological factors, Perceptional Factors (Fingerprints, Iris, Face Recognition and Voice) and Data Privacy Factors. A questionnaire was prepared and circulated to the 530 biometrics users; on that basis, the corresponding answer was obtained for analysis. SEM is first employed to gauge the research model and test the prominent hypothesized predictors, which are then used as inputs in the neural network to evaluate the relative significance of each predictor variable. By considering the standardized significance of the feed-for-back-propagation of ANN algorithms, the study found a significant effect of DPF_3 (93%), DPF_2 (50%) and DPF_4 (34%) on the adoption of MMB. In the Perceptional construct, PRF_2 (49%) and PRF_3 (33%) was relatively the most important predictor, whereas, in User Acceptability, UAC_2 (37%), UAC_3 & UAC_5 (41%) was vital to be considered. Only one item, TCF_2 (35%), from Technological Factors, followed by Cognizant factors, i.e., CFG_1 (33%), confirmed the best fit model to adopt MMB. The research is a novel effort when compared to past studies as it considered cognizant and perceptual factors in the proposed model, thereby expanding the analytical outlook of MMB literature. Thus, the study also explored several new and valuable practical implications for adopting multimodal instruments of biometrics along with certain limitations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A human-machine interface is the key research area of contemporary computational technologies [1]. According to [2, 3], the primary aim of the research advancement is to serve humankind and create an eco-system of continuous support for the betterment of the world. In the words of [4, 39], interaction with machines for a different purpose is visible. Its success depends upon user acceptability and ease of application. Data privacy and security are also paramount factors in this digital era that is unpredictable in the fast-changing technical development process, especially in financial transactions [5]. Scholarly evidence [6, 7] stated that the use of biometrics authentication for offline transactions ensures that the use of biometrics authentication in the authenticated user’s presence while operating at the point of sale. Moreover, during online transactions, the authentication and verification process enhances reliability and improves security for the user and service providers [8].

According to [10, 11], biometric systems are traditionally classified into two key areas, i.e., Unimodal and Multimodal biometrics. Unimodal is a system based on a single biometric trait, unlike a multimodal system where more than one physiological or behavioral trait is used to recognize the person. Popular physiological traits are the face, ear, iris, fingerprint, finger vein, and knuckle, whereas behavioral traits are gait, keystroke, voice, eye movement, facial gradient etc. Over the years, quality datasets of all traits have been developed for research purposes and are available for experimental testing for academic and commercial uses. Researchers have combined multiple traits for identification problems to improve performance and better data security, known as Multimodal biometric (MMB) fusion. Due to its incredible efficiency, cheap cost, and convenience, biometric technology has become the most extensively used human identification and authentication technology in public and private sectors [12]. Biometric systems, also known as Identity Certification (IC) systems, are the art of constructing authentication procedures using biometric traits to identify automatically, measure, and validate a living human [13]. The biometric system is based on the concept that everyone is unique in cognitive and behavioral characteristics. Identifiers are permanent, one-of-a-kind, and distinct from one another. Developing such systems is to improve the digital world’s safety and security. Science, security, surveillance, identifying information, and commerce require biometric recognition systems to ensure user authentication and identification. Because it is difficult for an imitator to detect and skit a registered person’s biometric modality, biometric technologies have grown popular due to their universal framework (see Fig. 1). High accuracy rates and the difficulty of spoofing are the significant criteria that separate old security solutions [14].

Similarly, MMB has shown better results in online trade transactions in terms of more reliability, enhanced security, and safe transaction [15]. On the other hand, the unimodal process where single traits are utilized for authentication is comparatively more prone to imposter attacks and has higher chances of eye dropping [13]. According to [16,17,18], the last decade has been a transition period for electronic payment-based applications and commercial uses. The wild development of processors and high-speed internet has provided a foundation for digital payment systems in this era. However, traditional methods like memory-based password gateway are not safe to give such transactions high security and reliability. Instead of fusion of face, ear, iris, keystroke, voice, and fingerprint provides a robust mechanism for authentication (see Fig. 2). Related work [19, 20, 22] also stated that various fusion techniques classify into different categories, such as rank level, score, decision level, and feature level. Advantages and challenges associated with these different categories compete in terms of performance parameters. For applications requiring rich information for decision making, feature-level methods are used. The terminology depends upon the level of fusion and feature extraction positions [23].

According to [24], “supervised learning and pattern acknowledgement are critical research areas in information retrieval, data engineering, medical image processing, and intrusion recognition”. This work aims to identify a robust classifier and consider a network-based intrusion detection system. Another incredible research has been done by [28], using the dataset of 858 budding cancer patients to corroborate the performance based on the combinations of iForest with SMOTE and iForest with SMOTETomek. Besides, the study used a mobile application that can gather data on cervical cancer risk factors and delivers results from CCPM for immediate and appropriate accomplishment at the early phase of cervical cancer.

2 Review of literature

A systematic review of the literature effectively coordinates and deduces facts relevant to a particular research question rationally, descriptive, dependable, and accurate. [21, 29, 30] studied multimodal biometrics which is based on a fusion of different physical and behavioral traits like face, ear, iris, gait, keystroke, voice etc. [4] presented a detailed study on types of fusion methods for MMB. They have highlighted various scoring fusions: min, max, average, mode, and others. Feature and sensor levels come under the first category score, whereas decision & rank level fusion is in the second category. The recent studies viz. [26, 31, 32] advocated that a combination of unimodal and multimodal biometrics features is helpful for text and voice output accuracy. [33] used unimodal score and multimodal score to compare performance for different conditions. They found MMB has the advantage of a more secure and reliable process at the cost of increased complexity.

In the words of [34,35,36], multimodal biometrics outperformed unimodal in multiple experiments with different feature extraction algorithms. However, authors have used a different combination of face, ear, iris, fingerprint, palm print, and palm knuckle to confine the results. [38] stated fused fingerprint, retina and finger vein at feature level and used RSA key generation to achieve FAR (false acceptance rate) .01, 95.3% GAR (genuine acceptance rate). Modified RSA (Rivest, Shamir and Adleman) is the contribution made by the authors to improve GAR, FAR from 90% & 2.06%, respectively. Individual unimodal GAR FAR compared with the MBM results. RSA with fingerprint GAR of 80.2% and FAR of 3.25%, RSA with retina GAR of 84.2% and FAR of 2.2%, RSA with finger vein GAR of 87.6% and FAR of 0.52% were obtained. [15] reported fast and robust biometrics system design using Ear trait. Later it was combined with other characteristics and compared in precision, recall rate, accuracy, ease of use. The ear has stable features for long tenure and is comparatively less affected by age and other factors. [37] studied the computerized progression of classifying skin disease using deep learning MobileNet V2. They specified that the projected system could assist general practitioners in diagnosing skin conditions proficiently and reduce complications and indisposition. In a similar line of research [40] developed a consolidated tree construction (CTC) algorithm to create a sound sample from a high-class imbalanced dataset at the detector’s pre-processing phase. The outcomes delineated the accuracy of 99.96%, reflecting the CICIDS2017 dataset and the NSL-KDD data frame using thirty-four topographies.

Scholarly evidence [41, 42] a robust and efficient ear-based biometric system using AdaBoost-based ear detection, local features extraction, and stereo matching-based recognition algorithm proposed in this work outperforms multimodal biometrics [32]. [43] outlined IITD, UND-F, USTB databases used for experimental purposes and comparison. The results were compared with other methods where SVM, plane Adaboost, MNN and the proposed method generated accuracy of 96.20%, 94.40%, 96.40% and 98.50%, respectively. [36] highlighted the feature level fusion-based method, where correlation analysis is done in class and intraclass feature sets. Discrete correlation analysis is adopted for feature fusion to get a more pairwise correlation. DCA also reduces class correlation to create a clear boundary. The experimental results have shown better performance than other LCA methods in similar environments. [44] outlined the detailed status of contemporary biometric system design issues, prospects, commercial uses, and market demand and supplied part of unimodal vs multimodal biometrics systems. A significant portion of the current market is occupied by a unimodal system which is getting tough competition from multimodal systems. Moreover, users are changing priorities from user-friendliness to security and fraud protection. Some previous studies viz. [33, 45, 46] studied various applications of biometrics in school attendance systems, public place access, office/institute access, airports, and sensitive regions like border crossing points. At the same time, online commercial benefits like purchase, banking transactions, stock trading, shopping, and non-commercial online parts such as necessary access like defence lab systems or high-security areas access are studied in detail [47]. Certain authors [1, 12] highlighted the increasing impact of artificial intelligence and deep learning in biometric system design. Using AI-based algorithm testing and deployment part is becoming smooth. Moreover, smart devices like phones and other tablets are now using multimodal biometric algorithms to enhance user experience.

[13] conducted a brief review on the research dimension of multimodal biometrics. It was reported that MBS could be classified into two major areas synchronous and asynchronous systems. In the first case, two or more biometric systems are under the same authorization systems. In contrast, asynchronous category two, the biometrics process, is used one after another. Further, subdivisions are serial (cascade), parallel, and hierarchical modes. [41] categorized the fusion schemes into two categories like fusion before and after matching: feature level, score level, decision, rank level, and hybrid levels. Soft biometrics is another vital aspect of a user-friendly system where age, gender, height, eye color, skin color, and hair color input are added with other traits. It is convenient for the user and enhances the perceived ease of service. The pattern matching process is also vital in an extensive database. Some prominent techniques, such as k-d & k-d-b, are frequently used. [48] used a convolution neural network for advanced biometric system design with cancellable biometrics rules. Their previous work used braided CNN for speech classification, which generated a high accuracy of 97.5%, 98.8%, and 98.06% for US8k, GSCv1, and GSCv2 datasets. Later in the cited work, they utilized cancellable biometrics for upgrading the security and protection of in-ear biometrics. This shows the path to use cancellable biometrics in MBS design to improve safety and privacy. CNN and SVM combination are utilized for feature extraction and classification part. SHA 3 hashing algorithm is used for secure ear templates where 12.5 EER were obtained on AWE dataset. As SHA 3 is noninvertible, process intrusion is almost impossible.

For multimodal biometrics, gait is essential as user acceptance and ease of data collection are very high. In 2014 [34], successfully presented a novel approach to obtaining gait identification from low-quality videos where the frame rate was 1fps and resolution was 32*22. To reduce error large number of weak classifiers (ensemble of classifiers) were used over average gate image (AGI), and it is noted that performance was highly correlated with diversity. The experiment was conducted on outdoor and indoor databases like USF and OU-ISIR-D. Different kinds of fusion strategies are used to cope with less information, where features matching scores from other spaces of the same trait were fused. In 2018, a detailed survey on Unimodal and Multimodal biometrics was carried out by [36]. They explained the biometric system’s modules like sensors, feature extractors, pre-processing, matching modules, and decision modules. Various biometric systems like face, ear, iris, fingerprint, voice, manner, and palmprint have been mentioned. The fusion methods were classified into three categories such as rule-based method, classification-based, and estimation-based fusion methods. Kalman filter and particle filter had been explained for the estimation-based fusion category. This work gives an excellent introduction to biometric studies and the fundamental technologies used. In recent years, CNN-based biometric system design is becoming popular as traditional feature extractions/ classification methods are less efficient in large datasets [49]. Also, it removes the need to pre-process the inputs, as multiple filters inside the network layers perform the task automatically in the state of the artwork, invalidating the significance of sample size in biometrics identification. The proposed multivariate copula models for correlated biometric acquisitions demonstrated the calculation of minimum numbers of samples required for the authentication process. The CNN-based system manages such tasks with more excellent performance [48].

[13] delineated an excellent analytical outlook on customer awareness of the biometric mechanism of unimodal and multimodal systems in online transactions. It motivates system designers to improve system performance and integration with modern e- platforms such as banking sites, e-commerce platforms and device access. More than 100, 93.4% of users have shown high interest in multimodal biometrics systems based on online transactions in the selected sample size. Furthermore, a multidimensional questionnaire was floated among users from different classes regarding their profession, gender, age etc. According to [50], 68.87% of customers reflected confidence in the online purchase if platform authentication is MBS equipped. By analyzing the previous outcomes of biometrics behavioral studies in a recent survey, it has been observed that several studies have subsumed the adoption of technology through a notion of a single theory. A single theory cannot attempt a comprehensive view of the research matter, so a model of several theories is conducive and advantageous for panoramic in-depth research. Ideas from earlier studies were used as part of the literature study, and this material was filtered and aggregated to derive a broad range of previously found factors. Several studies focus on the organization’s internal and external conditions and technological aspects in analyzing drivers for new technology diffusion [51]. The TOE framework [52] considers multidimensional factors when studying technology adoption. It yields a greater explanatory power than other adoption models, such as the Technology Acceptance Model [53]. The manifests selected in this study also mapped with the Theory of Planned Behavior (Ajzen 1991) matrix, the Unified Theory of Acceptance and Use of Technology [47]. Comprehensive usages of these models may help establish whether MMB is appropriate in facilitating the delivery of online financial transactions. The extended model fit describes how suitable MMB is for delivering services, and this may be tested by determining how user-friendly and secured the tasks are. In the current Covid 19 era, researchers are working on touchless biometrics and remote authentication processes using AI-ML models [55]. These technologies are more user-friendly, robust, and reliable for commercial use and high-security asset access. Moreover, users are becoming more convenient with the advanced version of the process. However, the previous studies based on literature review did not support any theory and were not empirically validated in MMB [54].

The paper is meticulously framed to get inside factors influencing biometrics adoption in the present scenario where people are restricted from moving freely due to Covid-19. To fulfil the research objectives, the following section is conducive to comprehension. Section 2 portrays the literature review, research gap, theoretical constructs, and development of hypotheses. Section 3 outlines the research frame and methodology to assess customer awareness and acceptability of biometrics transactions. Section 4 illustrates data analysis, SEM, and hypotheses testing, followed by ANN modelling, sensitivity analysis and interpretation with the help of statistical tools. The last section considers practical implications, limitations, future scope, and conclusions.

2.1 Research gap, conceptual model, and novelty

The model’s constructs were evaluated using artefacts found in the existing literature. A literature review of previous works creates the measurement constructs, and the present study seeks to identify and determine the factors influencing cloud computing implementation in LMS [56]. The notion of biometrics adoption was assessed using 21 items (five-point Likert scale) from published sources. Thus, theoretical constructs viz. technological factors, perceptional factors (fingerprints, iris, face recognition and voice), cognizant factors towards biometrics, user acceptability and data privacy factors extracted from previous research [5, 10, 13].

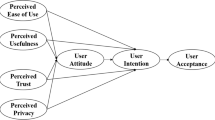

This research contributes to a survey related to customer awareness and acceptability of biometric mechanisms while transacting online. As a result, the proposed questionnaire is prepared and receives responses from various customers. Based on feedback received from technical experts/users, hypotheses of the proposed model are tested by framing SEM and followed by ANN. This is one of the few studies investigating the factors that affect MMB adoption. As a result, the established model contributes to the literature in this field—similarly, few studies quintessence on the adoption of BA from users’ perspectives (see Fig. 3).

3 Theoretical constructs and development of hypotheses

The research examines factors of adopting multimodal biometrics mechanisms in online transactions. Analysis was done based on the survey to find out the degree to which they are embraced. To examine the objectives- user acceptability, cognizant factors, technological factors, perceptional factors, and data privacy were exercised to determine the impact on the adoption performance of the virtual consumers. Distributions of essential variables were included in the investigation reported in previous studies. Table 1 highlights the constructs and their reported significance in the literature. In different phrases, primary and higher-level data analysis is done on the selected constructs.

There is stepwise extraction of manifests from past research work, i.e., users’ acceptability criterion towards biometrics usage while performing the online transaction, the notion of the cognizant factors towards Biometrics usage by the users, and technological factors that evoke the practices of biometrics applications. Subsequently, perceptional factors (Fingerprints, Iris, Face Recognition and Voice) influence MMB, followed by data privacy & security while using biometrics submission.

3.1 Hypotheses for the research

The following hypotheses are proposed based on the literature review and conceptual model:

-

H1: There is a connotation between user acceptability and MMB while transacting online.

-

H2: There is subsume association between technical factors and MMB adoption.

-

H3: There is evidence of cognizant Factors that influence MMB.

-

H4: Perceptional Factors (Fingerprints, Iris, Face Recognition and Voice) are strongly associated with MMB.

-

H5: There is an association between data privacy factors and the adoptive operation of biometrics operations.

4 Research methodology

The study is a perfect blend of a quantitative-qualitative frame conducive to exploring the determinants of adoption of biometrics mechanisms from users’ viewpoint. All the elements used to quantify the study variables were adapted from previous research, with slight terminology adjustments to acclimate them to the unique biometrics context from the users’ perspective [49]. The adopted process flow is presented in the Fig. 4.

4.1 Survey instruments and data collection

Nevertheless, the survey instruments were finalized by focus group discussions and walk-through evaluations of selected respondents who indulged in biometrics features while conducting online transactions. This confirmed the content validity of the questionnaire used to gauge each unobserved variable depicted in Table 1. This objective was accomplished using the observational approach of focus groups [65]. In this instance, a few focus groups were formed comprised of individuals with a working knowledge of biometrics applications. The study’s subject was assigned to the selected groups to familiarize them with biometrics terminology before filling out the final questionnaire. The questionnaire survey was developed using these inputs and previous research findings.

Assembly 530 samples handle the procurement of relevant primary data by virtue of using Google form from plentiful individuals concerning the online transaction. In addition, a personal interview was conducted to obtain the required answer, i.e., biometrics data. This personal interview cleared the doubts of the respondents about an acquaintance of the question based on biometrics awareness. Therefore, the reliability of the responses can be enhanced. Similarly, the sample size of the study is based on the following calculation that 530 respondents are a good fit for further study [66]:

where:

-

n number of sample size.

-

σ standard deviation as considered at 1.2 based on prior studies.

-

Qα upper tail in the SND. corresponding to Qα = 1.96, p value-0.05.

-

Qβ lower tail in the SND. corresponding to Qβ = −0.84 at β = 0.2.

-

μ1 and μ2 difference in means.

4.2 Measurement used in the study

To elucidate the degree of correlation among the variables in an array of data sets, EFA is a conducive technique to examine a factor structure. It facilitates the performance of certain functions, i.e., exploring the pattern of data configurated, illustrating the relationship among various patterns, and extracting valid data to the next level of analysis [67, 71]. CFA is used to test the core hypothesis and evaluate the association between observed variables underlying their latent constructs to confirm the observed variables in a set of factor structures. CFA is a significant and vital part of the modelling structure equation [9]. It is advantageous and apt to formulate observed variables for measuring and validating the hypothesized model of latent constructs [72]. The researchers use the relevance of items representing the proposed theory, empirical or scientific research, postulate the association framework a priori and confirm hypotheses. Thus, CFA is a unique specified factor conjoint technique widely used to validate the model supported by any previous theory [73].

A priori model, number of factors, item loading on each factor, fit indices, error term, and standard regression weights on items are elucidated by SEM. The goodness of fit (GoF) should be considered to conceptualize the model practically. Moreover, GoF is inversely interrelated to sample size and the number of variables in the model. Therefore, it is vital and mandatory to view reliability in terms of Cronbach Alfa, followed by establishing convergent and discriminant validity of a model [74, 75]. With the assistance of SPSS and AMOS tools, the recorded data was listed, analyzed, and interpreted. Having considered 530 respondents from various respondents, mostly bankers, technical experts and engineers, the researcher intends to test the convergent and discriminant validity of the group variables. Therefore, the questionnaire consists of two sections. The first part encompasses six questions about the all-purpose profile of the experts and actors using biometrics while doing online transactions. The second part entails five major constructs along with 22 variables. The research took place from January to April of 2021.

5 Data analysis and interpretation

The researcher instigated the data analysis by examining responses to survey questions intended to measure awareness and acceptability towards adopting the MMB Mechanism while doing online transactions. Prima facie, discussing well-versed users’ demographic profiles and becoming acquainted with the biometric application is pertinent (Table 2).

The above table enunciates that the sample comprised 52.80% male and 47.20% female; principally, 47.70% of respondents were below the age set of 30 years, and 43.60% were between 30 and 40 years, 08.70% persons were above the age of 40. Besides, most technical respondents were UG, i.e., 62.30%, PG professionals, 19.80%, and others were only 17.90%. 44.30% of experts had work experience of fewer than two years, 34.30% were 2–5 years, and 21.40% share above five years. Among the respondents, a significant share of 42.80% of service class, businesspersons were 40%, and share statistics of professional was 17.20%. Eventually, most defendants were from the junior management level, i.e., 36.80%, middle management scored 46.40%, and top management share was 16.80%. Prima facie, the research aims to test the reliability of selected items that have already been discussed; Cronbach’s Alpha expressed the score of 0.825, summarized that the observed variables were reliable enough for confirmatory statistical analysis.

5.1 Harman’s single variable test

The statistical distribution of the constructs used in the analysis outlines in Table 3. Skewness and kurtosis were determined to ascertain the symmetry distribution and peakedness of the gathered data. In general, skews are considered within the spectrum of alternatives +1/−1, and the values are usually distorted [76, 77]. The analysis outlines those respondents prioritize user acceptability (20.31), perceptional factors such as fingerprints, iris, facial recognition, speech etc. (15.54), technical factors (15.33), and cognizant factors towards biometrics (12.80) as primary reasons for embracing MMB, followed by data privacy factors (11.84). All in all, there is an asymmetrical distribution of results when it comes to biometric adoption. According to Harman’s single factor test, just 24% of the variance in all variables is explained by a single factor, demonstrating that CMV is not a problem in this analysis. Another test was run to ensure that no similarities were greater than 0.90, which may mean skewed data [62]. As a result, none of the measured correlations surpasses the suggested threshold, indicating that CMV is not a severe concern in this analysis.

5.2 Measurement model

Determinants of biometrics mechanism adoption were used in research to evaluate the users’ acceptance and awareness while transacting online. Exploratory factors were used to understand biometric behavioral, knowledge and adoption. Moreover, the Kaiser-Mayo-Olkin score was used to gauge the sample size’s adequacy. The measured score of 0.874 intended that the samples were adequate for the factor analysis. Determinants of MMB were analyzed, and Table 4 enunciated the G’F’I (0.936), A’G’F’I (0.812) along with N′F’I (0.866) and R’F’I (0.833) whereas C′F’I (0.920), Tucker-Lewis delineates 0.918. The value of RMSEA was 0.035, which specifies that the anticipated model is a decent fit [67, 78].

The model exposed a good fit measurement i.e., λ2 = 951.69, df = 195, CMIN/df = 4.88, at significant level p = 0.038. As illustrated in Table 5, the factor loading, Cronbach alfa, AVE, and CR of all factors were highly significant [79].

Possible options for normality, linearity, cointegration and homoscedasticity were conducted before evaluating the proposed model. The data was then subjected to CFA to see if it matched the proposed theoretical model and confirm the gauged constructs’ validity and reliability. The factor correlation among latent items has to be less than the square root of the average variance explained by each factor [4]. With all figures, it has been inferred that the model meets the standards of reliability, the validity of substance, convergent validity, and discriminant validity. In this way, it was succeeded by testing the structural equation model.

The discriminant validity infers the degree to which dormant items are varied from other dormant variables in the selected frame. Also, the construct correlation among dormant items must be less than the square root of the AVE of every factor [80]. With these all standards, it has been confirmed that the model estimates the criteria of reliability and the rationality of matter with convergent validity (Table 6). In this way, it was accomplished by perusing the SEM.

Figure 5 revealed the standard estimates of confirmatory model in which six factors depicted their item and eventually examined the proposed hypotheses, structural model fit, and path analysis. Confirmatory factor analysis (CFA) is a multivariate statistical procedure for determining how well measured variables represent the number of constructs. The rationalized SEM model exhibits theorized connotation among the latent variables. The assessment of standardized regression loads was applied to fetch an appreciation concerning the proposed disposition, as signified by (Agrawal, 2019; Rakesh Kumar Birda & Manish Dadhich, 2019; Singhal, 2020). In the table below, βeta, S. Er, Cri. ratios were positive, and eventually, null hypotheses could be rejected. The computed p-values of all five projected hypotheses were less than 0.05, viz. user acceptability and biometrics adoption (0.035), technical factors and adoption of multi-model biometrics adoption (0.045), Cognizant Factors towards Biometrics (0.040), Perceptional Factors (0.020), and Data Privacy (0.001) for the adoptive operation of biometrics. Most of the constructs were consistent with the previous studies. Hence, it validates the proposed model good fit. Eventually, expressed hypotheses were supported and accepted (Tables 7 and 8).

The researchers used CFA to validate the hypotheses of the research model, as discussed in section 1. Besides, the researcher opted to perform an additional research tool ANN to rate the normalized value of the significant predictors based on the SEM analysis. Due to nonlinear correlations between the independent and outcome variables, the two-stage SEM-ANN methodology entails getting a precise classification of performing predictors for biometrics adoption [39, 83]. These two methods complement each other because the SEM is idyllic for hypothesis testing of linear relationships but cannot describe the relationship of nonlinearity, whereas the ANN can detect nonlinear relationships and is not suitable for hypothesis testing [14, 84].

5.3 Analysis of neural network

In the word of [85, 86], ANN is an incredibly analogous scattered processor composed of computational units with a natural propensity for storing experimental data and improving its usability. Moreover, ANN seems to be a more advanced and typically stable method that offers a higher level of precision than traditional tools. Nodes or neurons store the information that is referred to as synaptic weights. Further, it has already been proved by some prominent studies [39, 87] that the advantage of this methodology is that the neural network model can learn intricate linear and nonlinear relationships between predictors and the adoption decision. At the outset, SEM is applied to evaluate the overall research model and test the significant hypothesized predictors, which were further used as inputs in the neural network model to assess the relative significance of each predictor item. Input nodes, hidden/black box, and output layer make up a neural network. Data are projected into the input layers, generating the output information in the output layers. Synaptic weights are assigned to each input and passed to the hidden layers. Using applied weights, a nonlinear activation process uses these values into an output value. There are numerous other types of neural networks, but the researcher employs one of the most common and well-known instruments, i.e., the feed-forward back-propagation MLP [14, 88] (Fig. 6).

A typical neural network has multiple hierarchical levels, including one input, one or more hidden layers, and one output layer. Any incessant function can be represented with one hidden layer, but discontinuous functions can be described with two hidden layers. However, only one hidden layer is commonly employed in technology acceptance neural network models [89]. Each layer comprises neurons that connect with neurons in the next layer, and each link is represented by a synaptic weight that can be adjusted. Radial basis, recurrent networks, multi-layer perceptron, and Feed-forward neuronal functional networks are the four significant kinds of ANN. The research applied the MLP model to study users’ awareness and acceptability of adopting a multimodal biometrics mechanism in online transactions. The synaptic weights of the relationships will be changed through an iterative training process while using the training samples to train the network [73].

The research employed 10 Epochs of training and testing of the selected manifests. For the initial iteration, the Initial Learning Rate of the model was 0.4, Momentum-0.9, and Interval Offset ± 0.5, which was suggested by previous work [14, 25, 29, 40]. The study used the customized architecture tool of SPSS. The hyperbolic tangent has been used as a hidden layer activation function to yield appropriate output layer activation identify, which was empirically validated by prior studies [8, 31, 35, 49]. Dark blue lines in the above figure confirm the higher synaptic weight of CGF, PRF, DPF and TCF to achieve and explain biometric adoption (Fig. 7).

In step 1, Inputs of X1, X2, and X3 are associated with their respective weights, W1, W2, and W3. The output Y of the neuron is computed as shown in the above figure. Step 2, f, is a nonlinear process titled the activation function (AF). The (AF) aims to initiate nonlinearity into the output of a neuron, which is vital since the real-world data are mostly nonlinear.

and

Where μk is the linear combiner output because of input signal k. Wkj (j = 1, 2, …, n) is the respective weights of neuron k, where Φ is the activation function.

The code used for the in-built ANN algorithm in SPSS:

Input: # Data Preprocessing of the indicator’s variables viz. User Acceptability: UAC,

Technological factors: TCF, Cognizant Factors: CGF, Perceptional Factors: PRF,

Data Privacy Factors: DPF.

-

Import the Libraries

-

Load the Dataset

-

Split Dataset into X and Y

-

Encode Categorical Data

-

Split the X and Y Dataset into the Training set and Test set

-

Build ANN Model

-

Initialize the ANN

-

Add the input layer and the first hidden layer

-

Train and compile the ANN

-

Fit the ANN to the Training setting

Output: # Predict the Test Set of Multimodal Biometrics (MMB).

The pre-processed dataset from SEM is again used as an input variable for ANN and transformed into numerical representation to adopt Multimodal Biometrics analysis (see Table 9).

5.4 Results of neural network modeling

The neural network approach was examined using the widely used statistical program SPSS. The statistically relevant determinants from the SEM analysis were inserted into this model. From the findings of the structural equation, five constructs have been considered vital for further research. As a result, these items were rendered as input variables in the input layers. In this case, biometrics were chosen as the output layer’s dependent variable. Furthermore, a cross-validation tool was used to overcome the over-fitting issue of the model [35, 45].

In the ANN model, [90] proposed that hidden nodes should be in the range of 1–10. 10% of the data were used for experimentation during the study process, while 90% were used for training, as suggested by earlier established studies [20, 29, 58, 63]. Similarly, there was no documentary evidence and empirical study of holding the data set. Table 9 shows the RMSE values for both training and testing data points and the mean and standard deviation. The outputs show that the mean RMSE values for the training and testing model are 0.694 and 0.084, respectively, whereas the σ of training data are 0.012 and 0.081 for testing data (see Fig. 8). The RMSE values with comparatively small σ indicate higher accuracy in the statistical results [64]. We, thus, affirm that the model is a good fit. Using a similar approach as [61] applied, the researcher computed the R2 of 0.87%, and the result reveals that the ANN model predicts multimodal biometrics adoption with an accuracy of 87%. The results also show that the ANN mined very secure connections between the significant predictors and the output variables. Furthermore, in evaluating meaningful work, the sensitivity analytics were determined with the average importance of the predictor. The normalized relative significance of each forecast in the model was calculated by dividing each predictor’s relative importance by the highest predictor. Table 10 shows each predictor’s Normalized and Sensitivity Assessment. The results of the ANN, however, outline that the average explanation of the data privacy construct was significant, i.e., DPF_3 (93%), DPF_2 (50%) and DPF_4 (34%). Perceptional construct-PRF_2 (49%) and PRF_3 (33%) was relatively most important predictor of BA, whereas in user acceptability-UAC_2 (37%), UAC_3 & UAC_5 (41%), technological factors- TCF_2 (35%), followed by cognizant factors- CFG_1 (33%) towards biometrics adoption (see Table 10). Thus, the model confirms the best fit from both CFA-ANN approaches.

6 Practical implications

Regarding the quantitative outcomes, this study’s results contribute to advancing the literature on biometrics and its awareness among various segments of users. Furthermore, this study contributes significantly to our understanding of biometrics adoption at the virtual level. In this study, integrating certain constructs, i.e., cognizant and perceptional factors, has rendered a new theoretical contribution to the issues influencing biometrics adoption. Although these factors’ application was ubiquitous in marketing research, there is a lack of studies that examined its comprehensive effects with other variables. Thus, this is perhaps the first research encompassing five constructs and 21 variables to explicate biometrics adoption in the present chaotic scenario. Contrary to current linked studies using linear models, the study adopted a two-stage SEM-ANN approach consisting of a linear and nonlinear ANN model. It is a new technique since a decrease in one predictor can be neutralized by increasing another predictor in a linear compensatory model. The study has effectively resolved the weakness of linear models and has made an innovative theoretical contribution to established literature using the ANN model. In summary, the study aims to provide a systematic, in-depth, and consistent understanding of biometrics’s direct and indirect effect on online transactions, which may be helpful for researchers, administrators, and academicians while developing frameworks to deal with complex technological changes. Existing research has predominantly focused on the drivers of MMB. Adoption at the virtual platform; however, this study takes it a step further by developing and testing hypotheses about continent-level factors that affect the use and adoption of biometrics techniques for online operation.

7 Limitations and future research

Even though the findings of this study have significant consequences for researchers and practitioners, it has the following limitations: First, the representative sample for this empirical research is minimal. By gathering more data, future research would provide more credible scientific findings. Second, SEM was used in this research to analyze the research model. Even though SEM is a standard statistical technique in information management research, it does not hierarchically evaluate independent variables. Future research should consider computational methods such as AI-ML, which can isolate variables at the personal and organizational levels. Third, this work conducted an empirical investigation using a survey approach. In the future, an in-depth interview or qualitative methods and measurement can be used to get more feasible and meaningful findings in terms of theoretical contributions. Fourth, the research focused exclusively on educational organizations located on a single continent. It is recommended that future cross-country research on MMB can be done to generalize the findings. Fifth the insignificant variables of this study can be removed, and new manifests can be included to improve the validity of the proposed model. Sixth, a new practical model can also be implemented, which will be theory-driven [88,89,90]. Finally, the study delineated users’ knowledge and willingness to adopt the MMB in a limited time frame.

8 Conclusion

The numerous applications of biometrics are gaining traction in business and society. The study focuses on analyzing user awareness of MMB and its acceptability for online transactions in the current dynamic world under pandemics. The study was conducted on the five underlying perspectives named user acceptability, cognizant factors towards biometrics, technological factors, perceptional factors (fingerprints, iris, face recognition and voice) and data privacy. The study illustrates the relationship between previously used constructs and evaluates a multimodal biometrics adoption. A questionnaire was prepared and circulated to the 530 biometrics users; on that basis, the corresponding answers were obtained for analysis. The collected replies were just from the professionals/experts who had performed online tasks of MMB. SEM is first employed to gauge the wholesome research model and test the prominent hypothesized predictors, which are then used as inputs in the neural network to evaluate the relative significance of each predictor variable. SEM-ANN analysis was used to identify the inclusive link among variables, including linear-nonlinear and non-compensatory correlations [39, 48, 54, 78]. Additionally, the researcher extended the prior study by incorporating two new constructs, i.e., cognizant and perceptual factors, with tested elements like user acceptability, technological considerations, and data privacy. The study is a unique and comprehensive attempt compared to past studies, as it considered cognizant and perceptual factors, thereby extending the analytical outlook of MMB literature. Thus, the study also explored several new and valuable theoretical implications for adopting multimodal instruments of biometrics adoption.

Dataset Availabilty

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Abbas J (2020) Impact of Total quality management on corporate sustainability through the mediating effect of knowledge management. J Clean Prod 244:1–15. https://doi.org/10.1016/j.jclepro.2019.118806

Abomhara M, Yayilgan SY, Livinus ON (2021) A comparison of primary stakeholders views on the deployment of biometric Technologies in Border Management: case study of SMart MobILity at the European land Borders. Technol Soc 64 (January):101484. https://doi.org/10.1016/j.techsoc.2020.101484

Al-Ahmari AN, Ajlan AM, Bajunaid K, Alotaibi NM, Al-Habib H, Sabbagh AJ, Al-Habib AF, Saleh SB (2020) Perception of Neurosurgery Residents and Attendings on Online Webinars During Covid-19 Pandemic and Implications on Future Education. World Neurosurg 146:e811–e816. https://doi.org/10.1016/j.wneu.2020.11.015

Anil AP, Satish KP (2016) Investigating the relationship between TQM practices and Firm’s performance: a conceptual framework for Indian organizations. Procedia Technology 24:554–561. https://doi.org/10.1016/j.protcy.2016.05.103

Arifin WN, Muhamad Saiful Bahri Y (2016) Confirmatory factor analysis of the Universiti Sains Malaysia emotional quotient inventory among medical students in Malaysia. SAGE Open 6:1–9. https://doi.org/10.1177/2158244016650240

Asadi Z, Mohammadhiwa A (2020) Cloud computing services adoption among higher education faculties: development of a standardized questionnaire. Educ Inf Technol 25:175–191

Belhadi A, Kamble SS, Zkik K, Cherrafi A, Fatima ET (2020) The integrated effect of big data analytics, lean six sigma and green manufacturing on the environmental performance of manufacturing companies: The case of North Africa. J Clean Prod 252:1–14. https://doi.org/10.1016/j.jclepro.2019.119903

Bernard RM, Borokhovski E, Schmid RF, Tamim RM, Philip CA (2014) A Meta-analysis of blended learning and technology use in higher education: from the general to the applied. J Comput High Educ 26(1):87–122. https://doi.org/10.1007/s12528-013-9077-3

Birda RK, Manish D (2019) Study of ICT and E-governance facilities in Tribal District of Rajasthan. Zenith Int J Multidiscip Res 9(7):39–49

Breitinger F, Tully-doyle R, Courtney H (2020) A survey on smartphone User’s security choices, awareness and education. Comput Secur 88:1–14. https://doi.org/10.1016/j.cose.2019.101647

Buckley O, Jason RCN (2019) The language of biometrics: Analysing public perceptions. J Inf Secur Appl 47:112–119. https://doi.org/10.1016/j.jisa.2019.05.001

Buriro A, Crispo B, Mauro C (2019) AnswerAuth: a bimodal behavioral biometric-based user authentication scheme for smartphones. J Inf Secur Appl 44:89–103. https://doi.org/10.1016/j.jisa.2018.11.008

Carrión-ojeda D, Fonseca-delgado R, Israel P (2021) Analysis of factors that influence the performance of biometric systems based on EEG signals. Expert Syst Appl 165 (July 2020):113967. https://doi.org/10.1016/j.eswa.2020.113967

Chandra A, Durand R, Weaver S, West V (2007) The uses and potential of biometrics in health care are consumers and providers ready for it ? Int J Pharm Healthc Mark 2(1):22–34. https://doi.org/10.1108/17506120810865406

Cherrat E, Alaoui R, Hassane B (2020) Convolutional neural networks approach for multimodal biometric identification system using the fusion of fingerprint, finger-vein and face images. Peer J Comput Sci 6:e248. https://doi.org/10.7717/peerj-cs.248

Chong AYL (2013) A two-staged SEM-neural network approach for understanding and predicting the determinants of m-commerce adoption. Expert Syst Appl 40(4):1240–1247. https://doi.org/10.1016/j.eswa.2012.08.067

Choudhury SH, Kumar A, Shahedul HL (2021) Adaptive Management of Multimodal Biometrics-a Deep Learning and Metaheuristic Approach. Appl Soft Comput J 1:1–46. https://doi.org/10.1016/j.asoc.2021.107344

Chowdary MK, Hemanth DJ (2019) Human emotion recognition using intelligent approaches: a review. Intell Decis Technol 13:417–433. https://doi.org/10.3233/IDT-190101

Dadhich M (2017) An analysis of factors affecting on online shopping behavior of customers. ZENITH Int J Bus Econ Manag Res 7(1):20–30

Dadhich M, Kamal KH (2022) Empirical investigation of extended TOE model on corporate environment sustainability and dimensions of operating performance of SMEs: a high order PLS-ANN approach. J Clean Prod 363:1–16. https://doi.org/10.1016/j.jclepro.2022.132309

Dadhich M, Kant K (2022) Empirical investigation of extended TOE model on corporate environment sustainability and dimensions of operating performance of SMEs: a high order PLS-ANN approach. J Clean Prod 363:1–16. https://doi.org/10.1016/j.jclepro.2022.132309

Dadhich M, Pahwa MS, Rao SS (2018) Factor Influencing to Users Acceptance of Digital Payment System. Int J Comput Sci Eng 06(09):46–50. https://doi.org/10.26438/ijcse/v6si9.4650

Dadhich M, Purohit H, Anand AB (2021) Determinants of green initiatives and operational performance for manufacturing SMEs. Mater Today: Proceedings 46(20):10870–10874. https://doi.org/10.1016/j.matpr.2021.01.889

Dadhich M, Rao SS, Sethy S, Renu S (2021) Determining the factors influencing cloud computing implementation in library management system (LMS): a high order PLS-ANN approach. Libr Philos Pract:6281 https://digitalcommons.unl.edu/libphilprac/6281

Dadhich M, Pahwa MS, Jain V, Rochi D (2021) Predictive models for stock market index using stochastic time series ARIMA modeling in emerging economy. In: Advances in Mechanical Engineering, pp 281–290. https://doi.org/10.1007/978-981-16-0942-8_26

Dadhich M, Poddar S, Kant K (2022) Antecedents and consequences of patients ’ adoption of the IoT 4.0 for e-health management system: a novel PLS-SEM approach. Smart Health 25(5):1–14. https://doi.org/10.1016/j.smhl.2022.100300

Dadhich M, Hiran KK, Rao SS, Renu S (2022) Factors influencing patient adoption of the IoT for E-health management systems (e-HMS) using the UTAUT model: a high order SEM-ANN approach. Int J Ambient Comput Intell (IJACI) 13(1):18–18. https://doi.org/10.4018/IJACI.300798

Dargan S, Munish K (2020) A comprehensive survey on the biometric recognition systems based on physiological and behavioral modalities. Expert Syst Appl 143:113114. https://doi.org/10.1016/j.eswa.2019.113114

Davis FD, Bagozzi RP, Warshaw PR (1989) User acceptance of computer technology: a comparison of two theoretical models. Manag Sci 35(8):982–1003. https://doi.org/10.1287/mnsc.35.8.982 S2CID 14580473

Dinh VS, Nguyen HV, The NN (2018) Cash or cashless?: promoting consumers’ adoption of Mobile payments in an emerging economy. Strateg Dir 34(1):1–4. https://doi.org/10.1108/SD-08-2017-0126

Dubey R, Gunasekaran A, Sadia SA (2015) Exploring the relationship between leadership, operational practices, institutional pressures and environmental performance: a framework for green supply chain. Int J Prod Econ 160:120–132. https://doi.org/10.1016/j.ijpe.2014.10.001

Durdyev S, Ihtiyar A, Banaitis A, Derek T (2018) The construction client satisfaction model: a PLS-SEM approach. J Civ Eng Manag 24(1):31–42. https://doi.org/10.3846/jcem.2018.297

El-fishawy N (2015) Multi-biometric systems: a state of the art survey and research directions. Int J Adv Comput Sci Appl 6(6):128–138

Faheem A, Mughal KS, Ashiq A, Mohmand YT (2020) Forecasting Islamic securities index using artificial neural Networks: Performance Evaluation of Technical Indicators. J Econ Adm Sci. https://doi.org/10.1108/JEAS-04-2020-0038

Gokulkumari G (2020) Analytical outlook on customer awareness towards biometrics mechanism of unimodal and multimodal in online transactions. Multimed Tools Appl 79(2):1–24. https://doi.org/10.1007/s11042-020-09526-w

Guan Y, Sun Y, Li C-t, Massimo T (2014) Human gait identification from extremely low-quality videos: an enhanced classifier ensemble method. IET Biometrics 3(2):84–93. https://doi.org/10.1049/iet-bmt.2013.0062

Gupta G, Sonika N (2020) Green dimensions, environment orientation and size: impact assessment on operational performance of manufacturing firms. Glob Bus Rev 1:1–14. https://doi.org/10.1177/0972150920919880

Gupta S, Choudhary H, Agarwal DR (2018) An empirical analysis of market efficiency and Price discovery in Indian commodity market. Glob Bus Rev 19(3):771–789. https://doi.org/10.1177/0972150917713882

Gutierrez A, Boukrami E, Ranald L (2015) Technological, Organisational and environmental factors influencing managers’ decision to adopt cloud computing in the UK. J Enterp Inf Manag 28(6):788–807. https://doi.org/10.1108/JEIM-01-2015-0001

Gyamfi NK (2018) Bank Fraud Detection Using Support Vector Machine. 2018 IEEE 9th annual information technology, Electronics and Mobile Communication Conference (IEMCON), 37–41

Hair JF Jr, Black WC, Babin BJ, Anderson RE (2009). n.d.) Multivariate Data Analysis, 7th edn. Pearson Prentice Hall, Upper Saddle River

Heracleous L, Templeton C (2006) Biometrics: The next frontier in service excellence, productivity and security in the service sector. Manag Serv Qual 16(1):12–22. https://doi.org/10.1108/09604520610639937

Ijaz MF (2020) Data-driven cervical Cancer prediction model with outlier detection and over-sampling methods. Sensors, MDPI 20:1–22. https://doi.org/10.3390/s20102809

Ioannou A, Tussyadiah I, Yang L (2020) Privacy concerns and disclosure of biometric and behavioral data for travel. Int J Inf Manag 54:1–15. https://doi.org/10.1016/j.ijinfomgt.2020.102122

Jackson LA (2009) Biometric technology: The future of identity assurance and authentication in the lodging industry. Int J Contemp Hosp Manag 21(7):892–905. https://doi.org/10.1108/09596110910985340

Jagadiswary D, Saraswady D (2016) Biometric Authentication Using Fused Multimodal Biometric. Procedia - Procedia Comput Sci 85(Cms):109–116. https://doi.org/10.1016/j.procs.2016.05.187

Joshi M, Mazumdar B, Somnath D (2020) A comprehensive security analysis of match-in-database fingerprint biometric system R. Pattern Recogn Lett 138:247–266. https://doi.org/10.1016/j.patrec.2020.07.024

Ju TL, Lin B, Lin C, Hao JK (2006) TQM critical factors and KM value chain activities. Total Qual Manag Bus Excell 17(3):373–393. https://doi.org/10.1080/14783360500451614

Kalini Z (2017) A SEM-neural network approach for predicting antecedents of m-commerce acceptance. Int J Inf Manag 37:14–24. https://doi.org/10.1016/j.ijinfomgt.2016.10.008

Khan MH-M, Naushad Mamode K (2014) Analysing factors affecting hand biometrics during image capture. Procedia - Procedia Comput Sci 32:521–528. https://doi.org/10.1016/j.procs.2014.05.456

Khayer A, Talukder S, Bao Y, Nahin H (2020) Cloud computing adoption and its impact on SMEs ’ performance for cloud supported operations: a dual-stage analytical approach. Technol Soc 60(September 2019):101225. https://doi.org/10.1016/j.techsoc.2019.101225

Königstorfer F, Stefan T (2020) Applications of artificial intelligence in commercial banks – a research agenda for behavioral finance. J Behav Exp Financ 27:100352. https://doi.org/10.1016/j.jbef.2020.100352

Krishnakumar P, Rameshkumar K, K I R. (2018) Machine learning based tool condition classification using acoustic emission and vibration data in high speed milling process using wavelet features. Intell Decis Technol 12:265–282. https://doi.org/10.3233/IDT-180332

Kumar N, Manish D (2014) Risk Management for Investors in stock market. Excel Int J Multidiscip Manag Stud 4(3):103–108

Kumar, Manish dadhich & N. (2015) An analysis of factors affecting to entrepreneur development in Rajasthan. Int J Manag IT Eng 5(12):41–48

Kumar S, Mikhail Z (2019) A machine learning approach to analyze customer satisfaction from airline tweets. J Big Data 6(62):1–16. https://doi.org/10.1186/s40537-019-0224-1

Leong L-y, Hew T-s, Ooi K-b, June W (2020) Predicting Mobile wallet resistance: a two-staged structural equation modeling-artificial neural network approach. Int J Inf Manag 51:1–24. https://doi.org/10.1016/j.ijinfomgt.2019.102047

Liébana-Cabanillas F, Marinkovic V, De Luna IR, Zoran K (2018) Predicting the determinants of Mobile payment acceptance: a hybrid SEM-neural network approach. Technol Forecast Soc Change 129(February 2017):117–130. https://doi.org/10.1016/j.techfore.2017.12.015

Liu Y, Dong S, Wei J, Yu T (2020) Assessing cloud computing value in firms through socio-technical determinants. Inf Manag 57:1–17. https://doi.org/10.1016/j.im.2020.103369

Mashelkar RA (2018) Exponential technology, industry 4.0 and future of jobs in India. Rev Mark Integr 10(2):138–157. https://doi.org/10.1177/0974929218774408

Modisane P, Jokonya O, Phenyo M (2021) Evaluating the benefits of cloud computing in small, medium and enterprises (SMMEs). Procedia Comput Sci 181(2019):784–792. https://doi.org/10.1016/j.procs.2021.01.231

Nappi M, Ricciardi S, Massimo T (2018) Context awareness in biometric systems and methods: state of the art and future scenarios. Image Vis Comput 76:27–37. https://doi.org/10.1016/j.imavis.2018.05.001

Oloyede MO, Member S, Gerhard PH (2016) Unimodal and multimodal biometric sensing systems: a review. IEEE Access 4:7532–7555

Panigrahi R, Borah S, Bhoi AK, Ijaz MF, Pramanik M, Jhaveri RH, Chiranji LC (2021) Performance assessment of supervised classifiers for designing intrusion detection systems: a comprehensive review and recommendations for future research. Mathematics 9(6):1–32. https://doi.org/10.3390/math9060690

Panigrahi R, Borah S, Bhoi AK, Ijaz MF, Pramanik M, Kumar Y, Rutvij HJ (2021) A consolidated decision tree-based intrusion detection system for binary and multiclass imbalanced datasets. Mathematics 9(7):2–35. https://doi.org/10.3390/math9070751

Porwik P, Doroz R (2021) Adaptation of the idea of concept drift to some behavioral biometrics: preliminary studies. Eng Appl Artif Intell 99(December 2020):104135. https://doi.org/10.1016/j.engappai.2020.104135

Psomas EL, Carmen J (2016) The impact of Total quality management on service company performance: evidence from Spain. Int J Qual Reliab Manag 33(3):380–398. https://doi.org/10.1108/IJQRM-07-2014-0090

Purohit H, Ajmera PK (2002) Multi-modal biometric fusion based continuous user authentication for E-proctoring using hybrid LCNN-Salp swarm optimization. Cluster Comput 25, 827–846. https://doi.org/10.1007/s10586-021-03450-w

Purohit H, Ajmera PK (2020) Multimodal multilevel fusion of face ear iris with multiple classifications. In: Goel N, Hasan S, Kalaichelvi V (eds) Modelling, Simulation and Intelligent Computing. MoSICom 2020. Lecture Notes in Electrical Engineering, vol 659. Springer, Singapore. https://doi.org/10.1007/978-981-15-4775-1_37

Purohit H, Ajmera PK (2021) Optimal feature level fusion for secured human authenication in multimodal biometric system. Machine Vision and Applications 32, 24. https://doi.org/10.1007/s00138-020-01146-6

Rahi A, Shakil A, Ferdausy N, Moshiur R (2021) Effect of eco-physiological factors on biometric traits of green mussel Perna Viridis cultured in the South-East Coast of the bay of Bengal, Bangladesh. Aquac Rep 19(December 2020):100562. https://doi.org/10.1016/j.aqrep.2020.100562

Raut RD, Priyadarshinee P, Gardas BB, Manoj K (2018) Analyzing the factors influencing cloud computing adoption using three-stage hybrid SEM-ANN-ISM (SEANIS) approach. Technol Forecast Soc Change 134:98–123. https://doi.org/10.1016/j.techfore.2018.05.020

Rogers E (2003) Simon and Schuster. Diffusion of innovations, 5th edn.

Sadasivam GS, Lakshme SM (2016) Corporate governance fraud detection from annual reports using big data analytics Mutyala Subrahmanyam and Dasaraju Himachalam Bhanu Prasad Pinnamaneni. Int J Big Data Intell Inderscience Enterpr Ltd 3(1):51–60

Sarier ND (2021) Comments on biometric-based non-transferable credentials and their application in Blockchain-based identity management. Comput Secur 105:102243. https://doi.org/10.1016/j.cose.2021.102243

Singh GK, Manish D (2021) Impact of Total quality management on operational performance of Indian cement manufacturing industry- a structural equation remodeling approach. Turkish Journal of Computer and Mathematics Education 12(7):22–41

Sinha H, Pawan KA (2019) Upgrading security and protection in ear biometrics. IET Biometrics 8(4):259–266. https://doi.org/10.1049/iet-bmt.2018.5081

Sinha H, Awasthi V, Pawan KA (2015) Audio classification using braided convolutional neural networks. IET Research Journals:1–7. https://doi.org/10.1049/iet-spr.2019.0381

Sireesha V, Sandhyarani K (2018) Overview of fusion techniques in multimodal. Int J Eng Res Technol 2:3–8

Siyal AW, Donghong D (2018) M-banking barriers in Pakistan: a customer perspective of adoption and continuity intention. Data Technol Appl 53(1):58–84. https://doi.org/10.1108/DTA-04-2018-0022

Srinivasu PN, Sivasai JG, Ijaz MF, Bhoi AK, Kim W, James JK (2021) Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors, MDPI 21(2852):1–27. https://doi.org/10.3390/s21082852

Stylios I, Kokolakis S, Thanou O, Sotirios C (2021) Behavioral biometrics & continuous user authentication on Mobile devices: a survey. Inf Fusion 66(August 2020):76–99. https://doi.org/10.1016/j.inffus.2020.08.021

Teh PS, Zhang N, Ke C (2016) TDAS: a touch dynamics based multi-factor authentication solution for Mobile devices. Int J Pervasive Comput Commun 12(1):127–153. https://doi.org/10.1108/IJPCC-01-2016-0005

Timans W, Ahaus K, van Solingen R, Kumar M, Jiju A (2016) Implementation of continuous improvement based on lean six sigma in small- and medium-sized enterprises. Total Qual Manag Bus Excell 27(3–4):309–324. https://doi.org/10.1080/14783363.2014.980140

Tornatzky LG, Fleischer M (1990) The processes of technological innovation, TOE. J Technol Transf 16:45–46. https://doi.org/10.1007/BF02371446

Tortorella G, Giglio R, Fogliatto FS, Rapinder S (2019) Mediating role of learning organization on the relationship between Total quality management and operational performance in Brazilian manufacturers. J Manuf Technol Manag 31(3):524–541. https://doi.org/10.1108/JMTM-05-2019-0200

Venkatesh V, Morris MG, Davis GB, Davis FD (2003) User acceptance of information technology: toward a unified view. MIS Q 27(3):425–478. https://doi.org/10.2307/30036540

Venkatraman S (2008) Biometrics in banking security: a case study. Inf Manag Comput Secur 16(4):415–430. https://doi.org/10.1108/09685220810908813

Vereycken AY, De Kort L, Vanhootegem G, Ezra D (2019) Care living labs’ effect on care organization and quality of working life. Int J Health Care Qual Assur 32(4):709–719. https://doi.org/10.1108/IJHCQA-03-2018-0069

Yacob P, Wong LS, Saw Chin K (2019) An Empirical Investigation of Green Initiatives and Environmental Sustainability for Manufacturing SMEs. J Manuf Technol Manag 30(1):1–25. https://doi.org/10.1108/JMTM-08-2017-0153

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

First Author Himanshu Purohit, Second Author Manish Dadhich, and Third Author Pawan K Ajmera respectively declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Purohit, H., Dadhich, M. & Ajmera, P.K. Analytical study on users’ awareness and acceptability towards adoption of multimodal biometrics (MMB) mechanism in online transactions: a two-stage SEM-ANN approach. Multimed Tools Appl 82, 14239–14263 (2023). https://doi.org/10.1007/s11042-022-13786-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-022-13786-z