Abstract

Instance-level image retrieval in the fashion industry is a challenging problem of growing importance for real-world visual fashion search. Cross-domain fashion retrieval aims to match unconstrained customer images, used as queries, against photographs provided by retailers; however, the task is difficult because of the wide range of consumer-to-shop (C2S) domain discrepancies and because clothing images are vulnerable to various non-rigid deformations. To this end, we propose a novel multi-scale and multi-granularity feature learning network (MMFL-net), which jointly learns global-local aggregated feature representations of clothing images in a unified framework, with the aim of training a cross-domain model for C2S fashion visual similarity. First, a new semantic-spatial feature fusion module is designed to bridge the semantic-spatial gap by applying top-down and bottom-up bidirectional multi-scale feature fusion. Next, a multi-branch deep network architecture is introduced to capture global salient, part-informed, and local detailed information, and to extract robust and discriminative feature embeddings by integrating coarse-to-fine similarity learning across multiple granularities. Finally, an improved trihard loss, center loss, and multi-task classification loss are adopted for MMFL-net; together they jointly optimize intra-class and inter-class distances and thus explicitly improve the intra-class compactness and inter-class discriminability of the learned visual representations. Furthermore, the proposed model also combines a multi-task attribute recognition and classification module with multi-label semantic attributes and product ID labels. Experimental results demonstrate that MMFL-net achieves significant improvement over state-of-the-art methods on two datasets, DeepFashion-C2S and Street2Shop. Specifically, our approach exceeds the current best method by a large margin of +4.2% and +11.4% for mAP and Acc@1, respectively, on the most challenging dataset, DeepFashion-C2S.
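
To make the roles of the metric-learning losses concrete, the following is a minimal sketch of the standard batch-hard ("trihard") triplet loss and a simple center loss. It is not the improved trihard loss used in MMFL-net, whose modifications are not described in the abstract. The function names, the margin of 0.3, and the use of batch means as class centers are assumptions made for illustration only.

```python
import math
from collections import defaultdict


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet_loss(embeddings, labels, margin=0.3):
    """Standard batch-hard triplet loss, averaged over valid anchors.

    For each anchor, pick the farthest sample with the same ID (hardest
    positive) and the closest sample with a different ID (hardest
    negative), then apply a hinge with the given margin (assumed value).
    """
    losses = []
    for i, anchor in enumerate(embeddings):
        pos, neg = [], []
        for j, other in enumerate(embeddings):
            if j == i:
                continue
            dist = euclidean(anchor, other)
            (pos if labels[j] == labels[i] else neg).append(dist)
        if pos and neg:  # skip anchors that lack a positive or a negative
            losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


def center_loss(embeddings, labels):
    """Half the mean squared distance of each embedding to its class center.

    Assumption: centers are the per-class batch means; in practice they
    are usually learned parameters updated during training.
    """
    groups = defaultdict(list)
    for emb, label in zip(embeddings, labels):
        groups[label].append(emb)
    centers = {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in groups.items()
    }
    total = sum(euclidean(e, centers[l]) ** 2 for e, l in zip(embeddings, labels))
    return 0.5 * total / len(embeddings)


# Toy batch: two product IDs, two images each (hypothetical data).
embeddings = [[0.0, 0.0], [0.0, 2.0], [1.0, 0.0], [1.0, 2.0]]
labels = ["id_a", "id_a", "id_b", "id_b"]
triplet = batch_hard_triplet_loss(embeddings, labels)
center = center_loss(embeddings, labels)
```

In this toy batch each same-ID pair is farther apart than the nearest different-ID sample, so the triplet term is positive and pushes for larger inter-class distance, while the center term penalizes the spread of each ID around its center, which is the intra-class compactness mentioned above. How the two terms are weighted against the classification loss is not stated in the abstract and is left open here.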

Data availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Ak KE, Kassim AA, Lim JH, Tham JY (2018) Learning attribute representations with localization for flexible fashion search. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7708–7717. https://doi.org/10.1109/CVPR.2018.00804

Biasotti S, Cerri A, Abdelrahman M et al (2014) SHREC’14 track: retrieval and classification on textured 3D models. In: Proceedings of the Eurographics workshop on 3d object retrieval, pp 111–120. https://doi.org/10.2312/3DOR.20141057

Biasotti S, Cerri A, Aono M et al (2016) Retrieval and classification methods for textured 3D models: a comparative study. Vis Comput 32(2):217–241. https://doi.org/10.1007/s00371-015-1146-3

Bossard L, Dantone M, Leistner C, Wengert C, Quack T, Gool LV (2012) Apparel classification with style. In: Asian conference on computer vision, Springer, pp 321–335. https://doi.org/10.1007/978-3-642-37447-0_25

Cao Y, Xu J, Lin S, Wei F, Hu H (2019) Gcnet: non-local networks meet squeeze-excitation networks and beyond. In: Proceedings of the IEEE/CVF international conference on computer vision workshops(ICCVW), pp 1971–1980. https://doi.org/10.1109/ICCVW.2019.00246

Chen Q, Huang J, Feris R, Brown LM, Dong J, Yan S (2015) Deep domain adaptation for describing people based on fine-grained clothing attributes. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5315–5324. https://doi.org/10.1109/CVPR.2015.7299169

Chen W, Liu Y, Wang W, Bakker E, Georgiou T, Fieguth P, Liu L, Lew MS (2021) Deep learning for instance retrieval: A survey. arXiv: 2101.11282. https://doi.org/10.48550/arXiv.2101.11282

Cheng WH, Song S, Chen CY, Hidayati SC, Liu J (2021) Fashion meets computer vision: A survey. ACM Comput Surv 54(4):1–41. https://doi.org/10.1145/3447239

Di W, Wah C, Bhardwaj A, Piramuthu R, Sundaresan N (2013) Style finder: fine-grained clothing style recognition and retrieval. In: IEEE conference on computer vision and pattern recognition workshops, pp 8–13. https://doi.org/10.1109/CVPRW.2013.6

Dong Q, Gong S, Zhu X (2017) Multi-task curriculum transfer deep learning of clothing attributes. In: 2017 IEEE Winter Conference on Applications of Computer Vision(WACV). IEEE, pp 520–529. https://doi.org/10.1109/WACV.2017.64

Dong J, Ma Z, Mao X, Yang X, He Y, Hong R, Ji S (2021) Fine-grained fashion similarity prediction by attribute-specific embedding learning. IEEE Trans Image Process 30:8410–8425. https://doi.org/10.1109/TIP.2021.3115658

Han X, Wu Z, Jiang YG, Davis LS (2017) Learning fashion compatibility with bidirectional lstms. In: Proceedings of the 25th ACM international conference on Multimedia, pp 1078–1086. https://doi.org/10.1145/3123266.3123394

Hidayati SC, You CW, Cheng WH, Hua KL (2018) Learning and recognition of clothing genres from full-body images. IEEE Trans Cybern 48(5):1647–1659. https://doi.org/10.1109/TCYB.2017.2712634

Hou Y, Vig E, Donoser M, Bazzani, L (2021) Learning attribute-driven disentangled representations for interactive fashion retrieval. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 12147–12157. https://doi.org/10.1109/ICCV48922.2021.01193

Huang J, Feris RS, Chen Q, Yan S (2015) Cross-domain image retrieval with a dual attribute-aware ranking network. In: Proceedings of the IEEE international conference on computer vision, pp 1062–1070. https://doi.org/10.1109/ICCV.2015.127

Ji X, Wang W, Zhang M, Yang Y (2017) Cross-domain image retrieval with attention modeling. In: Proceedings of the 25th ACM international conference on Multimedia, pp 1654–1662. https://doi.org/10.1145/3123266.3123429

Kiapour MH, Han X, Lazebnik S, Berg AC, Berg TL (2015) Where to buy it: matching street clothing photos in online shops. In: Proceedings of the IEEE international conference on computer vision, pp 3343–3351. https://doi.org/10.1109/ICCV.2015.382

Kuang Z, Gao Y, Li G, Luo P, Chen Y, Lin L, Zhang W (2019) Fashion retrieval via graph reasoning networks on a similarity pyramid. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 3066–3075. https://doi.org/10.1109/ICCV.2019.00316

Kucer M, Murray N (2019) A detect-then-retrieve model for multi-domain fashion item retrieval. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp 344–353. https://doi.org/10.1109/CVPRW.2019.00047

Kuo YH, Hsu WH (2017) Feature learning with rank-based candidate selection for product search. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp 298–307. https://doi.org/10.1109/ICCVW.2017.44

Lang Y, He Y, Yang F, Dong J, Xue H (2020) Which is plagiarism: fashion image retrieval based on regional representation for design protection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 2595–2604. https://doi.org/10.1109/CVPR42600.2020.00267

Lin TY, Dollar P, Girshick R, He K, Hariharan B, Belongie S (2017) Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 936–944. https://doi.org/10.1109/CVPR.2017.106

Liu S, Song Z, Liu G, Xu C, Lu H, Yan S (2012) Street-to-shop: Cross-scenario clothing retrieval via parts alignment and auxiliary set. In 2012 IEEE conference on computer vision and pattern recognition. IEEE, pp 3330–3337. https://doi.org/10.1145/2393347.2396471

Liu Z, Luo P, Qiu S, Wang X, Tang X (2016) DeepFashion: powering robust clothes recognition and retrieval with rich annotations. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1096–1104. https://doi.org/10.1109/CVPR.2016.124

Liu S, Qi L, Qin H, Shi J, Jia J (2018) Path aggregation network for instance segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 8759–8768. https://doi.org/10.1109/CVPR.2018.00913

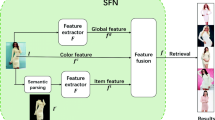

Liu AA, Zhang T, Song D, Li W, Zhou M (2021) FRSFN: a semantic fusion network for practical fashion retrieval. Multimed Tools Appl 80(11):17169–17181. https://doi.org/10.1007/s11042-020-08973-9

Lu Y, Kumar A, Zhai S, Cheng Y, Javidi T, Feris R (2017) Fully-adaptive feature sharing in multi-task networks with applications in person attribute classification. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5334–5343. https://doi.org/10.1109/CVPR.2017.126

Lu S, Zhu X, Wu Y, Wan X, Gao F (2021) Outfit compatibility prediction with multi-layered feature fusion network. Pattern Recogn Lett 147:150–156. https://doi.org/10.1016/j.patrec.2021.04.009

Luo Z, Yuan J, Yang J, Wen W (2019) Spatial constraint multiple granularity attention network for clothes retrieval. In: 2019 IEEE International Conference on Image Processing(ICIP). IEEE, pp 859–863. https://doi.org/10.1109/ICIP.2019.8802938

Luo Y, Wang Z, Huang Z, Yang Y, Lu H (2019) Snap and find: deep discrete cross-domain garment image retrieval. arXiv:1904.02887. https://doi.org/10.48550/arXiv.1904.02887

Newell A, Yang K, Deng J (2016) Stacked hourglass networks for human pose estimation. In: European conference on computer vision. Springer, pp 483–499. https://doi.org/10.1007/978-3-319-46484-8_29

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-cam: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE international conference on computer vision, pp 618–626. https://doi.org/10.1109/ICCV.2017.74

Sharma V, Murray N, Larlus D et al (2021) Unsupervised meta-domain adaptation for fashion retrieval. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp 1348–1357. https://doi.org/10.1109/WACV48630.2021.00139

Sun Y, Zheng L, Yang Y, Tian Q, Wang S (2018) Beyond part models: person retrieval with refined part pooling (and a strong convolutional baseline). In: Proceedings of the European Conference on computer vision, pp 480–496. https://doi.org/10.1007/978-3-030-01225-0_30

Tan M, Pang R, Le QV (2020) Efficientdet: scalable and efficient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10781–10790. https://doi.org/10.1109/CVPR42600.2020.01079

Tan F, Yuan J, Ordonez V (2021). Instance-level image retrieval using reranking transformers. In: proceedings of the IEEE/CVF international conference on computer vision, pp 12105–12115. https://doi.org/10.1109/ICCV48922.2021.01189

Veit A, Belongie S, Karaletsos T (2017) Conditional similarity networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 830–838. https://doi.org/10.1109/CVPR.2017.193

Wang Z, Gu Y, Zhang Y, Zhou J, Gu X (2017) Clothing retrieval with visual attention model. In: 2017 IEEE visual communications and image proceeding, pp 1–4. https://doi.org/10.1109/VCIP.2017.8305144

Wang F, Jiang M, Qian C, Yang S, Li C, Zhang H, Wang X, Tang X (2017) Residual attention network for image classification. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3156–3164. https://doi.org/10.1109/CVPR.2017.683

Wang G, Yuan Y, Chen X, Li J, Zhou X (2018) Learning discriminative features with multiple granularities for person re-identification. In: proceedings of the 26th ACM international conference on multimedia, pp 274–282. https://doi.org/10.1145/3240508.3240552

Wang Q, Wu B, Zhu P, Li P, Zuo W, Hu Q (2020) ECA-net: efficient channel attention for deep convolutional neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 11531–11539. https://doi.org/10.1109/CVPR42600.2020.01155

Wang Z, Pu Y, Wang X, Zhao Z, Xu D, Qian W (2020) Accurate retrieval of multi-scale clothing images based on multi-feature fusion. Chine J Comput 43(4):740–754. https://doi.org/10.11897/SP.J.1016.2020.00740

Wieczorek M, Michalowski A, Wroblewska A, Dabrowski J (2020) A strong baseline for fashion retrieval with person re-identification models. In: International Conference on Neural Information Processing, pp 294–301. https://doi.org/10.1007/978-3-030-63820-7_33

Wieczorek M, Rychalska B, Dabrowski J (2021) On the unreasonable effectiveness of centroids in image retrieval. In: International Conference on Neural Information Processing, pp 212–223. https://doi.org/10.1007/978-3-030-92273-3_18

Yang F, Kale A, Bubnov Y, Stein L, Wang Q, Kiapour H, Piramuthu R (2017) Visual search at ebay. In: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pp 2101–2110. https://doi.org/10.1145/3097983.3098162

Yang M, Yu K, Zhang C, Li Z, Yang K (2018) Denseaspp for semantic segmentation in street scenes. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 684–3692. https://doi.org/10.1109/CVPR.2018.00388

Zhang H, Cisse M, Dauphin YN, Lopez-Paz D (2017) Mixup: beyond empirical risk minimization. arXiv:1710.09412. https://doi.org/10.48550/arXiv.1710.09412

Zhang Z, Zhang X, Peng C, Xue X, Sun J (2018) Exfuse: enhancing feature fusion for semantic segmentation. In: Proceedings of the European conference on computer Vision, pp 269–284. https://doi.org/10.1007/978-3-030-01249-6_17

Zhang X, Chen Y, Zhu B, Wang J, Tang M (2020) Semantic-spatial fusion network for human parsing. Neurocomputing 402:375–383. https://doi.org/10.1016/j.neucom.2020.03.096

Zhao H, Shi J, Qi X, Wang X, Jia J (2017) Pyramid scene parsing network. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2881–2890. https://doi.org/10.1109/CVPR.2017.660

Zhao Q, Sheng T, Wang Y, Tang Z, Chen Y, Cai L, Ling H (2019) M2det: a single-shot object detector based on multi-level feature pyramid network. Proceedings of the AAAI conference on artificial intelligence 33(1):9259–9266

Zhong Z, Zheng L, Cao D, Li S (2017) Re-ranking person re-identification with k-reciprocal encoding. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1318–1327. https://doi.org/10.1109/CVPR.2017.389

Acknowledgements

The authors would like to thank the anonymous reviewers for their helpful and valuable comments and suggestions. This work was supported in part by the National Natural Science Foundation of China under Grants No. 61972458 and 62172367, and in part by the Zhejiang Province Public Welfare Technology Application Research Project under Grant LGF22F020006.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Bao, C., Zhang, X., Chen, J. et al. MMFL-net: multi-scale and multi-granularity feature learning for cross-domain fashion retrieval. Multimed Tools Appl 82, 37905–37937 (2023). https://doi.org/10.1007/s11042-022-13648-8

DOI: https://doi.org/10.1007/s11042-022-13648-8