Abstract

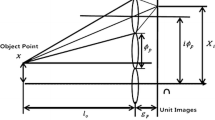

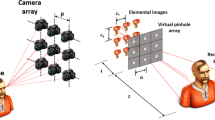

A new approach to depth estimation based on integral imaging is proposed. In this method, multiple viewpoint images of a three-dimensional scene are used to extract the range information of the scene. These elemental images are captured either through a lens array placed in front of a high-resolution camera or by an array of cameras. The scene is then computationally reconstructed at different depths using an integral imaging reconstruction algorithm. Finally, the reconstructed images are processed and the objects of the scene are located in them by a matching technique based on speeded-up robust features (SURF), which yields the depth information of the objects. Experimental results show that the proposed method achieves high accuracy and avoids many limitations of standard image processing, including sensitivity to the surface type and size of the objects.
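The reconstruct-then-search idea in the abstract can be illustrated with a small sketch. This is not the authors' implementation. It assumes a one-dimensional camera array under a pinhole model, where an object at depth z shifts by `focal_mm * pitch_mm / (z * pixel_mm)` pixels between neighboring elemental images, and it swaps the SURF matching step for a much simpler consistency score (the variance across the aligned views) so that the example needs only NumPy. All function names, parameters, and numeric values below are hypothetical.

```python
import numpy as np

def reconstruct_plane(elemental, shift_px):
    """Shift-and-average the elemental images for one candidate depth plane.

    Returns the reconstructed plane and a consistency score: the mean
    variance across the aligned views (low means the views agree, i.e. the
    plane is in focus). Circular shifts are used for simplicity.
    """
    aligned = np.stack([np.roll(img, -k * shift_px, axis=1)
                        for k, img in enumerate(elemental)])
    return aligned.mean(axis=0), aligned.var(axis=0).mean()

def estimate_depth(elemental, shifts_px, focal_mm, pitch_mm, pixel_mm):
    """Pick the candidate shift with the most consistent reconstruction."""
    scores = [reconstruct_plane(elemental, s)[1] for s in shifts_px]
    best = shifts_px[int(np.argmin(scores))]
    # Assumed pinhole relation: shift = focal * pitch / (depth * pixel size).
    depth_mm = focal_mm * pitch_mm / (best * pixel_mm)
    return best, depth_mm

# Synthetic scene: one textured plane seen by 5 cameras, shifted by
# 4 pixels between neighboring views (hypothetical values).
rng = np.random.default_rng(0)
base = rng.random((32, 64))
true_shift = 4
elemental = [np.roll(base, k * true_shift, axis=1) for k in range(5)]

best_shift, depth_mm = estimate_depth(
    elemental, list(range(1, 11)), focal_mm=50.0, pitch_mm=5.0, pixel_mm=0.01)
print(best_shift, depth_mm)
```

In a pipeline closer to the one described, the variance score would be replaced by matching SURF features of each object against every reconstructed plane and taking the depth of the best-matching plane.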

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Barzi, F.K., Nezamabadi-pour, H. Automatic objects’ depth estimation based on integral imaging. Multimed Tools Appl 81, 43531–43549 (2022). https://doi.org/10.1007/s11042-022-13221-3

DOI: https://doi.org/10.1007/s11042-022-13221-3