Abstract

Fundus imaging is a widely used diagnostic method that captures the retinal tissues, which can be important biomarkers for diagnosing diseases. Many studies have proposed automatic algorithms to detect the optic disc (OD) and fovea, but they have several limitations. Although the precise regions of retinal tissues are clinically important, most of these studies focused on localization rather than segmentation. They also did not sufficiently demonstrate the clinical effectiveness of their methods through quantitative analysis, and many of them addressed only a single retinal tissue. To compensate for these limitations, this study proposes an automated segmentation method for both the OD and fovea. The dataset was acquired from the DRIVE and Drions databases, and an additional ground truth dataset was obtained from an ophthalmologist. The original fundus image was preprocessed to remove noise and enhance contrast, and the retinal vessel was segmented for use in the OD and fovea segmentation. In the OD and fovea segmentation step, a region of interest was designated based on image features to increase the segmentation accuracy. To segment the OD, the retinal vessel was removed and substituted based on the intensity values of the four nearest non-vessel pixels. Finally, the OD and fovea regions were segmented based on image features including intensity, shape, and size. The proposed method was evaluated by quantitative analysis using eight metrics. It showed high segmentation performance for the OD and fovea, with accuracies of 99.18 % and 99.80 %, respectively, on the DRIVE database.


1 Introduction

A fundus camera is a widely used piece of medical imaging equipment for fundus examinations. It acquires fundus images non-invasively, and the images provide useful clinical information about retinal tissues, retinal lesions, and anatomical structures.

Retinal tissues, which mainly consist of the retinal vessel, optic disc (OD), and fovea, show different features in fundus images. These tissues can be among the most important biomarkers for the early-stage diagnosis of diverse diseases [17]. Specifically, the OD and fovea can provide various information for the diagnosis of glaucoma and diabetic retinopathy, respectively [35, 3]. Furthermore, the OD and fovea show characteristics similar to those of retinal lesions such as microaneurysms, exudates, and drusen, which may lead to misdiagnosis of these lesions. Therefore, segmentation of the OD and fovea is an important task in image processing.

In general, these retinal tissues are analyzed through subjective manual screening by an ophthalmologist. However, manually checking a large number of fundus images is difficult and time-consuming, and the diagnostic accuracy may be affected by the skill of the examiner. To solve these problems, many studies have proposed automated algorithms for the segmentation of the OD and fovea.

Extensive research has investigated how to find the OD and fovea, and many different methods have been proposed based on morphological operations, the watershed transform, active contours, templates, and other techniques [20, 21, 14]. Reza [31] proposed a method named the circle operator, which is oriented at specific angles with a specific pixel length. This method segmented the OD by analyzing the pixel intensity variation at four points and showed OD localization success rates from 81.48 to 100 % on six different datasets. Guo et al. [13] proposed structure label transfer to localize the fovea. This method found the images most similar to the reference image and localized the fovea using a local feature descriptor. It achieved an average success rate of 98.18 % on the DRIVE, DIARETDB0, DIARETDB1, and STARE databases, and the average distance between the ground truth and the detected location was 0.151 OD diameter. Qureshi et al. [30] proposed an ensemble algorithm that combined different methods to overcome the weaknesses of each individual method. They decreased the error rate for detection of the OD and fovea by applying the combined system to the DIARETDB0, DIARETDB1, and DRIVE databases. Abdullah et al. [1] segmented the OD based on morphological operators, the circular Hough transform, and the grow-cut algorithm. On the Drions database, this method achieved an average sensitivity, specificity, and accuracy of 85.08 %, 99.66 %, and 95.49 %, respectively. Samawi et al. [33] segmented the OD using a thresholding-based method. They evaluated the method on seven different databases and obtained an accuracy of over 95 %. Naqvi et al. [22] proposed a method based on OD homogenization and contour estimation, which segmented the OD with accuracies of 98.60 %, 96.72 %, and 98.51 % on Messidor, Drions, and ONHSD, respectively.

Despite all these efforts, previous studies have three main limitations. First, most of the OD and fovea studies focused on localization, not segmentation. Localization merely identifies the location of an object, whereas segmentation delineates the object at the pixel level. Second, they did not sufficiently demonstrate the clinical effectiveness of their methods using a variety of quantitative evaluation approaches. Some studies designated the center of the true retinal tissue as the ground truth and calculated the distance between the center of the segmented region and the ground truth to compute accuracy [13, 30, 29, 15, 5]. Others manually labeled the actual retinal tissue region and considered the result successful if the detected point was positioned inside the labeled region [31, 34, 6]. In these cases, the method was not sufficiently evaluated. Lastly, most existing studies proposed automated methods for a single retinal tissue, although combined information from different retinal tissues is clinically important.

To compensate for these limitations, this study proposes a novel automated segmentation method for both the OD and fovea in the fundus image. Both tissues were segmented at the pixel level, and the performance was quantitatively evaluated with diverse metrics. The overall process of this study is illustrated in Fig. 1. First, the original fundus image was preprocessed using color space selection, filtering, and contrast enhancement algorithms to remove noise and enhance the contrast. The retinal vessel was then segmented using a morphological operator and Otsu’s method prior to the OD and fovea segmentation step. A region of interest (ROI) was assigned based on the image features and the detected retinal vessel to segment the OD and fovea. For segmentation of the OD, the retinal vessel region was removed and substituted based on the intensity values of the four nearest non-vessel pixels. Finally, the OD and fovea regions were segmented from each of the candidate regions based on the image features. The proposed method was evaluated using quantitative metrics including sensitivity, specificity, precision, negative predictive value (NPV), accuracy, F1 score, structure measure (S-measure), and enhanced-alignment measure (E-measure). The rest of this paper is organized as follows. Section 2 describes the acquired fundus image databases and the algorithm of the proposed method. Section 3 summarizes the results of the proposed method, and Section 4 analyzes them. Finally, Section 5 provides the conclusion of this study.

2 Materials and methods

2.1 Data acquisition

This study obtained the fundus image dataset from the DRIVE and Drions databases to apply and evaluate the proposed method. Both are publicly available databases that provide not only fundus images but also ground truth datasets manually segmented by experts. Detailed information on these two databases is as follows.

DRIVE [36], which stands for Digital Retinal Images for Vessel Extraction, provides a dataset of 40 fundus images compressed in JPEG format. These images were randomly selected from a dataset acquired in a diabetic retinopathy screening program in the Netherlands. The images were obtained with a Canon CR5 non-mydriatic 3CCD camera with a 45-degree field of view (FOV). The resolution of each image was 768 × 584 pixels, and the FOV was circular with a diameter of approximately 540 pixels. In addition, the DRIVE database provides a ground truth dataset of the vasculature for each fundus image. This dataset was manually segmented by human observers who were instructed and trained by an experienced ophthalmologist. Because the DRIVE database is labeled only for the retinal vessel, the OD and fovea areas were manually segmented in this study by an ophthalmologist (Dongguk University Hospital, Ilsan, the Republic of Korea) to acquire an additional ground truth dataset. The ophthalmologist drew outlines of the OD and fovea regions on the fundus images. Based on the drawn outlines, the region of each tissue was segmented, and binary images were obtained as the ground truth dataset.

The Drions database [2] consists of 110 fundus images obtained from the Ophthalmology Service at Miguel Servet Hospital, Saragossa (Spain). The mean age of the patients was about 53 years with a standard deviation of 13.05. Of the patients, 53.8 % were female and the rest were male. The images were first acquired with a color analog fundus camera and then digitized using an HP-PhotoSmart-S20 high-resolution scanner. Through this process, RGB images with a resolution of 600 × 400 pixels and 8 bits per pixel were obtained. In this study, the Drions database was used to compare the OD segmentation performance of the proposed method with that of previous studies.

2.2 Preprocessing

Before the segmentation step, the original fundus image was preprocessed using color space selection, filtering, and contrast enhancement algorithms to improve the image quality. The original image may contain noise and a non-uniform background due to diverse factors, such as non-uniform illumination and variation in the pigment of the eye [23]. Noise and a non-uniform background can adversely affect segmentation of the retinal tissues. Therefore, these elements were removed to improve segmentation accuracy.

In this study, the green channel was selected from the original RGB image because it showed clear contrast between the retinal tissues (Fig. 2b). A Gaussian filter then removed noise by suppressing high-frequency components in the green channel image (Fig. 2c). The Gaussian filter used a 15 × 15 window and a sigma of 0.8. This parameter set was selected by comparing various combinations of parameter values. Next, contrast limited adaptive histogram equalization (CLAHE) was applied to improve the local contrast of the image (Fig. 2d). Ordinary histogram equalization adjusts the distribution of all pixels in the image, whereas CLAHE computes several histograms and operates on pixels in small regions rather than on the entire image. It also reduces noise amplification by limiting the contrast amplification in homogeneous regions. Through these steps, the original fundus image was optimized before segmentation of the retinal tissues, as shown in Fig. 2.
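The first two preprocessing steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the function names `green_channel`, `gaussian_kernel`, and `smooth` are hypothetical, the image is assumed to be a list of rows of (R, G, B) tuples, border pixels are replicated (the source does not specify border handling), and the CLAHE step is omitted.

```python
import math


def green_channel(rgb_image):
    """Return the green channel of an image stored as rows of (R, G, B) tuples."""
    return [[pixel[1] for pixel in row] for row in rgb_image]


def gaussian_kernel(size=15, sigma=0.8):
    """Build a normalized size x size Gaussian kernel (weights sum to 1)."""
    half = size // 2
    raw = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            for x in range(-half, half + 1)]
           for y in range(-half, half + 1)]
    total = sum(sum(row) for row in raw)
    return [[w / total for w in row] for row in raw]


def smooth(image, kernel):
    """Filter a 2-D list with the (symmetric) kernel, replicating border pixels."""
    rows, cols = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for dy in range(-half, half + 1):
                rr = min(max(r + dy, 0), rows - 1)  # clamp row index to the image
                for dx in range(-half, half + 1):
                    cc = min(max(c + dx, 0), cols - 1)  # clamp column index
                    acc += kernel[dy + half][dx + half] * image[rr][cc]
            out[r][c] = acc
    return out
```

With a sigma of 0.8, more than 99 % of the kernel weight falls inside the central 5 × 5 taps, so the 15 × 15 window mostly guarantees that the Gaussian tails are not truncated rather than widening the blur.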

2.3 Segmentation of the retinal vessel

Morphological operation, a widely used image processing technique, extracts structures in an image from the perspective of mathematical morphology [12]. It transforms the shape of an object in the image using a variety of operators, including dilation, erosion, opening, and closing. Each operator is performed by applying a mask, called a structuring element, in a different way. The shape and size of the structuring element should be chosen in advance according to the characteristics of the structure to be detected.

Prior to the OD and fovea segmentation step, the retinal vessel region was segmented so that it could be used for detecting the ROI and reconstructing the image. It was segmented with a morphological operation, specifically the closing operator. The closing operator performs dilation followed by erosion, as defined in Eqs. (1)–(3).

where A is the image and B is the structuring element. ⊕, ⊖, and \(\cdot\) indicate dilation, erosion, and closing, respectively.
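For reference, the textbook forms of these three operators for a grayscale image and a flat structuring element are given below. This is a sketch of the standard definitions only; the exact notation of Eqs. (1)–(3) in the source may differ, and the pixel coordinates \((x, y)\) and offsets \((s, t)\) are symbols introduced here for illustration.

\[ (A \oplus B)(x, y) = \max_{(s, t) \in B} A(x - s,\, y - t) \]

\[ (A \ominus B)(x, y) = \min_{(s, t) \in B} A(x + s,\, y + t) \]

\[ A \cdot B = (A \oplus B) \ominus B \]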

The closing operator can therefore eliminate holes while maintaining the initial size of a region. It was applied to the preprocessed image to remove fine structures (Fig. 3a). For the closing operator, a disk-shaped structuring element with a radius of 5 pixels was selected because a disk-shaped mask is invariant to rotation. Then, the difference between the closed image and the preprocessed image was computed (Fig. 3b). This difference image was converted into a binary image using Otsu’s method [27]. In this way, the retinal vessel was automatically segmented from the fundus image, as shown in Fig. 3c.
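The vessel segmentation steps described above (closing, difference image, Otsu thresholding) can be sketched in plain Python as follows. This is a minimal illustration and not the authors' MATLAB implementation: the function names `disk_offsets`, `closing`, `otsu_threshold`, and `segment_vessels` are hypothetical, the image is assumed to be a list of rows of integers in 0 to 255, and offsets that fall outside the image are simply ignored, which is an assumption the source does not specify.

```python
def disk_offsets(radius):
    """Offsets (dy, dx) that lie inside a disk-shaped structuring element."""
    return [(dy, dx)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if dy * dy + dx * dx <= radius * radius]


def _morph(image, offsets, pick):
    """Take max (dilation) or min (erosion) over the structuring element.
    Offsets that fall outside the image are ignored."""
    rows, cols = len(image), len(image[0])
    return [[pick(image[r + dy][c + dx]
                  for dy, dx in offsets
                  if 0 <= r + dy < rows and 0 <= c + dx < cols)
             for c in range(cols)]
            for r in range(rows)]


def closing(image, radius=5):
    """Grayscale closing: dilation followed by erosion with the same disk."""
    offsets = disk_offsets(radius)
    return _morph(_morph(image, offsets, max), offsets, min)


def otsu_threshold(values, levels=256):
    """Return the threshold t that maximizes the between-class variance,
    where class 0 holds values <= t and class 1 holds values > t."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var = w0 * w1 * (mu0 - mu1) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def segment_vessels(image, radius=5):
    """Closing removes thin dark vessels; closed minus original highlights them."""
    closed = closing(image, radius)
    diff = [[c - v for c, v in zip(crow, vrow)]
            for crow, vrow in zip(closed, image)]
    t = otsu_threshold([v for row in diff for v in row])
    return [[v > t for v in row] for row in diff]
```

Because the disk contains its own center and is symmetric, the closed image is never darker than the input, so the difference image is non-negative and can be thresholded directly.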

2.4 Segmentation of the optic disc

This study proposes a novel method consisting of two main steps, designation of a ROI and image reconstruction, to improve the segmentation accuracy of the OD region. In the first step, the candidate region of the OD was detected from the preprocessed image based on the image characteristics of the OD. This region was detected by calculating a threshold and converting the image into a binary image. To set the threshold, all pixel values were sorted in ascending order, and the pixel value marking the top 10 % was selected as the threshold value. Among all regions in the resulting binary image (Fig. 4a), one region was selected for the OD by using image features (Fig. 4b). In this process, the length and intensity features of the OD were applied to prevent misdetection of other regions caused by noise, illumination artefacts, and pathological structures. Then, a circular region with a radius of 70 pixels around the center of mass (COM) of the selected region was designated as the ROI, as shown in Fig. 4c. This radius was selected by investigating the general size of the OD in fundus images. To enhance the contrast between pixels in the ROI, CLAHE was applied to the selected region, as shown in Fig. 4d.
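The thresholding and ROI designation described above can be sketched in plain Python as follows. This is a simplified illustration, not the authors' implementation: the function names are hypothetical, the image is assumed to be a list of rows of intensities, and the selection of one connected region by its length and intensity features is not reproduced, so the center of mass is taken over the whole binary mask.

```python
def top_fraction_threshold(image, fraction=0.10):
    """Intensity at which the brightest `fraction` of pixels begins."""
    values = sorted(v for row in image for v in row)
    n_top = max(1, round(len(values) * fraction))
    return values[len(values) - n_top]


def binarize(image, threshold):
    """Mark pixels whose intensity is at or above the threshold."""
    return [[v >= threshold for v in row] for row in image]


def center_of_mass(mask):
    """Mean (row, column) of all True pixels, or None for an empty mask."""
    coords = [(r, c)
              for r, row in enumerate(mask)
              for c, v in enumerate(row) if v]
    if not coords:
        return None
    n = len(coords)
    return (sum(r for r, _ in coords) / n, sum(c for _, c in coords) / n)


def circular_roi(shape, center, radius=70):
    """Boolean mask of pixels within `radius` of `center` (row, column)."""
    rows, cols = shape
    cy, cx = center
    return [[(r - cy) ** 2 + (c - cx) ** 2 <= radius ** 2
             for c in range(cols)]
            for r in range(rows)]
```

The same `circular_roi` helper would apply to the fovea step with a radius of 100 pixels, with the center taken from the main retinal vessel instead of the bright candidate region.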

In the second step, the retinal vessel region, which could disturb the segmentation of the OD, was removed from the selected ROI. The retinal vessel region segmented in the prior procedure was converted into NaN elements, and the NaN elements were then replaced with new pixel values calculated from the four nearest non-vessel pixel values. These four neighboring pixels consist of the two non-vessel pixels closest to the target pixel in the horizontal direction (C1, C2) and the two closest in the vertical direction (R1, R2). As shown in Eq. (4), two weights were obtained from the distance ratios between the target vessel pixel and the nearest non-vessel pixels in the horizontal direction, and the sum of the weighted intensity values was calculated. The same process was performed in the vertical direction, as shown in Eq. (5). The new value for the vessel pixel was then obtained as the mean of these two values (Eq. (6)). In Eqs. (4)–(6), i and j are the coordinates of the vessel pixel in the fundus image. Figure 4e shows the image after vessel removal. Finally, Otsu’s method was applied to the reconstructed image to segment the OD region, and this region was corrected by measuring the eccentricity and the ratio between the major and minor axis lengths to optimize the segmentation. Figure 4f shows the result of the automated OD segmentation algorithm.

where I is the intensity value of the image, C and R represent the column and row indices of a pixel, and h and v indicate the horizontal and vertical directions.
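One plausible reading of this vessel replacement step is distance-weighted linear interpolation along each axis, followed by averaging of the horizontal and vertical estimates. The sketch below illustrates that reading only; the exact form of Eqs. (4)–(6) is not reproduced in this text, so the weights used here, the function names, and the fallback to a single available neighbor at image borders are all assumptions.

```python
def _nearest_non_vessel(line_mask, start, step):
    """Index of the nearest non-vessel position from `start` moving by `step`."""
    k = start + step
    while 0 <= k < len(line_mask):
        if not line_mask[k]:
            return k
        k += step
    return None


def _interpolate(values, line_mask, pos):
    """Distance-weighted estimate at `pos` from the nearest non-vessel
    neighbors on either side along one line (a row or a column)."""
    lo = _nearest_non_vessel(line_mask, pos, -1)
    hi = _nearest_non_vessel(line_mask, pos, +1)
    if lo is None and hi is None:
        return None
    if lo is None:  # assumption: fall back to the single available neighbor
        return float(values[hi])
    if hi is None:
        return float(values[lo])
    w_lo = (hi - pos) / (hi - lo)  # the closer neighbor gets the larger weight
    w_hi = (pos - lo) / (hi - lo)
    return w_lo * values[lo] + w_hi * values[hi]


def remove_vessels(image, vessel_mask):
    """Replace each vessel pixel with the mean of its horizontal and
    vertical interpolated estimates."""
    rows, cols = len(image), len(image[0])
    out = [[float(v) for v in row] for row in image]
    for i in range(rows):
        for j in range(cols):
            if not vessel_mask[i][j]:
                continue
            h = _interpolate(image[i], vessel_mask[i], j)
            col_vals = [image[r][j] for r in range(rows)]
            col_mask = [vessel_mask[r][j] for r in range(rows)]
            v = _interpolate(col_vals, col_mask, i)
            estimates = [e for e in (h, v) if e is not None]
            if estimates:
                out[i][j] = sum(estimates) / len(estimates)
    return out
```

For a single vessel pixel whose left, right, upper, and lower neighbors have intensities 10, 50, 30, and 70, the horizontal estimate is 30, the vertical estimate is 50, and the replaced value is their mean, 40.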

2.5 Segmentation of the fovea

As in the preceding OD segmentation process, a ROI was designated prior to the segmentation of the fovea to obtain the candidate region and increase the segmentation accuracy. The ROI was obtained based on the structural relationship between the retinal vessel and the fovea. The fovea is generally located near the center of the retinal vessel in a fundus image. Therefore, the main retinal vessel was selected from the previously segmented region, and its COM was obtained, as shown in Fig. 5a. Then, a circular region with a radius of 100 pixels around the COM was designated as the ROI (Fig. 5b). This radius was selected based on the general size of the fovea in fundus images.

To segment the fovea, a flood-fill operation was applied to the ROI (Fig. 5c). This operation raised the intensity values of dark pixels enclosed by brighter pixels based on the intensity values of the surrounding pixels, thereby filling holes in the image such as the fovea. A closing operator was also applied to the ROI (Fig. 5d). Then, the difference image was obtained by subtracting the closed image from the flood-filled image, as shown in Fig. 5e, and the final fovea region was segmented from the difference image using Otsu’s method. Figure 5f shows the result of the automated fovea segmentation algorithm.
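The flood-fill step can be illustrated with a grayscale hole-filling routine. The sketch below is one common way to implement it (a priority-queue sweep inward from the image border with 4-connectivity) and is not taken from the source: the function names `fill_holes` and `subtract` are hypothetical, and the connectivity is an assumption. In the pipeline described above, the second argument of `subtract` would be the closed ROI.

```python
import heapq


def fill_holes(image):
    """Grayscale hole filling: raise each dark basin to the lowest level at
    which it connects to the image border (4-connectivity)."""
    rows, cols = len(image), len(image[0])
    filled = [[None] * cols for _ in range(rows)]
    heap = []
    # Border pixels keep their values and seed the inward sweep.
    for r in range(rows):
        for c in range(cols):
            if r in (0, rows - 1) or c in (0, cols - 1):
                filled[r][c] = image[r][c]
                heapq.heappush(heap, (image[r][c], r, c))
    # Always expand from the lowest known level first.
    while heap:
        level, r, c = heapq.heappop(heap)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols and filled[rr][cc] is None:
                filled[rr][cc] = max(image[rr][cc], level)
                heapq.heappush(heap, (filled[rr][cc], rr, cc))
    return filled


def subtract(a, b):
    """Pixel-wise difference a - b of two equally sized images."""
    return [[x - y for x, y in zip(arow, brow)] for arow, brow in zip(a, b)]
```

A dark pixel of intensity 20 surrounded by pixels of intensity 100 is raised to 100, so the difference between the filled and unfilled images is non-zero only inside the enclosed dark region.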

2.6 Quantitative evaluation

This study evaluated the performance of the proposed method using diverse quantitative metrics derived from a confusion matrix, including sensitivity, specificity, precision, NPV, accuracy, and F1 score. These metrics were calculated from the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) values. TP represents the number of pixels correctly segmented as retinal tissue, and TN indicates the number of pixels correctly segmented as non-retinal tissue. FP and FN signify the number of pixels incorrectly identified as retinal and non-retinal tissue, respectively. Each metric was calculated as defined in Eqs. (7)–(12).
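For reference, the standard definitions of these six metrics in terms of TP, TN, FP, and FN are listed below. They are the conventional forms and are given here as a sketch; the layout and numbering of Eqs. (7)–(12) in the source may differ.

\[ \text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP} \]

\[ \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{NPV} = \frac{TN}{TN + FN} \]

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}} \]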

Sensitivity and precision measure the ability of the proposed method to segment the retinal tissue, whereas specificity and NPV measure its ability to segment the non-retinal tissue. Accuracy indicates the ratio of correctly classified pixels among all pixels. The F1 score is the harmonic mean of precision and sensitivity. This study also calculated the structure measure (S-measure) and the enhanced-alignment measure (E-measure), which were proposed in [8, 10]. These metrics evaluate the structural similarity between the segmented region and the ground truth.
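The confusion-matrix metrics can be computed from a predicted mask and a ground truth mask as in the following sketch. The function names `confusion_counts` and `metrics` are hypothetical, both masks are assumed to be lists of rows of 0/1 (or boolean) values of equal size, and the S-measure and E-measure are not implemented here.

```python
def confusion_counts(pred, truth):
    """Count TP, TN, FP, and FN pixels between two binary masks."""
    tp = tn = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn


def metrics(tp, tn, fp, fn):
    """Return the six confusion-matrix metrics as a dictionary.
    A ratio with a zero denominator is reported as NaN."""
    def ratio(num, den):
        return num / den if den else float("nan")

    sensitivity = ratio(tp, tp + fn)
    precision = ratio(tp, tp + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "npv": ratio(tn, tn + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }
```

Because the OD and fovea occupy only a small fraction of a fundus image, TN dominates the pixel counts, so accuracy and specificity tend to be high even when sensitivity and precision are moderate. This is one reason to report all of these metrics together.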

3 Experimental results

This study proposes an automated segmentation method for the OD and fovea in the fundus image. The performance of the proposed method was evaluated using the eight metrics described in Section 2. Unlike some existing studies, which calculated only the success rate, these metrics were used to demonstrate the clinical effectiveness of the proposed method through quantitative analysis.

The evaluation was conducted using the DRIVE and Drions databases with 40 and 110 images, respectively. Different parameters were compared, and the optimal conditions were selected. For the morphological operation, a disk-shaped structuring element with a radius of 5 pixels was selected because it is invariant to rotation. The optimal ROI size was selected by investigating the general size of the OD and fovea in fundus images; the ROI was a circular region with a radius of 70 pixels for the OD and 100 pixels for the fovea. The experiment was performed on an Intel Core i7-7700K CPU with MATLAB R2016a.

3.1 Segmentation results of the retinal vessel, OD, and fovea on the DRIVE database

To segment each of the three retinal tissues, the proposed method was applied to the DRIVE database and evaluated against ground truth obtained by manual segmentation. Although the main goal of this study was the segmentation of the OD and fovea, retinal vessel information was used for the segmentation of these two tissues. Therefore, the segmentation results of the retinal vessel were also evaluated in addition to those of the OD and fovea. The ground truth of the OD and fovea was obtained separately from an ophthalmologist because the DRIVE database provides only the ground truth of the retinal vessel. Table 1 shows the segmentation results for the retinal tissues using the proposed method. The segmentation accuracy decreased in the order fovea > OD > retinal vessel, and it was over 95 % for all retinal tissues. In particular, the fovea and OD were segmented with high accuracies of 99.80 % and 99.18 %, respectively. The S-measure and E-measure values were also over 0.95 for these two retinal tissues.

3.2 Comparison of segmentation results with previous studies on the DRIVE and Drions databases

The retinal vessel dataset of the DRIVE database was used for a comparative study between the proposed method and previous studies. The proposed method was also applied to the Drions database, which includes the ground truth of the OD region, for a comparative study of OD segmentation. The experimental results are shown in Tables 2 and 3. The segmentation accuracy of the retinal vessel and OD was 95.35 % and 98.94 %, respectively. The proposed method showed high segmentation accuracy compared with previous studies. It also required a short computation time of less than 0.3 s. In particular, retinal vessel segmentation took at least 2 s per fundus image in previous studies, whereas the proposed method completed it in just 0.03 s.

3.3 OD segmentation results according to the application of the two main algorithms to the DRIVE database

The proposed method segmented the OD using two main algorithms, the designation of a ROI and image reconstruction. In this study, four conditions were set according to whether or not each of these two algorithms was applied, and the results of the conditions were compared to evaluate the contribution of these algorithms. Table 4 shows the OD segmentation results for the four conditions. All of the evaluation indices increased when the two algorithms were applied, and sensitivity and precision increased the most. Specifically, conditions 1 and 2, without a ROI, showed low sensitivity and precision of less than 55 %, whereas these values rose sharply to above 70 % in conditions 3 and 4, which included the ROI designation step. Furthermore, in condition 4, the accuracy and F1 score increased by 0.64 % and 26.35 %, respectively, compared with condition 1.

3.4 Performance of the fovea segmentation algorithm for images not including the fovea

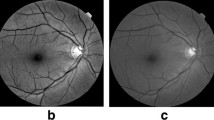

The proposed method demonstrated an outstanding ability to segment the fovea region, with a high accuracy of 99.80 % as shown in Table 1. However, a comparative study of fovea segmentation was difficult to carry out because most previous studies addressed localization and did not use the quantitative evaluation approaches applied in this study. Therefore, to assess the robustness of the proposed method, this study analyzed the results on five fundus images that did not include the fovea, as shown in Fig. 6. The proposed method did not detect any region in any of these images.

4 Discussion

Many previous studies suggested diverse methods for finding the OD and fovea regions in fundus images to resolve the problems that can occur in subjective manual screening. However, these studies also had some limitations. Therefore, this study proposed an automated segmentation method for the OD and fovea to compensate for these limitations. Quantitative analysis was then performed based on a total of eight metrics to determine the clinical effectiveness of the proposed method. In addition, the OD and fovea areas were manually segmented to obtain the ground truth, because the DRIVE database only has annotations for the retinal vessel.

In the quantitative analysis, the proposed method showed excellent performance, reaching 99 % accuracy in the segmentation of the OD and fovea. These high results are considered to have been obtained because the target area was narrowed by the designation of a ROI. Through this step, the candidate region was detected, and further image processing focused on this area. The retinal vessel was segmented with an accuracy of over 95 %. It was segmented without a ROI and showed lower results than the OD and fovea segmentation. These results may be due to the narrow and tiny vessels in the eye, which are very hard to detect because they show low contrast in fundus images. The proposed method may have failed to segment these vessels. However, the main vessel region, which is what matters for the OD and fovea segmentation in this study, was almost fully segmented.

In the present study, a comparative study was performed between the proposed method and previous studies for the retinal vessel and OD using the DRIVE and Drions databases. The experimental results showed that the proposed method segmented the retinal vessel and OD with higher accuracy than previous studies. In particular, the accuracy for OD segmentation was 98.94 %, whereas it was less than 98 % in the previous studies. This shows that the proposed method was more capable of segmenting the OD than the previous methods. The proposed method also segmented the retinal vessel and OD with a low computation time of under 0.3 s. It is considered that the proposed method segmented the OD region efficiently because designating a ROI reduced the amount of computation. The retinal vessel was also segmented quickly compared with previous studies, because the proposed method used a morphological operator, which is a relatively simple algorithm, whereas previous studies applied more complex algorithms such as supervised and unsupervised methods. In addition, the shape and size of the structuring element were optimized to achieve high accuracy in less time. The speed of the algorithm is important when many images must be monitored and the retinal tissues checked. Therefore, the low computation time is a significant strength from a clinical point of view.

This study proposed the designation of a ROI and image reconstruction to segment the OD region and evaluated the contribution of these two main algorithms. All evaluation indices increased when the two algorithms were applied, which demonstrates that they improved the OD segmentation performance. Among the six evaluation indices, sensitivity and precision increased particularly sharply. These results indicate an increase in TP, meaning that the proposed method became better at correctly segmenting the OD when the algorithms were applied. The results also showed that the ROI designation step affected the segmentation performance more than image reconstruction did.

Comparing the fovea segmentation performance was difficult because previous studies conducted only localization. Therefore, to evaluate the ability and robustness of the proposed method for fovea segmentation, this study ran the algorithm on five fundus images that did not include the fovea. No area was detected in any of the images, which indicates that the proposed method correctly judged that there was no fovea in any of the five images. The proposed method detected the ROI based on the vessel structure and segmented the fovea region using its image feature, namely a dark circular region enclosed by brighter pixels. In images not containing the fovea, there would be no region showing this characteristic, which is considered to be the reason why no area was segmented in these images. In real clinical settings, an image may not include the fovea region depending on the imaging conditions. These results show that the proposed method did not produce any error in this situation and support its strong performance for segmentation of the fovea.

5 Conclusions

This study proposed a novel automated method to segment the retinal tissues. To compensate for the limitations of previous studies, this study focused on the precise segmentation of both the OD and fovea, and the proposed method was evaluated with quantitative metrics. Its high performance was confirmed by segmenting the OD and fovea with accuracies of 99.18 % and 99.80 %, respectively. The segmentation accuracy of the retinal vessel was 95.35 %, and the main retinal vessel structure, which was used for the OD and fovea segmentation, was almost fully segmented. In addition, this study confirmed the outstanding performance of the proposed method in terms of accuracy and computation time through a comparative study with previous studies. Furthermore, this study verified the usefulness of the two main OD segmentation algorithms by comparing and analyzing the results with and without them. The results demonstrate that these algorithms can improve the segmentation performance. The robustness of the fovea segmentation algorithm was also confirmed by applying the proposed algorithm to fundus images that did not contain the fovea. These results demonstrate the clinical effectiveness of the proposed method, because accuracy and time are the most important factors in a screening system for retinal tissues.

However, there are some limitations that need to be addressed in future studies. This study was performed with a small number of fundus images. In particular, the DRIVE database, which was used for most of the study, consists of only 40 fundus images. Therefore, future studies should acquire a larger number of images to evaluate the segmentation method. The method should also be tested on abnormal images corrupted by diverse factors, including noise and artifacts, to verify its clinical applicability.

Data availability

Not applicable.

Code availability

Not applicable.

References

Abdullah M, Fraz MM, Barman SA (2016) Localization and segmentation of optic disc in retinal images using circular Hough transform and grow-cut algorithm. PeerJ 4:e2003. https://doi.org/10.7717/peerj.2003

Carmona EJ, Rincón M, García-Feijoó J, Martínez-de-la-Casa JM (2008) Identification of the optic nerve head with genetic algorithms. Artif Intell Med 43(3):243–259. https://doi.org/10.1016/j.artmed.2008.04.005

Chalakkal RJ, Abdulla WH, Thulaseedharan SS (2018) Automatic detection and segmentation of optic disc and fovea in retinal images. IET Image Process 12(11):2100–2110. https://doi.org/10.1049/iet-ipr.2018.5666

Claro M, Santos L, Silva W, Araujo F, Moura N (2016) Automatic glaucoma detection based on optic disc segmentation and texture feature extraction. CLEI Electronic J 19(2):1–10

Devasia T, Jacob P, Thomas T (2015) Automatic optic disc localization and segmentation using swarm intelligence. World Comput Sci Inf Technol J 5(6):92–97

Devasia T, Jacob P, Thomas T (2018) Automatic optic disc localization in color retinal fundus images. Adv Comput Sci Technol 11(1):1–13

Fan Z, Li F, Rong Y, Li W, Cai X, Lin H (2015) Detecting optic disk based on adaboost and active geometric shape model. In: IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER)

Fan DP, Cheng MM, Liu Y, Li T, Borji A (2017) Structure-measure: A new way to evaluate foreground maps. In: IEEE international conference on computer vision

Fan Z, Rong Y, Cai X, Lu J, Li W, Lin H, Chen X (2017) Optic disk detection in fundus image based on structured learning. IEEE J Biomed Health Inform 22(1):224–234. https://doi.org/10.1109/JBHI.2017.2723678

Fan DP, Gong C, Cao Y, Ren B, Cheng MM, Borji A (2018) Enhanced-alignment measure for binary foreground map evaluation. arXiv preprint arXiv:1805.10421

Fraz MM, Remagnino P, Hoppe A, Uyyanonvara B, Rudnicka AR, Owen CG, Barman SA (2012) An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans Biomed Eng 59(9):2538–2548. https://doi.org/10.1109/TBME.2012.2205687

Gonzalez RC, Woods RE, Eddins SL (2009) Digital image processing using MATLAB, 2nd edn. Prentice Hall, Upper Saddle River

Guo XX, Li Q, Sun C, Lu YN (2018) Automatic localization of macular area based on structure label transfer. Int J Ophthalmol 11(3):422. https://doi.org/10.18240/ijo.2018.03.12

Joshi S, Karule PT (2017) Fundus image analysis for detection of fovea: A review. In: International Conference on Intelligent Computing and Control (I2C2)

Kamble R, Kokare M, Deshmukh G, Hussin FA, Mériaudeau F (2017) Localization of optic disc and fovea in retinal images using intensity based line scanning analysis. Comput Biol Med 87(1):382–396. https://doi.org/10.1016/j.compbiomed.2017.04.016

Lam BS, Gao Y, Liew AW (2010) General retinal vessel segmentation using regularization-based multiconcavity modeling. IEEE Trans Med Imaging 29(7):1369–1381. https://doi.org/10.1109/TMI.2010.2043259

MacGillivray TJ, Trucco E, Cameron JR, Dhillon B, Houston JG, van Beek EJ (2014) Retinal imaging as a source of biomarkers for diagnosis, characterization and prognosis of chronic illness or long-term conditions. Br J Radiol 87(1040):20130832. https://doi.org/10.1259/bjr.20130832

Marin D, Aquino A, Gegundez-Arias ME, Bravo JM (2011) A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imaging 30(1):146–158. https://doi.org/10.1109/TMI.2010.2064333

Miri MS, Mahloojifar A (2011) Retinal image analysis using curvelet transform and multistructure elements morphology by reconstruction. IEEE Trans Biomed Eng 58(5):1183–1192. https://doi.org/10.1109/TBME.2010.2097599

Mohammadi F, Esmaeili M, Javadzadeh A, Tabar HA, Rasta SH (2019) The computer based method to diabetic retinopathy assessment in retinal images: a review. Electron J Gen Med 16(2). https://doi.org/10.29333/ejgm/108619

Mookiah MRK, Acharya UR, Chua CK, Lim CM, Ng EYK, Laude A (2013) Computer-aided diagnosis of diabetic retinopathy: A review. Comput Biol Med 43(12):2136–2155. https://doi.org/10.1016/j.compbiomed.2013.10.007

Naqvi SS, Fatima N, Khan TM, Rehman ZU, Khan MA (2019) Automatic optic disk detection and segmentation by variational active contour estimation in retinal fundus images. Signal Image Video Process 13(6):1191–1198

Nayak J, Bhat PS, Acharya R, Lim CM, Kagathi M (2008) Automated identification of diabetic retinopathy stages using digital fundus images. J Med Syst 32(2):107–115

Nguyen UTV, Bhuiyan A, Park LAF, Ramamohanarao K (2013) An effective retinal blood vessel segmentation method using multi-scale line detection. Pattern Recogn 46(3):703–715. https://doi.org/10.1016/j.patcog.2012.08.009

Nugroho HA, Listyalina L, Setiawan NA, Wibirama S, Dharmawan DA (2015) Automated segmentation of optic disc area using mathematical morphology and active contour. In: International Conference on Computer, Control, Informatics and its Applications (IC3INA)

Nugroho HA, Aras RA, Lestari T, Ardiyanto I (2017) Retinal vessel segmentation based on Frangi filter and morphological reconstruction. In: International Conference on Control, Electronics, Renewable Energy and Communications (ICCREC)

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66. https://doi.org/10.1109/TSMC.1979.4310076

Pal S, Chatterjee S, Dey D, Munshi S (2019) Morphological operations with iterative rotation of structuring elements for segmentation of retinal vessel structures. Multidimens Syst Signal Process 30(1):373–389. https://doi.org/10.1007/s11045-018-0561-9

Panda R, Puhan NB, Panda G (2017) Robust and accurate optic disk localization using vessel symmetry line measure in fundus images. Biocybern Biomed Eng 37(3):466–476. https://doi.org/10.1016/j.bbe.2017.05.008

Qureshi RJ, Kovacs L, Harangi B, Nagy B, Peto T, Hajdu A (2012) Combining algorithms for automatic detection of optic disc and macula in fundus images. Comput Vis Image Underst 116(1):138–145. https://doi.org/10.1016/j.cviu.2011.09.001

Reza MN (2018) Automatic detection of optic disc in color fundus retinal images using circle operator. Biomed Signal Process Control 45:274–283. https://doi.org/10.1016/j.bspc.2018.05.027

Roychowdhury S, Koozekanani DD, Parhi KK (2015) Blood vessel segmentation of fundus images by major vessel extraction and subimage classification. IEEE J Biomed Health Inform 19(3):1118–1128. https://doi.org/10.1109/JBHI.2014.2335617

Samawi HJ, Al-Sultan AY, Al-Saadi EH (2020) Optic disc segmentation in retinal fundus images using morphological techniques and intensity thresholding. In: International Conference on Computer Science and Software Engineering (CSASE)

Sekhar S, Al-Nuaimy W, Nandi AK (2008) Automated localisation of optic disk and fovea in retinal fundus images. In: 16th European Signal Processing Conference

Singh A, Dutta MK, ParthaSarathi M, Uher V, Burget R (2016) Image processing based automatic diagnosis of glaucoma using wavelet features of segmented optic disc from fundus image. Comput Methods Programs Biomed 124:108–120. https://doi.org/10.1016/j.cmpb.2015.10.010

Staal J, Abràmoff MD, Niemeijer M, Viergever MA, van Ginneken B (2004) Ridge based vessel segmentation in color images of the retina. IEEE Trans Med Imaging 23(4):501–509. https://doi.org/10.1109/TMI.2004.825627

Wang L, Liu H, Lu Y, Chen H, Zhang J, Pu J (2019) A coarse-to-fine deep learning framework for optic disc segmentation in fundus images. Biomed Signal Process Control 51:82–89

Funding

This study was supported by the Dongguk University Research Fund of 2019 (S-2019-G0001-00041).

Author information

Contributions

GYK was the major contributor in conducting the study and writing the original draft. SHL participated in collecting and interpreting the data. SMK supervised the entire research process and reviewed the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflicts of interest/Competing interests

Not applicable.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kim, G.Y., Lee, S.H. & Kim, S.M. Automated segmentation and quantitative analysis of optic disc and fovea in fundus images. Multimed Tools Appl 80, 24205–24220 (2021). https://doi.org/10.1007/s11042-021-10815-1

DOI: https://doi.org/10.1007/s11042-021-10815-1