Abstract

This paper proposes and describes an unsupervised computational model that monitors an elderly person who lives alone and issues alarms when a risk to the elderly person’s well-being is identified. This model is based on data extracted exclusively from passive infrared motion sensors connected to a ZigBee wireless network. The proposed monitoring system and model are non-intrusive, do not capture any images, and do not require any interaction with the monitored person. Thus, they are more likely to be adopted by members of the elderly population who might reject other more intrusive or complex types of technology. The developed computational model for activity discovery employs a kernel estimator and local outlier factor calculation, which are reliable and have a low computational cost. This model was tested with data collected over a period of 25 days from two elderly volunteers who live alone and have fairly different routines. The results demonstrate the model’s ability to learn relevant behaviors, as well as identify and issue alarms for atypical activities that can be suggestive of health problems. This low-cost, minimalistic sensor network approach is especially suited to the reality of underdeveloped (and developing) countries where assisted living communities are not available and low cost and ease of use are paramount.


1 Introduction

The global population is aging at an unprecedented rate. According to the World Health Organization (WHO), the 2019 global average life expectancy was 72.6 years, and in some developed countries, it was over 83 years [58]. The United Nations estimated that the older population (65+ years) accounted for 9.1% of the world’s population in 2019 [58].

Another important trend is the increasing number of single-person households worldwide, which accounted for 32.8% of households in the European Union in 2018 [17] and 28% in the USA in 2015 [57]. Such a trend has also been present in the elderly population: in the USA, 42% of households headed by someone aged 65+ years are single-person households [21]. The United Nations estimated that 11% of men and 19% of women older than 60 years lived alone in 2005 [56], corresponding to a population that was greater than 840 million.

The increase in members of the elderly population living alone has also occurred in developing countries, and numbers have been growing at a much faster pace. In the next 20 years, the increase in population aging expected in Brazil will be equivalent to the rise over nearly 100 years that has occurred in European countries such as France, Sweden, and the United Kingdom [61]. Data from the latest Brazilian census showed that the percentage of single-person households had grown to 12% in 2010 and that 39.4% of those were occupied by a person aged 60 years or older [23].

This presents a new paradigm for Brazilians, who must now deal with the rapid increase in the number of older people living in single-person households without the eldercare infrastructure that is available in developed countries. Although assisted living communities are commonly available in more developed nations, they are virtually non-existent in countries like Brazil [30], where even nursing homes are limited [6, 11]. Satisfying an older person’s desire to age in place often poses a safety risk and puts financial strain on families needing to hire help for the older person [5, 30]. This points to the need for a system that can monitor the older person’s well-being and alert concerned family and friends.

Wireless sensor networks and smart home technologies have been used for the remote monitoring of older people’s health and their daily activities in the home environment [2, 3, 7, 9, 14, 16, 24, 25, 27, 28, 31, 33, 34, 39, 41, 42, 43, 46, 50, 53, 54, 59]. Rantz et al. demonstrated the effectiveness of alerting family and healthcare providers to subtle changes in an older person’s behavior detected by smart home technologies, which led to the diagnosis of health issues 10 to 14 days before the older person became aware of the symptoms [42, 43, 44].

Despite the many benefits that this technology can provide, previous works have shown a resistance to the use of image recording sensors [7, 8, 10, 20, 35] and low compliance in the long-term use of wearable sensors or technology that requires action from the monitored person [20, 28, 35, 42]. A recent study showed that the most cited barriers for the adoption of smart home technologies are privacy and security, reliability and obsolescence, and usability [51]. In addition, an analysis of smart-home adoption in Brazil indicated that cost, followed by culture, were the major barriers [45].

Therefore, a successful elder monitoring system must be low cost, easy to use and maintain, avoid the use of image-acquiring technology, and must not require interaction with the monitored elder. Recently, we reported a streamlined elder monitoring system that addressed these requirements [26]. The strategy taken was to deploy a motion sensor network in the residence that communicates with a remote server where the data could be analyzed, and alarms issued to an appointed family member or friend. Unlike previously reported work, the system used a single type of sensor, which lowers the installation and maintenance costs, and does not require an assisted living infrastructure and healthcare professionals to supervise the system.

The work reported in [26] demonstrated that recorded activations were mostly consistent with the behavior reported by the elders but also allowed the uncovering of unusual activities by visual analysis of the activations. This also identified the need for an automated data analysis system that identifies the number of people in the house and tracks the sensor activation sequence. These requirements are addressed in the present article.

Although supervised techniques such as support vector machines, decision trees, naïve Bayes, multiple-instance learning, neural networks, and hidden Markov models are often used for analyzing smart home data [3, 7, 9, 10, 18, 20, 28, 33, 36, 52], we chose to investigate the feasibility of using unsupervised techniques as they have a significantly lower computational cost and do not require an extensive training data set. These are important aspects to keep the system development and maintenance costs low and to enable wider user adoption. A different unsupervised technique, namely clustering activity sensor sequences, was recently reported [22] in the context of a multiple-type sensor network in an assisted living community.

The computational model developed in this work for activity discovery and learning uses a combination of kernel density estimation and outlier detection. This unsupervised method has a low computational cost and is easy to implement, which are important attributes to keep the overall system cost low. In combination with the streamlined sensor network reported in [26], this enables an effective monitoring system for elderly individuals, which meets the requirements of underdeveloped (and developing) countries where assisted living communities are not available.

2 Materials and methods

This project involved the testing of a system with two elderly volunteers and was submitted to and approved by the Brazilian Ethics Committee, registered under Plataforma Brasil, CAAE 35247214.5.0000.5544. Both volunteers signed an informed consent form in compliance with the rules established by the Ethics Committee.

2.1 Monitoring system

In designing the monitoring system [26], a few guidelines were used to maximize its chances of being adopted by the elderly population:

- Low cost: accessible to a wider income range.
- Low complexity: easy installation and maintenance.
- Transparent to the elder: no interaction with the system required.
- No images: to preserve the elder’s privacy and modesty.

To address the first two items, a single type of passive infrared (PIR) motion sensor was used to reduce the installation complexity and maintenance costs. These sensors are reliable, low cost, small, do not require special lighting conditions, and do not record images, being widely used in monitoring systems [1, 7, 10, 19, 24, 28, 55]. Their unobtrusiveness is an important feature appreciated by the monitored residents [10, 12, 13, 43]. The PIR sensor used in this study was made by Parallax (#555-28027) and has low power consumption (<100 μA) and a motion detection range of ~20 ft, large enough for average household rooms.

A schematic of the monitoring system is depicted in Fig. 1. The system consists of a network of motion sensor nodes with wireless communication to a home monitor unit (HMU) that collects, stores, and transmits the data to a remote monitoring center (MC) where the data can be further analyzed and alarms triggered if necessary. The sensor network was deployed in a star topology with broadcast communication between the HMU and the various sensor nodes. The number of sensor nodes depends on the number of rooms and pieces of furniture that require monitoring. Therefore, a ZigBee network was chosen due to its longer reach and ability to handle a larger number of devices than Bluetooth LE, which are important attributes for large houses. Each sensor node contained an Arduino Nano microcontroller board, an Xbee radio (ZigBee protocol), and at least one PIR motion sensor. The following criteria were used for the sensors’ placement:

1. A motion sensor was placed inside each house’s exit door to infer incoming and outgoing house traffic (Fig. 1, Sensor 1, named S1).
2. At least one motion sensor was placed in each room to detect the person’s movement (Fig. 1, Sensor 2, named S2). Large rooms may require more than one sensor for full coverage.
3. One motion sensor was placed above each item of furniture where prolonged stays may occur, such as on a sofa, bed, or at a table, to monitor activities such as sleeping and eating (Fig. 1, Sensor 3, named S3).

When a person moves within the range of a motion sensor, the sensor transmits a wireless signal to the HMU. The HMU records and stores the signal in a csv (comma separated value) string with the sensor’s unique identification (ID) and date and time of the sensor activation in the format ID;DD/MM/YYYY HH:MM:SS. The HMU consists of a computer connected to an Arduino board with another Xbee radio, which receives the information from the sensor nodes and transmits the aggregated information to the remote MC via the internet at a predetermined time interval.
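As an illustration of the record format described above, the following minimal Python sketch parses one activation string of the form ID;DD/MM/YYYY HH:MM:SS. The names `Activation` and `parse_activation` and the sample record are hypothetical and are not part of the original import routines, which were written in C.

```python
from datetime import datetime
from typing import NamedTuple


class Activation(NamedTuple):
    """One motion sensor activation as stored by the HMU (hypothetical type)."""
    sensor_id: str
    timestamp: datetime


def parse_activation(record: str) -> Activation:
    """Parse a record of the form 'ID;DD/MM/YYYY HH:MM:SS'."""
    sensor_id, _, stamp = record.strip().partition(";")
    if not sensor_id or not stamp:
        raise ValueError(f"malformed record: {record!r}")
    # Day comes first in the timestamp, as stated in the text.
    return Activation(sensor_id, datetime.strptime(stamp, "%d/%m/%Y %H:%M:%S"))


# Made-up sample record, for illustration only.
example = parse_activation("S1;03/02/2015 07:41:09")
print(example.sensor_id, example.timestamp.isoformat())
```

Rejecting malformed records early keeps transmission errors out of the tables built in the later steps.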

2.2 Model overview

The main objective of the model described here is to identify whether a person is alive, which means verifying that the person is moving inside the house. The secondary objective is to learn behavioral patterns and identify significant deviations that may be indicative of any health problems.

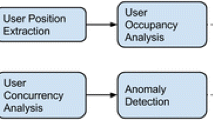

The model consists of a set of algorithms integrated into a database, grouped by the different steps, as depicted in Fig. 2. The Activity discovery step aims at inferring, from the captured data, the activity in which the person is involved at a particular moment, i.e., eating, sleeping, moving around the house, or getting in/out of the house. This step also transforms the original data structure into one that fits the behavior recognition algorithms. The Behavior recognition step runs at the end of each day and updates the person’s behavioral patterns. The Risk identification step performs a series of checks to identify significant periods without motion activity being recorded and deviations from the usual pattern that may warrant the issue of an alarm. This step analyzes the data generated from both the Activity discovery and Behavior recognition steps.

The free and open-source object-relational database management system PostgreSQL was used [37]. The routines for importing the monitored data to the database were written in C. The algorithms for handling the data, generating the tables, migrating data, and issuing and recording alarms were written in PL/pgSQL, the procedural language for PostgreSQL [38]. PL/pgSQL was also used to call the LOF (local outlier factor) function from the open-source statistical software R [40] and the circular kernel density function from MATLAB® [29], which are used in the Behavior recognition and Risk identification steps described next.

During the initialization of the model, the number of sensors, their positions, detection coverage areas, distances, and possible paths between them are entered manually. Using that information, the model creates a graph [15, 49, 60] to represent the possible paths among the sensors, as displayed in Fig. 3. In this graph, each node represents an individual motion sensor, each edge represents a bidirectional path between two sensor coverage areas, and the length of each edge, d, is the distance, in meters, between the borders of the two sensor detection areas. The graph accounts for sensors with different detection coverage areas as well as for the existence of blind spots, so these are not a problem for the model as long as they occur in passage areas where the elderly person would not stay for extended periods of time.
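The sensor graph can be sketched as a weighted undirected graph. The Python example below is only an illustration: the class `SensorGraph`, the sensor names, the distances, and the walking-speed limit `max_speed_m_s` are all assumptions, and the text does not state that the model uses a shortest-path search. The plausibility check shows one way such a graph could help decide whether two activations may belong to the same person.

```python
import heapq
from collections import defaultdict


class SensorGraph:
    """Undirected graph: nodes are sensors, edge lengths are distances in meters."""

    def __init__(self):
        self.adj = defaultdict(dict)

    def add_path(self, a, b, meters):
        # Paths are bidirectional, so store the edge in both directions.
        self.adj[a][b] = meters
        self.adj[b][a] = meters

    def shortest_distance(self, src, dst):
        """Dijkstra search; returns inf when no path connects the sensors."""
        best = {src: 0.0}
        heap = [(0.0, src)]
        while heap:
            dist, node = heapq.heappop(heap)
            if node == dst:
                return dist
            if dist > best.get(node, float("inf")):
                continue  # stale heap entry
            for nxt, meters in self.adj[node].items():
                cand = dist + meters
                if cand < best.get(nxt, float("inf")):
                    best[nxt] = cand
                    heapq.heappush(heap, (cand, nxt))
        return float("inf")

    def same_person_plausible(self, a, b, seconds, max_speed_m_s=1.5):
        """Assumed rule: one person cannot cover the path faster than max_speed_m_s."""
        return self.shortest_distance(a, b) <= seconds * max_speed_m_s


# Hypothetical layout; the distances are made up for illustration.
g = SensorGraph()
g.add_path("door", "living", 1.5)
g.add_path("living", "kitchen", 2.0)
g.add_path("living", "bedroom", 3.0)
print(g.shortest_distance("door", "kitchen"))
```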

2.3 Activity discovery

The Activity discovery step generates the tables needed for the Risk identification and Behavior recognition algorithms. This is composed of three major steps:

1. Identification of the number of people in the house.
2. Identification of activities involving few motion activations.
3. Determination of the number of sensor activations within each hour.

The first task consists of the identification of the number of people in the house at any given moment, as this information is used later in the Behavior recognition and Risk identification steps. For instance, there is no need to monitor while there is a second person in the home, as the second person would help if a problem arose. However, it is important to know when the resident leaves the house so that the model can appropriately interpret extended periods of time with no sensor activity.

The number of people inside the house is determined by monitoring the entrance door sensor, the sequence of activated sensors, and the time between activations, using the graph generated during the initialization phase. When the door sensor is activated and there is no one in the house, the model assumes that one person is entering the house. When there are people in the house and the door sensor activation is not followed by the activation of other sensors, the model will assume that the resident has left and the house is empty.

If there are activations prior to and after the door sensor activation, the model will look at the sequence of activations to determine if a person is entering or leaving, and whether the number of people is increasing or decreasing. The model also checks for the number of simultaneous activations of different sensors to confirm the presence of more than one person inside the house. This task will generate a table recording the number of people in the house and the corresponding time period, for usage in subsequent steps, as shown in Table 1. The task also generates the information needed to analyze the patterns of entrance and exit in the house that will be described in the next section.

The second Activity discovery task is the monitoring of activities involving few motion activations. There are some activities, such as sleeping, eating, and watching TV, which might lead to extended periods of time without activation of the motion sensors in the rooms, but are not related to an impending problem. To account for that, dedicated motion sensors are placed above the places of interest (sofa, bed, and dining table) to increase the number of activations. The model will then identify when the resident is utilizing one of those items of furniture and adjust the expected frequency of sensor activation. The data captured by those sensors is stored in the format shown in Table 2.

The third Activity discovery task tabulates the number of activations for every sensor in each hour of the day and saves the information as shown in Table 3.

Note that the data for the sensor activation density for the rooms (Table 3) and low activation density information for monitored furniture/places (Table 2) excludes the periods with two or more persons in the house (Table 1).
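The hourly tabulation with the exclusion of multi-person periods can be sketched as follows. This is a minimal Python illustration, not the PL/pgSQL routine used in the study; the function name `hourly_counts`, the tuple layouts, and the sample data are assumptions.

```python
from collections import Counter
from datetime import datetime


def hourly_counts(activations, multi_person_periods):
    """Count activations per (sensor, date, hour), skipping multi-person periods.

    activations: iterable of (sensor_id, datetime)
    multi_person_periods: list of (start, end) datetimes, end exclusive
    """
    counts = Counter()
    for sensor_id, ts in activations:
        if any(start <= ts < end for start, end in multi_person_periods):
            continue  # two or more people in the house: not analyzed
        counts[(sensor_id, ts.date(), ts.hour)] += 1
    return counts


# Made-up data: a visitor is present between 09:00 and 10:00.
day = datetime(2015, 2, 3)
sample = [
    ("S2", day.replace(hour=7, minute=5)),
    ("S2", day.replace(hour=7, minute=40)),
    ("S2", day.replace(hour=9, minute=10)),
    ("S3", day.replace(hour=9, minute=30)),
]
visits = [(day.replace(hour=9), day.replace(hour=10))]
table = hourly_counts(sample, visits)
print(dict(table))
```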

2.4 Behavior recognition

At the end of each day, the Behavior recognition step updates the person’s behavioral patterns with the data generated by the Activity discovery step on that day. Three different analyses are performed at this step: the room’s occupancy rate (time spent in each room) for every hour segment, the time spent sleeping, and the patterns of entrance and exit from the house.

The occupancy rate for each room and item of monitored furniture is obtained by calculating a probability density function (using a circular kernel estimator) of the activations of each motion sensor. The probability of a person being in a room or on one of the monitored furniture items in a given time period can be approximated by a density function [32, 48]:

\[ \hat{f}(x)=\frac{1}{nh}\sum_{i=1}^{n}K\left(\frac{x-{X}_i}{h}\right) \]

where x is the time, Xi is the timestamp of the i-th sensor activation, n is the number of activations, K is a kernel function that satisfies \( {\int}_{-\infty}^{+\infty }K(t) dt=1 \) and h is a smoothing parameter (bandwidth) that was adjusted empirically for each sensor.

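Given the circular characteristic of our data (the time of day wraps around at midnight), we used the MATLAB® algorithm circ_ksdensity.m as proposed by Muir [29] with the kernel function given as a wrapped normal distribution:

\[ K\left(\theta; \mu, \sigma \right)=\frac{1}{\sigma \sqrt{2\pi }}\sum_{k=-\infty}^{+\infty}\exp \left[-\frac{{\left(\theta -\mu +2\pi k\right)}^2}{2{\sigma}^2}\right] \]

where θ is the time of day expressed as an angle, with μ and σ from the unwrapped normal.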
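To make the circular estimate concrete, the sketch below evaluates a kernel density over the 24-hour cycle with a wrapped normal kernel. It is a plain Python illustration and does not reproduce the internals of circ_ksdensity.m; the function names, the truncation of the infinite sum to a few terms, and the sample activation times are assumptions.

```python
import math


def wrapped_normal(theta, mu, sigma, terms=3):
    """Wrapped normal density on the circle, with the sum truncated to 2*terms+1 terms."""
    total = 0.0
    for k in range(-terms, terms + 1):
        total += math.exp(-((theta - mu + 2 * math.pi * k) ** 2) / (2 * sigma ** 2))
    return total / (sigma * math.sqrt(2 * math.pi))


def circular_kde(hours, bandwidth_hours, grid_hours):
    """Density per hour of activation times (in hours, 0-24) on a 24 h circle."""
    to_angle = 2 * math.pi / 24          # hours -> radians
    sigma = bandwidth_hours * to_angle   # bandwidth h expressed in radians
    angles = [h * to_angle for h in hours]
    density = []
    for g in grid_hours:
        theta = g * to_angle
        value = sum(wrapped_normal(theta, a, sigma) for a in angles) / len(angles)
        density.append(value * to_angle)  # convert from per radian to per hour
    return density


# Made-up activations clustered around midnight.
grid = [i / 10 for i in range(240)]
dens = circular_kde([23.5, 0.5, 0.0], bandwidth_hours=0.5, grid_hours=grid)
area = sum(dens) * 0.1  # Riemann sum over the full day, should be close to 1
print(round(area, 6), dens[0] > dens[120])
```

Because the kernel wraps, activations at 23:30 and 00:30 reinforce each other around midnight instead of being treated as almost a day apart, which is the reason a circular estimator is preferred for time-of-day data.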

The sleep patterns were determined in a similar way. The model calculates the density function for the times of sleeping and waking up obtained during the Activity discovery Step and stored in Table 2.

The patterns for leaving and arriving at the house are simply established by calculating the average time out of the house and the frequency of outings in the three periods: morning, afternoon, and evening/night.

2.5 Risk identification

The Risk identification step distinguishes two categories of critical situations: immediate and non-immediate risk. The first one consists of situations that may reflect an imminent risk to the person’s well-being. The second one relates to potential future risks that may be inferred from changes in the person’s behavior, like their sleeping and eating routines.

An immediate risk is identified when no motion activation occurs within a pre-defined time period, which is set according to the health status of the person being monitored and his/her previously learned behavior. This period is extended when the person is out of the house or is using a specific furniture item (bed, sofa, or dining table) that reduces the frequency of motion sensor activations. Additionally, an outing that lasts longer than usual (more than two standard deviations above the mean duration of past outings) is considered an immediate risk, and an alarm is issued.
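The outing rule can be illustrated with a short Python sketch. The function names and the baseline durations are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not specify which estimator was used.

```python
from statistics import mean, stdev


def outing_alarm_threshold(past_durations_min):
    """Mean outing duration plus two standard deviations (sample SD assumed)."""
    return mean(past_durations_min) + 2 * stdev(past_durations_min)


def is_outing_alarm(current_min, past_durations_min):
    """True when the current outing exceeds the learned threshold."""
    return current_min > outing_alarm_threshold(past_durations_min)


# Made-up baseline of past outing durations, in minutes.
baseline = [60, 90, 120, 150, 180]
print(round(outing_alarm_threshold(baseline), 1))
print(is_outing_alarm(200, baseline), is_outing_alarm(220, baseline))
```

With this baseline the threshold is roughly 215 min, so a 200 min outing passes while a 220 min outing would raise an alarm.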

Sufficient sleep is considered crucial for good quality of life, especially for the elderly. A night of atypical sleep can have a negative impact throughout the rest of the day, increasing the risk of falls [47]. Therefore, at this step, the model identifies changes in aspects related to sleeping activity, characterized by the data generated from the Activity discovery step and stored in Table 2. The model cannot guarantee that the individual is really sleeping; it can only suggest that the person is in bed. The technique chosen for anomaly detection is the calculation of outliers using the local outlier factor (LOF) proposed by Breunig et al. [4], which has the advantage of calculating the degree of outlierness characterized by the LOF parameter.

The algorithm starts by calculating the reachability distance of an object p with respect to object o, given by [4]:

\[ \text{reach-dist}_k\left(p,o\right)=\max \left\{k\text{-distance}(o),d\left(p,o\right)\right\} \]

where k-distance(o) is the distance between the object o and its k-th nearest neighbor, k is an integer, and d(p,o) is the distance between the objects p and o. In the model, an object is the record formed by a line entry in Table 2, which includes the characteristics of each sleep period (bedtime, wake-up time, length of time in bed, number of wakings, and length of awake time). A suitable value for k was found empirically to be 15 neighbors.

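Next, the algorithm calculates the local reachability density, lrd, for each object [4]:

\[ {lrd}_k(p)=1\Big/\left(\frac{\sum_{o\in {N}_k(p)}\text{reach-dist}_k\left(p,o\right)}{\left|{N}_k(p)\right|}\right) \]

where Nk(p) represents the k-distance neighborhood of p, i.e., the set of objects whose distance from p is less than or equal to k-distance(p).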

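Based on the local reachability density of each object and its neighbors, the local outlier factor of the object, LOF, is calculated by [4]:

\[ {LOF}_k(p)=\frac{\sum_{o\in {N}_k(p)}\frac{{lrd}_k(o)}{{lrd}_k(p)}}{\left|{N}_k(p)\right|} \]

According to Breunig et al. [4], LOF values around 1 indicate that the point is similar to its neighbors, and the farther the value is from 1, the more different the point is from its neighbors. In particular, values well above 1 indicate that the point lies in a region of lower density than its neighbors and is therefore a candidate outlier.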
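The three quantities defined by Breunig et al. (reachability distance, local reachability density, and LOF) can be traced in a compact Python sketch. This is an illustrative implementation with Euclidean distance on made-up two-dimensional points; it is not the R function called by the model, which operates on the five sleep features with k = 15, and it assumes that no two points are identical.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def local_outlier_factors(points, k):
    """Return the LOF of every point, following the definitions of Breunig et al."""
    n = len(points)
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < number of points")
    dist = [[euclidean(p, q) for q in points] for p in points]

    k_distance, neighbors = [], []
    for i in range(n):
        others = sorted((dist[i][j], j) for j in range(n) if j != i)
        kd = others[k - 1][0]                      # distance to the k-th nearest neighbor
        k_distance.append(kd)
        neighbors.append([j for d, j in others if d <= kd])  # ties are included

    def reach_dist(p, o):
        return max(k_distance[o], dist[p][o])

    # Local reachability density: inverse of the mean reachability distance.
    # Assumes distinct points, so the sum below is never zero.
    lrd = [len(neighbors[p]) / sum(reach_dist(p, o) for o in neighbors[p])
           for p in range(n)]

    # LOF: mean ratio of the neighbors' density to the point's own density.
    return [sum(lrd[o] / lrd[p] for o in neighbors[p]) / len(neighbors[p])
            for p in range(n)]


# Made-up data: a tight cluster and one distant point.
data = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (10, 10)]
lof = local_outlier_factors(data, k=3)
print([round(v, 2) for v in lof])
```

In this toy data set the clustered points obtain values close to 1, while the distant point obtains a value far above 1.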

3 Experimental evaluation

In this section, the analysis of data obtained experimentally from two single-person households is described and discussed. The objective of the experiments was to test the model’s ability to identify behavioral patterns and to send alarms if any situations involving potential risks occurred. Prior to the start of the monitoring time, a questionnaire was completed by each elder regarding their sleeping, eating, and television watching habits, as well as their bath time routine, when they receive visitors, and when they leave home. Elders 1 and 2 had significant differences in their reported routines. Elder 1 went out for lunch every day, had naps in the mornings and afternoons, and went to the bathroom several times during the night. Elder 2 did not have naps, had her meals at home, and did not get up at night to go to the bathroom. Both elders had help with house cleaning chores: one morning a week for Elder 1 and five mornings for Elder 2. Elder 1 received frequent visits from a girlfriend, and Elder 2 received family on Sundays. The modeling results were compared with the answers to the questionnaire and unexpected results or behaviors identified by the model were clarified with them at the time of the occurrence or in the final interview after testing.

3.1 Test environment

The model was used to monitor two elderly volunteers who lived alone, over a period of 25 days each. Elder 1 was a 67-year-old male living in a 40 m2 apartment and Elder 2 was a 71-year-old female living in a 90 m2 house. Figures 4 and 5 present the house layouts showing the locations of the installed sensors.

3.2 Activity discovery results

During the monitoring period, a total of 43,233 and 86,765 sensor activations were recorded for Elder 1 and Elder 2, respectively. Figure 6 shows the activation data for each elder, which was analyzed by the model to generate Tables 1, 2 and 3 as described in Section 2.

Fig. 6 (a) Elder 1 and (b) Elder 2 sensor activation charts showing the time of each activation (dots) and time periods when the calculated number of persons in the house was either 0 (orange) or > 1 (blue) during the monitored days. The orange time slots with hatch marks are the ones that generated alarms for being longer than the average length of the outings plus two standard deviations calculated with the data from the first seven days

The number of people in the house was calculated using the method described in Section 2.3. Due to the small size of Elder 1’s house, and consequently the greater proximity among its sensors, the model was set up to identify more than one person in the house when two or more motion sensors separated by more than 50 cm were activated within a second, at least 10 times during each one-hour period. As Elder 2 lived in a larger house and the sensors were farther apart, different thresholds were needed. In this case, the model was set up to identify the presence of more than one person when multiple sensor activations occurred within 1 s, at least five times during a one hour period. The activations during the time periods with two or more people in the house did not need to be processed, reducing the number of analyzed activations from the original 43,233 and 86,765 activations to 23,134 and 41,949 for Elders 1 and 2, respectively.
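The multi-person rule described above can be sketched in Python as follows. The sketch is deliberately simplified: it only compares consecutive activations, it treats each one-hour period as a clock hour, and the optional `far_apart` predicate (standing in for the 50 cm separation check) is hypothetical, as are the function name and the sample data.

```python
from collections import Counter
from datetime import datetime, timedelta


def multi_person_hours(activations, min_events, window=timedelta(seconds=1),
                       far_apart=None):
    """Return the clock hours in which more than one person is inferred.

    activations: list of (sensor_id, datetime) sorted by time
    min_events: required number of near-simultaneous activations per hour
    far_apart: optional predicate (sensor_a, sensor_b) -> bool
    """
    events = Counter()
    for (s_prev, t_prev), (s_cur, t_cur) in zip(activations, activations[1:]):
        if s_prev == s_cur or t_cur - t_prev > window:
            continue  # same sensor, or not close enough in time
        if far_apart is not None and not far_apart(s_prev, s_cur):
            continue  # sensors too close to each other to separate two people
        events[t_cur.replace(minute=0, second=0, microsecond=0)] += 1
    return {hour for hour, count in events.items() if count >= min_events}


# Made-up data: five near-simultaneous activations of two sensors within one hour.
start = datetime(2015, 2, 3, 10, 0, 0)
sample = []
for i in range(5):
    t = start + timedelta(minutes=10 * i)
    sample.append(("S2", t))
    sample.append(("S3", t + timedelta(seconds=1)))

print(multi_person_hours(sample, min_events=5))   # threshold used for Elder 2
print(multi_person_hours(sample, min_events=10))  # threshold used for Elder 1
```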

The model identified more than one person at Elder 1’s home on 44% of the monitored days, with no clear pattern as shown in Fig. 6. Although Elder 1 indicated in the questionnaire that he had a cleaning lady coming on Thursday mornings, the model did not identify this. When questioned, Elder 1 explained that the cleaning lady’s schedule was flexible, that she had a key and sometimes he was not present during the cleaning period. In fact, the cleaning lady was present during an afternoon visit by one of the researchers. This situation suggests a limitation in the model: it can only identify whether there is someone in the house and is unable to confirm that the monitored person is actually the elder. In the case of Elder 2, the model identified more than one person in the house on 80% of the days, consistent with the reported times for the maid and the visits from family as shown in Fig. 6 and summarized in Table 4.

The periods of time with no people in the house were initiated when a door sensor was activated, followed by no sensor activation for 20 min, and terminated when the door sensor was activated again as explained in Section 2.3. As shown in Fig. 6a, the model identified that Elder 1 spent most of the daytime away from his residence. He went out on 100% of the monitored days, averaging 2.52 exits per day, staying out on average for 2 h and 41 min. He reported in the pre-test questionnaire that he left for lunch from 13:00 to 14:00. According to the model, the house was empty at these times on 19 of the 25 monitored days.
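The empty-house rule can be expressed as a short Python sketch. It is a simplified illustration: the function name, the sensor identifiers, and the sample data are assumptions, and cases in which the silence is broken by a sensor other than the door sensor are simply ignored here.

```python
from datetime import datetime, timedelta


def empty_house_periods(activations, door_id, quiet=timedelta(minutes=20)):
    """Return (start, end) periods during which the house is inferred to be empty.

    activations: list of (sensor_id, datetime) sorted by time
    A period starts at a door activation followed by at least `quiet` of silence
    and ends at the next door activation.
    """
    periods = []
    for (s, t), (s_next, t_next) in zip(activations, activations[1:]):
        if s == door_id and s_next == door_id and t_next - t >= quiet:
            periods.append((t, t_next))
    return periods


# Made-up day: the resident leaves at 08:05 and returns at 10:45.
d = datetime(2015, 2, 3)
sample = [
    ("S2", d.replace(hour=8, minute=0)),
    ("S1", d.replace(hour=8, minute=5)),    # door: exit
    ("S1", d.replace(hour=10, minute=45)),  # door: return
    ("S2", d.replace(hour=10, minute=46)),
    ("S1", d.replace(hour=12, minute=0)),   # door activity followed by motion inside
    ("S2", d.replace(hour=12, minute=1)),
]
print(empty_house_periods(sample, door_id="S1"))
```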

Elder 2 had a different routine, spending more time at home. The model identified exit movements in 56% of the days in this case, as can be seen in Fig. 6b. Both elders were away for two nights during the monitored period, which were correctly identified by the model. The model misidentified two exit periods for Elder 1, 2:00–5:00 on day 13 and 0:00–2:00 on day 14, due to a faulty door sensor connection that was fixed in the afternoon of day 14. This caused continuous firing of the door sensor that led to miscalculation of the number of people in the house on those days.

A comparison of the model results, the pre-test questionnaire answers, and the interviews performed for confirmation during and after the experiment is summarized in Table 4. As can be seen, these findings were mostly consistent with the information provided by the elders.

Figure 6 also shows that the activation density was reduced during bed time as expected. The analyses of these results (Table 2) as well as the room occupancy (Table 3) are discussed in the next section.

3.3 Behavior recognition

The calculated density functions for the sensors located in the living/dining room, kitchen and bathroom for both elders, shown in Fig. 7, indicate that both individuals spent most of their time in the living/dining room during the day, with trips to the bathroom and kitchen at certain times. In particular, Elder 1’s presence was more evident in the early morning, early afternoon, and late night, which can be related to breakfast, an afternoon nap on the sofa, and to the use of the computer, which was on a table located in the living/dining room.

Fig. 7 The circular kernel density of elder motion in the living/dining room, kitchen, and bathroom (from left to right). The upper densities refer to Elder 1, and the lower refer to Elder 2. The density was calculated using the function circ_ksdensity.m [66] with automatic selection of the kernel function and bandwidth

Elder 2 spent time in the living/dining room area during the afternoon and evening, starting soon after lunch time. This behavior was due to the use of a reclining chair for watching TV and the use of a computer and table located in this area. The low activation in the morning was due to the presence of a cleaner who came five times a week from approximately 08:15 to 11:45. As the behavior recognition algorithms were not executed during the periods when the presence of more than one person was detected inside the house, there is limited information on Elder 2’s daily morning routine.

The kitchen sensor’s kernel density in Elder 1’s house presented peaks that are consistent with the time for the preparation and consumption of his main meals. The lower peaks for the mid-day and evening were consistent with the habit of eating out reported in the questionnaire. Similarly, the kernel density for Elder 2 was also consistent with the reported meal preparation times. Additionally, the gaps in activity in the kitchen between the peaks around 8:00 and 12:00 were consistent with the cleaner’s arrival at ~8:15 and departure at ~11:45.

The bathroom usage by Elder 1 presented two large peaks at ~8:00 and 16:00 and a small one around 22:00. The morning peak was consistent with the reported wake-up and bathing time. The smaller elevation observed around 22:00 was also consistent with the reported evening bathing time. However, the large peak observed in the afternoon could not be correlated with activities reported in the questionnaire. A post-testing interview disclosed a change in the Elder 1 routine that happened during the monitoring period, caused by the start of a romantic relationship, which may have introduced additional grooming time in the bathroom prior to an evening outing. The most intense use of the bathroom for Elder 2 in the morning was consistent with the wake up and bathing times noted in the questionnaire. We observed three other small elevations at ~14:00, ~18:00, and again at bedtime. No significant visits to the bathroom during the night were detected for Elder 2, as reported in the questionnaire, but a single visit 1–2 h prior to wake-up was identified.

Elder 1 seldom used the dining table, with activity detected on 52% of the days and only 5 days with activity over 30 min. The morning activations were brief and occurred around 9:00–10:00, prior to Elder 1 exiting the house, which was reflected in the first kernel density peak in Fig. 8. The model did not identify table activity during the reported breakfast eating time because Elder 1 often did not have breakfast or ate it in the kitchen, as explained in the post-test interview. The density peaks around 16:00 were associated with use of the computer, which was located on the table. Although Elder 1’s questionnaire reported that he usually had dinner from 20:00 to 23:00, this was not captured by the model and can be explained by his change in routine as disclosed in the post-testing interview. The small dip in his presence at the table during lunch time was consistent with not having lunch at home as reported in the questionnaire. Elder 1 informed us that he usually takes naps on the bed or the sofa between 10:00 and 11:00 and in the early afternoon, between 13:00 and 14:00. We did not notice the morning nap, but the slight increase in use of the sofa at ~13:00 was consistent with the reported behavior. The major density peak on the sofa occurred between 20:00 and 22:00, which fell within the 17:00–01:00 period for watching TV as reported by Elder 1.

The dining table usage by Elder 2 at the reported lunch time was evident in Fig. 8. The model did not identify a significant presence at the dining table at the reported breakfast and dinner times. The first was a consequence of the cleaning lady’s presence, and the second is explained by dining at a chair in front of the TV as explained by Elder 2 in the post-test interview. This was corroborated by the sofa/chair density increase after 18:00.

A comparison of the behaviors detected by the model with the information provided by the elders is shown in Table 5.

Figure 9 shows the kernel density related to bedtime and wake-up times. Out of the 25 days analyzed, the model did not identify sleeping activity on three nights for Elder 1. For two of them it was clear that Elder 1 did not sleep at home, as the model could identify his exit from the apartment and his return on the next day, as indicated in Fig. 6a. Additionally, there was no motion sensor activation during that period. On the other night, a faulty door sensor connection caused continuous activation during the night/dawn of days 12/13, as previously discussed, which prevented the model from identifying sleep activity. The average time to go to bed was ~22:30 for Elder 1 and the average time to wake up was ~7:00. Both are close to the times reported in the questionnaire. On 43% of the days, he got up three to five times during the night. These wakings, identified during the Activity discovery Step and recorded in Table 2 as previously explained, are consistent with the ones reported in the questionnaire.

Regarding Elder 2, the model identified 22 nights of sleep activities out of the 25 monitored nights. The model was able to identify that Elder 2 did not sleep at home on two of the other three nights, since there were clear exits and returns with no motion sensor activation in between. On the third night, a power outage affected some of the sensors until 03:00 and all the sensors from 03:00 to 09:00 of day 14.

On average, Elder 2 went to bed at midnight and got up at 8:00, in agreement with the bed and wake-up times noted in the questionnaire. On 72% of the days, Elder 2 got up to go to the bathroom once during sleep, with an average stay of one and a half minutes. This pattern was not reported in the questionnaire but was confirmed in the post-test interview.

The monitoring data show that although both elders spent, on average, about the same time in bed, with a difference of less than 20 min, the density functions for Elder 2 were narrower than those for Elder 1, indicating more regular behavior for the former.
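To illustrate how the width of a bedtime density can be compared between two residents, the following is a minimal sketch rather than the implementation used in this study. It assumes a von Mises (circular) kernel so that times just before and just after midnight are treated as neighbours; the concentration `kappa`, the function names, and the sample bedtimes are all hypothetical.

```python
import math

def _bessel_i0(x, terms=60):
    """Modified Bessel function of the first kind, order 0 (power series)."""
    total, term = 1.0, 1.0
    for k in range(1, terms):
        term *= (x / 2) ** 2 / (k * k)
        total += term
    return total

def von_mises_kde(hours, kappa=8.0, grid_size=288):
    """Density over the 24 h clock (per hour) from event times in decimal hours.

    kappa is an assumed kernel concentration; larger values give narrower kernels.
    """
    if not hours:
        raise ValueError("need at least one event time")
    angles = [2 * math.pi * (h % 24) / 24 for h in hours]
    norm = 2 * math.pi * _bessel_i0(kappa)
    grid = [24 * i / grid_size for i in range(grid_size)]
    density = []
    for g in grid:
        theta = 2 * math.pi * g / 24
        s = sum(math.exp(kappa * math.cos(theta - a)) for a in angles)
        # Average the kernels, then convert from per-radian to per-hour.
        density.append(s / (len(angles) * norm) * (2 * math.pi / 24))
    return grid, density

def circular_mean_and_spread(hours):
    """Circular mean (hour of day) and circular standard deviation (hours)."""
    angles = [2 * math.pi * (h % 24) / 24 for h in hours]
    c = sum(math.cos(a) for a in angles) / len(angles)
    s = sum(math.sin(a) for a in angles) / len(angles)
    r = min(math.hypot(c, s), 1.0)  # clamp against rounding above 1
    mean_hour = (math.atan2(s, c) * 24 / (2 * math.pi)) % 24
    spread = math.inf if r == 0 else math.sqrt(-2 * math.log(r)) * 24 / (2 * math.pi)
    return mean_hour, spread

# Hypothetical bedtimes in decimal hours (not the study data).
regular = [23.9, 0.0, 0.1, 23.95, 0.05]
irregular = [21.5, 23.0, 22.0, 0.5, 23.5]

grid, density = von_mises_kde(regular)
peak_hour = grid[density.index(max(density))]
mean_reg, spread_reg = circular_mean_and_spread(regular)
mean_irr, spread_irr = circular_mean_and_spread(irregular)
print(f"regular: spread {spread_reg:.2f} h; irregular: spread {spread_irr:.2f} h")
```

A smaller circular spread corresponds to a narrower density peak, which is the property used above to describe one resident’s bedtime as more regular than the other’s.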

The model captured most of the elders’ behavior reported in the questionnaire. The discrepancies are small and might be due to inaccuracies in their own perception of the frequency or time taken for their daily activities. A summary of the comparison between the elder-reported information and modeling results is presented in Table 6.

3.4 Critical situations and alarms

The model analyzed the sensor activations and behavioral patterns and identified several situations of potential risk for the monitored elders.

3.4.1 Immediate risks

This risk category is related to the lack of sensor activation for a period longer than expected and to lengths of time outside the house longer than usual. If the individual is at home, these situations could reflect a fall or a fainting spell; if outside, an accident or becoming lost. An alarm should be issued as soon as such a situation is detected. Fortunately for the two elders, none of these events occurred during the 25 monitored days. However, there were a few situations that warranted the issue of alarms.

The model identified a longer-than-usual out-of-home time for the residents if it exceeded a threshold that was set to the average length plus two standard deviations for the outings in the first 7 days, corresponding to 5 h 20 min for Elder 1 and 5 h 27 min for Elder 2. The nights spent away from home were not included in the average calculations; however, they would be considered an exception and an alarm would be issued. As can be seen in Fig. 6, the length of Elder 2’s outings increased after the first week, and the number of outings in the first week for Elder 1 was too small to be representative. Therefore, the threshold value was recalculated using the full 25-day data, resulting in 7 h 05 min for Elder 1 and 6 h 40 min for Elder 2. Hence, the number of alarms was reduced from 11 to 4 for Elder 1 and from 4 to 2 for Elder 2. The out-of-home situations that warranted an alarm appear with hatch marks in Fig. 6 and are discussed in Table 7.
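The threshold rule described above (average outing length plus two standard deviations) can be sketched as follows. This is an illustrative example only: the outing durations are hypothetical, the function names are not taken from the system, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, stdev

def outing_threshold(durations_min, n_sigmas=2.0):
    """Mean plus n_sigmas sample standard deviations, in minutes."""
    if len(durations_min) < 2:
        raise ValueError("need at least two outings to estimate a spread")
    return mean(durations_min) + n_sigmas * stdev(durations_min)

def flag_long_outings(durations_min, threshold_min):
    """Indices of outings whose duration exceeds the threshold."""
    return [i for i, d in enumerate(durations_min) if d > threshold_min]

# Hypothetical outing durations in minutes (not the study data).
first_week = [60, 90, 120, 150, 180]
later = [100, 230, 400]
all_outings = first_week + later

# Threshold learned from the first week only.
threshold_week1 = outing_threshold(first_week)
alarms_week1 = flag_long_outings(all_outings, threshold_week1)

# Threshold recalculated from the full record, as done in the text.
threshold_full = outing_threshold(all_outings)
alarms_full = flag_long_outings(all_outings, threshold_full)

print(f"week-1 threshold: {threshold_week1:.1f} min, alarms at {alarms_week1}")
print(f"full threshold:   {threshold_full:.1f} min, alarms at {alarms_full}")
```

In this toy data set, recalculating the threshold over the longer record raises it and reduces the number of flagged outings, which mirrors the reduction in alarms reported above.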

When the elder is at home, long periods without activation become important. The firing of alarms due to periods longer than expected depends on the thresholds set for them, which are typically different for the periods when the elder is usually awake and when the elder is usually sleeping. For Elder 1, the threshold values were set to 120 min for day/evening hours and 240 min for the sleeping hours, since he took naps during the day and sometimes took sleeping medication at night. For Elder 2, the threshold values were 150 min, as she often watched TV and used the computer for extended periods and had a more regular sleep pattern. The alarms issued under these conditions are listed and commented on in Table 8. Differences in the amount and types of movement among the elder population are to be expected due to their individual behavior and health status, and the threshold values should be adjusted accordingly.
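A minimal sketch of this inactivity check is given below, under several assumptions that are not taken from the system itself: the sleeping window (22:30 to 07:00) is a fixed clock interval, the applicable limit is chosen from the clock time at which the gap begins, the resident is known to be at home for the whole record, and the timestamps are hypothetical. The check is also retrospective, since it compares consecutive activations; a live monitor would instead compare the current time with the last activation.

```python
from datetime import datetime, time

def in_sleep_window(t, sleep_start, sleep_end):
    """True if clock time t falls in a window that may wrap past midnight."""
    if sleep_start <= sleep_end:
        return sleep_start <= t < sleep_end
    return t >= sleep_start or t < sleep_end

def inactivity_alarms(activations, day_limit_min, night_limit_min,
                      sleep_start=time(22, 30), sleep_end=time(7, 0)):
    """Return (gap_start, gap_end, gap_minutes) for gaps above the limit."""
    alarms = []
    ordered = sorted(activations)
    for prev, cur in zip(ordered, ordered[1:]):
        asleep = in_sleep_window(prev.time(), sleep_start, sleep_end)
        limit = night_limit_min if asleep else day_limit_min
        gap = (cur - prev).total_seconds() / 60
        if gap > limit:
            alarms.append((prev, cur, gap))
    return alarms

# Hypothetical sensor activation times (not the study data).
clock = ["09:00", "10:30", "13:00", "14:00", "15:30", "17:00",
         "18:30", "20:00", "21:30", "23:00"]
events = [datetime.strptime(f"2020-01-01 {c}", "%Y-%m-%d %H:%M") for c in clock]
events += [datetime(2020, 1, 2, 2, 30), datetime(2020, 1, 2, 7, 30)]

# Limits reported above for Elder 1: 120 min awake, 240 min asleep.
alarms = inactivity_alarms(events, day_limit_min=120, night_limit_min=240)
for start, end, gap in alarms:
    print(f"{start:%d %H:%M} to {end:%d %H:%M}: {gap:.0f} min without activation")
```

With these sample times, the 150 min daytime gap and the 300 min night-time gap are flagged, whereas the 210 min gap starting at 23:00 is tolerated because it falls under the longer sleeping-hours limit.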

One situation generated alarms associated with behavior recognition that pointed to an immediate risk for Elder 1. This occurred in the early hours of day 9, with the bathroom motion sensor showing almost continuous activation from 00:48 to 05:15, a very atypical behavior. One of the researchers visited Elder 1 the following morning to check on him. At first, Elder 1 said that his health was fine and that he did not have any problems during the previous night. However, when asked about the frequent bathroom sensor activation data, he confirmed that he was in the bathroom but did not want to discuss what had happened. This highlights an ethical problem that needs to be addressed prior to widespread use of this system: what should be done when a problem is identified but the monitored elder does not want anyone to know? The more specific these alarms become, the greater the risk of invading the monitored person’s privacy.

3.4.2 Non-immediate risks

The local outlier factor (LOF) algorithm was run on the sleeping activity data and was able to identify atypical situations for both elders associated with values different from 1, which are summarized in Table 9. There were increases in the LOF value as well as instances with no computed values due to the absence of identifiable sleep activity. The former were caused by a shorter than usual time in bed, and the latter were caused by nights away or system failures. The sleeping pattern for Elder 2 was much more regular as evidenced by the small LOF value deviations from 1.
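For readers unfamiliar with LOF, the following self-contained sketch computes the score from its standard definition (k-distance, reachability distance, local reachability density). It is not the code used in this study: the two features per night (bedtime expressed as hours after noon, and hours in bed), the value of `k`, and the sample nights are hypothetical, and the features are left unscaled for simplicity.

```python
import math

def local_outlier_factor(points, k=3):
    """LOF score for each point; values near 1 indicate typical points."""
    n = len(points)
    if n <= k:
        raise ValueError("need more than k points")
    dist = [[math.dist(p, q) for q in points] for p in points]

    k_dist, neighbours = [], []
    for i in range(n):
        others = sorted((dist[i][j], j) for j in range(n) if j != i)
        kd = others[k - 1][0]
        k_dist.append(kd)
        # Keep every point within the k-distance, so ties are included.
        neighbours.append([j for d, j in others if d <= kd])

    # Local reachability density: inverse of the mean reachability distance.
    lrd = []
    for i in range(n):
        reach = [max(k_dist[j], dist[i][j]) for j in neighbours[i]]
        mean_reach = sum(reach) / len(reach)
        lrd.append(1.0 / max(mean_reach, 1e-12))  # guard against duplicates

    # LOF: mean density of the neighbours relative to the point's own density.
    return [
        sum(lrd[j] for j in neighbours[i]) / (len(neighbours[i]) * lrd[i])
        for i in range(n)
    ]

# Hypothetical nights: (bedtime in hours after noon, hours in bed).
nights = [
    (10.5, 8.5), (10.6, 8.4), (10.4, 8.6), (10.5, 8.3),
    (10.7, 8.5), (10.3, 8.4), (10.6, 8.6),
    (13.0, 4.0),  # late bedtime and a much shorter time in bed
]

scores = local_outlier_factor(nights, k=3)
for night, score in zip(nights, scores):
    print(night, round(score, 2))
```

In this toy example, the regular nights score close to 1 and the short night scores far above 1. Nights with no identifiable sleep activity have no feature vector and would simply be omitted from the input, which corresponds to the instances with no computed value mentioned above.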

The model does not monitor meal intake as it only detects the person’s presence at the dining table during meal times. If the meal is taken while standing by the kitchen counter or on the sofa while watching TV, this also affects the results obtained by the system. Breakfasts or lunches that occurred while the cleaner was at the house were not tracked, as this indicated a second person. As a consequence, the model was not able to identify breakfast and dinner times for Elder 1 for 77% and 53% of the monitored days, respectively. For Elder 2, the model failed to identify breakfast, lunch, and dinner for 76%, 60%, and 72% of the monitored days, respectively. This highlights the need for a continuous update of the information supplied by the elder in the initial questionnaire and also an awareness of changes in lifestyle.

4 Conclusions

We have proposed and described a model that can monitor elderly people who live alone, learn their behavior, and subsequently issue alarms when a risk to their well-being is identified. Although initially aimed at seniors, due to their pressing need for such solutions, the same system can also be used for any single-person household whose occupant’s health deserves attention.

We reported the results of two test trials of the model with two elderly volunteers who lived alone. The system was tested for a period of a month in two residences with significantly different sizes and shapes and whose residents had fairly different routines, attesting to the versatility of the model. Using a combination of kernel density estimation and outlier detection, our model was able to successfully capture most of the behaviors reported by both elders. The model was also able to identify risk situations and issue alarms, as well as to flag the need for a service call due to equipment failure and power outage.

One important aspect of the model is that it determines the number of people in the house, so that no information needs to be extracted from the data while more than one person is present. This significantly simplifies the problem and enables the use of a simpler model compared with others found in the literature.

Although it was not the aim of the model, a perceived limitation is related to meal intake: the model can only detect presence or absence at the dining table during meal times, and it can neither confirm that the person is actually having a meal nor detect a meal eaten elsewhere in the home. Integrating the PIR sensors with other sensors, such as pressure sensors in strategic locations, could be a method of acquiring more information to enrich the model and better understand the user’s behavior.

Another limitation that was not addressed in the current work is the impact of the existence of large pets. In its current form, the model would interpret pet activations as the presence of a second person in the residence. However, there are PIR sensors available on the market that are described as “pet immune motion detectors”, which include a sensitivity selector to avoid false activations by pets up to 36 kg. They will be tested in future studies that include pet owners.

The results obtained also highlight inaccuracies in the elders’ perceptions of their own daily routines as disclosed in the pre-installation questionnaire, and the evolving nature of their daily habits. This points to the need for continuous updating of the relevant information through a machine learning algorithm, questionnaire updates and adjustments to the model’s parameters. Such updates, combined with data gathered from several users, would enable the early recognition of possible pathologies associated with behavior changes.

The proposed monitoring system is non-intrusive and does not capture any images, nor does it require any interaction with the monitored person, and it can be adjusted to preserve privacy while still being able to highlight atypical situations. Consequently, this system has a greater chance of being adopted by people living alone who would significantly benefit from and feel safer with the placement of non-intrusive monitoring and who would likely reject other types of invasive or complex technologies.

Code availability

Not Applicable.

Funding

(SENAI-CIMATEC)

Author information

Contributions

Not Applicable.

Ethics declarations

Conflict of interest

Not Applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Soussa, M.R.B., de Senna, V., da Silva, V.L. et al. Modeling elderly behavioral patterns in single-person households. Multimed Tools Appl 80, 22097–22120 (2021). https://doi.org/10.1007/s11042-021-10635-3


DOI: https://doi.org/10.1007/s11042-021-10635-3