Abstract

Quality of Experience (QoE) is inextricably linked to the human side of the multimedia experience. Whilst a considerable amount of research has been undertaken to explore the various dimensions of QoE, one facet which has been relatively unexplored is the role of individual differences in determining an individual’s QoE. This is certainly true of multimedia applications, and even more so of mulsemedia (multiple media engaging three or more human senses), given its emerging and novel nature. Accordingly, in this paper we report the results of a study which investigated the role that individual differences (such as age, gender, education, and smell sensitivity) play in QoE when mulsemedia incorporating olfactory and haptic stimuli is experienced in cross-modal environments. Our results reveal that whilst users had a satisfying overall mulsemedia experience, the specific use of cross-modally matched odours did not result in significantly higher QoE levels than when a control scent (rosemary) was employed. However, aspects of QoE were affected by all of the individual difference dimensions considered in our study.

1 Introduction

The success of any interactive system is ultimately determined by end users’ overall satisfaction with it. To this end, the concept of Quality of Experience (QoE) plays an important role. QoE is “the degree of delight or annoyance of the user expectations with respect to the utility and or enjoyment of the application or service in the light of the user’s personality and current state” [2]. Indeed, to achieve the best possible user experience, understanding users’ personalities and characteristics is of fundamental importance in building successful systems. This underlines the importance of understanding how individual differences (e.g. age, gender, education, culture, personality) influence users’ satisfaction with, and enjoyment of, an application. Whilst previous studies [13, 14, 33] suggest that individual differences play an important role in perceptual multimedia quality, few studies [32, 39, 40] have considered individual differences in mulsemedia (multiple sensorial media) applications. This is precisely the niche that we focus on in this paper, in which we report the results of a study exploring the impact of individual differences on QoE and user perception of mulsemedia content.

Mulsemedia refers to the integration of different media types that engage three or more human senses. This is in contrast to multimedia, which integrates two or sometimes three by now traditional media types, e.g. text, image and video. Multimedia applications typically target just two human senses, usually vision and audition [15]. Mulsemedia applications, by contrast, also contain media that target additional senses such as touch (haptic), smell (olfaction) and taste (gustation).

Given that our interaction with the surrounding environment is multisensorial, it is unsurprising that mulsemedia applications attempt to convey an added degree of realism to traditional digital human-computer interaction [4, 5]. Moreover, the human brain has evolved to learn and operate in natural environments, and accordingly our behavior is based on information integrated across multiple sensory modalities. Multisensory data can thus lead to greater, more effective and more efficient absorption of digitally conveyed information than unisensory data [34].

Accordingly, in this paper we look at multisensory interaction beyond the audio-visual, adding haptic and olfactory stimuli to the user experience and exploring how individual differences mediate this. Our findings can support efforts to take users’ perception and preferences into account when designing future mulsemedia applications. In our experiments, we show users various videos with added layers of auditory, olfactory and vibrotactile content that cross-modally correspond to the videos’ dominant visual features. Based on these videos, we investigate the impact of the auditory and olfactory content on users’ QoE in a mulsemedia setup designed on the principles of cross-modal correspondence. Section 2 discusses related work on mulsemedia and previous work that considers individual differences in the design of multimedia applications. Section 3 describes the research methodology employed. Sections 4 and 5 present the results and conclusions of the research.

2 Related work

With the recent rapid development of technologies underpinning smart and wearable devices, human senses beyond the audio-visual can now be included in digital applications. Engaging other senses such as smell and touch is an increasingly realistic proposition with the potential to enhance a user’s QoE, and these new multisensory technologies are becoming more affordable and accessible. Accordingly, there has been a proliferation of studies exploring user QoE of mulsemedia applications incorporating non-traditional media types such as haptics [20], gustatory [30] and olfactory [12, 28] media, or indeed combinations thereof, such as haptic-olfactory [19].

It is fair to say that QoE has been extensively investigated in, and is considered a very important aspect of, mulsemedia [37], with several potential application areas having been identified. For instance, the use of olfaction in a gaming context has been explored and shown to increase QoE [29]. Research also shows that multisensory environments improve the performance of visual searches and reduce mental workload [17]. In education, multisensory digital learning experiences that involve the sense of smell can lead to enhanced QoE levels for the user. Work described in [4] explored the benefits of mutually supportive multisensory information in educational programmes. Its literature review covers the advantages of multisensory learning environments and reports how multisensory information can be used to correct and improve literacy skills for autistic individuals. Moreover, it suggests that multisensory setups can increase performance and user engagement in an educational game, and the authors propose that multisensory dimensions should be added to applications to enrich users’ QoE and perception levels. The benefit of olfactory media in enriching QoE has also been demonstrated in several other studies [4, 5, 11, 12, 21, 25,26,27, 35,36,37,38]. Taken together, previous studies support the belief that multisensory integration in a digital context can enhance QoE when using interactive systems. Given the importance of user characteristics in QoE, one of the key outstanding issues is to investigate and clarify the influence of individual differences on mulsemedia QoE.

Including individual differences in the design of multimedia and mulsemedia systems opens up the possibility of fine-grained personalisation, namely adapting digital content and its delivery to users based on their individual characteristics. The premise is that tailoring content to each individual’s preferences has the potential to enhance QoE in these applications. In [39], the authors use the term ‘individual QoE’ to capture the fact that QoE is unique to each individual and to each individual’s experience. To achieve their aim of a personalised multimedia environment, they developed an open-source Facebook application for studying individual experience of videos. One of the outcomes of the study was that personal information from the platform can be useful in modelling individual QoE. In line with this work, we believe that designing for personalisation requires identifying the individual differences that influence the perception of QoE in mulsemedia applications. This will help to maintain user satisfaction in a personalised setting.

In the multimedia arena, a number of studies have shown that individual differences such as age, gender, cognitive style and personal interests impact QoE [13, 14, 31, 32, 39, 40]. In particular, research described in [33] looked into the influence of personality and cultural traits on the perception of multimedia quality, reporting that individual differences play an important role in the way users rate perceived quality and enjoyment. However, that study was set in the context of multimedia applications, and relatively little work has focused on individual differences in perceptual mulsemedia quality. In respect of olfactory data, for example, research shows that humans perceive smells differently depending on a number of factors, including age, culture, mood, gender and life experience [11]. To enhance users’ QoE in mulsemedia applications, it is thus important to consider other user characteristics that affect their perception of such settings.

Work described in [26], which explored the impact of individual differences on mulsemedia QoE, is the closest to ours. It investigated how age and gender influence the temporal boundaries within which users perceive olfactory data and video to be synchronized. The work presented here differs in that it focuses on the potential influence of cross-modal correspondences (an area unexplored by [26]) in mulsemedia. In cognitive science, cross-modal correspondences refer to the systematic associations frequently made between features in different sensory modalities; for example, high-pitched sounds are matched with angular shapes. In the non-digital world, the smell of lemon and high-pitched audio can be associated with sharp objects [18]. Very little is known about how senses combine in the digital world and what happens when one stimulus is stronger than the others [6].

The lack of in-depth investigation into how individual differences influence user perception of mulsemedia applications has been one of the main drivers of the research described here. Accordingly, we have investigated the impact of smell sensitivity, age, gender and education on users’ perception of cross-modal mulsemedia content. Our aim in studying individual differences is to determine meaningful user requirements for effective multisensory interactions. To this end, in this paper we present a study with two underlying research questions:

RQ1: What is the influence of cross modally matched olfactory stimuli on user mulsemedia QoE?

RQ2: What is the influence of individual differences (smell sensitivity, age, gender, education) on user mulsemedia QoE?

3 Methodology

3.1 Participants

We recruited 24 participants who were randomly allocated to two groups: an experimental group (9 males and 3 females) and a control group (7 males and 5 females), as detailed in Table 1. Participants were aged between 18 and 41 years and were of various nationalities and educational backgrounds (Table 2). All participants spoke English and self-reported as being computer literate.

3.2 Experimental material

3.2.1 Video clips

In our experiment, the six video clips were chosen for their dominant visual features, including colour, brightness and the angularity of objects, as shown in Table 3. Each video was 120 s long, had a frame rate of 30 frames per second and retained the original soundtrack recorded with it. The resolution of the video clips was 1366 × 768 pixels and the viewing area was 1000 × 700 pixels.

3.2.2 Haptic vest

To facilitate the vibrotactile experience, we chose the KOR-FX gaming vest, which uses 4DFX-based auditory-haptic signals to provide haptic feedback to the left and right sides of the chest [23]. The vest is wirelessly connected to a control box that accepts the standard sound output of a computer’s sound card, enabling the vest to vibrate in sync with the audio input it receives. This type of device provides additional information about environmental factors, enabling users to immerse themselves in the on-screen content.
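The KOR-FX’s 4DFX processing is proprietary and is not described here, so the following is only an illustration of the general idea of audio-driven vibration, not the vest’s actual algorithm. The sketch assumes that each stereo channel is cut into short windows, that the root-mean-square (RMS) level of each window sets the vibration intensity (between 0 and 1) for the corresponding side of the chest, and that quiet passages below a noise gate produce no vibration. The function names, the 441-sample window (10 ms at an assumed 44.1 kHz sample rate) and the gate value are all hypothetical.

```python
import math


def vibration_envelope(samples, window=441, gate=0.02):
    """Map one audio channel (floats in [-1, 1]) to per-window vibration levels.

    Hypothetical scheme: RMS amplitude per window, set to zero below a noise
    gate and clipped to [0, 1]. This is NOT the KOR-FX/4DFX algorithm.
    """
    levels = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        rms = math.sqrt(sum(s * s for s in chunk) / len(chunk))
        # Quiet passages produce no vibration at all.
        levels.append(0.0 if rms < gate else min(rms, 1.0))
    return levels


def stereo_to_vest(left, right, window=441, gate=0.02):
    """Drive the left and right sides of the chest from the matching audio channels."""
    return (vibration_envelope(left, window, gate),
            vibration_envelope(right, window, gate))
```

For example, a left channel that is silent for 441 samples and then holds a constant amplitude of 0.5 for 441 samples yields a left-side envelope of `[0.0, 0.5]`. A real device would also smooth the envelope over time; that step is omitted here for brevity.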

3.2.3 Olfactory device

For our experiment we employed the Exhalia SBi4 olfactory emitting device, which prior research considers more robust and reliable than other existing devices [25].

3.3 Experimental preamble

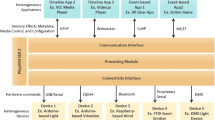

Our experiment focused on the cross-modal correspondences between olfactory, auditory and haptic stimuli, and on their impact on user QoE. The experiment was carried out in a quiet laboratory and lasted approximately 40 min. Before the experiment, each participant was asked to complete a pre-experiment smell sensitivity questionnaire (Table 4). This questionnaire used a 5-point Likert scale and was developed to establish whether participants had any previous history of olfactory dysfunction or disorders, or whether they had a normal sense of smell. The Exhalia SBi4 device was placed 0.5 m in front of the participant, allowing them to detect the smell within 2.7–3.2 s [24]. The procedure and tasks involved in the experiment were explained to all participants. Participants were seated at a table, facing the screen of a 15.6-in. Lenovo laptop running Windows 10. Each participant was then provided with headphones (iShine) and a haptic vest (KOR-FX) to wear, as shown in Fig. 1. Once participants confirmed that the haptic vest was comfortable and were satisfied with the whole setup, they went on to view the video clips. The experiment was approved by the Ethical Committee of Brunel University, and informed consent was obtained from all participants.

Conditions

There were two conditions that differed in the provided scents:

(1) In the experimental group condition (EG), users were exposed to six scents matched to the corresponding dominant visual cues: lilial and bergamot [7, 16], clear lavender and lavender [22], and lemon and raspberry [18] (Table 1). These scents were selected based on olfactory-visual cross-modal principles.

(2) In the control group condition (CG), users were exposed to only one scent (rosemary) in all six videos, chosen because it has been shown to increase alertness during tasks [8].

3.4 Experimental process

The experiment involved six video clips accompanied by olfactory, auditory and vibrotactile content. Videos were viewed in a random order to minimise order effects. Olfactory content was emitted by the four built-in fans of the Exhalia SBi4, which blow air through cartridges containing scented polymer balls. A program using Exhalia’s Java-based SDK controlled emission throughout the duration of the video clips: scents were emitted for 10 s at 30 s intervals (i.e. starting at 0 s, 30 s, 60 s and 90 s). When the SBi4 was not emitting, the scent lingered and remained noticeable for the following 20 s, after which the SBi4’s fans were switched back on to emit for the next 10 s. Alongside the odours, vibrotactile effects were provided throughout the whole duration of the clips, with the vest vibrating according to the associated audio soundtrack.
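The emission timing and randomised viewing order described above can be written down as a small schedule. The sketch below uses the timing stated in the text (10 s bursts starting every 30 s over a 120 s clip) and labels the remaining 20 s of each cycle as a lingering phase; it also shows one way of giving each participant an independently shuffled viewing order. The function names and the per-participant seed are hypothetical, and the sketch does not call Exhalia’s SDK, whose API is not described here.

```python
import random

CLIP_LENGTH_S = 120   # each video clip lasts 120 s
PERIOD_S = 30         # a new emission cycle starts every 30 s
BURST_S = 10          # the SBi4 fans blow for the first 10 s of each cycle


def emission_windows(clip_length=CLIP_LENGTH_S, period=PERIOD_S, burst=BURST_S):
    """Return the (start, end) times in seconds during which the fans are on."""
    return [(t, min(t + burst, clip_length)) for t in range(0, clip_length, period)]


def scent_phase(t, period=PERIOD_S, burst=BURST_S):
    """Classify a time point (s) as 'emitting' or 'lingering' under this schedule."""
    return "emitting" if (t % period) < burst else "lingering"


def viewing_order(video_ids, participant_seed):
    """Independently shuffled viewing order for one participant (hypothetical seeding)."""
    order = list(video_ids)
    random.Random(participant_seed).shuffle(order)
    return order
```

Here `emission_windows()` returns `[(0, 10), (30, 40), (60, 70), (90, 100)]`, matching the four emission start times given above, and `scent_phase(45)` returns `'lingering'`. Seeding a separate random generator per participant keeps each order reproducible without affecting any other randomness in the program.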

After each video clip, participants were asked to complete a subjective questionnaire of 14 questions relating to QoE, designed to capture their views and overall experience of the experiment (Table 5). Each question was answered on a 5-point Likert scale. Positively phrased questions were anchored with “Strongly Disagree” at one end and “Strongly Agree” at the other, whereas negatively phrased questions were anchored the opposite way round, with “Strongly Agree” at one end and “Strongly Disagree” at the other. The questions were developed based on the System Usability Scale (SUS), which is widely used by researchers and across a variety of industries [1].

4 Results

The study reported in this paper had two underlying research questions, and we structure our analysis and discussion to mirror these. We used IBM SPSS software to run our statistical analyses, adopting a significance level of 0.05 throughout.

4.1 RQ1: What is the influence of cross modally matched olfactory stimuli on user mulsemedia QoE?

To investigate the impact of cross-modally matched olfactory stimuli on user mulsemedia QoE, we undertook independent-samples t-tests comparing the responses of the control and experimental groups to the QoE questions in Table 5. The mean opinion scores of the EG and CG for each of the smell-related questions and for the question on the overall multisensorial experience are shown side by side in the bar charts of Figs. 2 and 3. Our analysis revealed no statistically significant differences between the two groups’ responses, which suggests that, in our study, cross-modally matched scents had little measurable influence on QoE compared with the control scent. Nonetheless, the following points are worth noting:

In terms of smell relevance, although the mean score of the cross modally-mapped smells is slightly higher than when rosemary was used, participants in the CG also perceived the smell to be relevant to the video.

Both EG and CG participants were neutral on the issue of the strength of the perceived smell.

Although their ratings were slightly above the neutral point, neither EG nor CG participants considered the scents (cross-modally mapped or not) to be distracting.

Similarly, both EG and CG users were neutral as to whether the smells they experienced were consistent with the content of the video clips they viewed.

In terms of smell annoyance, although the mean ratings of both the EG and CG lie roughly halfway between neutral and agreement, participants felt slightly less annoyed when cross-modally mapped smells were used.

In terms of lingering effect, both EG and CG participants were neutral, suggesting that participants in neither group perceived the smells as lingering unduly after the video clips ended.

Lastly, both EG and CG participants agreed that they enjoyed the overall multisensorial experience. However, they were neutral about whether the smells employed enhanced their viewing experience.
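The group comparison used for RQ1 can be sketched as follows. The code runs a Student’s independent-samples t-test with pooled variance (the “equal variances assumed” form; SPSS also reports Welch’s variant, which is not reproduced here). To stay dependency-free, it derives the two-sided p-value from the regularized incomplete beta function, evaluated with the standard continued-fraction method. The function names are hypothetical, and this is an illustrative re-implementation, not the SPSS procedure the authors used.

```python
import math
from statistics import mean, variance


def _betacf(a, b, x, max_iter=300, eps=1e-12):
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Each iteration applies the even and then the odd continued-fraction term.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where the fraction converges faster.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """Two-sided p-value of a Student t statistic with df degrees of freedom."""
    return betainc(df / 2.0, 0.5, df / (df + t * t))


def independent_t_test(group_a, group_b):
    """Student's independent-samples t-test with pooled variance.

    Returns (t, degrees of freedom, two-sided p-value).
    """
    n1, n2 = len(group_a), len(group_b)
    df = n1 + n2 - 2
    pooled = ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b)) / df
    t = (mean(group_a) - mean(group_b)) / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, df, t_two_sided_p(t, df)
```

Calling `independent_t_test(eg_ratings, cg_ratings)` on the EG and CG ratings for one question of Table 5 returns t, the degrees of freedom and the p-value to compare against the 0.05 significance level, while `statistics.mean` of each list gives the group’s mean opinion score. For instance, `independent_t_test([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])` gives t = −1 with 8 degrees of freedom and p ≈ 0.347.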

4.2 RQ2: What is the influence of individual differences on user mulsemedia QoE?

To analyse the influence of individual differences on user mulsemedia QoE, we undertook two types of statistical test. First, to understand whether age, gender and education influence users’ satisfaction with and enjoyment of mulsemedia applications, we analysed the impact of each on self-reported QoE (Table 5) using an Analysis of Variance (ANOVA), with age, gender and education as independent variables and the user QoE responses as dependent variables. The results of this analysis are shown in Table 6. Second, we undertook a Pearson correlation test to investigate links between participants’ smell sensitivity (Table 4) and their self-reported QoE (Table 5).
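As a simplified illustration of the ANOVA step, the sketch below computes a one-way ANOVA F statistic and its p-value for a single factor (for example, the two age groups) on one question’s ratings. This is a deliberate simplification: the analysis reported here used age, gender and education together as independent variables, and SPSS’s multi-factor ANOVA for unbalanced groups (Type III sums of squares by default) is not reproduced. The p-value is obtained from the regularized incomplete beta function via a standard continued-fraction evaluation; the function names are hypothetical.

```python
import math
from statistics import mean


def _betacf(a, b, x, max_iter=300, eps=1e-12):
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def one_way_anova(*groups):
    """One-way ANOVA across two or more groups of ratings.

    Returns (F, (df_between, df_within), p-value).
    """
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(scores)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    # Upper-tail probability P(F > f) for an F(df_between, df_within) distribution.
    p = betainc(df_within / 2.0, df_between / 2.0,
                df_within / (df_within + df_between * f))
    return f, (df_between, df_within), p
```

With exactly two groups (as with the two age groups), F equals the square of the pooled independent-samples t statistic, so the one-way test and a t-test give the same p-value. Testing gender or education in isolation this way ignores the other factors, which is why the multi-factor model in SPSS is the appropriate tool for the reported results.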

4.3 Impact of age on cross-modal mulsemedia QoE

As detailed in Table 2, we considered two age groups in our study, 18–21 years old and over 21 years old, so as to have roughly the same number of participants in each group (13 in the first, 11 in the second). The ANOVA revealed a significant main effect of age for Q6, Q7, Q10, Q11 and Q14 (Table 5), and no significant differences in the mean values of the other responses.

The main effect of age group is significant for Q6 (p = .006) and Q7 (p = .003). Specifically, the 18–21 years old group tended to agree (mean = 3.32) that the smell faded away slowly after watching the video clip, whereas the older group (over 21 years old) tended to disagree (mean = 2.61). Similarly, for Q7 the younger group (mean = 3.30) was more positive than their older counterparts (mean = 2.60) that the smells employed enhanced their viewing experience.

With regard to the questions about the vibration of the haptic vest (Q10 and Q11), 18–21 years old users were more distracted (mean = 3.42) and more annoyed (mean = 3.55) by the vibration than users in the older group (mean = 2.88 and 3.00, respectively), and these differences are statistically significant (p = .001 in both cases). These results suggest that younger users are more positive towards olfactory-enhanced viewing experiences, whilst older users are more accepting of the effects generated by the haptic vest. Overall, the 18–21 years old group enjoyed the multisensorial experience (Q14, mean = 3.17) more than the older group (mean = 2.84), with the difference again being statistically significant (p = .020).

4.4 Impact of gender on cross-modal mulsemedia QoE

Participants’ gender significantly influenced the responses to Q3, Q4, Q5, Q10, Q11, Q13 and Q14. For Q3, the ANOVA revealed a highly significant main effect of gender (p < .001): females rated the smell as more distracting (mean = 3.84) than males did (mean = 3.17). The literature [9] also suggests that women are more sensitive than men to odorants, which might explain why females considered the olfactory effects to be more distracting.

There is again a significant effect of gender (p = .006) for Q4, with females (mean = 3.13) feeling more than males (mean = 2.64) that the smell was consistent with each video clip. The same applies to Q5 (the smell was annoying), where females (mean = 3.82) were more annoyed than males (mean = 3.47), the difference between the two groups being significant (p = .035). These results are in line with previous studies. For instance, in an experiment conducted by [3], women outperformed men in the identification of four olfactory stimuli by 92.5%. The same trend was revealed in another study [10], where women outperformed men on 45 out of the 50 stimuli (90%).

Moreover, female participants were significantly more likely (p = .008) than males to feel that the haptic vest enhanced their viewing experience (Q13; mean = 3.40 vs. 2.80), and overall, females enjoyed the multisensorial experience more than males (Q14; mean = 3.22 vs. 2.70), this difference also being statistically significant (p = .006). This suggests that whilst female users seem to be more sensitive to the effects introduced by scents in mulsemedia, they appreciate haptic vest effects and enjoy cross-modal mulsemedia experiences more than males do.

4.5 Impact of education level on cross-modal mulsemedia QoE

Education significantly influenced the mean responses to Q8 and Q9, both of which concern the haptic vest. For Q8 (I enjoyed watching the video clip whilst wearing a Haptic Vest), the effect of education is significant (p = .029): participants with postgraduate education enjoyed watching the videos wearing the haptic vest (mean = 3.38) more than the undergraduate group (mean = 2.95). Interestingly, there is also a significant effect of education (p = .002) on responses to Q9, where the postgraduate group again felt that the haptic effects were more relevant to the video clip they were watching (mean = 3.63) than the undergraduate group did (mean = 2.84).

4.6 Interaction effect between gender and education

We also examined whether age, gender and education interacted in their effects on the responses, testing for interaction effects up to and including the three-way interaction and following up significant interactions with simple effects tests in SPSS. An interaction effect occurs when the effect of one independent variable (age, gender or education) on a dependent variable (the responses in Table 5) depends on the level of another independent variable.

For Q2 (The smell came across strong), neither gender nor education had a statistically significant main effect, but their interaction had a statistically significant effect on the mean response (p = .014). We therefore conducted a simple effects test to understand how the effect of gender varies across education levels. The results (Table 7) show that the effect of gender on the mean response differed significantly across education levels, with the difference driven by participants holding a postgraduate qualification.

4.7 Relationship between smell sensitivity and self-reported QoE

In this section, we present the analysis and discussion of the results obtained from our correlation analysis between user responses to the smell sensitivity questionnaire and the on-screen QoE questionnaire. To this end, a Pearson correlation test revealed the following:

- For the EG, Q1 was positively correlated with Q4SS (r = .277; p = .019). Thus, participants who reported difficulty breathing when they encounter smoke nevertheless found the cross-modally matched odors relevant to the video clips they were viewing. For the CG, there was a statistically significant negative correlation between Q1 and Q5SS (r = −.248; p = .036). This indicates that the less able participants were to stay in smoky rooms for long periods, the more relevant they found the single rosemary scent used in the CG videos.

- In the EG, the correlation between Q2 and Q11SS was negative and statistically significant (r = −.359; p = .002): participants who find the smell of exhaust gases very unpleasant tended, in contrast, to find the odors used in the experiment not strong. For the CG, the correlations were not statistically significant, so no comparable relationship can be inferred for the rosemary scent.

- In the EG, Q3 was negatively correlated with Q10SS (r = −.351; p = .003). This reveals that participants who self-reported as not being able to tolerate certain perfumes tended not to find the odors distracting. In the CG, Q3 was also negatively correlated with Q2SS (r = −.256; p = .033) and with Q5SS (r = −.396; p = .001). Thus, participants who stated that they have difficulty breathing with smells associated with sprays and paint, as well as those not able to stay in smoky rooms for a long time, did not perceive the odors as distracting.

- For both groups (EG and CG), the p-values for the correlations between Q4 and the smell sensitivity questions are greater than the significance level of 0.05, so none of these correlation coefficients is significant. This suggests that whether participants found the smells consistent with the video clips was unrelated to their self-reported smell sensitivity.

- For the EG, the correlation between Q5 and Q7SS was positive (r = .239; p = .043): participants who self-reported feeling dizzy when they come across strong smells of paint and smoke equally found the odors annoying. Q5 and Q10SS, by contrast, were negatively correlated (r = −.285; p = .015); thus, participants who cannot tolerate certain perfumes nonetheless found the odors less annoying. In the CG, Q5 was negatively correlated with Q1SS (r = −.241; p = .044), Q2SS (r = −.248; p = .039) and Q5SS (r = −.326; p = .006). Thus, CG participants who reported difficulty breathing when smelling paint and sprays, as well as those not able to stay in smoky rooms for long, nonetheless perceived the odors as less annoying.

- In the EG, Q6 was positively correlated with Q2SS (r = .274; p = .021) and Q5SS (r = .270; p = .023). Participants who develop difficulty breathing with concentrated smells such as sprays and drying paint, or who cannot stay in smoky rooms for a long time, were thus more likely to feel that the cross-modally mapped smells faded away slowly, that is, that they lingered. The correlations in the CG were not significant, possibly because the lingering effect of the smell was less noticeable when only one smell was used throughout all the videos.

- In the EG, Q7 was positively correlated with Q5SS (r = .442; p < .001) and Q7SS (r = .410; p < .001). Although these participants cannot stay in smoky rooms for a long period or feel dizzy with smells such as smoke and paint, the cross-modally mapped odors used in our experiment enhanced their viewing experience, possibly because the odors were not highly concentrated. In the CG, Q7 was negatively correlated with Q1SS (r = −.302; p = .012) and Q11SS (r = −.257; p = .034): participants who reported difficulty breathing when they smell paint, or who find the smell of exhaust gases unpleasant, correspondingly found that the odors did not enhance their viewing experience.

- In the EG, Q8 was negatively correlated with Q6SS (r = −.347; p = .003), Q8SS (r = −.351; p = .003) and Q9SS (r = −.354; p = .003). Thus, EG participants who self-reported feeling nauseous at the smell of paint, being sensitive to the smell of petrol, or having difficulty breathing around detergents enjoyed watching the videos with the haptic vest’s effects less. Q8 was, however, positively correlated with Q10SS (r = .366; p = .002): participants who cannot tolerate certain perfumes enjoyed wearing the haptic vest more when scents were cross-modally mapped. In the CG, Q1SS (r = −.246; p = .041), Q3SS (r = −.251; p = .037), Q10SS (r = −.265; p = .028) and Q11SS (r = −.290; p = .016) all correlated negatively with Q8. Accordingly, CG participants who reported difficulty breathing when entering freshly painted rooms, coughing at small quantities of smoke, being unable to endure certain perfumes, or finding the smell of exhaust gases unpleasant enjoyed watching the video clips with the haptic vest less.

- In the EG, Q9 was positively correlated with Q7SS (r = .262; p = .026), suggesting that participants who feel dizzy when sensing smells such as paint and smoke nevertheless found the haptic effect relevant to the video clips, at least when cross-modally mapped scents were employed in the mulsemedia environment. Q9 was, however, negatively correlated with Q8SS (r = −.462; p < .001) and Q9SS (r = −.297; p = .011): participants who are sensitive to the smell of petrol, or who have difficulty breathing around detergents, tended not to find the haptic effects relevant to the video clips they viewed. In the CG, only Q8SS was significantly (and negatively) correlated with Q9 (r = −.311; p = .008), meaning that participants who are sensitive to the smell of petrol perceived the haptic vest effects as less relevant to the video clips.

- As far as Q10 is concerned, in the EG Q6SS (r = −.292; p = .014), Q8SS (r = −.354; p = .003) and Q11SS (r = −.432; p < .001) correlated negatively, whereas Q7SS (r = .237; p = .048) correlated positively. The negative correlations show that participants who feel nauseous at strong smells of paint, are sensitive to the smell of petrol, or find exhaust gases unpleasant found the haptic vest less distracting. Conversely, the positive correlation with Q7SS indicates that participants for whom smells of paint and smoke cause dizziness found the haptic vibrations more distracting. In the CG, Q1SS (r = −.390; p = .001), Q2SS (r = −.340; p = .005), Q3SS (r = −.369; p = .002) and Q4SS (r = −.241; p = .047) were all significantly and negatively correlated with Q10. This suggests that participants who have difficulty breathing when coming across smells such as paint, sprays and smoke, or who cough at small quantities of smoke, nevertheless found the haptic vest vibrations less distracting.


For Q11, there were negative correlations with Q6SS (r = −.400; p = .001), Q8SS (r = −.349; p = .003), Q9SS (r = −.251; p = .035) and Q11SS (r = −.464; p < .001) in the EG, whereas Q10SS correlated positively (r = .249; p = .036). The negative correlations indicate that participants who self-report nausea when smelling paint, who are sensitive to the smell of petrol, who have difficulty breathing around detergents, and who find the smell of exhaust gases unpleasant found the haptic vest vibrations less annoying when cross-modally mapped scents were used. In contrast, the positive correlation implies that participants who cannot tolerate certain perfumes found the vibrations of the haptic vest more annoying. For the CG, Q1SS (r = −.297; p = .013) and Q3SS (r = −.292; p = .014) correlated significantly and negatively with Q11. Thus, when the rosemary scent was used, users whose breathing is affected by the smell of paint and who cough at small quantities of smoke tended to find the haptic vest vibrations less annoying.


In the EG, there were three significant positive correlations with Q12: Q1SS (r = .245; p = .039), Q7SS (r = .266; p = .025) and Q10SS (r = .425; p = .003). Participants who have breathing difficulties or feel dizzy when encountering smells of paint and smoke felt that the haptic vest effects enhanced the sense of reality, provided that cross-modally matched smells were used; the same applies to participants who cannot tolerate certain perfumes. However, the negative correlations with Q6SS (r = −.293; p = .013) and Q8SS (r = −.508; p < .001) suggest that those who feel nauseous at the smell of paint and are sensitive to the smell of petrol found that the haptic vest effects did not enhance the sense of reality. For CG participants, no statistically significant correlations were found between responses to the smell sensitivity questionnaire and Q12.


For Q13, significant positive correlations were found with Q1SS (r = .241; p = .044) and Q10SS (r = .425; p < .001) in the EG. Accordingly, participants who have difficulty breathing when entering freshly painted rooms and who cannot tolerate certain perfumes nevertheless found that the haptic vest effects enhanced their viewing experience. Q13 also correlated significantly and negatively with Q6SS (r = −.373; p = .001) and Q8SS (r = −.508; p < .001). Hence, participants who report that the strong smell of paint makes them feel nauseous and who are also sensitive to the smell of petrol found that the haptic effects did not enhance their viewing experience when cross-modally mapped scents were used. In the CG, Q10SS (r = −.309; p = .013) and Q11SS (r = −.366; p = .003) correlated significantly and negatively with Q13: participants who cannot tolerate certain perfumes and find the smell of exhaust gases unpleasant felt that the haptic vest effects did not enhance their viewing experience.


For Q14, there were negative correlations with Q6SS (r = −.298; p = .012), Q8SS (r = −.366; p = .002) and Q9SS (r = −.367; p = .002) in the EG, whilst one variable, Q7SS (r = .310; p = .008), correlated positively. The negative correlations indicate that participants who feel nauseous at strong paint smells, are sensitive to the smell of petrol, or have difficulty breathing around detergents did not enjoy the multisensorial experience. On the other hand, the positive correlation shows that those who feel dizzy with strong smells of paint and smoke did enjoy the multisensorial experience when the cross-modally mapped scents (lilial, bergamot, clear lavender, lavender, lemon and raspberry) were used. Furthermore, when only rosemary was employed throughout, there were positive correlations in the CG between Q14 and Q6SS (r = .287; p = .016), Q7SS (r = .332; p = .005) and Q9SS (r = .435; p < .001). Accordingly, users for whom strong smells of paint and smoke cause nausea and dizziness, and whose breathing is affected by the smell of detergents, nevertheless enjoyed the multisensorial experience.
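Each coefficient reported in the correlation results is a Pearson r with a p-value. As a minimal sketch, assuming the standard two-tailed test of H0: ρ = 0, in which t = r·√((n − 2)/(1 − r²)) is referred to Student's t distribution with n − 2 degrees of freedom, the code below computes r, t and p from raw paired responses (for instance one smell-sensitivity item and one QoE item per participant). All function names and the sample responses are hypothetical and illustrative; this is not the authors' analysis code, and the group sizes behind the reported values are not restated here. Only the Python standard library is used, with the incomplete beta function evaluated by a continued fraction.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length (n >= 3)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the regularized incomplete beta (Lentz's method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even-numbered coefficient of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd-numbered coefficient of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_bt = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
              + a * math.log(x) + b * math.log1p(-x))
    bt = math.exp(log_bt)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where it converges faster.
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def t_two_tailed_p(t, df):
    """Two-tailed p-value of Student's t: P(|T| >= |t|) = I_{df/(df+t^2)}(df/2, 1/2)."""
    return reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def pearson_test(x, y):
    """Return (r, t, two-tailed p) for H0: rho = 0, with df = n - 2."""
    r = pearson_r(x, y)
    df = len(x) - 2
    if abs(r) >= 1.0:  # perfect correlation: t is infinite and p is 0
        return r, math.copysign(math.inf, r), 0.0
    t = r * math.sqrt(df / (1.0 - r * r))
    return r, t, t_two_tailed_p(t, df)


# Hypothetical 5-point Likert responses for eight participants
# (illustrative only; not data from the study).
sensitivity = [5, 4, 4, 2, 1, 3, 5, 2]
enjoyment = [2, 3, 2, 4, 5, 3, 1, 4]
r, t, p = pearson_test(sensitivity, enjoyment)
print(f"r = {r:.3f}, t({len(sensitivity) - 2}) = {t:.3f}, p = {p:.3f}")
```

In practice, `scipy.stats.pearsonr(x, y)` returns the same r and two-sided p; the hand-rolled version is shown only to make explicit how the sign of r and its p-value arise from the paired responses.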

The Pearson correlation test revealed that more CG than EG participants agreed with Q1SS. In the CG, participants who agreed with Q1SS considered the odours used in our experiment annoying and felt that they did not enhance their viewing experience; nor did the haptic vest effects improve their enjoyment. In the EG, by contrast, participants who agreed with Q1SS reported that the haptic vest effects made a positive difference to their overall viewing experience with cross-modally mapped scents. This suggests that the six different scents, together with the haptic vest, had a greater impact on the user experience than the single scent. In the CG, participants who agreed with Q2SS perceived the odours as reasonably pleasant and were satisfied with the haptic vest vibrations. However, in the EG, participants who agreed with Q2SS disagreed that the lingering smell of the odours faded away. This could be because different cross-modally mapped scents were used in the EG, whereas only one was used in the CG. Most CG participants who agreed with Q3SS were not pleased with the haptic vest, which detracted from their experience.

For Q4SS, EG participants were able to relate the cross-modally matched odours to the videos, whereas CG participants did not find the vibrations distracting. For Q5SS, CG participants did not find the haptic vest bothersome. In the EG, although participants who agreed with Q5SS acknowledged that the lingering smells did not fade away, they felt that the cross-modally mapped scents enhanced their viewing experience. EG participants who agreed with Q6SS did not find the haptic vest effects inconvenient, but gave negative feedback on the multisensorial experience as a whole. Many EG participants who agreed with Q7SS found the cross-modally mapped odours annoying, yet felt that they enhanced their viewing experience to a certain extent. The haptic vest was also well received by these participants, even though they did not enjoy the multisensorial experience, whereas the corresponding CG participants did enjoy it. For Q8SS, EG participants were discouraged by the use of the haptic vest when viewing the content, and it did not improve their experience.

EG participants who agreed with Q9SS were not fond of the haptic vest effects and did not see their relevance to the content; the haptic vest detracted from their multisensorial experience. By contrast, CG participants who agreed with Q9SS enjoyed the multisensorial experience. In the EG, participants who agreed with Q10SS enjoyed wearing the haptic vest when scents were cross-modally mapped. Conversely, CG participants who agreed with Q10SS were less keen on watching the video clips whilst wearing the haptic vest, so their QoE was not enriched. Lastly, EG participants who agreed with Q11SS found the odours delicate rather than strong and were delighted with the haptic vest effects. On the other hand, CG participants who agreed with Q11SS did not enjoy watching the videos with the haptic vest and felt that the odours did not match the content presented.

5 Conclusions

This paper reports the results of a study which sought to answer two research questions:

RQ1. What is the influence of individual differences (smell sensitivity, age, gender, education) on user mulsemedia QoE?

RQ2. What is the influence of cross-modally matched olfactory stimuli on user mulsemedia QoE?

Regarding the influence of individual differences (age, gender and education) on user mulsemedia QoE, our results indicate that, overall, participants aged 18–21 enjoyed the multisensorial experience more than those aged over 21. In terms of the olfactory experience, the younger group gave more positive feedback than the older group and were more positive towards the use of olfactory-enhanced viewing experiences. However, the older group preferred experiences in which effects generated by the haptic vest were in place.

In terms of gender, and in line with previous studies in the literature, our results also indicate that females are more sensitive to smell than males. Females were also more satisfied with the haptic experience than males. Female users were therefore more sensitive to the effects introduced by scents in mulsemedia, and they appreciated the haptic vest effects. Moreover, the results showed that they enjoyed cross-modal mulsemedia experiences more than males.

Participants' education also had an effect on their mulsemedia QoE. With regard to the haptic vest effects, participants with postgraduate education enjoyed watching the videos while wearing the haptic vest more than the undergraduate group did, and felt that the haptic effects were more relevant to the video clips they were watching.

Finally, our analysis of the three-way interaction between age, gender and education showed that the interaction of gender and education had a statistically significant effect on the mean response to Q2. That is, the gender difference in mean Q2 responses varied significantly across education levels, with participants holding a postgraduate qualification contributing to this difference.
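To make the notion of a gender × education interaction concrete, the sketch below computes the interaction contrast of a 2 × 2 table of cell means: the gender gap among postgraduates minus the gender gap among undergraduates. A value of zero means the gap is the same at both education levels (no interaction). This is an illustration under stated assumptions only: the labels "F", "M", "UG" and "PG", the function names and the responses are hypothetical, age is ignored, and the significance test used in the study (part of the three-way analysis) is not reproduced here.

```python
def cell_means(responses):
    """Average the raw scores in each (gender, education) cell.

    responses: iterable of (gender, education, score) tuples.
    """
    sums, counts = {}, {}
    for gender, education, score in responses:
        key = (gender, education)
        sums[key] = sums.get(key, 0.0) + score
        counts[key] = counts.get(key, 0) + 1
    return {key: sums[key] / counts[key] for key in sums}


def interaction_contrast(means):
    """(F_PG - M_PG) - (F_UG - M_UG) for a 2 x 2 gender x education design."""
    gap_pg = means[("F", "PG")] - means[("M", "PG")]
    gap_ug = means[("F", "UG")] - means[("M", "UG")]
    return gap_pg - gap_ug


# Hypothetical Q2 responses (illustrative only; not data from the study).
responses = [
    ("F", "UG", 4), ("F", "UG", 4), ("M", "UG", 4), ("M", "UG", 4),
    ("F", "PG", 5), ("F", "PG", 5), ("M", "PG", 3), ("M", "PG", 3),
]
print(interaction_contrast(cell_means(responses)))  # 2.0
```

Here the genders answer identically at undergraduate level but differ by two points at postgraduate level, so the whole interaction is carried by the postgraduate cells, which is the pattern the text describes.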

In conclusion, numerous correlations were found between the responses to the two questionnaires (smell sensitivity and QoE). It appears that most users had a satisfying overall experience and that the cross-modally matched odours, as well as the haptic vest, were perceived positively. This is emphasised in the CG, where participants who agreed with Q5SS, Q6SS, Q7SS and Q9SS found the single rosemary scent relevant to the videos (Q1) and generally enjoyed the multisensorial experience (Q14); this could be because only one scent was used throughout the videos. Moreover, in both groups, participants who agreed with Q2SS, Q5SS and Q10SS did not find the odours distracting (Q3). This could be because the odours were less intense, and hence more bearable, than highly concentrated smells. However, EG participants who agreed with Q7SS found the odours rather annoying (Q5). This suggests that any type of scent may have a negative effect on these participants; as Q7 shows, they did not enjoy the multisensorial experience. Nonetheless, more CG than EG participants found the experiment satisfying. This could be because different odours were used in the EG, some of which were preferred over others.

Our exploratory work has highlighted the importance of considering individual differences in mulsemedia environments. The set of human factors examined here is not exhaustive, however, and the influence of other individual differences, such as personality, learning style and culture, on cross-modally matched mulsemedia is of particular interest for future work.

References

[1] Bangor A, Kortum P, Miller J (2009) Determining what individual SUS scores mean: adding an adjective rating scale. J Usability Stud, pp 114–123

[2] Brunnström K, Beker SA, De Moor K, Dooms A, Egger S (2013) Qualinet white paper on definitions of quality of experience, version 1.2. March 2013. http://www.qualinet.eu

[3] Cain WS (1982) Odor identification by males and females: predictions vs performance. Chem Senses 7(2):129–142

[4] Covaci A, Ghinea G, Lin CH, Huang SH, Shih JL (2018) Multisensory games-based learning: lessons learnt from olfactory enhancement of a digital board game. Multimed Tools Appl 77(16):21245–21263

[5] Covaci A, Mesfin G, Hussain N, Kani-Zabihi E, Andres F, Ghinea G (2018) A study on the quality of experience of crossmodal mulsemedia. In: Proceedings of the 10th International Conference on Management of Digital EcoSystems. ACM, pp 176–182

[6] Covaci A, Zou L, Tal I, Muntean GM, Ghinea G (2018) Is multimedia multisensorial? A review of mulsemedia systems. ACM Computing Surveys (CSUR) 51(5):91

[7] Dematte ML, Sanabria D, Spence C (2006) Crossmodal associations between odors and colors. Chem Senses 31(6):531–538

[8] Diego MA, Jones NA, Field T, Hernandez-Reif M, Schanberg S, Kuhn C, Galamaga M, McAdam V, Galamaga R (1998) Aromatherapy positively affects mood, EEG patterns of alertness and math computations. Int J Neurosci 96(3–4):217–224

[9] Doty RL, Cameron EL (2009) Sex differences and reproductive hormone influences on human odor perception. Physiol Behav 97(2):213–228

[10] Doty RL, Shaman P, Dann M (1984) Development of the University of Pennsylvania Smell Identification Test: a standardized microencapsulated test of olfactory function. Physiol Behav 32(3):489–502

[11] Ghinea G, Ademoye OA (2011) Olfaction-enhanced multimedia: perspectives and challenges. Multimed Tools Appl 55(3):601–626

[12] Ghinea G, Ademoye O (2012) The sweet smell of success: enhancing multimedia applications with olfaction. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 8(1):2

[13] Ghinea G, Chen SY (2006) Perceived quality of multimedia educational content: a cognitive style approach. Multimedia Systems 11(3):271–279

[14] Ghinea G, Chen SY (2008) Measuring quality of perception in distributed multimedia: verbalizers vs. imagers. Computers in Human Behavior 24(4):1317–1329

[15] Ghinea G, Timmerer C, Lin W, Gulliver SR (2014) Mulsemedia: state of the art, perspectives, and challenges. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 11(1):17

[16] Gilbert AN, Martin R, Kemp SE (1996) Cross-modal correspondence between vision and olfaction: the color of smells. Am J Psychol, pp 335–351

[17] Hancock PA, Mercado JE, Merlo J, Van Erp JB (2013) Improving target detection in visual search through the augmenting multi-sensory cues. Ergonomics 56(5):72

[18] Hanson-Vaux G, Crisinel A-S, Spence C (2012) Smelling shapes: crossmodal correspondences between odors and shapes. Chem Senses 38(2):161–166

[19] Hoshino S, Ishibashi Y, Fukushima N, Sugawara S (2011) QoE assessment in olfactory and haptic media transmission: influence of inter-stream synchronization error. In: 2011 IEEE International Workshop Technical Committee on Communications Quality and Reliability (CQR). IEEE, pp 1–6

[20] Iwata K, Ishibashi Y, Fukushima N, Sugawara S (2010) QoE assessment in haptic media, sound, and video transmission: effect of playout buffering control. Computers in Entertainment (CIE) 8(2):12

[21] Jalal L, Murroni M (2017) Enhancing TV broadcasting services: a survey on mulsemedia quality of experience. In: 2017 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB). IEEE, pp 1–7

[22] Kemp SE, Gilbert AN (1997) Odor intensity and color lightness are correlated sensory dimensions. Am J Psychol 110(1):35

[23] KOR-FX (2014) KOR-FX gaming vest. http://www.korfx.com/

[24] Murray N, Ademoye OA, Ghinea G, Muntean GM (2017) A tutorial for olfaction-based multisensorial media application design and evaluation. ACM Computing Surveys (CSUR) 50(5):67

[25] Murray N, Lee B, Qiao Y, Muntean GM (2014) Multiple-scent enhanced multimedia synchronization. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 11(1):12

[26] Murray N, Qiao Y, Lee B, Karunakar AK, Muntean GM (2013) Subjective evaluation of olfactory and visual media synchronization. In: Proceedings of the 4th ACM Multimedia Systems Conference. ACM, pp 162–171

[27] Murray N, Qiao Y, Lee B, Muntean GM (2014) User-profile-based perceived olfactory and visual media synchronization. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 10(1):11

[28] Murray N, Qiao Y, Lee B, Muntean GM, Karunakar AK (2013) Age and gender influence on perceived olfactory & visual media synchronization. In: 2013 IEEE International Conference on Multimedia and Expo (ICME). IEEE

[29] Nakamoto T, Otaguro S, Kinoshita M, Nagahama M, Ohinishi K, Ishida T (2008) Cooking up an interactive olfactory game display. IEEE Comput Graph Appl 28(1):75–78

[30] Narumi T, Nishizaka S, Kajinami T, Tanikawa T, Hirose M (2011) Augmented reality flavors: gustatory display based on edible marker and cross-modal interaction. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, pp 93–102

[31] Rao D, Yarowsky D, Shreevats A, Gupta M (2010) Classifying latent user attributes in twitter. In: Proceedings of the 2nd International Workshop on Search and Mining User-Generated Contents, pp 37–44

[32] Scott MJ, Guntuku SC, Huan Y, Lin W, Ghinea G (2015) Modelling human factors in perceptual multimedia quality: on the role of personality and culture. In: Proceedings of the 23rd ACM International Conference on Multimedia. ACM, p 481

[33] Scott MJ, Guntuku SC, Lin W, Ghinea G (2016) Do personality and culture influence perceived video quality and enjoyment? IEEE Transactions on Multimedia 18(9):1796–1807

[34] Shams L, Seitz AR (2008) Benefits of multisensory learning. Trends Cogn Sci 12(11):411–417

[35] Tortell R, Luigi DP, Dozois A, Bouchard S, Morie JF, Ilan D (2007) The effects of scent and game play experience on memory of a virtual environment. Virtual Reality 11(1):61–68

[36] Yuan Z, Bi T, Muntean GM, Ghinea G (2015) Perceived synchronization of mulsemedia services. IEEE Transactions on Multimedia 17(7):957–966

[37] Yuan Z, Chen S, Ghinea G, Muntean GM (2014) User quality of experience of mulsemedia applications. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 11(1):15

[38] Zhang L, Sun S, Xing B, Fu J, Yu S (2016) Exploring olfaction for enhancing multisensory and emotional game experience. In: International Conference on Technologies for E-Learning and Digital Entertainment. Springer, Cham, pp 111–121

[39] Zhu Y, Guntuku SC, Lin W, Ghinea G, Redi JA (2018) Measuring individual video QoE: a survey, and proposal for future directions using social media. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 14(2):30

[40] Zhu Y, Heynderickx I, Redi JA (2015) Understanding the role of social context and user factors in video quality of experience. Comput Hum Behav 49:412–426

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kani-Zabihi, E., Hussain, N., Mesfin, G. et al. On the influence of individual differences in cross-modal Mulsemedia QoE. Multimed Tools Appl 80, 2377–2394 (2021). https://doi.org/10.1007/s11042-020-09757-x

DOI: https://doi.org/10.1007/s11042-020-09757-x