Abstract

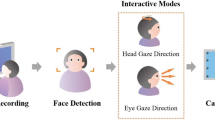

Many types of eye-tracking systems exist and have been applied widely; however, they are poorly suited to industrial environments such as aircraft cockpits and train cabs. Wearable eye-tracking glasses have a wide field of view, but they provide only a relative point of view in a captured video image; thus, they cannot function as a real-time interface to digital monitors. Desktop eye-tracking applications can interact with a single screen in real time using pre-attached fixed cameras or infrared sensors, but they allow only a narrow field of view. In this study, we developed a novel eye-tracking solution that supports both real-time interaction and a wide field of view. Eye-tracking glasses gather eye movement data while a motion capture device provides the position and orientation of the head. A spatial depth-resolving algorithm is proposed to estimate the distance from the eyes to the digital screen, making it possible to locate the screens. The proposed method is a generalized solution for estimating gaze points on wide screens and does not depend on specific devices. We tested the method in a virtual environment built with Unity3D and reached three conclusions: in theory, the algorithm has good accuracy and stability; it cannot be simplified; and it should offer acceptable usability when applied in a real environment. Subsequent experiments in a real environment validated the theory. As a further application, this type of wearable equipment can function as a real-time machine input interface that enhances the machine’s perception of the user’s situational awareness and improves the machine’s dynamic service capabilities.
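The geometric idea behind combining head pose with eye data can be illustrated with a short sketch. This is not the spatial depth-resolving algorithm proposed in the paper, which the abstract does not detail; it is a generic ray-plane intersection under stated assumptions: the screen is a flat plane whose pose is already known, the head orientation is available as a 3x3 rotation matrix, and the gaze direction is given in the head frame. All function and parameter names here are hypothetical.

```python
import math

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def mat_vec(m, v):
    """Multiply a 3x3 matrix (sequence of rows) by a 3-vector."""
    return tuple(dot(row, v) for row in m)

def gaze_point_on_screen(head_pos, head_rot, gaze_dir_head,
                         screen_point, screen_normal):
    """Intersect the gaze ray with a planar screen.

    head_pos      -- eye/head position in world coordinates (assumed input)
    head_rot      -- 3x3 head-to-world rotation matrix (assumed input)
    gaze_dir_head -- gaze direction in the head frame (assumed input)
    screen_point  -- any point on the screen plane, world coordinates
    screen_normal -- normal vector of the screen plane, world coordinates

    Returns (hit_point, depth), where depth is the distance along the
    gaze ray from the eye to the screen, or None if the ray misses.
    """
    # Rotate the gaze direction into world coordinates and normalize it.
    d = mat_vec(head_rot, gaze_dir_head)
    length = math.sqrt(dot(d, d))
    d = tuple(x / length for x in d)

    denom = dot(d, screen_normal)
    if abs(denom) < 1e-9:
        return None  # gaze ray is parallel to the screen plane

    to_screen = tuple(p - h for p, h in zip(screen_point, head_pos))
    depth = dot(to_screen, screen_normal) / denom
    if depth <= 0:
        return None  # screen lies behind the viewer

    hit = tuple(h + depth * x for h, x in zip(head_pos, d))
    return hit, depth

# Usage: viewer at the origin looking along +z at a screen 2 units away.
identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
result = gaze_point_on_screen((0.0, 0.0, 0.0), identity, (0.0, 0.0, 1.0),
                              (0.0, 0.0, 2.0), (0.0, 0.0, -1.0))
print(result)
```

In this sketch the screen pose is an input, whereas the abstract describes estimating the eye-to-screen distance as part of the method, so the example only shows how head pose and gaze direction combine into a world-space gaze point once that geometry is known.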



Acknowledgements

Our research was supported by the National Natural Science Foundation of China under Grant No. 51575037 and by the Research Foundation of the State Key Laboratory of Rail Traffic Control and Safety under Grant No. RCS2018ZT009.

Cite this article

Bao, H., Fang, W., Guo, B. et al. Real-time wide-view eye tracking based on resolving the spatial depth. Multimed Tools Appl 78, 14633–14655 (2019). https://doi.org/10.1007/s11042-018-6754-2

DOI: https://doi.org/10.1007/s11042-018-6754-2