Abstract

The ability to test for statistical causality in linear and nonlinear contexts, in stationary or non-stationary settings, and to identify whether statistical causality influences the trend or the volatility, forms a particularly important class of problems to explore in multi-modal and multivariate processes. In this paper, we develop novel testing frameworks for statistical causality in general classes of multivariate nonlinear time series models. Our framework accommodates flexible features where causality may be present in the trend, in the volatility, or in both structural components of the general multivariate Markov processes under study. In addition, we accommodate flexible structural features such as long memory and persistence in the multivariate processes when applying our semi-parametric approach to causality detection. We design a calibration procedure and a formal testing procedure to detect these relationships through classes of Gaussian process models. We provide a generic framework which can be applied to a wide range of problems, including partially observed generalised diffusions and general multivariate linear or nonlinear time series models. We present several illustrative examples of features that are easily testable under our framework, and use them to study the properties of the inference procedure developed, including the power, sensitivity and robustness of the test. We then illustrate our method on a real data example from commodity modelling.

1 Introduction

There are multiple notions of causality in the statistics, econometrics and machine learning literature. We consider one of these, widely known as “statistical causality”. We therefore do not enter into any debate about the merits of, or frameworks for, other notions of causality that are common in areas such as structured learning. Quoting Wiener (1956) “For two simultaneously measured signals, if we can predict the first signal better by using the past information from the second one than by using the information without it, then we call the second signal causal to the first one.” The general concept of statistical causality is based on comparing two predictive models, regardless of the type of predictive models used.

We seek to define a class of causality tests which is very general and, in principle, agnostic to the class of underlying process that generates the time series being studied. We achieve this via a class of semi-parametric models that we use to model structural hypotheses about how causality may manifest in the observed vector valued processes. For the specific class of models we develop, we can explicitly evaluate a test statistic whose asymptotic distribution is known in closed form, and under the conditions discussed the resulting test can be shown to be uniformly most powerful. To achieve this we introduce a class of representations that characterise the observed vector valued time series through Gaussian process models. These models are flexible, in that they allow us to efficiently test very general linear and nonlinear causality structures in the trend or volatility dynamics of the observed time series.

Throughout, we consider, without loss of generality, three multivariate time series denoted generically by \(\mathbf{X}_t \in \mathbb {R}^p, \mathbf{Y}_t \in \mathbb {R}^{p'}\) and \(\mathbf{Z}_t \in \mathbb {R}^{\bar{p}}\), which are treated as column vectors. Our goal is to develop a framework for assessing conjectures about temporal relationships between two of these multivariate processes, \(\mathbf{X}_t\) and \(\mathbf{Y}_t\), in the presence of side information \(\mathbf{Z}_t\). Formal inference procedures can then be applied to determine the strength of evidence for or against such conjectures. We aim to do this in as general a manner as possible, to accommodate differing forms of these relationships, such as linear and nonlinear relationships as well as stationary and non-stationary ones. Importantly, the approach does not require specific assumptions on the mechanism or model that generated these series. We distinguish between the models used to postulate and test for the presence or absence of statistical causal relationships between processes, and knowledge of the true model or data generating process. We propose a framework which is applicable to testing very flexible and general causality relationships while still potentially being misspecified with respect to the true data generating mechanism. We therefore do not seek to represent the true underlying data generating processes perfectly; we simply seek to determine plausible relationships and the existence of different causality relationships. This is more general than trying to model the true underlying processes of the time series exactly, as our approach can be used for rapid screening of multiple hypotheses about causality prior to a more detailed model development procedure.

To facilitate such generality, we base the testing framework on a model in which the causal relationships of the time series are captured by Gaussian processes. Note that we are not assuming the data are necessarily generated by a Gaussian process, but rather that the causal relationships may be adequately reflected by such processes. This only assumes a smooth variation of the causal relationships between the partially observed time series represented by data \(\lbrace \mathbf{X}_t\rbrace\), \(\lbrace \mathbf{Y}_t\rbrace\), \(\lbrace \mathbf{Z}_t\rbrace\). One particularly advantageous feature of working with Gaussian process representations of the conjectured causal relationships is that we can derive, and efficiently calculate, the test statistics needed to detect causality structures of both linear and nonlinear type.

1.1 Perspectives on Causal Analysis

The concept of statistical causality central to this paper is only one of numerous concepts of causality that have been proposed. For centuries, causality was studied by philosophers, until advances in science created a need to express the concept in mathematical terms. As a consequence, there is a wide array of possible mathematical ways to express the concepts inherent in causality. In this section we pay special attention to the General Theory of Causation by Pearl, and explain how Granger’s statistical causality and Pearl’s theory of causation cater to different needs.

Granger made certain assumptions, which he called axioms, see Granger (1980), and which are still central to statistical causality:

- A1 Time ordering: states that the cause happens prior to the effect;
- A2 No redundant information: states that the cause contains unique information about the effect, i.e. it is not related to the effect via a deterministic function;
- A3 Consistency: says that the existence and direction of the causal relationship remain constant in time.

We note that Granger also pointed out the contentious nature of this third axiom, “[...] generally accepted, even though it is not necessarily true”, which he saw as central to the applicability of the concept of causality.

However, it is important to understand that causal theory as developed by Pearl (2000, 2010) does not recognise these axioms. Instead, Pearl postulated that causal analysis should allow one to infer probabilities under static conditions, as well as how they change under dynamic conditions, by answering three types of questions:

- Q1 Policy evaluation: What is the effect of a potential intervention?
- Q2 Probabilities of counterfactuals: Can an event be identified as responsible for another event?
- Q3 Mediation: Can a causal effect be assessed as direct or indirect?

Furthermore, Pearl clearly distinguished between associational and causal concepts: “An associational concept is any relationship that can be defined in terms of a joint distribution of observed variables, and a causal concept is any relationship that cannot be defined from the distribution alone” Pearl (2010). Following that criterion, causality in the sense of Granger (referred to, here and in the literature, as either “statistical causality” or “Granger causality”) is an associational concept that is conditional and probabilistic in nature. According to Pearl, given an adequately large sample and precise measurements, one can in principle test associational assumptions, but not causal assumptions, which require experimental control. This last point is of crucial importance for understanding the differences in the applicability of the two concepts. Statistical causality has been developed for, and is often used in, the study of time series. There the data are typically observational rather than the product of a designed experiment, so no experimental control is available.

We conclude this part by referring the reader to literature that offers tools for reconciling statistical causality with Pearl’s General Theory of Causation. Eichler (2001) proposed using Granger causality graphs, and Eichler and Didelez (2007) introduced interventions into the Granger causal framework in a way that is similar to Pearl’s approach. White et al. (2011) demonstrated how Pearl’s Causal Model and Granger causality are linked when Pearl’s Causal Model is extended with settable systems.

1.2 Contributions

In this section we briefly outline the novelty of the proposed statistical causality framework and contrast our contributions with the related references Amblard et al. (2012a, b). Amblard et al. (2012a) propose the use of Gaussian Process (GP) models for univariate time series and use the data evidence obtained from the models to design a test for causality. However, these works do not explore the full flexibility of this class of models. In particular, they do not generalise to multivariate time series or add side information, although both can be done readily. Nor do they explore the range of causal structures that includes linear or nonlinear structures in the trend or covariance, or the estimation, optimisation and model calibration aspects that we generalise in this manuscript. Furthermore, no sensitivity studies or analysis of the effect of model misspecification are undertaken in the aforementioned works. Despite formulating the test statistic as a difference in marginal log-likelihoods, Amblard et al. (2012a) do not exploit the properties of likelihood ratio type tests (LRT or GLRT). In this manuscript we build upon these interesting papers and generalise the class of models significantly. They did not formulate the nested structures that we construct here, which facilitate more direct hypothesis testing through the LRT and GLRT. With those structures we can take advantage of the well known asymptotic distribution of the resulting test statistic under the null.

We would also like to point out that, in our research, we found the “kernelised Geweke’s measure” of Amblard et al. (2012a) to be a nonlinear generalisation of Granger causality with many good properties. It also has several shortcomings that we were able to address through the use of GPs, notably the difficulty of hyperparameter estimation, model calibration, and the lack of interpretability of model parameters. However, the authors of Amblard et al. (2012a) progressed from what we see as a more general model (GP) to a less general model (kernelised ridge regression), which they saw as more practical for modelling instantaneous causality, see Amblard et al. (2012b). In our manuscript we do not address instantaneous causality (instantaneous coupling), but our framework can incorporate it, just as it can model causality between multivariate time series.

The multivariate causal testing framework developed in this manuscript allows one to incorporate aspects of causality, linear and nonlinear, in the mean and the covariance. In line with the very general definition of non-causality, models of statistical causality typically test for the equivalence of two conditional distributions. Approaches can then be differentiated by the further assumptions they make on the models. For instance, linear regression methods focus on recognising dependence in the trend under strict model assumptions, while nonlinear generalisations relax these assumptions. These models do not, however, allow for causality in the covariance, or for other nonlinear structures. The framework developed in this manuscript accommodates these valuable extensions, allowing direct testing of causality in the covariance in both linear and nonlinear settings.

Secondly, causal structure has not previously been analysed with Gaussian processes in the likelihood ratio framework, presumably because of the difficulty of formulating a nested testing model structure. In this manuscript we propose a way to construct model nesting that allows the application of the likelihood ratio test (LRT) and the Generalised Likelihood Ratio Test (GLRT). This model nesting applies to assessing causality in the mean, the covariance, or both, and is achieved through an Automatic Relevance Determination (ARD) construction of the kernel. The development of nested models is important, because the standard result that the LRT test statistic is asymptotically \(\chi ^2\) distributed under the null does not hold for non-nested hypotheses. We emphasise that the novelty does not lie in the asymptotic behaviour of the test, which is standard. It lies in constructing a framework that allows that test to be applied in the general and flexible statistical causality setting we propose. Furthermore, with our GP model formulations the test statistic can be written in closed form, can be computed point-wise, and is efficient to compute.

There are numerous advantages of using GPs, including ease of optimisation and interpretability of hyperparameters, flexibility, and a rich choice of covariance functions that allows for various model structures. Using a likelihood ratio type test with a GP is a very natural choice. GP model parameters are often estimated by maximising the likelihood, so this estimation can be incorporated into the composite version of the likelihood ratio test (Generalised Likelihood Ratio Test, GLRT). GPs inherit from Gaussian random variables the property of being fully specified by the mean and the covariance, so testing for model equivalence inherently means testing for equivalence of the mean and covariance functions. However, many popular kernels do not have the ARD property, and using them in likelihood ratio test settings gives no easy way to account for causal structures in the covariance. Consequently, it is the combination of the GLRT with an ARD-GP that gives a uniformly most powerful test with considerable flexibility. It has a known asymptotic distribution under the null, can be evaluated explicitly in closed form, and remains useful for misspecified models.

Thirdly, we demonstrate the ability to detect and identify causal structures in the mean and covariance, even in the presence of different types of model misspecification. We undertake a careful study of the sensitivity and robustness of these testing frameworks to various features that one would encounter, such as sample size, parameter misspecification and structural misspecification. This is important because these studies demonstrate that one can apply these tests in a general framework, even if the model is misspecified in those ways. One can still have confidence that the inference procedure reliably detects the types of causality in mean and covariance incorporated in this framework.

2 Concepts in Statistical Causality

It is important to understand our proposed framework in the light of the specific formulations previously proposed for studying statistical causality. We therefore briefly outline previous approaches to testing for statistical causality and, importantly, the assumptions they require.

2.1 Granger Causality

In this section, we present the original formulation of the hypothesis tests and test statistics for Granger causality, as well as a few of their later extensions, which form the basis for considering concepts of statistical causality.

The first testable form of statistical causality, proposed by Granger (1963), was developed in the context of linear vector autoregressive models. For time series \(\left\{ \mathbf{X}_t\right\} , \left\{ \mathbf{Y}_t\right\}\) and \(\left\{ \mathbf{Z}_t\right\}\), let us consider two alternative model formulations for \(\left\{ \mathbf{Y}_t\right\}\):

with \(l\in \mathbb {N}\) being the maximum number of lagged observations and \(\epsilon _{Y,t}, \epsilon _{Y,t}'\) denoting noise (later \(E_t^X, E_t^Y\) will denote residuals). In this setting, we introduce Granger’s definition:

Definition 1

Granger (1963) The process X is said to cause the process Y if \(Var(E'_{Y,t}) < Var(E_{Y,t})\), which is denoted \(X \rightarrow Y\). There is no causality if \(Var(E'_{Y,t}) = Var(E_{Y,t})\), which is denoted \(X \nrightarrow Y\).
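For concreteness, here is a minimal sketch of the two competing linear models behind Definition 1. It rests on our own assumptions: a common maximum lag \(l\) for every series, and coefficient matrices labelled \(A_{YY,j}, A_{YZ,j}, A_{YX,j}\). These labels are introduced here for illustration and need not match Eqs. 1 and 2 exactly. The first line is the restricted model without the past of \(\mathbf{X}_t\); the second is the full model with it:

```latex
\begin{aligned}
\mathbf{Y}_t &= \sum_{j=1}^{l} A_{YY,j}\,\mathbf{Y}_{t-j} + \sum_{j=1}^{l} A_{YZ,j}\,\mathbf{Z}_{t-j} + \epsilon_{Y,t},\\
\mathbf{Y}_t &= \sum_{j=1}^{l} A'_{YY,j}\,\mathbf{Y}_{t-j} + \sum_{j=1}^{l} A'_{YZ,j}\,\mathbf{Z}_{t-j} + \sum_{j=1}^{l} A'_{YX,j}\,\mathbf{X}_{t-j} + \epsilon'_{Y,t}.
\end{aligned}
```

In this sketch the primed noise term belongs to the model that includes the past of \(\mathbf{X}_t\), which matches the direction of the inequality in Definition 1. Setting every \(A'_{YX,j} = 0\) recovers the restricted model.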

Typically the hypotheses will be given by one of the following pairs: a null hypothesis of Granger non-causality and an alternative hypothesis of lack of Granger non-causality (Footnote 1). Version 1 of the non-causality hypothesis is consistent with Definition 1:

In the specific case of the linear regression models of Eqs. 1 and 2, we can also use version 2 of the non-causality hypothesis (which implies version 1):

Granger proposed testing the null hypothesis of Eq. 3 using a test statistic called the strength of causality, denoted \(L_{X \rightarrow Y}^{SC}\) and defined as the ratio of the two prediction error variances:

The strength of causality highlights the relationship between Granger causality and model specification tests for linear regression.

Since this seminal work, numerous developments and extensions have been proposed. For instance, Geweke (1982) proposed a measure of linear feedback, with the same model assumptions and equivalent to the strength of causality (Eq. 5), defined as:

which will be \(\chi ^2_p\) distributed under the null hypothesis of lack of causality.
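The linear test can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: univariate \(\mathbf{Y}_t\), ordinary least squares fits with an intercept, and a common lag order for all series. The helper names `lagged_design`, `residual_variance` and `linear_granger` are hypothetical.

The sketch computes the ratio of restricted to full residual variance (our chosen orientation for the strength of causality) and the log-ratio form of Geweke's linear feedback measure. It then refers \(T\) times the latter to a \(\chi^2\) distribution whose degrees of freedom equal the number of excluded \(\mathbf{X}\) coefficients, which is \(p\) for a single lag.

```python
import numpy as np
from scipy import stats


def lagged_design(series, lags):
    """Stack lags 1..lags of each (T, d) array into one design matrix.

    Row i of the result corresponds to target time t = lags + i.
    """
    T = series[0].shape[0]
    cols = []
    for s in series:
        for j in range(1, lags + 1):
            cols.append(s[lags - j:T - j])
    return np.hstack(cols)


def residual_variance(y, design):
    """OLS fit with an intercept; return the mean squared residual."""
    A = np.column_stack([np.ones(len(y)), design])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return float(np.mean(resid ** 2))


def linear_granger(x, y, z, lags=1):
    """Test X -> Y given Z: restricted (Y, Z) vs full (X, Y, Z) OLS models."""
    target = y[lags:, 0]                     # univariate Y assumed
    var_r = residual_variance(target, lagged_design([y, z], lags))
    var_f = residual_variance(target, lagged_design([x, y, z], lags))
    strength = var_r / var_f                 # ratio of prediction error variances
    geweke = np.log(var_r / var_f)           # log-ratio linear feedback measure
    n = len(target)
    df = lags * x.shape[1]                   # number of excluded X coefficients
    p_value = stats.chi2.sf(n * geweke, df)
    return strength, geweke, p_value
```

The full design contains every column of the restricted one, so the in-sample OLS residual variance cannot increase when the lags of \(\mathbf{X}_t\) are added. The ratio is therefore at least one.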

A kernelised version of Geweke’s measure of linear feedback was proposed in Amblard et al. (2012b) with the aim of making it a nonlinear method. This kernelised measure has the same form as Eq. 6, but arises from a different model: kernel ridge regression, with the best predictor in the reproducing kernel Hilbert space (RKHS) generated by the associated kernel.

In kernel ridge regression the solution is no longer represented in terms of the optimised coefficients \(A_{\cdot \cdot ,j}\) of Eqs. 1 and 2 (the so-called primal solution, which we denote \(\varvec{\alpha }\)). Instead, the dual solution \(\varvec{\beta }^{krr}\) consists of coefficients that allow the solution to be represented in terms of inner products of the covariates (independent variables). Below, we introduce notation that allows convenient matrix operations and that will also be used in the later sections:

For Model A, we have \(\mathbb {Q}_t:= \left[ \mathbf{Y}_t, \mathbf{Z}_t\right] , \mathbb {Q}:= \left[ \mathbf{Y}^{-l}, \mathbf{Z}^{-m}\right]\), and if the two models need to be distinguished, we add a subscript referring to the model: \(\mathbb {Q}_{B,t}, \mathbb {Q}_{A,t}\). Here the matrix \(\mathbb {Q}\) represents all available covariate data, and the matrix \(\mathbb {Q} \mathbb {Q}^\text {T} : T \times T\) is the Gram matrix of the covariate data. This form admits the kernel trick, that is, “substituting” it with a Gram matrix which we denote \(\mathbf{K}_{\mathbb {Q}}\). The Gram matrix \(\mathbf{K}_{\mathbb {Q}}\), also called the kernel matrix or covariance matrix, is defined element-wise by Mercer kernel function evaluations: \(\left\{ \mathbf{K}_{\mathbb {Q}} \right\} _{i,j} = k (\mathbf {Q}_{i-l:i-1},\mathbf {Q}_{j-l:j-1})\). The Mercer kernel function \(k(\cdot ,\cdot )\in {M} \left( \mathcal {X}\right)\) is a real, symmetric and semi-positive definite kernel function defined on the domain \(\mathcal {X} \times \mathcal {X}\). The optimal weights, fitted values and mean square prediction error of kernel ridge regression are then as follows:

When kernel ridge regression is applied to Model A or Model B, all of the steps above are applied, but with a different definition of \(\mathbb {Q}_t\), and therefore different values of the covariance matrix \(\mathbf{K}_{\mathbb {Q}}\). Denote the fitted values by \(\hat{\mathbf {Y}}^A_{1:T}\) and \(\hat{\mathbf {Y}}^B_{1:T}\). We then obtain the mean square errors of the kernel ridge regression predictions of the two models, \(Var\left( \hat{\mathbf {Y}}_{1:T}^A - \mathbf {Y}_{1:T}\right)\) and \(Var\left( \hat{\mathbf {Y}}_{1:T}^B - \mathbf {Y}_{1:T}\right)\). These enter the test statistic in a similar manner to the strength of causality of Eq. 5 and the test statistic of Eq. 6. The test statistic based on kernelised ridge regression proposed by Amblard et al. (2012b) is thus formulated as follows:

The hypotheses are:

See Amblard et al. (2012b); Zaremba and Aste (2014). We also refer to Lungarella et al. (2007) for other generalisations of Granger causality.
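The kernel ridge regression comparison can be sketched as follows. This is a minimal illustration assuming a squared exponential kernel with a fixed length-scale and a fixed ridge parameter. The names `rbf_gram`, `krr_mse`, `kernel_geweke`, `ridge` and `lengthscale` are hypothetical choices and are not taken from Amblard et al. (2012b).

The sketch solves for the dual coefficients \(\varvec{\beta}^{krr} = (\mathbf{K}_{\mathbb{Q}} + \lambda \mathbf{I})^{-1}\mathbf{Y}\) and forms the in-sample fitted values \(\mathbf{K}_{\mathbb{Q}}\varvec{\beta}^{krr}\). It then returns the log-ratio of the two mean square errors, in the spirit of Eq. 6.

```python
import numpy as np


def rbf_gram(Q, lengthscale=1.0):
    """Squared exponential Gram matrix over the rows of Q (one row per time point)."""
    sq = np.sum(Q ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * Q @ Q.T
    return np.exp(-0.5 * np.maximum(d2, 0.0) / lengthscale ** 2)


def krr_mse(Q, y, ridge=1e-2, lengthscale=1.0):
    """Dual kernel ridge regression: beta = (K + ridge*I)^{-1} y, fitted = K beta."""
    K = rbf_gram(Q, lengthscale)
    beta = np.linalg.solve(K + ridge * np.eye(len(y)), y)
    fitted = K @ beta
    return float(np.mean((fitted - y) ** 2))


def kernel_geweke(Q_A, Q_B, y, **kw):
    """Log of the MSE ratio between Model A (no X inputs) and Model B (with X)."""
    return float(np.log(krr_mse(Q_A, y, **kw) / krr_mse(Q_B, y, **kw)))
```

Unlike OLS, kernel ridge regression gives no guarantee that adding inputs lowers the in-sample error. The sign of the measure is therefore informative rather than automatic.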

2.2 Transfer entropy

A third set of hypotheses, which also relies on concepts of conditional independence, was subsequently introduced, see Granger (1980); Eichler (2001); Amblard et al. (2012b):

The hypotheses in Eq. 8 were the starting point for a wide range of other tests, many of which no longer assume the linear form of the models in Eqs. 1 and 2, see Schreiber (2000); Lungarella et al. (2007); Chen (2006). An important contribution here is Schreiber (2000), who introduced the information theoretic approach to modelling causality by proposing transfer entropy, now one of the most popular nonlinear statistical causality measures. Transfer entropy is defined as a difference of two conditional entropies:

where

is the differential (continuous) entropy and

is the conditional entropy.

The asymptotic properties of transfer entropy are analysed less often. However, Barnett and Bossomaier (2012) prove that for ergodic processes the transfer entropy is a log-likelihood ratio. Under the null hypothesis of lack of causality it is asymptotically \(\chi ^2\) distributed, and under the alternative hypothesis it is asymptotically non-central \(\chi ^2\) distributed. The rate of convergence of the test statistic to its asymptotic distribution can, however, be problematic in practice. Very large sample sizes may be required before the asymptotic distribution gives a valid test (please refer to the experimental results, Sect. 5.6).

In general, the null hypothesis of Eq. 8 is equivalent neither to that of Eq. 3 nor to that of Eq. 4. For the linear models of Eqs. 1 and 2, and assuming that \(\epsilon _{Y,t},\epsilon '_{Y,t}\) have the same distribution, the null hypothesis of Eq. 4 implies the null hypothesis of Eq. 8. Furthermore, under normality assumptions, the test statistic \(L^{TE}_{X \rightarrow Y}\) is equivalent to both \(L^{SC}_{X \rightarrow Y}\) and \(L^{MLF}_{X \rightarrow Y}\), see Barnett and Bossomaier (2012).
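Under joint Gaussianity this equivalence can be checked numerically. The sketch below assumes univariate series and a single lag. It uses the standard fact that a Gaussian conditional entropy equals \(\tfrac{1}{2}\log(2\pi e\,\sigma^2)\), with \(\sigma^2\) the partial (conditional) variance, so the constants cancel in the difference. The resulting plug-in transfer entropy equals one half of the log-ratio of the two OLS residual variances, that is, half of the log-ratio form of Geweke's measure. The function names are hypothetical.

```python
import numpy as np


def partial_variance(target, cond):
    """Var(target | cond) for jointly Gaussian data, from (biased) sample covariances."""
    data = np.column_stack([target, cond])
    S = np.cov(data, rowvar=False, bias=True)
    s_yy, s_yw, s_ww = S[0, 0], S[0, 1:], S[1:, 1:]
    return float(s_yy - s_yw @ np.linalg.solve(s_ww, s_yw))


def gaussian_transfer_entropy(x, y, z):
    """TE_{X->Y|Z} in nats with one lag, assuming joint Gaussianity.

    TE = H(Y_t | Y_{t-1}, Z_{t-1}) - H(Y_t | X_{t-1}, Y_{t-1}, Z_{t-1}); each
    Gaussian conditional entropy is 0.5*log(2*pi*e*partial variance), so the
    2*pi*e constants cancel and only the log-ratio of partial variances remains.
    """
    target = y[1:]
    past_a = np.column_stack([y[:-1], z[:-1]])           # without the past of X
    past_b = np.column_stack([x[:-1], y[:-1], z[:-1]])   # with the past of X
    return 0.5 * np.log(partial_variance(target, past_a) / partial_variance(target, past_b))
```

The partial variance computed from sample covariances coincides with the mean squared residual of an OLS fit with an intercept. This is why the plug-in estimate matches the regression-based measures exactly rather than only asymptotically.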

3 Semi-Parametric Nonlinear Causal Process Representations

We begin by defining Gaussian Processes, which serve as the base class of stochastic processes that we adopt to characterise different examples of causality model structures. The vector valued time series \(\left\{ \mathbf{Y}_t\right\}\) is described by a Gaussian Process model, denoted \(\mathcal {GP}\) and defined as follows:

Definition 2

(Gaussian Process (GP)) Denote by \(f\left( \mathbf{x}\right) : \mathcal {X} \mapsto \mathbb {R}\) a stochastic process indexed by \(\mathbf{x} \in \mathcal {X}\), where \(\mathcal {X} \subseteq \mathbb {R}^p\). Then the random function \(f\left( \mathbf{x}\right)\) is a Gaussian process if all its finite dimensional distributions are Gaussian, that is, for any \(N \in \mathbb {N}\) and any \(\mathbf{x}_1, \ldots , \mathbf{x}_N \in \mathcal {X}\), the random vector \(\left( f\left( \mathbf{x}_1\right) , f\left( \mathbf{x}_2\right) , \ldots , f\left( \mathbf{x}_N\right) \right)\) is jointly normally distributed, see Rasmussen and Williams (2006).

We can therefore interpret a GP as formally defined by the following class of random functions:

At each point the mean of the function is \(\mu (\cdot ;\varvec{\theta }_{\mu })\), parametrised by \(\varvec{\theta }_{\mu }\). The dependence between any two points is given by the covariance function, also called the Mercer kernel, \(k \left( \cdot ,\cdot ;\varvec{\theta }_{k}\right) \in M \left( \mathcal {X}\right)\), parametrised by \(\varvec{\theta }_{k}\); see the detailed discussion in Rasmussen and Williams (2006). We will later use the notation \(\varvec{\theta }= \varvec{\theta }_{\mu } \cup \varvec{\theta }_k\), and refer to \(\varvec{\theta }\) as the hyperparameters of the GP random function f.
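To make the definition concrete, the sketch below draws sample paths from a GP on a one-dimensional grid. It assumes a squared exponential covariance with hyperparameters `variance` and `lengthscale` playing the role of \(\varvec{\theta}_k\), and a user-supplied mean function standing in for \(\mu(\cdot;\varvec{\theta}_\mu)\). By Definition 2, the values at any finite set of inputs are jointly Gaussian, so sampling reduces to a Cholesky factorisation of the Gram matrix. The small `jitter` term is a numerical safeguard we add, not part of the model, and all names are hypothetical.

```python
import numpy as np


def se_kernel(x1, x2, variance=1.0, lengthscale=1.0):
    """Squared exponential covariance between 1-D input arrays x1 and x2."""
    d2 = (x1[:, None] - x2[None, :]) ** 2
    return variance * np.exp(-0.5 * d2 / lengthscale ** 2)


def sample_gp(x, mean_fn, n_samples=3, jitter=1e-6, seed=0, **theta_k):
    """Draw n_samples GP paths at inputs x; returns shape (len(x), n_samples)."""
    K = se_kernel(x, x, **theta_k)
    L = np.linalg.cholesky(K + jitter * np.eye(len(x)))
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal((len(x), n_samples))
    # mu + L eps has mean mu and covariance L L^T = K + jitter * I
    return mean_fn(x)[:, None] + L @ eps


# Example: three paths with a linear mean function on a grid of 50 points.
paths = sample_gp(np.linspace(0.0, 5.0, 50), lambda s: 0.5 * s, lengthscale=0.8)
```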

We then model the causal relationships of the time series \(\left\{ \mathbf{Y}_t\right\}\) as realisations (Footnote 2) of a GP \(f(\cdot )\) with additive Gaussian noise \(\epsilon _{t}\):

with the following generic definition of the mean function \(\mu _{t}: \mathbb {R}^{kp+lp'+m\bar{p}} \rightarrow \mathbb {R}\) and the covariance function \(k_{t,s}: \mathbb {R}^{kp+lp'+m\bar{p}} \times \mathbb {R}^{kp+lp'+m\bar{p}} \rightarrow \mathbb {R}\):

It will be useful to make the following notational definitions for the mean vector, and correlation matrix, respectively:

and \(SPD_T\) is the manifold of symmetric positive definite matrices of size \(T\times T\).
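Given the mean vector and covariance matrix, the quantity used for hyperparameter estimation by maximum likelihood is the Gaussian log-likelihood of the observations. The sketch below assumes the observations are the GP values plus i.i.d. Gaussian noise of variance `noise_var`, so that \(\mathbf{Y} \sim \mathcal{N}(\varvec{\mu}, \mathbf{K} + \sigma^2\mathbf{I})\). It evaluates the log-density through a Cholesky factorisation, which is cheaper and more stable than an explicit inverse. The function name is hypothetical.

```python
import numpy as np


def gp_log_marginal_likelihood(y, mean_vec, K, noise_var):
    """log N(y; mean_vec, K + noise_var * I), computed via a Cholesky factor."""
    T = len(y)
    C = K + noise_var * np.eye(T)
    L = np.linalg.cholesky(C)                       # C = L L^T
    r = y - mean_vec
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, r))   # alpha = C^{-1} r
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return float(-0.5 * r @ alpha - 0.5 * log_det - 0.5 * T * np.log(2.0 * np.pi))
```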

3.1 Covariance Functions and Automatic Relevance Determination for Causality

As is standard in GP modelling, we will represent the covariance functions with functions that are known as kernels, and we will focus on the class of Mercer kernels \(M \left( \mathcal {X}\right)\).

Definition 3

(Semi-positive definite kernel) A function \(k: \mathcal {X} \times \mathcal {X} \rightarrow \mathbb {R}\) is called a semi-positive definite kernel (also called a positive definite kernel) if and only if it is symmetric, that is, \(\forall \mathbf{x},\mathbf{x}' \in \mathcal {X}, k(\mathbf{x},\mathbf{x}') = k(\mathbf{x}',\mathbf{x})\), and semi-positive definite, that is

There are several important properties of kernels, see Scholkopf and Smola (2001). A centered GP is uniquely determined by its covariance function (semi-positive definite kernel). Conversely, any semi-positive definite kernel determines a covariance function and a unique centered GP, see Hein and Bousquet (2004). Moreover, there exists a bijection between the set of all real-valued semi-positive definite kernels on some space \(\mathcal {X}\) and the set of all centered GPs defined on \(\mathcal {X}\). Kernels can also be seen as inner products, see Schoelkopf et al. (2004).

An important concept that will be broadly used in the context of kernel classes is Automatic Relevance Determination (ARD). It was initially introduced by MacKay (1994) as a Bayesian model in which input relevance can be introduced and controlled with parameters; see also Neal (1996). It later became popular in the wider context of feature selection and sparse learning in Bayesian models, see Qi et al. (2004). We use the same concept, but for the purpose of ensuring that we have nested models for inference hypothesis design (see Sect. 4.1.3). This will be crucial when applying the Generalised Likelihood Ratio Test.

In the ARD model, each input variable has an associated hyperparameter whose value scales the effect of that input. In the Bayesian approach, this is achieved by setting a separate Gaussian prior for each of the inputs. In our (frequentist) case we treat each dimension as a separate input and define our mean and covariance functions so that the effect of each univariate input can be changed separately, by zeroing the hyperparameter associated with the given marginal input component. In particular, by setting specific values of the hyperparameters we can effectively eliminate some of the univariate variables from the mean or covariance. This construction has several important advantages: it allows for marginal causality testing, and it yields a class of nested model structures, which is critical to determining the statistical significance of the causality relationships under consideration. Table 1 below gives two examples of popular kernels and their ARD versions. Rasmussen and Williams, in their MATLAB toolbox, provide an ARD version of the squared exponential kernel with \(diag\left( \left[ l_1^{-2}, ..., l_n^{-2} \right] \right)\). Our version in Table 1 instead allows choosing \(l_i=0\), which removes the effect of the i-th input dimension on the kernel. As a result, the covariance on a lower dimensional space can be expressed as a covariance on a higher dimensional space: \(k^{SE}_{t,s}(\left[ \mathbf{Y}_{t-1}, \mathbf{Z}_{t-1}\right] ,\left[ \mathbf{Y}_{s-1}, \mathbf{Z}_{s-1}\right] ) = k^{SE}_{t,s}(\left[ \mathbf{X}_{t-1}, \mathbf{Y}_{t-1}, \mathbf{Z}_{t-1}\right] ,\left[ \mathbf{X}_{s-1}, \mathbf{Y}_{s-1}, \mathbf{Z}_{s-1}\right] ; l_1 = 0)\).
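The nesting property can be checked directly. The sketch below assumes a squared exponential ARD kernel in which each input dimension is scaled by a weight playing the role of \(l_i\), entering as \(l_i^2\) rather than \(l_i^{-2}\). This exact parametrisation and the function name `ard_se` are our assumptions, not a transcription of Table 1. With scalar components, setting the weight on the \(\mathbf{X}_{t-1}\) coordinate to zero reproduces the kernel evaluated on \([\mathbf{Y}_{t-1}, \mathbf{Z}_{t-1}]\) alone.

```python
import numpy as np


def ard_se(u, v, weights, variance=1.0):
    """ARD squared exponential kernel.

    k(u, v) = variance * exp(-0.5 * sum_i weights_i**2 * (u_i - v_i)**2),
    so weights_i = 0 removes input dimension i from the kernel entirely.
    """
    diff = np.asarray(u, dtype=float) - np.asarray(v, dtype=float)
    scaled = np.asarray(weights, dtype=float) * diff
    return variance * np.exp(-0.5 * np.dot(scaled, scaled))


# Inputs ordered as [X_{t-1}, Y_{t-1}, Z_{t-1}] with scalar components.
full_t, full_s = [0.3, -1.2, 0.7], [2.5, -0.4, 0.1]
k_nested = ard_se(full_t, full_s, weights=[0.0, 1.5, 0.8])      # X switched off
k_reduced = ard_se(full_t[1:], full_s[1:], weights=[1.5, 0.8])  # X absent
```

In this sketch `k_nested` and `k_reduced` coincide, while any non-zero weight on the first coordinate changes the kernel value. This is the property that makes Model A a special case of Model B.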

Given a set of input points \(\left\{ \mathbf{x}_i \mid i = 1, \ldots , n \right\}\) we can compute the Gram (covariance) matrix \(\mathbf{K}\) whose entries are \(K_{ij} = k(\mathbf{x}_i, \mathbf{x}_j)\).
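Definition 3 can also be checked empirically on any finite set of inputs. The Gram matrix of a valid kernel must be symmetric with no negative eigenvalues, up to floating point error. The sketch below builds a Gram matrix from an arbitrary kernel function and applies that check; the helper names and the tolerance are hypothetical choices. Passing the check on one set of points is necessary but not sufficient for the kernel to be semi-positive definite.

```python
import numpy as np


def gram_matrix(points, kernel):
    """K_ij = kernel(x_i, x_j) for a sequence of input points."""
    n = len(points)
    K = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            K[i, j] = kernel(points[i], points[j])
    return K


def passes_psd_check(K, tol=1e-10):
    """Symmetric and smallest eigenvalue >= -tol (a finite-sample necessary condition)."""
    if not np.allclose(K, K.T):
        return False
    return bool(np.linalg.eigvalsh(K).min() >= -tol)


def se(a, b):
    """Squared exponential kernel: a valid Mercer kernel."""
    return float(np.exp(-0.5 * np.sum((np.asarray(a) - np.asarray(b)) ** 2)))


def neg_sq_dist(a, b):
    """Symmetric but NOT semi-positive definite: a counter-example."""
    return float(-np.sum((np.asarray(a) - np.asarray(b)) ** 2))
```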

4 Characterising Causality Hypotheses With Gaussian Process Models

When performing inferential tests for statistical causality, one typically compares two alternative model hypotheses. We have already seen in Sect. 2 that such hypotheses can be formulated in multiple ways, see Eqs. 3, 4 and 8. In defining the non-causality tests, we start from the more general form of the hypotheses outlined in Eq. 8.

The two causal model structures are generically represented as multi-dimensional Gaussian process time series models observed in additive Gaussian noise, denoted Model A and Model B in Eqs. 9 and 10 respectively:

with the following forms of mean functions \(\mu _A: \mathbb {R}^{lp' + m\bar{p}} \rightarrow \mathbb {R}\), \(\mu _B: \mathbb {R}^{kp +lp' + m\bar{p}} \rightarrow \mathbb {R}\) and covariance functions \(k_A: \mathbb {R}^{lp' + m\bar{p}} \times \mathbb {R}^{lp' + m\bar{p}} \rightarrow \mathbb {R}\), \(k_B: \mathbb {R}^{kp +lp' + m\bar{p}} \times \mathbb {R}^{kp +lp' + m\bar{p}} \rightarrow \mathbb {R}\):

We assume the mean and covariance functions, \(\mu _A, k_A\) and respectively \(\mu _B, k_B\), have similar functional forms and only differ in dimensionality and hyperparameters.

Having defined these two models, we may now state the form of the hypotheses for testing for non-causality (lack of causality) in nonlinear time series. The test comparing the two models of Eqs. 9 and 10 is fundamentally a test comparing two distributions: the conditional distributions of the time series \(\lbrace \mathbf{Y}_t\rbrace\) given the inputs of either model. As already mentioned, we never actually confirm statistical causality; rather, we reject lack of causality (test for non-causality).

Under such a test, the null hypothesis is that there is no causal relationship from time series \(\lbrace \mathbf{X}_t\rbrace\) to \(\lbrace \mathbf{Y}_t\rbrace\), i.e. that including the past of \(\lbrace \mathbf{X}_t\rbrace\) does not improve the prediction of \(\lbrace \mathbf{Y}_t\rbrace\). Given the model formulations, this means that the conditional distributions of \(\mathbf{Y}\) given either set of explanatory variables are equal (analogously to Eq. 8):

These distributions can be obtained in closed form only in the case of additive Gaussian noise, or when no additive noise is assumed in Model A or Model B.

Since a GP is fully specified by its mean and covariance functions, testing for equality of these distributions is equivalent to testing for equality of the mean functions and of the covariance functions. A convenient feature of the GP-based causality testing framework we propose is therefore that the general distributional statements about population quantities in the null and alternative hypotheses are equivalent to the following population statements on the mean and covariance functions.

where \(M\) represents the class of all Mercer kernels.

If the classes of mean and covariance functions are restricted so that Model A is nested in Model B (see Subsect. 4.1.3), then these hypotheses can be tested with the Generalised Likelihood Ratio Test (GLRT).

4.1 Generalised Likelihood Ratio Test

The GLRT is a composite hypothesis test that can be used for nested hypotheses when the parameters are unknown and must be estimated. Below we describe the test using the notation of Garthwaite et al. (2002). The GLRT provides the asymptotic distribution of the test statistic, but requires the hypotheses to be nested, which for our models can be expressed as restrictions on the mean and covariance functions.

4.1.1 Theory for Generalised Likelihood Ratio Test

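Let \(\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_N\) be a random sample of size N from a distribution with pdf \(p(\mathbf{x}; \varvec{\theta })\), and suppose that we wish to test \(H_0: \varvec{\theta }\in \omega\) vs \(H_1: \varvec{\theta }\in \Omega -\omega\). Then define the random variable
\[ \Lambda = \frac{\sup_{\varvec{\theta} \in \omega} L(\varvec{\theta}; \mathbf{X})}{\sup_{\varvec{\theta} \in \Omega} L(\varvec{\theta}; \mathbf{X})}, \]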

where \(L(\varvec{\theta }; \mathbf{x}) = p (\mathbf{x}; \varvec{\theta })\) is the likelihood function. For some constant A, we can use a test with critical region \(\Lambda \le A\).

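If we define q as the difference between the dimensionality of \(H_0 \cup H_1\) (i.e. of \(\Omega\)) and that of \(H_0\) (i.e. of \(\omega\)), then under the null hypothesis and standard regularity conditions the test statistic is asymptotically distributed as
\[ -2 \ln \Lambda \xrightarrow{d} \chi^2_q . \]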

We emphasise that the GLRT compares the maximised likelihoods over the whole parameter space \(\Omega\) and over its subset \(\omega \subset \Omega\) (Eq. 13). This nesting of parameter spaces is the basis for defining nested hypotheses in Definition 4.
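As a textbook illustration of the GLRT (not an example from this paper), the following Python sketch tests \(H_0: \mu = 0\) against an unrestricted mean for an i.i.d. \(N(\mu, 1)\) sample. Here \(\omega = \{0\}\) and \(\Omega = \mathbb{R}\), so \(q = 1\), and \(-2\ln\Lambda\) reduces to \(N\bar{x}^2\), which the code checks. The helper `glrt` and the simulated data are hypothetical; the \(\chi^2_q\) tail probability comes from `scipy.stats.chi2.sf`.

```python
import numpy as np
from scipy import stats

def glrt(loglik_restricted, loglik_full, q):
    """Return -2 ln(Lambda) and its asymptotic chi^2_q p-value.

    loglik_restricted: log-likelihood maximised over omega (H0)
    loglik_full:       log-likelihood maximised over Omega (H0 union H1)
    q:                 difference in dimensionality between Omega and omega
    """
    stat = -2.0 * (loglik_restricted - loglik_full)
    return stat, stats.chi2.sf(stat, df=q)

# N(mu, 1) sample; H0: mu = 0 (omega = {0}) vs Omega = R, so q = 1
rng = np.random.default_rng(1)
x = rng.normal(loc=0.3, scale=1.0, size=200)

ll_h0 = stats.norm.logpdf(x, loc=0.0, scale=1.0).sum()        # sup over omega: mu fixed at 0
ll_h1 = stats.norm.logpdf(x, loc=x.mean(), scale=1.0).sum()   # sup over Omega: MLE is the sample mean

stat, p_value = glrt(ll_h0, ll_h1, q=1)
# For this model -2 ln(Lambda) has the closed form N * mean(x)^2
print(np.isclose(stat, len(x) * x.mean() ** 2))   # True
print(p_value < 0.10)                             # reject H0 at the 10% level?
```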

4.1.2 Generalised Likelihood Ratio Test for Testing Causality

Consider the null hypothesis of non-causality as formulated in Eq. 11. The likelihood ratio test can be rewritten in terms of the difference of two marginal log-likelihoods, \(\ln p(\mathbf{Y}\mid \mathbf{X}^{-k}, \mathbf{Y}^{-l}, \mathbf{Z}^{-m}; \varvec{\theta }_B, \mathcal {M}_B) - \ln p(\mathbf{Y}\mid \mathbf{Y}^{-l}, \mathbf{Z}^{-m}; \varvec{\theta }_A, \mathcal {M}_A)\), which leads to the definition of a causality test statistic \(L_{X \rightarrow Y \mid Z}\), first proposed by Amblard et al. (2012a):

In this paper we assume additive Gaussian errors, which allows us to calculate the marginal likelihoods analytically (see Appendix 1 for the calculations). The resulting distributions are:

Denoting the maximum likelihood estimates of the hyperparameters of the mean and covariance functions with hats, the test statistic is given by:

Eq. 15 gives the general form of the test statistic for multivariate time series; in the special case of a univariate time series \(\mathbf{Y}\) it simplifies to Eq. 16. The two definitions can also be seen as distinguishing joint causality from marginal causality.
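For a single output with additive Gaussian noise, each marginal likelihood entering the statistic is a multivariate normal density, \(\ln \mathcal{N}(\mathbf{y}; \boldsymbol{\mu}, \mathbf{K} + \sigma_n^2 \mathbf{I})\). The Python sketch below evaluates it through a Cholesky factorisation and checks the result against `scipy.stats.multivariate_normal`. It assumes fixed hyperparameters and a univariate target; the function name and the simulated inputs are illustrative.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.stats import multivariate_normal

def gp_log_marginal_likelihood(y, mean, K, sigma_n):
    """ln N(y; mean, K + sigma_n^2 I), evaluated through a Cholesky factorisation."""
    n = len(y)
    C = K + sigma_n ** 2 * np.eye(n)
    c, lower = cho_factor(C, lower=True)     # C = L L^T, L stored in the lower triangle of c
    r = y - mean
    alpha = cho_solve((c, lower), r)         # alpha = C^{-1} r
    log_det = 2.0 * np.sum(np.log(np.diag(c)))
    return -0.5 * r @ alpha - 0.5 * log_det - 0.5 * n * np.log(2.0 * np.pi)

rng = np.random.default_rng(2)
x = rng.uniform(-2.0, 2.0, size=30)
K = np.exp(-0.5 * (x[:, None] - x[None, :]) ** 2)   # unit-lengthscale SE Gram matrix
mean = 0.3 * x                                       # a linear mean function
y = rng.multivariate_normal(mean, K + 0.01 * np.eye(30))

lml = gp_log_marginal_likelihood(y, mean, K, sigma_n=0.1)
ref = multivariate_normal(mean=mean, cov=K + 0.01 * np.eye(30)).logpdf(y)
print(np.isclose(lml, ref))   # True
```

The Cholesky route avoids forming an explicit inverse and gives the log-determinant almost for free, which matters when the likelihood is evaluated many times during optimisation.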

Under certain regularity conditions, assuming that the \(\mathbf{Y}_t \mid \mathbf{X}_{t-1}^{-k},\mathbf{Y}_{t-1}^{-l}, \mathbf{Z}_{t-1}^{-m}\) are conditionally independent for all t and that models A and B are nested (see Subsect. 4.1.3), we can treat \(L_{X \rightarrow Y \mid Z}\) as a GLRT statistic and use the asymptotic result
\[ 2 L_{X \rightarrow Y \mid Z} \xrightarrow{d} \chi^2_q , \]

where q is the difference in dimensionality between the parameter spaces of \(\varvec{\theta }_A\) and \(\varvec{\theta }_B\).
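The steps above can be combined into a simple test. The Python sketch below simulates a hypothetical bivariate system in which \(X_{t-1}\) drives \(Y_t\), evaluates Model B with an ARD squared exponential kernel on \(\left[ X_{t-1}, Y_{t-1}\right]\) and Model A as the same kernel with the X weight fixed at zero (so the models are nested), and compares \(2L_{X \rightarrow Y}\) with a \(\chi^2_1\) distribution. Fixed rather than estimated hyperparameters, a zero mean function, the multiplicative ARD form, the data-generating equation and the choice \(q = 1\) are all assumptions for illustration, not the settings of the experiments below.

```python
import numpy as np
from scipy import stats
from scipy.linalg import cho_factor, cho_solve

def ard_se(U, V, w, sigma_f):
    """Multiplicative ARD SE kernel (assumed form); w_i = 0 removes input i."""
    diff = U[:, None, :] - V[None, :, :]
    return sigma_f ** 2 * np.exp(-np.sum(np.asarray(w) ** 2 * diff ** 2, axis=-1))

def lml(y, K, sigma_n):
    """Log marginal likelihood of a zero-mean GP with additive Gaussian noise."""
    c, lower = cho_factor(K + sigma_n ** 2 * np.eye(len(y)), lower=True)
    alpha = cho_solve((c, lower), y)
    return -0.5 * y @ alpha - np.sum(np.log(np.diag(c))) - 0.5 * len(y) * np.log(2 * np.pi)

# Hypothetical data: Y_t = 0.3 Y_{t-1} + 0.7 X_{t-1} + 0.1 * noise, X i.i.d. N(0, 1)
rng = np.random.default_rng(3)
T = 200
x = rng.normal(size=T)
y = np.zeros(T)
for t in range(1, T):
    y[t] = 0.3 * y[t - 1] + 0.7 * x[t - 1] + 0.1 * rng.normal()

inputs = np.column_stack([x[:-1], y[:-1]])   # [X_{t-1}, Y_{t-1}]
target = y[1:]
sigma_f, sigma_n = 2.0, 0.1

K_B = ard_se(inputs, inputs, [0.5, 0.5], sigma_f)   # Model B: both lags
K_A = ard_se(inputs, inputs, [0.0, 0.5], sigma_f)   # Model A: X weight restricted to 0

L_xy = lml(target, K_B, sigma_n) - lml(target, K_A, sigma_n)
cdf_value = stats.chi2.cdf(2.0 * L_xy, df=1)         # q = 1 restricted weight (assumption)
print(L_xy > 0, cdf_value > 0.9)                      # True True
```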

4.1.3 Nested Models

An essential concept in our testing procedures is that of nested models. Its importance stems from the fact that the Generalised Likelihood Ratio Test (GLRT) on nested hypotheses has a known asymptotic distribution.

Definition 4

Nested models. Two models, \(\mathcal {M}_A\) parametrised by \(\varvec{\theta }_A\) and \(\mathcal {M}_B\) parametrised by \(\varvec{\theta }_B\), are said to be nested if one can be derived from the other by means of a parametric restriction; see Clarke (2000).

Intuitively, we could say that model A is nested in model B if the input space of model A is embedded in the input space of model B; Definition 4, however, is formulated in terms of embedding of the model parameter spaces rather than of the input spaces. Formulating our Gaussian process models A and B so that they are nested according to this definition is not always possible, because the definition requires the mean and covariance functions to have parameters that correspond to the dimensions of the input space, i.e. parameters that control whether or not the input X is included.

In practice, when we talk about nested models we consider mean and kernel functions that allow a nested model representation. The simplest example is given by linear mean and kernel functions. Define \(\mu _{t}(\left[ X_{t-1}, Y_{t-1}, Z_{t-1}\right] )= a_1 X_{t-1} + a_2 Y_{t-1} + a_3 Z_{t-1}\), which under the restriction \(a_1=0\) becomes equivalent to the mean \(\mu _{t}(\left[ Y_{t-1}, Z_{t-1}\right] )= a_2 Y_{t-1} + a_3 Z_{t-1}\) defined on the input space \(\left[ Y_{t-1}, Z_{t-1}\right]\). Analogously, for the linear kernel:

the restriction \(A_{1,1} = A_{1,2} = A_{1,3} = A_{2,1} = A_{3,1} = 0\) makes this kernel equivalent to a linear kernel defined on \(\left[ Y_{t-1}, Z_{t-1}\right]\) with parameters \(A_{2,2}, A_{2,3}, A_{3,2}, A_{3,3}\).
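The restriction can be checked numerically. The Python sketch below assumes the linear kernel has the form \(k(\mathbf{u}, \mathbf{v}) = \mathbf{u}^\top \mathbf{A} \mathbf{v}\) with inputs ordered as \(\left[ X_{t-1}, Y_{t-1}, Z_{t-1}\right]\); the random inputs and parameter matrix are illustrative. Zeroing the first row and column of \(\mathbf{A}\) gives the same Gram matrix as the linear kernel on \(\left[ Y_{t-1}, Z_{t-1}\right]\) with the lower-right \(2 \times 2\) block of \(\mathbf{A}\).

```python
import numpy as np

def linear_kernel(U, V, A):
    """Linear kernel k(u, v) = u^T A v evaluated for all rows of U and V."""
    return U @ A @ V.T

rng = np.random.default_rng(4)
inputs = rng.normal(size=(20, 3))      # columns: X_{t-1}, Y_{t-1}, Z_{t-1}
M = rng.normal(size=(3, 3))
A = M @ M.T                            # a full 3x3 positive semidefinite parameter matrix

A_restricted = A.copy()
A_restricted[0, :] = 0.0               # A_{1,1} = A_{1,2} = A_{1,3} = 0
A_restricted[:, 0] = 0.0               # A_{2,1} = A_{3,1} = 0

K_nested = linear_kernel(inputs, inputs, A_restricted)
# linear kernel on [Y, Z] with parameters A_{2,2}, A_{2,3}, A_{3,2}, A_{3,3}
K_small = linear_kernel(inputs[:, 1:], inputs[:, 1:], A[1:, 1:])
print(np.allclose(K_nested, K_small))  # True
```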

A popular kernel function that does not allow nested models is the squared exponential kernel:

which, however, can be extended to an ARD representation that does allow nested models (see Subsect. 3.1 and Table 1): the division by the scalar lengthscale parameter \(2 l^2\) is replaced by multiplication by the diagonal matrix of lengthscale parameters \(diag\left( \left[ l_X^2,l_Y^2,l_Z^2\right] \right)\), so that setting \(l_X = 0\) removes the input \(X_{t-1}\) from the kernel.

If a nested model representation is not practical, the GLRT should not be used. Several approaches exist for non-nested models: the modified (centred) log-likelihood ratio procedure (the Cox procedure), the “comprehensive model approach”, the “encompassing procedure”, and the Vuong closeness test, a likelihood-ratio-based test for model selection using the Kullback-Leibler information criterion. We refer the reader to Vuong (1989), MacKinnon (1983), Pesaran and Weeks (2001) and Wilson (2015), and references therein.

5 Synthetic Data Experiments to Assess Proposed Causality Testing Framework

In this section we study the behaviour of the proposed methodology for GP testing of statistical causality relationships. To motivate the causality studies in this paper, we consider three illustrative nonlinear time series models. They serve as reference models to which we apply our causality testing framework throughout the synthetic studies of its power, sensitivity and robustness.

In particular, the model classes chosen as data generating processes for the input time series cover a range of general model structures, which allow us to assess linear and nonlinear causality in the trend, the volatility, or both components of the resulting data generating models.

Example Time Series Model Class 1: Structural Trend Based Causality

Consider an autoregressive nonlinear model class comprising time series with linear and nonlinear polynomial causality in the trend, and with Gaussian noise.

In our examples we assume \(q=2\), so that, in addition to the linear causality \(X \rightarrow Y\), the mean of this time series has a nonlinear causality in the direction \(Y \rightarrow Z\).

We express the model of Eq. 17 in the form of three GPs, as in Eq. 18. To generate the data we use Matern covariance functions with smoothness parameter \(\nu = 1.5\) (Eq. 20), and we also extend the model to allow causal relationships in the covariance, which are not present in the time series formulation of Eq. 17.
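For reference, the Matern covariance with \(\nu = 1.5\) has the closed form \(k(r) = \sigma_f^2 (1 + \sqrt{3} r / l) \exp(-\sqrt{3} r / l)\), where r is the distance between inputs. The Python sketch below implements it and draws one sample path from a zero-mean GP on a one-dimensional grid. The grid, lengthscale and jitter are illustrative assumptions; in Eq. 20 the inputs are lagged series values rather than a time grid.

```python
import numpy as np

def matern32(r, lengthscale, sigma_f):
    """Matern covariance with nu = 1.5 as a function of the distance r >= 0."""
    s = np.sqrt(3.0) * np.asarray(r) / lengthscale
    return sigma_f ** 2 * (1.0 + s) * np.exp(-s)

rng = np.random.default_rng(5)
grid = np.linspace(0.0, 10.0, 200)
K = matern32(np.abs(grid[:, None] - grid[None, :]), lengthscale=1.0, sigma_f=1.0)
# a small jitter keeps the Cholesky factorisation numerically stable
L = np.linalg.cholesky(K + 1e-8 * np.eye(len(grid)))
f = L @ rng.normal(size=len(grid))    # one sample path of the zero-mean GP

print(np.isclose(matern32(0.0, 1.0, 2.0), 4.0))   # True: k(0) = sigma_f^2
```

Sample paths of a Matern 3/2 GP are once mean-square differentiable, i.e. rougher than those of the squared exponential kernel.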

The time series of Eq. 17 can be written explicitly as GPs through the following conditional distributions:

where the mean functions are linear:

and the covariance functions incorporate the noise, which was already defined as a GP:

Note that the main causality structure is encoded in the mean functions, but the covariance functions are formulated so as to allow some causality in the covariance as well, in the directions \(X \rightarrow Y\) and \(Y \rightarrow Z\).

Example Time Series Model Class 2: Structural Causality Incorporated in Volatility

The second causality structure has autoregressive and causal components similar to those of Structure 1, but the error terms depend, via nonlinear functions \(f_y, f_z\), on past values of the other time series (so there is no autoregression in the covariance):

where

The formulation above is general: the noise terms \(\epsilon _y, \epsilon _z\) can depend explicitly on time via the functions \(g_y(t)\) and \(g_z(t)\). We use \(c_y, c_z, d_y, d_z, p, q\) to denote constants. Expressed as GPs, this time series has exactly the same general structure as time series 1 (Eq. 18) and exactly the same mean functions (Eq. 19). To construct kernels that match the covariance structure, we use the fact that sums and products of kernels are again kernels, for example:

where \(k^{ts2}_{g, p, q, c, d}([W_{t},V_{t}], [W_{t'},V_{t'}]) = (g + c W_{t}^p + d V_{t}^q)^2 (g + c W_{t'}^p + d V_{t'}^q)^2\) is a kernel in which the functions \(g_y(t), g_z(t)\) are simplified to a constant g. The notation \([W_{t},V_{t}]\) should be understood as either \([X_{t-1},Z_{t-1}]\) or \([X_{t-1},Y_{t-1}]\).
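The kernel-algebra argument can be illustrated numerically. The Python sketch below builds the product kernel \(k^{ts2}\), which has the form \(f(u) f(u')\) and is therefore positive semidefinite, adds it to and multiplies it elementwise with a squared exponential kernel, and checks that none of the three Gram matrices has eigenvalues below round-off. The constants g, p, q, c, d and the simulated inputs are hypothetical.

```python
import numpy as np

def k_ts2(W1, V1, W2, V2, g, p, q, c, d):
    """Product kernel (g + c W^p + d V^q)^2 (g + c W'^p + d V'^q)^2, i.e. f(u) f(u')."""
    f1 = (g + c * W1 ** p + d * V1 ** q) ** 2
    f2 = (g + c * W2 ** p + d * V2 ** q) ** 2
    return np.outer(f1, f2)

rng = np.random.default_rng(6)
W, V = rng.normal(size=(2, 40))          # e.g. W = X_{t-1}, V = Z_{t-1}
K_vol = k_ts2(W, V, W, V, g=0.5, p=2, q=2, c=0.3, d=0.2)
K_se = np.exp(-0.5 * (W[:, None] - W[None, :]) ** 2)   # SE kernel on W
K_sum = K_se + K_vol      # the sum of two kernels is a kernel
K_prod = K_se * K_vol     # the elementwise (Schur) product of two kernels is a kernel

for K in (K_vol, K_sum, K_prod):
    # smallest eigenvalue is non-negative up to floating-point round-off
    print(np.linalg.eigvalsh(K).min() >= -1e-8 * np.abs(K).max())   # True
```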

Example Time Series Model Class 3: Causality Features in Presence of Long Memory

The third data structure is a long memory ARFIMA(p,d,q) process with \(d \in \left[ 0,0.5\right)\), with the causality structure encoded through external regressors:

where B is the backshift operator, the autoregressive parts for the time series \(Y_t, Z_t\) include the external regressors, the moving average part is given by the characteristic polynomial \(\Theta (B) = 1 - \theta _1 B - ... - \theta _q B^q\), and the long memory operator has, for \(d \in (0,0.5)\), the following linear process series expansion:

This model has no natural GP representation; however, this does not preclude fitting a misspecified GP model to partial observations of it in order to screen for causality structures that may be present. It therefore provides an interesting example for studying the effect of model misspecification on the ability to detect linear and nonlinear causality structures.
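The long memory operator can be handled through its series coefficients. The Python sketch below computes the first n coefficients of \((1-B)^d\) and of \((1-B)^{-d}\) by the standard recursions \(\pi_j = \pi_{j-1}(j-1-d)/j\) and \(\psi_j = \psi_{j-1}(j-1+d)/j\), checks that the two truncated series multiply to 1, and simulates an ARFIMA(0, d, 0) path from the truncated MA(\(\infty\)) form. The truncation length, the burn-in and the omission of the AR, MA and regressor terms are simplifying assumptions.

```python
import numpy as np

def frac_diff_weights(d, n):
    """First n coefficients pi_j of (1 - B)^d = sum_j pi_j B^j."""
    pi = np.empty(n)
    pi[0] = 1.0
    for j in range(1, n):
        pi[j] = pi[j - 1] * (j - 1 - d) / j
    return pi

def long_memory_ma_weights(d, n):
    """First n coefficients psi_j of (1 - B)^(-d) = sum_j psi_j B^j (MA(infinity) weights)."""
    psi = np.empty(n)
    psi[0] = 1.0
    for j in range(1, n):
        psi[j] = psi[j - 1] * (j - 1 + d) / j
    return psi

d, n = 0.45, 500
pi = frac_diff_weights(d, n)
psi = long_memory_ma_weights(d, n)
# (1 - B)^d (1 - B)^(-d) = 1, so the first n product coefficients are 1, 0, 0, ...
print(np.allclose(np.convolve(pi, psi)[:n], np.eye(n)[0]))   # True

# ARFIMA(0, d, 0) path from the truncated MA(infinity) representation
rng = np.random.default_rng(7)
eps = rng.normal(size=2 * n)
x = np.convolve(eps, psi)[n:2 * n]   # every kept value uses all n weights
```

The slow, hyperbolic decay of \(\psi_j\) (of order \(j^{d-1}\)) is what produces long memory, and it is also why a long truncation is needed.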

5.1 Synthetic Data Experiments

In this section we present the results of a series of performance tests focusing on three key attributes of the proposed causality inference framework: power, sensitivity to parameters, and robustness to model misspecification or parameter estimation errors. We perform these analyses for each of the three case study models. We begin with sensitivity and misspecification tests, followed by experiments on the power of simple and compound tests.

The sensitivity analysis shows how the test reacts to varying the parameter values used to generate the time series data in Example model 1 (Eqs. 18–20). Here the exact model is known, so a simple test is performed and its power is assessed over the parameter space.

The model misspecification tests show how the test reacts to a discrepancy between the parameter values used to generate the time series data and those used in the test statistic. This is a structured form of compound test analysis: in practice the parameters are estimated from data and then used in a compound testing procedure, in which the test statistic is a function of the estimated parameters.

We begin with two simple illustrative examples showing how the values of the test statistic from Eq. 14 change across data samples, and which values of the \(\chi ^2\) cdf they attain. Throughout, we use a significance level of 10%. Fig. 1 illustrates a compound test with optimised parameters, showing the test statistics \(L_{X \rightarrow Y}\) and \(L_{Y \rightarrow X}\) together with the corresponding cdf evaluations (i.e. 1 − p-values), \(\chi ^2_2 (2 L_{X \rightarrow Y})\) and \(\chi ^2_2 (2 L_{Y \rightarrow X})\). The data were generated from causality structure 1 with a strong causal effect \(X \rightarrow Y\); each of the 50 replicate time series has length 500.
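Under the null hypothesis, \(2L\) is asymptotically \(\chi^2_2\) here, so rejecting whenever the cdf evaluation exceeds 0.9 should reject in about 10% of trials. The Python sketch below checks this decision rule on draws taken directly from the \(\chi^2_2\) distribution; it verifies the rule itself rather than the test on actual GP fits, and the helper name is hypothetical.

```python
import numpy as np
from scipy import stats

alpha = 0.10   # significance level used throughout the experiments

def reject_non_causality(L_stat, q=2, alpha=0.10):
    """Return (reject flags, cdf values): reject H0 when F_{chi^2_q}(2L) > 1 - alpha."""
    cdf_values = stats.chi2.cdf(2.0 * np.asarray(L_stat), df=q)
    return cdf_values > 1.0 - alpha, cdf_values   # note: 1 - cdf is the p-value

# Draw L such that 2L ~ chi^2_2 exactly, mimicking the asymptotic null distribution
rng = np.random.default_rng(8)
L_null = 0.5 * rng.chisquare(df=2, size=100_000)
rejected, cdf_values = reject_non_causality(L_null, q=2, alpha=alpha)
print(abs(rejected.mean() - alpha) < 0.01)   # True: empirical size close to 10%
```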

Test statistics and corresponding cumulative distribution function evaluations. Causality structure 1, true parameters: \(a_X = a_Y = a_Z = 0.3, b_Y = b_Z = 0.7, q = 2, l_a = l_b = e^{-6}, \sigma _f = e^{-10}, \sigma _n = 0.01\). The horizontal axis represents 50 separate trials, each with a time series of length 500.

Fig. 1 can be interpreted as follows. The left plot shows that the values of the test statistic \(L_{X \rightarrow Y}\) are separated from, and considerably larger than, those of \(L_{Y \rightarrow X}\). This by itself indicates that the causal effect \(X \rightarrow Y\) is stronger than \(Y \rightarrow X\). The plot of cdf evaluations shows that all values of \(L_{X \rightarrow Y}\) lie in the tail (with cdf values numerically equal to 1), so the null hypothesis is strongly rejected in every trial at any conventional significance level. Hence the estimated power of the test, i.e. the probability of rejecting the null hypothesis when it is false, is very close to 1 over a very wide range of significance levels, certainly between 0.01% and 10%.

This indicates that, as expected, the test performs very well in detecting the correct direction of causality, in this case \(X \rightarrow Y\).

5.2 Model Sensitivity Analysis

It is important to ensure, on the one hand, that the tests behave stably when the parameters change, at least in some non-extreme region, and, on the other hand, that their performance is not heavily penalised by misspecification.

This test is performed for the first data structure, Eqs. 18–20. We use the following settings: a Matern kernel, additive noise with variance \(\sigma _n^2=0.01\), and a grid of 21 parameter values for each true model parameter that is varied. For each experiment we run 100 trials, with the length of the simulated time series taking the values 20, 50, 100, 200, 500 and 1000. We report rejection or non-rejection of the null hypothesis at significance level \(\alpha = 0.1\). The starting point is the parameter set \(a_X = a_Y = a_Z = 0.3\) and \(b_Y = b_Z = 0.7\) (parameters of, respectively, autoregression and causality in the mean, as per Eq. 19), \(l_a = l_b = e^{-1}, \sigma _f = e^{-3}, \sigma _n = 0.1\) (covariance parameters: autoregression, causality, multiplicative scaling and noise standard deviation, Eq. 20). Parameters are changed one at a time, and a new data set is generated for each parameter set.
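The one-at-a-time design can be sketched as follows. The Python snippet assumes, following the \(\pm 50\%\) range quoted for the heatmaps, that each parameter is varied over 21 equally spaced values around its base value while the others stay fixed; the dictionary keys are hypothetical names, and the data simulation and testing for each grid point are omitted.

```python
import numpy as np

# Base parameter set from the text (Eqs. 19 and 20); keys are hypothetical names
base = {"a_X": 0.3, "a_Y": 0.3, "a_Z": 0.3, "b_Y": 0.7, "b_Z": 0.7,
        "l_a": np.exp(-1), "l_b": np.exp(-1), "sigma_f": np.exp(-3), "sigma_n": 0.1}
lengths = [20, 50, 100, 200, 500, 1000]
n_trials = 100

def one_at_a_time_grid(base, name, rel_range=0.5, n_points=21):
    """Return parameter sets in which only `name` varies over +/- rel_range of its base value."""
    values = np.linspace((1 - rel_range) * base[name], (1 + rel_range) * base[name], n_points)
    return [{**base, name: v} for v in values]

grid = one_at_a_time_grid(base, "b_Z")
# number of simulated series for this parameter: grid points x lengths x trials
print(len(grid), len(grid) * len(lengths) * n_trials)   # 21 12600
```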

We do not report sensitivity results for the directions without causality, \(Y \rightarrow X\) and \(Z \rightarrow X\), since the test statistics in those cases are always zero. When parameters are changed in both models at the same time, we no longer use the true parameters, but we still compare equivalent models.

In the direction with causality, \(X \rightarrow Y\), the behaviour of the test is very stable: changes in the frequency of rejection (here presented as the estimated power of the test) are driven mostly by the sample size. The power of the test is the probability \(P(H_0 \text { rejected} \mid H_1 \text { true})\), which we estimate as \(\frac{1}{100} \sum _{i=1}^{100} \mathbb{1}\left\{ F(2 L_{X_i \rightarrow Y_i}) > 0.9 \right\}\), where the sum runs over the 100 trials, F denotes the cdf of \(\chi ^2_2\), \(\mathbb{1}\) is the indicator function and \(0.9 = 1 - \alpha\) is the confidence level.
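The power estimate is the share of trials in which the null hypothesis is rejected at the 10% level, i.e. in which the \(\chi^2_2\) cdf of \(2L\) exceeds 0.9. A minimal Python sketch, using hypothetical test statistics for 100 trials:

```python
import numpy as np
from scipy import stats

def estimated_power(L_stats, q=2, alpha=0.10):
    """Empirical power: share of trials with F_{chi^2_q}(2 L_i) > 1 - alpha."""
    cdf_values = stats.chi2.cdf(2.0 * np.asarray(L_stats), df=q)
    return np.mean(cdf_values > 1.0 - alpha)

# Hypothetical statistics for 100 trials: 80 clearly causal, 20 close to zero
L_stats = np.concatenate([np.full(80, 25.0), np.full(20, 0.1)])
print(estimated_power(L_stats))   # 0.8
```

Averaging the indicator, rather than the cdf values themselves, is what makes this a rejection rate and hence an estimate of power.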

Compared with the \(X \rightarrow Y\) direction, the results for \(Y \rightarrow Z\) are less uniform, as shown in Table 2. Table 2 reports the power of the test at the minimum and maximum of the parameter range, which is enough to portray the behaviour of the test for all parameters except \(\sigma _f\) in the \(Y \rightarrow Z\) direction, for which a local minimum can be seen in Fig. 2. Table 2 and Fig. 2 also show that the results for \(Y \rightarrow Z\) are more sensitive to parameter changes than those for \(X \rightarrow Y\), in particular to the causal coefficient \(b_Z\).

Causality structure 1, direction \(Y \rightarrow Z\); original parameters: \(a_X = a_Y = a_Z = 0.3, b_Y = b_Z = 0.7, q = 2, l_a = l_b = e^{-1}, \sigma _f = e^{-3}, \sigma _n = 0.1\). Heatmaps show the power of the test (hypothesis of no causality rejected for cdf above 0.9) for different lengths of the time series, with one of the mean or covariance parameters changed by \(\pm 50\%\) in both the simulation and the model.

5.3 Model Misspecification Analysis

For the misspecification test we chose different starting settings for the covariance function, \(l_a = l_b = e^{-3}, \sigma _f = e^{1}\), which result in higher covariance and much more pronounced effects of misspecified covariance function parameters. Starting from the base parameter set, we alter one parameter at a time when calculating the test statistic, while using data generated with the base parameters, so that the altered parameter is misspecified. Note that in the misspecification test a parameter is altered in model A or in model B, but not in both.

Results for misspecification in the mean, which we do not report, are straightforward to interpret: the power of the test depends mostly on the sample size and, to a lesser degree, on the deviation from the true mean. For the direction in which causality exists, the power of the test changes almost uniformly with the misspecification of the mean parameter. This is in line with an observation we will make repeatedly: the power of the test is more robust to parameter changes when causality is present in the mean.

Results for misspecification in the covariance (Figs. 3 and 4) are less straightforward to interpret. In particular, the performance of the tests appears more sensitive to misspecification of the strength of the observation noise; this is not observed when the covariance parameters (mainly \(\sigma _f\)) are smaller.

5.4 Power of the Hypothesis Tests: Simple Tests

Summary of the Section:

Analysing the power of the test (1 − type II error rate) is a popular technique for assessing the quality of a test or testing procedure. The power is expected to increase with sample size, and showing that this is indeed the case for our testing procedure is the focus of this and the following section. We start with simple tests, in which the exact parameters are used, so there is no effect of parameter misspecification. Strictly speaking, a simple test can be performed only for the first two data structures, as the third is defined as an econometric model with no GP representation. For the third data structure we nevertheless perform a few tests with chosen parameters, to show how the test reacts to certain properties of the data.

Example Time Series Model Structure 1

When the exact parameters are used, as in a simple test, the behaviour for Model 1 (Eqs. 19 and 20) is typically as expected: the power of the test increases with sample size, and the classification rule works well even for short time series. This typical behaviour is illustrated in the left charts of Figs. 5 and 6. Figure 5 shows the evolution of receiver operating characteristic (ROC) curves with increasing sample length for two sets of parameters. In a simple test, for most parameter values, the ROC curves show that positives and negatives are almost always correctly classified, even for short time series, as in the left chart of Fig. 5. This example corresponds to model 1 with true parameters \(a_X = 0.3, b_Y = 0.7\) for the mean function and \(l_a = e^{-3}, l_b = e^{-1}, \sigma _f^2 = e^{-10}\) for the kernel. The corresponding distributions of 1-power of the test are shown as boxplots in the left chart of Fig. 6. These distributions have medians at 1 for samples of length 50 and above, and no outliers for samples of length 500 and 1000.

Examples of parameter combinations for which the ROC curve behaves differently with longer samples (time series). True parameters: \(a_X = 0.3, b_Y = 0.7\) in both charts; kernel parameters: (left) \(l_a = e^{-3}, l_b = e^{-1}, \sigma _f^2 = e^{-10}\), (right) \(l_a = e^{-3}, l_b = e^{-1}, \sigma _f^2 = e^{-2}\)

Examples of parameter combinations that lead to different evolution of the test statistics distribution. True parameters: \(a_X = 0.3, b_Y = 0.7\) in all 3 charts; kernel parameters: (left) \(l_a = e^{-3}, l_b = e^{-1}, \sigma _f^2 = e^{-10}\), (middle) \(l_a = e^{-3}, l_b = e^{-1}, \sigma _f^2 = e^{-2}\) and (right) \(l_a = e^{-1}, l_b = e^{-3}, \sigma _f^2 = e^{-2}\). The right plot shows an extreme case of performance decreasing with sample size over the typical range of sizes, hence the addition of results for data of length 5000

The notable exceptions are as follows. First, we show an example with a higher misclassification rate, which nevertheless still decreases with sample size. The right chart of Fig. 5 corresponds to a larger value \(\sigma _f^2 = e^{-2} \simeq 0.1353\), with the other mean and kernel hyperparameters unchanged. The middle chart of Fig. 6 shows that in this case, even for samples of length 500, some outliers with 1-power of the test at 0 can still be observed.

The right chart of Fig. 6 shows an extreme case in which the power of the test degrades with the length of the time series to that of a random coin flip, although it improves for exceptionally long samples of 5000 data points. The medians of the distributions of 1-power of the test drop from 1 to 0 for samples of length 100–1000 and return to 1 for samples of length 5000. The kernel hyperparameters in this case are \(l_a = e^{-1}, l_b = e^{-3}, \sigma _f^2 = e^{-2}\): the same signal variance as in the less extreme case, but a larger autoregressive hyperparameter and a smaller causal hyperparameter in the covariance function. Parameter values for which increasing the sample size temporarily decreases the power of the test may correspond to the dark areas in Figs. 3 and 4.

The parameter primarily responsible for this behaviour is the signal variance \(\sigma _f^2\) and, to a smaller extent, \(l_a\), the coefficient of autoregression in the covariance function. The hyperparameter \(\sigma _f^2\) increases the value of the covariance proportionately, while \(l_a\) affects it inversely and less than proportionately. Higher values of the covariance function mean stronger volatility clustering, an effect that can compete with causality but may be less visible in short time series. We do not elaborate on this point here, but additional dependence structure can complicate the identification of the causality structure. Longer time series therefore appear necessary to correctly recognise causality in this case. Fig. 7 shows the effect of the length of a time series on the value of the test statistic \(L_{X \rightarrow Y}\) for a particular combination of parameters. A single data set of length 5000 was simulated, and the test statistic was then calculated on the first 100, 200, 300, ..., 5000 data points. For this data set the test statistic generally increases with data length (as for all other data sets generated with the same parameters), but it shows two major dips where it temporarily worsens.

The causal effect in the covariance function is difficult to observe, both because it seems to have a much subtler effect than causality in the mean and because it is entwined with other effects that appear for different parameter combinations. Figure 8 shows that for the parameters \(b_Y = 0, a_Y = 0, l_a = e, l_b = e, \sigma _f^2 = e^{4}\), causality in the covariance is unambiguously detected already for a sample size of 50. Recall from Eq. 19 that \(b_Y = 0\) means no causality in the mean and \(a_Y = 0\) means no autoregression in the mean.

Test statistics and the distribution evaluation: no causality in mean (\(b_Y = 0\)), no autocorrelation in mean (\(a_Y = 0\)), very large covariance parameters \(l_a = e, l_b = e, \sigma _f^2 = e^{4}\). The right subplot does not explicitly show distribution evaluations for sample sizes from 50 to 500, because they are all equal to 1 (as for sample size 1000)

Example Time Series Model Structure 2

The results for testing model 2 (Eqs. 21–23) are only briefly commented on here, since in the simple testing framework they show nothing unexpected; in particular, the power of the test increases with the length of the time series. There is arguably much less opportunity for problematic behaviour. First, the range of admissible parameters (those for which the series does not explode to infinity) is much narrower for Example structure 2 than for Example structure 1. Second, we assumed \(cov(\epsilon _{Y_t}, \epsilon _{Y_{t'}}) = 0\); had we not, volatility clustering could again have masqueraded as causality.

Example Time Series Model Structure 3

We do, however, report a few observations on testing model 3. First, model 3 has no GP representation, so the “simple test” in this case is not a test with “true” parameters but a test with fixed, rather than optimised, parameters. These observations become particularly interesting when compared with the results of the compound test for data generated from model 3. The main property of interest in model 3 is long memory, and this is what we concentrate on here. For data generated from model 3 (simple or compound test), we expect long memory to make the recognition of causality more difficult, but we would still like causality to be reasonably detectable. Figure 9 shows how the power of the test is affected by increasing long memory (\(d=0.1\) vs \(d=0.45\)), and how this effect is amplified by changing other parameters (the moving average order from MA(1) to MA(4), the noise variance from \(\sigma ^2 = 0.1\) to \(\sigma ^2 = 10\), and the strength of linear causality from \(b_Y = 0.7\) to \(b_Y = 0.2\)). Notably, decreasing the strength of causality has the largest influence, and it is the only factor that affects the power of the test for long time series (length 1000).

5.5 Power of the Hypothesis Tests: Compound Tests

Summary of the Section

Compound tests are two-stage tests in which the model parameters are estimated and the likelihood is then evaluated at those estimates. Robust parameter estimation, while possibly costly, is one of the most important pillars of robust testing with compound tests. In this section we draw the reader's attention to two phenomena: first, the framework is much better at picking up causality than at accepting its absence; and second, even with strong model misspecification, as we will see for model 3, it is possible to identify causality.

One of the biggest factors influencing the quality of the compound test is the efficiency of the optimisation algorithm. Maximising the likelihood for parameter estimation generally leads to a non-convex optimisation problem, so local optima are likely. Using multiple starting points is highly recommended, but can make the calculations very time consuming (our implementation uses a random grid of starting points). The assumptions made in this paper (mainly additive Gaussian noise) have the advantage that the GP likelihood can be calculated analytically. However, the data set may still be so large that this calculation becomes prohibitively expensive. Popular approaches in the literature are to decrease the dimensionality of the input data (see Snelson and Ghahramani 2007) or to strive for an efficient implementation (see Rasmussen and Williams 2006). An interesting and little-known approach is to choose a covariance function that promotes sparsity of the covariance matrix, as proposed by Melkumyan and Ramos (2009); ensuring that such an approach is applicable to time series potentially adds a further level of complication.
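A minimal Python sketch of multi-start hyperparameter estimation follows. It minimises the negative log marginal likelihood of a zero-mean, one-dimensional squared exponential GP with `scipy.optimize.minimize` (L-BFGS-B, with bounds on the log-parameters) from uniformly drawn starting points, and keeps the best local optimum. The kernel, the bounds, the number of starts and the synthetic data are assumptions; this is not the implementation used for the experiments.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize

def neg_lml(log_params, x, y):
    """Negative log marginal likelihood of a zero-mean 1-D SE GP; parameters on the log scale."""
    ell, sigma_f, sigma_n = np.exp(log_params)
    K = sigma_f ** 2 * np.exp(-0.5 * (x[:, None] - x[None, :]) ** 2 / ell ** 2)
    c, lower = cho_factor(K + (sigma_n ** 2 + 1e-8) * np.eye(len(x)), lower=True)
    alpha = cho_solve((c, lower), y)
    return 0.5 * y @ alpha + np.sum(np.log(np.diag(c))) + 0.5 * len(y) * np.log(2 * np.pi)

def fit_multistart(x, y, n_starts=10, seed=0):
    """Run L-BFGS-B from random starting points and keep the best local optimum."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_starts):
        # random start for log(lengthscale), log(sigma_f), log(sigma_n)
        start = rng.uniform(low=[-2.0, -2.0, -4.0], high=[2.0, 2.0, 0.0])
        res = minimize(neg_lml, start, args=(x, y), method="L-BFGS-B",
                       bounds=[(-5.0, 5.0)] * 3)
        if best is None or res.fun < best.fun:
            best = res
    return best

rng = np.random.default_rng(9)
x = np.sort(rng.uniform(-3.0, 3.0, size=60))
y = np.sin(2.0 * x) + 0.1 * rng.normal(size=60)
best = fit_multistart(x, y)
print(np.exp(best.x))   # estimated (lengthscale, sigma_f, sigma_n)
```

Optimising on the log scale keeps the hyperparameters positive, and the bounds prevent the Cholesky factorisation from becoming ill-conditioned at extreme values.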

Example Time Series Model Structure 1

An observation that arguably holds for all data, not only Model Structure 1, is that when causality exists in the data, the distribution of the test statistic estimator is much narrower than when there is no causality. An example is shown in Fig. 10: the first plot shows that the causal signal is picked up even for the shortest data, and the distribution of the test statistic converges to 1 already for length 100. When causality is not present (subplots 2 to 4), even for the longest samples the distributions of the test statistic are wide, with median at zero but a 75th percentile often close to 1.

Boxplots showing how the sample size affects distributions of the test statistics, in the case of an existing causal effect (first subplot, \(X \rightarrow Y\) and \(b_Y = 0.7\)) and in cases where the causal effect disappears because the causal coefficient is zero (second subplot, \(X \rightarrow Y\) and \(b_Y = 0\)), by construction (third subplot, \(Y \rightarrow X\)), or both (fourth subplot)

Example Time Series Model Structure 2

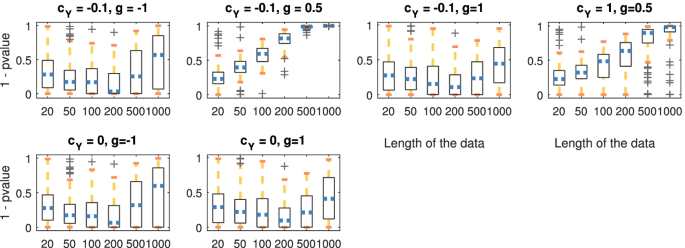

The results of testing model 2 show some very interesting behaviour. When fitting the model we introduced misspecification, because we allowed the structures to be the same for both directions. The first misspecification is the use of second-degree polynomial means for \(Y \rightarrow Z \mid X\) as well as for \(Z \rightarrow Y \mid X\). The second is the use of the same volatility structure for both \(X \rightarrow Y \mid Z\) and \(Y \rightarrow X \mid Z\). As a result, the mean parameters are often correctly estimated to be near zero, but the variance parameters are strongly misspecified. The test nevertheless has reasonable power: the existence of causality is always correctly identified, although in some cases the results could be interpreted as spurious causality. Also, as with model 1, there are cases where we appear to detect the causal effect in the covariance function when there is no causality in the mean, as shown in Fig. 11.

Model 2, \(X \rightarrow Y \mid Z\). Changes in the recognition of causality with increasing sample size, for different parameter settings. The top row shows parameter settings where a causal effect in the covariance can be expected (\(c_Y \ne 0\)). The bottom row shows cases where causality in the covariance is not expected (\(c_Y = 0\)). In all cases there is no causality in the mean (\(b_Y = 0\)).

At the same time, spurious causality signals are detected for the opposite direction, \(Y \rightarrow X \mid Z\). Figure 12 shows that in the presence of causality \(X \rightarrow Y \mid Z\) (\(b_Y = 0.7\)), the opposite direction also starts to display causality as the sample size grows. Explaining spurious causality is often complicated; here we emphasise two observations.

First, the test statistic is much larger in the direction where true causality exists, and a much smaller sample is needed before that causality is indicated with high confidence.

Second, the model we fit for the \(Y \rightarrow X \mid Z\) direction is misspecified in its covariance function. For the function to be properly specified, consisting of the multiplicative noise only, the multiplicative parameter \(\sigma _f\) would have to equal zero. Even with multiple starting points, the optimised parameters are not as close to the true parameters as would be desired.

Model 2, \(Y \rightarrow X \mid Z\). Changes in the recognition of causality with increasing sample size, for different parameter settings. There is no true causality in the direction \(Y \rightarrow X \mid Z\), but there is causality in the opposite direction (\(b_Y = 0.7\)). The parameter that affects the recognition of spurious causality is the additive parameter g, whose higher absolute values tend to increase the covariance.

Example Time Series Model Structure 3

The results for the third data set show a similar pattern: when a strong causal signal is present, it is correctly recognised. When causality is absent, or the causal component is very weak, the distribution of the test statistic can be wide, but no spurious causality is detected. The data generated from model 3 have a long memory component controlled by the parameter \(d \in \left[ 0,0.5\right)\). One of the most interesting questions is how long memory affects the test.
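The sketch below makes the role of \(d\) concrete. It compares the autocorrelation function of a fractionally integrated ARFIMA(0, d, 0) process with that of an AR(1) process having the same lag-1 autocorrelation. It assumes the standard result \(\rho(k) = \Gamma(k+d)\Gamma(1-d)/(\Gamma(k-d+1)\Gamma(d))\), evaluated through a recursion. The function names are illustrative and are not part of the paper's code. For \(d\) close to 0.5, the long memory autocorrelations decay hyperbolically, like \(k^{2d-1}\), while the AR(1) autocorrelations decay geometrically.

```python
def arfima0d0_acf(d: float, max_lag: int) -> list[float]:
    """Autocorrelations rho(0), ..., rho(max_lag) of a stationary ARFIMA(0, d, 0) process.

    Uses rho(k) = rho(k - 1) * (k - 1 + d) / (k - d), which follows from
    rho(k) = Gamma(k + d) Gamma(1 - d) / (Gamma(k - d + 1) Gamma(d)).
    """
    if not 0.0 <= d < 0.5:
        raise ValueError("stationary long memory requires 0 <= d < 0.5")
    rho = [1.0]
    for k in range(1, max_lag + 1):
        rho.append(rho[-1] * (k - 1 + d) / (k - d))
    return rho


def ar1_acf(a: float, max_lag: int) -> list[float]:
    """Autocorrelations of a stationary AR(1) process: rho(k) = a ** k."""
    return [a ** k for k in range(max_lag + 1)]


if __name__ == "__main__":
    d = 0.45
    long_mem = arfima0d0_acf(d, 100)
    # AR(1) with the same lag-1 autocorrelation d / (1 - d)
    short_mem = ar1_acf(d / (1 - d), 100)
    for k in (1, 10, 50, 100):
        print(f"lag {k:3d}: ARFIMA(0,d,0) {long_mem[k]:.3f}   AR(1) {short_mem[k]:.2e}")
```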

First, with the standard parameters, long memory hardly influences the recognition of causality. Here, the standard parameters are a strong causal component (\(b_Y = 0.7, b_Z = 0.7\)) and a small noise variance (\(\sigma _n^2 = 0.01\)).

Figure 13 shows the distribution of the test statistic for different data lengths, both without long memory (\(d=0\)) and with strong long memory (\(d=0.45\)). Changing the parameters of model 3 has no significant effect, and this holds in particular for the memory parameter d. At first this seems unexpected, compared with the results of the simple test.

The explanation lies in how the parameter estimation works, as illustrated in Fig. 14. The fitted model is strongly misspecified, and several properties of the data are not well described by it. Recall, however, that the long memory component has an infinite-order moving average representation. An invertible moving average model, in turn, has an autoregressive representation. The primary effect of increasing the moving average and long memory parts is therefore an increase in the estimated autoregressive parameters.
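The compensation argument can be checked with a small simulation, sketched below under our own assumptions. ARFIMA(0, d, 0) data are generated by truncating the MA(∞) filter of \((1-B)^{-d}\) at 2000 lags. An AR(1) model with an intercept is then fitted by least squares. This is not the GP model of the paper, only a stand-in for a misspecified short-memory fit, and the helper names are hypothetical. The mean AR(1) estimate should grow with \(d\), mirroring the pattern reported in Fig. 14.

```python
import numpy as np


def long_memory_series(d: float, n: int, rng: np.random.Generator, n_weights: int = 2000) -> np.ndarray:
    """Approximate ARFIMA(0, d, 0) sample of length n.

    The MA(infinity) weights of (1 - B)^(-d) are psi_0 = 1, psi_k = psi_{k-1} (k - 1 + d) / k.
    Truncating them at n_weights terms is an assumption of this sketch.
    """
    k = np.arange(1, n_weights)
    psi = np.concatenate(([1.0], np.cumprod((k - 1 + d) / k)))
    eps = rng.standard_normal(n + n_weights)
    # keep only outputs whose moving-average window is fully covered by eps
    return np.convolve(eps, psi, mode="full")[n_weights - 1 : n_weights - 1 + n]


def fit_ar1(x: np.ndarray) -> float:
    """Least-squares estimate of a in x_t = c + a x_{t-1} + e_t."""
    X = np.column_stack((np.ones(len(x) - 1), x[:-1]))
    coef, *_ = np.linalg.lstsq(X, x[1:], rcond=None)
    return float(coef[1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for d in (0.0, 0.2, 0.45):
        estimates = [fit_ar1(long_memory_series(d, 1000, rng)) for _ in range(20)]
        print(f"d = {d:.2f}: mean AR(1) estimate {np.mean(estimates):.3f}, "
              f"population lag-1 ACF d/(1-d) = {d / (1 - d):.3f}")
```

The estimates fall somewhat below \(d/(1-d)\) for large \(d\), because of the truncation and the slowly converging sample mean. The qualitative increase with \(d\) is the point of the illustration.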

How the estimate of the autoregressive parameter \(a_Y\) “compensates” for long memory or moving average effects. The estimates \(\hat{a}_Y\) are shown for different values of \(d\), MA and \(\sigma ^2_Y\), and for different experiments, all with series of length 1000. The estimates increase strongly with \(d\) and MA, and this pattern appears for all values of the noise variance.

5.6 Comparison to Other Models

This section substantiates some of our claims about how our method compares with existing methods. We provide three case studies. Two compare our method with benchmark methods for causality, namely Granger causality and transfer entropy. The third compares our method with a generalised likelihood ratio test on a well-specified econometric model (ARFIMA, example time series model class 3, Eqs. 24–26). Our experiments show that our model achieves good results for all types of data. In all cases except one, our method has superior finite-sample properties, reaching good power of the test already for small samples. The exception is linear causality, where the linear Granger causality test performs better.

Note that in these case studies we concentrate on the ability to detect causality, not on the computational cost of the algorithms.

Case Study 1: Granger Causality

Granger causality can be seen as the original, and also the simplest, method of assessing statistical causality. For Gaussian noise and a linear causal relationship, Granger causality is arguably the best method, since its test statistics have known asymptotic distributions and its estimators have excellent numerical properties. Moreover, Granger causality can perform well for a range of data that depart from the model assumptions.

In this and the next case study we use four data sets. They are designed to show the effect of departures from three assumptions: linear dependence, stationary distributions and Gaussian noise. The data sets are based on the model introduced earlier in Eq. 17, replicated below with slight modifications:

The data model from Eq. 27 exhibits two causal relationships. Assuming Gaussian white noise, the causal relationship \(X \rightarrow Y\) is of the type that Granger causality was designed to model: linear, stationary and with Gaussian distributions. We call this the base case (set 1) and consider three other cases, each departing from one of these three properties.

- Set 2 is the causal relationship \(Y \rightarrow Z\), which is nonlinear.
- Set 3 replaces the Gaussian noise in \(X \rightarrow Y\) with Student-t noise.
- Set 4 replaces the stationary marginal distributions in \(X \rightarrow Y\) with non-stationary ones. In this case we use a polynomial covariance (see Table 1).

These four sets and their properties are summarised in Table 3.

We present results for the Granger causality method obtained with the GCCA toolbox. The test statistic used in the toolbox is the measure of linear feedback introduced by Geweke (1982), as in Eq. 6. The null hypothesis of no causality is tested with an F-test. The results are shown in Figs. 15 and 16.
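For reference, the lag-1 version of Geweke's measure and the associated F statistic can be computed with two least-squares fits, as in the sketch below. It is not the GCCA toolbox and assumes a single lag for both series. In addition to the F statistic, it reports an asymptotic p-value under the null, based on \(n F_{X \rightarrow Y} \sim \chi^2_1\). For one degree of freedom, this p-value reduces to the complementary error function.

```python
import math

import numpy as np


def _rss(target: np.ndarray, regressors: np.ndarray) -> float:
    """Residual sum of squares of an OLS fit with an intercept."""
    X = np.column_stack((np.ones(len(target)), regressors))
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ coef
    return float(resid @ resid)


def geweke_granger(x: np.ndarray, y: np.ndarray) -> tuple[float, float, float]:
    """Lag-1 linear Granger test of x -> y.

    Returns (F_xy, F_stat, p_value):
    - F_xy = ln(RSS_restricted / RSS_full) is Geweke's linear feedback measure;
    - F_stat is the classical F statistic for one restriction;
    - p_value uses the asymptotic chi-square(1) law of n * F_xy under no causality.
    """
    target = y[1:]
    rss_r = _rss(target, y[:-1, None])                       # past of y only
    rss_f = _rss(target, np.column_stack((y[:-1], x[:-1])))  # past of y and past of x
    n = len(target)
    f_xy = math.log(rss_r / rss_f)
    f_stat = (rss_r - rss_f) / (rss_f / (n - 3))             # full model has 3 coefficients
    p_value = math.erfc(math.sqrt(max(n * f_xy, 0.0) / 2.0))  # P(chi2_1 > n * F_xy)
    return f_xy, f_stat, p_value


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    n = 500
    x = rng.standard_normal(n)
    y = np.zeros(n)
    for t in range(1, n):
        y[t] = 0.5 * y[t - 1] + 0.7 * x[t - 1] + rng.standard_normal()
    print("x -> y:", geweke_granger(x, y))  # causal by construction
    print("y -> x:", geweke_granger(y, x))  # no causality by construction
```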

The results for Granger causality can be summarised in two main observations. First, for a strong linear causal relationship, the linear Granger causality test is very robust and practical, even when the noise is not Gaussian or the covariance is not stationary. Second, for nonlinear causality, the linear Granger causality method performs no better than a random guess, regardless of the data size.

How does that compare with our method? Figure 16 shows that for strong, linear causality, our method is not as robust as linear Granger causality and requires a larger sample. However, our method can successfully detect nonlinear causality. For the data with t-distributed noise, we present results for the test statistic calculated by assuming the correctly specified model and using an approximate method (see Footnote 3).

Case Study 2: Transfer Entropy

We use the same data structures as described by Eq. 27 and Table 3. The results are shown in Fig. 17.

Transfer entropy is a popular nonlinear extension of linear Granger causality; for Gaussian variables the two methods are equivalent. It can handle a wider range of data types and relationships, but it is much more difficult to estimate. Compared with our method, transfer entropy requires much larger samples, and it cannot accommodate model structures such as long memory or non-stationarity.

Comparing Figs. 15 and 17 shows that transfer entropy performs worse than our method in each of the four cases, and worse than (linear) Granger causality in three of them. Transfer entropy is better than Granger causality at recognising nonlinear causality. However, it performs noticeably better than a random choice only for samples of size 500.

Although this is not shown in the results, for fairness we note that transfer entropy is much faster than the current implementation of our method.

Case Study 3: ARFIMA Model

The data used for this example were generated according to an ARFIMA(1,d,1) model with external regressors (Eqs. 24–26). This model can be represented in a form emphasising the autoregressive part, which is possible because we restricted the choice of d to (0, 0.5):

We generate the data with a modified version of the MATLAB code ARFIMA-SIM by Fatichi (2009). To fit the ARFIMA model with external regressors, we use the rugarch R library. We present results for nine parameter settings, listed in Table 4.
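The coefficients of the autoregressive form referred to above can be computed numerically. The sketch below assumes the convention \((1-\phi B)(1-B)^d y_t = (1+\theta B)\varepsilon_t\) with \(|\theta| < 1\) and ignores the external regressors. The sign conventions of Eqs. 24–26 may differ, and the function name is hypothetical. The AR(∞) coefficients are \(a_k = -\pi_k\), where \(\pi(B) = (1-\phi B)(1-B)^d/(1+\theta B)\).

```python
import numpy as np


def arfima11_ar_weights(phi: float, d: float, theta: float, n_lags: int) -> np.ndarray:
    """First n_lags AR(infinity) coefficients a_1..a_n of an ARFIMA(1, d, 1) process.

    Assumed convention: (1 - phi B)(1 - B)^d y_t = (1 + theta B) eps_t, |theta| < 1,
    so that y_t = sum_k a_k y_{t-k} + eps_t with a_k = -pi_k.
    """
    m = n_lags + 1
    # coefficients of (1 - B)^d: w_0 = 1, w_k = w_{k-1} (k - 1 - d) / k
    w = np.ones(m)
    for k in range(1, m):
        w[k] = w[k - 1] * (k - 1 - d) / k
    # multiply by (1 - phi B)
    c = w.copy()
    c[1:] -= phi * w[:-1]
    # divide by (1 + theta B): pi_k = c_k - theta * pi_{k-1}
    pi = np.empty(m)
    pi[0] = c[0]
    for k in range(1, m):
        pi[k] = c[k] - theta * pi[k - 1]
    return -pi[1:]


if __name__ == "__main__":
    print(np.round(arfima11_ar_weights(phi=0.5, d=0.45, theta=0.3, n_lags=10), 4))
```

With \(d = 0\) and \(\theta = 0\), the function returns \(\phi\) followed by zeros, which is a quick sanity check of the convention.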

The results obtained with our causality method are shown in Fig. 18. The results obtained with the fully specified likelihood of the ARFIMA model are shown in Fig. 19.

Our method operates on the GP model representation, which is clearly misspecified. However, this does not prevent it from detecting causality even for the smallest samples, of length 20. The same is not true of the well-specified ARFIMA model with estimated likelihood. There, a very large sample is needed for the estimation even to converge, and series of length 1000 are required to obtain results for all 9 data sets.
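The sketch below illustrates the idea of comparing two predictive models on a GP representation. It scores the direction \(X \rightarrow Y\) by the difference between the best log marginal likelihoods of two GPs. One GP uses \((y_{t-1}, x_{t-1})\) as inputs and the other uses \(y_{t-1}\) only. This is a simplified stand-in, not the calibrated test developed in the paper. It assumes a zero-mean GP with an isotropic squared-exponential kernel and picks hyperparameters from a small fixed grid instead of optimising them. It also does not attach a p-value to the score. The grid values and function names are hypothetical.

```python
import itertools

import numpy as np


def log_marginal_likelihood(X, y, lengthscale, signal_var, noise_var):
    """Zero-mean GP log marginal likelihood with a squared-exponential kernel."""
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    K = signal_var * np.exp(-0.5 * sq / lengthscale**2) + noise_var * np.eye(len(y))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))  # K^{-1} y via the Cholesky factor
    return float(-0.5 * y @ alpha - np.log(np.diag(L)).sum() - 0.5 * len(y) * np.log(2 * np.pi))


# Hypothetical hyperparameter grid: (lengthscale, signal variance, noise variance)
GRID = list(itertools.product([0.3, 1.0, 3.0, 10.0], [0.1, 1.0], [0.01, 0.03, 0.1, 0.3, 1.0]))


def best_lml(X, y):
    """Best log marginal likelihood over the grid (a crude substitute for optimisation)."""
    return max(log_marginal_likelihood(X, y, *theta) for theta in GRID)


def gp_causal_score(x, y):
    """Log evidence for x -> y: inputs (y_{t-1}, x_{t-1}) versus y_{t-1} alone."""
    target = y[1:] - y[1:].mean()
    restricted = y[:-1, None]
    full = np.column_stack((y[:-1], x[:-1]))
    return best_lml(full, target) - best_lml(restricted, target)


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    n = 200
    x = rng.standard_normal(n)
    y = np.zeros(n)
    y[1:] = np.sin(2 * x[:-1]) + 0.3 * rng.standard_normal(n - 1)  # nonlinear x -> y
    print("score x -> y:", gp_causal_score(x, y))
    print("score y -> x:", gp_causal_score(y, x))
```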

6 Real Data Experiments

In this section we apply the testing procedures to analyse commodity futures data.

Our analysis uses three sources of data.

- Oil futures contracts with 1 and 36 month expiry. These are obtained from futures curves built on West Texas Intermediate (WTI) crude oil futures prices traded on the New York Mercantile Exchange, as described by Ames et al. (2016).
- The currency level, captured by the US Dollar Index (DXY). DXY is constructed as an index of the USD relative to EUR, JPY, GBP, CAD, SEK and CHF.
- A widely used proxy for convenience yield, based on a component related to transportation expense. This component is the cost of freighting and short-term storage, measured by the Baltic Dry Index (BDI), see Ames et al. (2016).

Commodity futures contracts of different maturities (the term structure) are linked by a stochastic functional relationship involving the spot price, convenience yield, interest rate and dollar value. Convenience yield is very hard to model, but it can be captured to some extent by BDI. The interest rate can be proxied by the time value of money expressed by the futures contracts, which is why we use both long- and short-dated futures contracts. Figure 20 shows the four covariates from 17th Jan 1990 to 23rd Dec 2015. For literature on the classical relationships between these series, we refer to Ames et al. (2016), Bakshi et al. (2010) and Dempster et al. (2012).

6.1 Interpreting Causal Relationships

This study uses causality testing to highlight the risk factors that investors should consider in their decision process. It also shows how speculators in currency and futures markets tend to respond to information observed at different lags. Finally, it indicates how long it takes them to readjust their expectations for futures hedging or speculation in light of this information.

Figures 21–24 present the changing significance of causal relationships between 17th Jan 1990 and 23rd Dec 2015. The four pairs we examine, with the abbreviations used, are:

- 1 month oil futures (1m WTI) and the freighting/storage index (BDI);
- 36 month oil futures (36m WTI) and BDI;
- 1m WTI and the dollar index (DXY);
- 36m WTI and DXY.

We present causal reactions at two lags: one week, which can be seen as nearly instantaneous, and eight weeks. The charts in Figs. 21–24 are smoothed with cubic splines, which makes the main trends easier to see, in particular for the 8-week lag.
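The rolling-window analysis can be sketched as follows. The sketch makes three assumptions of its own:

- the data are weekly series in NumPy arrays, analysed in a 104-week rolling window, as stated in the caption for 1m WTI and BDI;
- a lag of \(k\) weeks means adding \(x_{t-k}\) to an AR(1) regression for \(y_t\);
- a lag-specific linear Granger test with a \(\chi^2_1\) approximation replaces the GP-based test of the paper.

The resulting \(1-p\) series could then be smoothed, for example with `scipy.interpolate.make_smoothing_spline`, which the sketch does not call. The synthetic data are illustrative only.

```python
import math

import numpy as np


def lagged_granger_pvalue(x: np.ndarray, y: np.ndarray, lag: int) -> float:
    """p-value for adding x_{t-lag} to an AR(1) regression of y_t (chi-square(1) approximation)."""
    t = np.arange(max(lag, 1), len(y))
    target = y[t]
    base = np.column_stack((np.ones(len(t)), y[t - 1]))  # intercept and y_{t-1}
    full = np.column_stack((base, x[t - lag]))           # ... plus x_{t-lag}
    rss = []
    for X in (base, full):
        coef, *_ = np.linalg.lstsq(X, target, rcond=None)
        r = target - X @ coef
        rss.append(float(r @ r))
    stat = len(t) * max(math.log(rss[0] / rss[1]), 0.0)  # likelihood ratio statistic
    return math.erfc(math.sqrt(stat / 2.0))              # P(chi2_1 > stat)


def rolling_one_minus_p(x: np.ndarray, y: np.ndarray, lag: int, window: int = 104) -> np.ndarray:
    """1 - p-values for each window of `window` weeks, ending at successive weeks."""
    return np.array([
        1.0 - lagged_granger_pvalue(x[end - window:end], y[end - window:end], lag)
        for end in range(window, len(y) + 1)
    ])


if __name__ == "__main__":
    rng = np.random.default_rng(5)
    n = 520  # ten years of weekly data
    x = rng.standard_normal(n)
    y = np.zeros(n)
    for t in range(8, n):
        b = 0.0 if t < n // 2 else 0.8  # causal effect switches on halfway (a "regime change")
        y[t] = 0.3 * y[t - 1] + b * x[t - 8] + rng.standard_normal()
    series = rolling_one_minus_p(x, y, lag=8)
    print("mean 1-p, first 100 windows:", series[:100].mean())
    print("mean 1-p, last 100 windows:", series[-100:].mean())
```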

According to the efficient market hypothesis (see Footnote 4), to which we subscribe (Fama (1970); Fama and French (1988); Campbell and Shiller (1988); Campbell et al. (1997); Malkiel (2003)), markets learn from the news and incorporate it into prices. We want to learn which variables affect price formation, and at what time horizon. We also want to account for the different investor profiles of the three classes of investment (oil futures, currencies, physicals), which lead us to expect differences in the type and speed of reaction. Finally, we ask whether the results confirm the intuition that regimes affect the direction and significance of causal influence.

Evolution of the causal influence: \(1 - p\)-values of the test statistic for 1m WTI and BDI, with 1 lag (top subplot) and 8 lags (middle subplot). The values are computed over a rolling window of 104 weeks, with cubic spline smoothing. The bottom subplot shows prices of 1 month oil futures contracts and historical values of the BDI index.