Abstract

We formulate and prove Cutkosky’s Theorem regarding the discontinuity of Feynman integrals in the massive one-loop case up to the involved intersection index. This is done by applying the techniques for treating singular integrals developed in Fotiadi et al. (Topology 4(2):159–191, 1965). We write one-loop integrals as an integral of a holomorphic family of holomorphic forms over a compact cycle. Then, we determine at which points simple pinches occur and explicitly compute a representative of the corresponding vanishing sphere. This also yields an algorithm to compute the Landau surface of a one-loop graph without explicitly solving the Landau equations. We also discuss the bubble, triangle and box graphs in detail.


1 Introduction

One of the primary tasks in perturbative quantum field theory is the computation of Feynman integrals. These are integrals associated with graphs, essentially given by quadratic functions

\[Q_e(p,m):({\mathbb {C}}^{D})^L\rightarrow {\mathbb {C}},\qquad e\in \{1,\ldots ,n\},\tag{1}\]

with n the number of edges and L the number of independent cycles of the underlying graph, which themselves depend (quadratically) on physical parameters (the external momenta p and masses m). The integrals under consideration are then of the form
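
\[\int _{(i{\mathbb {R}}\times {\mathbb {R}}^{D-1})^L}\frac{\textrm{d}^{LD}k}{Q_1(p,m)(k)^{\lambda _1}\cdots Q_n(p,m)(k)^{\lambda _n}}\tag{2}\]
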
and, if they are well-defined at all,Footnote 1 define functions of the parameters p and m. Even in simple cases, it is unfortunately very difficult to express such an integral in terms of well-understood mathematical functions, though it is sometimes possible (see for example the database Loopedia [5] for a collection of available results or the articles [2, 20] for some calculations in action). Therefore, there is a growing need to understand the properties of functions defined by Feynman integrals in their own right. To this end, the benefits of considering a Feynman integral as a function of general complex momenta instead of just physical Minkowski momenta (living in \(i{\mathbb {R}}\times {\mathbb {R}}^{D-1}\) in our setup) were recognized long ago. Among many other advantages, this allows us to apply the plethora of elegant techniques from complex analysis to the problem.
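For readers who want a concrete handle on the quadrics \(Q_e\), the following Python sketch builds them for a one-loop graph with n edges. It rests on assumptions that this section does not fix. Edge e is taken to carry the momentum \(k+q_e\) with \(q_e=p_1+\cdots +p_e\), so that \(q_n=0\) by momentum conservation. The quadrics are taken to be \(Q_e(p,m)(k)=(k+q_e)\cdot (k+q_e)+m_e^2\), where \(\cdot \) is the complex-bilinear extension of the Euclidean dot product. All helper names are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = Sequence[complex]


def dot(u: Vector, v: Vector) -> complex:
    """Complex-bilinear (not Hermitian) pairing on C^D."""
    return sum(a * b for a, b in zip(u, v))


def make_quadric(q: Vector, m: float) -> Callable[[Vector], complex]:
    """Return k -> (k + q).(k + q) + m^2 for one fixed shift q and mass m."""
    def Q(k: Vector) -> complex:
        shifted = [ki + qi for ki, qi in zip(k, q)]
        return dot(shifted, shifted) + m * m
    return Q


def one_loop_quadrics(external: List[Vector],
                      masses: List[float]) -> List[Callable[[Vector], complex]]:
    """Quadrics Q_1, ..., Q_n of a one-loop graph with n edges.

    Hypothetical momentum routing: edge e carries k + q_e with
    q_e = p_1 + ... + p_e (so q_n = 0 if the external momenta sum to zero).
    """
    if len(external) != len(masses):
        raise ValueError("need one external momentum and one mass per edge")
    quadrics = []
    q = [0j] * len(external[0])
    for p, m in zip(external, masses):
        q = [qi + pi for qi, pi in zip(q, p)]  # running sum q_e
        quadrics.append(make_quadric(list(q), m))
    return quadrics


# Bubble graph in D = 2 with p_1 = -p_2 = (1, 0) and unit masses.
Q1, Q2 = one_loop_quadrics([(1, 0), (-1, 0)], [1.0, 1.0])
print(Q1((0, 0)) == 2, Q2((0, 0)) == 1)  # True True
print(Q2((2j, 0)) == -3)                 # True: k in iR x R^{D-1} has a Minkowski square
```

With this convention, a vector \(k=(ik_0,{\vec{k}})\) with real \(k_0\) satisfies \(k\cdot k=-k_0^2+{\vec{k}}^2\). This is why \(i{\mathbb {R}}\times {\mathbb {R}}^{D-1}\) carries the Minkowski momenta in this setup.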

We leave aside the problem of renormalization, which we conveniently sidestep in this work by using analytic regulators \(\lambda _1,\ldots ,\lambda _n\in {\mathbb {C}}\). This problem arises because the integral (2) might be ill-defined for every value of (p, m), even when integrating over \(({\mathbb {R}}^D)^L\) instead of \((i{\mathbb {R}}\times {\mathbb {R}}^{D-1})^L\). Apart from this, the expression (2) certainly does not make sense for all possible complex values of (p, m). Keeping the masses m fixed and positive (as we do throughout this text), we can, however, find an open neighborhood U in the complex space of external momenta on which the expression is well-defined and in fact yields a holomorphic function. This immediately raises the question along which paths this function can be analytically continued and what the result of such a continuation is. In other words, we seek to understand the analytic structure of functions defined by Feynman integrals.

To answer questions of this nature, the authors of [8] developed a framework, later expanded by Pham in [18, 19], to deal with integrals of the form

\[\int _{\Gamma }\omega (t).\tag{3}\]

This is to be understood as follows: Suppose X and T are two complex analytic manifolds. Denote by \(\pi :X\times T\twoheadrightarrow T\) the canonical projection. We assume there is an analytic subset \(S\subset X\times T\) such that every fiber \(S_t:=\pi ^{-1}(t)\cap S\) (naturally viewed as a subset of X) is a codimension 1 analytic subset of X. We take \(\omega (t)\) to be a holomorphic n-form on \(X\backslash S_t\) that depends holomorphically on \(t\in T\) (a notion which we define precisely in Sect. 2), and \(\Gamma \) to be a compact n-cycle in \(X\backslash S_{t_0}\) for some fixed \(t_0\in T\). The authors of [8] showed that, under suitable assumptions, the integral (3) defines a holomorphic function in an open neighborhood of \(t_0\). This function can be analytically continued along any path in T that does not meet a certain analytic subset \(L\subset T\) of codimension 1, called the Landau surface of the integral (3). The discontinuity of such a function along a simple loop around a point \(t\in L\) of codimension 1, evaluated at a point \(t'\in T\backslash L\) close to t, can then be computed as
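
\[\text {Disc}\int _{\Gamma }\omega (t')=N\int _{{\tilde{e}}}\omega (t')=N(2\pi i)^m\int _{e}\text {Res}^m\omega (t')\tag{4}\]
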
where \({\tilde{e}}\) (resp. e) is an n-cycle in \(X\backslash S_{t'}\) (resp. (\(n-m\))-cycle in \(S_{t'}\)) called the vanishing sphere (resp. vanishing cycle) which can be computed by means of the local geometry of \(S_{t'}\) alone and \(\text {Res}^m\) is the iterated Leray residue. Here, N is an integer determined by the intersection index of the integration cycle at \(t'\) with a homology class in X relative to \(S_{t'}\) associated with \({\tilde{e}}\) and e.
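In the simplest case of a single simple pole (one variable, m = 1), the passage from the integral over the vanishing sphere to the Leray residue reduces to Cauchy's formula \(\oint _{|x-t|=\varepsilon }\frac{g(x)}{x-t}\,\textrm{d}x=2\pi i\,g(t)\). Here the vanishing sphere is a small circle around the point \(S_{t'}=\{t\}\), and the Leray residue of \(g(x)\,\textrm{d}x/(x-t)\) is g(t). The following Python sketch checks this numerically. The choice g = exp, the point t and the radius are hypothetical illustration data.

```python
import cmath
import math
from typing import Callable


def circle_integral(f: Callable[[complex], complex], center: complex,
                    radius: float, samples: int = 512) -> complex:
    """Trapezoidal approximation of the counterclockwise integral of f(x) dx over |x - center| = radius.

    The trapezoidal rule in the angle converges very fast for integrands
    that are analytic in a neighborhood of the circle.
    """
    h = 2 * math.pi / samples
    total = 0j
    for j in range(samples):
        w = radius * cmath.exp(1j * j * h)   # x - center on the circle
        total += f(center + w) * 1j * w      # dx/dtheta = i (x - center)
    return total * h


# Hypothetical data: g = exp, singular point t, small circle of radius eps around it.
g = cmath.exp
t = 0.3 + 0.2j
eps = 0.1
lhs = circle_integral(lambda x: g(x) / (x - t), t, eps)  # integral over the small circle
rhs = 2j * math.pi * g(t)                                 # 2 pi i times the residue g(t)
print(abs(lhs - rhs) < 1e-10)                             # True
```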

We want to apply this program to a class of simple integrals of the form (2), namely integrals associated with one-loop Feynman graphs. The goal is to prove Cutkosky’s Theorem, first stated in [7], in this case. It describes the discontinuity of functions defined by Feynman integrals in terms of simpler integrals. Suppose we consider the case \(\lambda _1=\cdots =\lambda _n=1\). Then, conjecturally the discontinuity along certain loops around a problematic point p in Minkowski space evaluated at points \(p'\) close to p is given by the formula

with C a certain subset of edges. Here, the integration over \(\delta _+(Q_e(p',m)(k))\) means integrating the residue of \(Q_e(p',m)\) along its positive energy part. Unfortunately, this is not yet a mathematically precise statement, and correspondingly there does not seem to be a rigorous proof of it anywhere in the literature. The only attempt the author is aware of is the paper [4] by Bloch and Kreimer, which is still work in progress and has not been published yet. That paper does not discuss the compactification of the integration cycle and the ambient space, which is necessary to apply the techniques from [8]. But even in the one-loop case, where this task does not immediately lead to substantial problems, achieving this compactification in a satisfying way is by no means trivial, as is discussed extensively here. It is far from obvious how this can be done in the multi-loop case, if it can be done at all. Furthermore, the discussion in [4] chooses a kinematic configuration of Minkowski momenta as the starting point for an analytic continuation. From the perspective of physics, this might be more satisfying than starting at Euclidean momenta (as is done here). However, potentially severe mathematical issues arise from the Minkowski setup: To move the poles of the propagators off the integration domain, the \(i\epsilon \)-prescription must be employed. But “at infinity,” the \(i\epsilon \)-term vanishes, so any naive compactification fails immediately and something more has to be done.

It should also be mentioned that [7] discusses the general case of discontinuities across arbitrary branch cuts (equation (6) in the paper in question), which we aim to prove in this work. The paper also contains a separate discussion of a special case (equation (17) in [7]).Footnote 2 This latter case is the one predominantly used in physics. It concerns the discontinuity along so-called normal thresholds, which arise from sets of edges whose removal results in a graph with two connected components. It is used to compute the imaginary part of transition amplitudes and can also be derived from more physical considerations relating to the S-matrix.

The plan of this paper is as follows: First, we modify Feynman integrals to fit the form (3). The main obstacle is the non-compactness of the integration domain and of the complex ambient space \({\mathbb {C}}^{LD}\) it lives in. From this new representation of one-loop Feynman integrals, we derive the well-known fact that the Landau surface L is given by the Landau equations.Footnote 3 We then establish that, outside of a small set of pathological external momenta, the integral is well-behaved enough for the techniques above to apply (more precisely: all relevant pinches are simple pinches). Therefore, the vanishing sphere and cell are defined, and we compute them explicitly. Finally, putting all these ingredients together, we prove Cutkosky’s Theorem for one-loop graphs up to the yet undetermined intersection index. The computation of the latter is postponed to the second part of this work, as it involves techniques quite different from the ones employed here.

The paper has the following structure: In Sect. 2, we review the concepts and techniques needed to state and prove our version of Cutkosky’s Theorem. This includes remarks on real and complex projective space (Sect. 2.1), some basics from the theory of sheaves (Sect. 2.2) and the theory of singular integrals as developed by Pham et al. (Sect. 2.3). The theory of Feynman integrals is briefly reviewed in Sect. 3. The subsequent Sect. 4 contains the main part of this paper, leading to a statement and proof of Cutkosky’s Theorem for one-loop graphs. The proof also yields an algorithm to compute the Landau surface without solving the Landau equations explicitly. To the author’s knowledge, this algorithm is new. After the results for general one-loop graphs are established, we look at two advanced examples, the triangle and the box graph, in detail in Sect. 5. In the concluding Sect. 6, we comment on the relevance of these results and on ideas for future continuation of this work.

2 Preliminaries

Before diving into the details regarding Feynman integrals and Cutkosky’s Theorem, we introduce some amount of known theory for the convenience of the reader. In Sect. 2.1, we start with some elementary properties of real (resp. complex) projective space viewed as a real analytic (resp. complex analytic) manifold. After that, we recap some basics from the theory of sheaves, in particular the theory of local systems, in Sect. 2.2. These provide a convenient language to talk about the theory of singular integrals as initiated in [8] and developed further by Pham in [18, 19], which we review in some detail in Sect. 2.3.

2.1 Some aspects of projective space

A considerable amount of this work’s content relies on various properties of the real and complex projective spaces \({\mathbb {R}}{\mathbb {P}}^n\) and \({\mathbb {C}}{\mathbb {P}}^n\). Therefore, in this subsection we recall some of their properties that we make use of frequently throughout this work.

Let \({\mathbb {K}}\in \{{\mathbb {R}},{\mathbb {C}}\}\). First, recall that the n-dimensional projective space \({\mathbb {K}}{\mathbb {P}}^n\) viewed as a set can be defined as the quotient of \({\mathbb {K}}^{n+1}\backslash \{0\}\) by the equivalence relation
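
\[x\sim y\quad :\Longleftrightarrow \quad \exists \,\lambda \in {\mathbb {K}}^\times :\; x=\lambda y.\tag{6}\]
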
The equivalence class of any \(x=(x_0,\ldots ,x_n)\in {\mathbb {K}}^{n+1}\backslash \{0\}\) with respect to this equivalence relation is denoted by \([x]=[x_0:\cdots :x_n]\). The quotient space comes with a natural projection
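
\[\pi _{\mathbb {K}}:{\mathbb {K}}^{n+1}\backslash \{0\}\twoheadrightarrow {\mathbb {K}}{\mathbb {P}}^n,\quad x\mapsto [x].\tag{7}\]
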
As a topological space \({\mathbb {K}}{\mathbb {P}}^n\) is equipped with the induced quotient topology. Additionally, \({\mathbb {R}}{\mathbb {P}}^n\) is a real analytic manifold of dimension n and \({\mathbb {C}}{\mathbb {P}}^n\) is a complex analytic manifold of (complex) dimension n. For convenience of language, we simply use the term \({\mathbb {K}}\)-analytic to mean real analytic if \({\mathbb {K}}={\mathbb {R}}\) and complex analytic if \({\mathbb {K}}={\mathbb {C}}\). Both \({\mathbb {R}}{\mathbb {P}}^n\) and \({\mathbb {C}}{\mathbb {P}}^n\) can be covered by \(n+1\) charts, namely
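
\[U_{i,{\mathbb {K}}}:=\{[x]\in {\mathbb {K}}{\mathbb {P}}^n : x_i\ne 0\},\qquad \varphi _{i,{\mathbb {K}}}:U_{i,{\mathbb {K}}}\rightarrow {\mathbb {K}}^n,\quad [x]\mapsto \Big (\frac{x_0}{x_i},\ldots ,\frac{x_{i-1}}{x_i},\frac{x_{i+1}}{x_i},\ldots ,\frac{x_n}{x_i}\Big )\tag{8}\]
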
for \(i\in \{0,\ldots ,n\}\). The transition maps are \({\mathbb {K}}\)-analytic, and the projection \(\pi _{\mathbb {K}}\) is \({\mathbb {K}}\)-analytic with respect to the induced manifold structure. While \({\mathbb {C}}{\mathbb {P}}^n\) is always orientable (as it is a complex manifold), the manifold \({\mathbb {R}}{\mathbb {P}}^n\) is orientable if and only if n is odd. We denote
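
\[H_{\infty ,{\mathbb {K}}}:={\mathbb {K}}{\mathbb {P}}^n\backslash U_{0,{\mathbb {K}}}=\{[x]\in {\mathbb {K}}{\mathbb {P}}^n : x_0=0\}\tag{9}\]
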
for \({\mathbb {K}}\in \{{\mathbb {R}},{\mathbb {C}}\}\) which we call the hyperplane at infinity.Footnote 4 The reason for this terminology is the fact that \({\mathbb {K}}^n\) embeds into \({\mathbb {K}}{\mathbb {P}}^n\) via the inclusion
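
\[i:{\mathbb {K}}^n\hookrightarrow {\mathbb {K}}{\mathbb {P}}^n,\quad (z_1,\ldots ,z_n)\mapsto [1:z_1:\cdots :z_n].\tag{10}\]
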
This defines a diffeomorphism (for \({\mathbb {K}}={\mathbb {R}}\)) or a biholomorphic map (for \({\mathbb {K}}={\mathbb {C}}\)) \({\mathbb {K}}^n\overset{\sim }{\rightarrow }{\mathbb {K}}{\mathbb {P}}^n\backslash H_\infty =U_{0,{\mathbb {K}}}\) with inverse \(\varphi _{0,{\mathbb {K}}}\). The points of \(H_\infty \), which are not in the image of i, can be thought of as additional points added “at infinity”. If it is clear from context whether we mean \({\mathbb {K}}={\mathbb {R}}\) or \({\mathbb {K}}={\mathbb {C}}\), we simply write \(\varphi _i:=\varphi _{i,{\mathbb {K}}}\), \(H_\infty :=H_{\infty ,{\mathbb {K}}}\) and so on.
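The charts \(\varphi _i\), the inclusion i and the hyperplane at infinity are easy to play with on homogeneous representatives. The following Python sketch works with tuples \((x_0,\ldots ,x_n)\) standing for \([x_0:\cdots :x_n]\). It checks that \(\varphi _0\) inverts i and that \(\varphi _0\) does not depend on the chosen representative. The function names are hypothetical.

```python
import cmath
from typing import Sequence, Tuple

Point = Tuple[complex, ...]


def chart(i: int, x: Sequence[complex]) -> Point:
    """phi_i on U_i = {x_i != 0}: [x_0 : ... : x_n] -> (x_j / x_i)_{j != i}."""
    if x[i] == 0:
        raise ValueError(f"[x] is not in the chart domain U_{i}")
    return tuple(xj / x[i] for j, xj in enumerate(x) if j != i)


def include(z: Sequence[complex]) -> Point:
    """i: K^n -> KP^n, returned as the representative (1, z_1, ..., z_n)."""
    return (1,) + tuple(z)


def at_infinity(x: Sequence[complex]) -> bool:
    """Membership of [x] in the hyperplane at infinity H = {x_0 = 0}."""
    return x[0] == 0


def same_point(x: Sequence[complex], y: Sequence[complex]) -> bool:
    """[x] == [y] iff x and y are proportional, i.e. all 2x2 minors vanish."""
    return all(cmath.isclose(x[a] * y[b], x[b] * y[a], abs_tol=1e-12)
               for a in range(len(x)) for b in range(a + 1, len(x)))


z = (2.0, 3.0)
print(chart(0, include(z)) == z)               # True: phi_0 inverts i
w = chart(0, (4j, 8j, 12j))                    # a different representative of [1 : 2 : 3]
print(all(cmath.isclose(a, b) for a, b in zip(w, (2, 3))))  # True
print(at_infinity((0, 1, 5)), at_infinity(include(z)))      # True False
print(same_point((1, 2, 3), (-2, -4, -6)))     # True
```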

It is often useful to work in \({\mathbb {K}}^{n+1}\backslash \{0\}\) instead of \({\mathbb {K}}{\mathbb {P}}^n\) and then infer the desired results by passing to the quotient using the projection \(\pi _{\mathbb {K}}\). The following useful proposition is an example of a result which allows such an inference.

Proposition 1

Let \(f:{\mathbb {K}}^{n+1}\backslash \{0\}\rightarrow {\mathbb {K}}\) be a \({\mathbb {K}}\)-analytic, homogeneous function and \(p\in {\mathbb {K}}\) a regular value of f. Then, \(\pi _{\mathbb {K}}(f^{-1}(p))\) is a \({\mathbb {K}}\)-analytic submanifold of \({\mathbb {K}}{\mathbb {P}}^n\).

Proof

The Lie group \({\mathbb {K}}^\times \) acts on \({\mathbb {K}}^{n+1}\backslash \{0\}\) by sending x to \(\lambda x\) for every \(x\in {\mathbb {K}}^{n+1}\backslash \{0\}\) and \(\lambda \in {\mathbb {K}}^\times \). It is not difficult to see that this action is \({\mathbb {K}}\)-analytic, free and proper. Thus, by the Quotient Manifold Theorem, we see that the quotient of \(f^{-1}(p)\) (which is a \({\mathbb {K}}\)-analytic manifold by the Regular Value Theorem) by this action is a \({\mathbb {K}}\)-analytic manifold itself. \(\square \)
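A typical input for Proposition 1 is a nondegenerate quadric with p = 0. Homogeneity of degree d gives \(f(\lambda x)=\lambda ^d f(x)\), so the zero set \(f^{-1}(0)\) is a union of punctured lines through the origin and is invariant under the \({\mathbb {K}}^\times \)-action. For \(f(x)=x^TAx\) with invertible A, the gradient 2Ax vanishes only at x = 0, so 0 is a regular value on \({\mathbb {K}}^{n+1}\backslash \{0\}\). The following Python sketch checks these facts numerically for a hypothetical choice of A.

```python
import cmath
import math
from typing import Sequence, Tuple

# Hypothetical example: the nondegenerate quadric f(x) = x0^2 - x1^2 - x2^2 on C^3.
A = ((1, 0, 0), (0, -1, 0), (0, 0, -1))


def f(x: Sequence[complex]) -> complex:
    """Homogeneous polynomial of degree 2: f(x) = x^T A x."""
    return sum(A[i][j] * x[i] * x[j] for i in range(3) for j in range(3))


def grad_f(x: Sequence[complex]) -> Tuple[complex, ...]:
    """Gradient 2 A x; it vanishes only at x = 0 because A is invertible."""
    return tuple(2 * sum(A[i][j] * x[j] for j in range(3)) for i in range(3))


def scale(lam: complex, x: Sequence[complex]) -> Tuple[complex, ...]:
    """The K^x action x -> lam * x."""
    return tuple(lam * xi for xi in x)


theta = 0.7
x = (1.0, math.cos(theta), math.sin(theta))   # a point of f^{-1}(0)
y = scale(2 - 3j, x)                          # another point on the same complex line
print(abs(f(x)) < 1e-12, abs(f(y)) < 1e-12)   # True True: the zero set is C^x-invariant
print(cmath.isclose(f(scale(2 - 3j, (1, 2, 0))), (2 - 3j) ** 2 * f((1, 2, 0))))  # True: degree 2
print(any(abs(g) > 0 for g in grad_f(y)))     # True: y is a regular point of f
```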

For our purposes, we need the pull-back of the canonical n-form \(d^nz\) on \({\mathbb {C}}^n\) by \(\varphi _{0,{\mathbb {C}}}\). This is computed in the following

Lemma 2

We have
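
\[\pi _{\mathbb {C}}^*\varphi _{0,{\mathbb {C}}}^*\,\textrm{d}^nz=\frac{1}{x_0^{n+1}}\sum _{i=0}^n(-1)^i x_i\,\textrm{d}x_0\wedge \cdots \wedge \widehat{\textrm{d}x_i}\wedge \cdots \wedge \textrm{d}x_n\tag{11}\]

on \(\pi _{\mathbb {C}}^{-1}(U_{0,{\mathbb {C}}})\), where the hat indicates that the factor \(\textrm{d}x_i\) is omitted.
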
Proof

Obtained by a straightforward calculation. \(\square \)
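For n = 1, the calculation behind Lemma 2 fits on one line. The following worked case is a sketch in which we pull back further along \(\pi _{\mathbb {C}}\) to \({\mathbb {C}}^2\backslash \{0\}\), so that homogeneous coordinates can be used. It exhibits the pole of order n + 1 = 2 along \(H_\infty =\{x_0=0\}\):

```latex
\pi_{\mathbb{C}}^{*}\varphi_{0,\mathbb{C}}^{*}\,\mathrm{d}z
  = \mathrm{d}\Big(\frac{x_1}{x_0}\Big)
  = \frac{\mathrm{d}x_1}{x_0}-\frac{x_1\,\mathrm{d}x_0}{x_0^{2}}
  = \frac{x_0\,\mathrm{d}x_1-x_1\,\mathrm{d}x_0}{x_0^{2}}
```

On \(\{x_0=0\}\), the numerator restricts to \(-x_1\,\textrm{d}x_0\) with \(x_1\ne 0\), so the pole is genuine and not an artifact of the coordinates.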

Note that (11) extends to \({\mathbb {C}}{\mathbb {P}}^n\) only as a meromorphic n-form. Indeed, the pullback of \(\textrm{d}^nz\) by \(\varphi _{0,{\mathbb {C}}}\) cannot be extended to a holomorphic form. For odd n, the manifold \({\mathbb {R}}{\mathbb {P}}^n\) is orientable and hence a compact n-cycle in \({\mathbb {C}}{\mathbb {P}}^n\), so integrating a holomorphic n-form over it would yield a finite result. But removing the set \(H_\infty \) of measure zero from \({\mathbb {R}}{\mathbb {P}}^n\) and choosing inhomogeneous coordinates yields \(\int _{{\mathbb {R}}{\mathbb {P}}^n-H_\infty }\varphi _{0,{\mathbb {C}}}^*\textrm{d}^nz=\int _{{\mathbb {R}}^n}\textrm{d}^nz=\infty \), which is a contradiction.

2.2 Some basics on sheaves and monodromy

The central aim of this work is to understand the multivaluedness of holomorphic functions defined by Feynman integrals. The theory of sheaves, particularly the theory of local systems, provides a convenient framework to formulate and investigate such questions. We start by recalling some of the basic notions of sheaf theory together with the theorems that are relevant to us. Everything contained in this subsection is rather elementary and well-understood. All statements presented here can be found in standard textbooks on the subject, and we recommend [22] or [13]. For details on local systems in particular, the reader is referred to [28]. But since the objects of investigation in this text are Feynman integrals which are mainly studied by physicists, the author decided to include this material for the convenience of the reader.

First, recall that a partially ordered set or poset \((X,\prec )\) is a set X together with a relation \(\prec \subset X\times X\) on X such that \(x\prec x\) as well as \(x\prec y\wedge y\prec x\;\Rightarrow \; x=y\) and \(x\prec y\wedge y\prec z\;\Rightarrow \;x\prec z\) for all \(x,y,z\in X\) (i.e., \(\prec \) is reflexive, anti-symmetric and transitive). To any poset \((X,\prec )\) we can associate a category. Its objects are the elements of X, and for any \(x,y\in X\) the set of morphisms \(\text {Hom}(x,y)\) contains one element if \(x\prec y\) and is empty otherwise. For any topological space X, the open subsets of X form a poset with respect to inclusion. The associated category of this poset is denoted by \(\text {Ouv}_X\). It can be thought of as a subcategory of \({\textbf {Top}}\), the category of topological spaces and continuous maps, with objects the open subsets U of X and morphisms the natural inclusion maps. A pre-sheaf on X with values in a category \({\mathcal {C}}\) is a functor \({\mathcal {F}}:\text {Ouv}_X^\text {op}\rightarrow {\mathcal {C}}\), where \({\mathcal {D}}^\text {op}\) denotes the opposite category of \({\mathcal {D}}\) for any category \({\mathcal {D}}\). For any two open sets \(V\subset U\) with \(i:V\hookrightarrow U\) the natural inclusion and any \(s\in {\mathcal {F}}(U)\), it is customary to denote \(s\vert _V:={\mathcal {F}}(i)(s)\), called the restriction of s to V. A morphism of pre-sheaves is just a natural transformation between the functors. The stalk \({\mathcal {F}}_x\) of \({\mathcal {F}}\) at \(x\in X\) is

\[{\mathcal {F}}_x:=\varinjlim _{U\ni x}{\mathcal {F}}(U),\tag{12}\]

where the limit is over all open neighborhoods U of x. The elements of \({\mathcal {F}}_x\) are called germs of \({\mathcal {F}}\) at x. Given an element \(s\in {\mathcal {F}}(U)\) for an open neighborhood U of x, we can thus speak of the germ of s at x. A pre-sheaf \({\mathcal {F}}\) on X is called a sheaf on X if for every open set \(U\subset X\) and every open cover \(\{U_i\}_{i\in I}\) of U the diagram
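
\[{\mathcal {F}}(U)\xrightarrow {\;\prod _{i\in I}{\mathcal {F}}(\tau _i)\;}\prod _{i\in I}{\mathcal {F}}(U_i)\;\overset{\prod _{i,j}{\mathcal {F}}(\tau ^i_j)}{\underset{\prod _{i,j}{\mathcal {F}}(\tau ^j_i)}{\rightrightarrows }}\;\prod _{i,j\in I}{\mathcal {F}}(U_i\cap U_j)\tag{13}\]
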
is an equaliser diagram. Here, \(\tau _i:U_i\hookrightarrow U\) and \(\tau ^i_j:U_i\cap U_j\hookrightarrow U_i\) are the natural inclusions. Recall that this means that \(\prod _{i\in I}{\mathcal {F}}(\tau _i)\) is injective and that
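
\[\text {im}\prod _{i\in I}{\mathcal {F}}(\tau _i)=\Big \{(s_i)_{i\in I}\in \prod _{i\in I}{\mathcal {F}}(U_i)\;:\;{\mathcal {F}}(\tau ^i_j)(s_i)={\mathcal {F}}(\tau ^j_i)(s_j)\text { for all }i,j\in I\Big \}.\tag{14}\]
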
More concretely, this means two things: First, for any open covering \(\{U_i\}_{i\in I}\) of an open set U, if \(s,t\in {\mathcal {F}}(U)\) satisfy \(s\vert _{U_i}=t\vert _{U_i}\) for all \(i\in I\) we have \(s=t\) (Locality). Second, if \(\{s_i\}_{i\in I}\) are elements \(s_i\in {\mathcal {F}}(U_i)\) such that \(s_i\vert _{U_i\cap U_j}=s_j\vert _{U_i\cap U_j}\) for all \(i,j\in I\), there exists a section \(s\in {\mathcal {F}}(U)\) such that \(s\vert _{U_i}=s_i\) for all \(i\in I\) (Gluing). For any open set \(U\subset X\), the elements of \({\mathcal {F}}(U)\) are called the (local) sections over U. In particular, the elements of \({\mathcal {F}}(X)\) are called global sections. A morphism of sheaves is a morphism of the underlying pre-sheaves.
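Locality and gluing can be checked by brute force in a finite toy case. The following Python sketch uses the sheaf of all {0, 1}-valued functions, where the restriction maps are literal restrictions of functions. It verifies both conditions for one cover of a three-point set. The example space, the cover and all helper names are hypothetical.

```python
from itertools import product
from typing import Dict, FrozenSet, List, Optional

Open = FrozenSet[int]
Section = Dict[int, int]  # a function U -> {0, 1}


def sections(U: Open) -> List[Section]:
    """F(U): all functions from U to {0, 1}."""
    pts = sorted(U)
    return [dict(zip(pts, vals)) for vals in product((0, 1), repeat=len(pts))]


def restrict(s: Section, V: Open) -> Section:
    """Restriction map F(U) -> F(V) for V contained in U."""
    return {x: s[x] for x in V}


def glue(cover: List[Open], family: List[Section]) -> Optional[Section]:
    """Glue s_i in F(U_i) to a section over the union; None if not compatible on overlaps."""
    for (Ui, si), (Uj, sj) in product(list(zip(cover, family)), repeat=2):
        overlap = Ui & Uj
        if restrict(si, overlap) != restrict(sj, overlap):
            return None
    glued: Section = {}
    for s in family:
        glued.update(s)
    return glued


def check_sheaf_axioms(U: Open, cover: List[Open]) -> bool:
    """Brute-force check of locality and gluing for F on one cover of U."""
    if frozenset().union(*cover) != U:
        raise ValueError("not a cover of U")
    # Locality: a section over U is determined by its restrictions to the U_i.
    images = [tuple(tuple(sorted(restrict(s, Ui).items())) for Ui in cover)
              for s in sections(U)]
    if len(set(images)) != len(images):
        return False
    # Gluing: every compatible family is the family of restrictions of a section over U.
    for family in product(*(sections(Ui) for Ui in cover)):
        glued = glue(cover, list(family))
        if glued is not None and [restrict(glued, Ui) for Ui in cover] != list(family):
            return False
    return True


U = frozenset({1, 2, 3})
cover = [frozenset({1, 2}), frozenset({2, 3})]
print(check_sheaf_axioms(U, cover))               # True
print(glue(cover, [{1: 0, 2: 0}, {2: 1, 3: 1}]))  # None: the sections disagree on {2}
```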

For every pre-sheaf \({\mathcal {F}}\) on X, we can construct a sheaf \({\mathcal {F}}^+\) on X, called the sheafification of \({\mathcal {F}}\), together with a morphism \(\theta :{\mathcal {F}}\rightarrow {\mathcal {F}}^+\) satisfying the following universal property: Given any sheaf \({\mathcal {G}}\) on X and any morphism of pre-sheaves \(\phi :{\mathcal {F}}\rightarrow {\mathcal {G}}\), there exists a unique morphism \(\psi :{\mathcal {F}}^+\rightarrow {\mathcal {G}}\) such that \(\phi =\psi \circ \theta \). One possibility to construct \({\mathcal {F}}^+\) is to set
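
\[{\mathcal {F}}^+(U):=\Big \{s:U\rightarrow \bigsqcup _{x\in U}{\mathcal {F}}_x\;:\;s(x)\in {\mathcal {F}}_x\text { and every }x\in U\text { has an open neighborhood }V\subset U\text { with some }u\in {\mathcal {F}}(V)\text { such that }s(y)=u_y\text { for all }y\in V\Big \}\tag{15}\]
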
for every open \(U\subset X\). Let Y be another topological space and \(f:X\rightarrow Y\) a continuous map. Given a sheaf \({\mathcal {F}}\) on Y, the inverse image sheaf \(f^{-1}{\mathcal {F}}\) of \({\mathcal {F}}\) by f is the sheafification of the pre-sheaf given by

\[U\mapsto \varinjlim _{V\supset f(U)}{\mathcal {F}}(V),\tag{16}\]

where the limit is taken over all open \(V\subset Y\) containing f(U). Let X be a topological space and \({\mathcal {C}}\) a category. Let \({\mathcal {F}}\) be a sheaf on X with values in \({\mathcal {C}}\). Then, for any subspace \(Y\subset X\), there is a sheaf \({\mathcal {F}}\vert _Y\), called the restriction of \({\mathcal {F}}\) to Y, defined by \({\mathcal {F}}\vert _Y:=i^{-1}{\mathcal {F}}\), where \(i:Y\hookrightarrow X\) is the natural inclusion map. For any object A in \({\mathcal {C}}\), there is a constant pre-sheaf associated with A, defined by sending each open set to A and each morphism to \(\text {id}_A\). The sheafification of this pre-sheaf is called the constant sheaf associated with A. A sheaf \({\mathcal {F}}\) on X is called a local system or a locally constant sheaf if for any \(x\in X\) there is an open neighborhood \(U\subset X\) of x such that \({\mathcal {F}}\vert _U\) is a constant sheaf. The inverse image sheaf of any (locally) constant sheaf is (locally) constant [28].

Proposition 3

[28] Any locally constant sheaf on a contractible space is constant.

Local systems on a path-connected space X with fiber F (i.e., local systems which are locally isomorphic to the constant sheaf associated with F) are in bijective correspondence with homomorphisms from the fundamental group of X to the automorphism group of F. This correspondence can be established as follows: Let \({\mathcal {F}}\) be a local system on X, let \(x\in X\) and let \(\gamma :[0,1]\rightarrow X\) be a loop based at x. Then, the inverse image sheaf \(\gamma ^{-1}{\mathcal {F}}\) is a local system on [0, 1]. Since [0, 1] is contractible, it is a constant sheaf by Proposition 3. Thus,
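
\[F\cong {\mathcal {F}}_x=(\gamma ^{-1}{\mathcal {F}})_0\cong (\gamma ^{-1}{\mathcal {F}})([0,1])\cong (\gamma ^{-1}{\mathcal {F}})_1={\mathcal {F}}_x\cong F\tag{17}\]
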
and we obtain an automorphism of F, called the monodromy along \(\gamma \), by composing the above isomorphisms. It can be shown that this automorphism depends only on the homotopy class \([\gamma ]\in \pi _1(X,x)\) of \(\gamma \) (see [28]). Conversely, suppose we are given a homomorphism \(\rho :\pi _1(X,x)\rightarrow \text {Aut}(F)\). Let \(\pi :{\tilde{X}}\rightarrow X\) be the universal covering and \(\tilde{{\mathcal {F}}}\) the constant sheaf associated with F on \({\tilde{X}}\). Identify \(\pi _1(X,x)\) with the group of deck transformations. Then, for open \(U\subset X\), the sections \(s\in \tilde{{\mathcal {F}}}(\pi ^{-1}(U))\) that are equivariant with respect to \(\rho \), i.e., that satisfy \(s(g\cdot y)=\rho (g)(s(y))\) for every deck transformation g and every \(y\in \pi ^{-1}(U)\), form a local system on X. It is not difficult to see that these two operations are inverse to each other (up to isomorphism).
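As a hypothetical example of monodromy, consider the local system on \({\mathbb {C}}\backslash \{0\}\) of holomorphic solutions of \(2x\,f'(x)=f(x)\). Its fiber is \({\mathbb {C}}\), and it is locally spanned by a branch of \(\sqrt{x}\). Continuing the germ \(\sqrt{x}\) with value 1 at x = 1 once around the origin returns \(-\sqrt{x}\), so the monodromy of the generator of \(\pi _1\) is multiplication by \(-1\in \text {Aut}({\mathbb {C}})\). The following Python sketch follows the branch numerically by always choosing the square root closest to the previous value.

```python
import cmath
import math


def continue_sqrt(turns: int = 1, steps_per_turn: int = 1000) -> complex:
    """Continue the germ sqrt(x) at x = 1 (value 1) along x(s) = exp(2 pi i * turns * s).

    At each step we choose, of the two square roots of x(s), the one closest
    to the previous value; for small steps this follows a single branch.
    """
    w = 1 + 0j
    steps = turns * steps_per_turn
    for k in range(1, steps + 1):
        x = cmath.exp(2j * math.pi * turns * k / steps)
        r = cmath.sqrt(x)  # principal root; the other root is -r
        w = r if abs(r - w) <= abs(r + w) else -r
    return w


print(abs(continue_sqrt(1) + 1) < 1e-9)  # True: one loop acts as multiplication by -1
print(abs(continue_sqrt(2) - 1) < 1e-9)  # True: the monodromy squares to the identity
```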

We also need the notion of a multivalued section:

Definition 1

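Let \({\mathcal {F}}\) be a sheaf on a topological space X and \(\pi :{\tilde{X}}\rightarrow X\) the universal covering. A multivalued section of \({\mathcal {F}}\) is a global section of the inverse image sheaf \(\pi ^{-1}{\mathcal {F}}\). If \({\mathcal {F}}\) is locally constant, then \(\pi ^{-1}{\mathcal {F}}\) is constant, since \({\tilde{X}}\) is simply connected.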

On well-behaved topological spaces, local sections of local systems can always be extended to multivalued global sections:

Proposition 4

[19] Let X be a locally connected topological space and \({\mathcal {F}}\) a sheaf on X. If \({\mathcal {F}}\) is locally constant, then every local section of \({\mathcal {F}}\) can be extended to a multi-valued global section of \({\mathcal {F}}\).

A class of sheaves which is of particular importance to us is given in the following

Definition 2

Let Y and T be smooth manifolds and \(\pi :Y\rightarrow T\) a smooth map. The homology sheaf in degree p of Y over T, denoted by \({\mathcal {F}}^p_{Y/T}\), is the sheafification of the pre-sheaf

For any section h of \({\mathcal {F}}_{Y/T}^p\), we denote its germ at t by h(t).

Proposition 5

[19] Let Y, T and \(\pi :Y\rightarrow T\) as in Definition 2. Then, for every \(t\in T\) and every \(p\in {\mathbb {N}}\), there are isomorphisms

where the limit is taken over all open neighborhoods of t.

When we consider germs of sections of \({\mathcal {F}}^p_{Y/T}\), we usually apply the isomorphism from Proposition 5 implicitly, as long as no confusion can arise.

The sheaves from Definition 2 play an important role in the analytic continuation of functions defined by integrals in our setup. In fact, extending local sections of these sheaves to larger domains corresponds directly to analytic continuation, as we will see in the next subsection.

2.3 Singularities of integrals

We want to understand Feynman integrals, revisited in some detail in the following Sect. 3, as holomorphic functions of the external momenta (and possibly of the masses of the virtual particles). To do so, we employ the framework from [8], which deals with the analytic properties of functions defined by integrals in a sufficiently general manner. We begin by revisiting the basic ideas of this framework. Let Y and T be two complex analytic manifolds of dimension \(n+m\) and m, respectively, and let \(\pi :Y\rightarrow T\) be a holomorphic submersion. We denote the fibers by \(Y_t:=\pi ^{-1}(t)\) for all \(t\in T\). By the Implicit Function Theorem, there exists for every \(y\in Y\) a coordinate neighborhood \(U\subset Y\) of y with coordinates

\[(x_1,\ldots ,x_n,t_1,\ldots ,t_m):U\rightarrow {\mathbb {C}}^{n+m}\tag{20}\]

such that there is a chart \((V,\psi )\) of T around \(\pi (y)\) with \(\psi \circ \pi =(t_1,\ldots ,t_m)\). In particular, the fibers \(Y_t\) are n-dimensional complex submanifolds of Y for all \(t\in T\). This can be used to define families of differential forms on the fibers \(Y_t\) which depend holomorphically on t. We use the common multi-index notation for wedge products: For any

\[I=(i_1,\ldots ,i_p)\in \{1,\ldots ,n\}^p\tag{21}\]

with \(i_1<\cdots <i_p\), we write

\[\textrm{d}x^I:=\textrm{d}x_{i_1}\wedge \cdots \wedge \textrm{d}x_{i_p}.\tag{22}\]

The following definition and notation is adapted from [19]:

Definition 3

We say that a differential p-form \(\omega \) on Y is a holomorphic p-form relative to T if it can be expressed in the local coordinates (20) as

\[\omega =\sum _{I}f_I(x,t)\,\textrm{d}x^I\tag{23}\]

with holomorphic functions \(f_I\). We denote the space of all holomorphic p-forms relative to T by \(\Omega ^p(Y/T)\). The germ of \(\omega \) at \(t\in T\) is denoted by \(\omega (t)\).

It is natural to define a differential which acts only on the x-part of a differential form relative to T. Thus, we define linear maps

\[d_{Y/T}:\Omega ^p(Y/T)\rightarrow \Omega ^{p+1}(Y/T)\tag{24}\]

for all \(p\in {\mathbb {N}}\) whose action on \(\omega \in \Omega ^p(Y/T)\) in local coordinates reads
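
\[d_{Y/T}\omega =\sum _{I}\sum _{j=1}^n\frac{\partial f_I}{\partial x_j}(x,t)\,\textrm{d}x_j\wedge \textrm{d}x^I.\tag{25}\]
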
As usual, we simply write \(d_{Y/T}\) for the corresponding endomorphism of \(\bigoplus _{p\in {\mathbb {N}}}\Omega ^p(Y/T)\). It is easy to check that \(d_{Y/T}\circ d_{Y/T}=0\) and thus,
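
\[0\rightarrow \Omega ^0(Y/T)\xrightarrow {d_{Y/T}}\Omega ^1(Y/T)\xrightarrow {d_{Y/T}}\cdots \xrightarrow {d_{Y/T}}\Omega ^n(Y/T)\rightarrow 0\tag{26}\]
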
is a cochain complex. As for the usual de Rham complex, we say that \(\omega \) is a closed differential p-form relative to T if \(d_{Y/T}\omega =0\).
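A small worked example may help to separate \(d_{Y/T}\) from the full exterior derivative d. Take (hypothetically) \(Y={\mathbb {C}}^2\times {\mathbb {C}}\) with fiber coordinates \((x_1,x_2)\), base coordinate t and \(\omega =t\,x_2\,\textrm{d}x_1\in \Omega ^1(Y/T)\):

```latex
d_{Y/T}\,\omega
  = \frac{\partial (t x_2)}{\partial x_1}\,\mathrm{d}x_1\wedge\mathrm{d}x_1
  + \frac{\partial (t x_2)}{\partial x_2}\,\mathrm{d}x_2\wedge\mathrm{d}x_1
  = -\,t\,\mathrm{d}x_1\wedge\mathrm{d}x_2 ,
\qquad
\mathrm{d}\omega = -\,t\,\mathrm{d}x_1\wedge\mathrm{d}x_2 + x_2\,\mathrm{d}t\wedge\mathrm{d}x_1 .
```

The term containing \(\textrm{d}t\) is exactly what \(d_{Y/T}\) discards. For instance, \(t\,x_1\,\textrm{d}x_1\) is closed relative to T, since \(d_{Y/T}(t\,x_1\,\textrm{d}x_1)=t\,\textrm{d}x_1\wedge \textrm{d}x_1=0\), even though its full exterior derivative \(x_1\,\textrm{d}t\wedge \textrm{d}x_1\) is nonzero.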

Note that if h is a section of \({\mathcal {F}}_{Y/T}^p\), then according to Proposition 5 we have

Thus, if \(\omega \in \Omega ^p(Y/T)\), it makes sense to integrate \(\omega (t)\) over h(t). The next proposition shows why this is a good idea.

Proposition 6

[19] Let \(\omega \in \Omega ^p(Y/T)\) and \(h\in {\mathcal {F}}^p_{Y/T}(T)\). If \(\omega \) is closed then

\[T\rightarrow {\mathbb {C}},\quad t\mapsto \int _{h(t)}\omega (t)\tag{28}\]

defines a holomorphic function on T.

This proposition is the basis for our investigation of holomorphic functions defined by integrals. In practice, however, the situation is rarely as nice as in Proposition 6. Usually, we are not provided with a global section h but only with a local one. More specifically, the situation is often (in particular for one-loop Feynman integrals) as follows: The manifold Y has a product structure minus some analytic subset of problematic points. That is, there is a compact complex analytic manifold X of dimension n and an analytic subset \(S\subset X\times T\) such that \(Y=(X\times T)\backslash S\). In this case, the map \(\pi :Y\twoheadrightarrow T\) is taken to be the restriction of the canonical projection \(X\times T\twoheadrightarrow T\). For all \(t\in T\), we denote the fiber of S over t by \(S_t:=S\cap (X\times \{t\})\), viewed as a subset of X. Suppose we are only provided with a fixed p-cycle \(\Gamma \) in the fiber \(Y_{t_0}\) for some given \(t_0\in T\). This is already sufficient to define at least a local section:

Lemma 7

There is a \(\dim T\)-simplex \(\sigma \) in T such that \(t_0\in \overset{\circ }{\sigma }\) and \(\Gamma \times \sigma \) defines an element in \({\mathcal {F}}_{Y/T}^p(\overset{\circ }{\sigma })\) (where \(\overset{\circ }{\sigma }\) denotes the interior of \(\sigma \)).

Proof

The manifold X is compact and hence metrizable, so it is a \(T_4\)-space (every metric space is a \(T_4\)-space [23]). Since \(\Gamma \) and \(S_{t_0}\) are disjoint closed subsets of X, there exist open neighborhoods \(V_1\subset X\) of \(\Gamma \) and \(V_2\subset X\) of \(S_{t_0}\) such that \(V_1\cap V_2=\emptyset \). Since S is closed in \(X\times T\) and X is compact, the set of all \(t\in T\) with \(S_t\subset V_2\) is open. Hence, there exists an open neighborhood \(U\subset T\) of \(t_0\) such that \(S_t\subset V_2\) for all \(t\in U\). Then, \(\Gamma \cap S_t\subset V_1\cap V_2=\emptyset \) for all \(t\in U\). Thus, \(\Gamma \times U\) is a subset of Y. Choose a \(\dim T\)-simplex \(\sigma \subset U\) in T with \(t_0\in \overset{\circ }{\sigma }\). Viewing \(\Gamma \times \sigma \) as a \((p+\dim T)\)-chain in \(X\times T\), we compute
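
\[\partial (\Gamma \times \sigma )=\partial \Gamma \times \sigma +(-1)^p\,\Gamma \times \partial \sigma =(-1)^p\,\Gamma \times \partial \sigma .\tag{29}\]
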
Now, \(\Gamma \times \partial \sigma =0\) in \(H_{p+\dim T}(Y,\pi ^{-1}(T-\overset{\circ }{\sigma }))\) (this is already true on the level of chains) since it is contained within \(\pi ^{-1}(T-\overset{\circ }{\sigma })\). Thus, we indeed have \(\partial (\Gamma \times \sigma )=0\) in \(H_{p+\dim T}(Y,\pi ^{-1}(T-\overset{\circ }{\sigma }))\) as claimed. We conclude that \(\Gamma \times \sigma \) defines a local section in the homology sheaf in degree p of Y relative to T. \(\square \)
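A toy version of Lemma 7 and Proposition 6 in one variable may help. For simplicity, we take X = \({\mathbb {C}}\) and ignore the compactness assumption. The hypothetical form \(\omega (t)=\textrm{d}x/((x-t)(x-2))\) has \(S_t=\{t,2\}\), and \(\Gamma \) is the unit circle. For \(t_0=0\), both points of \(S_{t_0}\) lie at distance 1 from \(\Gamma \), so \(\Gamma \) stays in the complement of \(S_t\) for \(|t|<1\). There, the integral defines the holomorphic function \(2\pi i/(t-2)\). The following Python sketch checks this numerically.

```python
import cmath
import math
from typing import Callable


def circle_integral(f: Callable[[complex], complex], radius: float = 1.0,
                    samples: int = 2048) -> complex:
    """Trapezoidal rule for the counterclockwise integral of f(x) dx over |x| = radius."""
    h = 2 * math.pi / samples
    total = 0j
    for j in range(samples):
        x = radius * cmath.exp(1j * j * h)
        total += f(x) * 1j * x  # dx/dtheta = i x on the circle
    return total * h


def F(t: complex) -> complex:
    """t -> integral of the hypothetical form dx / ((x - t)(x - 2)) over Gamma = {|x| = 1}."""
    return circle_integral(lambda x: 1 / ((x - t) * (x - 2)))


# S_t = {t, 2}; for |t| < 1 only the pole at t lies inside Gamma, with residue 1 / (t - 2).
for t in (0, 0.5, 0.3 + 0.4j, -0.9j):
    print(abs(F(t) - 2j * math.pi / (t - 2)) < 1e-9)  # True
```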

The situation described above applies, for example, to one-loop Feynman integrals (defined further below, see for example Eq. (96)), where \(t_0\) corresponds to a Euclidean (i.e., real) configuration of momenta. The question now is whether this local section can be extended to a (possibly multivalued) global section, generally relative to a slightly smaller base space \(T^*\subset T\). A central result by Pham in this direction is the following

Proposition 8

[19] Let \(T^*\subset T\) be an open subset. If \(\pi \vert _{\pi ^{-1}(T^*)}:\pi ^{-1}(T^*)\rightarrow T^*\) defines a locally trivial \(C^\infty \)-fibration, then \(\int _{h(t_0)}\omega (t_0)\) defines a multi-valued holomorphic function on \(T^*\).

The idea for the proof of Proposition 8 is rather simple: By Lemma 7, the initial integration domain \(\Gamma \) defines a local section of \({\mathcal {F}}^p_{Y/T^*}\). If \(\pi \vert _{\pi ^{-1}(T^*)}:\pi ^{-1}(T^*)\rightarrow T^*\) defines a locally trivial \(C^\infty \)-fibration, then the sheaf \({\mathcal {F}}^p_{Y/T^*}\) is locally constant. Hence, every local section can be extended to a multivalued global section according to Proposition 4.
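The mechanism of extending the local section along a path can be seen in the same one-variable toy model as before (hypothetical, with X = \({\mathbb {C}}\)). Take \(\omega (t)=\textrm{d}x/((x-t)(x-2))\) and the path \(\gamma (s)=1.5\,s\) from \(t_0=0\). The moving pole crosses the unit circle at s = 2/3. Replacing \(\Gamma \) by circles of radius \((|t|+2)/2\) gives a continuous family of cycles that starts at \(\Gamma \) and avoids \(S_{\gamma (s)}\) throughout, standing in for the deformed cycles. Integrating over the deformed cycle reproduces the analytic continuation \(2\pi i/(t-2)\). The undeformed unit circle instead gives 0 at the endpoint, which is not the continuation. The following Python sketch checks both claims.

```python
import cmath
import math
from typing import Callable


def circle_integral(f: Callable[[complex], complex], radius: float,
                    samples: int = 4096) -> complex:
    """Trapezoidal rule for the counterclockwise integral of f(x) dx over |x| = radius."""
    h = 2 * math.pi / samples
    total = 0j
    for j in range(samples):
        x = radius * cmath.exp(1j * j * h)
        total += f(x) * 1j * x  # dx/dtheta = i x on the circle
    return total * h


def omega(t: complex) -> Callable[[complex], complex]:
    """Hypothetical toy form dx / ((x - t)(x - 2)); its singular set is S_t = {t, 2}."""
    return lambda x: 1 / ((x - t) * (x - 2))


def deformed_radius(t: complex) -> float:
    """Radius of the deformed cycle: 1 at t = 0, and it separates t from 2 whenever |t| < 2."""
    return (abs(t) + 2) / 2


# Path gamma(s) = 1.5 s, sampled at s = 0, 0.1, ..., 1.
path = [1.5 * k / 10 for k in range(11)]
assert all(abs(t) < deformed_radius(t) < 2 for t in path)  # the cycles avoid S_gamma(s)

t1 = path[-1]
fixed = circle_integral(omega(t1), 1.0)                      # undeformed Gamma
continued = circle_integral(omega(t1), deformed_radius(t1))  # deformed cycle
print(abs(fixed) < 1e-9)                                     # True
print(abs(continued - 2j * math.pi / (t1 - 2)) < 1e-9)       # True
```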

As it stands, this is a little bit too abstract for our purposes. Much more useful to us is a more detailed discussion of the situation where Y has a product structure minus an analytic set and \(\pi \) is the canonical projection as described above. For this purpose, we recall the following notions:

Definition 4

[8] A fiber bundle of pairs is a tuple \((E,S,B,\pi ,F)\) consisting of topological spaces E, B, F, a subset \(S\subset E\) and a continuous surjection \(\pi :E\twoheadrightarrow B\) such that \((E,B,\pi ,F)\) is a fiber bundle which has local trivializations at every point \(b\in B\) whose restrictions to S are local trivializations of \((S,B,\pi \vert _S,\pi ^{-1}(\{\text {pt.}\})\cap S)\) (where \(\text {pt.}\) denotes any point in B).

Furthermore, we shall say that \((E,S,B,\pi ,F)\) is smooth as a fiber bundle of pairs if the following conditions are satisfied:

-

E, B and F are smooth manifolds.

-

\(\pi \) is a smooth map.

-

For any local trivialization g, the inverse \(g^{-1}\) is differentiable with respect to t.

-

For any local trivialization g, the inverse \(g^{-1}\) lifts smooth vector fields on B to locally Lipschitzian vector fields on E.

To simplify the notation, we denote a smooth fiber bundle of pairs \((E,S,B,\pi ,F)\) by \(\pi :(E,S)\rightarrow B\).

Definition 5

[11] Let V and X be smooth manifolds. An isotopy from V to X is a continuous map \(\sigma :V\times [0,1]\rightarrow X\) such that \(\sigma (\cdot ,t)\) is an embedding for all \(t\in [0,1]\). If \(V\subset X\) and \(\sigma (\cdot ,0)\) is the natural inclusion, we say that \(\sigma \) is an isotopy of V in X. If \(V=X\), \(\sigma (\cdot ,0)=\text {id}_X\) and \(\sigma (\cdot ,t)\) is a diffeomorphism for all \(t\in [0,1]\), we say that \(\sigma \) is an ambient isotopy.

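For an isotopy \(\sigma :V\times [0,1]\rightarrow X\), we denote its track by

$$\begin{aligned} {\hat{\sigma }}:V\times [0,1]\rightarrow X\times [0,1],\qquad (x,t)\mapsto (\sigma (x,t),t) \end{aligned}$$

and its support by

$$\begin{aligned} \text {supp}(\sigma ):=\text {cl}\{x\in V\mid \sigma (x,t)\ne \sigma (x,0)\text { for some }t\in [0,1]\}, \end{aligned}$$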
where \(\text {cl}\) denotes the topological closure. Under reasonable circumstances, isotopies in X can be extended to ambient isotopies of X.

Theorem 9

[11] Let X be a manifold, \(A\subset X\) a compact subset and \(U\subset X\) an open neighborhood of A. If \(\sigma :U\times [0,1]\rightarrow X\) is an isotopy of U in X such that \({\hat{\sigma }}(U\times [0,1])\) is open in \(X\times [0,1]\), then there exists an ambient isotopy \({\tilde{\sigma }}\) of X with compact support such that \({\tilde{\sigma }}\) agrees with \(\sigma \) in a neighborhood of \(A\times [0,1]\).

Suppose again that we are in the situation above where \(Y=(X\times T)\backslash S\) with an analytic subset \(S\subset X\times T\) and suppose that \(\pi :(X\times T,S)\rightarrow T\) is a (locally trivial) smooth fiber bundle of pairs, i.e., \(\pi :X\times T\rightarrow T\) is a fiber bundle which has local trivializations which also locally trivialize \(\pi \vert _S:S\rightarrow T\). Then, in particular, \(\pi :Y\rightarrow T\) defines a locally trivial \(C^\infty \)-fibration and Proposition 8 applies. This can be thought of quite intuitively: If \(\gamma :[0,1]\rightarrow T\) is any path from \(t_0\in T\) to \(t_1\in T\), then the pull-back bundle of pairs of \(\pi :(X\times T,S)\rightarrow T\) by \(\gamma \) has a contractible base space and is thus globally trivial. For a cycle \(\Gamma \subset X\backslash S_{t_0}\), a trivialization of this pull-back bundle of pairs yields an ambient isotopy

$$\begin{aligned} \sigma :X\times [0,1]\rightarrow X \end{aligned}$$
such that \(\sigma (\Gamma ,s)\cap S_{\gamma (s)}=\emptyset \) for all \(s\in [0,1]\). In the context of our problem, this can be viewed as a continuous deformation of the integration cycle \(\Gamma \) away from the points where the differential form is singular. Phrased differently, the ambient isotopy \(\sigma \) allows us to extend the local section defined by \(\Gamma \) along \(\gamma \):

Proposition 10

Let \(\gamma :[0,1]\rightarrow T\) be a path, \(\Gamma \subset X\backslash S_{\gamma (0)}\) a p-cycle and \(\sigma :X\times [0,1]\rightarrow X\) an ambient isotopy such that

$$\begin{aligned} \sigma (\Gamma ,s)\cap S_{\gamma (s)}=\emptyset \end{aligned}$$
for all \(s\in [0,1]\). Then, there is an open neighborhood U of \(\gamma ([0,1])\) such that \(\Gamma \) defines a section of \({\mathcal {F}}_{Y/T}(U)\).

Proof

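We know from Lemma 7 that for every \(s\in [0,1]\) there exists a \(\dim T\)-simplex \(\alpha _s\) in T such that \(\gamma (s)\in \overset{\circ }{\alpha }_s\) and such that \(\sigma (\Gamma ,s)\times \alpha _s\) defines an element in \({\mathcal {F}}_{Y/T}^p(\overset{\circ }{\alpha }_s)\). We want to show that these can be glued together to yield a local section of \({\mathcal {F}}_{Y/T}^p\) on \(U:=\bigcup _{s\in [0,1]}\overset{\circ }{\alpha }_s\). By the sheaf axioms, it suffices to show that for any \(s_1,s_2\in [0,1]\) such that \(\overset{\circ }{\alpha }_{s_1}\cap \overset{\circ }{\alpha }_{s_2}\ne \emptyset \), the two sets \(\sigma (\Gamma ,s_1)\times (\alpha _{s_1}\cap \alpha _{s_2})\) and \(\sigma (\Gamma ,s_2)\times (\alpha _{s_1}\cap \alpha _{s_2})\) define the same element in \({\mathcal {F}}_{Y/T}^p(\overset{\circ }{\alpha }_{s_1}\cap \overset{\circ }{\alpha }_{s_2})\). We can assume \(s_1<s_2\) without loss of generality. Now, let

$$\begin{aligned} \tau :=\sigma ^*(\Gamma \times [s_1,s_2])\times (\alpha _{s_1}\cap \alpha _{s_2}), \end{aligned}$$

where \(\sigma ^*\) denotes the map on the level of chains induced by \(\sigma \). Then, the boundary of \(\tau \) is

$$\begin{aligned} \partial \tau =\partial \big (\sigma ^*(\Gamma \times [s_1,s_2])\big )\times (\alpha _{s_1}\cap \alpha _{s_2})+(-1)^{p+1}\sigma ^*(\Gamma \times [s_1,s_2])\times \partial (\alpha _{s_1}\cap \alpha _{s_2}). \end{aligned}$$

The second term \(\sigma ^*(\Gamma \times [s_1,s_2])\times \partial (\alpha _{s_1}\cap \alpha _{s_2})\) is 0 in \({\mathcal {F}}_{Y/T}(\overset{\circ }{\alpha }_{s_1}\cap \overset{\circ }{\alpha }_{s_2})\), and, since \(\Gamma \) is a cycle, we compute the first factor of the first term to be

$$\begin{aligned} \partial \big (\sigma ^*(\Gamma \times [s_1,s_2])\big )=\sigma ^*\big (\partial (\Gamma \times [s_1,s_2])\big )=(-1)^p\big (\sigma (\Gamma ,s_2)-\sigma (\Gamma ,s_1)\big ). \end{aligned}$$

Thus, we conclude that

$$\begin{aligned} \sigma (\Gamma ,s_2)\times (\alpha _{s_1}\cap \alpha _{s_2})-\sigma (\Gamma ,s_1)\times (\alpha _{s_1}\cap \alpha _{s_2}) \end{aligned}$$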
is the boundary of \((-1)^p\cdot \tau \) which completes the proof. \(\square \)

Corollary 11

In the same situation as in Proposition 10, the integral \(\int _\Gamma \omega (\gamma (0))\) defines a holomorphic function on some open neighborhood of \(\gamma (0)\) which can be analytically continued along \(\gamma \) by

$$\begin{aligned} s\mapsto \int _{\sigma (\Gamma ,s)}\omega (\gamma (s)),\qquad s\in [0,1]. \end{aligned}$$
Proof

Follows directly from Propositions 6 and 10. \(\square \)
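A toy one-variable illustration of Corollary 11 may help. This is our own example, not one taken from the text, and it ignores the compactness assumptions made above. Take \(X=T={\mathbb {C}}\), the form \(\omega (t)=dz/(z-t)\) with singular locus \(S_t=\{t\}\), and \(\Gamma \) the unit circle. Along the path \(\gamma (s)=0.5+1.5s\) the pole crosses \(\Gamma \), so \(\Gamma \) cannot stay fixed. The sketch uses the scaling \(\sigma (z,s)=R(s)z\) with \(R(s)=\max (1,\vert \gamma (s)\vert +0.5)\) as a hypothetical ambient isotopy adapted to \(\gamma \). Integrating over the deformed cycle continues the value \(2\pi i\). Integrating over the undeformed circle at \(t=2\) gives 0 instead, which is a different function. The names `circle_integral`, `omega`, `gamma` and `radius` are illustrative only.

```python
import cmath
import math


def circle_integral(f, radius, n=400):
    """Trapezoid rule for the integral of f(z) dz over the positively
    oriented circle |z| = radius (spectrally accurate for analytic f)."""
    total = 0j
    for k in range(n):
        z = radius * cmath.exp(2j * math.pi * k / n)
        dz = 1j * z * (2 * math.pi / n)  # dz = i z dtheta
        total += f(z) * dz
    return total


def omega(t):
    """Toy integrand omega(t) = dz / (z - t); its singular locus is S_t = {t}."""
    return lambda z: 1 / (z - t)


def gamma(s):
    """Path in the parameter space from t = 0.5 to t = 2.0."""
    return 0.5 + 1.5 * s


def radius(s):
    """Radius of the deformed cycle sigma(Gamma, s): it equals 1 at s = 0 and
    is pushed outwards so that the pole always stays strictly inside."""
    return max(1.0, abs(gamma(s)) + 0.5)


start = circle_integral(omega(gamma(0.0)), radius(0.0))
continued = circle_integral(omega(gamma(1.0)), radius(1.0))
naive = circle_integral(omega(gamma(1.0)), 1.0)
print(start, continued, naive)
```

Both `start` and `continued` are numerically \(2\pi i\), while `naive` is numerically 0. The deformed cycle therefore carries the analytic continuation, and the undeformed cycle does not.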

Due to this result, we make the following definition:

Definition 6

Let \(\gamma :[0,1]\rightarrow T\) be a path, \(\Gamma \subset X\) a submanifold and \(\sigma :X\times [0,1]\rightarrow X\) an ambient isotopy of \(\Gamma \) in X. We say that \(\sigma \) is adapted to \(\gamma \) if

$$\begin{aligned} \sigma (\Gamma ,s)\cap S_{\gamma (s)}=\emptyset \end{aligned}$$
for all \(s\in [0,1]\).

With this definition in place, Corollary 11 can be rephrased as follows:

Proposition 12

Let \(\gamma :[0,1]\rightarrow T\) be a path from \(t_0\in T\) to some \(t\in T\) and \(\sigma :X\times [0,1]\rightarrow X\) an ambient isotopy adapted to \(\gamma \). Then, the integral \(\int _\Gamma \omega (t_0)\) can be analytically continued along \(\gamma \) via

$$\begin{aligned} \int _{\sigma (\Gamma ,1)}\omega (t). \end{aligned}$$
We need some criteria to determine whether an appropriate ambient isotopy for such an analytic continuation exists. Above, we discussed how it is sufficient for \(\pi :(X\times T,S)\rightarrow T\) to be a fiber bundle of pairs. A special case of particular interest to us is the case where the fibers \(S_t\) are finite unions of complex analytic manifolds in general position. Recall that for smooth submanifolds \(S_1,\ldots ,S_k\subset X\) of a smooth manifold X, we say that \(S_1,\ldots ,S_k\) are in general position if the normal vectors at every intersection point are linearly independent. More concretely, for every \(x\in \bigcup _{i=1}^kS_i\) set

$$\begin{aligned} I_x:=\{i\in \{1,\ldots ,k\}\mid x\in S_i\} \end{aligned}$$

and let \(s_{i,x}\), \(i\in I_x\), be local equations for the manifolds \(S_i\) defined on a neighborhood of x. Then, \(S_1,\ldots ,S_k\) are in general position if and only if for every \(x\in \bigcup _{i=1}^kS_i\) there are coordinates \(\varphi \) around x such that the gradients of \(\{s_{i,x}\circ \varphi ^{-1}\}_{i\in I_x}\) at \(\varphi (x)\) are linearly independent. We have the following result on the analytic continuation in this case:

Proposition 13

[8] Suppose that for all \(t\in T\) the fiber \(S_t=\bigcup _{i=1}^k(S_i)_t\) is the union of finitely many complex analytic manifolds \((S_i)_t\), depending smoothly on t. If \((S_1)_t,\ldots ,(S_k)_t\) are in general position for all \(t\in T\), then \(\pi :(X\times T,S)\rightarrow T\) is a smooth fiber bundle of pairs. In particular, \(\int _\Gamma \omega (t_0)\) can be analytically continued along any path in T. Furthermore, the analytic continuation to \(t\in T\) can be written as

$$\begin{aligned} \int _{\Gamma '}\omega (t) \end{aligned}$$
for an appropriate cycle \(\Gamma '\).
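The general-position condition recalled before Proposition 13 is a rank condition on gradients at intersection points, so it can be tested numerically. The following is a minimal sketch under stated assumptions. It works with real-valued local equations on \({\mathbb {R}}^n\) and central-difference gradients, not with complex analytic equations. The tolerances and the helper names `gradient` and `in_general_position_at` are our own choices. The second test case, two curves tangent at the origin, shows the kind of non-general position that occurs at a pinch.

```python
import numpy as np


def gradient(f, x, h=1e-6):
    """Central-difference gradient of the scalar function f at the point x."""
    x = np.asarray(x, dtype=float)
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (f(x + e) - f(x - e)) / (2 * h)
    return g


def in_general_position_at(equations, x, tol=1e-9):
    """Check linear independence of the gradients of those local equations
    s_i that vanish at x (the index set I_x in the text)."""
    x = np.asarray(x, dtype=float)
    active = [s for s in equations if abs(s(x)) < tol]
    if not active:
        return True  # x lies on none of the hypersurfaces
    jac = np.array([gradient(s, x) for s in active])
    return np.linalg.matrix_rank(jac, tol=1e-6) == len(active)


plane_x = lambda v: v[0]             # S_1 = {x = 0}
plane_y = lambda v: v[1]             # S_2 = {y = 0}
parabola = lambda v: v[0] - v[1] ** 2  # S_3 = {x = y^2}, tangent to S_1 at 0
```

For example, `in_general_position_at([plane_x, plane_y], [0, 0])` holds. By contrast, `in_general_position_at([plane_x, parabola], [0, 0])` fails because both gradients equal \((1,0)\) at the origin.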

For our purposes, this is unfortunately not enough: it turns out that, after compactification, the relevant \((S_1)_t,\ldots ,(S_k)_t\) in the case of Feynman integrals are generally not in general position at any \(t\in T\). A more refined criterion can be obtained by equipping S with a Whitney stratification, and we introduce the necessary theory now.

Definition 7

[10] Let X be a topological space and \(({\mathcal {S}},\prec )\) a partially ordered set. An \({\mathcal {S}}\)-decomposition of X is a locally finite collection of disjoint locally closed sets \(A_i\subset X\) such that the following hold:

1. \(X=\bigcup _{i\in {\mathcal {S}}}A_i\).
2. For all \(i,j\in {\mathcal {S}}\), we have

$$\begin{aligned} A_i\cap {\bar{A}}_j\ne \emptyset \;\Leftrightarrow \; A_i\subset {\bar{A}}_j\;\Leftrightarrow \; i\prec j. \end{aligned}$$(43)

The \(A_i\) are called the pieces, their connected components the strata of the \({\mathcal {S}}\)-decomposition.

To apply techniques from differential topology, it is useful to require that the pieces \(A_i\) fit together nicely.

Definition 8

[10] Let M be a smooth manifold and \(X,Y\subset M\) two smooth submanifolds. We say that the pair (X, Y) satisfies Whitney’s condition B if the following holds: Let \(y\in Y\) and suppose \(x_n\) is a sequence in X converging to y and \(y_n\) is a sequence in Y converging to y. Then, if the sequence of secants \(\overline{x_ny_n}\) converges to some line l and the sequence of tangent planes \(T_{x_n}X\) converges to some plane T,Footnote 5 we have \(l\subset T\).

A few remarks are in order: First, note that if X and Y are submanifolds of M such that \({\bar{X}}\cap Y=\emptyset \), the above condition is vacuous. If \(Y\subset {\bar{X}}\) and \({\bar{X}}\) is a smooth manifold, it is also evident that Whitney’s condition B is satisfied for the pair (X, Y) (simply check the condition in coordinates on \({\bar{X}}\)).

Definition 9

[10] Let Y be a smooth manifold, \(S\subset Y\) a closed subset and \({\mathcal {S}}\) a partially ordered set. A Whitney stratification of S is an \({\mathcal {S}}\)-decomposition such that all pairs of strata satisfy Whitney’s condition B. We call the set S together with a Whitney stratification of S a stratified set.

Suppose that \(S\subset Y\) is a closed subset which decomposes into a finite union of smooth manifolds \(S_1,\ldots ,S_n\subset Y\) in general position. Then, there is a natural Whitney stratification of S with strata given by the connected components of

$$\begin{aligned} \Big (\bigcap _{i\in I}S_i\Big )\Big \backslash \bigcup _{j\notin I}S_j,\qquad \emptyset \ne I\subset \{1,\ldots ,n\}. \end{aligned}$$

The pieces are the unions of all strata of equal codimension (i.e., with equally many manifolds involved in the intersection), and the underlying partial order is given by the dimension of the pieces.

Definition 10

[19] Let Y be a smooth manifold equipped with a Whitney stratification. We say that Y is a stratified bundle if there exists a Whitney stratified set X such that Y is locally homeomorphic to \(X\times T\) by homeomorphisms which map every stratum in Y to the product of a stratum in X with T.

Now, we can formulate the following criterion:

Theorem 14

(Thom’s Isotopy Theorem, [19]) Let Y and T be two differentiable manifolds with T connected and let \(\pi :Y\rightarrow T\) be a proper differentiable map. Suppose that Y is a stratified set and the restriction of \(\pi \) to each stratum is a submersion. Then, \(\pi :Y\rightarrow T\) is a stratified bundle.

Suppose \(S\subset Y\) is a closed subset equipped with a Whitney stratification. Then, the stratification of S together with the connected components of \(Y\backslash S\) yields a Whitney stratification of Y [19]. If this makes \(\pi : Y \rightarrow T\) a stratified bundle, then, in particular, \(\pi :(Y,S)\rightarrow T\) is a fiber bundle of pairs.

Supposing again that we are in the situation where \(Y=(X\times T)\backslash S\) as above, we can equip S with a Whitney stratification (every analytic set admits such a stratification, see [27]). Denote by \(\{A_i\}_{i\in I}\) the collection of strata and for every \(i\in I\) denote by \(cA_i\subset A_i\) the set of all points at which the restriction of \(\pi \) to \(A_i\) fails to be a submersion. Then, we can conclude that the integral of interest defines a (multivalued) holomorphic function outside of the following set:

Definition 11

In the same situation as above, we call the set

$$\begin{aligned} L:=\bigcup _{i\in I}\pi (cA_i)\subset T \end{aligned}$$
the Landau surface of the integral \(\int _\Gamma \omega (t_0)\).

It can be shown that the Landau surface is an analytic set [19] (a consequence of Remmert’s Proper Mapping Theorem). Thus, if we assume T to be connected, L is either all of T or \(T\backslash L\) is a non-empty open subset. We conclude that \(\int _\Gamma \omega (t_0)\) defines a (multivalued) holomorphic function on \(T^*:=T\backslash L\). Furthermore, it is well-known among physicists that in the case of Feynman integrals, the Landau surface is given by the solutions to a set of algebraic equations (see Eqs. (105) and (106) for the one-loop case). These equations are famously known as the Landau equations and informally detect points at which the singular loci of the integrand are in non-general position at finite distance.

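The fact that a given local section may only be extended to a global section in the multivalued sense immediately raises the question of the discontinuity of the function defined by a given integral. This can be expressed in terms of the monodromy of the section h in question. Let \(t_0\in T^*\) and let \(\gamma :[0,1]\rightarrow T^*\) be a loop based at \(t_0\). Then, continuing the germ \(h(t_0)\) along \(\gamma \) yields a class \(\gamma ^*h(t_0)\in H_p(Y_{t_0})\), which can be shown to depend only on the homotopy class of \(\gamma \) [19]. Consequently, analytically continuing the integral along \(\gamma \) yields

$$\begin{aligned} \int _{h(t_0)}\omega (t_0)\;\longmapsto \;\int _{\gamma ^*h(t_0)}\omega (t_0). \end{aligned}$$

We define the variation of \(h(t_0)\) along \(\gamma \) by

$$\begin{aligned} \text {Var}_{[\gamma ]}h(t_0):=\gamma ^*h(t_0)-h(t_0) \end{aligned}$$

and the discontinuity of \(\int _{h(t_0)}\omega (t_0)\) along \(\gamma \) by

$$\begin{aligned} \int _{\gamma ^*h(t_0)}\omega (t_0)-\int _{h(t_0)}\omega (t_0)=\int _{\text {Var}_{[\gamma ]}h(t_0)}\omega (t_0). \end{aligned}$$

The variation can be understood as a homomorphism

$$\begin{aligned} \text {Var}_{[\gamma ]}:H_p(Y_{t_0})\rightarrow H_p(Y_{t_0}). \end{aligned}$$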
The task is now to compute \(\text {Var}_{[\gamma ]}h(t_0)\). This is a difficult problem in general. A rather simple case, which applies in particular to one-loop Feynman integrals, is studied extensively in [19]: the so-called simple pinches, which we discuss below.

The idea behind the treatment of simple pinches is to localize the problem such that the variation yields a homology class with a representative whose support is contained in an arbitrarily small neighborhood of a pinch point. We cannot expect such a localization for an arbitrary loop. However, there is a class of loops which does allow it and whose homotopy classes span the fundamental group of \(T\backslash L\) if T is simply connected:

Let \(u\in L\) be a point of codimension 1. Then, there are coordinates \((t_1,\ldots ,t_m)\) of T defined in a neighborhood \(U\subset T\) of u such that L locally looks like the set \(\{t_1=0\}\). Let \(\theta :[0,1]\rightarrow T\backslash L\) be a path from some basepoint \(u_0\in T\backslash L\) to some \(u_1\in U\backslash L\). Let \(\omega :[0,1]\rightarrow U\backslash L\) be a loop based at \(u_1\) that traces a circle around \(t_1=0\) in the coordinate \(t_1\) with \(t_2,\ldots ,t_m\) fixed. Then, \(\gamma :=\theta \omega \theta ^{-1}\) is a loop in \(T\backslash L\) based at \(u_0\). A loop constructed like this is called a simple loop. In an abuse of language, we shall also call elements of the fundamental group which can be represented by a simple loop simple loops. In the cases we study here, we can restrict our attention to simple loops without loss of generality due to the following result:

Proposition 15

[19] The fundamental group \(\pi _1(T\backslash L,u_0)\) of \(T\backslash L\) is spanned by simple loops if and only if T is simply connected.
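The construction of a simple loop \(\gamma =\theta \omega \theta ^{-1}\) can be traced concretely. The following sketch takes \(T={\mathbb {C}}^2\) with \(L=\{t_1=0\}\), an assumption made only for illustration. Paths are sampled as lists of points, and the small circle plays the role of the loop \(\omega \) from the text. The sketch checks that the resulting loop is closed, avoids L, and winds exactly once around L in the \(t_1\)-coordinate. The contributions of \(\theta \) and \(\theta ^{-1}\) cancel. All function names and sample points are hypothetical.

```python
import cmath


def segment(a, b, n=200):
    """Straight path from a to b in T = C^2, sampled at n + 1 points."""
    return [tuple(ai + (bi - ai) * k / n for ai, bi in zip(a, b)) for k in range(n + 1)]


def small_circle(u1, n=200):
    """Loop based at u1 tracing a circle in t_1 around L = {t_1 = 0},
    with t_2 fixed (the loop called omega in the text)."""
    r = abs(u1[0])
    phase0 = cmath.phase(u1[0])
    return [(r * cmath.exp(1j * (phase0 + 2 * cmath.pi * k / n)), u1[1]) for k in range(n + 1)]


def simple_loop(u0, u1):
    """gamma = theta * omega * theta^{-1}, with theta the straight path u0 -> u1."""
    theta = segment(u0, u1)
    return theta + small_circle(u1)[1:] + theta[::-1][1:]


def winding_in_t1(loop):
    """Winding number of the t_1-component of a closed sampled loop around 0."""
    total = 0.0
    for p, q in zip(loop, loop[1:]):
        total += cmath.phase(q[0] / p[0])  # small angle increment between samples
    return round(total / (2 * cmath.pi))


loop = simple_loop((1 + 1j, 0.5), (0.1 + 0j, 0.5))
```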

2.3.1 Leray’s calculus of residues

In the preceding part of this subsection, we have seen how to compute the discontinuity of a function defined by an integral of the form (3) by extending a local section of \({\mathcal {F}}_{Y/T}^n\) to a multivalued global section. To obtain Cutkosky’s Theorem in Sect. 4, we also need the multivariate version of the Residue Theorem, going back to Leray [15], as already alluded to in the introduction.

First, recall the regular Residue Theorem from complex analysis: Let \(U\subset {\mathbb {C}}\) be a simply connected open set and let \(a\in U\) be a point. Then, the theorem states that for a holomorphic function \(f:U\backslash \{a\}\rightarrow {\mathbb {C}}\) and a positively oriented simple closed curve \(\gamma :[0,1]\rightarrow U\backslash \{a\}\) encircling a, we have

$$\begin{aligned} \int _\gamma f(z)\,dz=2\pi i\,\text {Res}(f,a), \end{aligned}$$
where \(\text {Res}(f,a)\) is the residue of f at a.Footnote 6 To generalize this to multiple dimensions, we essentially have to answer two questions: What is the multi-dimensional analog for the curve \(\gamma \) along which we integrate and what is the multi-dimensional analog of the residue?
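The one-variable Residue Theorem can be checked numerically before it is generalized. The following sketch integrates \(f(z)=e^z/(z-a)\), whose residue at the simple pole a is \(e^a\), over a circle with the trapezoid rule. The rule converges very quickly for analytic periodic integrands. The choices of f, a, the circle and the number of nodes are ours and serve only as an example.

```python
import cmath
import math


def contour_integral(f, center, radius, n=256):
    """Trapezoid rule for the integral of f(z) dz over the positively
    oriented circle |z - center| = radius."""
    total = 0j
    for k in range(n):
        w = radius * cmath.exp(2j * math.pi * k / n)
        total += f(center + w) * 1j * w * (2 * math.pi / n)  # dz = i w dtheta
    return total


a = 0.3 + 0.2j


def f(z):
    """Simple pole at a with Res(f, a) = exp(a)."""
    return cmath.exp(z) / (z - a)


lhs = contour_integral(f, 0.0, 1.0)   # the unit circle encircles a
rhs = 2j * math.pi * cmath.exp(a)     # 2 pi i Res(f, a)
```

If the curve does not encircle the pole, as for \(1/(z-2)\) on the unit circle, the same routine returns numerically 0.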

We begin with the first question, following [19]. Recall that for a smooth manifold M and a smooth submanifold \(S\subset M\) a tubular neighborhood of S in M is a vector bundle \(\pi :E\rightarrow S\) (with S the base space, E the total space and \(\pi \) the bundle projection of the bundle) together with a smooth map \(J:E\rightarrow M\) such that:

- If \(i:S\hookrightarrow M\) is the natural embedding and \(0_E\) is the zero section, we have \(J\circ 0_E=i\).
- There exist open sets \(U\subset E\) and \(V\subset M\) with \(0_E[S]\subset U\) and \(S\subset V\) such that \(J\vert _U:U\overset{\sim }{\rightarrow }V\) is a diffeomorphism.

We are mainly interested in V and, in an abuse of language, call it a tubular neighborhood of S as well. Note that there is an associated retraction \(\mu :V\rightarrow S\), obtained by applying \((J\vert _U)^{-1}\), retracting to the zero section \(0_E\) and going back to M via \(J\vert _U\). Now, suppose X is a complex analytic manifold and \(S\subset X\) a closed analytic submanifold of (complex) codimension 1. Fix a closed tubular neighborhood \(V\subset X\) of S. The associated retraction \(\mu : V\rightarrow S\) induces a disk bundle structure over S. Then, if \(\sigma \) is a simplex in S, its preimage \(\mu ^{-1}(\sigma )\) is homeomorphic to \(\sigma \times D\), where D is the unit disk. On the level of chains, we obtain

$$\begin{aligned} \mu ^*\sigma =D\otimes \sigma . \end{aligned}$$

Since D is two-dimensional, the boundary of \(\mu ^*\sigma \) is thus

$$\begin{aligned} \partial (\mu ^*\sigma )=\partial D\otimes \sigma +D\otimes \partial \sigma . \end{aligned}$$

Using this result, we define a homomorphism \(\delta _\mu :C_p(S)\rightarrow C_{p+1}(X-S)\) by setting \(\delta _\mu (\sigma ):=\partial D\otimes \sigma \) for each simplex \(\sigma \) and extending by linearity. Note that \(\delta _\mu \) anti-commutes with the boundary \(\partial \):

$$\begin{aligned} \partial \delta _\mu (\sigma )=\partial (\partial D\otimes \sigma )=-\partial D\otimes \partial \sigma =-\delta _\mu (\partial \sigma ). \end{aligned}$$

Hence, \(\delta _\mu \) descends to a homomorphism

$$\begin{aligned} \delta :H_p(S)\rightarrow H_{p+1}(X\backslash S), \end{aligned}$$
which we call the Leray coboundary. It can be shown that this map does not depend on the choice of \(\mu \), which is why we drop the subscript. In the one-dimensional case, where S is a single point, this construction recovers a small circle around that point, i.e., a simple closed curve \(\gamma \) as in the Residue Theorem.

Now, we turn to the second question. To answer it, let us consider a closed differential p-form \(\omega \) on \(X\backslash S\). For all \(x\in S\), let \(s_x\) be a local equation for S near x. If for all \(x\in S\) the form \(s_x\cdot \omega \) can be extended to a differential p-form on a neighborhood of x, we say that \(\omega \) has a polar singularity of order 1 along S.

Proposition 16

[19] If \(\omega \) has a polar singularity of order 1 along S, then for every \(x\in S\) there exist differential forms \(\psi _x\) and \(\theta _x\) defined on a neighborhood of x such that

$$\begin{aligned} \omega =\frac{ds_x}{s_x}\wedge \psi _x+\theta _x. \end{aligned}$$
Furthermore, \(\psi _x\vert _S\) is closed and depends only on \(\omega \). We call \(\psi _x\vert _S\) the residue of \(\omega \).

In the situation of Proposition 16, we denote

$$\begin{aligned} \text {res}[\omega ]:=\psi _x\vert _S. \end{aligned}$$

The \((p-1)\)-form \(\text {res}[\omega ]\) is called the residue form of \(\omega \). Now, if \(\omega \) is any closed form on \(X\backslash S\), then \(\omega \) is cohomologous to a closed form \({\tilde{\omega }}\) on \(X\backslash S\) with a simple pole along S [19]. Thus, it makes sense to define a cohomology class

$$\begin{aligned} [\text {res}[{\tilde{\omega }}]]\in H^{p-1}(S), \end{aligned}$$
and it can be shown that it only depends on the cohomology class of \({\tilde{\omega }}\) in \(X\backslash S\) [19].
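A two-variable example makes the residue form and the Leray coboundary concrete. The following is a sketch under our own assumptions, not an example from the text. Take \(X={\mathbb {C}}\times ({\mathbb {C}}\backslash \{b\})\), \(S=\{z_1=0\}\) and \(\omega =g(z_1,z_2)\,dz_1\wedge dz_2/z_1\) with \(g(z_1,z_2)=e^{z_1z_2}/(z_2-b)\). With \(s_x=z_1\), Proposition 16 gives \(\psi _x=g\,dz_2\), so \(\text {res}[\omega ]=g(0,z_2)\,dz_2\). For \(\gamma \) the unit circle in S, the coboundary \(\delta \gamma \) is the torus \(\vert z_1\vert =\epsilon \), \(\vert z_2\vert =1\). We assume the product orientation (circle in \(z_1\) first), matching \(\delta _\mu (\sigma )=\partial D\otimes \sigma \). The sketch checks \(\int _{\delta \gamma }\omega =2\pi i\int _\gamma \text {res}[\omega ]\) numerically. Here both sides equal \((2\pi i)^2\) because \(b=0.5\) lies inside \(\gamma \).

```python
import cmath
import math


def circle_integral(h, n=128):
    """Trapezoid rule for the integral of h(z) dz over the unit circle."""
    total = 0j
    for k in range(n):
        w = cmath.exp(2j * math.pi * k / n)
        total += h(w) * 1j * w * (2 * math.pi / n)
    return total


def torus_integral(form, eps=0.1, n=128):
    """Integrate form(z1, z2) dz1 ^ dz2 over the torus |z1| = eps, |z2| = 1,
    oriented as (circle in z1) x (circle in z2), via a product trapezoid rule."""
    total = 0j
    for j in range(n):
        w1 = eps * cmath.exp(2j * math.pi * j / n)
        dz1 = 1j * w1 * (2 * math.pi / n)
        for k in range(n):
            w2 = cmath.exp(2j * math.pi * k / n)
            dz2 = 1j * w2 * (2 * math.pi / n)
            total += form(w1, w2) * dz1 * dz2
    return total


b = 0.5  # removed from X so that g is holomorphic on X


def g(z1, z2):
    return cmath.exp(z1 * z2) / (z2 - b)


def omega(z1, z2):
    """Coefficient of dz1 ^ dz2 in omega = g dz1 ^ dz2 / z1."""
    return g(z1, z2) / z1


def residue(z2):
    """Coefficient of dz2 in res[omega] = g(0, z2) dz2 on S = {z1 = 0}."""
    return g(0.0, z2)


lhs = torus_integral(omega)
rhs = 2j * math.pi * circle_integral(residue)
```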

Theorem 17

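(Residue Theorem, [19]) Let \(\gamma \) be a \((p-1)\)-cycle in S and \(\omega \) a closed differential p-form on \(X\backslash S\), with \({\tilde{\omega }}\) as above. Then, the following identity holds:

$$\begin{aligned} \int _{\delta \gamma }\omega =2\pi i\int _\gamma \text {res}[{\tilde{\omega }}]. \end{aligned}$$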

We also have to deal with situations in which S is not a submanifold but a union of N closed submanifolds \(S_1,\ldots ,S_N\subset X\) in general position. To achieve this, we can iterate the construction above. For each \(k\in \{1,\ldots ,N\}\) the manifold \(S_1\cap \cdots \cap S_k\) is a closed submanifold in which \(S_{k+1},\ldots ,S_N\) intersect in general position. Thus, we obtain sequences of maps

and

This allows us to define the composite maps

Similarly to the case of one manifold S, we obtain the following

Theorem 18

(Iterated Residue Theorem, [19]) Let \(\gamma \) be a \((p-m)\)-cycle in S and \(\omega \) a closed differential p-form on \(X\backslash S\). Then, the following identity holds:

The (iterated) Leray residue has the following nice property that we employ later on:

Proposition 19

The Leray residue commutes with pullbacks, i.e., for any differentiable map \(f:Y\rightarrow X\) between two smooth manifolds X and Y, any submanifold \(S\subset X\) of codimension 1 and any differential form \(\omega \) on \(X\backslash S\) we have

Proof

It suffices to show the case \(m=1\) since the case for general m follows by induction. We can assume without loss of generality that \(\omega \) has a polar singularity of order 1 along S (otherwise, we replace \(\omega \) by a cohomologous form with this property). First, note that \(f^*\omega \) is a closed differential form on \(Y\backslash f^{-1}(S)\). According to Proposition 16, we can write \(\omega =\frac{ds_x}{s_x}\wedge \psi _x+\theta _x\). We compute

Now, \(s_x\circ f\) is a local equation for \(f^{-1}(S)\) around any point in \(f^{-1}(x)\). Thus, we conclude

as claimed. \(\square \)

As mentioned in the introduction, Cutkosky’s Theorem involves the use of \(\delta \)-functions (which are of course not functions in the conventional sense). These are usually defined in the language of functional analysis. But in the context of singular integrals, we can define the \(\delta \)-function and its derivatives evaluated at a test function by integrating the residue of a differential form induced by the given test function.

Definition 12

[19] For any test function f set \(\omega :=f\,dx_1\wedge \cdots \wedge dx_n\). Then, we define

2.3.2 Simple pinches

Now, we want to compute the variation of homology classes in a rather simple situation in which the singular locus of the differential form is a finite union of manifolds that are in general position except at isolated points. Again, we follow [19].

Definition 13

Let Y and T be two complex analytic manifolds, \(\pi :Y\rightarrow T\) a smooth map and \(S_1,\ldots ,S_N\subset Y\) complex analytic submanifolds of codimension 1. Denote by \((S_i)_t:=\pi ^{-1}(t)\cap S_i\) the fiber of \(S_i\) over t for all \(i\in \{1,\ldots ,N\}\) and \(t\in T\). We say that the system \(S_1,\ldots ,S_N\) has a simple pinch at \(y\in Y\) if there is a coordinate chart \((\varphi =(x_1,\ldots ,x_n,t_1,\ldots ,t_m),U)\) in a neighborhood \(U\subset Y\) of y such that there are local equations

and

for \(S_1,\ldots ,S_N\) around y.

It should be remarked that the case \(N=n+1\) is a little different from the remaining cases \(N\le n\). The former is called a linear pinch, while the remaining cases are called quadratic pinches.

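Suppose \(S_1,\ldots ,S_N\) has a simple pinch at \(y\in Y\) and let \((\varphi ,U)\) be the coordinate chart from Definition 13. Then, \(\pi (y)\) must necessarily be a point of codimension 1 in the Landau surface. Now fix a point \(t\in T\) near \(\pi (y)\) whose coordinate \(t_1\) is real and positive and denote \(U_t:=Y_t\cap U\). Then,

$$\begin{aligned} U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t\cong S^{n-N}_{\mathbb {C}}, \end{aligned}$$

where \(S^k_{\mathbb {C}}\) denotes the complex unit sphere of dimension \(k\in {\mathbb {N}}\).Footnote 7 It is well-known that the complex k-sphere deformation retracts to the real k-sphere \(S^k\). Thus, in case \(n>N\), we obtain

$$\begin{aligned} H_k(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t)\cong {\left\{ \begin{array}{ll} {\mathbb {Z}}, & k\in \{0,n-N\},\\ 0, & \text {otherwise}, \end{array}\right. } \end{aligned}$$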
for the homology groups of \(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t\) and the generator of \(H_{n-N}(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t)\) is represented by a real \((n-N)\)-sphere contained within \(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t\). On the level of cycles, this is called the vanishing sphere denoted by

It is the iterated boundary of the vanishing cell

This means that \(e=(\partial _1\circ \cdots \circ \partial _N)({\textbf{e}})\), where \(\partial _i\) is the operator taking the boundary within \((S_i)_t\) for all \(i\in \{1,\ldots ,N\}\). Furthermore, we define the vanishing cycle

by taking the iterated Leray coboundary of the vanishing sphere. These define homology classes

These three classes are the protagonists in the calculation of the variation in the situation of a simple pinch. Due to the following proposition, it suffices in principle to know one of the three.
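The deformation retraction of the complex sphere onto the real sphere, used above to represent the vanishing sphere by a real sphere, can be made explicit. Writing \(z=x+iy\) with \(\sum _jz_j^2=1\) means \(\vert x\vert ^2-\vert y\vert ^2=1\) and \(x\cdot y=0\). One possible retraction, our own choice since the text does not fix one, is \(H(z,s)=\sqrt{1+(1-s)^2\vert y\vert ^2}\,x/\vert x\vert +i(1-s)y\). The sketch below checks numerically that \(H(\cdot ,s)\) stays on \(S^k_{\mathbb {C}}\) for all s, starts at the identity and ends on the real unit sphere. The helper names are hypothetical.

```python
import numpy as np


def random_point_on_complex_sphere(k, rng):
    """Sample z = x + i y in C^{k+1} with sum(z_j^2) = 1,
    i.e. |x|^2 - |y|^2 = 1 and x . y = 0."""
    x = rng.normal(size=k + 1)
    y = rng.normal(size=k + 1)
    y -= (y @ x) / (x @ x) * x                 # make y orthogonal to x
    x *= np.sqrt(1 + y @ y) / np.linalg.norm(x)  # enforce |x|^2 = 1 + |y|^2
    return x + 1j * y


def retract(z, s):
    """Deformation retraction of S^k_C onto the real sphere S^k, s in [0, 1]."""
    x, y = z.real, z.imag
    scale = np.sqrt(1 + (1 - s) ** 2 * (y @ y)) / np.linalg.norm(x)
    return scale * x + 1j * (1 - s) * y


rng = np.random.default_rng(0)
z = random_point_on_complex_sphere(3, rng)
path = [retract(z, s) for s in np.linspace(0.0, 1.0, 11)]
```

Points of the real sphere (\(y=0\), \(\vert x\vert =1\)) are fixed for all s, as a deformation retraction requires.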

Proposition 20

[8] In the situation above, the maps \(\partial _i\) and \(\delta _i\) are isomorphisms on the level of homology. In particular, \([{\textbf{e}}]\) generates the relative homology group \(H_n(U_t,(S_1)_t\cup \cdots \cup (S_N)_t)\) and \([{\tilde{e}}]\) generates the homology group \(H_n(U_t-(S_1)_t\cup \cdots \cup (S_N)_t)\) of the complement.

In fact, we can use this generating property as the definition of the three classes in question.Footnote 8

Definition 14

The vanishing sphere [e], vanishing cell \([{\textbf{e}}]\) and vanishing cycle \([{\tilde{e}}]\) are generators of \(H_{n-N}(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t)\), \(H_n(U_t,(S_1)_t\cup \cdots \cup (S_N)_t)\) and \(H_n(U_t-(S_1)_t\cup \cdots \cup (S_N)_t)\), respectively.

Note that this definition only determines \([{\textbf{e}}]\), [e] and \([{\tilde{e}}]\) up to orientation, i.e., up to sign (\({\mathbb {Z}}\) has two generators: 1 and -1). In what follows, it will become evident that this choice of orientation does not matter, as all calculations in which these three classes appear are independent of the chosen orientation.

There are two marginal cases that we also need to cover: In case \(n=N\), we are dealing with the 0-sphere, which consists of two points. Thus,

$$\begin{aligned} H_0(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t)\cong {\mathbb {Z}}\oplus {\mathbb {Z}}, \end{aligned}$$

and the 0th homology group is generated by the two points \(p_1,p_2\) of the 0-sphere. In this case, we set \([e]:=[p_2]-[p_1]\). In the linear case \(N=n+1\), the intersection \(U_t\cap (S_1)_t\cap \cdots \cap (S_N)_t\) is empty and the vanishing sphere does not exist. In accordance with [19], we agree on the convention that all associated vanishing classes are 0 in this case.

2.3.3 The Picard–Lefschetz formula for simple pinches

We have seen that an element \([\gamma ]\in \pi _1(T\backslash L,t_0)\) gives rise to an automorphism \([\gamma ]_*\) of \(H_\bullet (Y_{t_0}\backslash S_{t_0})\). In the situation of a simple pinch, the problem can be localized in the sense that the variation \(([\gamma ]_*-\text {id})[h]\) of a class \([h]\in H_n(Y_{t_0}\backslash S_{t_0})\) can be represented by a cycle with support entirely contained within U, the domain of the coordinate chart \((\varphi ,U)\) appearing in the definition of a simple pinch. Since the homology group \(H_n(U_{t_0}-(S_1)_{t_0}\cup \cdots \cup (S_N)_{t_0})\) is generated by a single element (the vanishing cycle), the variation must be some integer multiple \(N\cdot [{\tilde{e}}]\) of the vanishing cycle.Footnote 9 The discontinuity of the integral (28) corresponding to \([\gamma ]\) is thus given by

$$\begin{aligned} \int _{([\gamma ]_*-\text {id})[h]}\omega (t_0)=N\cdot \int _{{\tilde{e}}}\omega (t_0), \end{aligned}$$
and the remaining task is to determine the integer N. This can be done by a Picard–Lefschetz type formula introduced in [8]. To understand its content, we first need the notion of an intersection index. There are quite general definitions of this concept.Footnote 10 But for our purposes it suffices to consider the intersection index of two compact oriented manifolds, with or without boundary, intersecting transversally and with dimensions adding up to the dimension of the whole space.

Proposition 21

[19] Let M be a smooth manifold of dimension n and \(S_1,S_2\subset M\) two compact oriented submanifolds (with or without boundary) of dimensions p and \(n-p\) such that

$$\begin{aligned} \partial S_1\cap S_2=S_1\cap \partial S_2=\emptyset . \end{aligned}$$

If \(S_1\) and \(S_2\) intersect transversally, then \(S_1\cap S_2\) consists of a finite number of isolated points and the intersection index \(\langle S_2\vert S_1\rangle \) of \(S_1\) and \(S_2\) is given by

$$\begin{aligned} \langle S_2\vert S_1\rangle =N_+-N_-, \end{aligned}$$
where \(N_+\) (resp. \(N_-\)) is the number of points in \(S_1\cap S_2\) at which the orientations of \(S_1\) and \(S_2\) match (resp. do not match).
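The signed count \(N_+-N_-\) of Proposition 21 can be computed directly in the simplest setting of two oriented curves in \({\mathbb {R}}^2\), approximated by polylines. The following sketch rests on our own assumptions and does not reproduce the text's ordering convention for \(\langle S_2\vert S_1\rangle \). A crossing counts as +1 when the direction vectors \((d_1,d_2)\) of the first and second curve form a positively oriented basis of \({\mathbb {R}}^2\). Swapping the curves flips the sign here, since \((-1)^{1\cdot 1}=-1\). Endpoint hits are handled with half-open segments, and parallel (non-transversal) segments are skipped.

```python
import math


def cross(a, b):
    """Determinant of the 2x2 matrix with columns a and b."""
    return a[0] * b[1] - a[1] * b[0]


def intersection_index(curve1, curve2):
    """Signed count of transversal crossings of two oriented polylines in R^2:
    each crossing contributes sign(det[d1, d2])."""
    total = 0
    for i in range(len(curve1) - 1):
        p = curve1[i]
        r = (curve1[i + 1][0] - p[0], curve1[i + 1][1] - p[1])
        for j in range(len(curve2) - 1):
            q = curve2[j]
            w = (curve2[j + 1][0] - q[0], curve2[j + 1][1] - q[1])
            denom = cross(r, w)
            if denom == 0:
                continue  # parallel segments are not transversal; skipped
            qp = (q[0] - p[0], q[1] - p[1])
            s = cross(qp, w) / denom  # p + s r = q + u w
            u = cross(qp, r) / denom
            if 0 <= s < 1 and 0 <= u < 1:
                total += 1 if denom > 0 else -1
    return total


M = 64  # counterclockwise unit circle, vertices offset to avoid the x-axis
circle = [(math.cos(2 * math.pi * (k + 0.5) / M), math.sin(2 * math.pi * (k + 0.5) / M))
          for k in range(M + 1)]
```

For instance, the circle meets the segment from \((0,0)\) to \((2,0)\) once, with index \(-1\) in this convention. Against the segment from \((-2,0)\) to \((2,0)\), the two crossings cancel.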

We can express the integer N from (76) as the intersection index of the vanishing cell with the integration cycle as follows:

Theorem 22

(Picard–Lefschetz Formula, [19]) The integer N in Eq. (76) is given by

where h is the integration cycle at t.

In this paper, we do not explicitly compute the intersection index relevant for one-loop Feynman integrals since it involves a very different array of techniques, mostly from homological algebra. Therefore, this step is postponed to the second part of this work.

2.3.4 Variation and discontinuity along products of loops

So far, we only discussed how to compute the variation and discontinuity for simple pinches along simple loops around points of codimension 1. To fully understand the discontinuities of one-loop Feynman integrals, it is useful to be able to relate the variation along products of loops to the variation along the individual factors. For the case of simple pinches, we have the following:

Lemma 23

Let \([\gamma _1],[\gamma _2]\in \pi _1(T\backslash L,t_0)\) be two simple loops. Then,

and

Proof

Let \(h\in H_n(Y_{t_0})\). Denote \(\text {Var}_{[\gamma _i]}h=:N_i{\tilde{e}}_i\) for \(i=1,2\). We compute

The corresponding formula for the discontinuity follows immediately. \(\square \)

We can easily generalize this to arbitrary finite products of loops:

Proposition 24

Let \([\gamma _1],\ldots ,[\gamma _k]\in \pi _1(T^*,t_0)\) be simple loops. Then,

and

Proof

We proceed by induction on k. For \(k=1\), the formulas are trivial. For the induction step, we compute

where we used Lemma 23 in the first step and the induction hypothesis in the second step. Again the formula for the discontinuity follows immediately. \(\square \)

With Proposition 24, we can compute the discontinuity along any loop around points where codimension 1 parts of the Landau surface intersect in general position.

3 Feynman graphs and integrals

Now, we turn to the study of Feynman integrals as holomorphic functions in the external momenta. To ensure that there are no ambiguities regarding the notation, conventions and terminology employed in this work and to revisit the basic notions of the field for the non-expert reader, we quickly recall the definition of Feynman graphs and integrals. For a more detailed exposition, the reader is referred to [25] or [26]. A graph always means a finite multi-graph in this text. For a graph G, we denote its underlying set of vertices by V(G) and its underlying (multi-)set of edges by E(G). The first Betti number of G, which is the maximal number of independent cycles, is denoted by \(h_1(G)\). It is customary to say that G has \(h_1(G)\) loops. For us, a Feynman graph means a graph together with some additional information specifying how many particles are in- or outgoing at each vertex. More specifically, we make the following

Definition 15

A Feynman graph \((G,\phi )\) is a graph G together with a map \(\phi :V(G)\rightarrow {\mathbb {N}}\) called the external structure.

This is not the most general definition of Feynman graphs. In many physical theories, for example quantum electro- or chromodynamics, one wants to distinguish different types of edges (see Fig. 1 for two examples). The essential features of the analytic structure of the parameter-dependent integrals associated with such a graph are, however, already captured in our setting. So we do not go into detail regarding these more general Feynman graphs and integrals.

A Feynman graph (or Feynman diagram as they are sometimes called) is a representation of a collection of possible ways elementary particles can interact: The external structure represents in- and outgoing particles. It assigns each vertex a number of external momenta. It is common to draw a pictorial representation of a Feynman graph \((G,\phi )\) by drawing the underlying graph G as usual and then attaching \(\phi (v)\) lines not connected to a second vertex to each vertex \(v\in V(G)\).
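The incidence matrix \({\mathcal {E}}\) and the loop number \(h_1(G)\) that enter the momentum-space representation can be computed mechanically. The following sketch assumes vertices labelled \(0,\ldots ,\vert V(G)\vert -1\) and edges given as ordered pairs encoding the chosen orientation. It assumes the sign convention of +1 at the source and -1 at the target, which the text does not specify. The sketch uses \(h_1(G)=\vert E(G)\vert -\text {rank}\,{\mathcal {E}}\), the dimension of the kernel of \({\mathcal {E}}\). That kernel parametrizes the solutions of momentum conservation for fixed external momenta, which is why exactly \(h_1(G)\) internal momenta remain after the \(\delta \)s are integrated out. The function names are hypothetical.

```python
import numpy as np


def incidence_matrix(num_vertices, edges):
    """|V| x |E| incidence matrix of an oriented multigraph; the edge (u, v)
    points from u to v (assumed convention: +1 at the source, -1 at the target)."""
    inc = np.zeros((num_vertices, len(edges)), dtype=int)
    for j, (u, v) in enumerate(edges):
        if u == v:
            continue  # a self-loop gives a zero column
        inc[u, j] += 1
        inc[v, j] -= 1
    return inc


def loop_number(num_vertices, edges):
    """First Betti number h_1(G) = |E(G)| - rank(E), i.e. dim ker(E)."""
    return len(edges) - np.linalg.matrix_rank(incidence_matrix(num_vertices, edges))


bubble = [(0, 1), (1, 0)]
triangle = [(0, 1), (1, 2), (2, 0)]
box = [(0, 1), (1, 2), (2, 3), (3, 0)]
sunset = [(0, 1), (0, 1), (0, 1)]  # a two-loop example for comparison
```

The bubble, triangle and box graphs all have one loop, while the sunset graph has two.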

To each Feynman graph G, the Feynman rules assign a Feynman integral, which contributes to the probability amplitude of a given process. There are several equivalent descriptions of this procedure. Here, we focus on the so-called momentum space representation, which is obtained from a Feynman graph \((G,\phi )\) in the following manner: First, we equip the graph G with an arbitrary orientation and denote by \({\mathcal {E}}\in M(\vert V(G)\vert \times \vert E(G)\vert ;{\mathbb {Z}})\) the corresponding incidence matrix. For each edge \(e\in E(G)\), we write down a factor of \(\frac{1}{(k_e^2+m_e^2)^{\lambda _e}}\) (called the propagator), where \(k_e\in {\mathbb {C}}^D\) is called the internal momentum and \(m_e\in {\mathbb {R}}_{\ge 0}\) the mass associated with the edge e. The \(\lambda _e\) are generally complex numbers with positive real part called analytic regulators. For each vertex \(v\in V(G)\), we write down a constant factor (which we ignore in this text since it does not influence the analytic structure) and assign \(\phi (v)\) external momenta \(p_{v,1},\ldots ,p_{v,\phi (v)}\in {\mathbb {C}}^D\) to it. We denote the total external momentum at v by \(p_v:=\sum _{i=1}^{\phi (v)}p_{v,i}\). Then, we enforce momentum conservation at every vertex \(v\in V(G)\), i.e., we insist on the internal and external momenta satisfying \(\sum _{e\in E(G)}{\mathcal {E}}_{v,e}k_e+p_v=0\) for every \(v\in V(G)\).Footnote 11 Usually, one wants to factor out the overall momentum conservation, i.e., the condition \(\sum _{v\in V(G)}p_v=0\), as it does not depend on the internal momenta \(k_e\). To do this, one may fix some vertex \(v_0\in V(G)\) and drop the momentum conservation at \(v_0\). The Feynman integral I(G) corresponding to a Feynman graph G thus reads

Integrating out the \(\delta \)s leads to a linear system of equations for the \(k_e\), and it can be shown that all but \(h_1(G)\) of the internal momenta can be eliminated by solving this system. The remaining internal momenta are then integrated over, and the result reads

where \(K_e:{\mathbb {C}}^{h_1(G)D}\rightarrow {\mathbb {C}}^D\) and \(P_e:{\mathbb {C}}^{(\sum _{v\in V(G)}\phi (v))D}\rightarrow {\mathbb {C}}^D\) are linear maps for all \(e\in E(G)\). The resulting propagators, and thus the maps \(K_e\) and \(P_e\), are not uniquely determined, but the result of the integration (if it is well-defined at all) is independent of the remaining freedom of choice. In physics, one is almost always concerned with Minkowski momenta in order to adhere to the principles of special relativity. This means that in the physics literature the internal momenta are taken to be real, while the momentum square is typically defined as \(k^2:=-k_0^2+k_1^2+\cdots +k_{D-1}^2\).Footnote 12 Unfortunately, this immediately leads to problems, since the integration domain now contains the poles at \(k_e^2+m_e^2=0\). The usual way to avoid this issue is to introduce a small complex shift in the propagator by replacing \((k_e^2+m_e^2)^{-\lambda _e}\) with \((k_e^2+m_e^2-i\epsilon )^{-\lambda _e}\) for some \(0<\epsilon \ll 1\). Then, the integration is carried out and the limit \(\epsilon \rightarrow 0^+\) is taken at the very end of the calculation. This is called the \(i\epsilon \)-prescription. We shall see that this is not satisfactory for our purposes, so we take a different route: In our setting, it is necessary to consider complex internal momenta. The Minkowski momenta can then be identified with those momenta that have a purely imaginary 0th component and all remaining components real. We, however, start with the entirely real integration domain \({\mathbb {R}}^D\), which never meets the zero locus of \(k_e^2+m_e^2\) as long as \(m_e^2>0\), instead of integrating over all Minkowski momenta \(i{\mathbb {R}}\times {\mathbb {R}}^{D-1}\) (in the physics literature, the resulting integral is known as a Euclidean Feynman integral). This serves as the starting point for an analytic continuation. In Sect. 2, we saw how an analytic continuation requires us to continuously deform the integration domain as we move along a path in the space of external momenta. In Sect. 4, we show explicitly how this is done for one-loop Feynman integrals when continuing from Euclidean to Minkowski external momenta, and we explain how this agrees with the \(i\epsilon \)-prescription. For now, we simply define our Feynman integrals as Euclidean integrals.
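The identification of Minkowski momenta with points of \({\mathbb {C}}^D\) can be illustrated numerically. The Python sketch below assumes that \(k^2\) for a complex momentum means the complex-bilinear square \(\sum _jk_j^2\) (no complex conjugation); the helper names `square` and `minkowski_to_complex` are hypothetical. A real Minkowski vector is embedded with a purely imaginary 0th component, so its square reproduces \(-k_0^2+k_1^2+\cdots +k_{D-1}^2\) and an on-shell momentum hits the propagator pole, whereas on the Euclidean domain \({\mathbb {R}}^D\) the denominator never drops below \(m^2>0\).

```python
import numpy as np

def square(k):
    """Complex-bilinear square k^2 = sum_j k_j^2 (no complex conjugation)."""
    k = np.asarray(k, dtype=complex)
    return np.sum(k * k)

def minkowski_to_complex(k_minkowski):
    """Embed a real Minkowski vector (k_0, ..., k_{D-1}) into C^D by
    making its 0th component purely imaginary."""
    k = np.asarray(k_minkowski, dtype=complex).copy()
    k[0] *= 1j
    return k

m = 1.0
# On-shell Minkowski momentum: -k_0^2 + k_1^2 + k_2^2 = -2 + 1 + 0 = -m^2.
k = minkowski_to_complex([np.sqrt(2.0), 1.0, 0.0])
print(abs(square(k) + m**2) < 1e-12)   # True: the propagator has a pole here

# On the Euclidean domain R^D the denominator never drops below m^2 > 0.
rng = np.random.default_rng(0)
samples = rng.normal(size=(1000, 3))
print(np.all(np.sum(samples**2, axis=1) + m**2 >= m**2))   # True
```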

Definition 16

Let G be a Feynman graph. The corresponding Feynman integral in momentum space representation in \(D\in {\mathbb {N}}\) dimensions is
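\[I(G)(p):=\int _{({\mathbb {R}}^D)^{h_1(G)}}\prod _{e\in E(G)}\frac{1}{\big ((K_e(k)+P_e(p))^2+m_e^2\big )^{\lambda _e}}\,\mathrm {d}^{h_1(G)D}k,\]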
where \(K_e\) and \(P_e\) are the linear maps obtained as described above.
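Two linear-algebra facts behind this construction can be checked on small graphs: every column of the incidence matrix sums to zero, so summing the vertex conditions gives \(\sum _vp_v=0\), and per spacetime component the homogeneous system \({\mathcal {E}}k=0\) has a solution space of dimension \(\vert E(G)\vert -\text {rank}\,{\mathcal {E}}=h_1(G)\), the number of internal momenta left to integrate over. The following minimal Python/NumPy sketch assumes the sign convention \({\mathcal {E}}_{v,e}=+1\) for an edge entering v and \(-1\) for an edge leaving v (the text does not fix one); the function names are hypothetical.

```python
import numpy as np

def incidence_matrix(num_vertices, edges):
    """Incidence matrix of an oriented graph given as (tail, head) pairs.

    Assumed sign convention: E[v, e] = +1 if edge e enters v, -1 if it
    leaves v, 0 otherwise (a self-loop therefore yields a zero column).
    """
    E = np.zeros((num_vertices, len(edges)), dtype=int)
    for e, (tail, head) in enumerate(edges):
        E[tail, e] -= 1
        E[head, e] += 1
    return E

def first_betti_number(num_vertices, edges):
    """h_1(G) as the nullity |E(G)| - rank(E) of the incidence matrix, i.e.
    the number of edge momenta left free by the vertex conditions."""
    E = incidence_matrix(num_vertices, edges)
    return len(edges) - np.linalg.matrix_rank(E)

triangle = [(2, 0), (0, 1), (1, 2)]          # C_3
sunset = [(0, 1), (0, 1), (1, 0)]            # two vertices, three edges
print(incidence_matrix(3, triangle).sum(axis=0))   # [0 0 0]
print(first_betti_number(3, triangle), first_betti_number(2, sunset))  # 1 2
```

The rank computation works for disconnected graphs as well, since the rank of the incidence matrix drops by one for every additional connected component.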

As mentioned above, we have omitted some constant factors in this definition as they do not play a role in the analytic structure. These factors are only needed to compare numerical values obtained from Feynman integrals with experiment. Note that it suffices to understand the bridgeless graphs G, called one-particle-irreducible (1PI) graphs in the physics literature, to understand all Feynman integrals, as integrals corresponding to graphs with bridges factorize into 1PI contributions.

Up to this point, we have not stated on which complex manifold we would like to (or even can) regard a Feynman integral as a function. In this work, we focus on the dependence on the external momenta. The masses are regarded as fixed and positive (the massless case works differently and we postpone its discussion to future research). Thus, an obvious choice would be \(({\mathbb {C}}^D)^{\sum _{v\in V(G)}\phi (v)}\). But this can be simplified. First of all, overall momentum conservation restricts the external momenta to a linear subspace. In particular, we can express one of the momenta as minus the sum of all the others, so that \(({\mathbb {C}}^D)^{\sum _{v\in V(G)}\phi (v)-1}\) is a sufficient space to work with. Furthermore, it is well known in physics that a Feynman integral is Lorentz-invariant, i.e., applying the same Lorentz transformation to all external momenta does not change the value of the integral. In our setup, this statement takes a slightly different form: Since we need to work with arbitrary complex momenta and not just Minkowski momenta, our transformation group is different. But the general idea stays the same: The integral should be invariant under all linear transformations of the external momenta leaving all products \(p_ip_j\) unchanged. This group is the Lie group \(O(D,{\mathbb {C}})\) of complex orthogonal \(D\times D\)-matrices. We define an action of \(O(D,{\mathbb {C}})\) on \(({\mathbb {C}}^D)^n\) by
\[g\cdot (p_1,\ldots ,p_n):=(gp_1,\ldots ,gp_n)\]
for all \(g\in O(D,{\mathbb {C}})\) and all \(n\in {\mathbb {N}}^*\), where the multiplication on the right is just regular matrix-vector multiplication. The invariance of Feynman integrals under \(O(D,{\mathbb {C}})\) for the one-loop case is proven in the next section.
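The defining property \(g^Tg=1\) of \(O(D,{\mathbb {C}})\) (with the transpose, not the conjugate transpose) is what makes all bilinear products \(p_ip_j\) invariant under this action. The Python sketch below checks this numerically. Producing complex orthogonal matrices via the Cayley transform of a random complex antisymmetric matrix is our own choice; it only reaches elements of determinant 1, and the helper names are hypothetical. The sketch illustrates the invariance of the matrix \((p_ip_j)_{i,j}\) only, not the invariance of the Feynman integral itself.

```python
import numpy as np

def random_complex_orthogonal(D, rng):
    """Element g of O(D, C) (g^T g = 1) from the Cayley transform
    g = (1 - A)^{-1} (1 + A) of a random complex antisymmetric A.
    Cayley transforms have determinant 1; 1 - A is invertible for generic A."""
    X = rng.normal(size=(D, D)) + 1j * rng.normal(size=(D, D))
    A = 0.3 * (X - X.T)
    I = np.eye(D)
    return np.linalg.solve(I - A, I + A)

def act(g, momenta):
    """g.(p_1, ..., p_n) = (g p_1, ..., g p_n); the momenta are the rows."""
    return momenta @ g.T

def gram(momenta):
    """Matrix of bilinear products p_i p_j (transpose, no conjugation)."""
    return momenta @ momenta.T

rng = np.random.default_rng(1)
D, n = 4, 3
g = random_complex_orthogonal(D, rng)
p = rng.normal(size=(n, D)) + 1j * rng.normal(size=(n, D))
print(np.allclose(g.T @ g, np.eye(D)))
print(np.allclose(gram(act(g, p)), gram(p)))
```

Using the conjugate transpose in `gram` would test unitary rather than complex orthogonal invariance, which is not the symmetry relevant here.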

4 One-loop Feynman integrals

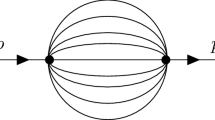

We now begin our investigation of one-loop Feynman graphs, i.e., Feynman graphs G with \(h_1(G)=1\). As mentioned in Sect. 3, it suffices to consider the 1-particle-irreducible graphs to understand their analytic structure. The 1PI graphs with one loop are the cycle graphs \(C_n\) (viewed as Feynman graphs) shown in the following figure:

On the level of (multi-)sets, this means

Note that we assigned exactly one external momentum to each vertex, i.e., the external structure \(\phi \) of the graphs under consideration is simply given by \(\phi (v)=1\) for all \(v\in V(C_n)\).Footnote 13 We could consider more general external structures, but this bears no relevance to our discussion: If there is more than one line attached to a vertex, the integral depends only on the sum of all external momenta going into that vertex. In the coordinates we chose, the general one-loop Feynman integral in momentum space representation in D dimensions from Definition 16 reads
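\[I(C_n)(p)=\int _{{\mathbb {R}}^D}\prod _{i=1}^{n}\frac{1}{\big ((k+\sum _{j=1}^{i-1}p_j)^2+m_i^2\big )^{\lambda _i}}\,\mathrm {d}^Dk \tag{91}\]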
To ease notation, we set \(P^{(i)}(p):=\sum _{j=1}^{i-1}p_j\) for all \(i\in \{1,\ldots ,n\}\). Recall that we agreed to fix all masses \(m_i\) to be real and positive. Of course, the integral (91) is not well-defined in general. But for \(p\in ({\mathbb {R}}^D)^{n-1}\) the integral (91) converges absolutely if and only if \(2\text {Re}(\lambda )>D\). This is an application of Weinberg’s famous Power Counting Theorem [24] in its simplest form (where no subdivergences need to be considered, since all proper subgraphs of \(C_n\) are forests and hence trivially “converge” as there is no integration to be performed).
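With edge momenta \(k+P^{(i)}(p)\), \(i=1,\ldots ,n\), and \(p_n:=-\sum _{j=1}^{n-1}p_j\), the vertex conditions \(\sum _e{\mathcal {E}}_{v,e}k_e+p_v=0\) from Sect. 3 hold for every value of the loop momentum k. The Python sketch below verifies this numerically for \(C_4\). The orientation (edge i runs from vertex i-1 to vertex i, indices mod n), the sign convention of the incidence matrix and the function names are assumptions made for illustration; the text fixes the routing only through the figure.

```python
import numpy as np

def loop_momenta(k, p):
    """Edge momenta k + P^(i)(p), i = 1..n, with P^(i)(p) = p_1 + ... + p_{i-1}.

    k has shape (D,); p has shape (n-1, D) with rows p_1, ..., p_{n-1}.
    Returns an (n, D) array whose row i-1 is k + P^(i)(p).
    """
    partial_sums = np.vstack([np.zeros_like(k), np.cumsum(p, axis=0)])
    return k + partial_sums

def cycle_incidence(n):
    """Incidence matrix of C_n, assuming edge i runs from vertex i-1 to
    vertex i (0-based, mod n), with +1 for entering and -1 for leaving."""
    E = np.zeros((n, n))
    for e in range(n):
        E[(e - 1) % n, e] -= 1.0
        E[e, e] += 1.0
    return E

rng = np.random.default_rng(2)
n, D = 4, 3
p = rng.normal(size=(n - 1, D))
p_all = np.vstack([p, -p.sum(axis=0)])     # p_n fixed by overall conservation
K = loop_momenta(rng.normal(size=D), p)
residual = cycle_incidence(n) @ K + p_all  # row v: sum_e E[v, e] k_e + p_v
print(np.allclose(residual, 0.0))          # True
```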

The Feynman graph \(C_2\) will accompany us as a running example throughout this section to illustrate all the ideas as they occur:

Example 1

Consider the Feynman graph \(C_2\):

In terms of (multi-)sets, this means \(C_2=(G,\varphi )\) with

and \(\varphi (v)=1\) for \(v=1,2\). The corresponding Feynman integral in D dimensions reads
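\[I(C_2)(p_1)=\int _{{\mathbb {R}}^D}\frac{\mathrm {d}^Dk}{(k^2+m_1^2)^{\lambda _1}\big ((k+p_1)^2+m_2^2\big )^{\lambda _2}}\]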
It does not depend on \(p_2\); indeed, momentum conservation demands \(p_2=-p_1\).
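For a concrete instance of Example 1, take the toy case \(D=1\) with \(\lambda _1=\lambda _2=1\) and real \(p_1\); this choice is ours and serves only as an illustration. Both factors of the integrand are then, up to normalization, Lorentzian (Cauchy) densities, and since the convolution of Lorentzians of widths \(m_1\) and \(m_2\) is a Lorentzian of width \(m_1+m_2\), one obtains
\[\int _{{\mathbb {R}}}\frac{\mathrm {d}k}{(k^2+m_1^2)\big ((k+p_1)^2+m_2^2\big )}=\frac{\pi (m_1+m_2)}{m_1m_2\big (p_1^2+(m_1+m_2)^2\big )}.\]
The Python sketch below compares this closed form with Gauss-Legendre quadrature after the substitution \(k=\tan (\pi x/2)\), which maps \((-1,1)\) onto \({\mathbb {R}}\); the function names are hypothetical.

```python
import numpy as np

def bubble_d1_numeric(p, m1, m2, nodes=200):
    """Toy bubble in D = 1 with lambda_1 = lambda_2 = 1:
        int_R dk / ((k^2 + m1^2) ((k + p)^2 + m2^2)).
    Substituting k = tan(pi x / 2) maps x in (-1, 1) onto R and yields a
    smooth integrand, integrated here with Gauss-Legendre quadrature."""
    x, w = np.polynomial.legendre.leggauss(nodes)
    theta = 0.5 * np.pi * x
    k = np.tan(theta)
    jacobian = 0.5 * np.pi / np.cos(theta) ** 2   # dk/dx
    f = 1.0 / ((k**2 + m1**2) * ((k + p) ** 2 + m2**2))
    return np.sum(w * f * jacobian)

def bubble_d1_closed(p, m1, m2):
    """Closed form pi (m1 + m2) / (m1 m2 (p^2 + (m1 + m2)^2))."""
    return np.pi * (m1 + m2) / (m1 * m2 * (p**2 + (m1 + m2) ** 2))

for p in (0.0, 0.5, 3.0):
    print(p, bubble_d1_numeric(p, 1.0, 2.0), bubble_d1_closed(p, 1.0, 2.0))
```

Since \(D=1<2(\lambda _1+\lambda _2)\), the integral converges absolutely, in line with the power counting criterion above.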

For reasons that become apparent further below, we exclude a certain subset of momenta from our parameter space:

Definition 17

For all \(n\in {\mathbb {N}}^*\), we define

The configurations of external momenta in \(({\mathbb {C}}^D)^{n-1}\backslash T_n\) are collinear and behave rather differently. Examples have shown that for these momentum configurations, Feynman integrals exhibit poles instead of essential singularities and hence, there is no associated monodromy. A discussion of these points is beyond the scope of this paper and postponed to future research. Note that \(p\in T_n\) implies that the momenta \(p_1,\ldots ,p_{n-1}\) are linearly independent (over \({\mathbb {C}}\)); in particular, \(T_n\ne \emptyset \) if and only if \(D\ge n-1\). In the course of this section, we will find that for momenta in \(T_n\) only simple pinches occur, which can be analyzed by the techniques from Sect. 2.3.

4.1 Compactification and stratification

In the form (91), we cannot yet apply the techniques from Sect. 2.3 to \(I(C_n)\). We first have to compactify the integration cycle as well as its ambient space. In the one-loop case, this can be done without substantial problems; for details on the problems that occur when working with multiple loops, see [17]. There are various ways to achieve a compactification, but we stick to the arguably simplest one for the purpose of this work. Further below, we show that in the cases we are interested in, genuine pinches which trap the integration cycle appear only at points \(k\in {\mathbb {C}}^D\) at “finite distance,” i.e., outside of the set of additional points the compactification introduces. So the chosen compactification is not particularly important for our purposes.

There is one rather obvious way to achieve the desired compactification in the case of odd D. We view the integration domain \({\mathbb {R}}^D\) as being embedded in the complex analytic manifold \({\mathbb {C}}^D\). The ambient space \({\mathbb {C}}^D\) can in turn be viewed as being embedded in the compact complex analytic manifold \({\mathbb {C}}{\mathbb {P}}^D\). Applying the pull-back of the inverse of the natural inclusion
\[i:{\mathbb {C}}^D\hookrightarrow {\mathbb {C}}{\mathbb {P}}^D,\quad k\mapsto [1:k]\]
restricted to its image (i.e., viewed as a biholomorphic map \({\mathbb {C}}^D\overset{\sim }{\rightarrow }i({\mathbb {C}}^D)={\mathbb {C}}{\mathbb {P}}^D\backslash H_\infty \)) to the integral (91), we obtain

where

is the differential form from Lemma 2. Here, we denote the additional (homogeneous) coordinate introduced by the inclusion into complex projective space by u instead of \(k_0\) since, as mentioned above, it is customary in the physics literature to index the components of k from 0 to \(D-1\) instead of from 1 to D. This notation also helps to render the conceptual difference between the coordinate u and the coordinates given by the D components of k more visible. Note also that we replaced the integration domain \(i({\mathbb {R}}^D)\) by its closure \(\overline{i({\mathbb {R}}^D)}={\mathbb {R}}{\mathbb {P}}^D\). This does not affect the value of the integral as \({\mathbb {R}}{\mathbb {P}}^D-i({\mathbb {R}}^D)=H_\infty \cap {\mathbb {R}}{\mathbb {P}}^D\) has Lebesgue measure 0.
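To see why passing to homogeneous coordinates is harmless, consider a single homogenized propagator denominator. Judging from the gradient computation further below, we assume it has the form \(Q(u,k)=(k+uP)^2+u^2m^2\), where P stands for \(P^{(i)}(p)\) with real p and the square is complex-bilinear. The Python sketch checks three properties: setting \(u=1\) recovers \((k+P)^2+m^2\); Q is homogeneous of degree 2, so its zero set is a well-defined subset of \({\mathbb {C}}{\mathbb {P}}^D\); and Q is positive at real points \((u,k)\ne 0\), so \({\mathbb {R}}{\mathbb {P}}^D\) avoids that zero set. The function name `Q` is hypothetical.

```python
import numpy as np

def Q(u, k, P, m):
    """Assumed homogenized denominator Q(u, k) = (k + u P)^2 + u^2 m^2,
    with the complex-bilinear square (no conjugation)."""
    v = k + u * P
    return np.sum(v * v) + u**2 * m**2

rng = np.random.default_rng(3)
D, m = 3, 1.5
P = rng.normal(size=D)                          # plays the role of P^(i)(p), p real
k = rng.normal(size=D) + 1j * rng.normal(size=D)
t = 0.7 - 2.0j

# Setting u = 1 recovers the affine denominator (k + P)^2 + m^2.
print(np.isclose(Q(1.0, k, P, m), np.sum((k + P) ** 2) + m**2))     # True
# Degree-2 homogeneity: Q(t u, t k) = t^2 Q(u, k).
print(np.isclose(Q(t, t * k, P, m), t**2 * Q(1.0, k, P, m)))        # True
# For real (u, k) != 0, Q is a sum of squares plus u^2 m^2, hence > 0.
print(Q(rng.normal(), rng.normal(size=D), P, m) > 0)                # True
```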

The same idea works for even D with a slight modification: In this case, \({\mathbb {R}}{\mathbb {P}}^D\) is not orientable, so the integral (96) is not defined. However, we can still apply the program from [19] by lifting to the oriented double cover \(S^D\) of \({\mathbb {R}}{\mathbb {P}}^D\). The geometric part of the analysis, which does not require the integration cycle to be oriented, can be performed on the level of \({\mathbb {R}}{\mathbb {P}}^D\) embedded in the compact space \({\mathbb {C}}{\mathbb {P}}^D\), while the actual integration is performed on the double cover. The details can be found in the second part of this work.

Now, we need to establish some notation. We write

for all \(p\in ({\mathbb {C}}^D)^{n-1}\). For all \(i\in \{1,\ldots ,n\}\), we set

and denote the corresponding fiber (with respect to the obvious projection) at \(p\in T_n\) by

Furthermore, we set \(S:=\bigcup _{i=1}^nS_i\) and \(S(p):=\bigcup _{i=1}^nS_i(p)\) for all \(p\in T_n\). Note that \(S_i(p)\) (resp. \(S_i\)) is a complex analytic closed submanifold of \({\mathbb {C}}{\mathbb {P}}^D\) (resp. \({\mathbb {C}}{\mathbb {P}}^D\times T_n\)) of (complex) codimension 1 for all \(p\in T_n\) and all \(i\in \{1,\ldots ,n\}\) by Proposition 1. Indeed, the gradient of the defining equation \(Q_i(p)(u,k)=0\) in \({\mathbb {C}}^{D+1}\backslash \{0\}\) vanishes nowhere:

implies \(k=-uP^{(i)}(p)\) by the first D equations and thus, \(u^2m_i^2=0\) by the last equation. Since \(m_i^2\ne 0\), this means \(u=0\), which in turn implies \(k=0\), contradicting \((u,k)\ne 0\). Thus, the zero locus S(p) is the union of a finite number of closed complex analytic submanifolds for all \(p\in T_n\). The integration domain \({\mathbb {R}}{\mathbb {P}}^D\) is now a compact D-cycle in \({\mathbb {C}}{\mathbb {P}}^D\backslash S(p)\) for any \(p\in ({\mathbb {R}}^D)^{n-1}\). Indeed, for \((u,k)\in {\mathbb {R}}^{D+1}\backslash \{0\}\), \(p\in ({\mathbb {R}}^D)^{n-1}\) and \(i\in \{1,\ldots ,n\}\), the equation
\[Q_i(p)(u,k)=\big (k+uP^{(i)}(p)\big )^2+u^2m_i^2=0\]
implies \(u=0\) (the left-hand side being a sum of squares of real numbers and \(m_i>0\)) and thus \(k=0\), again a contradiction. The integrand of (96) is a holomorphic D-form on \({\mathbb {C}}{\mathbb {P}}^D\backslash S(p)\) for all \(p\in T_n\), which depends holomorphically on p, i.e.,

Therefore, we can conclude that \(I(C_n)\) defines a holomorphic function outside of its Landau surface. Now, we would like to compute the Landau surface of \(I(C_n)\) as in Definition 11. Further below, we introduce a stratification on S which allows us to effectively ignore what happens at infinity and to see that the Landau surface is given precisely by all \(p\in T_n\) such that the finite parts of the \(S_i(p)\) are not in general position. This is the case if and only if there is an index set \(I\subset \{1,\ldots ,n\}\) and a point \(k\in \bigcap _{i\in I}(S_i(p)-H_\infty )\) (where we implicitly use inhomogeneous coordinates) such that the normal vectors of the \(S_i(p)-H_\infty \) with \(i\in I\) at k are linearly dependent. Thus, the Landau surface consists of all points \(p\in T_n\) for which a solution

with

and