Abstract

Distributional data have recently become increasingly important for understanding processes in the geosciences, thanks to the establishment of cost-efficient analytical instruments capable of measuring properties over large numbers of particles, grains or crystals in a sample. Functional data analysis allows the direct application of multivariate methods, such as principal component analysis, to such distributions. However, these distributions are often observed in the form of samples and thus incur a sampling error. This additional sampling error changes the properties of the multivariate variance and thus the number of relevant principal components and their directions. The result of the principal component analysis then becomes an artifact of the sampling error and can negatively affect the subsequent data analysis. This work presents a way of estimating this sampling error and of accounting for it in principal component analysis, where the principal components are obtained as linear combinations of elements of a newly constructed orthogonal spline basis. The effect of the sampling error and the effectiveness of the correction are demonstrated with a series of simulations. It is shown how the interpretability and reproducibility of the principal components improve and become independent of the selection of the basis. The proposed method is then applied to a geometallurgical dataset of grain size distributions from the Thaba mine in the Bushveld Complex.


1 Introduction

With the advent of new analytical instrumentation, it is becoming increasingly common in the geosciences to acquire distributional data, such as the particle size distribution of sediments, the grain size distribution of specific minerals in metamorphic rocks, the age distribution of rutile crystals in sediments, the abundance of geologically relevant trace minerals in grains of specific minerals (a.k.a. varietal studies) or the distribution of the mineral composition of particles in milled ores. As distributions are inherently high-dimensional objects with many degrees of freedom, it is of interest to understand their variability, which for standard multivariate data is commonly unraveled via principal component analysis (PCA).

Bayes spaces (van den Boogaart et al. 2014) provide a metric linear space structure for probability distributions and thus theoretically allow us to generalize PCA directly to distributions (Hron et al. 2016). Such analysis provides distribution-valued principal components (eigenfunctions), real-valued scores, variance contributions interpretable in the linear space structure, as well as biplots, screeplots, compressed representations and the like. There are, however, a few practical challenges, due to the infinite dimensionality of the space of distributions on continuous scales: (i) It is not obvious how to handle infinite-dimensional objects on a computer. (ii) Distributions can typically only be observed with a certain sampling error, which might be quite substantial, especially in regions of low probability density.

Machalová et al. (2021) and Bortolotti (2021) provided methods to solve the first problem by representing distributions by means of splines (De Boor 1978) designed to approximate elements of a Bayes space by a finite-dimensional set of coefficients on an orthonormal basis. This way, an observed distribution can be represented by a spline, and the principal components can be calculated directly on the spline coefficients, with a possible (visual) representation in the functional sense. This paper adopts this idea to tackle the first challenge and addresses the second one, the sampling error incurred while observing the distribution. In other words, we assume that the distributions are not observed directly, but rather through a sample. An appropriate dataset for the method proposed here would thus consist either of a set of samples or of a set of histogram data, providing counts of observations in value classes. This is already a common approach in the functional data analysis (FDA) of probability density functions (Hron et al. 2016; Talská et al. 2018, 2021; Menafoglio et al. 2021). For the computation, a distributional spline (the so-called compositional spline) as defined in Machalová et al. (2021) is fitted to each of the samples. The “observed” distributions estimated from the samples are, however, not the true distributions, for two reasons:

1. The spline can only approximate the true distribution. This is typically not relevant for the analysis, as the scientifically relevant variation of the distributions will be on a scale larger than the resolution of a spline approximation. Rather, the implicit (or even explicit, that is, desired) smoothing by the spline approximation reduces the noise of irrelevant small-scale variability due to sampling.

2. The estimated distribution will typically vary around the true distribution. This variance might be quite substantial for small samples.

The second problem is essential, as it systematically changes the variance-covariance structure and thus the result of the singular value decomposition. The goal is therefore to adequately estimate the error caused by sampling and to remove it from the observed covariance structure. This contribution proposes to correct the empirical variance-covariance matrix by subtracting the variance-covariance matrix estimated for the error. This should give a clearer view of the true structure of the individual distributions, as well as a more accurate estimate of the principal components themselves. The approach is developed in the context of the (functional) distributional version of PCA, also known as simplicial functional principal component analysis (SFPCA, Hron et al. (2016)), but it can be generalized to every situation in which principal components of underlying quantities are to be inferred from observations with errors. A similar correction for observational error in discriminant analysis can be found in Pospiech et al. (2021).

The remainder of the paper is organized as follows: Sect. 2 discusses Bayes spaces for the representation of probability density functions (PDFs) and an orthonormal basis of a finite-dimensional approximation of this space in terms of compositional splines. In Sect. 3, Doob’s \(\Delta \)-method is used to compute the sampling error in this basis representation. Section 4 shows how to use a corrected variance-covariance estimator for SFPCA. The advantages and relevance of the new approach are then demonstrated both via simulations (Sect. 5) and on a real-world grain size distribution example (Sect. 6). Section 7 summarizes the practical considerations when applying the proposed methodology. The final Sect. 8 provides some conclusions and an outlook on future work.

2 Bayes Spaces and Compositional Splines

2.1 Bayes Spaces

Continuous distributions on a common interval domain are often represented by non-negative functions with unit integral (probability density functions, or PDFs). They share similar properties with their discrete counterparts, namely distributions on a finite set of discrete support points, analogous to compositional data (Pawlowsky-Glahn et al. 2015). In both cases, the relative information contained in ratios between elements (subintervals) tends to be more important than the individually considered absolute values of the PDF. The framework of compositional data analysis has thus been generalized to densities in the so-called Bayes space \(\mathcal {B}^2\) (van den Boogaart et al. 2014), which accounts for the specific properties of PDFs (Egozcue et al. 2006).

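To this end, the Aitchison geometry (Pawlowsky-Glahn and Egozcue 2001), commonly used in compositional data analysis for measuring dissimilarity between compositions, is generalized to the infinite-dimensional case. Furthermore, the standard operations of addition of functions, multiplication of a function by a scalar and the inner product used for \(L^2\) functions are reformulated for \(f,g \in \mathcal {B}^{2}, \ \alpha \in \mathbb {R}\) and \( t,u \in I = \left[ a,b\right] \) as the so-called perturbation, powering and Bayes inner product,

$$\begin{aligned} (f \oplus g)(t)= & {} \frac{f(t)\,g(t)}{\int _I f(u)\,g(u)\,\textrm{d}u}, \qquad (\alpha \odot f)(t) = \frac{f(t)^{\alpha }}{\int _I f(u)^{\alpha }\,\textrm{d}u},\\ \left\langle f,g\right\rangle _{\mathcal {B}}= & {} \frac{1}{2\eta }\int _I\int _I \ln \frac{f(t)}{f(u)}\,\ln \frac{g(t)}{g(u)}\,\textrm{d}t\,\textrm{d}u, \qquad \eta = b-a, \end{aligned}$$

resulting in a Hilbert space structure for \(\mathcal {B}^2\) (van den Boogaart et al. 2014). It is thus possible to unambiguously express objects from \(\mathcal {B}^2\) in the \(L^2\) space via the centered log-ratio (clr) transformation

$$\text {clr}(f)(t) = \ln f(t) - \frac{1}{\eta }\int _I \ln f(u)\,\textrm{d}u,$$

while maintaining the relative information of PDFs. Accordingly, the clr transformation results in a zero-integral curve from \(L^{2}(I)\), that is,

$$\int _I \text {clr}(f)(t)\,\textrm{d}t = 0. \tag{4}$$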

This way, standard FDA methods developed for objects from the \(L^2\) space (Ramsay and Silverman 2005) can be used on the clr-transformed PDFs.
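To make the clr transformation and the role of relative information concrete, the following sketch discretizes densities on a uniform midpoint grid, where the mean of \(\ln f\) approximates \(\frac{1}{\eta }\int _I \ln f\). The Beta densities, the grid size and the helper names are illustrative assumptions, not part of the original method. The sketch checks three properties: the zero-integral constraint, invariance of the clr to rescaling of \(f\), and that perturbation becomes ordinary addition in the clr space.

```python
import math
import numpy as np


def beta_pdf(t, a, b):
    """Beta(a, b) density on (0, 1), used here only as an example PDF."""
    const = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return const * t ** (a - 1) * (1 - t) ** (b - 1)


def clr(f_vals):
    """Discretized clr of a density sampled on a uniform midpoint grid.

    On a uniform grid, (1/eta) * integral of ln f is approximated by the
    mean of ln f, so the result has (numerically) zero integral.
    """
    log_f = np.log(f_vals)
    return log_f - log_f.mean()


def perturb(f_vals, g_vals, h):
    """Perturbation f (+) g: pointwise product renormalized to unit integral."""
    prod = f_vals * g_vals
    return prod / (prod.sum() * h)


# Uniform midpoint grid on the hypothetical domain I = [0, 1].
m = 2000
h = 1.0 / m
t = (np.arange(m) + 0.5) * h
f = beta_pdf(t, 2.0, 3.0)
g = beta_pdf(t, 1.5, 1.5)

clr_f = clr(f)
print(np.sum(clr_f) * h)                                     # approximately 0 (rounding only)
print(np.allclose(clr(5.0 * f), clr_f))                      # True: scale is irrelevant
print(np.allclose(clr(perturb(f, g, h)), clr_f + clr(g)))    # True: (+) becomes +
```

The last check is exact up to rounding, because the normalizing constant of \(f \oplus g\) is removed by the centering in the clr.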

In the geosciences, as well as in other fields of human activity where distributional data are naturally collected, it is rarely possible to observe PDFs in their continuous form. Normally, one is left with a discretized input, for example, a sample of the underlying random variable, either as is or pre-aggregated in the form of a histogram. In FDA, a common approach for approximating functions from discrete data is to use a B-spline representation (De Boor 1978). Machalová et al. (2021) adapted these splines to distributions in the Bayes space, giving rise to the so-called compositional splines.

2.2 Zero-Integral B-Splines and Their Orthogonalization

The clr transformation bijectively maps \(\mathcal {B}^2\) onto \(L^2_{0}\), the subspace of \(L^2\) consisting of zero-integral functions. Therefore, it is natural to construct smoothing splines in \(L_0^2(I)\) that honor the constraint expressed in (4). Smoothing splines with zero integral were first studied in Machalová et al. (2016) and Talská et al. (2018), where the necessary and sufficient conditions for the respective B-spline coefficients were provided. In Machalová et al. (2021), B-splines with zero integral (called ZB-splines) were introduced. These splines form a basis of the corresponding space, and a relationship between classical B-splines and ZB-splines, useful for practical computations, was developed. In this paper, the orthonormalized ZB-spline basis is introduced as a counterpart to the orthonormalized B-spline basis for the approximation of clr-transformed density functions.

We briefly recall the construction of ZB-splines, following the notation of Machalová et al. (2021). We denote by \(\mathcal{Z}_{k}^{\Delta \lambda }[a,b]\) the vector space of polynomial zero-integral spline functions of degree \(k>0\), \(k\in \mathbb {N}\), with a given increasing sequence of knots \(\Delta \lambda \) spanning a finite interval \(I=[a,b]\). The dimension of this space is \(g+k\). Every spline \(s_k(t) \in \mathcal{Z}_{k}^{\Delta \lambda }[a,b]\) can then be expressed as a unique linear combination of ZB-splines \(Z_i^{k+1}(t)\), that is, as

In matrix notation

where \(\textbf{Z}_{k+1}(t)=(Z_{-k}^{k+1}\left( t\right) ,\ldots ,Z_{g-1}^{k+1}\left( t\right) )\) is the collocation matrix of ZB-splines, \(\textbf{z}=\left( z_{-k},\ldots ,z_{g-1}\right) ^{\top }\), \(\textbf{B}_{k+1}(t)=(B_{-k}^{k+1}\left( t\right) ,\ldots ,B_{g}^{k+1}\left( t\right) )\) is the classical collocation matrix (De Boor 1978),

and

For further details, see Machalová et al. (2021).

If we want to work in the space \(\mathcal{Z}_{k}^{\Delta \lambda }[a,b]\) endowed with an orthonormalized basis, it is necessary to find a linear transformation \(\Phi \) such that

forms an orthonormal set of basis functions, that is,

where \(\textbf{I}\) is the \((g+k)\)-dimensional identity matrix. According to Machalová et al. (2021), an invertible transformation \(\Phi \) orthogonalizes the basis functions \(\textbf{Z}_{k+1}(t)\) if and only if it satisfies the condition that

where \(\textbf{G}\) represents the positive definite matrix

With respect to the definition of basis functions \(\textbf{Z}_{k+1}(t)\), the matrix \(\textbf{G}\) can be expressed as

where

The linear transformation \(\Phi \) is not unique. One way to obtain it is by means of the Cholesky decomposition of \(\textbf{G}^{-1}\). The basis functions \(\textbf{O}_{k+1}(t)=(O_{-k}^{k+1}(t),\ldots ,O_{g-1}^{k+1}(t))\) are then orthonormal and have zero integral. Finally, a spline \(s_k(t)\) with zero integral can be constructed as a linear combination of the orthonormal zero-integral splines \(O_i^{k+1}(t)\) in the form

where \(\textbf{o}\, =\,(o_{-k},\ldots ,o_{g-1})^{\top }\).
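The orthonormalization step can be illustrated numerically. The sketch below is not the authors' implementation: it assumes a ZB-spline construction of the form \(\textbf{Z}_{k+1} = \textbf{B}_{k+1}\textbf{D}\textbf{K}\), where \(\textbf{D}\) rescales each classical B-spline to unit integral and \(\textbf{K}\) takes differences of neighbouring columns, so that every column integrates to zero. The knots, the helper names `bspline_basis` and `zb_basis`, and the midpoint quadrature used for \(\textbf{G}\) are all illustrative choices. It then takes \(\Phi \) as the lower Cholesky factor of \(\textbf{G}^{-1}\), so that \(\Phi ^{\top }\textbf{G}\Phi = \textbf{I}\).

```python
import numpy as np


def bspline_basis(x, t, k):
    """All B-splines of degree k on the full knot vector t, evaluated at x (Cox-de Boor)."""
    x = np.asarray(x, dtype=float)
    # Degree 0: indicators of the knot intervals [t_i, t_{i+1}).
    B = np.array([(t[i] <= x) & (x < t[i + 1]) for i in range(len(t) - 1)], dtype=float).T
    for d in range(1, k + 1):
        B_new = np.zeros((x.size, len(t) - d - 1))
        for i in range(len(t) - d - 1):
            if t[i + d] > t[i]:
                B_new[:, i] += (x - t[i]) / (t[i + d] - t[i]) * B[:, i]
            if t[i + d + 1] > t[i + 1]:
                B_new[:, i] += (t[i + d + 1] - x) / (t[i + d + 1] - t[i + 1]) * B[:, i + 1]
        B = B_new
    return B  # shape (len(x), len(t) - k - 1)


def zb_basis(x, knots, k):
    """Hypothetical ZB-spline construction Z = B D K (zero-integral splines).

    D rescales each B-spline B_i to unit integral, (k+1)/(t_{i+k+1} - t_i), and the
    difference matrix K forms Z_i = B_i/int(B_i) - B_{i+1}/int(B_{i+1}).
    """
    t = np.concatenate([[knots[0]] * k, knots, [knots[-1]] * k])  # boundary knots repeated k+1 times
    B = bspline_basis(x, t, k)
    nb = B.shape[1]                                    # g + k + 1 classical B-splines
    D = np.diag((k + 1) / (t[k + 1:] - t[:nb]))
    K = np.zeros((nb, nb - 1))
    K[np.arange(nb - 1), np.arange(nb - 1)] = 1.0
    K[np.arange(1, nb), np.arange(nb - 1)] = -1.0
    return B @ D @ K                                   # g + k zero-integral functions


knots = np.array([0.0, 0.25, 0.5, 0.75, 1.0])          # hypothetical knots on I = [0, 1]
k = 2
m = 20_000
h = 1.0 / m
x = (np.arange(m) + 0.5) * h                           # midpoint quadrature grid
Z = zb_basis(x, knots, k)                              # shape (m, 5) = (m, g + k)

G = h * Z.T @ Z                                        # Gram matrix G = int Z^T Z dt
Phi = np.linalg.cholesky(np.linalg.inv(G))             # lower triangular, G^{-1} = Phi Phi^T
O = Z @ Phi                                            # orthonormal zero-integral basis

print(np.allclose(h * O.T @ O, np.eye(O.shape[1])))    # True: orthonormal
print(np.abs(h * O.sum(axis=0)).max() < 1e-5)          # True: zero integral up to quadrature error
```

Any other factorization with \(\Phi \Phi ^{\top } = \textbf{G}^{-1}\) (for example, a symmetric square root) would also orthonormalize the basis; the Cholesky factor is simply a cheap and stable choice.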

Now, one can proceed with the construction of a smoothing spline in \(L_0^2(I)\) using the orthonormal basis \(\textbf{O}_{k+1}(t).\) Let the data \((t_i,y_i)\), \(a\le t_i \le b\), some weights \(w_i>0\), \(i=1,\dots ,n\), and parameters \(\alpha \in (0,1]\), \(l \in \{1,\dots ,k-1\}\) be given. The task is to find a spline \(s_{k}(t)\in \mathcal{Z}_{k}^{\Delta \lambda }[a,b]\subset L_0^2(I)\) which minimizes the functional

Let us denote \(\textbf{t}=\left( t_{1},\ldots ,t_{n}\right) ^{\top }\), \(\textbf{y}=\left( y_{1},\ldots ,y_{n}\right) ^{\top }\), \(\textbf{w}=\left( w_{1},\ldots ,w_{n}\right) ^{\top }\), \(\textbf{W}=\text{ diag }\left( \textbf{w}\right) \). Using the representation (10), the functional \(J_l(s_k)\) can be written as a quadratic function

where \(\textbf{N}_{kl}\) is a positive definite matrix

In fact, the minimization of the function \(J_l(\textbf{o})\) can be rewritten as a weighted least-squares problem

where

and the matrix \(\textbf{L}\) can be taken as the Cholesky factor of \(\textbf{N}_{kl}\), that is, \(\textbf{N}_{kl}\, = \, \textbf{L}\textbf{L}^{\top }\).
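The reduction to a least-squares problem via the Cholesky factor can be sketched generically. We assume here that the quadratic function has the form \(\alpha (\textbf{y}-\textbf{X}\textbf{o})^{\top }\textbf{W}(\textbf{y}-\textbf{X}\textbf{o}) + (1-\alpha )\,\textbf{o}^{\top }\textbf{N}_{kl}\textbf{o}\), with \(\textbf{X}\) standing in for the collocation matrix of the orthonormal basis at the data points and a diagonal weight matrix \(\textbf{W}\); this weighting of the two terms is our assumption about \(J_l\). Since \(\textbf{o}^{\top }\textbf{N}_{kl}\textbf{o} = \Vert \textbf{L}^{\top }\textbf{o}\Vert ^2\), the penalty becomes extra rows of an ordinary least-squares system. The random data and the helper `penalized_wls` are hypothetical.

```python
import numpy as np


def penalized_wls(X, y, w, N_pen, alpha):
    """Minimize alpha (y - X o)^T W (y - X o) + (1 - alpha) o^T N o, with W = diag(w).

    The penalty is written as ||sqrt(1 - alpha) L^T o||^2 using N = L L^T,
    so the whole problem is one stacked ordinary least-squares problem.
    """
    L = np.linalg.cholesky(N_pen)
    sw = np.sqrt(alpha * np.asarray(w, dtype=float))
    A = np.vstack([sw[:, None] * X, np.sqrt(1.0 - alpha) * L.T])
    b = np.concatenate([sw * y, np.zeros(X.shape[1])])
    o, *_ = np.linalg.lstsq(A, b, rcond=None)
    return o


rng = np.random.default_rng(1)
X = rng.normal(size=(30, 5))          # stands in for the collocation matrix O_{k+1}(t)
y = rng.normal(size=30)
w = rng.uniform(0.5, 2.0, size=30)
M = rng.normal(size=(5, 5))
N_pen = M @ M.T + np.eye(5)           # hypothetical positive definite penalty matrix
o_hat = penalized_wls(X, y, w, N_pen, alpha=0.8)

# Cross-check against the normal equations of the same quadratic function.
W = np.diag(w)
o_ref = np.linalg.solve(0.8 * X.T @ W @ X + 0.2 * N_pen, 0.8 * X.T @ W @ y)
print(np.allclose(o_hat, o_ref))      # True
```

Solving the stacked system with `lstsq` avoids forming the normal equations explicitly, which is numerically preferable when the collocation matrix is poorly conditioned. The non-diagonal weights \(\textbf{W} = \Sigma ^{-1}\) discussed next are not covered by this sketch.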

Now let the variance-covariance matrix \(\Sigma \) for the discretized clr-transformed data be provided (either known or estimated). The discrete clr transformation is defined as

$$\text {clr}(\textbf{y}) = \left( \ln \frac{y_{1}}{g(\textbf{y})},\dots ,\ln \frac{y_{n}}{g(\textbf{y})}\right) ^{\top }, \tag{13}$$

where \(g(\textbf{y})\) stands for the geometric mean of \(\textbf{y}\). The \(n\)-dimensional observed vector \(\textbf{y} \in \mathbb {R}^{n}\), a histogram class representation (in the clr space) of the underlying PDF \(\text {clr}f(\textbf{t})\), is then modeled as the sum of a true signal \(\textbf{x} \in \mathbb {R}^{n}\) and a respective random error vector \({\varvec{{\epsilon }}}\),

$$\textbf{y} = \textbf{x} + {\varvec{{\epsilon }}}. \tag{14}$$

If \(\Sigma \) is a symmetric positive definite matrix, one can set \( \textbf{W} \, = \, \Sigma ^{-1} \), see Ramsay and Silverman (2005), and the solution to (12) can easily be found by any minimization technique for strictly convex quadratic functions. In our case, however, the variance-covariance matrix is only positive semidefinite (and hence singular), due to the zero-sum property of the discrete clr transformation (13). Therefore, standard methods cannot be used to solve problem (12). Using the ideas of Rao and Mitra (1971) and Fišerová et al. (2007), and the notation

one uses the generalized inverse of a partitioned matrix

with

where \(\widetilde{\textbf{T}} = \widetilde{\Sigma } + \widetilde{\textbf{O}}\widetilde{\textbf{O}}^{\top }.\) In this case with a positive semidefinite matrix \(\Sigma \), the solution of the minimization problem (12) is given by the formula

With respect to the definition of \(\widetilde{\textbf{y}}\), one can make use of the so-called hat matrix

using all rows and the first n columns of the matrix \(\textbf{A}_2^{\top }\). Then

and the final smoothing spline for the given data with zero integral results in

In matrix notation, we have

It is obvious that

Using (17), the sampling variance of the spline fitted to the data is obtained as

Following Ramsay and Silverman (2005), confidence limits can then be computed by adding to and subtracting from the actual smoothing spline a multiple of the standard errors, that is, of the square roots of the sampling variances. For example, the limits of the 95% confidence interval at point \(\bar{t}\) correspond to

Note, however, that these confidence limits are pointwise: they do not form a confidence band for the function as a whole and are meaningful only when considered at an individual point of the domain.
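The pointwise limits follow from linear error propagation: if the coefficients are \(\textbf{o} = \textbf{A}\textbf{y}\) for some matrix \(\textbf{A}\) (in the text, the relevant block of the hat matrix), then \(\mathop {var}(\textbf{o}) = \textbf{A}\Sigma \textbf{A}^{\top }\) and the variance of the spline at a point is the corresponding diagonal entry of \(\textbf{O}_{k+1}\mathop {var}(\textbf{o})\textbf{O}_{k+1}^{\top }\). The sketch below assumes such a generic linear smoother; the argument names are hypothetical and the toy inputs are chosen so the result can be checked by hand.

```python
import numpy as np


def pointwise_limits(O_eval, A, y, Sigma, z=1.96):
    """Pointwise 95% limits of a linear smoother s(t) = O(t) A y.

    O_eval : (m, p) basis functions evaluated at the points of interest
    A      : (p, n) matrix mapping the data y to the spline coefficients
    Sigma  : (n, n) variance-covariance matrix of y
    """
    fit = O_eval @ (A @ y)
    var_coef = A @ Sigma @ A.T                                        # var(o) = A Sigma A^T
    se = np.sqrt(np.einsum("ij,jk,ik->i", O_eval, var_coef, O_eval))  # diag(O var(o) O^T)
    return fit - z * se, fit + z * se


# Toy check: identity smoother, standard errors 2 and 3.
lower, upper = pointwise_limits(np.eye(2), np.eye(2), np.array([1.0, 2.0]), np.diag([4.0, 9.0]))
print(lower, upper)   # 1 -/+ 1.96*2 and 2 -/+ 1.96*3
```

As noted above, these limits hold separately at each evaluation point and should not be read as a simultaneous band.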

3 The Sampling Error of Compositional Splines

As discussed previously, distributional data (PDFs) are commonly observed only in discretized form, although the underlying phenomenon may actually be functional in character. This discretization is most often caused by the inherently discrete nature of sampling and data acquisition. In some cases, the data are available as large datasets of observations of the underlying variables, although most commonly they are already summarized in histograms. Consequently, one observed distribution has the form of a vector of frequencies, usually assigned to the centers of predefined bins. It is natural to display these data in the form of a frequency and/or probability histogram, as shown in Fig. 1.

An example of four samples of discretized grain size distributions used in Sect. 6 (left) and their smoothed clr representation (right). Generally, coarser fractions tend to have a relatively low number of particles. The absolute frequencies (and therefore the total numbers of particles) also differ significantly across the dataset, as highlighted by the width of the confidence bounds derived at the end of Sect. 2.2

Observed values of the (discretized) data are often unavoidably affected by a sampling error, which can arise from the measuring process, for example an insufficient sample size, or from human factors. The goal here is to estimate this error, expressed in the form of a variance-covariance matrix \(\Sigma \), and to incorporate it into the continuous form of the data (produced using the splines described in Sect. 2.2). This additional information is then used for further analysis in the context of SFPCA.

Let \(I = \left[ a,b\right] \) be the continuous domain and \(\textbf{h} = (h_{1},\dots ,h_{D})\) the D-dimensional grid of points representing the interval centers of the histograms on I. Each of the N samples, corresponding to one observed distribution, can be written as a vector \(\textbf{v}_{i}= (n_{i1},\dots ,n_{iD}), \quad \sum _{j=1}^{D}n_{ij}=n_{i}, \quad i = 1,\dots , N\), where \(n_{i}\) stands for the total number of observed values within the ith sample. As a first step, it is natural to assume that \(\textbf{v}_{i}\) follows a multinomial distribution \(Mu(\textbf{p}_{i},n_{i})\), with \(\textbf{p}_{i}\) being the vector of class probabilities of the ith underlying distribution. For \(Mu(\textbf{p}_{i},n_{i})\), the expected value and variance-covariance matrix are, respectively,

$$E(\textbf{v}_{i}) = n_{i}\textbf{p}_{i}, \qquad \mathop {var}(\textbf{v}_{i}) = n_{i}\left( \text {diag}(\textbf{p}_{i}) - \textbf{p}_{i}\textbf{p}_{i}^{\top }\right) .$$

Since \(\textbf{v}_{i}\) represents a compositional vector, it is common to proceed by performing the clr transformation (here in its discrete form (13)). To do so, the potential occurrence of zero values in the histogram has to be addressed. A very simple yet effective solution is to add an artificial \(\frac{1}{2}\) to all histogram interval frequencies (Martín-Fernández et al. 2015), resulting in adjusted vectors consisting of values \(n^{*}_{ij} = n_{ij}+\frac{1}{2}\), that is,

$$\textbf{v}^{*}_{i} = (n^{*}_{i1},\dots ,n^{*}_{iD}), \quad i = 1,\dots ,N.$$

After applying the clr transformation, the following vectors of transformed values are obtained

To determine the variance-covariance structure of (18), the generalized variant of the \(\Delta \)-method (Doob 1935) is used. Considering the vector \({\varvec{{\epsilon }}}_{i}\) defined above, it is possible to retain only the first two terms of the Taylor series and estimate the variance-covariance matrix as

where
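The delta-method step can be made concrete. Writing the discrete clr as \(\textbf{H}\ln \textbf{v}^{*}\), with the centering matrix \(\textbf{H} = \textbf{I} - \frac{1}{D}\textbf{1}\textbf{1}^{\top }\), the first-order Taylor term gives \(\Sigma \approx \textbf{J}\mathop {var}(\textbf{v})\textbf{J}^{\top }\) with \(\textbf{J} = \textbf{H}\,\text {diag}(1/(n\textbf{p}))\). Substituting the multinomial covariance \(n(\text {diag}(\textbf{p}) - \textbf{p}\textbf{p}^{\top })\) simplifies this to \(\textbf{H}\,\text {diag}(1/(n\textbf{p}))\,\textbf{H}\), because \(\textbf{H}\textbf{1} = \textbf{0}\). The sketch below uses plug-in choices of our own (\(\textbf{p}\) estimated from the pseudo-count-adjusted histogram, \(n\) the raw total), which are not necessarily the exact estimator of the paper; the histograms and the Monte Carlo check are hypothetical. It also shows that the result is singular (rank \(D-1\)), which is why \(\textbf{W} = \Sigma ^{-1}\) cannot be used directly in Sect. 2.2.

```python
import numpy as np


def clr_rows(V):
    """Discrete clr (13) of each row: logs minus their mean, i.e. log(v / g(v))."""
    L = np.log(V)
    return L - L.mean(axis=-1, keepdims=True)


def multinomial_cov(p, n):
    """Covariance n (diag(p) - p p^T) of a Mu(p, n) histogram."""
    return n * (np.diag(p) - np.outer(p, p))


def clr_delta_cov(counts):
    """First-order (delta-method) covariance of clr(v + 1/2) for one histogram.

    Plug-in assumptions (ours): p is estimated by v*/sum(v*) with v* = v + 1/2,
    and n = sum(v).
    """
    v = np.asarray(counts, dtype=float)
    D = v.size
    n = v.sum()
    p = (v + 0.5) / (v + 0.5).sum()
    H = np.eye(D) - np.ones((D, D)) / D          # centering matrix: clr(v) = H log(v)
    J = H @ np.diag(1.0 / (n * p))               # Jacobian of clr at the expected counts n p
    return J @ multinomial_cov(p, n) @ J.T       # equals H diag(1/(n p)) H


Sigma = clr_delta_cov([120, 40, 0, 15])          # hypothetical histogram with an empty class
print(np.allclose(Sigma.sum(axis=1), 0.0))       # True: rows sum to zero
print(np.linalg.matrix_rank(Sigma, tol=1e-10))   # 3 = D - 1, so Sigma has no ordinary inverse

# Monte Carlo check for a hypothetical underlying distribution.
p_true = np.array([0.4, 0.3, 0.2, 0.1])
n = 5000
rng = np.random.default_rng(0)
sims = rng.multinomial(n, p_true, size=20_000)
mc_cov = np.cov(clr_rows(sims + 0.5), rowvar=False)
print(np.diag(mc_cov))
print(np.diag(clr_delta_cov(n * p_true)))        # close to the Monte Carlo diagonal
```

The approximation deteriorates for classes with very few expected counts, where the first-order Taylor term is no longer accurate; this is exactly the regime of low probability density highlighted in the introduction.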

4 Analyzing PDFs with Measurement Errors

In the previous sections, all the necessary ingredients were developed, so that the main goal of the paper can now be tackled: to define an FDA approach for PDFs that filters out measurement errors. Specifically, we demonstrate it for SFPCA, as outlined in the introduction.

4.1 The Corrected Variances and Covariances

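In a classical PCA, the variance-covariance structure of the values \(\textbf{x}_i\) would be estimated through the empirical variance-covariance matrix

$$\hat{var}(\textbf{x}) = \frac{1}{N-1}\sum _{i=1}^{N}(\textbf{x}_i-\bar{\textbf{x}})(\textbf{x}_i-\bar{\textbf{x}})^{\top },$$

which is an unbiased estimator of the variance-covariance structure

$$\mathop {var}(\textbf{x}) = E\left[ (\textbf{x}-E\textbf{x})(\textbf{x}-E\textbf{x})^{\top }\right] .$$

In a setting where the underlying distributions are only observed indirectly, a measurement error is introduced, and we only have access to observations \(\textbf{y}_i=\textbf{x}_i + \epsilon _i\), see (14), with errors of a known variance-covariance \(\Sigma _i=\mathop {var}(\epsilon _i)\). The empirical variance of the available data,

$$\hat{var}(\textbf{y}) = \frac{1}{N-1}\sum _{i=1}^{N}(\textbf{y}_i-\bar{\textbf{y}})(\textbf{y}_i-\bar{\textbf{y}})^{\top },$$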

has then an expectation and variance-covariance

The covariance term vanishes because the underlying distributions and the observation errors are uncorrelated. The last sum depends only on the variance structure of the \(\epsilon \)-s,

and thus

which results in

An unbiased estimator of \(var(\textbf{x})\) is thus given, following Pospiech et al. (2021), by the correction

$$\hat{var}_Y(\textbf{x}) = \hat{var}(\textbf{y}) - \frac{1}{N}\sum _{i=1}^{N}\Sigma _i.$$

As reported in Pospiech et al. (2021), this estimator can yield indefinite estimates whenever, along an eigenvector \(\textbf{v}_i\), the empirical variance \(\textbf{v}_i^{\top }\hat{var}(\textbf{y})\textbf{v}_i\) falls below the average error variance in that direction, \(\textbf{v}_i^{\top }\frac{1}{N} \sum _{j=1}^{N}\mathop {var}(\epsilon _j) \textbf{v}_i\). In such a case, the corresponding eigenvalue of the variance-covariance matrix of \(\textbf{x}\) is smaller than the estimation precision in the associated eigendirection; it can thus be considered negligible and removed from the PCA. We thus use a corrected matrix \(\hat{\Sigma }_X\) for the PCA, given by

$$\hat{\Sigma }_X = \textbf{V}\textbf{D}^{*}\textbf{V}^{\top },$$

where

$$\hat{var}_Y(\textbf{x}) = \textbf{V}\textbf{D}\textbf{V}^{\top }$$

is the orthogonal eigenvector decomposition of the symmetric matrix \(\hat{var}_Y(\textbf{x})\), with an orthogonal matrix \(\textbf{V}\) and a diagonal matrix \(\textbf{D}\), and \(\textbf{D}^*\) is the same matrix as \(\textbf{D}\), but with all negative entries (i.e. negative eigenvalues) set to 0.
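The correction and truncation steps amount to a few lines of linear algebra. The sketch below subtracts the average error covariance \(\frac{1}{N}\sum _i\Sigma _i\) from the empirical covariance (divisor \(N-1\)), eigendecomposes the result and sets negative eigenvalues to zero, i.e. it forms \(\textbf{V}\textbf{D}^{*}\textbf{V}^{\top }\). The simulated example (one true direction `u` plus unit-variance noise) and the function name are hypothetical and only serve to show that the uncorrected spectrum is inflated by the noise while the corrected one isolates the true direction.

```python
import numpy as np


def corrected_covariance(Y, Sigmas):
    """Measurement-error-corrected covariance with negative eigenvalues truncated.

    Y      : (N, p) array of observations y_i = x_i + eps_i (one per row)
    Sigmas : (N, p, p) array of error covariances Sigma_i = var(eps_i)
    Returns the truncated matrix V D* V^T and its eigenvalues in decreasing order.
    """
    S_y = np.cov(Y, rowvar=False)              # empirical var(y), divisor N - 1
    S_x = S_y - np.mean(Sigmas, axis=0)        # unbiased for var(x), possibly indefinite
    d, V = np.linalg.eigh(S_x)                 # S_x = V diag(d) V^T, d ascending
    d_star = np.clip(d, 0.0, None)             # D*: negative eigenvalues set to 0
    return V @ np.diag(d_star) @ V.T, d_star[::-1]


# Hypothetical example: one true direction u plus unit-variance noise.
rng = np.random.default_rng(4)
N, p = 5000, 5
u = np.ones(p) / np.sqrt(p)
X = rng.normal(0.0, 2.0, size=(N, 1)) * u      # var(x) = 4 u u^T
Y = X + rng.normal(size=(N, p))                # Sigma_i = I for every i
Sigmas = np.broadcast_to(np.eye(p), (N, p, p))
S_corr, evals = corrected_covariance(Y, Sigmas)
print(evals)                                          # one eigenvalue near 4, the rest near 0
print(np.linalg.eigvalsh(np.cov(Y, rowvar=False)))    # uncorrected: about 1 (four times) and 5
```

In the real setting, the \(\Sigma _i\) differ between samples (e.g. through different particle counts), which is why the average of the individual error covariances is subtracted rather than a single common matrix.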

4.2 Simplicial Functional Principal Component Analysis with Corrected Covariance Structure

As a common dimension-reduction tool, PCA has been broadly used in applications of both multivariate and functional statistics. Aiming to retain most of the original information, new (latent) variables are constructed as linear combinations of the original ones. The functional counterpart of multivariate PCA is functional principal component analysis (FPCA) (Ramsay and Silverman 2005), where the newly obtained variables (harmonics, eigenfunctions) form an orthogonal functional basis. For distributions, Hron et al. (2016) introduced simplicial functional principal component analysis (SFPCA), which exploits their relative information.

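Considering a centered sample of \(\mathcal {B}^2\) objects \(X_{1},\dots , X_{N}\), where N stands for the sample size, the goal of SFPCA is to find a sequence of simplicial functional principal components \(\{\zeta _{j}(t) \}_{j=1}^{N}\) such that each \(\zeta _{j}(t)\) maximizes

$$\frac{1}{N}\sum _{i=1}^{N}\left\langle X_{i},\zeta _{j}\right\rangle _{\mathcal {B}}^{2} \tag{22}$$

subject to \(\Vert \zeta _{j}\Vert _{\mathcal {B}} =1\) and \(\left\langle \zeta _{j},\zeta _{k}\right\rangle _{\mathcal {B}} = 0\) for \(k < j\).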

The unique optimal solution for (22) is the sequence of eigenfunctions derived from the sample covariance operator \(V(s,t) = \frac{1}{N}\sum _{i=1}^{N} X_{i}(s) X_{i}(t)\) for \(X_{1},\dots ,X_{N}\) and \(s, t \in I\), that is,

where \(\{ g_{j}\}_{j=1}^{N}\) is the vector of eigenvalues corresponding to V(s, t).

Focusing on clr-transformed densities, one can reformulate the problem from (22) directly in terms of principal components in the \(L^2_{0}\) space. Due to the isometric isomorphism between \(\mathcal {B}^{2}\) and \(L^{2}_{0}\) (van den Boogaart et al. 2014), (22) is analogously formulated as the maximization of

$$\frac{1}{N}\sum _{i=1}^{N}\left\langle \text {clr}(X_{i}),\text {clr}(\zeta _{j})\right\rangle ^{2},$$

where \(||\text {clr}(\zeta _ {j})|| =1\) and \(\left\langle \text {clr}(\zeta _{j}), \text {clr}(\zeta _{k}) \right\rangle = 0\) for \(k < j\). The maximum is attained for \(\{\text {clr}(\zeta _{j}) = \nu _{j}\}_{j=1}^{N}\), with \(\{ \nu _{j}\}_{j=1}^{N}\) standing for the eigenfunctions of the sample covariance operator of the clr-transformed sample \(\text {clr}(X_{1}),\dots ,\text {clr}(\text {X}_{N})\).

Using the ZB-spline basis expansion, the functional problem reduces to a multivariate one performed on the spline coefficients. As described in Sect. 2.2, the clr transform of each data object \(X \in \mathcal {B}^{2}\) corresponds to an expansion in the orthonormal basis, \(\text {clr}(X)(t) = \sum _{i=-k}^{g-1} o_{i}^{*}O_{i}^{k+1}(t)\). In matrix notation, with the coefficient vectors of the N centered objects stacked as the rows of the matrix \(\textbf{o}^{*}\), the variance-covariance operator takes the form \(V(s,t) = \frac{1}{N} \textbf{O}_{k+1}(t)\, \textbf{o}^{*\top } \textbf{o}^{*}\, \textbf{O}_{k+1}(s)^\top \). Representing the eigenfunctions of V(s, t) in the same orthonormal basis, the eigenproblem can be stated on the coefficient matrix, and each eigenfunction is the linear combination \(\text {clr}(\zeta _{j})(t) = \textbf{O}_{k+1}(t) {\varvec{{\upsilon }}}_{j}\), where \({\varvec{{\upsilon }}}_{j}\) is the vector of spline coefficients defining the jth eigenfunction. The optimal \({\varvec{{\upsilon }}}_{j}\), yielding the same principal components as (23), then solves \(\frac{1}{N} \textbf{o}^{*\top } \textbf{o}^{*} {\varvec{{\upsilon }}}_{j} = g_{j}{\varvec{{\upsilon }}}_{j}\) (Hron et al. 2016).
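Because the basis is orthonormal, the coefficient eigenproblem is an ordinary PCA of the coefficient matrix, and eigenfunctions are recovered by multiplying the loadings with the basis evaluated on a grid. The sketch below is a minimal illustration under our own assumptions: the random coefficient matrix is hypothetical, and the optional `cov` argument is where a corrected matrix could be passed, provided the error covariances have first been propagated to coefficient space (for instance as \(\textbf{A}\Sigma _i\textbf{A}^{\top }\) with the coefficient-mapping matrix of Sect. 2.2). Given a matrix `O_grid` of basis values (hypothetical here), the eigenfunctions would be `O_grid @ U`.

```python
import numpy as np


def coefficient_pca(C, cov=None):
    """PCA on orthonormal-basis coefficients (rows of C are clr coefficient vectors).

    Solves (1/N) C_c^T C_c u = g u on the centered coefficients, unless a
    (e.g. measurement-error-corrected) covariance matrix is supplied.
    Returns eigenvalues (decreasing), loadings U and scores C_c U.
    """
    C_c = C - C.mean(axis=0)
    if cov is None:
        cov = C_c.T @ C_c / C.shape[0]
    g, U = np.linalg.eigh(cov)
    order = np.argsort(g)[::-1]              # decreasing eigenvalues
    g, U = g[order], U[:, order]
    return g, U, C_c @ U


rng = np.random.default_rng(7)
C = rng.normal(size=(50, 4)) * np.array([3.0, 2.0, 1.0, 0.5])   # hypothetical coefficients
g, U, scores = coefficient_pca(C)
print(g)                                     # eigenvalues, largest first
print(np.allclose(scores.var(axis=0), g))    # True: score variances equal the eigenvalues
```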

The question is then which variance-covariance matrix should be used to derive the respective loadings (the weights in the linear combination of spline functions) and to construct the spline representation itself, cf. Sect. 2.2. In the presence of estimation uncertainties of the distributions, it makes sense to use the corrected variance-covariance matrix as shown in (21). Although the corrected variance-covariance operator is not necessarily positive semidefinite, the eigenfunctions corresponding to the originally negative eigenvalues (set to 0) are by construction blurred beyond recovery by the variability caused by the measurement error; hence, the safest option is to ignore them when analyzing the underlying process.

5 Simulation Study

To illustrate the ideas described above and to showcase the benefits of using the corrected variance-covariance matrix for SFPCA, a series of simulated scenarios was generated using distributions from the beta family as the true underlying distributions. To ease the transfer of the lessons learned to the real data example, the domain I was set to \(\left\langle 0.5, 5{,}000 \right\rangle \), and the generated distributions are considered hypothetical particle size distributions. Each simulation therefore consists of (i) generating N beta-distributed particle sizes, and (ii) computing the vector of relative frequencies for each of the 20 bins into which I was split, essentially yielding a 20-part composition. In the simulation process, three main factors, which can be combined, were considered:

- Setting the parameters \(\alpha \) and \(\beta \) for the beta distribution. Here, four scenarios were explored:

  1. \(Beta(\alpha , 1)\), where \(\alpha \in \left\langle 0.5, 1.5 \right\rangle \);
  2. \(Beta(\alpha = \beta = a)\), where \(a \in \left\langle 0.5, 1.5 \right\rangle \);
  3. \(Beta(\alpha , \beta )\), where \(\alpha \in \left\langle 0.5, 1.5 \right\rangle \), \(\beta \in \left\langle 0.5, 1.5 \right\rangle \);
  4. \(Beta(\alpha = a-e, \beta =a+e)\), where \(a \in \left\langle 0.5, 1.5 \right\rangle \), \(e \in \left\langle -0.25, 0.25 \right\rangle \).

  These scenarios were constructed so that the underlying variability differs in each situation. In scenarios 1 and 2 there is a single direction of underlying variability, but this direction differs between the two. In the other two scenarios the underlying distributions have two non-zero eigenvalues, but with different ratios between them.

- Size of the sample N (i.e. the number of particles in the sample used to estimate one distribution). As the number of particles in the sample decreases, the measurement variability is naturally expected to increase. Here, three different simulations were produced for each setting, with 100, 1,000 and 10,000 observations. To ensure non-zero frequencies, as required by the clr transformation, \(\frac{1}{2}\) was added to the count in each histogram class, resulting in an overall increase of the particle count by \(\frac{D}{2}=10\).

- Number of knots and their position. As mentioned above, the preprocessing phase of the analysis of distributional data plays a key role due to the interpolation and smoothing of the binned data. Here, the three knot sequences used in the simulation study had lengths 5, 12 and 20 (resulting in bases of 6, 13 and 21 functions, respectively, for the degree \(k=2\) used here), and their positions were chosen to balance the interpolating and smoothing effects of the orthogonal ZB-spline representation. Due to the logarithmic scale used for particle sizes, the respective sequences were chosen as follows:

$$\begin{aligned} \lambda ^{5}= & {} \exp {\left( -0.75,\,2,\, 4,\, 6,\, 8.75 \right) },\\ \lambda ^{12}= & {} \exp {\left( -0.75,\, -0.5, \, 0.5, \, 1.5, \, 2.5,\, 3.5, \, 4.5, \, 5.5, \, 6.5, \, 7.5, \, 8.5, \, 8.75\right) },\\ \lambda ^{20}= & {} \exp (-0.75,\, -0.25,\, 0.25,\, 0.75,\, 1.25,\, 1.75,\, 2.25,\, 2.75,\, 3.25,\, 3.75, \\{} & {} 4.25, \,4.75,\, 5.25,\, 5.75,\, 6.25,\, 6.75, \, 7.25,\, 7.75, \, 8.25, \, 8.75). \end{aligned}$$
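The data-generating step of the simulation can be sketched as follows for scenario 4. Several details are not specified in the text and are our assumptions, labeled in the code: the Beta variate is mapped linearly onto the log-size interval \([\ln 0.5, \ln 5000]\), the 20 bins have equal width on the log scale, and \(a\) and \(e\) are drawn uniformly from their ranges. The knot sequence \(\lambda ^{20}\) is reproduced from the display above (equally spaced exponents from \(-0.75\) to \(8.75\)).

```python
import numpy as np

LOG_A, LOG_B = np.log(0.5), np.log(5000.0)       # domain I = <0.5, 5000> on the log scale
EDGES = np.exp(np.linspace(LOG_A, LOG_B, 21))    # assumption: 20 bins of equal log width
LAMBDA_20 = np.exp(np.linspace(-0.75, 8.75, 20)) # knot sequence lambda^20 from the text


def simulate_histogram(alpha, beta, n_particles, rng):
    """Histogram with +1/2 pseudo-counts of n_particles Beta(alpha, beta) particle sizes.

    Assumption: a Beta variate u in (0, 1) is mapped to the size
    exp(LOG_A + u (LOG_B - LOG_A)), i.e. linearly on the log-size scale.
    """
    u = rng.beta(alpha, beta, size=n_particles)
    sizes = np.exp(LOG_A + u * (LOG_B - LOG_A))
    sizes = np.clip(sizes, EDGES[0], EDGES[-1])   # guard against rounding at the domain ends
    counts, _ = np.histogram(sizes, bins=EDGES)
    return counts + 0.5                           # total increases by D/2 = 10


def scenario_4(n_samples, n_particles, rng):
    """Scenario 4: Beta(a - e, a + e); uniform draws of a and e are our assumption."""
    a = rng.uniform(0.5, 1.5, size=n_samples)
    e = rng.uniform(-0.25, 0.25, size=n_samples)
    return np.array([simulate_histogram(ai - ei, ai + ei, n_particles, rng)
                     for ai, ei in zip(a, e)])


rng = np.random.default_rng(5)
H4 = scenario_4(n_samples=3, n_particles=1000, rng=rng)
print(H4.shape)          # (3, 20): three histograms with 20 classes each
print(H4.sum(axis=1))    # 1010 per histogram: 1000 particles plus 20 * 1/2
```

Each row of the output is one simulated histogram, ready for the clr transformation, the delta-method error covariance and the spline smoothing described in the previous sections.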

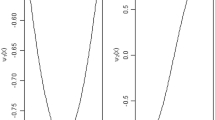

Scenarios preprocessed using the spline basis of dimension 6 are presented in Figs. 2 and 3. From both the visual inspection and the construction of the simulation scenarios, one can expect one main mode of variability in simulations 1 and 2 (due to the respective roles of parameters \(\alpha \) and a), while in scenarios 3 and 4, two prevalent variability sources are present (parameters \(\alpha , \beta \) and a, e, respectively). This also corresponds to theoretical expectations, because the number of varying parameters in a distribution from the exponential family determines the dimensionality of their subspace in the Bayes space (van den Boogaart et al. 2010).

The results of SFPCA with the corrected covariance matrix (hereafter distributional SFPCA) are compared with those of the original, uncorrected SFPCA to assess the effect of filtering the measurement error from the covariance structure. Indeed, in Figs. 4, 5, 6 and 7 (from now on, only results corresponding to sample sizes 100, 1,000 and 10,000 are shown), one or two eigenvalues, depending on the simulation scenario, are “significantly” positive in distributional SFPCA; the remaining (originally negative) eigenvalues were set to zero. On the other hand, for smaller sample sizes, standard SFPCA yields relatively large eigenvalues also for the remaining functional principal components (FPCs), which, given how the data were simulated, must be attributed purely to measurement error. In both cases, as expected, the dominance of the first (two) component(s) becomes more pronounced with an increasing number of particles.

Orthogonal bases obtained through distributional SFPCA (center) and ‘standard’ SFPCA (right) for simulation 1. The behavior of corresponding eigenvalues is shown in the left column for both distributional SFPCA (\(\bullet \)) and standard SFPCA (\(\times \)). The considered number of particles increases gradually with the row index (100, 1,000, 10,000)

Orthogonal bases obtained through distributional SFPCA (center) and ‘standard’ SFPCA (right) for simulation 2. The behavior of corresponding eigenvalues is shown in the left column for both distributional SFPCA (\(\bullet \)) and standard SFPCA (\(\times \)). The considered number of particles increases gradually with the row index (100, 1,000, 10,000)

Orthogonal bases obtained through distributional SFPCA (center) and ‘standard’ SFPCA (right) for simulation 3. The behavior of corresponding eigenvalues is shown in the left column for both distributional SFPCA (\(\bullet \)) and standard SFPCA (\(\times \)). The considered number of particles increases gradually with the row index (100, 1,000, 10,000)

Orthogonal bases obtained through distributional SFPCA (center) and ‘standard’ SFPCA (right) for simulation 4. The behavior of corresponding eigenvalues is shown in the left column for both distributional SFPCA (\(\bullet \)) and standard SFPCA (\(\times \)). The considered number of particles increases gradually with the row index (100, 1,000, 10,000)

A similar effect of N on the ability to capture the main modes of variability can be observed for the respective eigenfunctions, which are depicted in the middle and right columns of Figs. 4, 5, 6 and 7 for corrected and uncorrected SFPCA, respectively. Here, uncorrected SFPCA appears somewhat more sensitive to an insufficient sample size (see in particular the first row of Figs. 4, 5, 6 and 7), as expected, since the measurement error becomes dominant for small sample sizes. The information that, in the uncorrected case, appears to be captured by higher-order principal components is reasonably well captured by the first few corrected principal component(s) with positive eigenvalues. This is clearly visible for scenarios 3 and 4 (Figs. 6 and 7): while standard SFPCA assigns nearly the same first and second eigenfunctions to both scenarios, corrected SFPCA correctly associates the main source of variability with the first eigenfunction. This is also reflected in the reconstructions of the original functions for smaller sample sizes (see the example for simulation 4 in Fig. 8), where for the smallest sample size the main mode of variability was not sufficiently clear. Finally, Fig. 9 illustrates the consistency of the proposed method: increasing the number of knots does not substantially change the behavior of the (colored) significant SFPCs.

Fig. 9 Visualization of the effect of the selected number of knots for simulation 4. The rows correspond to increasing numbers of particles (100, 1,000 and 10,000, respectively), and the columns to increasing numbers of knots (5, 12 and 20) and therefore of FPCs (5, 12 and 20). For clarity, only the first two FPCs are colored, as they correspond to the true modes of variability in the data; the effect of the remaining components is negligible. With an increasing number of knots, the shapes of the first two FPCs remain consistent, so the inclusion of additional knots does not “confuse” the method. The effect of the sample size is consistent across all knot selections: there is a visible improvement in the estimated component shapes between the first row (\(N=100\)) and the second row (\(N=1{,}000\))

6 Application to Grain Size Distributions

6.1 The Data

The Bushveld complex (South Africa) is a large-scale ultramafic layered intrusion in which chromitite layers host several world-class platinum-group element ore deposits. These elements are typically present in a large variety of minerals, collectively called platinum-group minerals, which co-occur with several base metal sulfide minerals (BMS). In the present dataset by Bachmann (2020), 113 locations spread across four layers (called “seams”) at the Thaba mine were analyzed for 22 BMS minerals. In particular, a sample of grain sizes of these minerals was obtained for each location by means of an automated mineralogy system based on scanning electron microscopy. A total of 137,945 BMS grains were measured, very unevenly distributed across samples (between 51 and 8,295 grains per sample). Note that in this context a grain is not a particle: particles are solid continuous volumes, while grains are solid continuous volumes of a specific mineral phase; a monomineralic particle contains a single grain, whereas polymineralic particles contain several grains.

6.2 Principal Component Analysis

The dataset consists of 113 individual grain size distributions (Fig. 10). For the analysis, \(D=16\) bins split the domain \(I=\exp ([-0.75,6.25])\). Once again, zero frequencies were avoided by adding half a count to each bin. A spline with five knots was fitted to the histogram data, resulting in a series of clr coefficients, for which a variance-covariance matrix was computed. In parallel, the measurement errors were obtained with the multinomial approach described in Sect. 3. Finally, a principal component decomposition was constructed based on both the corrected and the uncorrected variance-covariance matrices. Figure 11 shows the two sets of obtained eigenvalues, as well as the eigenvectors of coefficients (expressed as clr functions) for the corrected case. It is worth noting that, in all but the first principal component, the magnitude of the correction is larger than the intensity of the residual signal.
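The histogram preprocessing described above can be sketched as follows, assuming that grain sizes are given in \(\upmu \)m and that the domain \(I\) corresponds to equal-width bins on the natural-log scale between -0.75 and 6.25. The helper `clr_histogram` and the simulated sample are hypothetical; the sketch stops at discrete clr values per bin and does not fit the five-knot compositional spline used in the paper.

```python
import numpy as np

def clr_histogram(sizes_um, n_bins=16, log_range=(-0.75, 6.25), pseudo=0.5):
    """Bin grain sizes on the natural-log scale, add `pseudo` counts to every
    bin to avoid zeros, and return bin midpoints, raw counts and clr values."""
    edges = np.linspace(log_range[0], log_range[1], n_bins + 1)
    # np.histogram ignores values outside the outer edges
    counts, _ = np.histogram(np.log(sizes_um), bins=edges)
    width = edges[1] - edges[0]
    adjusted = counts + pseudo
    density = adjusted / (adjusted.sum() * width)   # histogram density on the log scale
    log_density = np.log(density)
    # with equal bin widths, the mean over bins approximates the mean over the domain
    clr_values = log_density - log_density.mean()
    mids = 0.5 * (edges[:-1] + edges[1:])
    return mids, counts, clr_values

# Hypothetical sample: 500 log-normally distributed grain sizes (in micrometres)
rng = np.random.default_rng(42)
sizes = rng.lognormal(mean=3.0, sigma=1.0, size=500)
mids, counts, clr_values = clr_histogram(sizes)
print(counts.sum(), np.round(clr_values.sum(), 12))
```

Working with densities rather than raw counts makes no difference to the discrete clr values here (the normalizing constant cancels), but it keeps the sketch aligned with the density interpretation used throughout.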

Figure 12 visualizes the distributional variability spanned by the first two principal components. The first eigenfunction mostly represents a rebalancing of grain sizes from intermediate-fine to intermediate-coarse grains, while the second eigenfunction is dominated by a rebalancing between intermediate-coarse and coarse grains. The figure shows a family of distributions obtained by perturbing the average distribution of the dataset along the first and/or second principal direction, with the magnitude of the perturbation increasing up to one standard deviation, i.e. the square root of the corrected first or second eigenvalue, respectively.

The larger first eigenvalue is reflected in the notably larger variation exhibited by Fig. 12a compared with Fig. 12b. The combined effect of the first and second eigenfunctions (Fig. 12c) is then presented with respect to the “weights” shown in Fig. 12d.
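Because perturbation and powering in the Bayes space correspond to addition and scalar multiplication of clr functions, a family of distributions like the one in Fig. 12 can be generated by moving the mean clr function along an eigenfunction and mapping back with the inverse clr. The sketch below works on an equispaced grid; the mean, the eigenfunction, the eigenvalue and the helper names are hypothetical toy inputs, and the grid discretization is a simplification of the spline representation.

```python
import numpy as np

def clr_inverse(clr_values, width):
    """Map discrete clr values on an equispaced grid back to a density."""
    w = np.exp(clr_values)
    return w / (w.sum() * width)

def perturb_along_pc(mean_clr, pc_clr, eigval, width, steps=(-1.0, -0.5, 0.0, 0.5, 1.0)):
    """Densities obtained by moving the mean along one principal component by
    t * sqrt(eigval) * pc for each t in `steps` (up to one standard deviation)."""
    sd = np.sqrt(eigval)
    return [clr_inverse(mean_clr + t * sd * pc_clr, width) for t in steps]

# Hypothetical toy inputs on a log-size grid
x = np.linspace(-0.5, 6.0, 14)
width = x[1] - x[0]
mean_clr = -0.5 * (x - 3.0) ** 2
mean_clr -= mean_clr.mean()                # clr functions have zero mean
pc = x - x.mean()                          # shifts probability towards coarser sizes
pc /= np.sqrt(np.sum(pc ** 2) * width)     # unit norm in the discretized L2 sense
family = perturb_along_pc(mean_clr, pc, eigval=0.3, width=width)
for f in family:
    print(np.round(np.sum(x * f) * width, 3))   # mean log-size along the family
```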

Next, the scores of the grain size distributions on the first four principal components are related to the seam from which each sample was taken, that is, to its depth level. Figure 14 presents the distribution of scores for each principal component across all four seams studied, comparing the corrected and uncorrected analyses. Across all four seams, the first two principal components do not seem much affected by the correction. By contrast, the third and fourth principal directions appear to have swapped places after the correction, indicating that (particularly in the case of the fourth FPC) they were strongly dominated by measurement uncertainty before the correction. This is confirmed by the matrix of correlation coefficients between corrected and uncorrected FPCs (Table 1), which shows very strong correlations between the corrected and uncorrected first FPCs, as well as between the corrected and uncorrected second FPCs, but a swap between the third and fourth FPCs after correction. The correction also causes the plotted data scores to exhibit larger dispersions than the corrected eigenvalues nominally capture (Fig. 13), because the scores are computed from the observed coefficients, which still contain the measurement error. As a result, the third FPC scores have less dispersion than the fourth FPC scores. Additionally, both the violin plots (Fig. 14) and the left biplot (Fig. 13) show a slight tendency toward larger FPC1 scores from seam LG6 to seam MG2. A global Fisher F-test in a one-way analysis of variance (ANOVA) of the FPC1 scores versus seam yielded a p-value of 0.006086, and individual coefficient t-tests indicate significant differences (p-value \(<0.05\)) for the contrasts LG6-LG6a and LG6-MG2.
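The two quantitative checks mentioned above, comparing corrected and uncorrected scores through their correlation matrix and testing FPC1 scores for differences between seams, can be sketched with NumPy alone. The score matrices, group labels and function names below are hypothetical toy inputs, not the Thaba data; a p-value for the F statistic could then be obtained from the F distribution with the returned degrees of freedom, for instance with `scipy.stats.f.sf`.

```python
import numpy as np

def cross_correlation(A, B):
    """Correlations between the columns of A (rows of the result) and of B (columns)."""
    m = A.shape[1]
    return np.corrcoef(A, B, rowvar=False)[:m, m:]

def one_way_anova_F(y, groups):
    """Global F statistic of a one-way ANOVA of y on a categorical factor."""
    y = np.asarray(y, dtype=float)
    groups = np.asarray(groups)
    labels = np.unique(groups)
    k, n = len(labels), len(y)
    grand_mean = y.mean()
    ss_between = sum(np.sum(groups == g) * (y[groups == g].mean() - grand_mean) ** 2
                     for g in labels)
    ss_within = sum(np.sum((y[groups == g] - y[groups == g].mean()) ** 2) for g in labels)
    return (ss_between / (k - 1)) / (ss_within / (n - k)), (k - 1, n - k)

# Toy scores: the "corrected" set swaps the third and fourth columns
rng = np.random.default_rng(1)
uncorrected = rng.normal(size=(113, 4))
corrected = uncorrected[:, [0, 1, 3, 2]] + 0.1 * rng.normal(size=(113, 4))
cross = cross_correlation(uncorrected, corrected)
print(np.argmax(np.abs(cross), axis=1))    # [0 1 3 2]

F, df = one_way_anova_F([1, 2, 3, 4, 5, 6], ["s1", "s1", "s1", "s2", "s2", "s2"])
print(F, df)                                # 13.5 (1, 4)
```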

7 Practical Considerations

The proposed methodology combines a spline representation suited to distributions with a correction of observational errors prior to applying the desired statistical method, in the present case functional principal component analysis. Considering observation errors and correcting for them has the advantage of providing a natural way to distinguish between relevant modes of variability and irrelevant, or noise-dominated, ones: functional principal components associated with negative or almost-zero eigenvalues correspond to those irrelevant modes of variation that are completely obscured by the observation error, particularly in cases with a relatively low number of underlying observations per distribution (e.g. Fig. 7).

The simulation exercises have shown that the combination of a spline representation and an observational error correction is particularly apt for extracting the actual structure of the principal components. First, splines can be used to smooth the histogram moderately, thereby implicitly filtering out part of the observational error. Second, the additional observation error correction makes the determination of the number of relevant principal components more robust: without error correction, spline approximations with more knots tend to produce more principal components, some of which will by chance exhibit a variability strong enough to be considered relevant. The proposed observation error correction strategy avoids this.

The (functional) principal components thus extracted need to be interpreted as perturbations with respect to the mean distribution, as is common in conventional principal component analysis. These principal components are best understood when expressed as clr curves: if a clr-PC is positive (or negative) over a certain part of the domain, that part of the domain becomes more (or less) likely along the principal component. However, since clr-densities always integrate to zero, they are always positive in some parts of the domain and negative in others. Hence, such principal components represent a reweighting or rebalancing of the probability function over the domain, increasing the likelihood of some subsets at the expense of others. In the present case, PC1 increases the likelihood of grain sizes between approximately 20 and 400 \(\upmu \)m at the expense of grain sizes between about 2 and 20 \(\upmu \)m; that is, the higher the PC1 score, the more frequent grains larger than approximately 20 \(\upmu \)m tend to be. PC2 increases the likelihood of grain sizes roughly between 5 and 250 \(\upmu \)m at the expense of coarser and finer grains.
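The sign-based reading of a clr principal component can be made concrete by locating the runs of the domain where a discretized clr-PC is positive or negative. The sketch below uses a hypothetical linear clr-PC on a log-size grid and a hypothetical helper `sign_runs`; because the values sum to zero (the discrete analogue of a clr function integrating to zero), both a positive and a negative region must appear. Converting the run endpoints with `np.exp` expresses them in the original size units.

```python
import numpy as np

def sign_runs(x, pc_clr):
    """Split the grid x into maximal runs where pc_clr keeps the same sign.
    Returns (first x, last x, sign) tuples; sign +1 means more likely along the PC."""
    s = np.sign(pc_clr)
    runs, start = [], 0
    for i in range(1, len(x) + 1):
        if i == len(x) or s[i] != s[start]:
            runs.append((float(x[start]), float(x[i - 1]), int(s[start])))
            start = i
    return runs

x = np.linspace(0.5, 6.0, 12)       # hypothetical log-size grid (natural log of µm)
pc = x - x.mean()                   # hypothetical clr-PC: coarse up, fine down
print(abs(pc.sum()) < 1e-12)        # True: discrete zero-integral property
for lo, hi, sign in sign_runs(x, pc):
    word = {1: "more", -1: "less", 0: "equally"}[sign]
    print(f"{np.exp(lo):7.1f} to {np.exp(hi):7.1f} µm: {word} likely")
```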

The concrete interpretation of the factors controlling these principal components will, of course, depend on the specific case study and on the available covariables. McLaren and Bowles (1985), for instance, discuss how such curves for particle size distributions (not grain sizes, as here) can be used to characterize sediment transport along pathways. In other cases, for example in varietal studies of the chemical composition of specific minerals across samples, the principal components might indicate chemical reaction intensities (linked to gradients in pH, oxygen and sulfur fugacities, or temperature), or perhaps point toward the sources of transporting fluids. Extrapolating these considerations to our case study, PC1 shows a slight but consistent trend toward higher values from LG6 to MG2 (Fig. 14), indicating conditions more favorable to larger grain sizes in the seams from the upper parts of the sequence. Whether this is related to syngenetic conditions (e.g. lower temperature gradients in the upper seams?), to later processes (e.g. recrystallization due to a stronger hydrothermal overprint?) or to any other explanation is beyond the scope of this contribution. Further research on this and other specific case studies may shed more light on these aspects of the interpretation of functional principal components.

8 Conclusions

It is still not common practice in functional data analysis to take the measurement error of observations into account. For probability density functions that result naturally from the aggregation of sampled data, this is an important aspect that needs to be considered seriously. Histograms, as results of the aggregation process, are in essence realizations of a multinomial random vector, which allows us to derive a sensible covariance structure for their “measurement” errors. Consequently, once these errors are filtered out, the covariance structure of the underlying distributions can finally be estimated and used for further statistical processing. Moreover, thanks to the orthonormalization of the ZB-spline basis for representing PDFs in the clr space, all these computations can be carried out with multivariate methods applied to the spline coefficients.
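For a single histogram with \(n\) observations, the multinomial argument yields, to first order (delta method), the covariance \(\frac{1}{n} C\,\mathrm{diag}(1/p)\,C\) of the discrete clr vector, where \(p\) are the bin probabilities and \(C = I - \frac{1}{D}\mathbf{1}\mathbf{1}^\top\) is the centring matrix. The sketch below checks this approximation against a Monte Carlo simulation; the helper `multinomial_clr_cov` and the chosen probabilities are hypothetical, and the computation works on raw bins rather than on the spline coefficients used in the paper, so it is an illustration of the principle, not the authors' procedure.

```python
import numpy as np

def multinomial_clr_cov(p, n):
    """Delta-method covariance of the clr of relative frequencies counts / n,
    where counts ~ Multinomial(n, p): C diag(1/p) C / n."""
    p = np.asarray(p, dtype=float)
    d = len(p)
    C = np.eye(d) - np.full((d, d), 1.0 / d)   # centring matrix
    return C @ np.diag(1.0 / p) @ C / n

p = np.array([0.1, 0.2, 0.3, 0.4])   # hypothetical bin probabilities
n = 2000                              # observations (e.g. grains) per histogram
approx = multinomial_clr_cov(p, n)

# Monte Carlo check: many simulated histograms of the same distribution
rng = np.random.default_rng(7)
counts = rng.multinomial(n, p, size=20000)
log_freq = np.log(counts / n)         # empty bins are practically impossible here
clr_freq = log_freq - log_freq.mean(axis=1, keepdims=True)
empirical = np.cov(clr_freq, rowvar=False)
print(np.round(approx, 5))
print(np.round(empirical, 5))
```

Averaging such matrices over all samples, each evaluated with its own number of observations and estimated bin probabilities, gives one possible estimate of the error covariance to subtract before the eigen-decomposition.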

In this paper, the focus was on dimension reduction of PDFs via simplicial functional principal component analysis. However, the results from Sects. 2 through 4 are general enough to be used with any covariance-based FDA method, for example, in classification, sparse FDA or functional time series (Ramsay and Silverman 2005; Kokoszka and Reimherr 2017). As FDA models are increasingly applied also to histogram data based on rather moderate sample sizes (e.g. in Menafoglio et al. (2021), on average only 26.48 observations per location were available), taking into account the influence of measurement errors is expected to become even more important. Still, it should be clearly stated that, even without considering any specific FDA analysis, the results from Sect. 2 are essential for any sensible representation of PDFs resulting from the aggregation of input values into histogram data.

Analogous considerations can be extended to bivariate (Hron et al. 2022) and in general to multivariate densities (Genest et al. 2023), where the curse of dimensionality must also be taken into account. Finally, although other representations of the aggregated data are possible, such as kernel estimation of PDFs (Guégan and Iacopini 2018), the presented theoretical developments enable further statistical inference with the resulting spline functions and their coefficients, which is a notable advantage of the proposed approach. Further efforts in this direction will thus follow in the near future.

References

Bachmann K (2020) Predictive geometallurgical modelling. Ph.D. thesis, Technische Universität Bergakademie Freiberg

Bortolotti T (2021) Weighted functional data analysis for partially observed seismic data: an application to ground motion modelling in Italy. Ph.D. thesis, Politecnico di Milano

De Boor C (1978) A practical guide to splines. Springer, New York

Doob JL (1935) The limiting distributions of certain statistics. Ann Math Stat 6(3):160–169

Egozcue J, Díaz-Barrero J, Pawlowsky-Glahn V (2006) Hilbert space of probability density functions based on Aitchison geometry. Acta Math Sinica 22:1175–1182

Fišerová E, Kubáček L, Kunderová P (2007) Linear statistical models: regularity and singularities. Academia, Praha

Genest C, Hron K, Nešlehová J (2023) Orthogonal decomposition of multivariate densities in Bayes spaces and its connection with copulas. J Multivar Anal 198:105228. https://doi.org/10.1016/j.jmva.2023.105228

Guégan D, Iacopini M (2018) Nonparametric forecasting of multivariate probability density functions. arXiv:1803.06823

Hron K, Menafoglio A, Templ M, Hrůzová K, Filzmoser P (2016) Simplicial principal component analysis for density functions in Bayes spaces. Comput Stat Data Anal 94:330–350

Hron K, Machalová J, Menafoglio A (2022) Bivariate densities in Bayes spaces: orthogonal decomposition and spline representation. Stat Pap 64:1629–1667

Kokoszka P, Reimherr M (2017) Introduction to functional data analysis. CRC Press, Boca Raton

Machalová J, Hron K, Monti G (2016) Preprocessing of centred logratio transformed density functions using smoothing splines. J Appl Stat 43(8):1419–1435

Machalová J, Talská R, Hron K, Gába A (2021) Compositional splines for representation of density functions. Comput Stat 36(2):1031–1064

Martín-Fernández J, Hron K, Templ M, Filzmoser P, Palarea-Albaladejo J (2015) Bayesian-multiplicative treatment of count zeros in compositional data sets. Stat Model 15(2):134–158

McLaren P, Bowles D (1985) The effects of sediment transport on grain-size distributions. J Sediment Res 55(4):457–470

Menafoglio A, Guadagnini L, Guadagnini A, Secchi P (2021) Object oriented spatial analysis of natural concentration levels of chemical species in regional-scale aquifers. Spatial Stat 43:100494

Pawlowsky-Glahn V, Egozcue JJ (2001) Geometric approach to statistical analysis on the simplex. Stoch Environ Res Risk Assess 15(5):384–398

Pawlowsky-Glahn V, Egozcue JJ, Tolosana-Delgado R (2015) Modeling and analysis of compositional data. Wiley, Chichester

Pospiech S, Tolosana-Delgado R, van den Boogaart KG (2021) Discriminant analysis for compositional data incorporating cell-wise uncertainties. Math Geosci 53:1–20

Ramsay J, Silverman BW (2005) Functional data analysis, 2nd edn. Springer, New York

Rao CR, Mitra SK (1971) Generalized inverse of matrices and its applications. Wiley, New York

Talská R, Menafoglio A, Machalová J, Hron K, Fišerová E (2018) Compositional regression with functional response. Comput Stat Data Anal 123:66–85

Talská R, Hron K, Grygar TM (2021) Compositional scalar-on-function regression with application to sediment particle size distributions. Math Geosci 53:1667–1695

van den Boogaart KG, Egozcue JJ, Pawlowsky-Glahn V (2010) Bayes linear spaces. Stat Oper Res Trans 34(2):201–222

van den Boogaart KG, Egozcue JJ, Pawlowsky-Glahn V (2014) Bayes Hilbert Spaces. Aust N Z J Stat 56(2):171–194

Acknowledgements

We gratefully acknowledge the support of this research and researchers by the following grants: HiTEc COST Action CA21163, IGA_PrF_2022_008 and IGA_PrF_2023_009 Mathematical models and the Czech Science Foundation grant 22-15684 L, the project PID2021-123833OB-I00 provided by the Spanish Ministry of Science and Innovation (MCIN/AEI/10.13039/501100011033) and ERDF “A way of making Europe”.

Funding

Open access publishing supported by the National Technical Library in Prague.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pavlů, I., Machalová, J., Tolosana-Delgado, R. et al. Principal Component Analysis for Distributions Observed by Samples in Bayes Spaces. Math Geosci (2024). https://doi.org/10.1007/s11004-024-10142-9

DOI: https://doi.org/10.1007/s11004-024-10142-9