Abstract

Semi-supervised ordinal regression (S²OR) has been recognized as a valuable technique for improving the performance of ordinal regression (OR) models by leveraging available unlabeled samples. Balancing constraints are a useful tool in semi-supervised algorithms, as they prevent the trivial solution of assigning a large number of unlabeled examples to only a few classes. However, rapidly training an S²OR model with balancing constraints remains an open problem, because the corresponding optimization objective is difficult to formulate and solve. To tackle this issue, we propose a novel form of balancing constraints and extend the traditional convex–concave procedure (CCCP) to solve our objective function. Additionally, we transform the convex inner loop (CIL) problem generated by CCCP into a quadratic problem resembling that of the support vector machine (SVM), in which multiple equality constraints are treated as virtual samples. As a result, existing fast solvers can be used to solve the CIL problem efficiently. Experimental results on several benchmark and real-world datasets not only validate the effectiveness of the proposed algorithm but also demonstrate its superior performance compared with other supervised and semi-supervised algorithms.


Data availability

Benchmark datasets are available at https://www.dcc.fc.up.pt/~ltorgo/Regression and real-world datasets are available at https://nijianmo.github.io/amazon/index.html.

Code availability

The code will be made publicly available after the work is published, subject to the agreement of all parties involved.

Notes

\(\phi (\cdot )\) is a transformation function that maps the input space to a high-dimensional reproducing kernel Hilbert space.

SVOR is available at http://www.gatsby.ucl.ac.uk/~chuwei/svor.htm.

TSVM-CCCP is available at https://github.com/fabiansinz/UniverSVM.

TSVM-LDS is available at https://github.com/paperimpl/LDS.

Benchmark datasets are available at https://www.dcc.fc.up.pt/~ltorgo/Regression.

Real-world datasets are available at https://nijianmo.github.io/amazon/index.html.

References

Abdi, H., & Williams, L. J. (2010). Principal component analysis. Wiley Interdisciplinary Reviews: Computational Statistics, 2(4), 433–459.

Aizawa, A. (2003). An information-theoretic perspective of tf-idf measures. Information Processing & Management, 39(1), 45–65.

Allahzadeh, S., & Daneshifar, E. (2021). Simultaneous wireless information and power transfer optimization via alternating convex-concave procedure with imperfect channel state information. Signal Processing, 182, 107953.

Berg, A., Oskarsson, M., & O’Connor, M. (2021). Deep ordinal regression with label diversity. In 2020 25th international conference on pattern recognition (ICPR) (pp. 2740–2747). IEEE.

Bertsekas, D. P. (2014). Constrained optimization and Lagrange multiplier methods. Academic Press.

Buri, M., & Hothorn, T. (2020). Model-based random forests for ordinal regression. The International Journal of Biostatistics. https://doi.org/10.1515/ijb-2019-0063

Cardoso, J. S., da Costa, J. F. P., & Cardoso, M. J. (2005). Modelling ordinal relations with SVMs: An application to objective aesthetic evaluation of breast cancer conservative treatment. Neural Networks, 18(5–6), 808–817.

Chang, C. C., & Lin, C. J. (2011). LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology (TIST), 2(3), 1–27.

Chapelle, O., Schölkopf, B., & Zien, A. (2009). Semi-supervised learning. IEEE Transactions on Neural Networks, 20(3), 542–542.

Chapelle, O., Sindhwani, V., & Keerthi, S. S. (2008). Optimization techniques for semi-supervised support vector machines. Journal of Machine Learning Research, 9, 203–233.

Chapelle, O., & Zien, A. (2005). Semi-supervised classification by low density separation. In AISTATS (Vol. 2005, pp. 57–64). Citeseer.

Chen, P. H., Fan, R. E., & Lin, C. J. (2006). A study on SMO-type decomposition methods for support vector machines. IEEE Transactions on Neural Networks, 17(4), 893–908.

Chen, Y., Tao, J., Zhang, Q., Yang, K., Chen, X., Xiong, J., Xia, R., & Xie, J. (2020). Saliency detection via the improved hierarchical principal component analysis method. Wireless Communications and Mobile Computing. https://doi.org/10.1155/2020/8822777

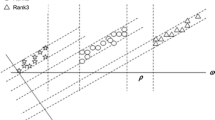

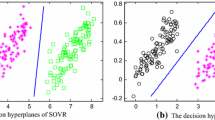

Chu, W., & Keerthi, S. S. (2005). New approaches to support vector ordinal regression. In Proceedings of the 22nd international conference on machine learning (pp. 145–152).

Chu, W., & Keerthi, S. S. (2007). Support vector ordinal regression. Neural Computation, 19(3), 792–815.

Collobert, R., Sinz, F., Weston, J., & Bottou, L. (2006). Large scale transductive SVMs. Journal of Machine Learning Research, 7, 1687–1712.

Crammer, K., & Singer, Y. (2002). Pranking with ranking. In Advances in neural information processing systems (pp. 641–647).

Fullerton, A. S., & Xu, J. (2012). The proportional odds with partial proportionality constraints model for ordinal response variables. Social Science Research, 41(1), 182–198.

Ganjdanesh, A., Ghasedi, K., Zhan, L., Cai, W., & Huang, H. (2020). Predicting potential propensity of adolescents to drugs via new semi-supervised deep ordinal regression model. In International conference on medical image computing and computer-assisted intervention (pp. 635–645). Springer.

Garg, B., & Manwani, N. (2020). Robust deep ordinal regression under label noise. In Asian conference on machine learning (pp. 782–796). PMLR.

Gu, B., Zhang, C., Huo, Z., & Huang, H. (2023). A new large-scale learning algorithm for generalized additive models. Machine Learning, 112, 3077–3104.

Gu, B., Zhang, C., Xiong, H., & Huang, H. (2022). Balanced self-paced learning for AUC maximization. In Proceedings of the AAAI conference on artificial intelligence (Vol. 36, pp. 6765–6773).

Haeser, G., & Ramos, A. (2020). Constraint qualifications for Karush–Kuhn–Tucker conditions in multiobjective optimization. Journal of Optimization Theory and Applications, 187(2), 469–487.

Herbrich, R. (1999). Support vector learning for ordinal regression. In Proceedings of the 9th international conference on neural networks (pp. 97–102).

Joachims, T. (1999). Transductive inference for text classification using support vector machines. In ICML (Vol. 99, pp. 200–209).

Li, L., & Lin, H. T. (2007). Ordinal regression by extended binary classification. In Advances in neural information processing systems (pp. 865–872).

Li, X., Wang, M., & Fang, Y. (2020). Height estimation from single aerial images using a deep ordinal regression network. IEEE Geoscience and Remote Sensing Letters, 19, 1–5.

Liu, Y., Liu, Y., Zhong, S., & Chan, K. C. (2011). Semi-supervised manifold ordinal regression for image ranking. In Proceedings of the 19th ACM international conference on multimedia (pp. 1393–1396).

Nakanishi, K. M., Fujii, K., & Todo, S. (2020). Sequential minimal optimization for quantum-classical hybrid algorithms. Physical Review Research, 2(4), 043158.

Oliveira, A. L., & Valle, M. E. (2020). Linear dilation-erosion perceptron trained using a convex-concave procedure. In SoCPaR (pp. 245–255).

Onan, A. (2020). Sentiment analysis on product reviews based on weighted word embeddings and deep neural networks. Concurrency and Computation: Practice and Experience, 33, e5909.

Pang, G., Yan, C., Shen, C., Hengel, A. v. d., & Bai, X. (2020). Self-trained deep ordinal regression for end-to-end video anomaly detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 12173–12182).

Platt, J. (1998). Sequential minimal optimization: A fast algorithm for training support vector machines. Microsoft Research Technical Report 98.

Rastgar, F., Singh, A. K., Masnavi, H., Kruusamae, K., & Aabloo, A. (2020). A novel trajectory optimization for affine systems: Beyond convex–concave procedure. In 2020 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 1308–1315). IEEE.

Seah, C. W., Tsang, I. W., & Ong, Y. S. (2012). Transductive ordinal regression. IEEE Transactions on Neural Networks and Learning Systems, 23(7), 1074–1086.

Shashua, A., & Levin, A. (2003). Ranking with large margin principle: Two approaches. In Advances in neural information processing systems (pp. 961–968).

Sornalakshmi, M., Balamurali, S., Venkatesulu, M., Krishnan, M. N., Ramasamy, L. K., Kadry, S., Manogaran, G., Hsu, C. H., & Muthu, B. A. (2020). Hybrid method for mining rules based on enhanced apriori algorithm with sequential minimal optimization in healthcare industry. Neural Computing and Applications, 34, 10597–10610.

Srijith, P., Shevade, S., & Sundararajan, S. (2013). Semi-supervised Gaussian process ordinal regression. In Joint European conference on machine learning and knowledge discovery in databases (pp. 144–159). Springer.

Su, T. V., & Luu, D. V. (2020). Higher-order Karush–Kuhn–Tucker optimality conditions for Borwein properly efficient solutions of multiobjective semi-infinite programming. Optimization, 71, 1749–1775.

Sulaiman, N. S., & Bakar, R. A. (2017). Rough set discretization: Equal frequency binning, entropy/mdl and semi Naives algorithms of intrusion detection system. Journal of Intelligent Computing, 8(3), 91.

Tsuchiya, T., Charoenphakdee, N., Sato, I., & Sugiyama, M. (2019). Semi-supervised ordinal regression based on empirical risk minimization. arXiv preprint arXiv:1901.11351.

Van Su, T., & Hien, N. D. (2021). Strong Karush–Kuhn–Tucker optimality conditions for weak efficiency in constrained multiobjective programming problems in terms of Mordukhovich subdifferentials. Optimization Letters, 15(4), 1175–1194.

Wang, T., Lu, K., Chow, K. P., & Zhu, Q. (2020). COVID-19 sensing: Negative sentiment analysis on social media in China via BERT model. IEEE Access, 8, 138162–138169.

Xu, L., Neufeld, J., Larson, B., & Schuurmans, D. (2005). Maximum margin clustering. In Advances in neural information processing systems (pp. 1537–1544).

Zemkoho, A. B., & Zhou, S. (2021). Theoretical and numerical comparison of the Karush–Kuhn–Tucker and value function reformulations in bilevel optimization. Computational Optimization and Applications, 78(2), 625–674.

Zhai, Z., Gu, B., Deng, C., & Huang, H. (2023). Global model selection via solution paths for robust support vector machine. IEEE Transactions on Pattern Analysis and Machine Intelligence. https://doi.org/10.1109/TPAMI.2023.3346765

Zhai, Z., Gu, B., Li, X., & Huang, H. (2020). Safe sample screening for robust support vector machine. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 34, pp. 6981–6988).

Zhu, X. J. (2005). Semi-supervised learning literature survey. Technical report, Department of Computer Sciences, University of Wisconsin-Madison.

Funding

Bin Gu was partially supported by the National Natural Science Foundation of China (No. 61573191).

Author information


Contributions

Bin Gu contributed to the conception and design of the method. Chenkang Zhang contributed to running the experiments and writing the paper. Heng Huang contributed by providing feedback and guidance. All authors contributed to the analysis of the results and to the writing and revision of the manuscript.


Ethics declarations

Conflict of interest

The authors have no conflicts of interest relevant to the content of this article to declare.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Ethics approval

Not applicable.

Additional information

Editor: Joao Gama.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Proof of Lemma 1

First, we define the following data subsets, where \(j \in \{1,\ldots ,r\}\):

It is easy to see that \(\theta _k\) is optimal if it minimizes the function:

Then, we obtain the derivative of \(e_k(\theta )\) with respect to \(\theta\):

A simple calculation then gives:

To prove that the order of \(\theta\) is no longer guaranteed in the \(\hbox {S}^2\)OR problem, we construct a counterexample. First, note that the derivative \(l_k(\theta )\) in (28) is not a monotone function. Moreover, \(l_k(\theta )>0\) as \(\theta \rightarrow +\infty\) and \(l_k(\theta )<0\) as \(\theta \rightarrow -\infty\). Suppose that \(l_k(\theta )\) and \(l_{k+1}(\theta )\) each have three zeros, and that the first derivative at each of these zeros is nonzero. Denote the zeros of \(l_k(\theta )\), from left to right, by \(a_1\), \(a_2\) and \(a_3\), and those of \(l_{k+1}(\theta )\) by \(b_1\), \(b_2\) and \(b_3\). In view of (29), we consider the case \(a_1<b_1<b_2<a_2<a_3<b_3\). Since each derivative changes sign from negative to positive at its first and third zeros and from positive to negative at its second zero, \(e_k(\theta )\) has two local minima, at \(a_1\) and \(a_3\), and \(e_{k+1}(\theta )\) has two local minima, at \(b_1\) and \(b_3\). Finally, suppose that \(a_3\) and \(b_1\) are the global minimizers of \(e_k(\theta )\) and \(e_{k+1}(\theta )\), respectively. Then \(\theta _k=a_3 > \theta _{k+1}=b_1\), which violates the required order \(\theta _k \le \theta _{k+1}\). Therefore, the order of \(\theta\) is no longer guaranteed in the \(\hbox {S}^2\)OR problem.
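The counterexample can be checked numerically. The sketch below does not use the actual \(l_k\) from (28); it assumes two hypothetical toy derivatives (piecewise-scaled cubics, built by the illustrative helper `toy_derivative`) that satisfy the hypotheses of the argument: each is negative as \(\theta \rightarrow -\infty\) and positive as \(\theta \rightarrow +\infty\), each has three sign-changing zeros ordered as \(a_1<b_1<b_2<a_2<a_3<b_3\), and the resulting functions \(e_k\) and \(e_{k+1}\), recovered here by trapezoidal integration on a grid, have their global minimizers at \(a_3\) and \(b_1\), respectively.

```python
import numpy as np


def toy_derivative(theta, zeros, left_scale=1.0):
    """Hypothetical derivative l(theta) with three sign-changing zeros.

    It is the cubic (theta - r1)(theta - r2)(theta - r3), with the part left of
    the middle zero multiplied by left_scale > 0. The cubic vanishes at r2, so
    the scaled function stays continuous and keeps the same signs and zeros.
    """
    r1, r2, r3 = zeros
    cubic = (theta - r1) * (theta - r2) * (theta - r3)
    return np.where(theta <= r2, left_scale * cubic, cubic)


def global_minimizer(theta, l_values):
    """Integrate l with the trapezoidal rule to get e (up to a constant) and return its argmin."""
    steps = 0.5 * (l_values[1:] + l_values[:-1]) * np.diff(theta)
    e_values = np.concatenate(([0.0], np.cumsum(steps)))
    return theta[np.argmin(e_values)]


theta = np.linspace(-1.0, 5.0, 60001)
a = (0.0, 1.0, 3.0)  # zeros a1 < a2 < a3 of l_k
b = (0.2, 0.8, 3.5)  # zeros of l_{k+1}, arranged so that a1 < b1 < b2 < a2 < a3 < b3

theta_k = global_minimizer(theta, toy_derivative(theta, a))
# Scaling the left part deepens the well around b1 so that it becomes the global minimum.
theta_k1 = global_minimizer(theta, toy_derivative(theta, b, left_scale=100.0))
print(theta_k, theta_k1)  # theta_k lies near a3, theta_(k+1) near b1, so theta_k > theta_(k+1)
```

With the unscaled cubic for \(l_{k+1}\), the well around \(b_3\) would be the deeper one, so the positive weight on the left part is what makes \(b_1\) the global minimizer; any other positive weighting with the same effect would serve equally well for the counterexample.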

Appendix B: Proof of the DC formulation

To handle the non-convex objective function (5) more effectively, we rewrite the loss of the unlabeled samples as follows:

where \(R_s(t)=\min \{ 1-s,\max \{ 0,1-t \} \}=H_1(t)-H_s(t)\) is the ramp loss, with \(H_s(t)=\max \{ 0,s-t \}\) denoting the hinge loss with hinge point \(s<1\). Furthermore, using the ramp loss, we rewrite the loss term of the unlabeled samples in the objective function (5) as:
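The ramp-loss identity \(R_s(t)=H_1(t)-H_s(t)\) is what turns the unlabeled loss into a difference of two convex functions. The short check below assumes the standard hinge convention \(H_s(t)=\max \{ 0,s-t \}\), under which the identity holds for any \(s<1\); the value \(s=-0.3\) and the function names are illustrative only.

```python
import numpy as np


def hinge(t, s):
    """Hinge loss with hinge point s: H_s(t) = max{0, s - t} (assumed convention)."""
    return np.maximum(0.0, s - t)


def ramp(t, s):
    """Ramp loss R_s(t) = min{1 - s, max{0, 1 - t}}, for s < 1."""
    return np.minimum(1.0 - s, np.maximum(0.0, 1.0 - t))


s = -0.3  # illustrative clipping parameter
t = np.linspace(-3.0, 3.0, 601)

# The ramp loss is a convex hinge (H_1) minus another convex hinge (H_s).
assert np.allclose(ramp(t, s), hinge(t, 1.0) - hinge(t, s))

# Values in each regime: clipped at 1 - s, linear in between, zero for t >= 1.
print(ramp(np.array([-2.0, 0.0, 0.5, 2.0]), s))
```

The clipping at \(1-s\) bounds the penalty for any single unlabeled point, while the decomposition keeps each piece convex, which is the structure CCCP relies on.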

Then, using the artificially labeled samples defined in (14), the expression in (31) can be rewritten as:

where the constant term does not affect the optimization problem and can therefore be ignored.

Finally, the original objective function can be written in the following difference-of-convex (DC) form:
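Once the objective is a sum of a convex part and a concave part, CCCP minimizes it by repeatedly linearizing the concave part at the current iterate and solving the resulting convex inner-loop problem. The sketch below illustrates only this generic loop, not the authors' S²OR algorithm: it assumes a hypothetical scalar linear binary classifier \(f(x)=wx\) with objective \(\frac{\lambda }{2}w^2+\sum _i R_s(y_i w x_i)\), uses \(R_s=H_1-H_s\) with \(H_s(t)=\max \{ 0,s-t \}\), and replaces the SVM-type solver used for the CIL problem with a ternary search, which is valid here only because the inner problem is a one-dimensional convex function. All names and data are illustrative.

```python
import numpy as np


def hinge(z, s):
    """H_s(z) = max{0, s - z} (assumed hinge convention)."""
    return np.maximum(0.0, s - z)


def objective(w, x, y, lam, s):
    """Toy DC objective (lam/2) w^2 + sum_i R_s(y_i * w * x_i), with R_s = H_1 - H_s."""
    z = y * x * w
    return 0.5 * lam * w**2 + np.sum(hinge(z, 1.0) - hinge(z, s))


def surrogate(w, x, y, lam, g):
    """Convex part plus the linearized concave part (constant terms dropped)."""
    return 0.5 * lam * w**2 + np.sum(hinge(y * x * w, 1.0)) + g * w


def ternary_search(f, lo, hi, iters=200):
    """Minimize a one-dimensional convex function f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


def cccp(x, y, lam=0.1, s=-0.5, w0=0.0, max_iter=50, tol=1e-10):
    """Generic CCCP loop; returns the final w and the objective value after each step."""
    w = w0
    history = [objective(w, x, y, lam, s)]
    for _ in range(max_iter):
        z = y * x * w
        # Supergradient of the concave part -sum_i H_s(z_i) with respect to w:
        # samples with z_i < s contribute +y_i * x_i, the others contribute 0.
        g = np.sum((y * x)[z < s])
        # Optimality of the inner problem implies |w| <= (sum_i |x_i| + |g|) / lam.
        bound = (np.sum(np.abs(x)) + abs(g)) / lam + 1.0
        w_new = ternary_search(lambda v: surrogate(v, x, y, lam, g), -bound, bound)
        history.append(objective(w_new, x, y, lam, s))
        converged = abs(w_new - w) < tol
        w = w_new
        if converged:
            break
    return w, history


# Hypothetical toy data: the last two samples act as label noise.
x = np.array([2.0, 1.5, -1.0, -2.5, 0.4, -0.3])
y = np.array([1.0, 1.0, -1.0, -1.0, -1.0, 1.0])
w, history = cccp(x, y)
print(w, history)
```

Each inner problem is an upper bound on the true objective that touches it at the current iterate, so the recorded objective values are non-increasing; this is the basic descent property of CCCP, and it holds regardless of which convex solver handles the inner loop.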

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, C., Huang, H. & Gu, B. Tackle balancing constraints in semi-supervised ordinal regression. Mach Learn (2024). https://doi.org/10.1007/s10994-024-06518-x


DOI: https://doi.org/10.1007/s10994-024-06518-x