Abstract

Logic-based machine learning aims to learn general, interpretable knowledge in a data-efficient manner. However, labelled data must be specified in a structured logical form. To address this limitation, we propose a neural-symbolic learning framework, called Feed-Forward Neural-Symbolic Learner (FFNSL), that integrates a logic-based machine learning system capable of learning from noisy examples, with neural networks, in order to learn interpretable knowledge from labelled unstructured data. We demonstrate the generality of FFNSL on four neural-symbolic classification problems, where different pre-trained neural network models and logic-based machine learning systems are integrated to learn interpretable knowledge from sequences of images. We evaluate the robustness of our framework by using images subject to distributional shifts, for which the pre-trained neural networks may predict incorrectly and with high confidence. We analyse the impact that these shifts have on the accuracy of the learned knowledge and run-time performance, comparing FFNSL to tree-based and pure neural approaches. Our experimental results show that FFNSL outperforms the baselines by learning more accurate and interpretable knowledge with fewer examples.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Logic-based machine learning (Muggleton, 1991; Law et al., 2019) learns interpretable knowledge expressed in the form of a logic program, called a hypothesis, that explains labelled examples in the context of (optional) background knowledge. Recent logic-based machine learning systems have demonstrated the ability to learn highly complex and noise-tolerant hypotheses in a data efficient manner [e.g., Learning from Answer Sets (LAS) (Law et al., 2019)]. However, they require labelled examples to be specified in a structured logical form, which limits their applicability to many real-world problems. On the other hand, differentiable learning systems, such as (deep) neural networks, are able to learn directly from unstructured data, but they require large amounts of training data and their learned models are difficult to interpret (Gilpin et al., 2018).

Within neural-symbolic artificial intelligence, many approaches aim to integrate neural and symbolic systems with the goal of preserving the benefits of both paradigms (Besold et al., 2017; Garcez & Lamb, 2020). Most neural-symbolic integrations assume the existence of pre-defined knowledge expressed symbolically, or logically, and focus on training a neural network to extract symbolic features from raw unstructured data (Manhaeve et al., 2018; Yang et al., 2020; Serafini & d’Avila Garcez, 2016; Cohen, 2016; Riegel et al., 2020). In this paper, we introduce Feed-Forward Neural-Symbolic Learner (FFNSL), a neural-symbolic learning framework that assumes the opposite. Given a pre-trained neural network, FFNSL uses a logic-based machine learning system robust to noise to learn a logic-based hypothesis whose symbolic features are constructed from neural network predictions. The motivation is to enable logic-based machine learning systems to utilise pre-trained neural networksFootnote 1 to learn symbolic features from unstructured data, and use these features to learn interpretable knowledge needed to solve a downstream classification task. FFNSL preserves the benefits of both paradigms, increasing the scope of the tasks logic-based machine learning systems can be applied to. The challenge in performing such an integration, is that neural networks are vulnerable to distributional shifts, where unstructured data belonging to a distribution different from that used for training often leads to incorrect predictions (Ovadia et al., 2019; Sensoy et al., 2018; Amodei et al., 2016). By using a logic-based machine learning system that is robust to noise, such as a LAS system, FFNSL is capable of learning robust logic-based hypotheses from examples generated from labelled unstructured data, which may contain incorrect or noisy features as a result of incorrect neural network predictions.

The novel aspect of our FFNSL framework is the Data-to-Knowledge (D2K) generator that bridges the neural and symbolic learning components. The D2K generator automatically constructs a symbolic representation of the features predicted from the unstructured data, and weights such knowledge with a level of truthfulness that reflects the confidence score of the neural network predictions. The symbolic features can then be used by the symbolic learning component to automatically generate weighted examples from which to learn general and interpretable knowledge needed to solve the given downstream task.

FFNSL is general enough to support the integration of any neural component capable of making discrete predictions from unstructured data (binary or multi-class classification), with any logic-based machine learning system capable of learning from noisy examples. In this paper, we present four instances of our framework, where the LAS systems, ILASP (Law, 2018) and FastLAS (Law et al., 2020), are used as the symbolic learning component, and different neural network architectures are used as the neural component. The LAS systems have been shown to learn optimal hypotheses from noisy examples (Law et al., 2018), and to be suitable for different forms of symbolic learning tasks. In these systems, a noisy example includes a weight, which defines the penalty paid by a hypothesis for not covering that example. FFNSL interprets this weight as a level of certainty of the example, and computes it using the confidence score of the related neural network predictions. In this way, the LAS systems become biased towards learning a hypothesis that has minimal penalty, i.e., a hypothesis that covers examples generated from high confidence neural network predictions (examples with high weights). For each proposed instance of our FFNSL framework, we investigate: (1) whether FFNSL can learn an accurate and interpretable hypothesis from incorrect feature predictions of the neural component, (2) how robust the learned hypothesis is in the presence of distributional shifts applied to an increasing percentage of the unstructured data, (3) the impact of using an uncertainty-aware neural network component that provides more robust confidence estimates when distributional shifts are applied to the unstructured data, and (4) how FFNSL performs in comparison to other hybrid systems where the same pre-trained neural networks, used for predicting features from the unstructured data, are integrated with a random forest and deep neural networks trained to learn the knowledge required to solve the downstream task.

To evaluate our FFNSL framework, we use four neural-symbolic classification tasks, one for each proposed instance.Footnote 2 Firstly, the Follow Suit Winner task is a card game where 4 players each play a card and the goal is to predict the winning player. In order to solve the task, the neural network predicts the rank and suit of the playing card images and the rules of the game are learned as symbolic knowledge, where the winner is the player that plays the highest ranked card with the same suit as player 1. The second task is Sudoku Grid Validity classification, which consists of observing a sequence of images of handwritten MNIST digits, corresponding to the digits in a Sudoku grid, and predicting if the grid is valid or not. The neural network classifies each digit and the symbolic knowledge required to be learned is the definition of valid (or invalid) Sudoku grids. The final tasks are Crop Yield Prediction and Indoor Scene Classification, which demonstrate the applicability of FFNSL to real-world problems and datasets. The Crop Yield Prediction task requires predicting the quality of crop yield from an image containing potentially diseased crops, where the neural network predicts the crop’s species and disease status, and the learned symbolic knowledge predicts the quality of yield. In the Indoor Scene Classification task, the neural network is pre-trained to predict the scene class from an image, and the learned symbolic knowledge maps scene classes to high-level super-classes. In the first task, the neural network is pre-trained on images of playing cards from a standard deck, but our FFNSL framework is applied on card images subject to distributional shifts, where a percentage of standard card images are replaced with images from alternative card decks. In the second task, the neural network is pre-trained on the standard MNIST dataset, and our FFNSL system is applied on an out-of-distribution MNIST dataset generated by rotating MNIST digits \(90^\circ \) clockwise. In the Crop Yield Prediction task, we pre-train the neural network on the Plant Village dataset (Hughes & Salathé, 2015), and apply distributional shifts using a hue filter. Finally, in the Indoor Scene Classification task, we adopt a neural network model pre-trained on the MIT Indoor Scene dataset (Quattoni & Torralba, 2009), and apply distributional shift using blur, hue, and rotation filters.

Our evaluation demonstrates that FFNSL outperforms the baselines on all four tasks. The hypotheses learned from unstructured data, subject to distributional shifts, are more interpretable and more accurate than those learned by the random forest and deep neural networks even when these baselines are trained with significantly more data. We have also evaluated the robustness of the FFNSL instances when applied to a test set that is also subject to distributional shifts. The results show that FFNSL outperforms the baselines, trained with the same amount of unstructured data, when up to \(\sim \)80% of the test set is subject to distributional shifts.

The paper is structured as follows. Section 2 provides necessary background material on the LAS framework, alongside further discussion of the drawbacks of the standard neural network Softmax layer for providing robust confidence estimates, and details of the uncertainty-aware neural networks used in this paper. Section 3 presents our general FFNSL framework followed by four instances discussed in detail in Sect. 4. We introduce our evaluation methodology in Sect. 5 and present the results of each FFNSL instance on the Follow Suit Winner and Sudoku Grid Validity tasks in Sects. 6 and 7 respectively, followed by the Crop Yield Prediction and Indoor Scene Classification tasks in Sect. 8. Related work is discussed in Sects. 9 and 10 concludes the paper.

2 Background

This section provides an overview of the LAS framework and the neural network approaches used in FFNSL. We discuss the difference between confidence estimates of uncertainty-aware neural networks versus that of the standard Softmax layer, when applying these trained networks to out-of-distribution data. This is particularly relevant to our FFNSL framework, as FFNSL relies upon neural network predictions and their confidence scores to learn interpretable knowledge for solving a downstream task.

2.1 Learning from answer sets

LAS (Law et al., 2019) is a logic-based machine learning approach that extends the field of Logic Programming (ILP) (Muggleton, 1991) with systems ILASP (Law, 2018) and FastLAS (Law et al., 2020). ILASP and FastLAS are capable of learning interpretable knowledge, expressed in the language of Answer Set Programming (ASP) (Gelfond & Kahl, 2014), from noisy labelled examples in an effective and scalable manner. Typically, an ASP program includes four types of rules: normal rules, choice rules, and hard and weak constraints. In this paper, we consider ASP programs composed of normal rules only.Footnote 3 A normal rule is of the form \({\mathtt {h {{\,\mathrm{\mathtt {:-}}\,}}b_1,\ldots , b_n, {{\,\mathrm{\,\texttt{not}\,}\,}}c_{1},\ldots ,{{\,\mathrm{\,\texttt{not}\,}\,}}c_{m}}}\), where \({\texttt{h}}, {\mathtt {b_1}},\ldots , {\mathtt {b_n}}, {\mathtt {c_{1}}},\ldots ,{\mathtt {c_{m}}}\) are atoms, “\(\texttt{not}\)” is negation as failure, \({\texttt{h}}\) is the head of the rule and \({\mathtt {b_1,\ldots , b_n, {{\,\mathrm{\,\texttt{not}\,}\,}}c_{1},\ldots , {{\,\mathrm{\,\texttt{not}\,}\,}}c_{m}}}\) is the body of the rule. The Herbrand Base of an ASP program P, denoted \(HB_P\), is the set of ground (variable free) atoms that can be formed from predicates and constants in P. Subsets of \(HB_P\) are called interpretations of P. The semantics of an ASP program P is defined in terms of answer sets, a subset, denoted as AS(P), of all interpretations of P that satisfy every rule in P. Given an answer set A, a ground normal rule is satisfied if the head is satisfied by A whenever all positive atoms and none of the negated atoms of the body are in A, that is when the body is satisfied. A partial interpretation, \(e_{\textrm{pi}}\), is a pair of sets of ground atoms \(\left\langle e^{\textrm{inc}}_{\textrm{pi}}, e^{\textrm{exc}}_{\textrm{pi}} \right\rangle \), called the inclusion and exclusion sets respectively. An interpretation I extends \(e_{\textrm{pi}}\) iff \(e_{\textrm{pi}}^{\textrm{inc}} \subseteq I\) and \(e_{\textrm{pi}}^{\textrm{exc}} \cap I = \emptyset \).

In the LAS framework, labelled examples are specified as Context-Dependent Partial Interpretations (CDPIs). A CDPI example e is a pair \(\langle e_{\textrm{pi}}, e_{\textrm{ctx}}\rangle \), where \(e_{\textrm{pi}}\) is a partial interpretation and \(e_{\textrm{ctx}}\) is an ASP program called the context of e. An ASP program P is said to accept e if there is at least one answer set A of \(P \cup e_{\textrm{ctx}}\) that extends \(e_{\textrm{pi}}\). Essentially, a CDPI states that a learned program P, together with \(e_{\textrm{ctx}}\), should bravely entailFootnote 4 all inclusion atoms and none of the exclusion atoms of e. When a CDPI example is noisy, that is, the truthfulness of its context and/or partial interpretation is not guaranteed, it has a weight or penalty assigned to it, in the form of a positive integer. A Weighted Context-Dependant Partial Interpretation (WCDPI) is therefore a CDPI weighted with a penalty. It is formally defined as a tuple \(e=\langle e_{\textrm{id}}, e_{\textrm{pen}}, e_{\textrm{pi}}, e_{\textrm{ctx}} \rangle \) where \(e_{\textrm{id}}\) is a unique identifier of e, \(e_{\textrm{pen}}\) is the penalty of e, and \(e_{\textrm{pi}}\) and \(e_{\textrm{ctx}}\) represent a CDPI. A LAS system that is noise-tolerant learns an ASP program H, called a hypothesis, from WCDPI examples. If a hypothesis H does not accept a WCDPI example, we say that it pays the penalty of that example. Informally, penalties are used to calculate the cost associated with a hypothesis for not covering examples. The cost function of a hypothesis H is the sum over the penalties of all of the examples that are not covered by H, augmented with the length of the hypothesis. A LAS learning task with noisy examples, consists of an ASP program denoting background knowledge, a hypothesis space defined by a language bias,Footnote 5 expressing the set of rules that can be used to construct a solution of the task, and a set of WCDPI examples. The goal of such a task is to find a hypothesis H in the hypothesis space that minimises a cost function with respect to a given set of noisy examples. This is formally defined below, adapted from Law (2018).

Definition 1

An \(\textrm{ILP}^{\textrm{noise}}_{\textrm{LAS}}\) task T is a tuple \(T=\langle B, S_M, E\rangle \), where B is an ASP program, \(S_M\) is a hypothesis space, and E is a set of WCDPIs. Given a hypothesis \(H \subseteq S_M\),

-

1.

\(\textrm{UNCOV}(H, T)\) is the set consisting of all examples \(e \in E\) such that \(B \cup H\) does not accept e.

-

2.

The penalty of H, denoted as \(\textrm{PEN}(H, T)\), is the sum \(\sum _{e \in \textrm{UNCOV}(H, T)} e_{\textrm{pen}}\).

-

3.

The score of H, denoted as \({\mathcal {S}}(H, T)\), is calculated as \(\vert H\vert + \textrm{PEN}(H, T)\).

-

4.

H is an optimal inductive solution of T if and only if \(\not \exists H' \subseteq S_M\) such that \({\mathcal {S}}(H', T) < {\mathcal {S}}(H, T)\).

ILASP and FastLAS are two state-of-the-art systems capable of solving an \(\textrm{ILP}^{\textrm{noise}}_{\textrm{LAS}}\) task. The optimisation function used by both systems aims at learning a hypothesis H that jointly minimises the total penalty paid for the uncovered examples and its length. In practice, this creates a bias towards shorter, and therefore more general solutions that cover examples with a high penalty value.

2.2 Uncertainty-aware neural networks

Our FFNSL framework relies on pre-trained neural networks to extract symbolic features from unstructured data. The neural network prediction and its confidence score may therefore affect the accuracy of a learned hypothesis. In this paper, we consider two different types of neural networks as FFNSL neural components: a standard Convolutional Neural Network (CNN) that uses a Softmax layer, and an uncertainty-aware CNN that provides more robust confidence estimates when given data outside the training distribution.

Uncertainty can be formulated as either aleatoric or epistemic uncertainty (Hüllermeier & Waegeman, 2021; Pearce et al., 2021). In a machine learning classification task, aleatoric uncertainty can be thought of as the uncertainty along the class decision boundary, whereas epistemic uncertainty can be thought of as whether the sample falls into any of the classes at all. The confidence estimates output by a neural network Softmax layer in a classification task often only capture aleatoric uncertainty, as these outputs are based on a single probability distribution over a set of classes squashed into real values between 0 and 1. For example, given neural network output logits \({\varvec{l}}\) and k possible classes, the Softmax output \(\sigma ({\varvec{l}})\) for class i, where \(1\le i\le k\) is calculated as:

where \({\varvec{e}}=2.71828...\) is the Euler number.Footnote 6 There are three challenges with this approach in terms of uncertainty quantification. Firstly, the exponent applied to neural network outputs inflates the confidence estimate. Secondly, as the Softmax output is a point-wise, multinomial distribution, it is only possible to compare the confidence of the predicted class among other classes, as opposed to estimating the predictive distribution variance (Sensoy et al., 2018). Finally, when Softmax is paired with the commonly used cross-entropy loss, the network is only trained to minimise prediction error, as opposed to expressing uncertainty robustly.

To address these challenges, many techniques have been proposed in the literature (Rasmussen, 2003; Mackay, 1995; Blundell et al., 2015; Abdar et al., 2021). In this paper we consider the EDL-GEN (Sensoy et al., 2020) approach, which is a neural network based on generative models of Evidential Deep Learning (EDL) systems (Sensoy et al., 2018) that have been shown to achieve state-of-the-art performance in handling epistemic uncertainty. An EDL (Sensoy et al., 2018) system replaces the Softmax layer in a neural network with a linear layer that represents the parameters of a Dirichlet distribution, a second-order distribution that inherently models the variance of a predictive distribution as opposed to the single point-wise output provided by Softmax. It then uses a new loss function that jointly minimises prediction error and the variance of the Dirichlet distribution, to reduce aleatoric uncertainty on the class decision boundary. EDL-GEN (Sensoy et al., 2020) extends this approach to also capture epistemic uncertainty by firstly treating the output of each class as a binary decision and secondly, using a variational auto-encoder to automatically generate out-of-distribution samples for training, in order to help the network discriminate between samples within and outside the training distribution.

To better understand how the uncertainty estimation of neural network predictions impacts the overall accuracy of our FFNSL framework, we analyse in the evaluation Sects. 6 and 7, the predicted confidence scores generated by a standard CNN with a Softmax layer and an EDL-GEN neural network and evaluate how they affect the accuracy of FFNSL when increasing percentages of input training data are subject to distributional shifts.

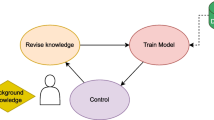

3 FFNSL framework

In this section we present our general FFNSL framework. It consists of three components, a pre-trained neural network, a symbolic (logic-based) learning system and a D2K generator that bridges the neural and symbolic learning components. It takes as input a dataset D of labelled (sequences of) unstructured data, alongside a background knowledge B (if any) and a search space \(S_{M}\). The output is a hypothesis H in the search space \(S_{M}\) (\(H\subseteq S_{M}\)), that predicts the labels of (sequences of) unstructured data. An overview of the FFNSL architecture is presented in Fig. 1.

FFNSL architecture and data flow generated for a single data point \(\langle {\varvec{x}},y\rangle \), where \({\varvec{x}}\) is a sequence of images and y is a label for the sequence. B is the background knowledge, \(S_{M}\) is the hypothesis search space and H is the learned hypothesis. In practice, the architecture is applied on a set of data points from which the D2K generator produces a set of symbolic examples passed in as input to the symbolic learner

We now define each of the three components of our FFNSL architecture. Let us assume that the training dataset D is given by a finite set \(D=\{\langle {\varvec{x}}_{w}, y_{w}\rangle \mid 1 \le w\le \vert D\vert \}\). The downstream task is a classification task where the objective is to predict the target label \(y\!\in \! {\mathcal {Y}}\) given a sequence of unstructured data \({\varvec{x}}\!\in \! {\mathcal {X}}_{1}\!\times \!\ldots \!\times \! {\mathcal {X}}_{n}\). Note that \({\mathcal {X}}_{i}\) could refer to different types of unstructured inputs and the sequence could also contain only a single input. The neural component of FFNSL contains up to n pre-trained neural network(s).Footnote 7 Each neural network \(g_{i}:{\mathcal {X}}_{i}\rightarrow [0,1]^{k_{i}}\) returns a vector denoting relative assignment to \(k_{i}\) possible classes for an unstructured input \(x_{i} \in {\varvec{x}}\). Each possible class \(z_{i}\in \left\{ 1,\ldots ,k_{i}\right\} \) represents a set of symbolic feature and value pairs from a given set \(F_{g_{i}}\) of symbolic feature mappings associated with the neural network \(g_{i}\). For example, in the Follow Suit Winner task, \(F_{g_{i}}\) contains all possible suit and rank values corresponding to the possible predictions of the neural network, when given an image of a playing card.

The second component of FFNSL is the D2K generator that outputs a symbolic representation of the sequence of neural network predictions, together with an aggregated confidence value. Specifically, for a given sequence of unstructured data \({\varvec{x}}=\langle x_{1},\ldots ,x_{n}\rangle \), the D2K generator takes each neural network output \(g_{i}(x_{i})\), and computes the corresponding prediction \(z_{i}\). Each \(z_{i}\), for \(1\le i\le n\), is obtained by using the standard “arg max" function, i.e., the class with the maximum confidence score:

The D2K generator then uses the set \(F_{g_{i}}\), associated with \(g_{i}\) and generates the set \(f^{z_{i}}_{g_{i}}\subseteq F_{g_{i}}\) of symbolic feature and value pairs corresponding to the prediction \(z_{i}\). As an example, in the Follow Suit Winner task, \(z_{i}\) is an identifier for one of 52 playing cards, and \(f^{z_{i}}_{g_{i}}\) contains two feature and value pairs, one for the suit, and one for the rank of the card \(z_{i}\). The D2K generator also generates a set \(l_{i}\) of pairs containing additional symbolic meta-data, associated with each input \(x_{i}\). Again, each pair in \(l_{i}\) contains a name and a value. In the Follow Suit Winner task, \(l_{i}\) contains one pair indicating which player played the card \(z_{i}\). The generated set of tuples \(\{\langle m_{g_{i}}, f^{z_{i}}_{g_{i}}, l_{i} \rangle \mid x_{i} \in {\varvec{x}}\}\), where \(m_{g_{i}}\) is a unique identifier for the neural network \(g_{i}\), defines the symbolic features extracted from a sequence of unstructured data \({\varvec{x}}\), based on the neural network predictions. Finally, the D2K generator computes an aggregated confidence value \(W({\varvec{x}})\) for the generated symbolic features, representing the combined confidence scores of the neural network predictions:

\(W({\varvec{x}})\) is a generalisation of the binary Gödel t-norm used in fuzzy logic to encode fuzzy conjunctions (Metcalfe et al., 2008). So, given a sequence of unstructured inputs \({\varvec{x}}=\langle x_{1},\ldots ,x_{n}\rangle \) and the predicted vector \(\langle g_{1}(x_1)[z_1],\ldots ,g_{n}(x_n)[z_{n}]\rangle \) from the neural network, the output of the D2K generator is formally defined as:

A pseudo-code implementation of the D2K generator is presented in Algorithm 1. Note that some aspects are task specific, such as the set of feature value pairs \(f^{z_{i}}_{g_{i}}\), and meta-data \(l_{i}\). These are left general in Algorithm 1, and specified in more detail for each task in Sect. 4.

The third component of our FFNSL framework is a symbolic logic-based machine learning system. For each labelled unstructured data \(\langle {\varvec{x}}, y\rangle \in D\), the symbolic learning system takes as input \(D2K({\varvec{x}})\) and the label y, and generates a weighted symbolic labelled example denoted as the tuple \(\langle W^{\prime }({\varvec{x}}), e_{\langle {\varvec{x}}, y\rangle }\rangle \) where \(W^{\prime }({\varvec{x}})\) is a penalty for the example, calculated from the aggregated confidence score \(W({\varvec{x}})\), and \(e_{\langle {\varvec{x}}, y\rangle }\) is a labelled example. The syntactic form of \(e_{\langle {\varvec{x}}, y\rangle }\) and the calculation of the penalty \(W^{\prime }({\varvec{x}})\) depends on the specific symbolic learning system used in the instantiation of the framework. In Sect. 4 we present two specific instances of FFNSL where the symbolic learning systems are LAS systems and we show how weighted symbolic labelled examples are defined as WCDPI examples. We denote with E the set of weighted symbolic labelled examples defined by the symbolic learning system for all \(\langle {\varvec{x}}, y\rangle \in D\). A symbolic learning task \(T=\langle B, S_{M}, E\rangle \) is then generated where B and \(S_{M}\) are respectively the background knowledge and a search space given as input to FFNSL. The symbolic learner then computes an optimal solution H for this task T as the output of FFNSL.

Formally, an FFNSL learning task is a tuple \(T=\langle B, S_{M}, D\rangle \) where D is a set of labelled unstructured data, B is a set of optional background knowledge and \(S_{M}\) is a search space of possible solutions for T. A hypothesis \(H\subseteq S_{M}\) is an inductive solution of T if and only if H is an optimal inductive solution of the symbolic learning task \(\langle B, S_{M}, E\rangle \), where E is the set of weighted symbolic labelled examples automatically generated relative to the given set D. In the next section, we present four specific instances of our FFNSL framework and give specific examples of the components described here.

4 FFNSL with LAS systems

The generality of our FFNSL framework allows it to be instantiated differently, using alternative neural and/or symbolic learning components, depending on the nature of the classification task in hand. We have considered four different classification tasks, called Follow Suit Winner, Sudoku Grid Validity, Crop Yield Prediction and Indoor Scene Classification respectively. The first requires the learning of concepts that are not directly observed in the labels, but linked to the label through the background knowledge, whereas the other tasks require the learning of concepts that define the classification label. Because of the different types of symbolic learning, we consider instantiations of our FFNSL framework with different LAS systems. In what follows we introduce these tasks, their datasets, define the respective FFNSL learning tasks and describe in more detail the FFNSL instances we have implemented to solve these tasks. Firstly, let us define the weighted symbolic labelled examples within a LAS system, based on the output from the D2K generator. Essentially, the predicted symbolic features and meta-data define the context of a LAS example, represented as a conjunction of facts, and the aggregated confidence score \(W({\varvec{x}})\) is used to calculate the associated weight penalty:

which converts \(W({\varvec{x}})\) to an integer \(W^{\prime }({\varvec{x}})\!>\!0\) as required by the LAS systems. Given the output generated by D2K, a LAS system constructs a weighted symbolic labelled example \(\langle W^{\prime }({\varvec{x}}), e_{\langle {\varvec{x}}, y\rangle }\rangle \) as a WCDPI of the form \(\langle e_{\textrm{id}}, e_{\textrm{pen}}({\varvec{x}}), e_{\textrm{pi}}(y), e_{\textrm{ctx}}({\varvec{x}})\rangle \), where \(e_{\textrm{id}}\) is a unique identifier, \(e_{\textrm{pen}}({\varvec{x}}) = W^{\prime }({\varvec{x}})\), \(e_{\textrm{pi}}(y)\) is the partial interpretation \(\langle \{y\}, {\mathcal {Y}}\setminus \{y\}\rangle \), defined in terms of the label y and its domain \({\mathcal {Y}}\), and the context \(e_{\textrm{ctx}}({\varvec{x}})\) is a conjunction of facts created from the predicted symbolic features and meta-data. The components \(e_{\textrm{pi}}(y)\) and \(e_{\textrm{ctx}}({\varvec{x}})\) together constitute the labelled example \(e_{\langle {\varvec{x}}, y\rangle }\).

Given a set \(E^{\prime }\) of WCDPIs, a background knowledge B, and a search space \(S_{M}\), a hypothesis \(H \subseteq S_{M}\) is learned such that H is an optimal inductive solution of the task \(T^{\textrm{noise}}_{\textrm{LAS}}=\langle B, S_{M}, E^{\prime }\rangle \). Let us now present the tasks used in our evaluation, alongside examples of each instantiated FFNSL component.

4.1 Follow suit winner

This is a classification task where 4 players each play 1 card and the goal is to predict the winning player. The symbolic knowledge required to solve the task defines the rules of the game, that is the winner is the player that plays the highest ranked card with the same suit as player 1. Each \(\langle {\varvec{x}}, y\rangle \in D\) is composed of a sequence \({\varvec{x}}\) of 4 card images corresponding to the cards played by players \(1,\ldots ,4\), and a label \(y\in \{1,2,3,4\}\) denoting the player who wins the 4 card trick.

Let us assume  which contains images of the cards 10 of hearts, jack of hearts, 4 of clubs and 8 of spades played by player 1, 2, 3 and 4 respectively. For this trick, the ground truth label is \(y=2\) indicating that player 2 is the winner since player 2 has played the highest ranked card with the same suit as player 1. Since the unstructured inputs in the sequence \({\varvec{x}}\) are of the same type (i.e., card images), FFNSL can simply use a single neural network g pre-trained to predict the features of a card image, that is the rank and suit of each card. Therefore, g has two associated symbolic features rank and suit each with values \(\{2,\ldots ,10, jack, queen, king, ace\}\) and \(\{hearts, clubs, spades, diamonds\}\) respectively. For each input \(x_{i}\), there are 52 possible predictions, one for each combination of rank and suit, i.e., \(g:{\mathcal {X}}\rightarrow [0,1]^{52}\), where \({\mathcal {X}}\) is the set of possible card images. g has an associated feature value mapping \(F_{g}\) which gives for each card prediction \(z_{i}\in \{1,\ldots ,52\}\), a unique set of two pairs, each containing a feature and value, i.e., \(f^{z_{i}}_{g}=\{\langle rank, \nu _{rank}\rangle , \langle suit, \nu _{suit}\rangle \}\), where \(\nu _{rank}\) is one of the 13 rank values and \(\nu _{suit}\) is one of the 4 suit values. Furthermore, each input \(x_{i}\) also has associated symbolic meta-data \(l_{i}=\{\langle player, \nu _{player}\rangle \}\) where \(\nu _{player}\in \{1,2,3,4\}\) indicates the player that has played card \(x_{i}\).

which contains images of the cards 10 of hearts, jack of hearts, 4 of clubs and 8 of spades played by player 1, 2, 3 and 4 respectively. For this trick, the ground truth label is \(y=2\) indicating that player 2 is the winner since player 2 has played the highest ranked card with the same suit as player 1. Since the unstructured inputs in the sequence \({\varvec{x}}\) are of the same type (i.e., card images), FFNSL can simply use a single neural network g pre-trained to predict the features of a card image, that is the rank and suit of each card. Therefore, g has two associated symbolic features rank and suit each with values \(\{2,\ldots ,10, jack, queen, king, ace\}\) and \(\{hearts, clubs, spades, diamonds\}\) respectively. For each input \(x_{i}\), there are 52 possible predictions, one for each combination of rank and suit, i.e., \(g:{\mathcal {X}}\rightarrow [0,1]^{52}\), where \({\mathcal {X}}\) is the set of possible card images. g has an associated feature value mapping \(F_{g}\) which gives for each card prediction \(z_{i}\in \{1,\ldots ,52\}\), a unique set of two pairs, each containing a feature and value, i.e., \(f^{z_{i}}_{g}=\{\langle rank, \nu _{rank}\rangle , \langle suit, \nu _{suit}\rangle \}\), where \(\nu _{rank}\) is one of the 13 rank values and \(\nu _{suit}\) is one of the 4 suit values. Furthermore, each input \(x_{i}\) also has associated symbolic meta-data \(l_{i}=\{\langle player, \nu _{player}\rangle \}\) where \(\nu _{player}\in \{1,2,3,4\}\) indicates the player that has played card \(x_{i}\).

We instantiate our FFNSL framework as follows. Given a sequence \({\varvec{x}}\) of 4 card images, the neural component of FFNSL generates 4 vectors \(g(x_{i})\), where \(1\le i\le 4\). The D2K component generates for each \(x_{i}\), the card prediction \(z_{i}\) and its corresponding symbolic features and meta-data, thus computing the tuple \(D2K(x_{i})=\langle {\texttt{card}}, f^{z_{i}}_{g},l_{i}\rangle \), where \({\texttt{card}}\) is the identifier for the network g (i.e., \(m_{g}={\texttt{card}}\)).

Example 1

Consider the sequence  and \(y=2\). Let us assume that the neural network g computes the outputs \(g(x_{1}),...,g(x_{4})\) from which the D2K generator generates the correct card predictions \(z_{1}=10\), \(z_{2}=11\), \(z_{3}=17\), and \(z_{4}=34\). Let us also assume the neural network confidence scores for these predictions are:

and \(y=2\). Let us assume that the neural network g computes the outputs \(g(x_{1}),...,g(x_{4})\) from which the D2K generator generates the correct card predictions \(z_{1}=10\), \(z_{2}=11\), \(z_{3}=17\), and \(z_{4}=34\). Let us also assume the neural network confidence scores for these predictions are:

\(D2K({\varvec{x}})\) is given by the following tuple:

In this task, FFNSL uses the symbolic learner ILASP. The concept to be learned is not directly expressed as a label, but is related to it. The label is a single winning player for a trick, but the learned concept requires reasoning over the conditions of the suit and rank values of the other players’ cards. We encode as background knowledge, possible suit and rank values, the four players, as well as the definition of a higher rank predicate. ILASP is particularly suited for solving such learning tasks, known as non-observational predicate learning. The full background knowledge B and language bias used to construct the search space \(S_{M}\) for this classification task are given in Appendix F. To generate its learning task, ILASP has to generate its set \(E^{'}\) of WCDPI examples based on the output of the D2K component. For example, the WCDPI generated from the D2K output and the corresponding label in Example 1 is:

where \(e_{\textrm{id}}\) is a unique identifier and \(e_{\textrm{ctx}}\) is the set of facts \(\{{\mathtt {card(1,10,hearts).}}, {\mathtt {card(2,jack,hearts).}}, {\mathtt {card(3,4,clubs).}}, {\mathtt {card(4,8,spades).}}\}\).

4.2 Sudoku grid validity

Our second classification task is Sudoku Grid Validity. This consists of observing a sequence of images of handwritten MNIST digits, corresponding to the digits in a Sudoku grid, and predicting if the grid is valid or not.Footnote 8 The learned symbolic knowledge required to solve this task is the definition of a valid Sudoku grid. In this task, each \(\langle {\varvec{x}},y\rangle \in D\) contains a sequence of digit images \({\varvec{x}}\) with a label \(y\in \{0, 1\}\) for valid and invalid respectively. The length of the sequence depends on the size of the grid. We consider \(4\times 4\) and \(9\times 9\) Sudoku grids as two separate tasks, with respective datasets \(D_{4\times 4}\) and \(D_{9\times 9}\) where the maximum length of the sequence in input is given by \(n=16\) and \(n=81\) respectively. As the images are all MNIST digits, FFNSL uses two neural networks \(g_{4\times 4}\) and \(g_{9\times 9}\), depending on the grid size, pre-trained to predict the feature digit of a single image \(x_{i}\) in \({\varvec{x}}\). So \(g_{k\times k}:{\mathcal {X}}\rightarrow [0,1]^{k}\), where \({\mathcal {X}}\)=MNIST. In the case of \(D_{4\times 4}\), \(n=16\) and \(k=4\) whereas in the case of \(D_{9\times 9}\), \(n=81\) and \(k=9\). The neural network \(g_{k\times k}\) has associated a feature value mapping \(F_{g_{k\times k}}\) which gives for each digit prediction \(z_{i}\in \{1,\ldots ,k\}\) a unique set of pairs \(f^{z_{i}}_{g_{k\times k}} = \{\langle value, \nu \rangle \}\), where \(\nu \) is one of the k digits that can appear in a Sudoku grid of size \(k\times k\). The meta-data related to each \(x_{i}\) is a set of two feature value pairs denoting the row and column that the image \(x_{i}\) has in the Sudoku grid, i.e., \(l_{i}=\{\langle row, \nu _{row}\rangle , \langle col, \nu _{col}\rangle \}\), where \(\nu _{row},\nu _{col}\in \{1,\ldots ,k\}\).

The instantiated FFNSL framework for this classification task is defined as follows. Given a sequence, \({\varvec{x}}\), of MNIST digit images, for each \(x_i\in {\varvec{x}}\), the pre-trained neural network \(g_{k \times k}\) computes the vector \(g(x_{i})\). The D2K component generates for each \(x_{i}\) the tuple \(D2K(x_{i})=\langle {\texttt{digit}}, f^{z_{i}}_{g_{k\times k}}, l_{i}\rangle \) where \({\texttt{digit}}\) is the network identifier, \(f^{z_{i}}_{g_{k\times k}}\) is the set of symbolic feature values associated with the prediction \(z_{i}\), and \(l_{i}\) is the set of symbolic meta-data feature value pairs associated with \(x_{i}\).

Example 2

Consider the task of predicting the validity of a \(4\times 4\) Sudoku grid. Let  , with label \(y=1\), and associated symbolic meta-data:

, with label \(y=1\), and associated symbolic meta-data:

Let us assume the neural network \(g = g_{4 \times 4}\) and g computes the outputs \(g(x_{1}),...,g(x_{5})\) from which the D2K generator generates the correct digit predictions \(z_{1}=2\), \(z_{2}=4\), \(z_{3}=1\), \(z_{4}=3\), and \(z_{5}=4\). Let us also assume the neural network confidence scores for these predictions are: \(g(x_{1})[z_{1}] = 0.88\), \( g(x_{2})[z_{2}] = 0.93\), \(g(x_{3})[z_{3}] = 0.87\), \(g(x_{4})[z_{4}] = 0.97\), and \(g(x_{5})[z_{5}] = 0.99\). The aggregated confidence score \(W({\varvec{x}}) = 0.87\). \(D2K({\varvec{x}})\) is given by the following tuple:

In this task FFNSL uses the FastLAS symbolic learner because the task is to learn the definition of the classification label, and FastLAS has been shown, for these types of learning tasks, to be more scalable than ILASP (Law et al., 2020). For both \(4\times 4\) and \(9\times 9\) Sudoku grids, the knowledge of the grid is encoded as part of the background knowledge B, given in Appendix F together with the language bias used to construct the search space \(S_{M}\). For each \(\langle {\varvec{x}}, y\rangle \), FastLAS takes as input \(D2K({\varvec{x}})\) and generates a WCDPI example. For instance, the WCDPI generated for the D2K output and the corresponding label in Example 2 is:

where \(e_{\textrm{id}}\) is a unique identifier and \(e_{\textrm{ctx}}\) is given by the set of facts \(\{{\mathtt {digit(1,1,2).}}, {\mathtt {digit(1,3,4).}},\; {\mathtt {digit(1,4,1).}},\; {\mathtt {digit(3,2,3).}},\; {\mathtt {digit(4,3,4).}}\}\).

4.3 Crop yield prediction

To demonstrate the application of FFNSL to a real-world problem and dataset, consider the Crop Yield Prediction task. The goal is to classify the quality of yield, given an image and the location of a particular crop. The symbolic knowledge required to solve the task defines the quality of yield according to the crop’s location, species, and any disease that may be present. Each \(\langle {\varvec{x}},y \rangle \in D\) is composed of a sequence \({\varvec{x}}\) containing a single image, and a label \(y\in \{0,1,2\}\) denoting the quality of yield as poor, moderate, and strong respectively.

Let us assume  which contains an image of a peach crop with the bacterial spot disease. Given symbolic meta-data denoting the location of this crop, let us assume the label \(y=0\), indicating poor yield. In this task, we use one neural network g to predict the features of a crop image, which are the crop species and disease. In total, there are 38 possible combinations of crop species and diseases, and g is trained to classify each combination. To assist with neural network training, the image dataset also contains a background class with unrelated images.Footnote 9 Therefore, \(g : {\mathcal {X}} \rightarrow [0,1]^{39}\), where \({\mathcal {X}}\) is the set of possible crop and background images. g has an associated feature value mapping \(F_{g}\), which specifies for the crop prediction \(z_{i} \in \{ 1,...,38\}\), a unique set of feature and value pairs \(f_{g}^{z_{i}}= \{ \langle species, \nu _{species} \rangle , \langle disease, \nu _{disease} \rangle \}\), where \(\nu _{species}\) and \(\nu _{disease}\) are the crop species and disease values respectively. Also, each input \(x_{i}\) has associated symbolic meta-data \(l_{i} = \{ \langle location, \nu _{location}\rangle \}\) where \(\nu _{location} \in \{ 1,...,19\}\) is the location of the crop.Footnote 10

which contains an image of a peach crop with the bacterial spot disease. Given symbolic meta-data denoting the location of this crop, let us assume the label \(y=0\), indicating poor yield. In this task, we use one neural network g to predict the features of a crop image, which are the crop species and disease. In total, there are 38 possible combinations of crop species and diseases, and g is trained to classify each combination. To assist with neural network training, the image dataset also contains a background class with unrelated images.Footnote 9 Therefore, \(g : {\mathcal {X}} \rightarrow [0,1]^{39}\), where \({\mathcal {X}}\) is the set of possible crop and background images. g has an associated feature value mapping \(F_{g}\), which specifies for the crop prediction \(z_{i} \in \{ 1,...,38\}\), a unique set of feature and value pairs \(f_{g}^{z_{i}}= \{ \langle species, \nu _{species} \rangle , \langle disease, \nu _{disease} \rangle \}\), where \(\nu _{species}\) and \(\nu _{disease}\) are the crop species and disease values respectively. Also, each input \(x_{i}\) has associated symbolic meta-data \(l_{i} = \{ \langle location, \nu _{location}\rangle \}\) where \(\nu _{location} \in \{ 1,...,19\}\) is the location of the crop.Footnote 10

We instantiate our FFNSL framework as follows. Given a sequence \({\varvec{x}}\) containing a single crop image, the neural component generates a single vector \(g(x_{i})\). The D2K component generates the prediction \(z_{i}\) and its corresponding symbolic features and meta-data, thus computing the tuple \(D2K(x_{i}) = \langle {\texttt{crop}}, f_{g}^{z_{i}}, l_{i}\rangle \), where \({\texttt{crop}}\) is the identifier for the network g (i.e., \(m_{g}={\texttt{crop}}\)).

Example 3

Consider the sequence  and \(y=0\). Let us assume the neural network g computes the output \(g(x_{1})\) from which the D2K generator generates the correct crop prediction \(z_{1}=17\). Let us also assume the neural network predicts with confidence \(g(x_{1})[z_{1}]=0.98\), and this crop is in location 5. \(D2K({\varvec{x}})\) is given by the following tuple:

and \(y=0\). Let us assume the neural network g computes the output \(g(x_{1})\) from which the D2K generator generates the correct crop prediction \(z_{1}=17\). Let us also assume the neural network predicts with confidence \(g(x_{1})[z_{1}]=0.98\), and this crop is in location 5. \(D2K({\varvec{x}})\) is given by the following tuple:

In this task FFNSL uses the FastLAS symbolic learner which is shown to be more scalable than ILASP. The background knowledge B contains a rule that ensures a classification is performed, i.e., given a crop, disease, and a location, the learned hypothesis should output only one class of crop yield. This rule, alongside the language bias used to construct the search space \(S_{M}\) is given in Appendix F. For each \(\langle {\varvec{x}},y\rangle \), FastLAS takes as input \(D2K({\varvec{x}})\) and generates a WCDPI example. For instance, the WCDPI generated from the D2K output and the corresponding label given in Example 3 is:

where \(e_{\textrm{id}}\) is a unique identifier and \(e_{\textrm{ctx}}\) is given by the set of facts \(\{{\mathtt {species(peach).}}, {\mathtt {disease(bacterial\_spot).}},\; \{{\mathtt {location(5).}}\}\).

4.4 Indoor scene classification

Our final instantiation of FFNSL is with the Indoor Scene Classification task, where both neural and symbolic components are trained with real data. The goal is to learn symbolic knowledge that maps indoor scene classes (e.g., bedroom, bathroom, kitchen) into higher level super-classes (e.g., home), given images of indoor scenes. Each \(\langle {\varvec{x}},y \rangle \in D\) is composed of a sequence \({\varvec{x}}\) containing a single indoor scene image, and a label \(y\in \{0,\ldots ,4\}\) denoting the super-class as store, home, public space, leisure, and working place respectively.

Example bookstore image from the MIT Indoor Scenes dataset. Quattoni and Torralba (2009)

Let us assume  which contains an image of a bookstore (also shown in Fig. 2). The label for this example is \(y=0\) (i.e., store). We use one neural network g to predict the scene class. In total, there are 67 different classes of various indoor scenes, and therefore \(g:{\mathcal {X}}\rightarrow [0,1]^{67}\), where \({\mathcal {X}}\) is the set of possible images in the MIT Indoor Scene dataset. g has an associated feature value mapping \(F_{g}\), which for the scene prediction \(z_{i} \in \{1,...,67\}\), gives a pair that denotes the symbolic scene name \(\nu _{scene}\), i.e., \(f^{z_{i}}_{g}=\{\langle scene, \nu _{scene} \rangle \}\). In this task there is no symbolic meta-data associated with each input \(x \in {\varvec{x}}\). Given a sequence \({\varvec{x}}\) containing a single scene image, the neural component generates a single vector \(g(x_{i})\). The D2K component generates the prediction \(z_{i}\) and its corresponding symbolic feature, thus computing the tuple \(D2K(x_{i})=\langle {\texttt{image}}, f^{z_{i}}_{g}, \{ \}\rangle \), where \({\texttt{image}}\) is the identifier for the network g (i.e., \(m_{g}={\texttt{image}}\)).

which contains an image of a bookstore (also shown in Fig. 2). The label for this example is \(y=0\) (i.e., store). We use one neural network g to predict the scene class. In total, there are 67 different classes of various indoor scenes, and therefore \(g:{\mathcal {X}}\rightarrow [0,1]^{67}\), where \({\mathcal {X}}\) is the set of possible images in the MIT Indoor Scene dataset. g has an associated feature value mapping \(F_{g}\), which for the scene prediction \(z_{i} \in \{1,...,67\}\), gives a pair that denotes the symbolic scene name \(\nu _{scene}\), i.e., \(f^{z_{i}}_{g}=\{\langle scene, \nu _{scene} \rangle \}\). In this task there is no symbolic meta-data associated with each input \(x \in {\varvec{x}}\). Given a sequence \({\varvec{x}}\) containing a single scene image, the neural component generates a single vector \(g(x_{i})\). The D2K component generates the prediction \(z_{i}\) and its corresponding symbolic feature, thus computing the tuple \(D2K(x_{i})=\langle {\texttt{image}}, f^{z_{i}}_{g}, \{ \}\rangle \), where \({\texttt{image}}\) is the identifier for the network g (i.e., \(m_{g}={\texttt{image}}\)).

Example 4

Consider the sequence  and \(y=0\). Let us assume the neural network g computes the output \(g(x_{1})\) from which the D2K generator generates the correct scene prediction \(z_{1}=8\). Let us also assume the neural network predicts with confidence \(g(x_{1})[z_{1}]=0.96\). \(D2K({\varvec{x}})\) gives as output the following tuple:

and \(y=0\). Let us assume the neural network g computes the output \(g(x_{1})\) from which the D2K generator generates the correct scene prediction \(z_{1}=8\). Let us also assume the neural network predicts with confidence \(g(x_{1})[z_{1}]=0.96\). \(D2K({\varvec{x}})\) gives as output the following tuple:

The FastLAS symbolic learner is also used in this task. No background knowledge is required, and the language bias is given in Appendix F. For each \(\langle {\varvec{x}}, y\rangle \), FastLAS takes as input \(D2K({\varvec{x}})\) and generates a WCDPI example. For instance, the WCDPI generated for the D2K output in Example 4 is:

where \(e_{\textrm{id}}\) is a unique identifier and \(e_{\textrm{ctx}}\) is given by the set \(\{{\mathtt {scene(bookstore).}}\}\).

5 Evaluation methodology

In this section we describe the methodology used to evaluate the FFNSL framework. In the first two tasks, the focus is on learning complex first-order knowledge involving negation as failure and predicate invention, which are essential aspects of common-sense learning and reasoning. In the second two tasks, we demonstrate FFNSLs applicability to real-world problems and datasets. For each of the four classification tasks, we divide the evaluation into two types. Firstly, we evaluate the symbolic learning capability of FFNSL, where the goal is to learn interpretable knowledge from symbolic features extracted from pre-trained neural network predictions. Secondly, we evaluate the inference capability of FFNSL, where the pre-trained neural networks together with the learned knowledge are used to make a downstream classification of unseen unstructured data. We refer to the first type of evaluation as the learned hypothesis evaluation and the second type as the FFNSL framework evaluation, since this targets both neural and symbolic components. Let us now describe each evaluation type in more detail.

5.1 Learned hypothesis evaluation

We evaluate the learned hypothesis in terms of accuracy, interpretability and learning time. To measure accuracy, we use a symbolic test set containing ground truth symbolic features. This ensures that the evaluation only targets the accuracy of the learned hypothesis. For each example in the test set, the symbolic features are used with the learned hypothesis to make a prediction of the downstream label. This prediction is compared to the ground truth label in the test set and accuracy is computed using the standard measure. Since FFNSL learns knowledge from a pre-trained neural network, we consider the hypotheses that have been learned at each (increasing) percentage of distributional shift and evaluate the accuracy of the knowledge that FFNSL learns in the presence of incorrect neural network predictions. Note that the symbolic test set remains unchanged and is not affected by the distributional shifts, as we want to evaluate in this case just the accuracy of the learned hypotheses.

To perform a deeper analysis of the accuracy of the learned hypotheses, we take into consideration the following measures. Firstly, the accuracy and confidence score distribution of the pre-trained neural network(s) in classifying unstructured data in the training set D. Since the neural networks were pre-trained on a dataset different from D, this measure enables us to understand the reliability of the pre-trained neural network predictions over new unseen input data (For more dataset details, see Appendix C.). Secondly, we measure the percentage of WCDPI examples generated by the LAS system, that contains features in the context which are incorrect with respect to the label in the inclusion set. This enables us to understand the relationship between incorrect neural network predictions and the accuracy of the learned hypotheses, as well as analyse how many correct WCDPI examples are needed to learn hypotheses with a certain level of accuracy. Thirdly, we calculate the weight penalty ratio r over the generated WCDPI examples, defined as

where \(E^{\prime }_{\textrm{correct}}\) is the set of correctly generated WCDPI examples, (i.e., WCDPI examples with features in the context that are consistent with the label in the inclusion set) and \(E^{\prime }\) is the complete set of generated WCDPI examples. This enables us to measure the bias given to the LAS system by the weights of the WCDPI examples, which are based on the neural network confidence scores. Ideally, FFNSL should allocate a higher proportion of the total weight penalty to WCDPI examples that contain correct neural network predictions. We compare the accuracy of the knowledge learned from these WCDPI examples with that of knowledge learned from corresponding WCDPI examples where we fix the penalty to be constant for all examples, as a baseline. To measure interpretability, we count the total number of atoms in a learned hypothesis: a hypothesis with a lower number of atoms is considered to be more interpretable (Lakkaraju et al., 2016). Finally, we measure the wall-clock time taken to learn a hypothesis at each percentage of distributional shift.

5.2 FFNSL framework evaluation

When a hypothesis has been learned, the entire FFNSL framework can be evaluated using a test set containing unseen labelled unstructured data. In this case, the neural network component of FFNSL classifies each element of a sequence of unstructured data. The symbolic features predicted from the neural network classification are added to the background knowledge alongside the learned hypothesis. The symbolic component of FFNSL is used to compute the downstream prediction. This is compared to the ground-truth label associated with the sequence of unstructured data, and the accuracy is computed with the standard measure. To assist the evaluation, and to provide insight into where mistakes are being made, we evaluate the neural network accuracy in predicting the symbolic features from the unstructured data in the test set with respect to ground truth information. This enables us to identify whether any downstream classification error is due to neural network feature prediction, the learned hypothesis, or both. We also evaluate FFNSL under distributional shifts. We inject into the test data the same percentages of distributional shifts used during the learning of hypotheses, and evaluate the accuracy of FFNSL. This evaluates the performance of FFNSL in realistic scenarios where distributional shifts occur during learning and inference.

5.3 Experimental setting

In the next four sections, we present the results of the Follow Suit Winner, Sudoku Grid Validity, Crop Yield Prediction, and Indoor Scene Classification tasks, using the evaluation methodology outlined in this section. In the first three tasks we pre-train a Softmax CNN and an EDL-GEN neural network and when used in combination with a symbolic learning system in FFNSL, we refer to these as FFNSL Softmax and FFNSL EDL-GEN respectively. For the Indoor Scene Classification task we adopt a pre-trained network, called Semantic Aware Scene Recognition (SASR), tailored to the task of scene classification. We use a Random Forest (RF) and neural network as baseline rule learning approaches in all tasks, and they both use the same pre-trained Softmax neural network for feature extraction as used in FFNSL Softmax, and are trained to learn the knowledge needed to predict the downstream label, given Softmax neural network predictions. In the Indoor Scene Classification task the SASR network is used. The RF is chosen as a powerful decision tree approach, known for being a lightweight model that is quick to train and exhibits a certain level of interpretability. In the Follow Suit Winner and Crop Yield Prediction tasks, the neural network is a Fully Connected Network (FCN), chosen to evaluate a deeper architecture, and in the Sudoku Grid Validity task, the neural network is a Convolutional Neural Network-Long Short Term Memory (CNN-LSTM) designed for sequence classification problems where the CNN component can learn spatial dependencies in the Sudoku grid. Full details of the baseline architectures are given in Appendix D. To measure interpretability of the RF baseline, we used the first tree in the forest and extract a rule from each branch (from root to leaf) of this tree. For the neural network baselines, we fitted a surrogate decision tree model (Molnar, 2019) to approximate black-box predictions, and applied the same rule extraction methods as that used for the RF. Let us now present our results.

6 Follow suit winner

In this section we present the results of the Follow Suit Winner task. We start with Softmax and EDL-GEN neural networks pre-trained on standard playing card images and apply minor and major distributional shifts by substituting standard playing card images with images from alternative decks. Example images are shown in Fig. 3 for the queen of hearts card taken from the Standard Fig. 3a, Batman Joker Fig. 3b, Captain America Fig. 3c, Adversarial Standard Fig. 3d, Adversarial Batman Joker Fig. 3e and Adversarial Captain America Fig. 3f decks.

The Batman Joker and Captain America decks represent minor distributional shifts; the adversarial decks represent instead major distributional shifts where card images from each of the Standard, Batman Joker and Captain America decks are placed against a background containing additional card images from the Standard deck. These adversarial decks are designed to trick the neural networks into predicting incorrectly as images from the Standard deck are from the same distribution as the card images used during neural network pre-training. In order to understand the challenge faced by the LAS system when learning from neural network feature predictions in the presence of distributional shifts, Fig. 4 presents the accuracy and confidence score distribution of pre-trained neural networks when evaluated on different playing card decks than the one used for pre-training.

In Fig. 4, each row shows the type of neural network, the playing card deck used for evaluation, the predictive accuracy, and the confidence score distribution. As one would expect, the accuracy was very high when classifying playing card images from the standard deck, as this was the deck used for pre-training. For the Softmax neural network the confidence score was also very high in this case, whereas EDL-GEN had more distributed confidence scores. When evaluating the pre-trained networks on decks different from the one used in training, the Softmax neural network still reported high confidence despite its overall low accuracy, whereas the EDL-GEN network reported comparable low accuracy but with much lower confidence. For example, evaluating the networks over the Captain America deck (see 3rd and 9th rows), 96% of Softmax predictions were made with confidence in the interval [0.95, 1], despite an accuracy of 0.0697, whereas only 10% of EDL-GEN predictions were made within this same confidence interval. As for the overall accuracy, EDL-GEN performed slightly better than Softmax over decks representing minor distributional shifts, whereas both networks performed in a similar way when applied to decks representing major distributional shifts. This highlights the challenge for our FFNSL framework in learning knowledge when presented with out-of-distribution data, as neural network predictions are likely to be incorrect, and may potentially be made with high confidence.

6.1 Learned hypothesis evaluation

Figure 5 presents the accuracy of the learned hypotheses when an increasing percentage of labelled unstructured data were subject to distributional shifts, applied with cards from the alternative decks. The reported accuracy is the mean accuracy over 5 repeats and the error bars indicate standard error.

FFNSL outperformed the baselines and learned far superior hypotheses when up to 90% of labelled unstructured data were subject to distributional shifts. This was the case for both instances of FFNSL. The baselines required \(100\times \) the number of examples in order to perform close to FFNSL, and despite the significant increase in the amount of data used by the baselines, FFNSL still learned more accurate hypotheses. Figures 5a and b refer to the injection of minor distributional shifts. In these two cases, when the percentage of distributional shift was very high (above \(90\%\)), the accuracy of the FFNSL learned hypotheses decreased, but still remained between \(\sim 70{-}100\%\), whereas the accuracy of the baselines trained with the same amount of data reduced to \(\sim 40\%\). Figure 5c–e refer to the injection of major distribution shifts. FFNSL Softmax had similar performance in Fig. 5c and d but a much lower accuracy than that shown with minor distributional shifts when \(90\%\) or more of the unstructured data were subject to distributional shifts. The FFNSL EDL-GEN maintained instead a higher accuracy in these cases. We now perform a more in-depth analysis to explore the reasons for dropping accuracy in the presence of high percentages of distributional shifts. Given the two groups of similar behaviours we consider only two representative cases: Batman Joker, as minor distributional shift, and Adversarial Batman Joker as major distributional shift. A full set of analysis results, with respect to all the other card decks, is given in Appendix A.

In particular, we explore whether FFNSL EDL-GEN provides a performance benefit over FFNSL Softmax, and if so, what are the contributing factors. Specifically we analyse the accuracy performance in relation to either or both (i) better neural network predictive accuracy, when classifying out-of-distribution data, and (ii) more informative weight penalties of the generated WCDPI examples, calculated from the neural network confidence scores. For this analysis we focus on high percentages of distributional shifts, 95–100%, as this was when FFNSL instances deteriorated in their learned hypothesis accuracy. We run 50 experimental repeats to generate statistically significant results. In order to isolate the effect of the example weight penalties, we also run two additional baseline FFNSL instances where the weight penalties of the generated WCDPI examples are all constant and equal to 10. The results are shown in Fig. 6. We have also included the performances with respect to distributional shifts given by the Adversarial Captain America deck (Fig. 6c), since Fig. 5e shows that in this case the accuracy of both FFNSL instances decreased to around \(40\%\) when nearly \(100\%\) of the data were subject to distributional shifts. Full analysis of this deck is presented in Appendix A.

For both Batman Joker and Adversarial Batman Joker, FFNSL EDL-GEN outperformed FFNSL Softmax. Note, however, the difference in y-axis scale between Fig. 6a and b and the difference in FFNSL performance. This was due to the fact that both Softmax and EDL-GEN neural networks predicted more accurately on the Batman Joker deck than the Adversarial Batman Joker deck, as presented in Fig. 4. The improved performances of FFNSL EDL-GEN versus FFNSL Softmax did not seem to depend on the more informative weights of WCDPI examples with weights calculated from neural network confidence scores, versus constant weights, since the accuracy of FFNSL instances (denoted “...with NN penalties") was similar to that of the respective baselines with constant weight penalties. However, Fig. 6c shows that when the distributional shift was more severe,Footnote 11 the decrease in accuracy of FFNSL EDL-GEN was less drastic than that of its corresponding baseline with constant penalty, whereas there was no difference in the case of FFNSL Softmax. Even though the overall accuracy of the framework was lower than that reported for less drastic forms of distributional shifts, the more informative weight penalties of WCDPI examples, calculated from the EDL-GEN neural network confidence scores, provided a clear benefit compared to using constant weights, in particular when the percentage of distributional shift was very high.

It still remains open the question as to why FFNSL EDL-GEN performed better than FFNSL Softmax in Fig. 6a and b. For the percentages of distributional shifts between 95–100%, the pre-trained neural networks both reported low average accuracy. So a natural question to ask is whether EDL-GEN led to more consistent symbolic feature predictions than Softmax. This is important to investigate because LAS systems are capable of learning accurate hypotheses from few “good" examples. So, we investigated the percentage of incorrect WCDPI examples generated when 95–100% of the unstructured data were subject to distributional shifts. These were examples whose contextual symbolic features were inconsistent with the ground-truth label due to incorrect neural network predictions.

Figure 7 shows, first of all, that the percentage of generated incorrect WCDPI examples was lower than the corresponding percentage of data subject to distributional shifts. This indicated that some correct WCDPI examples could be generated even when the neural networks made incorrect predictions. Incorrect predictions made over the 4 cards played could in combination lead to predicted symbolic features for the trick whose winning player would match the ground truth label. Secondly, more correct WCDPI examples were generated when the distributional shift was given by the Batman Joker deck, compared to that of the Adversarial Batman Joker deck. This was because, as indicated in Fig. 4, the neural network accuracy for the former was better than that for the latter. Furthermore, EDL-GEN led to a lower number of incorrect WCDPI examples compared to that of Softmax in both forms of distributional shifts, and this difference was bigger in the case of the Adversarial Batman Joker deck. Given the relatively small number of WCDPI examples used by the LAS system (104), this difference contributed to the larger gap in accuracy between FFNSL Softmax and FFNSL EDL-GEN in Fig. 6b than Fig. 6a.

Now, how did the weight penalty, generated from the neural network confidence score, effect the accuracy of learned hypotheses? Clearly, EDL-GEN provided improved confidence scores than the Softmax neural network which in-turn, improved the accuracy of FFNSL. This explains why the accuracy of each FFNSL approach was higher in Fig. 6a than that shown in Fig. 6b. However, Fig. 6 shows that for FFNSL Softmax, using WCDPI example weight penalties calculated from Softmax neural network confidence scores appears to have no benefit compared to using WCDPI examples with constant weight penalties. However, this was different in the case of FFNSL EDL-GEN. To investigate this further, we calculated the weight penalty ratio for the WCDPI examples generated from both Softmax and EDL-GEN neural network confidence scores. The analysis is shown in Fig. 8 for each deck and 95–100% distributional shifts.

Figure 8 shows that the weight penalty ratio calculated from EDL-GEN confidence scores provided a clear benefit than that calculated from the Softmax neural network confidence scores, which was instead very similar to the weight penalty ratio given by constant penalties. At 100% distributional shifts, the benefits of calculating WCDPI example weight penalties with the neural network confidence scores reduced, as there were very few correct examples. This explains why the gap between the accuracy of the FFNSL EDL-GEN with neural network penalties and that of FFNSL EDL-GEN with constant penalties, in both decks, reduced as distributional shifts increase towards 100% (see Fig. 6). In summary, improved accuracy of the neural network predictions led to a higher percentage (even if small) of correct WCDPI examples and improved neural network confidence scores led to an improved penalty ratio of correct WCDPI examples. Together they provided an improved bias for the LAS system which even if it was reduced to learn from a small percentage of correct examples, these had improved penalty weight to guide the search for optimal solutions.

Let us now investigate the interpretability of the hypotheses learned using our FFNSL framework compared to that of the baseline approaches. Figure 9 shows the results, where interpretability was measured in terms of the number of atoms that formed the learned hypothesis.

FFNSL learned significantly more interpretable knowledge than the baseline approaches (note the logarithmic scale on the y-axis). In the case of the minor form of distributional shift (see Fig. 9a), the interpretability of the baseline models trained with \(100\times \) the amount of data decreased as distributional shift increased. These models reached high accuracy by training over a much larger dataset (see Fig. 5a), but they did so at the cost of much lower interpretability. This was because they learned a more complex mapping between input and output, instead of learning general rules, as was the case for our FFNSL approach. The FCN trained with the same amount of data as FFNSL had similar interpretability to that of FFNSL, because the model learned to largely predict the same class and the surrogate decision tree was very small. This was reflected in the poor performance of the FCN shown in Fig. 5d for the Adversarial Batman Joker deck. Examples of interpretable knowledge learned by our FFNSL approaches are presented in Appendix A.

Finally, to investigate the scalability of FFNSL, we have also computed the time required to learn an interpretable hypothesis. The results are shown in Fig. 10.

Both FFNSL approaches learned with an order of magnitude of time similar to that of the FCN trained with the same number of examples when no distributional shifts were applied to the data. As distributional shifts increased, FFNSL took longer because the ILASP system required more iterations to prove optimality with respect to minimising the total penalty on the examples. However, the learning time of FFNSL EDL-GEN did not increase as quickly, when compared to FFNSL Softmax. This was because the WCDPI example weight penalties were much more informative (see Fig. 8) and the ILASP learning system required fewer iterations overall to prove optimality.

In conclusion, our analysis shows that FFNSL outperformed the baseline approaches in terms of accuracy and interpretability, even when the baselines were trained with \(100\times \) the amount of data. FFNSL EDL-GEN outperformed FFNSL Softmax, in the accuracy of the learned hypotheses, as EDL-GEN neural network predictions were more accurate, and this influenced the downstream performance of the FFNSL framework more than the neural network confidence scores. When major distributional shifts were applied, the EDL-GEN uncertainty-aware neural network led to significantly more informative WCDPI example weight penalties compared to the Softmax neural network, although this benefit diminished as the percentage of input data subject to distributional shifts approached 100%. Finally, we have shown that more informative WCDPI example weight penalties resulted in faster hypothesis learning times, when the iterative ILASP system was used.

6.2 FFNSL framework evaluation

Figure 11 presents the accuracy of the entire FFNSL framework when evaluated over a test data subject to the same types of distributional shifts used during the learning of interpretable knowledge. The mean accuracy is reported and the error bars denote standard error over 5 repeats.

FFNSL outperformed the baselines trained with the same amount of data at each percentage of distributional shift on the Batman Joker deck, and until \(\sim \)80% distributional shift on the Adversarial Batman Joker deck. The baselines required \(100\times \) the amount of data in order to match or outperform FFNSL. On the Adversarial Batman Joker deck, the performance was lower for all approaches when the percentage of distributional shift was high, due to the neural networks predicting with lower accuracy (see Fig. 4). The baselines trained with the same amount of data outperformed FFNSL for \(>80\%\) distributional shifts. This was because they largely predicted player 1 and was sufficient to reach approximately \(40\%\) of accuracy on the test set: the Follow Suit Winner task is biased towards player 1 because winning depends on playing the highest ranked card with the same suit as player 1. In the test set, 38.6% of the data was indeed labelled with player 1 as the winner, which roughly corresponds to the performance of the baselines trained with the same number of examples at 100% shifts in Fig. 11b. FFNSL EDL-GEN outperformed FFNSL Softmax because of two reasons. Firstly, the rules learned by FFNSL EDL-GEN, in the presence of high percentage of distributional shifts, were more accurate (see Figs. 5a and d) because of the lower number of incorrect WCDPI examples when the EDL-GEN neural network was used (Fig. 7). In addition, the EDL-GEN neural network provided more informative bias to the LAS system through better WCDPI example weight penalties (Fig. 8). Finally, the decrease in performance of the FFNSL approaches over unseen data subject to distributional shift seemed to be linear in the percentage of applied distributional shift. This was primarily due to the accuracy of the neural network feature predictions. Figure 12 shows that indeed the accuracy of neural network predictions over unseen card images decreased linearly with the increase of the percentage of distributional shifts.

As shown in Fig. 12, the EDL-GEN neural network was more accurate than Softmax in predicting unseen playing cards in the case of minor distributional shift given by the Batman Joker deck. However, the accuracy of the neural networks was the same in the case of major distributional shift given by the Adversarial Batman Joker deck. This was why in Fig. 11, FFNSL EDL-GEN’s showed better performance on the Batman Joker deck. For the Adversarial Batman Joker deck, FFNSL EDL-GEN’s better performance than FFNSL Softmax was primarily due to more accurate hypotheses.

7 Sudoku grid validity

Having presented in detail the performance of our FFNSL approaches on the Follow Suit Winner task, we now explore whether the approach can generalise to other tasks. We have applied our approach to a different classification task, the Sudoku Grid Validity task and we present the results in this section. We consider two cases: a \(4\times 4\) Sudoku grid size, for which the sequence \({\varvec{x}}\) of unstructured data is much longer than that used for the Follow Suit Winner task. Therefore, each generated WCDPI example contains more contextual features that are likely to be predicted incorrectly, as a result of distributional shifts applied to input images. We then evaluate the scalability of the FFNSL framework even further by considering \(9\times 9\) Sudoku grid sizes. For the Sudoku grid validiy tasks, the FFNSL instance makes use of the FastLAS system, which has been shown to scale to handle large hypothesis spaces (Law et al., 2020).

We first pre-train both Softmax and EDL-GEN neural networks on standard images from the MNIST training set. In all experiments, we used MNIST digits 1–4 and 1–9 for the respective Sudoku grid size tasks. Figure 13 shows the accuracy and confidence score distribution of the pre-trained neural networks for the \(4\times 4\) and \(9\times 9\) grid tasks, on two test sets: a standard MNIST test set, and a test set where the MNIST digits have been rotated 90\(^{\circ }\) clockwise, representing a distributional shift. The test sets also contain MNIST digits 1-4 or 1-9, depending on the Sudoku grid size.

The results are similar to the Follow Suit Winner task. The Softmax neural network predicted with high confidence also over data subject to distributional shift, despite its low test set accuracy. The EDL-GEN neural network predicted more accurately than Softmax on data subject to distributional shift, but Softmax was slightly more accurate on the standard test sets.

7.1 Learned hypothesis evaluation

Figure 14 presents the accuracy of the hypotheses learned from unstructured data with increasing percentages of distributional shift, given by rotating MNIST digit images. We plot the mean accuracy over 5 repeats and the error bars denote standard error. In both Sudoku Grid Validity tasks the FFNSL approaches have as input a background knowledge that encodes the concept of a Sudoku grid (see Appendix F for details). For the \(4\times 4\) task, we created an additional, more challenging task with a reduced background knowledge where facts about column, row and block were not given but implicitly inferred from a more general notion of division and cell coordinates (given as meta-data). For the \(9\times 9\) task, we also created an additional training task for the RF, (which was the best performing baseline), where pre-trained neural network predictions were post-processed into 3 Boolean features: whether digits were in the same row, column or block, which was given as input to the RF. This type of input effectively encoded the Sudoku grid knowledge into the RF learning task, and constituted even more information than what was provided to our FFNSL approaches. We demonstrate that FFNSL performed similarly to this baseline with additional background information. Full FastLAS task listings are given in Appendix F.