Abstract

Recently, the Tensor Nuclear Norm (TNN) regularization based on t-SVD has been widely used in various low tubal-rank tensor recovery tasks. However, these models usually require the data to change smoothly along the third dimension to ensure their low rank structures. In this paper, we propose a new definition of data dependent tensor rank named tensor Q-rank by a learnable orthogonal matrix \(\mathbf {Q}\), and further introduce a unified data dependent low rank tensor recovery model. According to the low rank hypothesis, we introduce two explainable selection methods of \(\mathbf {Q}\), under which the data tensor may have a more significant low tensor Q-rank structure than low tubal-rank structure. Specifically, maximizing the variance of the singular value distribution leads to the Variance Maximization Tensor Q-Nuclear norm (VMTQN), while minimizing the value of the nuclear norm through manifold optimization leads to the Manifold Optimization Tensor Q-Nuclear norm (MOTQN). Moreover, we apply these two models to the low rank tensor completion problem, give an effective algorithm, and briefly analyze why our method works better than TNN based methods on complex data with low sampling rates. Finally, experimental results on real-world datasets demonstrate the superiority of our proposed models in the tensor completion problem over other tensor rank regularization models.


1 Introduction

With the development of data science, multi-dimensional data structures are becoming more and more complex. The low-rank tensor recovery problem, which aims to recover a low-rank tensor from an observed tensor, has also been extensively studied and applied. The problem can be formulated as the following model:

where \(\mathcal {Y}\) is the measurement observed through a linear operator \(\varPsi (\cdot )\) and \(\mathcal {X}\) is the clean data. Eq. (1) is generally difficult to solve directly, and different definitions of rank lead to different models. The commonly used definitions of tensor rank are all related to particular tensor decompositions (Kolda and Bader 2009). For example, CP-rank (Hitchcock 1927) is based on the CANDECOMP/PARAFAC decomposition (Kiers 2000); multilinear rank (Hitchcock 1928) is based on the Tucker decomposition (Tucker 1966); tensor multi-rank and tubal-rank (Kilmer et al. 2013) are based on t-SVD (Kilmer and Martin 2011); and a new tensor rank with an invertible linear operator (Lu et al. 2019) is based on T-SVD (Kernfeld et al. 2015). Among them, CP-rank and multilinear rank are older and more widely studied, while the other two are relatively new. Minimizing the rank function in Eq. (1) directly is usually NP-hard and cannot be solved efficiently in general, so \(\text {rank}({\mathcal {X}})\) is often replaced by a convex or non-convex surrogate function. As in the matrix case (Candès and Recht 2009; Candès and Tao 2010), various tensor nuclear norms based on different definitions of tensor singular values have been proposed as rank surrogates (Liu et al. 2013; Friedland and Lim 2018; Kilmer and Martin 2011; Lu et al. 2019).

1.1 Existing mainstream methods and their limitations

Friedland and Lim (2018) introduce cTNN (Tensor Nuclear Norm based on CP) as the convex relaxation of the tensor CP-rank:

where \(\Vert \mathbf {u}_i\Vert = \Vert \mathbf {v}_i\Vert = \Vert \mathbf {w}_i\Vert = 1\) and \(\circ \) represents the vector outer product. However, for a given tensor \(\mathcal {T}\in \mathbb {R}^{n_1\times n_2\times n_3}\), minimizing the surrogate objective \(\Vert \mathcal {T}\Vert _{cTNN}\) directly is difficult, because computing the CP-rank is usually NP-complete (Håstad 1990; Hillar and Lim 2013) and computing cTNN is NP-hard in some sense (Friedland and Lim 2018). This also means that we cannot verify whether the decomposition implicit in cTNN is consistent with the ground-truth CP decomposition. Meanwhile, it is hard to measure how tight cTNN is relative to the CP-rank. Although Yuan and Zhang (2016) give the sub-gradient of cTNN by leveraging its dual property, the high computational cost makes it difficult to implement.

To reduce the cost of computing the rank surrogate function, Liu et al. (2013) define a tensor nuclear norm named SNN (Sum of Nuclear Norms) based on the Tucker decomposition (Tucker 1966):

where \(\mathcal {T} \in \mathbb {R}^{n_1\times \cdots \times n_{{d}}}\), \(\mathbf {T}_{(i)}\in \mathbb {R}^{(n_1\ldots n_{i-1}n_{i+1}\ldots n_{{d}})\times n_i}\) denotes the unfolding of the tensor along the ith dimension, and \(\Vert \cdot \Vert _*\) is the matrix nuclear norm, i.e., the sum of singular values. Its convenient computation makes SNN widely used (Fu et al. 2016; Liu et al. 2015, 2013; Kasai and Mishra 2016; Li et al. 2016). It is worth mentioning that, although SNN has a form similar to the matrix case, Romera-Paredes and Pontil (2013) point out that SNN is not the tightest convex relaxation of the multilinear rank (Hitchcock 1928), but rather an overlapped regularization of it. Tomioka et al. (2010), Tomioka and Suzuki (2013), and Wimalawarne et al. (2014) also propose a regularizer named the Latent Trace Norm to better approximate the tensor rank function. In addition, because SNN unfolds the tensor directly along each dimension, SNN based models do not make full use of the information in the tensor.

To avoid information loss in SNN, Kilmer and Martin (2011) propose a tensor decomposition named t-SVD with a Fourier transform matrix \(\mathbf {F}\), and Zhang et al. (2014) give a definition of the tensor nuclear norm on \(\mathcal {T}\in \mathbb {R}^{n_1\times n_2 \times n_3}\) corresponding to t-SVD, i.e., Tensor Nuclear Norm (TNN):

where \(\mathbf {G}^{(i)}\) denotes the ith frontal slice matrix of tensor \(\mathcal {G}\), and \(\times _3\) is the mode-3 multilinear multiplication (Tucker 1966). Benefiting from the efficient Discrete Fourier transform and the favorable sampling effect of the Fourier basis on time series features, TNN has attracted extensive attention in recent years (Zhang et al. 2014; Lu et al. 2016, 2018; Yin et al. 2018; Hu et al. 2016). Because the Fourier transform is applied along the third dimension, TNN based models have a natural computational advantage for videos and other data with strong temporal continuity along a certain dimension.

However, when the smoothness of different data is taken into account, using a fixed Fourier transform matrix \(\mathbf {F}\) may bring some limitations. In this paper, we use smooth and non-smooth data along a certain dimension in the usual intuitive sense: data are smooth along a dimension if their slices along that dimension are arranged in a meaningful order, e.g., a time series. For example, a continuous video is smooth, but a concatenation of several videos of different scenes, or a video whose frames are randomly permuted, is non-smooth.

First, TNN needs to perform Singular Value Decomposition (SVD) in the complex field \(\mathbb {C}\), which is slightly slower than in the real field \(\mathbb {R}\). Second, the experiments in related papers (Zhang et al. 2014; Lu et al. 2018; Zhou et al. 2018; Kong et al. 2018) are usually conducted on special datasets that change smoothly along the third dimension, such as RGB images and short videos. Non-smooth data may increase the number of non-zero tensor singular values (Kilmer and Martin 2011; Zhang et al. 2014), weakening the low rank structure. Since the tensor multi-rank (Zhang et al. 2014) consists of the ranks of the projected slices on the different Fourier basis vectors, non-smooth changes along the third dimension may produce large singular values on the slices that correspond to high frequencies.
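The effect of a non-smooth ordering on the Fourier slices can be illustrated with a small synthetic experiment. The sketch below is an illustration under stated assumptions, not an experiment from this paper: the two rank-1 "scenes" `A` and `B`, the brightness drift, and the helper names `slice_energy_fft` and `nonzero_freq_fraction` are all hypothetical. It applies the DFT along the third dimension and measures how much of the energy (the sum of squared singular values of the projected slices) lies outside the zero-frequency slice.

```python
import numpy as np


def slice_energy_fft(X):
    """Squared Frobenius norm of each frontal slice after a DFT along mode 3.

    The squared Frobenius norm of a slice equals the sum of its squared
    singular values, so this is the spectral "energy" per Fourier slice.
    """
    Xf = np.fft.fft(X, axis=2)
    return np.sum(np.abs(Xf) ** 2, axis=(0, 1))


def nonzero_freq_fraction(X):
    """Fraction of the total energy outside the zero-frequency slice."""
    e = slice_energy_fft(X)
    return 1.0 - e[0] / e.sum()


rng = np.random.default_rng(0)
n1, n2, n3 = 20, 20, 32
A = np.outer(rng.standard_normal(n1), rng.standard_normal(n2))  # hypothetical scene 1
B = np.outer(rng.standard_normal(n1), rng.standard_normal(n2))  # hypothetical scene 2

# Smooth data: every frame shows scene A with a slowly drifting brightness.
t = np.linspace(0.0, 1.0, n3)
smooth = A[:, :, None] * (1.0 + 0.1 * t)[None, None, :]

# Non-smooth data: frames of scenes A and B in a random order.
labels = rng.permutation(np.arange(n3) % 2)
nonsmooth = np.where(labels[None, None, :] == 0, A[:, :, None], B[:, :, None])

print(f"smooth:     {nonzero_freq_fraction(smooth):.4f}")
print(f"non-smooth: {nonzero_freq_fraction(nonsmooth):.4f}")
```

For the smooth tensor almost all of the energy stays in the zero-frequency slice, whereas the shuffled tensor spreads a large share of it over the other Fourier slices, which raises their singular values.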

1.2 Related work

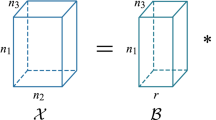

To address this issue, several works (Kernfeld et al. 2015; Xu et al. 2019; Song et al. 2019; Lu et al. 2019; Jiang et al. 2020) improve the projection matrix of TNN, i.e., the Discrete Fourier transform matrix \(\mathbf {F}\) in Eq. (4). These works replace \(\mathbf {F}\) by another measurement matrix \(\mathbf {M}\) and thereby obtain new definitions of tensor rank \({\text {rank}}_M(\mathcal {X})\) and tensor nuclear norm \(\Vert \mathcal {X}\Vert _{M,*}\) as regularizers. Figure 1 shows the related operations. Their recovery models can be summarized as follows:

Please see Sect. 2 for the relevant definitions in Eq. (5). In the following, we discuss the motivations and limitations of these works (Kernfeld et al. 2015; Xu et al. 2019; Song et al. 2019; Lu et al. 2019; Jiang et al. 2020) in turn.

Fig. 1 Replace \(\mathbf {F}\) in Eq. (4) by a matrix \(\mathbf {M}\) and obtain new definitions of tensor rank \({\text {rank}}_M(\mathcal {X})\) and tensor nuclear norm \(\Vert \mathcal {X}\Vert _{M,*}\) by using \(\mathbf {S}^{(i)}\)

Kernfeld et al. (2015) generalize the t-product by introducing a new operator named cosine transform product with an arbitrary invertible linear transform \(\mathcal {L}\) (or an arbitrary invertible matrix \(\mathbf {M}\)). For a given \(\mathcal {T}\in \mathbb {R}^{n_1\times n_2\times n_3}\) and an invertible matrix \(\mathbf {M}\in \mathbb {R}^{n_3\times n_3}\), they have \(\mathcal {L}_{\mathbf {M}}(\mathcal {T}) = \mathcal {T}\times _3 \mathbf {M}\) and \(\mathcal {L}_{\mathbf {M}}^{-1}(\mathcal {T}) = \mathcal {T}\times _3 \mathbf {M}^{-1}\). Note that, for convenience, this paper departs from the commonly used definition of the tensor mode-i product in Kolda and Bader (2009), Liu et al. (2013), Kernfeld et al. (2015), and Lu et al. (2019): we define \(\mathcal {L}_{\mathbf {Q}}(\mathcal {T}) =\mathcal {T}\times _3 \mathbf {Q} = {\text {fold}}_{3}(\mathbf {T}_{(3)}\mathbf {Q})\), where \(\mathbf {T}_{(3)} := {\text {unfold}}_{3}(\mathcal {T}) \in \mathbb {R}^{n_1 n_2\times n_3}\). That is, the tensor fibers \(\mathcal {T}_{ij:}\) are arranged as rows.
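The row-wise unfolding convention can be made concrete with NumPy: a C-order reshape of an \(n_1\times n_2\times n_3\) array into \(n_1n_2\times n_3\) places the fiber \(\mathcal {T}_{ij:}\) in row \(i n_2 + j\) (0-based indices). The sketch below is a minimal illustration assuming this reshape-based implementation; the function names `unfold3`, `fold3` and `mode3_product` are hypothetical, not taken from the paper's code.

```python
import numpy as np


def unfold3(T):
    """Mode-3 unfolding with the fibers T[i, j, :] as rows: shape (n1*n2, n3)."""
    n1, n2, n3 = T.shape
    return T.reshape(n1 * n2, n3)


def fold3(M, n1, n2):
    """Inverse of unfold3: reshape an (n1*n2, k) matrix into an n1 x n2 x k tensor."""
    return M.reshape(n1, n2, M.shape[1])


def mode3_product(T, M):
    """L_M(T) = T x_3 M = fold3(unfold3(T) @ M), with M acting from the right."""
    n1, n2, _ = T.shape
    return fold3(unfold3(T) @ M, n1, n2)


rng = np.random.default_rng(1)
T = rng.standard_normal((3, 4, 5))
M = rng.standard_normal((5, 5))                 # a generic (almost surely invertible) matrix

G = mode3_product(T, M)                         # L_M(T)
T_back = mode3_product(G, np.linalg.inv(M))     # L_M^{-1}(G) = G x_3 M^{-1}
print(np.allclose(T_back, T))                   # the inverse transform recovers T
```

The same result can be written as `np.einsum("ijl,lk->ijk", T, M)`; the reshape form is kept here because it mirrors \({\text {fold}}_{3}(\mathbf {T}_{(3)}\mathbf {M})\) directly.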

Following this idea, Lu et al. (2019) propose a new tensor nuclear norm induced by invertible linear transforms (Kernfeld et al. 2015). Unlike Kilmer and Martin (2011) and Zhang et al. (2014), they use a fixed invertible matrix to replace the Fourier transform matrix in TNN. Although this method improves the performance of the recovery model to a certain extent, new problems arise, such as how to determine the fixed invertible matrix. Different data generally need different optimal invertible matrices, but Lu et al. (2019) do not give a reasonable selection method. Furthermore, the Frobenius norm of the invertible matrix is not fixed, which may lead to computational problems, e.g., values approaching zero or infinity.
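The scaling issue can be seen directly: with the convention above, replacing \(\mathbf {M}\) by \(c\mathbf {M}\) multiplies every transformed slice by \(c\), so a nuclear-norm regularizer built on \(\mathbf {M}\) scales by \(|c|\), whereas an orthogonal matrix leaves \(\Vert \mathcal {X}\Vert _F\) unchanged. A small sketch follows; the helper `transformed_nuclear_norm` is hypothetical and simply sums the matrix nuclear norms of the transformed frontal slices.

```python
import numpy as np


def transformed_nuclear_norm(X, M):
    """Sum of nuclear norms of the frontal slices of X x_3 M (row-wise unfolding)."""
    n1, n2, n3 = X.shape
    G = (X.reshape(n1 * n2, n3) @ M).reshape(n1, n2, M.shape[1])
    return sum(np.linalg.norm(G[:, :, k], ord="nuc") for k in range(G.shape[2]))


rng = np.random.default_rng(2)
X = rng.standard_normal((4, 5, 6))
M = rng.standard_normal((6, 6))

# An arbitrary invertible M: the regularizer value depends on the scale of M.
for c in (1e-3, 1.0, 1e3):
    print(c, transformed_nuclear_norm(X, c * M))

# An orthogonal Q (from a QR factorization) keeps the Frobenius norm unchanged.
Q, _ = np.linalg.qr(rng.standard_normal((6, 6)))
G = (X.reshape(-1, 6) @ Q).reshape(X.shape)
print(np.isclose(np.linalg.norm(G), np.linalg.norm(X)))
```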

Additionally, Kernfeld et al. (2015) propose that, with the help of Toeplitz-plus-Hankel matrices (Ng et al. 1999), the Discrete cosine transform matrix \(\mathbf {C}\) can also be used to replace \(\mathbf {F}\). Xu et al. (2019) then propose fast algorithms for diagonalization and the corresponding recovery model. However, \(\mathbf {C}\) is still based on trigonometric functions and may lead to problems similar to those of TNN based models, as mentioned in the last paragraph of Sect. 1.1.

Considering the efficiency of time-space transformation, Song et al. (2019) use the Daubechies 4 discrete wavelet transform matrix to replace \(\mathbf {F}\). Since the wavelet transform takes position information into account, it may handle some special data, e.g., audio data, better than the Fourier and cosine transforms. However, many wavelet bases generate transform matrices in exponential form, so large-scale wavelet matrices may be computationally expensive.

Setting computational complexity aside, Jiang et al. (2020) introduce a new projection matrix named the tight framelet transform matrix (Cai et al. 2008; Jiang et al. 2018). They claim that redundancy in the transformation is important because the transformed coefficients can contain information about the missing data in the original domain (Cai et al. 2008). However, we argue that redundancy is not a sufficient condition for improving the recovery model in Eq. (5).

In summary, different multipliers \(\mathbf {M}\) in Eq. (5) lead to different regularizers, which may lead to different experimental results. However, there is still no unified rule for selecting \(\mathbf {M}\). The above methods show that choosing \(\mathbf {M}\) as an orthogonal matrix is convenient for both computation and interpretation; in general, projection bases are orthonormal. We further argue that each kind of data should have its own best matching matrix, i.e., \(\mathbf {M}\) could be data dependent. In this paper, we address the problem of how to define a better data dependent orthogonal transformation matrix.

1.3 Motivation

In the tensor completion task, we find that Tensor Nuclear Norm (TNN) based methods usually perform worse on non-smooth data than on smooth data. Therefore, we aim to improve their performance on non-smooth data by changing the projection basis \(\mathbf {F}\) in Eq. (4). In other words, we provide interpretable selection criteria for \(\mathbf {M}\) in Eq. (5), e.g., making \(\mathbf {M}\) an orthogonal matrix that depends on the data tensor \(\mathcal {X}\). The details are as follows:

Whether in matrix recovery (Candès and Recht 2009; Candès and Tao 2010) or tensor recovery (Zhang and Aeron 2017; Lu et al. 2018, 2019), the low rank hypothesis is very important. Generally speaking, the lower the rank of the data, the fewer observations are needed to recover it. As Fig. 2 shows, a better \(\mathbf {Q}\) can make the low rank structure of non-smooth data more significant.

Considering the convex relaxation, the low rank property is usually characterized by (a) the distribution of singular values, or (b) the value of the nuclear norm. We take each of these two aspects as prior knowledge and specify the selection rules of \(\mathbf {Q}\) in Eq. (6) accordingly, so that the low rank property of \(\mathcal {X}\) is better reflected. This gives the two methods proposed in this paper:

(a): Choose \(\mathbf {Q}\) so that more tensor singular values are close to 0 while the remaining ones are far from 0. From another perspective, the variance of the singular value distribution should be larger, which leads to the Variance Maximization Tensor Q-Nuclear norm (VMTQN) in Sect. 3.1.

(b): Choose \(\mathbf {Q}\) to minimize the nuclear norm \(\left\| \mathcal {X} \right\| _{Q,*}\) directly, which leads to a bilevel problem; recall that the nuclear norm is commonly used as a surrogate of the rank function. We then solve the problem by manifold optimization, which leads to the Manifold Optimization Tensor Q-Nuclear norm (MOTQN) in Sect. 3.2.

1.4 Contributions

In summary, our main contributions include:

- We propose a unified data dependent low rank tensor recovery model, shown in Eq. (6). In this model, the tensor Q-rank \({\text {rank}}_Q (\mathcal {X})\) and the tensor Q-nuclear norm \(\left\| \mathcal {X} \right\| _{Q,*}\) are defined with a learnable, data dependent orthogonal matrix \(\mathbf {Q}\).

- Based on the low rank hypothesis, we take the distribution of singular values and the value of the nuclear norm as prior knowledge, respectively, which leads to two different selection rules for \(\mathbf {Q}\). Both methods are designed to make the low rank structure more significant. Figure 2 shows an example on video data with a changing background, for which our proposed selection of \(\mathbf {Q}\) yields a more significant low rank structure.

- For each method, we give relatively complete theoretical derivations, including interpretation and optimization. For VMTQN (Sect. 3.1), we start from variance maximization, use Theorem 2 to connect \(\ell _{2,1}\) norm minimization with the singular value decomposition, and thereby choose \(\mathbf {Q}\) as the matrix of right singular vectors. MOTQN (Sect. 3.2), on the other hand, minimizes the nuclear norm directly and uses a manifold optimization algorithm to update \(\mathbf {Q}\) in each iteration.

- Finally, we apply our proposed regularizers with adaptive \(\mathbf {Q}\) to the tensor completion problem. We analyze the computational complexity of our algorithm and, to a certain extent, its convergence and performance guarantees. Moreover, we explain why data with a more significant low rank structure are easier to recover, which corresponds to our motivation.

2 Notations and preliminaries

2.1 Notations

We introduce some notations and necessary definitions which will be used later. Tensors are represented by uppercase calligraphic letters, e.g., \(\mathcal {T}\). Matrices are represented by boldface uppercase letters, e.g., \(\mathbf {M}\). Vectors are represented by boldface lowercase letters, e.g., \(\mathbf {v}\). Scalars are represented by lowercase letters, e.g., s. Given a third-order tensor \(\mathcal {T}\in \mathbb {R}^{n_1\times n_2\times n_3}\), we use \(\mathbf {T}^{(k)}\) to represent its kth frontal slice \(\mathcal {T}(:,:,k)\) while its (i, j, k)th entry is represented as \(\mathcal {T}_{ijk}\). \(\sigma _i(\mathbf {X})\) denotes the ith largest singular value of matrix \(\mathbf {X}\). \(\mathbf {X}^{+}\) denotes the pseudo-inverse matrix of \(\mathbf {X}\). \(\Vert \mathbf {X}\Vert _\sigma = \sigma _1(\mathbf {X})\) denotes the matrix spectral norm. \(\Vert \mathbf {X}\Vert _* = \sum _{i=1}^{\min \{n_1,n_2\}} \sigma _i(\mathbf {X})\) denotes the matrix nuclear norm and \(\Vert \mathbf {X}\Vert _{2,1} = \sum _{j=1}^{n_2}\sqrt{\sum _{i=1}^{n_1}\mathbf {X}_{ij}^2}\) denotes the matrix \(\ell _{2,1}\) norm, where \(\mathbf {X}\in \mathbb {R}^{n_1 \times n_2}\) and \(\mathbf {X}_{ij}\) is the (i, j)th entry of \(\mathbf {X}\).

\(\mathbf {T}_{(3)}\in \mathbb {R}^{n_1 n_2\times n_3}\) denotes the unfolding of the tensor \(\mathcal {T}\) along the 3rd dimension by rows, which differs slightly from Kolda and Bader (2009) and Kernfeld et al. (2015); that is, the tensor fibers \(\mathcal {T}_{ij:}\) are arranged as rows, and we write \(\mathbf {T}_{(3)} := {\text {unfold}}_{3}(\mathcal {T})\). We then define \(\mathcal {L}_{\mathbf {Q}}(\mathcal {T}) =\mathcal {T}\times _3 \mathbf {Q} = {\text {fold}}_{3}(\mathbf {T}_{(3)}\mathbf {Q})\), so that \(\mathcal {L}_{\mathbf {Q}}^{-1}(\mathcal {T}) = \mathcal {T}\times _3 \mathbf {Q}^{-1}\). Due to limited space, please see our Supplementary Materials for the definitions of \(\mathcal {P}_{\mathcal {T}}\) (Lu et al. 2016), multilinear multiplication (Tucker 1966), the t-product (Kilmer and Martin 2011), and so on.

2.2 Tensor Q-rank

For a given tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) and a Fourier transform matrix \(\mathbf {F}\in \mathbb {C}^{n_3\times n_3}\), if we use \(\mathbf {G}^{(i)}\) to represent the ith frontal slice of tensor \(\mathcal {G}\), then the tensor multi-rank and Tensor Nuclear Norm (TNN) of \(\mathcal {X}\) can be formulated by mode-3 multilinear multiplication as follows:

Compared with the CP-rank and cTNN mentioned in Sect. 1.1, Eqs. (7) and (8) are easy to compute through the matrix Singular Value Decomposition (SVD). Kernfeld et al. (2015) generalize the t-product by introducing a new operator named cosine transform product with an arbitrary invertible linear transform \(\mathcal {L}\) (or an arbitrary invertible matrix \(\mathbf {Q}\)). For an invertible matrix \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\), they have \(\mathcal {L}_{\mathbf {Q}}(\mathcal {X}) = \mathcal {X}\times _3 \mathbf {Q}\) and \(\mathcal {L}_{\mathbf {Q}}^{-1}(\mathcal {X}) = \mathcal {X}\times _3 \mathbf {Q}^{-1}\).
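As a sketch of how Eqs. (7) and (8) reduce to slice-wise SVDs, the code below computes the tensor multi-rank and a TNN value with NumPy. Under the row-wise convention, \(\mathcal {X}\times _3\mathbf {F} = {\text {fold}}_{3}(\mathbf {X}_{(3)}\mathbf {F})\) coincides with `np.fft.fft(X, axis=2)` because the DFT matrix is symmetric, and the block checks this. Two assumptions: the rank tolerance is an illustrative choice, and no \(1/n_3\) normalization is applied, although some TNN definitions include one. The function name `multirank_and_tnn` is hypothetical.

```python
import numpy as np


def multirank_and_tnn(X, rel_tol=1e-10):
    """Tensor multi-rank and TNN of X via SVDs of the Fourier-domain slices."""
    n3 = X.shape[2]
    G = np.fft.fft(X, axis=2)                      # G = X x_3 F, complex-valued
    tol = rel_tol * max(np.linalg.norm(X), 1.0)    # illustrative absolute threshold
    ranks, tnn = [], 0.0
    for k in range(n3):
        s = np.linalg.svd(G[:, :, k], compute_uv=False)  # SVD in the complex field
        ranks.append(int(np.sum(s > tol)))
        tnn += s.sum()                                    # nuclear norm of slice k
    return ranks, tnn


rng = np.random.default_rng(3)
A = rng.standard_normal((5, 2)) @ rng.standard_normal((2, 6))  # a rank-2 slice pattern
c = np.array([1.0, 2.0, 3.0, 4.0])
X = A[:, :, None] * c[None, None, :]              # frontal slices c_k * A

# Sanity check: the explicit DFT matrix gives the same transform as np.fft.fft.
F = np.fft.fft(np.eye(4))
assert np.allclose((X.reshape(-1, 4) @ F).reshape(5, 6, 4), np.fft.fft(X, axis=2))

ranks, tnn = multirank_and_tnn(X)
print(ranks, tnn)
```

Here every Fourier slice is a complex multiple of `A`, so the multi-rank is `[2, 2, 2, 2]` and the TNN value is \((|10| + |{-2}+2i| + |{-2}| + |{-2}-2i|)\,\Vert \mathbf {A}\Vert _*\).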

Here, we further take the invertible multiplier \(\mathbf {Q}\) to be any real orthogonal matrix. An orthogonal \(\mathbf {Q}\) has two useful properties: it is invertible, and it keeps the Frobenius norm invariant, i.e., \(\Vert \mathcal {X}\Vert _F = \Vert \mathcal {L}_{\mathbf {Q}}(\mathcal {X})\Vert _F\). We then introduce a new definition of tensor rank named the tensor Q-rank.

Definition 1

(Tensor Q-rank) Given a tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) and a fixed real orthogonal matrix \(\mathbf {Q} \in \mathbb {R}^{n_3\times n_3}\), the tensor Q-rank of \(\mathcal {X}\) is defined as follows:

The corresponding low rank tensor recovery model can be written as follows:

Generally in the low rank recovery models, due to the discontinuity and non-convexity of the rank function, it is quite difficult to minimize the rank function directly. Therefore, some auxiliary definitions of tensor singular value and tensor norm are needed to relax the rank function.

2.3 Definitions of tensor singular value and tensor norm

Considering the superior recovery performance of TNN in many existing tasks, e.g., video denoising (Lu et al. 2019) and subspace clustering (Yin et al. 2018), we adopt a singular value definition similar to that of TNN. Given a tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) and a fixed orthogonal matrix \(\mathbf {Q}\), let \(\mathcal {G} = \mathcal {L}_{\mathbf {Q}}(\mathcal {X})\). Then the \(\mathbf {Q}\)-singular values of \(\mathcal {X}\) are defined as \(\{\sigma _j(\mathbf {G}^{(i)}) \}\), where \(i=1,\ldots ,n_3\), \(j=1,\ldots ,\min \{n_1,n_2\}\), \(\mathbf {G}^{(i)}\) is the ith frontal slice of \(\mathcal {G}\), and \(\sigma _j(\cdot )\) denotes the jth largest singular value of a matrix. Once an orthogonal matrix \(\mathbf {Q}\) is fixed, the corresponding tensor spectral norm and tensor nuclear norm of \(\mathcal {X}\) can also be defined.

Definition 2

(Tensor Q-spectral norm and Tensor Q-nuclear norm) Given a tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) and a fixed real orthogonal matrix \(\mathbf {Q} \in \mathbb {R}^{n_3\times n_3}\), the tensor Q-spectral norm and tensor Q-nuclear norm of \(\mathcal {X}\) are defined as follows:

Moreover, with any fixed orthogonal matrix \(\mathbf {Q}\), the convexity, duality, and envelope properties are all preserved.

Property 1

(Convexity) Tensor Q-nuclear norm and Tensor Q-spectral norm are both convex.

Property 2

(Duality) Tensor Q-nuclear norm is the dual norm of Tensor Q-spectral norm, and vice versa.

Property 3

(Convex envelope) Tensor Q-nuclear norm is the tightest convex envelope of the Tensor Q-rank within the unit ball of the Tensor Q-spectral norm.
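The definitions and Properties 1 to 3 can be checked numerically. Since Eqs. (9), (11) and (12) are not reproduced in this text, the sketch assumes, by analogy with TNN and with the slice-sum description given below for non-square \(\mathbf {Q}\), that the tensor Q-rank and the Q-nuclear norm sum the ranks and nuclear norms of the slices \(\mathbf {G}^{(i)}\), and that the Q-spectral norm is the largest slice spectral norm. All function names and the rank tolerance are hypothetical.

```python
import numpy as np


def q_slices(X, Q):
    """Frontal slices of G = X x_3 Q (row-wise mode-3 unfolding)."""
    n1, n2, n3 = X.shape
    return (X.reshape(n1 * n2, n3) @ Q).reshape(n1, n2, Q.shape[1])


def q_singular_values(X, Q):
    """Q-singular values: the singular values of every slice G^(i)."""
    G = q_slices(X, Q)
    return [np.linalg.svd(G[:, :, i], compute_uv=False) for i in range(G.shape[2])]


def q_rank(X, Q, tol=1e-10):
    return sum(int(np.sum(s > tol)) for s in q_singular_values(X, Q))


def q_nuclear_norm(X, Q):
    return sum(s.sum() for s in q_singular_values(X, Q))


def q_spectral_norm(X, Q):
    return max(s.max() for s in q_singular_values(X, Q))


rng = np.random.default_rng(4)
X = rng.standard_normal((4, 5, 6))
Y = rng.standard_normal((4, 5, 6))
Q, _ = np.linalg.qr(rng.standard_normal((6, 6)))   # a random orthogonal Q

# Convexity (Property 1) of a norm follows from the triangle inequality, checked here.
print(q_nuclear_norm(X + Y, Q) <= q_nuclear_norm(X, Q) + q_nuclear_norm(Y, Q))

# Duality (Property 2) yields the bound <X, Y> <= ||X||_{Q,*} * ||Y||_{Q,sigma}.
print(abs(np.sum(X * Y)) <= q_nuclear_norm(X, Q) * q_spectral_norm(Y, Q))

# Envelope (Property 3): inside the Q-spectral unit ball, ||X||_{Q,*} <= rank_Q(X).
Xs = X / q_spectral_norm(X, Q)
print(q_nuclear_norm(Xs, Q) <= q_rank(Xs, Q))
```

The duality bound relies on \(\mathbf {Q}\) being orthogonal: \(\langle \mathcal {X},\mathcal {Y}\rangle = \langle \mathbf {X}_{(3)}\mathbf {Q},\mathbf {Y}_{(3)}\mathbf {Q}\rangle \), which then splits slice by slice.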

These three properties are quite important in the low rank recovery theory. Property 3 implies that we can use the tensor Q-nuclear norm as a rank surrogate. That is to say, when the orthogonal matrix \(\mathbf {Q}\) is given, we can replace the low tensor Q-rank model (10) with model (13) to recover the original tensor:

In some cases, \(\mathbf {Q}\) is not a square matrix, i.e., \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) is column orthonormal. Then the definitions of \({\text {rank}}_Q(\mathcal {X})\) in Eq. (9) and \(\left\| \mathcal {X} \right\| _{Q,*}\) in Eq. (12) become sums over r frontal slices instead of \(n_3\). Moreover, the convex envelope property still holds: the double conjugate of the rank function \({\text {rank}}_Q(\mathcal {X})\) is still the corresponding nuclear norm \(\left\| \mathcal {X} \right\| _{Q,*}\) within a unit ball. The following theorem makes this precise:

Theorem 1

Given a tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) and a fixed real column orthonormal matrix \(\mathbf {Q} \in \mathbb {R}^{n_3\times r}\), let \(\mathbf {Q}_\perp \in \mathbb {R}^{n_3\times (n_3-r)}\) be the column complement matrix of \(\mathbf {Q}\), so that \(\mathbf {Q}_t = \begin{bmatrix} \mathbf {Q}&\mathbf {Q}_\perp \end{bmatrix}\) is an orthogonal matrix. Then within the unit ball \(\mathcal {D} = \{ \mathcal {X} | \Vert \mathcal {X}\Vert _{{Q}_t} \le 1 \}\), the double conjugate function of \({\text {rank}}_Q(\mathcal {X})\) is \(\left\| \mathcal {X} \right\| _{Q,*}\):

In other words, \(\Vert \mathcal {X}\Vert _{Q,*}\) is still the tightest convex envelope of \({\text {rank}}_Q(\mathcal {X})\) within the unit ball \(\mathcal {D}\).

Theorem 1 indicates that even if \(\mathbf{Q}\) is not a square matrix, Eq. (13) can still be used as an effective recovery model.
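For a column orthonormal \(\mathbf {Q}\), the complement \(\mathbf {Q}_\perp \) in Theorem 1 can be obtained from a complete QR factorization. The sketch below (hypothetical helper names) builds \(\mathbf {Q}_t = [\mathbf {Q}\ \mathbf {Q}_\perp ]\), checks that it is orthogonal, and shows that, assuming the slice-sum form of the Q-nuclear norm, \(\Vert \mathcal {X}\Vert _{Q,*}\) is exactly the part of the \(\mathbf {Q}_t\)-nuclear norm contributed by the first r slices.

```python
import numpy as np


def orthogonal_complement(Q):
    """Columns spanning the orthogonal complement of range(Q), for Q with orthonormal columns."""
    r = Q.shape[1]
    full, _ = np.linalg.qr(Q, mode="complete")  # first r columns span range(Q)
    return full[:, r:]


def q_nuclear_norm(X, Q):
    """Sum of nuclear norms of the frontal slices of X x_3 Q (Q may be n3 x r)."""
    n1, n2, n3 = X.shape
    G = (X.reshape(n1 * n2, n3) @ Q).reshape(n1, n2, Q.shape[1])
    return sum(np.linalg.norm(G[:, :, k], ord="nuc") for k in range(Q.shape[1]))


rng = np.random.default_rng(5)
n3, r = 7, 3
Q, _ = np.linalg.qr(rng.standard_normal((n3, r)))   # column orthonormal, n3 x r
Q_perp = orthogonal_complement(Q)
Q_t = np.hstack([Q, Q_perp])

X = rng.standard_normal((4, 5, n3))
print(np.allclose(Q_t.T @ Q_t, np.eye(n3)))          # Q_t is orthogonal
print(np.isclose(q_nuclear_norm(X, Q) + q_nuclear_norm(X, Q_perp),
                 q_nuclear_norm(X, Q_t)))            # the n3 slices split into r + (n3 - r)
```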

3 Two ways for determining \(\mathbf {Q}\): maximizing variance & Stiefel manifold optimization

In practical problems, the selection of \(\mathbf {Q}\) often has a tremendous impact on the performance of model (13). If \(\mathbf {Q}\) is the identity matrix \(\mathbf {I}\), model (13) is equivalent to solving each frontal slice separately by low rank matrix methods (Candès and Recht 2009). If \(\mathbf {Q}\) is the Fourier transform matrix \(\mathbf {F}\), it is equivalent to the TNN based methods (Zhang et al. 2014; Lu et al. 2016; Zhang and Aeron 2017). According to the analysis of Lu et al. (2019) and the previous section, for given data \(\mathcal {X}\), a \(\mathbf {Q}\) that makes \({\text {rank}}_Q(\mathcal {X})\) lower usually makes the recovery problem (13) easier.

Next, if we let \(\mathbf {Q}\) in Eqs. (10) and (13) be a learnable variable w.r.t. the data tensor \(\mathcal {X}\), we obtain a data dependent tensor rank and the corresponding low rank recovery model:

It is easy to see that Eq. (15) is a bilevel model, which is usually hard to solve directly. In the following, we show two ways to solve this problem, from two perspectives:

1. The first is to use prior knowledge of \(\mathcal {X}\) to specify the selection criteria of \(\mathbf {Q}\). Under the low rank hypothesis, low rankness is usually measured by the distribution of singular values. Therefore, we explicitly specify the conditions that \(\mathbf {Q}\) should satisfy so as to maximize the variance of the corresponding singular values.

2. The second is to define \(\mathbf {Q} = \mathop {\hbox {argmin}}\limits f(\mathcal {X},\mathbf {Q}) = \mathop {\hbox {argmin}}\limits \Vert \mathcal {X}\Vert _{Q,*}\) and then use manifold optimization to solve the bilevel model directly. That is, we directly minimize the surrogate of the rank function (Property 3 and Theorem 1). Although this approach is better justified, it has a higher computational complexity.
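To make the second route concrete, here is a generic Riemannian subgradient step on the Stiefel manifold \(\{\mathbf {Q}\in \mathbb {R}^{n_3\times r}:\mathbf {Q}^\top \mathbf {Q}=\mathbf {I}\}\) for \(f(\mathbf {Q})=\Vert \mathcal {X}\Vert _{Q,*}\), again assuming the slice-sum form of the Q-nuclear norm. It is a standard textbook construction (projection onto the tangent space under the embedded metric, followed by a QR retraction), not the MOTQN update of Sect. 3.2; the function name and the step size are hypothetical. Because \(\mathbf {G}^{(k)}=\sum _l \mathbf {Q}_{lk}\mathbf {X}^{(l)}\) and \(\mathbf {U}_k\mathbf {V}_k^\top \) is a subgradient of \(\Vert \mathbf {G}^{(k)}\Vert _*\), the Euclidean subgradient has entries \(\langle \mathbf {X}^{(l)}, \mathbf {U}_k\mathbf {V}_k^\top \rangle \).

```python
import numpy as np


def stiefel_subgradient_step(X, Q, step):
    """One Riemannian subgradient step for f(Q) = sum_k ||G^(k)||_*, G = X x_3 Q.

    Returns the retracted point and the Riemannian (sub)gradient at Q.
    """
    n1, n2, n3 = X.shape
    X3 = X.reshape(n1 * n2, n3)
    G = (X3 @ Q).reshape(n1, n2, Q.shape[1])

    # U_k V_k^T is a subgradient of the nuclear norm of slice k.
    W = np.empty_like(G)
    for k in range(G.shape[2]):
        U, _, Vt = np.linalg.svd(G[:, :, k], full_matrices=False)
        W[:, :, k] = U @ Vt

    # Euclidean subgradient: D[l, k] = <X^(l), U_k V_k^T>.
    D = X3.T @ W.reshape(n1 * n2, -1)

    # Project onto the tangent space of the Stiefel manifold (embedded metric).
    QtD = Q.T @ D
    grad = D - Q @ (0.5 * (QtD + QtD.T))

    # QR retraction; flip column signs so that diag(R) >= 0.
    Q_new, R = np.linalg.qr(Q - step * grad)
    Q_new = Q_new * np.where(np.diag(R) < 0, -1.0, 1.0)
    return Q_new, grad


def q_nuclear_norm(X, Q):
    """Sum of nuclear norms of the frontal slices of X x_3 Q."""
    n1, n2, n3 = X.shape
    G = (X.reshape(n1 * n2, n3) @ Q).reshape(n1, n2, Q.shape[1])
    return sum(np.linalg.norm(G[:, :, k], ord="nuc") for k in range(Q.shape[1]))


rng = np.random.default_rng(6)
X = rng.standard_normal((6, 6, 8))
Q, _ = np.linalg.qr(rng.standard_normal((8, 4)))
for it in range(5):
    Q, grad = stiefel_subgradient_step(X, Q, step=1e-2)
    print(it, q_nuclear_norm(X, Q))
```

With a subgradient, the objective need not decrease at every step; a practical solver would pair this with a step-size rule.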

From both perspectives, \(\mathbf {Q}\) is data dependent. In the following, we introduce our two methods in two subsections (Sects. 3.1 and 3.2). In the last part (Sect. 3.3), for a third-order tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\), we analyze the applicability of each method in two different situations, i.e., \(n_1n_2 < n_3\) and \(n_1n_2 > n_3\).

3.1 Way I (VMTQN): specify the selection of \(\mathbf {Q}\) by variance maximization

Let \(\mathcal {G} = \mathcal {L}_\mathbf {Q}(\mathcal {X}) = \mathcal {X}\times _3 \mathbf {Q}\) and let \(\{\mathbf {G}^{(i)}\}_i\) denote the frontal slices of \(\mathcal {G}\). We hope to find a data dependent \(\mathcal {L}_\mathbf {Q}\) in Eqs. (12) and (13), instead of \(\mathcal {L}_\mathbf {F}\) in TNN (Eq. (8)), that reduces the number of non-zero singular values of each projected slice \(\mathbf {G}^{(i)}\). Our analysis is as follows.

(1) If \(\mathbf {Q}\) is an orthogonal matrix, it is also invertible. By the unitary invariance of the Frobenius norm, the sum of the squared Frobenius norms of the projected slices is a constant C, i.e., \(\sum _{i=1}^{n_3} \Vert \mathbf {G}^{(i)}\Vert _F^2 = \Vert \mathcal {X}\Vert _F^2 = C\). Therefore, we need to select \(\mathbf {Q}\) so that more singular values of \(\{ \mathbf {G}^{(i)} \}\) are close to zero while the sum of squares of all singular values is the constant C, i.e., \(\sum _{i=1}^{n_3}\sum _{j=1}^{\min \{n_1,n_2\}}\sigma _j(\mathbf {G}^{(i)})^2 = C\).

(2) Considering the definitions of tensor rank, tensor norm and tensor singular value corresponding to TNN in Zhang et al. (2014) and Zhang and Aeron (2017), and the tensor Q-rank in this paper, the matrix inequality \(\frac{1}{n}\sum _{j=1}^{n}\sigma _j(\mathbf {G}^{(i)}) \le \Vert \mathbf {G}^{(i)}\Vert _\sigma \le \Vert \mathbf {G}^{(i)}\Vert _F\) with \(n=\min \{n_1,n_2\}\) (relating the average singular value, the spectral norm and the Frobenius norm) implies that the closer \(\Vert \mathbf {G}^{(i)}\Vert _F\) is to zero, the more singular values are close to zero, which will, with high probability, lead to a more significant low rank structure w.r.t. \({\text {rank}}_Q(\mathcal {X})\).

3.1.1 From variance maximization to singular matrix

Combining the above two points, we need to make more of the \(\Vert \mathbf {G}^{(i)}\Vert _F\) close to 0 while the sum of squares \(\sum _{i=1}^{n_3} \Vert \mathbf {G}^{(i)}\Vert _F^2\) is the constant C. From the perspective of variable distribution, we need to choose a data dependent \(\mathbf {Q}\) that maximizes the variance of the distribution of \(\{ \Vert \mathbf {G}^{(i)}\Vert _F \}\), where \(\mathcal {G} = \mathcal {L}_\mathbf {Q}(\mathcal {X})\) and \(\mathbf {G}^{(i)}\) is the ith frontal slice matrix of \(\mathcal {G}\). To explain this, we give the following two lemmas; the optimality condition of Lemma 1 supports our hypothesis that more of the \(\Vert \mathbf {G}^{(i)}\Vert _F\) should be close to 0.

Lemma 1

Given n non-negative variables \(\{a_1,a_2,\ldots ,a_n\}\) such that \(\sum _{i=1}^{n} a_i^2 = C\), maximizing the variance \(\text {Var}[a_i]\) is equivalent to minimizing the sum \(\sum _{i=1}^{n} a_i\). Moreover, the optimum is attained when only one variable in \(\{a_1,a_2,\ldots ,a_n\}\) is non-zero. Please see “Appendix A” for the proof.
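A short derivation sketch of Lemma 1, using the population variance \(\text {Var}[a_i]=\frac{1}{n}\sum _i a_i^2-(\frac{1}{n}\sum _i a_i)^2\) (an assumption about the normalization; the conclusion is the same with a \(1/(n-1)\) factor, and the paper's own proof is in Appendix A):

```latex
\begin{aligned}
\operatorname{Var}[a_i]
  &= \frac{1}{n}\sum_{i=1}^{n} a_i^2 - \Big(\frac{1}{n}\sum_{i=1}^{n} a_i\Big)^2
   = \frac{C}{n} - \frac{1}{n^2}\Big(\sum_{i=1}^{n} a_i\Big)^2, \\
\Big(\sum_{i=1}^{n} a_i\Big)^2
  &= \sum_{i=1}^{n} a_i^2 + 2\sum_{i<j} a_i a_j
   = C + 2\sum_{i<j} a_i a_j \;\ge\; C .
\end{aligned}
```

Since \(\sum _i a_i \ge 0\), the first line shows that maximizing the variance is the same as minimizing \(\sum _i a_i\). In the second line every cross term \(a_ia_j\) is non-negative, so \(\sum _i a_i \ge \sqrt{C}\), with equality exactly when all cross terms vanish, i.e., when at most one \(a_i\) is non-zero (exactly one if \(C>0\), namely \(a_i=\sqrt{C}\)).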

By using Lemma 1, maximizing the variance of \(\{ \Vert \mathbf {G}^{(i)}\Vert _F \}\) is equivalent to minimizing the sum \(\sum _{i=1}^{n_3}\Vert \mathbf {G}^{(i)}\Vert _F\). Then we have \(\sum _{i=1}^{n_3}\Vert \mathbf {G}^{(i)}\Vert _F = \Vert \mathbf {G}_{(3)}\Vert _{2,1} = \Vert \mathbf {X}_{(3)}\mathbf {Q}\Vert _{2,1}\), where \(\mathbf {G}_{(3)}\) and \(\mathbf {X}_{(3)}\) denote the mode-3 unfolding matrices (Tucker 1966).
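The identity \(\sum _i\Vert \mathbf {G}^{(i)}\Vert _F = \Vert \mathbf {X}_{(3)}\mathbf {Q}\Vert _{2,1}\) holds because, under the row-wise unfolding, column i of \(\mathbf {G}_{(3)}=\mathbf {X}_{(3)}\mathbf {Q}\) is the vectorized slice \(\mathbf {G}^{(i)}\). A quick numerical check follows, with a hypothetical helper `l21_norm`; it also confirms the constant sum of squares from point (1).

```python
import numpy as np


def l21_norm(M):
    """Sum of the l2 norms of the columns of M."""
    return np.linalg.norm(M, axis=0).sum()


rng = np.random.default_rng(7)
n1, n2, n3 = 4, 5, 6
X = rng.standard_normal((n1, n2, n3))
Q, _ = np.linalg.qr(rng.standard_normal((n3, n3)))   # random orthogonal Q

X3 = X.reshape(n1 * n2, n3)          # rows are the fibers X[i, j, :]
G3 = X3 @ Q                          # mode-3 unfolding of G = X x_3 Q
G = G3.reshape(n1, n2, n3)

slice_fro = np.array([np.linalg.norm(G[:, :, i]) for i in range(n3)])
print(np.isclose(slice_fro.sum(), l21_norm(G3)))                    # sum of slice norms = l_{2,1}
print(np.isclose((slice_fro ** 2).sum(), np.linalg.norm(X) ** 2))   # sum of squares = ||X||_F^2 = C
```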

Lemma 2

Given a fixed matrix \(\mathbf {X}\in \mathbb {R}^{n_1\times n_2}\) with full Singular Value Decomposition \(\mathbf {X} = \mathbf {U}\mathbf {\Sigma }\mathbf {V}^\top \), where \(\mathbf {U}\in \mathbb {R}^{n_1\times n_1}\), \(\mathbf {\Sigma }\in \mathbb {R}^{n_1\times n_2}\), and \(\mathbf {V}\in \mathbb {R}^{n_2\times n_2}\), the matrix of right singular vectors \(\mathbf {V}\) optimizes the following:

where \(\Vert \mathbf {M}\Vert _{2,1} = \sum _{i=1}^{col} \Vert \mathbf {M}_{:,i}\Vert _2\) is the sum of the \(\ell _2\) norms of all column vectors. Please see “Appendix B” for proof.

Lemma 1 turns the variance maximization problem into a sum minimization problem, while Lemma 2 gives a feasible solution to the problem of minimizing the sum of \(\ell _2\) norms. However, when \(n_1 < n_2\), zero columns appear in \(\mathbf {\Sigma }\). We can use the skinny SVD to remove the redundant columns of \(\mathbf {Q}\) in Eq. (16). Note that the size of \(\mathbf {V}\) in the skinny SVD depends on the size of \(\mathbf {X}\). To cover both cases \(n_1\ge n_2\) and \(n_1 < n_2\) of \(\mathbf {X}\in \mathbb {R}^{n_1 \times n_2}\), we introduce an auxiliary variable \(r = \min \{ n_1,n_2\}\) and write the matrix of right singular vectors as \(\mathbf {V} \in \mathbb {R}^{n_2\times r}\). Furthermore, we need to add an extra constraint \(\mathbf {X}\mathbf {Q}\mathbf {Q}^\top = \mathbf {X}\) to avoid trivial solutions when \(r<n_2\). Otherwise, \(\mathbf {Q}\) may converge to the singular subspaces corresponding to smaller singular values. For example, when \(r = n_1 < n_2\) and \(\mathbf {Q}\in \mathbb {R}^{n_2\times (n_2-r)}\), the optimal solution set of Eq. (16) includes the null space of \(\mathbf {X}\), for which \(\mathbf {X}\mathbf {Q} = \mathbf {O}\) and the objective value is 0. We then have the following:
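The trivial solution described above is easy to reproduce. In the sketch below (sizes chosen only for illustration), \(\mathbf {X}\) is \(3\times 5\), so the last two right singular vectors from the full SVD span its null space: they give \(\mathbf {X}\mathbf {Q}=\mathbf {O}\) and an \(\ell _{2,1}\) value of zero, but violate \(\mathbf {X}\mathbf {Q}\mathbf {Q}^\top =\mathbf {X}\), which the skinny-SVD choice satisfies. The helper `l21` is hypothetical.

```python
import numpy as np


def l21(M):
    """Sum of the l2 norms of the columns of M."""
    return np.linalg.norm(M, axis=0).sum()


rng = np.random.default_rng(8)
X = rng.standard_normal((3, 5))                  # r = n1 = 3 < n2 = 5
_, _, Vt = np.linalg.svd(X, full_matrices=True)  # Vt is 5 x 5
r = min(X.shape)

Q_null = Vt[r:].T    # 5 x 2: basis of the null space of X (trivial solution)
V = Vt[:r].T         # 5 x 3: skinny right singular vectors

print(l21(X @ Q_null))                           # numerically zero objective
print(np.allclose(X @ Q_null @ Q_null.T, X))     # constraint violated
print(np.allclose(X @ V @ V.T, X))               # constraint satisfied
```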

Theorem 2

Given a fixed matrix \(\mathbf {X}\in \mathbb {R}^{n_1\times n_2}\) with \(r=\min \{n_1,n_2 \}\) and skinny Singular Value Decomposition \(\mathbf {X} = \mathbf {U}\mathbf {\Sigma }\mathbf {V}^\top \), where \(\mathbf {U}\in \mathbb {R}^{n_1\times r}\), \(\mathbf {\Sigma }\in \mathbb {R}^{r\times r}\), and \(\mathbf {V}\in \mathbb {R}^{n_2\times r}\), the matrix of right singular vectors \(\mathbf {V}\) optimizes the following problem:

The proof is given in “Appendix C”. Theorem 2 shows that, to minimize the \(\ell _{2,1}\) norm \(\Vert \mathbf {X}_{(3)}\mathbf {Q}\Vert _{2,1}\) w.r.t. \(\mathbf {Q}\), we can choose \(\mathbf {Q}\) as the matrix of right singular vectors of \(\mathbf {X}_{(3)}\).
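A small example with \(r=n_1<n_2\) shows why the extra constraint \(\mathbf {X}\mathbf {Q}\mathbf {Q}^\top =\mathbf {X}\) is needed. In this NumPy sketch, with sizes and seed chosen arbitrarily, the skinny \(\mathbf {V}\) satisfies the constraint. An orthonormal basis of the null space of \(\mathbf {X}\) reaches the trivial objective value 0 but violates it.

```python
import numpy as np

def l21_norm(M):
    """Sum of the l2 norms of the columns of M."""
    return np.linalg.norm(M, axis=0).sum()

rng = np.random.default_rng(2)
n1, n2 = 3, 7
r = min(n1, n2)                     # r = n1 < n2
X = rng.standard_normal((n1, n2))

# Skinny SVD: V has shape (n2, r) with orthonormal columns.
U, s, Vt = np.linalg.svd(X, full_matrices=False)
V = Vt.T
print(np.allclose(X @ V @ V.T, X), np.isclose(l21_norm(X @ V), s.sum()))

# Trivial solution: orthonormal basis of the null space of X (n2 - r columns).
N = np.linalg.svd(X, full_matrices=True)[2][r:].T
print(np.allclose(X @ N, 0.0), np.allclose(X @ N @ N.T, X))
```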

3.1.2 Details of how to make \(\mathbf {Q}\) data dependent

Based on the analysis in Sect. 3.1.1, we make the selection of \(\mathbf {Q}\) data dependent. The following definitions give the details.

Definition 3

(VMTQN: variance maximization tensor Q-nuclear norm) Let \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) be a third-order tensor and \(\mathbf {Q}\) be an orthogonal matrix. If \(\mathcal {G}=\mathcal {X}\times _3 \mathbf {Q}\) and \(\mathbf {G}^{(i)}\) denotes the ith frontal slice of \(\mathcal {G}\), then the Variance Maximization Tensor Q-Nuclear norm (VMTQN) is defined as follows:

Note that \(\mathbf {Q}\) is determined by \(\mathcal {X}\). With the help of Lemmas 1, 2, and Theorem 2, we can incorporate VMTQN into the low rank recovery model.

Definition 4

(Low tensor Q-rank model with adaptive Q) By treating the adaptive \(\mathbf {Q}\) as a lower-level sub-problem, the low tensor Q-rank model (10) is transformed into the following:

And the corresponding surrogate model (13) is also replaced by the following:

In Eqs. (19) and (20), \(\mathbf {X}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\) denotes the mode-3 unfolding matrix of tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\), and \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) with \(r = \min \{n_1n_2,n_3 \}\).

Definition 5

In fact, Theorem 2 implies \(\mathbf {Q} = \mathbf {V}\), where \(\mathbf {V}\) is the matrix of right singular vectors of \(\mathbf {X}_{(3)}\). If we let \({\text {PCA}}(\mathcal {X},3,r) := \mathop {\hbox {argmin}}\limits _{\mathbf {Q}^\top \mathbf {Q} = \mathbf {I}_r} \Vert \mathbf {X}_{(3)}\mathbf {Q}\Vert _{2,1}\) be the operator to obtain the matrix of right singular vectors \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\), where \(r = \min \{n_1 n_2, n_3\}\), then the models (19) and (20) can be abbreviated as follows:

Remark 1

Notice that \(\mathbf {Q} \in \mathbb {R}^{n_3\times r}\) in Eqs. (19) and (20) may have fewer columns than rows, i.e., \(r < n_3\). In that case, the definitions of \({\text {rank}}_Q(\mathcal {X})\) in Eq. (9) and \(\left\| \mathcal {X} \right\| _{Q,*}\) in Eq. (12) become sums over r frontal slices instead of \(n_3\). Theorem 1 then guarantees the validity of Eq. (20).

Remark 2

In fact, “Appendix C” shows that r can be any value satisfying \({\text {rank}}(\mathbf {X}_{(3)})\le r\le \min \{n_1 n_2, n_3\}\), provided two conditions hold. First, the column space of \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) must contain the whole column space of the matrix of right singular vectors \(\mathbf {V}\). Second, \(\mathbf {Q}\) must be pseudo-invertible so that \(\mathcal {X}=\mathcal {X}\times _3 \mathbf {Q}\times _3 \mathbf {Q}^{+}\) holds.

Within this framework, the orthogonal matrix \(\mathbf {Q}\) depends on the tensor \(\mathcal {X}\). As analyzed above, choosing \(\mathbf {Q}\) as the matrix of right singular vectors tends to make \({\text {rank}}_Q(\mathcal {X})\) as low as possible. In other words, more frontal slices of \(\mathcal {X}\times _3 \mathbf {Q}\) should have Frobenius norms close to 0, which makes a low tensor Q-rank structure of the data more likely.
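Here is a concrete sketch of the operator \({\text {PCA}}(\mathcal {X},3,r)\), taken as the leading right singular vectors of \(\mathbf {X}_{(3)}\). It assumes NumPy's SVD, and `pca_q` is a hypothetical helper name. Take a tensor whose mode-3 unfolding has rank 3. Only three frontal slices of \(\mathcal {X}\times _3\mathbf {Q}\) are then non-negligible, and the slice Frobenius norms coincide with the singular values of \(\mathbf {X}_{(3)}\).

```python
import numpy as np

def pca_q(X, r=None):
    """Hypothetical helper for PCA(X, 3, r): the leading r right singular
    vectors of the mode-3 unfolding X_(3) in R^{(n1*n2) x n3}."""
    n1, n2, n3 = X.shape
    if r is None:
        r = min(n1 * n2, n3)
    _, _, Vt = np.linalg.svd(X.reshape(n1 * n2, n3), full_matrices=False)
    return Vt[:r].T                                  # n3 x r, orthonormal columns

def mode3(T, Q):
    """T x_3 Q in the convention G_(3) = T_(3) Q."""
    return np.tensordot(T, Q, axes=([2], [0]))

rng = np.random.default_rng(3)
M = rng.standard_normal((10, 10, 20))
W = pca_q(M, r=3)
X = mode3(mode3(M, W), W.T)                          # X_(3) has rank 3

Q = pca_q(X)                                         # 20 x 20 here, since n1*n2 > n3
slice_norms = np.linalg.norm(mode3(X, Q), axis=(0, 1))  # Frobenius norm of each slice
print(np.sum(slice_norms > 1e-8 * slice_norms.max()))   # number of non-negligible slices
```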

The question now is whether the function \(\Vert \mathcal {X} \Vert _{Q,*}\) in Eq. (22) is still an envelope of the rank function \(\text {rank}_{Q} (\mathcal {X})\) in Eq. (21) within an appropriate region. In the bilevel framework (22), \(\mathbf {Q}\) depends on \(\mathcal {X}\), so \(\Vert \mathcal {X} \Vert _{Q,*}\) is no longer convex. The following theorem shows that it can nevertheless still serve as a surrogate that lower-bounds \(\text {rank}_{Q} (\mathcal {X})\) in Eq. (21).

Theorem 3

Given a column orthonormal matrix \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\), \(r = \min \{n_1 n_2, n_3\}\), we use \({\text {rank}}_{PCA}(\mathcal {X})\), \(\Vert \mathcal {X}\Vert _{PCA,\sigma }\), and \(\Vert \mathcal {X}\Vert _{PCA,*}\) to abbreviate the corresponding concepts as follows:

Then within the region \(\mathcal {D} = \{\mathcal {X}\ |\ \Vert \mathcal {X}\Vert _{PCA,\sigma } \le 1\}\), the inequality \(\Vert \mathcal {X}\Vert _{PCA,*} \le {\text {rank}}_{PCA}(\mathcal {X})\) holds. Moreover, for every fixed \(\mathbf {Q}\), let \(\mathcal {S}_Q\) denote the set \(\{\mathcal {X}\ |\ \mathbf {Q} \in {\text {PCA}}(\mathcal {X},3,r)\}\). Then Theorem 1 indicates that \(\Vert \mathcal {X}\Vert _{PCA,*}\) is still the tightest convex envelope of \({\text {rank}}_{PCA}(\mathcal {X})\) in \(\mathcal {S}_Q \cap \mathcal {D}\).

Remark 3

For any column orthonormal matrix \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\), the corresponding conclusion also holds as long as \(\mathcal {X}\times _3 (\mathbf {Q}\mathbf {Q}^\top ) = \mathcal {X}\). That is to say, \(\Vert \mathcal {X}\Vert _{Q,*} \le \text {rank}_{Q}(\mathcal {X})\) holds within the region \(\{\mathcal {X}\ |\ \Vert \mathcal {X}\Vert _{Q,\sigma } \le 1\}\).

Theorem 3 shows that, although \(\Vert \mathcal {X}\Vert _{PCA,*}\) may be non-convex, its value never exceeds \(\text {rank}_{PCA}(\mathcal {X})\) within the region \(\mathcal {D}\). Therefore, model (22) can be regarded as a reasonable low rank tensor recovery model. Note that it is a bilevel optimization problem.

3.2 Way II (MOTQN): estimate \(\mathbf {Q}\) by manifold optimization

Recall the data-dependent low rank recovery model (15) with \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\). Our main idea is to find a learnable \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\) that minimizes \(\text {rank}_Q(\mathcal {X})\). Inspired by Remark 3, we let \(\mathbf {Q} = \mathop {\hbox {argmin}}\limits _{\mathbf {Q}^\top \mathbf {Q} = \mathbf {I}} \Vert \mathcal {X}\Vert _{Q,*}\) so as to minimize the surrogate function directly, which gives the following bilevel model:

In Eq. (26), the lower-level problem w.r.t. \(\mathbf {Q}\) is actually a Stiefel manifold optimization problem. Similarly, we can define the corresponding nuclear norm as follows:

Definition 6

(MOTQN: manifold optimization tensor Q-nuclear norm) Let \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) be a third-order tensor and \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\) be an orthogonal matrix. Then the Manifold Optimization Tensor Q-Nuclear norm (MOTQN) is defined as:

Unlike VMTQN, the learnable \(\mathbf {Q}\) in Eq. (26) must be a square matrix, i.e., \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\). Otherwise, as mentioned in Sect. 3.1.1, \(\mathbf {Q}\) may converge to the singular subspaces corresponding to the smaller singular values. The key step in solving this model is therefore the following orthogonality-constrained optimization problem:

Note that Eq. (28) is non-convex because of the orthogonality constraint. A standard approach is gradient descent on the Stiefel manifold, which evolves along the manifold geodesics (Edelman et al. 1998). However, this method usually requires a lot of computation to obtain the projected gradient direction of the objective function. Alternatively, Wen and Yin (2013) develop an iterative technique for such orthogonality-constrained problems. It generates feasible points by the Cayley transformation and involves only matrix multiplications and inversions. We adopt their algorithm to solve the lower-level problem.

3.2.1 Optimization with orthogonality constraints

Assume \(\mathbf {Q}\in \mathbb {R}^{n\times r}\) and denote the gradient of the objective function \(f(\mathbf {Q}) = \Vert \mathcal {X}\Vert _{Q,*}\) w.r.t. \(\mathbf {Q}\) at \(\mathbf {Q}_k\) (the kth iteration) by \(\mathbf {P}\in \mathbb {R}^{n\times r}\). Then the projection of \(\mathbf {P}\) in the tangent space of the Stiefel manifold at \(\mathbf {Q}_k\) is \(\mathbf {A}\mathbf {Q}_k\), where \(\mathbf {A} = \mathbf {P}\mathbf {Q}_k^\top - \mathbf {Q}_k\mathbf {P}^\top \) and \(\mathbf {A}\in \mathbb {R}^{n\times n}\) (Wen and Yin 2013). Instead of parameterizing the geodesic of the Stiefel manifold along direction \(\mathbf {A}\) using the exponential function, inspired by Wen and Yin (2013), we generate feasible points by the following Cayley transformation:

where \(\mathbf {I}\) is the identity matrix and \(\tau \in \mathbb {R}\) is a parameter that determines the step size of \(\mathbf {Q}_{k+1}\). That is to say, \(\mathbf {Q}(\tau )\) is a smooth curve on the Stiefel manifold parameterized by \(\tau \). Moreover, if \(\mathbf {Q}_k^\top \mathbf {Q}_k=\mathbf {I}\) holds, then \(\mathbf {Q}(\tau )\) has the following properties:

(1) \(\frac{d}{d\tau }\mathbf {Q}(0) = -\mathbf {A}\mathbf {Q}_k\), (2) \(\mathbf {Q}(\tau )\) is smooth in \(\tau \), (3) \(\mathbf {Q}(0)= \mathbf {Q}_k\), (4) \(\mathbf {Q}(\tau )^\top \mathbf {Q}(\tau ) = \mathbf {I}\).
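Here is a minimal sketch of the Cayley-transform curve. It assumes Eq. (29) has the standard form \(\mathbf {Q}(\tau )=(\mathbf {I}+\frac{\tau }{2}\mathbf {A})^{-1}(\mathbf {I}-\frac{\tau }{2}\mathbf {A})\mathbf {Q}_k\) of Wen and Yin (2013), which is consistent with properties (1) to (4) and with the expansion used in Lemma 3. The random `P` is only a stand-in for the gradient. A linear solve replaces the explicit inverse.

```python
import numpy as np

def cayley_curve(Qk, P, tau):
    """Q(tau) = (I + tau/2 A)^{-1} (I - tau/2 A) Q_k with A = P Q_k^T - Q_k P^T.

    Assumes Eq. (29) has the standard form of Wen and Yin (2013)."""
    n = Qk.shape[0]
    A = P @ Qk.T - Qk @ P.T                  # skew-symmetric, so I + tau/2 A is invertible
    I = np.eye(n)
    return np.linalg.solve(I + 0.5 * tau * A, (I - 0.5 * tau * A) @ Qk)

rng = np.random.default_rng(0)
n, r = 6, 4
Qk, _ = np.linalg.qr(rng.standard_normal((n, r)))   # column-orthonormal start point
P = rng.standard_normal((n, r))                     # stand-in for the gradient

for tau in (0.0, 0.5, 3.0):
    Qt = cayley_curve(Qk, P, tau)
    print(tau, np.allclose(Qt.T @ Qt, np.eye(r)))   # property (4): stays on the manifold
```

The test of property (1) would compare a central finite difference of `cayley_curve` at \(\tau =0\) with \(-\mathbf {A}\mathbf {Q}_k\).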

Wen and Yin (2013) show that if \(\tau \) lies in a proper range, \(\mathbf {Q}(\tau )\) attains a lower objective function value than \(\mathbf {Q}(0)\) on the Stiefel manifold. In summary, solving the problem \(\mathbf {Q} = \mathop {\hbox {argmin}}\limits _{\mathbf {Q}^\top \mathbf {Q} = \mathbf {I}} \Vert \mathcal {X}\Vert _{Q,*}\) consists of two steps: (1) find a proper \(\tau ^*\) that decreases the objective function \(f(\mathbf {Q}(\tau )) = \Vert \mathcal {X}\Vert _{Q(\tau ),*}\); (2) update \(\mathbf {Q}_{k+1}\) by Eq. (29), i.e., \(\mathbf {Q}_{k+1} = \mathbf {Q}(\tau ^*)\).

3.2.2 Details of how to estimate \(\tau ^*\) and update \(\mathbf {Q}_k\)

(1) We first compute the gradient of the objective function \(f(\mathbf {Q}) = \Vert \mathcal {X}\Vert _{Q,*}\) w.r.t. \(\mathbf {Q}\) at \(\mathbf {Q}_k\). According to the chain rule, we get the following:

Note that \(\mathcal {G} = \mathcal {X}\times _3 \mathbf {Q}\) and \(\mathbf {G}_{(3)} = \mathbf {X}_{(3)} \mathbf {Q}\), where \(\mathbf {G}_{(3)}\) and \(\mathbf {X}_{(3)}\) are the mode-3 unfolding matrices. Hence \(\frac{\partial \mathbf {G}_{(3)}}{\partial \mathbf {Q}} = \mathbf {X}_{(3)}^\top \), in the sense that \(\mathrm {d}\mathbf {G}_{(3)} = \mathbf {X}_{(3)}\,\mathrm {d}\mathbf {Q}\). Additionally, Eq. (28) shows that \(f(\mathbf {Q}) = \sum _{i=1}^{n_3} \Vert \mathbf {G}^{(i)} \Vert _*\), where \(\mathbf {G}^{(i)}\) are the frontal slices of \(\mathcal {G}\). Let \(\mathbf {H}^{(i)} = \mathbf {U}^{(i)} \mathbf {V}^{(i)\top }\), where \(\mathbf {H}^{(i)}\) denotes the ith frontal slice of \(\mathcal {H}\). Here \(\mathbf {U}^{(i)}\) and \(\mathbf {V}^{(i)}\) denote the left and right singular matrices of \(\mathbf {G}^{(i)}\) from the skinny SVD (Petersen and Pedersen 2008). Therefore, \(\mathcal {H} = \frac{\partial f(\mathbf {Q})}{\partial \mathcal {G}}\) is obtained slice by slice from the singular value decomposition, exactly as in the matrix case (footnote 5).

In summary, the gradient of the objective function \(f(\mathbf {Q})\) w.r.t. \(\mathbf {Q}\) at \(\mathbf {Q}_k\) (denoted by \(\mathbf {P}\)) can be written as follows:

where \(\mathbf {X}_{(3)}\) and \(\mathbf {H}_{(3)}\) are the mode-3 unfolding matrices of \(\mathcal {X}\) and \(\mathcal {H}\), respectively.
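The gradient formula can be validated against finite differences. The sketch assumes NumPy, and `f_and_grad` is a hypothetical helper. Each \(\mathbf {H}^{(i)}\) is \(\mathbf {U}^{(i)}\mathbf {V}^{(i)\top }\) from the skinny SVD of \(\mathbf {G}^{(i)}\) (with the transpose on \(\mathbf {V}^{(i)}\)). This is the gradient of the nuclear norm when \(\mathbf {G}^{(i)}\) has full rank, and only a subgradient otherwise. The code then forms \(\mathbf {P}=\mathbf {X}_{(3)}^\top \mathbf {H}_{(3)}\).

```python
import numpy as np

def f_and_grad(X, Q):
    """Hypothetical helper: f(Q) = sum_i ||G^(i)||_* with G = X x_3 Q,
    and P = X_(3)^T H_(3) with H^(i) = U^(i) V^(i)^T (skinny SVD)."""
    n1, n2, n3 = X.shape
    G = np.tensordot(X, Q, axes=([2], [0]))
    H = np.empty_like(G)
    f = 0.0
    for i in range(G.shape[2]):
        U, s, Vt = np.linalg.svd(G[:, :, i], full_matrices=False)
        f += s.sum()
        H[:, :, i] = U @ Vt
    P = X.reshape(n1 * n2, n3).T @ H.reshape(n1 * n2, -1)
    return f, P

rng = np.random.default_rng(4)
X = rng.standard_normal((5, 4, 6))
Q, _ = np.linalg.qr(rng.standard_normal((6, 6)))
f0, P = f_and_grad(X, Q)

# A central finite difference along a random direction D should match <P, D>.
D = rng.standard_normal(Q.shape)
h = 1e-6
fd = (f_and_grad(X, Q + h * D)[0] - f_and_grad(X, Q - h * D)[0]) / (2 * h)
print(fd, np.sum(P * D))
```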

(2) We then construct a curve on the Stiefel manifold along the descent direction by Eq. (29):

We consider the following problem for finding a proper \(\tau \):

where \(\varepsilon \) is a given parameter that keeps \(\tau ^*\) small enough and ensures \(\Vert \frac{\tau }{2}\mathbf {A}\Vert < 1\). We can then expand \(g(\tau ) = f(\mathbf {Q}(\tau ))\) using the Neumann series \(\left( \mathbf {I} + \frac{\tau }{2} \mathbf {A}\right) ^{-1} = \mathbf {I} + \sum _{l=1}^{\infty }\left( -\frac{\tau }{2}\mathbf {A} \right) ^l\) and obtain the following:

Given that \(\tau ^*\) is small enough, we can approximate \(g(\tau )\) via its second order Taylor expansion at \(\tau =0\), i.e., \(g(\tau ) = g(0) + g'(0)\cdot \tau + \frac{1}{2} g''(0)\cdot \tau ^2 + \mathcal {O}(\tau ^3)\). It should be mentioned that since \(f(\mathbf {Q})\) is non-convex w.r.t. \(\mathbf {Q}\), the sign of \(g''(0)\) is uncertain. However, Wen and Yin (2013) point out that \(g'(0)=-\frac{1}{2}\Vert \mathbf {A}\Vert _F^2\) always holds. Thus we can estimate an optimal solution \(\tau ^*\) via:

The following lemma gives these derivatives. The calculation is deferred to “Appendix D”.

Lemma 3

Let \(g(\tau ) = f(\mathbf {Q}(\tau )) = \Vert \mathcal {X}\Vert _{Q(\tau ),*}\) and \(\mathbf {Q}(\tau ) \approx \left( \mathbf {I} - \tau \mathbf {A}+ \frac{\tau ^2}{2}\mathbf {A}^2 \right) \mathbf {Q}_k \), where \(\mathbf {A}\) is defined in Eq. (32). Then the first and the second order derivatives of \(g(\tau )\) evaluated at 0 can be estimated as follows:

where \(\mathbf {X}_{(3)}\) and \(\mathbf {H}_{(3)}\) are defined as in Eq. (31).

By using Eq. (35) and Lemma 3, we can obtain the optimal step size \(\tau ^*\) and then use Eq. (32) to update \(\mathbf {Q}_{k+1} = \mathbf {Q}(\tau ^*)\). Algorithm 1 organizes the whole calculation process.
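Eq. (35) and the closed forms of Lemma 3 are not reproduced here, so the following sketch of one \(\mathbf {Q}\)-update rests on stated assumptions:

- \(g'(0)=-\frac{1}{2}\Vert \mathbf {A}\Vert _F^2\), as in Wen and Yin (2013).
- \(g''(0)\) is replaced by a finite-difference estimate, which is a substitute for Lemma 3 and not the paper's formula.
- \(\tau ^*=\min \{-g'(0)/g''(0),\varepsilon \}\) when \(g''(0)>0\), and \(\tau ^*=\varepsilon \) otherwise. This is our reading of the quadratic model.
- A halving safeguard is added that is not part of the original method.

```python
import numpy as np

def f_and_grad(X, Q):
    """f(Q) = sum_i ||G^(i)||_*, G = X x_3 Q, and gradient P = X_(3)^T H_(3)."""
    n1, n2, n3 = X.shape
    G = np.tensordot(X, Q, axes=([2], [0]))
    H = np.empty_like(G)
    f = 0.0
    for i in range(G.shape[2]):
        U, s, Vt = np.linalg.svd(G[:, :, i], full_matrices=False)
        f += s.sum()
        H[:, :, i] = U @ Vt
    return f, X.reshape(n1 * n2, n3).T @ H.reshape(n1 * n2, -1)

def cayley_curve(Qk, A, tau):
    """Q(tau) = (I + tau/2 A)^{-1} (I - tau/2 A) Q_k."""
    I = np.eye(Qk.shape[0])
    return np.linalg.solve(I + 0.5 * tau * A, (I - 0.5 * tau * A) @ Qk)

def motqn_q_step(X, Qk, eps=1e-2, h=1e-4, max_halvings=30):
    """One hypothetical Q-update: estimate tau* from a quadratic model of g(tau)."""
    g0, P = f_and_grad(X, Qk)
    A = P @ Qk.T - Qk @ P.T
    dg0 = -0.5 * np.sum(A * A)                  # g'(0) = -||A||_F^2 / 2
    gh = f_and_grad(X, cayley_curve(Qk, A, h))[0]
    d2g0 = 2.0 * (gh - g0 - dg0 * h) / h ** 2   # finite-difference stand-in for Lemma 3
    tau = min(-dg0 / d2g0, eps) if d2g0 > 0 else eps
    for _ in range(max_halvings):               # safeguard: halve tau until g decreases
        Q_new = cayley_curve(Qk, A, tau)
        g_new = f_and_grad(X, Q_new)[0]
        if g_new <= g0:
            return Q_new, g_new, tau
        tau *= 0.5
    return Qk, g0, 0.0

rng = np.random.default_rng(5)
X = rng.standard_normal((8, 8, 5))
Q, _ = np.linalg.qr(rng.standard_normal((5, 5)))
values = [f_and_grad(X, Q)[0]]
for _ in range(20):
    Q, g, tau = motqn_q_step(X, Q)
    values.append(g)
print(values[0], values[-1])
```

Because each step is a Cayley transformation, \(\mathbf {Q}\) remains orthogonal up to rounding error throughout.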

Returning to the bilevel low rank tensor recovery model (26), the lower-level problem (28) is solved iteratively by Algorithm 1. Once \(\mathbf {Q}_{k+1}\) is fixed, the upper-level problem is convex in \(\mathcal {X}\) and can be solved easily.

3.3 Applicability of VMTQN and MOTQN

In Sect. 3.2 (MOTQN), we require \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\) to be a square matrix, whereas Sect. 3.1 (VMTQN) does not. In this section, we start from this difference and analyze how the size of \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) affects the applicability of the two methods.

3.3.1 Case 1: \(r = n_1n_2 \ll n_3\)

In this case, the VMTQN model in Eq. (22) is usually more computationally efficient than the other methods, including MOTQN and other works (Zhang and Aeron 2017; Xu et al. 2019; Song et al. 2019; Lu et al. 2019; Jiang et al. 2020). As shown in Sect. 3.1, VMTQN only needs the skinny right singular matrix \(\mathbf {V}\) of the unfolding matrix \(\mathbf {X}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\). If \(r < n_3\), the computational cost stays moderate. In addition, \(\mathbf {Q}\) plays the role of feature selection, as in Principal Component Analysis, which motivates the notation \(\mathbf {Q} = {\text {PCA}}(\mathcal {X},3,r)\).

In contrast, MOTQN and the methods of Zhang and Aeron (2017), Xu et al. (2019), Song et al. (2019), and Lu et al. (2019) usually require a square factor matrix \(\mathbf {Q}\). Jiang et al. (2020) even require the columns of \(\mathbf {Q}\) to be redundant.

3.3.2 Case 2: \(n_1n_2 > n_3 = r\), possibly of the same order of magnitude

In this case, the MOTQN model in Eq. (26) has the best explainability and rationality. On the one hand, with a \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\) of the same size, MOTQN minimizes the tensor Q-nuclear norm directly, which matches the definition of the low rank structure. On the other hand, thanks to the algorithm of Wen and Yin (2013), the optimization of the MOTQN model has a good convergence guarantee.

4 Applications to tensor completion

4.1 Low rank tensor completion model

In the third-order tensor completion task, \(\varOmega \) is the index set of the observed entries \(\{(i,j,k)\}\). The operator \(\varPsi \) in Eqs. (21) and (22) is replaced by an orthogonal projection operator \(\mathcal {P}_\varOmega \), where \(\mathcal {P}_\varOmega (\mathcal {X}_{ijk}) = \mathcal {X}_{ijk}\) if \((i,j,k)\in \varOmega \) and 0 otherwise. The observed tensor \(\mathcal {Y}\) satisfies \(\mathcal {Y} = \mathcal {P}_\varOmega (\mathcal {Y})\). The tensor completion models based on our two approaches are then given by:

and

where \(\mathcal {X}\) is the tensor with low rank structure. In Eq. (37), \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) is a column orthonormal matrix with \(r = \min \{n_1 n_2, n_3\}\), while in Eq. (38), \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\) is a square orthogonal matrix. To solve these models with an ADMM-based method (Lu et al. 2017), we introduce an intermediate tensor \(\mathcal {E}\) to separate \(\mathcal {X}\) from \(\mathcal {P}_\varOmega (\cdot )\). Let \(\mathcal {E} = \mathcal {P}_\varOmega (\mathcal {X}) - \mathcal {X}\). The constraint \(\mathcal {P}_\varOmega (\mathcal {X}) = \mathcal {Y}\) then becomes \(\mathcal {X} + \mathcal {E} = \mathcal {Y},\ \mathcal {P}_{\varOmega }(\mathcal {E}) = \mathcal {O}\), where \(\mathcal {O}\) is the all-zero tensor. We then obtain the following two models:

and

Note that in Eq. (40), the constraint \(\mathbf {Q} = \mathop {\hbox {argmin}}\limits _{\mathbf {Q}^\top \mathbf {Q} = \mathbf {I}} \Vert \mathcal {X}\Vert _{Q,*}\) minimizes the same quantity as the objective function, so it could be omitted. Nevertheless, we keep this constraint here for two reasons: it keeps Eqs. (39) and (40) in a unified form, and it expresses the dependence of \(\mathbf {Q}\) on \(\mathcal {X}\) explicitly.

4.2 Optimization algorithm

Since \(\mathbf {Q}\) depends on \(\mathcal {X}\), it is difficult to solve models (39) and (40) w.r.t. \(\{\mathcal {X},\mathbf {Q}\}\) jointly. We therefore adopt alternating minimization over \(\mathcal {X}\) and \(\mathbf {Q}\). \(\mathbf {Q}\) is updated in a separate sub-step once every K iterations, and \(\mathcal {X}\) is updated with \(\mathbf {Q}\) fixed by the ADMM method (Lu et al. 2017, 2018). The partial augmented Lagrangian function of Eqs. (39) and (40) is

where \(\mathcal {Z}\) is the dual variable and \(\mu > 0\) is the penalty parameter. We then update \(\mathbf {Q}\), \(\mathcal {X}\), \(\mathcal {E}\), and \(\mathcal {Z}\) alternately. Algorithms 2 and 3 give the details of the optimization methods for Eqs. (39) and (40). To improve efficiency and stabilize convergence, we heuristically introduce a parameter K that controls how often \(\mathbf {Q}\) is updated. The different effects of K on the two models are explained in Sects. 4.3 and 4.4, respectively.
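Below is a compact, hedged ADMM sketch of the VMTQN completion model (39). It makes four assumptions, and the exact sign conventions and stopping rule of Algorithm 2 may differ:

- The partial augmented Lagrangian is \(\Vert \mathcal {X}\Vert _{Q,*}+\langle \mathcal {Z},\mathcal {Y}-\mathcal {X}-\mathcal {E}\rangle +\frac{\mu }{2}\Vert \mathcal {Y}-\mathcal {X}-\mathcal {E}\Vert _F^2\).
- \(\mathcal {P}_\varOmega (\mathcal {E})=\mathcal {O}\) is kept as a hard constraint.
- The parameter values \(\rho =1.1\), \(\mu _0=10^{-4}\), and \(\mu _{max}=10^{10}\) are those of Sect. 5.
- \(\mathbf {Q}={\text {PCA}}(\mathcal {X},3,r)\) is recomputed every K iterations.

All function names are hypothetical.

```python
import numpy as np

def mode3(T, Q):
    """T x_3 Q in the convention G_(3) = T_(3) Q."""
    return np.tensordot(T, Q, axes=([2], [0]))

def pca_q(X):
    """Right singular vectors of X_(3): an n3 x r matrix, r = min(n1*n2, n3)."""
    n1, n2, n3 = X.shape
    _, _, Vt = np.linalg.svd(X.reshape(n1 * n2, n3), full_matrices=False)
    return Vt.T

def prox_tq(T, Q, lam):
    """argmin_X lam*||X||_{Q,*} + 0.5*||X - T||_F^2 for column-orthonormal Q."""
    G = mode3(T, Q)
    for i in range(G.shape[2]):
        U, s, Vt = np.linalg.svd(G[:, :, i], full_matrices=False)
        G[:, :, i] = (U * np.maximum(s - lam, 0.0)) @ Vt   # singular value thresholding
    T_perp = T - mode3(mode3(T, Q), Q.T)    # component outside span(Q): not penalized
    return mode3(G, Q.T) + T_perp

def complete_vmtqn(Y, mask, K=1, rho=1.1, mu=1e-4, mu_max=1e10, tol=1e-8, max_iter=500):
    """Hypothetical ADMM sketch for model (39); Y is zero outside the mask."""
    X, E, Z = Y.copy(), np.zeros_like(Y), np.zeros_like(Y)
    for k in range(max_iter):
        if k % K == 0:
            Q = pca_q(X)                             # lower-level update every K iterations
        X = prox_tq(Y - E + Z / mu, Q, 1.0 / mu)     # X-step
        E = np.where(mask, 0.0, Y - X + Z / mu)      # E-step with P_Omega(E) = 0
        R = Y - X - E                                # residual of X + E = Y
        Z = Z + mu * R                               # dual ascent
        mu = min(rho * mu, mu_max)
        if np.abs(R).max() < tol:
            break
    return X, Q

rng = np.random.default_rng(6)
M = rng.standard_normal((10, 10, 20))
W = pca_q(M)[:, :3]
X_true = mode3(mode3(M, W), W.T)                     # low tensor Q-rank ground truth
mask = rng.random(X_true.shape) < 0.5
X_hat, Q = complete_vmtqn(X_true * mask, mask)
print(np.linalg.norm(X_hat - X_true) / np.linalg.norm(X_true))
```

\(\mathcal {E}\) vanishes on \(\varOmega \), so the final constraint residual directly measures how well \(\mathcal {X}\) matches the observed entries. Accuracy on the unobserved entries depends on p and the tensor Q-rank, as discussed in Sect. 4.5.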

The sub-step of updating \(\mathcal {X}\) involves the following proximal operator \(\mathbf {Prox}\):

where \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) is a given column orthonormal matrix and \(\Vert \mathcal {X}\Vert _{Q,*}\) is the tensor Q-nuclear norm of \(\mathcal {X}\) which is defined in Eq. (12). Algorithm 3 shows the details of solving this operator.
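The sketch below shows one way to evaluate this proximal operator for a column-orthonormal \(\mathbf {Q}\) with \(r<n_3\). It is our derivation and not necessarily Algorithm 3. \(\Vert \mathcal {X}\Vert _{Q,*}\) depends only on \(\mathcal {X}\times _3\mathbf {Q}\), so the component of the input outside the span of \(\mathbf {Q}\) passes through unchanged. The remaining part is handled by singular value thresholding of each transformed frontal slice. The final check relies on the strong convexity of the prox objective.

```python
import numpy as np

def mode3(T, Q):
    """T x_3 Q in the convention G_(3) = T_(3) Q."""
    return np.tensordot(T, Q, axes=([2], [0]))

def tq_nuclear_norm(X, Q):
    """||X||_{Q,*}: sum of nuclear norms of the frontal slices of X x_3 Q."""
    G = mode3(X, Q)
    return sum(np.linalg.norm(G[:, :, i], "nuc") for i in range(G.shape[2]))

def prox_tq(T, Q, lam):
    """argmin_X lam*||X||_{Q,*} + 0.5*||X - T||_F^2 for column-orthonormal Q."""
    G = mode3(T, Q)
    for i in range(G.shape[2]):
        U, s, Vt = np.linalg.svd(G[:, :, i], full_matrices=False)
        G[:, :, i] = (U * np.maximum(s - lam, 0.0)) @ Vt   # shrink singular values
    T_perp = T - mode3(mode3(T, Q), Q.T)                   # unpenalized component
    return mode3(G, Q.T) + T_perp

rng = np.random.default_rng(7)
T = rng.standard_normal((6, 5, 8))
Q, _ = np.linalg.qr(rng.standard_normal((8, 3)))   # n3 = 8, r = 3 < n3
lam = 0.5
X = prox_tq(T, Q, lam)

def objective(Z):
    return lam * tq_nuclear_norm(Z, Q) + 0.5 * np.sum((Z - T) ** 2)

# X should beat random nearby points, since the prox objective is strongly convex.
print(all(objective(X) <= objective(X + 1e-3 * rng.standard_normal(T.shape))
          for _ in range(20)))
```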

4.3 Convergence analysis

4.3.1 VMTQN model

For models (37) and (39), it is hard to analyze the convergence of the corresponding optimization method directly. The constraint on \(\mathbf {Q}\) is non-linear and the objective function is essentially non-convex w.r.t. \(\mathbf {Q}\), which makes the analysis more difficult. However, the results of Lu et al. (2017), Lin et al. (2015), Xu and Yin (2015), Lin et al. (2011), and Absil et al. (2009) guarantee convergence to some extent.

In practice, we can keep \(\mathbf {Q}_k=\mathbf {Q}\) fixed for K iterations at a time and solve a convex problem w.r.t. \(\mathcal {X}\). As long as \(\mathcal {X}\) converges, the following Lemma 4 shows that the change of \(\mathbf {Q}\) is bounded.

Lemma 4

(Petersen and Pedersen 2008) Given a matrix \(\mathbf {X}\) with Singular Value Decomposition \(\mathbf {X} = \mathbf {U}\mathbf {\Sigma }\mathbf {V}^\top \), let \(\mathbf {v}_i\) denote the ith column of \(\mathbf {V}\) and \(\sigma _j\) the jth singular value of \(\mathbf {X}\). Denoting the sub-differential of a variable by \(\partial (\cdot )\), we have the following:

If \(v_{ij}\) represents the jth element of \(\mathbf {v}_i\), then \(\left\| \frac{\partial (v_{ij})}{\partial (\mathbf {X}^\top \mathbf {X})}\right\| _2 < \infty \).

Lemma 4 indicates that, as long as the change of \(\mathcal {X}\) is bounded by the penalty term with proper K and \(\rho \), the change of \(\mathbf {Q}\) is also bounded to some extent. Consequently, \(\mathbf {Q}_k \approx {\text {PCA}}(\mathcal {X}_k,3,r)\) gradually satisfies the constraint as \(k\rightarrow \infty \).

Unfortunately, updating the variable \(\mathbf {Q}_k\) in Eq. (43) requires solving a singular linear system, and the objective norm \(\Vert \mathcal {X}\Vert _{Q,*}\) in Eq. (39) is non-convex w.r.t. \(\mathbf {Q}\). It is therefore difficult to prove that the Lagrangian function in Eq. (41) of Algorithm 2 decreases strictly in each iteration. However, the following theorem shows that the iterations corresponding to Eqs. (45)–(48) converge when \(\mathbf {Q}\) is fixed.

Theorem 4

Given a fixed \(\mathbf {Q}\) in every K iterations, the tensor completion model (39) can be solved effectively by Algorithm 2 with \(\mathbf {Q}_k = \mathbf {Q}\) in Eq. (43), where \(\varPsi \) is replaced by \(\mathcal {P}_\varOmega \). Owing to convexity, the following rigorous convergence guarantees hold.

Let \((\mathcal {X}^*,\mathcal {E}^*,\mathcal {Z}^*)\) be one KKT point of problem (39) with fixed \(\mathbf {Q}\), \(\hat{\mathcal {X}}_K=\frac{\sum _{k=0}^K\frac{1}{\mu _k}\mathcal {X}_{k+1}}{\sum _{k=0}^K\frac{1}{\mu _k}}\), and \(\hat{\mathcal {E}}_K=\frac{\sum _{k=0}^K\frac{1}{\mu _k}\mathcal {E}_{k+1}}{\sum _{k=0}^K\frac{1}{\mu _k}}\), then we have

and

4.3.2 MOTQN model

Unlike the VMTQN model, the MOTQN model has a complete convergence guarantee thanks to Wen and Yin (2013), as mentioned in Sect. 3.3.2. With a proper step size \(\tau ^*\), the update in Eq. (44) strictly decreases the objective function value \(\left\| \mathcal {X} \right\| _{Q,*}\).

Lemma 5

(Lemma 3 of Wen and Yin (2013)) Denote the gradient of the objective function \(f(\mathbf {Q})\) w.r.t. \(\mathbf {Q}\) at \(\mathbf {Q}_k\) by \(\mathbf {P}\) and let \(\mathbf {A} = \mathbf {P}\mathbf {Q}_k^\top - \mathbf {Q}_k\mathbf {P}^\top \) be a skew-symmetric matrix. If we define \(\mathbf {Q}(\tau )\) by Eq. (32), then \(\mathbf {Q}(\tau )\) is a descent curve at \(\tau =0\), that is,

Lemma 5 indicates that, as long as \(\tau \) is small enough, Eq. (44) decreases the value of \(f(\mathbf {Q}(\tau ))\). Since Eq. (41) is a partial augmented Lagrangian function, its value also decreases after Eq. (44). The following theorem therefore ensures the convergence of Algorithm 2:

Theorem 5

Denote the augmented Lagrangian function of low rank tensor recovery model (38) by \(L(\mathbf {Q},\mathcal {X},\mathcal {E},\mathcal {Z},\mu ) \), which is shown as follows:

Then the sequence \(\{\mathbf {Q}_k,\mathcal {X}_k,\mathcal {E}_k,\mathcal {Z}_k, \mu _k\}\) generated in Algorithm 2 with Eq. (44) satisfies the following:

The function value of Eq. (53) decreases monotonically after each iteration as long as \(\mu \ge \sqrt{(\rho +1) C_L}\), where \(\rho \) is defined in Eq. (48) and \(C_L\) is a constant w.r.t. \(\mathcal {X}\). By the monotone bounded convergence theorem, Algorithm 2 is convergent.

4.4 Complexity analysis

The computational complexity of the VMTQN update in Eq. (43) is \(\mathcal {O}\left( r n_1 n_2 n_3 \right) \), where r denotes the number of columns of \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\). The complexity of the MOTQN update in Eq. (44) is \(\mathcal {O}\left( (n_1 n_2+n_3) n_3^2 \right) \). TNN-based methods (Zhang et al. 2014; Zhang and Aeron 2017; Lu et al. 2016, 2018) use the Fourier transform and have a complexity of \(\mathcal {O}\left( n_1 n_2 n_3 \log n_3 \right) \). Hence, if \(r < \log n_3\), VMTQN can be more efficient than the other two methods. Otherwise, a larger K (i.e., less frequent updates of \(\mathbf {Q}\)) should be used to control the overall computational cost.

However, for our two methods as well as for TNN-based methods, the most time-consuming part of each iteration is the SVD operator, corresponding to Eqs. (45)–(48). In this part, the VMTQN-based method has a complexity of \(\mathcal {O}\left( r n_1 n_2 \min \{n_1,n_2\} \right) \), while MOTQN and TNN-based methods have a complexity of \(\mathcal {O}\left( n_3 n_1 n_2 \min \{n_1,n_2\} \right) \). That is, as long as \(r \ll n_3\), the VMTQN-based method is usually more efficient than the other two.
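The leading-order counts above can be compared numerically. As an illustration, they are evaluated here on the \(32\times 32\times 10{,}000\) CIFAR-10 tensor of Sect. 5.2.2 with \(r=\min \{n_1n_2,n_3\}=1024\). Constants are ignored and a base-2 logarithm is assumed for the FFT term, so only ratios are meaningful.

```python
import math

def per_iteration_costs(n1, n2, n3, r):
    """Leading-order operation counts from Sect. 4.4 (constants ignored)."""
    return {
        "VMTQN Q-update": r * n1 * n2 * n3,
        "MOTQN Q-update": (n1 * n2 + n3) * n3 ** 2,
        "TNN transform": n1 * n2 * n3 * math.log2(n3),
        "VMTQN slice SVDs": r * n1 * n2 * min(n1, n2),
        "MOTQN/TNN slice SVDs": n3 * n1 * n2 * min(n1, n2),
    }

# CIFAR-10 tensor of Sect. 5.2.2: 32 x 32 x 10,000, r = min(n1*n2, n3) = 1024.
costs = per_iteration_costs(32, 32, 10_000, 1024)
for name, c in costs.items():
    print(f"{name:>22}: {c:.2e}")
```

In this setting, the slice-SVD part favors VMTQN by a factor of \(n_3/r\approx 9.8\). The \(\mathbf {Q}\)-update itself costs more than the FFT because \(r > \log n_3\). This is the situation in which a larger K pays off.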

4.5 Performance analysis

Consider the low rank tensor recovery models in Eqs. (37) and (38), where \(\varOmega \) is the index set of the observed entries \(\{(i,j,k)\}\), and the orthogonal projection operator \(\mathcal {P}_\varOmega \) is defined as \(\mathcal {P}_\varOmega (\mathcal {X}_{ijk}) = \mathcal {X}_{ijk}\) if \((i,j,k)\in \varOmega \) and 0 otherwise. In this part, we discuss the minimum number of observed samples \(|\varOmega |\) needed to recover the ground truth. In fact, the \(\mathbf {Q}^*\) obtained at convergence of Algorithm 2 determines the number of observations needed, since the optimal solution satisfies the KKT conditions under \(\mathbf {Q}^*\). Therefore, we only need to analyze the performance guarantee for a fixed \(\mathbf {Q}\).

With a fixed \(\mathbf {Q}\), the exact tensor completion guarantee for model (13) is shown in Theorem 6. Lu et al. (2019) also have similar conclusions.

Theorem 6

Given a fixed orthogonal matrix \(\mathbf {Q}\in \mathbb {R}^{n_3\times n_3}\) and \(\varOmega \sim Ber(p)\), assume that tensor \(\mathcal {X}\in \mathbb {R}^{n_1\times n_2\times n_3}\) (\(n_1\ge n_2\)) has a low tensor Q-rank structure and \({\text {rank}}_{Q}(\mathcal {X}) = R\). If \(|\varOmega | \ge \mathcal {O}(\mu Rn_1 \log (n_1 n_3))\), then \(\mathcal {X}\) is the unique solution to Eq. (13) with high probability, where \(\varPsi \) is replaced by \(\mathcal {P}_\varOmega \), and \(\mu \) is the corresponding incoherence parameter (see Supplementary Materials).

Following the proofs of Lu et al. (2019) and Lu et al. (2018), the sampling rate p should be proportional to \(\max \{\Vert \mathcal {P}_{\mathcal {T}}(\mathfrak {e}_{ijk})\Vert _F^2\}\). (The projection operator \(\mathcal {P}_{\mathcal {T}}\) and \(\mathfrak {e}_{ijk}\) are defined in Lu et al. (2016) and Lu et al. (2018) and in the Supplementary Materials, where \(\mathcal {T}\) is the singular space of the ground truth.) The projection of \(\mathfrak {e}_{ijk}\) onto the subspace \(\mathcal {T}\) is strongly influenced by the dimension of \(\mathcal {T}\). Obviously, when \(\mathcal {T}_Q\) is the whole space, \(\left\| \mathcal {P}_{\mathcal {T}_Q}(\mathfrak {e}_{ijk})\right\| _F^2 = 1\). In other words, a small dimension of \(\mathcal {T}_Q\) may lead to a small \(\max _{ijk}\left\{ \left\| \mathcal {P}_{\mathcal {T}_Q}(\mathfrak {e}_{ijk})\right\| _F^2\right\} \).
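The dependence on the dimension of \(\mathcal {T}_Q\) can be illustrated by brute force on a tiny tensor. The sketch assumes, as in the Fourier case of TNN, that \(\mathcal {T}_Q\) is the direct sum of the matrix tangent spaces \(\{\mathbf {U}_l\mathbf {A}^\top +\mathbf {B}\mathbf {V}_l^\top \}\) of the frontal slices in the transformed domain. It also assumes a square orthogonal \(\mathbf {Q}\). The nested random subspaces are hypothetical. Larger per-slice ranks give a larger maximum. Once each slice tangent space is the whole matrix space (here as soon as \(\mathbf {V}_l\) spans \(\mathbb {R}^{n_2}\)), the value reaches 1.

```python
import numpy as np

def mode3(T, Q):
    """T x_3 Q in the convention G_(3) = T_(3) Q."""
    return np.tensordot(T, Q, axes=([2], [0]))

def proj_T_matrix(M, U, V):
    """Projection onto the matrix tangent space {U A^T + B V^T}."""
    PU, PV = U @ U.T, V @ V.T
    return PU @ M + M @ PV - PU @ M @ PV

def max_coherence(Q, Us, Vs, shape):
    """max_{ijk} ||P_{T_Q}(e_ijk)||_F^2 by brute force over all unit tensors."""
    n3 = shape[2]
    best = 0.0
    for idx in np.ndindex(*shape):
        E = np.zeros(shape)
        E[idx] = 1.0
        G = mode3(E, Q)                                  # move to the Q-domain
        PG = np.stack([proj_T_matrix(G[:, :, l], Us[l], Vs[l]) for l in range(n3)], axis=2)
        best = max(best, np.sum(mode3(PG, Q.T) ** 2))    # Q orthogonal: norm preserved
    return best

rng = np.random.default_rng(8)
shape = (6, 5, 4)
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)))
Ub, _ = np.linalg.qr(rng.standard_normal((6, 6)))
Vb, _ = np.linalg.qr(rng.standard_normal((5, 5)))

for R in (1, 3, 5):                                      # per-slice rank
    # Hypothetical nested subspaces: first R columns of fixed random bases.
    value = max_coherence(Q, [Ub[:, :R]] * 4, [Vb[:, :R]] * 4, shape)
    print(R, round(value, 4))
```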

Proposition 15 in Lu et al. (2018) also implies that, for any \(\varDelta \in \mathcal {T}\), we need \(\mathcal {P}_\varOmega (\varDelta ) = 0 \Leftrightarrow \varDelta = 0\). These two conditions indicate that when the dimension of \(\mathcal {T}\) is large (i.e., \({\text {rank}}_{Q}(\mathcal {X}) = R\) is large), a larger sampling rate p is needed. Fig. 3 in Lu et al. (2018) supports this deduction experimentally.

In fact, the smoothness of the data along the third dimension strongly affects the degrees of freedom (DoF) of the space \(\mathcal {T}\). Non-smooth changes along the third dimension are likely to increase the dimension of \(\mathcal {T}\) under the Fourier basis, which makes TNN-based methods ineffective. Our experiments on CIFAR-10 (Table 1) confirm this conclusion.

For models (39) and (40) with adaptive \(\mathbf {Q}\), our motivation is to find a better \(\mathbf {Q}\) that makes \({\text {rank}}_{Q}(\mathcal {X}) = R\) smaller and the dimension of the corresponding \(\mathcal {T}_Q\) as small as possible, where \(\mathcal {T}_Q\) is the singular space of the ground truth under \(\mathbf {Q}\). In other words, consider more complex data that are non-smooth along the third dimension. For such data, the adaptive \(\mathbf {Q}\) may reduce the dimension of \(\mathcal {T}_Q\) and make \(\max \{\Vert \mathcal {P}_{\mathcal {T}_Q}(\mathfrak {e}_{ijk})\Vert _F^2\}\) smaller than \(\max \{\Vert \mathcal {P}_{\mathcal {T}}(\mathfrak {e}_{ijk})\Vert _F^2\}\), thereby lowering the required sampling rate p.

5 Experiments

In this section, we conduct numerical experiments to evaluate our proposed models (39) and (40). The platform is Matlab R2018b under Windows 10 on a PC with an Intel i5-7500 CPU and 16 GB of memory. For most compared methods, we use the authors' released code. For methods without released code, we reimplemented them in Matlab R2018b, strictly following the algorithms in their respective papers.

Assume that the observed corrupted tensor is \(\mathcal {Y}\), and the true tensor is \(\mathcal {X}_0 \in \mathbb {R}^{n_1\times n_2\times n_3}\). We represent the recovered tensor (output of the algorithms) as \(\mathcal {X}\), and use Peak Signal-to-Noise Ratio (PSNR) to measure the reconstruction error:
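The PSNR formula did not survive extraction. A commonly used definition in the tensor completion literature is \(\text {PSNR}=10\log _{10}\big (n_1n_2n_3\Vert \mathcal {X}_0\Vert _\infty ^2/\Vert \mathcal {X}-\mathcal {X}_0\Vert _F^2\big )\). It is assumed here and may differ from the paper's exact normalization:

```python
import numpy as np

def psnr(X, X0):
    """PSNR in dB with peak ||X0||_inf and the mean squared error over all entries
    (an assumed, commonly used definition)."""
    mse = np.mean((X - X0) ** 2)
    if mse == 0:
        return np.inf
    return 10.0 * np.log10(np.max(np.abs(X0)) ** 2 / mse)

X0 = np.ones((4, 4, 3))
print(psnr(X0 + 0.1, X0))   # an error of 0.1 on a peak of 1 gives about 20 dB
```

Under this definition, the “exact” recovery threshold \(\text {PSNR}>200\) used later corresponds to a root-mean-square error below \(10^{-10}\) times the peak value.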

5.1 Synthetic experiments

In this part we compare our proposed methods (named VMTQN model and MOTQN model) with the mainstream algorithm TNN (Zhang et al. 2014; Lu et al. 2018).

We examine the completion task with varying tensor Q-rank of \(\mathcal {Y}\) and varying sampling rate p. First, we generate a random tensor \(\mathcal {M}\in \mathbb {R}^{50\times 50\times 50}\) whose entries are independently sampled from an \(\mathcal {N}(0,1/50)\) distribution. Data generated in this way are usually non-smooth along every dimension. Then we choose p in [0.01 : 0.02 : 0.99] and r in [1 : 1 : 50], and set the column orthonormal matrix \(\mathbf {W}\in \mathbb {R}^{50\times r}\) to \(\mathbf {W} = {\text {PCA}}(\mathcal {M},3,r)\). We let \(\mathcal {Y} = \mathcal {M}\times _3 \mathbf {W}\times _3 \mathbf {W}^\top \) be the true tensor. Next, we create the index set \(\varOmega \) by using a Bernoulli model to randomly sample a subset of \(\{1,\ldots ,50\}\times \{1,\ldots ,50\}\times \{1,\ldots ,50\}\). The sampling rate is \(p = |\varOmega |/ 50^3\). For each pair (p, r), we run 10 simulations with different random seeds and report the average. For the parameters of the VMTQN and MOTQN models in Algorithm 2, we set \(\rho = 1.1\), \(\mu _0 = 10^{-4}\), \(\mu _{max} = 10^{10}\), and \(\epsilon = 10^{-8}\).
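The sketch below shows how one (p, r) instance of this experiment could be generated. It assumes NumPy, and `make_instance` is hypothetical. \(\mathcal {N}(0,1/50)\) is read as variance 1/50. The mask is drawn entrywise, so \(|\varOmega |/50^3\) only approximates p.

```python
import numpy as np

def pca_q(T, r):
    """Leading r right singular vectors of the mode-3 unfolding T_(3)."""
    n1, n2, n3 = T.shape
    _, _, Vt = np.linalg.svd(T.reshape(n1 * n2, n3), full_matrices=False)
    return Vt[:r].T

def make_instance(r, p, n=50, seed=0):
    """Hypothetical generator for one (p, r) cell of the synthetic experiment."""
    rng = np.random.default_rng(seed)
    # N(0, 1/50) read as variance 1/n, i.e. standard deviation sqrt(1/n) (assumption).
    M = rng.normal(0.0, np.sqrt(1.0 / n), size=(n, n, n))
    W = pca_q(M, r)                                    # W = PCA(M, 3, r), n x r
    MW = np.tensordot(M, W, axes=([2], [0]))           # M x_3 W
    Y = np.tensordot(MW, W.T, axes=([2], [0]))         # (M x_3 W) x_3 W^T
    mask = rng.random((n, n, n)) < p                   # Bernoulli(p) model for Omega
    return Y, mask

p_grid = np.arange(0.01, 1.0, 0.02)                    # [0.01 : 0.02 : 0.99]
r_grid = np.arange(1, 51)                              # [1 : 1 : 50]
Y, mask = make_instance(r=5, p=0.3)
print(np.linalg.matrix_rank(Y.reshape(-1, 50)), mask.mean())
```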

The upper-left regions of the VMTQN and MOTQN panels in Fig. 3 show that Algorithm 2 can effectively solve our proposed recovery models (37) and (38). The larger the tensor Q-rank, the larger the sampling rate p required, which is consistent with the performance analysis in Theorem 6. Comparing the results of the three methods, we find that TNN is highly sensitive to data with non-smooth changes. The results in the left and middle images also support our motivation, suggesting that a better low rank structure leads to better recovery.

5.2 Real-world datasets

In this part, we compare our proposed methods with the following:

- TNN (Lu et al. 2018) with the Fourier matrix
- TTNN (Song et al. 2019) with a wavelet matrix
- TNN-C (Xu et al. 2019) with the cosine matrix
- F-TNN (Jiang et al. 2020) with a framelet matrix
- SiLRTC (Liu et al. 2013)
- TMac (Xu et al. 2017)
- Latent Trace Norm (Tomioka and Suzuki 2013)

We validate our algorithm on three datasets: (1) CIFAR-10 (footnote 6); (2) COIL-20 (footnote 7); (3) HMDB51 (footnote 8). We set \(\rho = 1.1\), \(\mu _0 = 10^{-4}\), \(\mu _{max} = 10^{10}\), \(\epsilon = 10^{-8}\), and \(K=1\) in our methods. For TNN, SiLRTC, TMac, F-TNN, and Latent Trace Norm, we use the default settings of their released code, e.g., Lu et al. (footnote 9) and Tomioka et al. (footnote 10). For TTNN and TNN-C, whose code is unreleased, we implement their algorithms in MATLAB strictly following the corresponding papers.

5.2.1 Influence of \(\mathbf {Q}\)

In line with our motivation, we use a random orthogonal matrix and an Oracle matrix (the matrix of right singular vectors of the ground-truth unfolding matrix) to test the influence of \(\mathbf {Q}\). The results of the TQN models with different orthogonal matrices in Tables 1 and 2, where the best recovery results among the compared methods are marked in bold, show that \(\mathbf {Q}\) plays an important role in tensor recovery. Compared with the random \(\mathbf {Q}\), our Algorithm 2 is effective at finding a better \(\mathbf {Q}\). Table 1 also shows that a proper \(\mathbf {Q}\) can make the ground truth easier to recover. For example, with sampling rate \(p\ge 0.2\) on 10,000 images, an Oracle matrix \(\mathbf {Q}\) leads to an “exact” recovery (\(\text {PSNR} >200\)).
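To make the Random versus Oracle contrast concrete, here is a small sketch (not the paper's Algorithm 2) that counts how many frontal slices of \(\mathcal {T}\times _3 \mathbf {Q}\) carry non-negligible energy. It assumes \(\mathbf {T}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\) with vectorized frontal slices as columns and builds the random orthogonal matrix from a QR factorization of a Gaussian matrix; all function names are hypothetical.

```python
import numpy as np

def mode3_transform(X, Q):
    """X x_3 Q := fold(X_(3) @ Q) (assumed convention)."""
    n1, n2, n3 = X.shape
    return (X.reshape(n1 * n2, n3) @ Q).reshape(n1, n2, Q.shape[1])

def oracle_q(T):
    """Right singular vectors of the ground-truth unfolding T_(3) (n1*n2 x n3)."""
    n1, n2, n3 = T.shape
    _, _, Vt = np.linalg.svd(T.reshape(n1 * n2, n3))
    return Vt.T  # n3 x n3 orthogonal

def random_q(n3, seed=0):
    """Random orthogonal matrix from the QR factorisation of a Gaussian matrix."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((n3, n3)))
    return Q

def active_slices(X, Q, tol=1e-8):
    """Number of frontal slices of X x_3 Q whose Frobenius norm exceeds tol * ||X||_F."""
    Xbar = mode3_transform(X, Q)
    norms = np.linalg.norm(Xbar.reshape(-1, Xbar.shape[2]), axis=0)
    return int(np.sum(norms > tol * np.linalg.norm(X)))
```

For a tensor whose unfolding has rank 4, the Oracle matrix concentrates all the energy in 4 slices, while a random orthogonal matrix spreads it over every slice, so the transformed tensor has a much weaker low tensor Q-rank structure.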

5.2.2 CIFAR-10

We consider the worst case for TNN based methods, in which there is almost no smoothness along the third dimension of the data. We randomly select 3000 and 10,000 images from one batch of CIFAR-10 (Krizhevsky and Hinton 2009) as our true tensors \(\mathcal {Y}_1\in \mathbb {R}^{32\times 32\times 3000}\) and \(\mathcal {Y}_2\in \mathbb {R}^{32\times 32\times 10{,}000}\), respectively. We then solve model (39) with our proposed Algorithm 2; the results are shown in Table 1. Note that in the latter case \(r = n_1n_2 \ll n_3\), so the MOTQN model has high computational complexity, and we do not include it in this comparison.

Table 1 verifies our hypothesis that TNN regularization performs poorly on data with non-smooth change along the third dimension. Our VMTQN method clearly outperforms the other methods at low sampling rates. Moreover, comparing the two groups of experiments, we see that VMTQN, TMac, and SiLRTC perform better on \(\mathcal {Y}_2\). This may be because increasing the data volume makes the principal components more significant. Meanwhile, the methods based on the Fourier, cosine, and wavelet matrices have almost no recovery effect when the sampling rate p is low. This indicates that these fixed projection bases cannot capture the data features when the data have poor continuity and are insufficiently sampled.

The above analyses confirm that our proposed regularizers are data dependent and can lead to a better low rank structure, which makes recovery easier.

5.2.3 Running time on CIFAR

Figure 4 reports the running times of the different models. The two panels indicate that, when \(n_3\gg n_1n_2\), our VMTQN model is more efficient per iteration and more accurate than TNN and SiLRTC. As mentioned in our previous complexity analysis, the VMTQN method has a large speed advantage in this case. Moreover, for the case \(n_3 < n_1n_2\), Fig. 8 suggests that setting \(r<n_1n_2\) can balance computational efficiency and recovery accuracy.
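One way to see where the per-iteration saving comes from: if \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) is taken from the economy SVD of \(\mathbf {X}_{(3)}\) with \(r=\min \{n_1n_2,n_3\}\), then \(\mathcal {X}\times _3 \mathbf {Q}\) has only r frontal slices, so a slice-wise singular value thresholding step needs r small SVDs instead of one per original slice. The sketch below assumes the unfolding convention \(\mathbf {X}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\); the helper names are hypothetical and this is not the paper's Algorithm 2. For a square orthogonal \(\mathbf {Q}\), the step equals the proximal operator of the sum of slice nuclear norms in the transformed domain, because the transform preserves the Frobenius norm.

```python
import numpy as np

def economy_q(X):
    """Q in R^{n3 x r}, r = min(n1*n2, n3), from the economy SVD of X_(3) (assumed choice)."""
    n1, n2, n3 = X.shape
    _, _, Vt = np.linalg.svd(X.reshape(n1 * n2, n3), full_matrices=False)
    return Vt.T

def svt_in_q_domain(X, Q, tau):
    """Shrink the singular values of each frontal slice of X x_3 Q by tau, then map back with Q^T.

    Returns the result and the number of slice SVDs that were performed.
    """
    n1, n2, n3 = X.shape
    r = Q.shape[1]
    Xbar = (X.reshape(n1 * n2, n3) @ Q).reshape(n1, n2, r)
    out = np.empty_like(Xbar)
    for i in range(r):
        U, s, Vt = np.linalg.svd(Xbar[:, :, i], full_matrices=False)
        out[:, :, i] = (U * np.maximum(s - tau, 0.0)) @ Vt  # soft-threshold singular values
    back = (out.reshape(n1 * n2, r) @ Q.T).reshape(n1, n2, n3)
    return back, r
```

For a \(4\times 4\times 100\) tensor, `economy_q` yields a \(100\times 16\) matrix, so each step performs 16 slice SVDs rather than 100.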

5.2.4 COIL-20 and short video from HMDB51

COIL-20 (Nene et al. 1996) contains 1440 images of 20 objects taken from different angles. Each image is resized to \(128\times 128\), so \(\mathcal {Y}\in \mathbb {R}^{128\times 128\times 1440}\). The upper part of Table 2 shows the numerical results. For the video inpainting task, we select a video with a changing background from HMDB51 (Kuehne et al. 2011), where \(\mathcal {Y}\in \mathbb {R}^{240\times 320\times 146}\). Figure 2 shows some frames of this video, and the lower part of Table 2 shows the results. Figures 5, 6 and 7 show the visual results on COIL-20 and the short video from HMDB51.

From the two visual figures we can see that our VMTQN and MOTQN methods perform best among all compared methods. In particular, at sampling rate \(p=0.2\) in Fig. 6, our methods show a significant advantage in visual quality. Moreover, the “Latent Trace Norm” based method performs much better than TNN on COIL-20, which supports our assumption that, with the help of the data-dependent \(\mathbf {V}\), the tensor trace norm is much more robust than TNN on non-smooth data.

Overall, both our methods and the t-SVD based methods (e.g., TNN) perform better than the others (e.g., SiLRTC) on these two datasets. This is mainly because the definitions of tensor singular values in t-SVD based methods make better use of the internal tensor structure; this is also the main difference between the tensor Q-nuclear norm (TQN) and the sum of nuclear norms (SNN).
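The difference between the two surrogates can be seen on a rank-1 tensor \(\mathbf {a}\circ \mathbf {b}\circ \mathbf {c}\). The sketch below computes SNN as the unweighted sum of the nuclear norms of the three unfoldings (SiLRTC uses a weighted version; unit weights are our assumption) and a TQN-style sum of frontal-slice nuclear norms after \(\times _3 \mathbf {Q}\), assuming \(\mathbf {X}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\) and no extra scaling. The function names are hypothetical.

```python
import numpy as np

def unfold(X, mode):
    """Mode-k unfolding: mode-k fibres become columns (column order does not affect singular values)."""
    return np.moveaxis(X, mode, 0).reshape(X.shape[mode], -1)

def snn(X, weights=(1.0, 1.0, 1.0)):
    """Sum of nuclear norms of the three unfoldings (unit weights assumed)."""
    return sum(w * np.linalg.norm(unfold(X, k), ord="nuc") for k, w in enumerate(weights))

def tqn(X, Q):
    """Sum of nuclear norms of the frontal slices of X x_3 Q (assumed TQN form)."""
    n1, n2, n3 = X.shape
    Xbar = (X.reshape(n1 * n2, n3) @ Q).reshape(n1, n2, Q.shape[1])
    return sum(np.linalg.norm(Xbar[:, :, i], ord="nuc") for i in range(Q.shape[1]))
```

For \(\mathcal {X}=\mathbf {a}\circ \mathbf {b}\circ \mathbf {c}\), every unfolding is rank 1, so SNN equals \(3\Vert \mathbf {a}\Vert \Vert \mathbf {b}\Vert \Vert \mathbf {c}\Vert \). The TQN-style value equals \(\Vert \mathbf {a}\Vert \Vert \mathbf {b}\Vert \Vert \mathbf {Q}^\top \mathbf {c}\Vert _1\), which reaches its minimum \(\Vert \mathbf {a}\Vert \Vert \mathbf {b}\Vert \Vert \mathbf {c}\Vert \) when \(\mathbf {Q}\) is adapted to the data (first column parallel to \(\mathbf {c}\)) and is larger for a fixed basis such as the identity.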

Meanwhile, our methods clearly outperform the others at all sampling rates, which reflects the advantage of our data-dependent \(\mathbf {Q}\).

5.2.5 Influence of r in \(\mathbf {Q} \in \mathbb {R}^{n_3\times r}\)

Remarks 2 and 3 imply that r in \(\mathbf {Q}\in \mathbb {R}^{n_3\times r}\) in VMTQN encodes an a priori assumption on the subspace dimension of the ground truth: the dimension of the frontal-slice subspace of the true tensor \(\mathcal {T}\) (i.e., the column subspace of the mode-3 unfolding matrix \(\mathbf {T}_{(3)}\)) is at most r.

Figure 8 illustrates the relations among running time, r, and the singular values of \(\mathbf {T}_{(3)}\). We project the solution \(\mathcal {X}_k\) (in Eq. (45)) onto the subspace of \(\mathbf {Q}_k\), i.e., \(\hat{\mathcal {X}}_k := \mathcal {X}_k\times _3(\mathbf {Q}_k\mathbf {Q}_k^\top )\). Meanwhile, for different r in \(\mathbf {Q} \in \mathbb {R}^{n_3\times r}\), Fig. 9 shows the PSNR of the completion task with varying tensor Q-rank and varying sampling rate. The settings in Fig. 9 are the same as in Sect. 5.1 except for the size of \(\mathbf {Q}\).

As shown in Fig. 8, the dimension of the column subspace of \(\mathbf {T}_{(3)}\) is greater than 360. If \(r\le 360\), the algorithm converges to a poor point whose subspace is only r-dimensional. Therefore, in our previous experiments we usually set \(r=\min \{n_1n_2,n_3\}\) to ensure that r exceeds the subspace dimension of the true tensor. This kind of a priori assumption is common in factorization-based algorithms. Moreover, the running time decreases as r decreases. Although \(r=1440\) needs more time to converge than TNN, it achieves better recovery, whereas a smaller r speeds up the computation at the cost of accuracy.

The results in Fig. 9 intuitively reflect the selection criterion of r in VMTQN: to obtain exact recovery, r should be at least the subspace dimension of the true tensor. By the constraint \(\mathbf {X}\mathbf {Q}\mathbf {Q}^\top = \mathbf {X}\) in Sect. 3.1, if the subspace dimension of the true tensor is larger than r, this constraint can never be satisfied, and there must be a gap between the output of Algorithm 2 and the true tensor; this corresponds to the black areas in the upper right corners of the first two subfigures. The left two subfigures also show that, if the dimension of the true tensor is not greater than r, the recovery performance is consistent with that in the third subfigure. Combining the above analyses, setting \(r=\min \{n_1n_2,n_3\}\) not only improves computational efficiency in some cases, but also keeps the recovery performance of the model in “the white area”, which corresponds to exact recovery.
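The argument about the constraint \(\mathbf {X}\mathbf {Q}\mathbf {Q}^\top = \mathbf {X}\) can be checked numerically. The sketch below uses the best possible width-r matrix \(\mathbf {Q}\) (the top-r right singular vectors of \(\mathbf {T}_{(3)}\), by the Eckart–Young theorem) and reports the relative residual \(\Vert \mathbf {T}_{(3)}-\mathbf {T}_{(3)}\mathbf {Q}\mathbf {Q}^\top \Vert _F/\Vert \mathbf {T}_{(3)}\Vert _F\). It assumes \(\mathbf {T}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\); `projection_residual` is a hypothetical name.

```python
import numpy as np

def projection_residual(T, r):
    """Relative distance between T and T x_3 (Q Q^T), Q = top-r right singular vectors of T_(3).

    Also returns the Eckart-Young prediction sqrt(sum of discarded sigma^2) / ||T||_F.
    """
    n1, n2, n3 = T.shape
    T3 = T.reshape(n1 * n2, n3)
    _, s, Vt = np.linalg.svd(T3, full_matrices=False)
    Q = Vt[:r].T
    residual = np.linalg.norm(T3 - T3 @ Q @ Q.T) / np.linalg.norm(T3)
    tail = np.sqrt(np.sum(s[r:] ** 2)) / np.linalg.norm(s)
    return residual, tail
```

For a tensor whose unfolding has rank 6, the residual vanishes (up to round-off) for every r of 6 or more, and for r = 3 it equals the energy in the discarded singular values, so no width-3 matrix \(\mathbf {Q}\) can satisfy the constraint.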

5.3 Smooth data experiments

To verify the effectiveness of our proposed methods on smooth data, we conduct experiments on a video from HMDB51 whose background remains unchanged. Figure 10 shows the PSNR and visualization results of the video inpainting tasks. Here we compare only with the TNN based method (Lu et al. 2018), since in recent years TNN has been regarded as a benchmark for such smooth data. The results in Fig. 10 show that the VMTQN method performs best and that, as the sampling rate p increases, the MOTQN method outperforms the TNN based method, which means our proposed methods remain competitive on smooth data.
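Recovery quality in this section is reported as PSNR. A minimal sketch of the standard definition \(10\log _{10}(\text {peak}^2/\text {MSE})\), assuming intensities scaled so that the peak value is 1 (the exact peak convention used in the experiments is not stated in this section); `psnr` is a hypothetical helper. Under this convention, the “exact” recovery threshold \(\text {PSNR}>200\) corresponds to a mean squared error below \(10^{-20}\).

```python
import numpy as np

def psnr(estimate, truth, peak=1.0):
    """Peak signal-to-noise ratio in dB; returns inf when the two arrays are identical."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(truth, dtype=float)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)
```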

6 Conclusions

We analyze the advantages and limitations of the current mainstream low rank regularizers, and then introduce a new definition of data dependent tensor rank named tensor Q-rank. To obtain a more significant low rank structure w.r.t. \(\text {rank}_Q\), we further introduce two explainable selection methods of \(\mathbf {Q}\) and make \(\mathbf {Q}\) a learnable variable w.r.t. the data. Specifically, maximizing the variance of the singular value distribution leads to VMTQN, while minimizing the value of the nuclear norm through manifold optimization leads to MOTQN. We provide an envelope of our rank function and apply it to the tensor completion problem. By analyzing the proof of the exact recovery theorem, we explain why our method may perform better than TNN based methods on non-smooth data (along the third dimension) with low sampling rates, and we conduct experiments to verify our conclusions.

Notes

Please see Kolda and Bader (2009) or our supplementary materials for more details.

For the matrix case, the nuclear norm is the conjugate of the conjugate function (the biconjugate) of the rank function on the unit ball of the spectral norm, i.e., its convex envelope there. However, it is still unknown whether this property holds for cTNN and CP-rank.

Applying the Fourier transform along the third dimension of \(\mathcal {T}\) is equivalent to multiplying by the DFT matrix \(\mathbf {F}\) via \(\times _3\). For more details, please see Sect. 2.2.
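A quick numerical check of this equivalence, assuming \(\mathcal {T}\times _3 \mathbf {F} = \text {fold}(\mathbf {T}_{(3)}\mathbf {F})\) with \(\mathbf {T}_{(3)}\in \mathbb {R}^{n_1n_2\times n_3}\) (since \(\mathbf {F}\) is symmetric, the transposed convention gives the same result). The helper names are hypothetical; `np.fft.fft` is NumPy's standard FFT.

```python
import numpy as np

def dft_matrix(n):
    """DFT matrix with F[m, k] = exp(-2*pi*i*m*k/n); F is symmetric and F^H F = n I."""
    idx = np.arange(n)
    return np.exp(-2j * np.pi * np.outer(idx, idx) / n)

def fft_mode3_via_product(X):
    """Compute T x_3 F by an explicit matrix product on the mode-3 unfolding."""
    n1, n2, n3 = X.shape
    F = dft_matrix(n3)
    return (X.reshape(n1 * n2, n3) @ F).reshape(n1, n2, n3)
```

The explicit product costs \(O(n_1n_2n_3^2)\) operations, whereas the FFT computes the same tensor in \(O(n_1n_2n_3\log n_3)\), which is why t-SVD implementations use the FFT rather than forming \(\mathbf {F}\).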

Notice that minimizing \(\sum _{i=1}^{n} a_i\) in Lemma 1 can be seen as minimizing a linear function over the part of the Euclidean sphere that lies in the nonnegative orthant: \(\{(a_1,\ldots ,a_n)\mid \sum _{i=1}^{n} a_i^2 = C, a_i\ge 0\}\). The optimal level hyperplane \(\sum _{i=1}^{n} a_i = \sqrt{C}\) touches this surface exactly at its intersections with the coordinate axes, i.e., at points with only one nonzero coordinate (so more variables are close to 0).
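A short worked inequality makes this geometric statement explicit. For \(a_i\ge 0\) with \(\sum _{i=1}^{n} a_i^2 = C>0\),

\[
\Big(\sum_{i=1}^{n} a_i\Big)^2 \;=\; \sum_{i=1}^{n} a_i^2 + 2\sum_{i<j} a_i a_j \;\ge\; C ,
\qquad\text{hence}\qquad
\sqrt{C}\;\le\; \sum_{i=1}^{n} a_i \;\le\; \sqrt{nC},
\]

where the upper bound follows from the Cauchy–Schwarz inequality. Since every cross term \(a_ia_j\) is nonnegative, the lower bound is attained exactly when all cross terms vanish, i.e., when only one coordinate is nonzero, while the upper bound is attained when all \(a_i\) are equal.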

The subdifferential of the matrix nuclear norm \(\Vert \mathbf {M}\Vert _*\) w.r.t. \(\mathbf {M}\) is \(\{ \mathbf {U}\mathbf {V}^\top + \mathbf {W} \mid \mathbf {U}^\top \mathbf {W} = \mathbf {O}, \mathbf {W}\mathbf {V} = \mathbf {O}, \Vert \mathbf {W}\Vert \le 1 \}\), where \(\mathbf {M} = \mathbf {U}\varSigma \mathbf {V}^\top \) is the compact SVD of \(\mathbf {M}\) and \(\Vert \cdot \Vert \) denotes the spectral norm.
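The element with \(\mathbf {W}=\mathbf {O}\) is easy to compute and to verify. The sketch below forms \(\mathbf {U}\mathbf {V}^\top \) from the compact SVD (keeping singular values above a relative tolerance, which is our choice) and checks the defining properties \(\langle \mathbf {U}\mathbf {V}^\top ,\mathbf {M}\rangle =\Vert \mathbf {M}\Vert _*\) and \(\Vert \mathbf {U}\mathbf {V}^\top \Vert =1\); `nuclear_norm_subgradient` is a hypothetical name.

```python
import numpy as np

def nuclear_norm_subgradient(M, rtol=1e-10):
    """U V^T from the compact SVD of M, i.e. the W = O element of the subdifferential."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    if s.size == 0 or s[0] == 0.0:
        return np.zeros_like(M)  # M = O: zero is a valid subgradient
    k = int(np.sum(s > rtol * s[0]))  # numerical rank of M
    return U[:, :k] @ Vt[:k]
```

Because \(\langle \mathbf {G},\mathbf {M}\rangle =\Vert \mathbf {M}\Vert _*\) and the spectral norm of \(\mathbf {G}\) is at most 1, the subgradient inequality \(\Vert \mathbf {N}\Vert _*\ge \Vert \mathbf {M}\Vert _*+\langle \mathbf {G},\mathbf {N}-\mathbf {M}\rangle \) reduces to \(\Vert \mathbf {N}\Vert _*\ge \langle \mathbf {G},\mathbf {N}\rangle \), which is the duality between the nuclear and spectral norms.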

References

Absil, P.-A., Mahony, R., & Sepulchre, R. (2009). Optimization algorithms on matrix manifolds. Princeton, NJ: Princeton University Press.

Cai, J.-F., Chan, R. H., & Shen, Z. (2008). A framelet-based image inpainting algorithm. Applied and Computational Harmonic Analysis, 24(2), 131–149.

Candès, E. J., & Recht, B. (2009). Exact matrix completion via convex optimization. Foundations of Computational Mathematics, 9(6), 717–772.

Candès, E. J., & Tao, T. (2010). The power of convex relaxation: Near-optimal matrix completion. IEEE Transactions on Information Theory, 56(5), 2053–2080.

Edelman, A., Arias, T. A., & Smith, S. T. (1998). The geometry of algorithms with orthogonality constraints. SIAM Journal on Matrix Analysis and Applications, 20(2), 303–353.

Friedland, S., & Lim, L.-H. (2018). Nuclear norm of higher-order tensors. Mathematics of Computation, 87(311), 1255–1281.

Fu, Y., Gao, J., Tien, D., Lin, Z., & Hong, X. (2016). Tensor lrr and sparse coding-based subspace clustering. IEEE Transactions on Neural Networks and Learning Systems, 27(10), 2120–2133.

Håstad, J. (1990). Tensor rank is NP-complete. Journal of Algorithms, 11(4), 644–654.

Hillar, C. J., & Lim, L.-H. (2013). Most tensor problems are NP-hard. Journal of the ACM, 60(6), 45.

Hitchcock, F. L. (1927). The expression of a tensor or a polyadic as a sum of products. Studies in Applied Mathematics, 6(1–4), 164–189.

Hitchcock, F. L. (1928). Multiple invariants and generalized rank of a p-way matrix or tensor. Journal of Mathematics and Physics, 7(1–4), 39–79.

Hu, W., Tao, D., Zhang, W., Xie, Y., & Yang, Y. (2016). The twist tensor nuclear norm for video completion. IEEE Transactions on Neural Networks and Learning Systems, 28(12), 2961–2973.

Jiang, T.-X., Huang, T.-Z., Zhao, X.-L., Ji, T.-Y., & Deng, L.-J. (2018). Matrix factorization for low-rank tensor completion using framelet prior. Information Sciences, 436, 403–417.

Jiang, T.-X., Ng, M. K., Zhao, X.-L., & Huang, T.-Z. (2020). Framelet representation of tensor nuclear norm for third-order tensor completion. IEEE Transactions on Image Processing, 29, 7233–7244.

Kasai, H., & Mishra, B. (2016). Low-rank tensor completion: A Riemannian manifold preconditioning approach. In International conference on machine learning, pp. 1012–1021.

Kernfeld, E., Kilmer, M., & Aeron, S. (2015). Tensor-tensor products with invertible linear transforms. Linear Algebra and its Applications, 485, 545–570.

Kiers, H. A. (2000). Towards a standardized notation and terminology in multiway analysis. Journal of Chemometrics, 14(3), 105–122.

Kilmer, M. E., Braman, K., Hao, N., & Hoover, R. C. (2013). Third-order tensors as operators on matrices: A theoretical and computational framework with applications in imaging. SIAM Journal on Matrix Analysis and Applications, 34(1), 148–172.

Kilmer, M. E., & Martin, C. D. (2011). Factorization strategies for third-order tensors. Linear Algebra and its Applications, 435(3), 641–658.

Kolda, T. G., & Bader, B. W. (2009). Tensor decompositions and applications. SIAM Review, 51(3), 455–500.

Kong, H., Xie, X., & Lin, Z. (2018). t-Schatten-\( p \) norm for low-rank tensor recovery. IEEE Journal of Selected Topics in Signal Processing, 12(6), 1405–1419.