Abstract

We introduce a new recursive aggregation procedure called Bernstein Online Aggregation (BOA). Its exponential weights include a second order refinement. The procedure is optimal for the model selection aggregation problem in the bounded iid setting for the square loss: the excess of risk of its batch version achieves the fast rate of convergence \(\log (M)/n\) in deviation. The BOA procedure is the first online algorithm that satisfies this optimal fast rate. The second order refinement is required to achieve the optimality in deviation as the classical exponential weights cannot be optimal, see Audibert (Advances in neural information processing systems. MIT Press, Cambridge, MA, 2007). This refinement is settled thanks to a new stochastic conversion that estimates the cumulative predictive risk in any stochastic environment with observable second order terms. The observable second order term is shown to be sufficiently small to assert the fast rate in the iid setting when the loss is Lipschitz and strongly convex. We also introduce a multiple learning rates version of BOA. This fully adaptive BOA procedure is also optimal, up to a \(\log \log (n)\) factor.

1 Introduction and main results

We consider the online setting where observations \(\mathcal D_t=\{(X_1,Y_1),\ldots ,(X_t,Y_t)\}\) are available recursively (\((X_0,Y_0)=(x_0,y_0)\) arbitrary). The goal of statistical learning is to predict \(Y_{t+1}\in \mathbb {R}\) given \(X_{t+1}\in \mathcal X\), for \(\mathcal X\) a probability space, on the basis of \(\mathcal D_t\). In this paper, we index with the subscript t any random element that is adapted to \(\sigma (\mathcal D_t)\). A learner is a function \(\mathcal X\mapsto \mathbb {R}\), denoted \( f_t\), that depends only on the past observations \(\mathcal D_t\) and such that \( f_t(X_{t+1})\) is close to \(Y_{t+1}\). This closeness at time \(t+1\) is addressed by the predictive risk

where \(\ell :\mathbb {R}^2\rightarrow \mathbb {R}\) is a loss function. We define an online learner f as a recursive algorithm that produces at each time \(t\ge 0\) a learner: \( f =( f_0, f_1, f_2,\ldots )\). The accuracy of an online learner is quantified by the cumulative predictive risk

We will motivate the choice of this criterion later in the introduction.

Given a finite set \(\mathcal H=\{f_1,\ldots ,f_M\}\) of online learners such that \( f_j=( f_{j,t})_{t\ge 0}\), we aim at finding optimal online aggregation procedures

with \(\sigma (\mathcal D_t)\)-measurable weights \( \pi _{j,t}\ge 0\), \(\sum _{j=1}^M \pi _{j,t}=1\), \(t=0,\ldots ,n\). We call deterministic aggregation procedures \(f_\pi \) any online learner of the form

where \(\pi =(\pi _j)_{1\le j\le M}\) satisfies \(\sum _{j=1}^M \pi _{j}=1\). Notice that \(\pi \) can be viewed as a probability measure on the random index \(J\in \{1,\ldots ,M\}\). We will also use the notation \(\pi _t\) for the probability measure \((\pi _{j,t})_{1\le j\le M}\) on \(\{1,\ldots ,M\}\). Then, \(f_\pi =\mathbb {E}_\pi [f_J]\) and \(\hat{f} =(\mathbb {E}_{\pi _0}[f_{J,0}],\mathbb {E}_{\pi _1}[f_{J,1}],\mathbb {E}_{\pi _2}[f_{J,2}],\ldots )\). The predictive performance of an online aggregation procedure \(\hat{f}\) is compared with that of the best deterministic aggregation of \(\mathcal H\) or of the best element of \(\mathcal H\). We refer to these two different objectives as, respectively, the convex aggregation Problem (C) and the model selection aggregation Problem (MS). The performance of online aggregation procedures is usually measured using the cumulative loss (in the context of individual sequences prediction, see the seminal book Cesa-Bianchi and Lugosi (2006)). The first aim of this paper is to use instead the cumulative predictive risk for any stochastic process \((X_t,Y_t)\). However, to define properly the notion of optimality as in Nemirovski (2000), Tsybakov (2003), we will also consider the specific iid setting of independent identically distributed observations \((X_t,Y_t)\) when the online learners are constants: \(f_{j,t}=f_j\), \( t\ge 0\). In the iid setting, we suppress the indexation with time t as much as possible and we define the usual risk \(\bar{R}(f)= \mathbb {E}[\ell (Y,f(X))]=(n+1)^{-1}R_{n+1}(f)\) for any constant learner f. A batch learner is defined as \( \bar{f}=f_\pi \) for \(\sigma (\mathcal D_n)\)-measurable weights \(\pi _j\ge 0\), \(\sum _{j=1}^M\pi _j=1\). Lower bounds for the excesses of risk

are provided for the expectation of the square loss in Nemirovski (2000), Tsybakov (2003). Theorem 4.1 in Rigollet (2012) provides sharper lower bounds in deviation for some strongly convex Lipschitz losses and deterministic \(X_t\)s. These lower bounds are called the optimal rates of convergence and a weaker version applies also in our more general setting with stochastic (but possibly degenerate) \(X_t\)s. For Problem (C), we retain the rate \(\sqrt{\log (M)/n}\) that is optimal when \(M>\sqrt{n}\) and \(\log (M)/n\) for Problem (MS), see Rigollet (2012) for details. We are now ready to define the notion of optimality:

Definition 1.1

(adapted from Theorem 4.1 in Rigollet 2012) In the iid setting, a batch aggregation procedure is optimal for Problems (C) when \(M>\sqrt{n}\) or (MS) if there exists \(C>0\) such that with probability \(1-e^{-x}\), \(x>0\), it holds

Very few known procedures achieve the fast rate \(\log (M)/n\) in deviation and none of them is derived from an online procedure. In this article, we provide the Bernstein Online Aggregation (BOA) that is proved to be the first online aggregation procedure such that its batch version, defined as \(\bar{f}=(n+1)^{-1}\sum _{t=0}^{n}\hat{f}_t\), is optimal. Before defining it properly, let us review the existing optimal procedures for Problem (MS).
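As a minimal illustration of the batch version \(\bar{f}=(n+1)^{-1}\sum _{t=0}^{n}\hat{f}_t\), the sketch below assumes constant learners (the iid setting, \(f_{j,t}=f_j\)), so that averaging the predictors \(\hat{f}_t\) amounts to averaging the weight vectors \(\pi _t\). The function names `batch_weights` and `batch_predict` and the array layout are illustrative choices, not notation from the paper.

```python
import numpy as np

def batch_weights(weight_history):
    """Average the online weights pi_0, ..., pi_n into batch weights.

    weight_history: array of shape (n + 1, M); row t holds pi_t.
    With constant learners f_j, (n+1)^{-1} sum_t f_hat_t = sum_j bar_pi_j f_j,
    where bar_pi_j is the time average of pi_{j,t}.
    """
    w = np.asarray(weight_history, dtype=float)
    return w.mean(axis=0)

def batch_predict(weight_history, expert_predictions):
    """Value of the batch learner at one point x.

    expert_predictions: array of shape (M,), the values f_j(x).
    """
    preds = np.asarray(expert_predictions, dtype=float)
    return float(batch_weights(weight_history) @ preds)
```

The averaged weights are again nonnegative and sum to one, so the batch learner is itself of the form \(f_\pi \) with \(\sigma (\mathcal D_n)\)-measurable weights.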

The batch procedures in Audibert (2007), Lecué and Mendelson (2009), Lecué and Rigollet (2014) achieve the optimal rate in deviation. A priori, they face practical issues as they require a computational optimization technique to approximate the weights that are defined as an optimum. A further step was taken in the context of quadratic loss with Gaussian noise in Dai et al. (2012) where an explicit iterative scheme is provided. We will now explain why the question of the existence of an online algorithm whose batch version achieves the fast rate of convergence in deviation remained open (see the conclusion of Audibert 2007) before our work. Optimal (for the regret) online aggregation procedures are exponential weights algorithms (EWAs), see Vovk (1990), Haussler et al. (1998). The batch versions of EWAs coincide with the Progressive Mixture Rules (PMRs). In the iid setting, the properties of the excess of risk of such procedures have been extensively studied in Catoni (2004). PMRs achieve the fast optimal rate \(\log (M)/n\) in expectation (that follows from the expectation of the optimal regret bound by an application of Jensen’s inequality, see Catoni 2004, Juditsky et al. 2008). However, PMRs are suboptimal in deviation, i.e. the optimal rate cannot hold with high probability, see Audibert (2007), Dai et al. (2012). This is because the optimality for the regret defined as in Haussler et al. (1998) does not coincide with the notion of optimality for the risk in deviation used in Definition 1.1.

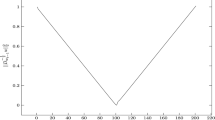

The optimal BOA procedure is obtained using a necessary second order refinement of EWA. Figure 1 describes the computation of the weights in the BOA procedure where \(\ell _{j,t}\) denotes the opposite of the instantaneous regret \(\ell (Y_{t}, f_{j,t-1}(X_{t})) -\mathbb {E}_{\pi _{t-1}}[\ell (Y_{t}, f_{J,t-1}(X_{t}))]\) (linearized when the loss \(\ell \) is convex).

Other procedures already exist with different second order refinements, see Audibert (2009), Hazan and Kale (2010). None of them have been proved to be optimal for (MS) in deviation. The choice of the second order refinement is crucial. In this paper, the second order refinement is chosen as \(\ell _{j,t}^2\) with

Notice that the second order refinement \(\ell _{j,t}^2\) tends to stabilize the procedure as the distances between the losses of the learners and the aggregation procedure are costly.
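The effect of the second order refinement can be sketched in a few lines. The update below assumes that the weights of Fig. 1 take the form \(\pi _{j,t}\propto \pi _{j,t-1}\exp (-\eta \ell _{j,t}(1+\eta \ell _{j,t}))\); this form is consistent with the exponential inequality used in the proof of Theorem 3.1, but it is an assumption here, and the function name `boa_update` is hypothetical.

```python
import numpy as np

def boa_update(pi_prev, expert_losses, eta):
    """One step of the (assumed) BOA weight update.

    pi_prev: weights pi_{t-1}, shape (M,), nonnegative and summing to 1.
    expert_losses: losses l(Y_t, f_{j,t-1}(X_t)) of the M learners, shape (M,).
    eta: learning rate (positive float).
    """
    pi_prev = np.asarray(pi_prev, dtype=float)
    losses = np.asarray(expert_losses, dtype=float)
    # Excess loss of each learner relative to the pi_{t-1}-average loss.
    ell = losses - pi_prev @ losses
    # Exponential weights with the second order correction eta^2 * ell^2.
    with np.errstate(divide="ignore"):  # a zero weight stays at zero
        log_w = np.log(pi_prev) - eta * ell * (1.0 + eta * ell)
    log_w -= log_w.max()  # stabilise the exponentials
    w = np.exp(log_w)
    return w / w.sum()
```

Compared with plain exponential weights, the extra factor \(\exp (-\eta ^2\ell _{j,t}^2)\) penalizes any learner whose loss is far from the loss of the aggregate, whether it is better or worse.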

We achieve an upper bound for the excess of the cumulative predictive risk by first deriving a second order bound on the regret:

Second, we extend it to an upper bound on the excess of the cumulative predictive risk \(R_{n+1}(\hat{f})- R_{n+1}(f_\pi )\) in any stochastic environment. In previous works, the online to batch conversion follows from an application of a Bernstein inequality for martingales. It provides a control of the deviations in the stochastic environment via the predictable quadratic variation, see for instance (Freedman 1975; Zhang 2005; Kakade and Tewari 2008; Gaillard et al. 2014). Here we prefer to use an empirical counterpart of the classical Bernstein inequality, based on the quadratic variation instead of the predictable quadratic variation. For any martingale \((M_t)\), we denote by \(\Delta M_t=M_t-M_{t-1}\) its difference (\(\Delta M_0=0\) by convention) and by \([M]_t=\sum _{j=1}^t\Delta M_j^2\) its quadratic variation. We will use the following new empirical Bernstein inequality:

Theorem 1.1

Let \((M_t)\) be a martingale such that \(\Delta M_t\ge -1/2\) a.s. for all \(t\ge 0\). Then for any \(n\ge 0\) we have \(\mathbb {E}[\exp ( M_n- [M]_n)]\le 1.\) Without any boundedness assumption, we still have

Empirical Bernstein’s inequalities have already been developed in Audibert et al. (2006), Maurer and Pontil (2009) and used in the multi-armed bandit and penalized ERM problems. Applying Theorem 1.1, we estimate successively the deviations of two different martingales

1. \(\Delta M_{J,t}= -\eta \ell _{J,t}\) as a function of J distributed conditionally as \(\pi _{t-1}\) on \(\{1,\ldots ,M\}\),

2. \( M_{j,t}=\eta (R_t(\hat{f})- R_t(f_j)-\text {Err}_t(\hat{f})+\text {Err}_t(f_j))\) such that \(\Delta M_{j,t}=\eta (\mathbb {E}_{t-1}[\ell _{j,t}]-\ell _{j,t})\) where \(\mathbb {E}_{t-1}\) denotes the expectation of \((X_t,Y_t)\) conditionally on \(\mathcal D_{t-1}\), \(1\le j\le M\).

The first application 1. of Theorem 1.1 will provide a second order bound on the regret in the deterministic setting whereas the second application 2. will provide the new stochastic conversion. In both cases, the second order term will be equal to \(\eta ^{-1}[M_j]_{n+1}=\eta \sum _{t=1}^{n+1}\ell _{j,t}^2\) after renormalization. It is the main motivation of BOA; as our notion of optimality requires a stochastic conversion, a second order term necessarily appears in the bound of the excess of the cumulative predictive risk. An online procedure will achieve good performances in the batch setting if it is regularized with the necessary cost due to the stochastic conversion. The BOA procedure achieves this aim by incorporating this second order term in the computation of the weights.

In the first application 1. of Theorem 1.1, we have \(\mathbb {E}_{\pi _{t-1}}[\Delta M_{J,t}]=0\) and an application of Theorem 1.1 yields the regret bound of Theorem 3.1:

where \(\mathbb {E}_{\hat{\pi }}[\text {Err}_{n+1}( f_J)]=\sum _{t=1}^{n+1}\mathbb {E}_{\hat{\pi }_{t-1}}[\ell (Y_{t}, f_{J,t}(X_{t}))]\). Such second order bounds also hold for the regret of other algorithms, see Cesa-Bianchi et al. (2007), Gaillard et al. (2014), Luo and Schapire (2015), Koolen and Erven (2015). Using the new stochastic conversion based on the application 2. of Theorem 1.1, this second order regret bound is converted into a bound on the cumulative predictive risk (see Theorem 4.2). With probability \(1-e^{-x}\), \(x>0\), we have

Thanks to the use of the cumulative predictive risk, this bound is valid in any stochastic environment. We will extend it in various directions. We will introduce

- the “gradient trick” to bound the excess of the cumulative predictive risk in Problem (C),

- the multiple learning rates for adapting the procedure and

- the batch version of BOA to achieve the fast rate of convergence in Problem (MS).

The “gradient trick” is a standard argument to solve Problem (C), see Cesa-Bianchi and Lugosi (2006). When the loss \(\ell \) is convex with respect to its second argument, its sub-gradient is denoted \(\ell ^{\prime }\). In this case, we consider a convex version of the BOA procedure described in Fig. 1. The original loss \(\ell \) is replaced with its linearized version

and we denote, with some abuse of notation,

Linearizing the loss, we can compare the regret of the (sub-gradient version of the) BOA procedure \(\hat{f}=\mathbb {E}_{\hat{\pi }}[f_J]\) with the best deterministic aggregation of the elements in the dictionary. We obtain in Theorem 3.1 a second order regret bound for Problem (C)

When trying to optimize the regret bound in the learning rate \(\eta >0\), we obtain

As \(\pi \) is unknown, this tuning parameter is not tractable in practice. Its worst case version \( \max _j \sqrt{\log (\pi _{j,0}^{-1})}/\sqrt{\sum _{t=1}^{n+1} \ell _{j,t}^2}\) is not satisfactory. Multiple learning rates have been introduced by Blum and Mansour (2005) to solve this issue (see also Gaillard et al. 2014). We introduce the multiple learning rates version of BOA in Fig. 2 which is also fully adaptive as it adapts to any possible range of observations.
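The square-root form of this tuning can be recovered by a short computation. As a sketch, assume the regret bound for a fixed learner j has the generic form \(a/\eta +\eta b\), where \(a=\log (\pi _{j,0}^{-1})\) and \(b=\sum _{t=1}^{n+1}\ell _{j,t}^2\); the symbols a and b are shorthand introduced here, not notation from the paper. Then

\[
\frac{d}{d\eta }\Big (\frac{a}{\eta }+\eta b\Big )=-\frac{a}{\eta ^2}+b=0
\quad \Longleftrightarrow \quad
\eta ^\star =\sqrt{\frac{a}{b}},
\qquad
\frac{a}{\eta ^\star }+\eta ^\star b=2\sqrt{ab}.
\]

The minimizer \(\eta ^\star =\sqrt{\log (\pi _{j,0}^{-1})}/\sqrt{\sum _{t=1}^{n+1}\ell _{j,t}^2}\) depends on j through both a and b, which is why a single learning rate cannot be tuned well for all learners at once.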

The novelty, compared with the “doubling trick” developed in Cesa-Bianchi et al. (2007), is the dependence of the learning rates and the estimated ranges with respect to j and the expression of the weights with respect to the learning rates and the ranges estimators. For this adaptive BOA procedure we obtain regret bounds such as

for some “constant” \(C>0\) that grows as \(\log \log (n)\), see Theorem 3.3 for details. Such second order bounds involving excess losses terms as the \(\ell _{j,t}\)s have been proved for other algorithms in Gaillard et al. (2014), Luo and Schapire (2015), Koolen and Erven (2015). We refer to these articles for nice consequences of such bounds in the individual sequences framework. Here again, the stochastic conversion holds in any stochastic environment and we obtain with probability \(1-e^{-x}\), \(x>0\)

The optimal bound for Problem (C) is proved in a very general setting: under a boundedness assumption, the rate of convergence of \(R_{n+1}(\hat{f})/(n+1)\) (an upper bound of \(\bar{R}(\bar{f})\) in the iid setting), is smaller than \(\sqrt{\log (M)/n}.\) Notice that the classical online-to-batch conversion leads to similar results, see Cesa-Bianchi et al. (2007), Gerchinovitz (2013), Gaillard et al. (2014), and that our second order refinement is not necessary to obtain such upper bounds. Notice also that such upper bounds were already derived in non iid settings for the excess of risk (and not the cumulative predictive risk) under restrictive dependence assumptions, see Alquier et al. (2013), Mohri and Rostamizadeh (2010), Agarwal and Duchi (2013). It is remarkable that the optimal bound of Problem (C) extends to any stochastic environment thanks to the use of the cumulative predictive risk. This is because the cumulative predictive risk of \(f_\pi \) in the upper bound takes into account the dependence, as it is a random variable in non iid settings. We believe that the cumulative predictive risk is the correct criterion to assess the prediction accuracy of online algorithms in a stochastic environment as it coincides with the regret in the deterministic setting and with the classical risk for batch procedures in the iid setting. Moreover, it appears naturally when using the minimax theory approach, see Abernethy et al. (2009). However, to our knowledge, it is the first time that the cumulative predictive risk is used to compare an online procedure with deterministic aggregation procedures.

The fast rate of convergence \(\log (M)/n\) in Problem (MS) is achieved thanks to a careful study of the second order terms \(\sum _{t=1}^{n+1} \ell _{j,t}^2\). It also requires more conditions on the loss in order to behave locally like the square loss, see Audibert (2009). We restrict ourselves to losses \(\ell \) that are \(C_\ell \)-strongly convex and \(C_b\)-Lipschitz functions in the iid setting, see Kakade and Tewari (2008) for an extensive study of this context. We fix the initial weights uniformly \(\pi _{j,0}=M^{-1}\). We obtain in Theorem 4.4 the fast rate of convergence for the batch version of the BOA procedure; with probability \(1-e^{-x}\), \(x>0\),

for \(\eta ^{-1}\) larger than \(C_b^2/C_\ell \) up to a multiplicative constant. The second order term is bounded by the excess of risk using the strong convexity assumption on the loss. We conclude by providing the fast rate bound on the excess of risk of the batch version of the adaptive BOA at the price of larger “constants” that grow at the rate \(\log \log (n)\).

The paper is organized as follows: We present the second order regret bounds for different versions of BOA in Sect. 3. The new stochastic conversion and the excess of cumulative predictive risk bounds in a stochastic environment are provided in Sect. 4. In the next section, we introduce some useful probabilistic preliminaries.

2 Preliminaries

As in Audibert (2009), the recursive argument for supermartingales will be at the core of the proofs developed in this paper. It will be used jointly with the variational form of the entropy to provide second order regret bounds.

2.1 The proof of the martingale inequality in Theorem 1.1

The proof of the first empirical Bernstein inequality for martingales of Theorem 1.1 follows from an exponential inequality and by a classical recursive supermartingales argument, see Freedman (1975). As \(X=\Delta M_t\ge -1/2\) a.s., from the inequality \(\log (1+x)\ge x-x^2\) for \(x>-1/2\) (stated as Lemma 1 in Cesa-Bianchi et al. 2007), we have

Here we used that \(\mathbb {E}_{t-1}[X]=0\) as \(X=\Delta M_t\) is a martingale difference. The proof ends by using the classical recursive argument for supermartingales; from the definition of the martingale difference \(X=\Delta M_t\), we obtain as a consequence of (4) that

As \(\mathbb {E}[\exp (M_0-[M]_0)]=1\), applying a recursion for \(t=1,\ldots , n\) provides the desired result.
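The bounded case of Theorem 1.1 can be checked numerically on a toy example. The sketch below computes \(\mathbb {E}[\exp (M_n-[M]_n)]\) exactly, by enumeration, for the special case where \(M_n\) is a sum of n iid centered increments with finitely many values, all at least \(-1/2\). The restriction to iid increments and the function names are assumptions made for the illustration; the theorem itself covers general martingales.

```python
import itertools
import math

def one_step_bound(values, probs):
    """E[exp(X - X^2)] for a centered, finite-valued X with X >= -1/2."""
    mean = sum(p * v for v, p in zip(values, probs))
    assert abs(mean) < 1e-12, "X must be centered"
    assert min(values) >= -0.5, "the inequality requires X >= -1/2"
    return sum(p * math.exp(v - v * v) for v, p in zip(values, probs))

def martingale_bound(values, probs, n):
    """E[exp(M_n - [M]_n)] when M_n is a sum of n iid copies of X."""
    total = 0.0
    # Enumerate every path of n increments with its probability.
    for path in itertools.product(range(len(values)), repeat=n):
        p = math.prod(probs[i] for i in path)
        m = sum(values[i] for i in path)          # M_n along the path
        qv = sum(values[i] ** 2 for i in path)    # [M]_n along the path
        total += p * math.exp(m - qv)
    return total
```

For instance, with increments equal to \(-1/2\) with probability 2/3 and 1 with probability 1/3, both quantities stay below one, and the n-step value is the n-th power of the one-step value, as the recursion suggests.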

Without any boundedness assumption, we have

As X is centered, we can bound

Using Cauchy–Schwarz inequality and the preceding arguments, we obtain

The desired result follows from Jensen’s inequality followed by the same recursive argument for supermartingales as above.

2.2 The variational form of the entropy

The relative entropy (or Kullback-Leibler divergence) \(\mathcal K(Q,P)=\mathbb {E}_Q[\log (dQ/dP)]\) is a pseudo-distance between any probability measures P and Q. Let us recall the basic property of the entropy: the variational formula of the entropy originally proved in full generality in Donsker and Varadhan (1975). We consider here a version well adapted for obtaining second order regret bounds:

Lemma 2.1

For any probability measure P on \(\mathcal X\) and any measurable functions h, \(g:\mathcal X\rightarrow \mathbb {R}\) we have:

The left hand side corresponds to the right hand side with Q equal to the Gibbs measure \(\mathbb {E}_P\left[ e^{h-g}\right] dQ = e^{h-g } dP \).

That the Gibbs measure realizes the dual identity is at the core of the PAC-bayesian approach. Exponential weights aggregation procedures arise naturally as they can be considered as Gibbs measures, see Catoni (2007).
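The role of the Gibbs measure can be illustrated on a finite set. The sketch below checks the classical Donsker and Varadhan formula \(\log \mathbb {E}_P[e^{u}]=\sup _Q\{\mathbb {E}_Q[u]-\mathcal K(Q,P)\}\), with u standing for \(h-g\); the use of this classical form, the symbol u and the function names are assumptions made for the illustration.

```python
import numpy as np

def kl(q, p):
    """Relative entropy K(Q, P) on a finite set (convention 0 log 0 = 0)."""
    q, p = np.asarray(q, dtype=float), np.asarray(p, dtype=float)
    mask = q > 0
    return float(np.sum(q[mask] * np.log(q[mask] / p[mask])))

def gibbs(p, u):
    """Gibbs measure Q with dQ proportional to exp(u) dP."""
    w = np.asarray(p, dtype=float) * np.exp(np.asarray(u, dtype=float))
    return w / w.sum()

def dual_objective(q, p, u):
    """E_Q[u] - K(Q, P), the quantity maximised in the variational formula."""
    return float(np.dot(q, u)) - kl(q, p)
```

Evaluating `dual_objective` at `gibbs(p, u)` returns \(\log \mathbb {E}_P[e^{u}]\), while any other probability vector q gives a smaller or equal value.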

3 Second order regret bounds for the BOA procedure

3.1 First regret bounds and link with the individual sequences framework

We work conditionally on \(\mathcal D_{n+1}\); this is the deterministic setting, similar to that of Gerchinovitz (2013), where \((X_t,Y_t)=(x_t,y_t)\) are provided recursively for \(1\le t\le n\). In that case, the cumulative loss \(\text {Err}_{n+1}(f)\) quantifies the prediction accuracy of \(f=(f_0,f_1,f_2,\ldots )\). We state first a regret bound for non convex losses, and then move to the case of convex losses combined with the “gradient trick” as in the Appendix of Gaillard et al. (2014). Recall that \(\mathbb {E}_{\hat{\pi }}[\text {Err}_{n+1}( f_J)]=\sum _{t=1}^{n+1}\mathbb {E}_{\hat{\pi }_{t-1}}[\ell (Y_{t} ,f_{J,t}(X_{t}))]\).

Theorem 3.1

Assume that \(\eta >0 \) satisfies

then the cumulative loss of the BOA procedure with \(\ell _{j,t}= \ell (Y_{t},f_{j,t-1}(X_t))-\ell (Y_{t},\hat{f}_{t-1}(X_{t}))\) satisfies

If \(\ell \) is convex with respect to its second argument, the cumulative loss of the BOA procedure with \( \ell _{j,t}= \ell ^{\prime }(Y_{t},\hat{f}_{t-1}(X_{t}))(f_{j,t-1}(X_t)- \hat{f}_{t-1}(X_{t})) \) also satisfies

Proof

We consider \(\Delta M_{J,t+1}=-\eta \ell _{J,t+1}\) that is a centered random variable on \(\{1,\ldots ,M\}\) when J is distributed as \(\pi _{t}\). Under the assumption (6), \(\Delta {M_{J,t+1}}\ge -1/2\) for any \(0\le t\le n\) a.s. An application of the inequality (4) provides the inequality

From the recursive definition of the BOA procedure provided in Fig. 1, we have the expression

Plugging the expression of the weights \(\pi _{j,t}\) in the inequality (7) provides

By a recursive argument on \(0\le t\le n\) we obtain

Equivalently, using the variational form of the entropy (5),

\(\pi \) denoting any probability measure on \(\{1,\ldots ,M\}\). The first regret bound in Theorem 3.1 follows from the identity \(\sum _{t=1}^{n+1}\ell _{J,t}=\text {Err}_{n+1}(f_J)-\mathbb {E}_{\hat{\pi }}[\text {Err}_{n+1}(f_J)]\). The second result follows by an application of the “gradient trick”, i.e. noticing that

The second order term in the last regret bound is equal to

This term can be small because the sub-gradients are small or because the BOA weights are close to the objective \(\pi \). Such second order upper bounds can heavily depend on the behaviors of the different learners \(f_j\). Thus, a unique learning rate cannot be efficient in cases where the learners have different second order properties. To solve this issue, we consider the multiple learning rates version of BOA

We can extend the preceding regret bound to this more sophisticated procedure:

Theorem 3.2

Consider a loss \(\ell \) convex with respect to its second argument and multiple learning rates \(\eta _j\), \(1\le j\le M\), that are positive. If

then the cumulative loss of the BOA procedure with multiple learning rates satisfies

Proof

Let us consider the weights \( \pi _{i,t}^{\prime }=\eta _i^{-1}\pi _{i,t}/\mathbb {E}_{\pi _t}[\eta _j^{-1}]\), for all \(1\le i\le M\) and \(0\le t\le n+1\). Then, for any function \(j\rightarrow h_j\) measurable on \(\{1,\ldots ,M\}\) we have the relation

Consider \(\Delta M_{j,t}=-\eta _j \ell _{j,t+1}\), \(1\le j\le M\). Thanks to the identity (10), \(\Delta M_{J,t}\) is a centered random variable when J is distributed as \( \pi _{t}^{\prime }\) on \(\{1,\ldots ,M\}\). Moreover, the weights \( (\pi _{t}^{\prime })\) satisfy the recursive relation (9). Thus, one can apply the same reasoning as in the proof of Theorem 3.1. We obtain an equivalent of the inequality (8)

for \( \pi ^{\prime }\) denoting any probability measure on \(\{1,\ldots ,M\}\). Using the identity (10) to define \(\pi \) from \(\pi ^{\prime }\), and multiplying the above inequality with \(\mathbb {E}_{\pi }[\eta _j^{-1}]>0\), we obtain

The proof ends by identifying \(\log ( \pi ^{\prime }_j/\pi _{j,0}^{\prime })\) and using the “gradient trick” as in the proof of Theorem 3.1. \(\square \)
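The change of weights used in this proof can be checked numerically. The sketch below verifies the relation \(\mathbb {E}_{\pi ^{\prime }}[\eta _Jh_J]=\mathbb {E}_{\pi }[h_J]/\mathbb {E}_{\pi }[\eta _J^{-1}]\), which follows directly from the definition of \(\pi ^{\prime }\) and explains why \(-\eta _J\ell _J\) is centered under \(\pi ^{\prime }\) whenever \(\ell _J\) is centered under \(\pi \). The function name `tilt_weights` is hypothetical.

```python
import numpy as np

def tilt_weights(pi, eta):
    """Weights pi'_i = eta_i^{-1} pi_i / E_pi[eta_J^{-1}]."""
    pi = np.asarray(pi, dtype=float)
    eta = np.asarray(eta, dtype=float)
    w = pi / eta
    return w / w.sum()
```

Since \(\pi ^{\prime }\) puts more mass on learners with small learning rates, bounds expressed through \(\pi ^{\prime }\) favor those learners.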

Notice that a simple corollary of the proof above is the simplified upper bound

where \(\pi ^{\prime }_{j,0} =\eta _j^{-1}\pi _{j,0}/\mathbb {E}_{\pi _0}[\eta _J^{-1}]\). The initial weights \(\pi _{j,0}\) are modified and the upper bound favors the learners with small learning rates \(\eta _j\). It constitutes a drawback of the multiple learning rates version of BOA as we will see that small learning rates will be associated with bad experts. One can solve this issue by choosing the initial weights differently from the classical choice. For example, with no information on the learners \(f_j\), the initial weights can be chosen equal to

In this case, \(\log (1/\pi _{j,0}^{\prime })\le \log (M)\) and the weights have the expression

The form of the weights becomes similar to that of the adaptive BOA introduced in Fig. 2 and studied in the next section. The second order regret bound becomes

for learning rates tuned optimally

However, the resulting procedure is not recursive because \(\eta _j\) is \(\sigma (\mathcal D_{n+1})\)-measurable. Such non recursive strategies are not convertible to the batch setting.

Second order regret bounds similar to the one of Theorem 3.2 have been obtained in Gaillard et al. (2014), Luo and Schapire (2015), Koolen and Erven (2015) in the context of individual sequences. In this context, we consider that \(Y_t=y_t\) for a deterministic sequence \(y_0,\ldots ,y_n\) (\((X_t)\) is useless in this context), see Cesa-Bianchi and Lugosi (2006) for an extensive treatment of that setting. We have \(\mathcal D_t=\{y_0,\ldots ,y_t\}\), \(0\le t\le n\), and the online learners \(f_j=(y_{j,1},y_{j,2},y_{j,3},\ldots )\) of the dictionary are called the experts. The cumulative loss is \(\text {Err}_{n+1}(\hat{f})=\sum _{t=1}^{n+1}\ell (y_{t},\hat{y}_t)\) for any aggregative strategy \(\hat{y}_t=\hat{f}_{t-1}=\sum _{j=1}^M\pi _{j,t-1}y_{j,t}\) where \(\pi _{j,t-1}\) are measurable functions of the past \(\{y_0,\ldots ,y_{t-1}\}\). We will compare our second order regret bounds to the ones of other adaptive procedures from the individual sequences setting at the end of the next section.

3.2 A new adaptive method for exponential weights

We describe in Fig. 2 the adaptive version of the BOA procedure. Notice that the adaptive version of the exponential weights

is different from Cesa-Bianchi et al. (2007) as the multiple learning rates \(\eta _{j,t}\) depend on j. Moreover, the multiple learning rates appear in the exponential and as a multiplicative factor to solve the issue concerning the modification of the initial weights described above. Adaptive procedures of such form have been studied in Gaillard et al. (2014). Another possibility consists in putting a prior on learning rates as in Koolen and Erven (2015). Multiple learning rates versions can be investigated for other exponential weights procedures than BOA. Notice also that for the first time we apply a multiple version of the “doubling trick” of Cesa-Bianchi et al. (2007); the ranges estimators \(E_{j,t}\) depend on j and also appear in the exponential weights as a penalization when the ranges estimators are exceeded. We obtain a second order regret bound for the BOA procedure similar to the second order regret bounds obtained in Corollary 4 of Gaillard et al. (2014):

Theorem 3.3

Assume that \(\ell \) is convex with respect to its second argument and that \(E_j\) defined by

satisfies \(2^{-c}\le E_j\le E\) for all \(1\le j\le M\). We have

where \(B_{n,E}= \log (1 +2^{-1}\log (n)+\log (E)+c\log (2))\) for all \(n\ge 1\).

Proof

We adapt the reasoning of the proof of Theorem 3.2 for learning rates depending on t. Thus, the recursive argument holds only approximately. For any \(1\le t\le n\), let us consider the weights \( \pi _t^{\prime }\) as

We consider \(\Delta M_{J,t+1}=-\eta _{J,t} \ell _{J,t+1}\) a centered random variable when J is distributed as \( \pi _{t}^{\prime }\) on \(\{1,\ldots ,M\}\). As \(\Delta M_{j,t+1}\ge -\eta _{j,t}E_{j,t+1}\), \(j=1,\ldots ,M\), we apply the inequality (1):

By definition of the weights \(\pi _t^{\prime }\) and \(\pi _t\), we have

Using the expression of the weights in the exponential inequality provides

Using the basic inequality \(x\le \alpha ^{-1}x^\alpha + \alpha ^{-1}(\alpha -1)\le x^\alpha + \alpha ^{-1}(\alpha -1)\) for

and \(\alpha =\eta _{j,t-1}/\eta _{j,t}\ge 1\), we obtain for all \(2\le t\le n\)

Then, combining the inequalities (11) and (12) recursively for \(t=n,\ldots ,2\) and then (11) for \(t=1\) we obtain

We apply the variational form of the entropy (5) in order to derive that

for any probability measure \(\pi ^{\prime }\) on \(\{1,\ldots ,M\}\). We bound the last term \(\mathcal K(\pi ^{\prime },\pi _0)\le \mathbb {E}_{\pi ^{\prime }}[\log (\pi _{J,0}^{-1})]\). By comparing with the integral of 1 / x on the interval \([\eta _{j,n},\eta _{j,1}]\), we estimate

We have the bounds \( \sum _{t=1}^{n+1}E_{j,t}1_{\eta _{j,t-1} \ell _{j,t}>1/2} \le 8E_j\) and \(\log (\eta _{j,1}/\eta _{j,n})\le \log (\sqrt{n} E/2^{-c})\), \(1\le j\le M\). Then Theorem 3.3 is proved using arguments similar to those in the proof of Theorem 6 in Cesa-Bianchi et al. (2007), choosing \(\pi ^{\prime }_j=\eta _{j,n}^{-1}\pi _j/\mathbb {E}_\pi [\eta _{j,n}^{-1}]\) and using the “gradient trick” as in the proof of Theorem 3.1. \(\square \)

The advantage of the adaptive BOA procedure compared with the procedures studied in Gaillard et al. (2014), Luo and Schapire (2015), Koolen and Erven (2015) is to be adaptive to unknown ranges. The price to pay is an additional logarithmic term \(\log (E)+c\log (2)\) depending on the variability of the adaptive learning rates \(\eta _{j,t}\) through time. Such losses are avoidable in the case of one single adaptive learning rate \(\eta _{j,t}=\eta _{t}\), for all \(1\le j\le M\). Notice also that the relative entropy bound is only achieved in the case of one single adaptive learning rate as then \(\mathcal K(\pi ^{\prime },\pi _0)=\mathcal K(\pi ,\pi _0)\). It is a drawback of the multiple learning rates procedures compared with the single ones of Luo and Schapire (2015), Koolen and Erven (2015) achieving such relative entropy bounds. Whether those drawbacks of multiple learning rates procedures can be avoided is an open question.

4 Optimality of the BOA procedure in a stochastic environment

4.1 An empirical stochastic conversion

We now turn to a stochastic setting where \((X_t,Y_t)\) are random elements observed recursively for \(1\le t\le n+1\) (\((X_0,Y_0)=(x_0,y_0)\) arbitrary are considered deterministic). Thanks to the empirical Bernstein inequality of Theorem 1.1, the cumulative predictive risk is bounded in terms of the regret and a second order term. This new stochastic conversion is provided in Theorem 4.1 below. The main motivation of the introduction of the BOA procedure is the following reasoning: as a second order term appears necessarily in the stochastic conversion, an online procedure regularized by a similar second order term has nice properties in any stochastic environment. The BOA procedure achieves this strategy as the second order term of the regret bound is similar to the one appearing in the stochastic conversion. Let us go back for a moment to the most general case with no convex assumption on the loss and the notation:

for some online aggregation procedure \((\pi _t)_{0\le t\le n}\), i.e. \(\pi _t\) is \(\sigma (\mathcal D_t)\)-measurable. Assume the existence of non increasing sequences \((\eta _{j,t})_t\) that are adapted to \((\mathcal D_t)\) for each \(1\le j\le M\) and that satisfy

We have the following general stochastic conversion that is also valid in the convex case with the associated linearized expression of \(\ell _{j,t}\). It can be seen as an empirical counterpart of the online to batch conversion provided in Zhang (2005), Kakade and Tewari (2008), Gaillard et al. (2014). Thanks to the use of the cumulative predictive risk, the conversion holds in a completely general stochastic context; there is no condition on the dependence of the stochastic environment. Recall that \(\mathbb {E}_{\hat{\pi }}[\text {Err}_{n+1}( f_J)]=\sum _{t=1}^{n+1}\mathbb {E}_{\hat{\pi }_{t-1}}[\ell (Y_{t}, f_{J,t}(X_{t}))]\).

Theorem 4.1

Under (13), the cumulative predictive risk of any aggregation procedure satisfies, with probability \(1-e^{-x}\), \(x>0\), for any \(1\le j \le M\):

Proof

We first note that for each \(1\le j\le M\) the sequence \((M_{j,t})_t\) with \(M_{j,t}=\eta (\mathbb {E}_{\hat{\pi }}[R_t(f_J)]-R_{t}(f_j)-(\mathbb {E}_{\hat{\pi }}[\text {Err}_{t}(f_J )]- \text {Err}_{t}(f_j)))\) is a martingale adapted to the filtration \((\mathcal D_t)\). Its difference is equal to \(\Delta M_{j,t}=\eta (\mathbb {E}_{t-1}[\ell _{j,t}]-\ell _{j,t})\). Then the proof will follow from the classical recursive argument for supermartingales applied to the exponential inequality of Theorem 1.1. However, as the learning rates \(\eta _{j,t}\) are not necessarily constant, we adapt the recursive argument as in the proof of Theorem 3.3.

For any \(1\le j\le M\), \(1\le t\le n+1\), denoting \(X=-\eta _{j,t-1}\ell _{j,t}\) we check that \(X\ge -1/2\). We can apply (4) conditionally on \(\mathcal D_{t-1}\) and we obtain

Here we used the fact that \(\eta _{j,t-1}\) is \(\mathcal D_{t-1}\)-measurable. Then we have

To apply the recursive argument we use the basic inequality \(x\le x^{\alpha }+(\alpha -1)/\alpha \) for \(\alpha =\eta _{j,t-2}/\eta _{j,t-1}\ge 1\) and

We obtain

The same recursive argument as in the proof of Theorem 3.3 is applied; we get

We end the proof by an application of the Chernoff bound. \(\square \)
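For completeness, the two elementary facts invoked in the proof above can be written out as follows. This is only a sketch in generic notation: \(Z\) denotes any random variable with \(\mathbb {E}[e^{Z}]\le 1\), an assumption standing in for the exponential bound produced by the recursive argument.

```latex
% Basic inequality: for x >= 0 and alpha >= 1, Young's inequality
% (exponents alpha and alpha/(alpha-1), applied to the product x * 1) gives
\[
x = x\cdot 1 \le \frac{x^{\alpha}}{\alpha} + \frac{\alpha-1}{\alpha}
  \le x^{\alpha} + \frac{\alpha-1}{\alpha}.
\]
% Chernoff step: Markov's inequality applied to exp(Z)
\[
\mathbb{P}(Z > x) = \mathbb{P}\bigl(e^{Z} > e^{x}\bigr)
  \le e^{-x}\,\mathbb{E}\bigl[e^{Z}\bigr] \le e^{-x}, \qquad x>0.
\]
```

The first display holds with equality in the trivial case \(\alpha =1\); the second one explains why the conclusion is stated with probability \(1-e^{-x}\).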

4.2 Second order bounds on the excess of the cumulative predictive risk

Using the stochastic conversion of Theorem 4.1, we derive from the regret bounds of Sect. 3 second order bounds on the cumulative predictive risk of the BOA procedure. As an example, using the second order regret bound of Theorem 3.1 and the stochastic conversion of Theorem 4.1 we obtain

Theorem 4.2

Assume that the learning rate \(\eta =\eta _{j,t} \), \(1\le j\le M\), \(0\le t\le n\), of the non adaptive BOA procedure described in Fig. 1 satisfies condition (6). Then the cumulative predictive risk of the BOA procedure with \( \ell _{j,t}= \ell (Y_{t},f_{j,t-1}(X_t))-\ell (Y_{t},\hat{f}_{t-1}(X_{t})) \) satisfies, with probability \(1-e^{-x}\), \(x>0\):

Moreover, if \(\ell \) is convex with respect to its second argument, the cumulative predictive risk of the BOA procedure with \( \ell _{j,t}= \ell ^{\prime }(Y_{t},\hat{f}_{t-1}(X_{t}))(f_{j,t-1}(X_t)- \hat{f}_{t-1}(X_{t})) \) also satisfies, with probability \(1-e^{-x}\), \(x>0\):

Proof

We prove the result by integrating the result of Theorem 4.1 with respect to any deterministic \(\pi \) and noticing that, as the learning rates \(\eta _{j,t}=\eta \) are constant in the BOA procedure described in Fig. 1, \( \log (\eta _{j,1}/\eta _{j,n})=0.\)

The main advantage of the new stochastic conversion compared with the one of Gaillard et al. (2014) is that the empirical second order bound of the stochastic conversion is similar to the one of the regret bound. We can extend Theorem 3.3; as the boundedness condition (13) is no longer satisfied for all \(1\le t\le n+1\), Theorem 4.1 does not apply directly. We still have

Theorem 4.3

Under the hypothesis of Theorem 3.3, the cumulative predictive risk of the adaptive BOA procedure described in Fig. 2 satisfies with probability \(1-e^{-x}\), \(x>0\),

where \(B_{n,E}= \log (1+2^{-1}\log (n)+\log (E)+c\log (2) )\) for all \(n\ge 1\).

Proof

As the boundedness condition (13) is not satisfied for all \(1\le t\le n+1\), we cannot apply directly the result of Theorem 4.1. However, one can adapt the proof of Theorem 4.1 in the same way as the proof of Theorem 3.1 was adapted to prove Theorem 3.3. As \(-\eta _{j,t}(\ell _{j,t+1}-\mathbb {E}_{t-1}[\ell _{j,t+1}])\le \eta _{j,t}E_{j,t+1}\), \(1\le j\le M\), we can apply the inequality (1)

Thus the proof ends by an application of the same recursive argument as in the proof of Theorem 3.3.

For uniform initial weights \(\pi _{j,0}=M^{-1}\), the second order bound becomes

for some “constant” \(C>0\) increasing as \(\log \log n\). The second order term \(\sum _{t=1}^n\ell _{j,t}^2\) is a natural candidate to assess the complexity of Problem (C): the smaller the terms \(\sum _{t=1}^n\ell _{j,t}^2\), \(1\le j\le M\), are uniformly, the closer to optimal the aggregation of the elements of the dictionary. Moreover, this complexity term is observable, and it would be interesting to develop a parsimonious strategy that aggregates only the elements of the dictionary with small complexity terms \(\sum _{t=1}^n\ell _{j,t}^2\). Since such a strategy also reduces the size M of the dictionary, the second order bound (14) can be reduced at the price of decreasing the generality of Problem (C), i.e. the number of learners in \(\mathcal H\).

The upper bound in (14) is an observable bound for Problem (C), similar to those arising from the PAC-Bayesian approach; see Catoni (2004) for a detailed study of such empirical bounds in the iid context. It would be interesting to know whether the complexity terms \( \sum _{t=1}^n\ell _{j,t}^2\) are optimal. We are not aware of empirical lower bounds for Problem (C). The bounds developed by Nemirovski (2000), Tsybakov (2003), Rigollet (2012) are deterministic. To assert the optimality of BOA, it is easy to turn from an empirical bound to a deterministic one. In the iid context, \((X_t,Y_t)\) are iid copies of (X, Y) and the learners are assumed to be constant \(f_j=f_{j,t}\), \(t\ge 0\), \(1\le j\le M\). We then have \( (n+1)^{-1}R_{n+1}(f_j)=\bar{R}( f_j)=\mathbb {E}[\ell (Y,f_j(X))]\). It is always preferable to convert any online learner \(\hat{f}\) to a batch learner by averaging, \(\bar{f}=(n+1)^{-1}\sum _{t=0}^n\hat{f}_t\),

as an application of the Jensen inequality gives \( \bar{R}(\bar{f})\le (n+1)^{-1}R_{n+1}(\hat{f}) \). As \( \ell _{j,t}^2\le E^2\), Eq. (14) implies that the batch version of BOA satisfies, with high probability in the iid setting,

Then the BOA procedure is optimal for Problem (C) in the sense of Definition 1.1: in the iid context, the excess of risk of the batch version of the BOA procedure is of order \(\sqrt{\log (M)/n}\). The estimate \( \ell _{j,t}^2\le E^2\) is very crude and the complexity terms \(\sum _{t=1}^n\ell _{j,t}^2\) can actually be smaller and close to a variance estimate, especially for losses that are similar to the quadratic loss, see the next section.
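The Jensen step behind the online to batch conversion can be illustrated numerically. The sketch below uses the square loss and hypothetical values for the successive online predictions at one fixed point; the names `square_loss` and `batch_average` are ours and do not come from the paper.

```python
def square_loss(y, p):
    """Square loss, convex in the prediction p."""
    return (y - p) ** 2


def batch_average(predictions):
    """Batch prediction: plain average of the online predictions."""
    return sum(predictions) / len(predictions)


# Hypothetical online predictions \hat f_0(x), ..., \hat f_n(x) at a fixed x,
# together with a hypothetical response y.
online_preds = [0.0, 0.4, 0.7, 0.9, 1.0]
y = 1.0

avg_pred = batch_average(online_preds)
loss_of_average = square_loss(y, avg_pred)
average_of_losses = sum(square_loss(y, p) for p in online_preds) / len(online_preds)

# Jensen: the loss of the averaged prediction never exceeds the averaged loss.
print(loss_of_average, average_of_losses)
```

Taking expectations over (X, Y) on both sides gives the comparison between the risk of the batch learner and the normalized cumulative risk of the online learner.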

4.3 Optimal learning for problem (MS)

The BOA procedure is optimal for Problem (C) and the optimal rate of convergence is also valid in any stochastic environment on the excess of mean predictive risk. To turn to Problem (MS), we restrict our study to the context of Lipschitz strongly convex losses with iid observations. Recall from Tsybakov (2003), Rigollet (2012) that the optimal rate for Problem (MS) is a fast rate of convergence \(\log (M)/n\). Such fast rates cannot be obtained without regularity assumptions on the loss \(\ell \) that force it to behave locally like the square loss, see for instance Audibert (2009). In the sequel \(\ell :\mathbb {R}^2\rightarrow \mathbb {R}\) is a loss function satisfying the assumption called (LIST) after Kakade and Tewari (2008)

- (LIST): the loss function \(\ell \) is \(C_\ell \)-strongly convex and \(C_b\)-Lipschitz continuous in its second coordinate on a convex set \(\mathcal C\subset \mathbb {R}\).

Recall that a function g is c-strongly convex on \(\mathcal C\subset \mathbb {R}\), for a constant \(c>0\), if

for any \(a,a^{\prime }\) \(\in \mathcal C\), \(0<\alpha <1\). Under the condition (LIST), few algorithms are known to be optimal in deviation, see Audibert (2007), Lecué and Mendelson (2009), Lecué and Rigollet (2014).

Note that Assumption (LIST) is restrictive and can hold only locally; on a compact set \(\mathcal C\), the minimizer \( f(y)^*\) of \(f(y)\in \mathbb {R}\rightarrow \ell (y,f(y))\) exists and satisfies, by strong convexity,

Moreover, by Lipschitz continuity, \(\ell (y,f(y))\le \ell (y, f(y)^*)+C_b|f(y)-f(y)^*|\). Thus, necessarily the diameter D of \(\mathcal C\) is finite and satisfies \({C_\ell } D\le 2 C_b\). Then we deduce that \( |\ell _{j,t}|\le C_bD \), \(1\le t\le n+1\), \(1\le j\le M\), and under (LIST) the ranges are estimated by \(E= C_bD\).
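The constraint linking \(C_\ell \), \(C_b\) and the size of \(\mathcal C\) can be traced as follows. This is a sketch that assumes the normalization of Kakade and Tewari (2008), in which the strong convexity term carries a factor \(c/2\); with another normalization the constants change accordingly. The shorthand \(a=f(y)\), \(a^*=f(y)^*\) is ours.

```latex
% Strong convexity evaluated at the minimizer a^* (assumed c/2 normalization)
\[
\ell(y,a) \;\ge\; \ell(y,a^*) + \frac{C_\ell}{2}\,(a-a^*)^2 ,
\]
% Lipschitz continuity in the second coordinate
\[
\ell(y,a) \;\le\; \ell(y,a^*) + C_b\,|a-a^*| ,
\]
% Combining the two displays
\[
\frac{C_\ell}{2}\,(a-a^*)^2 \le C_b\,|a-a^*|
\quad\Longrightarrow\quad
C_\ell\,|a-a^*| \le 2C_b \quad\text{for every } a\in\mathcal C .
\]
```

Under this reading, every point of \(\mathcal C\) lies within \(2C_b/C_\ell \) of the minimizer, which is the mechanism behind the finiteness of D and the bound \(C_\ell D\le 2C_b\).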

We obtain the optimality of the BOA procedure for Problem (MS). The result extends easily (with different constants) to any online procedure achieving second order regret bounds on the linearized loss similar to those of BOA, such as the procedures described in Gaillard et al. (2014), Luo and Schapire (2015), Koolen and Van Erven (2015).

Theorem 4.4

In the iid setting, under the condition (LIST), for the uniform initial weights \(\pi _{j,0}=M^{-1}\), \(1\le j\le M\), and for the learning rate \(\eta \) satisfying

the cumulative predictive risk of the BOA procedure described in Fig. 1 and the risk of its batch version satisfy, with probability \(1-2e^{-x}\),

Proof

Inspired by the Q-aggregation procedures of Lecué and Rigollet (2014), we start the proof by adding the two second order empirical bounds obtained in Theorem 4.2 (using that \(\mathcal K(\pi ,\pi _0)\le \log (M)\)):

Then we convert the empirical second order term into a deterministic one. From the “Poissonian” inequality of Lemma A.3 of Cesa-Bianchi and Lugosi (2006), as \(0\le \ell _{j,t}^2\le C_b^2D^2 \le 1\) under (15), we have

Applying a recursive argument, we show that with probability \(1-e^{-x}\)

Using that \(\eta C_bD\le (C_\ell D)/(16(e-1)C_b)\le 1/2 \) and a union bound, we obtain the deterministic version of the second order bound (16): with probability \(1-2e^{-x}\), \(x>0\),

The optimal fast rate is achieved thanks to a careful analysis of the second order deterministic bound. From the Lipschitz property, the sub-gradient \(\ell ^{\prime }\) is bounded by \(C_b\) and

where \(V(\pi )= \mathbb {E}_\pi [\mathbb {E}[( f_{J}(X)-f_{\pi }(X))^2]]\). As \(R_{n+1}(f_\pi )=(n+1)\bar{R}(f_\pi )\), \(\mathbb {E}_{\pi }[R_{n+1}(f_J)]=(n+1) \mathbb {E}_\pi [\bar{R}(f_J)]\) and combining those bounds we obtain

with \(\gamma =4C_b^2(e-1)\eta \). The rest of the proof is inspired by the reasoning of Lecué and Rigollet (2014). First, one can check the identity

where \(\pi \) and \(\pi ^{\prime }\) are any weights vectors and \({<}\cdot ,\cdot {>}\) denotes the scalar product on \(\mathbb {R}^M\). By \(C_\ell \)-strong convexity one can also check that

Thus the function H: \(\pi \rightarrow \bar{R}(f_\pi )+\mathbb {E}_\pi [\bar{R}(f_J)]+\gamma V(\pi )\) is convex as \(0\le \gamma \le C_\ell /2\) under (15). Moreover, if one denotes by \(\pi ^*\) a minimizer of H, we have for any weights \(\pi \)

Thus, applying this inequality to \(\hat{\pi }\) we obtain

Combining this last inequality with the inequality (17) we derive that

Plugging in this new estimate into (17) we obtain

Now, using \(C_\ell \)-strong convexity as in Proposition 2 of Lecué and Rigollet (2014), we have for any probability measure \(\pi \)

As under condition (15) it holds

we can use the strong convexity argument (18) for any \(\pi _t\), \(0\le t\le n\) and obtain

The proof ends by noticing that

The lower bound on \(R_{n+1}(\hat{f})/(n+1)\) follows by an application of the strong convexity argument applied to \(\bar{f}=(n+1)^{-1}\sum _{t=0}^n\hat{f}_t\).
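The “poissonnian” inequality used in the proof above can be checked numerically. As we recall it, Lemma A.3 of Cesa-Bianchi and Lugosi (2006) states that \(\log \mathbb {E}[e^{sX}]\le (e^{s}-1)\mathbb {E}[X]\) for any random variable X with values in [0, 1] and any real s; the choice \(s=1\) is presumably the origin of the factor \((e-1)\) in the constants. The sketch below tests the inequality on a hypothetical discrete distribution; the helper names are ours.

```python
import math


def mgf(values, probs, s):
    """Moment generating function E[exp(s X)] of a discrete variable."""
    return sum(p * math.exp(s * v) for v, p in zip(values, probs))


def poissonian_bound(values, probs, s):
    """Right-hand side exp((e^s - 1) E[X]) of the inequality."""
    mean = sum(p * v for v, p in zip(values, probs))
    return math.exp((math.exp(s) - 1.0) * mean)


# Hypothetical distribution supported in [0, 1].
values = [0.0, 0.25, 0.5, 1.0]
probs = [0.4, 0.3, 0.2, 0.1]

# Compare both sides for a few values of s, including s = 1.
checks = [
    (s, mgf(values, probs, s), poissonian_bound(values, probs, s))
    for s in (-1.0, 0.5, 1.0, 2.0)
]
all_hold = all(lhs <= rhs for _, lhs, rhs in checks)
print(all_hold)
```

The inequality follows from the convexity bound \(e^{sx}\le 1+x(e^{s}-1)\) on [0, 1] and from \(1+u\le e^{u}\), so the check is expected to pass for any distribution supported in [0, 1].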

Theorem 4.4 provides the optimality of the BOA procedure for Problem (MS) because

The additional term

is the benefit of considering the batch version of BOA under the strong convexity assumptions (LIST). As the fast rate is optimal, the partial sums \(\sum _{t=0}^n\mathbb {E}[(\hat{f}_t(X)-\bar{f}(X))^2]\) might converge to a small constant. Assuming that \(\bar{f}\) is converging with n, the convergence of the partial sums implies that \(\mathbb {E}[(\hat{f}_n(X)-\bar{f}(X))^2]=o(n^{-1})\). Thanks to the Lipschitz assumption on the loss, it implies that the difference \(|\bar{R}(\bar{f})-\bar{R}(\hat{f}_n)|\le C_b \mathbb {E}[(\hat{f}_n(X)-\bar{f}(X))^2]\) is small. The difference \(|\bar{R}(\bar{f})-\bar{R}(\hat{f}_n)|\) is then negligible compared with the fast rate \(\log (M)/n\). Then, at the price of some constant \(C>1\), we also have

This means that, in the iid setting, the predictive risk of the online procedure \(\bar{R}(\hat{f}_n)=\mathbb {E}[\ell (Y_{n+1},\hat{f}_n(X_{n+1}))~|~\mathcal D_n]\) might be optimal. It would be interesting to check rigorously whether this is the case and to extend this result to non iid settings following the reasoning developed in Mohri and Rostamizadeh (2010).

The tuning parameter \(\eta \) can be considered as the inverse of the temperature \(\beta \) of the Q-aggregation procedure studied in Lecué and Rigollet (2014). In the Q-aggregation, the tuning parameter \(\beta \) is required to be larger than \(60 C_b^2/C_\ell \). This condition is similar to our restriction (15) on \(\eta \). The larger \(\eta \) is, subject to condition (15), the better the rate of convergence. The choice \(\eta ^*=(16(e-1)C_b^2/ C_\ell )^{-1} \) is optimal. The resulting BOA procedure is non adaptive in the sense that it depends on the range \(C_b\) of the gradients, which can be unknown. On the contrary, the multiple learning rates BOA procedure tunes the learning rates automatically. At the price of larger “constants” that grow as \(\log \log (n)\), we extend the preceding optimal rate of convergence to the adaptive BOA procedure:

Theorem 4.5

In the iid setting, under the condition (LIST), for the uniform initial weights \(\pi _{j,0}=M^{-1}\), \(1\le j\le M\), the mean predictive risk of the adaptive BOA procedure described in Fig. 2 and the risk of its batch version satisfy, with probability \(1-2e^{-x}\),

where \(B_{n,C_bD}= \log (1 +2^{-1}\log (n)+\log (C_bD) +c\log (2))\) for all \(n\ge 1\).

Proof

The proof starts from the second order empirical bound provided in Theorem 4.3 in the iid context under (LIST), where \(|\ell _{j,t}|\le C_bD\), used with and without the gradient trick; next, from the Young inequality, we have for any \(\eta >0\)

using that \(2\sqrt{2}/(\sqrt{2}-1)\le \sqrt{48}\) and \(B_{n,E_j}\le B_{n,C_bD}\). Then we can use the “Poissonian” inequality as in the proof of Theorem 4.4 to obtain the deterministic second order bound, with \(\gamma =4C_b^2(e-1)\eta \),

The proof ends as that of Theorem 4.4. For \(\eta ^*\) satisfying the equality in the condition (15), we obtain

The result follows from the expression of \(\eta ^*\), the strong convexity of the risk and the estimate \(C_bD\le 2C_b^2/C_\ell \). \(\square \)
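The constants appearing in the proofs of Theorems 4.4 and 4.5 can be verified with a few lines of arithmetic. The sketch below assumes that condition (15) reads \(\eta \le C_\ell /(16(e-1)C_b^2)\), which is inferred from the expression of \(\eta ^*\) in the text, and uses hypothetical values for \(C_\ell \) and \(C_b\); the function names are ours.

```python
import math


def optimal_eta(c_ell, c_b):
    """eta* = (16 (e - 1) C_b^2 / C_ell)^{-1}, equality case of the assumed (15)."""
    return c_ell / (16.0 * (math.e - 1.0) * c_b ** 2)


def gamma(eta, c_b):
    """gamma = 4 C_b^2 (e - 1) eta, as defined in the proofs."""
    return 4.0 * c_b ** 2 * (math.e - 1.0) * eta


# Hypothetical strong convexity and Lipschitz constants.
c_ell, c_b = 2.0, 1.5

eta_star = optimal_eta(c_ell, c_b)
gamma_star = gamma(eta_star, c_b)            # simplifies to C_ell / 4

# Largest diameter allowed by C_ell * D <= 2 * C_b.
d_max = 2.0 * c_b / c_ell
range_check = eta_star * c_b * d_max         # simplifies to 1 / (8 (e - 1))

# Numerical constant used with the Young inequality: 4 + 2 sqrt(2).
young_constant = 2.0 * math.sqrt(2.0) / (math.sqrt(2.0) - 1.0)

print(gamma_star, range_check, young_constant, math.sqrt(48.0))
```

With these values \(\gamma ^*=C_\ell /4\le C_\ell /2\), so the convexity requirement on H holds, and \(\eta ^*C_bD\le 1/(8(e-1))\le 1/2\), in line with the range condition used in the proof of Theorem 4.4.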

The BOA procedure is explicitly computed with complexity O(Mn). It is a practical advantage compared with the batch procedures studied in Audibert (2007), Lecué and Mendelson (2009), Lecué and Rigollet (2014) that require a computational optimization technique. This issue has been solved in Dai et al. (2012) for the square loss using greedy iterative algorithms that approximate the Q-aggregation procedure.
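To make the O(Mn) cost concrete, here is a minimal sketch of a BOA-style recursion for the square loss with the gradient trick. It assumes that the update of Fig. 1 takes the form \(\pi _{j,t}\propto \pi _{j,t-1}\exp (-\eta \ell _{j,t}(1+\eta \ell _{j,t}))\) with the linearized \(\ell _{j,t}\) of Theorem 4.2; Fig. 1 remains the authoritative description. The function names and the toy data are hypothetical.

```python
import math


def boa_update(weights, expert_preds, y, eta):
    """One round: aggregate, then reweight with the second order correction."""
    agg = sum(w * p for w, p in zip(weights, expert_preds))
    grad = 2.0 * (agg - y)  # derivative of (y - p)^2 in p at the aggregate
    # Linearized excess losses l_{j,t} = l'(y, agg) * (f_j - agg).
    lin = [grad * (p - agg) for p in expert_preds]
    unnorm = [
        w * math.exp(-eta * l * (1.0 + eta * l))
        for w, l in zip(weights, lin)
    ]
    total = sum(unnorm)
    return agg, [u / total for u in unnorm]


def run_boa(expert_pred_seq, ys, eta):
    """Run the recursion from uniform weights; each round costs O(M)."""
    m = len(expert_pred_seq[0])
    weights = [1.0 / m] * m
    aggs = []
    for preds, y in zip(expert_pred_seq, ys):
        agg, weights = boa_update(weights, preds, y, eta)
        aggs.append(agg)
    return aggs, weights


# Toy data: two constant experts predicting 0 and 1, response always 1.
ys = [1.0] * 50
expert_pred_seq = [[0.0, 1.0]] * 50
aggs, final_weights = run_boa(expert_pred_seq, ys, eta=0.2)
print(aggs[0], aggs[-1], final_weights)
```

Each round touches every expert once, so n rounds cost O(Mn) operations, and no optimization routine is needed. In the toy example the weight of the expert predicting 1 increases at every round, and \(|\eta \ell _{j,t}|\le 0.1\) throughout, so the range condition on the learning rate is respected.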

References

Abernethy, J., Agarwal, A., Bartlett, P. L., & Rakhlin, A. (2009). A stochastic view of optimal regret through minimax duality. In COLT.

Agarwal, A., & Duchi, J. C. (2013). The generalization ability of online algorithms for dependent data. IEEE Transactions on Information Theory, 59, 573–587.

Alquier, P., Li, X., & Wintenberger, O. (2013). Prediction of time series by statistical learning: General losses and fast rates. Dependence Modeling, 1, 65–93.

Audibert, J. Y., Munos, R., & Szepesvari, C. (2006). Use of variance estimation in the multi-armed bandit problem. In NIPS.

Audibert, J.-Y. (2007). Progressive mixture rules are deviation suboptimal. In J. C. Platt, D. Koller, Y. Singer, & S. Roweis (Eds.), Advances in neural information processing systems (Vol. 20, pp. 41–48). Cambridge, MA: MIT Press.

Audibert, J.-Y. (2009). Fast learning rates in statistical inference through aggregation. The Annals of Statistics, 37, 1591–1646.

Blum, A., & Mansour, Y. (2005). From external to internal regret. In Proceedings of the 18th annual conference on learning theory (New York) (pp. 621–636). Springer.

Catoni, O. (2004). Statistical learning theory and stochastic optimization. Lecture notes in mathematics (Vol. 1851), Springer-Verlag, Berlin. Lecture notes from the 31st summer school on probability theory held in Saint-Flour, July 8–25, 2001. MR 2163920.

Catoni, O. (2007). PAC-Bayesian supervised classification: The thermodynamics of statistical learning. Beachwood, OH: Institute of Mathematical Statistics.

Cesa-Bianchi, N., & Lugosi, G. (2006). Prediction, learning, and games. Cambridge, NY: Cambridge University Press.

Cesa-Bianchi, N., Mansour, Y., & Stoltz, G. (2007). Improved second-order bounds for prediction with expert advice. Machine Learning, 66, 321–352.

Dai, D., Rigollet, P., Xia, L., & Zhang, T. (2012). Deviation optimal learning using greedy Q-aggregation. The Annals of Statistics, 40, 1878–1905.

Donsker, M. D., & Varadhan, S. S. (1975). Asymptotic evaluation of certain Markov process expectations for large time, I. Communications on Pure and Applied Mathematics, 28, 1–47.

Freedman, D. A. (1975). On tail probabilities for martingales. The Annals of Probability, 3, 100–118.

Gaillard, P., Stoltz, G., & Van Erven, T. (2014). A second-order bound with excess losses. In COLT. arXiv:1402.2044.

Gerchinovitz, S. (2013). Sparsity regret bounds for individual sequences in online linear regression. JMLR, 14, 729–769.

Haussler, D., Kivinen, J., & Warmuth, M. K. (1998). Sequential prediction of individual sequences under general loss functions. IEEE Transactions on Information Theory, 44, 1906–1925.

Hazan, E., & Kale, S. (2010). Extracting certainty from uncertainty: Regret bounded by variation in costs. Machine Learning, 80, 165–188.

Juditsky, A., Rigollet, P., & Tsybakov, A. B. (2008). Learning by mirror averaging. The Annals of Statistics, 36, 2183–2206.

Kakade, S. M., & Tewari, A. (2008). On the generalization ability of online strongly convex programming algorithms. In NIPS.

Koolen, W., & Van Erven, T. (2015). Second-order quantile methods for experts and combinatorial games. In COLT (pp. 1155–1175).

Lecué, G., & Mendelson, S. (2009). Aggregation via empirical risk minimization. PTRF, 145, 591–613.

Lecué, G., & Rigollet, P. (2014). Optimal learning with Q-aggregation. The Annals of Statistics, 42(1), 211–224.

Luo, H., & Schapire, R. E. (2015). Achieving all with no parameters: AdaNormalHedge. In Proceedings of the 28th conference on learning theory (pp. 1286–1304).

Maurer, A., & Pontil, M. (2009). Empirical Bernstein bounds and sample variance penalization. In COLT.

Mohri, M., & Rostamizadeh, A. (2010). Stability bounds for \(\phi \)-mixing and \(\beta \)-mixing processes. JMLR, 4, 1–26.

Nemirovski, A. (2000). Topics in non-parametric statistics. Lectures on probability theory and statistics (Saint-Flour, 1998), Lecture Notes in Math. (Vol. 1738, pp. 85–277). Berlin: Springer. MR 1775640.

Rigollet, P. (2012). Kullback-Leibler aggregation and misspecified generalized linear models. The Annals of Statistics, 40(2), 639–665.

Tsybakov, A. B. (2003). Optimal rates of aggregation. In COLT. Berlin, Heidelberg: Springer.

Vovk, V. G. (1990). Aggregating strategies. In COLT.

Zhang, T. (2005). Data dependent concentration bounds for sequential prediction algorithms. In Proceedings of the 18th annual conference on learning theory. Berlin, Heidelberg: Springer.

Acknowledgments

I am grateful to two anonymous referees for their helpful comments. I would also like to thank Pierre Gaillard and Gilles Stoltz for valuable comments on a preliminary version.

Additional information

Editor: Tong Zhang.

Cite this article

Wintenberger, O. Optimal learning with Bernstein online aggregation. Mach Learn 106, 119–141 (2017). https://doi.org/10.1007/s10994-016-5592-6

DOI: https://doi.org/10.1007/s10994-016-5592-6