Abstract

Let \({\mathbb {R}}^N_+= [0,\infty )^N\). We here make new contributions concerning a class of random fields \((X_t)_{t\in {\mathbb {R}}^N_+}\) which are known as multiparameter Lévy processes. Related multiparameter semigroups of operators and their generators are represented as pseudo-differential operators. We also provide a Phillips formula concerning the composition of \((X_t)_{t\in {\mathbb {R}}^N_+}\) by means of subordinator fields. We finally define the composition of \((X_t)_{t\in {\mathbb {R}}^N_+}\) by means of the so-called inverse random fields, which gives rise to interesting long-range dependence properties. As a byproduct of our analysis, we present a model of anomalous diffusion in an anisotropic medium which extends the one treated in Beghin et al. (Stoch Proc Appl 130:6364–6387, 2020), by improving some of its shortcomings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider multiparameter Lévy processes \((X_t)_{t\in {\mathbb {R}}^N_+}\) in the sense of [5, 38,39,40]. The reason they are called in this way is that they enjoy, in some sense, independence and stationarity of increments. Independence of increments is meant in the following way. First, a partial ordering on \({\mathbb {R}}^N_+\) is established, such that \(a\preceq b\) in \({\mathbb {R}}^N_+\) if \(a_i\le b_i\) for each \(i=1, \dots N\). Then, it is assumed that for any choice of ordered points \(t^{(1)}, t^{(2)}, \dots , t^{(k)}\) in \({\mathbb {R}}^N_+\), we have that \(X_{t^{(j+1)}}-X_{t^{(j)}}\), \(j=1, \dots , k-1\), is a set of independent random variables. On the other hand, stationarity of increments means that \(X_{t+\tau }-X_t\) has the same distribution of \(X_\tau \) for all \(t, \tau \in {\mathbb {R}}^N_+\).

Such processes are not to be confused with other extensions of Lévy processes where the parameter is multidimensional. Among them, we recall a class of processes, including the Brownian sheet and the Poisson sheet, which have a different definition from ours, because in that case independence of increments is understood in another way (consult e.g., [1, 10, 18]).

Multiparameter Lévy processes are of interest in Analysis since they furnish a stochastic solution to some systems of differential equations, as will be recalled in Sect. 2. Roughly speaking, if the vector \(G= (G_1, G_2, \dots , G_N)\) is the generator of a multiparameter Lévy process \((X_t)_{t\in {\mathbb {R}}^N_+}\), then provided that u belongs to suitable function spaces, the function \({\mathbb {E}}u (x+X_t)\) (\({\mathbb {E}}\) denoting the expectation) solves the system

where \(t=(t_1, \dots , t_N)\). Of course, for one-parameter Lévy processes, we have a single differential equation, as stated by the well-known Feller theory of one-parameter Markov processes and semigroups.

The idea of subordination for multiparameter Lévy processes is presented in [5, 38,39,40] (for the classical theory of subordination of one-parameter Lévy processes, see e.g., [43, chapter 6]). The construction is as follows. Let \((X_t)_{t\in {\mathbb {R}}_+ ^N}\) be a multiparameter Lévy process and let \((H_t)_{t\in {\mathbb {R}}_+ ^M}\) be a subordinator field, i.e., a multiparameter Lévy process with values in \({\mathbb {R}}_+^N\), such that it has non-decreasing paths in the sense of the partial ordering (i.e., \(t_1 \preceq t_2\) in \({\mathbb {R}}^M_+\) implies \(H_{t_1}\preceq H_{t_2}\) in \({\mathbb {R}}^N_+\)). The subordinated field is defined by \((X_{H_t})_{t\in {\mathbb {R}}_+ ^M}\), and it is again a multiparameter Lévy process.

One of the main results of this paper is to provide a formula for the generator of the subordinated field. Indeed, we find an extension of the Phillips theorem to the multiparameter case, by involving the so-called multidimensional Bernstein functions. This gives rise to interesting systems of type (1.1). In those systems, the operator on the right side may possibly be pseudo-differential. For example, when the subordinator field is stable, such a system could be interesting for those studying fractional equations, since the operator on the right side involves the fractional Laplacian and the so-called fractional gradient; we recall that the fractional gradient is a generalization of the fractional Laplacian to the case where the jumps are not isotropically distributed (see e.g., [8, Example 2.2] and the references therein). Thus, while the existing theory provides a stochastic solution to single differential equations (where the operator on the right can be pseudo-differential or fractional), here we are able to provide a stochastic solution to systems of differential equations, which can be interesting in Analysis and in the study of fractional calculus (see e.g., the examples in Sect. 3.3).

The basic case of subordinator field is the one with \(M=1\). In this case, we have a one-parameter process \(H_t= (H_1 (t), \dots , H_N(t))\) which the authors in [5] call multivariate subordinator. This is nothing more than a one-parameter Lévy process with values in \({\mathbb {R}}^N_+\), where all the components \(t\rightarrow H_j(t)\) are non-decreasing (namely, each \(H_j\) is a subordinator). Using a multivariate subordinator, subordination of a multiparameter Lévy process gives a one-parameter Lévy process.

In the second part of the paper, by considering a multivariate subordinator \((H_1(t), \dots H_N(t))\), we will construct a new random field

where \(L_j\) is the inverse, also said the hitting time, of the subordinator \(H_j\), i.e.,

We will call (1.2) inverse random field. Now, let \((X_t)_{t\in {\mathbb {R}}^N_+}\) be a multiparameter Lévy process with values in \({\mathbb {R}}^d\), which is assumed to be independent of ((1.2)). We are interested in the subordinated random field \((Z_t)_{t\in {\mathbb {R}}^N_+}\) defined by

Of course, (1.2) and (1.3) are not multiparameter Lévy processes because they enjoy neither independence nor stationarity of increments with respect to the partial ordering on \({\mathbb {R}}^N_+\). However, they may be useful in applications in order to model spatial data exhibiting various correlation structures which cannot fall in the framework of multiparameter Lévy or Markov processes.

Our topic has been inspired by some existing literature. First of all, there are many papers (see e.g., [6, 22, 29,30,31,32,33,34, 48]) concerning semi-Markov processes of the form

where X is a (one-parameter) Lévy process in \({\mathbb {R}}^d\) and L is the inverse of a subordinator H independent of X, i.e.,

Processes of type (1.4) have an important role in statistical physics, since they model continuous time random walk scaling limits and anomalous diffusions. Moreover, it is known that (1.4) is not Markovian, and its density p(x, t) is governed by an equation which is non-local in the time variable:

In the above equation, \(G^*\) is the dual to the generator of X and the operator \({\mathcal {D}}_t\) is the so-called generalized fractional derivative (in the sense of Marchaud), defined by

where \(\nu \) is the Lévy measure of H and \({\overline{\nu }}(t):= \int _t^\infty \nu (dx)\) is the tail of the Lévy measure.

The main results regarding the random fields of type (1.3) are presented in Sect. 4; we will show that they have interesting correlation structures and that they are governed by particular integro-differential equations. Such equations are non-local in the \(t_1, \dots , t_N\) variables and generalize equation (1.5) holding in the one-parameter case. Thus, while the existing theory of semi-Markov processes furnishes a stochastic solution to single equations that are non-local in time, we here furnish a stochastic solution to systems of equations which are non-local in the “time” variables \(t_1, t_2, \dots , t_N\).

We also recall that the first idea of inverse random field appeared in [8, sect. 3], where the authors proposed a model of multivariate time change.

Another source of inspiration is the paper [24], even if it does not exactly fit into our context. Here, the authors considered a Poisson sheet \(N(t_1, t_2)\), which is not a multiparameter Lévy process in the sense of this paper, and studied the composition

where \(L_1\) and \(L_2\) are two independent inverse stable subordinators, of index \(\alpha _1\) and \(\alpha _2\), respectively; the resulting random field showed interesting long-range dependence properties.

2 Basic Notions and Some Preliminary Results

We introduce the partial ordering on the set \({\mathbb {R}}_+^N= [0,\infty )^N\): the point \(a=(a_1, \dots , a_N)\) precedes the point \(b=(b_1, \dots , b_N)\), say \(a \preceq b\), if and only if \(a_j \le b_j\) for each \(j=1, \dots , N\).

A sequence \(\{x_i\}_{i=1}^\infty \) in \({\mathbb {R}}_+^N\) is said to be increasing if \(x_i \preceq x_{i+1}\) for each i; it is said to be decreasing if \(x_{i+1} \preceq x_i\) for each i.

Consider a function \(f: {\mathbb {R}}_+^N\rightarrow {\mathbb {R}}^d\). We say that f is right continuous at \(x \in {\mathbb {R}}_+^N\) if, for any decreasing sequence \(x_i\rightarrow x\) we have \(f(x_i) \rightarrow f(x)\).

We say that \(f: {\mathbb {R}}_+^N\rightarrow {\mathbb {R}}^d\) has left limits at \(x \in {\mathbb {R}}_+^N/\{0\}\) if, for any increasing sequence \(x_i\rightarrow x\), the limit of \(f(x_i)\) exists; such a limit may depend on the choice of the sequence \(x_i\).

Moreover, f is said to be càdlàg if it is right continuous at each \(x \in {\mathbb {R}}_+^N\) and has left limits at each \(x \in {\mathbb {R}}_+^N/\{0\}\).

2.1 Multiparameter Lévy Processes

We here recall the notion of multiparameter Lévy process in the sense of [5, 38,39,40]. We also refer to [17] as a standard reference on Multiparameter Markov processes.

The parameter set is here assumed to be \({\mathbb {R}}_+^N\). An analogous (but more general) definition holds if the parameter set is any cone contained in \({\mathbb {R}}^N\), but this generalization is not essential for the aim of this paper.

Definition 2.1

A random field \((X_t)_{t\in {\mathbb {R}}_+^N}\), with values in \({\mathbb {R}}^d\), is said to be a multiparameter Lévy process if

-

1.

\(X_0=0\) a.s.

-

2.

It has independent increments with respect to the partial ordering on \({\mathbb {R}}_+^N\), i.e., for any choice of \( 0 =t^{(0)} \preceq t^{(1)} \preceq t^{(2)} \dots \preceq t^{(k)}\), the random variables \(X_{t^{(j)}}-X_{t^{(j-1)}}\), \(j=1,\dots , k\), are independent.

-

3.

It has stationary increments, i.e., \(X_{t+\tau }-X_t\overset{d}{=}X_\tau \) for each \(t,\tau \in {\mathbb {R}}^N_+\)

-

4.

It is càdlàg a.s.

-

5.

It is continuous in probability, namely for any sequence \(t^{(i)} \in {\mathbb {R}}_+^N\) such that \(t^{(i)}\rightarrow t\), it holds that \(X_{t^{(i)}}\) converges to \(X_t\) in probability.

If (1), (2), (3), (5) hold, then \((X_t)_{t\in {\mathbb {R}}_+^N}\) is said to be a multiparameter Lévy process in law.

We present some examples of multiparameter Lévy processes, which are constructed from one-parameter ones. Such examples are taken from [5].

Example 2.2

If \((X^{(1)}_{t_1})_{t_1 \in {\mathbb {R}}_+}, \dots , (X^{(N)}_{t_N})_{t_N \in {\mathbb {R}}_+}\) are N independent Lévy processes on \({\mathbb {R}}^d\), with laws \(\nu ^{(1)} _{t_1}, \dots , \nu ^{(N)} _{t_N}\), then

is an N-parameter Lévy process on \({\mathbb {R}}^d\), which is usually called additive Lévy process (see e.g., [19] and [17, p. 405]).

Here, \(X_t\) has law

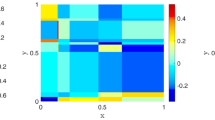

where \(*\) denotes the convolution. Examples of the sample paths are shown in Figs. 1 and 2.

Sample paths of additive Lévy fields, as in Example 2.2

Example 2.3

Let \((X^{(1)}_{t_1})_{t_1 \in {\mathbb {R}}_+}, \dots (X^{(N)}_{t_N})_{t_N \in {\mathbb {R}}_+}\) be independent \({\mathbb {R}}\)-valued Lévy processes with laws \(\nu ^{(1)}_{t_1}, \dots , \nu ^{(N)} _{t_N}\). Then,

is a \({\mathbb {R}}^N\) valued Lévy process, which can be called product Lévy process (in the language of [17, p. 407]). Clearly, this is a particular case of Example 2.2 because

where \(\{e_1, \dots , e_N\}\) denotes the canonical basis of \({\mathbb {R}}^N\).

Here, \(X_t\) has law

where \(\otimes \) denotes the product of measures.

Example 2.4

Let \((V_t)_{t\in \mathbb {{\mathbb {R}}_+}}\) be a Lévy process in \({\mathbb {R}}^d\). Then, \(V_{c_1t_1+\dots + c_Nt_N}\) is a multiparameter Lévy process for any choice of \( (c_1, \dots , c_N)\in {\mathbb {R}}^N_+\).

Remark 2.5

What we have presented is not the only way to extend the notion of independence of increments to the multiparameter case. A very common approach is to define independence of increments over disjoint rectangles (see [1] and [10]). This gives rise to a class of random fields, known as Lévy sheets (e.g., the Poisson sheet or the Brownian sheet).

In the following, \(\delta _0\) will denote the probability measure concentrated at the origin. Moreover, \(\{e_1, \dots , e_N\}\) will denote the canonical basis of \({\mathbb {R}}^N\).

Definition 2.6

A family \((\mu _t)_{t\in {\mathbb {R}}^N _+}\) of probability measures on \({\mathbb {R}}^d\) is said to be a \({\mathbb {R}}^N_+\)-parameter convolution semigroup if

i) \(\mu _{t+\tau }= \mu _t * \mu _{\tau }\), for all \(t, \tau \in {\mathbb {R}}^N_+\)

ii) \( \mu _t \rightarrow \delta _0 \) as \( t\rightarrow 0\)

By Definition 2.6, it follows that \(\mu _t\) is infinitely divisible for each t.

The above notion of multiparameter convolution semigroup is related to multiparameter Lévy processes, as shown in Proposition 2.7, which is a special case of Theorem 4.5 of [5]. We underline that such Proposition will be crucial in the rest of our article.

We preliminarily observe that since \(X_t\) is a multiparameter Lévy process, where \(t=(t_1, \dots , t_N)\), it immediately follows that for each \(j=1, \dots , N\), the process \((X_{t_je_j})_{t_j \in {\mathbb {R}}_+}\) is a classical one-parameter Lévy process. In other words, if \((\mu _t)_{t\in {\mathbb {R}}^N_+}\) is a multiparameter convolution semigroup, then \((\mu _{t_je_j})_{t_j\in {\mathbb {R}}_+}\) is a one-parameter convolution semigroup which is the law of \(X_{t_je_j}\).

Proposition 2.7

Let \((X_t)_{t\in {\mathbb {R}}_+^N}\) be a multiparameter Lévy process on \({\mathbb {R}}^d\) and let \(\mu _t\) be the law of the random variable \(X_t\). Then,

i) the family \((\mu _{t}) _{t \in {\mathbb {R}}_+^N }\) is a \({\mathbb {R}}^N_+\)-parameter convolution semigroup of probability measures.

ii) There exist independent random vectors \(Y^{(j)}_{t_j}\), \(j=1, \dots , N\), with \( Y^{(j)}_{t_j} \overset{d}{=}\ X_{t_je_j}\), such that

Proof

By writing

we observe that \(X_{t+\tau }-X_\tau \) and \(X_\tau \) are independent by the assumption of independence of increments along those sequences that are increasing with respect to the partial ordering. Moreover, \(X_{t+\tau }-X_\tau \) has the same distribution of \(X_t\) by stationarity. Hence, \(\mu _{t+\tau }=\mu _t*\mu _\tau \). Moreover, stochastic continuity of \((X_t)_{t\in {\mathbb {R}}^N_+}\) gives \(\mu _t \rightarrow \delta _0\) as \(t\rightarrow 0\), and thus i) is proved. To prove ii), it is sufficient to write \(t= t_1e_1+ \dots +t_N e_N\) and apply the semigroup property just proved in point i), to have

and the proof is complete since \(\mu _{t_je_j}\) is the law of \(X_{t_je_j}\). \(\square \)

We stress that Proposition 2.7 is a statement about equality in law of random variables (t is fixed) and not equality of processes.

We further observe that Proposition 2.7 says that to each multiparameter Lévy process in law there corresponds a unique convolution semigroup of probability measures. But, unlike what happens for classical Lévy processes (i.e., when \(N=1\)), the converse is not true in general: a multiparameter convolution semigroup \((\mu _t)_{t\in {\mathbb {R}}^N_+}\) can be associated to different multiparameter Lévy processes in law, because \((\mu _t)_{t\in {\mathbb {R}}^N_+}\) does not completely determine all the finite-dimensional distributions. Indeed, only along \({\mathbb {R}}^N_+\)-increasing sequences \(0 \preceq \tau ^{(1)} \preceq \dots \preceq \tau ^{(k)}\), the joint distribution of \((X_{\tau ^{(1)}}, \dots , X_{\tau ^{(k)}})\) can be uniquely determined in terms of \(\mu _t\) by using independence and stationarity of increments, but this is not possible if the points \(\tau ^{(1)}, \dots , \tau ^{(k)} \in {\mathbb {R}}^N_+\) are not ordered (in the sense of the partial ordering). As an example, consider two biparameter processes defined in the following way. The first one is \(Z(t_1, t_2)= B_1(t_1)+B_2(t_2)\), where \(B_1\) and \(B_2\) are independent standard Brownian motions. The second one is \(W(t_1,t_2)=B(t_1+t_2)\) where B(t) is a standard Brownian motion. Both \(Z(t_1,t_2)\) and \(W(t_1, t_2)\) have law \({\mathcal {N}}(0,t_1+t_2)\). However, this law does not identify all the finite-dimensional distributions. Indeed, observe the processes at \((t_1,0)\) and \((0,t_2)\), which are not ordered points (in the sense of the partial ordering). It is clear that \(Z(t_1,0)\) and \(Z(0,t_2)\) are independent, unlike what happens for \(W(t_1,0)\) and \(W(0,t_2)\).

2.1.1 Characteristic Function of Multiparameter Lévy Processes

Consider the \(Y^{(j)}_{t_j}\) involved in Proposition 2.7. By the Lévy Khintchine formula, we have

the Lévy exponent \(\psi _j\) having the form

where \(\gamma _j \in {\mathbb {R}}^d\), \(A_j\) is the Gaussian covariance matrix, \(\nu _j\) denotes the Lévy measure and \(\cdot \) denotes the scalar product. By the above considerations, we thus get the following statement.

Proposition 2.8

Let \((X_t)_{t\in {\mathbb {R}}_+^N}\) be a multiparameter Lévy process with values in \({\mathbb {R}}^d\). Then, \(X_t\) has characteristic function

where \(t= (t_1, \dots , t_N)\), the functions \(\psi _j\) have been defined in (2.2), and

We will call (2.4) the multidimensional Lévy exponent.

2.2 Autocorrelation Function of Multiparameter Lévy Processes

Consider a multiparameter Lévy process \(\{X_t\}_{t\in {\mathbb {R}}_+^N}\) with values in \({\mathbb {R}}\). In the following proposition, we will explicitly compute the autocorrelation function between two ordered points in the parameter space, i.e.,

Of course, (2.5) exists finite only in some cases, which will be specified in the following (e.g., the process must be non-deterministic). What we will find is the N-parameter extension of the well-known formula holding in the case \(N=1\), i.e., for classical Lévy processes (consult e.g., Remark 2.1 in [23]):

Proposition 2.9

Let \(\{X_t\}_{t\in {\mathbb {R}}_+^N}\) be an N-parameter Lévy process with values in \({\mathbb {R}}\), having multidimensional Lévy exponent \(\Psi (\xi )\) defined in (2.3) and (2.4). For each \(j=1, \dots , N\), let \(\xi \rightarrow \psi _j(\xi )\) be twice differentiable in a neighborhood of \(\xi =0\), and such that \(\psi '' _j(0)\ne 0\). Then, the autocorrelation function defined in (2.5) reads

where \(\cdot \) denotes the scalar product and \(\sigma ^2:= -\Psi '' (0)\).

Proof

Consider the decomposition of \(X_t\) given in Proposition 2.7. Since \(\psi _j'' (0)\) exists, then \(Y_{t_j} ^{(j)}\) has finite mean and variance:

Letting \(\mu := \bigg ({\mathbb {E}} Y_{1}^{(1)}, \dots , {\mathbb {E}} Y_{1}^{(N)} \bigg )\) and \( \sigma ^2:= -\Psi '' (0)= \bigg ({\mathbb {V}}\text {ar} Y_{1}^{(1)}, \dots , {\mathbb {V}}\text {ar} Y_{1}^{(N)}\bigg )\), we get

Moreover, for \(s\preceq t\), we have

where we used independence and stationarity of the increments along \({\mathbb {R}}^N_+\) increasing sequences. We thus have

and the desired result immediately follows. \(\square \)

Remark 2.10

Let |v| denote the Euclidean norm of v. In the limit \(|t| \rightarrow \infty \), we have that \(\rho (X_s,X_t)\) behaves like \(|t|^{-1/2}\). Indeed, consider the scalar product in the denominator of (2.6), i.e., \(t\cdot \sigma ^2 = |t|\, |\sigma ^2| \cos \theta \), where \(\theta \) is the angle between t and \(\sigma ^2\). Now, observe that \(\sigma ^2\) is a fixed vector of \({\mathbb {R}}^N_+\), with strictly positive components by the assumption \(\psi ''_j(0)\ne 0\). Since t is in \({\mathbb {R}}^N_+\) also, by simple geometric arguments, it follows that there exist two constants \(c_1>0\) and \(c_2>0\), which do not depend on t, such that \(c_1\le \cos \theta \le c_2\). Then, \(k_1 |t|^{-1/2}\le \rho (X_s,X_t) \le k_2 |t|^{-1/2}\) for two suitable constants \(k_1>0\) and \(k_2>0\) both independent of t.

2.3 Multiparameter Semigroups of Operators and their Generators

Let \({\mathbb {B}}\) be a Banach space equipped with the norm \(||\cdot ||_{{\mathbb {B}}}\). An N-parameter family \((T_t)_{t\in {\mathbb {R}}_+^N}\) of bounded linear operators on \({\mathbb {B}}\) is said to be an N-parameter semigroup of operators if \(T_0\) is the identity operator and the following property holds:

We say that \((T_t)_{t\in {\mathbb {R}}_+^N}\) is strongly continuous if

Moreover, we say that \((T_t)_{t\in {\mathbb {R}}_+^N}\) is a contraction semigroup if, for any \(t\in {\mathbb {R}}^N_+\), we have \(||T_t u||_{{\mathbb {B}}} \le ||u||_{{\mathbb {B}}}\).

Example 2.11

Let \(G_1, G_2, \dots , G_N\) be bounded operators on \({\mathbb {B}}\), such that \([G_i, G_k]:= G_iG_k-G_kG_i=0\) for all \(i\ne k\). Consider the vector

Then, for all \(t=(t_1, \dots , t_N)\), the family

defines a strongly continuous semigroup on \({\mathbb {B}}\). In light of the following Definition 2.13, we will call the vector G the generator of the multiparameter semigroup.

Example 2.12

Let \((\mu _t)_{t\in {\mathbb {R}}^N_+}\) be a multiparameter convolution semigroup of probability measures on \({\mathbb {R}}^d\) (in the sense of Definition 2.6) and let \({\mathcal {C}}_0({\mathbb {R}}^d)\) be the space of continuous functions vanishing at infinity, equipped with the sup-norm. Then,

defines a strongly continuous contraction multiparameter semigroup.

Let \(t=(t_1, \dots , t_N) \in {\mathbb {R}}^N_+\) and let \(\{e_1, \dots , e_N\}\) be the canonical basis of \({\mathbb {R}}^N\). For each \(j=1, \dots , N\), we refer to the one-parameter semigroups \(T_{t_j e_j}\) as the marginal semigroups. By the property (2.7), it follows that the marginal semigroups commute, i.e., \([T_{t_i e_i}, T_{t_j e_j} ]=0\) for \(i\ne j\) and the following relation holds:

Now, let \(G_i\) be the generator of \(T_{t_i e_i}\), defined on \(\text {Dom} (G_i)\). It is well-known that if \(u\in \text {Dom} (G_i)\), then \( T_{t_i e_i} u\in \text {Dom} (G_i)\) and the following differential equation

is solved by \(w(t_i)= T_{t_i e_i} u\). We here recall the notion of generator of a multiparameter semigroup (see [9, chapter 1]).

Definition 2.13

Let \((T_t)_{t\in {\mathbb {R}}^N_+}\) be a strongly continuous N-parameter semigroup on \({\mathbb {B}}\) and let \(G_i, i=1, \dots , N\), be the generators of the marginal semigroups, each defined on \(\text {Dom} (G_i)\). We say that the vector

is the generator of \((T_t)_{t\in {\mathbb {R}}^N_+}\), defined on \(\text {Dom} (G)=\bigcap _{j=1}^N \text {Dom} (G_j)\).

The above definition is intuitively motivated by the following result.

Proposition 2.14

Let \((T_t)_{t\in {\mathbb {R}}^N_+}\) be a strongly continuous N-parameter semigroup with generator G according to Definition 2.13. Then, for \(u\in \bigcap _{j=1}^N Dom (G_j)\), the function \(w(t)= T_tu\) solves the following system of differential equations

where \(\nabla _t\) denotes the gradient with respect to \(t= (t_1, \dots , t_N)\). Namely, we have

subject to \(w(0)=u\).

Proof

Let us introduce a compact notation to denote the composition of operators, namely

Let us fix \(i=1, \dots , N\). For \(q\in \text {Dom} (G_i)\), it is true that \(T_{t_ie_i}q \in \text {Dom} (G_i)\) and

By using Propositions 1.1.8 and 1.1.9 in [9], we know that if \(u\in \text {Dom} (G_i)\), then \(T_tu \in \text {Dom} (G_i)\) for any \(t\in {\mathbb {R}}^N_+\). In particular, we have \(\bigcirc ^N _{k=1, k\ne i} T_{t_ke_k}u \in \text {Dom} (G_i)\). Hence, Eq. (2.10) holds for \(q= \bigcirc ^N _{k=1, k\ne i} T_{t_ke_k}u \):

and Eq. (2.9) for a fixed i is found by using property (2.7). By choosing \(u\in \bigcap _{j=1}^N \text {Dom} (G_j)\), it is possible to repeat the same argument for all \(i=1, \dots , N\), and the system of differential equations is obtained. \(\square \)

By putting \(t=0\) in Eq. (2.8), it follows that the generator G can also be found by

For other results concerning multiparameter semigroups and generators consult [9]. Moreover, for a general discussion on operator semigroups related to multiparameter Markov processes, we refer to [17].

Remark 2.15

A different definition of generator for multiparameter semigroups is given in [14] and [47]. Here, the authors defined the generator as the composition of the marginal generators, i.e.,

The motivation for such definition is that for \(u\in \text {Dom} (G_1 \circ \dots \circ G_N)\), the authors prove that \(w(t)= T_tu\) solves the partial differential equation

where \(t=(t_1, \dots t_N)\). Also this approach seems to be very interesting, especially in the field of partial differential equations as it allows to find probabilistic solutions to equations of type (2.13), containing a mixed derivative.

2.4 Semigroups Associated with Multiparameter Lévy Processes

Let \((X_t)_{t\in {\mathbb {R}}^N_+}\) be a multiparameter Lévy process on \({\mathbb {R}}^d\) and let \((\mu _t)_{t\in {\mathbb {R}}^N_+}\) be the associated convolution semigroup of probability measures, i.e., \(\mu _t\) is the law of \(X_t\) for each t. Consider the operator

where \({\mathcal {C}}_0({\mathbb {R}}^d)\) denotes the space of continuous functions vanishing at infinity. By using the properties of \(\{\mu _t\}_{t\in {\mathbb {R}}^N_+} \), it immediately follows that the family \((T_t)_{t\in {\mathbb {R}}^N_+}\) is a strongly continuous contraction semigroup on \({\mathcal {C}}_0({\mathbb {R}}^d)\); it is also positivity preserving, hence it is a Feller semigroup. We now give a representation of this semigroup and its generator by means of pseudo-differential operators. We restrict to the Schwartz space of functions \({\mathcal {S}}({\mathbb {R}}^d)\).

We define the Fourier transform by

Since \(h \in {\mathcal {S}}({\mathbb {R}}^d)\), the following Fourier inversion formula holds:

Theorem 2.16

Let \((X_t)_{t\in {\mathbb {R}}^N_+}\) be a multiparameter Lévy process with Lévy exponent \(\Psi \) defined in (2.3) and (2.4). Let \((T_t)_{t\in {\mathbb {R}}^N_+}\) be the associated semigroup defined in (2.14) and let \(G=(G_1, \dots , G_N)\) be its generator. Then,

-

1.

For any \(t\in {\mathbb {R}}^N_+\), \(T_t\) is a pseudo-differential operator with symbol \(e^{t\cdot \Psi }\), i.e.,

$$\begin{aligned} T_th(x)= \frac{1}{(2\pi )^{d/2}} \int _{{\mathbb {R}}^d} e^{i\xi \cdot x} e^{t\cdot \Psi (\xi )} {\hat{h}}(\xi ) \textrm{d}\xi , \qquad h\in {\mathcal {S}}({\mathbb {R}}^d). \end{aligned}$$(2.15) -

2.

G is a pseudo-differential operator with symbol \(\Psi \), i.e., for each \(i=1, \dots , N\) we have

$$\begin{aligned} G_ih(x)= \frac{1}{(2\pi )^{d/2}} \int _{{\mathbb {R}}^d} e^{i\xi \cdot x} \psi _i(\xi ) {\hat{h}}(\xi ) \textrm{d}\xi , \qquad h\in {\mathcal {S}}({\mathbb {R}}^d). \end{aligned}$$

Proof

-

1.

Since (2.14) is a convolution integral, its Fourier transform can be computed as

$$\begin{aligned} \frac{1}{(2\pi )^{d/2}} \int _{{\mathbb {R}}^d} e^{-i\xi \cdot x} T_t h(x)\text {d}x= {\hat{h}}(\xi ) {\mathbb {E}} e^{i\xi \cdot X_t}, \end{aligned}$$where \({\mathbb {E}} e^{i\xi \cdot X_t} =e^{t\cdot \Psi (\xi )}\) by using (2.3). Then, Fourier inversion gives the result.

-

2.

By applying formula (2.12), we have that

$$\begin{aligned} G_i h(x)&= \frac{\partial }{\partial t_i} T_tu (x)\biggl |_{t=0}\\&= \biggl [ \lim _{t_i\rightarrow 0} \frac{1}{(2\pi )^{d/2}} \int _{{\mathbb {R}}^d} e^{i\xi \cdot x} \frac{e^{t_i \psi _i(\xi )}-1}{t_i} \prod _{k=1, k\ne i}^N e^{t_k\psi _k(\xi )} {\hat{h}}(\xi ) \text {d}\xi \biggr ]_{t=0}. \end{aligned}$$The limit can be taken inside the integral due to dominated convergence theorem. Indeed, \(| e^{t_k\psi _k(\xi )} | \le 1\) for each k because \(e^{t_k\psi _k(\xi )}\) is the characteristic function of \(\mu _{t_ke_k}\) (see (2.1)); moreover,

$$\begin{aligned} \biggl | \frac{e^{t_i \psi _i(\xi )}-1}{t_i} \biggr |\le |\psi _i(\xi )| \le C_i (1+|\xi |^2), \end{aligned}$$where for the last inequality we used [4, p. 31]. Thus, the absolute value of the integrand is dominated by \((1+|\xi |^2){\hat{h}} (\xi )\). But the last function is independent of \(t_i\) and is integrable on \({\mathbb {R}}^d\) because \({\hat{h}}\) is a Schwartz function. Then, by exchanging the limit and the integral, the result immediately follows.

\(\square \)

3 Composition of Random Fields

3.1 Subordinator Fields

In order to treat the composition of random fields, the main object is provided by the following definition.

Definition 3.1

A multiparameter Lévy process \((H_{t})_{t\in {\mathbb {R}}^M_+}\) is said to be a subordinator field if, for some positive integer N, it takes values in \({\mathbb {R}}^N_+ \) almost surely.

The above definition means that almost surely, \(t\rightarrow H_t\) is a non-decreasing function with respect to the partial ordering, i.e., \(t_1 \preceq t_2\) on \({\mathbb {R}}^M_+\) implies \(H_{t_1} \preceq H_{t_2}\) on \({\mathbb {R}}^N_+\).

Example 3.2

(Classical subordinators) If \(N=M=1\), then \((H_{t})_{t\in \mathbb {R_+}}\) is a classical subordinator, i.e., a non-decreasing Lévy process with values in \({\mathbb {R}}_+\). Hence, it is such that

where the Laplace exponent f is a so-called Bernstein function. Thus, it is defined by

where \(b\ge 0\) is the drift coefficient and \(\phi \) is the Lévy measure, which is supported on \({\mathbb {R}}_+\) and satisfies \(\int _{{\mathbb {R}}_+} \min (x,1) \phi (\text {d}x) <\infty \). For more details on this subject, consult [45].

Example 3.3

(Multivariate subordinators) If \(M=1\) and \(N\ge 1\), then \((H_t) _{t\in {\mathbb {R}}_+ }\) is a multivariate subordinator in the sense of [5]. Thus, it is a one-parameter Lévy process with values in \({\mathbb {R}}^N_+\), i.e., it is non-decreasing in each marginal component. Here, \(H_t\) has Laplace transform

where the Laplace exponent S is a multivariate Bernstein function. Hence, it is defined by

where \(b \in {\mathbb {R}}^N_+\), and the Lévy measure \(\phi \) is supported on \({\mathbb {R}}^N_+\) and satisfies

It is known (see e.g., Sect. 2 in [8]) that if \(H_t\) has a density p(x, t), then it solves

where \( {\mathcal {D}}_x\) denotes the N-dimensional version of the generalized fractional derivative defined in (1.6), i.e.,

Example 3.4

(Multivariate stable subordinators) We here consider a special sub-case of Example 3.3, in which the multivariate subordinator is stable. In order to define this process by means of its Lévy measure, we need to use the spherical coordinates r and \({\hat{\theta }}\), which respectively denote the length and the direction of jumps. Clearly, \({\hat{\theta }}\) takes values in the set \({\mathcal {C}}^{N-1}=\{{\hat{\theta }}\in {\mathbb {R}}^N_+: |{\hat{\theta }}|=1\}\) because, by definition, all the marginal components make positive jumps. So, a multivariate subordinator \((H_t)_{t\in {\mathbb {R}}_+}\) is said to be \(\alpha \)-stable if its Lévy measure can be written in spherical coordinates as

where \(\alpha \in (0,1)\) denotes the stability index and \(\sigma \) is the so-called spectral measure, which is proportional to the probability distribution of the jump direction \({\hat{\theta }}\). By simple calculations, it is easy to see that in this case, the Laplace exponent takes the form

for a suitable \(k>0\). It is known that \(H_t\) has a density p(x, t) solving the following equation:

where \({\mathcal {D}}_x^{\alpha , \sigma }\) is the so-called fractional gradient, i.e., a pseudo-differential operator defined by

Note that (3.4) represents the average under \(\sigma (\text {d}{\hat{\theta }})\) of the fractional power of the directional derivative along the direction \({\hat{\theta }}\). For some theory and applications about this operator, consult Example 2.2 in [8], chapter 6 in [33] and also [13, 28].

When \(N=2\), the Lévy measure has the form

and, by denoting \(\lambda =(\lambda _1, \lambda _2)\), the Laplace exponent can be written as

whence the fractional gradient, acting of a function \((x,y)\rightarrow h(x,y)\), has the form

3.1.1 The General Case

In the general case where N and M are any positive integers, the Laplace transform of \(H_t\) can be computed as follows. Let \(t=(t_1, \dots , t_M)\in {\mathbb {R}}^M_+\) and let \(\{e_1, \dots , e_M\}\) be the canonical basis of \({\mathbb {R}}^M\). We can use Proposition 2.7 to say that there exist independent random vectors \(Z^{(k)}_{t_k}\), \(k=1, \dots , M\), with \( Z ^{(k)}_{t_k} \overset{d}{=}\ H_{t_ke_k}\), such that

But, by the construction of \((H_t)_{t\in {\mathbb {R}}^M_+}\), it follows that for each \(k=1, \dots , M\), the process \((H_{t_ke_k})_{t_k \in {\mathbb {R}}_+}\) is a multivariate subordinator in the sense explained in the previous Example 3.3. Hence, there exist \(b_k\in {\mathbb {R}}^N_+\) and a Lévy measure \(\phi _k\) on \({\mathbb {R}}^N_+\) (satisfying \(\int _{{\mathbb {R}}^N_+} \min (|x|, 1) \phi _k (\text {d}x) <\infty \)) such that \(H_{t_ke_k}\) has Laplace transform

where \(S_k\) is a multivariate Bernstein functions, defined by

Hence, the Laplace transform of \(H_t\) can be compactly written as

where \(t=(t_1, \dots , t_M)\) and

We call (3.8) the multidimensional Laplace exponent of the subordinator field. The above decomposition of a subordinator field into the sum (in distribution) of independent multivariate subordinators will play a decisive role in the following.

A sample path of a stable subordinator field is shown in Fig. 3.

3.2 Subordinated Fields

Let \((X_{s})_{s\in {\mathbb {R}}^N_+}\) be an N-parameter Lévy process with values in \({\mathbb {R}}^d\) and let \((H_{t})_{t\in {\mathbb {R}}^M_+}\) be a subordinator field (in the sense of Sect. 3.1) with values in \({\mathbb {R}}^N_+ \). In the following, \((X_{s})_{s\in {\mathbb {R}}^N_+}\) and \((H_{t})_{t\in {\mathbb {R}}^M_+}\) are assumed to be independent. We consider the subordinated random field

It is known that (3.9) is also a multiparameter Lévy process (see [39, Thm. 3.12]). Let \(\mu _s\), \(\rho _t\) and \(\nu _t\), respectively, denote the probability laws of \(X_s\), \(H_t\) and \(Z_t\). Then, by conditioning, for any Borel set \(B\subset {\mathbb {R}}^d\), we have

Processes of type (3.9) have also been studied in the literature.

In [5], the authors study the case \(M=1\) and prove that \((Z_t)_{t\in {\mathbb {R}}^+}\) is again a Lévy process and find the characteristic triplet.

In [38,39,40], the authors consider the general case \(M\ge 1\); actually their study is more general, since they consider cone-parameter Lévy processes subordinated by cone-valued Lévy processes.

Now, let \((T_t) _{t\in {\mathbb {R}}^N_+}\) be the Feller semigroup associated with \(X _t\), defined in (2.14), with generator \(G=(G_1, \dots , G_N)\). Moreover, let \((T^Z _t)_{t\in {\mathbb {R}}^M_+}\) be the Feller semigroup associated with \(Z_t\), i.e.,

where \(\nu _t\) is the law of \(Z_t\) defined in (3.10), whence we can rewrite (3.11) as a subordinated semigroup:

In the following theorem, we determine the form of the generator \(G^Z= (G^Z_1, \dots , G^Z_M)\) for the subordinated semigroup, by restricting to the Schwartz space \({\mathcal {S}}({\mathbb {R}}^d)\). We obtain a multiparameter generalization of the well-known Phillips formula (see e.g., [43, p. 212]) holding for one-parameter subordinated semigroups.

Theorem 3.5

For each \(k=1, \dots , M\), we have

where \(b_k\) and \(\phi _k\) have been defined in (3.6).

Proof

We first compute the characteristic function of \(Z_t= X_{H_t}\), following the lines of [5]. By conditioning, and using (2.3) and (3.7), we have

where \(t=(t_1, \dots , t_M)\) and

Thus, by using Theorem 2.16, it follows that \(T^Z_t\) is a pseudo-differential operator with symbol \(e^{-t \cdot S(-\Psi )}\), i.e.,

while for each \(k=1, \dots , M\), \(G^Z_k\) is a pseudo-differential operator with symbol

This means that

But, using (3.6), we have that

Then, after substituting (3.16) in (3.15), we can solve the inverse Fourier transform, and taking into account the representation of \(T_t\) given in (2.15), we obtain the result.

\(\square \)

Remark 3.6

In the spirit of operational functional calculus, the well-known Phillips Theorem (see e.g., [43, p. 212]) can be informally stated as follows. Let a Markov process \((X_t)_{t \in {\mathbb {R}}_+}\) have generator G and let a subordinator \((H_t)_{t\in {\mathbb {R}}_+}\) have Bernstein function f. Then, the subordinated process \((X_{H_t})_{t\in {\mathbb {R}}_+}\) has generator \(-f(-G)\).

In a similar way, our Theorem 3.5 can be stated as follows.

Let \((X_t)_{t\in {\mathbb {R}}^N_+}\) be a multiparameter Lévy process with generator \(G=(G_1, \dots , G_N)\) and let \((H_t)_{t\in {\mathbb {R}}^M_+}\) be a subordinator field associated with the multivariate Bernstein functions \(S_1, S_2, \dots , S_M\), namely its Laplace exponent is \(S=(S_1, S_2, \dots , S_M)\). Then, the subordinated field \((X_{H_t})_{t\in {\mathbb {R}}^M_+}\) has generator

3.3 Stochastic Solution to Systems of Integro-Differential Equations

Our extension of the Phillips theorem, given in Theorem 3.5, provides a stochastic solution to some systems of differential equations.

Indeed, let \((X_t)_{t\in {\mathbb {R}}^N_+}\) be a Multiparameter Lévy process with values in \({\mathbb {R}}^d\). Moreover, let \((H_t)_{t\in {\mathbb {R}}^M_+}\) be a subordinator field with values in \({\mathbb {R}}^N_+ \) and let \((Z_t)_{t\in {\mathbb {R}}^M_+} = (X_{H_t})_{t\in {\mathbb {R}}^M_+}\) be the subordinated field. Then, by virtue of Proposition 2.14, and using the symbolic notation of Remark 3.6, we have that, for any \(u\in {\mathcal {S}}({\mathbb {R}}^d)\), the function \({\mathbb {E}}u (x+Z_t)\) solves the system

where \(t= (t_1, \dots , t_M)\), \(G=(G_1, \dots , G_N)\) denotes the generator of \((X_t)_{t\in {\mathbb {R}}^N_+}\) and \(S_1, \dots , S_M\) are the multivariate Bernstein functions, i.e., the components of the Laplace exponent of \((H_t)_{t\in {\mathbb {R}}^M_+}\) defined in (3.8).

Example 3.7

Let \(\{e_1, \dots , e_M\}\) be the canonical basis of \({\mathbb {R}}^M\). Assume that the subordinator field \((H_t)_{t\in {\mathbb {R}}^M_+}\) is such that, for each \(i=1, \dots , M\), the component \(H_{t_ie_i}\) is a multivariate stable subordinator in the sense of Example 3.4, with index \(\alpha _i \in (0,1)\), whose multivariate Bernstein function reads

Then, the system (3.17) takes the form

where, on the right side, the fractional powers \((-G \cdot {\hat{\theta }} )^{\alpha _i}\) are well-defined because \(-G \cdot {\hat{\theta }}\) is the generator of a contraction semigroup.

Example 3.8

Let \(N=M=2\). Consider the biparameter, additive Lévy process

where \(X_1\) and \(X_2\) are independent isotropic stable processes with indices \(\alpha _1 \in (0,2]\) and \(\alpha _2\in (0,2]\), respectively. Let

be a subordinator field, such that \(H(t_1, 0)\) and \(H(0, t_2)\) are two bivariate stable subordinators in the sense of Example 3.4, respectively, having indices \(\beta _1 \in (0,1)\) and \(\beta _2\in (0,1)\) and spectral measures \(\sigma _1\) and \(\sigma _2\). Let

be the subordinated field. Then, for any \(u\in {\mathcal {S}}({\mathbb {R}}^d)\), the function \({\mathbb {E}}u(x+Z(t_1, t_2))\) solves the system

where \(-(-\Delta )^{\alpha _i/2}\) denotes the fractional Laplacian. To write the system (3.21), we used that for \(i=1,2\), the generator of the isotropic stable process \(X_i\) is \(G_i=-(-\Delta )^{\alpha _i/2}\) (see e.g., [4, p. 166]).

Example 3.9

Consider again Example 3.8. In the special case where \(\alpha _1= \alpha _2=2\), the process (3.20) is a so-called additive Brownian motion (see e.g., [17, p. 394]) and the above system simplifies to

for suitable constants \(C_1, C_2 >0\).

Example 3.10

Let \(N=1\) and \(M>1\) (so that subordination increases the number of parameters). So let \((X_t)_{t\in {\mathbb {R}}_+}\) be a one-parameter Lévy process and let \((H_t)_{t\in {\mathbb {R}}^M_+}\) be a subordinator field with values in \({\mathbb {R}}_+\). For example, assume that \((X_t)_{t\in {\mathbb {R}}_+}\) is a standard Brownian motion in \({\mathbb {R}}^d\) and, for each \(k=1, \dots , M\), \(H_{t_ke_k}\) is a stable subordinator of index \(\beta _k \in (0,1)\) (\(e_k\) denoting the k-th vector of the canonical basis). Let \((Z_t)_{t\in {\mathbb {R}}^M_+} = (X_{H_t})_{t\in {\mathbb {R}}^M_+}\) be the subordinated field. Then, \({\mathbb {E}}u(x+Z_t)\) solves

4 Subordination by the Inverse Random Field

Let \((H_t)_{t\in {\mathbb {R}}_+}\) be a multivariate subordinator in the sense of Example 3.3, which takes values in \({\mathbb {R}}^N_+\). Hence, it is defined by \(H_t=(H_1(t), \dots , H_N(t))\), where each marginal component \(H_j(t)\) is a classical subordinator. Consider a new random field \(({\mathcal {L}}_t)_{t\in {\mathbb {R}}^N_+}\) defined by

where \(L_j\) is the inverse hitting time of the subordinator \(H_j\), i.e.,

As stated in the introduction, we will call (4.1) inverse random field.

Now, let \((X_t)_{t\in {\mathbb {R}}^N_+}\) be an N-parameter Lévy process with values in \({\mathbb {R}}^d\). We are interested in the subordinated random field \((Z_t)_{t\in {\mathbb {R}}^N_+}\) defined by

This topic has many sources of inspiration. Above all, there is a well-established theory (consult e.g., [6, 7, 22, 27, 29,30,31,32,33,34, 48]) concerning semi-Markov processes of the form

where X is a Lévy process in \({\mathbb {R}}^d\) and L is the inverse hitting time of a subordinator H, i.e.,

Such processes have a great interest in statistical physics, as they arise as scaling limits of suitable continuous time random walks.

Example 4.1

A special case (see e.g., [2, 3, 21, 25, 26]) is the process

where B is a d-dimensional standard Brownian motion and \(L^\alpha \) is the inverse of a \(\alpha \)-stable subordinator independent of B, where \(\alpha \in (0,1)\). The process (4.4) is a so-called subdiffusion: the mean square displacement behaves as \(C \, t^{\alpha }\), i.e., the motion is delayed with respect to the Brownian behavior. This models the case where the moving particle is trapped by inhomogeneities or perturbations in the medium; thus, the particle runs on Brownian paths, but, for arbitrary time intervals, it is forced to be at rest, which gives rise to a sub-diffusive dynamics. Diffusions in porous media and penetration of a pollutant in the ground have this type of motion (see [35] for other applications of anomalous diffusions). The random variable \(B(L^\alpha (t))\) has a density solving the following anomalous diffusion equation

where \(\Delta \) denotes the Laplacian operator and \( {\mathcal {D}}_t ^\alpha \) is the Marchaud fractional derivative, defined by

See also [11, 12] for a tempered version of such operator and its connections to drifted Brownian motions. We finally recall that recent models of anomalous diffusion in heterogeneous media, where the fractional order \(\alpha \) is space-dependent, have been developed in [20, 41, 44] (see also [16] for a related model).

Equation (4.5) is a special case of a more general theory. Indeed, as anticipated in the Introduction, if X and L are independent, the connection of the process (4.3) with integro-differential equations is given by the following facts. Let X have a density p(x, t) solving

where \(G^*\) is the dual to the Markov generator. Moreover, let L be the inverse of a subordinator with Lévy measure \(\nu \). If L has a density l(x, t), then, by conditioning, X(L(t)) has a density

Such a density solves

where \({\overline{\nu }}(t)= \int _t^\infty \nu (\text {d}x)\) and the operator \({\mathcal {D}}_t\), usually called generalized Marchaud fractional derivative, is defined by

Concerning the link between semi-Markov processes and non-local in time equations, consult also [36, 37] for a discrete-time model and [42] for the theory of abstract equations related to semi-Markov Random evolutions.

The rest of this section will be structured as follows. A special case of biparameter Lévy processes will be treated in Sect. 4.1 and a related model of anisotropic subdiffusion will be presented in Sect. 4.2. Finally, the special case where the \(L_j\), \(j=1, \dots , N\), are independent will be presented in Sect. 4.3 and some long-range dependence properties will be analyzed.

4.1 Subordination of Some Two-Parameter Lévy Processes

Consider the following biparameter Lévy process with values in \({\mathbb {R}}^d\):

where \(X_1\) and \(X_2\) are (possibly dependent) Lévy processes with values in \({\mathbb {R}}^ {d_1}\) and \({\mathbb {R}}^{d_2}\), respectively, with \(d_1+d_2=d\).

Consider now a bivariate subordinator \((H_1 (t), H_2(t))\) and the related bivariate inverse random field \((L_1(t_1), L_2(t_2))\) as defined in (4.1).

We will consider the following assumptions:

A1) \(X_1 (t_1)\) and \(X_2(t_2)\) have marginal densities \(p_1(x_1,t)\) and \(p_2(x_2,t)\) satisfying the following forward equations:

where \(G^*_1\) and \(G^*_2\) are the duals to the generators of \(X_1\) and \(X_2\).

A2) \(X(t_1, t_2)\) has density \(p(x_1, x_2, t_1, t_2)\) satisfying the system

A3) For all \(t_1, t_2>0\), the random vector \((H_1(t_1), H_2(t_2))\) has a density \(q(x_1, x_2, t_1, t_2)\).Footnote 1

We now consider the subordinated random field

The following proposition gives a generalization of equation (4.7) adapted to the random field (4.10).

Proposition 4.2

Under the assumptions A1), A2), A3), the random vector \(X(L_1(t_1), L_2(t_2))\) has a density \(h(x_1, x_2, t_1, t_2)\) satisfying

where \({\mathcal {D}}_{t_1, t_2}\) is the bidimensional version of the generalized fractional derivative, defined in (3.1), i.e.,

Proof

Under assumption A3), the distribution of \((L_1(t_1), L_2(t_2))\) is the sum of two components (see [8, sect. 3.1]): the first one is absolutely continuous with respect to the bidimensional Lebesgue measure, with density l, namely

while the second one has support on the bisector line \(x_1 = x_2\), with one-dimensional Lebesgue density \(l_*(x,t_1, t_2)\) (i.e., \(P(L_1(t_1)= L_2(t_2))= \int _0^\infty l_*(x,t_1, t_2)\text {d}x)\).

Then, by using a simple conditioning argument, the random vector \(X(L_1(t_1), L_2(t_2))\) has density

By applying \({\mathcal {D}}_{t_1, t_2}\) to both sides and by using [8, Thm 3.6], we have

Now, we integrate by parts by using assumptions A1 and A2. We also use that \(X_1(0)=0\) and \(X_2(0)=0\) almost surely, which implies that \(P(X_1(0) \in A, X_2(t_2)\in B)= {\mathcal {I}} _{(0\in A)} P(X_2 (t_2)\in B)\) and \(P(X_1(t_1) \in A, X_2(0)\in B)= P(X_1 (t_1)\in A) {\mathcal {I}} _{(0\in B)}\); thus we get

where

In the above calculations, we have taken into account that

since the total derivative of \(p(x_1, x_2, t_1, t_2)\), with \(t_1=u\) and \(t_2=u\), is given by

In the region \(x_1\ne 0, x_2 \ne 0\), we have

which concludes the proof. \(\square \)

A sample path of a time-changed field is shown in Fig. 4.

4.2 Anomalous Diffusion in Anisotropic Media

As a byproduct of the results of Sect. 4.1, we here propose another model of subdiffusion which extends the one treated in Example (4.1), by including it as a special case.

As explained, the process (4.4) models a subdiffusion through an isotropic medium, i.e., the trapping effect is the same in all coordinate directions (e.g., all components of the Brownian motion are delayed by the same random time process). Hence, the subordinated process (4.4) is isotropic as well as the Brownian motion.

Thus, it is natural to search for a model of subdiffusion in the case where the external medium is not isotropic.

Actually, a first model of anisotropic subdiffusion has been proposed in [8, sect. 5]. Here, the authors defined a process

where \((B_1, B_2)\) is a bidimensional standard Brownian motion with independent components and \((L_1, L_2)\) is the inverse random field of \((H_1, H_2)\), the last one being a bivariate stable subordinator of index \(\alpha \) (in the sense of Example 3.4). The authors in [8] prove that the law of Z(t) is not rotationally invariant; hence, the process is anisotropic. However, the mean square displacement along the direction \(\theta \) grows as \(C_\theta \, t^\alpha \); hence, the spreading rate \(\alpha \) is the same for all coordinate directions (that is, anisotropy is given by the constant \(C_\theta \) alone). Thus, from a physical point of view, the model presented in [8] differs little from the isotropic model, which was previously existing in the literature (where \(C_\theta \) is independent of \(\theta \)). In a sense, this is due to the fact that the subordinator \(H(t)= (H_1(t), H_2(t))\) used in [8] is characterized by the isotropic scaling \(H(ct)\overset{d}{=}c^{1/\alpha }H(t)\). In the following, we will improve these shortcomings, by considering a bivariate operator stable subordinator, which is characterized by the anisotropic scaling \(H(ct)\overset{d}{=}c^A H(t)\), where A is a linear operator (whose eigenvalues determine the spreading rates in different directions). On this points, see the discussion before formula (4.16), and also the Examples from 4.3 onward.

We need to recall some notions on operator stability (consult [15] and [46]). A random vector X with values in \({\mathbb {R}}^d\) is said to be operator stable if, for any positive integer n, there exist a vector \(c_n\in {\mathbb {R}}^d\) and a \(d\times d\) matrix A such that n indipendent copies \(X_1, \dots , X_n\) of X satisfy

where the matrix power \(n^A\) is defined by

In the special case \(A=\frac{1}{\alpha } I\), with \(\alpha \in (0,2]\) and I denoting the identity matrix, we have that X is \(\alpha \)-stable. In the general case, A has eigenvalues whose real parts have the form \(1/\alpha _i\), with \(\alpha _i \in (0,2], i=1, \dots , d\). We stress that the matrix A is not unique, i.e., there may be different \(n\times n\) matrices satisfying (4.13) (unlike what happens in the stable case, where the index \(\alpha \) is uniquely defined).

Operator stable laws are infinite divisible, hence they correspond to some Lévy processes. A Lévy process \(X(t), t\ge 0\) is said to be an operator stable Lévy motion if X(1) is an operator stable random vector. Note that such a process is characterized by the anisotropic scaling \(X(ct) \overset{d}{=}c^A X(t)\). This property is a generalization of self-similarity of \(\alpha \)-stable processes where the scaling is the same for all coordinates, i.e., \(X(ct) \overset{d}{=}\ c^{1/\alpha } X(t) \).

We are now ready to present the model of anisotropic subdiffusion. So, let us consider a bivariate subordinator \((H_1(t), H_2(t))\) which is constructed as an operator stable Lévy motion with values in \({\mathbb {R}}^2_+\). In this case, A has eigenvalues whose real parts have the form \(1/\alpha _i\), with \(\alpha _i \in (0,1)\), \(i=1,2\). Now, let \(r>0\) and \( \theta \in [0, \frac{\pi }{2}]\) be the so-called Jurek coordinates (see e.g., [15] and [33, p.185]) which are defined by the mapping \({\mathbb {R}}^2_+\ni x= r^A{\hat{\theta }} \), where \({\hat{\theta }}= (\cos \theta , \sin \theta )\). In this new coordinates, the bidimensional Lévy measure can be expressed as

where M is a probability measure on the angular component. Then, the operator \({\mathcal {D}}_{x}\), \(x \in {\mathbb {R}}^2_+\), defined in formula (3.1) of Example 3.3, takes the form

If \((H_1(t), H_2(t))\) is a bivariate stable subordinator (see Example 3.4), i.e., \(A= \frac{1}{\alpha } I \), by a simple change of variables, one re-obtains the fractional gradient defined in formula (3.4).

Now, let \((L_1(t_1), L_2(t_2))\) be the inverse random field of \((H_1(t), H_2(t))\) and let \((B_1(t), B_2(t))\) be a bidimensional standard Brownian motion with independent components. Consider the time-changed process

The process (4.15) is a model of anisotropic subdiffusion. Indeed consider the random variable

representing the displacement along the direction \({\hat{\theta }} = (\cos \theta , \sin \theta )\). By conditioning, the mean square displacement can be written as

which, in general, depends on \(\theta \) because of anisotropy.

In the spirit of [8, Sect. 4], a governing equation for the process (4.15) can be obtained by considering the related random field \((B_1(L_1(t_1)), B_2(L_2(t_2)))\). Indeed, by applying Proposition 4.2 of the previous Section, it has a density \(h(x_1, x_2, t_1, t_2)\) satisfying the anomalous diffusion equation

where the operator \({\mathcal {D}} ^{A,M} _{t}\), defined in (4.14), now acts on \(t=(t_1, t_2)\).

Example 4.3

If \(L_1(t)=L_2(t)=L(t)\), where L(t) is the inverse of a \(\alpha \)-stable subordinator, the process (4.15) reduces to the isotropic subdiffusion (4.4). In this case, we have \({\mathbb {E}}L(t)=Ct^\alpha \). Thus, \({\mathbb {E}}Z_{\theta } ^2 (t)=Ct^\alpha \), which is independent of \(\theta \) because of isotropy.

Example 4.4

If A has the form \(\frac{1}{\alpha }I\), where I is the identity matrix, then Z(t) reduces to the anisotropic subdiffusion considered in [8, sect. 5]. In this case, \({\mathbb {E}} L_1(t)= C_1 t^\alpha \) and \({\mathbb {E}} L_2(t)= C_2 t^\alpha \). Hence, following formula (4.16), the mean square displacement along the direction \(\theta \) has the form \({\mathbb {E}}Z_{\theta } ^2 (t)=C_\theta t^\alpha \), where \(C_\theta = C_1 \cos ^2 \theta + C_2 \sin ^2 \theta \).

Example 4.5

If \(H_1(t)\) and \(H_2(t)\) are independent stable subordinators, then the matrix A is diagonal with elements \(1/\alpha _1\) and \(1/\alpha _2\). If \(\alpha _1 \ne \alpha _2\), the process (4.15) is anisotropic, in such a way that \(\alpha _1\) and \(\alpha _2\) represent the spreading rates along the two coordinate directions. Indeed, since \({\mathbb {E}}L_i(t)= C_i t^ {\alpha _i}\) for \(i=1,2\), then the mean square displacement along a direction \({\hat{\theta }}\) has the form \({\mathbb {E}} Z_\theta ^2 (t) = C_1 t^ {\alpha _1} \cos ^2 \theta + C_2 t^ {\alpha _2} \sin ^2\theta \) which depends on \({\hat{\theta }}\) (and, asymptotically, it behaves like \(t ^{\max (\alpha _1, \alpha _2)}\)).

Example 4.6

If A is a symmetric matrix with eigenvalues \(1/\alpha _1\) and \(1/\alpha _2\), where \(\alpha _1\) and \(\alpha _2\) are in (0, 1), then a rigid rotation of the coordinate system allows to find the two eigenvectors, along which the spreading rates are \(\alpha _1\) and \(\alpha _2\), respectively, which corresponds to the situation explained in Example 4.5.

4.3 Subordination by Independent Inverses

In the following, let \(X(t_1, \dots , t_N)\) be an N-parameter Lévy process with density p(x, t) satisfying the system

with the usual notation \(t=(t_1, \dots , t_N)\). Assume that the marginal components \(L_j(t_j)\) of the inverse random field (4.1) are mutually independent, each having density \(l_j(x, t_j)\) and Lévy measure \(\nu _j\). Consider the subordinated random field

Before stating the next result, we introduce the following notation: For a given vector \(v= (v_1, \dots , v_N)\), we introduce the vector \(v^{(j)}\) defined by \(v^{(j)}= (v_1, \dots , v_{j-1}, 0, v_{j+1}, \dots , v_N)\).

Proposition 4.7

Under the above assumptions, the subordinated field (4.17) has a density \(p^*(x,t)\) satisfying the system

where \({\mathcal {D}}^{(\nu _j)} _{t_j}\) denotes the generalized fractional derivative defined in (4.8) with Lévy measure \(\nu _j\), and \({\overline{\nu }}_j(t_j)= \int _{t_j}^\infty \nu _j(\textrm{d}\tau )\).

Proof

By conditioning, (4.17) has a density

By applying \({\mathcal {D}}^{(\nu _j)} _{t_j}\) to both members and taking into account that such operator commutes with the integral, we have

where we used that the density \(l_j(x,t_j)\) of an inverse subordinator satisfies the equation \({\mathcal {D}}^{(\nu _j)} _{t_j} l_j(x,t_j)=- \partial _x l_j(x,t_j)\) under the condition \(l_j(0, t_j)= {\overline{\nu }}_j(t_j)\) (see e.g., [22]).

Integrating by parts, we have

where the last integral can be written as

because \(l_j(u_j,0)= \delta (u_j)\). This completes the proof. \(\square \)

4.3.1 Long-Range Dependence

Consider a process of type (4.17). For each \(k=1, \dots , N\), let \(L_k(t_k)\) be the inverse of a \(\alpha \)-stable subordinator. The subordinated field exhibits a power law decay of the autocorrelation function which is slower with respect to the \(|t|^{-\frac{1}{2}}\) decay holding for multiparameter Lévy processes (which was discussed in Remark 2.10 ). This can be useful in applied fields, where spatial data exhibit long-range dependence properties.

So, let \(s\preceq t\). By using the results of Sect. 2.2, we have

where in the last step we used independence between \(L_i\) and \(L_k\) when \(i\ne k\). Putting \(s=t\), we have

By self-similarity of the inverse stable subordinator (consult e.g., Proposition 3.1 in [31]), we have \(L_k(t_k) \overset{d}{=}\ t_k ^\alpha L_k(1)\). Hence,

Thus, by using the notation \(t^\beta := \big (t_1 ^\beta , \dots , t_N^\beta \big )\), we can write

where we defined \(w_k= \sigma _k^2\, {\mathbb {E}}L_k(1)\) and \(v_k= \mu _k^2 \, {\mathbb {V}}\text {ar} L_k(1)\).

Moreover, by using Formula 10 in [23], we have

In summary, for \(| t| \rightarrow \infty \), we have

Remark 4.8

What we found in (4.18) is the multiparameter extension of the known formula holding in the \(N=1\) case, see e.g., Example 3.2 in [23]. Here, the authors considered the subordinated process \((X_{L(t)})_{t\in {\mathbb {R}}_+}\), where \((X_t) _{t\in {\mathbb {R}}_+} \) is a Lévy process and \((L(t))_{t\in {\mathbb {R}}_+}\) is the inverse of a \(\alpha \)-stable subordinator, with \(\alpha \in (0,1)\). By considering two times s and t, such that \(s<t\), and letting \(t\rightarrow \infty \), they show that the autocorrelation \(\rho (X_{L(t)}, X_{L(s)})\) behaves like \(t^{-\alpha }\) if \({\mathbb {E}}X_1\ne 0\) and \(t^{-\frac{\alpha }{2}}\) if \({\mathbb {E}}X_1= 0\). It is interesting to note that the same power law behavior is observed in the corresponding discrete-time models (see Proposition 4 in [37]).

Data Availability

All of the material is owned by the authors, and no permissions are required.

Notes

Observe that the random field \((t_1, t_2) \rightarrow (H_1(t_1), H_2(t_2))\) is not a biparameter Lévy process even if \(t\rightarrow (H_1(t), H_2(t))\) is a multivariate subordinator, unless the two marginal components are independent.

References

Adler, R.J., Monrad, D., Scissors, R.H., Wilson, R.: Representations, decompositions and sample function continuity of random fields with independent increments. Stoch. Process. Appl. 15(1), 3–30 (1983)

Ascione, G.: Tychonoff solutions of the time-fractional heat equation. Fractal Fract. 6(6), 292 (2022)

Ascione, G., Patie, P., Toaldo, B.: Non-local heat equation with moving boundary and curve-crossing of delayed Brownian motion. https://arxiv.org/pdf/2203.09850.pdf

Applebaum, D.: Lévy Processes and stochastic calculus. In: Cambridge Studies in Advanced Mathematics, vol. 116, Cambridge University Press, Cambridge (2009)

Barndorff-Nielsen, O., Pedersen, J., Sato, K.: Multivariate subordination, self-decomposability and stability. Adv. Appl. Probab. 33(1), 160–187 (2001)

Becker-Kern, P., Meerschaert, M.M., Scheffler, H.P.: Limit theorems for coupled continuous-time random walks. Ann. Probab. 32, 730–756 (2004)

Beghin, L., Macci, C., Martinucci, B.: Random time-changes and asymptotic results for a class of continuous-time Markov chains on integers with alternating rates. Modern Stoch. Theory Appl. 8(1), 63–91 (2018)

Beghin, L., Macci, C., Ricciuti, C.: Random time-change with inverses of multivariate subordinators: governing equations and fractional dynamics. Stoch. Proc. Appl. 130(10), 6364–6387 (2020)

Butzer, P.L., Berens, H.: Semi-groups of Operators and Approximation. Springer-Verlag, Berlin (1967)

Dalang, R., Walsh, J.B.: The sharp Markov property of Lévy sheets. Ann. Probab. 20, 591–626 (1992)

D’Ovidio, M., Iafrate, F., Orsingher, E.: Drifted Brownian motions governed by fractional tempered derivatives. Modern Stoch. Theory Appl. 5, 445–456 (2018)

D’Ovidio, M., Iafrate, F.: Elastic drifted Brownian motions and non-local boundary conditions. Stoch. Process. Appl. 167, 104228 (2024)

D’Ovidio, M., Garra, R.: Multidimensional fractional advection-dispersion equations and related stochastic processes. Electron. J. Probab. 19(61), 31 (2014)

Jacob, N., Schicks, M.: Multiparameter Markov processes: generators and associated martingales. Revue Roumaine des Mathematiques Pures et Appliquees 55(1), 27–34 (2010)

Jurek, Z., Mason, J.: Operator-Limit Distributions in Probability Theory. Wiley, New York (1993)

Kataria, K.K., Vellaisamy, P.: On distributions of certain state-dependent fractional point processes. J. Theoret. Probab. 32, 1554–1580 (2019)

Khoshnevisan, D.: Multiparameter processes. In: An Introduction to Random Fields. Springer Monographs in Mathematics (2002)

Khoshnevisan, D., Shi, Z.: Brownian sheet and capacity. Ann. Probab. 27, 1135–1159 (1999)

Khoshnevisan, D., Xiao, Y.: Level sets of additive Levy processes. Ann. Probab. 30, 62–100 (2002)

Kian, Y., Soccorsi, E., Yamamoto, M.: On time-fractional diffusion equations with space-dependent variable order. Ann. Henry Poincarè 19, 3855–3881 (2018)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, vol. 204, Elsevier Science B.V, Amsterdam (2006)

Kolokoltsov, V.N.: Generalized continuous-time random walks, subordination by hitting times, and fractional dynamics. Theory Probab. Appl. 53, 594–609 (2009)

Leonenko, N.N., Meerschaert, M.M., Shilling, R.L., Sikorskii, A.: Correlation structure of time-changed Lévy processes. Commun. Appl. Ind. Math. 6, e483 (2014)

Leonenko, N., Merzbach, E.: Fractional poisson sheet. Methodol. Comput. Appl. Probab. 17, 155–168 (2015)

Magdziarz, M., Schilling, R.: Asymptotic properties of Brownian motion delayed by inverse subordinators. Proc. Am. Math. Soc. 143, 4485–4501 (2015)

Magdziarz, M., Weron, A.: Ergodic properties of anomalous diffusion processes. Ann. Phys. 326(9), 2431–2443 (2011)

Maheshwari, A., Vellaisamy, P.: Fractional Poisson process time-changed by Lévy subordinator and its inverse. J. Theoret. Probab. 32(3), 1278–1305 (2019)

Meerschaert, M.M., Benson, D., Baumer, B.: Multidimensional advection and fractional dispersion. Phys. Rev. E 59(5), 1–3 (1999)

Meerschaert, M.M., Nane, E., Vellaisamy, P.: The fractional Poisson process and the inverse stable subordinator. Elect. J. Prob. 16(59), 1600–1620 (2011)

Meerschaert, M.M., Scheffler, H.P.: Triangular array limits for continuous time random walks. Stoch. Proc. Appl. 118(9), 1606–1633 (2008)

Meerschaert, M.M., Scheffler, H.P.: Limit theorems for continuous-time random walks with infinite mean waiting times. J. Appl. Probab. 41, 623–638 (2004)

Meerschaert, M.M., Straka, P.: Semi-Markov approach to continuous time random walk limit processes. Ann. Probab. 42(4), 1699–1723 (2014)

Meerschaert, M.M., Sikorskii, A.: Stochastic models for fractional calculus. In: De Gruyter Studies in Mathematics, vol. 43, Walter de Gruyter Co., Berlin (2012)

Meerschaert, M.M., Toaldo, B.: Relaxation patterns and semi-Markov dynamics. Stoch. Proc. Appl. 129(8), 2850–2879 (2019)

Metzler, R., Klafter, J.: The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

Michelitsch, T.M., Polito, F., Riascos, A.P.: Asymmetric random walks with bias generated by discrete-time counting processes. Commun. Nonlin. Sc. Num. Simul. 109, 106121 (2022)

Pachon, A., Polito, F., Ricciuti, C.: On discrete-time semi-Markov processes. Discret. Contin. Dyn. Syst. Ser. B 26(3), 1499–1529 (2021)

Pedersen, J., Sato, K.: Cone-parameter convolution semigroups and their subordination. Tokyo J. Math. 26(2), 503–525 (2003)

Pedersen, J., Sato, K.: Relations between cone-parameter Lévy processes and convolution semigroups. J. Math. Soc. Japan 56(2), 541–559 (2004)

Pedersen J., Sato K.: Semigroups and processes with parameter in a cone. In: Abstract and Applied Analysis, pp. 499–513, World Scientific Publishing, River Edge (2004)

Ricciuti, C., Toaldo, B.: Semi-Markov models and motion in heterogeneous media. J. Stat. Phys. 169(2), 340–361 (2017)

Ricciuti, C., Toaldo, B.: From Semi-Markov random evolutions to scattering transport and superdiffusion. Commun. Math. Phys. 401, 2999–3042 (2023)

Sato, K.: Levy Processes and Infinitely Divisible Distributions. Cambridge University Press, Cambridge (1999)

Savov, M., Toaldo, B.: Semi-Markov processes, integro-differential equations and anomalous diffusion-aggregation. Ann. de l’Institut Henri Poincaré (B) Prob. Stat. 56(4):2640–2671 (2020)

Schilling, R.L., Song, R., Vondracek, Z.: Bernstein functions: Theory and applications. Walter de Gruyter GmbH and Company KG, vol 37 of De Gruyter Studies in Mathematics Series (2010)

Sharpe, M.: Operator-stable probability distributions on vector groups. Trans. Amer. Math. Soc. 136, 51–65 (1969)

Schicks, M.: Investigations on Families of Probability Measures Depending on Several Parameters. PhD Thesis, Swansea University (2007)

Straka, P., Henry, B.I.: Lagging and leading coupled continuous time random walks, renewal times and their joint limits. Stoch. Proc. Appl. 121, 324–336 (2011)

Funding

Open access funding provided by Università degli Studi di Roma La Sapienza within the CRUI-CARE Agreement. The author Costantino Ricciuti acknowledges financial support under the National Recovery and Resilience Plan (NRRP), Mission 4, Component 2, Investment 1.1, Call for tender No. 104 published on 2.2.2022 by the Italian Ministry of University and Research (MUR), funded by the European Union - NextGenerationEU- Project Title “Non-Markovian Dynamics and Non-local Equations” - 202277N5H9 - CUP: D53D23005670006 - Grant Assignment Decree No. 973 adopted on June 30, 2023, by the Italian Ministry of Ministry of University and Research (MUR). The author Francesco Iafrate thanks his Institution for the support under the Ateneo Grant 2022 and MUR for the support under PRIN 2022- 2022XZSAFN: Anomalous Phenomena on Regular and Irregular Domains: Approximating Complexity for the Applied Sciences - CUP B53D23009540006.

Author information

Authors and Affiliations

Contributions

Both authors made equal contributions to the work. Both of them reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflict of interest or other interests that might be perceived to influence the results and discussion presented in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Iafrate, F., Ricciuti, C. Some Families of Random Fields Related to Multiparameter Lévy Processes. J Theor Probab (2024). https://doi.org/10.1007/s10959-024-01351-3

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10959-024-01351-3

Keywords

- Multiparameter Lévy processes

- Subordination of random fields

- Fractional operators

- Semi-Markov processes

- Anomalous diffusion