Abstract

In this paper we investigate scaling limits of the odometer in divisible sandpiles on d-dimensional tori following up the works of Chiarini et al. (Odometer of long-range sandpiles in the torus: mean behaviour and scaling limits, 2018), Cipriani et al. (Probab Theory Relat Fields 172:829–868, 2017; Stoch Process Appl 128(9):3054–3081, 2018). Relaxing the assumption of independence of the weights of the divisible sandpile, we generate generalized Gaussian fields in the limit by specifying the Fourier multiplier of their covariance kernel. In particular, using a Fourier multiplier approach, we can recover fractional Gaussian fields of the form \((-\varDelta )^{-s/2} W\) for \(s>2\) and W a spatial white noise on the d-dimensional unit torus.


1 Introduction and Main Results

Gaussian random fields arise naturally in the study of many statistical physical models. In particular, fractional Gaussian fields \(FGF_s(D):=(-\varDelta )^{-s/2} W\), where W denotes a spatial white noise, \(s\in {\mathbb {R}}\) and \(D\subset {\mathbb {R}}^d\), typically arise in the context of random phenomena with long-range dependence and are closely related to renormalization. Examples of fractional Gaussian fields include the Gaussian free field and the continuum bi-Laplacian model. We refer the reader to Lodhia et al. [14] and references therein for a complete survey on fractional Gaussian fields. In this paper we study a class of divisible sandpile models and show that their odometer functions, suitably rescaled, converge to a Gaussian limiting field indexed by a Fourier multiplier.

The divisible sandpile was introduced by Levine and Peres [11, 12], and it is defined as follows: A divisible sandpile configuration on the discrete torus \({\mathbb {Z}}_n^d\) of side-length n is a function \(s : {\mathbb {Z}}_n^d \rightarrow {\mathbb {R}}\), where s(x) indicates a mass of particles or a hole at site x. Note that here, unlike the classical Abelian sandpile model [2, 8], s(x) is a real-valued number. Given \((\sigma (x))_{x\in {\mathbb {Z}}_n^d}\) a sequence of centered (possibly correlated) multivariate Gaussian random variables, we choose s to be equal to

If a vertex \(x \in {\mathbb {Z}}_n^d\) is unstable, i.e., \(s(x)> 1\), it topples by keeping mass 1 for itself and distributing the excess \(s(x) -1\) uniformly among its neighbors. At each discrete time step, all unstable vertices topple simultaneously. The configuration s defined in (1.1) will stabilize to the all 1 configuration. The odometer \(u_n:{\mathbb {Z}}^d_n\rightarrow {\mathbb {R}}_{\ge 0}\) records the total mass emitted from each vertex in \({\mathbb {Z}}^d_n\) during stabilization. Our main theorem states that \(u_n\), properly rescaled, converges to a Gaussian random field in some appropriate Sobolev space.
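The toppling rule just described can be sketched numerically. The following is a minimal illustration on the ring \({\mathbb {Z}}_n\) (that is, \(d=1\)), not the construction used in the proofs. The function name `stabilize`, the tolerance, the sample weights and the form \(s = 1 + \sigma - \text{mean}(\sigma )\) of the initial configuration are assumptions made for the example; the latter is chosen only so that the total mass equals n.

```python
def stabilize(s0, tol=1e-12, max_steps=200_000):
    """Parallel toppling of a divisible sandpile on the ring Z_n (d = 1).

    Returns (final configuration, odometer); the odometer records the
    total mass emitted by each site.
    """
    n = len(s0)
    s = list(s0)
    u = [0.0] * n
    for _ in range(max_steps):
        # unstable sites are those with s(x) > 1; their excess is s(x) - 1
        excess = [max(v - 1.0, 0.0) for v in s]
        if max(excess) < tol:
            break
        for x in range(n):
            u[x] += excess[x]
        # every unstable site keeps mass 1 and sends half of its excess
        # to each of its two neighbours; all sites topple simultaneously
        s = [s[x] - excess[x]
             + 0.5 * (excess[(x - 1) % n] + excess[(x + 1) % n])
             for x in range(n)]
    return s, u


# Assumed initial configuration: s = 1 + sigma - mean(sigma), total mass n.
sigma = [0.9, -0.4, 0.3, -1.1, 0.5, -0.2]
mean_sigma = sum(sigma) / len(sigma)
s0 = [1.0 + v - mean_sigma for v in sigma]
s_final, u = stabilize(s0)
print("final configuration:", s_final)
print("odometer:", u)
```

The loop stops once the largest excess falls below the tolerance, so the output is an approximation of the stabilized configuration and of the odometer rather than an exact limit.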

In Cipriani et al. [4] the authors consider divisible sandpiles with nearest-neighbor mass distribution and show that for any configuration s given by (1.1) where the \(\sigma \)’s are i.i.d. with finite variance the limiting odometer is a bi-Laplacian Gaussian field \((-\varDelta )^{-1} W\) on the unit torus \({\mathbb {T}}^d\) (or \(FGF_2({\mathbb {T}}^d)\) in the notation of Lodhia et al. [14]). Relaxing the second moment assumption on \(\sigma \) leads to limiting fields which are no longer Gaussian, but alpha-stable random fields, see Cipriani et al. [7]. On the other hand, if one keeps the second moment assumption and instead redistributes the mass upon toppling to all neighbors following the jump distribution of a long-range random walk, one can construct fractional Gaussian fields with \(0< s\le 2\) ([3]). To summarize, Gaussian fields appear under the assumption of finite second moments in the initial configuration, while tuning the redistribution of the mass leads to limiting interfaces with smoothness which is at most the one of a bi-Laplacian field.

One natural question arises: What kind of sandpile models give rise to odometer interfaces which are smoother than the bi-Laplacian? It turns out that to obtain limiting fields of the form \((-\varDelta )^{-s/2} W\) such that \(s>2\) the long-range dependence must show up in the initial Gaussian multivariate variables \(\sigma \) rather than in the redistribution rule. The novelty of the present article is that it complements [3, 4, 7] by removing the assumption of independence of the weights (in the Gaussian case), and in addition provides an example of a model defined on a discrete space which scales to a limiting field \((-\varDelta )^{-s/2} W\) such that \(s>2\). Note that for the fractional case with \(s>2\) one does not have the aid of explicit integral representations for the eigenvalues, and therefore constructing the continuum field via a discrete approximation requires new approaches. To the best of the authors’ knowledge this article is the first instance of such a construction.

In the proof, we start by defining a sequence of covariance matrices \((K_n)_{n\in {\mathbb {N}}}\) for the weights of the sandpile. Their Fourier transform \(\widehat{K_n}\) is assumed to have a pointwise limit \({\widehat{K}}\) as n goes to infinity. Under suitable regularity assumptions, \({\widehat{K}}\) defines the Fourier multiplier of the covariance kernel of the limiting field. A key idea in the proof is to absorb the multiplier \({\widehat{K}}\) into the definition of the abstract Wiener space. This defines a new Hilbert space where we will construct the limiting field. Note that this approach is different from the one in Chiarini et al. [3], Cipriani et al. [4], where the covariance structure of the odometer was given. Furthermore we would like to stress that the scaling factor \(a_n\sim n^{-2}\) used for convergence (see Theorem 1) is dimension-independent, in contrast to the above-mentioned works. This follows from the fact that, since the \(\sigma \)’s are correlated, the dimensional scaling is absorbed in the covariance structure of the odometer (see Lemma 7).

Let us finally remark that depending on the parameters \(s,\,d\) the limiting field will be either a random distribution or a random continuous function. More precisely, if the Hurst parameter H of the \({\textit{FGF}}_s\) field

is strictly negative, then the limit is a random distribution while for \(H\in (k,\,k+1)\), \(k\in {\mathbb {N}}\cup \{0\}\), the field is a \((k-1)\)-differentiable function ([14], with the caveat that the results presented therein are worked out for \({\mathbb {R}}^d\) or domains with zero boundary conditions). In the case of \(H\ge 0\), a stronger result could be pursued, namely an invariance principle à-la Donsker (as, for example, in Cipriani et al. [5, Theorem 2.1], Cipriani et al. [6, Theorem 3]). To keep the same outline for all proofs we will treat the limiting field a priori as a random distribution and thus prove finite-dimensional distribution convergence by testing the rescaled odometer against suitable test functions.

1.1 Main Result

Notation In all that follows, we will consider \(d\ge 1\). We are going to work with the spaces \({\mathbb {Z}}_n^d:=[-n/2,\,n/2]^d\cap {\mathbb {Z}}^d\), the discrete torus of side-length n, and \({\mathbb {T}}^d:=[-1/2,\,1/2]^d\), the d-dimensional torus. Moreover, let \(B(z,\,\rho )\) be the ball centered at z of radius \(\rho >0\) in the \(\ell ^\infty \)-metric. We will use throughout the notation \(z\cdot w\) for the Euclidean scalar product between \(z,\,w\in {\mathbb {R}}^d\). We will let \(C, C',c\ldots \) be positive constants which may change from line to line within the same equation. We define the Fourier transform of a function \(f\in L^1({\mathbb {T}}^d)\) as \({\widehat{f}}(y):=\int _{{\mathbb {T}}^d}f(z)\exp \left( -2\pi {\imath }y\cdot z\right) \mathrm {d}z\) for \(y\in {\mathbb {Z}}^d\). We will use the symbol \(\,\widehat{\cdot }\,\) to denote also Fourier transforms on \({\mathbb {Z}}_n^d\) and \({\mathbb {R}}^d\) (cf. Sect. 2.1 for the precise definitions).

We can now state our main result. We consider the piecewise constant interpolation of the odometer on small boxes of radius \(1/(2n)\) and show convergence to the limiting Gaussian field \(\varXi ^K\) depending on the covariance \(K_n\) of the initial sandpile configuration. This field can be represented in several ways: a convenient one is to let it be the Gaussian field with characteristic functional

where f belongs to the Sobolev space \(H_K^{-1}({\mathbb {T}}^d)\) with norm

We will give the analytical background to this definition in Sect. 2.2. Note that we index the norm and the Sobolev space by the Fourier multiplier K.

Theorem 1

For \(n\in {\mathbb {N}}\), consider an initial sandpile configuration defined by (1.1) where the collection of centered Gaussians \((\sigma (x))_{x\in {\mathbb {Z}}_n^d}\) has covariance

Assume that \(\widehat{K_n}\), the Fourier transform of \(K_n\) on \({\mathbb {Z}}_n^d\), satisfies

and that

exists. Let \((u_n(x))_{x\in {\mathbb {Z}}_n^d}\) be the odometer associated with the collection \((\sigma (x))_{x\in {\mathbb {Z}}^d_n}\) via (1.1). Let furthermore \(a_n:= 4\pi ^2(2d)^{-1} n^{-2}\). We define the formal field on \({\mathbb {T}}^d\) by

Then \(a_n \varXi ^K_n(x) \) converges in law as \(n\rightarrow \infty \) to \(\varXi ^K\) in the topology of the Sobolev space \(H_K^{-\varepsilon }({\mathbb {T}}^d)\), where

(for the analytical specification see Sect. 2.2). The field \(\varXi ^K\) and the space \(H^{-\varepsilon }_K({\mathbb {T}}^d)\) depend on K, the inverse Fourier transform of \(\widehat{K}\) as in (1.3).

Structure of the Paper We will give an overview of the needed results on divisible sandpiles and Fourier analysis on the torus in Sect. 2. The proof of the main result will be shown in Sect. 3. In Sect. 4 we discuss two classes of examples. In the first class, we consider weights with summable covariances, leading to a bi-Laplacian scaling limit. In the second class the limiting odometer is a fractional field of the form \((-\varDelta )^{- s/2 }W\), \(s>2\).

2 Preliminaries

2.1 Fourier Analysis on the Torus

We will use the following inner product for \(\ell ^2({\mathbb {Z}}^d_n)\):

Let \(\varDelta _\mathrm{g}\) denote the graph Laplacian defined by

Consider the Fourier basis of the same space given by the eigenfunctions of the Laplacian \(\{\psi _w\}_{w\in {\mathbb {Z}}^d_n}\) with

The corresponding eigenvalues \(\{\lambda _w\}_{w\in {\mathbb {Z}}^d_n}\) are given by

Given \(f \in \ell ^2({\mathbb {Z}}^d_n)\), we define its discrete Fourier transform by

for \(w\in {\mathbb {Z}}^d_n\). Similarly, if \(f, g \in L^2({\mathbb {T}}^d)\) we will denote

Consider the Fourier basis \(\{\phi _{\xi }\}_{{\xi } \in {\mathbb {Z}}^d}\) of \(L^2({\mathbb {T}}^d)\) given by

and denote

It is important to notice that for \(f \in C^\infty ({\mathbb {T}}^d)\), if we define \(f_n: {\mathbb {Z}}^d_n \rightarrow {\mathbb {R}}\) by \(f_n(z) := f( {z}/{n})\), then for all \({\xi } \in {\mathbb {Z}}^d\), \(\widehat{f_n}({\xi }) \rightarrow \widehat{f}({\xi })\) as \(n\rightarrow \infty \).
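The convergence \(\widehat{f_n}({\xi }) \rightarrow \widehat{f}({\xi })\) can be checked numerically in a simple case. The sketch below works in \(d=1\) and assumes that the discrete transform carries the normalization \(n^{-d}\sum _{z} f_n(z)\exp (-2\pi \imath \, \xi \cdot z/n)\). The test function \(f(x)=1/(5-4\cos (2\pi x))\) is a hypothetical choice, picked because its Fourier coefficients \(2^{-|k|}/3\) are known in closed form.

```python
import cmath
import math


def f(x):
    """Smooth 1-periodic test function with Fourier coefficients 2^{-|k|}/3."""
    return 1.0 / (5.0 - 4.0 * math.cos(2.0 * math.pi * x))


def discrete_coefficient(n, xi):
    """n^{-1} * sum over z in Z_n of f(z/n) exp(-2 pi i xi z / n), for d = 1."""
    total = sum(f(z / n) * cmath.exp(-2j * math.pi * xi * z / n)
                for z in range(n))
    return total / n


def exact_coefficient(xi):
    """Continuum Fourier coefficient of f at frequency xi."""
    return 2.0 ** (-abs(xi)) / 3.0


# error of the discrete coefficient at xi = 1 for increasing n
errors = {n: abs(discrete_coefficient(n, 1) - exact_coefficient(1))
          for n in (4, 8, 16, 32)}
for n, err in errors.items():
    print(n, err)
```

For this particular f the error comes from aliasing of the higher frequencies and therefore decays geometrically in n; for a general smooth f one only expects decay faster than any power of n.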

Finally, we write \(C^\infty ({\mathbb {T}}^d)/\sim \) for the space of smooth functions with zero mean, that is, the space of smooth functions modulo the equivalence relation of differing by a constant.
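Returning to the eigenpairs of the graph Laplacian introduced earlier in this subsection, the following sketch verifies numerically that the Fourier modes diagonalize a nearest-neighbour Laplacian on \({\mathbb {Z}}_n^d\). It assumes the modes \(\psi _w(z)=\exp (2\pi \imath \, z\cdot w/n)\) and the normalization \(\varDelta _\mathrm{g} f(x) = (2d)^{-1}\sum _{y\sim x}(f(y)-f(x))\), for which the eigenvalue of \(\psi _w\) is \(-(2/d)\sum _{i}\sin ^2(\pi w_i/n)\). The normalization used in the paper may differ from this one by the factor 2d, and all function names are hypothetical.

```python
import cmath
import math
from itertools import product


def laplacian(f, n, d):
    """Apply the normalized graph Laplacian on Z_n^d.

    f is a dict mapping d-tuples (coordinates mod n) to complex values.
    """
    out = {}
    for x in f:
        acc = 0.0
        for i in range(d):
            for step in (-1, 1):
                y = list(x)
                y[i] = (y[i] + step) % n
                acc += f[tuple(y)] - f[x]
        out[x] = acc / (2 * d)
    return out


def psi(w, n, d):
    """Fourier mode psi_w(z) = exp(2 pi i z.w / n) on Z_n^d."""
    return {z: cmath.exp(2j * math.pi * sum(a * b for a, b in zip(z, w)) / n)
            for z in product(range(n), repeat=d)}


def eigenvalue(w, n, d):
    """Eigenvalue of the normalized Laplacian for the mode psi_w."""
    return -(2.0 / d) * sum(math.sin(math.pi * wi / n) ** 2 for wi in w)


n, d = 5, 2
max_err = 0.0
for w in product(range(n), repeat=d):
    mode = psi(w, n, d)
    lap = laplacian(mode, n, d)
    mu = eigenvalue(w, n, d)
    max_err = max(max_err, max(abs(lap[z] - mu * mode[z]) for z in mode))
print("largest deviation from the eigenvalue equation:", max_err)
```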

2.2 Abstract Wiener Spaces and Continuum Fractional Laplacians

In this subsection our aim is to define the appropriate negative Sobolev space in which the convergence of Theorem 1 occurs. To do so, we repeat the classical construction of abstract Wiener spaces as done in Cipriani et al. [4] and Silvestri [15].

Lemma 2

Let \(\widehat{K}:{\mathbb {Z}}^d\rightarrow {\mathbb {R}}_{>0}\) be the limiting Fourier multiplier as defined in Theorem 1. For \(a < 0\) the following

is an inner product on \(C^{\infty }({\mathbb {T}}^d)/\sim .\)

Proof

The linearity and conjugate symmetry are immediate. Furthermore, since (1.3) holds it follows that

where the sum converges because \(a<0\) and \(f\in (C^\infty ({\mathbb {T}}^d)/\sim ) \subset (L^2({\mathbb {T}}^d)/\sim )\). On the other hand, if we have \((f,f)_{K,a}=0\) then we must have \(\widehat{f}(\xi )=0\) for all \(\xi \in {\mathbb {Z}}^d{\setminus }\{0\}\) and so \(f \equiv 0\). \(\square \)

Define \(H^a_K({\mathbb {T}}^d)\) to be the Hilbert space completion of \(C^\infty ({\mathbb {T}}^d)/\sim \) with respect to the norm \(\Vert \cdot \Vert _{K,a}\). Our goal is to define a Gaussian random variable \(\varXi ^K\) such that for all \(f\in C^{\infty }({\mathbb {T}}^d)/\sim \) we have \(\langle \varXi ^K,f\rangle \sim {\mathcal {N}}(0,\Vert f\Vert _{K,a}^2)\). We do this by constructing an appropriate abstract Wiener space for \(\varXi ^K\). We first of all recall the definition of such a space (see Stroock [16, § 8.2]).

Definition 2.1

A triple \((H,B,\mu )\) is called an abstract Wiener space (from now on abbreviated AWS) if

(1) H is a Hilbert space with inner product \((\cdot ,\cdot )_H\).

(2) B is the Banach space completion of H with respect to the measurable norm \(\Vert \cdot \Vert _B\). Furthermore B is supplied with the Borel \(\sigma \)-algebra \({\mathcal {B}}\) induced by \(\Vert \cdot \Vert _B\).

(3) \(\mu \) is the unique probability measure on B such that for all \(\phi \in B^*\) we have \(\mu \cdot \phi ^{-1} = {\mathcal {N}}(0,\Vert \widetilde{\phi }\Vert _H^2)\), where \(\widetilde{\phi }\) is the unique element of H such that \(\phi (h)=(\widetilde{\phi },h)_H\) for all \(h\in H\).

In order to construct a measurable norm \(\Vert \cdot \Vert _B\) as above it is sufficient to construct a Hilbert–Schmidt operator on H and set \(\Vert \cdot \Vert _B := \Vert T\cdot \Vert _H\). For \(a\in {\mathbb {R}}\) define the continuum fractional Laplace operator \((-\varDelta )^a\) acting on \(L^2({\mathbb {T}}^d)\) functions f w.r.t. the orthonormal basis \(\{\phi _{\nu }\}_{\nu \in {\mathbb {Z}}^d}\) as

We would like to make two remarks at this point. The first one is that a priori the above operator is not defined for all \(L^2({\mathbb {T}}^d)\)-functions for all values of \(a\in {\mathbb {R}}\). In fact we will construct appropriate Sobolev spaces formally consisting of \(L^2({\mathbb {T}}^d)\) functions f such that \((-\varDelta )^a f(x)\) is again square-integrable. The second remark concerns the need for mean-zero test functions in order to cancel the atom at \(\nu =0\) which arises from taking an inverse (\(a<0\)) of the Laplacian in the definition above.

In the following Lemma we will construct an orthonormal basis on \(H^{a}_K({\mathbb {T}}^d)\). We set

Lemma 3

\(\{ f_\xi \}_{\xi \in {\mathbb {Z}}^d{\setminus }\{0\}}\) is an orthonormal basis of \(H^{a}_K({\mathbb {T}}^d)\) under the norm \(\Vert \cdot \Vert _{K,a}\).

Proof

First we observe that the \(f_\xi \)’s are orthogonal:

Next we show that all \(g\in H^a_K({\mathbb {T}}^d)\) have a Fourier expansion in the \(f_\xi \)’s. Indeed, choose any \(g\in H^a_K({\mathbb {T}}^d)\); then by definition there exists a Cauchy sequence \(\{g_n\}_{n\in {\mathbb {N}}}\) in \(C^\infty ({\mathbb {T}}^d)/\sim \) such that \(\Vert g_n - g \Vert _{K,a} \rightarrow 0\) as \(n\rightarrow \infty \). As \(\{ g_n \}_{n\ge 1}\) is convergent under \(\Vert \cdot \Vert _{K,a}\), we have \(\sup _{n\in {\mathbb {N}}} \Vert g_n\Vert _{K,a}^2 < \infty \). Denote by

We have for \(\xi \in {\mathbb {Z}}^d{\setminus }\{0\}\) fixed,

as \(\ell ,\,m\rightarrow \infty \). So in fact for \(\xi \) fixed, \(\{\widetilde{g_n}(\xi )\}_{n\ge 1}\) is a Cauchy sequence and thus has a limit \({\widetilde{g}}(\xi )\). We define \(h := \sum _{\xi \in {\mathbb {Z}}^d{\setminus }\{0\}} {\widetilde{g}}(\xi ) f_\xi \). Note we have for all \(\ell \in {\mathbb {N}}\):

Therefore \(\Vert h\Vert _{K,a}^2 <\infty \), so \(h\in H^a_K({\mathbb {T}}^d)\). Moreover, we have \(g_m \rightarrow h\) as \(m\rightarrow \infty \) in \(H^a_K({\mathbb {T}}^d)\). This can be seen by applying Fatou’s lemma:

In this way we see that we must have \(g = h = \sum _{\xi \in {\mathbb {Z}}^d{\setminus }\{0\}} {\widetilde{g}}(\xi ) f_\xi \), so g has a \(f_\xi \)-Fourier expansion. \(\square \)

Next we will define a Hilbert–Schmidt operator on \(H^a_K({\mathbb {T}}^d)\).

Lemma 4

Let \(\varepsilon > {d}/{4}-a\) and \(a<0\). The norm defined by

is a Hilbert–Schmidt norm on \(H_K^a({\mathbb {T}}^d)\).

Proof

First of all, note that for \(a > b\) we have \(\Vert \cdot \Vert _{K,b} \le \Vert \cdot \Vert _{K,a}\), so \(H^a_K({\mathbb {T}}^d)\subset H^b_K({\mathbb {T}}^d)\). Now recall that T is a Hilbert–Schmidt operator on a Hilbert space H if, for \(\{f_i\}_{i\ne 0}\) an orthonormal basis of H, we have

Set \(T := (-\varDelta )^{b-a}\). We have the following for all \(\nu \in {\mathbb {Z}}^d{\setminus }\{0\}\):

In this way, we see that

if and only if \(4(b-a) < -d\) which is equivalent to \(b<- {d}/{4} +a\). We write \(-\varepsilon := b < 0\) with \(\varepsilon > {d}/{4}-a\). Note that the Banach space completion of \(H^{a}_K\) with respect to the measurable norm \(\Vert (-\varDelta )^{-(\varepsilon +a)} \cdot \Vert _{K,a}\) is exactly \(H^{-\varepsilon }_K({\mathbb {T}}^d)\). Indeed, we have

\(\square \)
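The summability condition \(4(b-a) < -d\) in the proof of Lemma 4 can be illustrated with partial sums. The sketch below takes \(d=1\) and \(a=-1\), so that the threshold is \(\varepsilon > 5/4\), and compares a value of \(\varepsilon \) above the threshold with the borderline value. The helper names and the chosen cutoffs are assumptions made for the example.

```python
A = -1.0  # the choice a = -1 (d = 1 throughout this sketch)


def hs_exponent(eps):
    """Exponent 4(b - a) with b = -eps appearing in the Hilbert-Schmidt sum."""
    b = -eps
    return 4.0 * (b - A)


def partial_sum(exponent, cutoff):
    """Sum of |nu|^exponent over nonzero integers nu with |nu| <= cutoff."""
    return 2.0 * sum(k ** exponent for k in range(1, cutoff + 1))


# eps = 1.75 > 5/4 gives exponent -3: the partial sums settle down
convergent_gap = (partial_sum(hs_exponent(1.75), 10_000)
                  - partial_sum(hs_exponent(1.75), 1_000))

# eps = 1.25 is the borderline case, exponent -1: the partial sums keep growing
borderline_gap = (partial_sum(hs_exponent(1.25), 10_000)
                  - partial_sum(hs_exponent(1.25), 1_000))

print("increment above the threshold:", convergent_gap)
print("increment at the threshold:", borderline_gap)
```

At the borderline the increment between the two cutoffs is close to \(2\log 10\), reflecting the logarithmic divergence of the harmonic series.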

Definition 2.2

(Definition of the limit field) Our AWS is the triple \((H^{a}_K,H^{-\varepsilon }_K,\mu _{-\varepsilon })\) where \(\varepsilon \) is as in Lemma 4. We will choose from now on \(a:=-1\) and denote \(\Vert \cdot \Vert _{K,\,-1}\) simply as \(\Vert \cdot \Vert _K\). The measure \(\mu _{-\varepsilon }\) is the unique Gaussian law on \(H_K^{-\varepsilon }\) whose characteristic functional is given in (1.2). The field associated with \(\varPhi \) will be called \(\varXi ^K\).

2.3 Covariance Kernels

We are going to show that positive, real Fourier coefficients on \({\mathbb {Z}}_n^d\) correspond to a positive definite function \((x,y)\mapsto K_n(x-y)\) on \({\mathbb {Z}}_n^d\times {\mathbb {Z}}_n^d\) by proving the analog of Bochner’s theorem on the discrete torus.

Lemma 5

The function \((x,y)\mapsto K_n(x,y)=K_n(x-y)\) on \(({\mathbb {Z}}_n^d)^2\) is symmetric and positive definite, and thus a well-defined covariance function, if and only if the Fourier coefficients \(\widehat{K_n}\) are real-valued, symmetric and positive.

Proof

Assume first that \(K_n\) is symmetric and positive definite. Then we have for any function \(c:{\mathbb {Z}}_n^d\rightarrow {\mathbb {R}}\) that is not the zero function that

We then find

As this needs to hold for all functions \(c:{\mathbb {Z}}_n^d\rightarrow {\mathbb {R}}\), we necessarily have \(\widehat{K_n}(\xi ) \in {\mathbb {R}}_{>0}\). Since \(K_n\) is symmetric, we also have

thus \(\widehat{K_n}\) is symmetric on \({\mathbb {Z}}_n^d\). The other direction of the proof can be obtained in a similar way; hence we will omit the proof here. \(\square \)
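The direction of Lemma 5 that is omitted above (positive, symmetric Fourier coefficients yield a covariance function) can be illustrated on the ring \({\mathbb {Z}}_n\). The sketch below uses a hypothetical multiplier \(1/(1+\xi ^2)\) and assumes the inversion formula \(K_n(x)=\sum _{\xi }\widehat{K_n}(\xi )\exp (2\pi \imath \, x\xi /n)\). It then compares the quadratic form \(\sum _{x,y}c(x)c(y)K_n(x-y)\) with its Fourier-side expression \(n^{2}\sum _{\xi }\widehat{K_n}(\xi )|\widehat{c}(\xi )|^2\).

```python
import cmath
import math

n = 8


def centered(xi):
    """Representative of xi mod n in (-n/2, n/2]."""
    xi %= n
    return xi - n if xi > n // 2 else xi


# Hypothetical multiplier: real, symmetric and strictly positive.
K_hat = [1.0 / (1.0 + centered(xi) ** 2) for xi in range(n)]


def K(x):
    """Inverse transform K_n(x) = sum_xi K_hat(xi) exp(2 pi i x xi / n)."""
    return sum(K_hat[xi] * cmath.exp(2j * math.pi * x * xi / n)
               for xi in range(n))


def quadratic_form(c):
    """sum_{x,y} c(x) c(y) K_n(x - y) for a real vector c."""
    return sum(c[x] * c[y] * K((x - y) % n)
               for x in range(n) for y in range(n))


def fourier_side(c):
    """n^2 * sum_xi K_hat(xi) |c_hat(xi)|^2, c_hat with the n^{-1} normalization."""
    total = 0.0
    for xi in range(n):
        c_hat = sum(c[x] * cmath.exp(-2j * math.pi * x * xi / n)
                    for x in range(n)) / n
        total += K_hat[xi] * abs(c_hat) ** 2
    return n ** 2 * total


c = [1.0, -2.0, 0.5, 0.0, 3.0, -1.0, 0.25, 2.0]
q = quadratic_form(c)
print("quadratic form:", q.real, "Fourier side:", fourier_side(c))
```

Since every \(\widehat{K_n}(\xi )\) is strictly positive, the Fourier-side expression is a sum of non-negative terms that vanishes only for \(c\equiv 0\), which is the positive-definiteness claimed in the lemma.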

2.4 Divisible Sandpile Model and Odometer Function

A divisible sandpile configuration \(s=(s(x))_{x\in {\mathbb {Z}}^d_n}\) is a map \(s:{\mathbb {Z}}^d_n \rightarrow {\mathbb {R}}\) where s(x) can be interpreted as the mass or hole at vertex \(x\in {\mathbb {Z}}^d_n\). It is known [13, Lemma 7.1] that for all initial configurations s such that \(\sum _{x\in {\mathbb {Z}}^d_n} s(x)=n^d\) the model will stabilize to the all 1 configuration.

We consider in this paper initial divisible sandpile configurations of the form (1.1) where \((\sigma (x))_{x\in {\mathbb {Z}}^d_n}\) are multivariate Gaussians with mean 0 and stationary covariance matrix \({K}_n\) given by

where \(K_n\) is as in Theorem 1. Let us remark that putting \({K_n}(z):=\mathbb {1}_{z=0}\) retrieves the case when the \(\sigma \)’s are i.i.d.

We will study the following quantity. Let \(u_n=(u_n(x))_{x\in {\mathbb {Z}}^d_n}\) denote the odometer [13, Section 1] corresponding to the divisible sandpile model specified by the initial configuration (1.1) on \({\mathbb {Z}}^d_n\). In words, \(u_n(x)\) denotes the amount of mass exiting from x during stabilization. Let

with \(g^z(x,y)\) the expected number of visits to y of a simple random walk on \({\mathbb {Z}}^d_n\) starting from x before being killed at z. The following characterization of \(u_n\) is similar to Levine et al. [13, Proposition 1.3], and its proof follows a similar strategy.

Lemma 6

Let \((\sigma (x))_{x\in {\mathbb {Z}}_n^d}\) be a collection of centered Gaussian random variables with covariance given in (2.5) and consider the divisible sandpile s on \({\mathbb {Z}}_n^d\) given by (1.1). Then the sandpile stabilizes to the all 1 configuration and the distribution of the odometer \(u_n(x)\) is given by

Here \((\eta (x))_{x\in {\mathbb {Z}}_n^d}\) is a collection of centered Gaussian random variables with covariance

Proof

By Lemma 7.1 in Levine et al. [13] the sandpile stabilizes to the all 1 configuration and the odometer \(u_n\) satisfies

Setting

and \(v(y) = {n^{-d}} \sum _{z\in {\mathbb {Z}}_n^d} v^z(y) = (2d)^{-1}\sum _{x\in {\mathbb {Z}}_n^d} g(x,y) (s(x)-1)\), we can see as in Levine et al. [13, Proposition 1.3] that \(u_n-v\) is constant. So \( u_n\overset{d}{=} v + c\) for some constant \(c\in {\mathbb {R}}\). Now, since each v(x) is a linear combination of Gaussian random variables, v is again Gaussian with covariance

Observe that

The expectation in the summation (2.6) can be calculated in the following way:

If we now plug this into (2.6), we obtain

Call

and define \(Y\sim {\mathcal {N}}(0,R)\) independent of v. Then

where \((\eta (x))_{x\in {\mathbb {Z}}_n^d}\) is a collection of centered Gaussians with

Now since \(u_n-v\) is constant and \(\min u_n = 0\), we conclude from (2.7) the desired statement:

\(\square \)
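The recentering by the minimum used at the end of this proof suggests a direct way to compute the odometer numerically. The sketch below assumes that the odometer on the ring \({\mathbb {Z}}_n\) solves \(\varDelta _\mathrm{g} u_n = 1 - s\) with \(\min u_n = 0\), where \(\varDelta _\mathrm{g}\) is the Laplacian normalized by \((2d)^{-1}\); this is what stabilization to the all 1 configuration amounts to. The equation is solved in Fourier coordinates instead of through the Green's function. The function name and the sample configuration are hypothetical.

```python
import cmath
import math


def odometer_via_fourier(s):
    """Solve Delta_g u = 1 - s on the ring Z_n and shift so that min u = 0.

    Assumes sum(s) = n, so that 1 - s has zero mean and a solution exists.
    """
    n = len(s)
    rhs = [1.0 - v for v in s]
    # discrete Fourier coefficients with the n^{-1} normalization
    rhs_hat = [sum(rhs[x] * cmath.exp(-2j * math.pi * x * xi / n)
                   for x in range(n)) / n
               for xi in range(n)]
    v = []
    for x in range(n):
        # divide each nonzero mode by the eigenvalue cos(2 pi xi / n) - 1
        val = sum(rhs_hat[xi] / (math.cos(2.0 * math.pi * xi / n) - 1.0)
                  * cmath.exp(2j * math.pi * x * xi / n)
                  for xi in range(1, n))
        v.append(val.real)
    shift = min(v)
    return [val - shift for val in v]


# sample configuration with total mass n = 6
s = [1.9, 0.6, 1.3, -0.1, 1.5, 0.8]
u = odometer_via_fourier(s)
print("odometer:", u)
```

The zero mode is left out of the inversion because the solution is only determined up to an additive constant, which the final shift fixes.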

The next lemma is concerned with yet another decomposition of the odometer function, namely it allows us to express its covariance in terms of Fourier coordinates. It is the analog of Cipriani et al. [4, Proposition 4].

Lemma 7

Let \(u_n:{\mathbb {Z}}_n^d\rightarrow {\mathbb {R}}_{\ge 0}\) be the odometer function as in Lemma 6. Then

Here \((\chi _{z})_{z\in {\mathbb {Z}}_n^d}\) is a collection of centered Gaussians with covariance

Proof

We denote \(g_x(\cdot ) := g(\cdot ,x)\). Subsequently we use Plancherel's theorem on the covariance matrix of \(\eta \) (see Lemma 6) to find

We find \(\widehat{g_x}(0) = n^{-d}\sum _{z\in {\mathbb {Z}}_n^d} g(z,x)\), which does not depend on x. In this way, we see that the first term is constant, and thus it gives no contribution to the variance of \(u_n\) due to the recentering by the minimum in a similar way to the proof of Proposition 1.3 of Levine et al. [13] and the proof of Proposition 4 in Cipriani et al. [4]. Considering now the second summand above, we recall Equation (20) in Levine et al. [13], which states that for \(\xi \ne 0\),

We obtain, up to a constant factor which we ignore due to the recentering,

To show the positive-definiteness of \({\mathbb {E}}[\chi _x\chi _y]\) we will show that for any function \(c:{\mathbb {Z}}_n^d \rightarrow {\mathbb {R}}\), such that c is not the zero function, \(\sum _{x,y\in {\mathbb {Z}}_n^d} {\mathbb {E}}[\chi _x\chi _y] c(x) \overline{c(y)} > 0\). First of all, since \(K_n(z-z')\) is positive definite, we conclude that \(\widehat{K_n}\) is positive by Lemma 5. Next we find

which concludes the proof. \(\square \)

3 Proof of Theorem 1

In this section we will prove Theorem 1 using the fact that convergence in distribution for the fields \(\varXi _n^K\) is equivalent to showing [10, Section 2.1]

- tightness in \(H_K^{-\varepsilon }({\mathbb {T}}^d)\);

- characterizing the limiting field.

While tightness is deferred to Sect. 3.1, we will now characterize the limiting distribution by proving that for all mean-zero \(f \in C^\infty ({\mathbb {T}}^d)/\sim \) we have, as n goes to infinity,

Observe first that

is a linear combination of Gaussians, and thus Gaussian itself for each n. In order to now prove the convergence \(\langle a_n \varXi _n^K,f\rangle {\mathop {\rightarrow }\limits ^{d}} \langle \varXi ^K,f\rangle \), it is enough to show convergence of the first and second moment of \(\langle a_n \varXi _n^K,f\rangle \) for any mean-zero \(f\in C^\infty ({\mathbb {T}}^d) / \sim \). To this end, note that by Lemma 7

so in fact

Because f is a mean-zero function, we can neglect the random constant \(C'\) and with a slight abuse of notation we write

with

As we have \({\mathbb {E}}[\chi _{nz}] = 0\) for all \(z\in {\mathbb {T}}_n^d\), it follows that \({\mathbb {E}}[\langle a_n \varXi ^K_n,f\rangle ] = 0\) for all n. For the second moment note first that

Here we have defined

In \(E_n\) we essentially approximate the integral with the value at the center of the box \(B(z,\frac{1}{2n})\). The proof will now proceed in two steps: We will first show that the first term of the right-hand side of (3.2) goes to the desired limiting variance (Proposition 8). Then we will argue in Proposition 9 that the second term in (3.2) goes to 0 in \(L^2\) and, likewise, the third term after an application of the Cauchy–Schwarz inequality.

Proposition 8

We have

where \(\Vert \cdot \Vert _{K}\) is defined in (2.3).

Proposition 9

We have that \(\lim _{n\rightarrow \infty }R_n=0\) in \(L^2({\mathbb {T}}^d)\).
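The convergence of the variance asserted in Proposition 8 can be explored numerically in \(d=1\). The sketch below rests on several assumptions and is not the paper's computation: the Laplacian is normalized by \((2d)^{-1}\), the discrete transform by \(n^{-d}\), and the variance of \(a_n n^{-1}\sum _z u_n(z) f(z/n)\) is evaluated by diagonalizing the Laplacian, which gives \(a_n^2\sum _{\xi \ne 0}\widehat{K_n}(\xi )\mu _\xi ^{-2}|\widehat{f_n}(\xi )|^2\) with \(\mu _\xi = \cos (2\pi \xi /n)-1\). The multiplier \(|\xi |^{-4s}\) with \(s=1/2\) and the test function are hypothetical choices; the zero mode is dropped, which corresponds to testing against the mean-zero part of f.

```python
import cmath
import math

S_PARAM = 0.5  # hypothetical choice of s > 0 in the multiplier |xi|^{-4s}


def K_hat(xi):
    """Multiplier |xi|^{-4s} for xi != 0 (d = 1)."""
    return abs(xi) ** (-4.0 * S_PARAM)


def f(x):
    """Smooth 1-periodic test function with Fourier coefficients 2^{-|k|}/3."""
    return 1.0 / (5.0 - 4.0 * math.cos(2.0 * math.pi * x))


def variance_n(n):
    """Variance of a_n * n^{-1} * sum_z u_n(z) f(z/n) on the ring Z_n, n odd."""
    a_n = 4.0 * math.pi ** 2 / (2.0 * n ** 2)  # a_n = 4 pi^2 (2d)^{-1} n^{-2}
    half = n // 2
    total = 0.0
    for xi in range(-half, half + 1):
        if xi == 0:
            continue
        f_hat = sum(f(z / n) * cmath.exp(-2j * math.pi * xi * z / n)
                    for z in range(n)) / n
        mu = math.cos(2.0 * math.pi * xi / n) - 1.0  # eigenvalue of Delta_g
        total += K_hat(xi) * abs(f_hat) ** 2 / mu ** 2
    return a_n ** 2 * total


def limit_variance(terms=60):
    """Truncation of sum over xi != 0 of K_hat(xi) |xi|^{-4} |f_hat(xi)|^2."""
    return 2.0 * sum(K_hat(k) * k ** -4.0 * (2.0 ** -k / 3.0) ** 2
                     for k in range(1, terms + 1))


lim = limit_variance()
for n in (15, 63, 255):
    print(n, variance_n(n), lim)
```

The integral over each box is replaced here by the value of f at the center of the box, so the quantity computed corresponds only to the leading term of the decomposition; the remainder \(R_n\) is not included.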

First we will give the proof of Proposition 8. As a preliminary tool, we need to recall the following bound on the eigenvalues \(\lambda _\xi \).

Lemma 10

(Cipriani et al. [4, Lemma 7]) There exists \(c>0\) such that for all \(n\in {\mathbb {N}}\) and \(w\in {\mathbb {Z}}^d_n{\setminus } \{0\}\) we have

Proof of Proposition 8

First of all,

If we now use the notation \(f_n :{\mathbb {Z}}_n^d\rightarrow {\mathbb {R}}\) for \(f_n(\cdot ) = f\left( {\cdot }/{n}\right) \), then we find that

We have that

For the next step in the proof we use Lemma 10. Since \(\widehat{K}_n\) is non-negative, we have that on the one hand

and on the other that

where

We will show in the following that A, which is the right-hand side of (3.4), exhibits the following limit:

and B, C vanish as \(n\rightarrow \infty \). As in Cipriani et al. [4], we split the proof into two cases. First we give a more direct proof for \(d\le 3\) and then consider \(d \ge 4\). We have that \(|\widehat{f_n}(\xi )|^2\) is uniformly bounded in \(\xi \), so

We can now use the dominated convergence theorem to obtain that

In \(d\ge 4\) we use a mollifying procedure. Take any \(\phi \in {\mathcal {S}}({\mathbb {R}}^d)\), the space of Schwartz functions, such that \(\phi \) is compactly supported on the unit cube \([-{1}/{2},\,{1}/{2})^d\) with integral 1. We write \(\phi _\kappa (\cdot ):= \kappa ^{-d} \phi \left( {\cdot }/{\kappa }\right) \) for \(\kappa >0\). In order to show the convergence of (3.7), we split the terms:

Next, we take from Cipriani et al. [4] the following bound, where \(C>0\) is some constant:

Plugging this into the above, we find

Note that by Plancherel's theorem

Since the right-hand side above converges to \(\int _{{\mathbb {T}}^d}|f(x)|^2\mathrm {d}x<\infty \), one has

uniformly in n. Now taking the limit \(\kappa \rightarrow 0\) in (3.8) gives that

For the other term, we observe that since \(\phi _\kappa \) is smooth, \(\widehat{\phi _\kappa }(\xi )\) decays rapidly in \(\xi \), and since the \(\widehat{f_n}(\xi )\) are uniformly bounded, we can use the dominated convergence theorem to obtain

We have that \(|\widehat{\phi _\kappa }(\xi )|\le 1\) and \(\widehat{\phi _\kappa }(\xi )\rightarrow 1\) as \(\kappa \rightarrow 0\). Applying the dominated convergence theorem once more, we see that

To conclude it remains to show that \(B,\,C \) as defined in (3.6) vanish. This can be achieved in a similar way to Cipriani et al. [4, proof of Proposition 5]. The key point is to observe that

is uniformly bounded in n, thanks to the uniform upper bound on \(\widehat{K_n}(\cdot )\) and (3.9). \(\square \)

Remark 11

One could construct a different approximation \(f_n(\cdot )\) of \(f(\cdot /n)\) by considering the Taylor expansion of the latter. Since the supremal error between \(f_n\) and \(f(\cdot /n)\) is of order \(n^{-2d}\), one could use the fast decay of the Fourier coefficients of \(f_n\) to apply dominated convergence directly in (3.7). This would give an alternative to the mollifying procedure, valid in all dimensions.

We continue and prove Proposition 9.

Proof of Proposition 9

Let us calculate \({\mathbb {E}}[R^2_n]\) in the following way:

because the \(\widehat{K}_n(\xi )\) are uniformly bounded and \(\Vert \xi \Vert \ge 1\). Now write \(E'_n(x):=E_n\left( {x}/{n}\right) \). Then the term above becomes

The last inequality relies on the bound given in Cipriani et al. [4, Lemma 8]:

This concludes the proof of Proposition 9. \(\square \)

3.1 Tightness in \(H_K^{-\varepsilon }\)

To complete the proof, we show that the convergence in law \(a_n\varXi ^K_n {\mathop {\rightarrow }\limits ^{d}} \varXi ^K\) as \(n\rightarrow \infty \) holds in the Sobolev space \(H_K^{-\varepsilon }({\mathbb {T}}^d)\) for any \(\varepsilon >\max \{1+{d}/{4},\,{d}/{2}\}\). We state the following theorem:

Theorem 12

The sequence \((a_n\varXi ^K_n)_{n\in {\mathbb {N}}}\) is tight in \(H_K^{-\varepsilon }({\mathbb {T}}^d)\), in fact, for all \(\delta >0\) there exists \(R_\delta >0\) such that

Proof

The proof of this Theorem is analogous to the proof of tightness in Cipriani et al. [4, Section 4.2]. We first apply Markov’s inequality and see

Now whenever we have

the assertion follows as we can choose \(R_\delta \) such that

We calculate the expectation and obtain

Now define

We have that both \(\mathbb {1}_{B(x,\frac{1}{2n})}\) and \( \phi _{{\xi }} \in L^2({\mathbb {T}}^d)\), so by the Cauchy–Schwarz inequality \(F_{n, {\xi }}\in L^1({\mathbb {T}}^d)\). Next we claim that, for some \(C'>0\),

Remark that similarly to Cipriani et al. [4, Equation (4.5)], we have

for some \(C>0\). We write \(G_{n, {\xi }}:{\mathbb {Z}}_n^d\rightarrow {\mathbb {R}}\) for \(G_{n, {\xi }}(\cdot ):=F_{n, {\xi }}\left( {\cdot }/{n}\right) \). Using this, we find

Here we have exploited the fact that \(\sup _{z \in {\mathbb {Z}}_n^d}\widehat{K_n}(z)<\infty \) by (1.3). Now by the triangle inequality,

Thus

We then use this bound to obtain

This is a constant that does not depend on n or \(\xi \), so the claim (3.10) is proven. Using it, we have by the Euler–Maclaurin formula and the boundedness of \({\widehat{K}}(\cdot )\)

The last estimate is due to the fact that \(-\varepsilon < -{d}/{2}\). \(\square \)

4 Some Examples

In this section we want to give some concrete examples of initial distributions and Gaussian fields that can be generated via scaling limits of the odometer.

4.1 Initial Gaussian Distributions with Power-Law Covariance

We would like to look at the case in which the initial distribution of the \(\sigma \)’s is \((\sigma (x))_{x\in {\mathbb {Z}}^d_n} \sim {\mathcal {N}}(0,\, K_n)\) when the covariance matrix \(K_n\) is polynomially decaying. As an example, consider

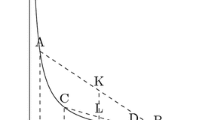

We choose \(K(0)=7\) in order to make the covariance matrix positive definite. The corresponding realizations of the odometer function are indicated in Figs. 1 and 2 and superposed in Fig. 3.

4.2 A Bi-Laplacian Field in the Limit

The next proposition shows that, even if (1.3) does not hold, but instead \(\lim _{n\rightarrow \infty } n^d \widehat{K_n}(\xi )\) exists and is finite for all \(\xi \), one can rescale the weights \(\sigma \) to go back to the setting of Theorem 1.

Proposition 13

Consider the divisible sandpile configuration defined in (1.1) with \((\sigma (x))_{x\in {\mathbb {Z}}^d_n}\) a sequence of centered multivariate Gaussians with covariance \(K_n\) satisfying

- \(\lim _{n\rightarrow \infty }K_n({w})=K({w})\) exists for all \({w} \in {\mathbb {Z}}^d,\)

- \(K\in \ell ^1({\mathbb {Z}}^d)\),

- \(C_K:=\sum _{z\in {\mathbb {Z}}^d}K(z)\ne 0\).

Let \((u_n(x))_{x\in {\mathbb {Z}}_n^d}\) be the associated odometer and furthermore \(b_n:= 4\pi ^2 (2d)^{-1}n^{(d-4)/2} C_K^{-1/2}\). We define the formal field on \({\mathbb {T}}^d\) by

Then \(b_n \varXi ^K_n \) converges in law as \(n\rightarrow \infty \) to \(\varXi ^K=FGF_2({\mathbb {T}}^d)\) in the topology of the Sobolev space \(H_K^{-\varepsilon }({\mathbb {T}}^d)\), where \(\varepsilon >\max \left\{ {d}/{2},\,{d}/{4}+1\right\} \).

Proof

The basic idea is to use, rather than the weights \(\sigma \) as in the assumptions, the rescaled weights \(\sigma ^\prime (x):=n^{d/2}\sigma (x)\), \(x\in {\mathbb {Z}}_n^d\).

The Fourier transform of the associated covariance kernel is now \(\widehat{K^\prime _n}=n^{d}\widehat{K_n}\). Now observe that

By dominated convergence and the fact that \(K\in \ell ^1({\mathbb {Z}}^d)\) we deduce

We repeat the computation of (3.2) for \(\langle b_n\varXi ^K_n,\,f\rangle ^2\) and we obtain as leading term

To compute the variance \({\mathbb {E}}[\langle b_n\varXi ^K_n,\,f\rangle ^2]\), we have

From this point onward the proof of Proposition 8 applies verbatim. In view of (4.1), the rescaling \(C_K^{-1}\) in the variance is done to obtain a limiting field with characteristic functional

which identifies the \(FGF_2({\mathbb {T}}^d)\). \(\square \)
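The dominated-convergence step of the proof can be illustrated numerically. The Python sketch below is only an illustration under stated assumptions: it works in \(d=1\), uses the hypothetical summable kernel \(K(z)=e^{-|z|}\), and assumes that \(n^d\widehat{K_n}(\xi )\) equals the unnormalized sum \(\sum _{z\in {\mathbb {Z}}_n}K(z)\exp (-2\pi \mathrm {i}\, z\xi /n)\) (the Fourier normalization is not restated in this section). For fixed \(\xi \) this sum should approach \(C_K=\sum _{z\in {\mathbb {Z}}}K(z)\).

```python
import numpy as np

def exp_kernel(z):
    """Hypothetical summable kernel K(z) = exp(-|z|) on Z."""
    return np.exp(-np.abs(z))

def kernel_symbol(kernel, n, xi):
    """Sum over Z_n of K(z) * exp(-2*pi*i*z*xi/n), with centred representatives z."""
    z = np.fft.fftfreq(n, d=1.0 / n)  # integer representatives of Z_n
    return np.sum(kernel(z) * np.exp(-2j * np.pi * z * xi / n)).real

# C_K for the kernel above: 1 + 2 * sum_{k >= 1} e^{-k} = (e + 1) / (e - 1).
C_K = (np.e + 1.0) / (np.e - 1.0)

if __name__ == "__main__":
    for n in (11, 101, 1001, 4001):
        print(n, abs(kernel_symbol(exp_kernel, n, xi=1) - C_K))
```

The printed differences shrink as \(n\) grows, which is the behaviour the proof relies on before rescaling by \(C_K^{-1}\).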

4.3 Fractional Limiting Fields

We can now use Theorem 1 to construct \((1+s)\)-Laplacian limiting fields for arbitrary \(s\in (0,\infty )\). Define an initial sandpile configuration such that \(\widehat{K_n}(\xi )=\Vert \xi \Vert ^{-4s}\) for \(\xi \ne 0\).

We need \(\widehat{K}(0)>0\) to ensure positive-definiteness of the kernel, so one may choose any positive constant for \(\widehat{K}(0)\). Using the above Fourier multiplier (observe that it is indeed uniformly bounded for \(s\in (0,\infty )\) and hence satisfies (1.3)) and applying Theorem 1, we find the following limiting distribution: for all \(f\in C^\infty ({\mathbb {T}}^d)/\sim \) we have that \(\langle \varXi ^K,f\rangle \) is a centered Gaussian with variance

An example of a limiting field and the corresponding initial configuration is given in Fig. 4.
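A configuration of this type can be simulated by filtering white noise in Fourier space. The Python sketch below is a hedged illustration, not the code behind Fig. 4. It assumes NumPy's unnormalized discrete Fourier transform as the convention for \(\widehat{K_n}\), takes \(s(x)=1+\sigma (x)-n^{-d}\sum _y\sigma (y)\) for the configuration in (1.1), and uses the characterization of the odometer as the solution of \(\varDelta _\mathrm{g}u=1-s\) with \(\min u=0\), where \(\varDelta _\mathrm{g}\) averages over the \(2d\) nearest neighbours. All function names are hypothetical.

```python
import numpy as np

def power_multiplier(n, d, s, k_hat_zero=1.0):
    """K_hat(xi) = ||xi||^(-4s) for xi != 0 and a positive constant at xi = 0."""
    freqs = np.fft.fftfreq(n, d=1.0 / n)       # centred integer frequencies
    grids = np.meshgrid(*([freqs] * d), indexing="ij")
    norm_sq = sum(g ** 2 for g in grids)
    norm_sq[(0,) * d] = 1.0                    # placeholder, avoids division by zero
    mult = norm_sq ** (-2.0 * s)
    mult[(0,) * d] = k_hat_zero
    return mult

def sample_weights(mult, rng):
    """Centered Gaussian weights whose covariance has Fourier multiplier `mult`."""
    white = rng.standard_normal(mult.shape)
    return np.fft.ifftn(np.sqrt(mult) * np.fft.fftn(white)).real

def odometer(sigma):
    """Solve Delta_g u = 1 - s with min u = 0 (assumed characterization)."""
    d, n = sigma.ndim, sigma.shape[0]
    theta = 2.0 * np.pi * np.fft.fftfreq(n)
    grids = np.meshgrid(*([theta] * d), indexing="ij")
    mu = sum(1.0 - np.cos(g) for g in grids) / d   # eigenvalues of -Delta_g
    mu[(0,) * d] = 1.0                             # zero mode is removed below
    u_hat = np.fft.fftn(sigma - sigma.mean()) / mu
    u_hat[(0,) * d] = 0.0
    u = np.fft.ifftn(u_hat).real
    return u - u.min()

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sigma = sample_weights(power_multiplier(64, 2, s=0.5), rng)
    print(odometer(sigma).shape)
```

Since \(\Vert \xi \Vert \ge 1\) for every nonzero integer frequency, the multiplier built here is bounded by \(\max \{1,\widehat{K}(0)\}\), in line with the boundedness remark above.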

4.4 Initial Gaussian Distributions with Fractional Laplacian Covariance

We have seen in the previous example that if we define an initial configuration on \({\mathbb {Z}}_n^d\) with covariance given by \(K_n(\cdot )= \left( \Vert \cdot \Vert ^{-4s}\right) ^\vee \), where the inverse Fourier transform is on \({\mathbb {Z}}_n^d\), the limiting field is Gaussian with variance (for every \(f\in C^\infty ({\mathbb {T}}^d)\) with mean zero):

However, the covariance \(K_n\) does not necessarily agree with that of the discrete s-Laplacian field on \({\mathbb {Z}}_n^d\). Namely, we recall the definition of (minus) the discrete fractional Laplacian \((-\varDelta _\mathrm{g})^{s}\) on \({\mathbb {Z}}_n^d\) [3, Section 2.1]:

where the weights \(p_n^{(s)}(\cdot )\) are given by

and \(c^{(s)}\) is the normalizing constant. The above representation has the advantage that we have an immediate interpretation of the fractional graph Laplacian in terms of random walks.

We introduce the powers \((-\varDelta _\mathrm{g})^s\) differently from (4.4), in a way which is more convenient for us. Since our main working tools are Fourier-analytic, we will define the discrete s-Laplacian \((-\varDelta _\mathrm{g})^{-s}\) through its action in Fourier space. Let \(s>0\) and \(f\in \ell ^2({\mathbb {Z}}_n^d) \) be such that

where the functions \(\psi _\nu \) were defined in (2.1) and \(\widehat{f}(\nu ):=\langle f,\,\psi _\nu \rangle \). We thus define the discrete fractional Laplacian as

having \(\lambda _\nu \) as in (2.2). Note that for the above expression to be well-defined we need f as in (4.5), in other words that \(\widehat{f}(0)=0\) which is equivalent to

This space is the discrete analog of \(C^\infty ({\mathbb {T}}^d) /\sim .\) The definition in (4.6) resembles one of the possible ways to define the continuum fractional Laplacian (Kwaśnicki [9]) and is akin to the definition of the zero-average discrete Gaussian free field (Abächerli [1]). Indeed, when \(s=1\), the two definitions coincide.
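The Fourier-space definition translates directly into a few lines of code. The following Python sketch is an illustration under assumptions rather than the definition (4.6) itself: it takes the eigenvalues of \(-\varDelta _\mathrm{g}\) to be \(\frac{2}{d}\sum _{i}\sin ^2(\pi \nu _i/n)\), which corresponds to the Laplacian averaging over the \(2d\) neighbours and may differ from the normalization of \(\lambda _\nu \) in (2.2) by a constant, and it uses NumPy's FFT in place of the basis \(\psi _\nu \). The function names are hypothetical.

```python
import numpy as np

def laplacian_eigenvalues(n, d):
    """Eigenvalues of -Delta_g on Z_n^d (assumed normalization: average over 2d neighbours)."""
    theta = 2.0 * np.pi * np.fft.fftfreq(n)
    grids = np.meshgrid(*([theta] * d), indexing="ij")
    return sum(1.0 - np.cos(g) for g in grids) / d

def inverse_fractional_laplacian(f, s):
    """Apply (-Delta_g)^(-s) to a mean-zero array f on Z_n^d via its Fourier coefficients."""
    d, n = f.ndim, f.shape[0]
    if abs(f.mean()) > 1e-10 * (1.0 + np.abs(f).max()):
        raise ValueError("f must have zero mean, i.e. f_hat(0) = 0")
    mu = laplacian_eigenvalues(n, d)
    mu[(0,) * d] = 1.0                 # placeholder; the zero mode is removed below
    g_hat = np.fft.fftn(f) * mu ** (-s)
    g_hat[(0,) * d] = 0.0
    return np.fft.ifftn(g_hat).real

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    f = rng.standard_normal((8, 8))
    f -= f.mean()                      # enforce f_hat(0) = 0
    g = inverse_fractional_laplacian(f, 1.0)
    print(g.shape)
```

For \(s=1\) the output solves \(-\varDelta _\mathrm{g}g=f\), which gives a quick consistency check against the nearest-neighbour stencil; the zero-mean requirement mirrors the condition \(\widehat{f}(0)=0\) above.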

Proposition 14

Let \(s>0\) and let \((u_n(z))_{z\in {\mathbb {Z}}_n^d}\) denote the odometer function associated with the weights \((\sigma (z))_{z\in {\mathbb {Z}}_n^d}\), which are sampled from a jointly Gaussian distribution \({\mathcal {N}}\left( 0,\,a_n^{2s} (-\varDelta _\mathrm{g})^{-2s}\right) \) with \(a_n:=4\pi ^2 (2d)^{-1}n^{-2}\). Let us define the formal field \(\varXi _n^K\) by

Then as \(n\rightarrow \infty \) the field \( a_n \varXi _n^K\) converges to \( \varXi ^{K}\) in the Sobolev space \(H^{-\varepsilon }_{K}({\mathbb {T}}^d)\), \(\varepsilon > \max \{d/2,1+d/4\}\). \(\varXi ^{K}\) is the Gaussian field on \({\mathbb {T}}^d\) such that for each \(f\in C^\infty ({\mathbb {T}}^d)/\sim \) we have

Proof

In the notation of Theorem 1, which we intend to apply here, we have

It is immediate that \(\widehat{K_n}\) is even and positive. We show that \(\widehat{K_n}(\xi )\) is bounded uniformly and converges to \(\Vert \xi \Vert ^{-4s}\). As the function \(x\mapsto x^{s}\) is strictly increasing for \(x\ge 0\), we can apply Lemma 7 from Cipriani et al. [4] and take s-powers such that the inequality still holds. This gives:

Observe first that since n is fixed and \(\Vert \xi \Vert \ge 1\), we indeed have that \(a_n^{2s} (-\lambda _\xi )^{-2s}\) is uniformly bounded in both n and \(\xi \) (recall (2.2)). Now taking the limit in the above, we have the convergence of \(\widehat{K_n}(\xi )\) to \(\Vert \xi \Vert ^{-4s}.\) Therefore the assumptions of Theorem 1 are satisfied. \(\square \)
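The boundedness and convergence used in this proof can be checked numerically. The Python sketch below is a hedged illustration: it assumes that the eigenvalues of \(-\varDelta _\mathrm{g}\) are \(\mu _\xi =\frac{2}{d}\sum _i\sin ^2(\pi \xi _i/n)\), so that the multiplier reads \(a_n^{2s}\mu _\xi ^{-2s}\) (the normalization of \(\lambda _\xi \) in (2.2) may differ by a constant factor), and the function names are hypothetical. Under this assumption the elementary bound \(\sin x\ge 2x/\pi \) on \([0,\pi /2]\) gives \(a_n^{2s}\mu _\xi ^{-2s}\le (\pi ^2/4)^{2s}\Vert \xi \Vert ^{-4s}\) for centred frequencies.

```python
import numpy as np

def discrete_multiplier(xi, n, s):
    """a_n^(2s) * mu_xi^(-2s) with a_n = 4*pi^2 / (2*d*n^2) (assumed normalization)."""
    xi = np.asarray(xi, dtype=float)
    d = xi.size
    a_n = 4.0 * np.pi ** 2 / (2.0 * d * n ** 2)
    mu = 4.0 * np.sum(np.sin(np.pi * xi / n) ** 2) / (2.0 * d)
    return (a_n / mu) ** (2.0 * s)

def limit_multiplier(xi, s):
    """The limiting multiplier ||xi||^(-4s)."""
    return float(np.sum(np.asarray(xi, dtype=float) ** 2)) ** (-2.0 * s)

if __name__ == "__main__":
    xi, s = (1, 2), 0.75
    for n in (10, 100, 1000, 10000):
        ratio = discrete_multiplier(xi, n, s) / limit_multiplier(xi, s)
        print(n, ratio)
```

The printed ratios approach 1 as \(n\) grows for the fixed frequency \(\xi =(1,2)\), which illustrates the convergence of \(\widehat{K_n}(\xi )\) to \(\Vert \xi \Vert ^{-4s}\).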

References

1. Abächerli, A.: Local picture and level-set percolation of the Gaussian free field on a large discrete torus. Stoch. Process. Appl. (2018). https://doi.org/10.1016/j.spa.2018.09.017

2. Bak, P., Tang, C., Wiesenfeld, K.: Self-organized criticality: an explanation of the \(1/f\) noise. Phys. Rev. Lett. 59(4), 381 (1987)

3. Chiarini, L., Jara, M., Ruszel, W.M.: Odometer of long-range sandpiles in the torus: mean behaviour and scaling limits (2018). arXiv preprint arXiv:1808.06078

4. Cipriani, A., Hazra, R.S., Ruszel, W.M.: Scaling limit of the odometer in divisible sandpiles. Probab. Theory Relat. Fields 172, 829–868 (2017)

5. Cipriani, A., Dan, B., Hazra, R.S.: The scaling limit of the membrane model (2018). arXiv preprint arXiv:1801.05663

6. Cipriani, A., Dan, B., Hazra, R.S.: The scaling limit of the \((\nabla +\Delta ) \)-model (2018). arXiv preprint arXiv:1808.02676

7. Cipriani, A., Hazra, R.S., Ruszel, W.M.: The divisible sandpile with heavy-tailed variables. Stoch. Process. Appl. 128(9), 3054–3081 (2018). https://doi.org/10.1016/j.spa.2017.10.013

8. Járai, A.A.: Sandpile models. Probab. Surv. 15, 243–306 (2018)

9. Kwaśnicki, M.: Ten equivalent definitions of the fractional Laplace operator. Fract. Calc. Appl. Anal. 20(1), 7–51 (2017)

10. Ledoux, M., Talagrand, M.: Probability in Banach Spaces: Isoperimetry and Processes. A Series of Modern Surveys in Mathematics Series. Springer, Berlin (1991)

11. Levine, L., Peres, Y.: Strong spherical asymptotics for rotor-router aggregation and the divisible sandpile. Potential Anal. 30(1), 1–27 (2009). https://doi.org/10.1007/s11118-008-9104-6

12. Levine, L., Peres, Y.: Scaling limits for internal aggregation models with multiple sources. J. Anal. Math. 111, 151–219 (2010). https://doi.org/10.1007/s11854-010-0015-2

13. Levine, L., Murugan, M., Peres, Y., Ugurcan, B.E.: The divisible sandpile at critical density. Ann. Henri Poincaré 17, 1677–1711 (2015). https://doi.org/10.1007/s00023-015-0433-x

14. Lodhia, A., Sheffield, S., Sun, X., Watson, S.S.: Fractional Gaussian fields: a survey (2014). arXiv:1407.5598

15. Silvestri, V.: Fluctuation results for Hastings–Levitov planar growth. Probab. Theory Relat. Fields 167, 417–460 (2015). https://doi.org/10.1007/s00440-015-0688-7

16. Stroock, D.: Abstract Wiener space, revisited. Commun. Stoch. Anal. 2(1), 145–151 (2008)

The first author acknowledges the support of the Grant 613.009.102 of the Netherlands Organisation for Scientific Research (NWO). The first and third author would like to thank Leandro Chiarini and Rajat Subhra Hazra for helpful discussions.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Cipriani, A., de Graaff, J. & Ruszel, W.M. Scaling Limits in Divisible Sandpiles: A Fourier Multiplier Approach. J Theor Probab 33, 2061–2088 (2020). https://doi.org/10.1007/s10959-019-00952-7
