Abstract

In the context of finite-sum minimization, variance reduction techniques are widely used to improve the performance of state-of-the-art stochastic gradient methods. Both their practical impact and their theoretical properties are well established. Stochastic proximal point algorithms have been studied as an alternative to stochastic gradient algorithms, since they are more stable with respect to the choice of the step size. However, their variance-reduced versions are not as well studied as the gradient ones. In this work, we propose the first unified study of variance reduction techniques for stochastic proximal point algorithms. We introduce a generic stochastic proximal-based algorithm that can be specialized to give the proximal versions of SVRG, SAGA, and some of their variants. For this algorithm, in the smooth setting, we provide several convergence rates for the iterates and the objective function values, which are faster than those of the vanilla stochastic proximal point algorithm. More specifically, for convex functions, we prove a sublinear convergence rate of O(1/k). In addition, under the Polyak-Łojasiewicz condition, we obtain linear convergence rates. Finally, our numerical experiments show that, in most cases and especially for difficult problems, the proximal variance reduction methods are more stable than their gradient counterparts with respect to the choice of the step size.


1 Introduction

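The objective of the paper is to solve the following finite-sum optimization problem

$$\begin{aligned} \min _{\varvec{\textsf{x}}\in \textsf{H}} F(\varvec{\textsf{x}}) {:}{=}\frac{1}{n}\sum _{i=1}^{n} f_i(\varvec{\textsf{x}}), \end{aligned}$$(1.1)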

where \(\textsf{H}\) is a separable Hilbert space and for all \(i \in \{1,2, \cdots , n \}\), \(f_i:\textsf{H}\rightarrow \mathbb {R}\).

Several problems can be expressed as in (1.1). The most popular example is the Empirical Risk Minimization (ERM) problem in machine learning [41, Section 2.2]. In that setting, n is the number of data points, \(\varvec{\textsf{x}}\in \mathbb {R}^d\) contains the parameters of a machine learning model (linear functions, neural networks, etc.), and the function \(f_i\) is the loss of the model \(\varvec{\textsf{x}}\) at the i-th data point. Due to the large number of data points used in machine learning/deep learning, applying gradient descent (GD) to problem (1.1) can be excessively costly, both in terms of computational power and storage. To overcome these issues, several stochastic variants of gradient descent have been proposed in recent years.

Stochastic Gradient Descent. The most common version of stochastic approximation [37] applied to (1.1) replaces, at each step, the full gradient by \(\nabla f_i\), the gradient of a single function \(f_i\), with i sampled uniformly from \(\{1, \cdots , n\}\). This procedure yields stochastic gradient descent (SGD), often referred to as incremental SGD. In its vanilla version or in modified ones (AdaGrad [16], ADAM [27], etc.), SGD is ubiquitous in modern machine learning and deep learning. Stochastic approximation [37] provides the appropriate framework to study the theoretical properties of SGD, which are nowadays well understood [10, 11, 25, 31]. Theoretical analysis shows that SGD has a worse convergence rate than its deterministic counterpart GD. Indeed, the convergence rate of GD for the function values ranges from O(1/k) for convex functions to \(O(\exp \{-C_{\mu } k\})\) for \(\mu \)-strongly convex functions, while for SGD the convergence rates of the function values range from \(O(1/\sqrt{k})\) to O(1/k). In addition, convergence of SGD is guaranteed if the step size sequence is square summable but not summable. In practice, this requirement gives little guidance, and the appropriate choice of the step size is one of the major issues in SGD implementations. In particular, if the initial step size is too large, SGD can blow up even if the sequence of step sizes satisfies the suitable decrease requirement; see, e.g., [31]. Therefore, the step size needs to be tuned by hand, which is typically time consuming when solving problem (1.1).

Stochastic Proximal Point Algorithm. Whenever the proximity operator of \(f_i\), \({\textsf {prox}}_{f_i}\) (see the notation in Sect. 2.1), is tractable, an alternative to SGD is the stochastic proximal point algorithm (SPPA): instead of the gradient \(\nabla f_i\), each iteration uses the proximity operator of a randomly chosen \(f_i\). Recent works, in particular [3, 26, 38], showed that SPPA is more robust than SGD to the choice of the step size. In addition, its convergence rates are the same as those of SGD in various settings [3, 6, 34], possibly including a momentum term [26, 42,43,44].
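As an illustration of this stability property, the following minimal Python sketch (ours, not taken from the cited works) runs SGD and SPPA on a hypothetical consistent least-squares instance with \(f_i(\varvec{\textsf{x}}) = \frac{1}{2}(\langle a_i, \varvec{\textsf{x}}\rangle - b_i)^2\), whose proximity operator has the closed form \({\textsf {prox}}_{\alpha f_i}(\varvec{\textsf{x}}) = \varvec{\textsf{x}} - \alpha \frac{\langle a_i, \varvec{\textsf{x}}\rangle - b_i}{1+\alpha \Vert a_i\Vert ^2}\, a_i\). The data, the constant step size and the helper names (`sgd_step`, `sppa_step`, `run`) are illustrative assumptions; the step size is deliberately chosen too large for SGD.

```python
import random


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def axpy(t, x, y):
    """Return t * x + y for lists x, y."""
    return [t * xi + yi for xi, yi in zip(x, y)]


def sgd_step(x, a, b, alpha):
    # Explicit step: grad f_i(x) = (<a, x> - b) a.
    return axpy(-alpha * (dot(a, x) - b), a, x)


def sppa_step(x, a, b, alpha):
    # Implicit step: prox_{alpha f_i}(x) = x - alpha * r * a,
    # with r = (<a, x> - b) / (1 + alpha * ||a||^2).
    r = (dot(a, x) - b) / (1.0 + alpha * dot(a, a))
    return axpy(-alpha * r, a, x)


def run(step, rows, rhs, alpha, iters, seed):
    rng = random.Random(seed)
    x = [0.0] * len(rows[0])
    for _ in range(iters):
        i = rng.randrange(len(rows))  # i_k sampled uniformly
        x = step(x, rows[i], rhs[i], alpha)
    return x


rng = random.Random(0)
n, d = 20, 5
rows = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
x_star = [rng.gauss(0.0, 1.0) for _ in range(d)]
rhs = [dot(a, x_star) for a in rows]  # consistent system: x_star minimizes every f_i


def dist(x):
    diff = axpy(-1.0, x_star, x)  # x - x_star
    return dot(diff, diff) ** 0.5


# alpha * ||a_i||^2 >= 3 for every i, beyond the SGD stability threshold 2.
alpha = 3.0 / min(dot(a, a) for a in rows)
x_sgd = run(sgd_step, rows, rhs, alpha, iters=50, seed=1)
x_sppa = run(sppa_step, rows, rhs, alpha, iters=50, seed=1)
print(dist([0.0] * d), dist(x_sgd), dist(x_sppa))
```

On this instance, each SPPA step multiplies the component of the error along \(a_i\) by \(1/(1+\alpha \Vert a_i\Vert ^2) \in ]0,1[\), so the distance to the solution never increases, whereas each SGD step multiplies it by \(1-\alpha \Vert a_i\Vert ^2\), whose modulus is at least 2 here.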

Variance Reduction Methods. As observed above, the convergence rates of SGD are worse than those of its deterministic counterpart. This is due to the non-vanishing variance of the stochastic estimator of the true gradient. In order to circumvent this issue, starting from SVRG [1, 22], a new wave of SGD algorithms was developed with the aim of reducing the variance and recovering the standard GD rates with a constant step size. These methods all share an O(1/k) and an \(O(e^{-C_{\mu }k})\) convergence rate for the function values for convex and \(\mu \)-strongly convex objective functions, respectively. In the convex case, the convergence is ergodic. Apart from SVRG, these methods share the idea of reducing the variance by aggregating different stochastic estimates of the gradient. Among them, we mention SAG [39] and SAGA [14]. In subsequent years, a plethora of papers on variance reduction techniques have appeared; see, for example, [17, 28, 33, 45]. The paper [20] provided a unified study of variance reduction techniques for SGD that encompasses many of them, and it has inspired our unified study of variance reduction for SPPA.

Variance-Reduced Stochastic Proximal Point Algorithms. The application of variance reduction techniques to SPPA is very recent and limited: the existing methods are Point-SAGA in [13], the proximal version of L-SVRG [28], and SNSPP proposed in [30]. All existing convergence results are provided in the smooth case, except for Point-SAGA in [13], where an ergodic and sublinear convergence rate with a constant step size is provided for nonsmooth strongly convex functions.

Contributions. Our contribution can be summarized as follows:

-

Assuming that the functions \(f_i\) are smooth, we propose a unified variance reduction technique for the stochastic proximal point algorithm (SPPA). We devise a unified analysis that extends several variance reduction techniques used for SGD to SPPA, as listed in Sect. 4, with improved rates over SPPA. In particular, we prove a sublinear convergence rate \(\mathcal {O}(1/k)\) of the function values for convex functions. The analysis in the convex case is new in the literature. Assuming additionally that the objective function F satisfies the Polyak-Łojasiewicz (PL) condition, we prove linear convergence rates for both the iterates and the function values. The PL condition on F is weaker than the strong convexity of F, or even of the \(f_i\), used in related previous work. Finally, we show that these results are achieved with constant step sizes.

-

As a byproduct, we derive and analyze some stochastic variance-reduced proximal point algorithms, in analogy to SVRG [22], SAGA [14] and L-SVRG [28].

-

The experiments show that, in most cases and especially for difficult problems, the proposed methods are more robust to the step sizes and converge with larger step sizes, while retaining at least the same speed of convergence as their gradient counterparts. This generalizes the advantages of SPPA over SGD (see [3, 26]) to variance reduction settings.

Organization. The rest of the paper is organized as follows: In Sect. 2, we present our generic algorithm and the assumptions we will need in subsequent sections. In Sect. 3, we show the results pertaining to that algorithm. Then, in Sect. 4, we specialize the general results to particular variance reduction algorithms. Section 5 collects our numerical experiments. Proofs of auxiliary results can be found in Appendix A.

2 Algorithm and Assumptions

2.1 Notation

We first introduce the notation and recall a few basic notions that will be needed throughout the paper. We denote by \(\mathbb {N}\) the set of natural numbers (including zero) and by \(\mathbb {R}_+ = [0,+\infty [\) the set of non-negative real numbers. For every integer \(\ell \ge 1\), we define \([\ell ] = \{1, \dots , \ell \}\). \(\textsf{H}\) is a Hilbert space endowed with scalar product \(\langle \cdot , \cdot \rangle \) and induced norm \(\Vert \cdot \Vert \). If \(\textsf{S}\subset \textsf{H}\) is convex and closed and \(\varvec{\textsf{x}}\in \textsf{H}\), we set \(\textrm{dist}(\varvec{\textsf{x}},\textsf{S}) = \inf _{\varvec{\textsf{z}} \in \textsf{S}} \Vert \varvec{\textsf{x}}- \varvec{\textsf{z}}\Vert \). The projection of \(\varvec{\textsf{x}}\) onto \(\textsf{S}\) is denoted by \(P_{\textsf{S}}(\varvec{\textsf{x}})\).

Bold default font is used for random variables taking values in \(\textsf{H}\), while bold sans serif font is used for their realizations or for deterministic variables in \(\textsf{H}\). The probability space underlying random variables is denoted by \((\Omega , \mathfrak {A}, {\textsf{P}})\). For every random variable \(\varvec{x}\), \(\textsf{E}[\varvec{x}]\) denotes its expectation, while if \(\mathfrak {F}\subset \mathfrak {A}\) is a sub-\(\sigma \)-algebra, we denote by \(\textsf{E}[\varvec{x}\,\vert \, \mathfrak {F}]\) the conditional expectation of \(\varvec{x}\) given \(\mathfrak {F}\). Also, \(\sigma (\varvec{y})\) represents the \(\sigma \)-algebra generated by the random variable \(\varvec{y}\).

Let \({\varphi }:\textsf{H}\rightarrow \mathbb {R}\) be a function. The set of minimizers of \({\varphi }\) is \(\mathop {\mathrm {\textrm{argmin}}}\limits {\varphi }= \{\varvec{\textsf{x}}\in \textsf{H}\,\vert \, {\varphi }(\varvec{\textsf{x}}) = \inf {\varphi }\}\). If \(\inf {\varphi }\) is finite, it is represented by \({\varphi }_*\). When \({\varphi }\) is differentiable \(\nabla {\varphi }\) denotes the gradient of \({\varphi }\). We recall that the proximity operator of \(\varphi \) is defined as \({\textsf {prox}}_{\varphi }(\varvec{\textsf{x}}) = \mathop {\mathrm {\textrm{argmin}}}\limits _{\varvec{\textsf{y}}\in \textsf{H}} \varphi (\varvec{\textsf{y}}) + \frac{1}{2} \Vert \varvec{\textsf{y}}-\varvec{\textsf{x}}\Vert ^2\).
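When no closed form is available, \({\textsf {prox}}_{\varphi }(\varvec{\textsf{x}})\) can still be computed numerically: for a differentiable convex \(\varphi \), it is the unique solution \(\varvec{\textsf{y}}\) of \(\varvec{\textsf{y}} + \nabla \varphi (\varvec{\textsf{y}}) = \varvec{\textsf{x}}\). The Python sketch below assumes, purely for illustration, the scalar function \(\varphi = \alpha \,\textrm{softplus}\) with \(\textrm{softplus}(y) = \log (1+e^y)\); it solves the optimality condition by bisection and cross-checks the result by minimizing \(\varphi (y) + \frac{1}{2}(y-x)^2\) directly with a ternary search. Both the function and the helper names are hypothetical.

```python
import math


def softplus(y):
    # Numerically stable log(1 + exp(y)).
    if y > 0:
        return y + math.log1p(math.exp(-y))
    return math.log1p(math.exp(y))


def sigmoid(y):
    # Derivative of softplus, computed without overflow.
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    z = math.exp(y)
    return z / (1.0 + z)


def prox_bisection(x, alpha, iters=200):
    """prox of alpha*softplus at x: root of g(y) = y + alpha*sigmoid(y) - x.

    g is increasing and g(x - alpha) < 0 < g(x), so the root lies in [x - alpha, x].
    """
    lo, hi = x - alpha, x
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid + alpha * sigmoid(mid) - x < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def prox_ternary(x, alpha, iters=200):
    """Same point, obtained by minimizing alpha*softplus(y) + 0.5*(y - x)^2 directly."""
    def objective(y):
        return alpha * softplus(y) + 0.5 * (y - x) ** 2

    lo, hi = x - alpha, x
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3.0, hi - (hi - lo) / 3.0
        if objective(m1) < objective(m2):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


for x, alpha in [(-3.0, 0.5), (0.0, 1.0), (2.5, 2.0)]:
    print(x, alpha, prox_bisection(x, alpha), prox_ternary(x, alpha))
```

Bracketing the root in \([x-\alpha , x]\) works because \(0 < \textrm{softplus}' < 1\); the ternary search is slower and less accurate, and serves only as an independent check.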

In this work, \(\ell ^1\) denotes the space of sequences whose norms are summable and \(\ell ^2\) the space of sequences whose norms are square summable.

2.2 Algorithm

In this paragraph, we describe a generic method for solving problem (1.1), based on the stochastic proximal point algorithm.

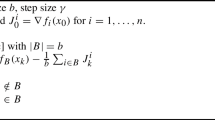

Algorithm 2.1

Let \((\varvec{e}^k)_{k \in \mathbb {N}}\) be a sequence of random vectors in \(\textsf{H}\) and let \((i_k)_{k \in \mathbb {N}}\) be a sequence of i.i.d. random variables uniformly distributed on \(\{1,\dots , n\}\), such that \(i_k\) is independent of \(\varvec{e}^0\), ..., \(\varvec{e}^{k-1}\). Let \(\alpha >0\) and set the initial point \(\varvec{x}^0 \equiv \varvec{\textsf{x}}^0 \in \textsf{H}\). Then define

$$\begin{aligned} (\forall k \in \mathbb {N}) \quad \varvec{x}^{k+1} = {\textsf {prox}}_{\alpha f_{i_k}}\big (\varvec{x}^k + \alpha \varvec{e}^k\big ). \end{aligned}$$

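Algorithm 2.1 is a stochastic proximal point method with an additional term \(\varvec{e}^k\), which will be chosen suitably to reduce the variance. As we shall see in Sect. 4, depending on the specific algorithm (SPPA, SVRP, L-SVRP, SAPA), \(\varvec{e}^k\) may be defined in various ways. Note that \(\varvec{x}^{k+1}\) is a random vector depending on \(\varvec{x}^k, i_k\), and \(\varvec{e}^k\). By definition of the proximity operator, we derive from Algorithm 2.1

$$\begin{aligned} \varvec{x}^{k+1} = \mathop {\mathrm {\textrm{argmin}}}\limits _{\varvec{\textsf{y}}\in \textsf{H}} \Big \{ f_{i_k}(\varvec{\textsf{y}}) + \frac{1}{2\alpha } \Vert \varvec{\textsf{y}}-\varvec{x}^k - \alpha \varvec{e}^k\Vert ^2 \Big \}. \end{aligned}$$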

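By the optimality condition and assuming \(f_{i_{k}}\) is differentiable, we have

$$\begin{aligned} \alpha \nabla f_{i_k}(\varvec{x}^{k+1}) = \varvec{x}^k + \alpha \varvec{e}^k - \varvec{x}^{k+1}. \end{aligned}$$(2.1)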

Let \(\varvec{w}^k = \nabla f_{i_{k}}(\varvec{x}^{k+1}) - \varvec{e}^k\). Thanks to (2.1), the update in Algorithm 2.1 can be rewritten as

$$\begin{aligned} \varvec{x}^{k+1} = \varvec{x}^k - \alpha \varvec{w}^k. \end{aligned}$$(2.2)

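Keeping in mind that \(\varvec{w}^k\) depends on the new iterate \(\varvec{x}^{k+1}\) through \(\nabla f_{i_{k}} (\varvec{x}^{k+1})\), Eq. (2.2) shows that Algorithm 2.1 can be seen as an implicit stochastic gradient method, in contrast to an explicit one, where \(\varvec{w}^k\) is replaced by

$$\begin{aligned} \varvec{v}^k = \nabla f_{i_k}(\varvec{x}^k) - \varvec{e}^k. \end{aligned}$$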

This point of view has been exploited in [20, Appendix A] to provide a unified theory of variance reduction techniques for SGD.
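The identity (2.2) can be checked numerically. The sketch below is illustrative only: it assumes least-squares components \(f_i(\varvec{\textsf{x}}) = \frac{1}{2}(\langle a, \varvec{\textsf{x}}\rangle - b)^2\) (closed-form prox) and draws an arbitrary vector `e` as a stand-in for \(\varvec{e}^k\). It performs one step \(\varvec{x}^{k+1} = {\textsf {prox}}_{\alpha f_{i_k}}(\varvec{x}^k + \alpha \varvec{e}^k)\), then compares the result with the implicit form \(\varvec{x}^k - \alpha \varvec{w}^k\) and with the explicit step \(\varvec{x}^k - \alpha \varvec{v}^k\). All names are hypothetical.

```python
import random


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def grad_fi(x, a, b):
    # Gradient of f_i(x) = 0.5 * (<a, x> - b)^2.
    r = dot(a, x) - b
    return [r * ai for ai in a]


def prox_fi(z, a, b, alpha):
    # Closed-form prox_{alpha f_i}(z).
    r = (dot(a, z) - b) / (1.0 + alpha * dot(a, a))
    return [zj - alpha * r * aj for zj, aj in zip(z, a)]


def algorithm_21_step(x, e, a, b, alpha):
    """One step of the generic scheme: x+ = prox_{alpha f_i}(x + alpha e)."""
    return prox_fi([xj + alpha * ej for xj, ej in zip(x, e)], a, b, alpha)


rng = random.Random(42)
d, alpha = 4, 0.7
x = [rng.gauss(0, 1) for _ in range(d)]
a = [rng.gauss(0, 1) for _ in range(d)]
b = rng.gauss(0, 1)
e = [rng.gauss(0, 1) for _ in range(d)]  # stand-in for the correction term e^k

x_next = algorithm_21_step(x, e, a, b, alpha)
w = [g - ej for g, ej in zip(grad_fi(x_next, a, b), e)]  # w^k: gradient at x^{k+1}
v = [g - ej for g, ej in zip(grad_fi(x, a, b), e)]       # v^k: gradient at x^k
implicit = [xj - alpha * wj for xj, wj in zip(x, w)]
explicit = [xj - alpha * vj for xj, vj in zip(x, v)]
print(max(abs(p - q) for p, q in zip(x_next, implicit)))  # rounding error only
print(max(abs(p - q) for p, q in zip(x_next, explicit)))  # generally not small
```

The first difference is at the level of floating-point rounding, since (2.2) is an exact rewriting of the proximal step; the second one is not, which is precisely the gap between the implicit and the explicit method.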

Remark 2.2

Due to the dependence of \(\varvec{x}^{k+1}\) on \(i_k\), in general \(\textsf{E}\big [\nabla f_{i_{k}}(\varvec{x}^{k+1})\,\vert \,\varvec{x}^k\big ] \ne \nabla F(\varvec{x}^{k+1})\) and \(\textsf{E}\big [f_{i_{k}}(\varvec{x}^{k+1})\,\vert \,\varvec{x}^k\big ] \ne F (\varvec{x}^{k+1})\), which makes the analysis of Algorithm 2.1 more delicate. We circumvent this problem by using \(\varvec{v}^k = \nabla f_{i_{k}}(\varvec{x}^{k}) - \varvec{e}^k\) as an auxiliary variable; see (A.3). This is helpful since \(\varvec{x}^k\) is independent of \(i_k\). Therefore, even though \(\varvec{v}^k\) does not appear explicitly in Algorithm 2.1, it plays a key role in the convergence analysis, and the assumptions needed for those derivations will be stated in terms of \(\varvec{v}^k\).

Remark 2.3

Variance reduction techniques have already been combined with the proximal gradient algorithm to solve structured problems of the form \(\sum _i f_i+g\), where the \(f_i\) are assumed to be smooth and g is only assumed to be prox-friendly. This is the model corresponding to regularized empirical risk minimization [41]. For such objective functions, the stochastic proximal gradient algorithm is as follows

As can be seen from the definition, no sum structure is assumed on g, which is the function activated through its proximity operator. Instead, stochasticity arises from the computation of the gradient of \(\sum _i f_i\), and variance reduction techniques can be exploited at this level. In this paper, we tackle the same problem in the special case \(g=0\), but we analyze a different algorithm, in which the functions \(f_i\) are activated through their proximity operators instead of their gradients. Adding a regularizer g could be considered, but our current analysis requires differentiability.

2.3 Assumptions

The first assumptions are made on the functions \(f_i:\textsf{H}\rightarrow \mathbb {R}\), \(i \in [n]\), as well as on the objective function \(F:\textsf{H}\rightarrow \mathbb {R}\).

Assumption 2.4

-

(A.i)

\(\mathop {\mathrm {\textrm{argmin}}}\limits F \ne \varnothing \).

-

(A.ii)

For all \(i \in [n]\), \(f_i\) is convex and L-smooth, i.e., differentiable and such that

$$\begin{aligned} (\forall \varvec{\textsf{x}},\varvec{\textsf{y}}\in \textsf{H}) \quad \Vert \nabla f_i(\varvec{\textsf{x}}) - \nabla f_i(\varvec{\textsf{y}})\Vert \le L\Vert \varvec{\textsf{x}}- \varvec{\textsf{y}}\Vert \end{aligned}$$for some \(L>0\). As a consequence, F is convex and L-smooth.

-

(A.iii)

F satisfies the PL condition with constant \(\mu >0\), i.e.,

$$\begin{aligned} (\forall \varvec{\textsf{x}}\in \textsf{H}) \quad F(\varvec{\textsf{x}}) - F_* \le \frac{1}{2\mu } \Vert \nabla F(\varvec{\textsf{x}})\Vert ^2, \end{aligned}$$(2.4)which implies the following quadratic growth condition (when F is convex, the two conditions are equivalent, possibly with a different constant)

$$\begin{aligned} (\forall \varvec{\textsf{x}}\in \textsf{H}) \quad \frac{\mu }{2}\mathop {\mathrm {\textrm{dist}}}\limits (\varvec{\textsf{x}}, \mathop {\mathrm {\textrm{argmin}}}\limits F)^2 \le F(\varvec{\textsf{x}}) - F_*. \end{aligned}$$(2.5)

(A.i) and (A.ii) are the common assumptions used for all the convergence results presented in Sects. 3 and 4. Assumption (A.iii) is often called the Polyak-Łojasiewicz condition; it was introduced in [29] (see also [35]) and is closely connected with the quadratic growth condition (2.5) (they are equivalent in the convex setting, see e.g. [9]). Conditions (2.4) and (2.5) are both relaxations of strong convexity and are key tools for establishing linear convergence of many iterative schemes, both in the convex [9, 15, 18, 23] and in the non-convex setting [2, 5, 8, 35, 36].

In the same fashion, in this work, Assumption (A.iii) will be used in order to deduce linear convergence rates in terms of objective function values for the sequence generated by Algorithm 2.1.
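A simple example (ours, for illustration) of a convex function that satisfies (2.4) and (2.5) without being strongly convex is \(F(x_1,x_2) = \frac{1}{2}(x_1+x_2-1)^2\) on \(\mathbb {R}^2\), whose set of minimizers is the whole line \(x_1+x_2 = 1\). With \(r = x_1+x_2-1\), one has \(\Vert \nabla F\Vert ^2 = 2r^2\), \(F - F_* = r^2/2\) and \(\textrm{dist}(\cdot ,\textrm{argmin}\,F)^2 = r^2/2\), so both (2.4) and (2.5) hold, with equality, for \(\mu = 2\). The Python snippet below checks both inequalities at random points.

```python
import random


def residual(x1, x2):
    return x1 + x2 - 1.0


def F(x1, x2):
    r = residual(x1, x2)
    return 0.5 * r * r                 # F_* = 0, attained on the whole line x1 + x2 = 1


def grad_norm_sq(x1, x2):
    r = residual(x1, x2)
    return 2.0 * r * r                 # grad F = r * (1, 1)


def dist_to_argmin_sq(x1, x2):
    r = residual(x1, x2)
    return r * r / 2.0                 # squared distance to the line x1 + x2 = 1


mu = 2.0
rng = random.Random(0)
points = [(rng.uniform(-10, 10), rng.uniform(-10, 10)) for _ in range(1000)]
# PL (2.4) and quadratic growth (2.5), with a tiny relative tolerance for rounding.
pl_holds = all(F(*p) <= grad_norm_sq(*p) / (2 * mu) * (1 + 1e-12) for p in points)
qg_holds = all(mu / 2 * dist_to_argmin_sq(*p) <= F(*p) * (1 + 1e-12) for p in points)
print(pl_holds, qg_holds)
```

Since the minimizer is not unique, F cannot be strongly convex, which is why (2.4) and (2.5) are genuine relaxations.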

Assumption 2.5

Let, for all \(k \in \mathbb {N}\), \(\varvec{v}^k:= \nabla f_{i_{k}}(\varvec{x}^{k}) - \varvec{e}^k\). Then there exist non-negative real numbers \(A, B, C \in \mathbb {R}_+\) and \(\rho \in [0,1]\), and a non-positive real-valued random variable D such that, for every \(k \in \mathbb {N}\),

-

(B.i)

\(\textsf{E}[\varvec{e}^k\,\vert \, \mathfrak {F}_k] = 0\) a.s.,

-

(B.ii)

\( \textsf{E}\left[ \Vert \varvec{v}^k\Vert ^2 \,\vert \, \mathfrak {F}_k\right] \le 2 A (F(\varvec{x}^k) - F_*) + B\sigma _k^2 + D\) a.s.,

-

(B.iii)

\(\textsf{E}\left[ \sigma _{k+1}^2\right] \le (1-\rho ) \textsf{E}\left[ \sigma _k^2\right] + 2C\textsf{E}[F(\varvec{x}^k) - F_*]\),

where \(\sigma _k\) is a real-valued random variable and \((\mathfrak {F}_k)_{k \in \mathbb {N}}\) is a sequence of \(\sigma \)-algebras such that, for every \(k \in \mathbb {N}\), \(\mathfrak {F}_k \subset \mathfrak {F}_{k+1} \subset \mathfrak {A}\), \(i_{k-1}\) and \(\varvec{x}^k\) are \(\mathfrak {F}_k\)-measurable, and \(i_k\) is independent of \(\mathfrak {F}_k\).

Assumption (B.i) ensures that \(\textsf{E}[\varvec{v}^k \,\vert \, \mathfrak {F}_k] = \textsf{E}[\nabla f_{i_{k}} (\varvec{x}^k)\,\vert \, \mathfrak {F}_k] = \nabla F(\varvec{x}^k)\), so that the direction \(\varvec{v}^k\) is an unbiased estimator of the full gradient of F at \(\varvec{x}^k\), which is a standard assumption in the related literature. Assumption (B.ii) on \(\textsf{E}\left[ \Vert \varvec{v}^k\Vert ^2 \,\vert \, \mathfrak {F}_k\right] \) is the analogue of what is called the ABC condition in the literature [20, 25], namely a condition on \(\textsf{E}\left[ \Vert \nabla f_{i_{k}}(\varvec{x}^k)\Vert ^2 \,\vert \, \mathfrak {F}_k\right] \) with \(\sigma _k = \Vert \nabla F(\varvec{x}^k)\Vert \) and D constant. Assumption (B.iii) is a technical condition required by our analysis, and it is satisfied by many variance reduction techniques. For additional discussion of these assumptions, especially Assumption (B.iii), see [20].
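To illustrate (B.i) and the role of the variance term in (B.ii), the following sketch computes exact expectations over a uniformly sampled index for a hypothetical inconsistent least-squares problem. It compares the plain SPPA choice \(\varvec{e}^k = 0\) with the SVRG-type choice \(\varvec{e}^k = \nabla f_{j}(\tilde{\textsf{x}}) - \nabla F(\tilde{\textsf{x}})\), which is the one used for SVRP in Sect. 4: both directions \(\varvec{v}^k\) are unbiased, but the variance of the second one vanishes when the current point coincides with the reference point \(\tilde{\textsf{x}}\). The data and helper names are assumptions made for the example.

```python
import random


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def grad(x, a, b):
    # Gradient of f_j(x) = 0.5 * (<a, x> - b)^2.
    r = dot(a, x) - b
    return [r * t for t in a]


def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def moments(directions):
    """Exact mean and variance E||v - E v||^2 under uniform sampling of the index."""
    m = mean(directions)
    var = 0.0
    for v in directions:
        diff = [p - q for p, q in zip(v, m)]
        var += dot(diff, diff)
    return m, var / len(directions)


rng = random.Random(3)
n, d = 30, 3
rows = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n)]
rhs = [rng.gauss(0, 1) for _ in range(n)]  # inconsistent data: no common minimizer


def grad_F(x):
    return mean([grad(x, a, b) for a, b in zip(rows, rhs)])


def plain_directions(x):
    # e^k = 0, so v^k = grad f_j(x) for every possible index j.
    return [grad(x, a, b) for a, b in zip(rows, rhs)]


def svrp_directions(x, x_ref):
    # e^k = grad f_j(x_ref) - grad F(x_ref), so v^k = grad f_j(x) - grad f_j(x_ref) + grad F(x_ref).
    g_ref = grad_F(x_ref)
    return [[p - q + r for p, q, r in zip(grad(x, a, b), grad(x_ref, a, b), g_ref)]
            for a, b in zip(rows, rhs)]


x_ref = [rng.gauss(0, 1) for _ in range(d)]
x = [t + 0.01 for t in x_ref]  # current point close to the reference point
m_plain, var_plain = moments(plain_directions(x))
m_svrp, var_svrp = moments(svrp_directions(x, x_ref))
print(var_plain, var_svrp)
```

For least squares, the variance of the SVRG-type direction is that of \(\nabla f_j(\textsf{x}) - \nabla f_j(\tilde{\textsf{x}})\), which is proportional to \(\Vert \textsf{x} - \tilde{\textsf{x}}\Vert ^2\), whereas the plain direction keeps a non-vanishing variance at the solution.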

3 Main Results

In the rest of the paper, we will always suppose that Assumption (A.i) holds.

Before stating the main results of this work, we start with a technical proposition that constitutes the cornerstone of our analysis. The proof can be found in Appendix A.1.

Proposition 3.1

Suppose that Assumptions 2.5 and (A.ii) are verified and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 2.1. Let \(M > 0\). Then, for all \(k \in \mathbb {N}\),

We now state two theorems that can be derived from the previous proposition. The first theorem deals with cases where the function F is only convex.

Theorem 3.2

Suppose that Assumptions (A.ii) and 2.5 hold with \(\rho > 0\) and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 2.1. Let \(M>0\) and \(\alpha >0\) be such that \(M \ge B/\rho \) and \(\alpha < 1/(A+MC)\). Then, for all \(k \in \mathbb {N}\),

with \(\bar{\varvec{x}}^{k} = \frac{1}{k}\sum _{t=0}^{k-1}\varvec{x}^t\).

Proof

Since \(B - \rho M \le 0\) and \(\textsf{E}[D] \le 0\), it follows from Proposition 3.1 that

Summing from 0 up to \(k-1\) and dividing both sides by k, we obtain

Finally, by convexity of F, we get

\(\square \)

The next theorem shows that, when F additionally satisfies the PL property (2.4), the sequence generated by Algorithm 2.1 exhibits a linear convergence rate, both in terms of the distance to the set of minimizers and in terms of the objective function values.

Theorem 3.3

Suppose that Assumptions 2.4 and 2.5 are verified with \(\rho >0\) and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 2.1. Let M be such that \(M > B/\rho \) and \(\alpha > 0\) such that \(\alpha < 1 / ( A+MC)\). Set \( q {:}{=}\max \left\{ 1 - \alpha \mu \left( 1-\alpha (A+ MC)\right) ,\right. \left. 1+\frac{B}{M}-\rho \right\} \). Then \(q \in ]0,1[\) and for all \(k \in \mathbb {N}\),

with \(V^k = \textsf{E}\big [\mathop {\mathrm {\textrm{dist}}}\limits (\varvec{x}^{k}, \mathop {\mathrm {\textrm{argmin}}}\limits F)^2\big ] + \alpha ^2\,M\textsf{E}\big [\sigma _{k}^2\big ]\,\) for all \(k \in \mathbb {N}\). Moreover, for every \(k \in \mathbb {N}\),

Proof

Since \(\alpha < \frac{1}{A+MC}\), we obtain thanks to Assumption (A.iii) and Proposition 3.1

Since \(\alpha < \frac{1}{A+MC}\) and \(M > \frac{B}{\rho }\), we obtain from (3.1) that

Since \(0<1-\rho \le 1+B/M -\rho <1\), it is clear that \(q \in ]0,1[\). Iterating down on k, we obtain

Let \(\varvec{\textsf{x}}^* \in \mathop {\mathrm {\textrm{argmin}}}\limits F\). As F is L-Lipschitz smooth, from the Descent Lemma [32, Lemma 1.2.3], we have

In particular,

Using (3.3) with \(\varvec{x}^k\) in (3.2), we get

\(\square \)
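The factor q of Theorem 3.3 is easy to evaluate once the constants of Assumption 2.5 are known. The sketch below checks the hypotheses \(\rho >0\), \(M > B/\rho \) and \(0<\alpha <1/(A+MC)\) and returns q; the function name and the numerical values of the constants are hypothetical and serve only to exercise the formula.

```python
def theorem_33_factor(alpha, mu, A, B, C, rho, M):
    """Contraction factor q of Theorem 3.3, after checking its hypotheses."""
    if not (rho > 0 and M > B / rho):
        raise ValueError("need rho > 0 and M > B / rho")
    if not (0 < alpha < 1.0 / (A + M * C)):
        raise ValueError("need 0 < alpha < 1 / (A + M C)")
    q_values = 1.0 - alpha * mu * (1.0 - alpha * (A + M * C))  # term driven by (A.iii)
    q_sigma = 1.0 + B / M - rho                                 # term driven by (B.iii)
    return max(q_values, q_sigma)


# Hypothetical constants, chosen only to exercise the formula.
L, mu = 1.0, 0.1
A, B, C, rho = 2 * L, 2.0, 0.5, 0.1
M = 2 * B / rho          # any M > B / rho = 20 is allowed
s = A + M * C            # alpha must stay below 1 / s
for alpha in (0.25 / s, 0.5 / s, 0.9 / s):
    print(alpha, theorem_33_factor(alpha, mu, A, B, C, rho, M))
```

Since \(\alpha \mapsto \alpha (1-\alpha (A+MC))\) is maximized at \(\alpha = 1/(2(A+MC))\), the first term of q is smallest there, where it equals \(1 - \mu /(4(A+MC))\); whether this term or \(1+B/M-\rho \) dominates depends on the constants.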

Remark 3.4

-

(i)

The convergence rate of order \(O\left( \frac{1}{k}\right) \) for the general variance reduction scheme 2.1 with constant step size, stated in Theorem 3.2, extends and improves the one found for the vanilla stochastic proximal point method (see e.g. [3, Proposition 3.8]). It is important to mention that convergence with a constant step size is no longer guaranteed when D in Assumption (B.ii) is positive. As we shall see in Sect. 4, several choices of the variance reduction term \(\varvec{e}^{k}\) in Algorithm 2.1 are beneficial in this respect, since they satisfy Assumption (B.ii) with \(D\le 0\).

-

(ii)

The linear rates stated in Theorem 3.3 are similar to those found in [20, Theorem 4.1], where the authors present a unified study of variance-reduced stochastic (explicit) gradient methods. However, we note that the Polyak-Łojasiewicz condition (2.4) on F used here is slightly weaker than the quasi-strong convexity used in [20, Assumption 4.2].

4 Derivation of Stochastic Proximal Point Type Algorithms

In this section, we provide and analyze several instances of the general scheme 2.1, corresponding to different choices of the variance reduction term \(\varvec{e}^{k}\). In particular in the next paragraphs we describe four different schemes, namely Stochastic Proximal Point Algorithm (SPPA), Stochastic Variance-reduced Proximal (SVRP) algorithm, Loopless SVRP (L-SVRP) and Stochastic Average Proximal Algorithm (SAPA).

4.1 Stochastic Proximal Point Algorithm

We start by presenting the classic vanilla stochastic proximal method (SPPA), see e.g. [6, 7, 34]. We suppose that Assumptions (A.i) and (A.ii) hold and that for all \(k \in \mathbb {N}\)

Algorithm 4.1

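(SPPA) Let \((i_k)_{k \in \mathbb {N}}\) be a sequence of i.i.d. random variables uniformly distributed on \(\{1,\dots , n\}\). Let \(\alpha _k>0\) for all \(k \in \mathbb {N}\) and set the initial point \(\varvec{x}^0 \in \textsf{H}\). Define

$$\begin{aligned} (\forall k \in \mathbb {N}) \quad \varvec{x}^{k+1} = {\textsf {prox}}_{\alpha _k f_{i_k}}(\varvec{x}^k). \end{aligned}$$(4.2)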

Algorithm 4.1 can be directly identified with the general scheme 2.1, by setting \(\varvec{e}^k = 0\) and \(\varvec{v}^k = \nabla f_{i_{k}} (\varvec{x}^{k})\). The following lemma provides a bound in expectation on the sequence \(\Vert \varvec{v}^{k}\Vert \) and can be found in the related literature; see, e.g. [40, Lemma 1].

Lemma 4.2

We suppose that Assumption (A.ii) holds and that \((\varvec{x}^k)_{k \in \mathbb {N}}\) is a sequence generated by Algorithm 4.1 and \(\varvec{v}^k = \nabla f_{i_{k}} (\varvec{x}^{k})\). Then, for all \(k\in \mathbb {N}\), it holds

where \(\mathfrak {F}_k = \sigma (i_0, \dots , i_{k-1})\) and \(\displaystyle \sigma ^2_k\) is defined as a constant random variable in (4.1).

From Lemma 4.2, we immediately see that Assumption 2.5 is satisfied with \(A = 2L, B =2\), \(C = \rho = 0\) and \(D \equiv 0\). In this setting, we recover the following convergence result (see also [3, Lemma 3.10 and Proposition 3.8]).

Theorem 4.3

Suppose that Assumption (A.ii) holds and let \((\alpha _k)_{k \in \mathbb {N}}\) be a sequence of positive step sizes such that \(\alpha _k \le \frac{1}{4\,L}\) for all \(k \in \mathbb {N}\). Suppose also that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 4.1 with these step sizes. Then, \(\forall k \ge 1\),

where \(\displaystyle \bar{\varvec{x}}^{k} = \sum _{t=0}^{k-1}\frac{\alpha _t}{\sum _{t=0}^{k-1}\alpha _t} \varvec{x}^t\).

Proof

From Proposition 3.1 adapted to the update (4.2), it follows that

Since \(\alpha _k \le 1/(4L)\) for all \(k \in \mathbb {N}\), we have from (4.3)

Let \(k \ge 1\). Summing from 0 up to \(k-1\) and dividing both sides by \(\sum _{t=0}^{k-1}\alpha _t\), we obtain

Finally, by the convexity of F and Jensen’s inequality, we obtain

\(\square \)
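The weighted ergodic average of Theorem 4.3 does not require storing the iterates, since it can be updated on the fly as \(\bar{\varvec{x}}^{k+1} = \bar{\varvec{x}}^{k} + \frac{\alpha _k}{\sum _{t=0}^{k}\alpha _t}(\varvec{x}^k - \bar{\varvec{x}}^{k})\). The sketch below runs Algorithm 4.1 on a hypothetical least-squares problem with the illustrative schedule \(\alpha _k = 1/(4L\sqrt{k+1})\), which satisfies \(\alpha _k \le 1/(4L)\); the iterate history is kept only so that the running average can be compared with the direct formula. Names and data are assumptions.

```python
import random


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def prox_fi(z, a, b, alpha):
    # Closed-form prox of alpha * f_i with f_i(x) = 0.5 * (<a, x> - b)^2.
    r = (dot(a, z) - b) / (1.0 + alpha * dot(a, a))
    return [zj - alpha * r * aj for zj, aj in zip(z, a)]


def F(x, rows, rhs):
    return sum(0.5 * (dot(a, x) - b) ** 2 for a, b in zip(rows, rhs)) / len(rows)


def sppa_weighted_average(rows, rhs, iters, seed=0):
    """Algorithm 4.1 with alpha_k = 1 / (4 L sqrt(k + 1)) and the running weighted average."""
    L = max(dot(a, a) for a in rows)       # each f_i is ||a_i||^2-smooth
    rng = random.Random(seed)
    x = [0.0] * len(rows[0])
    xbar, weight_sum = list(x), 0.0
    history = []                           # (alpha_t, x^t), kept only for checking
    for k in range(iters):
        alpha = 1.0 / (4.0 * L * (k + 1) ** 0.5)
        history.append((alpha, x))
        weight_sum += alpha
        # xbar^{k+1} = xbar^k + alpha_k / (alpha_0 + ... + alpha_k) * (x^k - xbar^k)
        xbar = [xb + (alpha / weight_sum) * (xt - xb) for xb, xt in zip(xbar, x)]
        i = rng.randrange(len(rows))       # i_k uniform
        x = prox_fi(x, rows[i], rhs[i], alpha)
    return xbar, history


rng = random.Random(5)
rows = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(15)]
rhs = [rng.gauss(0, 1) for _ in range(15)]
xbar, history = sppa_weighted_average(rows, rhs, iters=500)
print(F([0.0] * 3, rows, rhs), F(xbar, rows, rhs))
```

By convexity, \(F(\bar{\varvec{x}}^{k})\) is bounded by the weighted average of the values \(F(\varvec{x}^t)\), which is the Jensen step used at the end of the proof above.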

4.2 Stochastic Variance-Reduced Proximal Point Algorithm

In this paragraph, we present a stochastic proximal point algorithm coupled with a variance reduction term, in the spirit of the Stochastic Variance Reduced Gradient (SVRG) method introduced in [22]. We call it the Stochastic Variance-Reduced Proximal point algorithm (SVRP).

The SVRP method involves two levels of iterative procedures: outer iterations and inner iterations. We stress that the framework presented in the previous section covers only the inner iteration procedure, and thus the convergence analysis of SVRP requires additional care. In contrast to the subsequent schemes, Theorems 3.2 and 3.3 do not apply directly to SVRP. In particular, as noted below, in the case of SVRP the constant \(\rho \) appearing in (B.iii) of Assumption 2.5 is zero. Nevertheless, the convergence analysis still relies on Proposition 3.1.

Algorithm 4.4

(SVRP) Let \(m\in \mathbb {N}\), with \(m\ge 1\), and \((\xi _s)_{s \in \mathbb {N}}\), \((i_t)_{t \in \mathbb {N}}\) be two independent sequences of i.i.d. random variables uniformly distributed on \(\{0,1,\dots , m-1\}\) and \(\{1,\dots , n\}\) respectively. Let \(\alpha >0\) and set the initial point \({\tilde{\varvec{x}}}^0 \equiv {\tilde{\varvec{\textsf{x}}}}^0\in \textsf{H}\). Then

where \(\delta _{k,h}\) is the Kronecker symbol. With the first option, one iterate is selected at random among the inner iterates \(\varvec{x}^0, \varvec{x}^1, \cdots , \varvec{x}^{m-1}\), possibly discarding much of the information computed in the inner loop. With the second option, the inner iterates are averaged, so that most of the information is used.
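The following Python sketch implements SVRP as described in this section on a hypothetical least-squares problem. The inner step is the identification given below, \(\varvec{x}^{k+1} = {\textsf {prox}}_{\alpha f_{j_k}}(\varvec{x}^k + \alpha \varvec{e}^k)\) with \(\varvec{e}^k = \nabla f_{j_k}(\tilde{\varvec{x}}^s) - \nabla F(\tilde{\varvec{x}}^s)\); that each inner loop starts from \(\varvec{x}^0 = \tilde{\varvec{x}}^s\) is our assumption, as in SVRG [22]. The data, \(\alpha \) and m are hypothetical, and a single random generator produces both \(\xi _s\) and \(j_k\) (its successive draws are independent).

```python
import random


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def grad_fi(x, a, b):
    # Gradient of f_i(x) = 0.5 * (<a, x> - b)^2.
    r = dot(a, x) - b
    return [r * t for t in a]


def grad_F(x, rows, rhs):
    # Full gradient of F = (1/n) * sum_i f_i.
    n = len(rows)
    grads = [grad_fi(x, a, b) for a, b in zip(rows, rhs)]
    return [sum(col) / n for col in zip(*grads)]


def prox_fi(z, a, b, alpha):
    # Closed-form prox_{alpha f_i}(z).
    r = (dot(a, z) - b) / (1.0 + alpha * dot(a, a))
    return [zj - alpha * r * aj for zj, aj in zip(z, a)]


def svrp(rows, rhs, alpha, m, outer, option="average", seed=0):
    """Sketch of SVRP; option="random" mimics option I, "average" option II."""
    rng = random.Random(seed)
    x_tilde = [0.0] * len(rows[0])
    for _ in range(outer):
        g_tilde = grad_F(x_tilde, rows, rhs)   # one full gradient per outer iteration
        x = list(x_tilde)                      # assumption: inner loop starts at x_tilde^s
        inner = []
        for _ in range(m):
            inner.append(x)                    # keeps x^0, ..., x^{m-1}
            j = rng.randrange(len(rows))       # j_k uniform on the n components
            e = [gj - gt for gj, gt in zip(grad_fi(x_tilde, rows[j], rhs[j]), g_tilde)]
            z = [xi + alpha * ei for xi, ei in zip(x, e)]
            x = prox_fi(z, rows[j], rhs[j], alpha)
        if option == "random":
            x_tilde = inner[rng.randrange(m)]  # option I: a uniformly chosen inner iterate
        else:
            x_tilde = [sum(col) / m for col in zip(*inner)]  # option II: inner average
    return x_tilde


rng = random.Random(7)
n, d = 20, 3
rows = []
for _ in range(n):
    a = [rng.gauss(0, 1) for _ in range(d)]
    norm = dot(a, a) ** 0.5
    rows.append([t / norm for t in a])         # unit rows, so each f_i is 1-smooth
rhs = [rng.gauss(0, 1) for _ in range(n)]      # inconsistent: no common minimizer
x_hat = svrp(rows, rhs, alpha=0.2, m=2 * n, outer=60)
g = grad_F(x_hat, rows, rhs)
print(dot(g, g) ** 0.5)
```

Only one full gradient \(\nabla F(\tilde{\varvec{x}}^s)\) is computed per outer iteration; each inner step costs one component gradient and one proximal step.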

Let \(s \in \mathbb {N}\). For all \(k \in \{0,1,\cdots , m-1\}\), setting \(j_k = i_{sm+k}\), the inner iteration procedure of Algorithm 4.4 can be identified with the general scheme 2.1 by setting \(\varvec{e}^k {:}{=}\nabla f_{j_{k}}({\tilde{\varvec{x}}}^{s}) - \nabla F({\tilde{\varvec{x}}}^{s})\). In addition, let us define

where \({\tilde{\varvec{y}}}^s \in \mathop {\mathrm {\textrm{argmin}}}\limits F\) is such that \(\Vert {\tilde{\varvec{x}}}^s-{\tilde{\varvec{y}}}^s\Vert = \mathop {\mathrm {\textrm{dist}}}\limits ({\tilde{\varvec{x}}}^s, \mathop {\mathrm {\textrm{argmin}}}\limits F)\). Moreover, setting \(\mathfrak {F}_{s,k} = \sigma (\xi _0, \dots , \xi _{s-1}, i_0, \dots , i_{sm+k-1})\), we have that \({\tilde{\varvec{x}}}^s, {\tilde{\varvec{y}}}^s\), and \(\varvec{x}^k\) are \(\mathfrak {F}_{s,k}\)-measurable and \(j_k\) is independent of \(\mathfrak {F}_{s,k}\). The following result is proved in Appendix A.2.

Lemma 4.5

Suppose that Assumption (A.ii) holds true. Let \(s \in \mathbb {N}\) and let \((\varvec{x}^k)_{k \in [m]}\) be the (finite) sequence generated by the inner iteration in Algorithm 4.4. Set \(\varvec{v}^{k}=\nabla f_{j_k}\left( \varvec{x}^{k}\right) -\nabla f_{j_k}({\tilde{\varvec{x}}}^{s})+\nabla F ({\tilde{\varvec{x}}}^{s})\) and \(\sigma _{k}\) as defined in (4.4). Then, for every \(k \in \{0,1,\cdots , m-1\}\), it holds

and

As an immediate consequence of Proposition 3.1, we obtain the following corollary regarding the inner iteration procedure of Algorithm 4.4.

Corollary 4.6

Suppose that Assumption (A.ii) holds. Let \(s \in \mathbb {N}\) and let \((\varvec{x}^k)_{k \in [m]}\) be the sequence generated by the inner iteration in Algorithm 4.4. Then, for all \(k \in \{0,1,\ldots , m-1\}\),

Proof

Since Assumption (A.ii) holds, Lemma 4.5 shows that Assumption 2.5 is satisfied with \(\varvec{v}^{k}=\nabla f_{j_k}\left( \varvec{x}^{k}\right) -\nabla f_{j_k}({\tilde{\varvec{x}}}^{s})+\nabla F ({\tilde{\varvec{x}}}^{s}), A = 2\,L, B=2, \rho =C=0,\) and \(D = - 2 \Vert \nabla F({\tilde{\varvec{x}}}^{s})\Vert ^2\). Hence, Proposition 3.1 yields

Thus, taking the total expectation, the statement follows. \(\square \)

The next theorem shows that, under some additional assumptions on the choice of the step size \(\alpha \) and the number of inner iterations \(m\in \mathbb {N}\), Algorithm 4.4 yields a linear convergence rate in terms of the expectation of the objective function values of the outer iterates \(({\tilde{\varvec{x}}}^{s})_{s \in \mathbb {N}}\).

Theorem 4.7

Suppose that Assumptions 2.4 are satisfied and that the sequence \(({\tilde{\varvec{x}}}^{s})_{s \in \mathbb {N}}\) is generated by Algorithm 4.4 with

Then, for all \(s\in \mathbb {N}\), it holds

Remark 4.8

-

(i)

Conditions (4.7) are stated in this form for the case where \(\alpha \) is set first and m is chosen afterwards. They are needed to ensure that \(q < 1\). Indeed, \(0<\alpha < \frac{1}{2(2\,L - \mu )}\) is needed to have \(\frac{2\alpha (L - \mu )}{1-2\,L \alpha } < 1\); otherwise, q cannot be less than 1. Once \(\alpha \) is fixed, we then need \(m> \frac{1}{\mu \alpha (1 - 2\alpha (2L-\mu ))}\).

-

(ii)

Conditions (4.7) can be equivalently stated as follows:

$$\begin{aligned} m > \frac{8(2L-\mu )}{\mu } \quad \text {and}\quad \frac{1 - \sqrt{1 - 8(2 \kappa - 1)/m}}{4(2L-\mu )} < \alpha < \frac{1 + \sqrt{1 - 8(2 \kappa - 1)/m}}{4(2L-\mu )}, \quad \text {where } \kappa = \frac{L}{\mu }. \end{aligned}$$The above formulas can be useful if one prefers to set the parameter m first and the step size \(\alpha \) afterwards.

-

(iii)

The convergence rate in Theorem 4.7 quantifies the improvement from the outer step s to \(s+1\), which depends on the number of inner iterations m. As expected, and as can be seen from Eq. (4.8), increasing m improves the bound on the rate; since m is not bounded from above, the best choice in theory would be to let m go to \(+\infty \). In practice, there is no best choice of m, and a balance between the numbers of inner and outer iterations has to be found empirically. Consequently, there is no optimal choice for \(\alpha \) either.

-

(iv)

It is worth mentioning that the linear convergence factor q in (4.8) is better (smaller) than the one provided in [22, Theorem 1] for the SVRG method for strongly convex functions, namely \(\frac{1}{\mu \alpha (1-2\,L \alpha ) m}+\frac{2\alpha L }{1-2\,L \alpha }\). The linear convergence factor in (4.8) is also better than the ones in [46, Proposition 3.1] and [19, Theorem 1], which deal with a proximal version of SVRG for functions satisfying the PL condition (2.4); in both papers, it is \(\frac{1}{2\mu \alpha (1-4\,L \alpha ) m}+\frac{4\alpha L(m+1)}{(1-4\,L \alpha )m}\). However, we note that this improvement can also be obtained for the aforementioned SVRG methods using a similar analysis.

-

(v)

We stress that, following the lines of the proof of Theorem 4.7, the same linear convergence rate (4.8) also holds for the averaged iterate \({\tilde{\varvec{x}}}^{s+1} = \frac{1}{m} \sum _{k = 0}^{m-1} \varvec{x}^k\). However, the analysis does not apply to the last iterate of the inner loop.
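The step-size rules of Remark 4.8 are straightforward to evaluate. The Python sketch below computes, for a given m, the admissible interval for \(\alpha \) from item (ii), checks the conditions of item (i) inside and outside that interval, and evaluates the SVRG factor quoted in item (iv) for comparison. The function names and the constants L, \(\mu \) and m are hypothetical.

```python
import math


def alpha_interval(L, mu, m):
    """Open interval of step sizes allowed by Remark 4.8(ii) for a given m (None if empty)."""
    kappa = L / mu
    disc = 1.0 - 8.0 * (2.0 * kappa - 1.0) / m
    if disc <= 0.0:
        return None                        # m too small: no admissible step size
    root = math.sqrt(disc)
    denom = 4.0 * (2.0 * L - mu)
    return (1.0 - root) / denom, (1.0 + root) / denom


def condition_i(L, mu, m, alpha):
    """Remark 4.8(i): alpha < 1/(2(2L - mu)) and m > 1/(mu alpha (1 - 2 alpha (2L - mu)))."""
    slack = 1.0 - 2.0 * alpha * (2.0 * L - mu)
    return alpha > 0.0 and slack > 0.0 and m > 1.0 / (mu * alpha * slack)


def svrg_factor(L, mu, m, alpha):
    """SVRG linear factor quoted in Remark 4.8(iv), from [22, Theorem 1]."""
    return (1.0 / (mu * alpha * (1.0 - 2.0 * L * alpha) * m)
            + 2.0 * alpha * L / (1.0 - 2.0 * L * alpha))


L, mu, m = 1.0, 0.25, 200                  # hypothetical constants (kappa = 4)
lo, hi = alpha_interval(L, mu, m)
alpha = 0.5 * (lo + hi)                    # the midpoint equals 1/(4(2L - mu))
print(lo, hi, alpha, condition_i(L, mu, m, alpha), svrg_factor(L, mu, m, alpha))
```

The interval is non-empty exactly when \(m > 8(2\kappa -1)\), and its midpoint \(1/(4(2L-\mu ))\) does not depend on m.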

Remark 4.9

In [30], a variant of Algorithm 4.4 (SVRP), called SNSPP, has also been proposed and analyzed. SNSPP includes a subroutine to compute the proximity operator, which can be useful in practice when no closed-form expression of the proximity operator is available. Under some additional conditions on the conjugate of \(f_i\) and assuming semismoothness of the proximity mapping, [30] provides a linear convergence rate for SNSPP when F is Lipschitz smooth and strongly convex. An ergodic sublinear convergence rate is also proved for weakly convex functions.

Proof of Theorem 4.7

Fix a stage \(s \in \mathbb {N}\) and let \({\tilde{\varvec{x}}}^{s+1}\) be defined as in Algorithm 4.4. Summing inequality (4.6) of Corollary 4.6 over \(k=0, \ldots , m-1\) and taking the total expectation, we obtain

In the first inequality, we used the fact that

Notice that relation (4.9) remains valid if, in Algorithm 4.4, we instead choose \({\tilde{\varvec{x}}}^{s+1} = \sum _{k = 0}^{m-1} \frac{1}{m} \varvec{x}^k\), since Jensen's inequality lower bounds \(\sum _{k = 0}^{m-1} F(\varvec{x}^k)\) by \(m F({\tilde{\varvec{x}}}^{s+1})\).

The second and the last inequalities use, respectively, the quadratic growth (2.5) and the PL condition (2.4). We thus obtain

\(\square \)
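A minimal Python sketch of SVRP may help fix ideas. It assumes least-squares summands \(f_i(\varvec{\textsf{x}}) = \frac{1}{2}(\langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle - b_i)^2\), so that each proximity operator has a closed form; it uses the averaged reference point which, as noted in item (v) above, enjoys the same rate; and it assumes that each inner loop starts from the current reference point. The function names are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def prox_ls(z, a, b, alpha):
    """Closed-form prox of alpha * 0.5 * (<a, x> - b)^2, evaluated at z."""
    return z - alpha * (a @ z - b) / (1.0 + alpha * (a @ a)) * a


def svrp_averaged(A, b, alpha, m, n_stages, seed=0):
    """Sketch of SVRP on least squares; the new reference point is the inner-loop average."""
    rng = np.random.default_rng(seed)
    n, d = A.shape
    x_ref = np.zeros(d)
    for _ in range(n_stages):
        full_grad = A.T @ (A @ x_ref - b) / n      # grad F at the reference point
        x = x_ref.copy()                            # assumption: inner loop starts at x_ref
        running_sum = np.zeros(d)
        for _ in range(m):
            j = rng.integers(n)
            a_j = A[j]
            # e^k = grad f_j(x_ref) - grad F(x_ref)
            e = a_j * (a_j @ x_ref - b[j]) - full_grad
            running_sum += x                        # accumulates x^0, ..., x^{m-1}
            x = prox_ls(x + alpha * e, a_j, b[j], alpha)
        x_ref = running_sum / m                     # averaged iterate
    return x_ref
```

Each inner step costs one proximal evaluation on a single summand, while each stage additionally pays one full gradient at the reference point, which is the cost structure used to set the budgets in Sect. 5.1.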

4.3 Loopless SVRP

In this paragraph, we propose a single-loop variant of the SVRP algorithm presented above, which removes the burden of choosing the number of inner iterations. The idea is inspired by the Loopless Stochastic Variance-Reduced Gradient (L-SVRG) method proposed in [21, 28] (see also [20]); we present its stochastic proximal counterpart, which we call L-SVRP.

Algorithm 4.10

(L-SVRP) Let \((i_k)_{k \in \mathbb {N}}\) be a sequence of i.i.d. random variables uniformly distributed on \(\{1,\dots , n\}\) and let \((\varepsilon ^k)_{k \in \mathbb {N}}\) be a sequence of i.i.d. Bernoulli random variables such that \(\textsf{P}(\varepsilon ^k=1)=p \in ]0,1]\). Let \(\alpha >0\) and set the initial points \(\varvec{x}^0 = \varvec{u}^0 \in \textsf{H}\). Then

Algorithm 4.10 can be identified with the general scheme of Algorithm 2.1 by setting \(\varvec{e}^k {:}{=}\nabla f_{i_{k}}(\varvec{u}^{k}) - \nabla F(\varvec{u}^{k})\). In addition, we define

with \(\varvec{y}^{k} \in \mathop {\mathrm {\textrm{argmin}}}\limits F\) such that \(\Vert \varvec{u}^{k}-\varvec{y}^{k}\Vert = \mathop {\mathrm {\textrm{dist}}}\limits (\varvec{u}^{k}, \mathop {\mathrm {\textrm{argmin}}}\limits F)\). Moreover, setting \(\mathfrak {F}_k = \sigma (i_0, \dots , i_{k-1}, \varepsilon ^0, \dots , \varepsilon ^{k-1})\), we have that \(\varvec{x}^k\), \(\varvec{u}^k\) and \(\varvec{y}^k\) are \(\mathfrak {F}_k\)-measurable, and that \(i_k\) and \(\varepsilon ^k\) are independent of \(\mathfrak {F}_k\).
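A minimal Python sketch of L-SVRP, assuming least-squares summands \(f_i(\varvec{\textsf{x}}) = \frac{1}{2}(\langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle - b_i)^2\) so that the proximity operator has a closed form. The step \(\varvec{x}^{k+1} = \textrm{prox}_{\alpha f_{i_k}}(\varvec{x}^k + \alpha \varvec{e}^k)\), with \(\varvec{e}^k\) as defined above, is what the optimality condition \(\nabla R_{i_{k}}(\varvec{x}^{k+1})=(\varvec{x}^{k}-\varvec{x}^{k+1})/\alpha \) of Appendix A.1 amounts to. The refresh rule \(\varvec{u}^{k+1} = \varvec{x}^k\) when \(\varepsilon ^k = 1\) (and \(\varvec{u}^{k+1} = \varvec{u}^k\) otherwise) is an assumption borrowed from L-SVRG [28]; function names are hypothetical.

```python
import numpy as np


def prox_ls(z, a, b, alpha):
    """Closed-form prox of alpha * 0.5 * (<a, x> - b)^2, evaluated at z."""
    return z - alpha * (a @ z - b) / (1.0 + alpha * (a @ a)) * a


def l_svrp(A, b, alpha, p, n_iter, seed=0):
    """Sketch of L-SVRP on least squares; the reference point is refreshed with probability p."""
    rng = np.random.default_rng(seed)
    n, d = A.shape
    x = np.zeros(d)
    u = x.copy()                                   # x^0 = u^0
    grad_F_u = A.T @ (A @ u - b) / n               # full gradient at the reference point
    for _ in range(n_iter):
        i = rng.integers(n)                        # i_k uniform on {0, ..., n-1}
        a_i = A[i]
        e = a_i * (a_i @ u - b[i]) - grad_F_u      # e^k = grad f_i(u^k) - grad F(u^k)
        x_new = prox_ls(x + alpha * e, a_i, b[i], alpha)
        if rng.random() < p:                       # epsilon^k = 1 with probability p
            u = x.copy()                           # assumption: u^{k+1} = x^k, as in L-SVRG
            grad_F_u = A.T @ (A @ u - b) / n
        x = x_new
    return x
```

With \(p = 1/n\), a full gradient is recomputed about once every n iterations on average, which mirrors one SVRP stage with \(m \approx n\) without fixing m in advance.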

Lemma 4.11

Suppose that Assumption (A.ii) is satisfied. Let \((\varvec{x}^k)_{k \in \mathbb {N}}\) be the sequence generated by Algorithm 4.10, with \(\varvec{v}^{k}=\nabla f_{i_{k}}(\varvec{x}^{k})-\nabla f_{i_{k}}(\varvec{u}^{k}) +\nabla F (\varvec{u}^{k})\) and \(\sigma _{k}\) as defined in (4.10). Then for all \(k\in \mathbb {N}\), it holds

and

Lemma 4.11, whose proof is given in Appendix A.2, ensures that Assumptions 2.5 hold with constants \(A = 2\,L, B=2, C = pL\), \(\rho =p\) and \(D \equiv 0\). The following corollaries are then obtained by applying Theorems 3.2 and 3.3, respectively, to Algorithm 4.10.

Corollary 4.12

Suppose that Assumption (A.ii) holds and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 4.10. Let \(M \ge \frac{2}{p}\) and let \(\alpha > 0\) be such that \(\alpha < \frac{1}{L(2 + p M)}\). Then, for all \(k \in \mathbb {N}\),

with \(\bar{\varvec{x}}^{k} = \frac{1}{k}\sum _{t=0}^{k-1}\varvec{x}^t\).

Corollary 4.13

Suppose that Assumptions 2.4 hold and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 4.10. Let \(M > 2/p\) and let \(\alpha > 0\) be such that \(\alpha < \frac{1}{L(2 + p M)}\). Set \( q {:}{=}\max \left\{ 1 - \alpha \mu \left( 1-\alpha L(2 + p M)\right) , 1+\frac{2}{M}-p\right\} \). Then \(q \in ]0,1[\) and for all \(k \in \mathbb {N}\),
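The factor q can be evaluated directly from the constants. The helper below (hypothetical name) checks the hypotheses of Corollary 4.13 and returns q; a second helper records an elementary observation, not stated in the corollary: the first term decreases most when \(\alpha (1-\alpha L(2+pM))\) is largest, i.e. at \(\alpha = \frac{1}{2L(2+pM)}\), half of the admissible upper limit.

```python
def l_svrp_rate(alpha, mu, L, p, M):
    """Linear factor q of Corollary 4.13, after checking its hypotheses."""
    if not 0 < p <= 1:
        raise ValueError("p must lie in ]0, 1]")
    if not M > 2 / p:
        raise ValueError("Corollary 4.13 requires M > 2/p")
    c = L * (2 + p * M)
    if not 0 < alpha < 1 / c:
        raise ValueError("Corollary 4.13 requires 0 < alpha < 1/(L(2 + pM))")
    return max(1 - alpha * mu * (1 - alpha * c), 1 + 2 / M - p)


def best_alpha_for_first_term(L, p, M):
    """Maximizer of alpha * (1 - alpha * L * (2 + pM)) over alpha > 0."""
    return 1 / (2 * L * (2 + p * M))


# Example: mu = 0.1, L = 1, p = 0.5, M = 8 (so M > 2/p = 4).
print(l_svrp_rate(0.1, 0.1, 1.0, 0.5, 8.0))  # approximately 0.996
```

When the second term \(1+\frac{2}{M}-p\) dominates, tuning \(\alpha \) no longer changes q; it is then M and p that limit the guaranteed rate.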

Remark 4.14

A proximal version of L-SVRG [28] was proposed concurrently in [24], where linear convergence is established in the smooth strongly convex setting. The method of [24] uses an approximation of the proximity operator at each iteration, and Lipschitz continuity of the gradient is replaced by the weaker “second-order similarity” condition, namely

In [24] the convex case is not analyzed.

4.4 Stochastic Average Proximal Algorithm

In this paragraph, we propose a new stochastic proximal point method, analogous to SAGA [14], called the Stochastic Aggregated Proximal Algorithm (SAPA).

Algorithm 4.15

(SAPA) Let \((i_k)_{k \in \mathbb {N}}\) be a sequence of i.i.d. random variables uniformly distributed on \(\{1,\dots , n\}\). Let \(\alpha >0\) and set, for every \(i \in [n]\), \(\varvec{\phi }_i^0=\varvec{x}^0 \in \textsf{H}\). Then

where \(\delta _{i,j}\) is the Kronecker symbol.

Remark 4.16

Algorithm 4.15 is similar to, but different from, the Point-SAGA algorithm proposed in [13]. In Point-SAGA, the stored gradients are updated as

whereas in SAGA [14] and its proximal version that we proposed in Algorithm 4.15, the update is

As in the previous cases, SAPA can be identified with Algorithm 2.1 by setting \(\varvec{e}^k {:}{=}\nabla f_{i_{k}}(\varvec{\phi }_{i_{k}}^{k}) - \frac{1}{n} \sum _{i=1}^n \nabla f_i (\varvec{\phi }_i^k)\) for all \(k\in \mathbb {N}\). In addition, let

with \(\varvec{x}^{*} \in \mathop {\mathrm {\textrm{argmin}}}\limits F\) such that \(\Vert \varvec{x}^0-\varvec{x}^{*}\Vert = \mathop {\mathrm {\textrm{dist}}}\limits (\varvec{x}^0, \mathop {\mathrm {\textrm{argmin}}}\limits F)\). Setting \(\mathfrak {F}_k = \sigma (i_0, \dots , i_{k-1})\), we have that \(\varvec{x}^k\) and \(\varvec{\phi }_i^k\) are \(\mathfrak {F}_k\)-measurable and that \(i_k\) is independent of \(\mathfrak {F}_k\).
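SAPA can be sketched in the same way, again assuming least-squares summands with a closed-form proximity operator. The sketch assumes, as in SAGA [14], that only the sampled slot is refreshed, with \(\varvec{\phi }_{i_k}^{k+1} = \varvec{x}^k\) (the point before the proximal step, in contrast with Point-SAGA as discussed in Remark 4.16). Rather than storing the points \(\varvec{\phi }_i^k\), it stores the gradients \(\nabla f_i(\varvec{\phi }_i^k)\) and their running average, a common implementation choice for SAGA. Function names are hypothetical.

```python
import numpy as np


def prox_ls(z, a, b, alpha):
    """Closed-form prox of alpha * 0.5 * (<a, x> - b)^2, evaluated at z."""
    return z - alpha * (a @ z - b) / (1.0 + alpha * (a @ a)) * a


def sapa(A, b, alpha, n_iter, seed=0):
    """Sketch of SAPA on least squares with a table of stored gradients."""
    rng = np.random.default_rng(seed)
    n, d = A.shape
    x = np.zeros(d)
    # phi_i^0 = x^0 for every i: one full pass of per-sample gradients at x^0.
    table = A * (A @ x - b)[:, None]               # row i holds grad f_i(phi_i)
    avg = table.mean(axis=0)                       # (1/n) * sum_i grad f_i(phi_i)
    for _ in range(n_iter):
        i = rng.integers(n)
        a_i = A[i]
        e = table[i] - avg                         # e^k
        x_new = prox_ls(x + alpha * e, a_i, b[i], alpha)
        # Assumed SAGA-style refresh: phi_i^{k+1} = x^k for i = i_k only.
        g_new = a_i * (a_i @ x - b[i])
        avg += (g_new - table[i]) / n
        table[i] = g_new
        x = x_new
    return x
```

The gradient table costs \(O(nd)\) memory, the usual price of SAGA-type methods; the initial pass over all n summands is why SAPA is given n fewer iterations in the experiments of Sect. 5.1.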

Lemma 4.17

Suppose that Assumption (A.ii) holds. Let \((\varvec{x}^k)_{k \in \mathbb {N}}\) be the sequence generated by Algorithm 4.15, with \(\varvec{v}^{k}=\nabla f_{i_{k}}\left( \varvec{x}^{k}\right) -\nabla f_{i_{k}}(\varvec{\phi }_{i_{k}}^{k})+\frac{1}{n} \sum _{i=1}^n \nabla f_i (\varvec{\phi }_i^k)\) and \(\sigma _{k}\) as defined in (4.13). Then, for all \(k\in \mathbb {N}\), it holds

and

By the above lemma, Assumptions 2.5 hold with \(A = 2\,L, B=2, C = \frac{L}{n}\), \(\rho =\frac{1}{n}\) and \(D \equiv 0\). Applying Theorems 3.2 and 3.3, respectively, to Algorithm 4.15 yields the following corollaries.

Corollary 4.18

Suppose that Assumption (A.ii) holds and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 4.15. Let \(M \ge 2n\) and let \(\alpha > 0\) be such that \(\alpha < \frac{1}{L(2+M/n)}\). Then, for all \(k \in \mathbb {N}\),

with \(\bar{\varvec{x}}^{k} = \frac{1}{k}\sum _{t=0}^{k-1}\varvec{x}^t\).

Corollary 4.19

Suppose that Assumptions 2.4 hold and that the sequence \((\varvec{x}^k)_{k \in \mathbb {N}}\) is generated by Algorithm 4.15. Let \(M > 2n\) and let \(\alpha > 0\) be such that \(\alpha < \frac{1}{L(2+M/n)}\). Set \(q {:}{=}\max \left\{ 1 - \alpha \mu \left( 1-\alpha L(2+M/n)\right) , 1+\frac{2}{M}-\frac{1}{n}\right\} \). Then \(q \in ]0,1[\) and for all \(k \in \mathbb {N}\),
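The factor in Corollary 4.19 is that of Corollary 4.13 with p replaced by \(1/n\), consistently with the constants \(C = L/n\) and \(\rho = 1/n\) found above. A small helper (hypothetical name) evaluates it and makes a floor visible: since \(M > 2n\), the second term \(1+\frac{2}{M}-\frac{1}{n}\) exceeds \(1-\frac{1}{n}\), so the guaranteed per-iteration factor never drops below \(1 - 1/n\), whatever the step size.

```python
def sapa_rate(alpha, mu, L, n, M):
    """Linear factor q of Corollary 4.19, after checking its hypotheses."""
    if not M > 2 * n:
        raise ValueError("Corollary 4.19 requires M > 2n")
    c = L * (2 + M / n)
    if not 0 < alpha < 1 / c:
        raise ValueError("Corollary 4.19 requires 0 < alpha < 1/(L(2 + M/n))")
    return max(1 - alpha * mu * (1 - alpha * c), 1 + 2 / M - 1 / n)


# Example: mu = 0.1, L = 1, n = 10, M = 25 (so M > 2n = 20).
print(sapa_rate(0.1, 0.1, 1.0, 10, 25.0))  # approximately 0.9945
```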

Remark 4.20

We now compare our results with those in [13] for Point-SAGA. In the smooth case, Point-SAGA converges linearly when, for every \(i \in [n]\), \(f_i\) is differentiable with \(L\)-Lipschitz gradient and \(\mu \)-strongly convex, whereas we require convexity of each \(f_i\) and only the PL condition for F. The work [13] does not provide any rate for convex functions, but it does provide an ergodic sublinear convergence rate for nonsmooth strongly convex functions. Although this rate is only ergodic and sublinear despite strong convexity, it is obtained with a constant step size.

5 Experiments

In this section, we perform experiments on synthetic data to compare the schemes presented and analyzed in Sect. 4. We compare the variance-reduced algorithms SAPA (Algorithm 4.15) and SVRP (Algorithm 4.4) with their vanilla counterpart SPPA (Algorithm 4.1) and with their explicit-gradient counterparts, SAGA [14] and SVRG [22]. Depending on the computational demand of the problem, the plots are averaged over 10 or 5 runs, and the deviation from the average is also plotted. All the code is available on GitHub (see the Data Availability statement).

5.1 Comparing SAPA and SVRP to SPPA

The cost of each iteration of SVRP differs from that of SPPA. More precisely, SVRP consists of two nested loops, where each outer iteration requires one full explicit gradient and m stochastic gradient computations. Since we minimize a sum of n functions, we run SPPA for N iterations, with \(N = S(m + n + 1)\), where S is the maximum number of outer iterations and m the number of inner iterations, as defined in Algorithm 4.4. Let s be the outer iteration counter. As in [22], m is set to 2n. The step size \(\alpha \) of SVRP is fixed at 1/(5L). SAPA is run for \(N - n\) iterations, because it requires a full gradient computation at initialization; its step size is also set to 1/(5L). Finally, the SPPA step size is chosen as \(\alpha _k = 1 / (k^{0.55})\).
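The budget bookkeeping described above can be written down in a few lines. The sketch below (hypothetical helper names) reproduces \(N = S(m+n+1)\), the \(N-n\) iterations given to SAPA, and the SPPA step size \(\alpha _k = 1/k^{0.55}\), assuming the iteration counter k starts at 1.

```python
def iteration_budgets(n, S, m=None):
    """Iteration counts giving SPPA and SAPA budgets comparable to S outer SVRP steps."""
    if m is None:
        m = 2 * n                      # inner iterations, set to 2n as in [22]
    N = S * (m + n + 1)                # SPPA iterations
    return {"SPPA": N, "SAPA": N - n}  # SAPA pays n gradients once at initialization


def sppa_step(k):
    """SPPA step size alpha_k = 1 / k**0.55 (assumes k >= 1)."""
    return 1.0 / k ** 0.55
```

For instance, with \(n = 1000\) and \(S = 10\), SPPA gets 30010 iterations and SAPA 29010; the same formula with \(s = 40\), \(m = 1000\) and \(n = 2000\) gives the budget \(N = 120040\) used in Sect. 5.3.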

For all three algorithms, we normalize the abscissa so as to present the convergence with respect to the outer iterates \(({\tilde{\varvec{x}}}^s)_{s \in \mathbb {N}}\) as in Theorem 4.7.

The algorithms are run for \(n \in \{1000, 5000, 10000\}\), with d fixed at 500 and \(cond(A^{\top \!}A)\), the condition number of \(A^{\top \!}A\), set to 100.

5.1.1 Logistic Regression

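First, we consider experiments on the logistic loss:

\[\min _{\varvec{\textsf{x}}\in \mathbb {R}^d} F(\varvec{\textsf{x}}) = \frac{1}{n}\sum _{i=1}^n \log \left( 1+\exp \{-b_i\langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle \}\right) , \tag{5.1}\]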

where \(\varvec{\textsf{a}}_i\) is the \(i^{th}\) row of a matrix \(A \in \mathbb {R}^{n \times d}\) and \(b_i\in \{-1, 1\}\) for all \(i \in [n]\). Setting \(f_i(\varvec{\textsf{x}}) = \log (1+\exp \{-b_i\langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle \})\) for all \(i \in [n]\), we have \(F(\varvec{\textsf{x}}) = \sum _{i=1}^n \frac{1}{n} f_i(\varvec{\textsf{x}})\). The matrix A is generated randomly. We first generate a matrix M with standard normal entries and compute its singular value decomposition \(M = UDV^{\top \!}\). We set the smallest singular value to zero and rescale the remaining singular values so that the largest one equals a given condition number and the second smallest equals one. This yields a new diagonal matrix \(D^{\prime }\), and A is given by \(UD^{\prime }V^{\top \!}\). For this problem, \(L = 0.25 \times \max _i \Vert \varvec{\textsf{a}}_i\Vert _2^2\). We compute the proximity operator of the logistic function \(f_i\) using the formula and the subroutine available in [12], together with the calculus rule for the proximity operator of a function composed with a linear map [4, Corollary 24.15].

Although we have no theoretical result for SVRP in the convex case, we include it in these experiments as well. As can be seen in Fig. 1, both SAPA and SVRP outperform SPPA.

5.1.2 Ordinary Least Squares (OLS)

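To analyze the practical behavior of the proposed methods when the PL condition holds, we test all the algorithms on an ordinary least squares problem:

\[\min _{\varvec{\textsf{x}}\in \mathbb {R}^d} F(\varvec{\textsf{x}}) = \frac{1}{2n}\sum _{i=1}^n \left( \langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle - b_i \right) ^2, \tag{5.2}\]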

where \(\varvec{\textsf{a}}_i\) is the \(i^{th}\) row of the matrix \(A\in \mathbb {R}^{n\times d}\) and \(b_i\in \mathbb {R}\) for all \(i\in [n]\). In this setting, we have \(F(\varvec{\textsf{x}}) = \sum _{i=1}^n \frac{1}{n} f_i(\varvec{\textsf{x}})\) with \(f_i(\varvec{\textsf{x}}) = \frac{1}{2}\left( \langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle - b_i \right) ^2\) for all \(i \in [n]\).

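Here, the matrix A is generated as in Sect. 5.1.1. The proximity operator is computed in closed form:

\[\textrm{prox}_{\alpha f_i}(\varvec{\textsf{x}}) = \varvec{\textsf{x}} - \frac{\alpha \left( \langle \varvec{\textsf{a}}_i, \varvec{\textsf{x}}\rangle - b_i\right) }{1+\alpha \Vert \varvec{\textsf{a}}_i\Vert _2^2}\,\varvec{\textsf{a}}_i.\]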

As in the logistic case, SVRP and SAPA converge faster than SPPA; see Fig. 2.

5.2 Comparing SAPA to SAGA

In the next experiments, we use SAPA and SAGA to solve the least-squares problem (5.2) and the logistic regression problem (5.1). The data are generated exactly as in Sect. 5.1.2, with the matrix A generated as explained in Sect. 5.1.1.

The aim of these experiments is twofold. On the one hand, we aim to evaluate the practical performance of the proposed method in terms of convergence rate and to compare it with the corresponding variance-reduced gradient algorithm; on the other hand, we want to assess the stability of SAPA with respect to the step size selection. Indeed, SPPA has been shown to be more robust and stable with respect to the choice of the step size than stochastic gradient algorithms; see [3, 26, 37]. In Fig. 3 (OLS) and Fig. 4 (logistic regression), we plot the number of iterations that SAPA and SAGA need to reach an accuracy \(F(x_{t})-F_{*} \le \varepsilon =0.01\), over a fixed range of step sizes. The algorithms are run for \(n \in \{1000, 5000, 10000\}\), with d fixed at 500 and \(cond(A^{\top \!}A)\) at 100.

Our experimental results show that, if the step size is small enough, SAPA and SAGA behave very similarly on both problems; see Figs. 3 and 4. This behavior agrees with the theoretical results, which establish convergence rates for SAPA similar to those of SAGA. As the step size increases, however, SAPA proves more stable than SAGA: the range of step sizes for which SAPA converges is wider than that of SAGA.
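A single least-squares summand offers some intuition for this difference in stability; this is a heuristic illustration, not an argument from the analysis above. From a point \(\varvec{\textsf{z}}\), one proximal step on \(f(\varvec{\textsf{x}}) = \frac{1}{2}(\langle \varvec{\textsf{a}}, \varvec{\textsf{x}}\rangle - b)^2\) scales the residual \(\langle \varvec{\textsf{a}}, \cdot \rangle - b\) by \(1/(1+\alpha \Vert \varvec{\textsf{a}}\Vert ^2)\), which lies in \(]0,1[\) for every \(\alpha > 0\), whereas one explicit gradient step scales it by \(1-\alpha \Vert \varvec{\textsf{a}}\Vert ^2\), whose absolute value exceeds 1 as soon as \(\alpha > 2/\Vert \varvec{\textsf{a}}\Vert ^2\). The function name is hypothetical.

```python
import numpy as np


def one_step_residuals(z, a, b, alpha):
    """Residual <a, x> - b after one prox step and one gradient step on 0.5*(<a, x> - b)^2 from z."""
    r = a @ z - b
    x_prox = z - alpha * r / (1.0 + alpha * (a @ a)) * a   # closed-form prox step
    x_grad = z - alpha * r * a                              # explicit gradient step
    return a @ x_prox - b, a @ x_grad - b


# ||a||^2 = 4 and alpha = 1 > 2/||a||^2: the gradient step overshoots.
r_prox, r_grad = one_step_residuals(np.array([1.0, 1.0]), np.array([2.0, 0.0]), 0.0, 1.0)
print(r_prox, r_grad)  # approximately 0.4 and -6.0 (initial residual: 2.0)
```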

5.3 Comparing SVRP to SVRG

In this section, we compare the performance of Algorithm 4.4 (SVRP) with that of SVRG [22] on the least-squares problem (5.2), in terms of the number of inner iterations (oracle calls) needed to reach an accuracy \(F(x_{t})-F_{*} \le \varepsilon =0.01\), over a fixed range of step sizes. In this experiment, the condition number is set to \(\text {cond}(A^{\top }A)=100\), \(n=2000\), and the dimension d of the optimization variable varies in \(\{1000,1500,2000, 3000\}\). The numbers of outer and inner iterations are set to \(s=40\) and \(m=1000\), respectively, and the maximum number of iterations (oracle calls) is set to \(N=s(m+n+1)\).

Two main observations can be made. First, when the step size is optimally tuned, SVRP always performs better than SVRG (overall, it requires fewer iterations to reach \(\varepsilon =0.01\) accuracy). Second, regarding the stability of the two methods with respect to the choice of the step size, SVRG seems slightly more stable for easy problems (\(d=1000\)), whereas the situation is reversed for harder problems (e.g., \(d\in \{2000,3000\}\)), where SVRP is more robust. The results are reported in Fig. 5.

Fig. 5 Number of iterations needed to reach an accuracy of 0.01 for different step sizes when solving an OLS problem. SVRP (blue) is compared with SVRG (orange) for four values of the dimension d in problem (5.2), from \(d=1000\) (easy case) to \(d=3000\) (hard case).

6 Conclusion and Future Work

In this work, we proposed a general variance reduction scheme based on the stochastic proximal point algorithm (SPPA). In particular, we introduced new variants of SPPA coupled with various variance reduction strategies, namely SVRP, SAPA and L-SVRP (see Sect. 4), for which we proved improved convergence results compared to the vanilla version. As the experiments suggest, these stochastic proximal variance-reduced methods are, in most cases and especially for harder problems, more stable with respect to the choice of the step size than their explicit-gradient counterparts, which makes them advantageous.

Since this paper requires the summands to be differentiable, an interesting line of future research is the extension of these results to the nonsmooth case and to the model-based framework, as well as the study of inertial (accelerated) variants that could, hopefully, lead to faster rates.

Data Availability

The datasets generated during the current study are available from the corresponding author on request and on GitHub https://github.com/cheiktraore/Variance-reduction-for-SPPA.

References

Allen-Zhu, Z., Yuan, Y.: Improved SVRG for non-strongly-convex or sum-of-non-convex objectives. In: Balcan, M.F., Weinberger, K.Q. (eds.) Proceedings of the 33rd International Conference on Machine Learning, Volume 48 of Proceedings of Machine Learning Research, pp. 1080–1089. PMLR, New York (2016)

Apidopoulos, V., Ginatta, N., Villa, S.: Convergence rates for the heavy-ball continuous dynamics for non-convex optimization, under Polyak-Łojasiewicz condition. J. Glob. Optim. 84(3), 563–589 (2022)

Asi, H., Duchi, J.C.: Stochastic (approximate) proximal point methods: convergence, optimality, and adaptivity. SIAM J. Optim. 29(3), 2257–2290 (2019)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, New York (2017)

Bégout, P., Bolte, J., Jendoubi, M.A.: On damped second-order gradient systems. J. Differ. Equ. 259(7), 3115–3143 (2015)

Bertsekas, D.P.: Incremental proximal methods for large scale convex optimization. Math. Program. 129(2), 163–195 (2011)

Bianchi, P.: Ergodic convergence of a stochastic proximal point algorithm. SIAM J. Optim. 26(4), 2235–2260 (2016)

Bolte, J., Daniilidis, A., Lewis, A.: The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 17(4), 1205–1223 (2007)

Bolte, J., Nguyen, T.P., Peypouquet, J., Suter, B.W.: From error bounds to the complexity of first-order descent methods for convex functions. Math. Program. 165(2), 471–507 (2017)

Bottou, L.: On-line Learning and Stochastic Approximations, pp. 9–42. Publications of the Newton Institute, Cambridge University Press, Cambridge (1999)

Bottou, L.: Large-scale machine learning with stochastic gradient descent. In: Lechevallier, Y., Saporta, G. (eds.) Proceedings of COMPSTAT’2010, pp. 177–186. Physica-Verlag HD, Heidelberg (2010)

Chierchia, G., Chouzenoux, E., Combettes, P.L., Pesquet, J.-C.: The proximity operator repository. User’s guide (2020). http://proximity-operator.net/download/guide.pdf

Defazio, A.: A simple practical accelerated method for finite sums. In: Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 29. Curran Associates Inc, Red Hook (2016)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. Adv. Neural. Inf. Process. Syst. 27, 1646–1654 (2014)

Drusvyatskiy, D., Lewis, A.S.: Error bounds, quadratic growth, and linear convergence of proximal methods. Math. Oper. Res. 43(3), 919–948 (2018)

Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12(61), 2121–2159 (2011)

Fang, C., Li, C.J., Lin, Z., Zhang, T.: SPIDER: near-optimal non-convex optimization via stochastic path-integrated differential estimator. In: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 31, pp. 689–699. Curran Associates Inc, Red Hook (2018)

Garrigos, G., Rosasco, L., Villa, S.: Convergence of the forward-backward algorithm: beyond the worst-case with the help of geometry. Math. Program. 198, 937–996 (2023)

Gong, P., Ye, J.: Linear convergence of variance-reduced stochastic gradient without strong convexity. arXiv preprint arXiv:1406.1102 (2014)

Gorbunov, E., Hanzely, F., Richtárik, P.: A unified theory of SGD: variance reduction, sampling, quantization and coordinate descent. In: Chiappa, S., Calandra, R. (eds.) Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, Volume 108 of Proceedings of Machine Learning Research, pp. 680–690. PMLR (2020)

Hofmann, T., Lucchi, A., Lacoste-Julien, S., McWilliams, B.: Variance reduced stochastic gradient descent with neighbors. In: Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 28, pp. 2305–2313. Curran Associates Inc, Red Hook (2015)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Burges, C., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K. (eds.) Advances in Neural Information Processing Systems, vol. 26, pp. 315–323. Curran Associates Inc, Red Hook (2013)

Karimi, H., Nutini, J., Schmidt, M.: Linear convergence of gradient and proximal-gradient methods under the Polyak-Łojasiewicz condition. In: Frasconi, P., Landwehr, N., Manco, G., Vreeken, J. (eds.) Machine Learning and Knowledge Discovery in Databases, pp. 795–811. Springer International Publishing, Cham (2016)

Khaled, A., Jin, C.: Faster federated optimization under second-order similarity. In: The Eleventh International Conference on Learning Representations (2023)

Khaled, A., Richtárik, P.: Better theory for SGD in the nonconvex world. Transactions on Machine Learning Research, Survey Certification (2023)

Kim, J.L., Toulis, P., Kyrillidis, A.: Convergence and stability of the stochastic proximal point algorithm with momentum. In: Firoozi, R., Mehr, N., Yel, E., Antonova, R., Bohg, J., Schwager, M., Kochenderfer, M. (eds.) Proceedings of The 4th Annual Learning for Dynamics and Control Conference, Volume 168 of Proceedings of Machine Learning Research, pp. 1034–1047. PMLR (2022)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Kovalev, D., Horváth, S., Richtárik, P.: Don’t jump through hoops and remove those loops: SVRG and Katyusha are better without the outer loop. In: Kontorovich, A., Neu, G. (eds.) Proceedings of the 31st International Conference on Algorithmic Learning Theory, Volume 117 of Proceedings of Machine Learning Research, pp. 451–467. PMLR (2020)

Łojasiewicz, S.: Une propriété topologique des sous-ensembles analytiques réels. Les équations aux Dérivées Partielles 117, 87–89 (1963)

Milzarek, A., Schaipp, F., Ulbrich, M.: A semismooth Newton stochastic proximal point algorithm with variance reduction. SIAM J. Optim. 34(1), 1157–1185 (2024)

Moulines, E., Bach, F.: Non-asymptotic analysis of stochastic approximation algorithms for machine learning. In: Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., Weinberger, K. (eds.) Advances in Neural Information Processing Systems, vol. 24, pp. 451–459. Curran Associates Inc, Red Hook (2011)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course, vol. 87. Springer Science & Business Media, Berlin (2003)

Nguyen, L.M., Liu, J., Scheinberg, K., Takáč, M.: SARAH: a novel method for machine learning problems using stochastic recursive gradient. In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning, Volume 70 of Proceedings of Machine Learning Research, pp. 2613–2621. PMLR (2017)

Patrascu, A., Necoara, I.: Nonasymptotic convergence of stochastic proximal point methods for constrained convex optimization. J. Mach. Learn. Res. 18(1), 7204–7245 (2017)

Polyak, B.T.: Gradient methods for the minimisation of functionals. USSR Comput. Math. Math. Phys. 3(4), 864–878 (1963)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1–17 (1964)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22(3), 400–407 (1951)

Ryu, E.K., Boyd, S.: Stochastic proximal iteration: a non-asymptotic improvement upon stochastic gradient descent. Author website, early draft (2014)

Schmidt, M., Le Roux, N., Bach, F.: Minimizing finite sums with the stochastic average gradient. Math. Program. 162(1), 83–112 (2017)

Sebbouh, O., Gower, R.M., Defazio, A.: Almost sure convergence rates for stochastic gradient descent and stochastic heavy ball. In: Belkin, M., Kpotufe, S. (eds.) Proceedings of Thirty Fourth Conference on Learning Theory, Volume 134 of Proceedings of Machine Learning Research, pp. 3935–3971. PMLR (2021)

Shalev-Shwartz, S., Ben-David, S.: Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press, Cambridge (2014)

Toulis, P., Airoldi, E.M.: Asymptotic and finite-sample properties of estimators based on stochastic gradients. Ann. Stat. 45(4), 1694–1727 (2017)

Toulis, P., Horel, T., Airoldi, E.M.: The proximal Robbins-Monro method. J. R. Stat. Soc. Ser. B Stat. Methodol. 83(1), 188–212 (2021)

Toulis, P., Tran, D., Airoldi, E.: Towards stability and optimality in stochastic gradient descent. In: Gretton, A., Robert, C.C. (eds.) Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, Volume 51 of Proceedings of Machine Learning Research, pp. 1290–1298. PMLR, Cadiz (2016)

Wang, Z., Ji, K., Zhou, Y., Liang, Y., Tarokh, V.: Spiderboost and momentum: faster variance reduction algorithms. In: Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 32, pp. 2406–2416. Curran Associates Inc, Red Hook (2019)

Zhang, J., Zhu, X.: Linear convergence of Prox-SVRG method for separable non-smooth convex optimization problems under bounded metric subregularity. J. Optim. Theory Appl. 192(2), 564–597 (2022)

Funding

Open access funding provided by Università degli Studi di Genova within the CRUI-CARE Agreement.


Additional information

Communicated by Sonia Cafieri.


Cheik Traoré: This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 861137.

Silvia Villa acknowledges the financial support of the European Research Council (grant SLING 819789), the AFOSR projects FA9550-17-1-0390, FA8655-22-1-7034, and BAA-AFRL-AFOSR-2016-0007 (European Office of Aerospace Research and Development), and the EU H2020-MSCA-RISE project NoMADS - DLV-777826.

Appendix A: Additional Proofs


In this appendix, we provide the proofs of some auxiliary results used in Sects. 3 and 4 of the main text.

A.1 Proofs of Section 3

Proof of Proposition 3.1

Notice that from (2.2), \(\varvec{x}^{k+1}\) can be identified as

Let \(\varvec{\textsf{x}}\in \textsf{H}\). The function \(R_{i_{k}}(\varvec{\textsf{x}})=f_{i_{k}}(\varvec{\textsf{x}})-\langle \varvec{e}^k,\varvec{\textsf{x}}-\varvec{x}^{k}\rangle \) is convex and continuously differentiable, with \(\nabla R_{i_{k}}(\varvec{\textsf{x}})=\nabla f_{i_{k}}(\varvec{\textsf{x}})-\varvec{e}^k\).

By convexity of \(R_{i_{k}}\), we have:

Since \(\nabla R_{i_{k}}(\varvec{x}^{k+1})=\frac{\varvec{x}^{k}-\varvec{x}^{k+1}}{\alpha }\), from (A.1), it follows:

By using the identity \(\langle \varvec{x}^{k}-\varvec{x}^{k+1}, \varvec{\textsf{x}}-\varvec{x}^{k+1}\rangle =\frac{1}{2}\Vert \varvec{x}^{k+1}-\varvec{x}^{k}\Vert ^{2} + \frac{1}{2}\Vert \varvec{x}^{k+1}-\varvec{\textsf{x}}\Vert ^{2} -\frac{1}{2}\Vert \varvec{x}^{k}-\varvec{\textsf{x}}\Vert ^{2}\) in the previous inequality, we find
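The identity used here is the polarization formula \(\langle \varvec{\textsf{u}}, \varvec{\textsf{w}}\rangle = \frac{1}{2}\Vert \varvec{\textsf{u}}\Vert ^2 + \frac{1}{2}\Vert \varvec{\textsf{w}}\Vert ^2 - \frac{1}{2}\Vert \varvec{\textsf{u}} - \varvec{\textsf{w}}\Vert ^2\), applied with \(\varvec{\textsf{u}} = \varvec{x}^{k}-\varvec{x}^{k+1}\) and \(\varvec{\textsf{w}} = \varvec{\textsf{x}}-\varvec{x}^{k+1}\):

```latex
\langle \varvec{x}^{k}-\varvec{x}^{k+1}, \varvec{\textsf{x}}-\varvec{x}^{k+1}\rangle
= \tfrac{1}{2}\Vert \varvec{x}^{k}-\varvec{x}^{k+1}\Vert^{2}
+ \tfrac{1}{2}\Vert \varvec{\textsf{x}}-\varvec{x}^{k+1}\Vert^{2}
- \tfrac{1}{2}\Vert (\varvec{x}^{k}-\varvec{x}^{k+1})-(\varvec{\textsf{x}}-\varvec{x}^{k+1})\Vert^{2},
\qquad
(\varvec{x}^{k}-\varvec{x}^{k+1})-(\varvec{\textsf{x}}-\varvec{x}^{k+1}) = \varvec{x}^{k}-\varvec{\textsf{x}}.
```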

Recalling the definition of \(\varvec{v}^k\) in (2.3), we can lower bound the left-hand side as follows:

where in the first inequality, we used the convexity of \(f_i\), for all \(i \in [n]\). Since

From (A.3), it follows

By using (A.4) in (A.2), we obtain

Now, define \(\textsf{E}_k[\cdot ] = \textsf{E}[\cdot \,\vert \, \mathfrak {F}_k]\), where \(\mathfrak {F}_k\) is defined in Assumptions 2.5 and is such that \(\varvec{x}^k\) is \(\mathfrak {F}_k\)-measurable and \(i_k\) is independent of \(\mathfrak {F}_k\). Taking the conditional expectation of inequality (A.5) and rearranging the terms, we have

Replacing \(\varvec{\textsf{x}}\) by \(\varvec{y}^{k}\), with \( \varvec{y}^{k} \in \mathop {\mathrm {\textrm{argmin}}}\limits F\) such that \(\Vert \varvec{x}^{k}-\varvec{y}^{k}\Vert = \mathop {\mathrm {\textrm{dist}}}\limits (\varvec{x}^{k}, \mathop {\mathrm {\textrm{argmin}}}\limits F)\) and using Assumption (B.ii), we get

By taking the total expectation in (A.6) and using (B.iii), for all \(M > 0\) we have

Since \( \textsf{E}[\mathop {\mathrm {\textrm{dist}}}\limits (\varvec{x}^{k+1}, \mathop {\mathrm {\textrm{argmin}}}\limits F)^2] \le \textsf{E}\Vert \varvec{x}^{k+1}-\varvec{y}^{k}\Vert ^{2}\), the statement follows. \(\square \)

A.2 Proofs of Section 4

Proof of Lemma 4.5

Given any \(i \in [n]\), since \(f_i\) is convex and L-Lipschitz smooth, it is a standard fact (see [32, Equation 2.1.7]) that

By summing the above inequality over \(i=1, \ldots , n\), we have

and hence, if \(\nabla F\left( \varvec{\textsf{y}}\right) =0\), we obtain
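For convex L-smooth summands, the standard inequality \(f_i(\varvec{\textsf{x}}) \ge f_i(\varvec{\textsf{y}}) + \langle \nabla f_i(\varvec{\textsf{y}}), \varvec{\textsf{x}}-\varvec{\textsf{y}}\rangle + \frac{1}{2L}\Vert \nabla f_i(\varvec{\textsf{x}})-\nabla f_i(\varvec{\textsf{y}})\Vert ^2\), averaged over i with \(\nabla F(\varvec{\textsf{y}})=0\), gives a bound of the form \(\frac{1}{n}\sum _{i=1}^n\Vert \nabla f_i(\varvec{\textsf{x}})-\nabla f_i(\varvec{\textsf{y}})\Vert ^2 \le 2L(F(\varvec{\textsf{x}})-F(\varvec{\textsf{y}}))\). The snippet below is a numerical sanity check of that bound on least-squares summands (each \(f_i\) is \(\Vert \varvec{\textsf{a}}_i\Vert ^2\)-smooth), with \(\varvec{\textsf{y}}\) a minimizer; it is an illustration, not part of the proof, and the helper name is hypothetical.

```python
import numpy as np


def smoothness_bound_sides(A, b, x):
    """Both sides of (1/n) sum_i ||grad f_i(x) - grad f_i(y)||^2 <= 2L (F(x) - F(y)) for least squares."""
    L = np.max(np.sum(A ** 2, axis=1))              # f_i is ||a_i||^2-smooth
    y = np.linalg.lstsq(A, b, rcond=None)[0]        # a minimizer, so grad F(y) = 0

    def F(w):
        return 0.5 * np.mean((A @ w - b) ** 2)

    diffs = A * (A @ (x - y))[:, None]              # row i: grad f_i(x) - grad f_i(y)
    lhs = np.mean(np.sum(diffs ** 2, axis=1))
    rhs = 2 * L * (F(x) - F(y))
    return lhs, rhs
```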

Now, let \(s \in \mathbb {N}\) and \(k \in \{0,\dots , m-1\}\) and set, for the sake of brevity, \(j_k = i_{sm+k}\). Defining \(\mathfrak {F}_{k} = \mathfrak {F}_{s,k} = \sigma (\xi _0, \dots , \xi _{s-1}, i_0, \dots , i_{sm+k-1})\) and \(\textsf{E}_k[\cdot ] = \textsf{E}[\cdot \,\vert \, \mathfrak {F}_k]\), and recalling that

we obtain

where in the last inequality, we used (6.7) with \(\varvec{\textsf{y}}={\tilde{\varvec{y}}}^s\). \(\square \)

Proof of Lemma 4.11

The proof of (4.11) is identical to that of (4.5) in Lemma 4.5, using \(\varvec{u}^k\) instead of \({\tilde{\varvec{x}}}^s\) and \(\textsf{E}_k[\cdot ] = \textsf{E}[\cdot \,\vert \, \mathfrak {F}_k]\) with \(\mathfrak {F}_k = \sigma (i_0, \dots , i_{k-1}, \varepsilon ^0, \dots , \varepsilon ^{k-1})\), which ensures that \(\varvec{x}^k\) and \(\varvec{u}^k\) are \(\mathfrak {F}_k\)-measurable, and that \(i_k\) and \(\varepsilon ^k\) are independent of \(\mathfrak {F}_k\). Concerning (4.12), we note that

Therefore, we have

where in the last inequality we used (6.7) with \(\varvec{\textsf{y}}= P_{\mathop {\mathrm {\textrm{argmin}}}\limits F}(\varvec{x}^k)\). \(\square \)

Proof of Lemma 4.17

We recall that, by definition,

Now, set \(\textsf{E}_k [\cdot ] =\textsf{E}[\cdot \vert \, \mathfrak {F}_k]\) with \(\mathfrak {F}_k= \sigma (i_0, \dots , i_{k-1})\), so that \(\varvec{\phi }^k_i\) and \(\varvec{x}^k\) are \(\mathfrak {F}_k\)-measurable and \(i_k\) is independent of \(\mathfrak {F}_k\). By definition of \(\varvec{v}^k\), and using the fact that \(\nabla F(\varvec{x}^{*})=0\) and inequality (6.7), we have

For the second part (4.14), we proceed as follows

where in the last inequality we used inequality (6.7) with \(\varvec{\textsf{y}}= \varvec{x}^*\). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Traoré, C., Apidopoulos, V., Salzo, S. et al. Variance Reduction Techniques for Stochastic Proximal Point Algorithms. J Optim Theory Appl (2024). https://doi.org/10.1007/s10957-024-02502-6


DOI: https://doi.org/10.1007/s10957-024-02502-6