Abstract

Formative assessment strategies direct instruction by establishing where learners’ understanding stands and how it is developing, and by informing teachers and students alike how they might reach their next goals for conceptual understanding. In the science classroom, one rich source of formative assessment data about scientific thinking and practice is the notebooks used during inquiry-oriented activities. The goal of this study was to better understand how student knowledge was distributed between student drawings and writings about magnetism in notebooks, and how these findings might inform formative assessment strategies. Drawing and writing samples were extracted from our digital science notebook, which has embedded content and laboratories, and then evaluated. Three drawings and five writing samples from 309 participants were analyzed using a common ten-dimensional rubric. Descriptive and inferential statistics revealed that fourth-grade student understanding of magnetism was distributed unevenly between writing and drawing. Case studies are then presented for two exemplar students. Based on the rubric we developed, students were able to articulate more of their knowledge through the drawing activities than through the written word, but the combination of the two mediums provided the richest understanding of student conceptions and how they changed over the course of their investigations.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. DRL-1020229. We would also like to acknowledge the help and support of our cooperating classroom teachers.

Ethics declarations

Conflicts of interest

The authors have no known potential conflicts of interest pertaining to the research reported in this manuscript.

Research involving human participants and/or animals

All procedures performed in this study involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. The research with human participants reported here was approved by our overseeing Institutional Review Board.

Informed consent

Informed consent was obtained from all individual participants included in this study.

Appendix 1

Example posttest items

5. When a piece of iron is very close to a magnet
   - a. Nothing happens to the particles in the steel.
   - b. All the magnetic particles orient the same way.
   - c. The magnetic particles orient in different ways.
   - d. Some magnetic particles orient one way, and others orient the opposite way.

6. Which of the following statements best describes what materials are made of?
   - a. All materials contain magnetic particles
   - b. All materials contain only one kind of particle
   - c. Some materials do not contain smaller particles
   - d. All materials are made of many, many small particles that cannot be seen with your eyes.

7. Temporary magnets
   - a. Have particles that cannot rotate
   - b. Do not contain magnetic particles
   - c. Contain magnetic particles
   - d. Have particles that change from non-magnetic to magnetic

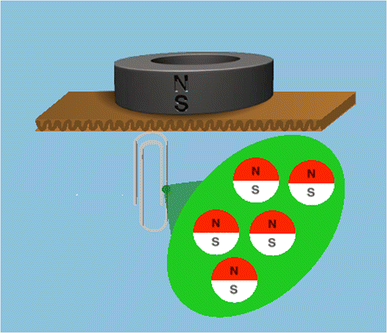

8. Look at the picture. Choose the best description of what is happening in this image
   - a. The paperclip is going to fall because the cardboard is blocking the magnetic force
   - b. The paperclip has not become magnetized because the cardboard is blocking the magnetic force
   - c. The paperclip is being attracted to the magnet through the cardboard
   - d. The cardboard has become magnetized

15. Non-magnetic particles
   - a. Do not orient in magnetic fields
   - b. Can turn into magnetic particles
   - c. Can be in materials that contain magnetic particles
   - d. Only exist in plastic

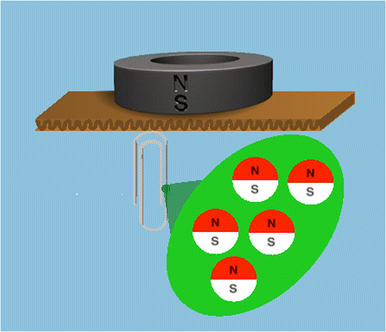

17. Look at the picture. Choose the best description of what is happening in this image
   - a. The magnetic field lines pass through the cardboard and magnetize the paperclip
   - b. The magnetic field lines only go upwards away from the cardboard
   - c. The particles in the paperclip have not been affected by the magnetic field
   - d. The paperclip will not be attracted to the magnet

18. Magnetic field lines
   - a. Will not pass through non-magnetic material
   - b. Pass through aluminum foil and paper
   - c. Are best represented as a single circle around a magnet
   - d. Do not affect magnetic particles

About this article

Cite this article

Shelton, A., Smith, A., Wiebe, E. et al. Drawing and Writing in Digital Science Notebooks: Sources of Formative Assessment Data. J Sci Educ Technol 25, 474–488 (2016). https://doi.org/10.1007/s10956-016-9607-7

DOI: https://doi.org/10.1007/s10956-016-9607-7