Abstract

In this paper we study the properties of the centered (norm of the) gradient squared of the discrete Gaussian free field in \(U_{\varepsilon }=U/\varepsilon \cap \mathbb {Z}^d\), \(U\subset \mathbb {R}^d\) and \(d\ge 2\). The covariance structure of the field is a function of the transfer current matrix and this relates the model to a class of systems (e.g. the height-one field of the Abelian sandpile model or pattern fields in dimer models) that have a Gaussian limit due to the rapid decay of the transfer current. Indeed, we prove that the properly rescaled field converges to white noise in an appropriate local Besov–Hölder space. Moreover, under a different rescaling, we determine the k-point correlation function and joint cumulants on \(U_{\varepsilon }\) and in the continuum limit as \(\varepsilon \rightarrow 0\). This result is related to the analogous limit for the height-one field of the Abelian sandpile (Dürre in Stoch Process Appl 119(9):2725–2743, 2009), with the same conformally covariant property in \(d=2\).

1 Introduction

The Gaussian free field (GFF) is one of the most prominent models for random surfaces. It appears as the scaling limit of observables in many interacting particle systems, see for example Jerison et al. [21], Kenyon [24], Sheffield [32], Wilson [37]. It serves as a building block for defining the Liouville measure in Liouville quantum gravity (see Ding et al. [10] and references therein for a list of works on the topic).

Its discrete counterpart, the discrete Gaussian free field (DGFF), is also very well-known among random interface models on graphs. Given a graph \(\Lambda \), a (random) interface model is defined as a collection of (random) real heights \(\Gamma =(\Gamma (x))_{x\in \Lambda }\), measuring the vertical distance between the interface and the set of points of \(\Lambda \) [15, 36]. The discrete Gaussian free field has attracted a lot of attention due to its links to random walks, cover times of graphs, and conformally invariant processes (see Barlow and Slade [2], Ding et al. [9], Glimm and Jaffe [17], Schramm and Sheffield [31], Sheffield [32], among others). In the present paper, we will consider the DGFF on the square lattice, that is, we will focus on \(\Lambda \subseteq \mathbb {Z}^d\), in which case the probability measure of the DGFF is a Gibbs measure with formal Hamiltonian given by

where \(V(\varphi )=\varphi ^2/2\). We will always work with 0-boundary conditions, meaning that we will set \(\Gamma (x)\) to be zero almost surely outside \(\Lambda \). For general potentials \(V(\cdot )\) the Hamiltonian (1.1) defines a broad class of gradient interfaces which have been widely studied in terms of decay of correlations and scaling limits [5, 7, 29], among others.

The gradient Gaussian free field \(\nabla \Gamma \) is defined as the gradient of the DGFF \(\Gamma \) along edges of the square lattice. This field is a centered Gaussian process whose correlation structure can be written in terms of \(T(\cdot ,\cdot )\), the transfer current (or transfer impedance) matrix [23]. Namely, if we consider the gradient \(\nabla _i \Gamma (\cdot ):=\Gamma (\cdot +e_i)-\Gamma (\cdot )\) in the i-th coordinate direction of \(\mathbb {R}^d\), we have, for \(x,y\in \mathbb {Z}^d,1\le i,j\le d \), that

\[\mathbb {E}\left[ \nabla _i\Gamma (x)\,\nabla _j\Gamma (y)\right] =\nabla _i^{(1)}\nabla _j^{(2)}G_{\Lambda }(x,y)=T(e,f),\]

where \(e=(x,x+e_i)\) and \(f=(y,y+e_j)\) are directed edges of the grid and \(G_{\Lambda }(\cdot ,\cdot )\) is the discrete harmonic Green’s function on \(\Lambda \) with 0-boundary conditions outside \(\Lambda \). Here T(e, f) describes a current flow between e and f.

The main object we will study in our article is the following. Take U to be a connected, bounded subset of \(\mathbb {R}^d\) with smooth boundary. Consider the recentered squared norm of the gradient DGFF, formally denoted by

\[\Phi _\varepsilon (\cdot ):=\sum _{i=1}^d :\!\left( \nabla _i\Gamma (\cdot )\right) ^2\!:\]

on the discretized domain \(U_{\varepsilon } = U/\varepsilon \cap \mathbb {Z}^d\), \(\varepsilon >0\), \(d\ge 2\), with \(\Gamma \) a 0-boundary DGFF on \(U_\varepsilon \). The colon \(:(\cdot ):\) denotes the Wick centering of the random variables. In the rest of the paper we will simply call \(\Phi _\varepsilon \) the gradient squared of the DGFF. Let us remark that we do not consider \(d=1\) here since in one dimension the gradient of the DGFF is a collection of i.i.d. Gaussian variables.

1.1 k-Point Correlation Functions

Our first main result determines the k-point correlation functions for the field \(\Phi _{\varepsilon }\) on the discretized domain \(U_\varepsilon \) and in the scaling limit as \(\varepsilon \rightarrow 0\). We defer the precise statement to Theorem 1 in Sect. 3, which we will now expose in a more informal way. Let \(\varepsilon >0\) and \(k \in \mathbb {N}\) and let the points \(x^{(1)},\dots ,x^{(k)}\) in \(U \subset \mathbb {R}^d\), \(d\ge 2\), be given. Define \(x^{(j)}_\varepsilon \) to be a discrete approximation of \(x^{(j)}\) in \(U_\varepsilon \), for \(j = 1,\dots ,k\). Let \(\Pi ([k])\) be the set of partitions of k objects and \(S_\textrm{cycl}^0(B) \) be the set of cyclic permutations of a set B without fixed points. Finally let \(\mathcal E\) be the set of coordinate vectors of \(\mathbb {R}^d\). Then the k-point correlation function at fixed “level” \(\varepsilon \) is equal to

Moreover if \(x^{(i)} \ne x^{(j)}\) for all \(i \ne j\), the scaling limit of the above expression is

where \(G_U(\cdot ,\cdot )\) is the continuum Dirichlet harmonic Green’s function on U. As a corollary (Corollary 1) we also determine the corresponding cumulants on \(U_\varepsilon \) and in the scaling limit.

Let us discuss some interesting observations in the sequel. The k-point correlation function of (1.3) has similarities to the k-point correlation that arises in permanental processes, see Eisenbaum and Kaspi [13], Hough et al. [19], Last and Penrose [25] for relevant literature. In fact, in \(d=1\) one can show that the gradient squared is exactly a permanental process with kernel given by the diagonal matrix whose non-zero entries are the double derivatives of \(G_U\) [27, Theorem 1]. In higher dimensions however we cannot identify a permanental process arising from the scaling limit, since the directions of differentiation of the DGFF at each point are not independent. Nevertheless the 2-point correlation functions of \(\Phi _\varepsilon \) are positive (see Eq. (4.20) in Sect. 4), which is consistent with attractiveness of permanental processes [25, Remark on p. 139], and the overall structure closely resembles that of the marginals of permanental processes.

In \(d=2\), the limiting k-joint cumulants of first order \(\kappa \) of our field are interestingly connected to the cumulants of the height-one field \(\big (h_{\varepsilon }(x_{\varepsilon }^{(i)}):\ x_\varepsilon ^{(i)}\in U_{\varepsilon }\big )\) of the Abelian sandpile model [11, Theorem 2]. Theorem 1 will imply that for every set of \(\ell \ge 2\) pairwise distinct points in \(d=2\) one has

with

see Dürre [12, Theorem 6].

We would also like to point out that the apparently intricate structure of Eqs. (1.2)–(1.3) and of Dürre’s Theorem 2 can be unfolded as soon as one recognizes therein the structure of a Fock space. We will discuss this point in more detail in Sect. 3.1, where in particular in Corollary 2 we will derive a Fock space representation of the k-point function for the height-one field. We will pose further questions on this matter in Sect. 5.

Due to the similar nature of the cumulants in the height-one field of the sandpile and our field, we show in Proposition 1 that in \(d=2\) the k-point correlation functions are conformally covariant (compare Dürre [11, Theorem 1], Kassel and Wu [23, Theorem 2]). This hints at Theorems 2 and 3 of Kassel and Wu [23], in which the authors prove that for finite weighted graphs the rescaled correlations of the spanning tree model and minimal subconfigurations of the Abelian sandpile have a universal and conformally covariant limit.

1.2 Scaling Limit

The second main result of our paper is the scaling limit of the field towards white noise in some appropriate local Besov–Hölder space. As we will show in Theorem 2, Sect. 3, as \(\varepsilon \rightarrow 0\) the gradient squared of the discrete Gaussian free field \(\Phi _{\varepsilon }\) converges as a random distribution to spatial white noise W:

for some explicit constant \(0<\chi <\infty \). The result is sharp in the sense that we obtain convergence in the smallest Hölder space where white noise lives. The constant \(\chi \), defined explicitly in (3.6), is the analogue of the susceptibility for the Ising model, in that it is a sum of all the covariances between the origin and any other lattice point. We will prove that this constant is finite and the field \(\Phi _\varepsilon \) has a Gaussian limit. Note that Newman [30] proves the same result for translation-invariant fields with finite susceptibility satisfying the FKG inequality. In our case we do not have translation invariance since we work on a domain, so we are not able to apply this criterion directly. From a broader perspective there are several other results in the literature that obtain white noise in the limit due to an algebraic decay of the correlations, see for example Bauerschmidt et al. [3].

Note that our field can be understood in a wider class of models having correlations which depend on the transfer current matrix \(T(\cdot ,\cdot )\). An interesting point mentioned in Kassel and Wu [23] is that pattern fields of determinantal processes closely connected to the spanning tree measure and \(T(\cdot ,\cdot )\) (for example the spanning unicycle, the Abelian sandpile model [11] and the dimer model [6]) have a universal Gaussian limit when viewed as random distributions. Correlations of those pattern fields can be expressed in terms of transfer current matrices which decay sufficiently fast and assure the central limit-type behaviour which we also obtain.

Let us comment finally on the differences between expressions (1.3) and (1.6). The scaling factors are different, and this reflects two viewpoints one can have on \(\Phi _\varepsilon \): the one of (1.3) is that of correlation functionals in a Fock space, while in (1.6) we are looking at it as a Gaussian distributional field (compare also Theorems 2 and 3 in Dürre [11]). This is compatible, as there are examples of trivial correlation functionals which are non-zero as random distributions [22].

The novelty of the paper lies in the fact that we construct the gradient squared of the Gaussian free field on a grid, determine its k-point correlation function and scaling limits. We determine tightness in optimal Besov–Hölder spaces (optimal in the sense that we cannot achieve a better regularity for the scaling limit to hold). Furthermore we show the “dual” behavior in the scaling limit of the gradient squared of the DGFF as a Fock space field and as a random distribution. As mentioned before we recognize a similarity to permanental processes, and it is worthwhile noticing that for general point processes there is a Fock space structure, see e.g. Last and Penrose [25, Section 18]. Since there is a close connection to the height-one field via correlation structures, we also unveil a Fock space structure in the Abelian sandpile model.

1.3 Proof Ideas

The main idea for the proof of results (1.2)–(1.3) is to decompose the k-point correlation function in terms of complete Feynman diagrams [20]. Then we can use the Gaussian nature of the field to expand the products of covariances as transfer currents. To determine the scaling limit we will use developments from Funaki [15] and Kassel and Wu [23]. Let us stress that the proof of the scaling limit of cumulants differs from the one of Dürre [11, Theorem 2] who instead uses the correspondence between the height-one field and the spanning tree explicitly to determine the limiting observables.

The proof of the scaling limit (1.6) is divided into two parts. In a first step (Proposition 2) we prove that the family of fields under consideration is tight in an appropriate local Besov–Hölder space by using a tightness criterion of Furlan and Mourrat [16]. The proof requires a precise control of the summability of k-point functions, which is provided by Theorem 1 and explicit estimates for double derivatives of the Green’s function in a domain. Observe that, even if the proof relies on the knowledge of the joint moments of the family of fields, we only use asymptotic bounds derived from them. More specifically, we need to control the rate of growth of sums of moments at different points. The second step (Proposition 3) consists in determining the finite-dimensional distributions and identifying the limiting field. We will first show that the limiting distribution, when tested against test functions, has vanishing cumulants of order greater than or equal to three, and secondly that the limiting covariance structure is the \(L^2(U)\) inner product of the test functions. This will imply that the finite-dimensional distributions of \(\Phi _\varepsilon \) converge to those corresponding to d-dimensional white noise. For this we rely on generalized bounds on double gradients of the Green’s function from Lawler and Limic [26] and Dürre [11].

Structure of the Paper

The structure of the paper is as follows. In Sect. 2 we fix notation, introduce the fields that we study and provide the definition of the local Besov–Hölder spaces where convergence takes place. Section 3 is devoted to stating the main results in a more precise manner. The subsequent Sect. 4 contains all proofs and finally in Sect. 5 we discuss possible generalizations and pose open questions.

2 Notation and Preliminaries

Notation Let f, g be two functions \(f,g:\, \mathbb {R}^d\rightarrow \mathbb {R}^d\), \(d\ge 2\). We will use \(f(x)\lesssim g(x)\) to indicate that there exists a constant \(C>0\) such that \(|f(x)|\le C |g(x)|\), where \(|\cdot |\) denotes the Euclidean norm in \(\mathbb {R}^d\). If we want to emphasize the dependence of C on some parameter (for example U, \(\varepsilon \)) we will write \(\lesssim _U\), \(\lesssim _\varepsilon \) and so on. We use the Landau symbol \(f=\mathcal O(g)\) if there exist \(x_0 \in \mathbb {R}^d\) and \(C>0\) such that \(|f(x)|\le C|g(x)|\) for all \(x\ge x_0\). Similarly \(f=o(g) \) means that \(\lim _{x\rightarrow 0}f(x)/g(x)=0\). Furthermore, call \([\ell ] :=\{1,2,\ldots ,\ell \}\) and \(\llbracket -\ell ,\ell \rrbracket :=\{-\ell ,\dots ,-1,0,1,\dots ,\ell \}\), for some \(\ell \in \mathbb {N}\).

We will write |A| for the cardinality of a set A. For any finite set A we define \(\Pi (A)\) as the set of all partitions of A. Let \(\textrm{Perm}(A)\) denote the set of all possible permutations of the set A (that is, bijections of A onto itself). When \(A = [k]\) for some \(k\in \mathbb {N}\), we might also refer to its set of permutations as \(S_k\). If we restrict \(S_k\) to those permutations without fixed points, we denote the resulting set by \(S^0_k\). Call \(S_\textrm{cycl}(A)\) the set of the full cyclic permutations of A, possibly with fixed points. More explicitly, any bijection \(\sigma :A\rightarrow A\) is in \(S_\textrm{cycl}(A)\) if \(\sigma (A') \ne A'\) for every subset \(A'\subsetneq A\) with \(\left| A'\right| > 1\). When this condition is imposed for all \(A'\subsetneq A\) with \(\left| A'\right| >0\), so that singletons are included, we obtain the set of all cyclic permutations without fixed points, which is called \(S^0_\textrm{cycl}(A)\).
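To make the combinatorial objects above concrete, the following minimal Python sketch enumerates the partitions \(\Pi (A)\) of a small set and the full cycles without fixed points of a block. The function names `set_partitions` and `full_cycles_without_fixed_points` are hypothetical helpers introduced only for this illustration; they are not part of the paper.

```python
from itertools import permutations


def set_partitions(items):
    """Yield every partition of the list `items` as a list of blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block in turn ...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ... or open a new block containing only `first`.
        yield [[first]] + partition


def full_cycles_without_fixed_points(block):
    """Yield the permutations of `block` made of one cycle through all
    elements and moving every element (a one-element block yields nothing).
    Each permutation is returned as a dict x -> sigma(x)."""
    block = list(block)
    if len(block) < 2:
        return
    head, tail = block[0], block[1:]
    # Fixing the starting point of the cycle lists each cycle exactly once.
    for order in permutations(tail):
        cycle = (head,) + order
        yield {cycle[i]: cycle[(i + 1) % len(cycle)] for i in range(len(cycle))}


partitions_of_4 = list(set_partitions([1, 2, 3, 4]))
cycles_of_4 = list(full_cycles_without_fixed_points([1, 2, 3, 4]))
print(len(partitions_of_4), len(cycles_of_4))
```

For a block with m elements, m at least 2, the second generator returns (m-1)! cycles, and it returns nothing for a singleton, which is why partitions containing singletons do not contribute to sums over \(S^0_\textrm{cycl}(B)\).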

Let \(n\in \mathbb {N}\) and \(\textbf{X}=(X_{i})_{i=1}^n\) be a vector of real-valued random variables, each of which has all finite moments.

Definition 1

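(Joint cumulants of a random vector) The cumulant generating function \(K(\textbf{t})\) of \(\textbf{X}\) for \(\textbf{t}=(t_1,\dots ,t_n)\in \mathbb {R}^n\) is defined as

\[K(\textbf{t}):=\log \mathbb {E}\left[ e^{\textbf{t}\cdot \textbf{X}}\right] =\sum _{\textbf{m}\in \mathbb {N}^n}\kappa _{\textbf{m}}(\textbf{X})\prod _{j=1}^n\frac{t_j^{m_j}}{m_j!},\]

where \(\textbf{t}\cdot \textbf{X}\) denotes the scalar product in \(\mathbb {R}^n\), \(\textbf{m}=(m_1,\dots ,m_n)\in \mathbb {N}^n\) is a multi-index with n components, and

\[\kappa _{\textbf{m}}(\textbf{X})=\frac{\partial ^{|m|}}{\partial t_1^{m_1}\cdots \partial t_n^{m_n}}K(\textbf{t})\Big |_{t_1=\cdots =t_n=0},\]

being \(|m|=m_1+\cdots +m_n\). The joint cumulant of the components of \(\textbf{X}\) can be defined as a Taylor coefficient of \(K(t_1,\ldots ,t_n)\) for \(\textbf{m}=(1,\,\,\ldots ,\,1)\); in other words

\[\kappa (X_1,\dots ,X_n)=\frac{\partial ^n}{\partial t_1\cdots \partial t_n}K(\textbf{t})\Big |_{t_1=\cdots =t_n=0}.\]

In particular, for any \(A\subseteq [n]\), the joint cumulant \(\kappa (X_i: i\in A)\) of \(\textbf{X}\) can be computed as

\[\kappa (X_i: i\in A)=\sum _{\pi \in \Pi (A)}(|\pi |-1)!\,(-1)^{|\pi |-1}\prod _{B\in \pi }\mathbb {E}\Big [\prod _{i\in B}X_i\Big ],\]

with \(|\pi |\) the cardinality of \(\pi \).

Let us remark that, by some straightforward combinatorics, it follows from the previous definition that

\[\mathbb {E}\Big [\prod _{i\in A}X_i\Big ]=\sum _{\pi \in \Pi (A)}\prod _{B\in \pi }\kappa (X_i: i\in B).\]

If \(A=\{i,j\}\), \(i,j\in [n]\), then the joint cumulant \(\kappa (X_i,X_j)\) is the covariance between \(X_{i}\) and \(X_{j}\). We stress that, for a real-valued random variable X, one has the equality

\[\kappa (\underbrace{X,\dots ,X}_{n\text { times}})=\kappa _n(X),\]

which we call the n-th cumulant of X.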
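The relation between joint moments and joint cumulants can be checked by hand on a toy example. The sketch below assumes the standard partition formula for joint cumulants, \(\kappa (X_i:i\in A)=\sum _{\pi \in \Pi (A)}(|\pi |-1)!\,(-1)^{|\pi |-1}\prod _{B\in \pi }\mathbb {E}[\prod _{i\in B}X_i]\), and its inversion; the finite sample space `samples` and all function names are hypothetical choices made only for illustration, and exact rational arithmetic is used to avoid rounding issues.

```python
from fractions import Fraction
from math import factorial


def set_partitions(items):
    """Yield every partition of the list `items` as a list of blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        yield [[first]] + partition


def moment(samples, indices):
    """Exact E[prod_{i in indices} X_i] under the uniform law on `samples`."""
    total = Fraction(0)
    for point in samples:
        term = Fraction(1)
        for i in indices:
            term *= point[i]
        total += term
    return total / len(samples)


def joint_cumulant(samples, indices):
    """kappa(X_i : i in indices) as a signed sum over partitions of moments."""
    result = Fraction(0)
    for partition in set_partitions(list(indices)):
        sign = (-1) ** (len(partition) - 1)
        weight = factorial(len(partition) - 1)
        product = Fraction(1)
        for block in partition:
            product *= moment(samples, block)
        result += sign * weight * product
    return result


def moment_from_cumulants(samples, indices):
    """Recover the joint moment as a sum over partitions of cumulants."""
    result = Fraction(0)
    for partition in set_partitions(list(indices)):
        product = Fraction(1)
        for block in partition:
            product *= joint_cumulant(samples, block)
        result += product
    return result


# Hypothetical toy law: the vector X is uniform on four points of Z^3.
samples = [(0, 1, 2), (1, 1, 0), (2, 0, 1), (3, 2, 2)]
covariance_01 = moment(samples, [0, 1]) - moment(samples, [0]) * moment(samples, [1])
print(joint_cumulant(samples, [0, 1]) == covariance_01)
print(moment_from_cumulants(samples, [0, 1, 2]) == moment(samples, [0, 1, 2]))
```

Repeating an index, for instance `joint_cumulant(samples, [0, 0, 0])`, gives the third cumulant of the single variable \(X_0\) in the sense of the last display of Definition 1.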

2.1 Functions of the Gaussian Free Field and White Noise

Let \(U\subset \mathbb {R}^d\), \(d\ge 2\), be a non-empty bounded connected open set with \(\mathcal C^1\) boundary. Denote by \((U_{\varepsilon }, E_{\varepsilon })\) the graph with vertex set \(U_\varepsilon :=U/\varepsilon \cap \mathbb {Z}^d\) and edge set \(E_\varepsilon \) defined as the bonds induced by the hypercubic lattice \(\mathbb {Z}^d\) on \(U_\varepsilon \). For an (oriented) edge \(e \in E_{\varepsilon }\) of the graph, we denote by \(e^+\) its tip and \(e^-\) its tail, and write the edge as \(e = (e^-, e^+)\). Consider \(\mathcal {E} :=\{e_i\}_{\,1\le i\le d}\), the canonical basis of \(\mathbb {R}^d\). Since we will use approximations via grid points, we need to introduce, for any \(t\in \mathbb {R}^d\), its floor function as

\[\left\lfloor t\right\rfloor :=\left( \left\lfloor t_1\right\rfloor ,\dots ,\left\lfloor t_d\right\rfloor \right) .\]

Definition 2

(Discrete Laplacian on a graph) We define the (normalized) discrete Laplacian with respect to a vertex set \(V\subseteq \mathbb {Z}^d\) as

where \(x,y \in V\) and \(x \sim y\) denotes that x and y are nearest neighbors. For any function \(f:V \rightarrow \mathbb {R}\) we define

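Define the outer boundary of V as

\[\partial ^\text {ex}V:=\left\{ x\in \mathbb {Z}^d\setminus V:\ x\sim y \text { for some } y\in V\right\} .\]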

Definition 3

(Discrete Green’s function) The Green’s function \(G_V(x,\cdot ): V \cup \partial ^\text {ex}V \rightarrow \mathbb {R}\), for \(x \in V\), with Dirichlet boundary conditions is defined as the solution of

where \(\delta \) is the Dirac delta function.
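For a finite vertex set the Dirichlet Green's function is simply the inverse of a finite matrix. The following sketch computes it exactly on a small box in \(\mathbb {Z}^2\), under the assumption that the normalized Laplacian acts as \(\Delta f(x)=\frac{1}{2d}\sum _{y\sim x}(f(y)-f(x))\) and that \(G_V(x,\cdot )\) solves \(-\Delta G_V(x,\cdot )=\delta _x(\cdot )\) on V with value 0 outside V; a different normalization would only rescale the result by a constant. All function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def box(side, d=2):
    """Vertices of the box {0, ..., side-1}^d."""
    return list(product(range(side), repeat=d))


def neighbours(x):
    """Nearest neighbours of the lattice point x in Z^d."""
    for i in range(len(x)):
        for step in (-1, 1):
            yield x[:i] + (x[i] + step,) + x[i + 1:]


def minus_laplacian(vertices):
    """Matrix of -Delta on `vertices` with 0-boundary conditions
    (assumed normalization: 1 on the diagonal, -1/(2d) between neighbours)."""
    d = len(vertices[0])
    index = {x: n for n, x in enumerate(vertices)}
    matrix = [[Fraction(0)] * len(vertices) for _ in vertices]
    for x, n in index.items():
        matrix[n][n] = Fraction(1)
        for y in neighbours(x):
            if y in index:  # neighbours outside V carry the value 0
                matrix[n][index[y]] = Fraction(-1, 2 * d)
    return matrix


def invert(matrix):
    """Exact Gauss-Jordan inversion of a square matrix of Fractions."""
    n = len(matrix)
    aug = [row[:] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


vertices = box(3)
green = invert(minus_laplacian(vertices))
centre = vertices.index((1, 1))
print(green[centre][centre])
```

The resulting matrix is symmetric with strictly positive entries, as expected from the random walk representation of the Green's function on a connected domain.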

Remark 1

When \(V = \mathbb {Z}^d\), we ask for the extra condition \(G_V(x,y) \rightarrow 0\) as \(\Vert y\Vert \rightarrow \infty \).

Denote by \(G_0(\cdot ,\cdot )\) the Green’s function for the whole grid \(\mathbb {Z}^d\) when \(d\ge 3\), or with a slight abuse of notation the potential kernel for \(d=2\). This abuse of notation is motivated by the fact that we will only be interested in the discrete differences of \(G_0\), which exist for the infinite-volume grid in any dimension. Notice that \(G_0(\cdot ,\cdot )\) is translation invariant; that is, \(G_0(x,y) = G_0(0,y-x)\) for all \(x,y\in \mathbb {Z}^d\).

Definition 4

(Continuum Green’s function) The continuum Green’s function \(G_U\) on \({\overline{U}} \subset \mathbb {R}^d\) is the solution (in the sense of distributions) of

for \(y\in U\), where \(\Delta \) denotes the continuum Laplacian and \({\overline{U}}\) is the closure of U.

For an exhaustive treatment on Green’s functions we refer to Evans [14], Lawler and Limic [26] and Spitzer [33].

Definition 5

(Discrete Gaussian free field, Sznitman [35, Section 2.1]) Let \(\Lambda \subset \mathbb {Z}^d\) be finite. The discrete Gaussian free field (DGFF) \((\Gamma (x))_{x\in \Lambda }\) with 0-boundary condition is defined as the (unique) centered Gaussian field with covariance given by

\[\mathbb {E}\left[ \Gamma (x)\Gamma (y)\right] =G_{\Lambda }(x,y),\quad x,y\in \Lambda .\]

Define for an oriented edge \(e = (e^-, e^+) \in E_\varepsilon \) the gradient DGFF \(\nabla _e\Gamma \) as

\[\nabla _e\Gamma :=\Gamma (e^+)-\Gamma (e^-).\]

In the following, we will define the main object of interest.

Definition 6

(Gradient squared of the DGFF) The discrete stochastic field \(\Phi _\varepsilon \) given by

\[\Phi _\varepsilon (x):=\sum _{i=1}^d :\!\left( \nabla _{e_i}\Gamma (x)\right) ^2\!:,\quad x\in U_\varepsilon ,\]

is called the gradient squared of the DGFF, where \(: \cdot :\) denotes the Wick product; that is, \(:\!X\!:\, = X - \mathbb {E}[X]\) for any random variable X.

The family of random fields \(\left( \Phi _\varepsilon \right) _{\varepsilon >0}\) is a family of distributions, which is defined to act on a given test function \(f\in \mathcal C_c^\infty (U)\) as

\[\left\langle \Phi _\varepsilon ,f\right\rangle :=\int _U \Phi _\varepsilon \left( \left\lfloor x/\varepsilon \right\rfloor \right) f(x)\,\textrm{d}x,\]

where we take \(\Phi _\varepsilon (\left\lfloor x/\varepsilon \right\rfloor ) = 0\) in case \(\left\lfloor x/\varepsilon \right\rfloor \notin U_\varepsilon \), which can happen if \(\varepsilon \) is not small enough.

When no ambiguities appear, we will write \(\left( \nabla _i\Gamma (x)\right) ^2\) for \(\left( \nabla _{e_i}\Gamma (x)\right) ^2\), with \(i=1,2,\dots ,d\). For any given function \(f:\mathbb {Z}^d\times \mathbb {Z}^d\rightarrow \mathbb {R}\), we define the discrete gradient in the first argument and direction \(e_i\in \mathcal E\) as

\[\nabla _{e_i}^{(1)}f(x,y):=f(x+e_i,y)-f(x,y),\]

with \(x,y\in \mathbb {Z}^d\), and analogously

\[\nabla _{e_i}^{(2)}f(x,y):=f(x,y+e_i)-f(x,y)\]

for the second argument. Once again, when no ambiguities arise, we will write \(\nabla _i^{(1)}\) for \(\nabla _{e_i}^{(1)}\), and analogously for the second argument.

For a continuum function \(g:U\times U\rightarrow \mathbb {R}\), \(\partial _{e_i}^{(1)}g(x,y)\) denotes the partial derivative of g with respect to the first argument in the direction of \(e_i\), while \(\partial _{e_i}^{(2)}g(x,y)\) corresponds to the second argument, also in the direction \(e_i\). The same abuse of notation on the subindex \(e_i\) applies here.

Definition 7

(Gaussian white noise) The d-dimensional Gaussian white noise W is the centered Gaussian random distribution on \(U \subset \mathbb {R}^d\) such that, for every \(f,g\in L^2(U)\),

\[\mathbb {E}\left[ \langle W,f\rangle \langle W,g\rangle \right] =\int _U f(x)g(x)\,\textrm{d}x.\]

In other words, \(\langle W,f \rangle \sim \mathcal N \big (0, \Vert f\Vert ^2_{L^2(U)} \big )\) for every \(f\in L^2(U)\).

2.2 Besov–Hölder Spaces

In this subsection we define the function space in which convergence takes place, using Furlan and Mourrat [16] as our main reference. Local Hölder and Besov spaces of negative regularity on general domains are natural function spaces when considering scaling limits of certain random distributions or non-linear stochastic PDEs (see e.g. Furlan and Mourrat [16], Hairer [18]), especially when those objects are well-defined on a domain \(U \subset \mathbb {R}^d\) but not necessarily on the full space \(\mathbb {R}^d\). They are particularly suited for fields which behave badly near the boundary \(\partial U\).

Let \((V_n)_{n\in \mathbb {Z}}\) be an increasing sequence of closed subspaces of \(L^2(\mathbb {R}^d)\) whose union is dense in \(L^2(\mathbb {R}^d)\) and such that \(\bigcap _{n\in \mathbb {Z}} V_n = \{0\}\). Denote by \(W_n\) the orthogonal complement of \(V_n\) in \(V_{n+1}\) for all \(n\in \mathbb {Z}\). Furthermore, we assume the following properties. A function f belongs to \(V_n\) if and only if \(f(2^{-n} \cdot ) \in V_0\). Let \((\phi (\cdot -k))_{k\in \mathbb {Z}^d}\) be an orthonormal basis of \(V_0\) and \((\psi ^{(i)}(\cdot -k))_{i<2^d, k\in \mathbb {Z}^d}\) an orthonormal basis of \(W_0\). Note that \(\phi \) and \((\psi ^{(i)})_{i<2^d}\) all belong to \(\mathcal {C}^r_c(\mathbb {R}^d)\) for some positive integer \(r \in \mathbb {N}\), that is, they belong to the set of r times continuously differentiable functions on \(\mathbb {R}^d\) with compact support. For more details about wavelet analysis, see [8, 28].

Define \(\Lambda _n=\mathbb {Z}^d/2^n\) and

resp.

which makes \((\phi _{n,x})_{x\in \Lambda _n}\) an orthonormal basis of \(V_n\) resp. \((\psi ^{(i)}_{n,x})_{x\in \Lambda _n, i<2^d, n\in \mathbb {Z}}\) an orthonormal basis of \(L^2(\mathbb {R}^d)\). Every function \(f\in L^2(\mathbb {R}^d)\) can be decomposed into

for any fixed \(k\in \mathbb {Z}\), where \(\mathcal {V}_n\) resp. \(\mathcal {W}_n\) are the orthogonal projections onto \(V_n\) resp. \(W_n\) defined as

Definition 8

(Besov spaces) Let \(\alpha \in \mathbb {R}\), \(|\alpha |<r\), \(p,q\in [1,\infty ]\) and \(U\subset \mathbb {R}^d\). The Besov space \(\mathcal {B}^{\alpha }_{p,q}(U)\) is the completion of \(\mathcal {C}^{\infty }_c(U)\) with respect to the norm

The local Besov space \(\mathcal {B}^{\alpha , \textrm{loc}}_{p,q}(U)\) is the completion of \(\mathcal {C}^{\infty }(U)\) with respect to the family of semi-norms

indexed by \(\widetilde{\chi }\in \mathcal {C}^{\infty }_c(U)\).

We will use the following embedding property of Besov spaces in the tightness argument.

Lemma 1

(Furlan and Mourrat [16, Remark 2.12]) For any \(1\le p_1 \le p_2 \le \infty \), \(q\in [1,\infty ]\) and \(\alpha \in \mathbb {R}\), the space \(\mathcal B_{p_2,q}^{\alpha ,\textrm{loc}}(U)\) is continuously embedded in \(\mathcal B_{p_1,q}^{\alpha ,\textrm{loc}}(U)\).

Finally let us define the functional space where convergence will take place, the space of distributions with locally \(\alpha \)-Hölder regularity. For that, we denote as \(\mathcal C^r\) the set of r times continuously differentiable functions on \(\mathbb {R}^d\), with \(r\in \mathbb {N}\cup \{\infty \}\). We also define the \(\mathcal C^r\) norm of a function \(f\in \mathcal C^r\) as

being \(i\in \mathbb {N}^d\) a multi-index.

Definition 9

(Hölder spaces) Let \(\alpha < 0,r_0=-\lfloor \alpha \rfloor \). The space \(\mathcal C^{\alpha }_{\textrm{loc}}(U)\) is called the locally Hölder space with regularity \(\alpha \in \mathbb {R}\) on the domain U. It is the completion of \(\mathcal {C}^{\infty }_c(U)\) with respect to the family of semi-norms

indexed by \(\widetilde{\chi }\in \mathcal {C}^{\infty }_c(U)\) and

where

Note that by Furlan and Mourrat [16, Remark 2.18] one has \(\mathcal C^{\alpha }_{\textrm{loc}}(U) = \mathcal {B}^{\alpha , \textrm{loc}}_{\infty ,\infty }(U)\).

3 Main Results

The first result we would like to present is an explicit computation of the k-point correlation function of the gradient squared of the DGFF, \(\Phi _\varepsilon \), introduced in Definition 6.

Theorem 1

Let \(\varepsilon >0\) and \(k \in \mathbb {N}\) and let the points \(x^{(1)},\dots ,x^{(k)}\) in \(U \subset \mathbb {R}^d\), \(d\ge 2\), be given. Define \(x^{(j)}_\varepsilon :=\left\lfloor x^{(j)}/\varepsilon \right\rfloor \) and choose \(\varepsilon \) small enough so that \(x^{(j)}_\varepsilon \in U_\varepsilon \), for all \(j = 1,\dots ,k\). Then

where \(G_{U_{\varepsilon }}(\cdot ,\cdot )\) was defined in Definition 3. Moreover if \(x^{(i)} \ne x^{(j)}\) for all \(i \ne j\), then

where \(G_U(\cdot ,\cdot )\) was defined in Eq. (2.4).

Remark 2

It will sometimes be useful to write (3.1) as the equivalent expression

where the requirement that \(\sigma \) be a full cycle of B without fixed points is encoded in the no-singleton condition on the partitions \(\pi \).

Remark 3

From the above expression it is immediate to see that the 2-point function is given by

\[\mathbb {E}\left[ \Phi _\varepsilon \big (x^{(1)}_\varepsilon \big )\Phi _\varepsilon \big (x^{(2)}_\varepsilon \big )\right] =2\sum _{i,j\in [d]}\left( \nabla _i^{(1)}\nabla _j^{(2)}G_{U_\varepsilon }\big (x^{(1)}_\varepsilon ,x^{(2)}_\varepsilon \big )\right) ^2,\]

which will be useful later on.
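The Gaussian identity behind the 2-point function is that, for centered jointly Gaussian variables X and Y, \(\mathbb {E}[:\!X^2\!:\,:\!Y^2\!:]=2\,\mathbb {E}[XY]^2\); applying it with \(X=\nabla _i\Gamma (x)\) and \(Y=\nabla _j\Gamma (y)\) and summing over the directions i, j gives a sum of squared double gradients of the Green's function. The sketch below checks this identity, together with the analogous three-variable identity \(\mathbb {E}[:\!X_0^2\!:\,:\!X_1^2\!:\,:\!X_2^2\!:]=8\,c_{01}c_{12}c_{20}\), by summing over pairings as in Wick's (Isserlis') theorem. The covariance matrix `cov` is a hypothetical example and the function names are introduced only for illustration.

```python
from fractions import Fraction
from itertools import combinations


def pairings(items):
    """Yield all perfect matchings of the list `items`."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for k, partner in enumerate(rest):
        remaining = rest[:k] + rest[k + 1:]
        for matching in pairings(remaining):
            yield [(first, partner)] + matching


def gaussian_moment(cov, indices):
    """E[prod_i X_i] for a centered Gaussian vector with covariance `cov`,
    computed as a sum over pairings (Wick's theorem)."""
    if len(indices) % 2:
        return Fraction(0)
    total = Fraction(0)
    for matching in pairings(list(indices)):
        term = Fraction(1)
        for a, b in matching:
            term *= cov[a][b]
        total += term
    return total


def centered_square_moment(cov, indices):
    """E[prod_j :X_j^2:] with :X^2: = X^2 - E[X^2], for distinct indices,
    obtained by expanding the product over the subsets of kept squares."""
    total = Fraction(0)
    for size in range(len(indices) + 1):
        for kept in combinations(indices, size):
            dropped = [j for j in indices if j not in kept]
            # Each kept index contributes the factor X_j^2, i.e. X_j twice.
            term = gaussian_moment(cov, [j for j in kept for _ in range(2)])
            for j in dropped:
                term *= -cov[j][j]
            total += term
    return total


# Hypothetical positive definite covariance matrix of (X_0, X_1, X_2).
cov = [
    [Fraction(2), Fraction(1), Fraction(1, 2)],
    [Fraction(1), Fraction(3), Fraction(1)],
    [Fraction(1, 2), Fraction(1), Fraction(2)],
]
print(centered_square_moment(cov, [0, 1]) == 2 * cov[0][1] ** 2)
print(centered_square_moment(cov, [0, 1, 2]) == 8 * cov[0][1] * cov[1][2] * cov[2][0])
```

The factor 8 in the three-variable case equals \(2^{3-1}\) times the number of full cycles of three elements, which illustrates how cyclic permutations enter the higher-order correlation functions.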

The following Corollary is a direct consequence of Theorem 1.

Corollary 1

Let \(\ell \in \mathbb {N}\). The joint cumulants \(\kappa \left( \Phi _\varepsilon \big (x^{(j)}_\varepsilon \big ):j\in [\ell ],\,x^{(j)}_\varepsilon \in U_\varepsilon \right) \) of the field \(\Phi _{\varepsilon }\) at “level” \(\varepsilon >0\) are given by

Moreover if \(x^{(i)} \ne x^{(j)}\) for all \(i \ne j\), then

As already mentioned in the introduction, comparing our result with Dürre [11, Theorem 2] we obtain (1.4).

The following proposition states that in \(d=2\) the limit of the field \(\Phi _{\varepsilon }\) is conformally covariant with scale dimension 2. This result can also be deduced for the height-one field for the sandpile model, see Dürre [11, Theorem 1].

Proposition 1

Let \(U,U' \subset \mathbb {R}^2\), \(k\in \mathbb {N}\), \(\big \{x^{(j)}\big \}_{j\in [k]}\), and \(\big \{x^{(j)}_\varepsilon \big \}_{j\in [k]}\) be as in Theorem 1. Furthermore let \(h:U\rightarrow U'\) be a conformal mapping and call \(h_\varepsilon \big (x^{(j)}\big ) :=\left\lfloor h\big (x^{(j)}\big )/\varepsilon \right\rfloor \), for \(\varepsilon \) small enough so that \(h_\varepsilon \big (x^{(j)}\big ) \in U'_\varepsilon \) for all \(j\in [k]\). Then

where now for clarity we emphasize the dependence of \(\Phi _\varepsilon \) on its domain.

Finally we will show that the rescaled gradient squared of the discrete Gaussian free field will converge to white noise in some appropriate locally Hölder space with negative regularity \(\alpha \) in \(d\ge 2\) dimensions. This space is denoted as \(\mathcal C_\textrm{loc}^\alpha (U)\) (see Definition 9).

Theorem 2

Let \(U\subset \mathbb {R}^d\) with \(d\ge 2\). The gradient squared of the discrete Gaussian free field \(\Phi _{\varepsilon }\) converges in the following sense as \(\varepsilon \rightarrow 0\):

where the white noise W is defined in Definition 7. This convergence takes place in \(\mathcal C^{\alpha }_{\textrm{loc}}(U)\) for any \(\alpha < -d/2\), and the constant \(\chi \) defined as

is well-defined, in the sense that \(0<\chi <\infty \).

Remark 4

Let us remind the reader that \(\mathcal C^{\alpha }_{\textrm{loc}}(U)\) with \(\alpha < -d/2\) are the optimal spaces in which the white noise lives. See for example Armstrong et al. [1, Proposition 5.9].

3.1 Fock Space Structure

Let us discuss in the following the connection to Fock spaces. We start by reminding the reader of the definition of the continuum Gaussian free field (GFF).

Definition 10

(Continuum Gaussian free field, Berestycki [4, Section 1.5]) The continuum Gaussian free field \(\overline{\Gamma }\) with 0-boundary (or Dirichlet) conditions outside U is the unique centered Gaussian process indexed by \(\mathcal C_c^\infty (U)\) such that

where \(G_U(\cdot , \cdot )\) was defined in Definition 4.

We can think of it as an isometry \(\overline{\Gamma }: \mathcal H \rightarrow L^2(\Omega ,\mathbb P)\), for some Hilbert space \(\mathcal H\) and some probability space \((\Omega , \mathcal {F},\mathbb P)\). To fix ideas, throughout this Section let us fix \(\mathcal H:=\mathcal H_0^1(U)\), the order one Sobolev space with Dirichlet inner product (see Berestycki [4, Section 1.6]). Note that, even if the GFF is not a proper random variable, we can define its derivative as a Gaussian distributional field.

Definition 11

(Derivatives of the GFF, Kang and Makarov [22, p. 4]) The derivative of \(\overline{\Gamma }\) is defined as the Gaussian distributional field \(\partial _i \overline{\Gamma }\), \(1\le i\le d\), in the following sense:

There is however another viewpoint that one can take on the GFF and its derivatives, namely that of viewing them as Fock space fields. This approach will be used to reinterpret the meaning of Theorem 1. For the reader’s convenience we now recall some basic facts about Fock spaces and their fields. Our presentation is drawn from Janson [20, Section 3.1] and Kang and Makarov [22, Sects. 1.2–1.4].

For \(n\ge 0\), we denote \(\mathcal H^{\odot n}\) as the n-th symmetric tensor power of \(\mathcal H\); in other words, \(\mathcal H^{\odot n}\) is the completion of linear combinations of elements \(f_1\odot \cdots \odot f_n\) with respect to the inner product

The symmetric Fock space over \(\mathcal H\) is

We now introduce elements in \(Fock(\mathcal H)\) called Fock space fields. We call basic correlation functionals the formal expressions of the form

for \(p\in \mathbb {N}\), \(x_{1},\dots ,x_{p}\in U\), and \(X_{1},\dots ,X_{p}\) derivatives of \(\overline{\Gamma }\). The set \(\mathcal S(\mathcal X_p):=\{x_1,\ldots ,x_p\}\) is called the set of nodes of \(\mathcal X_p\). Basic Fock space fields are formal expressions written as products of derivatives of the Gaussian free field \(\overline{\Gamma }\), for example \(1\odot \overline{\Gamma }\), \(\partial \overline{\Gamma }\odot \overline{\Gamma }\odot \overline{\Gamma }\) etc. A general Fock space field X is a linear combination of basic fields. We think of any such X as a map \(u\mapsto X(u)\), \(u\in U\), where the values \(\mathcal X=X(u)\) are correlation functionals with \(\mathcal S(\mathcal X)=\{u\}\). Thus Fock space fields are functional-valued functions. Observe that Fock space fields may or may not be distributional random fields, but in any case we can think of them as functions in U whose values are correlation functionals.

Our goal now is to define tensor products. We will restrict our attention to tensor products over an even number of correlation functionals, although the definition can be given for an arbitrary number of them. This restriction is motivated by the set-up we will be working with.

Definition 12

(Tensor products in Fock spaces) Let \(m\in 2\mathbb {N}\). Given a collection of correlation functionals

with pairwise disjoint \(\mathcal S(\mathcal X_j)\)’s, the tensor product of the elements \(\mathcal X_1,\ldots ,\mathcal X_m\) is defined as

where the sum is taken over Feynman diagrams \(\gamma \) with vertices u labeled by functionals \(X_{pq}\) in such a way that there are no contractions of vertices in the same \(\mathcal S(\mathcal X_p)\). \(E_\gamma \) denotes the set of edges of \(\gamma \). One extends the definition of tensor product to general correlation functionals by linearity.

The reader may have noticed that (3.7) is simply one version of Wick’s theorem. It is indeed this formula that will allow us in Sect. 4.2 to prove Theorem 1, and that enables one to bridge Fock spaces and our cumulants in the following way. For any \(j\in [k]\), \(k\in \mathbb {N}\), \(i_j\in [d]\), one can define the basic Fock space field \(X_{i_j} :=\partial _{i_j} \overline{\Gamma }\). Introduce the correlation functional

for \(x^{(j)}\in U\). We now obtain the statement of the next lemma.

Lemma 2

(k-point correlation functions as Fock space fields) Under the assumptions of Theorem 1,

where \({\mathcal Y}_B\big (x^{(B)}\big ):=2{\mathcal Y}_1\odot \cdots \odot 2 {\mathcal Y}_j\), \(\mathcal S(\mathcal { Y}_j)=\{x^{(j)}\}\), \(j\in B\). Here \(|\pi |\) stands for the number of blocks of the partition \(\pi \) and the tensor product on the r.h.s. is taken in the sense of (3.7).

The Fock space structure is more evident from the Gaussian perspective of the DGFF, but (1.4) together with Dürre’s theorem entail a corollary which we would like to highlight. We remind the reader of the definition of the constant C in (1.5).

Corollary 2

(Height-one field k-point functions, \(d=2\)) With the same notation of Theorem 1 one has in \(d=2\) that

where \(\widetilde{\mathcal Y}_B\big (x^{(B)}\big ):=\widetilde{\mathcal Y}_1\odot \cdots \odot \widetilde{\mathcal Y}_j,\,\,\mathcal S(\widetilde{\mathcal Y}_j)=\{x^{(j)}\}\) and \(\widetilde{\mathcal Y}_j:=C\,\,\mathcal Y_j\), \(j\in B\). As before, \(|\pi |\) stands for the number of blocks of the partition \(\pi \).

Remark 5

Note that our Green’s functions differ from those of [11] by a factor of 2d, since we define them via the normalized Laplacian, whereas Dürre uses the unnormalized one. This has to be accounted for when comparing the corresponding results in both papers.

4 Proofs

4.1 Previous Results from Literature

Let us now state some important results that we will refer to throughout the proofs. Some of them are known, while others are straightforward generalizations of previous results.

Our computations will rely on the fact that the distribution of the gradient field \(\nabla _i \Gamma \), \(i\in [d]\), is well-known. The following result is quoted from Funaki [15, Lemma 3.6].

Lemma 3

Let \(\Lambda \subset \mathbb {Z}^d\) be finite, and let \((\Gamma _x)_{x\in \Lambda }\) be a 0-boundary conditions DGFF on \(\Lambda \) (see Definition 5). Then

Consequently, we can directly link the gradient DGFF to the so-called transfer current matrix \(T(\cdot ,\cdot )\) by

where \(e,\,f\) are oriented edges of \(\Lambda \) (see Kassel and Wu [23, Section 2]). Equivalently we can write

From Lemma 3, it is clear that we need to control the behaviour of double derivatives of discrete Green’s function in the limit \(\varepsilon \rightarrow 0\). In order to find the limiting joint moments of the point-wise field \(\Phi _\varepsilon (x)\) we will need the following result about the convergence of the discrete difference of the Green’s function on \(U_\varepsilon \) (see Definition 3) to the double derivative of the continuum Green’s function \(G_U(\cdot ,\cdot )\) on a set U (see Eq. (2.4)). This result follows from Theorem 1 of Kassel and Wu [23].

Lemma 4

(Convergence of the Green’s function differences) Let v, w be points in the set U, with \(v \ne w\). Then for all \(a,b \in \mathcal E\),

The next lemma is a generalization of Dürre [12, Lemma 31] for general dimensions \(d\ge 2\). The proof is straightforward and will be omitted. It provides an error estimate when replacing the double difference of \(G_{U_\varepsilon }(\cdot ,\cdot )\) on the finite set by that of \(G_0(\cdot ,\cdot )\) defined on the whole lattice.

Lemma 5

Let \(D\subset U\) be such that the distance between D and \(\partial U\) is non-vanishing, that is, \({{\,\textrm{dist}\,}}{(D,\partial U)} :=\inf _{(x,y)\in D\times \partial U}\left| x-y\right| > 0\). There exist \(c_D > 0\) and \(\varepsilon _D > 0\) such that, for all \(\varepsilon \in (0,\varepsilon _D]\), for all \(v,w \in D_\varepsilon :=D/\varepsilon \cap \mathbb {Z}^d\) and \(i,j\in [d]\),

and also

An immediate consequence of (4.4) and of the expression (3.1) in Theorem 1 for two points is the following bound on the covariance of the field:

Corollary 3

Let D, v and w be as in Lemma 5. Then

On the other hand, we will also make use of a straightforward extension of Lawler and Limic [26, Corollary 4.4.5] for \(d=2\) and Lawler and Limic [26, Corollary 4.3.3] for \(d\ge 3\), yielding the following Lemma.

Lemma 6

(Asymptotic expansion of the Green’s function differences) As \(|v|\rightarrow +\infty \), for all \(i,\,j\in [d]\)

The following technical combinatorial estimate, which is an immediate extension of a corollary of Dürre [12, Lemma 37], will be important when proving tightness of the family \((\Phi _\varepsilon )_\varepsilon \), in order to bound the rate of growth of the moments of \(\langle \Phi _\varepsilon ,f\rangle \) for some test function f:

Lemma 7

Let \(D \subset U\) such that \({{\,\textrm{dist}\,}}{(D,\partial U)} > 0\) and \(p\ge 2\). Then

where \(D_\varepsilon :=D/\varepsilon \cap \mathbb {Z}^d\).

4.2 Proof of Theorem 1

The strategy to prove the first theorem is based on decomposing the k-point functions into combinatorial expressions that essentially involve covariances of Gaussian random variables. This is made possible by our explicit knowledge of the Gaussian field which underlies \(\Phi _\varepsilon \). These covariances can be estimated using the transfer current matrix (Eq. (4.2)), whose scaling limit is well-known: it is the differential of the Laplacian Green’s function (cf. Kassel and Wu [23, Theorem 1]).

In order to compute the k-point function we will first make use of Feynman diagram techniques, of which we provide a brief exposition in the appendix at the end of the present paper. In particular we will make use of Theorem A.4.

Proof of Theorem 1

Let us compute the function

From Definition 6 of \(\Phi _\varepsilon \big (x^{(j)}_\varepsilon \big )\) we know that

with \(\mathcal E\) the canonical basis of \(\mathbb {R}^d\). In our case we have a product of k Wick products \(:\!\big (\nabla _{i_j}\Gamma \big (x^{(j)}_\varepsilon \big )\big )^2\!:\) (indexed by j, not \(i_j\)). So we can identify \(Y_j\) in Theorem A.4 with \(:\!\big (\nabla _{i_j}\Gamma \big (x^{(j)}_\varepsilon \big )\big )^2\!:\) for any \(j\in [k]\), with \(\xi _{j1}=\xi _{j2}=\nabla _{i_j}\Gamma \big (x^{(j)}_\varepsilon \big )\).

Let us denote \(\overline{x_{e_i}^{(j)}} :=\big (x^{(j)}, x^{(j)} + e_i\big ),\, i\in [d]\,,\, j \in [k]\) (we drop the dependence on \(\varepsilon \) to ease notation). Also, to make notation lighter, we fix the labels \(i_j\) for the moment and keep them implicit. We then define \(\mathcal {U} :=\big \{\overline{x^{(1)}},\overline{x^{(1)}},\dots ,\overline{x^{(k)}},\overline{x^{(k)}}\big \}\), where each copy is considered distinguishable. We also define \(FD_0\) as the set of complete Feynman diagrams on \(\mathcal U\) such that no edge joins \(\overline{x^{(i)}}\) with (the other copy of) \(\overline{x^{(i)}}\). That is, a typical edge b in a Feynman diagram \(\gamma \) in \(FD_0\) is of the form \(\big (\overline{x^{(j)}}, \overline{x^{(m)}}\big )\), with \(j\ne m\) and \(j,m\in [k]\). Thus by Definition A.1 we have

where \(E_\gamma \) are the edges of \(\gamma \) (note that the edges of \(\gamma \) connect edges of \(U_\varepsilon \)) and \((b^+)^-\) denotes the tail of the edge \(b^+\) (analogously for \(b^-\)). Lemma 3 and Eq. (4.2) yield

Now we would like to express Feynman diagrams in terms of permutations. We first note that any given \(\gamma \in FD_0\) cannot join \(\overline{x^{(i)}}\) with itself (neither the same nor the other copy of itself). So instead of considering permutations \(\sigma \in \textrm{Perm}(\mathcal U)\) we consider permutations \(\sigma '\in S_k\), where \(S_k\) is the group of permutations of the set [k]. Any \(\gamma \in FD_0\) is a permutation \(\sigma \in \textrm{Perm}(\mathcal U)\), but given the constraints just mentioned, we can think of it as a permutation \(\sigma '\in S_k\) without fixed points; that is, \(\sigma '\in S_k^0\). Thus

with \(c(\sigma ')\) a constant that takes into account the multiplicity of different permutations \(\sigma \) that give rise to the same \(\sigma '\), depending on its number of subcycles.
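As a sanity check on this multiplicity, the following sketch enumerates, for small k, all complete Feynman diagrams on the 2k labelled copies in which no edge joins the two copies of the same point, and compares their number with the sum over fixed-point-free permutations of [k] weighted by \(2^{n-1}\) for each cycle of length n (the weight derived in the paragraphs that follow). The helper names `perfect_matchings`, `count_fd0` and `weighted_derangement_count` are hypothetical and serve only this illustration.

```python
from itertools import permutations


def perfect_matchings(vertices):
    """Yield every perfect matching of the list `vertices` as a list of pairs."""
    if not vertices:
        yield []
        return
    first, rest = vertices[0], vertices[1:]
    for i, partner in enumerate(rest):
        remaining = rest[:i] + rest[i + 1:]
        for matching in perfect_matchings(remaining):
            yield [(first, partner)] + matching


def count_fd0(k):
    """Count complete diagrams on two copies of each of k points
    with no edge joining the two copies of the same point."""
    vertices = [(j, copy) for j in range(k) for copy in (0, 1)]
    return sum(
        1
        for matching in perfect_matchings(vertices)
        if all(a[0] != b[0] for a, b in matching)
    )


def cycle_lengths(perm):
    """Cycle lengths of a permutation given as a tuple (j -> perm[j])."""
    seen, lengths = set(), []
    for start in range(len(perm)):
        if start in seen:
            continue
        length, j = 0, start
        while j not in seen:
            seen.add(j)
            j = perm[j]
            length += 1
        lengths.append(length)
    return lengths


def weighted_derangement_count(k):
    """Sum over fixed-point-free permutations of prod 2^(n-1) over cycles."""
    total = 0
    for perm in permutations(range(k)):
        if any(perm[j] == j for j in range(k)):
            continue
        weight = 1
        for n in cycle_lengths(perm):
            weight *= 2 ** (n - 1)
        total += weight
    return total


for k in (2, 3, 4):
    print(k, count_fd0(k), weighted_derangement_count(k))
```

For \(k=2,3,4\) both counts equal 2, 8 and 60 respectively, in agreement with the factor \(2^{n-1}\) per cycle of length n.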

Let us decompose this expression further. In general \(\sigma '\) can be decomposed into q cycles. Since \(\sigma '\in S_k^0\) (in particular, it has no fixed points), there are at most \(\left\lfloor k/2\right\rfloor \) cycles in a given \(\sigma '\). Hence,

where the notation \(j\in \sigma _h'\) means that j belongs to the domain where \(\sigma _h'\) acts (non trivially). As for \(c(\sigma ')\), given a cycle \(\sigma '_i\), \(i\in [q]\), it is straightforward to see that there are \(2^{|\sigma '_i|-1}\) different Feynman diagrams in \(FD_0\) that give rise to \(\sigma _i'\), where \(|\sigma '_i|\) is the length of the orbit of \(\sigma '_i\). This comes from the fact that we have two choices for each element in the domain, but swapping them gives back the original Feynman diagram, so we obtain

Now we note that a cyclic decomposition of a permutation of the set [k] determines a partition \(\pi \in \Pi \left( [k]\right) \) (although not injectively). This way, a sum over the number of cycles q and over \(\sigma '\in S_k^0\) with q cycles can be written as a sum over partitions \(\pi \) with no singletons, and a sum over full cycles in each block B (that is, those permutations consisting of only one cycle). Hence

where we also made the switch between \(\prod _{B\in \pi }\) and \(\sum _{\sigma \in S_\textrm{cycl}(B)}\) by grouping by factors. Alternatively, we can express this average in terms of \(S_\textrm{cycl}^0(B)\), the set of full cycles without fixed points, as

Finally, we need to put back the subscript \(i_j\) in the elements \(\overline{x^{(j)}}\) and sum over \(i_1,\dots ,i_k\in \mathcal {E}\). Note that for any function \(f:\mathcal E^k \rightarrow \mathbb {R}\) we have

so that

and grouping the \(\eta (j)\)’s according to each block \(B\in \pi \) we get

Regarding the transfer matrix T, using Eq. (4.2) we can write the above expression as

obtaining the first result of the theorem. Finally, using Lemma 4 we obtain the second statement. \(\square \)

4.3 Proof of Corollary 1 and Proposition 1

Proof of Corollary 1

Recall that Definition 1 yields

From expressions (3.1) and (3.2) in Theorem 1 let us see that the equality follows factor by factor by using strong induction. For \(k=1\) it is trivially true since the mean of the field is 0. Now let us assume that it holds for \(n=1,\dots ,k-1\). From (2.1) we have that

Using again (2.1) on the expectation term and the induction hypothesis, after cancellations we get

Thus the proof follows by induction. \(\square \)
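The recursion behind this induction can be illustrated in the simplest one-variable case. The sketch below assumes that (2.1) is the usual moment-cumulant relation and uses its standard univariate form \(m_n=\sum _{j=1}^{n}\binom{n-1}{j-1}\kappa _j m_{n-j}\). The example variable \(Y=X^2-1\), with X a standard Gaussian (a single Wick square), is chosen for illustration only, and the function names are hypothetical.

```python
from math import comb, factorial


def double_factorial_odd(m):
    """(2m-1)!! = E[X^(2m)] for a standard Gaussian X (equals 1 for m = 0)."""
    out = 1
    for j in range(1, 2 * m, 2):
        out *= j
    return out


def moment_centered_square(n):
    """E[(X^2 - 1)^n], obtained from the binomial expansion."""
    return sum(
        comb(n, i) * (-1) ** (n - i) * double_factorial_odd(i)
        for i in range(n + 1)
    )


def cumulants_from_moments(moments):
    """Invert m_n = sum_{j=1}^n C(n-1, j-1) kappa_j m_{n-j} recursively.

    `moments[n]` is E[Y^n] with moments[0] = 1; the returned list has
    kappa[n] at index n (index 0 is unused and set to 0).
    """
    n_max = len(moments) - 1
    kappa = [0] * (n_max + 1)
    for n in range(1, n_max + 1):
        lower_order = sum(
            comb(n - 1, j - 1) * kappa[j] * moments[n - j] for j in range(1, n)
        )
        kappa[n] = moments[n] - lower_order
    return kappa


moments = [moment_centered_square(n) for n in range(7)]
kappa = cumulants_from_moments(moments)
for n in range(2, 7):
    print(n, kappa[n], 2 ** (n - 1) * factorial(n - 1))
```

In this toy case the n-th cumulant equals \(2^{n-1}(n-1)!\) for \(n\ge 2\), that is, the number of full cycles on n points weighted by \(2^{n-1}\), which mirrors the combinatorial structure found in the proof of Theorem 1.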

The equality in absolute value between our cumulants and those of Dürre [11, Theorem 1] allows us to adapt his proof and conclude that, in the case of \(d=2\), our field is conformally covariant with scale dimension 2.

Proof of Proposition 1

It is known [4, Proposition 1.9] that the continuum Green’s function \(G_U(\cdot , \cdot )\), defined in Eq. (2.4), is conformally invariant under a conformal mapping \(h:U\rightarrow U'\); that is, for any \(v\ne w\in U\),

Recalling expression (3.5) for the limiting cumulants we see that, for any integer \(\ell \ge 2\),

where the derivatives on the right hand side act on \(G_{U'}\circ (h_\varepsilon ,h_\varepsilon )\), not on \(G_{U'}\). From the cumulants expression we deduce that, for a given permutation \(\sigma \) and assignment \(\eta \), each point \(x^{(j)}\) will appear exactly twice in the arguments of the product of differences of \(G_{U'}\). Thus, using the chain rule and the Cauchy–Riemann equations, for a fixed \(\sigma \) we obtain an overall factor \(\prod _{j=1}^\ell \big |h'\big (x^{(j)}\big )\big |^2\) after summing over all \(\eta \). We then obtain

The result follows by plugging this expression into the moments. \(\square \)
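The factor \(\big |h'\big (x^{(j)}\big )\big |^2\) in the proof above comes from the following fact: by the Cauchy–Riemann equations the real Jacobian J of a holomorphic map h satisfies \(JJ^{T}=|h'|^2\,\textrm{Id}\), so summing the product of two first derivatives of h over the common differentiation direction produces \(|h'|^2\) times the identity. The sketch below checks this numerically with central differences; the map \(h(z)=z^2\), the evaluation point and the step size are assumptions made only for this example.

```python
def jacobian(h, z, step=1e-6):
    """Central-difference Jacobian of (x, y) -> (Re h, Im h) at z = x + iy."""
    d_x = (h(z + step) - h(z - step)) / (2 * step)
    d_y = (h(z + 1j * step) - h(z - 1j * step)) / (2 * step)
    return [[d_x.real, d_y.real], [d_x.imag, d_y.imag]]


def gram(J):
    """J J^T: entry (a, b) sums d_i h_a * d_i h_b over the direction i."""
    return [
        [sum(J[a][i] * J[b][i] for i in range(2)) for b in range(2)]
        for a in range(2)
    ]


def h(z):
    """Example holomorphic map (conformal away from the origin)."""
    return z * z


def h_prime(z):
    """Complex derivative of the example map."""
    return 2 * z


z0 = 0.7 + 0.3j
G = gram(jacobian(h, z0))
scale = abs(h_prime(z0)) ** 2
print(G, scale)
```

Up to discretization error, the matrix `G` is `scale` times the identity, with vanishing off-diagonal entries.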

4.4 Proof of Theorem 2

The proof of this Theorem will be split into two parts. First we will show that the family \((\Phi _\varepsilon )_{\varepsilon >0}\) is tight in some appropriate Besov space and then we will show convergence of finite-dimensional distributions \((\langle \Phi _{\varepsilon }, f_i\rangle )_{i\in [m]}\) and identify the limit.

Tightness

Proposition 2

Let \(U\subset \mathbb {R}^d\), \(d\ge 2\). Under the scaling \(\varepsilon ^{-d/2}\), the family \((\Phi _\varepsilon )_{\varepsilon >0}\) is tight in \(\mathcal B_{p,q}^{\alpha ,\textrm{loc}}(U)\) for any \(\alpha < -d/2\) and \(p,q \in [1,\infty ]\). The family is also tight in \(\mathcal C^\alpha _\textrm{loc}(U)\) for every \(\alpha < -d/2\).

Recall that the local Besov space \(\mathcal B_{p,q}^{\alpha ,\textrm{loc}}(U)\) was defined in Definition 8 and the local Hölder space \(\mathcal C^\alpha _\textrm{loc}(U)\) in Definition 9.

Finite-Dimensional Distributions

Proposition 3

Let \(U\subset \mathbb {R}^d\) and \(d\ge 2\). There exists a normalization constant \(\chi >0\) such that, for any set of functions \(\left\{ f_i\in L^2(U):\, i\in [m], \, m\in \mathbb {N}\right\} \), the random elements \(\langle \Phi _\varepsilon ,f_i\rangle \) converge in the following sense:

as \(\varepsilon \rightarrow 0\).

4.4.1 Proof of Proposition 2

We will use the tightness criterion given in Theorem 2.30 in Furlan and Mourrat [16]. First we need to introduce some notation. Let f and \((g^{(i)})_{1\le i<2^d}\) be compactly supported test functions of class \(\mathcal C^r_c(\mathbb {R}^d)\), \(r\in \mathbb {N}\). Let \(\Lambda _n :=\mathbb {Z}^d/2^n\), and let \(R>0\) be such that

Let \(K \subset U\) be compact and \(k \in \mathbb {N}\). We say that the pair (K, k) is adapted if

We say that the set \(\mathcal K\) is a spanning sequence if it can be written as

where \((K_n)\) is an increasing sequence of compact subsets of U such that \(\bigcup _n K_n = U\), and for every n the pair \((K_n, k_n)\) is adapted.

Theorem 3

(Tightness criterion, Furlan and Mourrat [16, Theorem 2.30]) Let \(f, (g^{(i)})_{1\le i<2^d}\) in \(\mathcal C^r_c(\mathbb {R}^d)\) with the support properties mentioned above, and fix \(p\in [1,\infty )\) and \(\alpha ,\beta \in \mathbb {R}\) satisfying \(\left| \alpha \right| , \left| \beta \right|< r, \alpha <\beta \). Let \((\Phi _m)_{m\in \mathbb {N}}\) be a family of random linear functionals on \(\mathcal {C}_c^r(U)\), and let \(\mathcal K\) be a spanning sequence. Assume that for every \((K, k) \in \mathcal K\), there exists a constant \(c = c(K,k) < \infty \) such that for every \(m \in \mathbb {N}\),

and

Then the family \((\Phi _m)_m\) is tight in \(\mathcal {B}^{\alpha ,\textrm{loc}}_{p,q}(U)\) for any \(q\in [1,\infty ]\). If moreover \(\alpha < \beta - d /p\), then the family is also tight in \(\mathcal C^\alpha _\textrm{loc}(U)\).

Proof of Proposition 2

We will consider an arbitrary scaling \(\varepsilon ^{\gamma }, \gamma \in \mathbb {R}\), and then choose an optimal one to make the fields tight. We define \(\widetilde{\Phi }_\varepsilon \) as the scaled version of \(\Phi _\varepsilon \), that is,

The family of random linear functionals \((\Phi _m)_{m\in \mathbb {N}}\) in Theorem 3 is to be identified with the fields \((\widetilde{\Phi }_\varepsilon )_{\varepsilon >0}\) taking for example \(\varepsilon \) decreasing to zero along a dyadic sequence. Now let us expand the expressions (4.8) and (4.9) in Theorem 3. To simplify notation, let us define \(f_{k,x}(\cdot ) :=f\big (2^k(\cdot -x)\big )\) for \(k\in \mathbb {N}\) and \(x\in \mathbb {R}^d\), and analogously for \(g^{(i)}\).

In the proof we will set \(p\in 2\mathbb {N}\). This will not affect the generality of our results because of the embedding of local Besov spaces described in Lemma 1. This means that we can read (4.8) and (4.9) forgetting the absolute value in the left-hand side. Let us rewrite the p-th moment of \(\big \langle \widetilde{\Phi }_\varepsilon ,f_{k,x}\big \rangle \) as

We will seek a more convenient expression to work with. If we allow ourselves to slightly abuse the notation for \(\widetilde{\Phi }_\varepsilon \), then we can express it in a piece-wise continuous fashion as

where \(S_a(y)\) is the square of side-length a centered at y. Under a change of variables, if we define \(U^\varepsilon :=U\cap \varepsilon \mathbb {Z}^d\) (mind the superscript and the definition which is different from that of \(U_\varepsilon \) in Sect. 2) then

This way, expression (4.10) now reads

Therefore the left-hand side of expression (4.8) from Theorem 3 is upper-bounded by

Analogously, expression (4.9) from Theorem 3 reads

Choose \(\mathcal K = (K_n,n)_{n\in \mathbb {N}}\) with

for some \(\delta > 0\) and R such that (4.7) holds. Let us first consider (4.12). Given that \({{\,\textrm{supp}\,}}{g^{(i)}\big (2^n(\cdot -x)\big )} \subset B_x(R2^{-n})\) we can restrict the sum over \(y_j\) to the set

We now bound (4.12) separately for the cases \(2^n \ge R\varepsilon ^{-1}\) and \(2^n < R\varepsilon ^{-1}\). If \(2^n \ge R\varepsilon ^{-1}\), we have

The sum over \(\Omega _{n,x}\) can be bounded by a sum over a finite number of points independent of n, since under the condition \(2^n \ge R\varepsilon ^{-1}\) the set \(\Omega _{n,x}\) has at most \(3^d\) points for any x, \(\varepsilon \) and n. Let us show that the sum of these expectations is uniformly bounded by a constant.

Looking at expression (3.3) we observe the following: any given partition \(\pi \in \Pi ([p])\) with no singletons can be expressed as \(\pi = \{B_1,\dots ,B_\ell \}\), with \(1\le \ell \le p\) such that \(\sum _{1\le i\le \ell } n_i = p\), with \(n_i :=\left| B_i\right| \). Then the cumulant corresponding to any given \(B_i\) (see Corollary 1) is proportional to a sum over \(\sigma \in S^0_\textrm{cycl}(B_i)\) and \(\eta :B_i\rightarrow \mathcal E\) of terms of the form

Using (4.4) we can bound this expression (up to a constant) by

where the minimum takes care of the case in which the set \(\{y_j:j\in B_i\}\) has repeated values, so that \(y_j=y_{\sigma (j)}\) for some \(j\in B_i\) and some \(\sigma \). So we have that

for some constant c(|B|) depending on B that accounts for the sum over \(\sigma \in S^0_\text {cycl}(B)\) and over \(\eta :B\rightarrow \mathcal E\). Since \(|y_i-y_j|\ge 1\) for any \(y_i,y_j\in \Omega _{n,x}\) and any n and x, (4.13) is bounded by a constant depending only on p, so that

since \(|\Omega _{n,x}| \le 3^d p\) for all n and x.

On the other hand, using the fact that

we obtain

which gives the bound

Observe that Theorem 3 allows the constant c to depend on \(K=K_n\), so the symbol \(\lesssim _{K_n}\) is not an issue. Then, for any \(\gamma \le 0\) we can bound the above expression by a constant multiple of \( 2^{-\gamma n}\). On the other hand, if \(2^n < R\varepsilon ^{-1}\), we have

We also note that

Using this and calling \(N:=\lfloor 2R2^{-n}\varepsilon ^{-1}\rfloor \), we obtain

Let us first study the behaviour of this expression for \(p=2\). By Corollary 3 we get

Let us now analyze \(\mathbb {E}\left[ \Phi _\varepsilon (y_1)\cdots \Phi _\varepsilon (y_p)\right] \) for an arbitrary p. In the same spirit as the case \(2^n \ge R \varepsilon ^{-1}\), by expression (4.13) we know that

Using Lemma 7 we get

by identifying \(\varepsilon \) with 1/N. So we arrive at

Now we use that

and since the sum takes place over partitions of the set [p] with no singletons, putting everything back into (3.3) we see that the term with the largest value of \(\left| \pi \right| \) will dominate for large N. For p even this happens when \(\pi \) is composed of blocks of two elements, in which case \(\left| \pi \right| = p/2\). Hence,

for p even. Finally,

If \(\gamma \ge -d/2\) then we can bound the above expression by a constant multiple of \( 2^{\frac{dn}{2}} 2^{-\left( \gamma +\frac{d}{2}\right) n} = 2^{-\gamma n}\). Otherwise, we cannot bound it uniformly in \(\varepsilon \), as the bound grows as \(\varepsilon \) approaches 0.

Now we need to obtain similar bounds for (4.8), which applied to our case takes the expression given in (4.11). For the case \(2^n \ge R\varepsilon ^{-1}\) we have

which is bounded by some \(c = c(K_n,n)\) whenever \(\gamma \ge -d\). If \(2^n < R\varepsilon ^{-1}\) instead we get

As before, only if \(\gamma \ge -{d}/{2}\) do we have the required bound.

Theorem 3 now implies that under the scaling \(\varepsilon ^{-d/2}\) the family \((\Phi _\varepsilon )_{\varepsilon >0}\) is tight in \(\mathcal B_{p,q}^{\alpha ,\textrm{loc}}(U)\) for any \(\alpha < -{d}/{2}\), any \(q\in [1,\infty ]\) and any even \(p \ge 2\). Using Lemma 1 this holds for any \(p \in [1,\infty ]\). This way, the family is also tight in \(\mathcal C^\alpha _\textrm{loc}(U)\) for every \(\alpha < -{d}/{2}\). \(\square \)

Remark 6

Observe that the scaling \(\varepsilon ^{-d}\) (the one used for the joint moments in Theorem 1) is outside the range of \(\gamma \) required for the tightness bounds, and therefore it will give a trivial scaling.

4.4.2 Proof of Proposition 3

The proof of this proposition will be divided into three parts. Firstly, we will determine the normalizing constant \(\chi \) and show that it is well-defined, in the sense that it is a strictly positive finite constant. Secondly, recalling Definition 1, we will demonstrate that the n-th cumulant \(\kappa _n(\langle \Phi _{\varepsilon }, f\rangle )\) of each random variable \(\langle \Phi _{\varepsilon }, f\rangle \), \(f\in L^2(U)\), vanishes in the limit \(\varepsilon \rightarrow 0\) for \(n\ge 3\). Finally we show that the second cumulant \(\kappa _2(\langle \Phi _{\varepsilon }, f\rangle , \langle \Phi _{\varepsilon }, g\rangle )\), \(g\in L^2(U)\), which is equal to the covariance, converges to the covariance of white noise. Once we have this, we can show that any collection \(\left( \langle \Phi _{\varepsilon }, f_1\rangle ,\dots ,\langle \Phi _{\varepsilon }, f_k\rangle \right) \), \(k\in \mathbb {N}\), converges to a Gaussian vector. To see this it suffices to take any linear combination \(f=\sum _{i\in [k]}\alpha _i f_i\), \(\alpha _i\in \mathbb {R}\) for all \(i\in [k]\) so that, by multilinearity, all the cumulants \(\kappa _n\left( \langle \Phi _{\varepsilon }, f\rangle \right) \) converge to those of a centered normal with variance \(\int _U f(x)^2\textrm{d}x\). The ideas are partially inspired by Dürre [12, Section 3.6].

For the rest of this Subsection we will work with test functions \(f\in \mathcal C_c^\infty (U)\). The lifting of the results to every \(f\in L^2(U)\) follows by a standard density argument [20, Chapter 1, Section 3]. Let us first derive a convenient representation of the action \(\langle \Phi _{\varepsilon }, f\rangle \) defined in Eq. (2.6). More precisely, defining \(\langle \Phi _{\varepsilon }, f\rangle _S\) as

for any test function \(f\in \mathcal C^{\infty }_c(U)\) we can write

where \(R_{\varepsilon }(f)\) denotes the remainder term that goes to 0 in \(L^2\), as we show in the next lemma.

Lemma 8

Let \(U\subset \mathbb {R}^d\), \(d\ge 2\). For any test function \(f\in \mathcal C^\infty _c(U)\) as \(\varepsilon \rightarrow 0\) it holds that

Proof

Observe that

where \(A_x :=\left\{ a\in U: \left\lfloor a/\varepsilon \right\rfloor =x\right\} \). It is easy to see that \(\left| A_x\right| \le \varepsilon ^d\), and given that the support of f is compact and strictly contained in U, for \(\varepsilon \) sufficiently small (depending on f), the distance between this support and the boundary \(\partial U\) will be larger than \(\sqrt{d}\varepsilon \). So there is no loss of generality if we assume that \(\left| A_x\right| = \varepsilon ^d\).

Now, we can rewrite (4.14) as

Let us call \(\mathcal I(x)\) the term

The set \(A_x\) is not a Euclidean ball, but it has bounded eccentricity (see Stein and Shakarchi [34, Corollary 1.7]). Therefore we can apply the Lebesgue differentiation theorem to claim that \(\mathcal I(x)\) will be of order o(1), where the rate of convergence possibly depends on x and f.

To see statement (4.14), we square the expression in (4.15) and take its expectation, obtaining

where we used the Cauchy–Schwarz inequality. By Corollary 3 the expectation on the right-hand side can be bounded as

while the second term in (4.16) is of order \(o(\varepsilon ^{-d})\). With the outer factor \(\varepsilon ^{2d}\) (4.16) goes to 0, as we wanted to show. \(\square \)
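The role of the Lebesgue differentiation theorem in this proof can be illustrated in one dimension. The sketch below assumes that \(\mathcal I(x)\) measures the difference between the average of f over the cell \(A_x\) and the value of f at the corner \(\varepsilon x\) of that cell; this reading of the term, the test function \(f(a)=e^{-a^2}\) (smooth but not compactly supported) and the names `cell_average_error` and `sup_error` are assumptions of the example rather than the setting of the lemma.

```python
from math import erf, exp, pi, sqrt


def cell_average_error(eps, x):
    """|average of f over [eps*x, eps*(x+1)] - f(eps*x)| for f(a) = exp(-a^2)."""
    a, b = eps * x, eps * (x + 1)
    # Exact integral of exp(-t^2) over [a, b] via the error function.
    integral = 0.5 * sqrt(pi) * (erf(b) - erf(a))
    return abs(integral / eps - exp(-a * a))


def sup_error(eps, radius=3.0):
    """Largest cell-average error over the cells meeting [-radius, radius]."""
    n = int(radius / eps)
    return max(cell_average_error(eps, x) for x in range(-n, n))


for eps in (0.1, 0.01, 0.001):
    print(eps, sup_error(eps))
```

For this smooth f the error is at most \(\varepsilon \max |f'|/2\), so it decays linearly in \(\varepsilon \); the lemma itself only needs the weaker statement that the error is o(1).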

Let us remark that, by the previous lemma, proving finite-dimensional convergence of \(\left\{ \frac{\varepsilon ^{-d/2}}{\sqrt{\chi }}\langle \Phi _\varepsilon ,f_p\rangle :\,p\in [m]\right\} \) will be equivalent to proving finite-dimensional convergence of \(\left\{ \frac{\varepsilon ^{d/2}}{\sqrt{\chi }}\langle \Phi _\varepsilon ,f_p\rangle _S:\,p\in [m]\right\} \).

4.4.3 Definition of \(\chi \)

Lemma 9

Let \(G_0(\cdot , \cdot )\) be the Green’s function on \(\mathbb {Z}^d\) defined in Sect. 2.1. The constant

is well-defined. In particular \(\chi \in (8,+\infty )\).

Proof

Let us define \(\kappa _0\) as

By translation invariance we notice that \(\kappa _0(v,w) = \kappa _0(0,w-v)\). Moreover, using Lemma 6, we have that as \(|v|\rightarrow +\infty \)

so that we can bound \(\chi \) from above by

For the lower bound, since \(\kappa _0(0,v) \ge 0 \) for all \( v\in \mathbb {Z}^d\) we can bound the sum from below by its term \(v = 0\). Choosing the differentiation directions \(i=j=1\) in (4.17) we get the term \(2\big (\nabla _1^{(1)}\nabla _1^{(2)}G_0(0,0)\big )^2\), which can be expressed as \(8\left( G_0(0,0)-G_0(e_1,0)\right) ^2\) using translation and rotation invariance of \(G_0\). Now, by definition

from which \(G_0(0,0) - G_0(e_1,0) = 1\). This implies that \(\chi \ge 8\), and the lemma follows. \(\square \)
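The identity \(G_0(0,0)-G_0(e_1,0)=1\) uses only the defining equation of the Green’s function for the normalized Laplacian together with lattice symmetry. As an illustration in a finite-volume analogue (an assumption of this sketch, not the infinite-volume setting of the lemma), the same identity can be verified exactly for the Green’s function of the simple random walk on \(\mathbb {Z}^2\) killed upon exiting a centred box, where the equation at the origin reads \(G(0,0)-\frac{1}{4}\sum _{e}G(e,0)=1\). The function name `green_column` and the box size are hypothetical.

```python
from fractions import Fraction


def green_column(half_side):
    """Exact column G(., 0) for the walk killed outside {-L..L}^2.

    Solves (I - P) g = delta_0 by Gauss-Jordan elimination over the rationals,
    where P is the transition matrix of the simple random walk restricted
    to the box.
    """
    L = half_side
    sites = [(x, y) for x in range(-L, L + 1) for y in range(-L, L + 1)]
    index = {s: i for i, s in enumerate(sites)}
    n = len(sites)
    A = [[Fraction(0)] * n for _ in range(n)]
    b = [Fraction(0)] * n
    for (x, y), i in index.items():
        A[i][i] = Fraction(1)
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            neighbour = (x + dx, y + dy)
            if neighbour in index:  # steps leaving the box are killed
                A[i][index[neighbour]] -= Fraction(1, 4)
    b[index[(0, 0)]] = Fraction(1)

    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        inv = 1 / A[col][col]
        A[col] = [entry * inv for entry in A[col]]
        b[col] *= inv
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [ar - factor * ac for ar, ac in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return {s: b[i] for s, i in index.items()}


g = green_column(2)
print(g[(0, 0)], g[(1, 0)], g[(0, 0)] - g[(1, 0)])
```

Since the box is invariant under the symmetries of the square, the four values \(G(\pm e_i,0)\) coincide, and the difference printed last equals 1 exactly.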

4.4.4 Vanishing Cumulants \(\kappa _n\) for \(n\ge 3\)

Lemma 10

For \(n\ge 3\), \(f\in \mathcal C_c^\infty (U)\), the cumulants \(\kappa _n \left( \varepsilon ^{d/2}\langle \Phi _\varepsilon ,f\rangle _S\right) \) go to 0 as \(\varepsilon \rightarrow 0\).

Proof

Recall that, by the multilinearity of cumulants, for \(n\ge 2\) the n-th cumulant satisfies

with \(D:={{\,\textrm{supp}\,}}f\), which is compact inside U. The goal now is to show that

as \(\varepsilon \rightarrow 0\).

First, we note from the cumulants expression (3.4) and bound (4.4) in Lemma 5 that, for any set V of (possibly repeated) points of \(D_\varepsilon \), with \(\left| V\right| = n\), we have

Using the above expression and Lemma 7, it is immediate to see that, if V has m distinct points with \(1\le m\le n\),

so that

We observe in particular that for \(d\ge 2\) this expression goes to 0 for any \(n\ge 3\). Furthermore, going back to (4.18), since f is uniformly bounded this shows that for \(n\ge 3\) the cumulants \(\kappa _n\) go to 0 as \(\varepsilon \rightarrow 0\), as we wanted to show. \(\square \)

4.4.5 Covariance Structure \(\kappa _2\)

Lemma 11

For any two functions \(f_p,f_q \in \mathcal C^{\infty }_c(U)\), with \(p,q\in [m]\) for \(m \in \mathbb {N}\), we have

Proof

Without loss of generality we define the compact set \(D\subset U\) as the intersection of the supports of \(f_p\) and \(f_q\). Then

From Theorem 1, we know the exact expression of \(\kappa \left( \Phi _\varepsilon (v),\Phi _\varepsilon (w)\right) \), given by

Recall the constant \(\kappa _0(v,w)\), defined in (4.17). We will approximate \(\kappa \left( \Phi _\varepsilon (v),\Phi _\varepsilon (w)\right) \) by \(\kappa _0(v,w)\) and then plug it in (4.19). In other words, we will approximate \(G_{U_\varepsilon }(\cdot ,\cdot )\) by \(G_0(\cdot ,\cdot )\). First we split Eq. (4.19) into two parts:

The second term above can be easily disregarded: remember that the cumulant for two random variables equals their covariance, so using Corollary 3 we get

which goes to 0 as \(\varepsilon \rightarrow 0\). For the first sum in (4.21), let us compute the error we are committing when replacing \(G_{U_\varepsilon }\) by \(G_0\). We notice that

justified by (4.4) in Lemma 5, combined with

for some \(c>0\), which is a consequence of Lemma 6. Recalling that \(|a^2-b^2|= |a-b||a+b|\) for any real numbers a, b, and setting

and

together with (4.3) from Lemma 5, we obtain

We can use this approximation in the first summand in (4.21) and obtain

since \(\left| \left\{ v,w \in D_\varepsilon :|v-w| < 1/\sqrt{\varepsilon }\right\} \right| = \mathcal O\big (\varepsilon ^{-\frac{3}{2}d}\big )\). Now, given that both \(f_p\) and \(f_q\) are in \(\mathcal C_c^\infty (U)\), they are also Lipschitz continuous. Hence

so that we can replace, up to a negligible error, \(f_q(\varepsilon w)\) by \(f_q(\varepsilon v)\) in (4.22), getting

Finally the translation invariance of \(\kappa _0\) implies

as claimed. \(\square \)
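The counting estimate \(\left| \left\{ v,w \in D_\varepsilon :|v-w| < 1/\sqrt{\varepsilon }\right\} \right| = \mathcal O\big (\varepsilon ^{-\frac{3}{2}d}\big )\) used in the proof above can be checked by brute force in \(d=2\): there are of order \(\varepsilon ^{-d}\) choices for v and, for each of them, of order \(\varepsilon ^{-d/2}\) lattice points w within distance \(\varepsilon ^{-1/2}\). The sketch below takes D to be the unit square, so that \(D_\varepsilon \) is an \(N\times N\) box with \(N=1/\varepsilon \); this choice of D and the function name `close_pairs` are assumptions of the example.

```python
from itertools import product


def close_pairs(N, d=2):
    """Number of ordered pairs (v, w) in {0,...,N-1}^d with |v - w| < sqrt(N)."""
    points = list(product(range(N), repeat=d))
    return sum(
        1
        for v in points
        for w in points
        if sum((a - b) ** 2 for a, b in zip(v, w)) < N
    )


ratios = {}
for N in (9, 16, 25):
    # With eps = 1/N and d = 2, eps^(-3d/2) equals N^3.
    ratios[N] = close_pairs(N) / N ** 3
    print(N, ratios[N])
```

The ratios stay bounded as N grows, as the estimate predicts.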

5 Discussion and Open Questions

In this paper we studied properties of the gradient squared of the discrete Gaussian free field on \(\mathbb {Z}^d\) such as k-point correlation functions, cumulants, conformal covariance in \(d=2\) and the scaling limit on a domain \(U\subset \mathbb {R}^d\).

One of the most striking results we have obtained is the “almost” permanental structure of our field, in contrast to the block determinantal structure of the height-one field of the Abelian sandpile studied in Dürre [11, 12], Kassel and Wu [23]. We plan to investigate implications of these structures further in the future.

The proof of tightness in Proposition 2 is based on the application of a criterion by Furlan and Mourrat [16] for local Hölder and Besov spaces. The proof requires a precise control of the summability of k-point functions, which is provided by Theorem 1 and explicit estimates for double derivatives of the Green’s function in a domain. Observe that the proof is based only on the growth of sums of moments at different points. Thus this technique can be generalized to prove tightness of other fields just by having information on these bounds, which is usually easier to obtain than the full expression of the joint moments.

Regarding the convergence of finite-dimensional distributions, Proposition 3, note that this strategy can be generalized to prove convergence to white noise of other families of fields, given the relatively mild conditions that we required of the field in question. Specifically, one only requires knowledge of bounds on sums of joint cumulants, the existence of an infinite volume measure, and the finiteness of the susceptibility constant. Note that similar scaling results were given for random fields on the lattice satisfying the FKG inequality in Newman [30].

Data Availability

We do not analyse or generate any datasets, because our work proceeds within a theoretical and mathematical approach.

References

Armstrong, S., Kuusi, T., Mourrat, J.-C.: Quantitative Stochastic Homogenization and Large-Scale Regularity. Springer, New York (2017)

Barlow, M.T., Slade, G.: Random Graphs, Phase Transitions, and the Gaussian Free Field. Springer, Cham (2019)

Bauerschmidt, R., Brydges, D.C., Slade, G.: Scaling limits and critical behaviour of the \(4\)-dimensional \(n\)-component \(|\varphi |^4\) spin model. J. Stat. Phys. 157(4), 692–742 (2014)

Berestycki, N.: Introduction to the Gaussian free field and Liouville quantum gravity. https://www.math.stonybrook.edu/~bishop/classes/math638.F20/Berestycki_GFF_LQG.pdf (2015). Accessed 30 June 2022

Biskup, M., Spohn, H.: Scaling limit for a class of gradient fields with nonconvex potentials. Ann. Probab. 39(1), 224–251 (2011)

Boutillier, C.: Pattern densities in non-frozen planar dimer models. Commun. Math. Phys. 271, 55–91 (2007)

Cotar, C., Deuschel, J.-D., Müller, S.: Strict convexity of the free energy for a class of non-convex gradient models. Commun. Math. Phys. 286(1), 359–376 (2009)

Daubechies, I.: Ten Lectures on Wavelets. CBMS-NSF Regional Conference Series in Applied Mathematics. Society for Industrial and Applied Mathematics (1992)

Ding, J., Lee, J.R., Peres, Y.: Cover times, blanket times, and majorizing measures. Ann. Math. 175(3), 1409–1471 (2012)

Ding, J., Dubedat, J., Gwynne, E.: Introduction to the Liouville quantum gravity metric. arXiv, Sept. (2021)

Dürre, F.M.: Conformal covariance of the Abelian sandpile height one field. Stoch. Process. Appl. 119(9), 2725–2743 (2009)

Dürre, F.M.: Self-organized critical phenomena. PhD thesis, Ludwig-Maximilians-Universität München, June (2009)

Eisenbaum, N., Kaspi, H.: On permanental processes. Stoch. Process. Appl. 119, 1401–1415 (2009)

Evans, L.C.: Partial Differential Equations. American Mathematical Society, Providence, RI (2010)

Funaki, T.: Stochastic Interface Models. Lectures on Probability Theory and Statistics, vol. 1869, pp. 103–274 (2005)

Furlan, M., Mourrat, J.-C.: A tightness criterion for random fields, with application to the Ising model. Electron. J. Probab. 22, 1–29 (2017)

Glimm, J., Jaffe, A.: Quantum Physics. Springer, New York (1987)

Hairer, M.: A theory of regularity structures. Invent. Math. 198(2), 269–504 (2014)

Hough, J., Krishnapur, M., Peres, Y., Virag, B.: Zeros of Gaussian Analytic Functions and Determinantal Point Processes, volume 51 of University Lecture Series. American Mathematical Society, Providence, RI (2009)

Janson, S.: Gaussian Hilbert Spaces. Cambridge Tracts in Mathematics. Cambridge University Press, Cambridge (1997)

Jerison, D., Levine, L., Sheffield, S.: Internal DLA and the Gaussian free field. Duke Math. J. 163(2), 267–308 (2014)

Kang, N.-G., Makarov, N.G.: Gaussian free field and conformal field theory. Astérisque 353, 1–136 (2013)

Kassel, A., Wu, W.: Transfer current and pattern fields in spanning trees. Probab. Theory Relat. Fields 163(1), 89–121 (2015)

Kenyon, R.: Dominos and the Gaussian Free Field. Ann. Probab. 29(3), 1128–1137 (2001)

Last, G., Penrose, M.: Lectures on the Poisson Process. IMS. Cambridge University Press, Cambridge (2017)

Lawler, G., Limic, V.: Random Walk: A Modern Introduction. Cambridge Studies in Advanced Mathematics. Cambridge University Press, Cambridge (2010)

McCullagh, P., Møller, J.: The permanental process. Adv. Appl. Probab. 38(4), 873–888 (2006)

Meyer, Y., Salinger, D.: Wavelets and Operators: Volume 1. Cambridge Studies in Advanced Mathematics. Cambridge University Press, Cambridge (1992)

Naddaf, A., Spencer, T.: On homogenization and scaling limit of some gradient perturbations of a massless free field. Commun. Math. Phys. 183, 55–84 (1997)

Newman, C.M.: Normal fluctuations and the FKG inequalities. Commun. Math. Phys. 74(2), 119–128 (1980)

Schramm, O., Sheffield, S.: Contour lines of the two-dimensional discrete Gaussian free field. Acta Math. 202(1), 21–137 (2009)

Sheffield, S.: Gaussian free fields for mathematicians. Probab. Theory Relat. Fields 139(3), 521–541 (2007)

Spitzer, F.: Principles of Random Walk. Graduate Texts in Mathematics. Springer, New York (1964)

Stein, E., Shakarchi, R.: Real Analysis: Measure Theory, Integration, and Hilbert Spaces. Princeton University Press, Princeton (2009)

Sznitman, A.S.: Topics in Occupation Times and Gaussian Free Fields. Zurich Lectures in Advanced Mathematics. European Mathematical Society, Zurich (2012)

Velenik, Y.: Localization and delocalization of random interfaces. Probab. Surv. 3, 112–169 (2006)

Wilson, D.: Xor-Ising loops and the Gaussian free field. arXiv:1102.3782 (2011)

Acknowledgements

We would like to thank Antal Járai for bringing this problem to our attention, sharing his ideas with the authors and for helpful discussions on the topic. We would like to thank the participants of the workshop “Challenges in Probability and Statistical Mechanics” at the Technion, Haifa, and Günter Last for interesting comments and feedback on this work.

Funding

AC initiated this work at TU Delft funded by Grant 613.009.102 of the Dutch Organisation for Scientific Research (NWO). RSH was supported by a STAR cluster visitor grant during a visit to TU Delft where part of this work was carried out. AR is supported by Klein-2 Grant OCENW.KLEIN.083 and did part of the work at TU Delft.

Additional information

Communicated by Yvan Velenik.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Appendix: Feynman Diagrams

When calculating expectations of products of Gaussian variables, one often obtains expressions consisting of pairwise combinations of the variables in question. It is then useful to define a graphical representation for these objects, the so-called Feynman diagrams. For a complete exposition on the subject we refer the reader to Janson [20, Chapters 1, 3].

Definition A.1

(Feynman diagrams, Janson [20, Definition 1.35]) A Feynman diagram \(\gamma \) of order \(n\ge 0\) and rank \(r=r(\gamma )\ge 0\) is a graph consisting of a set of n vertices and r edges without common endpoints. These are r disjoint pairs of vertices, each joined by an edge, and \(n-2r\) unpaired vertices. A Feynman diagram is said to be complete if \(r=n/2\) and incomplete if \(r<n/2\). Let \(FD_0\) denote the set of all complete Feynman diagrams. A Feynman diagram labeled by n random variables \(\xi _1,\dots ,\xi _n\) defined on the same probability space is a Feynman diagram of order n with vertices \(1,\dots ,n\), where \(\xi _i\) is thought of as being attached to vertex i. The value \(v(\gamma )\) of such a Feynman diagram \(\gamma \) with edges \((i_k,j_k)\), \(k=1,\dots ,r\) and unpaired vertices \(\{i:i\in A\}\) is given by

$$v(\gamma ) = \prod _{k=1}^{r} \mathbb {E}\left[ \xi _{i_k}\xi _{j_k}\right] \prod _{i\in A} \xi _i.$$

Observe that this value is in general a random variable, and it is deterministic whenever the diagram is complete.

This definition allows us to express the expectation of the product of n Gaussian random variables in terms of Feynman diagrams as follows:

Theorem A.1

[20, Theorem 1.36] Let \(\xi _1,\dots ,\xi _n\) be centered jointly normal random variables. Then

$$\mathbb {E}\left[ \xi _1\cdots \xi _n\right] = \sum _{\gamma } v(\gamma ),$$

where the sum takes place over all \(\gamma \in FD_0\) labeled by \(\xi _1,\dots ,\xi _n\).
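The sum over complete Feynman diagrams in Theorem A.1 can be evaluated mechanically for small n. The following is a minimal sketch, assuming the value of a complete diagram is the product of the covariances \(\mathbb {E}[\xi _i\xi _j]\) over its edges (Janson [20, Definition 1.35]); the function names `complete_diagrams` and `gaussian_moment` are hypothetical and not taken from the paper.

```python
def complete_diagrams(vertices):
    """Yield every complete Feynman diagram (perfect matching) on `vertices`.

    Each diagram is a list of edges (i, j). An odd number of vertices
    admits no complete diagram, so nothing is yielded in that case.
    """
    vertices = list(vertices)
    if not vertices:
        yield []  # the empty diagram on zero vertices
        return
    first, rest = vertices[0], vertices[1:]
    # Pair the first vertex with each possible partner, then recurse.
    for idx, partner in enumerate(rest):
        remaining = rest[:idx] + rest[idx + 1:]
        for diagram in complete_diagrams(remaining):
            yield [(first, partner)] + diagram


def gaussian_moment(cov):
    """Return E[xi_1 ... xi_n] for centered jointly normal variables.

    `cov` is the n x n covariance matrix as a list of lists.
    The moment is the sum of diagram values over all complete diagrams.
    """
    total = 0.0
    for diagram in complete_diagrams(range(len(cov))):
        value = 1.0
        for i, j in diagram:
            value *= cov[i][j]
        total += value
    return total


# Fourth moment of a standard normal variable: xi_1 = ... = xi_4 = X.
ones = [[1.0] * 4 for _ in range(4)]
print(gaussian_moment(ones))  # 3.0
```

With all four variables equal to a standard normal X, the three complete diagrams each have value 1, which recovers \(\mathbb {E}[X^4]=3\). The enumeration grows like \((n-1)!!\), so this sketch is only meant for illustration on small n.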

We can also decompose the Wick product of n Gaussian variables in terms of Feynman diagrams, as stated in the following theorem:

Theorem A.2

[20, Theorem 3.4] Let \(\xi _1,\dots ,\xi _n\) be centered jointly normal random variables. Then

$$:\!\xi _1\cdots \xi _n\!:\, = \sum _{\gamma } (-1)^{r(\gamma )}\, v(\gamma ),$$

where \(r(\gamma )\) is the rank of \(\gamma \) and the sum takes place over all Feynman diagrams \(\gamma \) labeled by \(\xi _1,\dots ,\xi _n\).
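As a small worked instance of Theorem A.2, take \(n=2\). Assuming, as in Janson [20, Definition 1.35], that the value of a diagram is the product of the covariances over its edges times the unpaired variables, there are exactly two Feynman diagrams: the one with no edge (rank 0, value \(\xi _1\xi _2\)) and the one with the single edge \((1,2)\) (rank 1, value \(\mathbb {E}[\xi _1\xi _2]\)). The signed sum over diagrams then reads

$$:\!\xi _1\xi _2\!:\, = \xi _1\xi _2 - \mathbb {E}\left[ \xi _1\xi _2\right] .$$

In particular, for \(\xi _1=\xi _2=\xi \) one gets \(:\!\xi ^2\!:\, = \xi ^2-\mathbb {E}[\xi ^2]\), that is, the Wick square of a centered normal variable is its centered square.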

An extension of Theorem A.1 now reads:

Theorem A.3

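[20, Theorem 3.8] Let \(\xi _1,\dots ,\xi _{n+m}\) be centered jointly normal random variables, with \(m,n\ge 0\). Then

$$\mathbb {E}\left[ :\!\xi _1\cdots \xi _n\!:\, \xi _{n+1}\cdots \xi _{n+m}\right] = \sum _{\gamma } v(\gamma ),$$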

where the sum takes place over all complete Feynman diagrams \(\gamma \) labeled by \(\xi _1,\dots ,\xi _{n+m}\) such that no edge joins any pair \(\xi _i\) and \(\xi _j\) with \(i<j\le n\).

A formula for an even more general case can be obtained as follows:

Theorem A.4

[20, Theorem 3.12] Let \(Y_i = :\!\xi _{i1}\cdots \xi _{i l_i}\!:\), where \(\left\{ \xi _{ij}\right\} _{\begin{array}{c} 1\le i\le k, \\ 1\le j\le l_i \end{array}}\) are centered jointly normal variables, with \(k\ge 0\) and \(l_1,\dots ,l_k\ge 0\). Then

$$\mathbb {E}\left[ Y_1\cdots Y_k\right] = \sum _{\gamma } v(\gamma ),$$

where we sum over all complete Feynman diagrams \(\gamma \) labeled by \(\left\{ \xi _{ij}\right\} _{ij}\) such that no edge joins two variables \(\xi _{i_1j_1}\) and \(\xi _{i_2j_2}\) with \(i_1=i_2\).

Remark 7

Theorem A.4 is more general than Theorem A.3 because \(:\!X\!:\, = X\) for any centered normal variable \(X\).

This theorem will be used for the proof of Theorem 1. In that case, each \(Y_i\) is the Wick product of two variables, namely \(Y_i = :\!\xi _{i1}\xi _{i2}\!:\), for all \(i=1,\dots ,k\). In this specific case it will in fact hold that \(\xi _{i1} = \xi _{i2}\) for all i, but we keep separate notation for each variable in order to keep track of every possible Feynman diagram that can be built from the variables \(Y_i\). The value of a complete Feynman diagram \(\gamma \) in this setting is given by the expression

$$v(\gamma ) = \prod _{s=1}^{k} \mathbb {E}\left[ \xi _{\alpha _s m_{\alpha _s}}\, \xi _{\beta _s m_{\beta _s}}\right] ,$$

with \(\alpha _s,\beta _s\in [k]\), \(\alpha _s \ne \beta _s\) for all s, and \(m_{\alpha _s}, m_{\beta _s} \in \{1,2\}\).
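To illustrate the smallest non-trivial case, take \(k=2\) with \(Y_1 = :\!\xi _{11}\xi _{12}\!:\) and \(Y_2 = :\!\xi _{21}\xi _{22}\!:\). Assuming the value of a complete diagram is the product of the covariances over its edges, only two complete Feynman diagrams avoid edges between variables with the same first index, so Theorem A.4 gives

$$\mathbb {E}\left[ Y_1Y_2\right] = \mathbb {E}\left[ \xi _{11}\xi _{21}\right] \mathbb {E}\left[ \xi _{12}\xi _{22}\right] + \mathbb {E}\left[ \xi _{11}\xi _{22}\right] \mathbb {E}\left[ \xi _{12}\xi _{21}\right] .$$

When \(\xi _{i1}=\xi _{i2}=\xi _i\) for \(i=1,2\), both terms coincide and the right-hand side reduces to \(2\,\mathbb {E}[\xi _1\xi _2]^2\).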

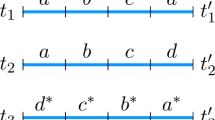

Let us discuss a concrete example for the case \(k=3\). One example is \(\gamma =(V,E)\) with two nodes per vertex, \(V=\{x_i, \tilde{x}_i: i=1,2,3\}\), and the set of undirected edges \(E=\{(x_1, {x}_2), (\tilde{x}_1, x_3), (\tilde{x}_2,\tilde{x}_3)\}\), which is depicted in Fig. 1. In total there are 8 complete Feynman diagrams in this case. They are obtained by considering all pairings of the nodes \(\{x_i,\tilde{x}_i: i= 1,2,3\}\) and discarding every pairing that contains an edge of the form \((x_i,\tilde{x}_i)\) for some \(i=1,2,3\).
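The count of 8 diagrams for \(k=3\) can be checked by brute-force enumeration. The sketch below is an illustration only: it encodes the node \(x_i\) as the pair `(i, 1)` and \(\tilde{x}_i\) as `(i, 2)`, and the helper names `complete_diagrams` and `wick_square_diagrams` are hypothetical.

```python
def complete_diagrams(nodes):
    """Yield every perfect matching of `nodes` as a list of edges."""
    nodes = list(nodes)
    if not nodes:
        yield []
        return
    first, rest = nodes[0], nodes[1:]
    for idx, partner in enumerate(rest):
        remaining = rest[:idx] + rest[idx + 1:]
        for diagram in complete_diagrams(remaining):
            yield [(first, partner)] + diagram


def wick_square_diagrams(k):
    """Complete diagrams on nodes (i, m), i = 1..k, m in {1, 2},
    with no edge joining the two nodes of the same vertex i."""
    nodes = [(i, m) for i in range(1, k + 1) for m in (1, 2)]
    return [
        diagram
        for diagram in complete_diagrams(nodes)
        if all(a[0] != b[0] for a, b in diagram)
    ]


diagrams = wick_square_diagrams(3)
print(len(diagrams))  # 8

# The diagram with edges (x_1, x_2), (x~_1, x_3), (x~_2, x~_3) from the text:
example = [((1, 1), (2, 1)), ((1, 2), (3, 1)), ((2, 2), (3, 2))]
print(example in diagrams)  # True
```

Of the 15 perfect matchings on six nodes, inclusion-exclusion over the three forbidden edges gives \(15 - 9 + 3 - 1 = 8\), in agreement with the enumeration.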

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cipriani, A., Hazra, R.S., Rapoport, A. et al. Properties of the Gradient Squared of the Discrete Gaussian Free Field. J Stat Phys 190, 171 (2023). https://doi.org/10.1007/s10955-023-03187-3

DOI: https://doi.org/10.1007/s10955-023-03187-3

Keywords

- Scaling limit

- Gaussian free field

- Abelian sandpile model

- Cumulants

- K-point correlation functions

- Fock spaces

- Besov–Hölder spaces

- Point processes