Abstract

We show that the derivative of the (measure) transfer operator with respect to the parameter of the map is a divergence. Then, for physical measures of discrete-time hyperbolic chaotic systems, we derive an equivariant divergence formula for the unstable perturbation of transfer operators along unstable manifolds. This formula, and hence the linear response (the parameter-derivative of physical measures), can be sampled by recursively computing only 2u vectors on one orbit, where u is the unstable dimension. The numerical implementation of this formula in [46] is cursed neither by dimensionality nor by the sensitive dependence on initial conditions.

1 Introduction

1.1 Literature Review

The transfer operator, also known as the Ruelle–Perron–Frobenius operator, describes how the density of a measure is evolved by a map, and is frequently used to study the behavior of dynamical systems. The transfer operator was historically used for expanding maps because it makes the density smoother. The anisotropic Banach spaces of Gouëzel, Liverani, and Baladi extend the operator theory to hyperbolic maps, which have both expanding and contracting directions [6, 30, 31]. The physical measure, or the SRB measure, is the eigenvector of the transfer operator with eigenvalue 1, so it encodes the long-time statistics of the system; it is typically singular with respect to the Lebesgue measure [12, 50, 54].

The derivative of the transfer operator with respect to system parameters is useful in several settings, especially in linear response, which is the derivative of the physical measure [5, 19, 26, 55]. The operator formula for the linear response (see Sect. 4.1) is particularly attractive in numerical computations because it is not affected by the exponential growth of unstable vectors. Since the physical measure is typically singular, researchers need to use finite-elements to approximate and mollify the measure.

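However, when the phase space is high-dimensional, the cost of approximating a measure by finite elements is cursed by dimensionality (see Appendix A for a cost estimation). Given a \(C^2\) objective function \(\Phi \), the more efficient way to sample the physical measure \(\rho \) of f is to ‘sample by an orbit’, that is,

\[ \rho (\Phi ) := \int \Phi \, d\rho = \lim _{K\rightarrow \infty } \frac{1}{K} \sum _{k=1}^K \Phi (x_k), \quad \text {where } x_{k+1} = f(x_k) \text { and } x_1 \text { is a typical initial condition.} \]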

The main cost of this approach is applying the recursive relation \(x_{k+1}=f(x_k)\) to compute an orbit \(\{x_k\}_{k=1}^K\), where each \(x_k\) is essentially an M-dimensional vector, M being the dimension of the system.
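As a minimal sketch of 'sampling by an orbit', the following Python snippet time-averages an observable along a single orbit. The map is a hypothetical toy chosen only for illustration (a perturbed cat map on the 2-torus, so M = 2); it is not taken from this paper or from [46]. Because this toy map is area-preserving, we assume its physical measure is the Lebesgue measure, so the exact average of \(\cos (2\pi x)\) is 0. The names `EPS`, `f`, `orbit_average` and the burn-in length are assumptions made for this example.

```python
import math

EPS = 0.05  # hypothetical perturbation size, for illustration only


def f(x, y):
    """Toy area-preserving map on the 2-torus (a perturbed cat map)."""
    s = EPS * math.sin(2.0 * math.pi * x)
    return (2.0 * x + y + s) % 1.0, (x + y + s) % 1.0


def orbit_average(phi, x0, y0, n_steps, n_burn=1000):
    """Approximate rho(phi) by (1/K) * sum_k phi(x_k) on one orbit."""
    x, y = x0, y0
    for _ in range(n_burn):      # discard the transient part of the orbit
        x, y = f(x, y)
    total = 0.0
    for _ in range(n_steps):     # accumulate the observable along the orbit
        total += phi(x, y)
        x, y = f(x, y)
    return total / n_steps


def phi(x, y):
    """Observable whose Lebesgue average on the torus is zero."""
    return math.cos(2.0 * math.pi * x)


avg = orbit_average(phi, 0.1234, 0.5678, 200_000)
print("orbit average of phi:", avg)
```

The memory cost is one M-dimensional state regardless of K, which is the point of orbit sampling; the statistical error is expected to decay roughly like \(K^{-1/2}\).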

It is natural to ask whether the derivative of the transfer operator and hence the linear response can also be sampled by an orbit, that is, by a formula which involves computing several vectors recursively along a single orbit. This is impossible for the entire derivative operator, which is typically singular for physical measures, and is not pointwise defined. Similarly, the two most well-known linear response formulas, the ensemble formula and the operator formula, involve distributional derivatives, which are not well-defined pointwise.

However, we typically only need the transfer operator to handle the unstable perturbations, which turns out to be well-defined pointwise. There were pioneering works concerning pointwise formulas of the unstable part of the linear response, though they were not very clearly related to the perturbation of transfer operators within unstable manifolds, which acts on conditional measures. Ruelle mentioned how to derive a pointwise defined formula for the unstable divergence, but no explicit formulas were given [56, Lemma 2]. Gouëzel and Liverani gave an explicit pointwise formula via a cohomologous potential function, but the differentiation is in the stable subspace, which typically has very high dimension [31, Proposition 8.1]. No recursive formulas were given, and even the potential formulas are likely to involve the evolution of a lot of vectors. It is also difficult to obtain coordinate-independent formulas.

In this paper, we derive a new divergence formula for the unstable perturbation of unstable transfer operators on physical measures. We then give a new interpretation of the unstable part of the linear response by such unstable transfer operators. We think this interpretation has a more direct physical meaning compared to previous works on linear responses, which typically involve distributional derivatives or require moving to the sequence space. Also, our formula is coordinate independent: it only requires a basis of the unstable subspace, but does not specify the individual vectors of a basis. More importantly, our formulas are recursive: to evaluate the formula, we only need to track the evolution of 2u vectors or covectors. Here u is the dimension of the unstable subspace; we also use superscript u to denote quantities related to the unstable subspace. This number of recursive relations is likely to be minimal.

Our work bridges two previously competing approaches for computing the linear response, the orbit/ensemble approach and the measure/operator approach. That is, we should add up the orbit change caused by stable/shadowing perturbations and the measure change caused by unstable perturbations; both changes are sampled by an orbit. Our work may be viewed as the generalization of the well-known MCMC (Markov Chain Monte Carlo) method for sampling derivatives of transfer operators and physical measures [13, 43].

We compare the current paper with our other papers on related topics. The current paper is inspired by our recent numerical algorithm, the fast (forward) response algorithm, for computing the linear response on a sample orbit [44]. The unstable part of the fast forward response algorithm runs forward on an orbit, and the cost is linear in the number of parameters and observables. In comparison, the current paper is the adjoint theory of sampling linear response on an orbit. The numerical implementation of the main results in this paper is in [46]. The cost of the adjoint method for the unstable part of the linear response is independent of the number of parameters \(\gamma \) and observables \(\Phi \). In other words, if we have several X’s, where each X gives the perturbation of f corresponding to changing each \(\gamma \), then the cost to compute the linear responses of all X’s is almost the same as that of only one X. Moreover, this paper generalizes the previous results to derivative operators using a new and intuitive proof by transfer operators, which is useful for the study of transient perturbations. Finally, the papers [47,48,49] mainly concern the shadowing part of the linear response, whereas the current paper concerns the unstable part, though it also uses shadowing as a tool.

1.2 Main Results

As a warm up, in Sect. 2, we start with the easier case where we are given a measure with a smooth density function. Lemma 1 gives a divergence formula for \(\delta {{\tilde{L}}}\), which is the derivative of the transfer operator \({{\tilde{L}}}\) with respect to the parameter \(\gamma \) at \(\gamma =0\). Here \({{\tilde{L}}}\) is the transfer operator of \({{\tilde{f}}}\), which is a one-parameter family of maps parameterized by \(\gamma \), and \(\gamma \mapsto {{\tilde{f}}}\) is \(C^1\) from \({\mathbb {R}}\) to \(C^3\) maps on the background manifold \({\mathcal {M}}\). This is just the mass continuity equation on Riemannian manifolds [38]. It can be proved simply by an integration by parts, but we shall also give a pointwise proof, which can be generalized to the more complicated scenario later on.

Lemma 1

(mass continuity equation) For a measure with a fixed smooth density h and any \({{\tilde{f}}}\) such that \(\delta {{\tilde{f}}}:=\left. \frac{\partial {{\tilde{f}}}}{\partial \gamma }\right| _{\gamma =0} = X\), wherever \(h>0\) we have

\[ \frac{\delta {{\tilde{L}}} h}{h} = - \frac{{{\,\textrm{div}\,}}(hX)}{h} . \]

In the simple case above, the density h is a priori known, and it is not related to a dynamical system. But it prepares us for the more interesting case, when the measure is a physical measure of a hyperbolic attractor. Physical measures are defined by typical orbits of the underlying dynamical system. Hence, it is natural to ask whether the perturbation of the physical measure can also be expressed by recursive relations on the same orbit that we use to sample the physical measure.

Section 3 proves our main result, Theorem 2. Let \({{\tilde{L}}}^u\) be the transfer operator of \(\xi {{\tilde{f}}}\), which is a map such that \(\delta (\xi {{\tilde{f}}})=X^u\) (see Sect. 3.1 for detailed definitions), then Theorem 2 is a new formula for \(\delta {{\tilde{L}}}^u\) on the conditional density \(\sigma \) on unstable manifolds. Here \(X^u\) is the unstable part of X, \(\sigma \) is the density of the conditional measure of the physical measure \(\rho \) for a local unstable foliation. Notice that \(\sigma \) and \(\delta {{\tilde{L}}}^u \sigma \) may differ by a constant factor, depending on the choice of the neighborhood of the local foliation, so they can only be defined locally; but the ratio \(\frac{\delta {{\tilde{L}}}^u \sigma }{\sigma }\) does not depend on that choice, since the constant factors are cancelled, so it is globally well-defined.

The main significance is that this formula is defined pointwise and it involves only 2u recursive relations on an orbit, where u is the unstable dimension. This number should be close to the fewest possible, since we need at least u modes to capture the most significant perturbative behaviors of a chaotic system, that is, there are u many unstable directions.

Theorem 2

(equivariant divergence formula) Let \(\rho \) be the physical measure on a mixing axiom A attractor of a \(C^3\) diffeomorphism f, and let \(\sigma \) be the density of the conditional measure of \(\rho \). Denote \(\delta {{\tilde{f}}}:=\left. \frac{\partial {{\tilde{f}}}}{\partial \gamma }\right| _{\gamma =0} = X\); then

Here \(\text {div}^v\) is the derivative tensor contracted by the unstable hypercube and its co-hypercube in the adjoint unstable subspace, so \(\text {div}^v f_*\) is a covector (see Sect. 3.1). \({\mathcal {S}}\) is the adjoint shadowing operator, that is, \(\omega := {\mathcal {S}}(\text {div}^vf_*) \) is the only bounded covector field such that \(\omega = f^*\omega + \text {div}^v f_*\). Note that \(X^u\) is not differentiable, so \(\text {div}^u_\sigma X^u\), the unstable submanifold divergence under conditional measure \(\sigma \), is defined via the equivalence in the smooth situation in Lemma 1. Hence, here we cannot directly use Lemma 1, since it involves exploding intermediate quantities.

Section 4 gives a new interpretation of the unstable part of the linear response by \(\delta {{\tilde{L}}}^u\), and shows how to use the equivariant divergence formula to compute the linear response recursively on an orbit. We do not prove the linear response; our focus is on sampling it on an orbit. In high-dimensional phase spaces, sampling by an orbit is much more efficient than finite element methods, whose cost is estimated in Appendix A on a simple example. More specifically, using the following formula, the linear response is expressed by recursively computing about 2u many M-dimensional vectors on an orbit. Here M is the dimension of the system. Appendix B gives another proof of this formula using the so-called fast forward formula from a previous paper [44].

Let \(\rho \) and \({{\tilde{\rho }}}\) denote the SRB measures of f and \({{\tilde{f}}}\circ f\), and let \(\Phi :{\mathcal {M}}\rightarrow {\mathbb {R}}\) be a \(C^2\) observable function. First recall that the linear response has the expression [55]

Here \(X_{-n}(x):=X(f^{-n}x)\), x being the dummy variable in the above integration, \((f^{n}_{*} X_{-n})(x)\) is a vector at x, and \(f^{n}_{*} X_{-n} (\Phi )=f^{n}_{*} X_{-n} \cdot {{\,\textrm{grad}\,}}\Phi \). Here S.C. and U.C. are the so-called shadowing and unstable contribution,

Here \(X^u\) and \(X^s\) are the unstable and stable part of X. We may also decompose into stable and unstable contributions, \(S.C.'\) and \(U.C.'\),

The shadowing and stable contributions are very similar in terms of numerics. Theorem 2 gives a new formula of U.C. and hence the linear response.

Proposition 3

(fast adjoint formula for linear response) The shadowing contribution and the unstable contribution of the linear response can be expressed by integrations of quantities from the unperturbed dynamics with respect to \(\rho \),

Here \(\sigma \) is the density of the conditional measure of \(\rho \), and \(\frac{\delta {{\tilde{L}}}^u \sigma }{\sigma }\) is given by Theorem 2.

We explain how to compute the above formula on an orbit to overcome the curse of dimensionality. For a finite W, the size of the integrand is about \(\sqrt{W}\), and we can sample the physical measure \(\rho \) by an orbit. Then we need to compute a basis of the unstable subspace. This can be achieved by pushing forward u many randomly initialized vectors while performing occasional renormalizations, on the same orbit we used to sample \(\rho \). Similarly, we can compute a basis of the adjoint unstable subspace. With these two bases we can compute the equivariant divergence \(\text {div}^v\). The adjoint unstable subspace is also the main data required by the nonintrusive shadowing algorithm for computing \({\mathcal {S}}\). Note that we only need data obtained at \(\gamma =0\), and knowing the expression of \(f|_{\gamma =0}\) is sufficient for obtaining these data.
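The push-forward step described above can be sketched as follows. This is only an illustration under stated assumptions, not the algorithm of [46]: the map is a hypothetical perturbed cat map on the 2-torus with u = 1, the initial vector is fixed rather than random, and we renormalize at every step instead of occasionally. For u > 1 one would typically replace the normalization by a QR (Gram-Schmidt) step. The names `EPS`, `f`, `jacobian` and `unstable_direction` are made up for this example.

```python
import math

EPS = 0.05  # hypothetical perturbation size, for illustration only


def f(x, y):
    """Toy map on the 2-torus (a perturbed cat map), unstable dimension u = 1."""
    s = EPS * math.sin(2.0 * math.pi * x)
    return (2.0 * x + y + s) % 1.0, (x + y + s) % 1.0


def jacobian(x, y):
    """Jacobian matrix f_* of the toy map at the point (x, y)."""
    a = 2.0 * math.pi * EPS * math.cos(2.0 * math.pi * x)
    return ((2.0 + a, 1.0), (1.0 + a, 1.0))


def unstable_direction(x0, y0, n_steps):
    """Push one tangent vector forward along the orbit, renormalizing each step.

    Returns the final unit vector (approximately spanning V^u at the last
    point) and the average logarithmic growth rate per step.
    """
    x, y = x0, y0
    e = (1.0, 0.0)  # arbitrary initial vector; random in practice
    log_growth = 0.0
    for _ in range(n_steps):
        J = jacobian(x, y)
        e = (J[0][0] * e[0] + J[0][1] * e[1],
             J[1][0] * e[0] + J[1][1] * e[1])   # push forward: f_* e
        norm = math.hypot(e[0], e[1])
        e = (e[0] / norm, e[1] / norm)           # renormalize to avoid overflow
        log_growth += math.log(norm)
        x, y = f(x, y)                           # advance the base point
    return e, log_growth / n_steps


e, lyap = unstable_direction(0.1234, 0.5678, 20_000)
print("unit unstable vector:", e, "average log growth:", lyap)
```

A basis of the adjoint unstable subspace could be obtained analogously by applying the transposed Jacobians to covectors in the reverse direction along the same orbit; that part is omitted here.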

The numerical implementation of our formulas, including a detailed algorithm and numerical examples, is in [46]; the so-called fast adjoint response algorithm is very efficient in high-dimensional phase spaces; it is also robust to stochastic noise and to some nonuniform hyperbolicity. Its cost is cursed neither by dimensionality nor by the sensitive dependence on initial conditions, and the cost is almost independent of the number of parameters.

2 Divergence Formula of Derivative Operator

We first assume that the measure on which we apply the transfer operator is smooth (meaning \(C^\infty \)) and a priori known, then we give a divergence formula for the perturbation of the transfer operator. The techniques and notations we use for the pointwise proof of this simple case shall prepare us for the proof of Theorem 2. The main difference from Theorem 2 is that here the measure is not given by an orbit, so we cannot sample its perturbation by an orbit.

2.1 A Functional Proof

We denote the background M-dimensional Riemannian manifold by \({\mathcal {M}}\). In this paper, we use \({{\tilde{\cdot }}}\) to denote perturbative quantities. We think of perturbative maps, such as \({{\tilde{f}}}\) and \({{\tilde{L}}}\), as being smoothly parameterized by a small real number \(\gamma \), whose values at \(\gamma =0\) are the identity. Let \({{\tilde{f}}}\) be the perturbation appended to f, which is also a smooth diffeomorphism on \({\mathcal {M}}\). More specifically, we assume the map \(\gamma \mapsto {{\tilde{f}}}\) is \(C^1\) from \({\mathbb {R}}\) to the family of \(C^3\) diffeomorphisms on \({\mathcal {M}}\). The default value of \(\gamma \) is zero, and \(\gamma \) may vary in a neighborhood of zero in the real numbers. For a fixed measure with a smooth density function h, the transfer operator \({{\tilde{L}}}\) gives the new density function after pushing forward by \({{\tilde{f}}}\). More specifically, \({{\tilde{L}}}\) of \({{\tilde{f}}}\) is defined by the duality

\[ \int \Phi (x)\, ({{\tilde{L}}} h)(x)\, dx = \int \Phi ({{\tilde{f}}} x)\, h(x)\, dx , \tag{1} \]

where \(\Phi :{\mathcal {M}}\rightarrow {\mathbb {R}}\) is a \(C^2\) observable function with compact support. In this paper, all integrals are taken with respect to the Lebesgue measure, except when another measure is explicitly mentioned. Note that \({{\tilde{L}}}\) operates on the entire density function, and \({{\tilde{L}}}h(x):=({{\tilde{L}}}h)(x)\). We shall refer to h as the ‘source’ of Lh.

We are interested in how perturbations in \(\gamma \) would affect \({{\tilde{L}}}\). Define

\[ \delta (\cdot ) := \left. \frac{\partial (\cdot )}{\partial \gamma }\right| _{\gamma =0} . \]

We emphasize that the base value of \(\gamma \) is zero, and all derivatives with respect to \(\gamma \) in this paper are evaluated at \(\gamma =0\). Define the perturbation vector field X as

\[ X := \delta {{\tilde{f}}} . \]

Note that \({\tilde{f}}\) depends on \(\gamma \) and \(\delta \) is the partial derivative. Since we only consider the derivatives at \(\gamma =0\), we can freely assume the value of \(\partial {{\tilde{f}}}/\partial \gamma \) when \(\gamma \ne 0\), so long as it is smooth and its value at \(\gamma =0\) is X. Without loss of generality, we may assume that \({{\tilde{f}}}\) is the flow of X. If so, and regarding \(\gamma \) as ‘time’, then Lemma 1 is exactly the mass continuity equation on Riemannian manifolds.

We define \({{\,\textrm{div}\,}}_{h}\) as the divergence under the measure with density h,

\[ {{\,\textrm{div}\,}}_{h} X := \frac{{{\,\textrm{div}\,}}(hX)}{h} = {{\,\textrm{div}\,}}X + \frac{X(h)}{h} , \]

where \(X(\cdot )\) denotes differentiating a function in the direction of X, that is, \(X(h)={{\,\textrm{grad}\,}}h \cdot X\). For two densities \(h'\) and \(h''\), if \(h'= Ch''\) for a constant \(C>0\), then \({{\,\textrm{div}\,}}_{h'} = {{\,\textrm{div}\,}}_{h''}\). Then we prove

Lemma 1

(mass continuity equation) For a measure with a fixed smooth density h and any \({{\tilde{f}}}\) such that \(\delta {{\tilde{f}}}:=\left. \frac{\partial {{\tilde{f}}}}{\partial \gamma }\right| _{\gamma =0} = X\), wherever \(h>0\) we have

\[ \frac{\delta {{\tilde{L}}} h}{h} = - {{\,\textrm{div}\,}}_{h} X = - \frac{{{\,\textrm{div}\,}}(hX)}{h} . \]

Remark

When \(h=0\) on an open subset, it typically suffices to use the fact that \(\delta {{\tilde{L}}}h =0\) on that open set.

Proof

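Differentiate Eq. (1). Notice that at \(\gamma =0\), \(\delta (\Phi \circ {{\tilde{f}}}) = \delta {{\tilde{f}}}(\Phi ) = X(\Phi )\). Then we have

\[ \int \Phi \, \delta {{\tilde{L}}} h \, dx = \int X(\Phi )\, h \, dx . \tag{2} \]

We call the left-hand side the operator formula, and the right-hand side the Koopman formula for the perturbation.

Since \(\Phi \) is compactly supported, there is no boundary term in the integration by parts, and we have

\[ \int \Phi \, \delta {{\tilde{L}}} h \, dx = \int X(\Phi )\, h \, dx = - \int \Phi \, \text {div}(hX) \, dx . \]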

Since this holds for any \(\Phi \), it must be \(\delta {{\tilde{L}}}h = - \text {div}(hX)\). \(\square \)
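The functional argument above can be checked numerically in one dimension. The sketch below is only an illustration under assumptions of our own choosing: \({\mathcal {M}}={\mathbb {R}}\), an unnormalized Gaussian density h, \(X=\sin \), the perturbation \({{\tilde{f}}}(x)=x+\gamma X(x)\) (which agrees with the flow of X to first order in \(\gamma \)), and a rapidly decaying \(\Phi \) standing in for a compactly supported one. It compares a finite-difference derivative of the Koopman side \(\int \Phi ({{\tilde{f}}}x)h(x)dx\) with \(-\int \Phi \, \text {div}(hX)dx\).

```python
import math


def h(x):
    """Smooth (unnormalized) density, chosen for illustration."""
    return math.exp(-0.5 * x * x)


def X(x):
    """Perturbation vector field, chosen for illustration."""
    return math.sin(x)


def phi(x):
    """Rapidly decaying observable, standing in for compact support."""
    return math.exp(-(x - 1.0) ** 2)


def div_hX(x):
    """d/dx (h X), computed by hand for the h and X above."""
    return h(x) * (math.cos(x) - x * math.sin(x))


def integrate(g, a=-12.0, b=12.0, n=6000):
    """Composite trapezoid rule on [a, b]."""
    dx = (b - a) / n
    total = 0.5 * (g(a) + g(b))
    for i in range(1, n):
        total += g(a + i * dx)
    return total * dx


def koopman_side(gamma):
    """Right-hand side of the duality: integral of phi(f~ x) h(x) dx."""
    return integrate(lambda x: phi(x + gamma * X(x)) * h(x))


gamma = 1e-4
# central finite difference in gamma, approximating the derivative at gamma = 0
lhs = (koopman_side(gamma) - koopman_side(-gamma)) / (2.0 * gamma)
# operator side after integration by parts
rhs = -integrate(lambda x: phi(x) * div_hX(x))
print("Koopman side:", lhs, " -int phi div(hX):", rhs)
```

The two printed numbers should agree up to the finite-difference and quadrature errors, which is what \(\delta {{\tilde{L}}}h=-\text {div}(hX)\) predicts when tested against this particular \(\Phi \).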

2.2 A Pointwise Proof

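This section derives \(\delta {{\tilde{L}}}\) using the pointwise definitions of \({{\tilde{L}}}\), which is useful later when we consider perturbations on the conditional measure on unstable manifolds. Note that \({{\tilde{L}}}\) is equivalently defined by a pointwise expression,

\[ {{\tilde{L}}} h (x) = \frac{h}{|{{\tilde{f}}}_*|}(y), \quad \text {where } y := {{\tilde{f}}}^{-1} x . \tag{3} \]

Here the point x is fixed, whereas y and \({{\tilde{f}}}\) vary according to \(\gamma \), so the perturbative map \({{\tilde{L}}}\) also depends on \(\gamma \). Here \({{\tilde{f}}}_*\) and \(f_*\) are the pushforward acting on vectors, \({{\tilde{f}}}_* e:= D{{\tilde{f}}}\, e\). Later we use \(f^*\) to denote the pullback acting on covectors. \(|{{\tilde{f}}}_*|\) is the Jacobian determinant, or the norm as an operator on M-vectors,

\[ |{{\tilde{f}}}_*| := \frac{|{{\tilde{f}}}_* e^{\mathcal {M}}|}{|e^{\mathcal {M}}|}, \quad \text {where } e^{\mathcal {M}} := e_1\wedge \cdots \wedge e_M, \quad {{\tilde{f}}}_* e^{\mathcal {M}} := {{\tilde{f}}}_* e_1\wedge \cdots \wedge {{\tilde{f}}}_* e_M . \]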

Here \(e_i\)’s are smooth 1-vector fields; \(e^{\mathcal {M}}\) is a smooth M-vector field, which is basically an M-dimensional hyper-cube field, and \(|\cdot |\) is its volume. Here \({{\tilde{f}}}_*\) is the Jacobian matrix. Note that \(|{{\tilde{f}}}_*|\) is independent of the choice of basis, and we expect this independence to hold throughout our derivation.

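The volume of M-vectors, \(|\cdot |\), is a tensor norm induced by the Riemannian metric,

\[ |e^{\mathcal {M}}| := \left\langle e^{\mathcal {M}}, e^{\mathcal {M}} \right\rangle ^{1/2} . \]

For two 1-vectors, \(\left\langle \cdot ,\cdot \right\rangle \) is the typical Riemannian metric. For simple M-vectors,

\[ \left\langle e_1\wedge \cdots \wedge e_M, \; r_1\wedge \cdots \wedge r_M \right\rangle := \det \left( \left\langle e_i, r_j \right\rangle \right) _{i,j=1}^M . \]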

When the operands are summations of simple M-vectors, the inner-product is the corresponding sum.

Applying \(\delta \) on both sides of Eq. (3), and noticing that h is fixed and that \(|{{\tilde{f}}}_*|=1\) when \(\gamma =0\), we have

\[ \delta {{\tilde{L}}} h (x) = - h \left. \frac{d }{d \gamma } (|{{\tilde{f}}}_*|(y))\right| _{\gamma =0} + \delta y (h) . \tag{4} \]

Here \(\delta y=-X\), and we use it to differentiate h in the coordinate variable. Note that \(\frac{d }{d \gamma }\) is the total derivative: \({{\tilde{f}}}\) has two direct parameters y and \(\gamma \), where y implicitly depends on \(\gamma \). Substituting the following lemma into Eq. (4), we get a pointwise proof of Lemma 1.

Lemma 4

\(\left. \frac{d }{d \gamma } (|{{\tilde{f}}}_*|(y))\right| _{\gamma =0} = {{\,\textrm{div}\,}}X \), where \(X:=\delta {{\tilde{f}}}\).

Proof

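By the chain rule, and noticing that \(y|_{\gamma =0}=x\), the total derivative is

\[ \left. \frac{d }{d \gamma } (|{{\tilde{f}}}_*|(y))\right| _{\gamma =0} = \delta |{{\tilde{f}}}_*| (x) + \delta y (|{{\tilde{f}}}_*|)(x) . \]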

Since \(|{{\tilde{f}}}_*|\equiv 1\) at \(\gamma =0\), the second term is zero. The first term \(\delta |{{\tilde{f}}}_*|\) is the partial derivative with respect to \(\gamma \) while fixing x. Fix any M-vector \(e^{\mathcal {M}}\) at x, by the Leibniz rule,

where \(\varepsilon ^i\) is the i-th covector in the dual basis of \( \{e_i \}_{ i=1 }^M\).

Because X generates the flow \({{\tilde{f}}}\), we have the Lie bracket \(\left[ {{\tilde{f}}}_*e_i, X\right] =0\). Let \(\nabla _{(\cdot )}(\cdot )\) denote the Riemannian derivative, then \(\nabla _X {{\tilde{f}}}_*{e_i}|_{\gamma =0}=\nabla _{e_i}X\), and

Hence, we see that \(\delta |{{\tilde{f}}}_*|\) is the contraction of \(\nabla X\): this is another definition of the divergence, which is independent of the choice of the basis \(\{e_i \}_{ i=1 }^M\). \(\square \)
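The pointwise statements of this subsection can also be checked numerically in one dimension, where the Jacobian determinant is \(|{{\tilde{f}}}'|\) and \({{\,\textrm{div}\,}}X=X'\). The sketch below is an illustration under our own assumptions: \(h(x)=e^{-x^2/2}\), \(X=\sin \), and \({{\tilde{f}}}(y)=y+\gamma X(y)\), which matches the flow of X only to first order in \(\gamma \) (enough for derivatives at \(\gamma =0\)). It evaluates \({{\tilde{L}}}h(x)=h(y)/|{{\tilde{f}}}_*|(y)\) with \(y={{\tilde{f}}}^{-1}x\) and differentiates in \(\gamma \) by central differences.

```python
import math


def h(x):
    """Smooth (unnormalized) density, chosen for illustration."""
    return math.exp(-0.5 * x * x)


def X(x):
    """Perturbation vector field, chosen for illustration."""
    return math.sin(x)


def dX(x):
    """div X in one dimension."""
    return math.cos(x)


def div_hX(x):
    """d/dx (h X), computed by hand for the h and X above."""
    return h(x) * (math.cos(x) - x * math.sin(x))


def preimage(x, gamma, n_iter=60):
    """Solve y + gamma * X(y) = x by fixed-point iteration (small gamma)."""
    y = x
    for _ in range(n_iter):
        y = x - gamma * X(y)
    return y


def jac(y, gamma):
    """Jacobian determinant |f~_*| of f~(y) = y + gamma * X(y) at y."""
    return abs(1.0 + gamma * dX(y))


def transfer(x, gamma):
    """Pointwise transfer operator: (L~ h)(x) = h(y) / |f~_*|(y)."""
    y = preimage(x, gamma)
    return h(y) / jac(y, gamma)


x, gamma = 0.7, 1e-4
# total derivative of |f~_*|(y) in gamma; Lemma 4 predicts div X at x
d_jac = (jac(preimage(x, gamma), gamma)
         - jac(preimage(x, -gamma), -gamma)) / (2.0 * gamma)
# derivative of the transfer operator; Lemma 1 predicts -div(hX) at x
d_L = (transfer(x, gamma) - transfer(x, -gamma)) / (2.0 * gamma)
print(d_jac, dX(x))
print(d_L, -div_hX(x))
```

Each printed pair should agree up to the \(O(\gamma ^2)\) finite-difference error.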

We may as well write the above proof using matrix determinants, which is essentially a more compact set of notations for the exterior algebra we used, but is more familiar to some readers (see Footnote 1). More specifically,

The reason the determinant notation is simpler is that, in this section, we do not need the derivative of \(e^{\mathcal {M}}\): \(e^{\mathcal {M}}\) can be chosen to be essentially a constant on \({\mathcal {M}}\). Hence, we can use the matrix notation to completely hide away our usage of \(e^{\mathcal {M}}\). However, the matrix notation is no longer convenient for the next section, because we will be working on submanifolds, which requires us to keep track of the derivative of the tangent space, which is non-trivial on submanifolds.

3 Equivariant Divergence Formula for the Unstable Perturbation of Transfer Operator

Many important measures typically live in high dimensions, such as physical measures of chaotic systems. Efficient handling of such measures requires sampling by an orbit, since it is very expensive to approximate a high-dimensional object by finite elements, for which we give a rough cost estimation in Appendix A. Moreover, \(\delta {{\tilde{L}}}\) is not even defined pointwise for typical physical measures. However, we only need the derivative operator to handle the unstable perturbations; the stable perturbations are typically computed by the Koopman formula on the right of Eq. (2).

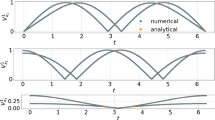

In this section, we derive the equivariant divergence formula of the unstable perturbation operator on the unstable manifold. The formula is defined pointwise; moreover, it is in the form of a few recursive relations on one orbit. As shown in Fig. 1, we first write the derivative operator as the derivative of the ratio between two volumes. Then we can obtain an expansion formula, which can be summarized into a recursive formula using the adjoint shadowing lemma.

3.1 Notations

We assume that the dynamical system of the \(C^3\) diffeomorphism f has a mixing axiom A attractor K. Denote a compact basin of the attractor by \({\mathcal {V}}^s(K)\), which is a set containing an open neighborhood of K and such that

There is a continuous \(f_*\)-equivariant splitting of the tangent vector space into stable and unstable subspaces, \(V^s \bigoplus V^u\), such that there are constants \(C>0\), \(0<\lambda < 1\), and

where \(f_*\) is the Jacobian matrix. We still assume that the map \(\gamma \mapsto {{\tilde{f}}}\) is \(C^1\) from \({\mathbb {R}}\) to the family of \(C^3\) diffeomorphisms on \({\mathcal {M}}\), and define \(X:= \delta {{\tilde{f}}}:=\left. \frac{\partial {{\tilde{f}}}}{\partial \gamma }\right| _{\gamma =0}\). Define oblique projection operators \(P^u\) and \(P^s\), such that

The stable and unstable manifolds, \({\mathcal {V}}^s\) and \({\mathcal {V}}^u\), are submanifolds tangential to the equivariant subspaces. Superscripts of manifolds typically denote dimensions, so we also use u and s to denote the unstable and stable dimensions, and

\[ u + s = M . \]

The physical measure is defined as the weak-* limit of the empirical distribution of a typical orbit. Under our assumptions, the physical measure is also SRB, which is smooth on the unstable manifold.

We introduce some general notations to be used. We use subscripts i and j to label directions, and use subscripts m, n, k to label steps. Let \(\{ e_i \}_{ i=1 }^M\subset T{\mathcal {M}}\) be a basis vector field such that the first u vectors satisfy \(\text {span}\{e_i\}_{i=1}^u=V^u\), while the other vectors satisfy \(\text {span}\{e_i\}_{i=u+1}^M = V^s\); we further require that

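Let \(\{ \varepsilon ^i \}_{i=1 }^M\) be the dual basis covector field of \(\{ e_i \}_{ i=1 }^M\), that is,

\[ \varepsilon ^i (e_j) = 1 \text { if } i=j, \quad \varepsilon ^i (e_j) = 0 \text { otherwise.} \]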

We further require that

In other words, \(\varepsilon \) removes the stable component, and gives the unstable component of u-vectors.

The main results in our paper are coordinate-independent. Note that e belongs to the one-dimensional space \(\wedge ^u V^u\). So e is the same, up to a coefficient, so long as \(e_1, \ldots , e_u\) span \(V^u\). The case with \(\varepsilon \) is similar. If a formula uses only the normalized e and \(\varepsilon \), then it does not depend on the particular choice of \(e_i\) and \(\varepsilon ^i\), and we say this formula is coordinate-independent. Indeed, most formulas in this paper involve only e and \(\varepsilon \) but not individual \(e_i\) and \(\varepsilon ^i\).
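The claim that e is determined up to a coefficient can be illustrated in a small example. The sketch below assumes u = 2 and an ambient space \({\mathbb {R}}^3\) with the Euclidean metric, where a simple 2-vector \(e_1\wedge e_2\) can be identified with the cross product \(e_1\times e_2\); the vectors and the change-of-basis matrix `A` are arbitrary values made up for this example. Changing the basis of the same plane by A multiplies the wedge product by \(\det A\), so the normalized 2-vector is unchanged up to orientation.

```python
import math


def cross(a, b):
    """Cross product in R^3, identified here with the 2-vector a ^ b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(w):
    """Scale w to unit Euclidean norm."""
    n = math.sqrt(sum(c * c for c in w))
    return tuple(c / n for c in w)


# an arbitrary basis of a 2-dimensional subspace of R^3
e1 = (1.0, 2.0, 0.5)
e2 = (-0.3, 1.0, 2.0)

# an arbitrary invertible change of basis within the same subspace
A = ((2.0, 1.0), (0.5, 3.0))
f1 = tuple(A[0][0] * p + A[0][1] * q for p, q in zip(e1, e2))
f2 = tuple(A[1][0] * p + A[1][1] * q for p, q in zip(e1, e2))
det_A = A[0][0] * A[1][1] - A[0][1] * A[1][0]

w_old = cross(e1, e2)   # e1 ^ e2
w_new = cross(f1, f2)   # f1 ^ f2, expected to equal det(A) * (e1 ^ e2)
print(w_new, tuple(det_A * c for c in w_old))
print(normalize(w_new), normalize(w_old))
```

Since \(\det A>0\) in this example, the two normalized 2-vectors coincide; a negative determinant would flip the orientation.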

We use \(\nabla _YX\) to denote the (Riemann) derivative of the vector field X along the direction of Y. \(\nabla _{(\cdot )} f_*\), the derivative of the Jacobian, is the Hessian

This is essentially the Leibniz rule. Note that \((\nabla _{Y}f_*)X=(\nabla _{X}f_*)Y\). Denote

When e is the unstable u-vector, it is differentiable only in the unstable direction, so in the second equation we differentiate by \(X^u\in V^u\). One of the slots of \(\nabla _{(\cdot )} f_*(\cdot )\) can take a u-vector, in which case

There are two different divergences on an unstable manifold. The first divergence applies to a vector field within the unstable submanifold,

We call this the submanifold unstable divergence, or u-divergence. Typically \(X^u\) is not differentiable, and \(\nabla _{e} X^{u}\) is a distribution rather than a function. But \(\text {div}^u X^u\) is a Hölder function, because Lemma 1 relates \(\text {div}^u_\sigma X^u\) to the perturbation of a transfer operator, and Theorem 2 shows that this perturbation has an expansion formula, which is Hölder.

The second kind of unstable divergence applies to vector fields not necessarily in the unstable manifold; it might be more essential for hyperbolic systems. The two divergences coincide only if both are applied to a vector field in the unstable subspace and \(V^u\perp V^s\). Define the equivariant unstable divergence, or v-divergence, as

We define the v-divergence of the Jacobian matrix \(f_*\),

By our notation, \(\varepsilon _1(x)\) is a covector at fx. Note that \(\text {div}^v f_*\) is a Hölder continuous covector field on the attractor. On a given orbit, we denote

Note that \(\varepsilon _1(x) = \varepsilon (fx)\) up to an orientation. It is convenient to assume that on the orbit we pick, the orientations are consistent, that is, in terms of vector fields,

This is typically true in practice, since we shall obtain unstable vectors and covectors by repeatedly pushing-forward or pulling-backward on the given orbit. However, it is not necessary that we assume this.

3.2 Expressing Transfer Operator \({{\tilde{L}}}^u\) on \({\mathcal {V}}^u\) by Holonomy Map \(\xi \)

We define the unstable perturbation on the unstable manifold as the composition of a perturbation of \({{\tilde{f}}}\) and a holonomy map \(\xi \), which is a projection along stable manifolds. As illustrated in Fig. 1, fix x, and let \({\mathcal {V}}^u(x)\) be the global unstable manifold through x and \({\mathcal {V}}_{r}^u(x)\) be the local unstable manifold through x of size r in the ambient manifold; for any \(\gamma \), \({\mathcal {V}}^{u\gamma }:=\{{{\tilde{f}}}(z):z\in {\mathcal {V}}^u\}\) is a u-dimensional manifold. For any \(z\in {\mathcal {V}}^{u\gamma }\), denote the stable manifold that goes through it by \({\mathcal {V}}^s(z)\). Define \(\xi (z)\) as the unique intersection point of \({\mathcal {V}}^s(z)\) and \({\mathcal {V}}^u(x)\). Since \(\delta {{\tilde{f}}}:=\left. \frac{\partial }{\partial \gamma }\right| _{\gamma =0} {{\tilde{f}}}=X\) is the perturbation and \(\xi \) is the projection along stable directions, if we take the partial derivative with respect to \(\gamma \) while fixing the base point, we have

\[ \delta (\xi {{\tilde{f}}}) = X^u . \]

Note the above equation holds only when the equation is restricted to \({\mathcal {V}}^u\).

The next lemma shows that for a small range of \(\gamma \), \(\xi \) and \(\xi {{\tilde{f}}}\) are well-defined on the entire attractor K; this then allows us to define locally the transfer operator \({{\tilde{L}}}^u\). Among the many technical details below, the main facts to recall are that the unstable manifolds through any points of K lie within K, whereas stable manifolds fill a neighborhood of K.

Lemma 5

Given any \(r>0\), there is a small number \(\gamma _0>0\), such that, for all \(|\gamma |<\gamma _0\), for any \(y\in K\), the point \(x=\xi {{\tilde{f}}}y\) uniquely exists, and \(|x-y|\le 0.1r\). From now on, we always assume \(|\gamma |<\gamma _0\).

Proof

Cover the compact set K by a finite number of coordinate charts. Shrink r if necessary, so that for any \(x\in K\), B(x, r) belongs to a finite positive number of charts. In the following paragraphs of this subsection, the angles and distances are measured in one of the charts (not the Riemannian metric).

More specifically, for all \(x\in K\), the local stable manifolds \({\mathcal {V}}_{loc}^s(x)\) and local unstable manifolds \({\mathcal {V}}_{loc}^u(x)\) depend continuously on \(x\in K\). Since the hyperbolic set K is compact, by further shrinking r, the sizes of local stable and unstable manifolds are uniformly larger than r. By further shrinking r again, we can find a positive lower bound for the angles between stable and unstable manifolds,

Recall that hyperbolic attractors are isolated hyperbolic sets, so any compact basin \({\mathcal {V}}^s(K)\) of the attractor is the union of stable manifolds through points in K. More specifically, by the proof of Theorem 4.26 in [57] (the proof is a bit stronger than the statement of the theorem), we can see that, after further shrinking r and after passing \({\mathcal {V}}^s(K)\) to \(f^n({\mathcal {V}}^s(K))\) for some large n, we have

We constrain the size of our perturbation, or equivalently, constrain the range of \(\gamma \), so that, first, \({{\tilde{f}}}(K)\subset {\mathcal {V}}^s(K)\), where \({\mathcal {V}}^s(K)\) is the basin of the attractor; second,

Since \({{\tilde{f}}}(y)\in {\mathcal {V}}^s(K)\), by Eq. (6), there is a stable manifold going through \({{\tilde{f}}}(y)\), centered at some \(z\in K\), and \(|{{\tilde{f}}}(y)-z|\le 0.1r\). Since the stable manifold centered at z has size larger than r, the stable manifold centered at \({{\tilde{f}}}(y)\) has size larger than 0.9r. By Eqs. (7) and (5), \({\mathcal {V}}^s_{0.9r}({{\tilde{f}}}(y))\) and \({\mathcal {V}}^u_r(y)\) are two transverse manifolds whose centers are close to each other, so they intersect at a unique point \(x=\xi {{\tilde{f}}}y\), and we have \(|x-y|\le 0.1r\). \(\square \)

We define \({{\tilde{L}}}^u\) as the local transfer operator of \(\xi {{\tilde{f}}}:{\mathcal {V}}^u \rightarrow {\mathcal {V}}^u\); note that \({{\tilde{\cdot }}}\) indicates dependence on \(\gamma \). Let r be the uniform size of local unstable manifolds; for each \(x\in K\), let \(P:=(\xi {{\tilde{f}}})^{-1}{\mathcal {V}}^u_{0.1r}(x)\), we define \({{\tilde{L}}}^{u}\) as the transfer operator from \(C^0(P)\) function space to \(C^0({\mathcal {V}}_{0.1r}^{u}(x))\). Since \(\delta (\xi {{\tilde{f}}}) = X^u\), \(\delta {{\tilde{L}}}^u\) is the perturbation by \(X^u\). Let \(y = (\xi {{\tilde{f}}})^{-1} x\), then the pointwise definition of \({{\tilde{L}}}^u\) on any density function \(\sigma \) is

Here the last expression, roughly speaking, dissects the perturbation by \(X^u\) into the perturbation by X and \(-X^s\).

Then we define \(\frac{{{\tilde{L}}}^u\sigma }{\sigma } (x)\), where \(\sigma \) is the conditional density. Let r be such that \(B(x,r)\cap K\) is foliated by unstable leaves at \(\gamma =0\), and \(\sigma \) is the density of the conditional measure of the physical measure \(\rho \). In particular, the domain of \(\sigma \) includes \({\mathcal {V}}_{r}^{u}(x)\), and \(P:=(\xi {{\tilde{f}}})^{-1}{\mathcal {V}}^u_{0.1r}(x)\subset {\mathcal {V}}^u_r(x) \subset K\cap B(x,r)\), so we can define \(\frac{{{\tilde{L}}}^u\sigma }{\sigma } (x)\) on the smaller leaf \({\mathcal {V}}^u_{0.1r}(x)\) for the particular r. Moreover, notice that both \(\sigma |_{{\mathcal {V}}_{0.1r}^{u}(x)}\) and \(\sigma |_P\), the source of \({{\tilde{L}}}^u \sigma |_{{\mathcal {V}}_{0.1r}^{u}(x)}\), are restrictions of the same \(\sigma \) from the same larger leaf \({\mathcal {V}}_{r}^{u}(x)\) of the same foliation. Hence, we can expect that, in \(\frac{{{\tilde{L}}}^u\sigma }{\sigma } (x)\), the factor due to the selection of B(x, r) would cancel. Indeed, as we shall see by the expression in Lemma 6 and Theorem 2, \(\frac{{{\tilde{L}}}^u\sigma }{\sigma }(x)\) and \(\frac{\delta {{\tilde{L}}}^u\sigma }{\sigma }(x)\) do not involve \(\sigma \) and do not depend on the selection of B(x, r), and \(\frac{\delta {{\tilde{L}}}^u\sigma }{\sigma }\) is a continuous function on K.

An additional technical subtlety is that we want \(\xi \) to be a holonomy map, which is defined only on the hyperbolic set K [7, Section 4.3]. This holds because the unstable manifold through any \(x\in K\) lies in K. As a result, we can use the standard absolute continuity lemma of holonomy maps to resolve the change of the conditional SRB measure on all unstable manifolds.

3.3 One Volume Ratio for the Entire Unstable Perturbation Operator

Lemma 6

(A volume ratio) Let \(e_{-k}\) be the unit u-vector field on \({\mathcal {V}}^u(x_{-k})\), where \(x_{-k}:=f^{-k}x\). Denote \(y:=(\xi {{\tilde{f}}})^{-1}x\) and \(y_{-k}:=f^{-k}y\). Then

Proof

First, we find an expression for \(\sigma \) by considering how the Lebesgue measure on \({\mathcal {V}}^u(x_{-k})\) is evolved. The mass contained in the cube \(e_{-k}\) is preserved via pushforwards, but the volume increased to \(f_*^ke_{-k}\). Hence, for \(y\in {\mathcal {V}}^u(x)\), the density \(\sigma \) satisfies

This expression was stated for example in [56, Proposition 1] using unstable Jacobians; note that the conditional measure is determined up to a constant coefficient. Hence,

Here \(|{{\tilde{f}}}_{*}(y)|:= \frac{|{{\tilde{f}}}_* e(y)|}{|e(y)|}\), \(|\xi _{*}({{\tilde{f}}}y)|:= \frac{|\xi _*{{\tilde{f}}}_* e(y)|}{|{{\tilde{f}}}_*e(y)|}\), where \({{\tilde{f}}}_* e(y)\) is a vector at \({{\tilde{f}}}y\), \(\xi _*{{\tilde{f}}}_* e(y)\) is a vector at x.

By a corollary of the absolute continuity of the holonomy map [7, Theorem 4.4.1] (we provide an intuition for this corollary after this proof),

By substitution and cancellation,

Both \(f_*^k e_{-k}(x_{-k})\) and \(\xi _*{{\tilde{f}}}_*e(y)\) are in the one-dimensional subspace \(\wedge ^u V^u(x)\), so the growth rates of their volumes are the same when pushing forward by \(f_*\), hence

Similarly,

Finally, by substitution and cancellation,

\(\square \)

We give an intuitive explanation of Eq. (8). Because \(\xi \) is projection along the stable manifolds, intuitively, for any z near K, such as \(z = {{\tilde{f}}}y\), we have

where d is the distance on \({\mathcal {M}}\). For any vector \(e'\) at z transverse to \(V^s(z)\), such as \(e'={{\tilde{f}}}_* e(y) \), vaguely speaking, the vector \(e'\) and \(\xi _* e'\) collapse after pushforward many times, so we have

This equation is equivalent to Eq. (8), after cancellation from both sides of Eq. (8).

Lemma 7

(Expanded equivariant divergence formula)

If we evaluate this formula at x, then here \(X^s\) is a vector at x, \(f_*^{n} X^s\) is a vector at \(f^{n}x\), \((\text {div}^vf_*)_{n}(x)=(\text {div}^vf_*)(x_{n})\).

Proof

Formally differentiate the expression in Lemma 6,

At \(\gamma =0\), we have \(x=y\), \({{\tilde{f}}}\) is identity, so

Here

Here the second equality demands that \(\frac{d }{d \gamma }\), applied on vectors, is the Riemannian derivative. Moreover, we emphasize that \(\frac{d }{d \gamma }\) is also the total derivative: \({{\tilde{f}}}\) has two direct parameters y and \(\gamma \); f has only one variable y, and y depends on \(\gamma \). Summarizing, we have,

Recursively apply the Leibniz rule, note that \({{\tilde{f}}}_*=I_d\) when \(\gamma =0\), we get

Then we substitute into the previous equation to get

The convergence as \(k\rightarrow \infty \) is uniform for a small range of \(|\gamma |\), justifying the formal differentiation. Then we shall simplify each term in Eq. (9) to prove the lemma.

The second term on the right of this equation is zero, since

Then we consider the first term in Eq. (9).

Since \({{\tilde{f}}}_*e|_{\gamma =0}=e\), we have \(\nabla _{\delta y}({{\tilde{f}}}_* e|_{\gamma =0} )=\nabla _{\delta y}e\), so the last two terms cancel each other. Since \({{\tilde{f}}}\) is the flow of X, we can use the same Lie bracket statement as in Lemma 4, to get \(\nabla _{\frac{\partial }{\partial \gamma }}({{\tilde{f}}}_*e)=\nabla _{{{\tilde{f}}}_*e}(X)=\nabla _{e} X\) at \(\gamma =0\). Hence,

Then we show where \(\text {div}^vX\) in the lemma comes from. Roughly speaking, e grows faster than all the other u-vectors, so after pushing-forward many times, e becomes dominant: this is proved in Theorem 12 in the appendix. Hence,

This is the first term in the right hand side of the lemma.

Then we consider the terms in the second last sum of Eq. (9). Roughly speaking, for large k

To make this rigorous, use Theorem 12 in the appendix, which estimates the error of the above approximation, and we see that

Here the C’s are different in each appearance, and the last C does not depend on X and k. Also note that the first equality employs the choice of orientation of \(V^u\) on the orbit, which is implied by our notation, that is,

and it does not matter whether \(e_{n}=e\circ f^{n}\) or \(e_{n}=-e\circ f^{n}\) in terms of the vector fields. Hence, the second last sum of Eq. (9) converges uniformly and absolutely, and the limit

This is the first sum in the lemma.

Similarly, for the last sum in Eq. (9),

The convergence is absolute and uniform. Hence,

This is the last sum in the lemma. Summarizing, we have simplified each term in Eq. (9) and thus proved the lemma. \(\square \)

3.4 Recursive Formula

The adjoint shadowing operator on covectors is equivalently defined by three characterizations [47]:

(1) \({\mathcal {S}}\) is the linear operator \({\mathcal {S}}:{\mathfrak {X}}^{*\alpha }(K) \rightarrow {\mathfrak {X}}^{*\alpha } (K)\), such that

$$\begin{aligned} \begin{aligned} \rho (\omega S(X)) = \rho ({\mathcal {S}}(\omega ) X) \quad \text {for any } X\in {\mathfrak {X}}^\alpha (K). \end{aligned} \end{aligned}$$

Here \( {\mathfrak {X}}^{\alpha }(K)\) and \( {\mathfrak {X}}^{*\alpha }(K)\) denote the spaces of Hölder-continuous vector and covector fields on K. S is the (forward) shadowing operator, that is, \(v=S(X)\) is the only bounded solution of the variational equation \(v\circ f = f_* v + X\).

(2) \({\mathcal {S}}(\omega )\) has the expansion formula given by a ‘split-propagate’ scheme,

$$\begin{aligned} {\mathcal {S}}( \omega ):= \sum _{n\ge 0} f^{*n} {\mathcal {P}}^s \omega _n - \sum _{n\le -1} f^{*n} {\mathcal {P}}^u \omega _n. \end{aligned}$$

(3) The shadowing covector \(\nu ={\mathcal {S}}(\omega )\) is the unique bounded solution of the inhomogeneous adjoint equation,

$$\begin{aligned} \begin{aligned} \nu = f^* \nu _1 + \omega , \quad \text {where} \quad \nu _1:=\nu \circ f. \end{aligned} \end{aligned}$$

Here \(f^*\) is the pullback operator on covectors, which is the dual of \(f_*\). Here \({\mathcal {P}}^s, {\mathcal {P}}^u\), and \(f^*\) are the transposed matrices, or adjoint operators, of \(P^s, P^u\), and \(f_*\). More specifically, define the adjoint projection operators, \({\mathcal {P}}^u\) and \({\mathcal {P}}^s\), such that for any \(w\in T_{x}{\mathcal {M}}\), \(\eta \in T^*_{x}{\mathcal {M}}\),

We can show that \({\mathcal {P}}^s, {\mathcal {P}}^u\) in fact project to unstable and stable subspaces for the adjoint system. This is the adjoint theory of the conventional shadowing Lemma [11, 58]. Then we can prove

Theorem 2

(equivariant divergence formula) Let \(\sigma \) be the density of the conditional measure of \(\rho \), which is the physical measure on a mixing axiom A attractor of a \(C^3\) diffeomorphism f, denote \(\delta {{\tilde{f}}}:=\left. \frac{\partial {{\tilde{f}}}}{\partial \gamma }\right| _{\gamma =0} = X\), then

Remark

(1) Note that the middle expression is the same for two local density functions \(\sigma \) which differ only by a constant multiplier; the rightmost expression does not explicitly involve \(\sigma \).

(2) This theorem can be proved via another approach, using the fast formula in [44], as given in Appendix B, but that proof is longer and less intuitive.

Proof

The first equality is due to the distributional definition of \(\text {div}^u_\sigma X^u\).

Since the unstable and stable subspaces are invariant, we have

Hence, by Lemma 7,

By the definition of adjoint projection operators,

By the definition of the pullback operator \(f^*\),

By the expansion formula of the adjoint shadowing operator, we prove the theorem. \(\square \)
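The expansion formula and the inhomogeneous adjoint equation used in this proof can be checked numerically on a toy case. The following is a minimal sketch under strong assumptions that are ours, not the paper's: the Jacobian is the constant cat-map matrix `A`, the covector field `omega` is constant, and the infinite sums are truncated at a hypothetical `n_terms`. It only illustrates that the ‘split-propagate’ sum solves \(\nu = f^*\nu _1+\omega \) in this setting.

```python
import numpy as np

# Toy assumption: constant Jacobian (cat map), so f_* = A at every point
# and the pullback f^* acts on covectors as the transpose A.T.
A = np.array([[2.0, 1.0], [1.0, 1.0]])
lam, V = np.linalg.eig(A)            # columns of V are eigenvectors of A
u = int(np.argmax(np.abs(lam)))      # index of the unstable direction
s = 1 - u                            # index of the stable direction

# Oblique projections P^u, P^s onto the unstable and stable subspaces.
Vinv = np.linalg.inv(V)
Pu = np.outer(V[:, u], Vinv[u, :])
Ps = np.outer(V[:, s], Vinv[s, :])

def adjoint_shadowing(omega, n_terms=60):
    """Truncated split-propagate sum for a constant covector field omega."""
    At = A.T
    At_inv = np.linalg.inv(At)
    nu = np.zeros(2)
    # Terms with n >= 0: pull back the stable-adjoint part n times.
    term = Ps.T @ omega
    for _ in range(n_terms):
        nu += term
        # Re-projecting is exact in exact arithmetic (the splitting is
        # invariant) and suppresses round-off along the growing direction.
        term = Ps.T @ (At @ term)
    # Terms with n <= -1: propagate the unstable-adjoint part the other way.
    term = Pu.T @ (At_inv @ (Pu.T @ omega))
    for _ in range(n_terms):
        nu -= term
        term = Pu.T @ (At_inv @ term)
    return nu

omega = np.array([0.3, -0.7])
nu = adjoint_shadowing(omega)
residual = nu - (A.T @ nu + omega)   # should vanish if nu = f^* nu_1 + omega
print(nu, residual)
```

In this constant-coefficient case the bounded solution is also available in closed form as \((I-A^T)^{-1}\omega \), which gives an independent check of the truncated sum.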

The significance of the formula in Theorem 2 is that it can be sampled by an orbit, just as the physical measure is defined. More specifically, this means two things:

- The formula is defined pointwise.

- The formula can be computed by recursively applying a map on a few vectors and covectors.

The formula is defined pointwise: all differentiations hit only X and \(f_*\), and these vector or tensor fields are at least \(C^2\) on \({\mathcal {M}}\). Also, all intermediate quantities have bounded sup norm. There are previous works achieving pointwise formulas for the unstable part of linear responses [31, 56]. However, it was not clear back then that those formulas were related to unstable transfer operators, and the formulas are not recursive.

More importantly, our formula can be sampled on an orbit via only \(2u+1\) many recursive relations. The numerical implementations, including several nontrivial tricks such as renormalizations and matrix notations, and several numerical examples are given in another paper [46]. It is not obvious, if at all possible, that previous pointwise formulas, even with extra work, can be realized by this many recursive relations. First, e can be efficiently computed via u-many forward recursions. Since unstable vectors grow while stable vectors decay, we can push forward almost any set of u vectors, and their span will converge to \(V^u\), while their normalized wedge product converges to e. Note that this convergence is measured by the metric on the Grassmannian of u-dimensional subspaces. Numerically, we need to perform occasional renormalizations when pushing forward the set of u many single vectors; renormalization does not change the span, but avoids the clustering of single vectors. This classical result is used in the algorithms for Lyapunov vectors by Ginelli and Benettin [8, 27, 28], although here we only need the unstable subspace instead of individual unstable Lyapunov vectors. Similarly, \(\varepsilon \) can be efficiently computed via pulling back u-many covectors, since it is the unstable subspace of the adjoint system.
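The forward recursion for the unstable subspace can be sketched as follows. This is a minimal illustration, not the implementation of [46]: we assume a toy hyperbolic automorphism of the 4-torus (two cat-map-like blocks, so the Jacobian is constant and \(u=2\)), and we use a QR factorization at every step as the renormalization. The names `step`, `jacobian`, and `unstable_basis` are hypothetical.

```python
import numpy as np

# Toy assumption: block-diagonal integer matrix with determinant 1,
# giving a hyperbolic map x -> A x mod 1 with two unstable directions.
A = np.zeros((4, 4))
A[:2, :2] = [[2.0, 1.0], [1.0, 1.0]]
A[2:, 2:] = [[3.0, 1.0], [2.0, 1.0]]

def step(x):
    """One step of the toy map on the torus."""
    return (A @ x) % 1.0

def jacobian(x):
    """Pushforward f_* at x; constant for this linear toy map."""
    return A

def unstable_basis(x0, u, n_steps=200, seed=0):
    """Push forward u random vectors along the orbit, renormalizing by QR."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    Q, _ = np.linalg.qr(rng.standard_normal((x.size, u)))
    for _ in range(n_steps):
        # QR keeps the span of the pushed-forward vectors but prevents
        # them from clustering along the fastest-growing direction.
        Q, _ = np.linalg.qr(jacobian(x) @ Q)
        x = step(x)
    return Q

Q = unstable_basis(np.array([0.1, 0.2, 0.3, 0.4]), u=2)

# Reference: span of the eigenvectors of A with |eigenvalue| > 1.
lam, V = np.linalg.eig(A)
Vu, _ = np.linalg.qr(np.real(V[:, np.abs(lam) > 1.0]))
subspace_gap = np.linalg.norm(Q @ Q.T - Vu @ Vu.T)
print(subspace_gap)
```

Comparing the orthogonal projectors `Q @ Q.T` and `Vu @ Vu.T` measures the distance between subspaces rather than between individual vectors, in the spirit of the Grassmannian convergence mentioned above.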

The adjoint shadowing form \(\nu :={\mathcal {S}}(\text {div}^vf_*)\) can also be efficiently computed with one more backward recursion and an orthogonal condition at the first step. Characterization (3) of the adjoint shadowing operator above, applied with \(\omega =\text {div}^vf_*\), states that \(\nu \) is the only bounded solution of the inhomogeneous adjoint equation,

$$\begin{aligned} \nu = f^* \nu _1 + \text {div}^vf_*, \quad \text {where} \quad \nu _1:=\nu \circ f. \end{aligned}$$

Hence \(\nu \) can be well approximated by solving the following equations for \(a_1,\ldots , a_u \in {\mathbb {R}}\),

Here \(\nu '\) is a particular inhomogeneous adjoint solution. Intuitively, the unstable modes are removed by the orthogonal projection at the first step, where the unstable adjoint modes are the most significant. This is known as the nonintrusive (adjoint) shadowing algorithm [48] (also see [9, 45, 49]).
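To illustrate the idea of removing the unstable adjoint mode by an orthogonality condition, here is a toy sketch. It is not the algorithm of [48]: we assume a constant cat-map Jacobian with \(u=1\), a constant inhomogeneous term `omega`, a short segment of `N` steps without intermediate renormalization, and we read ‘the first step’ as step 0 of the segment. All of these are our assumptions.

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 1.0]])   # toy constant Jacobian, u = 1
omega = np.array([0.3, -0.7])             # toy constant inhomogeneous term
N = 20                                    # length of the orbit segment

# Backward recursions from step N down to step 0:
#   nu'_n = f^* nu'_{n+1} + omega   (particular solution, nu'_N = 0)
#   eps_n = f^* eps_{n+1}           (homogeneous solution, arbitrary eps_N)
nu_p = np.zeros((N + 1, 2))
eps = np.zeros((N + 1, 2))
eps[N] = np.array([1.0, 0.0])
for n in range(N - 1, -1, -1):
    nu_p[n] = A.T @ nu_p[n + 1] + omega
    eps[n] = A.T @ eps[n + 1]

# Choose a so that nu_0 = nu'_0 + a * eps_0 is orthogonal to eps_0,
# which removes the mode that grew during the backward recursion.
a = -(nu_p[0] @ eps[0]) / (eps[0] @ eps[0])
nu = nu_p + a * eps

# For constant coefficients the bounded solution is (I - A^T)^{-1} omega.
nu_exact = np.linalg.solve(np.eye(2) - A.T, omega)
mid_error = np.linalg.norm(nu[N // 2] - nu_exact)
print(mid_error)
```

The approximation is only expected to be accurate away from both ends of the segment; for long orbits one would need renormalization on sub-segments, since the homogeneous solution grows exponentially during the backward recursion.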

Hence, we can compute the v-divergence formula on a sample orbit, with sampling error \(E\sim O(1/\sqrt{T})\), and the cost is

In particular, this is not cursed by dimensionality. Compared with the zeroth-order finite-element method for the whole \(\delta L\), whose cost is estimated in Appendix A, the efficiency advantage is significant when the dimension is larger than 4. The numerical implementation of our formula takes seconds to run on an \(M=21\) system, which is almost out of reach for finite-element methods [46].

4 Sampling Linear Responses by an Orbit

This section uses our equivariant divergence formula to sample the linear response, which is the derivative of the physical measure with respect to the parameter of the system, recursively on an orbit. We do not reprove linear responses; rather, the focus is to sample them by recursively applying a map to evolve vectors. We first review the two linear response formulas of physical measures. Then we explain how to blend the two formulas. In particular, the unstable part is given by the unstable perturbation of the unstable transfer operator, which can be sampled by an orbit, according to our Theorem 2.

4.1 Two Formulas for Linear Response and Their Formal Derivations

The application that we are interested in is the linear response of physical measures. In fact, most cases where we favor the derivative of transfer operators are when the perturbation is evolved for a long time, as in the case of linear responses. Otherwise, if we are interested in the perturbation for only a few steps, we may as well use the Koopman formula, the left side of Eq. (2), which can be evaluated much more easily than the derivative of transfer operators. This subsection reviews the linear response and its two more well-known formulas, the ensemble formula and the operator formula. The arguments in this subsection are all formal, and the purpose is to help readers review those formulas, whose rigorous proofs are much more difficult than our presentation.

We still use f to denote the fixed base diffeomorphism, \({{\tilde{f}}}\) the perturbation, and \(\xi \) the projection along stable foliations. At \(\gamma =0\), \({{\tilde{f}}}\) is identity. In this subsection, we assume that \({{\tilde{f}}}\circ f\) is still hyperbolic; this can be achieved by further shrinking the range of \(\gamma \) prescribed in Lemma 5. Let \({{\tilde{h}}}\) and h denote the ‘density’ of the physical measure \({{\tilde{\rho }}}\) and \(\rho \). Note that \({{\tilde{h}}}\) is not the single-step perturbed density, which is denoted by \({{\tilde{L}}}h\) instead. More specifically,

where \(\mu \) is any smooth density function of a measure supported on the basin of the attractor. The convergence is in the weak-* sense; that is, for any \(C^2\) function \(\Phi \) over \({\mathcal {M}}\),

The physical measure encodes the long-time-average statistics, and it has regularities in the unstable directions for axiom A systems. A perturbation \({{\tilde{f}}}\) gives a new physical measure, and their linear relation was discussed by the pioneering works [10, 16, 26, 35], then justified rigorously for hyperbolic systems. There are other attempts to compute the linear response which do not need ergodic theory, such as the gradient clipping and the reservoir computing method from machine learning [34, 51].

One way to derive the linear response formula is to average the perturbation of individual orbits over the physical measure. More specifically, for a smooth observable function \(\Phi \),

Apply the chain rule recursively,

To intuitively explain the chain rule, consider an orbit starting from x, running for n steps. Then we add a perturbation \({{\tilde{f}}}\) to each step, and the above expression gives how \(x_n\) is perturbed. Hence, the integrand can be expressed by the perturbation of an orbit, and

Here the last equality is the definition of pushforward of vectors. We may also directly obtain the above formula from a bigger chain rule including \(\Phi \), but here we take a detour to explain the relation to the perturbation of orbits. Hence, the linear response is

We call this the ensemble formula for the linear response, because it is formally an average of orbit-wise perturbations over an ensemble of orbits.
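The orbit-wise perturbation behind the ensemble formula can be checked on a one-dimensional toy example. The sketch below assumes a hypothetical contracting base map `f` and a perturbation of the form \({{\tilde{f}}}(x)=x+\gamma X(x)\), so that \({{\tilde{f}}}\) is the identity at \(\gamma =0\); these specific functions are ours. It compares the recursion \(\delta x_{k+1}=f_*\delta x_k+X(x_{k+1})\) against a finite difference of the perturbed orbit.

```python
import math

def f(x):
    """Hypothetical contracting base map."""
    return 0.5 * math.sin(x) + 0.3

def df(x):
    """Derivative of f, i.e. the pushforward f_* in one dimension."""
    return 0.5 * math.cos(x)

def X(x):
    """Hypothetical perturbation field: f~(x) = x + gamma * X(x)."""
    return math.cos(x)

def orbit_end(x0, n, gamma):
    """x_n of the perturbed system x_{k+1} = f~(f(x_k))."""
    x = x0
    for _ in range(n):
        y = f(x)
        x = y + gamma * X(y)
    return x

def orbit_sensitivity(x0, n):
    """d x_n / d gamma at gamma = 0, by recursively applying the chain rule."""
    x, dx = x0, 0.0
    for _ in range(n):
        dx = df(x) * dx      # push the accumulated perturbation forward
        x = f(x)
        dx += X(x)           # add the new perturbation made at this step
    return dx

gamma = 1e-6
finite_diff = (orbit_end(0.2, 25, gamma) - orbit_end(0.2, 25, -gamma)) / (2 * gamma)
print(finite_diff, orbit_sensitivity(0.2, 25))
```

Averaging \(d\Phi \) applied to such orbit perturbations over many initial conditions is what the ensemble formula does formally; for this contracting toy map the recursion stays bounded.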

For contracting maps, the ensemble formula converges, and we only need one orbit to sample each attractor and its perturbation. For hyperbolic systems, the above formula was proved in [19, 55]. This formula was numerically realized in [20, 32, 39, 42]. However, due to the exponential growth of the integrand, it is typically unaffordable for ensemble methods to actually converge. This issue is sometimes known as the ‘gradient explosion’.
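The exponential growth of the integrand can be seen already in a linear toy case. The sketch below assumes the constant cat-map Jacobian (our choice for illustration) and measures the growth rate of \(|f_*^n X|\) for a fixed initial vector; it does not compute any linear response.

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 1.0]])   # toy constant Jacobian (cat map)
v = np.array([1.0, 0.0])                  # stands in for the vector X at x
log_norms = []
for n in range(30):
    v = A @ v                             # one more pushforward by f_*
    log_norms.append(np.log(np.linalg.norm(v)))

# Average growth of log|f_*^n X| per step between n = 10 and n = 30.
growth_rate = (log_norms[-1] - log_norms[9]) / 20
largest_exponent = np.log((3 + np.sqrt(5)) / 2)   # log of the unstable eigenvalue
print(growth_rate, largest_exponent)
```

With a growth factor of about 2.6 per step, the terms of the ensemble sum grow so fast that the number of samples needed to average them out quickly becomes impractical.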

The dual way to derive the linear response formula is to differentiate

where \(\mu \) is the density of a measure absolutely continuous with respect to Lebesgue and supported on the attractor basin. Since the transfer operator is, very vaguely speaking, a giant matrix, we can formally apply the Leibniz rule at \(\gamma =0\),

where we formally interchanged the limit and the differentiation, and used the fact that \(\lim _{n\rightarrow \infty } L^{n-m} \mu = h\). Hence, we get the so-called operator formula for the linear response,

Here \(\rho \) is the physical measure of f. The convergence is due to decay of correlations, since formal integration-by-parts shows that,

Here \(\mathbbm {1}\) is a constant function. Since this term has zero mean, the decay of correlation could be faster than normal [29, 37].

The above formal arguments can be directly proved for expanding maps f with \(C^3\) regularity, for example for tent maps on circles or cat maps on torus [5, 23]. Roughly speaking, this is because expanding maps make the densities smoother, so h and \({{\tilde{h}}}\) are well-defined functions, and \(\delta {{\tilde{L}}}h\) is also a function. For expanding maps, it is not hard to imagine that we can sample \(\frac{\delta {{\tilde{L}}}h}{h}\) on an orbit recursively; to do this, just linearly remove the stable part from our equivariant divergence formula.

For the case of hyperbolic sets, h and \(\delta {{\tilde{L}}}h\) are no longer functions. They belong to the so-called anisotropic Banach space [4, 14, 18, 29, 40]. However, the objects in the anisotropic Banach space are not pointwise defined functions, so there is no hope to compute \(\frac{\delta {{\tilde{L}}}h}{h}\) on an orbit. Hence, to keep using the operator formula, we have to use finite elements to generate a mollified approximation of the singular objects. As shown in Appendix A, the cost of the finite element method is exponential in M, which is too high for typical physical systems. This issue is known as the ‘curse of dimensionality’.
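To give a rough feeling for the exponential dependence on M, the following sketch counts the cells of a uniform grid. It assumes the same number of cells along each axis, which is our simplification; the actual cost estimate is the one in Appendix A, and the function name `grid_cells` is hypothetical.

```python
def grid_cells(cells_per_axis: int, dim: int) -> int:
    """Number of cells in a uniform grid over a dim-dimensional box."""
    return cells_per_axis ** dim

# Even a coarse resolution of 10 cells per axis becomes unmanageable
# once the phase-space dimension M grows.
for M in (2, 4, 21):
    print(M, grid_cells(10, M))
```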

Finally, the operator formula and the ensemble formula are formally equivalent under integration by parts. From the ensemble formula,

By Theorem 1, we have

To summarize, both the ensemble formula and the operator formula give the true derivative for hyperbolic systems, which have both expanding and contracting directions. However, in high dimensions, we want to sample by orbits, and the ensemble formula is still suitable mainly for contracting systems, whereas the operator formula is suitable mainly for expanding systems.

4.2 Blending Two Linear Response Formulas

It is a natural idea to combine the two linear response formulas. That is, to look at the orbit change for the stable or shadowing part of the linear response, and the density change for the unstable part. This subsection derives this blended formula for the linear response, which can be sampled by an orbit.

We call such linear response formulas the blended formulas, such as the formula in [31, Proposition 8.1]. Another blended formula, which has a numerical implementation, is the one used by the blended response algorithm [1]. But the blended response algorithm computed the unstable divergence by summing directional derivatives, which is not defined pointwise. With our formula for the unstable perturbations of transfer operators, we can sample the unstable divergence and hence the entire linear response by an orbit. Proposition 3 is part of the so-called fast (adjoint) response algorithm. It is numerically demonstrated on a 21-dimensional example with 20 unstable dimensions [44, 46].

In this subsection we shall assume linear responses proved by previous literature. Let \(\rho \) and \({{\tilde{\rho }}}\) denote the SRB measure of f and \({{\tilde{f}}}\circ f\) supported on an axiom A attractor. The map \(\gamma \mapsto {{\tilde{f}}}\) is \(C^1\) from \({\mathbb {R}}\) to the family of \(C^3\) diffeomorphisms on \({\mathcal {M}}\). Let \(\Phi :{\mathcal {M}}\rightarrow {\mathbb {R}}\) be a \(C^2\) observable function. The linear response has the expression [19, 35, 55]

Here \(X_{-n}(x) = X(x_{-n})\), x is the dummy variable in the integration, \(f^{n}_{*} X_{-n}\) is a vector at x. We do not give a new proof of this formula, rather, we shall give a new formula which can be sampled by 2u recursive relations on an orbit. The proof of our new formula starts from the above formula. We define the shadowing and unstable contribution of the linear response as:

Proposition 3

(fast adjoint formula for linear response) The shadowing contribution and the unstable contribution of the linear response can be expressed by integrations of quantities from the unperturbed dynamics with respect to \(\rho \),

Here \(\sigma \) is the density of the conditional measure of \(\rho \), and \(\frac{\delta {{\tilde{L}}}^u \sigma }{\sigma }\) is given by Theorem 2.

Proof

Recall that we have

so we can calculate that

Notice that \(\rho \) is the invariant measure, so we have

Take it back to the formula, then we have

For the unstable contribution, take a Markov partition of the attractor so that each rectangle is foliated by unstable leaves. Let \(\sigma '\) denote the factor measure (also called the quotient measure), and \(\sigma \) denote the conditional density of \(\rho \) with respect to this foliation. Since the boundary of the Markov partition has zero physical measure, we can take the following integral on the rectangles.

Integrate-by-parts on unstable manifolds, note that the flux terms on the boundary of the partition cancel (this technique was used for example in [56]), so

Take it back to the formula and we can finish the proof. \(\square \)

4.3 A Formal But Intuitive Derivation of Our Blended Formula

The proof of the fast adjoint response formula in the previous subsection feels like taking a detour. It is based on linear response formulas proved by other people, whose proofs typically require moving to another space such as the sequence space, or anisotropic Banach space. This section formally derives the unstable contribution in Proposition 3 by transfer operators. This approach is more direct, since we proved Theorem 2 by transfer operators. Moreover, we only need to work within \({\mathcal {M}}\) in this formal argument.

In this subsection we assume that the linear response exists and is truly linear, whose proof still requires using advanced spaces. More specifically, denote \(\delta \rho (\Phi , X)\) as the linear response corresponding to a perturbation \(\delta {{\tilde{f}}}=X\), then we assume that we already know

Define the ‘stable contribution’ of the linear response as the linear response caused by the perturbation \(X^s\). Since stable vectors decay exponentially via pushforward, the argument in the first half of Sect. 4.1 still applies, so

This formula can be naturally sampled by an orbit.

The rest of this subsection derives the unstable contribution of the total linear response, which is the linear response caused by perturbing \({{\tilde{\rho }}}\) by \(X^u\). Recall that the physical measure is the weak-* limit of pushing forward a Lebesgue measure. Intuitively, since the stable direction contracts the measures, the densities of the measure asymptotically approach the unstable manifolds, and eventually, the physical measure is carried by the unstable manifolds. The unstable perturbation by \(X^u=\delta (\xi {{\tilde{f}}})\) will re-distribute the densities within each unstable manifold, but will not move densities across different unstable manifolds. Hence, we can think of the dynamical system as time-inhomogeneous, hopping from one unstable manifold to another: this model is purely expanding. In this model, the phase space is a family of unstable manifolds, which is preserved under the unstable perturbation. Hence, roughly speaking, the transfer operator version of linear response formula still applies.

More specifically, first, we fix a Markov partition for f, denote the local foliation of local unstable manifolds by \(F^u\), and let \(\sigma '\) be the quotient measure (not density) of the physical measure \(\rho \) in the stable direction. We also fix \(F^u\), which is not changed by the unstable perturbation. Since the unstable perturbation does not change the attractor, we can further fix \(\sigma '\) for the rest of this subsection, even though \(\sigma '\) is no longer the quotient measure of \(\xi {{\tilde{f}}}f\) under \(F^u\) when \(\gamma \ne 0\). We shall designate \(\delta {{\tilde{\rho }}}\) to the change of \(\sigma \), the density of the conditional measure, which is no longer a conditional probability when \(\gamma \ne 0\).

After we fix the quotient measure, i.e. the factor measure \(\sigma '\) on leaves, we can define a conditional density s for each signed Radon measure \(\mu \) which is absolutely continuous with respect to the product measure \(Leb\times \sigma '\) on each rectangle, where Leb denotes the Lebesgue measure on each leaf. Conversely, if we have a positive function s which is integrable with respect to \(Leb\times \sigma '\), we can get a Radon measure \(\mu \). We denote x as a position on the manifold, and y as the unstable leaf which contains x. So actually \(y=y(x)\) depends on x, and we also set \(d\sigma '(x)=d\sigma '(y(x))\) in our notation.

Let \(L^u s\) be the conditional density of the pushforward measure \(f_*\mu \) and \(J^u_f(x)\) be the Jacobian of f restricted to the unstable manifolds. Then we have

holds in the sense of integrals, i.e. for any A with positive \(Leb\times \sigma '\) measure, the equation holds after integrating both sides over A. To see this, let A be any set with positive \(Leb\times \sigma '\) measure; without loss of generality, we can take A small, so that A and fA are each contained in a rectangle. Then by changing variables,

Hence Eq. (11) holds.
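The change of variables behind this relation can be checked numerically on a single leaf. The sketch below ignores the quotient measure and assumes a hypothetical expanding map `f` from the leaf \([0,1]\) onto the leaf \([0,2]\), with a hypothetical density `s`; it evaluates the pushed-forward density as \(s(x)/J^u_f(x)\) at the point \(fx\) and verifies that the mass of a set A equals the mass of fA.

```python
import numpy as np

def f(x):
    """Hypothetical expanding map from the leaf [0, 1] onto the leaf [0, 2]."""
    return 2.0 * x + 0.25 * np.sin(np.pi * x)

def jac(x):
    """Jacobian of f along the leaf; stays above 1 on [0, 1]."""
    return 2.0 + 0.25 * np.pi * np.cos(np.pi * x)

def s(x):
    """Hypothetical positive density on the source leaf."""
    return 1.0 + 0.5 * np.cos(2.0 * np.pi * x)

def trapezoid(values, grid):
    """Trapezoid rule on a possibly nonuniform grid."""
    return float(np.sum(0.5 * (values[1:] + values[:-1]) * np.diff(grid)))

x = np.linspace(0.0, 1.0, 20001)
y = f(x)                       # image points on the target leaf
Ls_on_y = s(x) / jac(x)        # pushed-forward density evaluated at y = f(x)

# Total mass before and after the pushforward.
mass_before = trapezoid(s(x), x)
mass_after = trapezoid(Ls_on_y, y)

# Mass of a subset A = [0.2, 0.5] versus the mass of its image fA.
sl = slice(4000, 10001)
mass_A = trapezoid(s(x[sl]), x[sl])
mass_fA = trapezoid(Ls_on_y[sl], y[sl])
print(mass_before, mass_after, mass_A, mass_fA)
```

The integral over the target leaf is taken with respect to y on the nonuniform grid `y`, so the agreement is a genuine check of the density formula rather than a restatement of it.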

On the set where s(x) is positive, \(\frac{L^u s(fx)}{s(x)}dxd\sigma '(fx) = \frac{1}{J^u_f(x)}dxd\sigma '(x) \) holds in the sense of integrals. As a result, if \(s_1\) is everywhere positive (even if \(s_2\) is not always non-zero), then we have

holds for \(Leb\times \sigma '\ a.e.\ x\in K\). In particular, it holds almost everywhere for the physical measure \(\rho \). We mention that all the point-wise equations later in this section should be understood in the sense of \(Leb\times \sigma '\ a.e.\ x\in K\).

Let \(\sigma _0\) be a conditional density of a measure independent of \(\gamma \). Denote \(\sigma _{n+1}:={{\tilde{L}}}^uL^u\sigma _n\), where \(L^u\) is the renormalized transfer operator of f defined above. Let \({{\tilde{L}}}^u\) be the transfer operator of \(\xi {{\tilde{f}}}\). All equations below are still evaluated at \(\gamma =0\). First, by the chain rule,

where the last equality uses that \({{\tilde{L}}}^uL^u=L^u\) at \(\gamma =0\). Divide both sides by \(\sigma _{n+1}=L^u\sigma _n>0\), to get

Substitute \(s_2=\delta \sigma _n\) and \(s_1=\sigma _n\) into Eq. (12), we get

By substitution, we have

Apply this equation repeatedly, notice that \(\delta \sigma _{0}=0\), we get

By the same argument in the proof of Theorem 2, we can see that the right side of the formula is independent of the choice of the Markov partition and is continuous across the boundaries of the sets in the partition.

Now, let \(\rho \) be the physical measure of f. Let \(\rho _n=({{\tilde{L}}}L)^n \rho \) where \({{\tilde{L}}}\) is the transfer operator of \(\xi {{\tilde{f}}}\). We set \(\sigma _n\) and \(\sigma \) as the density of the conditional measures of \(\rho _n\) and \(\rho \) under \(F^u\) and \(\sigma '\). By definition, \(\sigma _{n+1}={{\tilde{L}}}^uL^u\sigma _n\), so we have

Notice that at \(\gamma =0\), we have \(\sigma _k = \sigma \), and \(\rho \) is f-invariant. Let \(m=n-k\),

Finally, let \(n\rightarrow \infty \), we formally get

This is equivalent to the expression in Proposition 3, except for that here we are using the stable/unstable decomposition instead of the shadowing/unstable decomposition of the linear response.

5 Conclusions

The phase space is typically high dimensional, so efficient computations demand sampling by an orbit rather than approximating high-dimensional measures by finite-elements. In this paper we solve this problem for the more difficult part, the unstable perturbation operator of a physical measure. It was well known that the physical measure can be sampled by an orbit; now, with our results, we know that the unstable derivative of the transfer operator and hence the linear response can also be sampled on an orbit by 2u recursive relations. This cost is perhaps optimal, since we need at least u many modes to capture all the unstable perturbative behaviors of a chaotic system.

Data Availability

This manuscript has no associated data. The paper detailing the algorithm for Theorem 2 and Proposition 3 is [46], and the code is at https://github.com/niangxiu/far.

Notes

This proof was suggested by a referee during the review process.

References

Abramov, R.V., Majda, A.J.: New approximations and tests of linear fluctuation-response for chaotic nonlinear forced-dissipative dynamical systems. J. Nonlinear Sci. 18, 303–341 (2008)

Antown, F., Froyland, G., Galatolo, S.: Optimal linear response for Markov Hilbert–Schmidt integral operators and stochastic dynamical systems. (2021) arXiv:2101.09411

Bahsoun, W., Galatolo, S., Nisoli, I., Niu, X.: A rigorous computational approach to linear response. Nonlinearity 31, 1073–1109 (2018)

Baladi, V.: Decay of correlations. 0:1–29 (1999)

Baladi, V.: Linear response, or else. Proc. Int. Cong. Math. Seoul 2014, 525–545 (2014)

Baladi, V.: The quest for the ultimate anisotropic Banach space. J. Stat. Phys. 166, 525–557 (2017)

Barreira, L., Pesin, Y.B.: Lyapunov Exponents and Smooth Ergodic Theory, vol. 23. American Mathematical Society, Providence, RI (2002)

Benettin, G., Galgani, L., Giorgilli, A., Strelcyn, J.-M.: Lyapunov characteristic exponents for smooth dynamical systems and for Hamiltonian systems; a method for computing all of them. Part 2: Numerical application. Meccanica 15, 21–30 (1980)

Blonigan, P.J.: Adjoint sensitivity analysis of chaotic dynamical systems with non-intrusive least squares shadowing. J. Comput. Phys. 348, 803–826 (2017)

Bonetto, F., Gallavotti, G., Giuliani, A., Zamponi, F.: Chaotic hypothesis, fluctuation theorem and singularities. J. Stat. Phys. 123(4), 39–54 (2006)

Bowen, R.: Markov partitions for axiom A diffeomorphisms. Am. J. Math. 92, 725–747 (1970)

Bowen, R., Ruelle, D.: The ergodic theory of axiom A flows. Invent. Math. 29, 181–202 (1975)

Chorin, A., Hald, O. H.: Stochastic Tools in Mathematics and Science. (2009)

Collet, P., Eckmann, J.-P.: Liapunov multipliers and decay of correlations in dynamical systems. J. Stat. Phys. 115, 217–254 (2004)

Crimmins, H., Froyland, G.: Fourier approximation of the statistical properties of Anosov maps on tori. Nonlinearity 33, 6244–6296 (2020)

de la Llave, R., Marco, J.M., Moriyon, R.: Canonical perturbation theory of Anosov systems and regularity results for the Livšic cohomology equation. Ann. Math. 123(5), 537 (1986)

Ding, J., Zhou, A.: The projection method for computing multidimensional absolutely continuous invariant measures. J. Stat. Phys. 77, 899–908 (1994)

Dolgopyat, D.: On decay of correlations in Anosov flows. Ann. Math. 147, 357–390 (1998)

Dolgopyat, D.: On differentiability of SRB states for partially hyperbolic systems. Invent. Math. 155, 389–449 (2004)

Eyink, G.L., Haine, T.W.N., Lea, D.J.: Ruelle’s linear response formula, ensemble adjoint schemes and Lévy flights. Nonlinearity 17, 1867–1889 (2004)

Froyland, G.: On Ulam approximation of the isolated spectrum and eigenfunctions of hyperbolic maps. Discret. Contin. Dyn. Syst. 17, 671–689 (2007)

Froyland, G., Junge, O., Koltai, P.: Estimating long-term behavior of flows without trajectory integration: the infinitesimal generator approach. SIAM J. Numer. Anal. 51, 223–247 (2013)

Galatolo, S., Bahsoun, W.: Linear response due to singularities. 1 (2023)

Galatolo, S., Nisoli, I.: An elementary approach to rigorous approximation of invariant measures. SIAM J. Appl. Dyn. Syst. 13, 958–985 (2014)

Galatolo, S., Nisoli, I.: Rigorous computation of invariant measures and fractal dimension for maps with contracting fibers: 2D Lorenz-like maps. Ergodic Theory Dyn. Syst. 36, 1865–1891 (2016)

Gallavotti, G.: Chaotic hypothesis: Onsager reciprocity and fluctuation-dissipation theorem. J. Stat. Phys. 84, 899–925 (1996)

Ginelli, F., Chaté, H., Livi, R., Politi, A.: Covariant Lyapunov vectors. J. Phys. A 46, 254005 (2013)

Ginelli, F., Poggi, P., Turchi, A., Chaté, H., Livi, R., Politi, A.: Characterizing dynamics with covariant Lyapunov vectors. Phys. Rev. Lett. 99, 1–4 (2007)

Gouëzel, S.: Sharp polynomial estimates for the decay of correlations. Isr. J. Math. 139(12), 29–65 (2004)

Gouëzel, S., Liverani, C.: Banach spaces adapted to Anosov systems. Ergodic Theory Dyn. Syst. 26, 189–217 (2006)

Gouëzel, S., Liverani, C.: Compact locally maximal hyperbolic sets for smooth maps: fine statistical properties. J. Differ. Geom. 79, 433–477 (2008)

Gritsun, A., Lucarini, V.: Fluctuations, response, and resonances in a simple atmospheric model. Physica D 349, 62–76 (2017)

Gutiérrez, M.S., Lucarini, V.: Response and sensitivity using Markov chains. J. Stat. Phys. 179, 1572–1593 (2020)

Huhn, F., Magri, L.: Gradient-free optimization of chaotic acoustics with reservoir computing. Phys. Rev. Fluids 7, 1 (2022)

Jiang, M., de la Llave, R.: Linear response function for coupled hyperbolic attractors. Commun. Math. Phys. 261(1), 379–404 (2006)

Keane, M., Murray, R., Young, L.S.: Computing invariant measures for expanding circle maps. Nonlinearity 11, 27–46 (1998)

Korepanov, A.: Linear response for intermittent maps with summable and nonsummable decay of correlations. Nonlinearity 29(5), 1735–1754 (2016)

Lamb, H.: Hydrodynamics. University Press (1924)

Lea, D.J., Allen, M.R., Haine, T.W.N.: Sensitivity analysis of the climate of a chaotic system. Tellus A 52, 523–532 (2000)

Liverani, C.: Decay of correlations. Ann. Math. 142, 239–301 (1995)

Liverani, C.: Rigorous numerical investigation of the statistical properties of piecewise expanding maps. A feasibility study. Nonlinearity 14, 463–490 (2001)

Lucarini, V., Ragone, F., Lunkeit, F.: Predicting climate change using response theory: global averages and spatial patterns. J. Stat. Phys. 166, 1036–1064 (2017)

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

Ni, A.: Fast differentiation of chaos on an orbit, pp. 1–28 (2020) arXiv:2009.00595

Ni, A.: Approximating linear response by nonintrusive shadowing algorithms. SIAM J. Numer. Anal. 59, 2843–2865 (2021)

Ni, A.: Fast adjoint algorithm for linear responses of hyperbolic chaos. 11 (2021) arXiv:2111.07692

Ni, A.: Adjoint shadowing for backpropagation in hyperbolic chaos. 7 (2022) arXiv:2207.06648

Ni, A., Talnikar, C.: Adjoint sensitivity analysis on chaotic dynamical systems by non-intrusive least squares adjoint shadowing (NILSAS). J. Comput. Phys. 395, 690–709 (2019)

Ni, A., Wang, Q.: Sensitivity analysis on chaotic dynamical systems by non-intrusive least squares shadowing (NILSS). J. Comput. Phys. 347, 56–77 (2017)

Pacifico, M. J., Yang, F., Yang, J.: Equilibrium states for the classical Lorenz attractor and sectional-hyperbolic attractors in higher dimensions. 9 (2022)

Pascanu, R., Mikolov, T., Bengio, Y.: On the difficulty of training recurrent neural networks. International conference on machine learning, pp. 1310–1318 (2013)

Pollicott, M., Jenkinson, O.: Computing invariant densities and metric entropy. Commun. Math. Phys. 211, 687–703 (2000)

Pollicott, M., Vytnova, P.: Linear response and periodic points. Nonlinearity 29(8), 3047–3066 (2016)

Ruelle, D.: A measure associated with Axiom-A attractors. Am. J. Math. 98, 619 (1976)

Ruelle, D.: Differentiation of SRB states. Commun. Math. Phys. 187, 227–241 (1997)

Ruelle, D.: Differentiation of SRB states: correction and complements. Commun. Math. Phys. 234, 185–190 (2003)

Wen, L.: Differentiable Dynamical Systems, vol. 173, p. 7. American Mathematical Society, Providence (2016)

Wen, X., Wen, L.: No-shadowing for singular hyperbolic sets with a singularity. Discret. Contin. Dyn. Syst. A 40(10), 6043–6059 (2020)

Wormell, C.: Spectral Galerkin methods for transfer operators in uniformly expanding dynamics. Numer. Math. 142, 421–463 (2019)

Wormell, C.L., Gottwald, G.A.: Linear response for macroscopic observables in high-dimensional systems. Chaos 29, 112137 (2019)

Zhang, H., Harlim, J., Li, X.: Estimating linear response statistics using orthogonal polynomials: an RKHS formulation. Found. Data Sci. 2, 443–485 (2020)

Acknowledgements

Both authors thank Yi Shi for helpful discussions. Angxiu Ni is very grateful to Stefano Galatolo, Wael Bahsoun, Gary Froyland, and Caroline Wormell for very helpful discussions. Angxiu Ni is partially supported by the China Postdoctoral Science Foundation 2021TQ0016 and the International Postdoctoral Exchange Fellowship Program YJ20210018. This work was partially done during Angxiu Ni’s postdoctoral period at Peking University and during his visit to Mark Pollicott at the University of Warwick.

Additional information

Communicated by Marco Lenci.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: A Very Rough Cost Estimation of Finite-Element Method for Approximating High-Dimensional Measures

When the measure is singular, \(\delta {{\tilde{L}}}h\) has infinite sup norm. Although this is a well-defined mathematical object in suitable Banach spaces, computers cannot process an infinite sup norm. Currently, the main numerical practice for computing derivative operators is to first approximate the measure by finite-elements [2, 15, 17, 21, 22, 24, 25, 36, 41, 52, 53, 59, 60, 61], then compute the derivative operator. Here ‘finite-elements’ is meant in a broad sense, which includes finite-element, finite-difference, kernel, Markov partition, and Markov approximation methods. This approximation approach allows us to ignore the singularities and subtle structures of measures; however, the cost still depends on the dimension, as estimated below.

Computing the entire derivative operator has not been numerically realized for discrete-time systems with dimension larger than 1: for example, Bahsoun, Galatolo, Nisoli, and Niu did computations on 1-dimensional expanding maps [3]. For continuous-time systems, Gutiérrez and Lucarini numerically computed the derivative operator for a 3-dimensional system [33]. There are two difficulties. The easier one is the lack of convenient formulas, which we solved via Theorem 1. The more essential difficulty is that the finite-element method is cursed by the dimension of the dynamical system.

Galatolo and Nisoli gave a rigorous a posteriori error bound for the finite-element method, where some quantities in the bound are designated to be computed by numerical simulations [25]. That bound, though precise, does not give the cost-error relation or its dependence on the dimension.

This section gives an a priori cost-error estimate for a simple singular measure approximated by zeroth-order isotropic finite elements. A more general and precise estimate is more difficult, but should not change the qualitative conclusion: the cost is ‘cursed by dimensionality’, that is, it increases exponentially with the dimension of the attractor. For physical or engineering systems, this cost is too high.

Consider the example where the singular measure is uniformly distributed on the a-dimensional attractor, \(\{0\}^{M-a}\times {\mathbb {T}}^a\), where \({\mathbb {T}}:=[-0.5,0.5]\). We use zeroth-order finite-elements on M-dimensional cubes of side length b. The density h is a distribution, and we still formally write the SRB measure as the integral of h with respect to the Lebesgue measure. Let \(h' \) be the finite-element approximation of h, so

For the smooth objective function \(\Phi \), we assume that for any unit tangent vector Y, the second-order derivative satisfies \(Y^2(\Phi )(x):=Y(Y(\Phi )) (x) \le 1\), where we use the geometer’s notation \(Y(\Phi )={{\,\textrm{grad}\,}}\Phi \cdot Y\).

The approximation error E caused by using \(h' \) instead of h is

where \(dx^{1\sim M-a} = dx^1\ldots dx^{M-a}\), and

where \(\varphi (y):= \Phi (y, x^{M-a+1},\ldots , x^M)\), \(y\in {\mathbb {T}}^{M-a}\). Then by Taylor expansion, there is \(\xi (y)\) such that

Here \(Y:=y/|y|\). The first term is zero due to symmetry, hence by assumptions

Hence,

For more general cases, there should be another error due to approximation within the attractor, but here we neglect it.
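As a rough numerical illustration of this estimate, the sketch below evaluates the error for one hypothetical test function, \(\Phi (x)=\frac{1}{2}\sum _{i\le M-a}(x^i)^2\), which satisfies the bound on second derivatives and vanishes on the attractor. It assumes (this is not stated above) that the cells meeting the attractor are centered on it, so that \(h'\) is uniform on \([-b/2,b/2]^{M-a}\times {\mathbb {T}}^a\); under these assumptions a direct integration gives \(E=(M-a)b^2/24\). The function names and the sample size are ours.

```python
import random

def exact_error(M, a, b):
    """Closed form of E for the test function Phi = |y|^2 / 2 (our assumption)."""
    return (M - a) * b**2 / 24.0

def sampled_error(M, a, b, n_samples=50_000, seed=0):
    """Monte Carlo estimate of E = int(Phi h') - int(Phi h)."""
    rng = random.Random(seed)
    d = M - a  # number of directions transverse to the attractor
    total = 0.0
    for _ in range(n_samples):
        # Draw the transverse coordinates y from h', uniform on [-b/2, b/2]^d.
        y = [rng.uniform(-b / 2, b / 2) for _ in range(d)]
        total += 0.5 * sum(c * c for c in y)
    # Phi vanishes on the attractor, so int(Phi h) = 0 and E is the mean above.
    return total / n_samples

if __name__ == "__main__":
    # Halving b should reduce the error by roughly a factor of 4.
    for b in (0.2, 0.1, 0.05):
        print(b, sampled_error(5, 3, b), exact_error(5, 3, b))
```

For this particular test function the error depends on b only through \(b^2\), which is the scaling the cost estimate in this appendix relies on.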

It is nontrivial to achieve optimal mesh adaptation in higher dimensions. For now we assume an optimal mesh, so that we can restrict our computation to the attractor; the cost is then at least proportional to the number of cells in the mesh, so

When the attractor dimension a is higher than 4, this cost is much larger than the cost of our equivariant divergence formula estimated in Eq. (10). On the other hand, if the finite-elements are all globally supported, such as the Fourier basis, or if the optimal implementation is not achieved, the cost can be as high as \(O(b^{-M})\).
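To make the threshold at dimension 4 concrete, the following sketch compares how the two costs scale with a target error E. It assumes, with all constants dropped, that the error of the example above scales like \(b^2\), so that the number of cells \(b^{-a}\) becomes \(E^{-a/2}\), and that the orbit-sampling cost scales like \(E^{-2}\), the usual Monte Carlo rate. The latter is only our stand-in for Eq. (10), which is not restated in this appendix; the function names are ours.

```python
def finite_element_cost(a, err):
    """Cells needed on an a-dimensional attractor: b**(-a), assuming b ~ sqrt(err)."""
    b = err ** 0.5
    return b ** (-a)

def sampling_cost(err):
    """Assumed Monte Carlo-type cost: number of samples ~ err**(-2)."""
    return err ** (-2)

if __name__ == "__main__":
    err = 1e-2
    for a in (2, 4, 6, 10):
        # The two costs coincide at a = 4; the mesh cost dominates for a > 4.
        print(a, finite_element_cost(a, err), sampling_cost(err))
```

Under these assumptions the two exponents agree exactly when \(a/2=2\), which is consistent with the statement that the finite-element cost is larger once a exceeds 4.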

In higher dimensions, it is expensive to approximate the entire attractor by finite-elements. Hence, it is also expensive to compute \(\delta {{\tilde{L}}}h\) via finite-elements.

Appendix B: Equivalence Between the Equivariant Divergence Formula and the Fast Tangent Response Formula

This section shows that the fast (tangent) formula in our previous paper [44] is equivalent to the fast adjoint formula for the linear response in Proposition 3 of this paper. Both formulas can be proved either directly, or by first proving the other formula and then proving the equivalence between the two. However, proving Proposition 3 by the indirect approach is less intuitive; moreover, if we start from Theorem 8, prove Proposition 3, and then prove Theorem 2, we can only prove Theorem 2 \(\rho \)-almost everywhere. Besides giving another proof of the main results in this paper, the equivalence also verifies the fast formula in our previous paper, which runs only forward along an orbit.

The main difference, in terms of utility, between the adjoint and tangent formulas is that the adjoint is more suitable for cases with many parameters, whereas the tangent is suitable for cases with a few parameters. This is because, if we want to compute linear responses with respect to many perturbations, each controlled by a separate parameter \(\gamma \), then we have to evaluate our formula for many different X’s. The main term in the adjoint formula, \({\mathcal {S}}(\text {div}^vf_*)\), does not depend on X, so it need not be recomputed; the case with multiple parameters, solved by the adjoint algorithm, is tested in another paper [46]. In contrast, the main term in the fast tangent formula needs to be recomputed for each X, so the marginal cost of a new parameter is larger. On the other hand, when we have only one parameter, the tangent algorithm is faster, since it involves only the pushforward of vectors, whose computation is faster than the pullback of covectors in terms of clock time, even though the number of flops (floating-point operations) is the same.
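The trade-off described here is the usual one between tangent and adjoint differentiation, and it can be seen in a toy linear-algebra analogy (not the formulas of this paper). Consider a scalar response of the form \(\phi ^T A X\), where the hypothetical matrix A stands in for the linear propagation, \(\phi \) for the objective, and each X for one perturbation. The tangent route applies A to every X, while the adjoint route applies \(A^T\) to \(\phi \) once and reuses the result. All names below are ours.

```python
def matvec(A, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def dot(u, v):
    return sum(u_i * v_i for u_i, v_i in zip(u, v))

def tangent_responses(A, phi, Xs):
    """One pushforward (matvec with A) per perturbation X."""
    return [dot(phi, matvec(A, X)) for X in Xs]

def adjoint_responses(A, phi, Xs):
    """One pullback (matvec with A^T), shared by all perturbations."""
    nu = matvec(transpose(A), phi)  # does not depend on X
    return [dot(nu, X) for X in Xs]  # marginal cost per X: one inner product

if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    phi = [1, -1]
    Xs = [[1, 0], [0, 1], [2, 5]]
    print(tangent_responses(A, phi, Xs))
    print(adjoint_responses(A, phi, Xs))
```

Both routes return the same numbers; they differ only in how the work is distributed, with one matrix product per X in the tangent case and a single shared one in the adjoint case.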

The main idea to prove the equivalence is ‘adjointing’ the fast formula of the unstable contribution. More specifically, we shall expand the unstable divergence, move major computations away from X and \(\varphi _W\), and obtain an expansion formula for an adjoint operator. Then we seek a neat characterization of the expansion formula, and we prove Proposition 3 by further using the adjoint shadowing lemma.

B.1: Fast Formula for the Unstable Contribution

Recall that the unstable contribution is

Here \({{\,\textrm{div}\,}}_\sigma ^u\) is the submanifold divergence on the unstable manifold under the conditional SRB measure. The norm of this integrand is \(O(\sqrt{W})\), much smaller than that of the ensemble formula. Note that the directional derivatives of \(X^u\) are distributions. In [44] we gave a fast formula for the unstable divergence. It involves only u many second-order tangent equations on one sample orbit, and runs forward in time.
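To illustrate what running forward on one sample orbit looks like in the simplest case u = 1, the sketch below pushes a single tangent vector along an orbit and renormalizes it at each step, which is the standard iteration for estimating the largest Lyapunov exponent. It covers only a first-order tangent equation, not the second-order equations of the fast formula, and it is not the algorithm of [44] or [46]. The perturbed cat map, the value of GAMMA, the initial point, and the function names are our hypothetical choices.

```python
import math

GAMMA = 0.01  # hypothetical perturbation size, chosen for illustration

def f(x, y):
    """Perturbed cat map on the 2-torus (an illustrative hyperbolic map)."""
    return ((2 * x + y + GAMMA * math.sin(2 * math.pi * x)) % 1.0,
            (x + y) % 1.0)

def pushforward(x, y, e):
    """Apply the Jacobian of f at (x, y) to the tangent vector e."""
    j11 = 2 + GAMMA * 2 * math.pi * math.cos(2 * math.pi * x)
    return (j11 * e[0] + e[1], e[0] + e[1])

def unstable_exponent(n_steps=5000, x=0.1234, y=0.5678):
    """Average logarithmic growth of one tangent vector along one orbit."""
    e = (1.0, 0.0)
    log_growth = 0.0
    for _ in range(n_steps):
        e = pushforward(x, y, e)        # tangent equation at the current point
        norm = math.hypot(e[0], e[1])
        log_growth += math.log(norm)
        e = (e[0] / norm, e[1] / norm)  # renormalize to avoid overflow
        x, y = f(x, y)                  # advance the orbit by one step
    return log_growth / n_steps

if __name__ == "__main__":
    print(unstable_exponent())
```

The renormalized vector aligns with the unstable direction after a few steps, so only one vector and one orbit are stored; for u larger than 1 the same idea would use u vectors with an orthogonalization step in place of the renormalization.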

Theorem 8

(fast formula for unstable contribution [44]) Let \(\{x_n:=f^nx_0\}_{n\ge 0}\) be an orbit on the attractor, then for almost all \(x_0\) according to the SRB measure \(\rho \), for any \(r_0\in {\mathcal {D}}^u(x_0)\),