Abstract

In light of recent advances in time-step-independent stochastic integrators for Langevin equations, we revisit the considerations for using non-Gaussian distributions for the thermal noise term in discrete-time thermostats. We find that the desirable time-step invariance of the modern methods is rooted in the Gaussian noise, and that deviations from this distribution will distort the Boltzmann statistics arising from the fluctuation-dissipation balance of the integrators. We focus our investigation on the GJ stochastic Verlet methods, since these provide the most accurate thermodynamic measures among existing methods. Within this set of methods, we find that any distribution of applied noise that satisfies the first two moments given by the fluctuation-dissipation theorem will yield correct, time-step-independent results for quantities determined by the first two moments of the system coordinates. However, if non-Gaussian noise is applied, undesired deviations in higher moments of the system coordinates will appear, to the detriment of several important thermodynamic measures that depend on the fourth moments in particular. The deviations induced by non-Gaussian noise become significant when the one-time-step velocity attenuation is appreciable, thereby undermining the benefits of the methods. Thus, we conclude that the application of Gaussian noise is necessary for reliable thermodynamic results when using modern stochastic thermostats with large time steps.



1 Introduction

Over the past several decades, discrete-time Langevin and Brownian simulations have been a centerpiece of computational statistical mechanics, with molecular dynamics applications in, e.g., materials science, soft matter, and biomolecular modeling [1,2,3,4,5]. Inherent to simulations is the fundamental complication of time discretization, which on the one hand is necessary for advancing time, but on the other hand inevitably corrupts the desired continuous-time features of the system. An integral part of a simulation task is therefore to explore and optimize the balance between two conflicting core objectives: simulation efficiency, which favors increasing the time step, and simulation accuracy, which favors decreasing it.

Since discrete-time algorithms for time evolution produce only approximate solutions to a continuous-time problem, and since the errors must vanish in the limit of an infinitesimal time step, most considerations for a useful simulation environment have been made in the limit of small time steps, where the simulated system variables change only slightly in each step. In that limit, the discrete-time system behaves much like the continuous-time system, and algorithm development has therefore mostly been conducted by formulating objectives and measures in continuous time, whereafter time has been discretized and executed with relatively small steps in order to retain accuracy.

For stochastic differential equations, notably Langevin equations, an added complication is that it is not a specific trajectory that is of interest; instead, it is the statistics of trajectories that determine whether the desired continuous-time behavior has been adequately represented. The traditional approach to these problems has therefore been to acquire accurate statistics from accurate trajectories obtained with small time steps, where continuous-time behavior is well approximated. However, with the recent advent of discrete-time stochastic algorithms that can produce correct statistics from inaccurate, large time-step trajectories [6,7,8], details such as the correlation and distribution of the stochastic noise now become essential for retaining statistical accuracy at all meaningful time steps. The significance of these details arises from the discretization of time, as outlined in the following.

We are here concerned with the Langevin equation [9, 10]

$$\begin{aligned} m{\dot{v}}+\alpha {\dot{r}}= & {} f+\beta , \end{aligned}$$(1)

where m is the mass of an object with spatial (configurational) coordinate r and velocity (kinetic) coordinate \(v={\dot{r}}\). The object is subjected to a force \(f=-\nabla E_p(r)\), with \(E_p(r)\) being a potential energy surface for r, and to linear friction represented by the non-negative constant \(\alpha \). The fluctuation-dissipation relationship specifies that the thermal fluctuations \(\beta \) can be represented by a distribution with the following first two moments [11]:

$$\begin{aligned} \langle \beta (t)\rangle= & {} 0 \end{aligned}$$(2a)$$\begin{aligned} \langle \beta (t)\beta (t')\rangle= & {} 2\alpha k_BT\,\delta (t-t'), \end{aligned}$$(2b)

where \(\delta (t)\) is Dirac’s delta function signifying that the fluctuations are temporally uncorrelated, \(k_B\) is Boltzmann’s constant, and T is the thermodynamic temperature of the heat bath, to which the system is coupled through the friction constant \(\alpha \). A notable feature of statistical mechanics is that the fluctuation-dissipation theorem specifies only the two moments of the noise distribution \(\beta (t)\) given in Eq. (2) [10,11,12]. Thus, there is a great deal of freedom in choosing the distribution of the noise in the Langevin equation such that correct thermodynamics is represented. The physical (statistical) results do not depend on this freedom because continuous time demands that a new, uncorrelated noise value \(\beta (t)\) be contributed and accumulated at every instant, resulting in a Gaussian outcome on any non-zero time scale due to the central limit theorem (see, e.g., Ref. [13]). As a result, the sampled (one-dimensional) Maxwell–Boltzmann velocity distribution \(\rho _{k,e}(v)\) and the configurational Boltzmann distribution \(\rho _{c,e}(r)\) always become [10]

$$\begin{aligned} \rho _{k,e}(v)= & {} \sqrt{\frac{m}{2\pi k_BT}}\,\exp \left( -\frac{mv^2}{2k_BT}\right) \end{aligned}$$(3a)$$\begin{aligned} \rho _{c,e}(r)= & {} {{\mathcal {C}}}_c\,\exp \left( -\frac{E_p(r)}{k_BT}\right) , \end{aligned}$$(3b)

with the constant \({{\mathcal {C}}}_c\) determined by the normalization of probability

regardless of which temporally uncorrelated distribution \(\beta (t)\) is at play in Eq. (1), as long as Eq. (2) is satisfied. Similarly, diffusive transport on a flat potential surface is characterized by the Einstein diffusion constant [1]

where d is the dimension of the configurational space.

Given that the central limit theorem ensures a Gaussian noise distribution for any appreciable integration of the instantaneous variable \(\beta (t)\), it is natural to choose \(\beta (t)\) to be the Gaussian that conforms to Eq. (2). However, in discrete-time simulations it is not uncommon to directly employ a uniform distribution that satisfies Eq. (2). The rationale is rooted in the computational efficiency of uniformly distributed pseudo-random generators [14], compared with the additional computational load required for transforming those variables into Gaussian distributed variables before use. A thorough investigation of using non-Gaussian variables in discrete time was presented in the 1988 publication Ref. [15], where it was concluded that, given the inherent inaccuracies of the stochastic discrete-time integrators of that time, the scaling of statistical moments with the applied time step did not change adversely when using non-Gaussian noise distributions, specifically the uniform distribution. Building upon this work, Ref. [16] extended the investigation in 1991 to a broader class of noise distributions, which yield statistical results indistinguishable from those of Gaussian noise for vanishing time steps, where the underlying systematic time-step errors of the then-contemporary methods vanish as well. These studies highlighted the computational benefits of simple, non-Gaussian noise, given that the statistical differences were deemed insignificant for small time steps, which in any case were necessary for the accuracy of the stochastic integrators, even with Gaussian noise. As pointed out in a recent article [17] that compares the quality of contemporary stochastic integrators, this practice has been widespread with traditional algorithms, such as the Brünger–Brooks–Karplus [18] (also analyzed in Ref. [19]) or the Schneider–Stoll [20] method, as long as small time steps are applied (for a more expansive list of traditional and recent Langevin integrators, see Ref. [8]). For example, these two methods are used with non-Gaussian noise in the widely used molecular dynamics simulation suites LAMMPS [21,22,23] and ESPResSo [24].
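The central-limit argument above can be illustrated numerically. The following sketch (Python with NumPy, an assumption of this illustration; all function names are hypothetical) accumulates k uniform sub-step increments with a fixed total variance and measures the kurtosis \(\Gamma _4=\langle x^4\rangle /\langle x^2\rangle ^2\) of the sum. With a single draw per step (k = 1), the kurtosis stays at the uniform value 9/5, whereas accumulating many increments drives it toward the Gaussian value 3 as \(3+(9/5-3)/k\), mimicking the continuous-time limit in which the noise distribution does not matter.

```python
import numpy as np


def sample_kurtosis(x):
    """Kurtosis <x^4>/<x^2>^2 about the sample mean (3 for a Gaussian)."""
    d = x - x.mean()
    return np.mean(d**4) / np.mean(d**2) ** 2


def expected_kurtosis(k, single_step_kurtosis=9 / 5):
    """Kurtosis of a sum of k i.i.d. zero-mean variables (excess scales as 1/k)."""
    return 3.0 + (single_step_kurtosis - 3.0) / k


def accumulated_uniform_noise(k, size, rng):
    """Sum k uniform sub-step increments whose total variance is fixed at 1.

    Each increment is uniform on [-a, a) with variance a**2/3 = 1/k, so refining
    the noise in time (larger k) leaves the second moment unchanged.
    """
    a = np.sqrt(3.0 / k)
    total = np.zeros(size)
    for _ in range(k):
        total += rng.uniform(-a, a, size)
    return total


rng = np.random.default_rng(2024)
measured = {}
for k in (1, 2, 10, 50):
    measured[k] = sample_kurtosis(accumulated_uniform_noise(k, 200_000, rng))
    print(f"k = {k:2d}: Gamma_4 = {measured[k]:.3f} (expected {expected_kurtosis(k):.3f})")
```

A discrete-time integrator that draws one uniform number per step corresponds to k = 1, so its noise retains \(\Gamma _4=9/5\) however the second moment is tuned.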

It is the aim of this work to revisit the discussion of applying non-Gaussian noise in light of the most modern stochastic integrators, which have been designed to produce accurate, time-step-independent statistics and transport through the use of Gaussian noise. We demonstrate that even if the low-order moments of the configurational and kinetic distributions are seemingly unaffected by the details of the noise distribution, there may still be significant differences in the sampled phase space that affect the statistical sampling of the system. We base the analysis exclusively on the recently formulated GJ set of methods [8], since, with Gaussian noise, these methods have the most optimized statistical properties that are invariant to the time step for linear systems. This also allows us to investigate the large time-step implications of using non-Gaussian noise without being subject to other inherent time-step errors of the integrators. The presentation is organized as follows. Section 2 reviews the basic features and structure of the GJ methods formulated in both the standard Verlet and the splitting forms, Sect. 3 analytically derives the first four statistical moments of the configurational and kinetic coordinates for linear systems, Sect. 4 presents simulations that complement the analysis of Sect. 3, and Sect. 5 summarizes the discussion with specific conclusions.

2 Review of the GJ Methods

We adopt the complete set of GJ methods [8] for solving Eq. (1) in the form

where a superscript n on the variables r, v, u, and f pertains to the discrete time \(t_n=t_0+n\,\Delta {t}\) at which the numerical approximation is given, with \(\Delta {t}\) being the discrete time step. Thus, \(f^n=f(t_n,r^n)\). The two represented velocities are, respectively, the half-step velocity \(u^{n+\frac{1}{2}}\) at time \(t_{n+\frac{1}{2}}\), and the on-site velocity \(v^{n}\) at \(t_n\), given by

The statistical definitions of what it means to be half-step and on-site are given in Ref. [8]. The functional parameter \(c_2=c_2(\frac{\alpha \Delta {t}}{m})\) is the one-time-step velocity attenuation, which is a function of \(\alpha \Delta {t}/m\). The two other functional parameters, \(c_1\) and \(c_3\), are given by

It is the choice of the function \(c_2\) that distinguishes the GJ methods from each other (see Ref. [8] for several choices of \(c_2\)). The basic requirement for \(c_2\) is that \(c_2\rightarrow 1-\frac{\alpha \Delta {t}}{m}\) for \(\frac{\alpha \Delta {t}}{m}\rightarrow 0\), ensuring that \(c_1\rightarrow 1\) and \(c_3\rightarrow 1\) as well for \(\frac{\alpha \Delta {t}}{m}\rightarrow 0\), in accordance with the frictionless Verlet method [25,26,27,28,29]. Finally, the discrete-time stochastic noise variable is defined by

$$\begin{aligned} \beta ^{n+1}= & {} \int _{t_n}^{t_{n+1}}\beta (t')\,dt' , \end{aligned}$$(9)

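such that the discrete-time fluctuation-dissipation theorem follows from Eqs. (9) and (2) to become

$$\begin{aligned} \langle \beta ^n\rangle= & {} 0 \end{aligned}$$(10a)$$\begin{aligned} \langle \beta ^n\beta ^\ell \rangle= & {} 2\alpha k_BT\,\Delta {t}\,\delta _{n,\ell } , \end{aligned}$$(10b)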

where \(\delta _{n,\ell }\) is Kronecker’s delta function.
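To make the role of \(c_2\) concrete, the sketch below evaluates \(c_1\) and \(c_3\) for a few illustrative choices of \(c_2\). It assumes that the functional parameters take the form \(c_1=(1+c_2)/2\) and \(c_3=(1-c_2)/(\alpha \Delta {t}/m)\); this assumption reproduces the small-step limits stated above as well as the \(c_2=0\) values \(c_1=\frac{1}{2}\) and \(c_3=m/\alpha \Delta {t}\) quoted in Sect. 3.2. The dictionary of \(c_2\) choices (a GJF-type rational form, an exponential form, and \(c_2=0\)) is hypothetical and only illustrative; Ref. [8] should be consulted for the actual set of methods. The helpers also return the harmonic stability bound \(\Omega _0\Delta {t}<2\sqrt{c_1/c_3}\) and the \(X=0\) time step of Eq. (44).

```python
import math


def gj_coefficients(c2, alpha, dt, m=1.0):
    """Return (c1, c3) for a one-time-step velocity attenuation c2.

    Assumed form: c1 = (1 + c2)/2 and c3 = (1 - c2)/(alpha*dt/m).
    """
    a = alpha * dt / m
    return 0.5 * (1.0 + c2), (1.0 - c2) / a


# Illustrative c2(alpha*dt/m) choices (hypothetical labels, not the list of Ref. [8]).
C2_EXAMPLES = {
    "GJF-type": lambda a: (1.0 - 0.5 * a) / (1.0 + 0.5 * a),
    "exponential": lambda a: math.exp(-a),
    "GJ-0": lambda a: 0.0,  # inertia-free special case; violates the small-step limit
}


def stability_limit(c1, c3):
    """Upper bound on Omega_0*dt for the harmonic oscillator: 2*sqrt(c1/c3)."""
    return 2.0 * math.sqrt(c1 / c3)


def x_zero_step(c1, c3):
    """Omega_0*dt at which X = 0, Eq. (44): sqrt(2)*sqrt(c1/c3)."""
    return math.sqrt(2.0) * math.sqrt(c1 / c3)


alpha, m = 1.0, 1.0
for name, c2_of in C2_EXAMPLES.items():
    for dt in (1e-4, 0.5, 1.5):
        c2 = c2_of(alpha * dt / m)
        c1, c3 = gj_coefficients(c2, alpha, dt, m)
        print(f"{name:12s} dt={dt:<7g} c2={c2:.4f} c1={c1:.4f} c3={c3:.4f} "
              f"stable for Omega0*dt < {stability_limit(c1, c3):.4f}")
```

Under this assumed form, the GJF-type choice gives \(c_1=c_3\) for every time step, so its stability bound is \(\Omega _0\Delta {t}<2\) and \(X=0\) occurs at \(\Omega _0\Delta {t}=\sqrt{2}\).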

The GJ methods represented in Eq. (6) can equally well be written in the velocity-explicit and leap-frog Verlet forms [8], or in the so-called splitting form that partitions the evolution into inertial, interactive, and thermodynamic operations. One way to accomplish this is given in Ref. [30], where the pivotal pair of thermodynamic operations is condensed into a single operation, which therefore does not explicitly include the half-step velocity. Here we write a GJ splitting form as follows:

where \(\sigma ^n\) is a stochastic variable with \(\langle \sigma ^n\rangle =0\) and \(\langle \sigma ^n\sigma ^\ell \rangle =\delta _{n,\ell }\), and where the half-step velocity \(u^{n+\frac{1}{2}}\) of Eq. (7a) appears explicitly in Eq. (11c).

Eliminating the velocities from either Eq. (6) or Eq. (11) yields the purely configurational GJ stochastic Verlet form

which is the only stochastic Verlet form that 1) for a harmonic potential \(E_p(r)\) with a Hooke’s force \(f=-\kappa r\) (\(\kappa >0\)) and Gaussian fluctuations \(\beta ^n\), will yield the correct Boltzmann distribution \(\rho _{c,e}(r^n)\) given by Eq. (3b), such that

for any time step within the stability range (\(\Omega _0\Delta {t}<2\sqrt{c_1/c_3}\)); 2) for a linear potential where \(f=\textrm{const}\), yields the correct configurational drift velocity

and 3) for \(f=0\), yields the correct Einstein diffusion given by Eq. (4), such that

Similarly, it was found [8] that for a single Gaussian noise value \(\beta ^n\) per time step, a Hooke’s force \(f=-\kappa r\) (\(\kappa \ge 0\)) results in the unique half-step velocity \(u^{n+\frac{1}{2}}\) in Eq. (7a) with correct velocity distribution function \(\rho _{k,e}(u^{n+\frac{1}{2}})\) from Eq. (3a) such that

In contrast, it was also found in Ref. [8] that it is not possible to define an on-site velocity \(v^n\) with the same time-step-independent property. Given the statistical invariance of these equations with respect to the time step when using Gaussian noise, we will now investigate the statistical properties of \(r^n\) and \(u^{n+\frac{1}{2}}\) when non-Gaussian distributions are used for \(\beta ^n\).
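Properties 2) and 3) can be checked with a short simulation. The sketch below propagates many independent particles under a constant force, assuming that the configurational form of Eq. (12) reads \(r^{n+1}=2c_1r^n-c_2r^{n-1}+\frac{c_3\Delta {t}^2}{m}f^n+\frac{c_3\Delta {t}}{2m}(\beta ^n+\beta ^{n+1})\) with \(c_1=(1+c_2)/2\) and \(c_3=(1-c_2)m/(\alpha \Delta {t})\); this assumed form reduces to Eq. (47) for \(c_2=0\). It estimates the drift velocity, expected to be \(f/\alpha \), and the one-dimensional diffusion constant, expected to be \(k_BT/\alpha \), from the mean and variance of the displacement. Uniform noise with the variance of Eq. (10b) is used deliberately, anticipating the result of Sect. 3.1 that these first- and second-moment quantities do not depend on the noise distribution. The function name and parameter values are hypothetical.

```python
import numpy as np


def drift_and_diffusion(c2, alpha, dt, f, kT, m=1.0, walkers=20_000,
                        steps=2_000, seed=7):
    """Estimate (drift velocity, 1D diffusion constant) under a constant force f.

    Assumed recursion with c1 = (1 + c2)/2, c3 = (1 - c2) m/(alpha dt):
        r[n+1] = 2 c1 r[n] - c2 r[n-1] + c3 dt^2 f/m + c3 dt/(2m) (beta[n] + beta[n+1])
    The noise is uniform with the variance 2 alpha kT dt of Eq. (10b).
    """
    rng = np.random.default_rng(seed)
    c1 = 0.5 * (1.0 + c2)
    c3 = (1.0 - c2) * m / (alpha * dt)
    half_width = np.sqrt(3.0 * 2.0 * alpha * kT * dt)  # uniform on [-a, a): var = a^2/3
    r_prev = np.zeros(walkers)  # start at rest at r = 0 (r^{-1} = r^0 = 0)
    r = np.zeros(walkers)
    beta = rng.uniform(-half_width, half_width, walkers)
    for _ in range(steps):
        beta_next = rng.uniform(-half_width, half_width, walkers)
        r_next = (2.0 * c1 * r - c2 * r_prev + c3 * dt**2 * f / m
                  + c3 * dt / (2.0 * m) * (beta + beta_next))
        r_prev, r, beta = r, r_next, beta_next
    t = steps * dt  # r now holds the displacement r^N - r^0
    return r.mean() / t, r.var() / (2.0 * t)


alpha, dt, m = 1.0, 1.0, 1.0
c2 = (1.0 - 0.5 * alpha * dt / m) / (1.0 + 0.5 * alpha * dt / m)  # GJF-type example
drift, diffusion = drift_and_diffusion(c2, alpha, dt, f=0.5, kT=1.0, m=m)
print(f"drift = {drift:.4f} (f/alpha = 0.5), D = {diffusion:.4f} (kT/alpha = 1.0)")
```

The time step here is large (\(\alpha \Delta {t}/m=1\)), yet both estimates should land within statistical error of their continuous-time values.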

3 Moment Analysis for Linear Systems

We will here investigate the relevant moments of the configurational coordinate \(r^n\) and half-step velocity \(u^{n+\frac{1}{2}}\) as a function of the applied noise distribution \(\rho (\beta )\) of \(\beta ^n\), which satisfies Eq. (10). For this analysis we will mostly assume a linear system with a harmonic potential

such that the linearized configurational stochastic GJ methods in Eq. (12) can be written

with

The natural frequency \(\Omega _0\) of the oscillator is given by \(\Omega _0^2=\kappa /m\).

3.1 First and Second Moments

For the first moments of the variables, it is obvious that Eq. (10a) ensures both \(\langle r^n\rangle =0\) (from Eq. (19)) and subsequently \(\langle u^{n+\frac{1}{2}}\rangle =0\) (from Eq. (7a)) regardless of which distribution is chosen for \(\beta ^n\). It similarly follows that Eq. (10a) also ensures that the drift velocity in Eq. (14) for \(f=\textrm{const}\) remains constant and correct regardless of both time step and distribution \(\beta ^n\).

For the second moments, \(\langle r^nr^n\rangle \) and \(\langle u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}\rangle \), we first find \(\langle r^nr^n\rangle \) as the solution to the system of equations given by the statistical averages of Eq. (19) multiplied by, respectively \(r^{n-1}\), \(r^n\), and \(r^{n+1}\). This linear system of equations can be conveniently written

We immediately observe that this equation is unaffected by the details of the distribution of \(\beta ^n\) for as long as Eq. (10) is satisfied. Thus, also the second moment, \(\langle r^nr^n\rangle \), is unaffected by the chosen distribution of \(\beta ^n\), regardless of the time step \(\Delta {t}\). The corresponding second moment of the half-step velocity in Eq. (7a) is then given by

where both correlations on the right-hand side are solutions found from Eq. (21), which is unchanged as long as Eq. (10b) is satisfied. Thus, the second moment of the half-step velocity is also unaffected by the chosen distribution of \(\beta ^n\), regardless of the time step \(\Delta {t}\). The averages of potential (Eq. (17)) and kinetic energies,

are therefore unaffected for any applied stochastic variable \(\beta ^n\) satisfying Eq. (10).

Since the second moments of the coordinates \(r^n\) and \(u^{n+\frac{1}{2}}\) of the GJ methods are invariant to the specific choice of noise distribution that satisfies Eq. (10), we conclude that the GJ methods display time-step invariance in both potential and kinetic energy, and therefore temperature, regardless of the applied \(\beta ^n\) distribution. The invariance also extends to the diffusion constant of Eqs. (4) and (15), since this quantity depends only on the second moments of the noise variable.

Numerical validation of the time-step independence of the second moments is shown in Figs. 2a, b and 6a, b for two non-Gaussian noise distributions, and the simulations are discussed in Sect. 4. While the invariance of the second moments with respect to the choice of \(\beta ^n\) distribution is appealing, as it gives the impression that temperature is correctly achieved, it may not be sufficient to ensure proper thermodynamic sampling, as we will see in the following.
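The second-moment invariance can be probed with a direct simulation of the harmonic system. The sketch below assumes that the linearized recursion of Eqs. (19) and (20) takes the form \(r^{n+1}=2c_1Xr^n-c_2r^{n-1}+\frac{c_3\Delta {t}}{2m}(\beta ^n+\beta ^{n+1})\) with \(X=1-\frac{c_3}{2c_1}\Omega _0^2\Delta {t}^2\), \(c_1=(1+c_2)/2\), and \(c_3=(1-c_2)m/(\alpha \Delta {t})\), and that the half-step velocity of Eq. (7a) is \(u^{n+\frac{1}{2}}=(r^{n+1}-r^n)/(\Delta {t}\sqrt{c_3})\). These assumed forms reduce to Eq. (48) for \(c_2=0\) and place \(X=0\) at the time step of Eq. (44). The GJF-type \(c_2\) and the value \(\Omega _0\Delta {t}=1.5\) are arbitrary example choices, and the function name is hypothetical.

```python
import numpy as np


def harmonic_energies(c2, alpha, dt, kappa, kT, noise, m=1.0,
                      walkers=20_000, burn=300, steps=300, seed=11):
    """Return (<E_p>, <E_k>, sigma_p, sigma_k) for the assumed linear GJ recursion."""
    rng = np.random.default_rng(seed)
    c1 = 0.5 * (1.0 + c2)
    c3 = (1.0 - c2) * m / (alpha * dt)
    two_c1_x = 2.0 * c1 - c3 * dt**2 * kappa / m   # 2 c1 X
    gamma = c3 * dt / (2.0 * m)
    var = 2.0 * alpha * kT * dt                    # Eq. (10b)

    def draw():
        if noise == "gaussian":
            return rng.normal(0.0, np.sqrt(var), walkers)
        a = np.sqrt(3.0 * var)                     # uniform noise with the same variance
        return rng.uniform(-a, a, walkers)

    r_prev, r, beta = np.zeros(walkers), np.zeros(walkers), draw()
    sums, count = np.zeros(4), 0
    for n in range(burn + steps):
        beta_next = draw()
        r_next = two_c1_x * r - c2 * r_prev + gamma * (beta + beta_next)
        if n >= burn:                              # discard the initial transient
            ep = 0.5 * kappa * r**2
            ek = 0.5 * m * ((r_next - r) / (dt * np.sqrt(c3))) ** 2
            sums += [ep.sum(), (ep**2).sum(), ek.sum(), (ek**2).sum()]
            count += walkers
        r_prev, r, beta = r, r_next, beta_next
    ep1, ep2, ek1, ek2 = sums / count
    return ep1, ek1, np.sqrt(ep2 - ep1**2), np.sqrt(ek2 - ek1**2)


alpha, kappa, m, kT = 1.0, 1.0, 1.0, 1.0
dt = 1.5                                           # Omega_0*dt = 1.5
a_step = alpha * dt / m
c2 = (1.0 - 0.5 * a_step) / (1.0 + 0.5 * a_step)   # GJF-type example
results = {kind: harmonic_energies(c2, alpha, dt, kappa, kT, kind)
           for kind in ("gaussian", "uniform")}
for kind, (ep, ek, sp, sk) in results.items():
    print(f"{kind:8s} <Ep>={ep:.4f} <Ek>={ek:.4f} "
          f"sigma_p/(kT/sqrt2)={sp * np.sqrt(2) / kT:.4f} "
          f"sigma_k/(kT/sqrt2)={sk * np.sqrt(2) / kT:.4f}")
```

Both noise types should return \(\langle E_p\rangle \approx \langle E_k\rangle \approx k_BT/2\), while only the Gaussian run is guaranteed to return energy fluctuations of \(k_BT/\sqrt{2}\); the uniform-noise fluctuations are not fixed by Eq. (10).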

3.2 Third Moments

The third moments of \(r^n\) and \(u^{n+\frac{1}{2}}\) are generally not needed for the calculations of thermodynamic quantities, especially if the system potentials are symmetric. However, they may be relevant to study in case asymmetric fluctuations are applied to the system for some reason. Following the same procedure that was used for calculating the second moments, we form the statistical averages of the product of Eq. (19) with the six unique combinations of \(r^{\ell _1}r^{\ell _2}\), where \(\ell _i=n-1,n,n+1\). The result is the linear system

where

The right hand side \({{\mathcal {R}}} = \{{{\mathcal {R}}}_i\}\) can after some calculations be expressed as

where the fluctuations \(\beta ^n\) satisfy Eq. (10), and the skewness is denoted by \(\Gamma _3\),

For a symmetric potential \(E_p(r)\), all odd moments of the configurational coordinate \(r^n\) vanish when a Gaussian distribution of \(\beta ^n\) is applied, as is the case for any symmetric distribution of \(\beta ^n\).

The third moment of the velocity \(u^{n+\frac{1}{2}}\) is given by

where the two correlations on the right hand side are part of the solution to Eq. (25).

For any given choice of GJ method (\(c_2\)), the above system of equations can easily be solved numerically for given system parameters and skewness \(\Gamma _3\). Examples are discussed in Sect. 4 and shown in Fig. 6c, d, along with the results of the corresponding numerical solution of Eqs. (6) and (18) for a particular asymmetric noise distribution. Closed-form expressions are obtained in the following for two special cases.

-

Special Case, X=0: The matrix \({{\mathcal {A}}}_3\) and the vector \({{\mathcal {R}}}\) simplify considerably, yielding easily accessible solutions

$$\begin{aligned} \langle r^nr^nr^n\rangle= & {} \frac{2}{1+c_2^3}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\,\mathcal{R}_4 \end{aligned}$$(30a)$$\begin{aligned} \langle r^nr^nr^{n+1}\rangle= & {} \frac{1-c_2}{1+c_2^3}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\,\mathcal{R}_4 \end{aligned}$$(30b)$$\begin{aligned} \langle r^nr^{n+1}r^{n+1}\rangle= & {} \frac{1+c_2^2}{1+c_2^3}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\,\mathcal{R}_4 , \end{aligned}$$(30c)where we have used that, in this special case, \(\Omega _0^2\Delta {t}^2=2\frac{c_1}{c_3}\) yields \({{\mathcal {R}}}_6=2\mathcal{R}_5=2{{\mathcal {R}}}_4\), and where

$$\begin{aligned} {{\mathcal {R}}}_4= & {} \left( \frac{1-c_2^2}{2}\right) ^{\frac{3}{2}}\,\Gamma _3. \end{aligned}$$(31)Equations (29), (30b), and (30c) give

$$\begin{aligned}{} & {} \left\langle \left( u^{n+\frac{1}{2}}\right) ^3\right\rangle \; = \; - \frac{3}{\left( \Delta {t}\sqrt{c_3}\right) ^3}\frac{c_2}{c_2^2-c_2+1}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\mathcal{R}_4 \end{aligned}$$(32)$$\begin{aligned}{} & {} = \; -\frac{3}{2\sqrt{2}}\,\left( \frac{1-c_2^2}{1+c_2}\right) ^\frac{3}{2} \frac{c_2}{c_2^2-c_2+1}\,\left( \frac{k_BT}{m}\right) ^\frac{3}{2} \,\Gamma _3 . \end{aligned}$$(33)Examples of the use of these expressions are shown in Fig. 6c, d as open markers (\(\circ \)) for a particular asymmetric noise distribution.

-

Special Case, \({\varvec{c}}_{{\textbf {2}}}={\textbf {0}}\). In this case we have that \(c_1=\frac{1}{2}\), \(c_3 = m/\alpha \Delta {t}\), and \(X=1-\Delta {t}\kappa /\alpha \). The simplified matrix \({{\mathcal {A}}}_3\) and vector \({{\mathcal {R}}}\) yield

$$\begin{aligned} \langle r^nr^nr^n\rangle= & {} \frac{3X^2+3X+2}{1-X^3}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\,\mathcal{R}_4 \end{aligned}$$(34a)$$\begin{aligned} \langle r^nr^nr^{n+1}\rangle= & {} \frac{2X^3+3X^2+2X+1}{1-X^3}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\mathcal{R}_4 \end{aligned}$$(34b)$$\begin{aligned} \langle r^nr^{n+1}r^{n+1}\rangle= & {} \frac{X^4+2X^3+2X^2+2X+1}{1-X^3}\left( \frac{k_BT}{\kappa }\right) ^{\frac{3}{2}}\mathcal{R}_4, \end{aligned}$$(34c)where

$$\begin{aligned} {{\mathcal {R}}}_4= & {} \left( \frac{\kappa \Delta {t}}{2\alpha }\right) ^\frac{3}{2}\,\Gamma _3. \end{aligned}$$(35)Equations (29), (34b), and (34c) give

$$\begin{aligned} \left\langle \left( u^{n+\frac{1}{2}}\right) ^3\right\rangle= & {} \frac{3X^2}{2\sqrt{2}}\frac{1-X^2}{1-X^3}\left( \frac{k_BT}{m}\right) ^\frac{3}{2}\Gamma _3. \end{aligned}$$(36)Examples of the use of these expressions are shown in Fig. 6c, d as closed markers (\(\bullet \)) for a particular asymmetric noise distribution.
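The \(c_2=0\) expressions can be checked against a direct simulation of Eq. (48) driven by the peaked noise of Eq. (55a), whose skewness is \(\Gamma _3=1/\sqrt{2}\). The sketch below compares the simulated \(\langle (r^n)^3\rangle \) and \(\langle (u^{n+\frac{1}{2}})^3\rangle \) with Eqs. (34a), (35), and (36). It assumes that the half-step velocity for \(c_2=0\) is \(u^{n+\frac{1}{2}}=(r^{n+1}-r^n)/(\Delta {t}\sqrt{c_3})\) with \(c_3=m/(\alpha \Delta {t})\); the function names and the parameter choice \(X=0.5\) are hypothetical.

```python
import numpy as np


def gj0_third_moments(kappa, alpha, dt, kT, m=1.0, walkers=20_000,
                      burn=200, steps=400, seed=5):
    """Simulate Eq. (48) with the peaked noise of Eq. (55a); return (<r^3>, <u^3>)."""
    rng = np.random.default_rng(seed)
    X = 1.0 - kappa * dt / alpha
    s = np.sqrt(2.0 * alpha * kT * dt)            # noise standard deviation, Eq. (10b)

    def draw():                                    # -s/sqrt(2) w.p. 2/3, sqrt(2) s w.p. 1/3
        return np.where(rng.random(walkers) < 2.0 / 3.0,
                        -s / np.sqrt(2.0), np.sqrt(2.0) * s)

    vel_scale = np.sqrt(m * dt / alpha)            # assumed dt*sqrt(c3), c3 = m/(alpha dt)
    r, beta = np.zeros(walkers), draw()
    sum_r3 = sum_u3 = 0.0
    count = 0
    for n in range(burn + steps):
        beta_next = draw()
        r_next = X * r + (beta + beta_next) / (2.0 * alpha)   # Eq. (48)
        if n >= burn:
            u = (r_next - r) / vel_scale
            sum_r3 += np.sum(r**3)
            sum_u3 += np.sum(u**3)
            count += walkers
        r, beta = r_next, beta_next
    return sum_r3 / count, sum_u3 / count


def gj0_third_moments_theory(kappa, alpha, dt, kT, m=1.0, gamma3=1.0 / np.sqrt(2.0)):
    """Eqs. (34a), (35), and (36) for c2 = 0."""
    X = 1.0 - kappa * dt / alpha
    R4 = (kappa * dt / (2.0 * alpha)) ** 1.5 * gamma3                        # Eq. (35)
    r3 = (3 * X**2 + 3 * X + 2) / (1 - X**3) * (kT / kappa) ** 1.5 * R4     # Eq. (34a)
    u3 = (3 * X**2 / (2 * np.sqrt(2.0)) * (1 - X**2) / (1 - X**3)
          * (kT / m) ** 1.5 * gamma3)                                        # Eq. (36)
    return r3, u3


params = dict(kappa=1.0, alpha=1.0, dt=0.5, kT=1.0)   # X = 0.5
sim = gj0_third_moments(**params)
theory = gj0_third_moments_theory(**params)
print(f"<r^3>: simulated {sim[0]:.4f}, Eq. (34a) {theory[0]:.4f}")
print(f"<u^3>: simulated {sim[1]:.4f}, Eq. (36)  {theory[1]:.4f}")
```

With these parameters the closed forms give \(\langle (r^n)^3\rangle \approx 0.429\) and \(\langle (u^{n+\frac{1}{2}})^3\rangle \approx 0.161\) in units of \((k_BT/\kappa )^{3/2}\) and \((k_BT/m)^{3/2}\), whereas Gaussian noise would give zero for both.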

3.3 Fourth Moments

The fourth moments of \(r^n\) and \(u^{n+\frac{1}{2}}\) are needed for the calculations of energy and pressure fluctuations, which are the basis of several important thermodynamic quantities, such as heat capacity, thermal expansion, compressibility, and the thermal pressure coefficient [1]. Fourth moments are therefore critical for proper thermodynamic characterization of a system. Following the same procedure that was used for calculating the second and third moments, we form the statistical averages of the product of Eq. (19) with the ten unique combinations of \(r^{\ell _1}r^{\ell _2}r^{\ell _3}\), where \(\ell _i=n-1,n,n+1\). The result is the linear system

where

The right hand side \({{\mathcal {R}}} = \{{{\mathcal {R}}}_i\}\) can after some cumbersome work be expressed as

where we have used Eq. (10b). Notice that \(\mathcal{R}_7\)-\({{\mathcal {R}}}_{10}\) in Eqs. (39g)–(39j) depend on the kurtosis \(\Gamma _4\)

which is not determined by the fluctuation-dissipation relationship in Eq. (10).

While Eqs. (37)–(39) are not immediately informative, they provide a straightforward path to calculating the sought-after fluctuation \(\sigma _p\) in potential energy of Eq. (17)

Equations (37)–(39) also lead directly to the fluctuations \(\sigma _k\) in kinetic energy of the velocity from Eq. (7a)

where \(\langle u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}\rangle \) is given by Eq. (16), and

All the correlations on the right hand side are given from the solution to Eqs. (37)–(39).

For any given choice of GJ method (\(c_2\)), the above system of equations can easily be solved numerically for given system parameters and kurtosis \(\Gamma _4\). Examples are discussed in Sect. 4 and shown in Figs. 2c, d and 6e, f, along with the results of the corresponding numerical solution of Eqs. (6) and (18) for particular noise distributions. Closed-form expressions can be given in the special cases of \(X=0\) and \(c_2=0\).

-

Special Case, \({\textbf {X}}={\textbf {0}}\): This particular time step is

$$\begin{aligned} \Omega _0\Delta {t}= & {} \sqrt{2}\sqrt{\frac{c_1}{c_3}}. \end{aligned}$$(44)Cumbersome, yet straightforward, algebra leads to closed-form expressions for \(\langle r^nr^nr^nr^n\rangle \) and \(\langle u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}\rangle \) for \(X=0\); i.e., for \(\Omega _0\Delta {t}=\sqrt{2}\sqrt{c_1/c_3}\). The corresponding fluctuations in potential and kinetic energies can be written

$$\begin{aligned} \sigma _p= & {} \frac{\kappa }{2}\sqrt{\langle r^nr^nr^nr^n\rangle - \langle r^nr^n\rangle ^2} \nonumber \\= & {} \frac{k_BT}{\sqrt{2}}\, \sqrt{\frac{1}{4}\frac{1+7c_2^2}{1+c_2^2}+\frac{1}{4}\Gamma _4\frac{1-c_2^2}{1+c_2^2}} \end{aligned}$$(45)$$\begin{aligned} \sigma _k= & {} \frac{m}{2}\sqrt{\langle u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}\rangle - \langle u^{n+\frac{1}{2}}u^{n+\frac{1}{2}}\rangle ^2} \nonumber \\= & {} \frac{k_BT}{\sqrt{2}}\, \sqrt{\frac{1}{2}\frac{c_2^3}{1+c_2^2}\left[ 3-\Gamma _4\right] +\frac{1}{4}\left[ 1+\Gamma _4\right] }. \end{aligned}$$(46)Notice that if \(\beta ^n\) is a Gaussian distributed variable, where the kurtosis is \(\Gamma _4=3\), the above energy fluctuations yield the expected values, \(\sigma _p=\sigma _k=k_BT/\sqrt{2}\). Examples of the use of these expressions are shown in Figs. 2c, d and 6e, f as open markers (\(\circ \)) for particular noise distributions.

-

Special Case, \({\textbf {c}}_{{\textbf {2}}}={\textbf {0}}\): This special case (GJ-0, see Ref. [8]) corresponds to the first-order difference equation

$$\begin{aligned} r^{n+1}= & {} r^n+\frac{1}{\alpha }\left[ \Delta {t}\,f^n+\frac{1}{2}(\beta ^{n}+\beta ^{n+1})\right] , \end{aligned}$$(47)which can approximate the solution to the Langevin equation with no inertia; i.e., Brownian motion. The linearized, Hooke’s law system (\(f=-\kappa r\)) reads

$$\begin{aligned} r^{n+1}= & {} Xr^n+\frac{1}{2\alpha }(\beta ^n+\beta ^{n+1}), \end{aligned}$$(48)with \(X=1-\kappa \Delta {t}/\alpha \), in correspondence with Eqs. (19) and (20). This simplicity allows the last four of the ten equations in Eq. (37) to decouple from the rest, and the fourth moments can be calculated to yield the average and fluctuations in potential energy

$$\begin{aligned} \left\langle E_p(r^n)\right\rangle= & {} \frac{\kappa }{2}\,\left\langle r^nr^n\right\rangle \; = \; \frac{1}{2}k_BT \end{aligned}$$(49)$$\begin{aligned} \sigma _p= & {} \frac{\kappa }{2}\,\sqrt{\left\langle r^nr^nr^nr^n\right\rangle -\left\langle r^nr^n\right\rangle ^2}\nonumber \\= & {} \frac{k_BT}{\sqrt{2}}\,\sqrt{\frac{\frac{1}{2}X^4\left[ 3-\Gamma _4\right] +\frac{1}{4}X^3\left[ 7-\Gamma _4\right] +\frac{1}{4}(X^2+X+1)\left[ 1+\Gamma _4\right] }{X^3+X^2+X+1}}. \nonumber \\ \end{aligned}$$(50)Here, too, we notice that if \(\beta ^n\) is a Gaussian distributed variable, where the kurtosis is \(\Gamma _4=3\), the potential energy fluctuations yield the expected value, \(\sigma _p=k_BT/\sqrt{2}\). Similarly, we can find the fluctuations \(\sigma _k\) in kinetic energy from the correlation

$$\begin{aligned} \left\langle \left( u^{n+\frac{1}{2}}\right) ^4\right\rangle \;= & {} \; (1-X)^2\frac{4\sigma _p^2+(k_BT)^2}{m^2}+6\left( \frac{k_BT}{m}\right) ^2X^2(1-X)\nonumber \\{} & {} + \left( \frac{k_BT}{m}\right) ^2(3+\Gamma _4)X\left( 1-\frac{3}{2}X+X^2\right) , \end{aligned}$$(51)such that

$$\begin{aligned} \sigma _k= & {} \frac{1}{2}m\,\sqrt{\left\langle \left( u^{n+\frac{1}{2}}\right) ^4\right\rangle -\left( \frac{k_BT}{m}\right) ^2}, \end{aligned}$$(52)where we have used Eq. (16). Thus, \(\sigma _k\) can be easily computed also in this special case. We again notice that for Gaussian fluctuations, where \(\Gamma _4=3\), the kinetic energy fluctuations yield the expected value \(\sigma _k=k_BT/\sqrt{2}\). Examples of the use of these expressions are shown in Figs. 2c, d and 6e, f as closed markers (\(\bullet \)) for particular noise distributions.
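The closed-form fourth-moment results can be compared with simulations driven by uniform noise (\(\Gamma _4=9/5\)). The sketch below assumes the same linear recursion and half-step velocity as used for the harmonic system above, namely \(r^{n+1}=2c_1Xr^n-c_2r^{n-1}+\frac{c_3\Delta {t}}{2m}(\beta ^n+\beta ^{n+1})\) and \(u^{n+\frac{1}{2}}=(r^{n+1}-r^n)/(\Delta {t}\sqrt{c_3})\), with \(c_1=(1+c_2)/2\) and \(c_3=(1-c_2)m/(\alpha \Delta {t})\), which reduces to Eq. (48) for \(c_2=0\). The first case uses a GJF-type \(c_2=(1-\alpha \Delta {t}/2m)/(1+\alpha \Delta {t}/2m)\), for which \(c_1=c_3\) under the assumed form, so \(X=0\) at \(\Omega _0\Delta {t}=\sqrt{2}\) by Eq. (44); the second case is \(c_2=0\) with \(\kappa \Delta {t}/\alpha =1.5\), i.e., \(X=-0.5\). Eq. (51) is coded with the \(\Gamma _4\) term entering with a positive sign, the sign for which \(\Gamma _4=3\) returns \(\sigma _k=k_BT/\sqrt{2}\) as stated in the text. All function names are hypothetical.

```python
import numpy as np


def simulated_fluctuations(c2, alpha, dt, kappa, kT, m=1.0, walkers=20_000,
                           burn=300, steps=300, seed=3):
    """(sigma_p, sigma_k) from the assumed linear GJ recursion with uniform noise."""
    rng = np.random.default_rng(seed)
    c1 = 0.5 * (1.0 + c2)
    c3 = (1.0 - c2) * m / (alpha * dt)
    two_c1_x = 2.0 * c1 - c3 * dt**2 * kappa / m
    gamma = c3 * dt / (2.0 * m)
    half_width = np.sqrt(3.0 * 2.0 * alpha * kT * dt)   # Eqs. (10b) and (54a)
    r_prev, r = np.zeros(walkers), np.zeros(walkers)
    beta = rng.uniform(-half_width, half_width, walkers)
    sums, count = np.zeros(4), 0
    for n in range(burn + steps):
        beta_next = rng.uniform(-half_width, half_width, walkers)
        r_next = two_c1_x * r - c2 * r_prev + gamma * (beta + beta_next)
        if n >= burn:
            ep = 0.5 * kappa * r**2
            ek = 0.5 * m * ((r_next - r) / (dt * np.sqrt(c3))) ** 2
            sums += [ep.sum(), (ep**2).sum(), ek.sum(), (ek**2).sum()]
            count += walkers
        r_prev, r, beta = r, r_next, beta_next
    ep1, ep2, ek1, ek2 = sums / count
    return np.sqrt(ep2 - ep1**2), np.sqrt(ek2 - ek1**2)


def fluctuations_x_zero(c2, gamma4, kT=1.0):
    """Eqs. (45) and (46), valid at X = 0."""
    q = 1.0 + c2**2
    sp = kT / np.sqrt(2) * np.sqrt(0.25 * (1 + 7 * c2**2) / q
                                   + 0.25 * gamma4 * (1 - c2**2) / q)
    sk = kT / np.sqrt(2) * np.sqrt(0.5 * c2**3 / q * (3 - gamma4)
                                   + 0.25 * (1 + gamma4))
    return sp, sk


def fluctuations_c2_zero(X, gamma4, kT=1.0, m=1.0):
    """Eqs. (50)-(52) for c2 = 0, with the Gamma_4 term of Eq. (51) taken as positive."""
    num = (0.5 * X**4 * (3 - gamma4) + 0.25 * X**3 * (7 - gamma4)
           + 0.25 * (X**2 + X + 1) * (1 + gamma4))
    sp = kT / np.sqrt(2) * np.sqrt(num / (X**3 + X**2 + X + 1))
    u4 = ((1 - X) ** 2 * (4 * sp**2 + kT**2) / m**2
          + 6 * (kT / m) ** 2 * X**2 * (1 - X)
          + (kT / m) ** 2 * (3 + gamma4) * X * (1 - 1.5 * X + X**2))
    return sp, 0.5 * m * np.sqrt(u4 - (kT / m) ** 2)


gamma4_uniform = 9.0 / 5.0
# X = 0 with a GJF-type c2: Omega_0*dt = sqrt(2) for alpha = m = kappa = 1.
dt_x0 = np.sqrt(2.0)
c2_x0 = (1.0 - 0.5 * dt_x0) / (1.0 + 0.5 * dt_x0)
sim_x0 = simulated_fluctuations(c2_x0, 1.0, dt_x0, 1.0, 1.0)
theory_x0 = fluctuations_x_zero(c2_x0, gamma4_uniform)
# c2 = 0 with kappa*dt/alpha = 1.5, i.e., X = -0.5.
sim_c0 = simulated_fluctuations(0.0, 1.0, 1.5, 1.0, 1.0)
theory_c0 = fluctuations_c2_zero(-0.5, gamma4_uniform)
for label, sim, th in (("X = 0", sim_x0, theory_x0), ("c2 = 0", sim_c0, theory_c0)):
    print(f"{label:7s} sigma_p: {sim[0]:.4f} vs {th[0]:.4f}; "
          f"sigma_k: {sim[1]:.4f} vs {th[1]:.4f}")
```

For uniform noise, both cases give energy fluctuations roughly 10 to 20 % below the Boltzmann value \(k_BT/\sqrt{2}\approx 0.707\,k_BT\), even though \(\langle E_p\rangle \) and \(\langle E_k\rangle \) remain exact.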

4 Simulations

While the above expressions provide both direct and indirect means for evaluating the thermodynamically important first four moments of the configurational and kinetic variables, we test these results against numerical simulations such that both simulations and analysis can be mutually verified. Further, the simulations provide additional details on the actual distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\), which in some cases reveal significant deviations from Boltzmann statistics when the fluctuations \(\beta ^n\) are non-Gaussian.

We show results of three different distributions for the fluctuations \(\beta ^n\), all satisfying the discrete-time fluctuation-dissipation balance that constrains the first two moments given in Eq. (10), and thus, all resulting in correct first and second moments of \(r^n\) and \(u^{n+\frac{1}{2}}\), leading to correct temperature as measured by the kinetic and potential energies (see Sect. 3.1):

-

Gaussian: \(\beta ^n=\beta _G^n\) with distribution \(\rho _G(\beta )\):

$$\begin{aligned} \rho _G(\beta )= & {} \frac{1}{\sqrt{2\pi \langle \beta ^n\beta ^n\rangle }}\,\exp \left( -\frac{\beta \beta }{2\langle \beta ^n\beta ^n\rangle }\right) , \end{aligned}$$(53a)with skewness and kurtosis

$$\begin{aligned} \Gamma _3= & {} 0 \end{aligned}$$(53b)$$\begin{aligned} \Gamma _4= & {} 3. \end{aligned}$$(53c)

-

Uniform: \(\beta ^n=\beta _U^n\) with distribution \(\rho _U(\beta )\):

$$\begin{aligned} \rho _U(\beta )= & {} \frac{1}{2\sqrt{3\langle \beta ^n\beta ^n\rangle }}\times \left\{ \begin{array}{ccc} 1 &{}, &{} -\sqrt{3}\,\le \,\frac{\beta }{\sqrt{\langle \beta ^n\beta ^n\rangle }}\,<\,\sqrt{3}\\ 0 &{}, &{} \textrm{otherwise} \end{array}\right. , \end{aligned}$$(54a)with skewness and kurtosis

$$\begin{aligned} \Gamma _3= & {} 0 \end{aligned}$$(54b)$$\begin{aligned} \Gamma _4= & {} \frac{9}{5} . \end{aligned}$$(54c)

-

Peaked: \(\beta ^n=\beta _P^n\) with distribution \(\rho _P(\beta )\):

$$\begin{aligned} \rho _P(\beta )= & {} \frac{1}{\sqrt{\langle \beta ^n\beta ^n\rangle }}\left[ \frac{2}{3}\,\delta \left( \frac{\beta }{\sqrt{\langle \beta ^n\beta ^n\rangle }} + \frac{1}{\sqrt{2}}\right) + \frac{1}{3}\,\delta \left( \frac{\beta }{\sqrt{\langle \beta ^n\beta ^n\rangle }}-\sqrt{2}\right) \right] , \end{aligned}$$(55a)with skewness and kurtosis

$$\begin{aligned} \Gamma _3= & {} \frac{1}{\sqrt{2}}\end{aligned}$$(55b)$$\begin{aligned} \Gamma _4= & {} \frac{3}{2}. \end{aligned}$$(55c)

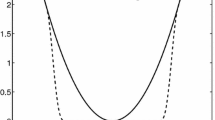

The three distributions are sketched in Fig. 1, and the realizations of the pseudo-random numbers \(\beta ^n\) are produced from the uniformly distributed numbers in [0, 1) generated by the RANMAR algorithm (used in LAMMPS [31]).

The three applied distributions, \(\rho _G(\beta ^n)\) (Gaussian), \(\rho _U(\beta ^n)\) (Uniform), and \(\rho _P(\beta ^n)\) (Peaked), given in Eqs. (53), (54), and (55), respectively. All three distributions satisfy the discrete-time fluctuation-dissipation relationship, Eq. (10)
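The mapping from uniform variates in [0, 1) to the three distributions of Eqs. (53)–(55) can be sketched as follows. The RANMAR generator itself is not reproduced; as a stand-in, the sketch draws the uniform variates from NumPy's default generator. The Gaussian branch uses the Box–Muller transform, which is an assumption of this illustration, since the text does not state how the Gaussian variates were produced. The function names and the parameter values in the usage lines are hypothetical.

```python
import numpy as np


def noise_from_uniform(kind, u1, u2, variance):
    """Map uniform variates u1, u2 in [0, 1) to zero-mean noise of the given variance."""
    s = np.sqrt(variance)
    if kind == "gaussian":       # Box-Muller transform (assumed method), Eq. (53a)
        return s * np.sqrt(-2.0 * np.log1p(-u1)) * np.cos(2.0 * np.pi * u2)
    if kind == "uniform":        # flat on [-sqrt(3) s, sqrt(3) s), Eq. (54a)
        return np.sqrt(3.0) * s * (2.0 * u1 - 1.0)
    if kind == "peaked":         # -s/sqrt(2) w.p. 2/3, sqrt(2) s w.p. 1/3, Eq. (55a)
        return np.where(u1 < 2.0 / 3.0, -s / np.sqrt(2.0), np.sqrt(2.0) * s)
    raise ValueError(f"unknown noise kind: {kind!r}")


def standardized_moments(x):
    """Sample mean, variance, skewness Gamma_3, and kurtosis Gamma_4."""
    d = x - x.mean()
    var = np.mean(d**2)
    return x.mean(), var, np.mean(d**3) / var**1.5, np.mean(d**4) / var**2


rng = np.random.default_rng(1)           # stand-in for RANMAR
u1, u2 = rng.random(1_000_000), rng.random(1_000_000)
variance = 2.0 * 1.0 * 1.0 * 0.1         # 2 alpha kT dt with alpha = kT = 1, dt = 0.1
moments = {kind: standardized_moments(noise_from_uniform(kind, u1, u2, variance))
           for kind in ("gaussian", "uniform", "peaked")}
for kind, (mean, var, g3, g4) in moments.items():
    print(f"{kind:8s} mean={mean:+.4f} var={var:.4f} Gamma_3={g3:+.3f} Gamma_4={g4:.3f}")
```

All three samples share the same mean and variance, as Eq. (10) requires, and differ only in their higher moments; for the two point masses of Eq. (55a), these are \(\Gamma _3=1/\sqrt{2}\) and \(\Gamma _4=3/2\).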

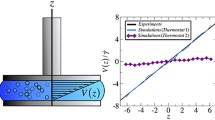

Simulations and analyses of average potential and kinetic energies, \(\langle E_p\rangle \) from Eq. (23) (a) and \(\langle E_k\rangle \) from Eq. (24) (b), and their respective fluctuations, \(\sigma _p\) from Eq. (41) (c) and \(\sigma _k\) from Eq. (42) (d), as a function of reduced time step for the harmonic system, Eqs. (6) and (18), with non-zero temperature T. Results are shown for Gaussian \(\beta _G^n\) (Eq. (53a)) and uniform \(\beta _U^n\) (Eq. (54a)) noise distributions. Several friction parameters \(\alpha \) are used, as indicated in the figure. Simulation results are indistinguishable from the analytical ones. Markers indicate special cases for \(c_2=0\) (\(\bullet \) from Eqs. (50)–(52)) and \(X=0\) (\(\circ \) from Eqs. (45) and (46))

Configurational and kinetic distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\) for simulations with Gaussian and uniform noise applied to the linear system Eqs. (6) and (18) with \(\alpha =1m\Omega _0\), \(\Omega _0\Delta {t}=0.5\), \(k_BT=E_0\). Shown curves are Eq. (3) (solid, labeled exact), simulation data using Gaussian noise \(\beta _G^n\) (dashed, labeled Gaussian), and simulation data using uniform noise \(\beta _U^n\) (dash-dot, labeled uniform). Distributions from simulations using Gaussian noise are indistinguishable from the exact references, Eq. (3). (c) and (d) show the distributions, (a) and (b) show the deviations from the exact distributions Eq. (3), and (e) and (f) show the effective configurational (PMF) and kinetic (MB) potentials (Eq. (58)) derived from the distributions

Configurational and kinetic distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\) for simulations with Gaussian and uniform noise applied to the linear system Eqs. (6) and (18) with \(\alpha =1m\Omega _0\), \(\Omega _0\Delta {t}=1.5\), \(k_BT=E_0\). Shown curves are Eq. (3) (solid, labeled exact), simulation data using Gaussian noise \(\beta _G^n\) (dashed, labeled Gaussian), and simulation data using uniform noise \(\beta _U^n\) (dash-dot, labeled uniform). Distributions from simulations using Gaussian noise are indistinguishable from the exact references, Eq. (3). (c) and (d) show the distributions, (a) and (b) show the deviations from the exact distributions Eq. (3), and (e) and (f) show the effective configurational (PMF) and kinetic (MB) potentials (Eq. (58)) derived from the distributions

Fig. 5 Configurational and kinetic distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\) for simulations with Gaussian and uniform noise applied to the linear system Eqs. (6) and (18) with \(\alpha =1m\Omega _0\), \(\Omega _0\Delta {t}=1.975\), \(k_BT=E_0\). Shown curves are Eq. (3) (solid, labeled exact), simulation data using Gaussian noise \(\beta _G^n\) (dashed, labeled Gaussian), and simulation data using uniform noise \(\beta _U^n\) (dash-dot, labeled uniform). Distributions from simulations using Gaussian noise are indistinguishable from the exact references Eq. (3). (c) and (d) show the distributions, (a) and (b) show the deviations from the exact distributions Eq. (3), and (e) and (f) show the effective configurational (PMF) and kinetic (MB) potentials (Eq. (58)) derived from the distributions

Fig. 6 Simulations and analyses of average potential and kinetic energies, \(\langle E_p\rangle \) from Eq. (23) (a) and \(\langle E_k\rangle \) from Eq. (24) (b), the respective third moments \(\langle (r^n)^3\rangle \) from Eqs. (25)–(27) (c) and \(\langle (u^{n+\frac{1}{2}})^3\rangle \) from Eq. (29) (d), and the respective energy fluctuations, \(\sigma _p\) from Eq. (41) (e) and \(\sigma _k\) from Eq. (42) (f), as a function of reduced time step for the harmonic system, Eqs. (6) and (18), with non-zero temperature T. Results are shown for Gaussian \(\beta _G^n\) (Eq. (53a)) and the asymmetrically peaked \(\beta _P^n\) (Eq. (55a)) noise distributions. Several friction parameters \(\alpha \) are used, as indicated in the figure. Simulation results are indistinguishable from the analytical ones. Markers indicate special cases for \(c_2=0\) (\(\bullet \) from Eqs. (34a) and (36), Eqs. (50)–(52)) and \(X=0\) (\(\circ \) from Eqs. (30a) and (33), Eqs. (45) and (46))

Fig. 7 Configurational and kinetic distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\) for simulations with Gaussian and peaked noise applied to the linear system Eqs. (6) and (18) with \(\alpha =0.5m\Omega _0\), \(\Omega _0\Delta {t}=0.5\), \(k_BT=E_0\). Shown curves are Eq. (3) (solid, labeled exact), simulation data using Gaussian noise \(\beta _G^n\) (dashed, labeled Gaussian), and simulation data using peaked noise \(\beta _P^n\) (dash-dot, labeled peaked). Distributions from simulations using Gaussian noise are indistinguishable from the exact references Eq. (3). (c) and (d) show the distributions, (a) and (b) show the deviations from the exact distributions Eq. (3), and (e) and (f) show the effective configurational (PMF) and kinetic (MB) potentials (Eq. (58)) derived from the distributions

Fig. 8 Configurational and kinetic distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\) for simulations with Gaussian and peaked noise applied to the linear system Eqs. (6) and (18) with \(\alpha =0.5m\Omega _0\), \(\Omega _0\Delta {t}=1.5\), \(k_BT=E_0\). Shown curves are Eq. (3) (solid, labeled exact), simulation data using Gaussian noise \(\beta _G^n\) (dashed, labeled Gaussian), and simulation data using peaked noise \(\beta _P^n\) (dash-dot, labeled peaked). Distributions from simulations using Gaussian noise are indistinguishable from the exact references Eq. (3). (c) and (d) show the distributions, (a) and (b) show the deviations from the exact distributions Eq. (3), and (e) and (f) show the effective configurational (PMF) and kinetic (MB) potentials (Eq. (58)) derived from the distributions

Fig. 9 Configurational and kinetic distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\) for simulations with Gaussian and peaked noise applied to the linear system Eqs. (6) and (18) with \(\alpha =0.5m\Omega _0\), \(\Omega _0\Delta {t}=1.975\), \(k_BT=E_0\). Shown curves are Eq. (3) (solid, labeled exact), simulation data using Gaussian noise \(\beta _G^n\) (dashed, labeled Gaussian), and simulation data using peaked noise \(\beta _P^n\) (dash-dot, labeled peaked). Distributions from simulations using Gaussian noise are indistinguishable from the exact references Eq. (3). (c) and (d) show the distributions, (a) and (b) show the deviations from the exact distributions Eq. (3), and (e) and (f) show the effective configurational (PMF) and kinetic (MB) potentials (Eq. (58)) derived from the distributions

To validate the analysis of the previous section, we simulated the noisy harmonic oscillator Eqs. (6) and (18) with several of the GJ methods, all of which give the same statistical results for Gaussian noise once the time step is appropriately scaled according to the method. For simplicity we limit the displayed results to those of the GJ-I (GJF-2GJ) method [7, 8], for which the one-time-step velocity attenuation parameter is

\[c_2=\frac{1-\frac{\alpha \Delta {t}}{2m}}{1+\frac{\alpha \Delta {t}}{2m}}.\]

The GJF-2GJ method coincides with the GJF method [6] in the configurational coordinate. We note that the GJ-I (GJF-2GJ) method is available in LAMMPS [31]. Time is normalized by the characteristic unit \(t_0\), the inverse of the natural frequency \(\Omega _0\); the reduced damping is \(\alpha /m\Omega _0\), and the characteristic energy is \(E_0=\kappa r_0^2\), where \(r_0\) is a chosen characteristic length to which r is normalized. The simulations are conducted for \(k_BT=E_0\) and a variety of values of \(\alpha /m\Omega _0\) over the entire stability range \(\Omega _0\Delta {t}<2\). Each statistical value is an average over 1,000 independent simulations, each with 10\(^8\) time steps.
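The kind of run described above can be sketched for a single degree of freedom. This is a minimal sketch, not the author's code. It assumes the GJF-2GJ update in leap-frog form, \(u^{n+\frac{1}{2}}=a\,u^{n-\frac{1}{2}}+\frac{\sqrt{b}\Delta t}{m}f^n+\frac{\sqrt{b}}{2m}(\beta ^n+\beta ^{n+1})\) and \(r^{n+1}=r^n+\sqrt{b}\Delta t\,u^{n+\frac{1}{2}}\), with \(a=(1-\frac{\alpha \Delta t}{2m})/(1+\frac{\alpha \Delta t}{2m})\) and \(b=1/(1+\frac{\alpha \Delta t}{2m})\). This form is our transcription of Refs. [6, 7], not a copy of Eqs. (6) and (18). The sketch also assumes noise with \(\langle \beta ^n\rangle =0\) and \(\langle \beta ^n\beta ^l\rangle =2\alpha k_BT\Delta t\,\delta _{n,l}\), which is our reading of Eq. (10). The helper name `gjf2gj_harmonic` is hypothetical, as are the reduced units \(m=\kappa =k_BT=1\) (so \(\Omega _0=1\) and \(k_BT=E_0\)) and the use of many independent walkers advanced in parallel with NumPy.

```python
import numpy as np


def gjf2gj_harmonic(noise="gaussian", alpha=1.0, dt=0.5, m=1.0, kappa=1.0, kT=1.0,
                    n_walkers=2000, n_steps=5000, n_burn=500, seed=1):
    """Hypothetical helper: GJF-2GJ (GJ-I) leap-frog runs of a 1D harmonic oscillator.

    Returns <E_p>, <E_k>, sigma_p, sigma_k averaged over walkers and time steps.
    """
    rng = np.random.default_rng(seed)
    b = 1.0 / (1.0 + alpha * dt / (2.0 * m))
    a = (1.0 - alpha * dt / (2.0 * m)) * b    # one-time-step velocity attenuation
    sqrt_b = np.sqrt(b)
    var = 2.0 * alpha * kT * dt               # assumed Eq. (10): <beta^n beta^l> = 2 alpha kT dt delta_nl

    def draw():
        # Both choices have zero mean and the same variance; only higher moments differ.
        if noise == "gaussian":
            return rng.normal(0.0, np.sqrt(var), n_walkers)
        if noise == "uniform":
            half_width = np.sqrt(3.0 * var)   # U(-w, w) has variance w^2 / 3
            return rng.uniform(-half_width, half_width, n_walkers)
        raise ValueError(f"unknown noise type: {noise}")

    r = np.zeros(n_walkers)                   # r^n
    u = np.zeros(n_walkers)                   # u^{n-1/2}
    beta_n = draw()                           # beta^n
    sums = np.zeros(4)                        # running sums of E_p, E_p^2, E_k, E_k^2
    count = 0
    for step in range(n_burn + n_steps):
        beta_next = draw()                    # beta^{n+1}
        force = -kappa * r                    # f^n for the harmonic potential
        u = a * u + sqrt_b * dt / m * force + sqrt_b / (2.0 * m) * (beta_n + beta_next)
        if step >= n_burn:
            e_p = 0.5 * kappa * r**2          # potential energy at r^n
            e_k = 0.5 * m * u**2              # kinetic energy at u^{n+1/2}
            sums += [e_p.sum(), (e_p**2).sum(), e_k.sum(), (e_k**2).sum()]
            count += n_walkers
        r = r + sqrt_b * dt * u               # r^{n+1}
        beta_n = beta_next
    mean_p, mean_k = sums[0] / count, sums[2] / count
    sigma_p = np.sqrt(sums[1] / count - mean_p**2)
    sigma_k = np.sqrt(sums[3] / count - mean_k**2)
    return mean_p, mean_k, sigma_p, sigma_k


if __name__ == "__main__":
    for kind in ("gaussian", "uniform"):
        print(kind, gjf2gj_harmonic(kind, alpha=2.0, dt=1.0))
```

At \(\alpha /m\Omega _0=2\) and \(\Omega _0\Delta t=1\) this update has \(a=0\). Both noise types should then return \(\langle E_p\rangle \approx \langle E_k\rangle \approx k_BT/2\). With Gaussian noise the fluctuations should be \(\sigma _p\approx \sigma _k\approx k_BT/\sqrt{2}\approx 0.707\,k_BT\). With uniform noise they come out lower, roughly \(0.62\,k_BT\) and \(0.63\,k_BT\) according to a cumulant estimate of our own (not values taken from the paper).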

Figure 2 shows the results obtained with the uniform noise distribution \(\beta _U^n\) (Eq. (54a)) alongside those obtained with the Gaussian \(\beta _G^n\) (Eq. (53a)) as a reference. Figures 2a and 2b, which display results for the configurational and kinetic variables, respectively, verify the general result of Sect. 3.1. A statistical property that depends only on the first and second moments of the noise distribution is evaluated correctly by the GJ methods whenever the noise satisfies Eq. (10). The data on each plot are for damping parameters \(\alpha /m\Omega _0\)=0.1, 0.25, 0.5, 1, 2.5, 5. For both the Gaussian and the uniform noise distributions, the results agree closely with the expected values given in Eqs. (23) and (24). For Gaussian noise, this agreement has previously been validated extensively [7, 8].

Figure 2c, d displays simulation results and compares them with the expectations from the analysis in Sect. 3.3 for the configurational and kinetic energy fluctuations \(\sigma _p\) and \(\sigma _k\) given in Eqs. (41) and (42). The Gaussian noise \(\beta _G^n\) from Eq. (53a), applied to the linear system, produces Gaussian distributions for \(r^n\) and \(u^{n+\frac{1}{2}}\) with correct, time-step independent first and second moments (see Sect. 3.1). The fourth moments are therefore also correct and time-step independent. This is clearly observed in the energy fluctuation panels. There, the simulation results and the results of solving Eqs. (37)–(39) for \(\Gamma _4=3\) are indistinguishable and constant at the correct values \(\sigma _p=\sigma _k=k_BT/\sqrt{2}\). In contrast, the uniformly distributed noise \(\beta _U^n\) (Eq. (54a)) produces neither Gaussian nor uniform distributions for \(r^n\) and \(u^{n+\frac{1}{2}}\). Instead, it produces distributions that depend on the time step and the damping parameter, even though Eq. (10) is satisfied. This is seen in the energy fluctuations, Fig. 2c, d, which show both simulation results and the results of Eqs. (37)–(39) for \(\Gamma _4=3\) and for \(\Gamma _4=\frac{9}{5}\), the kurtosis of the uniform distribution. The simulation results are indistinguishable from the analysis. With uniform noise, the energy fluctuations, which depend on the fourth moments of the variables, can clearly deviate significantly from the correct values given by Gaussian noise. We also observe that the fluctuations approach the correct values for small time steps or small damping parameters.
This is understandable, since the damping per time step in those limits is very small. The fluctuations in \(r^n\) and \(u^{n+\frac{1}{2}}\) are then composed of \(\beta _U^n\) contributions from many time steps, so the integrated noise in \(r^n\) and \(u^{n+\frac{1}{2}}\) becomes near-Gaussian by the central limit theorem. Conversely, if the damping per time step becomes appreciable, the effective noise in \(r^n\) and \(u^{n+\frac{1}{2}}\) is composed of only a few \(\beta _U^n\) contributions, and these do not approximate a Gaussian well.
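The central-limit argument can be made quantitative for a deliberately simplified model. The sketch below is our own illustration, not the analysis of Sect. 3. It assumes a coordinate obeying \(x^{n+1}=c\,x^n+\eta ^n\), where the \(\eta ^n\) are independent with kurtosis \(\Gamma _4\), so that \(x\) is a sum of noise values with weights \(h_k=c^k\). Fourth cumulants of independent terms add, which gives \(\Gamma _x=3+(\Gamma _4-3)\sum h_k^4/(\sum h_k^2)^2=3+(\Gamma _4-3)(1-c^2)/(1+c^2)\). For a zero-mean \(x\) with \(\langle x^2\rangle =k_BT/\kappa \), the fluctuation of \(E=\kappa x^2/2\) is then \(\sigma =\frac{k_BT}{2}\sqrt{\Gamma _x-1}\). The names `filtered_kurtosis` and `energy_fluctuation` are hypothetical, and the parameter c is only a stand-in for the attenuation per time step.

```python
import math


def filtered_kurtosis(gamma4: float, c: float) -> float:
    """Kurtosis of x = sum_k c**k * eta_k for i.i.d. eta_k with kurtosis gamma4 (|c| < 1)."""
    weight = (1.0 - c * c) / (1.0 + c * c)    # sum h^4 / (sum h^2)^2 for h_k = c**k
    return 3.0 + (gamma4 - 3.0) * weight


def energy_fluctuation(gamma_x: float, kT: float = 1.0) -> float:
    """sigma of E = kappa x^2 / 2 when <x> = 0, <x^2> = kT / kappa and kurtosis is gamma_x."""
    return 0.5 * kT * math.sqrt(gamma_x - 1.0)


if __name__ == "__main__":
    uniform = 9.0 / 5.0                       # kurtosis of a uniform distribution
    for c in (0.0, 0.5, 0.9, 0.99):
        g = filtered_kurtosis(uniform, c)
        print(f"c={c:4.2f}  Gamma_x={g:.4f}  sigma/kT={energy_fluctuation(g):.4f}")
```

For c = 0 (no memory) the coordinate simply inherits the noise kurtosis 9/5. As c approaches 1 (weak damping per step), \(\Gamma _x\) approaches 3 and \(\sigma \) approaches \(k_BT/\sqrt{2}\). This mirrors, qualitatively, the trend in Fig. 2c, d.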

To examine the simulated distributions in detail, we have selected a few representative parameter values as illustrations. Figures 3, 4, and 5 show the simulated configurational and kinetic distributions, \(\rho _c(r)\) and \(\rho _k(u)\), for \(\alpha =1\,m\Omega _0\) and \(\Omega _0\Delta {t}=0.5, 1.5, 1.975\), respectively, for simulations using Gaussian (dashed) and uniform (dash-dotted) noise distributions. Also shown are the exact Gaussian distributions expected from continuous-time Langevin dynamics, \(\rho _{k,e}(u)\) and \(\rho _{c,e}(r)\) (solid, from Eqs. (3a) and (13a)). Panels (c) and (d) of Figs. 3, 4, and 5 show the distributions \(\rho _c(r^n)\) and \(\rho _k(u^{n+\frac{1}{2}})\). Panels (a) and (b) show the deviations from the expected (correct) distributions. Panels (e) and (f) show the effective configurational and kinetic potentials,

\[U_{PMF}(r^n)=-k_BT\ln \rho _c(r^n)+\tilde{{{\mathcal {C}}}}_c,\qquad U_{MB}(u^{n+\frac{1}{2}})=-k_BT\ln \rho _k(u^{n+\frac{1}{2}})+\tilde{{{\mathcal {C}}}}_k,\]

where \(\tilde{{{\mathcal {C}}}}_c\) and \(\tilde{{{\mathcal {C}}}}_k\) are determined such that \(\textrm{min}[U_{PMF}(r^n)]=\textrm{min}[U_{MB}(u^{n+\frac{1}{2}})]=0\). For the harmonic potential, the statistically correct potentials are \(\frac{1}{2}\kappa r^2\) and \(\frac{1}{2}mu^2\), indicated by thin solid curves.

For \(\Omega _0\Delta {t}=0.5\), Fig. 3 shows that even a seemingly modest deviation from a pure Gaussian distribution can have a rather large impact on fourth-moment thermodynamic measures. For these parameters, Fig. 2c, d shows the configurational and kinetic energy fluctuations depressed by about 7% and 14%, respectively. Increasing the time step to \(\Omega _0\Delta {t}=1.5\) amplifies the deformation of the \(\beta _U^n\)-generated distributions away from the Gaussian. As seen in Fig. 4e, f, the increasingly noticeable difference is that the uniform noise yields a more confined exploration of phase space than the Gaussian noise does, consistent with the depression of the fourth moments seen in Fig. 2c, d.

Finally, Fig. 5 shows the results for a time step, \(\Omega _0\Delta {t}=1.975\), very close to the stability limit. In this extreme case, uniform noise can produce a nearly uniform distribution for \(r^n\), while the distribution for \(u^{n+\frac{1}{2}}\) is nearly triangular, consistent with a sum of two uniformly distributed numbers contributing to the kinetic fluctuations. Again, Fig. 5e, f shows that phase space is sampled much more narrowly with uniform noise than with Gaussian noise, even though both the configurational and the kinetic temperatures are measured correctly.
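Effective potentials of this kind can be obtained from sampled coordinates by Boltzmann inversion of a histogram. The sketch below assumes the form \(U=-k_BT\ln \rho +\text{const}\), with the constant chosen so that the minimum is zero. The helper name `effective_potential`, the binning, and the treatment of empty bins (left as NaN) are our own choices. For the demonstration, Gaussian random numbers stand in for \(r^n\).

```python
import numpy as np


def effective_potential(samples, kT=1.0, bins=40, value_range=(-2.0, 2.0)):
    """Hypothetical helper: Boltzmann-invert a sampled density, U = -kT ln(rho) + C, min U = 0."""
    rho, edges = np.histogram(samples, bins=bins, range=value_range, density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])
    u = np.full_like(centers, np.nan)          # empty bins have no finite potential
    filled = rho > 0
    u[filled] = -kT * np.log(rho[filled])
    u -= np.nanmin(u)                          # choose the additive constant so that min U = 0
    return centers, u


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    r = rng.normal(0.0, 1.0, 1_000_000)        # stand-in for Gaussian r^n with kT / kappa = 1
    x, u_pmf = effective_potential(r)
    inner = np.abs(x) < 1.5
    # For Gaussian samples, U_PMF should track kappa r^2 / 2 = r^2 / 2 up to sampling noise.
    print(np.max(np.abs(u_pmf[inner] - 0.5 * x[inner] ** 2)))
```

The histogram normalization only shifts \(-k_BT\ln \rho \) by a constant, which the zero-minimum convention absorbs. The same helper applies to velocity samples, with \(\frac{1}{2}mu^2\) as the reference curve.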

Following the spirit of Refs. [15, 16], we explore a more challenging noise distribution, \(\beta _P^n\) (the peaked distribution defined in Eq. (55a)), which is both discrete and asymmetric (see Fig. 1). As with the uniform noise discussed above, we present the results alongside those for Gaussian noise, which give correct, time-step independent behavior. We conducted numerical simulations of Eqs. (6) and (18) for different time steps and for normalized friction \(\alpha /m\Omega _0=0.1, 0.25, 0.5, 1, 2.5, 5\), as described above, and compared them to the moments evaluated in the analyses of Sect. 3. The resulting data are shown in Fig. 6, where the simulation results are indistinguishable from the analytical results for the third and fourth moments in Sects. 3.2 and 3.3. As expected from the analysis, the second moments of \(r^n\) and \(u^{n+\frac{1}{2}}\) shown in Fig. 6a, b, namely the potential and kinetic energies, agree exactly with statistical mechanics, since the peaked distribution for \(\beta _P^n\) satisfies the two moments in Eq. (10). Because the applied noise is asymmetric, the third moments of \(r^n\) and \(u^{n+\frac{1}{2}}\) may become non-zero for non-zero time steps. This is seen in Fig. 6c, d, which shows rather complex behavior as a function of the system parameters. We do, however, see that the third moments approach zero for \(\Omega _0\Delta {t}\rightarrow 0\). This is consistent with the central limit theorem, which guarantees a Gaussian outcome when a very large number of noise values contribute to the variables. Finally, the energy fluctuations (fourth moments) for the peaked distribution in Fig. 6e, f behave very similarly to those for uniform noise in Fig. 2c, d.
Even though the uniform and peaked distributions look very different, the similarity of their fourth-moment outcomes is not surprising. The analysis of the fourth moments in Sect. 3.3 shows that the difference between the two depends only on their kurtoses, \(\Gamma _4=\frac{9}{5}\) (uniform) and \(\Gamma _4=\frac{12}{5}\) (peaked). Again, all signatures of non-Gaussian noise vanish for \(\Omega _0\Delta {t}\rightarrow 0\), and non-zero time steps depress the thermodynamically important energy fluctuations derived from the fourth moments of the configurational and kinetic coordinates.
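A short calculation shows why only the skewness and kurtosis of the noise enter. It assumes, as holds for the linear system considered here, that in the stationary state a coordinate is a fixed linear combination \(x=\sum _k h_k\beta ^{n-k}\) of independent noise values. These have \(\langle \beta \rangle =0\), \(\langle \beta ^2\rangle =\sigma ^2\), skewness \(\Gamma _3\) (our notation) and kurtosis \(\Gamma _4\). The weights \(h_k\) depend on \(\Delta t\) and \(\alpha \) and are left unspecified. This is our own illustration, not a reproduction of Sects. 3.2 and 3.3.

```latex
% Uniform noise on [-w, w]
\langle\beta^2\rangle=\frac{1}{2w}\int_{-w}^{w}\beta^2\,d\beta=\frac{w^2}{3},\qquad
\langle\beta^4\rangle=\frac{1}{2w}\int_{-w}^{w}\beta^4\,d\beta=\frac{w^4}{5},\qquad
\Gamma_4=\frac{\langle\beta^4\rangle}{\langle\beta^2\rangle^2}=\frac{9}{5}

% x = \sum_k h_k \beta^{n-k} with independent \beta (cumulants of independent terms add)
\langle x^2\rangle=\sigma^2\sum_k h_k^2,\qquad
\langle x^3\rangle=\Gamma_3\,\sigma^3\sum_k h_k^3,\qquad
\langle x^4\rangle=3\sigma^4\Big(\sum_k h_k^2\Big)^2+(\Gamma_4-3)\,\sigma^4\sum_k h_k^4
```

The fluctuation-dissipation condition fixes the second moment. The fourth moment then differs from its Gaussian value only through \(\Gamma _4-3\), whatever the shape of the noise distribution. When many weights contribute comparably, the ratios \(\sum h_k^4/(\sum h_k^2)^2\) and \(\sum h_k^3/(\sum h_k^2)^{3/2}\) become small, which is the central-limit behavior seen for \(\Omega _0\Delta t\rightarrow 0\).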

Figures 7, 8, and 9 illustrate the simulated coordinate distributions arising from the peaked noise for \(\alpha /m\Omega _0=0.5\) and \(\Omega _0\Delta {t}=0.5, 1.5, 1.975\), respectively. For the smallest time step, shown in Fig. 7, the peaked noise produces a seemingly modest skewness and deformation of the coordinate distributions. Yet these modest deformations are responsible for the appreciable deviations from Gaussian characteristics in the third and fourth moments seen in Fig. 6c–f for \(\Omega _0\Delta {t}=0.5\). More dramatic deviations from Gaussian/Boltzmann characteristics are found over a large range of \(\Omega _0\Delta {t}\), including the value \(\Omega _0\Delta {t}=1.5\) shown in Fig. 8. The seemingly discontinuous distribution is not a result of insufficient statistics. Rather, it arises from interference between the discrete applied noise distribution and the discrete time step, which selects certain values of \(r^n\) and \(u^{n+\frac{1}{2}}\) over others. Notably, these peculiar distributions appear without any obvious or abrupt signatures in the first four moments, as seen in Fig. 6. As the time step is pushed close to the stability limit, \(\Omega _0\Delta {t}=1.975\), the coordinate distributions become smooth again (Fig. 9), and signatures of the applied peaked noise are visible in all panels. As with the uniform noise, this is the limit where the friction per time step is relatively large, so that the coordinates \(r^n\) and \(u^{n+\frac{1}{2}}\) are subject to only a few noise contributions at a time.

5 Discussion

Recent stochastic thermostats simulate Langevin equations with accurate statistics across the stability range of the time step when Gaussian noise is used [7, 8]. In light of these advances, we have revisited the advantages and disadvantages of using non-Gaussian thermal noise in discrete time. The traditional methods (e.g., Refs. [18, 20]) have systematic first- and second-order time-step errors, which necessitated rather small time steps for accurate simulations. It was therefore previously concluded [15, 16] that other distributions, including the computationally desirable uniform distribution, would be efficient substitutes. For small time steps, the central limit theorem would ensure near-Gaussian outcomes, so the imperfect noise would not significantly distort the simulation results further. This result has been a very useful tool over the years when computational efficiency could benefit from not converting stochastic variables from uniform to Gaussian. However, as we have demonstrated in this paper, non-Gaussian noise does not retain the time-step independence of the modern GJ methods once the time step becomes large, as these methods allow with Gaussian noise. The loss appears in thermodynamic measures that involve moments higher than the second. When the applied noise conforms to Eq. (10), the GJ methods are invariant to the specific noise distribution in measures of first and second moments, such as configurational and kinetic temperatures. It is thus misleading to judge the quality of the thermostat by those moments alone. The third and fourth moments, as well as the visual impression of the actual distributions of \(r^n\) and \(u^{n+\frac{1}{2}}\), show that the sampling of phase space can be quite distorted (non-Boltzmann) even if the measured temperatures are correct.
All of these results are consistent with how the noise must be defined in discrete time, namely through the integral Eq. (9). This definition ensures that any underlying distribution for \(\beta (t)\) yields a Gaussian outcome for \(\beta ^n\) by the central limit theorem. It follows that applying any distribution other than the Gaussian in discrete time is formally invalid. Such noise leads to significant sampling errors unless \(\alpha \Delta {t}/m\) is small enough for the one-time-step velocity attenuation parameter to be \(c_2\approx 1\). We therefore conclude that Gaussian noise must be applied to the modern stochastic integrators if one wishes to take advantage of their large time-step properties.

Data Availability

The data presented and discussed in the current study are available from the author on reasonable request.

References

Allen, M.P., Tildesley, D.J.: Computer Simulation of Liquids. Oxford University Press, Oxford (1989)

Frenkel, D., Smit, B.: Understanding Molecular Simulation: From Algorithms to Applications. Academic Press, San Diego (2002)

Rapaport, D.C.: The Art of Molecular Dynamics Simulation. Cambridge University Press, Cambridge (2004)

Hoover, W.G.: Computational Statistical Mechanics. Elsevier, New York (1991)

Leach, A.R.: Molecular Modeling: Principles and Applications, 2nd edn. Prentice Hall, Harlow (2001)

Grønbech-Jensen, N., Farago, O.: A simple and effective Verlet-type algorithm for simulating Langevin dynamics. Mol. Phys. 111, 983 (2013)

Jensen, L.F.G., Grønbech-Jensen, N.: Accurate configurational and kinetic statistics in discrete-time Langevin systems. Mol. Phys. 117, 2511 (2019)

Grønbech-Jensen, N.: Complete set of stochastic Verlet-type thermostats for correct Langevin simulations. Mol. Phys. 118, e1662506 (2020)

Langevin, P.: On the theory of Brownian motion. C. R. Acad. Sci. Paris 146, 530 (1908)

Coffey, W.T., Kalmykov, Y.P.: The Langevin Equation, 3rd edn. World Scientific, Singapore (2012)

Parisi, G.: Statistical Field Theory. Addison-Wesley, Menlo Park (1988)

Although not necessary, it is generally reasonable to expect the distribution of \(\beta (t)\) to be symmetric, so that all odd moments vanish.

Papoulis, A.: Probability, Random Variables, and Stochastic Processes. McGraw-Hill, London (1965)

For a general description of pseudo-random number generators, see, e.g., Press, W.H., Teukolsky, S.A., Vetterling, W.T., Flannery, B.P.: Numerical Recipes, 3rd edn. Cambridge University Press, Cambridge (2007)

Greiner, A., Strittmatter, W., Honerkamp, J.: Numerical integration of stochastic differential equations. J. Stat. Phys. 51, 95 (1988)

Dünweg, B., Paul, W.: Brownian dynamics simulations without Gaussian random numbers. Int. J. Mod. Phys. C 2, 817 (1991)

Finkelstein, J., Fiorin, G., Seibold, B.: Comparison of modern Langevin integrators for simulations of coarse-grained polymer melts. Mol. Phys. 118, e1649493 (2020)

Brünger, A., Brooks, C.L., Karplus, M.: Stochastic boundary conditions for molecular dynamics simulations of ST2 water. Chem. Phys. Lett. 105, 495 (1984)

Pastor, R.W., Brooks, B.R., Szabo, A.: An analysis of the accuracy of Langevin and molecular dynamics algorithms. Mol. Phys. 65, 1409 (1988)

Schneider, T., Stoll, E.: Molecular-dynamics study of a three-dimensional one-component model for distortive phase transitions. Phys. Rev. B 17, 1302 (1978)

Plimpton, S.: Fast parallel algorithms for short-range molecular dynamics. J. Comput. Phys. 117, 1 (1995)

Thompson, A.P., Aktulga, H.M., Berger, R., Bolintineanu, D.S., Brown, W.M., Crozier, P.S., in 't Veld, P.J., Kohlmeyer, A., Moore, S.G., Nguyen, T.D., Shan, R., Stevens, M., Tranchida, J., Trott, C., Plimpton, S.J.: LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022)

See the LAMMPS documentation [31] for the use of fix_langevin using the Schneider and Stoll thermostat of Ref. [20]

Weik, F., Weeber, R., Szuttor, K., Breitsprecher, K., de Graaf, J., Kuron, M., Landsgesell, J., Menke, H., Sean, D., Holm, C.: ESPResSo 4.0—an extensible software package for simulating soft matter systems. Euro. Phys. J. Special Topics 227, 1789 (2019). See also https://espressomd.github.io/ documentation for ESPResSo-4.2.0 Sec. 6.3.1

Verlet, L.: Computer experiments on classical fluids. I. Thermodynamical properties of Lennard-Jones molecules. Phys. Rev. 159, 98 (1967)

Swope, W.C., Andersen, H.C., Berens, P.H., Wilson, K.R.: A computer simulation method for the calculation of equilibrium constants for the formation of physical clusters of molecules: application to small water clusters. J. Chem. Phys. 76, 637 (1982)

Beeman, D.: Some multistep methods for use in molecular dynamics calculations. J. Comput. Phys. 20, 130 (1976)

Buneman, O.: Time-reversible difference procedures. J. Comput. Phys. 1, 517 (1967)

Hockney, R.W.: The potential calculation and some applications. Methods Comput. Phys. 9, 136 (1970)

Finkelstein, J., Cheng, C., Fiorin, G., Seibold, B., Grønbech-Jensen, N.: Bringing Langevin splitting methods into agreement with correct discrete-time thermodynamics. J. Chem. Phys. 155, 184104 (2021)

See http://lammps.sandia.gov/doc/Manual.pdf for the description of the “fix_langevin” command

Acknowledgements

The author is grateful to Charlie Sievers for sharing LAMMPS simulation results using the GJF-2GJ method, supporting the conclusions of this paper for more complex molecular dynamics systems, and to Lorenzo Mambretti for assistance with the RANMAR random number generator. The author is also grateful for initial discussions with Chungho Cheng on non-Gaussian noise.

Funding

No funding was received for conducting this study.


Ethics declarations

Conflict of interest

The author has no relevant financial or non-financial interests to disclose.

Additional information

Communicated by Ludovic Berthier.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Grønbech-Jensen, N. On the Application of Non-Gaussian Noise in Stochastic Langevin Simulations. J Stat Phys 190, 96 (2023). https://doi.org/10.1007/s10955-023-03104-8


DOI: https://doi.org/10.1007/s10955-023-03104-8