Abstract

For the discrete random field Curie–Weiss models, the infinite volume Gibbs states and metastates have been investigated and determined for specific instances of random external fields. In general, there are not many examples in the literature of non-trivial limiting metastates for discrete or continuous spin systems. We analyze the infinite volume Gibbs states of the mean-field spherical model, a model of continuous spins, in a general random external field with independent identically distributed components with finite moments of some order larger than four and non-vanishing variances of the second moments. Depending on the parameters of the model, we show that there exist three distinct phases: ordered ferromagnetic, ordered paramagnetic, and spin glass. In the ordered ferromagnetic and ordered paramagnetic phases, we show that there exists a unique infinite volume Gibbs state almost surely. In the spin glass phase, we show the existence of chaotic size dependence, provide a construction of the Aizenman–Wehr metastate, and consider both the convergence in distribution and almost sure convergence of the Newman–Stein metastates. The limiting metastates are non-trivial and their structure is universal due to the presence of Gaussian fluctuations and the spherical constraint.



1 Introduction

The mean-field spherical model in a random external field, which we also call the random field mean-field spherical model (RFMFS), is a variant of the mean-field spherical model (MFS), introduced in [18], in which the homogeneous external field is replaced by a random external field. Due to the introduction of external randomness, as opposed to the internal randomness of the associated Gibbs state, the finite volume Gibbs states (FVGS) of this model form a collection of random probability measures. In this paper, we prove theorems concerning the infinite volume limits of the collection of FVGS for a number of different modes of convergence introduced in the disordered systems literature. Due to model-specific features, we are able to prove these theorems in a significantly more general setting than is available for similar models in the literature.

The Curie–Weiss model in a random external field with independent Bernoulli distributed components was first introduced in [31]. In [1, 30, 31], the thermodynamics and the phase diagram of this model are determined. In [3], the fluctuations of the magnetization are considered for the Curie–Weiss model in a random external field where the Bernoulli distributed components are replaced by independent identically distributed components with finite absolute first moments. We will refer to the general random external field case as the random field Curie–Weiss model (RFCW), and to the Bernoulli distributed external field case as the Bernoulli field Curie–Weiss model (BFCW). One of the key results in [3] is the classification of the magnetization fluctuations in the RFCW model in terms of the “type” and “strength” of the global maximizing points of the free energy. This classification is similar to the one given by Ellis and Newman in [13] for the magnetization fluctuations of the generalized Curie–Weiss model (GCW), but the external randomness modifies the scaling of the fluctuations.

The infinite volume limits of the finite marginal distributions of the GCW model are given in [13]: they are convex combinations of product measures, where the number of such product measures is related to the number, type, and strength of the global maximizing points of the free energy of the model. Given the similarities in results and proof techniques concerning the magnetization fluctuations of the GCW and RFCW models, one might expect the infinite volume limits to be similar as well. This, however, is not necessarily the case.

For the RFCW model, the authors of [4] show that every infinite volume Gibbs state (IVGS), i.e., every limit point of the collection of FVGS, almost surely belongs to the set of convex combinations of certain product measures, where the number of product measures is determined by the number of global maximizing points of the free energy. However, for the BFCW model with parameters chosen so that there are exactly two global maximizing points, they show that there exists a set of probability 1 on which a countable collection of convex combinations of the two associated product measures can be obtained as limits along convergent subsequences. This phenomenon is known as chaotic size dependence (CSD), a notion due to Newman and Stein [26]. The implication is that there is no definitive unique almost sure limit of the FVGS; instead, the limits depend on the subsequence of volumes chosen.

To resolve the problem of chaotic size dependence, other forms of convergence in the infinite volume limit must be considered. Let us briefly motivate two such forms of convergence and introduce the notion of metastates. Since the FVGS are random probability measures, their distributions are probability measures on the space of probability measures, and we call such an object a metastate. One can then consider the convergence in distribution of the external field and the FVGS simultaneously. For the resulting limit in distribution, if it exists, one can take the regular conditional distribution of the random probability measure given the external field; this is then a metastate which is defined almost surely. This procedure is due to Aizenman and Wehr [2], and the result is typically called the Aizenman–Wehr metastate (A–W) or the conditioned metastate. For the other form of convergence, one considers the empirical measures of the sequence of FVGS and their limit either almost surely or in distribution. These empirical measures are called the Newman–Stein metastates (N–S), after Newman and Stein [26]. We refer to [9, Chapter 6] for more details and definitions concerning metastates.

In [4], for the BFCW in the case where there are two global maximizing points, it was shown that the limit in distribution of the random FVGS corresponds to a random probability measure which is split between the associated product measures with probability \(\frac{1}{2}\) independent of the external field. A similar result, concerning the A–W metastate, is obtained in [21]. In [21], the author also provides a proof that the N–S metastates corresponding to the FVGS do not converge almost surely, but they do converge in distribution. In doing so, it is also shown that the limit in distribution of the N–S metastates is more general in some sense since it also contains the result concerning the A–W metastate.

Let us now comment on some of the technical details of the RFCW and BFCW models so that we have a reference point for some of the results in this paper. The characterization of the free energy of the RFCW model given in [3] suggests that one can find random external fields which yield any number, type, and strength of the global maximizing points of the free energy. In principle, one could perform a similar analysis of the IVGS of the RFCW model as in [4, 21], but both of these works focus on the BFCW model, and the difficulties associated with general RFCW models are explicitly mentioned in [4]. Two features make the RFCW models technically simple to deal with. The first is that the FVGS are probability measures on a compact space, and, as such, the space of probability measures on this compact space is itself compact. As a result, one does not need to prove uniform tightness for the metastates, since it is automatic. The second is that the FVGS can be immediately written as continuous mixtures of product states via the Hubbard–Stratonovich transform. With these ingredients, most of the analysis of these models reduces to the analysis of the free energy and of the limiting procedure by which it is obtained.

In this paper, we rigorously determine the IVGS and the limiting metastates of the RFMFS model in the general setting where the external field has independent identically distributed components with finite moments of some order larger than four and non-vanishing variances of the second moments. We show that the IVGS of this model are always either unique or convex combinations of two pure states. We provide a characterization of the phases of this model, and we show that in the spin glass phase the model exhibits CSD. For the spin glass phase, we construct the A–W metastate and study the limiting properties of the N–S metastates both in distribution and almost surely. The results obtained are universal in the sense that they hold for any random external field satisfying the given assumptions.

The main results of this paper concerning the random external field are presented in Theorems 2.3.5, 2.3.10, 2.3.15, and 2.3.16. In order, they are: the existence of CSD and the determination of unique almost sure limits of the FVGS; the construction of the A–W metastate from the limit in distribution of the conditioned metastate probability measures; the almost sure divergence of the N–S metastates and the almost sure convergence of a random subsequence of N–S metastates to the A–W metastate; and, finally, the limit in distribution of the N–S metastates. Less central, but contextually important, results are the characterization of the spin glass phase provided in Table 3 and the triviality of the metastates in Theorem 2.3.17.

The methods of proof for this model differ substantially from those for the RFCW model. We first prove results for a deterministic inhomogeneous external field with convergent sample means and non-vanishing convergent sample standard deviations; such a deterministic inhomogeneous external field will be called strongly varying. For strongly varying external fields, we are able to fully determine the infinite volume Gibbs states by specifying the rates of convergence of the sample means and sample standard deviations. The IVGS and metastate results are then applications to specific instances of strongly varying fields. The technical difficulties of this model are twofold. First, the state space is not compact, and thus we must prove a number of uniform tightness results for the different metastates. Second, the inclusion of the external field breaks the permutation invariance of the standard mean-field spherical model; as a result, we must develop methods for resolving the finite marginals of certain singular probability distributions that do not rely on such symmetries.

To our knowledge, the results of this paper for the mean-field spherical model in a strongly varying field and the RFMFS are novel contributions to the literature. In particular, as noted in [9], there are very few explicit constructions of metastates for non-trivial lattice models, and those that do exist, such as the results of the BFCW model, are for specific choices of random external fields. In this paper, we are able to provide an explicit construction of the metastates of the RFMFS model for a large collection of general random external fields, and show that they have a relatively simple and universal structure despite the generality of the random external field.

1.1 Related Works

Recently, the free energy, overlap structures, and thermodynamic fluctuations were studied for the spherical Sherrington–Kirkpatrick model (SSK) and a variant of it in [5,6,7]. The SSK model is similar to our model in the sense that it is a lattice model with a spherical constraint and some form of external randomness. However, the critical difference is that in these models the external randomness typically appears in the interactions between spins. Furthermore, these works are not concerned with the construction of metastates. The literature on such models is too vast to cite here; instead, we refer to the book [33].

Let us also mention the large and moderate deviation results for the RFCW models given in [23, 24] which initially spurred our interest in these types of models and provided valuable references in their introductions. In particular, there are examples given in [24] which consider random external fields which do not have independent identically distributed components.

Complementing the original introduction of the MFS model in [18], the IVGS of the MFS model can be deduced from results presented in [20]. They are not explicitly referred to as IVGS there, but the finite marginal distributions are derived. More generally, the work [20] can also help in understanding some of the rigorous methods of proof used in this paper, but the core methods here differ significantly due to the lack of permutation invariance of the models.

1.2 Reading Guide

This paper is split into two major sections which are Sects. 2 and 3. The main results are contained in Sect. 2, and they are presented along with a significant amount of exposition, intermediate results, and proof sketches. When necessary, details for non-trivial proofs are provided in Sect. 3. In general, the main results of this paper and their proof sketches should be able to be understood by reading only Sect. 2.

Although this paper is primarily concerned with the random external field, Sect. 2 is split into two subsections which are Sects. 2.2 and 2.3. Many key results are first developed for a deterministic inhomogeneous external field in Sect. 2.2 and then applied to the case of the random external field in Sect. 2.3. The main results concerning the random external field are provided in Sect. 2.3, but most of their proofs rely directly on results developed in Sect. 2.2.

In Sect. 3, we provide the non-trivial proofs of main results and intermediate results from Sect. 2. Section 3 also contains intermediate results, with proofs, that do not appear in Sect. 2. To save space and improve clarity, we will typically omit extraneous superscripts and subscripts when it is clear which object is being referred to in a proof. If there is a relevant variable dependence in these indices, we keep them in the proofs. In general, we have tried to name certain key objects consistently so that they are the same throughout the paper.

The appendix contains general definitions and proofs related to the uniform tightness, approximation, and weak convergence of probability measures related to the metastates.

2 Main Results

2.1 Preliminaries

The model of interest in this paper is an equilibrium statistical mechanical model of an unbounded continuous spin system with long-range interactions, a spherical constraint, and an external field which is initially deterministic. Later on, we will consider the case where the external field is random. We will refer to this model and its constituents, which are to be defined, as the RFMFS model.

To define this model, we begin with the mean-field Hamiltonian in an external field \(H_n^{J,h}: \mathbb {R}^n \rightarrow \mathbb {R}\) given by

where \(J > 0\) is a coupling constant, and \(h \in \mathbb {R}^\mathbb {N}\) is an external field. The state space on which this Hamiltonian is defined is continuous and unbounded, as opposed to discrete and bounded as for the Ising model. Since each spin interacts with all other spins, the interaction defined by this Hamiltonian is a long-range interaction, often also referred to as a mean-field interaction. The uniform probability measure \(\omega _n\) on the \((n-1)\)-dimensional sphere with radius \(\sqrt{n}\) is formally given by its density \(\omega _n(d \phi )\) on \(\mathbb {R}^n\) which has the formula

where \(\delta (\cdot )\) is the Dirac delta, \(\Vert \cdot \Vert \) is the Euclidean norm, and \(d \phi \) is the Lebesgue measure. We refer to the use of this uniform measure as the spherical constraint of this model. The Gibbs state \(\mu _n^{\beta ,J,h}\) of this model is the probability measure on \(\mathbb {R}^n\) formally given by its density \(\mu _n^{\beta ,J,h} (d \phi )\) on \(\mathbb {R}^n\) which has the formula

where \(\beta > 0\) is the inverse temperature, and \(Z_n(\beta ,J,h)\) is the partition function formally given by

It is common to rescale certain parameters or set them to fixed values in models of this kind. We deliberately leave the parameters unscaled so that their contributions can be seen more transparently in later results, such as Lemma 3.4.2 or the results presented in Table 1, where the ratio of the coupling constant J and the limiting sample standard deviation \(m^\perp \) (to be introduced) is of interest.

In this paper, most practical calculations are first carried out formally using the properties of \(\delta \)-functions, and these calculations are then given rigorous proofs later on. The Gibbs state is rigorously redefined by its action on \(f \in C_b (\mathbb {R}^n)\), where \(C_b (\mathbb {R}^n)\) is the space of continuous bounded functions on \(\mathbb {R}^n\), given by

where

and \(d \Omega \) is the integral over the angular part of the hyperspherical coordinates on \(\mathbb {R}^n\). Note that this is a redefinition, and the n-dependent factors in this definition have been chosen for convenience.
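For numerical experiments it can be convenient to sample \(\omega _n\) directly rather than through hyperspherical coordinates. The sketch below uses a standard construction that is not taken from this paper: a standard Gaussian vector in \(\mathbb {R}^n\) has a uniformly distributed direction by rotation invariance, so normalizing it and rescaling to radius \(\sqrt{n}\) gives a sample from \(\omega _n\). The function name sample_sphere is hypothetical. Since \(\Vert \phi \Vert ^2 = n\) and the coordinates are exchangeable under \(\omega _n\), each coordinate satisfies \(\omega _n[\phi _1^2] = 1\), which the Monte Carlo estimate reproduces approximately.

```python
import math
import random

def sample_sphere(n, rng):
    """One draw from omega_n, the uniform probability measure on the
    (n-1)-dimensional sphere of radius sqrt(n) in R^n."""
    g = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(t * t for t in g))
    # A standard Gaussian vector has a uniformly distributed direction
    # (rotation invariance); rescale it to radius sqrt(n).
    return [math.sqrt(n) * t / norm for t in g]

rng = random.Random(0)
n = 20
samples = [sample_sphere(n, rng) for _ in range(20_000)]

# Every draw satisfies the spherical constraint ||phi||^2 = n up to rounding.
max_err = max(abs(sum(t * t for t in phi) - n) for phi in samples)

# Exchangeability and ||phi||^2 = n give omega_n[phi_1^2] = 1 exactly;
# the Monte Carlo estimate should be close to 1.
second_moment = sum(phi[0] ** 2 for phi in samples) / len(samples)
print(max_err, second_moment)
```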

Note that we have explicitly defined the Gibbs state by its action on continuous bounded functions, and we reserve the term expectation for real-valued random variables that will appear later on in this paper. We will encounter probabilistic objects, such as probability measure-valued random variables and \(\mathbb {R}^\mathbb {N}\)-valued random variables, which are defined on a common underlying probability space. For such objects, it is convenient to express many of their properties as expectations with respect to this underlying probability measure, which is why we keep the definitions of the Gibbs state and of the distribution of a random variable distinct, although both are probability measures.

For technical reasons, we extend the space on which \(\mu _n^{\beta , J,h}\) acts as a probability measure to \(\mathbb {R}^\mathbb {N}\) by “tensoring on 0” on the remaining \(\mathbb {R}^{\mathbb {N} \setminus \{ 1,2,...,n\}}\) portion of the space. For the final time, we redefine \(\mu _n^{\beta ,J,h}:= \mu _n^{\beta ,J,h} \otimes \delta _0\), where \(\delta _0\) is the Dirac measure on the point with all components 0 in \(\mathbb {R}^{\mathbb {N} \setminus \{ 1,2,...,n\}}\). Probability measures on \(\mathbb {R}^\mathbb {N}\) constructed from probability measures on \(\mathbb {R}^n\) in this way will be referred to as 0-tensored versions. A function \(f: \mathbb {R}^\mathbb {N} \rightarrow \mathbb {R}\) is said to be local if there exists a finite index set \(I \subset \mathbb {N}\) and a function \(f': \mathbb {R}^I \rightarrow \mathbb {R}\) such that \(f(x) = f' (\pi _I (x))\), where \(\pi _I\) is the canonical projection from \(\mathbb {R}^\mathbb {N}\) to \(\mathbb {R}^I\). When dealing with local functions, we will typically refer to the representation function \(f'\) as f, and the index set on which the function is local will be called I. This extension procedure is purely technical because, for a fixed local function and large enough n, the expectation of this local function is the same under the original Gibbs state and under the extended Gibbs state.

For a fixed n, such a probability measure \(\mu _n^{\beta ,J,h}\) is referred to as a finite volume Gibbs state (FVGS), and the collection of FVGS will be denoted by \(\mathcal {G}(\beta ,J,h):= \{ \mu _n^{\beta ,J,h} \}_{n \in \mathbb {N}}\). The entire collection \(\mathcal {G} (\beta ,J,h)\) is then a collection of probability measures on \(\mathbb {R}^\mathbb {N}\).

The two principal objects of interest in this paper are the limiting free energy and the infinite volume Gibbs states (IVGS). We define them here explicitly due to their importance.

Definition 2.1.1

(Limiting free energy) The limiting free energy \(f(\beta ,J,h)\), when it exists, is given by

Note that the limiting free energy is defined here with a different sign and unit convention than the physicist’s limiting free energy.

To define the IVGS, recall first that the ambient space \(\mathbb {R}^\mathbb {N}\), equipped with the product topology, is separable and metrizable by a metric with respect to which it is complete. Such a topological space is called a Polish space. Recall also that, since \(\mathbb {R}^\mathbb {N}\) is a Polish space, the space of probability measures \(\mathcal {M}_1(\mathbb {R}^\mathbb {N})\) on \(\mathbb {R}^\mathbb {N}\) can be equipped with a topology which is separable, metrizable by the Prokhorov metric d, and complete with respect to this metric, see [14, Chapter 3].

Definition 2.1.2

(Infinite volume Gibbs states) The collection of infinite volume Gibbs states \(\mathcal {G}_\infty (\beta ,J,h)\), when it is non-empty, is the collection of probability measures on \(\mathbb {R}^\mathbb {N}\) given by

where \(L(\mathcal {G}(\beta ,J,h))\) is the collection of limit points of the finite volume Gibbs states.

In this definition, it is useful to use the sequential characterization of a limit point in a metric space: a limit point of a set A in a metric space X is any point of X which can be obtained as the limit of a convergent sequence of elements of A other than the point itself.

Since mean-field models typically do not involve boundary conditions or local specifications in the same sense as the classical Gibbsian formalism for lattice spin systems, see [9, Chapter 4], the definition of the IVGS is less involved and does not initially require any further study of the convexity properties of the Gibbs states. This definition reflects the fact that a typical mean-field interaction is invariant under permutations of the underlying spins, and that the notion of increasing volumes in the thermodynamic limit can be replaced by an increasing number of lattice sites, since the structure of the sequence of increasing volumes is irrelevant to the mean-field interaction. This is why the FVGS are labelled as a sequence of probability measures rather than a collection of probability measures indexed by subsets corresponding to volumes.

The metrization of \(\mathcal {M}_1(\mathbb {R}^\mathbb {N})\) by the Prokhorov metric d is inconvenient for practical calculations. Instead, we will use the fact that the collection of local bounded Lipschitz functions \({\text {LBL}} (\mathbb {R}^\mathbb {N})\) from \(\mathbb {R}^\mathbb {N}\) to \(\mathbb {R}\) is convergence determining on \(\mathcal {M}_1(\mathbb {R}^\mathbb {N})\), see [14, Chapter 3] and [19, Chapter 13]. A local function f is Lipschitz if it is Lipschitz on \(\mathbb {R}^I\) with the standard Euclidean norm. In terms of the Prokhorov metric, it follows that a sequence of probability measures \(\{ \mu _n \}_{n \in \mathbb {N}}\) converges to a probability measure \(\mu \) with respect to the Prokhorov metric if and only if \(\mu _n[f] \rightarrow \mu [f]\) in the limit as \(n \rightarrow \infty \) for any \(f \in {\text {LBL}} (\mathbb {R}^\mathbb {N})\). Consequently, \(\mu \in \mathcal {G}_\infty (\beta ,J,h)\) if and only if there exists a subsequence \(\{ n_k \}_{k \in \mathbb {N}}\) such that \(\mu _{n_k}^{\beta ,J,h} [f] \rightarrow \mu [f]\) for any \(f \in {\text {LBL}} (\mathbb {R}^\mathbb {N})\). Convergence with respect to the Prokhorov metric is often called weak convergence, and we will sometimes write \(\mu _n \rightarrow \mu \) weakly in the limit as \(n \rightarrow \infty \), by which we mean that \(d(\mu _n, \mu ) \rightarrow 0\) in the limit as \(n \rightarrow \infty \). If it is clear from the context, we will omit “weakly” and simply say that \(\mu _n \rightarrow \mu \) in the limit as \(n \rightarrow \infty \).
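As a small numerical illustration of the criterion \(\mu _n[f] \rightarrow \mu [f]\) for \(f \in {\text {LBL}} (\mathbb {R}^\mathbb {N})\), one can formally set \(\beta = 0\) (outside the range \(\beta > 0\) considered in this paper), so that the Gibbs weight is constant and the Gibbs state reduces to \(\omega _n\). The local function \(f(\phi ) = \cos (\phi _1)\) is bounded and 1-Lipschitz with \(I = \{1\}\). It is a classical fact that the first coordinate of a uniform point on the sphere of radius \(\sqrt{n}\) converges in distribution to a standard Gaussian, so \(\omega _n[f] \rightarrow e^{-1/2}\); for \(n = 3\), Archimedes' theorem gives that \(\phi _1\) is uniform on \([-\sqrt{3}, \sqrt{3}]\), so \(\omega _3[f] = \sin (\sqrt{3})/\sqrt{3}\). The sketch samples only \(\phi _1\), using that the squared Gaussian norm of the remaining \(n-1\) coordinates is chi-square distributed; the helper names first_coordinate and estimate are hypothetical.

```python
import math
import random

def first_coordinate(n, rng):
    """Sample phi_1 under omega_n (uniform on the sphere of radius sqrt(n)).
    With g standard Gaussian in R^n, phi = sqrt(n) g / ||g||, and
    ||g||^2 - g_1^2 is chi-square with n - 1 degrees of freedom,
    i.e. Gamma(shape=(n - 1)/2, scale=2)."""
    g1 = rng.gauss(0.0, 1.0)
    rest = rng.gammavariate((n - 1) / 2.0, 2.0)
    return math.sqrt(n) * g1 / math.sqrt(g1 * g1 + rest)

def estimate(f, n, samples, rng):
    """Monte Carlo estimate of omega_n[f] for a local f with I = {1}."""
    return sum(f(first_coordinate(n, rng)) for _ in range(samples)) / samples

rng = random.Random(3)
f = math.cos  # bounded and 1-Lipschitz, local with index set I = {1}
est_small = estimate(f, 3, 50_000, rng)
est_large = estimate(f, 1000, 50_000, rng)
exact_small = math.sin(math.sqrt(3)) / math.sqrt(3)  # n = 3: phi_1 uniform on [-sqrt(3), sqrt(3)]
gaussian_limit = math.exp(-0.5)                       # E[cos g] for g ~ N(0, 1)
print(est_small, exact_small)
print(est_large, gaussian_limit)
```

The gap between the \(n = 3\) value and the Gaussian limit shows that finite volume expectations of a fixed local function genuinely change with n before settling.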

In the literature, the mean-field spherical model was introduced in [18], and it corresponds to the model presented in this paper with an external field h whose components are all equal. In [18], the authors computed the limiting free energy of the mean-field spherical model, with a different sign and unit convention. In [20], the IVGS of the mean-field spherical model are identified indirectly. Historically, mean-field models were introduced as simplified versions of more complicated models which retain enough non-trivial features to be worth studying. For example, the Curie–Weiss model, which is the mean-field version of the classical Ising model, was introduced as an exactly solvable simplified model which still exhibits interesting thermodynamic phenomena such as phase transitions and anomalous thermodynamic fluctuations. We refer to [16] for more details on the Curie–Weiss model and the classical Ising model. In addition, various features of certain generalizations of the classical Curie–Weiss model were studied in depth in [13]. From this perspective, the mean-field spherical model is the mean-field version of the continuous spin version of the Ising model known as the Berlin–Kac model, introduced in [8].

2.2 Deterministic Inhomogeneous External Field

We will now begin the exposition and presentation of the main results concerning the deterministic inhomogeneous external field. Denote \(m_n^h:= (m_n^{h, \parallel }, m_n^{h, \perp }) \in \mathbb {R}^2\), where \(m_n^{h,\parallel }\) is the finite sample mean and \(m_n^{h,\perp }\) is the finite sample standard deviation of the external field h given by

The magnetization \(M_n: \mathbb {R}^n \rightarrow \mathbb {R}\) is given by

where \(1 \in \mathbb {R}^\mathbb {N}\) is the vector with all components 1, \(1_n:= \pi _{\{ 1,2,...,n\}} (1)\), and \(\left\langle \cdot , \cdot \right\rangle \) is the Euclidean inner product. Observe that the Hamiltonian can be written in the following form

where \(h_n:= \pi _{\{ 1,2,...,n\}} (h)\). Suppose now that h satisfies \(m_n^{h,\perp } \not = 0\), and consider the plane \(W_n^h \subset \mathbb {R}^n\) spanned by the vectors \(1_n\) and \(h_n\). An orthonormal basis of \(W_n^h\) is formed by the unit vectors \(\{ w_{1,n}, w^h_{2,n} \}\) defined by

The Hamiltonian can be written as

Let \(\{ v_{k,n}^h \}_{k=3}^n\) be an orthonormal basis of \(\left( W_n^h \right) ^\perp \), where \(\left( W_n^h \right) ^\perp \) is the orthogonal complement of \(W_n^h \). Let \(O^h_n\) be the orthogonal change of coordinates \(O^h_n: \mathbb {R}^n \rightarrow \mathbb {R}^n\) given by \(\left( O^h_n(\phi ) \right) _1 = \left\langle w_{1,n}, \phi \right\rangle \), \(\left( O^h_n(\phi ) \right) _2 = \left\langle w^h_{2,n}, \phi \right\rangle \), and \(\left( O^h_n(\phi ) \right) _k = \left\langle v_{k,n}^h, \phi \right\rangle \) for \(k=3,...,n\). Using this change of coordinates and the hyperspherical change of coordinates, formally, we have

where \(z = (x,y) \in \mathbb {R}^2\), \(|\mathbb {S}^{n - 3}|\) is the integral over the angular coordinates of the \((n-3)\)-dimensional unit sphere, and \(B(0,1) \subset \mathbb {R}^2\) is the unit disk in \(\mathbb {R}^2\). This formal calculation leading to Equation (2.2.5) is given a rigorous proof in Lemma 3.1.1.
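The following Python sketch checks the change of coordinates numerically for a small random field. Several ingredients are assumptions, because the corresponding displayed formulas are not reproduced in this version of the text: the sample standard deviation uses the \(1/n\) convention; the basis vectors are taken to be the Gram–Schmidt output \(w_{1,n} = 1_n/\sqrt{n}\) and \(w^h_{2,n} = (h_n - m_n^{h,\parallel } 1_n)/(\sqrt{n}\, m_n^{h,\perp })\); the reduced variables are \(x = \left\langle w_{1,n}, \phi \right\rangle /\sqrt{n}\) and \(y = \left\langle w^h_{2,n}, \phi \right\rangle /\sqrt{n}\); and the Hamiltonian is normalized as \(H_n^{J,h}(\phi ) = -\frac{J}{2n} \left\langle 1_n, \phi \right\rangle ^2 - \left\langle h_n, \phi \right\rangle \). Under these assumptions, the point z = (x, y) of a configuration on the sphere lies in the unit disk, the remaining \(n-2\) coordinates carry the rest of \(\Vert \phi \Vert ^2/n\), and the energy depends on \(\phi \) only through z, namely \(H_n^{J,h}(\phi ) = -n\left( \frac{J}{2}x^2 + m_n^{h,\parallel } x + m_n^{h,\perp } y\right) \). The helper names plane_basis and complete_basis are hypothetical.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def plane_basis(h):
    """Sample mean/std (1/n convention, assumed) and the orthonormal basis
    w1 = 1_n / sqrt(n), w2 = (h_n - m_par 1_n) / (sqrt(n) m_perp) of W_n^h."""
    n = len(h)
    m_par = sum(h) / n
    m_perp = math.sqrt(sum((t - m_par) ** 2 for t in h) / n)
    if m_perp < 1e-12:
        raise ValueError("homogeneous field: 1_n and h_n span a line, not a plane")
    w1 = [1.0 / math.sqrt(n)] * n
    w2 = [(t - m_par) / (math.sqrt(n) * m_perp) for t in h]
    return m_par, m_perp, w1, w2

def complete_basis(vectors, n):
    """Extend orthonormal vectors to an orthonormal basis of R^n (modified
    Gram-Schmidt on the standard basis); the rows form the matrix of O_n^h."""
    basis = [list(v) for v in vectors]
    for i in range(n):
        if len(basis) == n:
            break
        e = [1.0 if j == i else 0.0 for j in range(n)]
        for b in basis:
            c = dot(e, b)
            e = [a - c * bb for a, bb in zip(e, b)]
        norm = math.sqrt(dot(e, e))
        if norm > 1e-8:
            basis.append([a / norm for a in e])
    return basis

rng = random.Random(1)
n, J = 8, 1.5
h = [rng.uniform(-1.0, 2.0) for _ in range(n)]
m_par, m_perp, w1, w2 = plane_basis(h)
O = complete_basis([w1, w2], n)

# A point on the sphere of radius sqrt(n).
g = [rng.gauss(0.0, 1.0) for _ in range(n)]
phi = [math.sqrt(n) * t / math.sqrt(dot(g, g)) for t in g]

coords = [dot(row, phi) for row in O]            # O_n^h(phi)
x, y = coords[0] / math.sqrt(n), coords[1] / math.sqrt(n)
rest = sum(c * c for c in coords[2:]) / n        # share of ||phi||^2 / n in (W_n^h)^perp

# Hypothetical normalisation of the Hamiltonian (an assumption, not from the text).
H = -J / (2 * n) * sum(phi) ** 2 - dot(h, phi)
H_reduced = -n * (J * x ** 2 / 2 + m_par * x + m_perp * y)
print(x * x + y * y + rest, H, H_reduced)
```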

Let us remark that if \(m_n^{h, \perp } = 0\), then \(h_n\) is a vector with equal components and the calculation is the same as for the standard mean-field spherical model in [18]. This is why the standard mean-field spherical model concerns a homogeneous external field, while the model presented in this paper concerns an inhomogeneous external field. From here on, whenever we refer to an external field, we mean an inhomogeneous external field unless otherwise stated.

2.2.1 Limiting Free Energy

To continue, we state the first condition that the external field must satisfy as a definition.

Definition 2.2.1

An external field h is strongly varying if

Analogously, one could define a weakly varying external field as an external field for which the limit m exists and satisfies \(m \in \mathbb {R} \times \{ 0 \}\). In other words, the “strongly varying” part of the field is associated with a non-vanishing limiting sample standard deviation. Note also that the strongly varying condition ensures that \(m_n^{h, \perp } \not = 0\) for large enough n, so every strongly varying external field is inhomogeneous. From this point onwards, unless explicitly stated otherwise, we will assume that the external field is strongly varying.
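The condition of Definition 2.2.1 is not displayed in this version of the text; following the surrounding discussion, we read it as requiring that the sample statistics \(m_n^h\) converge to a limit \(m = (m^\parallel , m^\perp )\) with \(m^\perp \not = 0\). The short Python sketch below, with a hypothetical helper name and the \(1/n\) convention for the sample standard deviation assumed, computes \(m_n^h\) for three fields: the alternating field \(h_i = (-1)^i\), which is strongly varying with \(m = (0,1)\); the field \(h_i = 1 + 1/i\), which is weakly varying with \(m = (1,0)\); and one realization of an i.i.d. Gaussian field with mean 0.5 and standard deviation 2. For the last example, the strong law of large numbers applied to \(h_i\) and \(h_i^2\) shows that, for any i.i.d. field with finite second moment and non-zero variance, \(m_n^h\) converges almost surely to the mean and standard deviation of \(h_1\), so almost every realization is strongly varying.

```python
import math
import random

def sample_stats(h):
    """m_n^h = (sample mean, sample standard deviation), 1/n convention assumed."""
    n = len(h)
    m_par = sum(h) / n
    m_perp = math.sqrt(sum((t - m_par) ** 2 for t in h) / n)
    return m_par, m_perp

n = 100_000
alternating = [(-1.0) ** i for i in range(1, n + 1)]  # m_n -> (0, 1): strongly varying
decaying = [1.0 + 1.0 / i for i in range(1, n + 1)]   # m_n -> (1, 0): weakly varying
rng = random.Random(2)
iid = [rng.gauss(0.5, 2.0) for _ in range(n)]         # a.s. m_n -> (0.5, 2.0) by the SLLN

for name, h in [("alternating", alternating), ("decaying", decaying), ("iid", iid)]:
    print(name, sample_stats(h))
```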

Denote the exponential tilting function \(\psi _n^{\beta ,J,h}\) to be the function \(\psi _n^{\beta ,J,h}: B(0,1) \rightarrow \mathbb {R}\) given by

By setting \(f \equiv 1\) in Lemma 3.1.1, we see that the exponential tilting function is related to the partition function \(Z_n(\beta ,J,h)\) by the following formula

Motivated by this representation, we denote the limiting exponential tilting function \(\psi ^{\beta ,J,m}\) to be the function \(\psi ^{\beta ,J,m}: B(0,1) \rightarrow \mathbb {R}\) given by

For the exponential tilting functions, it follows that

from which it follows that

By using this uniform convergence, we have the first main result.

Theorem 2.2.2

Let h be a strongly varying external field.

It follows that

The proof of this result, see Sect. 3.3, is a routine large deviations calculation once we show that the set of global maximizing points \(M^*(\beta ,J,m)\) of \(\psi ^{\beta ,J,m}\) is compact and non-empty, see Lemma 3.3.1 for the proof.

One can see from this theorem that if we allowed for a weakly varying external field, i.e., \(m^\perp = 0\), then we would recover the same free energy as for the standard mean-field spherical model presented in [18]. With respect to the manipulation of the Hamiltonian in Equation (2.2.4), when one applies a homogeneous external field, characterized by the external field h having equal components, there is a single magnetization “component”, which corresponds to the projection of \(\phi \) onto the unit vector \(w_{1,n}\). If the external field is not homogeneous, there is a second magnetization component corresponding to the projection of \(\phi \) onto the unit vector \(w^h_{2,n}\). Subject to the strongly varying condition, this perpendicular magnetization component is non-vanishing in the limit, and it is the distinguishing feature between the standard mean-field spherical model in a homogeneous external field and the mean-field spherical model in a strongly varying field.

2.2.2 Partial Classification of Infinite Volume Gibbs States

Our next main result concerns a partial classification of the IVGS. With reference to Lemma 3.1.1, we begin by defining the mixture probability measure \(\rho _n^{\beta ,J,h}\) on B(0, 1) by its density

where dz is the Lebesgue measure on B(0, 1). Next, we define the “microcanonical” probability measure on \(\mathbb {R}^\mathbb {N}\) as the 0-tensored version of the probability measure given via its action on continuous bounded functions \(f \in C_b (\mathbb {R}^n)\) by

As a direct application of Lemma 3.1.1 along with the definitions given by Equation (2.2.9) and Equation (2.2.10), we have the following central representation result for the FVGS.

Lemma 2.2.3

Let h be a strongly varying external field.

It follows that

Using a calculation similar to the one in the proof of the form of the limiting free energy, we show that the collection of mixture probability measures \(\{ \rho _n^{\beta ,J,h}\}_{n \in \mathbb {N}}\) is uniformly tight and that its limit points are probability measures supported on the set \(M^*(\beta ,J,m)\).

Lemma 2.2.4

Let h be a strongly varying external field.

It follows that the collection of probability measures \(\{ \rho ^{\beta ,J,h}_n\}_{n \in \mathbb {N}}\) is uniformly tight and

For the full proof, see Sect. 3.3. Note that the definition of the support we are using is \({\text {supp}}(\rho ):= \{ x \in B(0,1): \forall \delta> 0, \ \rho (B(x, \delta )) > 0 \}\).

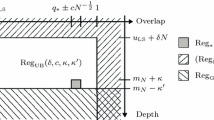

For this model, we show that the structure of \(M^*(\beta ,J,m)\) is simple and can be characterized completely: depending on the parameters of the model, it consists of either one or two elements (see Lemmas 3.4.1 and 3.4.2 for the proofs). In the parameter range where \(m^\parallel = 0\) and \(m^\perp < J\), the transition from one element to two elements is marked by the critical inverse temperature \(\beta _c:= \frac{J}{(J - m^\perp )(J + m^\perp )}\): the set consists of a single element when \(\beta \le \beta _c\) and of two elements when \(\beta > \beta _c\). When the mean is non-vanishing, i.e., \(m^\parallel \not = 0\), the set consists of a single element \(z^* = (x^*, y^*)\) with \(x^* > 0\) if \(m^\parallel > 0\) and \(x^* < 0\) if \(m^\parallel < 0\). In the remaining parameter ranges, the set consists of a single element \(z^0 = (x^0, y^0)\) with \(x^0 = 0\).

We summarize these results in Table 1.

Let us now classify these parameter ranges as follows. We say that we are in the pure state (PS) parameter range if the set \(M^*(\beta ,J,m)\) consists of a single element; this parameter range corresponds to the first three rows of Table 1. We say that we are in the mixed state (MS) parameter range if the set \(M^*(\beta ,J,m)\) consists of two elements; this parameter range corresponds to the last row of Table 1. We always denote the two elements of this set by \(z^+ = (x^+, y^+)\) and \(z^- = (x^-, y^-)\), where \(x^+ > 0\).
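The classification can be written as a small decision rule. The Python sketch below only transcribes the conditions stated above (the function names are hypothetical). It returns `"MS"` exactly when \(m^\parallel = 0\), \(m^\perp < J\) and \(\beta > \beta _c\), and `"PS"` otherwise.

```python
def beta_c(J, m_perp):
    """Critical inverse temperature J / ((J - m_perp)(J + m_perp)), for 0 <= m_perp < J."""
    return J / ((J - m_perp) * (J + m_perp))

def parameter_range(beta, J, m_par, m_perp):
    """Return 'MS' if M*(beta, J, m) has two elements and 'PS' if it has one."""
    # The short-circuit ensures beta_c is only evaluated when m_perp < J.
    if m_par == 0 and m_perp < J and beta > beta_c(J, m_perp):
        return "MS"
    return "PS"
```

For instance, with \(J = 1\) and \(m^\perp = 1/2\) we get \(\beta _c = 4/3\), so \(\beta = 2\) lies in the MS range while \(\beta = 1\) lies in the PS range. Any non-zero \(m^\parallel \) gives the PS range.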

As for the probability measure \(\nu _n^{z,h}\), we show that, in the limit as \(n \rightarrow \infty \), it converges uniformly in the variable z, in a suitable sense, to a sufficiently regular probability measure on \(\mathbb {R}^\mathbb {N}\). To begin with, let \(\eta \) denote the probability measure on \(\mathbb {R}^\mathbb {N}\) given via its action on \(f \in {\text {LBL}} (\mathbb {R}^\mathbb {N})\) by

where \(I \subset \mathbb {N}\) is the local index set of f. This probability measure is the countably infinite-dimensional analogue of the standard finite-dimensional Gaussian probability measure.
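As a worked illustration, we assume that \(\eta \) is the product of standard one-dimensional Gaussian measures, which is our reading of the preceding sentence. Under that assumption, its action on a local function f with finite local index set I reduces to a finite-dimensional Gaussian integral. For example, with \(f(\phi ) = \cos (\phi _1)\):

$$\begin{aligned} \eta [f] = \int _{\mathbb {R}^{I}} f(\phi _I) \prod _{i \in I} \frac{e^{-\phi _i^2/2}}{\sqrt{2\pi }} \, d\phi _I , \qquad \eta [\cos (\phi _1)] = \int _{\mathbb {R}} \cos (t) \frac{e^{-t^2/2}}{\sqrt{2\pi }} \, dt = e^{-1/2} . \end{aligned}$$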

We will need the projection \(P_n^h: \mathbb {R}^n \rightarrow \mathbb {R}^n\) to the subspace \(W_n^h\) given by the formula

Let \(T_n^{z,h}: \mathbb {R}^\mathbb {N} \rightarrow \mathbb {R}^{n} \times \mathbb {R}^{\mathbb {N} {\setminus } [n]} \subset \mathbb {R}^\mathbb {N}\) be the transport map given by

and \((T_n^{z,h} (\phi ))_2 = 0\), where \(z = (x,y) \in B(0,1)\). For the given probability measures and transport maps, we show that \(\nu _n^{z,h} = { T_{n}^{z,h} }_* \eta \), see Lemma 3.2.1, where \({ T_{n}^{z,h} }_* \eta \) is the pushforward measure of \(\eta \) by \(T_n^{z,h}\).

Continuing, note that

where \(I \subset \mathbb {N}\) is any finite index set. Let \(T_\infty ^{z,h}: \mathbb {R}^\mathbb {N} \rightarrow \mathbb {R}^\mathbb {N}\) be the transport map given by

where \(z = (x,y) \in B(0,1)\) and \(m^\perp \not = 0\). Using this transport map, consider the probability measure \(\nu _\infty ^{z,h}\) on \(\mathbb {R}^\mathbb {N}\) given by \(\nu _\infty ^{z,h}:={T^{z,h}_\infty }_* \eta \). We show the following uniform convergence result concerning the convergence of \(\nu _n^{z,h}\) to \(\nu _\infty ^{z,h}\).
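The identity \(\nu = T_* \eta \) means that expectations under \(\nu \) can be computed by drawing \(\phi \) from \(\eta \) and applying the transport map: \(\nu [f] = \eta [f \circ T]\). The Python sketch below illustrates only this mechanism. It does not reproduce the maps \(T_n^{z,h}\) or \(T_\infty ^{z,h}\). Instead it uses a hypothetical affine map `T_affine` and assumes, as above, that \(\eta \) has i.i.d. standard Gaussian coordinates.

```python
import math
import random

def pushforward_expectation(f, T, dim, samples=100_000, seed=0):
    """Monte Carlo estimate of (T_* eta)[f] = eta[f o T].

    eta is taken (by assumption) to be i.i.d. standard Gaussian on the first `dim`
    coordinates; f and T only need to see those coordinates.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        phi = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        total += f(T(phi))
    return total / samples

def T_affine(phi, shift=0.7, scale=0.5):
    """Hypothetical transport map: shift and rescale the first coordinate."""
    return [shift + scale * phi[0]] + phi[1:]
```

With \(f(\phi ) = \cos (\phi _1)\), the exact value is \(\cos (0.7) e^{-0.125}\). The Monte Carlo estimate `pushforward_expectation(lambda x: math.cos(x[0]), T_affine, dim=1)` should agree with it to about two decimal places.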

Lemma 2.2.5

Let h be a strongly varying external field.

It follows that

for any \(f \in {\text {LBL}} (\mathbb {R}^\mathbb {N})\).

For the full proof, see Sect. 3.2. The proof of the uniform convergence result relies on specific asymptotic properties of the spherical constraint. The main technical difficulty is the lack of symmetries, such as permutation invariance or translation invariance, that are available in the MFS model.

By combining the limit point result of Lemma 2.2.4 for the collection of mixture probability measures \(\{ \rho _n^{\beta ,J,h} \}_{n \in \mathbb {N}}\), the uniform convergence result of Lemma 2.2.5, and repeated use of Prokhorov’s theorem (see [14, Chapter 3]), we obtain the following partial classification of the IVGS.

Theorem 2.2.6

Let h be a strongly varying external field.

1. For the pure state parameter range, we have

$$\begin{aligned} \mathcal {G}_\infty (\beta ,J,h) = \left\{ \nu _\infty ^{z^*,h} \right\} . \end{aligned}$$

2. For the mixed state parameter range, we have

$$\begin{aligned} \mathcal {G}_\infty (\beta ,J,h) \subset {\text {conv}} \left( \nu _\infty ^{z^+,h}, \ \nu _\infty ^{z^-,h} \right) := \{ \lambda \nu _\infty ^{z^+,h} + (1 - \lambda ) \nu _\infty ^{z^-,h} : \lambda \in [0,1] \} . \end{aligned}$$

For the full proofs, see Corollaries 3.3.4 and 3.3.3.

In the PS parameter range, there exists a unique IVGS. The proof of this result relies on Prokhorov’s theorem, which only provides subsequential limits, so we do not obtain a complete classification of the collection of IVGS in the MS parameter range. Indeed, a unique limit could still exist, but this method of proof cannot detect it.

Let us emphasize that the method of proof used in Theorem 2.2.6 relies heavily on Prokhorov’s theorem. The uniqueness of the IVGS in the PS parameter range is due to the fact that the only probability measure supported on a single point is the Dirac measure at that point. In general, when \(M^*(\beta ,J,m)\) does not consist of a single point, fully characterizing the limit points of the mixture measures as in Lemma 2.2.4 requires characterizing those probability measures supported on \(M^*(\beta ,J,m)\) that arise as weak limits of subsequences of the mixture measures \(\{ \rho _n^{\beta ,J,h}\}_{n \in \mathbb {N}}\). Later in this paper, we prove two theorems concerning this phenomenon. For a deterministic inhomogeneous external field, under additional assumptions on the magnetization vectors \(\{ m_n^h \}_{n \in \mathbb {N}}\), we show that in the MS parameter range the set of IVGS can still consist of a single element, see Theorem 2.2.9. For the random external field, we show that all convex combinations of the pure states can be obtained, see Theorem 2.3.5. These results show that, in order to prove the opposite inclusion or to characterize the weakly converging subsequences of the mixture measures, one must control the relative weights associated with the FVGS, which are introduced in Equation (2.2.15). We will discuss these results in depth once they are proven.

2.2.3 Full Classification of the Infinite Volume Gibbs States

In the MS parameter range, there are two global maximizing points \(z^\pm \in M^*(\beta ,J,m)\). They are related by \(x^- = - x^+ < 0\) and \(y^- = y^+\). This suggests conditioning the mixture probability measure \(\rho _n^{\beta ,J,h}\) on the positive and negative quadrants \(B_{+} (0,1):= B(0,1) \cap ((0, \infty ) \times \mathbb {R})\) and \(B_- (0,1):= B(0,1) \cap ((-\infty , 0) \times \mathbb {R})\). This is equivalent to conditioning the FVGS on the event that the magnetization is positive or negative, respectively. The conditioned FVGS \(\mu _n^{\beta ,J,h, \pm }\) act on \(f \in C_b (\mathbb {R}^\mathbb {N})\) by

To accompany the conditioned FVGS, the weights \(W_n^{\beta ,J,h, \pm }\) are given by

This conditioning yields a representation of the form

By reusing the proof of Theorem 2.2.6 for the PS parameter range, we show that the probability measures \(\nu _n^{\beta ,J,h, \pm }\) converge weakly in the limit as \(n \rightarrow \infty \).

Lemma 2.2.7

Let h be a strongly varying external field.

For the mixed state parameter range, it follows that

For the full proof, see Sect. 3.5.

It thus follows that the convergence properties of the weights \(W_n^{\beta ,J,h, \pm }\) determine the limiting structure of the FVGS. By rearranging the form of the weights, we see that

To resolve the convergence properties, we introduce a sequence of local maximizing points \(\{ z_n^* \}_{n \in \mathbb {N}}\) such that each \(z_n^*\) is a local maximizing point of \(\psi _n^{\beta ,J,h}\), \(z_n^*\) satisfies the critical point equation \(\nabla \psi _n^{\beta ,J,h} (z_n^*) = 0\), and \(z_n^* \rightarrow z^*\) in the limit as \(n \rightarrow \infty \), where \(z^*\) is a global maximizing point of \(\psi ^{\beta ,J,m}\), see Lemma 3.5.1 for the construction. From the proof presented for Lemma 3.4.2, we know that the Hessian \(H[\psi ^{\beta ,J,m}]\) of \(\psi ^{\beta ,J,m}\) is negative definite at the points \(z^\pm \). With these observations, we show that

where \(\{ z_n^\pm \}_{n \in \mathbb {N}}\) are the sequences of local maximizing points in the respective quadrants of B(0, 1). The proof (see Lemma 3.5.3) is a modification of the standard argument for Laplace-type integrals found in [35, Chapter 2]. The idea of constructing sequences of local maximizing points in this way is a model-specific adaptation of the method presented in [3, 4].
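The Laplace-type asymptotics behind this step can be illustrated numerically in one dimension. The Python sketch below uses a hypothetical double-well function \(\psi (x) = -(x^2 - 1)^2/4\), not the paper's \(\psi _n^{\beta ,J,h}\). This function has a non-degenerate maximum at \(x^* = 1\) with \(\psi ''(1) = -2\), the one-dimensional counterpart of a negative definite Hessian. The sketch compares \(\int _0^3 e^{n \psi (x)} dx\) with the leading Laplace term \(e^{n \psi (x^*)} \sqrt{2\pi / (n |\psi ''(x^*)|)}\). Their ratio should approach 1 with an \(O(1/n)\) error.

```python
import math

def laplace_check(n, grid=30001, upper=3.0):
    """Ratio of int_0^upper exp(n * psi) dx to its leading Laplace approximation,
    for the hypothetical double well psi(x) = -(x^2 - 1)^2 / 4."""
    dx = upper / (grid - 1)
    # math.exp underflows harmlessly to 0.0 far from the maximizer.
    vals = [math.exp(n * (-((k * dx) ** 2 - 1.0) ** 2 / 4.0)) for k in range(grid)]
    numeric = dx * (sum(vals) - 0.5 * (vals[0] + vals[-1]))   # trapezoidal rule
    laplace = math.sqrt(2.0 * math.pi / (n * 2.0))             # e^{n psi(1)} = 1
    return numeric / laplace
```

Comparing `laplace_check(100)` with `laplace_check(1000)` shows the relative error shrinking roughly by a factor of ten, as the \(O(1/n)\) correction predicts.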

Let us also remark that all of the proofs that are presented in Sect. 3.5 use the same notation and sequence of local maximizing points \(\{ z_n^* \}_{n \in \mathbb {N}}\), and that the proofs and techniques presented here are only valid when the Hessian of \(\psi ^{\beta ,J,m}\) at \(z^*\) is negative definite. This point is also emphasized below the proof of Lemma 3.5.1.

In the following exposition, we present a series of results concerning the rates of convergence of various quantities appearing in these calculations. We use the symbol \(\approx \) to indicate that a relation holds in the large n limit with an error term suitable for the desired application. In this notation, the weights satisfy

Using the critical point equations, we show that the local maximizing points satisfy

see Lemma 3.5.2 for the proof. As a direct application of this result, we show that the exponential tilting functions evaluated at the local maximizing points satisfy

see Lemma 3.5.4 for the proof. Finally, using the fact that \(x^- = - x^+\), \(y^+ = y^-\), and \(\psi ^{\beta ,J,m} (z^+) = \psi ^{\beta ,J,m} (z^-)\), and the previous result, we show that

By combining all of these results together, we can compute the limit of the weights by specifying the rates of convergence of the sample mean and sample standard deviation.

Lemma 2.2.8

Let h be a strongly varying external field, and suppose that we are in the mixed state parameter range.

Suppose there is \(\delta \in [0, \infty )\) such that \(n^\delta (m_n^h - m) \rightarrow \gamma := (\gamma ^{\parallel }, \gamma ^\perp ) \in \mathbb {R}^2\) in the limit as \(n \rightarrow \infty \).

1. If \(\delta \in [0,1)\) and \(\gamma ^{\parallel } \not = 0\), it follows that

$$\begin{aligned} \lim _{n \rightarrow \infty } W_n^{\beta ,J,h,+} = \mathbbm {1}(\gamma ^\parallel > 0) . \end{aligned}$$

2. If \(\delta = 1\), it follows that

$$\begin{aligned} \lim _{n \rightarrow \infty } W_n^{\beta ,J,h,+} = \frac{1}{1 + e^{- 2 \beta x^+ \gamma ^\parallel }} . \end{aligned}$$

3. If \(\delta \in (1, \infty )\), it follows that

$$\begin{aligned} \lim _{n \rightarrow \infty } W_n^{\beta ,J,h,+} = \frac{1}{2} . \end{aligned}$$

For the full proof, see Sect. 3.5.
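The three regimes of Lemma 2.2.8 can be reproduced in a one-dimensional toy model. The following Python sketch is hypothetical and is not the paper's weight. It takes the density proportional to \(\exp (n(\psi (x) + \beta \varepsilon _n x))\) on \([-2, 2]\), where \(\psi (x) = -(x^2 - (x^+)^2)^2/4\) is a symmetric double well and \(\varepsilon _n = \gamma / n^\delta \) plays the role of \(m_n^\parallel - m^\parallel \). The log-ratio of the masses of the two wells is then about \(2 \beta x^+ \gamma n^{1 - \delta }\). This tends to \(\pm \infty \), to \(2 \beta x^+ \gamma \), or to 0 according to whether \(\delta < 1\), \(\delta = 1\), or \(\delta > 1\).

```python
import math

def toy_weight(n, beta, gamma, delta, x_plus=1.0, grid=20001):
    """Toy analogue of W_n^{+}: the mass of {x > 0} under the density
    proportional to exp(n * (psi(x) + beta * eps_n * x)) on [-2, 2], where
    psi(x) = -(x^2 - x_plus^2)^2 / 4 and eps_n = gamma / n**delta (hypothetical)."""
    eps = gamma / n ** delta
    xs = [-2.0 + 4.0 * k / (grid - 1) for k in range(grid)]
    logs = [n * (-(x * x - x_plus ** 2) ** 2 / 4.0 + beta * eps * x) for x in xs]
    top = max(logs)                    # subtract the maximum to avoid overflow
    w = [math.exp(l - top) for l in logs]
    positive = sum(wi for x, wi in zip(xs, w) if x > 0)
    return positive / sum(w)           # the grid spacing cancels in the ratio
```

For example, `toy_weight(10_000, 1.0, 0.5, 1.0)` should be close to \(1/(1 + e^{-1}) \approx 0.731\). With \(\delta = 1/2\) the weight is essentially 1 (or 0 if \(\gamma < 0\)), and with \(\delta = 2\) it is essentially \(1/2\).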

Combining the conditioned representation from Equation (2.2.16), the weak convergence of the conditioned probability measures from Lemma 2.2.7, and the asymptotics of the weights from Lemma 2.2.8, we obtain the full classification of the IVGS under sublinear, linear, and superlinear rates of convergence of \(m_n^h\) to m in the limit as \(n \rightarrow \infty \).

Theorem 2.2.9

Let h be a strongly varying external field, and suppose that we are in the mixed state parameter range.

Suppose there is \(\delta \in [0, \infty )\) such that \(n^\delta (m_n^h - m) \rightarrow \gamma := (\gamma ^{\parallel }, \gamma ^\perp ) \in \mathbb {R}^2\) in the limit as \(n \rightarrow \infty \).

1. If \(\delta \in [0,1)\) and \(\gamma ^{\parallel } \not = 0\), it follows that

$$\begin{aligned} \lim _{n \rightarrow \infty } \mu _n^{\beta , J, h} = \mathbbm {1}(\gamma ^\parallel > 0) \nu _\infty ^{z^+,h} + \mathbbm {1}(\gamma ^\parallel < 0) \nu _\infty ^{z^-,h}. \end{aligned}$$

2. If \(\delta = 1\), it follows that

$$\begin{aligned} \lim _{n \rightarrow \infty } \mu _n^{\beta , J, h} = \frac{1}{1 + e^{- 2 \beta x^+ \gamma ^\parallel }} \nu _\infty ^{z^+,h} + \frac{1}{1 + e^{ 2 \beta x^+ \gamma ^\parallel }} \nu _\infty ^{z^-,h} . \end{aligned}$$

3. If \(\delta \in (1, \infty )\), it follows that

$$\begin{aligned} \lim _{n \rightarrow \infty } \mu _n^{\beta , J, h} = \frac{1}{2} \nu _\infty ^{z^+,h} + \frac{1}{2}\nu _\infty ^{z^-,h} . \end{aligned}$$

This result improves on Theorem 2.2.6 in the mixed state parameter range. In particular, it shows that when the external field is strongly varying, every convex combination of \(\nu _\infty ^{z^+,h}\) and \(\nu _\infty ^{z^-,h}\) is obtained as the limit of the FVGS for a suitable choice of the rate of convergence of the sample mean and sample standard deviation. Later in this paper, in Theorems 2.3.5 and 2.3.9, we give concrete probabilistic examples that apply Theorem 2.2.9 with \(\delta = 1\) and \(\delta = \frac{1}{2}\), respectively, for suitable \(\gamma \). However, we were unable to find a general way to construct deterministic inhomogeneous external fields that realize the other asymptotic rates in Theorem 2.2.9. In general, this problem requires simultaneous control of the Cesàro sums of the sequences \(\{ h_i \}_{i \in \mathbb {N}}\) and \(\{ h_i^2 \}_{i \in \mathbb {N}}\), which seems difficult. A related result for the RFCW model is the so-called generalized quasi-average method used in [4]. From our perspective, however, this method involves a perturbed external field rather than a fixed one.

2.2.4 Summary and Remarks

Let us now summarize these results for the strongly varying external field. When the parallel magnetization component is non-vanishing, i.e., \(m^\parallel \not = 0\), there is a unique IVGS, irrespective of all other details of the model. When the parallel magnetization component vanishes, i.e., \(m^\parallel = 0\), and the perpendicular magnetization component is large enough, i.e., \(m^\perp \ge J\), there is a unique IVGS. When the parallel magnetization component vanishes, the perpendicular magnetization component is small enough, i.e., \(m^\perp < J\), and the inverse temperature is small enough, i.e., \(\beta \le \beta _c\), there is a unique IVGS. Finally, when the parallel magnetization component vanishes, the perpendicular magnetization component is small enough, and the inverse temperature is large enough, i.e., \(\beta > \beta _c\), the IVGS can be any convex combination of the pure states \(\nu _\infty ^{z^+,h}\) and \(\nu _\infty ^{z^-,h}\). Which combination arises depends on the rate (sublinear, linear, or superlinear) and the limit \(\gamma \) in the convergence of the sample mean and sample standard deviation.

To our knowledge, the results concerning the limiting free energy, classification of IVGS, and rate of convergence analysis are novel contributions in the literature for this specific model, and, in general, this level of detail and specification is rare even for similar models. The last point about details and specification will be discussed further for the random external field.

For a deterministic inhomogeneous external field, a result in the same spirit in the literature is the classification of the IVGS for the classical Curie–Weiss model presented in [10]. The major difference from our work is that [10] aims to realize convex combinations of pure states in the standard Curie–Weiss model by perturbing the Curie–Weiss Hamiltonian with a small symmetry-breaking external field. This would be similar to studying the weakly varying case for our model, which we explicitly exclude. Very briefly, the method of solution for that model uses the Hubbard–Stratonovich transform to write the perturbed finite volume Gibbs states as a mixture of product states. In our case, the Hubbard–Stratonovich transform is not, and in some sense cannot be, applied, nor is there an immediate product structure. This is why we need the uniform convergence lemma, Lemma 2.2.5, in the proof of one of the main results. In [20], the authors were able to use the permutation invariance of the standard mean-field spherical model to give a relatively simple proof of weak convergence using Wasserstein distances. In this work, the external field breaks permutation invariance, so we needed a different technique, namely the uniform convergence lemma.

Let us also remark that our paper does not use the method of steepest descent employed in the original work that introduced the spherical model [8]. We mention this only because the method has been used in several works on spherical models; we opted for a direct approach since one was available. The method of steepest descent has been applied to study the spherical model in a specific deterministic non-homogeneous external field in [28] and the limiting Gibbs states of the spherical model with a small homogeneous external field in [11].

2.3 Random External Field

Next, we present our results for the case where the deterministic inhomogeneous external field is replaced by a random external field. A measurable map \(h: (\Omega , \mathcal {F}, \mathbb {P}) \rightarrow (\mathbb {R}^\mathbb {N}, \mathcal {B}(\mathbb {R}^\mathbb {N}))\) is called a random external field, where \((\Omega , \mathcal {F}, \mathbb {P})\) is a probability triple and \(\mathcal {B}(\mathbb {R}^\mathbb {N})\) is the Borel \(\sigma \)-algebra associated with the product topology on \(\mathbb {R}^\mathbb {N}\). Since the map \(h \mapsto \mu _n^{\beta ,J,h}\) is continuous and thus measurable, the composition \(\omega \mapsto h(\omega ) \mapsto \mu _n^{\beta ,J,h(\omega )}\) is also measurable. Hence \(\mu _n^{\beta ,J,h}\), interpreted as a probability measure-valued random variable \(\mu _n^{\beta ,J,h(\cdot )}: (\Omega , \mathcal {F}, \mathbb {P}) \rightarrow (\mathcal {M}_1 (\mathbb {R}^\mathbb {N}), \mathcal {B}(\mathcal {M}_1 (\mathbb {R}^\mathbb {N})))\), is a random probability measure, see Lemma 3.6.2 for this justification. The collection of FVGS is then a collection of probability measure-valued random variables, and we are interested in studying the limiting properties of this collection under additional assumptions on the random external field.

Let us also briefly remark on the distinction between convergence in distribution and weak convergence of random probability measures. If \(\mathcal {S}\) is a Polish space and \(\{ X_n \}_{n \in \mathbb {N}}\) and X are \(\mathcal {S}\)-valued random variables, we say that \(X_n\) converges to X in distribution if the probability distributions of \(X_n\) converge weakly to the probability distribution of X. For random probability measures \(\{ \mu _n \}_{n \in \mathbb {N}}\) and \(\mu \), convergence in distribution of \(\mu _n\) to \(\mu \) is understood in this sense. By almost sure convergence of \(\mu _n\) to \(\mu \), we mean that \(d(\mu _n, \mu ) \rightarrow 0\) in the limit as \(n \rightarrow \infty \) almost surely, where d is a metric inducing the topology of weak convergence on \(\mathcal {M}_1 (\mathbb {R}^\mathbb {N})\). This is equivalent to saying that \(\mu _n\) converges weakly to \(\mu \) almost surely. We will keep this terminology consistent, reserving weak convergence for probability measures and convergence in distribution for random variables.

For the following assumptions on the random external field, we need the concept of possible values of a random walk. Let \(\{ X_i \}_{i \in \mathbb {N}}\) be a collection of independent identically X-distributed \(\mathbb {R}^d\)-valued random variables, and let \(\{ S_n' \}_{n \in \mathbb {N}}\) denote the centred random walk with step length \(X - \mathbb {E} X\) given by \(S_n':= \sum _{i=1}^n (X_i - \mathbb {E} X_i)\). We say that a point \(x \in \mathbb {R}^d\) is a possible value of \(S_n'\) if for any \(\varepsilon > 0\) there exists \(n \in \mathbb {N}\) such that \(\mathbb {P} (|| S_n' - x || < \varepsilon ) > 0\), and we denote the collection of possible values by P. We say that a point \(x \in \mathbb {R}^d\) is a recurrent value of \(S_n'\) if for any \(\varepsilon > 0\), we have \(\mathbb {P} (|| S_n' - x|| < \varepsilon \text { infinitely often}) = 1\).

We present the following further assumptions for the random external field.

Assumption 2.3.1

(A1) The components of h are independent \(h_0\)-distributed real-valued random variables such that \(\mathbb {E} h_0^2 < \infty \) and \(\mathbb {V} h_0 > 0\), where \(\mathbb {V} h_0:= \mathbb {E} h_0^2 - \left( \mathbb {E} h_0 \right) ^2\).

(A2) The random variable \(h_0\) satisfies \(\mathbb {E} h_0^4 < \infty \) and \(\mathbb {V} h_0^2 > 0\).

(A3) The set of possible values P of the centred random walk with step length \((h_0 - \mathbb {E} h_0, h_0^2 - \mathbb {E} h_0^2)\) satisfies \(\pi _1 (P) = \mathbb {R}\), where \(\pi _1 (\cdot )\) is the canonical projection to the first coordinate.

(A4) The random variable \(h_0\) satisfies \(\mathbb {E} |h_0|^{4 + \xi } < \infty \) for some \(\xi > 0\).

Note that the moment conditions of (A2) imply the moment conditions of (A1). For the rest of this paper, we will denote \(\{ S_n \}_{n \in \mathbb {N}}\) to be the random walk with step length \((h_0, h_0^2)\) and \(\{ S_n'\}_{n \in \mathbb {N}}\) to be the centred random walk with step length \((h_0 - \mathbb {E} h_0, h_0^2 - \mathbb {E} h_0^2)\).
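As a worked example of ours, a Gaussian field \(h_0 \sim N(\mu , \sigma ^2)\) with \(\sigma > 0\) satisfies all four assumptions. Using \(\mathbb {E} h_0^4 = \mu ^4 + 6 \mu ^2 \sigma ^2 + 3 \sigma ^4\), we get

$$\begin{aligned} \mathbb {V} h_0 = \sigma ^2 > 0, \qquad \mathbb {V} h_0^2 = \mathbb {E} h_0^4 - \left( \mathbb {E} h_0^2 \right) ^2 = (\mu ^4 + 6 \mu ^2 \sigma ^2 + 3 \sigma ^4) - (\mu ^2 + \sigma ^2)^2 = 4 \mu ^2 \sigma ^2 + 2 \sigma ^4 > 0 . \end{aligned}$$

All moments of \(h_0\) are finite, so (A1), (A2), and (A4) hold. For (A3), every point \((x, (x + \mu )^2 - \mu ^2 - \sigma ^2)\) lies in the support of the first step \(S_1'\), because \(h_0 - \mu \) has full support and the map \(s \mapsto (s, (s + \mu )^2 - \mu ^2 - \sigma ^2)\) is continuous. Hence \(\pi _1 (P) = \mathbb {R}\). By contrast, a symmetric \(\pm 1\)-valued Bernoulli field has \(h_0^2 \equiv 1\), so \(\mathbb {V} h_0^2 = 0\) and (A2) fails.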

2.3.1 Self-averaging of the Limiting Free Energy

In terms of the random walk \(\{ S_n \}_{n \in \mathbb {N}}\), we can write the vector \(m_n^h\) as

From this simple observation, as an application of the strong law of large numbers for the random walk \(\{ S_n \}_{n \in \mathbb {N}}\), we show that the sequence of vectors \(\{ m_n^h \}_{n \in \mathbb {N}}\) satisfies a strong law of large numbers.

Lemma 2.3.2

Let h be a random external field which satisfies (A1).

It follows that

almost surely.

For the proof, see Lemma 3.6.3.
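Lemma 2.3.2 can be illustrated by simulation. The Python sketch below assumes that \(m_n^h = \big ( S_n^{(1)}/n, \sqrt{S_n^{(2)}/n - (S_n^{(1)}/n)^2} \big )\), i.e., sample mean and population standard deviation computed from \(S_n = (\sum _i h_i, \sum _i h_i^2)\). This assumed form is consistent with the limits \(m^\parallel = \mathbb {E} h_0\) and \(m^\perp = \sqrt{\mathbb {V} h_0}\) used for the random field. For \(h_0\) uniform on [0, 1], the limit should be \((1/2, \sqrt{1/12}) \approx (0.5, 0.2887)\).

```python
import math
import random

def m_from_walk(S1, S2, n):
    """Assumed form of m_n^h from S_n = (S1, S2) = (sum h_i, sum h_i^2)."""
    mean = S1 / n
    # max(..., 0.0) guards against tiny negative values from rounding.
    return mean, math.sqrt(max(S2 / n - mean * mean, 0.0))

def simulate_m(n, sampler, seed=1):
    """Run the random walk S_n with step (h_0, h_0^2) and return m_n^h."""
    rng = random.Random(seed)
    S1 = S2 = 0.0
    for _ in range(n):
        x = sampler(rng)
        S1 += x
        S2 += x * x
    return m_from_walk(S1, S2, n)
```

For example, `simulate_m(200_000, lambda r: r.random())` should return a pair close to `(0.5, 0.2887)`.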

We see that the condition \(\mathbb {V} h_0 > 0\) ensures that the random external field h is strongly varying almost surely. Subject to assumption (A1), it immediately follows that the limiting free energy is given by

almost surely. Note that although the partition functions \(Z_n (\beta ,J,h)\) are random variables, the limiting free energy is a deterministic quantity. We show that the collection of finite volume free energies is uniformly integrable and thus we have the following result concerning the self-averaging of the limiting free energy.

Theorem 2.3.3

Let h be a random external field which satisfies (A1).

It follows that

almost surely.

For the proof, see Sect. 3.6.

Since the random external field is almost surely strongly varying, the classification of the parameter ranges for the pure states and mixed states is the same as for the deterministic inhomogeneous external field. The updated values of m and \(\beta _c\) are \(m^\parallel = \mathbb {E} h_0\), \(m^\perp = \sqrt{\mathbb {V} h_0}\), and \(\beta _c = \frac{J}{(J - \sqrt{\mathbb {V} h_0}) (J + \sqrt{\mathbb {V} h_0})}\). We present the updated values in Table 2.

As a direct application of Theorem 2.2.6, we obtain the same partial classification of the IVGS for the random external field as for the deterministic field, the only difference being that the classification holds almost surely. For the random external field, the IVGS can also be characterized almost surely in the MS parameter range under further assumptions on the random external field. Recall that the first three rows of Table 2 describe the PS parameter range and the last row describes the MS parameter range.

2.3.2 Chaotic Size Dependence

For the PS parameter range, the IVGS is unique almost surely and its proof only relies on assumption (A1). For the MS parameter range, we begin by noting that the sequence of vectors \(\{ m_n^h \}_{n \in \mathbb {N}}\) can be written entirely in terms of the centred random walk \(\{ S_n' \}_{n \in \mathbb {N}}\) by

Subject to assumption (A2), it follows that \(\frac{1}{\sqrt{n}} S_n' \rightarrow G\) in distribution in the limit as \(n \rightarrow \infty \), where G is a non-degenerate 2-dimensional Gaussian, and, as a result, the recurrent and possible values of the centred random walk \(\{ S_n' \}_{n \in \mathbb {N}}\) are the same, see [12, Chapter 5]. Using the recurrence of the centred random walk, we show that the sequence of vectors \(\{ m_n^h \}_{n \in \mathbb {N}}\) satisfies a similar recurrence result.

Lemma 2.3.4

Let h be a random external field which satisfies (A1) and (A2).

It follows that

almost surely.

For the proof, see Lemma 3.6.3. Let us also briefly comment on proofs of this kind, which involve many steps that each hold almost surely. If each statement in an at most countable collection holds almost surely, then all of them hold simultaneously almost surely, since a countable union of null sets is a null set. In the proofs, there will typically be a number of such almost sure statements, used in the order in which they appear. These proofs should be read as follows: one collects all almost sure statements made in the proof, and the set of probability 1 on which the theorem holds is the intersection of the corresponding events.

As an application of Theorem 2.2.9 in the case where \(\delta = 1\) and \(\gamma = \left( p_1, \frac{1}{2 \sqrt{\mathbb {E} h_0^2}} p_2\right) \), we have the following complete classification of the IVGS almost surely.

Theorem 2.3.5

Let h be a random external field which satisfies (A1).

For the pure state parameter range, it follows that

almost surely.

If h also satisfies (A2) and (A3), for the mixed state parameter range, it follows that

almost surely.

The proof of this result (see Sect. 3.6) is given in the more general case where \(\pi _1 (P)\) need not be the whole of \(\mathbb {R}\).

The phenomenon proven in this result is referred to as chaotic size dependence (CSD), a term due to Newman and Stein [25]. For more physically relevant models, this property is troubling because it implies that, when the external field is random, the IVGS depend on the way the subsequences of finite volumes are selected. This property has been studied for a variety of systems, including the BFCW model in [4, 21]. For the Ising model with random boundary conditions, a result of this type was obtained in [34]. To our knowledge, our result is novel for this particular model, and it is more general than similar results obtained for other models with random external fields. In particular, the works [4, 21, 30] give a certain emphasis to the case where the random external field has Bernoulli distributed components. In addition, it is remarked in [21] that a random external field with continuous components, as opposed to the discrete components of the Bernoulli field, is expected to realize all convex combinations of the pure states. This is indeed the case for this model for any \(h_0\) satisfying (A1), (A2), and (A3).
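The mechanism behind chaotic size dependence in a single realization of h can be seen with a rough computation. The Python sketch below is a heuristic and not part of the proofs. It assumes that \(m_n^\parallel \) is the sample mean of \(h_1, \dots , h_n\), so that \(n(m_n^\parallel - m^\parallel ) = S_n'^{(1)}\), the first coordinate of the centred walk. It then plugs this quantity into the linear-rate weight \(1/(1 + e^{-2 \beta x^+ \gamma ^\parallel })\) of Lemma 2.2.8, treating \(\beta \) and \(x^+\) as free illustrative inputs. A centred walk with finite variance makes arbitrarily large excursions of both signs, so the resulting weight sequence keeps moving between values near 0 and near 1 instead of converging.

```python
import math
import random

def csd_weights(steps, beta=1.0, x_plus=1.0):
    """Heuristic finite-n proxy for W_n^{+} along one realization: accumulate the
    centred walk s = S'_n and return 1 / (1 + exp(-2 * beta * x_plus * s))."""
    weights, s = [], 0.0
    for step in steps:
        s += step
        z = -2.0 * beta * x_plus * s
        # Guard against math.exp overflow; the weight is numerically 0 there anyway.
        weights.append(1.0 / (1.0 + math.exp(z)) if z < 700 else 0.0)
    return weights

if __name__ == "__main__":
    rng = random.Random(0)
    w = csd_weights([rng.gauss(0.0, 1.0) for _ in range(100_000)])
    # Along a single path one typically sees weights close to both 0 and 1.
    print(min(w), max(w))
```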

2.3.3 Construction of the Aizenman–Wehr Metastate

Since almost sure convergence is too strong a mode of convergence for the FVGS, we instead consider weaker modes of convergence, which lead to the construction of limiting objects analogous to the IVGS. These constructions were introduced in the disordered systems literature, and we cite them as they appear in this paper. For more details and exposition, we refer to [9, Chapter 6]. For convergence properties of random probability measures, we refer to [17, Chapter 4].

We begin with the collection of joint probability measures \(\{ K_n^{\beta ,J}\}_{n \in \mathbb {N}}\) which act on \(f \in C_b (\mathbb {R}^\mathbb {N} \times \mathbb {R}^\mathbb {N})\) by

where the expectation \(\mu _n^{\beta ,J,h} [f(h, \cdot )]\) is taken with respect to the second argument. Note that the marginal distribution of the first component is simply the distribution of h, and the marginal distribution of the second component is given by the intensity measure \(\mathbb {E} \mu _n^{\beta ,J,h}\) which acts on \(f \in C_b (\mathbb {R}^\mathbb {N})\) by \(f \mapsto \mathbb {E} \mu _n^{\beta ,J,h} [f]\). We denote the weak limit, when it exists, of the joint probability measures by \(K^{\beta ,J}\).

Next, we will consider the collection of metastate probability measures \(\{ \mathcal {K}_{n}^{\beta ,J}\}_{n \in \mathbb {N}}\) which are the probability distributions of the \(\mathbb {R}^\mathbb {N} \times \mathcal {M}_1 (\mathbb {R}^\mathbb {N})\)-valued random variables \((h, \mu _n^{\beta ,J,h})\). Note that the marginal distribution of the first component of the metastate probability measure is the distribution of h, and the marginal distribution of the second component is the distribution of \(\mu _n^{\beta ,J,h}\). We denote the probability measure corresponding to the limit in distribution, when it exists, of the metastate probability measures by \(\mathcal {K}^{\beta ,J}\). Since \(\mathcal {K}^{\beta ,J}\) is a probability measure on \(\mathbb {R}^\mathbb {N} \times \mathcal {M}_1 (\mathbb {R}^\mathbb {N})\), we can obtain a random probability measure on probability measures by taking the regular conditional distribution of the second argument given the first argument.

Definition 2.3.6

A conditioned metastate probability measure or Aizenman–Wehr metastate \(\kappa ^{\beta ,J,h}\), when it exists, is a measurable map \(\kappa ^{\beta ,J, \cdot }: \mathbb {R}^\mathbb {N} \rightarrow \mathcal {M}_1 (\mathcal {M}_1 (\mathbb {R}^\mathbb {N}))\) which satisfies

for all \(f \in C_b (\mathbb {R}^\mathbb {N} \times \mathcal {M}_1 (\mathbb {R}^\mathbb {N}))\).

This construction is due to Aizenman and Wehr [2], and the name metastate reflects the fact that the resulting object is a probability measure on probability measures. Although the definition above only specifies a conditioned metastate probability measure, we will refer to the upcoming construction as the conditioned metastate probability measure.

Let us now remark on some properties of the joint probability measures and the metastate probability measures. If \(f \in C_b (\mathbb {R}^\mathbb {N} \times \mathbb {R}^\mathbb {N})\), then it follows that the map \((h, \mu ) \mapsto \mu [f(h, \cdot )]\) is continuous and bounded. As a result, if the weak limit of the metastate probability measures exists in the limit as \(n \rightarrow \infty \), we must have

It follows that the weak limit of the metastate probability measures completely determines the weak limit of the joint probability measures. As a result, the joint probability measures are in some sense redundant if one has limiting results pertaining to the metastate probability measures. In this paper, we will use the joint probability measures primarily as a tool to prove uniform tightness results of the metastate probability measures. To that end, although we will not make immediate use of the following result, we show the uniform tightness of the collection of intensity measures \(\{ \mathbb {E} \mu _n^{\beta ,J,h} \}_{n \in \mathbb {N}}\).

Lemma 2.3.7

Let h be a random external field which satisfies (A1).

It follows that the collection of intensity measures \( \left\{ \mathbb {E} \mu _n^{\beta , J, h} \right\} _{n \in \mathbb {N}}\) is uniformly tight.

For the full proof, see Sect. 3.7. The uniform tightness of the various metastate probability measures follows by combining this result with Lemmas A.2.1 and A.2.3.

For the MS parameter range, recall that the random variable \(m_n^h\) can be written in terms of the 2-dimensional centred random walk \(S_n'\) presented in Equation (2.3.1). By using the multivariate delta method, we have the following central limit theorem for the sequence of vectors \(\{ m_n^h \}_{n \in \mathbb {N}}\).

Lemma 2.3.8

Let h be a random external field which satisfies (A1) and (A2).

It follows that

in distribution, where G is a non-degenerate 2-dimensional Gaussian random variable independent of h.

For the proof, see Lemma 3.6.3. The multivariate delta method is a standard tool in statistics; see [29] for further references and discussion.
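As a hedged illustration of the multivariate delta method itself (not of the paper's computation of \(m_n^h\), whose exact form is given elsewhere), the sketch below applies a hypothetical smooth map \(g(a,b) = a/\sqrt{b}\) to the sample means of the vectors \((h_i, h_i^2)\) and compares the simulated variance of \(\sqrt{n}(g(\bar X_n) - g(\mu))\) with the delta-method prediction \(\nabla g^\top \Sigma \nabla g\). The map g and the choice of Exp(1) field components are assumptions made purely for illustration.

```python
import math
import random
import statistics

# Hypothetical smooth map g(a, b) applied to the sample means of (h_i, h_i^2).
def g(a, b):
    return a / math.sqrt(b)

def grad_g(a, b):
    # Partial derivatives of g with respect to a and b.
    return (1.0 / math.sqrt(b), -a / (2.0 * b ** 1.5))

def delta_variance(grad, cov):
    """Asymptotic variance grad^T * cov * grad predicted by the delta method."""
    return sum(grad[i] * cov[i][j] * grad[j] for i in range(2) for j in range(2))

# Assumed Exp(1) field components: E h = 1, E h^2 = 2, E h^3 = 6, E h^4 = 24.
MEAN = (1.0, 2.0)
COV = ((1.0, 4.0), (4.0, 20.0))  # covariance matrix of the vector (h, h^2)

def simulate(n, reps, seed=0):
    """Draw `reps` copies of sqrt(n) * (g(sample means) - g(true means))."""
    rng = random.Random(seed)
    g0 = g(*MEAN)
    out = []
    for _ in range(reps):
        s1 = s2 = 0.0
        for _ in range(n):
            x = rng.expovariate(1.0)
            s1 += x
            s2 += x * x
        out.append(math.sqrt(n) * (g(s1 / n, s2 / n) - g0))
    return out

if __name__ == "__main__":
    predicted = delta_variance(grad_g(*MEAN), COV)
    samples = simulate(n=2000, reps=400)
    print(f"predicted {predicted:.4f}, simulated {statistics.variance(samples):.4f}")
```

Here the prediction works out to \(0.5 - 1 + 0.625 = 0.125\); the simulated variance should agree up to Monte Carlo error.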

By using Skorohod’s representation theorem, see [19, Chapter 17], we can construct another probability space on which the convergence in distribution \((h, \sqrt{n} (m_n^h - m)) \rightarrow (h,G)\) is upgraded to almost sure convergence. On this new probability space, with a slight abuse of notation, we can apply our earlier main result, Theorem 2.2.9, in the case where \(\delta = \frac{1}{2}\) and \(\gamma = G\) almost surely. Using these methods, we obtain the following result concerning the weak limit of the metastate probability measures.
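The paper uses Skorohod's representation theorem in its general form. On the real line the theorem has a classical constructive proof by quantile coupling: all laws are realized on the single space \([0,1]\) with Lebesgue measure via \(X_n(u) = F_n^{-1}(u)\). The sketch below shows this for a hypothetical sequence \(N(1/n, (1+1/n)^2) \rightarrow N(0,1)\); the sequence is an assumption chosen only because its quantiles are explicit.

```python
from statistics import NormalDist

def quantile_coupling(dists, limit, us):
    """Realize every law on the single space ([0, 1], Lebesgue) via
    X_k(u) = F_k^{-1}(u) and X(u) = F^{-1}(u), for each sample point u."""
    return {u: ([d.inv_cdf(u) for d in dists], limit.inv_cdf(u)) for u in us}

# Hypothetical sequence N(1/n, (1 + 1/n)^2) converging in distribution to N(0, 1).
ns = [10, 100, 1000]
dists = [NormalDist(mu=1 / n, sigma=1 + 1 / n) for n in ns]
limit = NormalDist(mu=0.0, sigma=1.0)
us = [0.05, 0.3, 0.5, 0.8, 0.99]  # fixed sample points in (0, 1)

coupled = quantile_coupling(dists, limit, us)

if __name__ == "__main__":
    for u, (xs, x) in coupled.items():
        # With z = Phi^{-1}(u), |X_n(u) - X(u)| = |1 + z| / n, so convergence is pointwise.
        print(u, [round(abs(xn - x), 5) for xn in xs])
```

Convergence in distribution is thus turned into convergence for every fixed \(u\), which is the almost sure convergence exploited above.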

Theorem 2.3.9

Let h be a random external field which satisfies (A1) and (A2).

For the mixed state parameter range, it follows that

for any \(f \in C_b (\mathbb {R}^\mathbb {N} \times \mathcal {M}_1 (\mathbb {R}^\mathbb {N}))\).

For the full proof, see Sect. 3.7.

As a direct corollary, using the fact that \(h \mapsto \nu _\infty ^{z^\pm ,h}\) is a continuous mapping, we construct the Aizenman–Wehr metastate of this model.

Theorem 2.3.10

Let h be a random external field which satisfies (A1) and (A2).

For the mixed state parameter range, the Aizenman–Wehr metastate is given by

To our knowledge, this result is novel for this particular model, and it holds in greater generality than comparable results for similar models. Similar results have been obtained in [4] and [21] for the BFCW model. We also emphasize that, by Skorohod’s representation theorem, the weak convergence of the metastate probability measures is almost a direct corollary of our earlier main result, Theorem 2.2.9. This proof strategy does not seem to be used in either [4] or [21].

2.3.4 Phase Characterization

To better understand this result, we classify the phases of this model using the phase characterization of disordered systems given in [27], which describes the phases in terms of the expectation and variance of the magnetization density. We give the corresponding characterization for the RFMFS model.

Returning to the representation in Lemma 3.1.1, we see that the magnetization density of this model is given by

We have the following result.

Lemma 2.3.11

Let h be a random external field which satisfies (A1) and (A2).

For the pure state parameter range, it follows that

For the mixed state parameter range, it follows that

The proof is a direct application of the convergence in distribution of the expected magnetization, see Lemma 3.7.1.

We present the phase characterization for the RFMFS model in Table 3.

We see that passing from the PS parameter range to the MS parameter range corresponds to a phase transition from the ordered paramagnetic phase to the spin glass phase. In the spin glass phase, the model exhibits chaotic size dependence and a distinctive “splitting” of the pure states, unlike the model with a deterministic inhomogeneous external field. In particular, the A–W metastate assigns equal probability to the positive-magnetization and the negative-magnetization limiting states.
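The equal weights come from the symmetry of the Gaussian fluctuations, not from any symmetry of \(h_0\). A toy check of this mechanism, under the loud simplifying assumption that the selected state is decided by the sign of a centred partial sum of the field (a 1-dimensional stand-in for the 2-dimensional limit G used in the paper), with deliberately skewed Exp(1) components:

```python
import random

def positive_sign_frequency(n, reps, seed=0):
    """Fraction of field realizations for which the centred sum of n Exp(1)
    components is positive; a hypothetical stand-in for the event that
    selects the positive-magnetization state."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        s = sum(rng.expovariate(1.0) - 1.0 for _ in range(n))
        hits += s > 0
    return hits / reps

if __name__ == "__main__":
    # Although Exp(1) is not symmetric, the CLT makes the limiting fluctuation
    # Gaussian and hence symmetric, so this frequency approaches 1/2.
    print(positive_sign_frequency(n=400, reps=2000))
```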

2.3.5 Convergence of the Newman–Stein Metastates

We introduce the collection of empirical metastates or Newman–Stein metastates \(\{ \overline{\kappa }_N^{\beta ,J,h}\}_{N \in \mathbb {N}}\) which are given by

almost surely. The collection of probability distributions of the N–S metastates is denoted by \(\{ \overline{\mathcal {K}}_N^{\beta ,J,h}\}_{N \in \mathbb {N}}\). The N–S metastates were introduced in [26] by Newman and Stein.
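In the Newman–Stein construction, the empirical metastate is the time average of point masses \(\frac{1}{N}\sum_{n=1}^{N} \delta_{\mu_n}\) along the volume sequence. A minimal sketch, assuming each finite volume state is represented by a hypothetical hashable label (the actual states are probability measures on \(\mathbb{R}^\mathbb{N}\)):

```python
from collections import Counter

def empirical_metastate(states):
    """Newman-Stein style average (1/N) * sum_{n<=N} delta_{state_n},
    with each finite volume state represented by a hashable label."""
    if not states:
        raise ValueError("need at least one state")
    counts = Counter(states)
    total = len(states)
    return {label: c / total for label, c in counts.items()}

if __name__ == "__main__":
    # Hypothetical labels for the states mu_1, ..., mu_4.
    print(empirical_metastate(["+", "+", "-", "+"]))
```

Unlike the A–W construction, this average is taken along a single realization of h, which is why its behaviour can depend on the path.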

To begin the study of their convergence properties, we first consider their uniform tightness. The almost sure uniform tightness of the collection of N–S metastates follows from the almost sure uniform tightness of \(\mathcal {G}(\beta ,J,h)\), which can be deduced either from Theorem 2.3.5 or from Lemmas 3.3.2 and A.2.2. The uniform tightness of the collection of probability distributions of the N–S metastates follows from the uniform tightness of the collection of intensity measures, by Lemmas 2.3.7 and A.2.3. We state these two results together in the following lemma.

Lemma 2.3.12

Let h be a random external field which satisfies (A1).

It follows that the collection of Newman–Stein metastates \(\{ \overline{\kappa }_N^{\beta ,J,h}\}_{N \in \mathbb {N}}\) is uniformly tight almost surely, and the collection of probability measures of Newman–Stein metastates \(\{ \overline{\mathcal {K}}^{\beta ,J}_N\}_{N \in \mathbb {N}}\) is uniformly tight.

Given the uniform tightness of these collections, it is enough to study expectations of the form

where \(P: \mathbb {R}^m \rightarrow \mathbb {R}\) is a polynomial in m variables and \(\{ f_i \}_{i=1}^m\) is a finite collection of functions in \({\text {LBL}} (\mathbb {R}^\mathbb {N})\), see Lemma A.3.3. For almost sure convergence, one studies the almost sure limits of such expectations as \(N \rightarrow \infty \); for weak convergence of the probability distributions of the N–S metastates, one studies their limits in distribution as \(N \rightarrow \infty \).

To study the limits, we begin by introducing a collection of sets \(\{ A_{n, \delta } \}_{n \in \mathbb {N}}\) given by

where \(0< \delta < \frac{1}{6}\). We will use this collection of sets as “conditioning sets” for the N–S metastates, and we show the following three results for it. First, under the additional assumption (A4), we show that

almost surely, see Lemma 3.8.1 for the proof.

This result allows one to consider the Newman–Stein metastates only “along” the sets \(A_{n, \delta }\). Using the control of the fluctuation of \(m_n^h - m\) provided by conditioning on the sets \(A_{n, \delta }\), along with the asymptotics developed for the weights \(W_n^{\beta ,J,h,+}\) in Lemma 3.5.4, we show that

and

where \(f \in {\text {LBL}} (\mathbb {R}^\mathbb {N})\), see Lemma 3.8.2 for the full proof. Combining these results together, we have the following result concerning the pathwise asymptotics of the N–S metastates.

Lemma 2.3.13

Let h be a random external field which satisfies (A1), (A2), and (A4).

For the mixed state parameter range, it follows that

almost surely, where

For the full proof and notation, see Sect. 3.8. The results presented and proved here for conditioning sets are model specific adaptations of the ideas and methods concerning “regular sets” presented in [21]. In the above result, we use the standard little-o asymptotic notation.

The limiting structure of the N–S metastates is then determined by the properties of the collection of random variables \(\{ T_N^+ \}_{N \in \mathbb {N}}\), which give the fraction of time that a 1-dimensional random walk with step-length \(h_0\) spends in the upper half-plane. Results concerning this collection of random variables are classical; we refer to [32, Chapter 4]. We will use the following two results. The first concerns the convergence in distribution of \(\{ T_N^+\}_{N \in \mathbb {N}}\) to an arcsine distributed random variable. The second, related result characterizes the set of limit points of \(\{ T_N^+\}_{N \in \mathbb {N}}\); it follows from the Hewitt–Savage 0-1 law, see [19, Chapter 12], combined with the convergence in distribution to an arcsine distributed random variable.
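The occupation fractions can be computed directly from the partial sums. A minimal sketch, assuming the convention \(T_N^+ = \frac{1}{N}\#\{1 \le n \le N : S_n > 0\}\) with strict positivity; the paper's exact convention (strict or non-strict inequality, centring of the steps) is given in Sect. 3.8 and may differ:

```python
from itertools import accumulate

def occupation_fractions(steps):
    """Return [T_1^+, ..., T_N^+], where T_N^+ is the fraction of times n <= N
    at which the partial sum S_n = x_1 + ... + x_n is strictly positive."""
    fractions = []
    positive = 0
    for n, s in enumerate(accumulate(steps), start=1):
        positive += s > 0
        fractions.append(positive / n)
    return fractions

if __name__ == "__main__":
    # Partial sums are 1, -1, 2, 1, so positivity indicators are 1, 0, 1, 1.
    print(occupation_fractions([1, -2, 3, -1]))
```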

Lemma 2.3.14

Let h be a random external field which satisfies (A1) and (A2).

It follows that

in distribution, where \(\alpha \) is an arcsine distributed random variable independent of h with distribution function

\[ \mathbb{P}(\alpha \le x) = \frac{2}{\pi} \arcsin \sqrt{x} \]

for \(x \in [0,1]\), and

almost surely.

For the full proof, see Sect. 3.8.
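A Monte Carlo sanity check of the arcsine law, as a hedged sketch: the steps are taken to be centred Exp(1) variables, an assumption chosen to be deliberately non-symmetric so that the universality of the limit is visible. The empirical distribution function of \(T_N^+\) is compared with \(\frac{2}{\pi}\arcsin\sqrt{x}\) at a few points.

```python
import math
import random

def arcsine_cdf(x):
    """Distribution function of the arcsine law on [0, 1]."""
    return 2.0 / math.pi * math.asin(math.sqrt(x))

def sample_occupation(n_steps, reps, seed=0):
    """Sample T_N^+ (strict positivity) for `reps` independent walks
    whose steps are centred Exp(1) variables, i.e. Exp(1) - 1."""
    rng = random.Random(seed)
    out = []
    for _ in range(reps):
        s, positive = 0.0, 0
        for _ in range(n_steps):
            s += rng.expovariate(1.0) - 1.0
            positive += s > 0
        out.append(positive / n_steps)
    return out

if __name__ == "__main__":
    samples = sample_occupation(n_steps=500, reps=2000)
    for x in (0.1, 0.5, 0.9):
        empirical = sum(t <= x for t in samples) / len(samples)
        print(f"x={x}: empirical {empirical:.3f}, arcsine {arcsine_cdf(x):.3f}")
```

The U-shaped arcsine density means that, for most large N, the walk has spent most of its time on one side, which is the source of the path dependence discussed below.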

By combining the almost sure uniform tightness of the N–S metastates from Lemma 2.3.12, the pathwise asymptotics from Lemma 2.3.13, and the characterization of the limit points from Lemma 2.3.14, we obtain the following CSD result for the almost sure convergence of the N–S metastates.

Theorem 2.3.15

Let h be a random external field which satisfies (A1), (A2), and (A4).

For the mixed state parameter range, it follows that

almost surely.

In particular, it follows that \(\{ \overline{\kappa }^{\beta ,J,h}_N \}_{N \in \mathbb {N}}\) does not converge almost surely but there does exist a random subsequence \(\{ N_{k} \}_{k \in \mathbb {N}}\) such that

almost surely.

See Sect. 3.8 for the full proof.
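The failure of almost sure convergence can be glimpsed along a single simulated path. Classically, every point of \([0,1]\) is almost surely a limit point of \(T_N^+\), since the lim sup and lim inf are exchangeable events and the increments of \(T_N^+\) shrink like \(1/N\). The sketch below, again assuming centred Exp(1) steps, only records the range of \(T_N^+\) over a finite window; finite simulations are suggestive, not a proof.

```python
import random

def occupation_range(n_steps, n_min, seed=0):
    """Along ONE path with centred Exp(1) - 1 steps, return the smallest and
    largest values of T_N^+ over n_min <= N <= n_steps."""
    rng = random.Random(seed)
    s, positive = 0.0, 0
    lo, hi = 1.0, 0.0
    for n in range(1, n_steps + 1):
        s += rng.expovariate(1.0) - 1.0
        positive += s > 0
        if n >= n_min:
            t = positive / n
            lo, hi = min(lo, t), max(hi, t)
    return lo, hi

if __name__ == "__main__":
    # The spread is path dependent; different seeds give different windows.
    for seed in range(3):
        print(occupation_range(n_steps=200_000, n_min=1_000, seed=seed))
```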

By combining the almost sure uniform tightness of the N–S metastates from Lemma 2.3.12, the pathwise asymptotics from Lemma 2.3.13, and the convergence in distribution to the arcsine distributed random variable from Lemma 2.3.14, we obtain the following convergence in distribution result.

Theorem 2.3.16

Let h be a random external field which satisfies (A1), (A2), and (A4).

For the mixed state parameter range, it follows that

in distribution, where \(\alpha \) is an arcsine distributed random variable independent of h.

See Sect. 3.8 for the full proof.
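The random limit in Theorem 2.3.16 can be sampled by inverse-CDF sampling: if U is uniform on \([0,1]\), then \(\sin^2(\pi U/2)\) has distribution function \(\frac{2}{\pi}\arcsin\sqrt{x}\). The sketch assumes, for illustration, that the limit has the two-point form \(\alpha\,\delta_{+} + (1-\alpha)\,\delta_{-}\) with the weight \(\alpha\) on the positive-magnetization state, and represents the two states by labels.

```python
import math
import random
import statistics

def sample_arcsine(rng):
    """Inverse-CDF sampling: if U ~ Uniform(0, 1), then sin(pi*U/2)^2 has
    distribution function (2/pi) * arcsin(sqrt(x)) on [0, 1]."""
    return math.sin(math.pi * rng.random() / 2.0) ** 2

def random_limit_metastate(rng):
    """One draw of the hypothetical two-point metastate
    alpha * delta_{+} + (1 - alpha) * delta_{-}, with states as labels."""
    alpha = sample_arcsine(rng)
    return {"+": alpha, "-": 1.0 - alpha}

if __name__ == "__main__":
    rng = random.Random(0)
    alphas = [random_limit_metastate(rng)["+"] for _ in range(20_000)]
    # The arcsine law on [0, 1] has mean 1/2 and variance 1/8.
    print(statistics.fmean(alphas), statistics.pvariance(alphas))
```

Averaging the random weight over \(\alpha\) recovers the equal weights of the A–W metastate, since \(\mathbb{E}\alpha = \frac{1}{2}\).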

The Newman–Stein metastates were introduced as a way to obtain some form of almost sure convergence for the FVGS. However, these results show that almost sure convergence fails for this model; at best, one can realize the A–W metastate as the limit of the N–S metastates along a random subsequence.

The convergence in distribution of the N–S metastates clearly shows the pathwise dependence of the model. In some sense, the presence of the arcsine random variable is a result of the pathwise dependence of the weights of the FVGS. Since the weights behave like indicator functions for large enough n, the result is somewhat expected.

This result is novel for this specific model, and almost identical results have been obtained in [21] for the BFCW model. The main difference between the two proofs is that, for Bernoulli components, one can work directly with 1-dimensional simple random walks, whereas for this model it seems necessary to use methods of non-linear statistics for 2-dimensional random walks, as in the proof of Lemma 3.8.1.

2.3.6 Triviality of Metastates in the Pure State Parameter Range

We have not yet discussed the metastates for the pure state parameter range, because they are trivial due to the almost sure convergence of the FVGS from Theorem 2.3.5. As a direct application of Lemma A.3.1, we have the following result.

Theorem 2.3.17

Let h be a random external field which satisfies (A1).

For the pure state parameter range, we have

in distribution, and

almost surely.
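Triviality in the PS range is a Cesàro-averaging effect: if the states converge almost surely, the N–S average of point masses collapses onto the limit. A minimal sketch, assuming each state is represented by a hypothetical scalar magnetization \(m_n = m + 1/n\) and tested against a bounded continuous function:

```python
import math

def ns_average(test_fn, states):
    """Cesaro (Newman-Stein style) average (1/N) * sum_n test_fn(state_n)."""
    return sum(test_fn(x) for x in states) / len(states)

if __name__ == "__main__":
    m = 0.3  # hypothetical limiting magnetization
    N = 100_000
    states = [m + 1.0 / n for n in range(1, N + 1)]  # m_n -> m
    # For a bounded, 1-Lipschitz test function such as cos, the error is
    # at most (1/N) * sum_{n<=N} 1/n, which tends to 0.
    print(abs(ns_average(math.cos, states) - math.cos(m)))
```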

2.3.7 Summary and Remarks

As remarked earlier, the results obtained are novel for this particular model, and they are universal for random external fields in the precise sense given by the assumptions on the random variable \(h_0\) in the theorems.

In the introduction, we noted that the RFCW model should, in principle, allow one to study limiting free energies with many global maximizing points of varying strengths. For the RFMFS model, possibly due to the spherical constraint, there are only two options: either the global maximizing point is unique, in which case the structure of the metastates is trivial, or there are exactly two global maximizing points of quadratic type, which is the simplest form of global maximizing points. The results for the case of two global maximizing points are then almost identical to the results concerning the metastates of the BFCW model. As such, it is natural that many methods are repeated, such as the construction of sequences of local maximizing points of the exponential tilting functions and the conditioning sets used in the analysis of the N–S metastates. The most significant difference between the two models lies in the main calculations for the limiting free energy: for the BFCW model, these involve a smooth function on an unbounded set, all of whose derivatives contain some form of randomness, whereas for the RFMFS model they involve a two-dimensional smooth function on a bounded set whose derivatives of order two or higher are non-random.