Abstract

The discrete membrane model is a Gaussian random interface whose inverse covariance is given by the discrete biharmonic operator on a graph. In the literature, almost all works have considered the field as indexed over \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\), which made it possible to study the model using methods from partial differential equations. In this article we investigate the dependence of the membrane model on a different geometry, namely trees. The covariance is expressed via a random walk representation which was first determined by Vanderbei (Ann Probab 12:311–314, 1984). We exploit this representation on m-regular trees and show that the infinite volume limit on the infinite tree exists when \(m\ge 3\). Furthermore, we determine the behavior of the maximum under the infinite and finite volume measures.


1 Introduction

The main object of study in this article is the membrane model (MM), also known as discrete bilaplacian or biharmonic model. As a random interface, the MM can be defined as a collection of Gaussian heights indexed over a graph. In this article, we will study the MM on regular trees. Let \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) be an m-regular infinite tree, that is, a rooted tree with the root having m children and each of the children thereafter having \(m-1\) children. With abuse of notation we will denote the vertex set of \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) by \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) itself. Then the MM is defined to be a Gaussian field \(\varphi =(\varphi _{x})_{x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m}\), whose distribution is determined by the probability measure on \({\mathbb {R}}^{{{\,\mathrm{{\mathbb {T}}}\,}}_m}\) with density

$$\begin{aligned} {\textbf{P}}_\Lambda (\textrm{d}\varphi ){:}{=}\frac{1}{Z_{\Lambda }}\exp \left( -\frac{1}{2}\sum _{x\in {\mathbb {T}}_m}(\Delta \varphi _x)^2\right) \prod _{x\in \Lambda }\textrm{d}\varphi _{x}\prod _{x\in {\mathbb {T}}_m\setminus \Lambda }\delta _{0}(\textrm{d}\varphi _x). \end{aligned}\tag{1.1}$$

Here \(\Lambda \subset {{\,\mathrm{{\mathbb {T}}}\,}}_m\) is a finite subset, \(\Delta \) is the discrete Laplacian defined by

$$\begin{aligned} \Delta f(x){:}{=}\frac{1}{m}\sum _{y\sim x}\left( f(y)-f(x)\right) , \end{aligned}\tag{1.2}$$

where \(y\sim x\) means that y is a neighbor of x, \(\textrm{d}\varphi _{x}\) is the Lebesgue measure on \({{\,\mathrm{{\mathbb {R}}}\,}}\), \(\delta _{0}\) is the Dirac measure at 0, and \(Z_{\Lambda }\) is a normalising constant. We are imposing zero boundary conditions, i.e., almost surely \(\varphi _{x}=0\) for all \(x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\setminus {\Lambda }\), but the definition holds for more general boundary conditions.

The membrane model was introduced and studied mostly in the case \(\Lambda \subset {{\,\mathrm{{\mathbb {Z}}}\,}}^d\). For example, the existence of an infinite volume measure for \(d\ge 5\) was proved in [16] and later the model and its properties were studied in detail in [13]. The point process convergence of extremes on \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\) for \(d\ge 5\) was dealt with in [7]. The case of \(d=4\) is related to log-correlated models and the limit of the extremes was derived in [17]. Finally, the scaling limit of the maximum in lower dimensions follows from the scaling limit of the model, which was obtained by [6] in \(d=1\) and by [8] in \(d=2,\,3\).

The discrete Gaussian free field (DGFF) is a well-studied example of a discrete interface model and has connections to other stochastic processes, such as branching random walk and cover times. Most of these connections arise due to the fact that the covariance of the DGFF is the Green’s function of the simple random walk. This is not the case for the MM, essentially because the biharmonic operator does not satisfy a maximum principle. This also depends heavily on the boundary conditions: closed formulas for the bilaplacian covariance matrix have been found [10, 11, 13], however they do not apply to our choice of boundary values. On the square lattice one can rely on other techniques, namely discrete PDEs, to prove results in the bilaplacian case. However, as soon as one goes beyond \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\), approximations of boundary value problems are less straightforward, and our work is motivated by this difficulty. We will use a probabilistic solution of the Dirichlet problem for the bilaplacian [19] to investigate the membrane model indexed on regular trees. We restrict our study to regular trees because these graphs have many features which are different from \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\). One of the most striking contrasts is that the number of vertices in the n-th generation is comparable to the size of the graph up to the n-th generation. From Vanderbei’s representation, it is clear that the boundary plays a prominent role in the behavior of the covariance structure. We will use this representation to derive the maximum of the field under the infinite and finite volume measures. In the next section we describe our set-up and also state the main results, followed by a discussion on future directions.

2 Main Results

2.1 The Model

For any two vertices \(x,y\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\), we denote by d(x, y) the graph distance between x and y. Then the Laplacian, whose definition was given in (1.2), can also be viewed as the following matrix:

$$\begin{aligned} \Delta (x,y)= {\left\{ \begin{array}{ll} -1 &{} \text {if } x=y,\\ \frac{1}{m} &{} \text {if } d(x,y)=1,\\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}\tag{2.1}$$

We write \(\Delta ^2\) for its iteration, i.e., \(\Delta ^2f(x){:}{=}\Delta (\Delta f)(x)\) and define \(\Delta ^2_\Lambda \) to be the matrix \((\Delta ^2(x,y))_{x,y\in \Lambda }\).

Lemma 1

The Gibbs measure \({\textbf{P}}_\Lambda \) on \({{\,\mathrm{{\mathbb {R}}}\,}}^\Lambda \) with 0-boundary conditions outside \(\Lambda \) given by (1.1) exists for any finite subset \(\Lambda \). It is the centered Gaussian field on \(\Lambda \) with covariance matrix \((\Delta ^2_\Lambda )^{-1}\).

Proof

We first prove that \(\Delta ^2\) is symmetric and positive definite, i.e., for any function \(f:{{\,\mathrm{{\mathbb {T}}}\,}}_m \rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}\) which vanishes outside a finite subset and which is not identically zero

From (2.1) it is clear that \(\Delta \) is symmetric, and hence \(\Delta ^2\) is so. Let \(g=\Delta f\) and to prove (2.2) we observe that

Also, one can show using summation by parts that if \(\varphi :{{\,\mathrm{{\mathbb {T}}}\,}}_m\rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}\) vanishes outside \(\Lambda \) then

The proof is now complete by using Proposition 13.13 of [9]. \(\square \)
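As a numerical sanity check of Lemma 1, the following sketch builds the matrix \(\Delta ^2_\Lambda \) when \(\Lambda \) is the ball of radius n around the root and verifies that it is symmetric and positive definite. It assumes the normalisation of the Laplacian in which each neighbour carries weight 1/m; the function name `laplacian_rows` and the choice m = 3, n = 3 are ours and purely illustrative.

```python
import numpy as np

def laplacian_rows(m, n):
    """Rows of Delta indexed by the ball of radius n, columns by the ball of radius n + 1."""
    parent = [-1]      # vertices are listed generation by generation, vertex 0 is the root
    frontier = [0]
    inner = 1          # number of vertices within distance n of the root
    for g in range(1, n + 2):
        new = []
        for v in frontier:
            for _ in range(m if v == 0 else m - 1):
                parent.append(v)
                new.append(len(parent) - 1)
        frontier = new
        if g <= n:
            inner = len(parent)
    A = np.zeros((inner, len(parent)))
    A[np.arange(inner), np.arange(inner)] = -1.0
    for v in range(1, len(parent)):
        p = parent[v]
        A[p, v] = 1.0 / m          # the parent always lies in the inner ball
        if v < inner:
            A[v, p] = 1.0 / m
    return A

m, n = 3, 3
A = laplacian_rows(m, n)
# Delta is symmetric, so (Delta^2)(x, y) for x, y in Lambda equals (A A^T)(x, y).
bilaplacian = A @ A.T
covariance = np.linalg.inv(bilaplacian)
min_eig = np.linalg.eigvalsh(bilaplacian).min()
print(bilaplacian.shape, min_eig > 0)
```

Writing the restricted bilaplacian as `A @ A.T` makes positive semi-definiteness visible at a glance; strict positivity then corresponds to `A` having full row rank.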

2.2 Main Results

We denote the root of the tree by o. We will consider \(m\ge 3\). In the case when \(m=2\) the tree is isomorphic to \({{\,\mathrm{{\mathbb {Z}}}\,}}\) and the MM on \({{\,\mathrm{{\mathbb {Z}}}\,}}\) has been studied in the literature, see for instance [5, 6]. For any \(n\in {{\,\mathrm{{\mathbb {N}}}\,}}\), we define

$$\begin{aligned} V_n{:}{=}\left\{ x\in {\mathbb {T}}_m:\, d(o,x)\le n\right\} . \end{aligned}$$

Let \(\varphi =(\varphi _{x})_{x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m}\) be the membrane model on \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) with zero boundary conditions outside \(V_n\). In this case, we denote the corresponding measure \({\textbf{P}}_{V_n}\) by \({\textbf{P}}_n\). Also we denote the covariance function for this model by \(G_n\), that is, \(G_n(x,y){:}{=} {\textbf{E}}_n[\varphi _x\varphi _y]\). Let \((S_k)_{k\ge 0}\) be the simple random walk on \({{\,\mathrm{{\mathbb {T}}}\,}}_m\). We write \({\textbf{P}}_x\) for the canonical law of the simple random walk starting at x. The following theorem proves the existence of the infinite volume limit.

Theorem 2

The measures \({\textbf{P}}_n\) converge weakly to a measure \({\textbf{P}}\), which is the law of a Gaussian process \((\varphi _{x})_{x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m}\) with covariance function G given by

$$\begin{aligned} G(x,y){:}{=}\sum _{k=0}^{\infty }(k+1)\,{\textbf{P}}_x(S_k=y),\quad x,\,y\in {\mathbb {T}}_m. \end{aligned}$$

We will see later (in Lemma 9) that for any \(x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\)

We define two sequences as follows

where \(N{:}{=}|V_n|\). We have

Our main result in this paper concerns the scaling limit of the maximum of the field, namely the Gumbel convergence of the rescaled maximum.

Theorem 3

For any \(\theta \in {{\,\mathrm{{\mathbb {R}}}\,}}\)

We show in the following result that up to the first order the constants do not change for the extremes and when we look at the expected maximum under the finite volume, it still converges to \(\sqrt{G(o,o)}\), after appropriate scaling. The same result can be proved under the infinite volume measure, so we stick to the finite volume case, the infinite volume situation being completely analogous.

Theorem 4

For \(m\ge 3\),

In the case of the finite volume field we show that the maximum of the field, normalised to have variance one, converges in distribution to the Gumbel distribution. We define \(B_n{:}{=} b_n/\sqrt{G(o,o)}\) and \(A_n{:}{=} B_n^{-1}\).

Theorem 5

Let \(\psi _x= \varphi _x/\sqrt{G_n(x,x)}\) for \(x\in V_n\). Then for any \(\theta \in {{\,\mathrm{{\mathbb {R}}}\,}}\) and \(m\ge 14\) we have that

Remark 6

In exposing our results we keep all the constants depending on m explicit. We emphasize that \(m \ge 14\) is just the bound that our approach yields, while presumably the result holds for all \(m \ge 3\).

2.3 Discussion

- Our result is heuristically motivated by the fast decay of correlations of the DGFF on a tree. As we shall see in Lemma 9, correlations decay exponentially in the distance between points, which suggests a strong decoupling and a behavior of the rescaled maximum similar to that of independent and identically distributed Gaussians.

- In Theorem 3, the scaling constants show that the correlation structure can be ignored for the extremes and the behaviour is similar to that of i.i.d. centered Gaussian random variables with variance G(o, o). In the finite volume case, we rescaled the field to have variance one and hence the behaviour remains the same as that of the i.i.d. case. An interesting open problem is whether this behavior is retained for the finite volume field divided by \(\sqrt{G(o,\,o)}\). This convergence is by no means trivial to obtain as the finite volume variance convergence to \(G(\cdot ,\,\cdot )\) is not uniform, in particular the error depends on the distance to the leaves. Moreover, since the size of the boundary of a finite tree is non-negligible with respect to the total size we cannot claim that extremes are achieved in the bulk and not near the boundary.

- For scaling extremes we use a comparison theorem of [12] that is based on Stein’s method. There are many different approaches to the question of convergence of extremes using Stein’s method, one notable instance being [2]. Compared to their method, the advantage of [12] is that it does not require controlling the conditional expectation of the field that emerges from the spatial Markov property. While for other interfaces, like the DGFF, the harmonic extension has a closed form in terms of random walk probabilities, the biharmonic extension is more subtle to bound, and [12] allows one to bypass this step.

- The main contribution of the article is the analysis of the covariance structure for a membrane model on the tree. We are not aware of any prior work which deals with the bilaplacian model on graphs beyond \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\), whilst there is an extensive literature on the discrete Gaussian free field on general graphs. We exploit the representation of the solution of a biharmonic Dirichlet problem in terms of the random walk on the graph. In the bulk the behaviour is easy to derive and is close to that of

$$\begin{aligned} {\overline{G}}_n(x,y){:}{=} {\textbf{E}}_x\left[ \sum \limits _{k=0}^{\tau _{0}-1} (k+1){{\,\mathrm{\mathbbm {1}}\,}}_{[S_k=y]} \right] , \end{aligned}$$

where \(\tau _0\) is the first exit time from a bounded subgraph (see Sect. 3). Around the boundary additional effects arising out of boundary excursions kick in, in particular we will use the successive excursion times of the random walk and the local time of the random walk between two consecutive visits to the complement of a set. Control of such observables on general graphs will open up avenues for further interesting studies in the area of random interfaces.

- Although we consider regular trees, we believe that our results can be extended to rooted trees where the same scaling limit for the maximum will hold. The case of Galton–Watson trees will be more challenging due to the randomness of the offspring distribution, but would be an intriguing direction to extend the study of random interfaces to random graphs.

Structure of the article In Sect. 3 we recall the random walk representation for the solution of the biharmonic Dirichlet problem for a general graph and also rewrite the formula in our set-up. In Sect. 4 we show that the infinite volume membrane measure exists and provide a proof of Theorem 2. In Sect. 5 we prove Theorem 3, which determines the fluctuations of the extremes under the infinite volume measure. In Sect. 6 we prove Theorem 4, which provides a limit for the expected maximum under the finite volume measure. In Sect. 7 we show the fluctuations of the maximum under the finite volume measure and prove Theorem 5. Section 8 is devoted to the proof of Lemma 15, which is related to finer estimates on the covariance of the model.

Notation In the following C is a generic constant which may depend on m and may change in each appearance within the same equation.

3 A Random Walk Representation for the Covariance Function

In this section we shall revisit the random walk representation for the covariance function \(G_n\). From the definition of the model it follows that \(G_n\) satisfies the following Dirichlet problem: for \(x\in V_n\)

If one considers the Dirichlet problem above but with \(-\Delta \) replacing \(\Delta ^2\) then the solution is the well-known expected local time of the simple random walk on the graph [18, Chapter 1]. In our set-up such a simple general formulation is not available. In particular, to the best of the authors’ knowledge, one cannot relate the covariance of the MM to a stochastic process in such a direct way. Instead, the solution is given by weighted local times together with an expression involving the boundary excursion times of the random walk. The boundary effects are more profound in the membrane model and this is documented in the existing works on \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\) ([8, 14, 15, 17]).

3.1 Intermezzo: Random Walk Representation on General Graphs

In this subsection we discuss the probabilistic solution of the Dirichlet problem for the discrete biharmonic operator obtained by [19], whose set-up is much more general in that it considers general graphs and not only trees. We recall it here for completeness. Let \({\mathcal {G}}\) be a connected graph and let \(\Lambda \subset {\mathcal {G}}\) be a finite subgraph. With a slight abuse of notation we will identify the graph \({\mathcal {G}}\) resp. \(\Lambda \) with its vertex set, but this should not cause any confusion. Let \(\rho \) be a strictly positive measure on the discrete state space \({\mathcal {G}}\) and, for all \(x,y\in {\mathcal {G}}\), let q(x, y) be a positive symmetric transition function such that

Let \(P=(p(x,y))_{x,y\in {\mathcal {G}}}\) be a transition matrix such that

Let \((S_k)_{k\ge 1}\) be a random walk on \({\mathcal {G}}\), defined on a probability space \((\Omega ,{\mathcal {F}})\), with transition matrix P making the random walk symmetric. Now the Laplacian operator acting on a function \(f:{\mathcal {G}}\rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}\) is defined as

The one-step transition operator P is defined as

$$\begin{aligned} Pf(x){:}{=}\sum _{y\in {\mathcal {G}}}p(x,y)f(y)={\textbf{E}}_x\left[ f(S_1)\right] . \end{aligned}$$

Then

$$\begin{aligned} \Delta =P-I, \end{aligned}$$

where I is the identity operator. We say that f is a solution to the non-homogeneous Dirichlet problem for the bilaplacian if f satisfies the following:

where \(\partial _k\Lambda \) is defined by

and where \(\psi ,\,\phi \) are graph functions representing the input datum resp. boundary datum. We want to obtain a probabilistic solution of the problem (3.2). We define \(\tau _i\) to be the \((i+1)\)-th visit time to \(\Lambda ^c\) by the random walk \(S_k\). Formally,

$$\begin{aligned} \tau _0{:}{=}\inf \left\{ k\ge 0:\, S_k\in \Lambda ^c\right\} ,\qquad \tau _i{:}{=}\inf \left\{ k>\tau _{i-1}:\, S_k\in \Lambda ^c\right\} ,\quad i\ge 1. \end{aligned}$$

Note that \(\tau _0\) is the first exit time from \(\Lambda \). We will keep two assumptions throughout the section:

(i) \(\Lambda \cup \partial _1\Lambda \) is finite;

(ii) \({\textbf{E}}_x\left[ \tau _0^2\right] <\infty \) for all \(x\in {\mathcal {G}}\).

Let

and the inner-product in \(L^2({\mathcal {G}},\rho )\) be defined as follows: for \(f,g\in L^2({\mathcal {G}},\rho )\)

One can show that P is a self-adjoint operator on \(L^2(\mathcal G,\rho )\). Hence \(\Delta \) is also self-adjoint. We define

Next we define an operator acting on \({\mathcal {A}}=\left\{ f\left| f:\Lambda ^c\rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}\right. \right\} \). The operator Q acting on \({\mathcal {A}}\) is defined as

Observe that, if \(x\in \left( \Lambda \cup \partial _1\Lambda \right) ^c\), then \(Qf(x)=0\) for all \(f\in {\mathcal {A}}\). Therefore

Since \(\partial _1\Lambda \) is finite, one can show with the help of (ii) that (Qf)(x) is bounded. It can be shown that the operator Q is positive semi-definite on \(L^2(\Lambda ^c,\rho )\) (see [19]). Therefore Q can be diagonalized and can be written as

where the sum is over all the eigenvalues of Q and \(\Pi _\lambda \) are the projection operators onto the eigenspace corresponding to the eigenvalue \(\lambda \). Observing the range of the Q-operator in (3.6) and the fact that \(\partial _1\Lambda \) is finite, we can say that the operator Q is compact and \(\text {Range}(Q)\) is finite-dimensional. Therefore, the spectrum of Q is finite. Also, as Q is positive semi-definite, we conclude that all the eigenvalues are non-negative. A probabilistic solution of the problem (3.2) is given in [19].

Theorem 7

([19, Theorem 4]) Let \((\eta _t)_{t\ge 0}\) be a Poisson process with parameter 1 which is independent of the random walk \((S_k)\). Then the solution of (3.2) is given by

Alternatively, the above solution can be written in terms of the eigenvalues of Q and the corresponding projection operators as follows

where \({{\widetilde{P}}}f(z){:}{=}{\textbf{E}}_z[f(S_{\tau _1})]\) is the operator acting on functions defined on \(\partial _1\Lambda \) and

Note that (3.7) is a re-writing of the solution (3.6) which is a by-product of Vanderbei’s proof.

Remark 8

Without the presence of \(\eta \) the series describing the covariances might not be absolutely summable on every graph, as discussed in an example in [19]. However in our case, that is for regular trees where we have exponential decay of correlations for \(\varphi \), one can show that \(\eta _t\) does not play any role and can in fact be avoided altogether. Also note that since \(\{\tau _i-\tau _{i-1}:\, i\ge 1\}\) need not be i.i.d., the terms involving excursion times to \(\Lambda ^c\) in (3.6) must be dealt with carefully.

We also note that the representation (3.7) is not directly stated as a theorem in [19] but if one goes through the proof of Theorem 4 in [19] then it follows immediately.

3.2 Back to Regular Trees

In our set-up, \(V_n\) consists of the first n generations of the regular tree. Note that \(V_n \cup \partial _1 V_n\) is finite. It follows from Lemma 11 that \({\textbf{E}}_x[\tau _0^2]<\infty \) for all \(x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\) with \(m\ge 3\), so that (i) and (ii) are satisfied. It can be easily proved using the theory of electrical networks that the simple random walk on \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) is transient for all \(m\ge 3\). Using the solution (3.6) we have the random walk representation of \(G_n(x,y)\) as follows:

We have used \(\phi (z)=0\) and \(\psi (z)={{\,\mathrm{\mathbbm {1}}\,}}_{[z=y]}\) in equation (3.6).

We write \(G_n(x,y)\) as

where

Note that \({\overline{G}}_n(x,y)= {\textbf{E}}_{x,y}\left[ \sum _{k=0}^{\tau _{0}-1}\sum _{\ell =0}^{\tau ^\prime _{0}-1} {{\,\mathrm{\mathbbm {1}}\,}}_{[S_k= S^\prime _\ell ]}\right] \) where \(S_k\) and \(S^\prime _\ell \) are two independent simple random walks starting from x and y respectively, and \(\tau _0\) and \(\tau _0^\prime \) are their respective first visit times to \(V_n^c\). \({\overline{G}}_n(x,y)\) plays a crucial role in the study of the membrane model: in the \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\) case, it was shown in [13] that \(G_n\) and \({\overline{G}}_n\) are close in the bulk of the domain. We will also see here that \(E_n(x,y)\) plays the role of an error term. We observe from (3.9) and (3.7) that

where \(h(z)={\textbf{E}}_z\left[ \sum \limits _{k=0}^{\tau _{1}-1} k{{\,\mathrm{\mathbbm {1}}\,}}_{[S_k=y]} \right] \) for \(z\in V_n^c\).
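The relation between \(G_n\) and \({\overline{G}}_n\) can be explored numerically. The sketch below, for the illustrative choice m = 3, n = 3, compares \(G_n=(\Delta ^2_{V_n})^{-1}\) with \({\overline{G}}_n\), computed once as the series \(\sum _k (k+1)P_{V_n}^k\) and once as \((I-P_{V_n})^{-2}\), where \(P_{V_n}\) is the transition matrix of the walk killed on leaving \(V_n\). The helper name, the truncation level of the series and the normalisation \(\Delta =P-I\) are assumptions made for illustration only.

```python
import numpy as np

def laplacian_rows(m, n):
    """Rows of Delta indexed by V_n, columns by V_{n+1} (vertex 0 is the root)."""
    parent, frontier, inner = [-1], [0], 1
    for g in range(1, n + 2):
        new = []
        for v in frontier:
            for _ in range(m if v == 0 else m - 1):
                parent.append(v)
                new.append(len(parent) - 1)
        frontier = new
        if g <= n:
            inner = len(parent)
    A = np.zeros((inner, len(parent)))
    A[np.arange(inner), np.arange(inner)] = -1.0
    for v in range(1, len(parent)):
        A[parent[v], v] = 1.0 / m
        if v < inner:
            A[v, parent[v]] = 1.0 / m
    return A

m, n = 3, 3
A = laplacian_rows(m, n)
size = A.shape[0]
G_n = np.linalg.inv(A @ A.T)               # covariance of the membrane model on V_n
P_killed = A[:, :size] + np.eye(size)      # walk killed upon leaving V_n

# Truncated series sum_k (k + 1) P^k; it converges because the killed walk loses mass.
G_bar_series = np.zeros((size, size))
power = np.eye(size)
for k in range(2000):
    G_bar_series += (k + 1) * power
    power = power @ P_killed

G_bar = np.linalg.matrix_power(np.linalg.inv(np.eye(size) - P_killed), 2)
boundary_term = G_bar - G_n                # positive semi-definite by matrix monotonicity
print(np.abs(G_bar_series - G_bar).max())
print(boundary_term[0, 0], boundary_term[-1, -1])   # root versus a leaf of V_n
```

The difference `boundary_term` is non-zero only because \(\Delta ^2_{V_n}\) also sees the neighbours of \(V_n\); this is one way to visualise why the boundary matters for the membrane model.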

4 Proof of Theorem 2

If the infinite volume limit exists then it is supposed to have the covariance function G. We first show that \(G(x,y)= \sum _{k=0}^\infty (k+1) {\textbf{P}}_x(S_k=y)\) can be computed in terms of d(x, y) for an m-regular tree and that it has exponential decay in the distance d(x, y).

Lemma 9

We have for any \(x, y\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\) that

Proof

We define the Green’s function of the simple random walk on \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) as the power series

$$\begin{aligned} \Gamma (x,y|{\textbf{z}}){:}{=}\sum _{k=0}^{\infty }{\textbf{P}}_x(S_k=y)\,{\textbf{z}}^k. \end{aligned}$$

From [20, Lemma 1.24] we have

We fix \(x,y\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\) and write \(d=d(x,y)\), \(g({\textbf{z}}){:}{=}\Gamma (x,y|{\textbf{z}})\) for \({\textbf{z}}\in {\mathbb {C}}\). Now observe that

From (4.1) we get

So taking a derivative we have

and hence evaluation at \({\textbf{z}}=1\) gives

Also

Now we obtain

\(\square \)
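A quick numerical illustration of the series defining G at the root: the distance of the walk from its starting point is itself a Markov chain (it moves away with probability 1 from the root, and otherwise away with probability \((m-1)/m\) and back with probability 1/m), so the return probabilities \({\textbf{P}}_o(S_k=o)\) can be computed exactly up to any finite time. This is a sketch under our own choices of m, truncation level and function name. For m = 3 the truncated sums should be close to \((m-1)/(m-2)=2\) for \(\Gamma (o,o|1)\) and to 10 for G(o, o); the latter value is our own evaluation of \(\Gamma (o,o|1)+\partial _{{\textbf{z}}}\Gamma (o,o|1)\) from the standard generating function of the return probabilities and is not quoted from the text.

```python
import numpy as np

def return_probabilities(m, K):
    """P_o(S_k = o) for k = 0..K, via the distance-from-the-root chain."""
    dist = np.zeros(K + 2)      # dist[j] = probability of being at distance j
    dist[0] = 1.0
    probs = []
    for _ in range(K + 1):
        probs.append(dist[0])
        new = np.zeros_like(dist)
        new[1] += dist[0]                          # the root always steps away
        new[:-2] += dist[1:-1] / m                 # step towards the root
        new[2:] += dist[1:-1] * (m - 1) / m        # step away from the root
        dist = new
    return np.array(probs)

m, K = 3, 600
p = return_probabilities(m, K)
k = np.arange(K + 1)
green_at_root = p.sum()                 # truncated Gamma(o, o | 1)
G_at_root = ((k + 1) * p).sum()         # truncated G(o, o)
print(green_at_root, G_at_root)
```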

The behavior of G depends crucially on the graph distance d(x, y) between two points x and y on the tree. We would need an estimate on the number of points (x, y) which are at a fixed distance k. The following lemma gives a bound on this.

Lemma 10

Let

Then

where C is a constant which depends on m.

Proof

Let \(e(x){:}{=}d(o,x)\) and for any \(\ell >0\) define

$$\begin{aligned} \partial _0 V_\ell {:}{=}\left\{ x\in V_\ell :\, e(x)=\ell \right\} . \end{aligned}$$

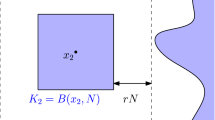

In other words, \(\partial _0 V_\ell \) is the set of all leaf-vertices in \(V_\ell \). Let us now fix any vertex x in \(\partial _0 V_{n-\ell }\) and count the number of y’s in \(V_n\) such that \(d(x,y)=k\). Notice that for any \(x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\setminus \{o\}\), we have \(e(y)=e(x)\pm 1\) for all y with \(x\sim y\). Moreover, \(e(y)=e(x)- 1\) holds for only one such y and \(e(y)=e(x)+ 1\) holds for the remaining \((m-1)\) such y. In other words, from any \(x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\setminus \{o\}\) there is only one way to move closer to o and \((m-1)\) ways to move farther away from o. Now let us first consider the case when \(k\le n\). If \(0\le \ell <k\), then for any \(x\in \partial _0 V_{n-\ell }\) the number of vertices in \(V_n\) which are at a distance k from x is \((m-1)^{{\lfloor }(k-\ell )/2{\rfloor }+\ell }\). This is because the unique path of length k from x to some vertex in \(V_n\) must first take \({\lceil }(k-\ell )/2{\rceil }\) steps moving closer to o, after which the remaining steps may go in any of the \((m-1)\) available directions. The situation is explained graphically in Figure 1. On the other hand if \(\ell \ge k\), then for any \(x\in \partial _0 V_{n-\ell }\) the number of vertices in \(V_n\) which are at a distance k from x is \(m(m-1)^{k-1}\). Also, note that for any \(j\ge 1\) there are \(m(m-1)^{j-1}\) vertices in \(\partial _0 V_j\). Therefore in this case

Now we consider the case when \(k>n\). In this case for any \(x\in \partial _0 V_{n-\ell }\) the maximum number of vertices which are at a distance k from x is \((m-1)^{{\lfloor }(k-\ell )/2{\rfloor }+\ell }\) (here we are over-counting and for \(k>2n-\ell \) this number is 0). Therefore

\(\square \)
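The two counting formulas used in the proof for \(k\le n\) can be checked by brute force on a small tree. The sketch below is illustrative only: the function names and the choice m = 3, n = 5 are ours. It enumerates, for every vertex x of \(V_n\) and every \(1\le k\le n\), the vertices of \(V_n\) at distance k from x and compares the count with \((m-1)^{\lfloor (k-\ell )/2\rfloor +\ell }\) if \(\ell <k\) and with \(m(m-1)^{k-1}\) if \(\ell \ge k\), where \(\ell =n-d(o,x)\).

```python
from collections import deque

def build_ball(m, n):
    """Adjacency lists and generations of V_n in the m-regular tree (vertex 0 is the root)."""
    parent, gen, frontier = [-1], [0], [0]
    for g in range(1, n + 1):
        new = []
        for v in frontier:
            for _ in range(m if v == 0 else m - 1):
                parent.append(v)
                gen.append(g)
                new.append(len(parent) - 1)
        frontier = new
    adj = [[] for _ in parent]
    for v in range(1, len(parent)):
        adj[v].append(parent[v])
        adj[parent[v]].append(v)
    return adj, gen

def count_at_distance(adj, x, k):
    """Number of vertices at graph distance exactly k from x (breadth-first search)."""
    dist = {x: 0}
    queue = deque([x])
    while queue:
        v = queue.popleft()
        if dist[v] == k:
            continue
        for w in adj[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return sum(1 for d in dist.values() if d == k)

def predicted_count(m, n, depth, k):
    """Count claimed in the proof for a vertex at generation `depth`, with 1 <= k <= n."""
    ell = n - depth
    if ell >= k:
        return m * (m - 1) ** (k - 1)
    return (m - 1) ** ((k - ell) // 2 + ell)

m, n = 3, 5
adj, gen = build_ball(m, n)
mismatches = [
    (x, k)
    for x in range(len(adj))
    for k in range(1, n + 1)
    if count_at_distance(adj, x, k) != predicted_count(m, n, gen[x], k)
]
print(len(adj), mismatches)
```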

Fig. 1 Case \(0\le \ell <k\le n\) in the proof of Lemma 10. If \(d(x,\,y)=k\), in order to reach y from x one must move to their least common ancestor in \(\lceil (k-\ell )/2\rceil \) steps and from there move to y in \(k-\lceil (k-\ell )/2\rceil \) steps. The 3-ary tree is only partially drawn for simplicity.

Our final lemma before the proof of Theorem 2 gives an estimate on the second moment of the exit time.

Lemma 11

For any \(x\in V_n\)

Proof

Observe that \({\textbf{E}}_x\left[ \sum _{z\in V_n} \sum _{\ell =0}^{\tau _0-1}\ell {{\,\mathrm{\mathbbm {1}}\,}}_{[S_\ell =z]}\right] = {\textbf{E}}_x\left[ {\tau _0 (\tau _0-1)}/{2}\right] \) which implies

By using Lemma 9 we get

Now suppose \(\ell = d(o,x)\). With arguments similar to the proof of Lemma 10, we have

Hence we have

\(\square \)
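The identity used at the start of the proof, \({\textbf{E}}_x\big [\sum _{z\in V_n}\sum _{\ell =0}^{\tau _0-1}\ell {{\,\mathrm{\mathbbm {1}}\,}}_{[S_\ell =z]}\big ]={\textbf{E}}_x[\tau _0(\tau _0-1)/2]\), can be verified with linear algebra on a small tree. In the sketch below (our own illustration, with hypothetical function name and m = 3, n = 4) the left-hand side is computed as \(\sum _\ell \ell \,P^\ell {\textbf{1}}=P(I-P)^{-2}{\textbf{1}}\) for the killed transition matrix P, while the moments of \(\tau _0\) are obtained from the one-step recursions \({\textbf{E}}_x[\tau _0]=1+(P\,{\textbf{E}}_\cdot [\tau _0])(x)\) and \({\textbf{E}}_x[\tau _0^2]=1+2(P\,{\textbf{E}}_\cdot [\tau _0])(x)+(P\,{\textbf{E}}_\cdot [\tau _0^2])(x)\).

```python
import numpy as np

def killed_transition_matrix(m, n):
    """Transition matrix of the simple random walk on T_m, killed outside V_n."""
    parent, frontier = [-1], [0]
    for generation in range(n):
        new = []
        for v in frontier:
            for _ in range(m if v == 0 else m - 1):
                parent.append(v)
                new.append(len(parent) - 1)
        frontier = new
    P = np.zeros((len(parent), len(parent)))
    for v in range(1, len(parent)):
        P[v, parent[v]] = P[parent[v], v] = 1.0 / m
    return P

m, n = 3, 4
P = killed_transition_matrix(m, n)
I = np.eye(len(P))
ones = np.ones(len(P))

mean_tau = np.linalg.solve(I - P, ones)                        # E_x[tau_0]
second_tau = np.linalg.solve(I - P, ones + 2 * P @ mean_tau)   # E_x[tau_0^2]

lhs = P @ np.linalg.solve(I - P, np.linalg.solve(I - P, ones)) # sum_l l * P_x(tau_0 > l)
rhs = (second_tau - mean_tau) / 2                              # E_x[tau_0 (tau_0 - 1) / 2]
print(np.abs(lhs - rhs).max(), mean_tau[0], second_tau[0])
```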

Proof of Theorem 2

Since the random walk on \({{\,\mathrm{{\mathbb {T}}}\,}}_m\) starting from vertex x is transient, \(\tau _0\) is finite almost surely and \(\tau _0\ge n-d(o,x)\) for all \(n\ge 1\). Therefore \(\tau _0\) increases to \(\infty \) as \(n\rightarrow \infty \). Hence as an immediate conclusion \(\{{\overline{G}}_n(x,y)\}_{n\ge 1}\) is an increasing sequence. By the monotone convergence theorem we have

We now show that \(|E_n(x,y)|\rightarrow 0\) as n tends to infinity so that we have

As the measures under consideration are Gaussian, this will complete the proof. Recall the representation of \(E_n(x,y)\) from (3.11) with \(h(z)={\textbf{E}}_z\left[ \sum \limits _{k=0}^{\tau _{1}-1} k{{\,\mathrm{\mathbbm {1}}\,}}_{[S_k=y]} \right] \). Since Q has eigenvalues \(\lambda \) it can be seen that \(\left( \frac{1}{I+Q}\right) = \sum _{\lambda } \frac{1}{1+\lambda } \Pi _{\lambda }\). So we can rewrite (3.11) as

By the Cauchy–Schwarz inequality and the fact that \(\Vert \left( {I+Q}\right) ^{-1}\Vert \le 1\) we get

Now we obtain a bound for the second factor in (4.4). We have for \(y\in \partial _0 V_\ell \)

Thus we have

Plugging in the bounds (4.3), (4.5) in (4.4) we obtain

From symmetry we can conclude

It follows from (4.6) that \(|E_n(x,y)|\rightarrow 0\) as \(n\rightarrow \infty \). \(\square \)

An alternative proof of (4.6) using the maximum principle for harmonic functions is provided in Appendix A.

5 Proof of Theorem 3

In this section we prove Theorem 3.

Proof of Theorem 3

For any \(x\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\), we define \(\psi _x{:}{=}\varphi _x/\sqrt{G(o,o)}\). Then for each x, \(\psi _x\) is a standard Gaussian random variable. Recall that for any n

We set

Let \(\theta \in {{\,\mathrm{{\mathbb {R}}}\,}}\) be fixed and define

Let \(Poi(\lambda )\) denote a Poisson random variable with parameter \(\lambda \). We shall use a Binomial-to-Poisson approximation by [12]. By Theorem 3.1 of [12] we get

where \(d_{TV}\) is the total variation distance. We want to prove that \(d_{TV}\left( W_n, Poi(\lambda _n)\right) \) goes to zero as n tends to infinity. Once we prove it, we will have

But

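Using Mills’ ratio

$$\begin{aligned} \left( 1-\frac{1}{t^2}\right) \frac{\textrm{e}^{-t^2/2}}{\sqrt{2\pi }\,t}\le {\textbf{P}}\left( {\mathcal {N}}(0,1)>t\right) \le \frac{\textrm{e}^{-t^2/2}}{\sqrt{2\pi }\,t},\quad t>0, \end{aligned}$$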

one can show that \(\lambda _n = N{\textbf{P}}(\psi _o>u_n(\theta ))\) converges to \(\textrm{e}^{-\theta }\) as n tends to infinity. Hence the proof will be complete.
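The convergence \(\lambda _n\rightarrow \textrm{e}^{-\theta }\) can be observed numerically for standard Gaussians. The sketch below assumes the classical normalisation \(u_N(\theta )=b_N+\theta /b_N\) with \(b_N=\sqrt{2\log N}-(\log \log N+\log 4\pi )/(2\sqrt{2\log N})\); this choice, the function names and the sample values of N are our assumptions and need not coincide with the sequences used in the text. The convergence is slow, with a relative error that only decays like a power of \(1/\log N\), which is why very large N are used.

```python
import math

def gaussian_tail(u):
    """P(Z > u) for a standard Gaussian Z."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))

def poisson_intensity(N, theta):
    """N * P(Z > b_N + theta / b_N) under the assumed normalisation."""
    L = math.log(N)
    b = math.sqrt(2 * L) - (math.log(L) + math.log(4 * math.pi)) / (2 * math.sqrt(2 * L))
    return N * gaussian_tail(b + theta / b)

theta = 1.0
for N in (1e3, 1e10, 1e100):
    print(N, poisson_intensity(N, theta), math.exp(-theta))
```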

We now obtain bounds for the terms in (5.1) to prove that \(d_{TV}\left( W_n, Poi(\lambda _n)\right) \) goes to zero as n tends to infinity.

Another use of Mills’ ratio gives

Now we give a bound on the other term in (5.1). Let \(x,y\in V_n\) with \(d(x,y)=k\ge 1\) and define \(r_k{:}{=}\textbf{Cov}(\psi _x, \psi _y)\). From Lemma 9 it follows that \(r_k\) depends only on k, not on x or y, and moreover \(0<r_k <1\) for all \(k\ge 1\). Now from Lemma 3.4 of [12] we obtain the following bounds:

We fix \(\delta \) such that \(0\le \delta < (1-r_1)/(1+r_1)\). We have

Now we use the bounds (5.2), (5.3) and get the following bound for the above term:

Note that in the second inequality we have used Lemma 10 and the fact that \(r_k\) is decreasing in k with \(r_k \le C k(m-1)^{-k}\), which follows from Lemma 9. Now observe that by definition \(1+\delta < 2/(1+r_1)\) and \(r_{{\lfloor }2n\delta {\rfloor }} < 1/2\) for large enough n. Thus \(I_n\) goes to 0 as n tends to infinity and we conclude \(d_{TV}\left( W_n, Poi(\lambda _n)\right) \) goes to zero as n tends to infinity. \(\square \)

6 Proof of Theorem 4

In this section we prove Theorem 4. We use the following

Lemma 12

For any \(x\in V_n\) one has \(G_n(x,x) \le G(o,\,o)\).

Proof

The proof can be readily adapted from that of [4, Corollary 3.2] which is carried out for \({{\,\mathrm{{\mathbb {Z}}}\,}}^d\). \(\square \)

The following lemma gives a bound on \({\textbf{E}}_x[\tau _0]\) in terms of the distance of the point x from the boundary.

Lemma 13

For \(x\in V_n\)

where

Proof

Using (4.2) we have

Suppose \(d(o,x)=k\). Then similarly to the proof of Lemma 10 we argue that

Therefore splitting the sum according to the distance d(x, y) we get,

As \({\lfloor }\frac{\ell -n+k}{2}{\rfloor } \le \frac{\ell }{2}-\frac{n-k}{2}\), we have

\(\square \)

The following bound will also be useful.

Lemma 14

For any \(z\in \partial _1V_n\)

where

Proof

We have

\(\square \)

We now proceed to prove Theorem 4.

Proof of Theorem 4

First we prove an upper bound for the expected maximum using a standard trick. Using Jensen’s inequality and Lemma 12 we have for any \(\beta >0\)

Optimizing over \(\beta \) we obtain

Thus

Next we prove the lower bound for the limes inferior. We use a Gaussian comparison inequality on an appropriate set of vertices. For this we need a lower bound on \(G_n(x,x)\) for x in an appropriate subset of \(V_n\). Using (3.9) we write

where

Our target is to obtain a bound for \({\overline{E}}_n(x,y)\). We have using Lemma 13, Lemma 14 and (4.1) with \({\textbf{z}}=1\)

To prove the bound for the limes inferior we define a subset \(U_n\) of \(V_n\) as follows. For each \(z\in \partial _0 V_{n-2\lfloor \log n\rfloor }=\{x\in V_n: d(o,x)=n-2\lfloor \log n\rfloor \}\), choose exactly one \(y_z \in \partial _0 V_{n-\lfloor \log n\rfloor }\) such that \(d(z,\,y_z)=\lfloor \log n\rfloor \). Then define

Note that \(U_n \subset \partial _0 V_{n-\lfloor \log n\rfloor }\) but \(|U_n| = |\partial _0 V_{n-2\lfloor \log n\rfloor }| = m(m-1)^{n-2\lfloor \log n\rfloor -1}\). Also from the definition of \(U_n\) it follows that for any \(x,y\in U_n\), \(d(x,y) \ge 2{\lfloor }\log n{\rfloor }\) and for any \(x\in U_n\), \(d(x,\partial _1 V_n)= \lfloor \log n\rfloor +1\). Now using the crude bound for \(E_n(x,y)\) in (4.6) we have that for any \(x,y\in U_n\)

where \(C_0(m)\) is a constant dependent on m only. Also from (6.6) we have for \(x\in U_n\)

Using these bounds we get from (6.5) that for any \(y\in U_n\)

Also from Lemma 9 we have for \(y,\,y'\in U_n\)

and hence

Now using (6.7) and (6.8) we have for \(y,y'\in U_n\)

We define

Note that \(\gamma (n,m)\rightarrow G(o,o)\) as \(n\rightarrow \infty \). Suppose n is large enough so that \(\gamma (n,m) >0\). Let \((\xi _x)_{x\in U_n}\) be i.i.d. centered Gaussian random variables with variance \(\gamma (n,m)\). Then from (6.9) we have

Therefore by the Sudakov–Fernique inequality [1, Theorem 2.2.3] we have

As \(U_n\subset V_n\), we get

But

Therefore

The result now follows by combining the lower bound (6.10) with the upper bound (6.4). \(\square \)
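For the reader's convenience, the Jensen step behind the upper bound can be sketched as follows. This is the standard argument, written with \(N=|V_n|\) and using only Lemma 12; it may differ in presentation from the displays in the proof. For any \(\beta >0\),

```latex
\begin{aligned}
{\textbf{E}}_n\Big[\max_{x\in V_n}\varphi_x\Big]
&\le \frac{1}{\beta}\log {\textbf{E}}_n\Big[\exp\Big(\beta\max_{x\in V_n}\varphi_x\Big)\Big]
 \le \frac{1}{\beta}\log \sum_{x\in V_n}{\textbf{E}}_n\big[\mathrm{e}^{\beta\varphi_x}\big] \\
&= \frac{1}{\beta}\log \sum_{x\in V_n}\mathrm{e}^{\beta^2 G_n(x,x)/2}
 \le \frac{\log N}{\beta}+\frac{\beta\, G(o,o)}{2}.
\end{aligned}
```

The right-hand side is minimised at \(\beta =\sqrt{2\log N/G(o,o)}\), which gives \({\textbf{E}}_n[\max _{x\in V_n}\varphi _x]\le \sqrt{2G(o,o)\log N}\).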

7 Proof of Theorem 5

In this section we prove Theorem 5. Before the proof, we state two estimates which are crucially used in it. Recall the error term in Vanderbei’s representation (3.10)

Previously in (4.6) we showed that

This bound does not say anything about the dependency of the error on d(x, y). We improve the bound to get a dependence on the distance between the two points and this is crucial for our proof.

Lemma 15

Let \(x,y\in V_n\). For \(m \ge 5\) and any \(0\le J_0<\infty \) we have

where \(C_1(m)\) and \(C_2(m)\) are constants defined in (6.2) and (6.3) respectively.

As the proof requires some lengthy computations we devote Sect. 8 to it. Note that when we take \(J_0=0\), the above bound improves (4.6) and we understand the constants better as well. In the proof of Theorem 5 we shall use \(J_0=0\) and \(J_0=\log (d(x,y))\).

We know that \(G_n(x,x)\rightarrow G(o,o)\) but we do not know if this convergence is uniform as the error term depends on the distance of x from the boundary. However we see that for \(m\ge 10\) we can bound \(G_n(x,x)\) uniformly from below.

Lemma 16

For \(m\ge 10\) there is a positive constant \(C_3(m)\) which converges to 1 as \(m\rightarrow \infty \) such that

Proof

Note that \({\overline{G}}_n(x,x)\ge 1\). Taking \(J_0=0\) in (7.1) we get for \(m\ge 5\)

Therefore

But by definition of \(C_1(m)\) and \(C_2(m)\) in (6.2) and (6.3) respectively, it follows

One can observe that the function \(m \mapsto C_3(m)\) is an increasing function for \(m\ge 5\) and for \(m=9\) and 10 we compute that \(C_3(9)=-0.06 \) and \(C_3(10)= 0.2\). Hence for \(m\ge 10\) we have \(G_n(x,x) \ge C_3(m)>0\). \(\square \)

We now prove Theorem 5. In the proof we again use the comparison theorem of [12].

Proof of Theorem 5

We set

From the proof of Theorem 3 we observe that in this case it suffices to prove

for all \(\theta \).

We define \(R_n(x,y){:}{=} {\textbf{E}}_n[\psi _x \psi _y]\). First we obtain a bound for \(|R_n(x,y)|\). By using Lemma 9, Lemma 15 and (7.2) we obtain

where \(0\le J_0 <\infty \). Taking \(J_0=0\) in (7.4) we observe that for all distinct \(x,y\in V_n\)

It is easy to check that the function

is decreasing for \(m\ge 10\). For \(m=13\) and 14 we evaluate the above expression as 1.13 and 0.89 respectively. Therefore we conclude that for all distinct \(x,\,y\in V_n\) and for \(m\ge 14\)

for some fixed \(\eta \) with \(0<\eta <1\). We are now ready to prove (7.3). Let \(\theta \in {{\,\mathrm{{\mathbb {R}}}\,}}\) be fixed. We will use the following bounds which are obtained from Lemma 3.4 of [12].

Lemma 17

For \(x,y\in V_n\) the following hold.

(1) If \(0 \le R_n(x,y) <1\),

$$\begin{aligned} 0\le \textbf{Cov}\left( {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _x> u_n(\theta )]}, {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _y> u_n(\theta )]}\right) \le C N^{-\frac{2}{1+R_n(x,y)}}. \end{aligned}\tag{7.7}$$

(2) If \(0 \le R_n(x,y) \le 1\),

$$\begin{aligned} 0\le \textbf{Cov}\left( {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _x> u_n(\theta )]}, {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _y> u_n(\theta )]}\right) \le C R_n(x,y) N^{-2}\log N \textrm{e}^{2R_n(x,y)\log N}. \end{aligned}\tag{7.8}$$

(3) If \(-1 \le R_n(x,y) <0\),

$$\begin{aligned} 0\ge \textbf{Cov}\left( {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _x> u_n(\theta )]}, {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _y> u_n(\theta )]}\right) \ge -C N^{-2}. \end{aligned}\tag{7.9}$$

(4) If \(-1\le R_n(x,y) \le 0\),

$$\begin{aligned} 0\ge \textbf{Cov}\left( {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _x> u_n(\theta )]}, {{\,\mathrm{\mathbbm {1}}\,}}_{[\psi _y> u_n(\theta )]}\right) \ge -C |R_n(x,y)| N^{-2}\log N. \end{aligned}\tag{7.10}$$
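The sign statements of Lemma 17 can be illustrated numerically for a single pair of Gaussians. The sketch below assumes that \(\psi _x\) and \(\psi _y\) are standard normal with correlation r (in the role of \(R_n(x,y)\)) and uses a hypothetical fixed level `u` in place of \(u_n(\theta )\). It computes the covariance of the exceedance indicators in two ways: directly from the joint tail probability, and through the classical identity \(\textbf{Cov} = \int _0^r \frac{1}{2\pi \sqrt{1-s^2}}\,\textrm{e}^{-u^2/(1+s)}\,\textrm{d}s\), which makes the sign of the covariance equal to the sign of r. All function names are illustrative and do not come from the article or from [12].

```python
import math


def gaussian_tail(t):
    """P[Z > t] for a standard Gaussian Z."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))


def midpoint(f, a, b, steps=20000):
    """Midpoint rule for int_a^b f; b < a gives the oriented integral."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))


def cov_direct(u, r):
    """Cov(1[X > u], 1[Y > u]) for standard X, Y with correlation |r| < 1.

    Conditions on X = x: Y is then N(r x, 1 - r^2). The outer integral
    is truncated at u + 12, where the Gaussian density is negligible.
    """
    def integrand(x):
        density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
        return density * gaussian_tail((u - r * x) / math.sqrt(1.0 - r * r))

    joint = midpoint(integrand, u, u + 12.0)
    return joint - gaussian_tail(u) ** 2


def cov_via_correlation_integral(u, r):
    """Same covariance, integrating the bivariate density at (u, u) over
    the correlation parameter from 0 to r."""
    def integrand(s):
        return math.exp(-u * u / (1.0 + s)) / (
            2.0 * math.pi * math.sqrt(1.0 - s * s)
        )

    return midpoint(integrand, 0.0, r)
```

For negative r the covariance is negative but never smaller than \(-{\textbf{P}}[\psi _x>u]^2\), since the joint tail probability is nonnegative. This is consistent with the order \(N^{-2}\) in (7.9) if the level is chosen so that the one-point exceedance probability is of order \(N^{-1}\), which is an assumption of this illustration.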

We write

We show that both \(T_1\) and \(T_2\) go to zero as n tends to infinity. First we consider \(T_1\). Let us choose \(\varepsilon \) such that \(0<\varepsilon<(1-\eta )/(1+\eta ) <1\), where \(\eta \) is the same as in (7.6). We now split \(T_1\) as

Using bound (7.7) with (7.6) and Lemma 10 we observe that the first term of (7.11) is bounded by

which goes to zero as n tends to infinity by the choice of \(\varepsilon \).

For the second term in (7.11) we use the bound (7.8) together with the bound (7.4) with \(J_0=\log (d(x,y))\). We get that the second term is bounded by

where

We now use the following fact for \(B_k\), whose proof is given at the end of this section.

Claim 18

For large n and for all \(k\ge {\lfloor }n\varepsilon {\rfloor }\)

Using the above claim we get that the second term of (7.11) is bounded by

Note that to show that the above bound goes to zero as n tends to infinity it is enough to prove \(nB_{{\lfloor }n\varepsilon {\rfloor }} \rightarrow 0\) as \(n\rightarrow \infty \). We have

Here the magnitude of m is used to get that

We observe that the function \(m \mapsto \log (C_1(m)/m)\) is a decreasing function and moreover that \(\log (C_1(9)/9)=-1.08\). Hence (7.12) holds for all \(m\ge 9\). Thus we proved that \(T_1\rightarrow 0\) as \(n\rightarrow \infty \).

Next we consider \(T_2\). For \(T_2\) we use the bounds (7.9), (7.10) together with the bound (7.4) with \(J_0=\log (d(x,y))\) to get

Clearly, the first part of the above bound goes to zero as n tends to infinity. For the second part we use the fact that, for large n, \(B_k \le B_n\) for all \(k\ge n\), which can be proved in the same way as Claim 18. Then the second part is bounded by \(C n(\frac{n}{(m-1)^n} + B_n)\), which goes to zero as \(n\rightarrow \infty \) by the same argument as for \(T_1\). Thus \(T_2\rightarrow 0\) as \(n\rightarrow \infty \). This completes the proof of (7.3). \(\square \)

We now prove Claim 18.

Proof of Claim 18

We define for \(t\ge 0\)

Then

This shows that \(F{'}(t) <0\) for all \(t \ge t_0\) for some \(t_0\). Hence F is decreasing on \([t_0, \infty )\). Therefore \(F(k)= B_k\le F({\lfloor }n\varepsilon {\rfloor })=B_{{\lfloor }n\varepsilon {\rfloor }}\) for all \(k\ge {\lfloor }n\varepsilon {\rfloor }\) for large n. \(\square \)

8 Finer Estimate on the Error and Proof of Lemma 15

In this section we derive a finer estimate on the error \(E_n(x,y)\), which was crucially used to prove Theorem 5. We look at each individual term of the series appearing in \(E_n(x,y)\) and find a better bound than the one obtained before.

Proof of Lemma 15

Recall from (3.10)

Now conditioning on \(\eta _t\) we get

where

We now obtain bounds for \(a_j\). First we bound each of the \(a_j\)’s in terms of the distance of x and y from the boundary of \(V_n\) and then we bound the \(a_j\)’s in terms of the distance d(x, y).

Claim 19

For all \(j\ge 1\) the following two estimates hold.

(a) Bound in terms of distance from boundary.

$$\begin{aligned} a_j \le C_1(m) C_2(m) d(x,\partial _1V_n)d(y,\partial _1V_n) (m-1)^{-d(y,\partial _1V_n)} \left( \frac{C_1(m)}{m}\right) ^{j-1}. \end{aligned}\tag{8.2}$$

(b) Bound in terms of d(x, y).

$$\begin{aligned} a_j \le (2j+1)^{4j+2} 3^{4j^2} \left( \frac{4(m-1)}{m-2}\right) ^{2j+1} \frac{m-1}{m-2}(d(x,y))^{2j+1} (m-1)^{-d(x,y)}. \end{aligned}\tag{8.3}$$

To obtain a finer estimate on \(|E_n(x,y)|\) let us fix \(J_0\in [0,\infty )\), with the convention that the sum \(\sum _{j=1}^{J_0}\) is 0 when \(J_0=0\). Using the bounds (8.2) and (8.3) we have

Here we have used the fact that \(C_1(m)/m <1\) for \(m \ge 5\). Indeed, one can observe that the function \(m \mapsto C_1(m)/m\) is a decreasing function and for \(m=4\) and 5 we compute that \(C_1(4)/4=1.39\) and \(C_1(5)/5 = 0.87\).

Now from (8.1) it follows that

From symmetry we conclude that (7.1) holds. We are now left to prove Claim 19.

Proof of Claim 19

First we prove part (a). The proof involves the successive use of the strong Markov property. We have

Iteratively using the strong Markov property we obtain

Note that for any \(z\in \partial _1 V_n\)

This together with Lemma 14 and Lemma 13 gives the bound (8.2). \(\square \)

Part (b)

We obtain a bound for \(a_j\) in terms of the distance between x and y. Let \(p_k(z, w)= {\textbf{P}}_z[ S_k=w]\) be the k-step transition probability. We proceed in two steps. First we show

and then we express \(\sum \limits _{k=0}^\infty k^{2j+1} p_{k}(x,y)\) in terms of the derivatives of \(g({\textbf{z}})=\Gamma (x,y|{\textbf{z}})=\sum _{k=0}^\infty {\textbf{P}}_x\left[ S_k=y\right] {\textbf{z}}^k\). We explicitly compute these derivatives in Sect. 8.1.

We have from (8.4) that

We now use the bound on the derivatives of g from (8.7) in (8.5) to obtain a bound for \(a_j\) in terms of d(x, y). For that we first write \(k^{\ell }\) in terms of \(\prod _{i=0}^{i_0}(k-i)\), \(i_0=0,\,1,\,\ldots ,\, \ell -1\). We observe that

In general we have that for any \(k,\ell \ge 1\)

where the coefficients \(\alpha ^{(\ell )}_{i}\) for all \(\ell \ge 1\) and \(i=0,1,\ldots , \ell -1\) are given recursively as follows

It follows that for all \(\ell \ge 1\) and \(i=0,\,1,\,\ldots , \ell -1\)

Now from (8.5) we have

Now using (8.6) and (8.7) we obtain

\(\square \)
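The change of basis used in Part (b), from powers \(k^{\ell }\) to products \(\prod _{j=0}^{i}(k-j)\), can be checked mechanically. The sketch below assumes that the coefficients \(\alpha ^{(\ell )}_{i}\) are the Stirling numbers of the second kind \(S(\ell ,i+1)\), generated by the standard recursion \(\alpha ^{(\ell +1)}_{i}=(i+1)\alpha ^{(\ell )}_{i}+\alpha ^{(\ell )}_{i-1}\); this is the classical identity and may be written differently from the recursion displayed in the article. The function names are illustrative.

```python
def falling_factorial_coefficients(ell):
    """Coefficients c[0..ell-1] with k**ell = sum_i c[i] * prod_{j=0}^{i} (k - j).

    Built by the Stirling-type recursion
        c_new[i] = (i + 1) * c[i] + c[i - 1],
    starting from ell = 1, where k = 1 * k.
    """
    coeffs = [1]
    for _ in range(ell - 1):
        nxt = [0] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            nxt[i] += (i + 1) * c  # multiply prod_{j<=i}(k-j) by k, keep degree
            nxt[i + 1] += c        # multiply by k, raise to one more factor
        coeffs = nxt
    return coeffs


def power_from_falling_factorials(k, ell):
    """Evaluate k**ell through the falling-factorial expansion."""
    total = 0
    product = 1
    for i, c in enumerate(falling_factorial_coefficients(ell)):
        product *= k - i  # now equals prod_{j=0}^{i} (k - j)
        total += c * product
    return total
```

The expansion matters because \(\sum _k \prod _{j=0}^{i}(k-j)\,p_k(x,y)\) is exactly the \((i+1)\)-th derivative of \(g\) at \({\textbf{z}}=1\), so moments of the form \(\sum _k k^{\ell }p_k(x,y)\) become finite combinations of derivatives of \(g\).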

8.1 Bound on the Higher Derivatives of \(\Gamma (x,y|{\textbf{z}})\)

In this section we obtain a bound for the higher derivatives of the function \(g({\textbf{z}})=\Gamma (x,y|{\textbf{z}})\) evaluated at the point \({\textbf{z}}=1\). Recall from (4.1) that for \(x,\,y\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\)

We prove the following bound.

Lemma 20

Let \(x,y\in {{\,\mathrm{{\mathbb {T}}}\,}}_m\) and \(d=d(x,y)\). Then for \(k\ge 1\)

and

Proof

We write \(\rho ({\textbf{z}}){:}{=}\sqrt{m^2-4(m-1){\textbf{z}}^2}\). Then

We have

Taking logarithms on both sides of (8.8) and then differentiating we get

Note that here we have used \(\rho {'}({\textbf{z}})=-(4(m-1){\textbf{z}})/\rho ({\textbf{z}})\). So we have

To obtain bounds for the derivatives of g we first bound h and its derivatives evaluated at \({\textbf{z}}=1\). We have

Differentiating h we get

Note that \(\rho (1)=m-2\) and \(\rho {'}(1)= - (4(m-1))/(m-2)\). Using these values we have

To obtain a bound on \(h^{''}(1)\) we write \(h{'}({\textbf{z}})\) as

Now differentiating with respect to \({\textbf{z}}\) we obtain

Putting \({\textbf{z}}=1\) we have

We observe that the term inside the square bracket in each summand is bounded by \((9(4(m-1)))/(m-2)\) and the other terms are the same as the summands in \(h{'}(1)\) except for the last term. So we conclude using (8.9) that

In a similar way we can write \(h^{(k)}(1)\) so that the term inside the square bracket in each summand is bounded by \((3 (2k-1)(4(m-1)))/(m-2)\) and the other terms are the same as the summands in \(h^{(k-1)}(1)\) except the last term. Hence we conclude that

where we obtain the second inequality by using the relation between the arithmetic and the geometric mean. We now prove (8.7) by the method of induction. We have

Assume that (8.7) holds true for \(k=1,\,\ldots ,\,\ell -1\). Now we have

Therefore by induction (8.7) holds for all \(k\ge 1\). \(\square \)
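As a numerical sanity check on the objects of this section, the generating function can be compared with a truncated power series. The sketch below assumes the standard closed form for simple random walk on the m-regular tree (see e.g. [20]), \(\Gamma (x,y|{\textbf{z}})=\frac{2(m-1)}{m-2+\rho ({\textbf{z}})}\left( \frac{m-\rho ({\textbf{z}})}{2(m-1){\textbf{z}}}\right) ^{d}\) with \(\rho ({\textbf{z}})=\sqrt{m^2-4(m-1){\textbf{z}}^2}\); its presentation may differ from (4.1). The series side uses that the distance of the walk from y is a birth-and-death chain, so that \({\textbf{P}}_x[S_k=y]\) is the probability that this chain, started at d, sits at 0 at time k. Function names and the truncation level are illustrative.

```python
import math


def rho(m, z):
    """rho(z) = sqrt(m^2 - 4 (m - 1) z^2)."""
    return math.sqrt(m * m - 4.0 * (m - 1) * z * z)


def gamma_closed_form(m, d, z):
    """Assumed closed form of Gamma(x, y | z) for d = d(x, y), 0 < z <= 1."""
    r = rho(m, z)
    first_passage = (m - r) / (2.0 * (m - 1) * z)
    return 2.0 * (m - 1) / (m - 2 + r) * first_passage ** d


def gamma_series(m, d, z, steps=600):
    """Truncated series sum_{k <= steps} P_x[S_k = y] z^k.

    Tracks the law of the distance chain: from 0 it moves to 1, from
    j >= 1 it moves to j - 1 with probability 1/m and to j + 1 with
    probability (m - 1)/m.
    """
    size = d + steps + 2
    law = [0.0] * size
    law[d] = 1.0
    total = 0.0
    power = 1.0
    for _ in range(steps + 1):
        total += law[0] * power
        new = [0.0] * size
        new[1] += law[0]
        for j in range(1, size - 1):
            if law[j] != 0.0:
                new[j - 1] += law[j] / m
                new[j + 1] += law[j] * (m - 1) / m
        law = new
        power *= z
    return total
```

At \({\textbf{z}}=1\) the closed form reduces to \(\frac{m-1}{m-2}(m-1)^{-d}\), using \(\rho (1)=m-2\), which is the factor appearing on the right-hand side of (8.3).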

References

Adler, R., Taylor, J.E.: Random Fields and Geometry. Springer, New York (2007)

Arratia, R., Goldstein, L., Gordon, L.: Two moments suffice for Poisson approximations: the Chen-Stein method. Ann. Probab. 17(1), 9–25 (1989). https://doi.org/10.1214/aop/1176991491

Barlow, M.T.: Random Walks and Heat Kernels on Graphs. Cambridge University Press, Cambridge, England, UK (2017)

Bolthausen, E., Cipriani, A., Kurt, N.: Exponential decay of covariances for the supercritical membrane model. Commun. Math. Phys. 353(3), 1217–1240 (2017)

Caravenna, F., Deuschel, J.-D.: Pinning and wetting transition for \((1+1)\)-dimensional fields with Laplacian interaction. Ann. Probab. 36(6), 2388–2433 (2008) ISSN 0091-1798

Caravenna, F., Deuschel, J.-D.: Scaling limits of \((1+1)\)-dimensional pinning models with Laplacian interaction. Ann. Probab. 37(3), 903–945 (2009). https://doi.org/10.1214/08-AOP424

Chiarini, A., Cipriani, A., Hazra, R.S.: Extremes of some Gaussian random interfaces. J. Stat. Phys. 165(3), 521–544 (2016)

Cipriani, A., Dan, B., Hazra, R.S.: The scaling limit of the membrane model. Ann. Probab. 47(6), 3963–4001 (2019). https://doi.org/10.1214/19-AOP1351

Georgii, H.O.: Gibbs Measures and Phase Transitions. de Gruyter, Berlin (1988)

Hirschler, T., Woess, W.: Polyharmonic functions for finite graphs and Markov chains. In: Frontiers in Analysis and Probability, pp. 77–90. Springer, New York (2020)

Hirschler, T., Woess, W.: Laplace and bi-Laplace equations for directed networks and Markov chains. In: Expositiones Mathematicae (2021)

Holst, L., Janson, S.: Poisson approximation using the Stein-Chen method and coupling: number of exceedances of Gaussian random variables. Ann. Probab. 18(2), 713–723 (1990)

Kurt, N.: Entropic repulsion for a Gaussian membrane model in the critical and supercritical dimension. PhD thesis, University of Zurich (2008). https://www.zora.uzh.ch/6319/3/DissKurt.pdf

Kurt, N.: Maximum and entropic repulsion for a Gaussian membrane model in the critical dimension. Ann. Probab. 37(2), 687–725 (2009)

Müller, S., Schweiger, F.: Estimates for the Green’s function of the discrete bilaplacian in dimensions 2 and 3. Vietnam J. Math. 47(1), 133–181 (2019)

Sakagawa, H.: Entropic repulsion for a Gaussian lattice field with certain finite range interactions. J. Math. Phys. 44(7), 2939–2951 (2003)

Schweiger, F.: The maximum of the four-dimensional membrane model. Ann. Probab. 48(2), 714–741 (2020). ISSN 0091-1798. https://doi.org/10.1214/19-AOP1372

Sznitman, A.-S.: Topics in Occupation Times and Gaussian Free Fields. Zurich Lectures in Advanced Mathematics. American Mathematical Society (2012). ISBN 9783037191095

Vanderbei, R.: Probabilistic solution of the Dirichlet problem for biharmonic functions in discrete space. Ann. Probab. 12, 311–324 (1984)

Woess, W.: Random Walks on Infinite Graphs and Groups, Volume 138 of Cambridge Tracts in Mathematics. Cambridge University Press, Cambridge (2000). ISBN 0-521-55292-3. https://doi.org/10.1017/CBO9780511470967

Acknowledgements

Part of this work was carried out when BD, RSH and RR were at the Indian Statistical Institute, Kolkata. They thank the institute for its great hospitality. RSH thanks Sayan Das for sharing an estimate for Lemma 10. The authors would like to thank two anonymous referees for several comments and suggestions that considerably improved the article. In particular we would like to thank one referee for suggesting the proof presented in Appendix A.

AC is supported by the grant 613.009.102 of the Netherlands Organisation for Scientific Research (NWO). BD is supported by IISc through a C. V. Raman postdoctoral fellowship and RSH was supported by the DST-MATRICS grant. RR is supported by the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement no. 945045, and by the NWO Gravitation project NETWORKS under grant no. 024.002.003.

Ethics declarations

Availability of Data and Materials

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Additional information

Communicated by Yvan Velenik.

Appendix A: An Alternative Argument for (4.6)

After the revision of the paper it was pointed out to us by an anonymous referee that an alternative proof can be carried out to quantitatively estimate the error one commits by replacing \(G_n\) by G. This proof gives a bound comparable to (4.6) for points that are far away from the boundary. For completeness we would like to outline this proof here.

Proof

The proof is based on a double application of the maximum principle for harmonic functions [3, Theorem 1.37]. Fix \(y\in V_n\). We define the function \(H_y(\cdot )\) as

We then set

We have that

so that \({\Delta H_{y}(\cdot )}\) is harmonic in \(V_{n}\). We can invoke the maximum principle to say that \(\displaystyle \max _{x\in V_{n}}\left| \Delta H_{y}(x)\right| \le 2a.\) Now consider the function

It is clear that \(f(x)=a\) on \(V_{n}^{\textrm{c}}\), and moreover that for \(x\ne o\)

while for \(x=o\) we have \(\Delta f(x)=-2am/(m-2)\le -2a.\) So the function

is subharmonic in \(V_{n}\) and again by the maximum principle

since \(\left| H_{y}(x)\right| \le a=f(x)\) by the definition of a for \(x\in V_{n+1}\setminus V_{n}.\) Running the same argument with \(-f\) in place of f we finally obtain that \(\left| H_{y}(x)\right| \le f(x)\) in \(V_{n}.\)

This implies that for \(x\in V_{n}\)

Since our argument is symmetric in x and y, the result follows. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cipriani, A., Dan, B., Hazra, R.S. et al. Maximum of the Membrane Model on Regular Trees. J Stat Phys 190, 25 (2023). https://doi.org/10.1007/s10955-022-03043-w

DOI: https://doi.org/10.1007/s10955-022-03043-w