Abstract

Given a random walk \((S_n)\) with typical step distributed according to some fixed law and a fixed parameter \(p \in (0,1)\), the associated positively step-reinforced random walk is a discrete-time process which, at each step, performs with probability \(1-p\) the same step as \((S_n)\), while with probability \(p\) it repeats one of its previously performed steps, chosen uniformly at random. The negatively step-reinforced random walk follows the same dynamics, but when a step is repeated its sign is also changed. In this work, we prove functional limit theorems for the triplet formed by a random walk coupled with its positive and negative reinforced versions, when \(p < 1/2\) and the typical step is centred. The limiting process is Gaussian and admits a simple representation in terms of stochastic integrals,

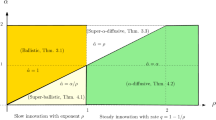

for properly correlated Brownian motions \(B, B^r\), \(B^c\). The processes in the second and third coordinates are called, respectively, the noise reinforced Brownian motion (as named in [1]) and the noise counterbalanced Brownian motion of B. Different couplings are also considered. In some cases these allow us to drop the centredness hypothesis and to identify completely, for all regimes \(p \in (0,1)\), the limiting behaviour of step-reinforced random walks. Our method exploits a martingale approach in conjunction with the martingale functional CLT.


1 Introduction

In short, the purpose of this work is to establish invariance principles for random walks with step reinforcement, a particular class of random walks with memory that has been of increasing interest in recent years. Historically, the so-called elephant random walk (ERW) has been an important and fundamental example of a step-reinforced random walk that was originally introduced in the physics literature by Schütz and Trimper [2] more than 15 years ago. We shall first recall the setting of the ERW in order to motivate the two types of reinforcement that we will work with.

The ERW is a one-dimensional discrete-time nearest neighbour random walk with infinite memory, in allusion to the traditional saying that an elephant never forgets where it has been before. It can be depicted as follows. Fix some \(q \in (0,1)\), commonly referred to as the memory parameter, and suppose that an elephant makes an initial step in \(\{-1,1\}\) at time 1. Then, at each time \(n \ge 2\), the elephant selects uniformly at random a step from its past. With probability q, the elephant repeats the remembered step; with complementary probability \(1-q\), it makes a step in the opposite direction. In particular, in the case \(q= 1/2\), the elephant merely follows the path of a simple symmetric random walk. Notably, the ERW is a time-inhomogeneous Markov chain (although some works in the literature improperly assert its non-Markovian character). The ERW has generated a lot of interest in recent years. A non-exhaustive list of references (with further references therein) is [3,4,5,6,7,8,9,10,11,12]; see also [13,14,15] for variations. A striking feature pointed out in those works is that the long-time behaviour of the ERW exhibits a phase transition at some critical memory parameter. Functional limit theorems for the ERW were already proved by Baur and Bertoin in [3] by means of limit theorems for random urns. Indeed, the key observation is that the dynamics of the ERW can be expressed in terms of Pólya-type urn experiments and fall within the framework of the work of Janson [16]. For a strong invariance principle for the ERW, we refer to Coletti, Gava and Schütz [8].
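For intuition, the ERW dynamics just described can be simulated in a few lines. The Python sketch below is only an illustration. The function name `elephant_random_walk` is hypothetical. We also assume, since the description above does not fix it, that the initial step is +1 or -1 with probability 1/2 each.

```python
import random


def elephant_random_walk(n, q, rng=random):
    """Positions S_1, ..., S_n of an elephant random walk with memory parameter q.

    Assumption (not fixed by the text): the first step is +1 or -1 with
    probability 1/2 each.
    """
    steps = [rng.choice((-1, 1))]              # initial step at time 1
    for _ in range(2, n + 1):
        remembered = rng.choice(steps)         # uniform step from the past
        if rng.random() < q:
            steps.append(remembered)           # repeat it with probability q
        else:
            steps.append(-remembered)          # otherwise step the opposite way
    positions, s = [], 0
    for x in steps:
        s += x
        positions.append(s)
    return positions
```

With `q = 1.0`, every step repeats the first one, so the walk moves ballistically. With `q = 0.5`, each new step is +1 or -1 with probability 1/2, independently of the past, which recovers the simple symmetric random walk.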

The framework of the ERW is however limited, and it is natural to look for generalisations of its dynamics that allow the typical step to have an arbitrary distribution on \(\mathbb {R}\). In this work, we study the more general framework of step-reinforced random walks. We shall discuss two such generalisations, called positive and negative step-reinforced random walks. When the typical step is Rademacher distributed, the former generalises the ERW for \(q \in (1/2, 1)\), while the latter covers the range \(q \in [0,1/2]\). We start by introducing the former. For the rest of the work, X stands for a random variable that we assume belongs to \(L^2(\mathbb {P})\); we denote by \(\sigma ^2\) its variance and by \(\mu \) its law. Moreover, unless specified otherwise, \((S_n)\) will always denote a random walk with typical step distributed as \(\mu \).

The noise reinforced random walk: A (positive) step-reinforced random walk, or noise reinforced random walk, is a generalisation of the ERW in which the typical step of the walk may have an arbitrary distribution on \(\mathbb {R}\), rather than just the Rademacher distribution. The impact of the reinforcement is still described in terms of a fixed parameter \(p \in (0,1)\), which we also refer to as the memory parameter or the reinforcement parameter. We will work with different values of p, but for readability p does not explicitly appear in the notation or terminology used in this work. Informally, the dynamics are as follows. At each discrete time, with probability p, a step-reinforced random walk repeats one of its preceding steps chosen uniformly at random. Otherwise, with complementary probability \(1-p\), it performs an independent increment with a fixed but arbitrary distribution. More precisely, let \(( \Omega , {\mathcal {F}}, \mathbb {P})\) be an underlying probability space and \({X}_1, {X}_2, \dots \) a sequence of i.i.d. copies of the random variable X with law \(\mu \). We define \({\hat{X}}_1, {\hat{X}}_2, \dots \) recursively as follows. First, let \(( \varepsilon _i : i \ge 2)\) be an independent sequence of Bernoulli random variables with parameter \(p \in (0,1)\). Also consider an independent sequence \((U[i] : i \ge 1)\), where each U[i] is uniformly distributed on \(\{1, \dots , i \}\). We set first \({\hat{X}}_1= {X}_1\), and next, for \(i \ge 2\), we let

$$\begin{aligned} \hat{X}_i = \begin{cases} X_i & \text {if } \varepsilon _i = 0, \\ \hat{X}_{U[i-1]} & \text {if } \varepsilon _i = 1. \end{cases} \end{aligned}$$

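Finally, the sequence of partial sums

$$\begin{aligned} \hat{S}_n = \hat{X}_1 + \dots + \hat{X}_n, \quad n \ge 1, \end{aligned}$$

is referred to as a positive step-reinforced random walk. The definition of the sequence \(( \hat{X}_i)\) gives, for every \(n \ge 1\),

$$\begin{aligned} \mathbb {P}\big( \hat{X}_{n+1} \in \cdot \mid \hat{X}_1, \dots , \hat{X}_n \big) = (1-p)\, \mu + \frac{p}{n} \sum _{i=1}^{n} \delta _{\hat{X}_i}, \end{aligned}$$

which implies that for any bounded measurable \(f: \mathbb {R}\rightarrow \mathbb {R}^+\),

$$\begin{aligned} \mathbb {E}\big( f(\hat{X}_{n+1}) \big) = (1-p)\, \mathbb {E}\big( f(X) \big) + \frac{p}{n} \sum _{i=1}^{n} \mathbb {E}\big( f(\hat{X}_i) \big), \end{aligned}$$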

and it follows by induction that each \(\hat{X}_n\) has law \(\mu \). Beware however that the sequence \((\hat{X}_i)\) is not stationary. Notice that if \((\hat{S}_n)\) is not centred, it is often fruitful to reduce our analysis to the centred case by considering \((\hat{S}_n - n \mathbb {E}(X))\), which is a centred noise reinforced random walk with typical step distributed as \(X - \mathbb {E}(X)\). Observe that in the degenerate case \(p=1\), the dynamics of the positive step-reinforced random walk become essentially deterministic. Indeed when \(p=1\) we have \(\hat{S}_n=nX_1\) for all \(n \ge 1\), in particular the only remaining randomness for this process stems from the random variable \(X_1\).
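The recursive construction of \((\hat{X}_i)\) translates directly into a simulation. The Python sketch below is an illustration under our own conventions: a hypothetical function name, 0-based list indices and a user-supplied pseudo-random generator `rng`. Choosing uniformly among the steps already performed plays the role of the uniform index, and the test `rng.random() < p` plays the role of the Bernoulli variable.

```python
import random


def positive_step_reinforced(xs, p, rng=random):
    """Partial sums hat S_1, ..., hat S_n of the positive step-reinforced version of xs.

    xs : the i.i.d. sample X_1, ..., X_n (a list of numbers)
    p  : probability of a repetition event
    """
    hat_x = [xs[0]]                            # hat X_1 = X_1
    for i in range(1, len(xs)):                # 0-based index i is time i + 1
        if rng.random() < p:                   # repetition event
            hat_x.append(rng.choice(hat_x))    # uniform choice among hat X_1, ..., hat X_i
        else:                                  # innovation event
            hat_x.append(xs[i])                # fresh step X_{i+1}
    sums, total = [], 0
    for x in hat_x:
        total += x
        sums.append(total)
    return sums
```

For \(p=1\), the function returns \(nX_1\), as in the degenerate case above. For \(p=0\), it returns the ordinary random walk \((S_n)\). A Monte Carlo estimate of the law of a late step \(\hat{X}_n\) matches \(\mu \), in line with the induction argument.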

In this setting, when \(\mu \) is the Rademacher distribution, Kürsten [12] (see also [17]) pointed out that \(\hat{S}= ( \hat{S}_n)_{n \ge 1}\) is a version of the elephant random walk with memory parameter \(q=(p+1)/2 \in (1/2,1)\) in the present notation. The remaining range of the memory parameter can be obtained by a simple modification that we will address when we introduce random walks with negatively reinforced steps. When \(\mu \) has a symmetric stable distribution, \(\hat{S}\) is the so-called shark random swim which has been studied in depth by Businger [18]. More general versions when the distribution \(\mu \) is infinitely divisible have been considered by Bertoin in [19], and we will briefly comment on this setting in a moment. Finally, when we replace the sequence of Bernoulli random variables \((\epsilon _n)\) by a deterministic sequence \((r_n)\) with \(r_n \in \{ 0,1 \}\), the scaling exponents of the corresponding step reinforced random walks have been studied by Bertoin in [20].

In stark contrast to the ERW, the literature available on general step-reinforced random walks remains rather sparse. Quite recently, Bertoin [1] established an invariance principle for the step-reinforced random walk in the diffusive regime \(p \in (0,1/2)\). Bertoin's work concerned a rather simple real-valued and centred Gaussian process \(\hat{B}=( \hat{B}(t))_{t \ge 0}\) with covariance function given by

$$\begin{aligned} \mathbb {E}\big( \hat{B}(s) \hat{B}(t) \big) = \frac{(t \vee s)^{p}\, (t \wedge s)^{1-p}}{1-2p}, \quad s,t \ge 0. \end{aligned}$$(1.1)

This process has notably appeared as the scaling limit of the ERW and of other Pólya urn related processes in diffusive regimes; see [3, 6, 7] for higher-dimensional generalisations, and [21]. In [1], the process displayed in (1.1) is referred to as a noise reinforced Brownian motion. It belongs to a larger class of reinforced processes, called noise reinforced Lévy processes, recently introduced by Bertoin in [19]. Within the framework of noise reinforced Lévy processes, the noise reinforced Brownian motion plays the same role as the standard Brownian motion in the context of Lévy processes. Moreover, just as the standard Brownian motion B corresponds to the integral of a white noise, \(\hat{B}\) can be thought of as the integral of a reinforced version of the white noise, hence the name. More precisely, it readily follows from (1.1) that the law of \(\hat{B}\) admits the integral representation

$$\begin{aligned} \hat{B}(t) = t^{p} \int _0^t s^{-p}\, \mathrm {d}B^r_s, \quad t \ge 0, \end{aligned}$$

where \(B^r=(B^r_s)_{ s \ge 0}\) is a standard Brownian motion. Equivalently, \(\hat{B}=( \hat{B}(t))_{t \ge 0}\) has the same law as

$$\begin{aligned} \Big( \frac{t^{p}}{\sqrt{1-2p}}\, B^r\big( t^{1-2p} \big) \Big)_{t \ge 0}. \end{aligned}$$

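Some further properties of the noise reinforced Brownian motion can be found in [1], where the following functional limit theorem [1, Theorem 3.3] has been established. Let \(p \in (0,1/2)\) and suppose that \(X \in L^2(\mathbb {P})\). Then, as n tends to infinity, the scaled sequence converges weakly in the sense of Skorokhod:

$$\begin{aligned} \Big( \frac{\hat{S}_{\lfloor nt \rfloor } - \lfloor nt \rfloor \mathbb {E}(X)}{\sqrt{n}} \Big)_{t \ge 0} \Longrightarrow \big( \sigma \hat{B}(t) \big)_{t \ge 0}, \end{aligned}$$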

where \((\hat{B}(t))_{t \ge 0}\) is a noise reinforced Brownian motion.
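A convenient way to sample \(\hat{B}\) at finitely many times is the time change \(\hat{B}(t) = t^{p}(1-2p)^{-1/2} B(t^{1-2p})\), which holds in law for a standard Brownian motion B. It reproduces the covariance \((t\vee s)^p (t \wedge s)^{1-p}/(1-2p)\). The Python sketch below samples \(\hat{B}\) exactly on a grid through independent Gaussian increments and compares an empirical covariance with the closed form. It takes \(\sigma = 1\) and \(p \in [0,1/2)\), and the helper names are hypothetical.

```python
import math
import random


def sample_nrbm(times, p, rng=random):
    """Sample (hat B(t))_{t in times} via hat B(t) = t^p B(t^(1-2p)) / sqrt(1 - 2p).

    times : increasing positive times; p : reinforcement parameter in [0, 1/2)
    """
    values, b, clock = [], 0.0, 0.0
    for t in times:
        new_clock = t ** (1 - 2 * p)                             # time change t -> t^(1-2p)
        b += math.sqrt(new_clock - clock) * rng.gauss(0.0, 1.0)  # exact Brownian increment
        clock = new_clock
        values.append(t ** p * b / math.sqrt(1 - 2 * p))
    return values


def nrbm_covariance(s, t, p):
    """Closed-form covariance of hat B: max(s,t)^p * min(s,t)^(1-p) / (1 - 2p)."""
    lo, hi = min(s, t), max(s, t)
    return hi ** p * lo ** (1 - p) / (1 - 2 * p)
```

Because the increments are drawn exactly, no discretisation error enters. The only error in the empirical covariance is Monte Carlo noise.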

Our work generalises this result, but our approach differs from [1]. We work with a discrete martingale introduced by Bercu [4] for the ERW and later generalised in [22] for step-reinforced random walks. This martingale is a discrete-time stochastic process of the form \(\widehat{a}_n \hat{S}_n\), where \((\widehat{a}_n)_{n \ge 1}\) is a properly defined sequence of positive real numbers of order \(n^{-p}\). As we shall see, investigating this martingale, and in particular its quadratic variation process, in conjunction with the functional martingale CLT [23], yields an alternative proof of Theorem 3.3 in [1].

The counterbalanced random walk: Next we turn our attention to the second process of interest, called the counterbalanced random walk or negative step-reinforced random walk, introduced recently by Bertoin in [24]. Beware that p in our work always corresponds to the probability of a repetition event, while in [24] this happens with probability \(1-p\). Similarly, we will consider a sequence of i.i.d. random variables \((X_n)_{n \in \mathbb {N}}\) with distribution \(\mu \) on \(\mathbb {R}\) and at each time step, the step performed by the walker will be, with probability \(1-p \in (0,1)\), an independent step \(X_n\) from the previous ones while with complementary probability p, the new step is one of the previously performed steps, chosen uniformly at random, with its sign changed. This last action will be referred to as a counterbalance of the uniformly chosen step. In particular, when \(\mu \) is the Rademacher distribution, we obtain an ERW with parameter \((1-p)/2 \in [0,1/2]\).

Formally, recall that \({X}_1, {X}_2, \dots \) is a sequence of i.i.d. copies of X and \(( \varepsilon _i : i \ge 2)\) is an independent sequence of Bernoulli random variables with parameter \(p \in (0,1)\). We define the sequence of increments \({\check{X}}_1, {\check{X}}_2, \dots \) recursively as follows (beware of the difference of notation between \(\hat{X}\) and \(\check{X}\)). We set first \({\check{X}}_1= {X}_1\), and next, for \(i \ge 2\), we let

$$\begin{aligned} \check{X}_i = \begin{cases} X_i & \text {if } \varepsilon _i = 0, \\ -\check{X}_{U[i-1]} & \text {if } \varepsilon _i = 1, \end{cases} \end{aligned}$$

where \(U[i-1]\) denotes an independent uniform random variable in \(\{ 1, \dots , i-1\}\). Finally, the sequence of partial sums

$$\begin{aligned} \check{S}_n = \check{X}_1 + \dots + \check{X}_n, \quad n \ge 1, \end{aligned}$$

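is referred to as a counterbalanced random walk (or random walk with negatively reinforced steps). Notice also that, in contrast with the positive step-reinforced random walk, we still get a stochastic process when \(p=1\): it consists of consecutive counterbalancings of the initial step \(X_1\). For \(p=0\) we just get the dynamics of a random walk. For the positive step-reinforced random walk, we already pointed out that the steps are identically distributed, and hence centred as soon as X is centred. The negatively step-reinforced random walk behaves differently. Writing \(m = \mathbb {E}(X)\), we have

$$\begin{aligned} \mathbb {E}\big( \check{X}_{n+1} \mid \check{X}_1, \dots , \check{X}_n \big) = (1-p)\, m - \frac{p}{n}\, \check{S}_n, \quad n \ge 1, \end{aligned}$$

and therefore

$$\begin{aligned} \mathbb {E}(\check{S}_{n+1}) = \Big( 1 - \frac{p}{n} \Big) \mathbb {E}(\check{S}_n) + (1-p)\, m, \quad n \ge 1, \end{aligned}$$(1.3)

with initial condition \(\mathbb {E}({\check{S}_1})=\mathbb {E}(\check{X}_1) = m\). As was noted in [24], it follows from the previous recurrence that

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\mathbb {E}(\check{S}_n)}{n} = \frac{1-p}{1+p}\, m, \end{aligned}$$(1.4)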

Note that the process \((\check{S}_n)\) is also centred if X is centred. Observe, however, that in stark contrast to the positive step-reinforced random walk, we cannot assume without loss of generality that the typical step is centred. As soon as \(m \ne 0\), the random sign swaps in the negative reinforcement algorithm make the map \(n \mapsto \mathbb {E}(\check{X}_n)\) non-constant. Consequently, the centred process \((\check{S}_n-\mathbb {E}(\check{S}_n))\) is no longer a counterbalanced random walk.
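The counterbalanced dynamics differ from the positive ones only by the sign flip. The Python sketch below uses hypothetical names and 0-based indices. It simulates \(\check{S}\) and computes \(\mathbb {E}(\check{S}_n)\) exactly from the recurrence \(\mathbb {E}(\check{S}_{n+1}) = (1-p/n)\,\mathbb {E}(\check{S}_n) + (1-p)m\) with \(\mathbb {E}(\check{S}_1) = m\). That recurrence follows from \(\mathbb {E}(\check{X}_{n+1} \mid \check{X}_1, \dots , \check{X}_n) = (1-p)m - p\check{S}_n/n\). The exact means make the non-constant drift visible, and their ratio to n approaches \((1-p)m/(1+p)\).

```python
import random


def counterbalanced_walk(xs, p, rng=random):
    """Partial sums check S_1, ..., check S_n of the counterbalanced version of xs."""
    check_x = [xs[0]]                          # check X_1 = X_1
    for i in range(1, len(xs)):                # 0-based index i is time i + 1
        if rng.random() < p:                   # counterbalance a uniformly chosen past step
            check_x.append(-rng.choice(check_x))
        else:                                  # fresh step X_{i+1}
            check_x.append(xs[i])
    sums, total = [], 0
    for x in check_x:
        total += x
        sums.append(total)
    return sums


def expected_partial_sums(n, p, m):
    """Exact E(check S_1), ..., E(check S_n) from the mean recurrence, E(check S_1) = m."""
    e = [m]
    for k in range(1, n):
        e.append((1 - p / k) * e[-1] + (1 - p) * m)
    return e
```

For example, `expected_partial_sums(100000, 0.3, 1.0)[-1] / 100000` is close to \(0.7/1.3\). A Monte Carlo average of `counterbalanced_walk` endpoints agrees with the exact mean up to sampling error.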

Turning our attention to its asymptotic behaviour, Proposition 1.1 in [24] shows that the counterbalanced random walk \(\check{S}_n\) is ballistic. More precisely, denote by \(m=\mathbb {E}(X)\) the mean of the typical step X. Then, for all \(p \in [0,1]\), the process \((\check{S}_n)\) satisfies the law of large numbers

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\check{S}_n}{n} = \frac{1-p}{1+p}\, m \quad \text { in probability}. \end{aligned}$$

Moreover, Theorem 1.2 in [24] shows that if we also assume that the second moment \(m_2 = \mathbb {E}(X^2)\) is finite, then the fluctuations are Gaussian for all choices \(p \in [0,1)\):

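In particular, when X is centred, as will be our case, we simply get

$$\begin{aligned} \frac{\check{S}_n}{\sqrt{n}} \Longrightarrow \mathcal {N}\Big( 0, \frac{\sigma ^2}{1+2p} \Big). \end{aligned}$$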

On the other hand, consider the purely counterbalanced case \(p =1\) under the additional assumption that X follows the Rademacher distribution. Then

$$\begin{aligned} \frac{\check{S}_n}{\sqrt{n}} \Longrightarrow \mathcal {N}\Big( 0, \frac{1}{3} \Big). \end{aligned}$$

The proofs of these results rely on remarkable connections with random recursive trees. These will not be needed in the present work, but we encourage the interested reader to consult [24] for more details. In this article, we establish a functional version of the asymptotic normality mentioned above under the additional assumption that \(m=0\), i.e. that the typical step is centred. We recall that this assumption cannot be made without loss of generality.
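Second moments can also be computed exactly. Take a centred step of variance \(\sigma ^2\). The conditional means of the next step are \(p\hat{S}_n/n\) and \(-p\check{S}_n/n\). Moreover, \(\hat{X}_{n+1}\) and \(\check{X}_{n+1}\) both have second moment \(\sigma ^2\). Conditioning on the first n steps then gives, by a short computation of ours that is not taken from the text, \(\mathbb {E}(\hat{S}_{n+1}^2) = (1+2p/n)\,\mathbb {E}(\hat{S}_n^2)+\sigma ^2\) and \(\mathbb {E}(\check{S}_{n+1}^2) = (1-2p/n)\,\mathbb {E}(\check{S}_n^2)+\sigma ^2\). Hence \(\mathbb {E}(\check{S}_n^2) \sim \sigma ^2 n/(1+2p)\) for \(p\in [0,1]\), and \(\mathbb {E}(\hat{S}_n^2)\sim \sigma ^2 n/(1-2p)\) for \(p<1/2\). This is consistent with a limiting variance \(\sigma ^2/(1+2p)\) for \(\check{S}_n/\sqrt{n}\), including the value 1/3 when \(p=1\) with Rademacher steps. The Python sketch below uses hypothetical names. It iterates both recurrences and checks the counterbalanced one against a Monte Carlo estimate with Rademacher steps.

```python
import random


def second_moments(n, p, sigma2=1.0):
    """Exact E(hat S_n^2) and E(check S_n^2) for a centred step of variance sigma2.

    Recurrences (our own derivation, not quoted from the text):
      E(hat S_{k+1}^2)   = (1 + 2p/k) E(hat S_k^2)   + sigma2
      E(check S_{k+1}^2) = (1 - 2p/k) E(check S_k^2) + sigma2
    started from E(hat S_1^2) = E(check S_1^2) = sigma2.
    """
    v_hat = v_check = sigma2
    for k in range(1, n):
        v_hat = (1 + 2 * p / k) * v_hat + sigma2
        v_check = (1 - 2 * p / k) * v_check + sigma2
    return v_hat, v_check


def counterbalanced_endpoint(n, p, rng=random):
    """check S_n for Rademacher steps, counterbalancing with probability p."""
    steps = [rng.choice((-1, 1))]
    for _ in range(1, n):
        if rng.random() < p:
            steps.append(-rng.choice(steps))   # counterbalance a uniform past step
        else:
            steps.append(rng.choice((-1, 1)))  # fresh Rademacher step
    return sum(steps)
```

For \(p=1\), the counterbalanced recurrence gives \(\mathbb {E}(\check{S}_2^2)=0\), as it should, since the second step then cancels the first.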

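In the same spirit as in the noise-reinforced setting, we call noise counterbalanced Brownian motion of parameter \(p \in [0,1)\) a centred Gaussian process \(\check{B}\) with covariance given by

$$\begin{aligned} \mathbb {E}\big( \check{B}(s)\check{B}(t) \big) = \frac{(t \wedge s)^{1+p}\,(t \vee s)^{-p}}{1+2p}, \quad s,t > 0. \end{aligned}$$

It follows that the law of \(\check{B}\) admits the integral representation

$$\begin{aligned} \check{B}(t) = t^{-p} \int _0^t s^{p}\, \mathrm {d}B^c(s), \quad t \ge 0, \end{aligned}$$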

in terms of a standard Brownian motion \(B^c=(B^c(s))_{s \ge 0}\). Let us now state the main results of this work.

Law of large numbers for step-reinforced random walks: In order to establish our invariance principles, we shall need to investigate the asymptotic behaviour of step-reinforced random walks. In this direction, we establish in Section 2 the following result:

Theorem 1.1

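(Law of large numbers) For any \(p \in (0,1)\), writing \(m = \mathbb {E}(X)\), we have the \(L^{2}(\mathbb {P})\) and almost sure convergences

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\hat{S}_n}{n} = m, \end{aligned}$$(1.6)

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\check{S}_n}{n} = \frac{1-p}{1+p}\, m. \end{aligned}$$(1.7)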

Moreover, if \(p = 1\), (1.7) still holds for the counterbalanced random walk.

Note that for \(p = 1\) the result clearly fails in the noise-reinforced case, since then \(\hat{S}_n = n X_1\) for \(n \ge 1\). In the counterbalanced case, we can write \(\check{S}_n = X_1 \check{S}_n'\) for \(n \ge 1\), where \(\check{S}'\) is a counterbalanced random walk with the same parameter and with typical step distributed as \(\delta _1\). Theorem 1.1 will be proved by means of two remarkable martingales, denoted throughout this work by \(\hat{M}\) and \(\check{M}\), associated respectively with noise reinforced and counterbalanced random walks. These will be introduced and studied in Sect. 2 and will play a crucial role in this work. We stress that the second convergence in Theorem 1.1 was already established in [24], in probability, by different methods.
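As a numerical illustration of Theorem 1.1, and not a proof, the Python sketch below runs one long trajectory of each walk. It checks that \(\hat{S}_n/n\) is close to m and \(\check{S}_n/n\) is close to \((1-p)m/(1+p)\). The two walks are driven by independent randomness here, not by the coupling introduced below. The names, the step law and the tolerances are our own illustrative choices.

```python
import random


def reinforced_endpoints(n, p, draw, rng=random):
    """Return (hat S_n, check S_n) from two independent runs of length n.

    draw : hypothetical callable returning one sample of X from the generator rng
    """
    hat, check = [draw(rng)], [draw(rng)]
    for _ in range(1, n):
        if rng.random() < p:
            hat.append(rng.choice(hat))          # positive reinforcement
        else:
            hat.append(draw(rng))
        if rng.random() < p:
            check.append(-rng.choice(check))     # negative reinforcement
        else:
            check.append(draw(rng))
    return sum(hat), sum(check)
```

With steps uniform on \(\{0,1\}\) (so \(m=1/2\)), \(p=0.3\) and \(n=20000\), both ratios land within a few thousandths of their limits. For \(p>1/2\), the convergence of \(\hat{S}_n/n\) is noticeably slower, since the fluctuations are then of order \(n^{p}\).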

The invariance principles: Before stating the functional versions of the results we just mentioned, we describe a joint construction. Take a sample of i.i.d. random variables \((X_n)\) with law \(\mu \), together with an additional independent collection \((\epsilon _i)\), (U[i]) of Bernoulli and uniform random variables respectively, as before. From the same sample, simultaneously with the associated random walk \((S_n)\), we can construct the processes \((\hat{S}_n)\) and \((\check{S}_n)\). We refer to these respectively as the positive step-reinforced version and the negative step-reinforced version of \(({S}_n)\). It is then natural to study the dynamics of the triplet \((S_n, \hat{S}_n, \check{S}_n)\) instead of working individually with \((\hat{S}_n)\) and \((\check{S}_n)\). When considering such a triplet, it will always be implicitly assumed that \((\hat{S}_n), (\check{S}_n)\) have been constructed in this special way from \((S_n)\). In particular, the same sequences of uniform and Bernoulli random variables are used to define both reinforced versions. Now we have all the ingredients to state our first main result:

Theorem 1.2

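Fix \(p \in [0,1/2)\) and consider the triplet \((S_n, \hat{S}_n, \check{S}_n)\) consisting of the random walk \((S_n)\) with its reinforced version and its counterbalanced version of parameter p. Assume further that X is centred. Then, as n tends to infinity, the following weak convergence holds in the sense of Skorokhod:

$$\begin{aligned} \Big( \frac{S_{\lfloor nt \rfloor }}{\sqrt{n}}, \frac{\hat{S}_{\lfloor nt \rfloor }}{\sqrt{n}}, \frac{\check{S}_{\lfloor nt \rfloor }}{\sqrt{n}} \Big)_{t \ge 0} \Longrightarrow \big( \sigma B(t), \sigma \hat{B}(t), \sigma \check{B}(t) \big)_{t \ge 0}, \end{aligned}$$(1.8)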

where B, \(\hat{B}\), \(\check{B}\) denote respectively a standard BM, a noise reinforced BM and a noise counterbalanced BM, with cross-covariances \(\mathbb {E}(B(s) \check{B}(t)) = t^{-p}(t \wedge s)^{p+1}(1-p)/(1+p)\), \(\mathbb {E}( B(s) \hat{B}(t)) = t^p (t \wedge s)^{1-p}\) and \(\mathbb {E}( \hat{B}(t) \check{B}(s) ) = t^{p}s^{-p} (t \wedge s) (1-p)/(1+p)\).

Notice that in the case \(p=0\), i.e. when no reinforcement events occur, this is just Donsker's invariance principle. Indeed, \((\check{S}_n)\) and \((\hat{S}_n)\) are then just the random walk \((S_n)\), and \(\hat{B}\), \(\check{B}\) are just B. Hence, from now on we will assume that \(p >0\). The limit process admits a simple representation in terms of stochastic integrals: it has the same law as

$$\begin{aligned} \Big( B(t),\; t^{p} \int _0^t s^{-p}\, \mathrm {d}B^{r}(s),\; t^{-p} \int _0^t s^{p}\, \mathrm {d}B^{c}(s) \Big)_{t \ge 0}, \end{aligned}$$(1.9)

where \(B=(B(t))_{t \ge 0}\), \(B^r = (B^r(t))_{t \ge 0}\), \(B^c = (B^c(t))_{t \ge 0}\) denote three standard Brownian motions with covariance structure \( \mathbb {E}( B(s) B^r(t)) = (1-p) (t \wedge s)\), \(\mathbb {E}( B(s) B^c(t)) = (1-p)(t \wedge s)\), \(\mathbb {E}( B^r(s) B^c(t)) = (t \wedge s)(1-p)/(1+p)\).
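The cross-covariances listed in Theorem 1.2 can be checked numerically from the integral representation. The check uses the rule \(\mathbb {E}[(\int _0^s f\,\mathrm {d}W)(\int _0^t g\,\mathrm {d}W')] = \rho \int _0^{s\wedge t} f(u) g(u)\,\mathrm {d}u\), valid for Brownian motions W, W' with \(\mathbb {E}(W(s)W'(t)) = \rho (s\wedge t)\). The Python sketch below uses a plain midpoint rule. The function names and the test values of p, s, t are illustrative choices, not taken from the text.

```python
def cross_cov(f, g, rho, s, t, n=20000):
    """rho * integral_0^{min(s,t)} f(u) g(u) du, computed with the midpoint rule."""
    upper = min(s, t)
    h = upper / n
    return rho * h * sum(f((k + 0.5) * h) * g((k + 0.5) * h) for k in range(n))


def limit_covariances(s, t, p):
    """Numerical (E B(s) hat B(t), E B(s) check B(t), E hat B(t) check B(s))."""
    b_hat = t ** p * cross_cov(lambda u: 1.0, lambda u: u ** -p, 1 - p, s, t)
    b_check = t ** -p * cross_cov(lambda u: 1.0, lambda u: u ** p, 1 - p, s, t)
    hat_check = t ** p * s ** -p * cross_cov(
        lambda u: u ** -p, lambda u: u ** p, (1 - p) / (1 + p), t, s)
    return b_hat, b_check, hat_check
```

The integrand \(u^{-p}\) is singular at 0 but integrable. The midpoint rule never evaluates it at 0, and its error stays well below the test tolerance.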

The restriction \(p \in (0,1/2)\) on the parameter comes from the noise reinforced random walk: as we will see, only for such parameters does its functional limit hold with this scaling. The centredness hypothesis, on the other hand, is a restriction coming from the counterbalanced random walk. We now present some variants under less restrictive hypotheses, which hold as long as we no longer consider the full triplet. They allow us to drop some of the conditions we just mentioned, and their proofs will be embedded in the proof of Theorem 1.2. We start by removing the centredness hypothesis when working only with the pair \((S_n, \hat{S}_n)\) in the diffusive regime \(p \in [0,1/2)\).

Theorem 1.3

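Let \(p \in [0,1/2)\) and suppose that \(X \in L^2(\mathbb {P})\). Let \((S_n)\) be a random walk with typical step distributed as X and denote by \((\hat{S}_n)\) its positive step-reinforced version. Then, as n tends to infinity, the scaled sequence converges jointly and weakly, in the sense of Skorokhod, towards a Gaussian process:

$$\begin{aligned} \Big( \frac{S_{\lfloor nt \rfloor } - \lfloor nt \rfloor \mathbb {E}(X)}{\sqrt{n}}, \frac{\hat{S}_{\lfloor nt \rfloor } - \lfloor nt \rfloor \mathbb {E}(X)}{\sqrt{n}} \Big)_{t \ge 0} \Longrightarrow \big( \sigma B(t), \sigma \hat{B}(t) \big)_{t \ge 0}, \end{aligned}$$(1.10)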

where B is a Brownian motion, \(\hat{B}\) is a noise reinforced Brownian motion with covariance \(\mathbb {E}[B(s) \hat{B}(t)] = t^p (t \wedge s)^{1-p}\).

It follows that the limit process in (1.10) admits the integral representation

$$\begin{aligned} \Big( B(t),\; t^{p} \int _0^t s^{-p}\, \mathrm {d}B^{r}(s) \Big)_{t \ge 0}, \end{aligned}$$

where \(B=(B(t))_{t \ge 0}\) and \(B^r = (B^r(t))_{t \ge 0}\) denote two standard Brownian motions with \( \mathbb {E}( B(t) B^r(s)) = (1-p) (t\wedge s)\). This result extends Theorem 3.3 in [1] to the pair \((S , \hat{S})\). The factor \(1-p\) in the correlation can be interpreted in terms of the definition of the noise reinforced random walk: at each discrete time step, with probability \(1-p\), the processes \(\hat{S}\) and S share the same step \(X_n\).
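The coupling behind the triplet \((S_n, \hat{S}_n, \check{S}_n)\) can be sketched as follows. One i.i.d. sample is used for all three walks, together with a single Bernoulli variable and a single uniform index per time step, shared by both reinforced versions. On an innovation event, all three walks share the fresh step \(X_n\), which is the source of the factor \(1-p\) above. On a repetition event, both reinforced walks look at the same past index, so \(\check{X}_i^2 = \hat{X}_i^2\) for every i. The Python function below is a hypothetical illustration with 0-based indices.

```python
import random
from itertools import accumulate


def triplet(xs, p, rng=random):
    """(S, hat S, check S) built from one sample xs and shared (epsilon_i, U[i]).

    Returns the three lists of partial sums, then the hat and check steps.
    """
    hat, check = [xs[0]], [xs[0]]              # hat X_1 = check X_1 = X_1
    for i in range(1, len(xs)):                # 0-based index i is time i + 1
        eps = rng.random() < p                 # shared Bernoulli(p) variable
        u = rng.randrange(i)                   # shared uniform index among the i past steps
        if eps:
            hat.append(hat[u])                 # positive reinforcement: repeat the step
            check.append(-check[u])            # negative reinforcement: repeat with sign flip
        else:
            hat.append(xs[i])                  # all three walks share the fresh step
            check.append(xs[i])
    sums = (list(accumulate(xs)), list(accumulate(hat)), list(accumulate(check)))
    return sums, hat, check
```

For \(p=0\), the three walks coincide, which matches the remark that Theorem 1.2 reduces to Donsker's invariance principle in that case.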

Turning our attention to the counterbalanced random walk, when working only with the pair \((S_n, \check{S}_n)\) we can extend the convergence to all \(p \in [0,1)\). This is the content of the following result:

Theorem 1.4

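Let \(p\in [0,1)\) and suppose that \(X \in L^2(\mathbb {P})\) is centred. Let \((S_n)\) be a random walk with typical step distributed as X and \((\check{S}_n)\) its counterbalanced version of parameter p. Then, as n tends to infinity, the sequence of processes converges weakly in the sense of Skorokhod:

$$\begin{aligned} \Big( \frac{S_{\lfloor nt \rfloor }}{\sqrt{n}}, \frac{\check{S}_{\lfloor nt \rfloor }}{\sqrt{n}} \Big)_{t \ge 0} \Longrightarrow \big( \sigma B(t), \sigma \check{B}(t) \big)_{t \ge 0}, \end{aligned}$$(1.11)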

where B is a Brownian motion and \(\check{B}\) is a noise counterbalanced Brownian motion with covariance \(\mathbb {E}[B(s) \check{B}(t)] = t^{-p}(t \wedge s)^{p+1}(1-p)/(1+p)\) and \(\sigma ^2 = \mathbb {E}[X^2]\). If \(p=1\) and X follows the Rademacher distribution, the result still holds and in particular B and \(\check{B}\) are independent.

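Moreover, the limit process in (1.11) admits the simple integral representation

$$\begin{aligned} \Big( B(t),\; t^{-p} \int _0^t s^{p}\, \mathrm {d}B^{c}(s) \Big)_{t \ge 0}, \end{aligned}$$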

where \(B=(B(t))_{t \ge 0}\) and \(B^c = (B^c(t))_{t \ge 0}\) denote two standard Brownian motions with \( \mathbb {E}( B(s) B^c(t)) = (1-p) (t \wedge s)\).

Finally, we return to the noise reinforced setting at the critical parameter \(p = 1/2\). Our method also yields an invariance principle for the step-reinforced random walk at criticality. In this case, however, we do not establish a joint convergence, as the required scalings are no longer compatible.

Theorem 1.5

Let \(p=1/2\) and suppose that \(X \in L^2(\mathbb {P})\). Then, we have the weak convergence of the sequence of processes in the sense of Skorokhod as n tends to infinity

where \(B=(B(t))_{t \ge 0}\) denotes a standard Brownian motion.

Our proofs rely on a version of the martingale functional central limit theorem (abbreviated MFCLT), which we state for the reader's convenience. For more general versions, we refer to Chapter VIII in [25]. If \(M=(M^{1}, \dots , M^{d} )\) is a real rcll d-dimensional process, we denote by \(\Delta M\) its jump process. This is the d-dimensional process null at 0 defined as \(( M^{1}_t- M^{1}_{t-}, \dots , M^{d}_t- M^{d}_{t-})_{t \in \mathbb {R}^+}\).

Theorem 1.6

(MFCLT, VIII-3.11 from [25]) Assume that \(M= (M^1, \dots , M^d)\) is a d-dimensional continuous Gaussian martingale with independent increments and predictable covariance process \((\langle M^i,M^j \rangle )_{i,j \in \{ 1,\dots , d \}}\). For each n, let \(M^n = (M^{n,1}, \dots , M^{n,d})\) be a d-dimensional local martingale with uniformly bounded jumps \(|\Delta M^n| \le K\) for some constant K. The following conditions are equivalent:

- (i): \(M^n \Rightarrow M\) in the sense of Skorokhod;

- (ii): there exists some dense set \(D\subset \mathbb {R}^+\) such that for each \(t \in D\) and \(i,j \in \{1,\dots , d \}\), as \(n \uparrow \infty \),

$$\begin{aligned} \langle M^{n,i} , M^{n,j} \rangle _t \rightarrow \langle M^{i} , M^{j} \rangle _t \quad \quad \text { in probability,} \end{aligned}$$(1.13)

and

$$\begin{aligned} \sup _{s \le t} |\Delta M_s^n| \rightarrow 0 \quad \text { in probability}. \end{aligned}$$(1.14)

The rest of this paper is organised as follows. In Section 2 we introduce two martingales associated with step-reinforced random walks, which are crucial for our reasoning, and investigate their properties. We derive maximal inequalities and asymptotic results for step-reinforced random walks that will be needed in the sequel, and establish Theorem 1.1. Sect. 3 is devoted to the proof of Theorem 1.2 under the additional assumption that the typical step X is bounded. In Sect. 4, we discuss how to relax this assumption to the general case of unbounded steps by a truncation argument, and in the process we also deduce Theorem 1.3 and Theorem 1.4. Finally, in Sect. 5 we address the proof of Theorem 1.5, again proceeding in two stages. Since many arguments carry over from the previous sections, some details are skipped.

2 The Martingales Associated to a Reinforced Random Walk and Proof of Theorem 1.1

In this section we work under the additional assumption that the typical step \(X \in L^2(\mathbb {P})\) is centred and recall that we denote by \(\sigma ^2= \mathbb {E}(X^2)\) its variance. The centred hypothesis is maintained for Sections 3 and 4, but dropped in Section 5.

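Recall the following definition. Let \(M = (M_n)_{n \ge 0}\) be a discrete-time real-valued square integrable martingale with respect to a filtration \(( {\mathcal {F}}_n)\). Its predictable quadratic variation process \(\langle M \rangle \) is the process defined by \(\langle M \rangle _0=0\) and, for \(n \ge 1\),

$$\begin{aligned} \langle M \rangle _n = \sum _{k=1}^{n} \mathbb {E}\big( (M_k - M_{k-1})^2 \mid {\mathcal {F}}_{k-1} \big). \end{aligned}$$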

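If \((Z_n)\) is another square integrable martingale with respect to the same filtration, the predictable covariation \(\langle M , Z \rangle \) of the pair is the process defined by \(\langle M , Z \rangle _0 = 0\) and, for \(n \ge 1\),

$$\begin{aligned} \langle M, Z \rangle _n = \sum _{k=1}^{n} \mathbb {E}\big( (M_k - M_{k-1})(Z_k - Z_{k-1}) \mid {\mathcal {F}}_{k-1} \big). \end{aligned}$$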

We define two sequences \((\widehat{a}_n, n \ge 1)\), \((\check{a}_n, n \ge 1)\) as follows: Let \(\widehat{a}_1=\check{a}_1=1\) and for each \(n \in \{2, 3, \dots \}\), set

for respectively \(\widehat{\gamma }_n = \frac{n+p}{n} \), \(\check{\gamma }_n = \frac{n-p}{n}\) when \(n \ge 2\).
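To get a feel for these weights, assume that \(\widehat{a}_{n+1} = \widehat{a}_n/\widehat{\gamma }_n\) and \(\check{a}_{n+1} = \check{a}_n/\check{\gamma }_n\), with \(\widehat{\gamma }_k = (k+p)/k\) and \(\check{\gamma }_k=(k-p)/k\) for every \(k \ge 1\). This is our assumption. It is the choice that makes \(\widehat{a}_n \hat{S}_n\) a martingale when X is centred, since then \(\mathbb {E}(\hat{S}_{n+1}\mid {\mathcal {F}}_n) = \widehat{\gamma }_n \hat{S}_n\). The products then have the closed forms \(\Gamma (n)\Gamma (1\pm p)/\Gamma (n\pm p)\), so \(\widehat{a}_n \sim \Gamma (1+p)\,n^{-p}\) and \(\check{a}_n \sim \Gamma (1-p)\,n^{p}\). The Python sketch below (hypothetical names, \(p \in [0,1)\)) checks both facts numerically.

```python
import math


def weights(n, p):
    """(hat a_n, check a_n) from a_1 = 1 and a_{k+1} = a_k / gamma_k (assumed recursion)."""
    hat_a = check_a = 1.0
    for k in range(1, n):
        hat_a *= k / (k + p)                   # divide by hat gamma_k = (k + p) / k
        check_a *= k / (k - p)                 # divide by check gamma_k = (k - p) / k, p < 1
    return hat_a, check_a


def gamma_closed_forms(n, p):
    """Gamma(n) Gamma(1 + p) / Gamma(n + p) and Gamma(n) Gamma(1 - p) / Gamma(n - p)."""
    hat_a = math.exp(math.lgamma(n) + math.lgamma(1 + p) - math.lgamma(n + p))
    check_a = math.exp(math.lgamma(n) + math.lgamma(1 - p) - math.lgamma(n - p))
    return hat_a, check_a
```

Using `math.lgamma` avoids overflow of \(\Gamma (n)\) for large n. Only differences of log-Gamma values are exponentiated.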

Proposition 2.1

The processes \(\hat{M} = (\hat{M}_n)_{n \ge 0}\) and \(\check{M} = (\check{M}_n)_{n \ge 0}\) are defined by \(\hat{M}_0 = \check{M}_0 = 0\) and \(\hat{M}_n = \widehat{a}_n \hat{S}_n\), \(\check{M}_n = \check{a}_n \check{S}_n\) for \(n \ge 1\). They are centred square integrable martingales with respect to the natural filtration \(({\mathcal {F}}_n)\) generated by the pair, where \({\mathcal {F}}_0\) is the trivial sigma-field. Further, their predictable quadratic variation processes are given by \(\langle \hat{M} \rangle _0= \langle \check{M} \rangle _0 = 0 \) and, for all \(n \ge 1\),

and

where \((\hat{V}_n)_{n \ge 1}\) is the step-reinforced process given by \(\hat{V}_n= \hat{X}_1^2 + \dots + \hat{X}_n^2\), and the sums are understood to be zero for \(n=1\).

Proof

Starting with the positive-reinforced case, notice that for any \(n \ge 1\) we have

Hence, since \(\hat{S}_{n+1}=\hat{S}_n+\hat{X}_{n+1}\), and \( \widehat{\gamma }_n = (n+p)/n\),

and therefore, we obtain

Moreover, as X is centred and the steps \((\hat{X}_k)\) are identically distributed by what was discussed in the introduction, we have

and we conclude that \((\hat{M}_n)_{n \ge 0}\) is a martingale. Turning our attention to its quadratic variation, we have \(\mathbb {E}( \hat{S}_n^2) \le n^2 \mathbb {E}(X^2)= n^2 \sigma ^2\) and hence, \(\hat{M}_n\) is indeed square integrable and its predictable quadratic variation exists. Next, we observe that for \(n \ge 1\) we have

Finally, as was pointed out in the proof of Lemma 3 in [19], and can be verified from the definition of the \(\hat{X}_n\), it holds that

and hence we arrive at the formula (2.2).

For the negative-reinforced case, the proof follows very similar steps, with minor modifications. Since for \(n \ge 1\),

we now have

and the martingale property for \((\check{M}_n)_{n \ge 0}\) follows. For the quadratic variation, the proof is the same once we notice that \(\check{X}_k^2 = \hat{X}_k^2\) for every k. Both sequences are built from the same \((X_n)\), \((\varepsilon _i)\) and \((U[i])\), and only the signs differ. We can therefore also write \(\hat{V}_n= \check{X}_1^2 + \dots + \check{X}_n^2\). \(\square \)

We write for further use the following asymptotic behaviours: the first ones are related to the study of the positive-reinforced case and hold for \(p \in (0,1/2)\):

while for \(p = 1/2\) the asymptotic behaviour of the series changes,

which is the reason behind the different scaling appearing in Theorem 1.5. On the other hand, for the negatively-reinforced case we have, for \(p \in (0,1]\),

These limits follow from standard asymptotics of the Gamma function and were already pointed out in Bercu [4].
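The following is a numerical companion to these asymptotics. It assumes that \(\widehat{a}_n\) and \(\check{a}_n\) are the recursive products of \(\widehat{\gamma }_k^{-1}\) and \(\check{\gamma }_k^{-1}\) started from 1, with \(\widehat{\gamma }_k=(k+p)/k\) and \(\check{\gamma }_k=(k-p)/k\) for all \(k\ge 1\). This is our assumption. Under it, the Gamma asymptotics give \(\sum _{k\le n} \widehat{a}_k^2 \sim \Gamma (1+p)^2 n^{1-2p}/(1-2p)\) for \(p<1/2\), \(\sum _{k\le n} \widehat{a}_k^2 \sim (\pi /4)\log n\) for \(p=1/2\), and \(\sum _{k\le n} \check{a}_k^2 \sim \Gamma (1-p)^2 n^{1+2p}/(1+2p)\). These constants are our own computation, not quoted from the text. The logarithmic case converges slowly, so the Python sketch below (hypothetical names) tests it through the increment between n and 2n, which should be close to \((\pi /4)\log 2\).

```python
import math


def squared_weight_sums(n, p):
    """(sum_{k<=n} hat a_k^2, sum_{k<=n} check a_k^2) with a_1 = 1, a_{k+1} = a_k / gamma_k."""
    hat_a = check_a = 1.0
    hat_sum = check_sum = 0.0
    for k in range(1, n + 1):
        hat_sum += hat_a ** 2
        check_sum += check_a ** 2
        hat_a *= k / (k + p)                   # hat gamma_k = (k + p) / k
        check_a *= k / (k - p)                 # check gamma_k = (k - p) / k, needs p < 1
    return hat_sum, check_sum
```

For \(p<1/2\), the relative error of the first asymptotic decays only like \(n^{-(1-2p)}\), so a loose tolerance is used in the checks.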

Before turning to the proof of Theorem 1.1, let us introduce a more general version of \(\check{M}\). It will be needed in our analysis when the steps of the counterbalanced random walk are not centred. In this direction, set \(Y_0 := 0\) and, for \(n \ge 1\), let

$$\begin{aligned} Y_n := \check{a}_n \big( \check{S}_n - \mathbb {E}(\check{S}_n) \big). \end{aligned}$$(2.13)

It readily follows from (2.8) and the recursive formula (1.3) that, for \(n \ge 1\),

$$\begin{aligned} \mathbb {E}(Y_{n+1} \mid {\mathcal {F}}_n) = Y_n, \end{aligned}$$

and we deduce that \((Y_n)_{n \ge 1}\) is a centred martingale. Note that if \(m = 0\), we have \(Y= \check{M}\).

We shall now use \(\hat{M}\), \(\check{M}\) and Y to study the growth rate of \(\hat{S}\) and \(\check{S}\) and to establish Theorem 1.1. The following lemma was already observed in [1, 22] using different techniques; here we present a more elementary approach.

Lemma 2.2

For every fixed \(p \in (1/2,1)\), the following convergence holds a.s. and in \(L^2(\mathbb {P})\):

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\hat{S}_n}{n^{p}} = \hat{W}, \end{aligned}$$

where \(\hat{W} \in L^2(\mathbb {P})\) is a non-degenerate random variable.

Proof

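By Proposition 2.1, \(\hat{M}_n = \widehat{a}_n \hat{S}_n\) is a martingale. Further, (2.6) and the asymptotics \(\widehat{a}_n \sim n^{-p}\) give, for some constant C large enough,

$$\begin{aligned} \mathbb {E}(\hat{M}_n^2) = \mathbb {E}\big( \langle \hat{M} \rangle _n \big) \le C \sum _{k=1}^{n} k^{-2p} \end{aligned}$$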

for all \(n \in \mathbb {N}\). Since \(p>1/2\), the latter series is summable and we conclude that

$$\begin{aligned} \sup _{n \ge 1} \mathbb {E}(\hat{M}_n^2) < \infty . \end{aligned}$$

By Doob’s martingale convergence theorem there exists a non-degenerate random variable \(\hat{W} \in L^2(\mathbb {P})\) such that \(\hat{M}_n \rightarrow \hat{W}\) a.s. and in \(L^2(\mathbb {P})\) as \(n \rightarrow \infty \). Using the asymptotics \(\widehat{a}_n \sim n^{-p}\) we conclude the proof. \(\square \)

We now focus on establishing the almost sure convergences of Theorem 1.1. The \(L^{2}(\mathbb {P})\) convergences require additional estimates; they are deduced in Corollary 2.5 below.

Proof of the a.s. convergences in Theorem 1.1

Let us start with the noise reinforced random walk (NRRW), and recall that \(\mathbb {E}[\hat{X}_n] = m\) for all \(n \ge 1\). Since \((\hat{S}_n - n \mathbb {E}[X])_{n \ge 1}\) is a NRRW with the same parameter p and centred steps distributed as \(X-\mathbb {E}[X]\), it suffices to show that \(n^{-1}\hat{S}_n \rightarrow 0\) a.s. for centred X. Consider first the case \(p \in (0,1/2]\). There, this follows from Theorem 1.3.17 in [26] applied to the martingale \(\hat{M}\) introduced in Proposition 2.1. More precisely, remark that for any \(p \in (0,1/2]\) and \(\alpha > 0\), we have

where the asymptotic behaviour of \(\sum _{k=1}^n\widehat{a}_k^2\) as \(n \uparrow \infty \) is dictated for \(p \in (0,1/2)\) and \(p = 1/2\) respectively by (2.10) and (2.11). It readily follows from these estimates that if \( p \in (0,1/2)\), for \(\alpha := 1-2p\) we have

while if \(p = 1/2\), for any \(\epsilon > 0\) and \(\alpha := \epsilon \), it holds that

Taking \(\beta := \alpha \) in Theorem 1.3.17 of [26], we deduce the almost sure convergences \(n^{-(1-2p)}\hat{M}_n \rightarrow 0\) if \(p \in (0,1/2)\), and \(n^{-\epsilon } \hat{M}_n \rightarrow 0\) if \(p = 1/2\). Recall that \(\widehat{a}_n \sim n^{-p}\), and take \(\epsilon < 1/2\) when \(p = 1/2\). The definition of \(\hat{M}\) then gives \(n^{-1} \hat{S}_n \rightarrow 0\) almost surely. The case \(p \in (1/2, 1)\) now easily follows from the convergence in Lemma 2.2.

The counterbalanced case will follow from Theorem 1.3.24 in [26]. In this direction, fix \(p \in (0,1]\), recall that the process \((Y_n)\) defined in (2.13) is a martingale, and we claim that:

Let us first explain why this yields the desired result. Recalling from (1.4) that \(\mathbb {E}(\check{S}_n) \sim n (1-p)m/(1+p)\) and \(\check{a}_n \sim n^p\) as \(n \uparrow \infty \), it follows that

by definition of \((Y_n)\). The second convergence in (1.7) now follows.

We now prove (2.15), starting with the case \(p \in (0,1)\). To this end, we set

Since \(\mathbb {E}[ \epsilon _{n+1} \mid {\mathcal {F}}_n ] = 0\), to make use of Theorem 1.3.24 in [26] it remains to show that

First, from the definition of \(\epsilon _n\) and \({\check{\gamma }}_n\) we can write:

Now, note that \(\check{X}_{n+1}^2 =\hat{X}_{n+1}^2\) and recall the identities (2.7) and (2.8). Conditioning with respect to \({\mathcal {F}}_n\) in the previous display, we obtain that

Since \((\hat{V}_n)\) is a noise reinforced random walk with typical step distributed as \(X^2\), the first part of the proof gives \(n^{-1}\hat{V}_n \rightarrow \sigma ^2\) a.s., and the condition (2.16) will follow as soon as we establish that

Indeed, once (2.17) is established, the first term satisfies \(\sup _n (n-p)^{-2} \check{S}_n^2< \infty \), which implies that \(\sup _n (n-p)^{-1} |\check{S}_n| < \infty \) as well; thus all other terms appearing in the conditional expectation \(\mathbb {E}[|\epsilon _{n+1}|^2 \mid {\mathcal {F}}_n]\) have a finite supremum too. In order to verify (2.17), observe that

Now, the last term converges towards \(\sigma ^2\) for any \(p \in (0,1)\) by the first part of our result, and (2.16) follows. Putting everything together and writing \(s_n = \sum _{k=1}^n a_k\), we deduce from Theorem 1.3.24 in [26] that

Finally, since \(s_{n} \sim K n^{1+p}\) for some constant \(K>0\), we conclude the proof for \(p \in (0,1)\).

For the remaining case \(p=1\), recalling the discussion following Theorem 1.1, it suffices to establish that \(n^{-1} \check{S}_n \rightarrow 0\) a.s. for a counterbalanced random walk with typical step distributed as \(\delta _1\). This follows by reasoning as before, noting that now \(|\hat{X}_n| = 1\) and that we have the simple bound \(|\check{S}_n| \le n\) for every \(n \ge 1\). \(\square \)
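The dynamics behind Theorem 1.1 are easy to simulate. The following Python sketch, which is our own illustration and not part of the argument, generates the triplet \((S_n, \hat{S}_n, \check{S}_n)\) from a single sequence of innovations, using the coupling \(\hat{X}_k = X_k {\mathbf {1}}_{\{ \epsilon _k=0 \}} + \hat{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k=1 \}}\) and \(\check{X}_k = X_k {\mathbf {1}}_{\{ \epsilon _k=0 \}} - \check{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k=1 \}}\) recalled in the proof of Lemma 3.2, where \(\epsilon _k\) is Bernoulli(p) and \(U[k-1]\) is uniform on \(\{1,\dots ,k-1\}\). The function name `simulate_triplet` and the step law \(X = 1 + \xi \) with \(\xi \) Rademacher (so that \(m = 1\)) are hypothetical choices made for the example. For large n one expects \(n^{-1}\hat{S}_n \approx m\) and \(n^{-1}\check{S}_n \approx (1-p)m/(1+p)\).

```python
import random


def simulate_triplet(n, p, sample_step, rng):
    """Return (S_n, S_hat_n, S_check_n) built from one shared sequence of innovations.

    sample_step(rng) draws the innovation X_k. With probability p (eps_k = 1) both
    reinforced walks look back at the same uniformly chosen earlier time U[k-1];
    the positive walk repeats its step there, the negative walk repeats it with a sign change.
    """
    s = s_hat = s_check = 0.0
    hat_steps, check_steps = [], []
    for k in range(1, n + 1):
        x = sample_step(rng)                   # innovation X_k, always used by S
        if k == 1 or rng.random() >= p:        # eps_k = 0: both walks take X_k
            xh, xc = x, x
        else:                                  # eps_k = 1: U[k-1] uniform on {1, ..., k-1}
            u = rng.randrange(k - 1)           # 0-based index of the repeated step
            xh, xc = hat_steps[u], -check_steps[u]
        hat_steps.append(xh)
        check_steps.append(xc)
        s, s_hat, s_check = s + x, s_hat + xh, s_check + xc
    return s, s_hat, s_check


rng = random.Random(2024)
n, p = 100_000, 0.3
s, s_hat, s_check = simulate_triplet(n, p, lambda r: 1 + r.choice((-1, 1)), rng)
print(s / n, s_hat / n, s_check / n)  # should be close to 1, 1 and 0.7 / 1.3
```

A single run only illustrates the almost sure statement; for \(p < 1/2\) the fluctuations of \(n^{-1}\hat{S}_n\) and \(n^{-1}\check{S}_n\) around their limits are of order \(n^{-1/2}\).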

When the typical step is centred, our previous arguments yield sharper results:

Corollary 2.3

Suppose that \(p \in (0,1/2)\) and that \(\mathbb {E}[X] = 0\). We have the almost sure convergences:

Proof

The first convergence has already been established during the proof of Theorem 1.1. Indeed, it is a consequence of the convergence \(n^{-(1-2p)}\hat{M}_n \rightarrow 0\) and the asymptotic behaviour \(\widehat{a}_n \sim n^{-p}\). For the counterbalanced case, we can proceed similarly, noticing that by (2.12), we now have:

Let now \(\alpha = 1+2p\) and \(\beta =1\). Then \(\alpha < 2 \beta \) if and only if \(p < 1/2\), so the conditions of Theorem 1.3.17 in [26] are again satisfied. It follows, as before, that \(n^{-1} \check{M}_n \rightarrow 0\) a.s., and since \(\check{a}_n \sim n^p\) as \(n \rightarrow \infty \), the claim follows. \(\square \)

We continue by investigating bounds for the second moments of the supremum process of the noise reinforced random walk \(\hat{S}\) in all regimes. These bounds will be needed to establish the \(L^2(\mathbb {P})\) convergences of Theorem 1.1.

Lemma 2.4

For every \(n \ge 1\), the following bounds hold for some numerical constant c:

Proof

We tackle each of the three cases \(p \in (0,1/2)\), \(p=1/2\) and \(p \in (1/2,1)\) individually:

(i)

Let us first consider the case when \(p \in (0, 1/2)\). We observe that by (2.6) and by (2.10)

$$\begin{aligned} \mathbb {E}(\hat{M}_n^2) = \mathbb {E}( \langle \hat{M} \rangle _n) \le \sum _{k=1}^{n} \sigma ^2 \widehat{a}_{k}^2 \sim \sigma ^2 \frac{1}{1-2p}n^{1-2p}, \quad \text {as } n \rightarrow \infty . \end{aligned}$$Hence we obtain by Doob’s inequality that

$$\begin{aligned} \mathbb {E}\left( \sup _{k \le n} | \hat{M}_k|^2 \right) \le c_1 \sigma ^2 n^{1-2p} \end{aligned}$$where \(c_1>0\) is some constant. Since \(\hat{S}_k = \hat{M}_k/\widehat{a}_k\) and the sequence \((\widehat{a}_k)\) is non-increasing, it holds that

$$\begin{aligned} \mathbb {E}\left( \sup _{k \le n} | \hat{S}_k|^2 \right) \le \frac{1}{\widehat{a}_n^2} \mathbb {E}\left( \sup _{k \le n} | \hat{M}_k|^2 \right) , \end{aligned}$$it follows readily that

$$\begin{aligned} \mathbb {E}\left( \sup _{k \le n} | \hat{S}_k|^2 \right) \le c_1 \sigma ^2 \frac{n^{1-2p}}{\widehat{a}_n^2} \sim c_1 \sigma ^2 n, \quad \text {as } n \rightarrow \infty . \end{aligned}$$By monotonicity, we conclude the proof for this case.

(ii)

Let us now assume that \(p=1/2\). We then obtain by (2.11) and monotonicity that for all \(n \ge 1\) we have

$$\begin{aligned} \mathbb {E}( \langle \hat{M} \rangle _n) \le \sigma ^2 \log n. \end{aligned}$$We conclude as in the previous case that this implies

$$\begin{aligned} \mathbb {E}\left( \sup _{k \le n} | \hat{S}_k|^2 \right) \le c_2 \sigma ^2 n \log n, \end{aligned}$$where \(c_2>0\) is some constant.

(iii)

Finally, let us consider the case \(p \in (1/2,1)\). Here, for every \(n \ge 1\), we have

$$\begin{aligned} \sigma ^2 \sum _{k=1}^n \widehat{a}_{k+1}^2 \le C\sigma ^2 \sum _{k=1}^n \frac{1}{k^{2p}} < {\tilde{c}} \end{aligned}$$for a constant C large enough and some finite constant \({\tilde{c}}\). This entails that \(\mathbb {E}( \langle \hat{M} \rangle _n) \le \sigma ^2 {\tilde{c}}\) and we deduce as before the bound

$$\begin{aligned} \mathbb {E}\left( \sup _{k \le n} | \hat{S}_k|^2 \right) \le c_3 \sigma ^2 n^{2p}, \end{aligned}$$where \(c_3>0\) is some constant.

Thus we have established the desired bounds for all regimes. \(\square \)
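The three regimes of Lemma 2.4 are already visible at the level of second moments, without the supremum. For centred X, combining \(\mathbb {E}(\hat{X}_{n+1} \mid {\mathcal {F}}_n) = p\hat{S}_n/n\) with \(\mathbb {E}(\hat{X}_{n+1}^2) = \sigma ^2\) (each step of \(\hat{S}\) has the law of X) gives the recursion \(\mathbb {E}(\hat{S}_{n+1}^2) = (1+2p/n)\,\mathbb {E}(\hat{S}_n^2) + \sigma ^2\), \(\mathbb {E}(\hat{S}_1^2) = \sigma ^2\). This recursion is our own elementary derivation rather than a display from the paper, and the Python helper name below is hypothetical. Iterating it with \(\sigma ^2 = 1\), the second moment approaches \(n/(1-2p)\) for \(p<1/2\), equals \(nH_n\) (with \(H_n\) the n-th harmonic number, hence of order \(n\log n\)) for \(p=1/2\), and grows like \(n^{2p}\) for \(p>1/2\), in line with the bounds \(c_1\sigma ^2 n\), \(c_2\sigma ^2 n\log n\) and \(c_3\sigma ^2 n^{2p}\).

```python
def second_moment(n, p, sigma2=1.0):
    """E(S_hat_n ** 2) for a centred NRRW with E(X^2) = sigma2, by iterating the recursion."""
    v = sigma2                            # E(S_hat_1 ** 2) = E(X_1 ** 2)
    for k in range(1, n):                 # advance from time k to time k + 1
        v = (1 + 2 * p / k) * v + sigma2
    return v


n = 100_000
harmonic = sum(1 / j for j in range(1, n + 1))
print(second_moment(n, 0.25) / n)                            # p < 1/2: close to 1/(1-2p) = 2
print(second_moment(n, 0.5) / (n * harmonic))                # p = 1/2: equal to 1 up to rounding
print(second_moment(2 * n, 0.75) / second_moment(n, 0.75))   # p > 1/2: close to 2 ** 1.5
```

The last line tests the growth exponent through the ratio at n and 2n, which avoids having to know the constant in front of \(n^{2p}\).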

As an application of the maximal inequalities of Lemma 2.4 for the noise reinforced random walk, we establish \(L^2(\mathbb {P})\) convergence results in all regimes \(p \in (0,1)\) and deduce that the LLN stated in Theorem 1.1 also holds in \(L^2(\mathbb {P})\).

Corollary 2.5

We have the following convergences in the \(L^2(\mathbb {P})\)-sense.

(i) For \(p \in (0,1/2)\) we have

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\hat{S}_n}{n^{1-p}} =0. \end{aligned}$$

(ii) For \(p=1/2\) we have

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\hat{S}_n}{\sqrt{n} \log n} =0. \end{aligned}$$

(iii) For \(p \in (1/2,1)\) we have

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{\hat{S}_n}{n}=0. \end{aligned}$$

In particular, all the convergences in Theorem 1.1 also hold in \(L^2(\mathbb {P})\).

Proof

Let (f(n)) be a sequence of positive numbers and notice that by Lemma 2.4, if as \(n \uparrow \infty \)

then the sequence \((\hat{S}_n/f(n))\) converges to 0 in the \(L^2\)-sense. Now, in each of the three cases respectively:

(i)

We take \(f(n):= n^{1-p}\) and observe that \(n^{2p-1} \rightarrow 0\) as \(n \rightarrow \infty \) since \(p \in (0,1/2)\).

(ii)

We take \(f(n):= \sqrt{n} \log n\), plainly \(1/ \log (n) \rightarrow 0\) as \(n \rightarrow \infty \).

(iii)

We take \(f(n):=n\) and observe that \(n^{2(p-1)} \rightarrow 0\) as \(n \rightarrow \infty \) because \(p<1\).

This concludes the first part of the proof. Next, notice that (i), (ii), (iii) imply that for any \(p \in (0,1)\), we have \(n^{-1}\hat{S}_n \rightarrow m\) in \(L^2(\mathbb {P})\). Indeed, it suffices to notice once again that \(\hat{S}_n - n\mathbb {E}[X]\) for \(n \ge 1\) is a centered noise reinforced random walk. To deduce the convergence in the counterbalanced case, fix \(p \in (0,1)\) and remark that we can bound,

where now, \(\sum _{i=1}^n |\check{X}_i|\) for \(n \ge 1\) is a noise reinforced random walk with typical step distributed |X|. In particular, it follows from the first part of the proof that \(n^{-1}\sum _{i=1}^n |\check{X}_i| \rightarrow \mathbb {E}[|X|]\) in \(L^2(\mathbb {P})\). Now, the desired convergence follows by the (generalized) dominated convergence theorem. \(\square \)

This concludes the proof of Theorem 1.1 and we shall now turn our attention to the proof of the stated invariance principles.

3 Proof of Theorems 1.2, 1.3 and 1.4 when X is Bounded

Recall that in this section and in Sect. 4 we work under the additional assumption that X is centred. As discussed in the introduction, for positive step-reinforced random walks the centredness hypothesis can be assumed without loss of generality, but this is no longer the case for negative step-reinforced random walks. We are now in a position to prove Theorem 1.2 when X is bounded, and in the process we will also establish Theorems 1.3 and 1.4. For that reason, in several statements we also consider \(p \in [1/2, 1]\) when working with the counterbalanced random walk. Additionally, when we work with the counterbalanced random walk for \(p=1\), we assume as in Theorem 1.4 that X is Rademacher distributed; this will be recalled when necessary. Our approach relies on the martingales introduced in Proposition 2.1 and on the MFCLT 1.6. The general case \(X \in L^2(\mathbb {P})\) is then established by a truncation argument, detailed in Sect. 4.

Now, the key is to notice that, since by (2.10) resp. (2.12) we have for any \(t \ge 0\)

in order to get the convergence (1.8) it is enough to prove (except for a technical detail at the origin in the third coordinate that will be properly addressed) the convergence

for Brownian motions \(B\), \(B^r\) and \(B^c\) defined as in (1.9), where the sequence on the left-hand side is now composed of martingales. More precisely, for each \(n \in \mathbb {N}\), the processes

are just rescaled, continuous-time versions of the martingales introduced in Proposition 2.1, multiplied by factors of \(n^{p-1/2}\) and \(n^{-1/2-p}\) respectively. We also denote by \(N^{(n)}\) the rescaled random walk in the first coordinate, and we establish (3.1) by verifying that the conditions of the MFCLT 1.6 are satisfied. In that direction, and recalling the condition (1.13), we start by investigating the asymptotic negligibility of the jumps:

Lemma 3.1

(Asymptotic negligibility of jumps)

(i) Fix \(p \in (0,1/2)\). For each \(t >0\), the following convergence holds almost surely:

$$\begin{aligned}&\sup _{s \le t } |\Delta \hat{N}^{(n)}_s| \rightarrow 0 \quad \quad \text { as } n \uparrow \infty . \end{aligned}$$

(ii) Fix \(p \in (0,1]\). For each \(t >0\), the following convergence holds almost surely:

$$\begin{aligned} \sup _{s \le t} |\Delta \check{N}_s^{(n)}| \rightarrow 0 \quad \quad \text { as } n \uparrow \infty . \end{aligned}$$

Proof

(i) Notice that

where by hypothesis we have \(\Vert X\Vert _\infty < \infty \). Now, since \(\widehat{a}_k \sim k^{-p}\), we have \(\sup _k \widehat{a}_k < \infty \) and we deduce recalling the definition \({\widehat{\gamma }}_k = (k+p)/k\) that:

for some constant \(C>0\) and (i) follows.

(ii) Similarly, since we also have \(\Delta \check{M}_{k+1} = \check{a}_{k+1}(\check{S}_{k+1} - {\check{\gamma }}_k \check{S}_k)\), arguing as before we get:

since \(\check{\gamma }_n = (n-p)/n\). Recalling from (2.12) the asymptotic behaviour \(\check{a}_n \sim n^p\), we get that \(\sup _{s \le t} |\Delta \check{N}^{(n)}_s| \rightarrow 0\) pointwise for each t. \(\square \)

Now we turn our attention to the joint convergence of the quadratic variation process, and this is the content of the following lemma:

Lemma 3.2

(Convergence of quadratic variations) For each fixed \(t \in \mathbb {R}^+\), the following convergences hold almost surely for \(p \in (0,1/2)\), unless specified otherwise:

(i) \(\displaystyle \lim _{n \rightarrow \infty } \langle \hat{N}^{(n)}, \hat{N}^{(n)} \rangle _t = { \sigma ^2 } \int _0^t s^{-2p} \mathrm {d}s.\)

(ii) \(\displaystyle \lim _{n \rightarrow \infty } \langle \check{N}^{(n)}, \check{N}^{(n)} \rangle _t = { \sigma ^2 } \int _0^t s^{2p} \mathrm {d}s\), for \(p \in (0,1]\).

(iii) \(\displaystyle \lim _{n \rightarrow \infty } \langle \hat{N}^{(n)}, N^{(n)} \rangle _t = { \sigma ^2 } (1-p) \int _0^t s^{-p} \mathrm {d}s.\)

(iv) \(\displaystyle \lim _{n \rightarrow \infty } \langle \check{N}^{(n)}, N^{(n)} \rangle _t = { \sigma ^2 } (1-p) \int _0^t s^{p} \mathrm {d}s\), for \(p \in (0,1]\).

(v) \(\displaystyle \lim _{n \rightarrow \infty } \langle \hat{N}^{(n)} , \check{N}^{(n)} \rangle _t = t \sigma ^2 \frac{1-p}{1+p}\).

where, for the case \(p=1\) in (ii) and (iv), we assume that X is Rademacher distributed.

Lemma 3.2 provides the key asymptotic behaviour of the sequence of quadratic variations; its proof is rather long.
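Several steps of the proof below use the asymptotics \(\widehat{a}_n \sim n^{-p}\), \(\check{a}_n \sim n^{p}\), \(\widehat{a}_n\check{a}_n \rightarrow 1\), \((\widehat{a}_k - \widehat{a}_{k-1}) \sim -pk^{-(p+1)}\) and \((\check{a}_k - \check{a}_{k-1}) \sim pk^{p-1}\), together with the limits of the rescaled series \(\sum _{k \le nt} \widehat{a}_k^2\), \(\sum _{k \le nt} \check{a}_k^2\), \(\sum _{k \le nt} \widehat{a}_k\) and \(\sum _{k \le nt} \check{a}_k\). Since the explicit normalising sequences of Proposition 2.1 are not reproduced in this section, the Python sketch below assumes \(\widehat{a}_n = \Gamma (n)/\Gamma (n+p)\) and \(\check{a}_n = \Gamma (n)/\Gamma (n-p)\) (for \(p<1\)). This is our own choice, made so that \(\widehat{a}_{n+1} = \widehat{a}_n/\widehat{\gamma }_n\) and \(\check{a}_{n+1} = \check{a}_n/\check{\gamma }_n\) with \(\widehat{\gamma }_n = (n+p)/n\) and \(\check{\gamma }_n = (n-p)/n\), which is how we read the proof of Lemma 3.1; it may differ from the paper's normalisation. The helper names are hypothetical.

```python
import math


def a_hat(n, p):
    """Assumed normalisation Gamma(n) / Gamma(n + p), which behaves like n ** (-p)."""
    return math.exp(math.lgamma(n) - math.lgamma(n + p))


def a_check(n, p):
    """Assumed normalisation Gamma(n) / Gamma(n - p), which behaves like n ** p (needs p < 1)."""
    return math.exp(math.lgamma(n) - math.lgamma(n - p))


def rescaled_sums(n, p, t=1.0):
    """Pairs (rescaled partial sum over k <= nt, expected limit) for the four series."""
    ks = range(1, int(n * t) + 1)
    hat = [a_hat(k, p) for k in ks]
    check = [a_check(k, p) for k in ks]
    return {
        "a_hat^2": (sum(a * a for a in hat) / n ** (1 - 2 * p), t ** (1 - 2 * p) / (1 - 2 * p)),
        "a_check^2": (sum(a * a for a in check) / n ** (1 + 2 * p), t ** (1 + 2 * p) / (1 + 2 * p)),
        "a_hat": (sum(hat) / n ** (1 - p), t ** (1 - p) / (1 - p)),
        "a_check": (sum(check) / n ** (1 + p), t ** (1 + p) / (1 + p)),
    }


p, n = 0.3, 50_000
print(a_hat(n, p) * n ** p, a_check(n, p) / n ** p, a_hat(n, p) * a_check(n, p))  # each close to 1
k0 = 2_000  # moderate index, to limit floating-point cancellation in the differences
print((a_hat(k0, p) - a_hat(k0 - 1, p)) / (-p * k0 ** (-p - 1)))    # close to 1
print((a_check(k0, p) - a_check(k0 - 1, p)) / (p * k0 ** (p - 1)))  # close to 1
for name, (value, limit) in rescaled_sums(n, p, t=0.7).items():
    print(name, value, limit)
```

The series \(\sum \widehat{a}_k^2\) converges most slowly, with a relative error of order \((nt)^{-(1-2p)}\), which is why p is kept away from 1/2 in the example.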

Proof

We tackle each item (i)–(v) individually, item (v) being the most arduous.

(i)

For each \(n \in \mathbb {N}\), we gather from (2.2) that the predictable quadratic variation of this martingale is given, for \(t \ge 1/n\), by

$$\begin{aligned}&\langle \hat{N}^{(n)}, \hat{N}^{(n)} \rangle _t = \\&\quad \frac{1}{n^{1-2p}} \left( \sigma ^2+ (1-p)\sigma ^2 \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_{k}^2 - p^2 \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_{k}^2 \left( \frac{\hat{S}_{k-1} }{k-1} \right) ^2 + p \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_{k}^2 \left( \frac{{\hat{V}}_{k-1} }{k-1} \right) \right) , \end{aligned}$$with \(\langle \hat{N}^{(n)}, \hat{N}^{(n)} \rangle _t=0\) if \(t < 1/n\). We will study separately the limit as \(n \rightarrow \infty \) of the three nontrivial terms, as the first one evidently vanishes. To start with, it follows readily from (2.10) that

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{{\sigma ^2 }}{n^{1-2p}} (1-p) \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_{k}^2 = \frac{{\sigma ^2 }}{1-2p} t^{1-2p} (1-p). \end{aligned}$$(3.3)Now, we claim that the second term converges to zero:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n^{1-2p}} p^2 \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_{k}^2 \left( \frac{\hat{S}_{k-1}}{k-1} \right) ^2 = 0 \quad \text { a.s. } \end{aligned}$$(3.4)Indeed, by (2.10), it suffices to notice that, by Proposition 1.1, we have

$$\begin{aligned} \lim _{k \rightarrow \infty } \frac{ \hat{S}_k}{k} = 0 \quad \text {a.s.} \end{aligned}$$since we recall that by our standing assumptions X is centered. Finally, we claim that for the last term, the following limit holds:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n^{1-2p}} p \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_{k}^2 \frac{{\hat{V}}_{k-1} }{(k-1)} = \frac{{ \sigma ^2 }}{1-2p} t^{1-2p} p \quad \text { a.s.} \end{aligned}$$(3.5)In this direction, notice that \((\hat{V}_n)_{n \in \mathbb {N}}\) is the reinforced version of the (non-centred) random walk

$$\begin{aligned} V_n = {X}_1^2 + \dots + {X}^2_n, \quad \quad n \in \mathbb {N} \end{aligned}$$with mean \(\mathbb {E}(\hat{X}_i^2) = \mathbb {E}({X}_i^2)=\sigma ^2\). Hence, by Theorem 1.1, we have \(n^{-1}\hat{V}_n \rightarrow \sigma ^2\) as \(n \uparrow \infty \) and (3.5) follows. Now, combining (3.3), (3.4) and (3.5) we conclude that

$$\begin{aligned} \lim _{n \rightarrow \infty } \langle \hat{N}^{(n)}, \hat{N}^{(n)} \rangle _t = \frac{\sigma ^2}{1-2p}t^{1-2p} \quad \text {a.s.} \end{aligned}$$

(ii)

By (2.3), we have

$$\begin{aligned} \langle \check{N}^{(n)},\check{N}^{(n)} \rangle _t = \frac{1}{n^{1+2p}} \left( \sigma ^2 + \sum _{k=2}^{\lfloor nt \rfloor } \check{a}^2_k \left( (1-p) \sigma ^2 - p^2 \left( \frac{\check{S}_{k-1} }{k-1} \right) ^2 + p \frac{\hat{V}_{k-1}}{k-1} \right) \right) \end{aligned}$$and we shall now study the convergence of the normalised series in the previous expression. First, by (2.12), the first term converges towards

$$\begin{aligned} \lim _{n \rightarrow \infty } \sigma ^2 \frac{(1-p) }{n^{1+2p}} \sum _{k=2}^{\lfloor nt \rfloor }\check{a}^2_k = \sigma ^2 \frac{1-p}{1+2p} t^{1+2p} = \sigma ^2 (1-p) \int _0^t s^{2p} \mathrm {d}s. \end{aligned}$$Turning our attention to the second term, we recall from Proposition 1.1 that \((\check{S}_n)\) satisfies a law of large numbers:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n} \check{S}_n = \frac{(1-p)}{1+p} m =0 \quad \text { a.s.} \end{aligned}$$This paired with the asymptotic behaviour of the series (2.12) yields:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n^{1+2p}} \sum _{k=2}^{\lfloor nt \rfloor } \check{a}^2_k \left( \frac{\check{S}_{k-1}}{k-1} \right) ^2 =0 \quad \quad \text { a.s. for every } t \ge 0. \end{aligned}$$Finally, assuming first that \(p < 1\), we can proceed as in (3.5) to deduce from (2.12) that

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{p}{n^{1+2p}} \sum _{k=2}^{\lfloor nt \rfloor } \check{a}^2_k \left( \frac{\hat{V}_{k-1}}{k-1} \right) = \sigma ^2 \frac{p}{1+2p} t^{1+2p} = \sigma ^2 p \int _0^t s^{2p} \mathrm {d}s \quad \text {a.s. } \end{aligned}$$(3.6)If \(p=1\), by hypothesis X takes its values in \(\{ -1, 1\}\), so that \(\hat{V}_{k-1} ={k-1}\) and the limit (3.6) still holds. Notice however that if we allowed X to take arbitrary values, we could no longer proceed as we just did since, in that case, \(\hat{V}_n\) is a straight line with random slope:

$$\begin{aligned} \hat{V}_n = n \check{X}_1^2. \end{aligned}$$Putting all pieces together, we obtain (ii).

(iii)

Recalling that \(\hat{X}_k = X_k {\mathbf {1}}_{\{ \epsilon _k=0 \}} + \hat{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k=1 \}}\), and using that \(X_k\), \(\epsilon _k\) and \(U[k-1]\) are independent of each other and of \({\mathcal {F}}_{k-1}\), we get for \(k \ge 2\)

$$\begin{aligned} \mathbb {E}( \Delta \hat{M}_{k} X_{k} \mid {\mathcal {F}}_{k-1} )&= \mathbb {E}\left( ( \hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} ) + \hat{X}_k \widehat{a}_k ) X_k \mid {\mathcal {F}}_{k-1} \right) \\&= \widehat{a}_k \mathbb {E}\left( \left( X_k {\mathbf {1}}_{\{ \epsilon _k=0 \}} + \hat{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k=1 \}} \right) X_k \mid {\mathcal {F}}_{k-1} \right) \\&= \widehat{a}_k (1-p) \mathbb {E}(X^2) + \widehat{a}_k \sum _{j=1}^{k-1} \hat{X}_j \, \mathbb {E}\left( X_k {\mathbf {1}}_{\{ U[k-1] =j , \epsilon _k=1 \}} \mid {\mathcal {F}}_{k-1} \right) \\&=\widehat{a}_k (1-p) \sigma ^2 \end{aligned}$$since the steps are centred and \(X_k\) is independent of \(\epsilon _k\), \(U[k-1]\) and \({\mathcal {F}}_{k-1}\), while for \(k=1\) we simply get \(\mathbb {E}(\hat{M}_1X_1) = \sigma ^2\). From here, we deduce

$$\begin{aligned} \langle \hat{N}^{(n)}, N^{(n)} \rangle _t = n^{p-1} \sum _{k=1}^{\lfloor nt \rfloor } \mathbb {E}( \Delta \hat{M}_{k} X_{k} \mid {\mathcal {F}}_{k-1} ) = \sigma ^2 (1-p) n^{p-1} \left( (1-p)^{-1} + \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_k \right) \end{aligned}$$and from the convergence

$$\begin{aligned} \lim _{n \rightarrow \infty } n^{p-1} \sum _{k=2}^n \widehat{a}_k = (1-p)^{-1} \end{aligned}$$we conclude:

$$\begin{aligned} \lim _{n \rightarrow \infty } \langle \hat{N}^{(n)}, N^{(n)} \rangle _t = \sigma ^2 (1-p) \lim _{n \rightarrow \infty } {n^{p-1}} \sum _{k=2}^{\lfloor nt \rfloor } \widehat{a}_k = \sigma ^2 t^{1-p} = \sigma ^2 (1-p) \int _0^t s^{-p} \mathrm {d}s. \end{aligned}$$

(iv)

Recalling that in the counterbalanced case \(\check{X}_k = X_k {\mathbf {1}}_{\{ \epsilon _k=0 \}} -\check{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k=1 \}}\), we deduce by arguments similar to those of the reinforced case that, for \(k \ge 2\),

$$\begin{aligned} \mathbb {E}( \Delta \check{M}_{k} X_{k} \mid {\mathcal {F}}_{k-1} )&= \mathbb {E}\left( ( \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} ) + \check{X}_k \check{a}_k ) X_k \mid {\mathcal {F}}_{k-1} \right) \\&= \check{a}_k\mathbb {E}\left( \left( X_k {\mathbf {1}}_{\{ \epsilon _k=0 \}} -\check{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k=1 \}} \right) X_k \mid {\mathcal {F}}_{k-1} \right) \\&= \check{a}_k \cdot (1-p) \mathbb {E}(X^2) - \check{a}_k \sum _{j=1}^{k-1} \check{X}_j \, \mathbb {E}\left( X_k {\mathbf {1}}_{\{ U[k-1] =j , \epsilon _k=1 \}} \mid {\mathcal {F}}_{k-1} \right) \\&= \check{a}_k \cdot (1-p) \sigma ^2. \end{aligned}$$Notice that if \(p=1\) the argument still holds, and hence the above quantity vanishes for all \(k \ge 2\). Since for \(k = 1\) we simply have \(\mathbb {E}[\Delta \check{M}_1 X_1] = \sigma ^2\), it follows that for \(t \ge 1/n\),

$$\begin{aligned} \langle \check{N}^{(n)}, N^{(n)} \rangle _t&= n^{-(1+p)} \sum _{k=1}^{\lfloor nt \rfloor } \mathbb {E}( \Delta \check{M}_{k} X_{k} \mid {\mathcal {F}}_{k-1} ) \\&= \sigma ^2 (1-p) \cdot n^{-(1+p)} \left( (1-p)^{-1} + \sum _{k=2}^{\lfloor nt \rfloor } \check{a}_k\right) \end{aligned}$$and from the convergence

$$\begin{aligned} \lim _{n \rightarrow \infty } n^{-(1+p)} \sum _{k=1}^n \check{a}_k = (1+p)^{-1} \end{aligned}$$we conclude

$$\begin{aligned} \sigma ^{-2} \lim _{n \rightarrow \infty } \langle \check{N}^{(n)} , N^{(n)} \rangle _t = (1-p) \lim _{n \rightarrow \infty } {n^{-(1+p)}} \sum _{k=1}^{\lfloor nt \rfloor } \check{a}_k = \frac{1-p}{(1+p)} t^{1+p} = (1-p) \int _0^t s^{p} \mathrm {d}s. \end{aligned}$$Finally if \(p=1\), we clearly have \(\lim _{n \rightarrow \infty } \langle \check{N}^{(n)},N^{(n)} \rangle _t =0\).

(v)

Notice that

$$\begin{aligned} \mathbb {E}( \Delta \check{M}_k \Delta \hat{M}_k \mid {\mathcal {F}}_{k-1} )&= \mathbb {E}\left( ( \hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} ) + \hat{X}_k \widehat{a}_k ) ( \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} ) + \check{X}_k \check{a}_k ) \mid {\mathcal {F}}_{k-1} \right) \\&= \hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} ) \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} ) + \hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} ) \mathbb {E}(\check{X}_k | {\mathcal {F}}_{k-1}) \check{a}_k \\&\quad + \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} ) \mathbb {E}(\hat{X}_k \mid {\mathcal {F}}_{k-1}) \widehat{a}_k + \mathbb {E}( \check{X}_k \hat{X}_k \mid {\mathcal {F}}_{k-1}) \widehat{a}_k \check{a}_k \\&=: P^{(a)}_k + P^{(b)}_k + P^{(c)}_k + P^{(d)}_k \end{aligned}$$where the notation was assigned in order of appearance. We write,

$$\begin{aligned} \langle \check{N}^{n}, \hat{N}^{n} \rangle _t = n^{-1} \sum _{k=1}^{\lfloor nt \rfloor } \left( P^{(a)}_k + P^{(b)}_k + P^{(c)}_k + P^{(d)}_k \right) \end{aligned}$$and study the asymptotic behaviour of these four terms individually. In that direction, we recall from (2.4) and (2.8) the identities \(\mathbb {E}(\hat{X}_k \mid {\mathcal {F}}_{k-1}) = p \hat{S}_{k-1}/(k-1)\), \(\mathbb {E}(\check{X}_k \mid {\mathcal {F}}_{k-1}) = -p \check{S}_{k-1}/(k-1)\) as well as the asymptotic behaviour \((\widehat{a}_k - \widehat{a}_{k-1}) \sim - p k^{-(p+1)}\) and \((\check{a}_k - \check{a}_{k-1}) \sim p k^{p-1}\).

-

We first show that

$$\begin{aligned} \lim _{n \rightarrow \infty } n^{-1} \sum _{k=1}^{ \lfloor nt \rfloor } P_k^{(c)}=0 \quad \text {a.s.}. \end{aligned}$$From the identities and asymptotic estimates we just recalled, we have

$$\begin{aligned} \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} ) \mathbb {E}(\hat{X}_k \mid {\mathcal {F}}_{k-1}) \widehat{a}_k = \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} )p \frac{\hat{S}_{k-1}}{k-1} \widehat{a}_k \sim \frac{\check{S}_{k-1}}{k} k^p p^2 \frac{\hat{S}_{k-1}}{k-1} \widehat{a}_k \end{aligned}$$and since \(\widehat{a}_k \sim k^{-p}\), we have for some constant C large enough that

$$\begin{aligned} n^{-1} \biggl | \sum _{k=1}^{\lfloor nt \rfloor } P_k^{(c)} \biggr | \le n^{-1} C \sum _{k=1}^{\lfloor nt \rfloor } \biggl | \frac{\check{S}_{k-1} }{k} \frac{\hat{S}_{k-1} }{k-1} \biggr |. \end{aligned}$$By Proposition 1.1, the summands on the right-hand side converge a.s. to 0, hence so do their Cesàro means, and the right-hand side converges a.s. towards 0 as \(n \uparrow \infty \).

-

Next, since

$$\begin{aligned} \hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} ) \mathbb {E}(\check{X}_k \mid {\mathcal {F}}_{k-1}) \check{a}_k = -\hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} )p \frac{\check{S}_{k-1}}{k-1} \check{a}_k \sim \frac{\hat{S}_{k-1}}{k} k^{-p} p^2 \frac{\check{S}_{k-1}}{k-1} \check{a}_k \end{aligned}$$we can follow exactly the same line of reasoning in order to establish

$$\begin{aligned} \lim _{n \rightarrow \infty } n^{-1} \sum _{k=1}^{ \lfloor nt \rfloor } P_k^{(b)}=0 \quad \text {a.s.}. \end{aligned}$$ -

Since

$$\begin{aligned} (\widehat{a}_k - \widehat{a}_{k-1})(\check{a}_k - \check{a}_{k-1}) \sim - p^2 k^{-2}, \end{aligned}$$we deduce that

$$\begin{aligned} \hat{S}_{k-1} ( \widehat{a}_k - \widehat{a}_{k-1} ) \check{S}_{k-1} ( \check{a}_k - \check{a}_{k-1} ) \sim \hat{S}_{k-1} \check{S}_{k-1} (-p^2) k^{-2} \end{aligned}$$and we conclude as before that we have:

$$\begin{aligned} \lim _{n \rightarrow \infty } n^{-1} \sum _{k=1}^{ \lfloor nt \rfloor } P_k^{(a)}=0 \quad \text {a.s.}. \end{aligned}$$ -

Finally, since by definition

$$\begin{aligned} \hat{X}_k = X_k {\mathbf {1}}_{\{ \epsilon _k = 0 \}} + \hat{X}_{U[k-1]}{\mathbf {1}}_{\{ \epsilon _k = 1 \}}, \qquad \check{X}_k = X_k {\mathbf {1}}_{\{ \epsilon _k = 0 \}} - \check{X}_{U[k-1]}{\mathbf {1}}_{\{ \epsilon _k = 1 \}} \end{aligned}$$we have

$$\begin{aligned} \widehat{a}_k \check{a}_k E ( \check{X}_k \hat{X}_k \mid {\mathcal {F}}_{k-1})&= \widehat{a}_k \check{a}_k E ( {X}_k ^2 {\mathbf {1}}_{\{ \epsilon _k = 0 \}} \mid {\mathcal {F}}_{k-1})\\&\quad - \widehat{a}_k \check{a}_k E ( \check{X}_{U[k-1]} \hat{X}_{U[k-1]} {\mathbf {1}}_{\{ \epsilon _k = 1 \}} \mid {\mathcal {F}}_{k-1}) \\&= \widehat{a}_k \check{a}_k (1-p) \sigma ^2 - \widehat{a}_k \check{a}_k \sum _{j=1}^{k-1} E ( \check{X}_{j} \hat{X}_{j} {\mathbf {1}}_{\{ \epsilon _k = 1 , U[k-1] = j \}} \mid {\mathcal {F}}_{k-1}). \end{aligned}$$Now, \(\check{X}_j,\hat{X}_j\) for \(j < k\) are \({\mathcal {F}}_{k-1}\)-measurable while \(\epsilon _k, U[k-1]\) are independent of \({\mathcal {F}}_{k-1}\). Moreover, the products \(\check{X}_j\hat{X}_j\), \(j \ge 1\), are the steps of the counterbalanced random walk \(\check{G}\) made from the i.i.d. sequence \(X^2_1, X^2_2, \dots \) with the same instance of the reinforcement algorithm (on \(\{\epsilon _j = 0\}\) the product equals \(X_j^2\), and on \(\{\epsilon _j = 1\}\) it equals \(-\check{X}_{U[j-1]}\hat{X}_{U[j-1]}\)). We deduce

$$\begin{aligned} P^{(d)}_k = \widehat{a}_k \check{a}_k \left( (1-p) \sigma ^2 -\frac{1}{k-1} p \sum _{j=1}^{k-1} \check{X}_{j} \hat{X}_{j} \right) = \widehat{a}_k \check{a}_k \left( (1-p) \sigma ^2 - p \frac{\check{G}(k-1)}{k-1}\right) \end{aligned}$$and since \(\hat{a}_k \check{a}_k \rightarrow 1\) as \(k \rightarrow \infty \), the problem boils down to studying the convergence as \(n \uparrow \infty \) of

$$\begin{aligned} \frac{1}{n} \sum _{k=1}^{\lfloor nt\rfloor } \left( (1-p)\sigma ^2 - p\frac{\check{G}{(k-1)}}{k-1} \right) . \end{aligned}$$The first term obviously converges towards \(t(1-p) \sigma ^2\) and we turn our attention to the second one. Now, by Theorem 1.1 applied to \(\check{G}\) we get:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{p}{n}\sum _{k=1}^{\lfloor nt \rfloor } \frac{\check{G}(k-1)}{k} = p \sigma ^2 t \frac{1-p}{1+p} \quad \text { a.s.} \end{aligned}$$and we conclude that the following convergence holds almost surely:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n} \sum _{k=1}^{\lfloor nt \rfloor } P_k^{(d)} = t(1-p) \sigma ^2 - p \sigma ^2 t\frac{1-p}{1+p}. \end{aligned}$$

Bringing all our calculations above together we deduce the following almost sure convergence:

$$\begin{aligned} \lim _{n \rightarrow \infty } \langle \hat{N}^n, \check{N}^{n} \rangle _t = \sigma ^2 (1-p) t - p \sigma ^2 (1-p)(1+p)^{-1} t. \end{aligned}$$ -

This concludes the proof of the lemma. \(\square \)
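As a sanity check on Lemma 3.2, one can compute the exact second moments of \((S_n, \hat{S}_n, \check{S}_n)\) for centred steps. Using \(\mathbb {E}(\hat{X}_{n+1} \mid {\mathcal {F}}_n) = p\hat{S}_n/n\), \(\mathbb {E}(\check{X}_{n+1} \mid {\mathcal {F}}_n) = -p\check{S}_n/n\), \(\mathbb {E}(X_{n+1}\hat{X}_{n+1}) = \mathbb {E}(X_{n+1}\check{X}_{n+1}) = (1-p)\sigma ^2\) and, from item (v), \(\mathbb {E}(\hat{X}_{n+1}\check{X}_{n+1}) = (1-p)\sigma ^2 - p\,\mathbb {E}(\check{G}(n))/n\), where \(\mathbb {E}(\check{G}(n)) = \mathbb {E}(\hat{S}_n\check{S}_n)\) because the cross terms \(\pm p\hat{S}_n\check{S}_n/n\) cancel, each entry satisfies a linear recursion. These recursions are our own elementary derivation, not displays from the paper, and the helper names are hypothetical. Divided by n, the entries should approach the values at \(t = 1\) of the limits in Lemma 3.2 (i)–(v), namely \(\sigma ^2/(1-2p)\), \(\sigma ^2/(1+2p)\), \(\sigma ^2\), \(\sigma ^2(1-p)/(1+p)\) and \(\sigma ^2(1-p)/(1+p)\), together with \(\sigma ^2\) for the plain walk.

```python
def covariance_matrix(n, p, sigma2=1.0):
    """Exact E of pairwise products of (S_n, S_hat_n, S_check_n), centred steps, divided by n."""
    # At time 1 all three walks equal X_1, so every entry starts at sigma2.
    v_s = v_h = v_c = c_sh = c_sc = c_hc = sigma2
    for k in range(1, n):  # advance from time k to time k + 1
        v_s += sigma2
        v_h = (1 + 2 * p / k) * v_h + sigma2
        v_c = (1 - 2 * p / k) * v_c + sigma2
        c_sh = (1 + p / k) * c_sh + (1 - p) * sigma2
        c_sc = (1 - p / k) * c_sc + (1 - p) * sigma2
        c_hc = (1 - p / k) * c_hc + (1 - p) * sigma2  # uses E(G(k)) = E(S_hat_k S_check_k)
    return [[v_s / n, c_sh / n, c_sc / n],
            [c_sh / n, v_h / n, c_hc / n],
            [c_sc / n, c_hc / n, v_c / n]]


def limit_matrix(p, sigma2=1.0):
    """Values at t = 1 of the limits in Lemma 3.2, ordered as (S, S_hat, S_check)."""
    r = sigma2 * (1 - p) / (1 + p)
    return [[sigma2, sigma2, r],
            [sigma2, sigma2 / (1 - 2 * p), r],
            [r, r, sigma2 / (1 + 2 * p)]]


p = 0.3
got, want = covariance_matrix(100_000, p), limit_matrix(p)
for row_got, row_want in zip(got, want):
    print([round(x, 3) for x in row_got], [round(x, 3) for x in row_want])
```

The slowest entry is the variance of \(\hat{S}_n/\sqrt{n}\), whose correction is of order \(n^{2p-1}\); the other entries converge at rate \(1/n\) or faster.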

With this, we conclude the proof of Theorem 1.2 when X is bounded by appealing to Lemma 3.1, Lemma 3.2 and the MFCLT (Theorem 1.6).

4 Reduction to the Case of Bounded Steps

In this section, we shall only assume that the typical step \(X \in L^2(\mathbb {P})\) of the step-reinforced random walk \(\hat{S}\) is centred, and no longer that it is bounded. We shall complete the proof of Theorem 1.2 by means of a truncation argument reminiscent of the one in Section 4.3 of [1].

4.1 Preliminaries

The reduction argument relies on the following lemma taken from [25], which we state for the reader’s convenience:

Lemma 4.1

(Lemma 3.31 in Chapter VI of [25]) Let \((Z^n)\) be a sequence of d-dimensional rcll (càdlàg) processes and suppose that

If \((Y^n)\) is another sequence of d-dimensional rcll processes with \(Y^n \Rightarrow Y\) in the sense of Skorokhod, then \(Y^n + Z^n \Rightarrow Y\) in the sense of Skorokhod.

We will also need the following lemma concerning convergence in metric spaces:

Lemma 4.2

Let (E, d) be a metric space and consider \((a_n^{(m)} \, : \, m,n \in \mathbb {N})\) a family of sequences, with \(a_n^{(m)} \in E\) for all \(n, m \in \mathbb {N}\). Suppose further that the following conditions are satisfied:

(i) For each fixed m, \(a_n^{(m)} {\rightarrow } a_\infty ^{(m)}\) as \(n \uparrow \infty \) for some element \(a_\infty ^{(m)} \in E\).

(ii) \(a_\infty ^{(m)} {\rightarrow } a_{\infty }^{(\infty )}\) as \(m \uparrow \infty \), for some \(a_\infty ^{(\infty )} \in E\).

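Then there exists a non-decreasing sequence of integers \((b(n))_{n}\) with \(b(n) \rightarrow \infty \) as \(n \uparrow \infty \), for which the following convergence holds:

$$\begin{aligned} \lim _{n \rightarrow \infty } a_n^{(b(n))} = a_\infty ^{(\infty )}. \end{aligned}$$
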
Proof

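Since the sequence \((a_\infty ^{(m)})_m\) converges, we can find a strictly increasing sequence of integers \(m_1 < m_2 < \dots \) satisfying

$$\begin{aligned} d\big (a_\infty ^{(m_k)}, a_\infty ^{(\infty )}\big ) \le \frac{1}{k}, \qquad k \ge 1. \end{aligned}$$

Moreover, since for each fixed \(m_k\) the corresponding sequence \((a_n^{(m_k)})_n\) converges, there exists a strictly increasing sequence \((n_k)_k\) satisfying that, for each k,

$$\begin{aligned} d\big (a_n^{(m_k)}, a_\infty ^{(m_k)}\big ) \le \frac{1}{k} \qquad \text {for all } n \ge n_k. \end{aligned}$$

Now, we set \(b(n) := m_1\) for \(n < n_1\) and, for \(k \ge 1\), \(b(n) := m_k\) if \(n_k\le n < n_{k+1}\); then b is non-decreasing with \(b(n) \rightarrow \infty \), and we claim that \((a_n^{(b(n))})_n\) is the desired sequence. Indeed, it suffices to observe that for \(n_k \le n < n_{k+1}\), by the triangle inequality,

$$\begin{aligned} d\big (a_n^{(b(n))}, a_\infty ^{(\infty )}\big ) \le d\big (a_n^{(m_k)}, a_\infty ^{(m_k)}\big ) + d\big (a_\infty ^{(m_k)}, a_\infty ^{(\infty )}\big ) \le \frac{2}{k}, \end{aligned}$$

and k tends to infinity with n. \(\square \)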

4.2 Reduction Argument

Recall that we are assuming that the typical step is centred. During the course of this section we will use that the truncated versions of the counterbalanced and noise reinforced random walks are still counterbalanced (resp. noise reinforced) random walks.

Indeed, notice that if \((\check{S}_n)\) and \((\hat{S}_n)\) have been built from the i.i.d. sequence \((X_n)_{n \ge 1}\) by means of the negative-reinforcement and positive-reinforcement algorithms described in the introduction, splitting each \(X_i\) for \(i \in \mathbb {N}\) as

where respectively,

yields a natural decomposition of \((\check{S}_n)\) and \((\hat{S}_n)\) in terms of two counterbalanced (resp. noise reinforced) random walks:

where now \((\check{S}^{ \le K}_n)\), \((\check{S}^{> K}_n )\) are counterbalanced versions with typical step centred and distributed respectively as

and

an analogous statement holding in the reinforced case for \((\hat{S}^{ \le K}_n)\), \((\hat{S}^{> K}_n )\). Moreover, \(X^{\le K}\) is centred with variance \({\sigma }^2_K\), and \(\sigma ^2_K \rightarrow \sigma ^2\) as \(K \uparrow \infty \), while the variance of \(X^{> K}\), which we denote by \(\eta ^2_K\), converges towards zero as \(K \uparrow \infty \). We will also write the respective truncated random walk as

Notice that \((S^{\le K})\), \((\hat{S}^{ \le K}_n)\) and \((\check{S}^{ \le K}_n)\) now have bounded steps, allowing us to apply the result established in Sect. 3 to this triplet.

Remark 4.3

We point out that while \((\hat{S}_n^{\le K})\) can simply be obtained by considering the NRRW made from the steps \(X_i 1_{\{ |X_i| \le K \}}\), \(i \ge 1\), and subtracting \(n \mathbb {E}(X 1_{\{ |X| \le K \}})\) at the n-th step for each \(n\ge 1\), hence yielding a NRRW with steps given by

for the counterbalanced case we need to subtract the counterbalanced random walk issued from the constants \(\mathbb {E}( X_i 1_{\{|X_i| \le K \}})\), \(i \ge 1\), which, in contrast with the reinforced case, is a process in its own right because of the sign changes.
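For a concrete picture of the truncation, the Python sketch below computes, for a finitely supported centred law, the variance \(\sigma _K^2\) of \(X^{\le K} = X 1_{\{|X| \le K\}} - \mathbb {E}(X 1_{\{|X| \le K\}})\) (the form suggested by Remark 4.3) and the variance \(\eta _K^2\) of \(X^{>K} = X - X^{\le K} = X 1_{\{|X| > K\}} - \mathbb {E}(X 1_{\{|X| > K\}})\), the latter identity using that X is centred. The law and the helper names are arbitrary illustrative choices. Writing \(\mu _K = \mathbb {E}(X 1_{\{|X| \le K\}})\), the two pieces have covariance \(\mu _K^2\), so \(\sigma ^2 = \sigma _K^2 + \eta _K^2 + 2\mu _K^2\); exact rational arithmetic lets us check this identity and see \(\sigma _K^2 \rightarrow \sigma ^2\), \(\eta _K^2 \rightarrow 0\) (attained here as soon as K exceeds the largest atom).

```python
from fractions import Fraction

# Illustrative centred law: P(X=-3)=1/5, P(X=-1)=9/20, P(X=2)=3/10, P(X=9)=1/20.
LAW = {-3: Fraction(1, 5), -1: Fraction(9, 20), 2: Fraction(3, 10), 9: Fraction(1, 20)}


def centred_piece(law, keep):
    """Return (E(X 1{keep(X)}), Var(X 1{keep(X)})); centring does not change the variance."""
    mean = sum(q * x for x, q in law.items() if keep(x))
    second = sum(q * x * x for x, q in law.items() if keep(x))
    return mean, second - mean ** 2


def truncation(law, K):
    """Return (mu_K, sigma_K^2, eta_K^2) for the split X = X^{<=K} + X^{>K}."""
    mu_k, sigma2_k = centred_piece(law, lambda x: abs(x) <= K)
    _, eta2_k = centred_piece(law, lambda x: abs(x) > K)
    return mu_k, sigma2_k, eta2_k


sigma2 = sum(q * x * x for x, q in LAW.items())  # E(X^2) = 15/2, since E(X) = 0
for K in (1, Fraction(5, 2), 5, 9):
    mu_k, s2, e2 = truncation(LAW, K)
    # the pieces are correlated, so the variances add up only after the 2 mu_K^2 correction
    print(K, s2, e2, s2 + e2 + 2 * mu_k ** 2 == sigma2)
```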

For each K, write \(N^{n,\le K}\), \(\hat{N}^{n,\le K}\) and \(\check{N}^{n,\le K}\) for the corresponding martingales, as defined in (3.1), relative to \(S^{\le K}\), \(\hat{S}^{\le K}\) and \(\check{S}^{\le K}\) respectively. An application of Theorem 1.2 in the bounded case yields, for every K, that

Recalling the asymptotic behaviour \({n^p}{ \widehat{a}_{\lfloor nt \rfloor }} \sim t^{-p} \text { as } n \rightarrow \infty \) and the definition of \(N^{n, \le K}\), we deduce that

Since, as \(K \uparrow \infty \), the right-hand side converges weakly towards \((\sigma B_t, \sigma t^p \int _0^t s^{-p} d B^r_s , \sigma \int _0^t s^{p} dB^c_s)\) and convergence in distribution is metrisable, by Lemma 4.2 there exists a sequence \((K(n): n \ge 1)\) increasing slowly towards infinity such that, as \(n \uparrow \infty \),

On the other hand, for each n we can clearly decompose

and, in order to apply Lemma 4.1, we need the following lemma:

Lemma 4.4

For any sequence \((K(n): n \ge 1)\) increasing towards infinity, the following limits hold for every \(t>0\) and \(T>0\):

- (i) \(\displaystyle \lim _{n \rightarrow \infty } \frac{1}{n} \mathbb {E}\left( \sup _{k \le nt} \left| {S}^{> K(n)}_k\right| ^2 \right) = 0.\)
- (ii) \(\displaystyle \lim _{n \rightarrow \infty } \frac{1}{n} \mathbb {E}\left( \sup _{k \le nt} \left| \hat{S}^{> K(n)}_k \right| ^2 \right) = 0, \qquad \text {for } p \in (0,1/2).\)
- (iii) \(\displaystyle \lim _{n \rightarrow \infty } \mathbb {P}\left( \sup _{s \le T} \left| \check{N}_s^{n, >K(n)} \right| \ge \epsilon \right) = 0,\) for every \(\epsilon >0\) and \(p \in (0,1).\)

Proof

Recall that we denoted by \(\eta ^2_K\) the variance of \(X^{> K}\).

(i) By Doob’s inequality and the independence of the steps, we immediately get that

$$\begin{aligned} \frac{1}{n} \mathbb {E}\left( \sup _{k \le nt} |{S}^{> K(n)}_k|^2 \right) \le \frac{4}{n}\eta ^2_{K(n)} {\lfloor nt \rfloor } \le 4t\, \eta ^2_{K(n)}, \end{aligned}$$

which converges towards 0 as \(n \uparrow \infty \).

(ii) For \(0<p<1/2\), we deduce from Lemma 2.4 that, for any \(t>0\) and some constant \(c_1\),

$$\begin{aligned} \limsup _{n \rightarrow \infty } \frac{1}{n} \mathbb {E}\left( \sup _{k \le nt} | \hat{S}^{>K(n)}_k|^2 \right)&\le c_1 \lim _{n \rightarrow \infty } \eta _{K(n)}^2 t =0, \end{aligned}\tag{4.3}$$

which proves the claim.

(iii) Doob’s maximal inequality yields

$$\begin{aligned} \mathbb {P}\left( \sup _{s \le T} |\check{N}^{n,> K(n) }_s| \ge \epsilon \right) \le \epsilon ^{-2} \mathbb {E}\left( \langle \check{N}^{n,> K(n) }, \check{N}^{n,> K(n) } \rangle _T \right) , \end{aligned}$$

and, if we denote by \(\hat{V}^{> K(n)}\) the sum of squared steps associated to \((S^{>K(n)})\), notice that

$$\begin{aligned}&\langle \check{N}^{n,> K(n) }, \check{N}^{n,> K(n) } \rangle _T \\&\quad \le \frac{1}{n^{1+2p}} \left( \eta ^2_{K(n)}+ \sum _{k=2}^{\lfloor nT \rfloor } \check{a}^2_k \left( (1-p) \eta ^2_{K(n)} + p \frac{\hat{V}^{> K(n)}_{k-1}}{k-1} \right) \right) . \end{aligned}$$

Recalling that \(\mathbb {E}(\hat{V}_{k-1}^{> K(n)}) = (k-1)\eta _{K(n)}^2\), this yields the bound

$$\begin{aligned} \mathbb {P}\left( \sup _{s \le T} |\check{N}^{n,> K(n) }_s| \ge \epsilon \right) \le \epsilon ^{-2} \eta _{K(n)}^2 \frac{1}{n^{1+2p}} \left( 1+ \sum _{k=2}^{\lfloor nT \rfloor }\check{a}^2_k \right) . \end{aligned}$$

Since, on the one hand, \(\eta ^2_{K(n)} \rightarrow 0\) as \(n \uparrow \infty \) while, on the other hand, by (2.12),

$$\begin{aligned} \limsup _{n \uparrow \infty } n^{-(1+2p)} \sum _{k=2}^{\lfloor nT \rfloor } \check{a}_k^2 < \infty , \end{aligned}$$

the desired convergence follows.

This concludes the proof of the lemma. \(\square \)
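Part (i) rests on Doob’s \(L^2\) maximal inequality, \(\mathbb {E}(\sup _{k\le m}|S_k|^2)\le 4\,\mathbb {E}(S_m^2)=4m\eta ^2\), for a walk with independent centred steps of variance \(\eta ^2\). A quick Monte Carlo sketch in Python estimates the ratio \(\mathbb {E}(\sup _{k\le m}S_k^2)/(m\eta ^2)\); it is only an illustration, and the uniform step law, the sample sizes and the helper name `doob_ratio` are arbitrary choices of ours.

```python
import random

def doob_ratio(m: int, trials: int, rng: random.Random) -> float:
    """Monte Carlo estimate of E(max_{k<=m} S_k^2) / (m * eta^2) for a walk
    with independent centred steps, uniform on [-1, 1] (so eta^2 = 1/3)."""
    eta2 = 1.0 / 3.0
    acc = 0.0
    for _ in range(trials):
        s = running_max = 0.0
        for _ in range(m):
            s += rng.uniform(-1.0, 1.0)
            running_max = max(running_max, s * s)
        acc += running_max
    return acc / (trials * m * eta2)

ratio = doob_ratio(m=500, trials=2000, rng=random.Random(7))
print(ratio)   # the exact ratio is at most 4 by Doob's inequality
```

The estimate lands well below the constant 4. For comparison, in the Brownian limit the corresponding quantity \(\mathbb {E}(\sup _{t\le 1}B_t^2)\) equals \(2G\approx 1.83\), where G is Catalan's constant.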

Now, recalling the definition of \(\check{N}^{n}\), we deduce from Lemma 4.1 that as \(n \uparrow \infty \),

and since \(b_{\lfloor nt \rfloor }/ n^{p} \sim t^{p}\), we conclude that for any \(\delta >0\), the desired convergence

holds away from the origin (this restriction is due to the fact that \(t^{-p}\) is unbounded on any neighbourhood of 0). In order to obtain the convergence on \(\mathbb {R}^+\), and thereby prove the convergence claimed in Theorem 1.2, we proceed as follows. We work only with the third coordinate, since it is the only one presenting a difficulty; the argument is readily adapted to the triplet. Assume without loss of generality that \(\sigma ^2 = 1\), fix \(\delta >0\) and consider the partition of \([0,\delta ]\) with points \(\{ \delta 2^{-i} :\, i = 0, 1, 2, \dots \}\). Since the sequence \((\check{a}_k)\) is increasing, we obtain,

Denoting as usual by \((\check{M}_n)\) the martingale \((\check{a}_n\check{S}_n)_{n \ge 0}\), notice that by (2.3), the remark that follows, and (2.12),

for some constant c that may change from one inequality to the next. By Doob’s inequality, we deduce

which, recalling the asymptotic behaviour \(\check{a}_n \sim n^p\), yields, for some constant c that may differ from line to line:

From the previous estimate, we deduce the uniform bound

Finally, write \(X^{(n)} = (\frac{1}{\sqrt{n}} \check{S}_{\lfloor nt \rfloor })_{t \in \mathbb {R}^+}\). Since, for any \(\delta >0\), we have \((X^{(n)}_t)_{t \ge \delta } \Rightarrow (\check{B}_{t})_{t \ge \delta }\) as \(n \uparrow \infty \) and, of course, \((\check{B}_{t +\delta })_{t \in \mathbb {R}^+} \Rightarrow (\check{B}_{t })_{t \in \mathbb {R}^+}\) as \(\delta \downarrow 0\), we deduce that there exists a decreasing sequence \((\delta (n)) \downarrow 0\) such that

while by (4.4),

This establishes that the convergence \(\left( \frac{1}{\sqrt{n}} \check{S}_{\lfloor nt \rfloor }\right) _{t \in \mathbb {R}^+} \Rightarrow \check{B}\) holds on \(\mathbb {R}^+\) and with this, we conclude our proof of Theorem 1.2.
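The argument above, like Lemma 4.4 (iii), uses only elementary properties of \((\check{a}_n)\): it is increasing, \(\check{a}_n \sim n^p\), and \(n^{-(1+2p)}\sum _{k\le n}\check{a}_k^2\) stays bounded. As a numerical sketch, assume the normalisation \(\check{a}_n=\Gamma (n)/\Gamma (n-p)\). It satisfies \(\check{a}_{n+1}=\check{a}_n\, n/(n-p)\), the factor compensating the drift \(\mathbb {E}(\check{S}_{n+1}\mid \mathcal {F}_n)=(1-p/n)\check{S}_n\) of the centred counterbalanced walk, and \(\check{a}_n\sim n^p\). The definition in Section 2 may differ by a constant factor, which does not affect these properties; the helper name `check_a` is ours.

```python
import math

def check_a(n: int, p: float) -> list:
    """Return [a_1, ..., a_n] for the assumed normalisation
    a_k = Gamma(k) / Gamma(k - p), built through a_{k+1} = a_k * k / (k - p)."""
    a = [1.0 / math.gamma(1.0 - p)]      # a_1 = Gamma(1) / Gamma(1 - p)
    for k in range(1, n):
        a.append(a[-1] * k / (k - p))
    return a

p, n = 0.3, 10**5
a = check_a(n, p)
increasing = all(x < y for x, y in zip(a, a[1:]))
scaled_sum = sum(v * v for v in a[1:]) / n ** (1 + 2 * p)   # sum over k = 2..n
tail_ratio = a[-1] / n ** p                                   # a_n / n^p
print(increasing, scaled_sum, 1 / (1 + 2 * p), tail_ratio)
```

The scaled sum approaches \(1/(1+2p)\), consistent with \(\sum _{k\le n} k^{2p}\sim n^{1+2p}/(1+2p)\), and `tail_ratio` is close to 1.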

Remark 4.5

In the course of proving Theorem 1.2 in Sections 3 and 4, we also showed that, if we no longer consider the noise-reinforced random walk, the convergence of the pair extends to \(p \in (0,1)\),

where, as usual, \(B^c\) and B are two Brownian motions with \(\langle B,B^c \rangle _t = (1-p) t\); moreover, the result still holds for \(p = 1\) if X follows the Rademacher distribution, in which case the processes are independent. This is precisely the content of Theorem 1.4. Finally, Theorem 1.3 also follows by recalling that \(\hat{S}_n - n \mathbb {E}(X)\) is a centred positive step-reinforced random walk and hence falls within our framework.
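The covariance structure of the limits can be cross-checked with exact second moments. The following Python sketch rests on our own derivation, not on the paper: it assumes centred, unit-variance steps, that all three walks start from \(X_1\), and that at each later step the reinforced walks take, with probability \(1-p\), the same new step \(X_{n+1}\) as S (the coupling described in the abstract), and otherwise repeat a uniformly chosen earlier step of their own (with a sign change for \(\check{S}\)). Conditioning on \(\mathcal {F}_n\) gives \(\mathbb {E}(\hat{S}_{n+1}^2)=(1+2p/n)\mathbb {E}(\hat{S}_n^2)+1\), \(\mathbb {E}(\check{S}_{n+1}^2)=(1-2p/n)\mathbb {E}(\check{S}_n^2)+1\) and \(\mathbb {E}(S_{n+1}\check{S}_{n+1})=(1-p/n)\mathbb {E}(S_n\check{S}_n)+(1-p)\). After division by n, the limits should be \(1/(1-2p)\) (only for \(p<1/2\)), \(1/(1+2p)\) and \((1-p)/(1+p)\). Taking the noise counterbalanced Brownian motion to be \(t^{-p}\int _0^t s^p\,dB^c_s\) (an assumption on the form of the limit), these are the values at \(t=1\) of \(\mathrm {Var}(t^p\int _0^t s^{-p}dB^r_s)\), \(\mathrm {Var}(t^{-p}\int _0^t s^p dB^c_s)\) and \(\mathrm {Cov}(B_t, t^{-p}\int _0^t s^p dB^c_s)\) when \(\langle B,B^c\rangle _t=(1-p)t\). The helper name `moment_ratios` is ours.

```python
def moment_ratios(p: float, n: int) -> dict:
    """Iterate the exact second-moment recursions (centred, unit-variance
    steps) up to time n and return each moment divided by n."""
    v_hat = v_check = cov = 1.0          # S_1 = hat S_1 = check S_1 = X_1
    for k in range(1, n):                # from time k to time k + 1
        v_hat = (1.0 + 2.0 * p / k) * v_hat + 1.0
        v_check = (1.0 - 2.0 * p / k) * v_check + 1.0
        cov = (1.0 - p / k) * cov + (1.0 - p)
    return {"var_hat": v_hat / n, "var_check": v_check / n, "cov_S_check": cov / n}

results = {p: moment_ratios(p, 10**6) for p in (0.1, 0.3)}
results_high = moment_ratios(0.8, 10**5)      # var_hat / n diverges when p >= 1/2
uncorrelated = moment_ratios(1.0, 10)["cov_S_check"]
for p, r in results.items():
    print(p, r["var_hat"], 1 / (1 - 2 * p), r["var_check"], 1 / (1 + 2 * p),
          r["cov_S_check"], (1 - p) / (1 + p))
print(0.8, results_high["var_check"], results_high["cov_S_check"], uncorrelated)
```

For \(p=1\) the covariance recursion gives \(\mathbb {E}(S_n\check{S}_n)=0\) for every \(n\ge 2\), in line with the independence of the limiting processes noted above in the Rademacher case.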

5 The Critical Regime for the Positive-Reinforced Case: Proof of Theorem 1.5

In this last section, we turn our attention to the critical regime \(p=1/2\) for the noise-reinforced case and prove the invariance principle with our martingale approach. The arguments are very similar to those of the previous sections and rely on the martingale defined in Proposition 2.1, the MFCLT and a truncation argument. The main difference is that, for \(p=1/2\), the asymptotic behaviour of \(\sum _{k=1}^n \widehat{a}_k^{2}\) is no longer the one claimed in (2.10). Namely, as we pointed out previously,

and the different scaling that we will use makes it impossible to couple this convergence with that of the random walk or of the counterbalanced random walk. Once again, we start with a law of large numbers-type result:

Lemma 5.1

Suppose \(\Vert X\Vert _\infty < \infty \). We have the almost sure convergence

and a fortiori we have \(\lim _{n \rightarrow \infty } n^{-1} {\hat{S}_n}=0 \quad \text {a.s.}\)

Proof

The proof of this statement follows along the same lines as the proof of Corollary 2.3. We obtain again from Theorem 1.3.24 in [26] that

Hence, as \(\hat{M}_n= \widehat{a}_n \hat{S}_n\), the above readily implies that

Furthermore, (2.11) shows that, for \(p=1/2\), \( \lim _{n \rightarrow \infty } n\, \widehat{a}_n^2 = 1 \), and hence we obtain

which immediately implies the claim. \(\square \)
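A short simulation illustrates the a fortiori statement of Lemma 5.1; it is not part of the proof. The Python sketch below runs the positively step-reinforced walk at the critical value \(p=1/2\) with Rademacher steps, a bounded and centred step law as in the lemma. The helper name `nrrw_endpoint`, the seed and the sample sizes are our own choices. For unit-variance steps at \(p=1/2\), the second moment of \(\hat{S}_n\) is of order \(n\log n\), so \(\hat{S}_n/n\) is typically of size \(\sqrt{\log n/n}\).

```python
import math
import random

def nrrw_endpoint(n: int, p: float, rng: random.Random) -> int:
    """Simulate hat S_n for the positively step-reinforced walk with
    Rademacher steps: with probability p repeat a uniformly chosen past
    step, otherwise take a fresh +/-1 step."""
    steps = [rng.choice((-1, 1))]
    for k in range(1, n):
        if rng.random() < p:
            steps.append(steps[rng.randrange(k)])   # repeat one of the first k steps
        else:
            steps.append(rng.choice((-1, 1)))       # fresh innovation
    return sum(steps)

rng = random.Random(2021)
n = 100_000
ratios = [nrrw_endpoint(n, 0.5, rng) / n for _ in range(5)]
typical_size = math.sqrt(math.log(n) / n)   # about 0.011 for n = 100 000
print(ratios, typical_size)
```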

We now prove the invariance principle under the assumption that X is bounded.

Proof of Theorem 1.5 when \(\Vert X\Vert _\infty < \infty \)

The proof relies on ideas similar to those used in the proof of Theorem 1.3. Recalling that,

from the substitution \(k = \lfloor n^t \rfloor \), we deduce that

Then, the limit (1.12) can equivalently be shown by establishing the desired convergence towards \(B=(B_t)_{t \ge 0}\) for the following sequence of martingales:

Once again, we denote

and deduce, as before, that for each \(n \in \mathbb {N}\) the predictable quadratic variation of \(\hat{N}^{(n)}\) is given by

By the MFCLT, in order to prove our claim it suffices to show that

and that \(\sup _t |\Delta \hat{N}^{(n)}_t| \rightarrow 0\) in probability as \(n \rightarrow \infty \). Since \(\Vert X\Vert _\infty < \infty \), this last requirement follows from arguments very similar to those used in the proof of Theorem 1.3. On the other hand, since \(\log (\lfloor n^t \rfloor ) / \log (n) \rightarrow t\) as \(n \rightarrow \infty \), (2.11) shows that the first nontrivial term of (5.1) satisfies the following convergence

Arguing as in the proof of Theorem 1.3, but now using the law of large numbers for the critical regime (Lemma 5.1), we obtain that the second term in (5.1) converges to zero, while for the last term,

It follows that \(\langle \hat{N}^{(n)} , \hat{N}^{(n)} \rangle _t \rightarrow t { \sigma ^2 }\) for each t as \(n \rightarrow \infty \), which proves the desired result under the additional assumption that \(\Vert X\Vert _\infty < \infty \). \(\square \)
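The logarithmic time change \(k=\lfloor n^t\rfloor \) can be checked numerically. As a sketch, assume the normalisation \(\widehat{a}_n=\Gamma (n)/\Gamma (n+p)\), which satisfies \(\widehat{a}_{n+1}=\widehat{a}_n\, n/(n+p)\) and, for \(p=1/2\), \(n\widehat{a}_n^2\rightarrow 1\) as stated above from (2.11); the paper's exact definition may differ. The Python code verifies that \(\sum _{k\le \lfloor n^t\rfloor }\widehat{a}_k^2/\log n\) is close to t, which is the mechanism behind \(\langle \hat{N}^{(n)},\hat{N}^{(n)}\rangle _t\rightarrow t\sigma ^2\). It also checks that, for centred unit-variance steps, the recursion \(v_{m+1}=(1+1/m)v_m+1\), \(v_1=1\), for \(v_m=\mathbb {E}(\hat{S}_m^2)\) (our own derivation) gives exactly \(mH_m\), with \(H_m\) the m-th harmonic number. All helper names are ours.

```python
import math
from itertools import accumulate

def hat_a_squared(n: int, p: float = 0.5) -> list:
    """[a_1^2, ..., a_n^2] for the assumed normalisation a_k = Gamma(k) / Gamma(k + p),
    built with the recursion a_{k+1} = a_k * k / (k + p)."""
    a = 1.0 / math.gamma(1.0 + p)
    out = [a * a]
    for k in range(1, n):
        a *= k / (k + p)
        out.append(a * a)
    return out

def critical_second_moment(m: int) -> float:
    """E(hat S_m^2) for p = 1/2 and centred unit-variance steps, from
    v_{k+1} = (1 + 1/k) v_k + 1 with v_1 = 1."""
    v = 1.0
    for k in range(1, m):
        v = (1.0 + 1.0 / k) * v + 1.0
    return v

N = 10**6
sq = hat_a_squared(N)
prefix = list(accumulate(sq))            # prefix[m - 1] = sum_{k <= m} a_k^2
log_n = math.log(N)

# Each value is close to t, up to an additive O(1 / log N) error.
time_change = {t: prefix[math.floor(N ** t) - 1] / log_n for t in (0.25, 0.5, 1.0)}
# Increments between two times are much more accurate: close to 1 - 0.25 = 0.75.
increment = (prefix[N - 1] - prefix[math.floor(N ** 0.25) - 1]) / log_n
n_a_n_squared = N * sq[-1]               # close to 1
print(time_change, increment, n_a_n_squared)
print(critical_second_moment(1000), 1000 * sum(1.0 / j for j in range(1, 1001)))
```

The slow \(O(1/\log n)\) convergence of the individual values is why the increments, rather than the values themselves, are the more reliable numerical check.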

Now we establish the general case by means of the usual reduction argument. We will not be as detailed as before, since the ideas are exactly the same. We still assume, without loss of generality, that the steps are centred.

Proof of Theorem 1.5, general case

Maintaining the notation introduced in Section 4 for the truncated reinforced random walks, as well as for the respective variances \(\eta ^2_K\) and \(\sigma ^2_K\), \(K > 0\), Theorem 1.5 in the bounded-step case yields, for each \(K >0\), the following convergence in distribution in the sense of Skorokhod as n tends to infinity,

and since \(\lim _{K \rightarrow \infty } \sigma _K = \sigma \), it follows readily from (5.2) and the same arguments as before that, as n tends to infinity,

for some increasing sequence \((K(n))_{n \ge 0}\) of positive real numbers converging towards infinity. On the other hand, from Lemma 2.4 with \(p=1/2\), we deduce that

and from here we can proceed as we did in the previous section. With this, we conclude the proof of Theorem 1.5. \(\square \)

References

Bertoin, Jean: Universality of noise reinforced Brownian motions. Progress in Probability, vol. 77. Birkhäuser (2021). https://doi.org/10.1007/978-3-030-60754-8_7

Schütz, Gunter M., Trimper, Steffen: Elephants can always remember: Exact long-range memory effects in a non-Markovian random walk. Phys. Rev. E 70 (2004). https://doi.org/10.1103/PhysRevE.70.045101

Baur, Erich, Bertoin, Jean: Elephant random walks and their connection to Pólya-type urns. Phys. Rev. E 94 (2016). https://doi.org/10.1103/PhysRevE.94.052134

Bercu, Bernard: A martingale approach for the elephant random walk. J. Phys. A: Math. Theor. (2017). https://doi.org/10.1088/1751-8121/aa95a6

Bercu, Bernard, Laulin, Lucile: On the center of mass of the elephant random walk. Stochastic Processes and their Applications (2020). https://doi.org/10.1016/j.spa.2020.11.004

Bertenghi, Marco: Functional limit theorems for the multi-dimensional elephant random walk. Stochastic Models 38(1), 37–50 (2022). https://doi.org/10.1080/15326349.2021.1971092

Coletti, Cristian F., Gava, Renato, Schütz, Gunter M.: Central limit theorem and related results for the elephant random walk. J. Math. Phys. 58 (2017). https://doi.org/10.1063/1.4983566

Coletti, Cristian F., Gava, Renato, Schütz, Gunter M.: A strong invariance principle for the elephant random walk. J. Statist. Mech. 2017(12), 123207 (2017). https://doi.org/10.1088/1742-5468/aa9680

Coletti, Cristian F., Papageorgiou, Ioannis: Asymptotic analysis of the elephant random walk. J. Statist. Mech. 2021(1), 013205 (2021). https://doi.org/10.1088/1742-5468/abcd36

Vázquez Guevara, Víctor Hugo, Cruz Suárez, Hugo: An elephant random walk based strategy for improving learning (preprint). https://doi.org/10.13140/RG.2.2.10920.72960

Kubota, Naoki, Takei, Masato: Gaussian fluctuation for superdiffusive elephant random walks. J. Statist. Phys. 177, 1157–1171 (2019). https://doi.org/10.1007/s10955-019-02414-0

Kürsten, Rüdiger: Random recursive trees and the elephant random walk. Phys. Rev. E 93, 032111 (2016). https://doi.org/10.1103/PhysRevE.93.032111

Baur, Erich: On a class of random walks with reinforced memory. J. Statist. Phys. 181(3), 772–802 (2020). https://doi.org/10.1007/s10955-020-02602-3

Bercu, Bernard, Laulin, Lucile: On the multi-dimensional elephant random walk. J. Statist. Phys. 175(6), 1146–1163 (2019). https://doi.org/10.1007/s10955-019-02282-8

González-Navarrete, Manuel: Multidimensional walks with random tendency. J. Statist. Phys. 181, 1138–1148 (2020). https://doi.org/10.1007/s10955-020-02621-0

Janson, Svante: Functional limit theorems for multitype branching processes and generalized Pólya urns. Stochastic Processes and their Applications 110, 177–245 (2004). https://doi.org/10.1016/j.spa.2003.12.002

González-Navarrete, Manuel, Lambert, Rodrigo: Non-Markovian random walks with memory lapses. J. Math. Phys. 59(11), 113301 (2018). https://doi.org/10.1063/1.5033340

Businger, Silvia: The shark random swim. J. Statist. Phys. 172(3), 701–717 (2018). https://doi.org/10.1007/s10955-018-2062-5

Bertoin, Jean: Noise reinforcement for Lévy processes. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques 56(3), 2236–2252 (2020)

Bertoin, Jean: Scaling exponents of step-reinforced random walks. Probability Theory and Related Fields (2021). https://doi.org/10.1007/s00440-020-01008-2

Bai, Z.D., Hu, Feifang, Zhang, Li-Xin: Gaussian approximation theorems for urn models and their applications. Ann. Appl. Probab. 12(4), 1149–1173 (2002)

Bertenghi, Marco: Asymptotic normality of superdiffusive step-reinforced random walks. arXiv:2101.00906 (2021)

Whitt, Ward: Proofs of the martingale FCLT. Probab. Surveys 4, 268–302 (2007). https://doi.org/10.1214/07-PS122

Bertoin, Jean: Counterbalancing steps at random in a random walk. arXiv:2011.14069 (2020)

Jacod, Jean, Shiryaev, Albert N.: Limit Theorems for Stochastic Processes. Springer (2003). https://doi.org/10.1007/978-3-662-05265-5

Duflo, Marie: Random Iterative Models, vol. 34. Springer Science & Business Media (2013)

Acknowledgements