Abstract

We propose a field-theoretic thermodynamic uncertainty relation as an extension of the relation derived so far for Markovian dynamics on a discrete set of states and for overdamped Langevin equations. We first formulate a framework that describes quantities like current, entropy production and diffusivity for a generic field theory. We then apply this general setting to the one-dimensional Kardar–Parisi–Zhang equation, a paradigmatic example of a non-linear field-theoretic Langevin equation. In particular, we treat the dimensionless Kardar–Parisi–Zhang equation with an effective coupling parameter measuring the strength of the non-linearity. We show that the field-theoretic thermodynamic uncertainty relation holds up to second order in a perturbation expansion with respect to a small effective coupling constant. The calculations show that the field-theoretic variant of the thermodynamic uncertainty relation is not saturated for the Kardar–Parisi–Zhang equation due to an excess term stemming from its non-linearity.

1 Introduction

The thermodynamic uncertainty relation (TUR) in a non-equilibrium steady state (NESS) provides a bound on the entropy production in terms of mean and variance of an arbitrary current [1]. Specifically, in the NESS, after a time t a fluctuating integrated current X(t) has a mean \(\left\langle X(t)\right\rangle =j\,t\), and a diffusivity \(D=\lim _{t\rightarrow \infty }\left\langle (X(t)-j\,t)^2\right\rangle /(2\,t)\). With the entropy production rate \(\sigma \) the expectation of the total entropy production in the NESS is given by \(\sigma \,t\). These quantities satisfy the universal thermodynamic uncertainty relation

$$\begin{aligned} \sigma \ge \frac{j^2}{D}, \end{aligned}$$

i.e. \(\sigma \) is bounded from below by \(j^2/D\). The TUR has been proven for a Markovian dynamics on a general network by Gingrich et al. [2, 3] and further investigated for a number of different settings, both in the classical (see, e.g., [4,5,6,7,8,9,10,11,12,13,14,15]) and the quantum domain (see, e.g., [16,17,18,19,20,21,22]). It has led to a deeper understanding of systems far from equilibrium as it introduces a lower bound on the dissipation given the knowledge of the occurring fluctuations. Such a relation is of interest for the modeling and analysis of e.g. biomolecular processes, which may often be described as a Markov network (see e.g. [23,24,25]).

Of particular interest is the work by Gingrich et al. [8], where the authors extend the relation from mesoscopic Markov jump processes to overdamped Langevin equations. Here a temporal coarse-graining procedure is described, which allows the formulation of a discrete Markov jump process in terms of an overdamped Langevin equation for the mesoscopic states of the model. These authors observe that for purely dissipative dynamics the TUR is saturated. An additional spatial coarse-graining performed in [8] results in a macroscopic description, where it is found that the tightness of the resulting uncertainty relation increases with the strength of the Gaussian potential wells (see [8], fig. 9).
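The saturation for purely dissipative dynamics can be illustrated with a minimal numerical sketch. It assumes the simplest overdamped example, a particle with constant drift `v` and diffusion constant `D` (units with \(k_B=1\)), for which the entropy production rate is \(\sigma =v^2/D\). This toy model, the function name and all parameter values are illustrative assumptions and are not taken from [8].

```python
import numpy as np

def tur_ratio_drift_diffusion(v=1.5, D=0.7, t=50.0, n_traj=20000, seed=0):
    """Estimate j, D and sigma*D/j**2 for X(t) = v*t + sqrt(2*D)*W(t)."""
    rng = np.random.default_rng(seed)
    # Drifted Brownian motion is Gaussian, so X(t) can be sampled exactly.
    X = v * t + np.sqrt(2.0 * D * t) * rng.standard_normal(n_traj)
    j_est = X.mean() / t            # mean current j = <X(t)>/t
    D_est = X.var() / (2.0 * t)     # diffusivity from the variance of X(t)
    sigma = v**2 / D                # entropy production rate of the assumed model
    return j_est, D_est, sigma * D_est / j_est**2

j_est, D_est, ratio = tur_ratio_drift_diffusion()
print(f"j = {j_est:.3f}, D = {D_est:.3f}, sigma*D/j^2 = {ratio:.3f}")
```

Up to sampling error the ratio \(\sigma D/j^2\) is one, i.e. the bound \(\sigma \ge j^2/D\) holds with equality in this toy model.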

In this work, we present a field-theoretic equivalent to the TUR. Such a thermodynamic uncertainty relation for general field-theoretic Langevin equations may prove helpful in further understanding complex dynamics like turbulence in fluid flow or non-linear growth processes, described by the stochastic Navier–Stokes equation (e.g. [26]) or the Kardar–Parisi–Zhang equation [27], respectively. Both are prominent representatives of field-theoretic Langevin equations. For the latter, we highlight the recent progress concerning a study of the inward growth of interfaces in liquid crystal turbulence as an experimental realization [28]. On the theory side, analytic results on the effect of aging of two-time correlation functions for the interface growth were found [29]. Furthermore, we refer the reader to three review articles [30,31,32] concerning the latest developments around the Kardar–Parisi–Zhang universality class. Recently, ‘generalized TURs’ have been derived from fluctuation relations [33, 34]. For current-like observables, the original TUR [1] is stronger than the ‘generalized TURs’. Since we use such current-like observables in this manuscript, we focus on the original TUR.

The paper is organized as follows. In order to state a field-theoretic version of the thermodynamic uncertainty relation, we translate in Sect. 2 the notion of current, diffusivity and entropy production known from the setting of coupled Langevin equations to their respective equivalents for general field-theoretic Langevin equations. As an illustration of the generalizations introduced in Sect. 2, we will then study the one-dimensional Kardar–Parisi–Zhang (KPZ) equation as a paradigmatic example of such a field-theoretic Langevin equation. As the calculation of the current, diffusivity and entropy production in the NESS requires a solution to the KPZ equation, we will use spectral theory and construct an approximate solution in the weak-coupling regime of the KPZ equation in Sect. 3. With this approximation, we will then derive in Sect. 4 the thermodynamic uncertainty relation to quadratic order in the coupling parameter.

2 Thermodynamic Uncertainty Relation for a Field Theory

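In this section, we will present a generalization of the thermodynamic uncertainty relation introduced in [1] to a field theory. Consider a generic field theory of the form

$$\begin{aligned} \partial _t\varPhi _\gamma (\mathbf {r},t)=F_\gamma \left[ \{\varPhi _\mu (\mathbf {r},t)\}\right] +\eta _\gamma (\mathbf {r},t),\qquad \left\langle \eta _\gamma (\mathbf {r},t)\,\eta _\kappa (\mathbf {r}^\prime ,t^\prime )\right\rangle =\delta _{\gamma ,\kappa }\,K(\mathbf {r}-\mathbf {r}^\prime )\,\delta (t-t^\prime ). \end{aligned}$$ (1)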

Here \(\varPhi _\gamma (\mathbf {r},t)\) is a scalar field or the \(\gamma \)-th component of a vector field \((\gamma \in [1,n];\,n\in \mathbb {N})\) with \(\mathbf {r}\in \varOmega \subset \mathbb {R}^d\), \(F_\gamma \left[ \{\varPhi _\mu (\mathbf {r},t)\}\right] \) represents a (possibly non-linear) functional of \(\varPhi _\mu \) and \(\eta _\gamma (\mathbf {r},t)\) denotes Gaussian noise, which is white in time, and with \(K(\mathbf {r}-\mathbf {r}^\prime )\) as spatial noise correlations. Prominent examples of (1) are the stochastic Navier–Stokes equation for turbulent flow (see e.g. [26]) or the Kardar–Parisi–Zhang equation for non-linear growth processes [27] to name only two. The latter will be treated in the subsequent sections within the framework established in the following.

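Let us begin with the introduction of some notions. A natural choice of a local fluctuating current \(\mathbf {j}(\mathbf {r},t)\) is

$$\begin{aligned} \mathbf {j}(\mathbf {r},t)\equiv \partial _t{{\varvec{\Phi }}}(\mathbf {r},t), \end{aligned}$$ (2)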

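with \({{\varvec{\Phi }}}(\mathbf {r},t)=\left( \varPhi _1(\mathbf {r},t),\ldots ,\varPhi _n(\mathbf {r},t)\right) ^\top \). The local current \(\mathbf {j}(\mathbf {r},t)\) is fluctuating around its mean, i.e.

$$\begin{aligned} \mathbf {j}(\mathbf {r},t)=\left\langle \mathbf {j}(\mathbf {r},t)\right\rangle +\delta \mathbf {j}(\mathbf {r},t), \end{aligned}$$ (3)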

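with \(\delta \mathbf {j}(\mathbf {r},t)\) denoting the fluctuations. Given that the system (1) possesses a NESS, the long-time behavior of the local current (2) can be described as

$$\begin{aligned} \mathbf {j}(\mathbf {r},t)=\mathbf {J}(\mathbf {r})+\delta \mathbf {j}(\mathbf {r},t), \end{aligned}$$ (4)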

with \(\delta \mathbf {j}(\mathbf {r},t)\) being now a stationary stochastic process with zero mean and with

Here \(\left\langle \cdot \right\rangle \) denotes averages with respect to the noise history. Thus, in a NESS, the local current \(\mathbf {j}(\mathbf {r},t)=\partial _t{{\varvec{\Phi }}}(\mathbf {r},t)\) is in a statistically stationary state, i.e. becomes a stationary stochastic process with mean \(\mathbf {J}(\mathbf {r})\). As the thermodynamic uncertainty relation in a Markovian network is formulated for some form of integrated currents, we define in analogy the projection of the local current onto an arbitrarily directed weight function \(\mathbf {g}(\mathbf {r})\)

$$\begin{aligned} j_g(t)\equiv \int _\varOmega d\mathbf {r}\,\mathbf {j}(\mathbf {r},t)\cdot \mathbf {g}(\mathbf {r}). \end{aligned}$$ (6)

The integral in (6) represents the usual \(\mathcal {L}_2\)-product of the two vector fields \(\mathbf {j}(\mathbf {r},t)\) and \(\mathbf {g}(\mathbf {r})\) with \(\mathbf {j}(\mathbf {r},t)\cdot \mathbf {g}(\mathbf {r})=\sum _kj_k(\mathbf {r},t)g_k(\mathbf {r})\) as the scalar product between \(\mathbf {j}\) and \(\mathbf {g}\). With this projected current \(j_g(t)\), we associate a fluctuating ‘output’

$$\begin{aligned} \varPsi _g(t)\equiv \int _\varOmega d\mathbf {r}\,{{\varvec{\Phi }}}(\mathbf {r},t)\cdot \mathbf {g}(\mathbf {r}). \end{aligned}$$ (7)

Hence \(j_g(t)=\partial _t\varPsi _g(t)\) and in the NESS

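The fluctuating output \(\varPsi _g(t)\) provides us with the means to define a measure of the precision of the system output, namely the squared coefficient of variation \(\epsilon ^2\), as

$$\begin{aligned} \epsilon ^2\equiv \frac{\left\langle \left( \varPsi _g(t)-\left\langle \varPsi _g(t)\right\rangle \right) ^2\right\rangle }{\left\langle \varPsi _g(t)\right\rangle ^2}. \end{aligned}$$ (9)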

If the system is in its non-equilibrium steady state, we can rewrite (9) as

Let us now connect the variance of the output \(\varPsi _g(t)\) to the Green–Kubo diffusivity given by

Using (6) and (2), it is straightforward to verify that

Thus,

By dividing both sides of (12) by 2t and taking the limit of \(t\rightarrow \infty \) it is found in analogy to [35], that

with \(D_g\) from (11) and therefore

Since in the NESS \(\varPsi _g(t)\) is stochastically independent of the initial configuration \(\varPsi _g(0)\), we can simplify the expression for the diffusivity in the NESS according to

With the result of (14) and \(\epsilon ^2\) from (9), an alternative formulation of the precision in a NESS is

$$\begin{aligned} \epsilon ^2=\frac{2\,D_g}{J_g^2\,t}. \end{aligned}$$ (15)

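We proceed with expressing the total entropy production \(\varDelta s_\text {tot}\). The total entropy production is given by the sum of the entropy dissipated into the medium along a single trajectory, \(\varDelta s_\text {m}\), and the stochastic entropy, \(\varDelta s\), of such a trajectory; see e.g. [36]. The medium entropy is given by

$$\begin{aligned} \varDelta s_\text {m}\equiv \ln \frac{p[{{\varvec{\Phi }}}(\mathbf {r},t)|{{\varvec{\Phi }}}(\mathbf {r},t_0)]}{p[\widetilde{{{\varvec{\Phi }}}}(\mathbf {r},t)|\widetilde{{{\varvec{\Phi }}}}(\mathbf {r},t_0)]}. \end{aligned}$$ (16)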

Here \(p[{{\varvec{\Phi }}}(\mathbf {r},t)|{{\varvec{\Phi }}}(\mathbf {r},t_0)]\) denotes the functional probability density of the entire vector field \({{\varvec{\Phi }}}(\mathbf {r},t)\), i.e. the field configuration after some time t has elapsed since a starting time \(t_0<t\), conditioned on an initial value \({{\varvec{\Phi }}}(\mathbf {r},t_0)\), i.e. a certain field configuration at the starting time \(t_0\). In contrast, \(p[\widetilde{{{\varvec{\Phi }}}}(\mathbf {r},t)|\widetilde{{{\varvec{\Phi }}}}(\mathbf {r},t_0)]\) is the conditioned probability density of the time-reversed process, i.e. starting in the final configuration at time \(t_0\) and ending up in the original one at time t. For the sake of simplicity, we will write in the following \(p[{{\varvec{\Phi }}}]\) and \(p[\widetilde{{{\varvec{\Phi }}}}]\) instead of \(p[{{\varvec{\Phi }}}(\mathbf {r},t)|{{\varvec{\Phi }}}(\mathbf {r},t_0)]\) and \(p[\widetilde{{{\varvec{\Phi }}}}(\mathbf {r},t)|\widetilde{{{\varvec{\Phi }}}}(\mathbf {r},t_0)]\), respectively. The functional probability density can be expressed via a so-called action functional, \(\mathcal {S}[{{\varvec{\Phi }}}]\), according to

$$\begin{aligned} p[{{\varvec{\Phi }}}]\propto \exp \left[ -\mathcal {S}[{{\varvec{\Phi }}}]\right] . \end{aligned}$$ (17)

For the system (1), the action functional (see e.g. [36,37,38,39,40,41,42,43,44] and references therein) is given by

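where \(K^{-1}(\mathbf {r}-\mathbf {r}^\prime )\) is the inverse of the noise correlation kernel \(K(\mathbf {r}-\mathbf {r}^\prime )\) from (1). The two integral kernels fulfill

$$\begin{aligned} \int d\mathbf {r}^\prime \,K(\mathbf {r}-\mathbf {r}^\prime )\,K^{-1}(\mathbf {r}^\prime -\mathbf {r}^{\prime \prime })=\delta ^d(\mathbf {r}-\mathbf {r}^{\prime \prime }). \end{aligned}$$ (19)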

Before we proceed with the calculation of the medium entropy, let us give the following general remarks. Throughout the paper, stochastic integrals are interpreted in the Stratonovitch sense, i.e. mid-point discretization is used. This is essential for the calculation of the medium entropy \(\varDelta s_\text {m}\) via (16), where Ito discretization may lead to incompatibilities. Using Stratonovitch discretization, however, gives rise to an additional term in the action functional (18), which is given by the functional derivative of the generalized force term F from (1) with respect to the field \({{\varvec{\Phi }}}\). This contribution stems from the Jacobian ensuing from the variable transformation from the noise field to the field \({{\varvec{\Phi }}}\) in the functional integral used for calculating expectation values of path dependent observables. As this addition to the action functional does not contribute to the medium entropy (cf. [36, 45]) it is neglected in (18).

Inserting (17), (18) into (16) and noticing that only the time-antisymmetric part of the action functional (18) and its time-reversed counterpart survives, leads to (see also [36, 45, 46])

\(\varDelta s_\text {m}\) is a measure of the energy dissipated into the medium during the time interval \([t_0,\,t]\), in analogy to the Langevin case. The stochastic entropy change \(\varDelta s\) for the same trajectory is given by (see also [45])

Thus, the total entropy production \(\varDelta s_\text {tot}\) reads

$$\begin{aligned} \varDelta s_\text {tot}=\varDelta s_\text {m}+\varDelta s. \end{aligned}$$ (22)

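With (22) we may also define the rate of total entropy production \(\sigma \) in a NESS according to

$$\begin{aligned} \sigma \equiv \lim _{t\rightarrow \infty }\frac{\left\langle \varDelta s_\text {tot}\right\rangle }{t}. \end{aligned}$$ (23)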

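The expressions stated in (9) and (22) provide us with the necessary ingredients to formulate the field-theoretic thermodynamic uncertainty relation as

$$\begin{aligned} \left\langle \varDelta s_\text {tot}\right\rangle \,\epsilon ^2=\frac{2\,\sigma \,D_g}{J_g^2}\ge 2, \end{aligned}$$ (24)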

with \(\sigma \) from (23), \(D_g\) from (13) and \(J_g\) from (8). The higher the precision, i.e. the smaller \(\epsilon ^2\), the more entropy \(\left\langle \varDelta s_\text {tot}\right\rangle \) is generated, i.e. the higher the thermodynamic cost. Or, in other words, in order to sustain a certain NESS current \(J_g\), a minimal entropy production rate \(\sigma \ge J_g^2/D_g\) is required. We anticipate that for the case of the KPZ equation, the constant on the right hand side of (24) will turn out to be equal to five (see (111)), i.e. the TUR given in (24) is not saturated. As will be discussed below, we can attribute this greater value to the KPZ non-linearity.
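The equivalence of the two ways of stating the bound can be made explicit in a short worked step. It only uses the NESS expressions \(\left\langle \varDelta s_\text {tot}\right\rangle =\sigma \,t\) and \(\epsilon ^2=2D_g/(J_g^2\,t)\) from this section. The second line is our own reading of the anticipated KPZ result (right hand side equal to five) and holds only to the perturbative order considered in this paper.

$$\begin{aligned} \left\langle \varDelta s_\text {tot}\right\rangle \,\epsilon ^2&=\sigma \,t\cdot \frac{2\,D_g}{J_g^2\,t}=\frac{2\,\sigma \,D_g}{J_g^2}\ge 2\quad \Longleftrightarrow \quad \sigma \ge \frac{J_g^2}{D_g},\\ \frac{2\,\sigma \,D_g}{J_g^2}&=5\quad \Longrightarrow \quad \sigma =\frac{5}{2}\,\frac{J_g^2}{D_g}. \end{aligned}$$

In this reading the KPZ entropy production rate exceeds the minimal value \(J_g^2/D_g\) by a factor of 5/2.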

3 Theoretical Background

Within this section we will lay the groundwork for the calculation of the quantities entering the TUR for the KPZ equation. The main focus thereby is on the perturbative solution of the KPZ equation in the weak-coupling regime and the discussion of issues with diverging terms due to a lack of regularity.

3.1 The KPZ Equation in Spectral Form

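Consider the one-dimensional KPZ equation [27] on the interval [0, b], \(b>0\), with Gaussian white noise \(\eta (x,t)\)

$$\begin{aligned} \partial _th(x,t)=\hat{L}h(x,t)+\frac{\lambda }{2}\left( \partial _xh(x,t)\right) ^2+\eta (x,t),\qquad \left\langle \eta (x,t)\,\eta (x^\prime ,t^\prime )\right\rangle =\varDelta _0\,\delta (x-x^\prime )\,\delta (t-t^\prime ), \end{aligned}$$ (25)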

subject to periodic boundary conditions and, for simplicity, vanishing initial condition \(h(x,0)=0\), \(x\in [0,b]\) (i.e. the growth process starts with a flat profile). Here \(\hat{L}=\nu \partial _x^2\) is a differential diffusion operator, \(\varDelta _0\) a constant noise strength, and \(\lambda \) the coupling constant of the non-linearity.

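A Fourier expansion of the height field h(x, t) and the stochastic driving force \(\eta (x,t)\) reads

$$\begin{aligned} h(x,t)=\sum _{k\in \mathbb {Z}}h_k(t)\phi _k(x),\qquad \eta (x,t)=\sum _{k\in \mathbb {Z}}\eta _k(t)\phi _k(x). \end{aligned}$$ (26)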

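The set of \(\{\phi _k(x)\}\) is given by

$$\begin{aligned} \phi _k(x)=\frac{1}{\sqrt{b}}\,e^{2\pi ikx/b},\qquad k\in \mathbb {Z}, \end{aligned}$$ (27)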

and thus \(h_k(t)\), \(\eta _k(t)\in \mathbb {C}\) in (26). A similar proceeding for the case of the Edwards–Wilkinson equation was used in [47,48,49,50]. Inserting (26) into (25) leads to

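with \(\{\mu _k\}\) defined as

$$\begin{aligned} \mu _k=-\frac{4\pi ^2\nu }{b^2}\,k^2,\qquad k\in \mathbb {Z}. \end{aligned}$$ (28)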

For the \(\{\phi _k(x)\}\) the relation \(\phi _l(x)\phi _m(x)=\phi _{l+m}(x)/\sqrt{b}\) holds and thus the double-sum in the Fourier expansion of the KPZ equation can be rewritten in convolution form setting \(k=l+m\). This yields

which implies ordinary differential equations for the Fourier-coefficients \(h_k(t)\),

The above ODEs (30) are readily ‘solved’ by the variation of constants formula, which leads for flat initial condition \(h_k(0)\equiv 0\) to

\(k\in \mathbb {Z}\). Note that the assumption of flat initial conditions is not in conflict with (21), since in the NESS, in which the relevant quantities will be evaluated, the probability density becomes stationary. With (31), a non-linear integral equation for the k-th Fourier coefficient has been derived. In Sect. 3.4, the solution to (31) will be constructed by means of an expansion in a small coupling parameter \(\lambda \). We close this section with the following general remarks.

- (i)

Equation (31) has been derived on a purely formal level. In particular, the integral \(\int dt^\prime e^{\mu _k(t-t^\prime )}\eta _k(t^\prime )\) has to be given a meaning. In a strict mathematical formulation, this integral has to be written as

$$\begin{aligned} \int _0^te^{\mu _k(t-t^\prime )}dW_k(t^\prime ), \end{aligned}$$ (32)

which is called a stochastic convolution (see e.g. [51,52,53,54]). [Note that due to the deterministic integrand of (32), the integral can optionally be interpreted in the Ito or Stratonovitch sense, respectively [51]]. This has its origin in the fact that the noise \(\eta (x,t)\) in (25) is mathematically speaking a generalized time-derivative of a Wiener process W(x, t) (see also Sect. 3.2, (35)). In this spirit, (31) with the first integral on the right hand side replaced by (32) may be called the mild form of the KPZ equation (in its spectral representation) and \(h(x,t)=\sum _{k\in \mathbb {Z}}h_k(t)\phi _k(x)\), \(h_k(t)\) solution of equation (31), is then called a mild solution of the KPZ equation. In mathematical literature, proofs of existence and uniqueness of such a mild solution can be found for various assumptions on the spatial regularity of the noise (see e.g. [53, 55, 56] and references therein). In particular, these assumptions are reflected by conditions for the explicit form of the spatial noise correlator \(K(x-x^\prime )\) from (1) (see (46)). An assumption will be adopted (see Sect. 3.2), which guarantees the existence of \(\left\| h(x,t)\right\| _{\mathcal {L}_2 ([0,b])}\), i.e. the norm on the Hilbert space of square-integrable functions \(\mathcal {L}_2\). This norm, or respectively the corresponding \(\mathcal {L}_2\)-product, denoted in the following by \((\cdot ,\cdot )_0\), of h with any \(\mathcal {L}_2\)-function g, i.e. \((h,g)_0\), will be used in Sect. 4.1 and Sect. 4.4 to calculate the necessary contributions to a field-theoretic thermodynamic uncertainty relation. Furthermore, with this assumption on the noise, it is shown in Appendix C for the mild solution that almost surely \(h(x,t)\in \mathcal {C}([0,T],\mathcal {L}_2([0,b]))\), \(T>0\), i.e. the trajectory \(t\mapsto h(x,t)\) is a continuous function in time t with values \(h(\cdot ,t)\in \mathcal {L}_2([0,b])\). This justifies the choice \(H=\mathcal {L}_2([0,b])\) in the following calculations.

- (ii)

The Fourier expansion applied above can be understood in a more general sense. For the case of periodic boundary conditions, the differential operator \(\hat{L}\) possesses the eigenfunctions \(\{\phi _k(x)\}\) and corresponding eigenvalues \(\{\mu _k\}\) from (27) and (28), respectively. It is well-known that the set \(\{\phi _k(x)\}\) constitutes a complete orthonormal system in the Hilbert space \(\mathcal {L}_2(0,b)\) of all square-integrable functions on (0, b). Thus the Fourier-expansion performed above can also be interpreted as an expansion in the eigenfunctions of the operator \(\hat{L}\).

- (iii)

With this interpretation, (31) also holds for a ‘hyperdiffusive’ version of the KPZ equation in which the operator \(\hat{L}\) is replaced by \(\hat{L}_p\equiv (-1)^{p+1}\partial _x^{2p}\), with \(p\in \mathbb {N}\) and adjusted eigenvalues \(\{\mu _k^p\}\). This may be used to introduce a higher regularity to the KPZ equation.

- (iv)

Besides the complex Fourier expansion in (26) with coefficients \(h_k(t)\in \mathbb {C}\), the real expansion \(h(x,t)=\sum _{k\in \mathbb {Z}}\widetilde{h}_k(t)\gamma _k(x)\), \(\widetilde{h}_k(t)\in \mathbb {R}\) (e.g. [53]) and

$$\begin{aligned} \gamma _0=\frac{1}{\sqrt{b}},\quad \gamma _k=\sqrt{\frac{2}{b}}\sin 2\pi k\frac{x}{b},\quad \gamma _{-k}=\sqrt{\frac{2}{b}}\cos 2\pi k\frac{x}{b}\quad k\in \mathbb {N}, \end{aligned}$$ (33)

will be used in the next section. The relationship between \(h_k(t)\) and \(\widetilde{h}_k(t)\) reads

$$\begin{aligned} h_k(t)=\frac{\widetilde{h}_{-k}(t)-i\widetilde{h}_k(t)}{\sqrt{2}}\,,\qquad h_{-k}(t)=\frac{\widetilde{h}_{-k}(t)+i\widetilde{h}_k(t)}{\sqrt{2}}=\overline{h_k}(t), \end{aligned}$$ (34)

with \(\overline{h_k}(t)\) as the complex conjugate.
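The properties of the eigenfunctions used in this section can be checked numerically. The following sketch assumes the standard complex Fourier basis \(\phi _k(x)=e^{2\pi ikx/b}/\sqrt{b}\), which is the form implied by the product relation \(\phi _l\phi _m=\phi _{l+m}/\sqrt{b}\). The grid size, the test indices and the helper names `phi` and `inner` are illustrative choices of ours.

```python
import numpy as np

b = 2.0                                         # interval length (arbitrary test value)
x = np.linspace(0.0, b, 4096, endpoint=False)   # periodic grid on [0, b)
dx = b / x.size

def phi(k):
    # Assumed eigenfunctions: phi_k(x) = exp(2*pi*i*k*x/b) / sqrt(b)
    return np.exp(2j * np.pi * k * x / b) / np.sqrt(b)

def inner(f, g):
    # L2 product on [0, b] (second argument conjugated), rectangle rule
    return np.sum(f * np.conj(g)) * dx

# Orthonormality: (phi_k, phi_l) = delta_{k,l}
ortho_err = max(abs(inner(phi(k), phi(l)) - (k == l))
                for k in range(-3, 4) for l in range(-3, 4))

# Product relation: phi_l * phi_m = phi_{l+m} / sqrt(b), here for l = 2, m = -5
prod_err = np.max(np.abs(phi(2) * phi(-5) - phi(-3) / np.sqrt(b)))

# Relation (34) between real coefficients (basis (33)) and complex coefficients
rng = np.random.default_rng(1)
k = 3
ht_k, ht_mk = rng.standard_normal(2)            # real coefficients for +k and -k
gamma_k = np.sqrt(2.0 / b) * np.sin(2 * np.pi * k * x / b)
gamma_mk = np.sqrt(2.0 / b) * np.cos(2 * np.pi * k * x / b)
h_k = (ht_mk - 1j * ht_k) / np.sqrt(2.0)
h_mk = np.conj(h_k)
coeff_err = np.max(np.abs(ht_k * gamma_k + ht_mk * gamma_mk
                          - (h_k * phi(k) + h_mk * phi(-k))))

print(ortho_err, prod_err, coeff_err)
```

All three errors are at the level of floating-point round-off, which confirms that the real expansion in (33) and the complex expansion describe the same function when the coefficients are related by (34).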

3.2 A Closer Look at the Noise

In the following discussion of the noise it is instructive to pretend, for the time being, that the noise is spatially colored with noise correlator \(K(x-x^\prime )\) instead of assuming directly spatially white noise.

The noise \(\eta (x,t)\) is given by a generalized time-derivative of a Wiener process \(W(x,t)\in \mathbb {R}\) [26, 51,52,53], i.e.

Such a Wiener process W(x, t) can be written as (e.g. [51, 53])

Here \(\{\alpha _k\}\in \mathbb {R}\) are arbitrary expansion coefficients that may be used to introduce a spatial regularization of the Wiener process, \(\{\beta _k(t)\}\in \mathbb {R}\) are stochastically independent standard Brownian motions and \(\{\gamma _k(x)\}\) are the basis functions from (33). A well-known result for the two-point correlation function of stochastically independent Brownian motions \(\beta _k(t)\) reads [51]

$$\begin{aligned} \left\langle \beta _k(t)\,\beta _l(t^\prime )\right\rangle =(t\wedge t^\prime )\,\delta _{k,l}, \end{aligned}$$ (37)

with \((t\wedge t^\prime )=\min (t,t^\prime )\).

In the following it will be shown that the noise \(\eta \) defined by (35) and (36) possesses the autocorrelation

which for \(K(x-x^\prime )=\varDelta _0\delta (x-x^\prime )\) results in the one assumed in (25). Furthermore, an explicit expression of the kernel \(K(x-x^\prime )\) by means of the Fourier coefficients \(\{\alpha _k\}\) of W(x, t) from (36) will be given.

To this end, first an expression for the two-point correlation function of the Wiener process itself can be derived according to

To represent the noise structure dictated by (25), the expression in (39) has to be an even, translationally invariant function in space. Thus, the following relation has to be fulfilled

Then the two-point correlation function of the Wiener process is given by

With \(W(x,t)=\sum _{k\in \mathbb {Z}}W_k(t)\phi _k(x)\), \(\phi _k(x)\) from (27), equation (41) implies for the two-point correlation function of the Fourier coefficients \(W_k(t)\)

This result leads immediately to

using \(\partial _t\partial _{t^\prime }(t\wedge t^\prime )=\delta (t-t^\prime )\).

For the relation between (41) and the noise from (38), we differentiate (41) with respect to t and \(t^\prime \) yielding

The following identification can be made

which structurally represents the standard implicit assumption that \(K(x-x^\prime )\) is translationally invariant, positive definite and even. Note that the regularity of the noise kernel \(K(|x-x^\prime |)\) is given by the behavior of the set of \(\{\alpha _k\}\) for \(k\rightarrow \infty \), where \(\{\alpha _k\}\) are the dimensionless Fourier coefficients of the underlying Wiener process from (36) for all k. For the case of \(\alpha _k=1\) \(\forall \;k\in \mathbb {Z}\), spatially white noise is obtained.

Thus, the derivation via the Wiener process has indeed led to a translationally invariant real-valued two-point correlation function for \(\eta (x,t)\), given by (38), with \(K(|x-x^\prime |)\) from (45), which describes white in time and spatially colored Gaussian noise. In the following, we will use (45) to approximate spatially white noise to meet the required form in (25).

Now the assumption mentioned in the remarks in Sect. 3.1 can be made more precise. In the following it will be assumed that (see Appendix C)

This assumption excludes spatially white noise, but via the introduction of a cutoff parameter \(\varLambda \in \mathbb {N}\), \(\varLambda \gg 1\) arbitrarily large but finite, for the range of k, white noise is accessible, i.e. for \(k\in \mathfrak {R}\) with

Note that for the linear case, i.e. the Edwards–Wilkinson model, the authors of [48] also introduce a cutoff, albeit in a slightly different manner. Such a cutoff amounts to an orthogonal projection of the full eigenfunction expansion of (25) to a finite-dimensional subspace spanned by the eigenfunctions \(\phi _{-\varLambda }(x),\ldots ,\phi _\varLambda (x)\). Mathematically, this projection may be represented by a linear projection operator \(\mathcal {P}_\varLambda \), which maps the Hilbert space \(\mathcal {L}_2(0,b)\) to \(\text {span}\{\phi _{-\varLambda }(x),\ldots ,\phi _\varLambda (x)\}\), acting on (29). This mapping, however, causes a problem in the non-linear term of (29), where by mode coupling the k-th Fourier mode (\(-\varLambda \le k\le \varLambda \)) is influenced also by modes with \(|l|>\varLambda \). This issue can be resolved by choosing \(\varLambda \) large enough: modes with \(h_l(t)\sim \exp [\mu _lt]\), \(\mu _l\) from (28), (61), \(|l|>\varLambda \), are damped out rapidly, so that the bias introduced by limiting l to the interval \(\mathfrak {R}\) is small. Note that the restriction to \(h\in \text {span}\{\phi _{-\varLambda },\,\ldots ,\,\phi _\varLambda \}\) also implies the introduction of restricted summation boundaries in the convolution term in (31), namely

with \(\mathfrak {R}_k\) defined by

This restriction to finitely many Fourier modes is not as harsh as it might seem, since for very large wavenumbers the dynamics of the KPZ equation is dominated by its diffusive term and the non-linearity may safely be neglected. This reasoning is based on arguments for turbulence theory in e.g. [26, 57,58,59] and for the KPZ-case e.g. [60], where a momentum-scale separation is in effect. Specifically, in the case of the one-dimensional Burgers equation, the momentum scale is divided into a small-wavenumber regime where the non-linearity is dominant and a large-wavenumber regime where dissipation dominates (see also [61,62,63,64]). Hence, in the latter wavenumber range the KPZ equation reduces to the Edwards–Wilkinson equation, which, due to its equilibrium behavior, does not affect the thermodynamic uncertainty relation (24).
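The restricted summation range can be made concrete with a small sketch. It assumes that \(\mathfrak {R}_k\) contains exactly those indices l for which both l and \(k-l\) lie within the cutoff, i.e. \(|l|\le \varLambda \) and \(|k-l|\le \varLambda \); the definition in (48) may exclude further indices. The function names and the generic, weight-free convolution are hypothetical illustrations of ours.

```python
def restricted_range(k, cutoff):
    """Indices l with |l| <= cutoff and |k - l| <= cutoff (assumed form of R_k)."""
    lo = max(-cutoff, k - cutoff)
    hi = min(cutoff, k + cutoff)
    return list(range(lo, hi + 1))

def truncated_convolution(a, k, cutoff):
    """Sum of a[l] * a[k - l] over the restricted range; `a` maps mode index -> coefficient."""
    return sum(a[l] * a[k - l] for l in restricted_range(k, cutoff))

cutoff = 3
a = {l: 1.0 for l in range(-cutoff, cutoff + 1)}   # dummy coefficients
print(restricted_range(2, cutoff))                 # [-1, 0, 1, 2, 3]
print(truncated_convolution(a, 2, cutoff))         # 5.0
```

For \(k=0\) the full range \(-\varLambda ,\ldots ,\varLambda \) contributes, while for \(|k|=\varLambda \) only \(\varLambda +1\) index pairs remain.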

With the cutoff \(\varLambda \), condition (46) is of course fulfilled for \(\alpha _k=1\) \(\forall \,k\in \mathfrak {R}\) and \(\alpha _k=0\) \(\forall \,k\notin \mathfrak {R}\). Inserting this choice of \(\alpha _k\) into (45) yields

Also, the choice of \(\alpha _k=1\) \(\forall \,k\in \mathfrak {R}\) implies for the correlation function of the Fourier coefficients \(\eta _k(t)\) from (43)

To end this section, a noise operator \(\hat{K}\) describing spatial noise correlations will be introduced as

with kernel \(K(x-x^\prime )\) from (49) and its inverse \(\hat{K}^{-1}\) given by

where its kernel reads \(K^{-1}(x-x^\prime )=\varDelta _0^{-1}\delta (x-x^\prime )\Big |_{\text {span}\{\phi _{-\varLambda },\ldots ,\phi _\varLambda \}}\).
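The statement that the cutoff kernel acts as a delta function restricted to \(\text {span}\{\phi _{-\varLambda },\ldots ,\phi _\varLambda \}\) can be illustrated numerically. The sketch below assumes \(b=1\), \(\varDelta _0=1\) and a kernel of the form \(K(x-x^\prime )=\sum _{|k|\le \varLambda }e^{2\pi ik(x-x^\prime )}\), which is what \(\alpha _k=1\) for \(k\in \mathfrak {R}\) suggests; the explicit expression (49) is not reproduced here, so this form and all numerical values are assumptions.

```python
import numpy as np

cutoff = 8                                   # Lambda
n_grid = 256
x = np.arange(n_grid) / n_grid               # periodic grid on [0, 1)
ks = np.arange(-cutoff, cutoff + 1)
diff = x[:, None] - x[None, :]               # all differences x - x'

# Assumed cutoff kernel: sum of exp(2*pi*i*k*(x - x')) over |k| <= Lambda
K = np.exp(2j * np.pi * ks[:, None, None] * diff[None, :, :]).sum(axis=0).real

# Closed form of the same sum (Dirichlet kernel), with its limit at x = x'
with np.errstate(divide="ignore", invalid="ignore"):
    dirichlet = np.sin((2 * cutoff + 1) * np.pi * diff) / np.sin(np.pi * diff)
dirichlet[diff == 0.0] = 2 * cutoff + 1

def apply_kernel(f):
    # (K f)(x) = integral over x' of K(x - x') f(x'), rectangle rule
    return K @ f / n_grid

f_in = np.cos(2 * np.pi * 3 * x)             # mode inside the span (|k| = 3 <= Lambda)
f_out = np.cos(2 * np.pi * 20 * x)           # mode outside the span (|k| = 20 > Lambda)

print(np.allclose(K, dirichlet))
print(np.allclose(apply_kernel(f_in), f_in))
print(np.allclose(apply_kernel(f_out), 0.0, atol=1e-10))
```

Functions inside the span are reproduced unchanged, whereas modes beyond the cutoff are projected to zero, so the kernel behaves like \(\delta (x-x^\prime )\) only on the finite-dimensional subspace.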

3.3 Dimensionless Form of the KPZ Equation

Before the KPZ equation is analyzed further, it is prudent to relate all physical quantities to suitable reference values so that the scaled quantities are dimensionless and that the equation is characterized by only one dimensionless parameter. In anticipation of the calculations below, we choose this parameter to represent a dimensionless effective coupling parameter \(\lambda _\text {eff}\), that replaces the coupling constant \(\lambda \) from (25). To this end the following characteristic scales are introduced,

Here H is a characteristic scale for the height field (not to be confused with the notation for the Hilbert space), N a scale for the noise field, b is the characteristic length scale in space and T the time scale of the system. Choosing the three respective scales according to

leads to the dimensionless KPZ equation on the interval \(x\in [0,1]\)

Here, the effective dimensionless coupling constant is given by

and

The effective coupling constant \(\lambda _\text {eff}\) is found in various works concerning the KPZ–Burgers equation; see e.g. [40, 65,66,67].
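As a plausibility check, the scaling can be worked through explicitly. The following sketch assumes the standard form of the KPZ equation, \(\partial _th=\nu \partial _x^2h+\frac{\lambda }{2}(\partial _xh)^2+\eta \) with noise strength \(\varDelta _0\), and assumes that the scales are chosen such that the diffusion coefficient and the noise strength both become one. It is our own reconstruction and may differ from the authors' expressions in notation or numerical prefactors.

$$\begin{aligned}&h=H\,h_\text {s},\quad \eta =N\,\eta _\text {s},\quad x=b\,x_\text {s},\quad t=T\,t_\text {s},\\&\partial _{t_\text {s}}h_\text {s}=\frac{\nu T}{b^2}\,\partial _{x_\text {s}}^2h_\text {s}+\frac{\lambda HT}{2\,b^2}\left( \partial _{x_\text {s}}h_\text {s}\right) ^2+\frac{NT}{H}\,\eta _\text {s},\\&\frac{\nu T}{b^2}=1,\quad \frac{NT}{H}=1,\quad N^2=\frac{\varDelta _0}{b\,T}\quad \Longrightarrow \quad T=\frac{b^2}{\nu },\quad N=\sqrt{\frac{\varDelta _0\,\nu }{b^3}},\quad H=\sqrt{\frac{\varDelta _0\,b}{\nu }},\\&\lambda _\text {eff}=\frac{\lambda HT}{b^2}=\frac{\lambda \,\varDelta _0^{1/2}\,b^{1/2}}{\nu ^{3/2}}. \end{aligned}$$

Here \(N^2=\varDelta _0/(bT)\) follows from \(\delta (x-x^\prime )\,\delta (t-t^\prime )=\delta (x_\text {s}-x_\text {s}^\prime )\,\delta (t_\text {s}-t_\text {s}^\prime )/(bT)\). In this reading, weak coupling, \(\lambda _\text {eff}\ll 1\), corresponds to a small non-linearity, weak noise, a short interval or a large surface tension \(\nu \).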

In the following sections we will perform all calculations for the dimensionless KPZ equation. This requires one simple adjustment in the linear differential operator \(\hat{L}\) on \(x_\text {s}\in [0,1]\), which is now given by

with eigenvalues

to the orthonormal eigenfunctions

Furthermore, the noise correlation function in Fourier space from (50) now reads

The scaling also affects the noise operators defined in (51), (52) at the end of Sect. 3.2. The scaled ones read

and

with \(K_\text {s}(x_\text {s}-x_\text {s}^\prime )\) from (59) and \(K^{-1}_\text {s}(x_\text {s}-x^\prime _\text {s})\) is defined via the integral-relation \(\int dy_\text {s}\,K_\text {s}(x_\text {s}-y_\text {s})K_\text {s}^{-1}(y_\text {s}-z_\text {s})=\delta (x_\text {s}-z_\text {s})\).

Note that for the sake of simplicity the subscript \(\text {s}\) will be dropped in the calculations below where all quantities are understood as the scaled ones.

3.4 Expansion in a Small Coupling Constant

Returning to the non-linear integral equation of the k-th Fourier coefficient of the height field, \(h_k(t)\) from (31), now in its dimensionless form and with the restricted spectral range given by

\(k\in \mathfrak {R}\), with \(\{\mu _k\}\) from (61), \(\mathfrak {R}_k\) from (48) and all quantities dimensionless, an approximate solution will be constructed. Note that the summation of the discrete convolution in (66) is chosen such that it respects the cutoff introduced above in l as well as in \(k-l\), i.e. |l|, \(|k-l|\le \varLambda \). For small values of the coupling constant we expand the solution in powers of \(\lambda _\text {eff}\), i.e.

with

Thus every \(h_{k}^{(n)}\), \(n>1\), can be expressed in terms of \(h_{m}^{(0)}\), \(m\in \mathfrak {R}\), i.e. the stochastic convolution according to (32), which is known to be Gaussian.
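The Gaussian character of the stochastic convolution can be probed with a minimal simulation. The sketch assumes a single real mode driven by a standard real Brownian motion, for which the Itô isometry gives the variance \(\int _0^te^{2\mu (t-t^\prime )}dt^\prime =(e^{2\mu t}-1)/(2\mu )\). The complex modes and the noise normalization used in this paper may differ by constant factors, and all parameter values and the function name are illustrative.

```python
import numpy as np

def stochastic_convolution(mu, t, n_steps=400, n_traj=5000, seed=0):
    """Sample int_0^t exp(mu*(t - t')) dW(t') with a left-point Riemann sum."""
    rng = np.random.default_rng(seed)
    dt = t / n_steps
    t_left = np.arange(n_steps) * dt                    # left endpoints t'_i
    weights = np.exp(mu * (t - t_left))                 # deterministic integrand
    dW = rng.standard_normal((n_traj, n_steps)) * np.sqrt(dt)   # Brownian increments
    return dW @ weights                                 # one sample per trajectory

mu, t = -2.0, 1.0                                       # mu < 0 as for the modes k != 0
samples = stochastic_convolution(mu, t)
var_exact = (np.exp(2.0 * mu * t) - 1.0) / (2.0 * mu)   # Ito isometry
print(samples.mean(), samples.var(), var_exact)
```

The sample mean vanishes and the sample variance agrees with the closed-form value within statistical and discretization error. Because the integrand is deterministic, the left-point rule used here is consistent with both the Ito and the Stratonovitch interpretation.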

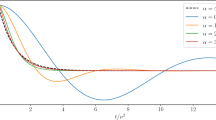

In the following calculations multipoint correlation functions have to be evaluated, which can be simplified by Wick’s theorem, where a recurring term reads \(\left\langle h_{k}^{(0)}(t)h_{l}^{(0)}(t^\prime )\right\rangle \). It is thus helpful to determine this correlation function in general once and use this result later on. With (63) and \(k,l\in \mathbb {Z}\) (and therefore also for \(k,l\in \mathfrak {R}\)) it follows that:

with

Since for the auxiliary expression \(\varPi _{k,l}\) the symmetries

hold, it is found that

4 Thermodynamic Uncertainty Relation for the KPZ Equation

In this section we will show that the thermodynamic uncertainty relation from (24) holds for the KPZ equation driven by Gaussian white noise in the weak-coupling regime. In particular, the small-\(\lambda _\text {eff}\) expansion from Sect. 3.4 will be employed.

To recapitulate, the two ingredients needed for the thermodynamic uncertainty relation are (i) the long-time behavior of the squared coefficient of variation, or precision, \(\epsilon ^2\) of \(\varPsi _g(t)\) from (9); (ii) the expectation value of the total entropy production in the steady state, \(\left\langle \varDelta s_\text {tot}\right\rangle \) from (22).

4.1 Expectation and Variance for the Height Field

With (7) adapted to the KPZ equation, namely

with g(x) as any real-valued \(\mathcal {L}_2\)-function fulfilling \(\int _0^1dxg(x)\ne 0\), i.e. g(x) possessing non-zero mean, we rewrite the variance as

As is shown below, \(\epsilon ^2\) can be evaluated for arbitrary time \(t>0\). However, the final interest is on the non-equilibrium steady state of the system. Therefore, the long-time asymptotics will be studied.

4.2 Evaluation of Expectation and Variance

In the small-\(\lambda _\text {eff}\) expansion, the expectation of the output \(\varPsi _g(t)\) from (74), with h(x, t) solution of the dimensionless KPZ equation (55) to (57) reads:

where \(g_k\) and \(\overline{g_k}\) are the k-th Fourier coefficient of the weight function g(x) and its complex conjugate, respectively. Here the result from (68) is used as well as the fact that odd moments of Gaussian random variables vanish identically. Replacing \(h_{k}^{(1)}(t)\) by the expression derived in (69) and using (73) leads to

Note that in the case of \(k=0\) the second term in the last line of (77) is evaluated in the limit \(\mu _k\rightarrow 0\), which yields t. Since the interest is in the NESS current, the long-time asymptotics of the two expressions in (77) above is studied. So, eq. (76) yields

where \(g_k=g_{-k}\) \(\forall k\) as \(g(x)\in \mathbb {R}\). Note that the formulation of (78) reflects our claim that \(\left\langle \varPsi _g(t)\right\rangle \sim t\) for \(t\gg 1\); see also the related reasoning in Appendix D. Using the explicit form of \(\mu _k\) from (61), the expression in (78) can be simplified according to

with \(\varLambda \) from (47). Equivalently, the steady state current from (8) reads

The first term of the variance as defined in (75) reads in the small-\(\lambda _\text {eff}\) expansion

where moments proportional to \(\lambda _\text {eff}\) (and \(\lambda _\text {eff}^3\)) vanish due to (68) and (69) as the two-point correlation function \(\left\langle h_{k}^{(0)}h_{l}^{(1)}\right\rangle \) and its complex conjugate are odd moments.

In Appendix A, we present the rather technical derivation of

Subtraction of (78) squared from (82) leads to

Here, \(\left\langle \left( \varPsi _g(t)\right) ^2\right\rangle -\left\langle \varPsi _g(t)\right\rangle ^2\sim t\) for \(t\gg 1\) is expected due to our reasoning in Appendix D. Again, with \(\mu _k\) from (61), the above expression in (83) can be reduced to

Here \(\mathcal {H}_\varLambda ^{(2)}=\sum _{l=1}^\varLambda 1/l^2\) is the so-called generalized harmonic number, which converges to the Riemann zeta-function \(\zeta (2)\) for \(\varLambda \rightarrow \infty \). Using (13), eq. (84) yields the diffusivity \(D_g\),

With (84) and (79) squared, the first constituent of the thermodynamic uncertainty relation, \(\epsilon ^2=\text {Var}[\varPsi _g(t)]/\left\langle \varPsi _g(t)\right\rangle ^2\) from (9), is given for large times by

Note that, since \(\epsilon ^2\approx 4/(\lambda _\text {eff}^2t)\), the long-time asymptotics of the second constituent of the uncertainty relation, the total entropy production, has to scale as \(\left\langle \varDelta s_\text {tot}\right\rangle \sim \lambda _\text {eff}^2t\) for the uncertainty relation to hold. Note further that the result for the precision of the projected output \(\varPsi _g(t)\) in the NESS is independent of the choice of g(x).
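The generalized harmonic number \(\mathcal {H}_\varLambda ^{(2)}\) entering (84) approaches \(\zeta (2)=\pi ^2/6\) rather slowly as the cutoff grows. The following minimal sketch illustrates this numerically; the function name `generalized_harmonic` and the chosen cutoff values are illustrative assumptions and not part of the original calculation.

```python
import math


def generalized_harmonic(cutoff: int, order: int = 2) -> float:
    """Return H_cutoff^(order) = sum_{l=1}^{cutoff} 1 / l**order."""
    if cutoff < 1:
        raise ValueError("cutoff must be a positive integer")
    return sum(1.0 / l**order for l in range(1, cutoff + 1))


# Limit of H_Lambda^(2) for Lambda -> infinity: zeta(2) = pi^2 / 6
ZETA_2 = math.pi**2 / 6

for cutoff in (1, 10, 100, 1000):
    h = generalized_harmonic(cutoff)
    # The remainder zeta(2) - H_Lambda^(2) lies between 1/(Lambda+1) and 1/Lambda
    print(f"Lambda={cutoff:5d}  H^(2)={h:.6f}  remainder={ZETA_2 - h:.2e}")
```

The remainder decays only like \(1/\varLambda \), so any cutoff-dependent correction expressed through \(\mathcal {H}_\varLambda ^{(2)}\) converges algebraically, not exponentially, in the cutoff.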

4.3 Alternative Formulation of the Precision

Before we continue with the calculation of the total entropy production, we would like to mention an intriguing observation. From the field-theoretic point of view, it seems natural to define the precision \(\epsilon ^2\) as

This is due to the fact that the height field h(x, t) is at every time instant an element of the Hilbert space \(\mathcal {L}_2([0,1])\) as mentioned in Sect. 3.1. Hence, the difference between h(x, t) and its expectation is measured by its \(\mathcal {L}_2\)-norm. In this framework, the expectation squared is likewise given by the \(\mathcal {L}_2\)-norm squared. At a cursory glance, the definitions in (87) and (9) seem to be incompatible. However, for the case of the above calculations of \(\epsilon ^2\) for the one-dimensional KPZ equation, it holds up to \(O(\lambda _\text {eff}^3)\) in perturbation expansion that

Thus, with (88), it is obvious that in terms of the perturbation expansion both definitions of the precision, as in (9) and (87), respectively, are equivalent. Equation (88) can be verified by direct calculation along the same lines as above in this section. By studying these calculations it is found perturbatively that the height field h(x, t) is spatially homogeneous, which is reflected by \(\left\langle h_k(t)h_l(t)\right\rangle \sim \delta _{k,-l}\) (see (73)) for the correlation of its Fourier-coefficients. Further, the long-time behavior is solely determined by the largest eigenvalue of the differential diffusion operator \(\hat{L}=\partial _x^2\), namely by \(\mu _0=0\) (see e.g. (78) and (83), the essential quantities for deriving (88)).

In the following, we would like to give some reasoning why the above two statements should also hold for a broad class of field-theoretic Langevin equations as in (1). For simplicity, we restrict ourselves in (1) to the case of one-dimensional scalar fields \(\varPhi (x,t)\) and \(F[\varPhi (x,t)]=\hat{L}\varPhi (x,t)+\hat{N}[\varPhi (x,t)]\). Here \(\hat{L}\) denotes a linear differential operator and \(\hat{N}\) a non-linear (e.g. quadratic) operator. \(\hat{L}\) should be self-adjoint and possess a pure point spectrum with all eigenvalues \(\mu _k\le 0\) (e.g. \(\hat{L}=(-1)^{p+1}\partial _x^{2p}\), \(p\in \mathbb {N}\), i.e. an arbitrary diffusion operator subject to periodic boundary conditions). For this class of operators \(\hat{L}\) there exists a complete orthonormal system of corresponding eigenfunctions \(\{\phi _k\}\) in \(\mathcal {L}_2(\varOmega )\). If it is further known that the solution \(\varPhi (x,t)\) of (1) belongs at every time t to \(\mathcal {L}_2(\varOmega )\), we can calculate e.g. the second moment of the projected output \(\varPsi _g(t)\) according to \(\left\langle (\varPsi _g(t))^2\right\rangle =\left\langle (\int _\varOmega dx\,\varPhi (x,t)g(x))^2\right\rangle \), where \(g(x)\in \mathcal {L}_2(\varOmega )\) as well. As is the case in e.g. equation (81), the second moment is determined by the Fourier-coefficients \(\varPhi _k(t)\) of \(\varPhi (x,t)\) and \(g_k\) of g(x), namely

Like the KPZ equation, (1) is driven by spatially homogeneous Gaussian white noise \(\eta (x,t)\) with two-point correlations of the Fourier-coefficients \(\eta _k(t)\) given by \(\left\langle \eta _k(t)\eta _l(t)\right\rangle \sim \delta _{k,-l}\). Therefore, we expect the solution to (1) subject to periodic boundary conditions to be spatially homogeneous as well, at least in the steady state, which implies

see e.g. [57, 58]. Hence, with (90), the expression in (89) becomes

Comparing (91) to \(\left\langle \left\| \varPhi (x,t)\right\| _0^2\right\rangle \), which is given by

we find in the NESS

provided that the long-time behavior is dominated by the Fourier-mode with largest eigenvalue, i.e. \(k=0\) with \(\mu _0=0\). Under the same condition, the first moment of the projected output reads in the NESS

and thus

Similarly,

which implies

Note that \(g_0\) and \(\varPhi _0(t)\) have to be real throughout the argument (which is indeed the case for expansions with respect to the eigenfunctions of the general diffusion operators \(\hat{L}\) from above). Hence, under the assumption that the aforementioned requirements are met, which, of course, would have to be checked for every individual system (as was done in this section for the KPZ equation), the asymptotic equivalence in (93) and (97) validates the statement in (88) (and therefore, in the NESS, also (87)) for a whole class of one-dimensional scalar SPDEs from (1).
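The argument of this section rests on expressing both the projected output \(\int _\varOmega dx\,\varPhi (x,t)g(x)\) and the \(\mathcal {L}_2\)-norm of \(\varPhi \) through Fourier coefficients. The following sketch checks these two representations numerically on a periodic grid over \([0,1]\). It is only an illustration: the random array `phi` is a stand-in for a single field snapshot and not a solution of (1), the weight `g` is an arbitrary real function with non-zero mean, and the Fourier convention \(\varPhi _k=\int _0^1dx\,\varPhi (x)e^{-2\pi ikx}\) (approximated by `np.fft.fft(phi) / n`) is an assumption made here.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 256                      # number of grid points on [0, 1)
x = np.arange(n) / n

# Stand-in for one field snapshot (assumption: not an actual solution of the SPDE)
phi = rng.standard_normal(n)
# Real weight function with non-zero mean, so that g_0 != 0
g = 1.0 + 0.5 * np.cos(2 * np.pi * x) + 0.3 * np.sin(4 * np.pi * x)

# Discrete Fourier coefficients under the assumed convention
phi_k = np.fft.fft(phi) / n
g_k = np.fft.fft(g) / n

# Projected output: Riemann sum in real space vs. sum over Fourier modes
psi_real_space = np.mean(phi * g)
psi_fourier = np.sum(phi_k * np.conj(g_k)).real

# Squared L2-norm: real space vs. Parseval sum
norm_real_space = np.mean(phi**2)
norm_fourier = np.sum(np.abs(phi_k) ** 2)

# Contribution of the k = 0 mode alone, the part that dominates in the NESS argument
zero_mode_part = (phi_k[0] * g_k[0]).real

print(psi_real_space, psi_fourier)
print(norm_real_space, norm_fourier)
print(zero_mode_part)
```

For a real weight function the coefficients satisfy \(\overline{g_k}=g_{-k}\), which is why the sum over \(\varPhi _k\overline{g_k}\) is real. The coefficient `g_k[0]` is simply the spatial mean of g, which is the only property of the weight that survives in the long-time results of this section.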

4.4 Total Entropy Production for the KPZ Equation

The total entropy production for the KPZ equation is obtained by inserting \(F_\gamma [h_\mu (\mathbf {r},t)]=\partial _x^2h(x,t)+\frac{\lambda _\text {eff}}{2}\left( \partial _xh(x,t)\right) ^2\) and the explicit expression for the one-dimensional stationary probability distribution \(p^s[h]\) into (22). The form of the latter is given in the following.

4.4.1 The Fokker–Planck Equation and its 1D Stationary Solution

Let us briefly recapitulate the Fokker–Planck equation and its stationary solution in one spatial dimension for the KPZ equation.

The Fokker–Planck equation corresponding to (55) for the functional probability distribution p[h] reads, e.g. [32, 43, 68,69,70],

with j[h] as a probability current.

It is well known that for the case of pure Gaussian white noise, a stationary solution, i.e. \(\partial _tp^s[h]=0\), to the Fokker–Planck equation is given by [32, 68, 70]

This stationary solution is the same as the one for the linear case, namely for the Edwards–Wilkinson model. Note that in (100) we denote by \(\left\| \cdot \right\| _0^2\) the standard \(\mathcal {L}_2\)-norm.

4.4.2 Stationary Total Entropy Production

With (22), the total entropy production in the NESS for the KPZ equation reads

Using \(\left( \dot{h},\partial _x^2h\right) _0=\frac{1}{2}\frac{d\, }{d t}\left( h,\partial _x^2h\right) _0\), and the initial condition \(h(x,0)=0\), the first term in (101) vanishes and thus

For Gaussian white noise, the expectation value of (102) is given by

For a derivation of this result see Appendix B. Note that (103) and its derivation remain true for \(h\in \text {span}\{\phi _{-\varLambda },\,\ldots ,\,\phi _\varLambda \}\). More generally, the expectation of the total entropy production may also be written as

with \(\hat{K}^{-1}\) from (65).
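The identity \(\left( \dot{h},\partial _x^2h\right) _0=\frac{1}{2}\frac{d}{dt}\left( h,\partial _x^2h\right) _0\) used in this subsection can be made explicit in one line. The sketch below assumes that h is smooth enough in x for two integrations by parts (as is the case for a finite number of Fourier modes), that the boundary terms vanish because of the periodic boundary conditions, and that the ordinary product rule applies to the time derivative.

```latex
\begin{align*}
\frac{d}{dt}\left( h,\partial_x^2 h\right)_0
  &= \left( \dot{h},\partial_x^2 h\right)_0 + \left( h,\partial_x^2 \dot{h}\right)_0 \\
  &= \left( \dot{h},\partial_x^2 h\right)_0 + \left( \partial_x^2 h,\dot{h}\right)_0
     \qquad \text{(self-adjointness of } \partial_x^2 \text{ under periodic b.c.)} \\
  &= 2\left( \dot{h},\partial_x^2 h\right)_0 .
\end{align*}
```

The last step uses that the \(\mathcal {L}_2\) scalar product of real-valued functions is symmetric.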

4.4.3 Evaluating the Expectation of the Stationary Total Entropy Production

Above, an expression for the stationary total entropy production \(\varDelta s_\text {tot}\) and its expectation value were derived (see eq. (103)). Inserting the Fourier representation from (26) and (62) into (103) leads to

with \(\mathfrak {R}_k\) from (48). As (105) above is already of order \(\lambda _\text {eff}^2\), it suffices to expand the Fourier coefficients \(h_i(t^\prime )\) to zeroth order, which yields

with \(h_{i}^{(0)}(t^\prime )\) given by (68). Via a Wick contraction and using (73), the four-point correlation function in (106) reads

Inserting (107) into (106) leads to the following form of the total entropy production in the NESS,

Note that \(\left\langle \varDelta s_\text {tot}\right\rangle \sim t\) for \(t\gg 1\) is expected to hold due to our reasoning in Appendix D. Note further that the long-time behavior of \(\left\langle \varDelta s_\text {tot}\right\rangle \) is indeed of the form required for the uncertainty relation to hold, i.e. \(\left\langle \varDelta s_\text {tot}\right\rangle \sim \lambda _\text {eff}^2t\) (see the remark after (86)). With \(\mu _k\) from (61), the expression for the total entropy production from (108) reads

Thus, with (23) and (109), the total entropy production rate becomes

With (86) and (109), or, equivalently, (80), (85) and (110), the constituents of the thermodynamic uncertainty relation are known. Hence, the product entering the TUR from (24) for the KPZ equation reads

Note that the result given in (111) holds strictly for ‘almost’ white noise only, i.e. for the truncated noise spectrum with cutoff \(\varLambda \) (see e.g. (49) and (50)). However, we choose \(\varLambda =\varLambda _0\) large enough that all contributions from modes with \(|k|>\varLambda _0\) are expected to be dominated by the diffusive term of the KPZ equation and hence to be effectively described by the Edwards–Wilkinson equation (see further the comments below (48)). Since, for very large times t, the Edwards–Wilkinson model displays a genuine equilibrium, it contributes neither to the current (80) nor to the entropy production rate (110). Consequently, modes with \(|k|>\varLambda _0\) do not affect the TUR in (111) and thus we expect it to hold also for ‘fully’ white noise, i.e. without the need to increase the cutoff parameter \(\varLambda \). The TUR in (111) is the central result of this paper.

Note further that in (111) we deliberately refrain from writing \(\left\langle \varDelta s_\text {tot}\right\rangle \,\epsilon ^2=5-1/\varLambda \), as this would somewhat mask the physics causing this result. This point will be discussed further in the following.
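As a short worked illustration, the value \(5-1/\varLambda \) quoted above can be split into the saturated bound and an excess term, and combined with the leading-order precision \(\epsilon ^2\approx 4/(\lambda _\text {eff}^2t)\) stated after (86). The split written below is our reading of the surrounding discussion (a saturated contribution of 2 plus a mode-coupling excess) and is not copied from (111); the implied entropy production follows from simple division and holds only to leading order in \(\lambda _\text {eff}\).

```latex
\begin{align*}
\left\langle \varDelta s_\text{tot}\right\rangle \epsilon^2
  &= 5-\frac{1}{\varLambda}
   = 2+\left( 3-\frac{1}{\varLambda}\right) \ge 2
   \qquad (\varLambda \ge 1), \\
\left\langle \varDelta s_\text{tot}\right\rangle
  &\approx \left( 5-\frac{1}{\varLambda}\right) \frac{\lambda_\text{eff}^2\, t}{4},
   \qquad
   2 \le 3-\frac{1}{\varLambda} < 3 ,
   \quad 3-\frac{1}{\varLambda} \xrightarrow{\;\varLambda \to \infty\;} 3 .
\end{align*}
```

In this reading the excess over the saturated value lies between 2 and 3 for every admissible cutoff, so the product stays strictly above the bound and tends to 5 for large \(\varLambda \).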

4.5 Edwards–Wilkinson Model for a Constant Driving Force

To give an interpretation of the two terms in (108) and consequently in (111), we believe it instructive to briefly calculate the precision and total entropy production for the case of the one-dimensional Edwards–Wilkinson model modified by an additional constant non-random driving ‘force’ \(v_0\) and subject to periodic boundary conditions. To be specific, we consider

already in dimensionless form and with space-time white noise \(\eta \). The stochastic partial differential equation in (112) has the same form as the KPZ equation from (55) but with the non-linearity replaced by \(v_0\). This ensures a NESS, such that quantities like current and entropy production can be calculated without the difficulties of the mode-coupling encountered with the KPZ non-linearity. In the sequel, we refer to (112) as FEW for ‘forced Edwards–Wilkinson equation’. Following the same procedure as described in Sect. 3, we find as an integral expression for the k-th Fourier coefficient of the height field in FEW,

where again a flat initial configuration was assumed and \(\mu _k=-4\pi ^2k^2\) as above. With (113), we get immediately in the NESS

and thus \(\left\langle \varPsi _g(t)\right\rangle ^2=g_0^2v_0^2t^2\) as well as

Thus,

As already discussed above in Sect. 3, the Fokker–Planck equation corresponding to (112) has the stationary solution \(p^s[h]=\exp \left[ -\int dx\,(\partial _xh)^2\right] \) and thus, with (22) and (113), the total entropy production reads in the NESS

With (115) and (116), the TUR product for (112) is given by

i.e. the thermodynamic uncertainty relation is indeed saturated for the Edwards–Wilkinson equation subject to a constant driving ‘force’ \(v_0\). For the sake of completeness we state the expressions for the current, diffusivity and rate of entropy production in the non-equilibrium steady state, namely

With the calculations for FEW, we can now give an interpretation of the two terms in (109) and (111). The first term in the inner brackets in (109) originates from the first term of (108), where the latter represents the action of all higher-order Fourier modes on the mode \(k=0\) (see (107)). To illustrate this point further, observe that, in the NESS, we get according to (76) to (80) for the current:

and from the calculation above we see that it contains only the impact of Fourier modes \(l\ne 0\) on the mode \(k=0\), which belongs to the constant eigenfunction \(\phi _0(x)=1\). In other words, the modes \(l\ne 0\) act like a constant external excitation, just in the same manner as \(v_0\) acts for FEW in (114). Comparing (119) to (114), we may set

and get \(J_g=g_0v_0\) in both cases.

Following now the calculations for FEW, we would expect from (116)

which is in fact exactly the first term in the inner brackets from (108) and (109), respectively. Since with (120) also the expression for \(\epsilon ^2\) from (115) coincides with the first summand on the r.h.s. of (86), it is clear that both cases result in the saturated TUR. This explains the value 2 on the r.h.s. of (111).

Turning to the second term of (109), we see that it stems from the second term in (108). In contrast to the first \(\lambda _\text {eff}^2\)-term in (108), the second one does not only measure the effect of the modes on the \(k=0\) mode but also on all other modes \(k\ne 0\). It further features interactions of the k and l modes among each other via mode coupling. Hence, the mode coupling seems responsible for the larger constant on the right hand side of (111), since, if the mode coupling term in (109) were neglected, the thermodynamic uncertainty relation would be saturated also for the KPZ equation up to \(O(\lambda _\text {eff}^2)\). To conclude this brief discussion, we give the respective relations of the KPZ current (80), diffusivity (85) and total entropy production rate (110) to FEW, namely

with \(J_g^\text {FEW}\), \(D_g^\text {FEW}\) and \(\sigma ^\text {FEW}\) from (118). We see that the additional mode coupling term in KPZ leads to corrections in \(D_{g}^\text {KPZ}\) and \(\sigma ^{\text {KPZ}}\) of at least second order in \(\lambda _\text {eff}\). For the case of \(\lambda _\text {eff}\rightarrow 0\) the KPZ equation becomes the standard Edwards–Wilkinson equation (EW), namely \(\partial _th(x,t)=\partial _x^2h(x,t)+\eta (x,t)\), which possesses a genuine equilibrium steady state. Therefore, for the standard EW we have \(J_g^\text {EW}=0\), \(\sigma ^\text {EW}=0\) and \(D_g^\text {EW}=g_0^2/2\). From (122) it follows that for \(\lambda _\text {eff}\rightarrow 0\), \((J_g,\sigma ,D_g)_\text {KPZ}\rightarrow (J_g,\sigma ,D_g)_\text {FEW}\) and from (118), (120) that \((J_g,\sigma ,D_g)_\text {FEW}\rightarrow (J_g,\sigma ,D_g)_\text {EW}=(0,0,g_0^2/2)\). Hence, the non-zero expressions for \(J_g^\text {KPZ}\) and \(\sigma ^\text {KPZ}\) result solely from the KPZ non-linearity. The impact of the latter on the \(k=0\) Fourier mode (i.e. the spatially constant mode) results in contributions to \(J_g^\text {KPZ}\) and \(\sigma ^\text {KPZ}\) that can be modeled exactly by FEW, the Edwards–Wilkinson equation driven by a constant force \(v_0\) from (112).
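The saturation of the TUR for FEW can be illustrated with a small Monte Carlo sketch restricted to the spatially constant mode \(k=0\). The sketch makes several assumptions that are not taken from the equations of this section: only the zero mode is sampled (the modes \(k\ne 0\) add a contribution to the variance that does not grow with t), the noise acting on the zero mode has unit strength, which is consistent with \(D_g=g_0^2/2\), and the entropy production rate is taken as \(\sigma =v_0^2/D_0=2v_0^2\) with \(D_0=1/2\), the standard result for a Brownian particle with constant drift. The function name `few_zero_mode_tur` and all parameter values are hypothetical.

```python
import numpy as np


def few_zero_mode_tur(v0: float, t: float, n_samples: int = 200_000, seed: int = 0):
    """Sample the k = 0 mode of the forced Edwards-Wilkinson equation at time t.

    Assumption: h_0(t) = v0 * t + W(t) with W(t) ~ N(0, t), i.e. unit noise
    strength and a flat initial configuration h_0(0) = 0.
    Returns (mean, variance, eps2, tur_product).
    """
    rng = np.random.default_rng(seed)
    h0 = v0 * t + np.sqrt(t) * rng.standard_normal(n_samples)

    mean = h0.mean()
    var = h0.var(ddof=1)
    eps2 = var / mean**2          # precision; the weight g_0 cancels in this ratio

    sigma = 2.0 * v0**2           # assumed rate: drift^2 / D_0 with D_0 = 1/2
    tur_product = sigma * t * eps2
    return mean, var, eps2, tur_product


mean, var, eps2, product = few_zero_mode_tur(v0=0.5, t=100.0)
print(f"mean={mean:.3f}  var={var:.3f}  eps2={eps2:.5f}  product={product:.3f}")
```

Within sampling error the product comes out close to 2, in line with the saturated relation found for FEW. Since the rate \(\sigma \) is supplied analytically, this is a consistency check of mean and variance and not an independent measurement of the entropy production.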

5 Conclusion

We have proposed an analog of the TUR [1, 2] in a general field-theoretic setting (see (24)) and shown its validity for the Kardar–Parisi–Zhang equation up to second order of perturbation. To ensure convergence of the quantities entering the thermodynamic uncertainty relation, we had to introduce an arbitrarily large but finite cutoff \(\varLambda \) of the corresponding Fourier spectrum, which restricted the considered Gaussian white-in-time noise to be only ‘almost white’ in space. However, the cutoff was chosen large enough so as to guarantee the dominance of the diffusive term over the non-linear term. This led us to expect our field-theoretic TUR to hold for spatially ‘fully white’ noise as well (see the reasoning below (111)).

To circumvent the introduction of a cutoff for ensuring convergence, a possible solution may be to induce a higher regularity by treating spatially colored noise instead of Gaussian white noise and/or choosing a higher order diffusion operator \(\hat{L}\) (see e.g. [71, 72]). This is currently under investigation.

As is obvious from (111), the field-theoretic version of the TUR is not saturated for the KPZ equation. This is due to the mode-coupling of the fields as a consequence of the KPZ non-linearity. To illustrate this point, we also treated the Edwards–Wilkinson equation in Sect. 4.5, driven out of equilibrium by a constant velocity \(v_0\), see (112). By identifying \(v_0\) with the influence of higher-order Fourier modes on the mode \(k=0\), we may interpret the first \(\lambda _\text {eff}^2\)-term in (108) as the contribution from the forced Edwards–Wilkinson equation, for which the TUR is saturated (see (117)), an observation which is in accordance with findings in [8] for finite dimensional driven diffusive systems. The second \(\lambda _\text {eff}^2\)-term in (108) is the contribution to the entropy production made up by the interaction between Fourier modes of arbitrary order, which is due to the mode-coupling generated by the KPZ non-linearity. It is this additional entropy production that leads to the TUR not being saturated. Note that the first term in (108) is also due to the mode-coupling; it is special, however, insofar as it measures only the impact of the other modes on the mode \(k=0\) and does not include a response of that mode.

Regarding future research, an intriguing topic is the question as to whether the findings in [8] concerning conditions for the saturation of the dissipation bound in the TUR for an overdamped two-dimensional Langevin equation can be recovered in the present field-theoretic setting. Furthermore, it would be of great interest to employ the developed framework to other spatio-temporal noise systems in order to observe the resulting dissipation bounds in the corresponding TURs. Of special interest in this context is the stochastic Burgers equation, excited by a noise term suitable for generating turbulent response (see [59]). A comparison of the predictions made in the present paper to numerical simulations of the KPZ equation seems to be another intriguing task, currently under investigation. Besides numerical calculations, it would also be of great interest to test our predictions via experimental realizations of KPZ interfaces. Lastly, the formulation of a genuine non-perturbative, analytic formalism would also be of utmost interest.

References

Barato, A.C., Seifert, U.: Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015). https://doi.org/10.1103/PhysRevLett.114.158101

Gingrich, T.R., Horowitz, J.M., Perunov, N., England, J.L.: Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016). https://doi.org/10.1103/PhysRevLett.116.120601

Horowitz, J.M., Gingrich, T.R.: Proof of the finite-time thermodynamic uncertainty relation for steady-state currents. Phys. Rev. E 96, 020103 (2017). https://doi.org/10.1103/PhysRevE.96.020103

Pietzonka, P., Barato, A.C., Seifert, U.: Universal bounds on current fluctuations. Phys. Rev. E 93, 052145 (2016). https://doi.org/10.1103/PhysRevE.93.052145

Polettini, M., Lazarescu, A., Esposito, M.: Tightening the uncertainty principle for stochastic currents. Phys. Rev. E 94, 052104 (2016). https://doi.org/10.1103/PhysRevE.94.052104

Pietzonka, P., Ritort, F., Seifert, U.: Finite-time generalization of the thermodynamic uncertainty relation. Phys. Rev. E 96, 012101 (2017). https://doi.org/10.1103/PhysRevE.96.012101

Proesmans, K., Van den Broeck, C.: Discrete-time thermodynamic uncertainty relation. Europhys. Lett. 119(2), 20001 (2017). https://doi.org/10.1209/0295-5075/119/20001

Gingrich, T.R., Rotskoff, G.M., Horowitz, J.M.: Inferring dissipation from current fluctuations. J. Phys. A 50(18), 184004 (2017). https://doi.org/10.1088/1751-8121/aa672f

Gingrich, T.R., Horowitz, J.M.: Fundamental bounds on first passage time fluctuations for currents. Phys. Rev. Lett. 119, 170601 (2017). https://doi.org/10.1103/PhysRevLett.119.170601

Garrahan, J.P.: Simple bounds on fluctuations and uncertainty relations for first-passage times of counting observables. Phys. Rev. E 95, 032134 (2017). https://doi.org/10.1103/PhysRevE.95.032134

Dechant, A., Sasa, S.-i.: Current fluctuations and transport efficiency for general Langevin systems. J. Stat. Mech. 2018(6), 063209 (2018). https://doi.org/10.1088/1742-5468/aac91a

Dechant, A., Sasa, S.-i.: Entropic bounds on currents in Langevin systems. Phys. Rev. E 97, 062101 (2018). https://doi.org/10.1103/PhysRevE.97.062101

Koyuk, T., Seifert, U.: Operationally accessible bounds on fluctuations and entropy production in periodically driven systems. Phys. Rev. Lett. 122, 230601 (2019). https://doi.org/10.1103/PhysRevLett.122.230601

Chun, H.M., Fischer, L.P., Seifert, U.: Effect of a magnetic field on the thermodynamic uncertainty relation. Phys. Rev. E 99, 042128 (2019). https://doi.org/10.1103/PhysRevE.99.042128

Falasco, G., Esposito, M., Delvenne, J.C.: Unifying Thermodynamic Uncertainty Relations, arXiv:1906.11360

Brandner, K., Hanazato, T., Saito, K.: Thermodynamic bounds on precision in ballistic multiterminal transport. Phys. Rev. Lett. 120, 090601 (2018). https://doi.org/10.1103/PhysRevLett.120.090601

Macieszczak, K., Brandner, K., Garrahan, J.P.: Unified thermodynamic uncertainty relations in linear response. Phys. Rev. Lett. 121, 130601 (2018). https://doi.org/10.1103/PhysRevLett.121.130601

Agarwalla, B.K., Segal, D.: Assessing the validity of the thermodynamic uncertainty relation in quantum systems. Phys. Rev. B 98, 155438 (2018). https://doi.org/10.1103/PhysRevB.98.155438

Ptaszyński, K.: Coherence-enhanced constancy of a quantum thermoelectric generator. Phys. Rev. B 98, 085425 (2018). https://doi.org/10.1103/PhysRevB.98.085425

Carrega, M., Sassetti, M., Weiss, U.: Optimal work-to-work conversion of a nonlinear quantum Brownian duet. Phys. Rev. A 99, 062111 (2019). https://doi.org/10.1103/PhysRevA.99.062111

Guarnieri, G., Landi, G.T., Clark, S.R., Goold, J.: Thermodynamics of precision in quantum nonequilibrium steady states. Phys. Rev. Res. 1, 033021 (2019). https://doi.org/10.1103/PhysRevResearch.1.033021

Carollo, F., Jack, R.L., Garrahan, J.P.: Unraveling the large deviation statistics of Markovian open quantum systems. Phys. Rev. Lett. 122, 130605 (2019). https://doi.org/10.1103/PhysRevLett.122.130605

Nguyen, M., Vaikuntanathan, S.: Design principles for nonequilibrium self-assembly. Proc. Natl. Acad. Sci. 113(50), 14231 (2016). https://doi.org/10.1073/pnas.1609983113

Pietzonka, P., Barato, A.C., Seifert, U.: Universal bound on the efficiency of molecular motors. J. Stat. Mech. 2016(12), 124004 (2016). https://doi.org/10.1088/1742-5468/2016/12/124004

Hwang, W., Hyeon, C.: Energetic costs, precision, and transport efficiency of molecular motors. J. Phys. Chem. Lett. 9(3), 513 (2018). https://doi.org/10.1021/acs.jpclett.7b03197

Foias, C.: (ed.), Navier-Stokes equations and turbulence, 1st edn. Encyclopedia of mathematics and its applications (Cambridge University Press, Cambridge, 2001)

Kardar, M., Parisi, G., Zhang, Y.C.: Dynamic scaling of growing interfaces. Phys. Rev. Lett. 56, 889 (1986). https://doi.org/10.1103/PhysRevLett.56.889

Fukai, Y.T., Takeuchi, K.A.: Kardar-Parisi-Zhang interfaces with inward growth. Phys. Rev. Lett. 119(3), 030602 (2017). https://doi.org/10.1103/PhysRevLett.119.030602

De Nardis, J., Le Doussal, P., Takeuchi, K.A.: Memory and universality in interface growth. Phys. Rev. Lett. 118, 125701 (2017). https://doi.org/10.1103/PhysRevLett.118.125701

Halpin-Healy, T., Takeuchi, K.A.: A KPZ Cocktail-Shaken, not stirred. J. Stat. Phys. 160(4), 794 (2015). https://doi.org/10.1007/s10955-015-1282-1

Spohn, H.: in Stochastic processes and random matrices. Lecture notes of the Les Houches summer school. Volume 104, Les Houches, France, July 6–31, 2015 (Oxford: Oxford University Press, 2017), pp. 177–227

Takeuchi, K.: An appetizer to modern developments on the Kardar-Parisi-Zhang universality class. Physica A 504, 77–105 (2017)

Timpanaro, A.M., Guarnieri, G., Goold, J., Landi, G.T.: Thermodynamic uncertainty relations from exchange fluctuation theorems. Phys. Rev. Lett. 123, 090604 (2019). https://doi.org/10.1103/PhysRevLett.123.090604

Hasegawa, Y., Van Vu, T.: Fluctuation theorem uncertainty relation. Phys. Rev. Lett. 123, 110602 (2019). https://doi.org/10.1103/PhysRevLett.123.110602

Kubo, R.: The fluctuation-dissipation theorem. Rep. Prog. Phys. 29(1), 255 (1966). https://doi.org/10.1088/0034-4885/29/1/306

Seifert, U.: Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75(12), 126001 (2012)

Janssen, H.K.: On a Lagrangean for classical field dynamics and renormalization group calculations of dynamical critical properties. Zeitschrift für Physik B Condensed Matter 23(4), 377 (1976). https://doi.org/10.1007/BF01316547

Janssen, H.K.: On a Lagrangean for classical field dynamics and renormalization group calculations of dynamical critical properties. Journal de Physique Colloques 37(C1), C1 (1976)

Martin, P.C., Siggia, E.D., Rose, H.A.: Statistical dynamics of classical systems. Phys. Rev. A 8, 423 (1973). https://doi.org/10.1103/PhysRevA.8.423

Niggemann, O., Hinrichsen, H.: Sinc noise for the Kardar-Parisi-Zhang equation. Phys. Rev. E 97, 062125 (2018). https://doi.org/10.1103/PhysRevE.97.062125

Hochberg, D., Molina-París, C., Pérez-Mercader, J., Visser, M.: Effective potential for the massless KPZ equation. Physica A 280(3), 437 (2000). https://doi.org/10.1016/S0378-4371(99)00611-1

Täuber, U.: Critical Dynamics: A Field Theory Approach to Equilibrium and Non-Equilibrium Scaling Behavior. Cambridge University Press, Cambridge (2014)

Altland, A., Simons, B.D.: Condensed Matter Field Theory, 2nd edn. Cambridge University Press, Cambridge (2010)

Chernyak, V.Y., Chertkov, M., Jarzynski, C.: Path-integral analysis of fluctuation theorems for general Langevin processes. J. Stat. Mech. 2006(08), P08001 (2006). https://doi.org/10.1088/1742-5468/2006/08/p08001

Seifert, U.: Stochastic thermodynamics: principles and perspectives. Eur. Phys. J. B 64(3), 423 (2008). https://doi.org/10.1140/epjb/e2008-00001-9

Maes, C., Netocny, K., Wynants, B.: On and beyond entropy production: the case of Markov jump processes. Markov Process. Relat. Fields 14, 445 (2008)

Chou, Y.L., Pleimling, M., Zia, R.K.P.: Changing growth conditions during surface growth. Phys. Rev. E 80, 061602 (2009). https://doi.org/10.1103/PhysRevE.80.061602

Chou, Y.L., Pleimling, M.: Characterization of non-equilibrium growth through global two-time quantities. J. Stat. Mech. 2010(08), P08007 (2010). https://doi.org/10.1088/1742-5468/2010/08/p08007

Chou, Y.L., Pleimling, M.: Kinetic roughening, global quantities, and fluctuation-dissipation relations. Physica A (2012). https://doi.org/10.1016/j.physa.2012.02.022

Henkel, M., Noh, J.D., Pleimling, M.: Phenomenology of aging in the Kardar-Parisi-Zhang equation. Phys. Rev. E 85, 030102 (2012). https://doi.org/10.1103/PhysRevE.85.030102

Da Prato, G., Zabczyk, J. (eds.): Stochastic equations in infinite dimensions. Cambridge University Press, Cambridge (1992)

Evans, L.C.: (ed.), Partial differential equations, reprint. with corr. edn. Graduate studies in mathematics ; 19 (American Mathematical Society, Providence, Rhode Island, 2002)

Da Prato, G., Debussche, A., Temam, R.: Stochastic Burgers’ equation. Nonlinear Diff. Equ. Appl. NoDEA 1(4), 389 (1994). https://doi.org/10.1007/BF01194987

Da Prato, G., Zabczyk, J. (eds.): Ergodicity for infinite dimensional systems. London Mathematical Society lecture note series. Cambridge University Press, Cambridge (1996)

Goldys, B., Maslowski, B.: Exponential ergodicity for stochastic Burgers and 2D Navier-Stokes equations. J. Funct. Anal. 226(1), 230 (2005). https://doi.org/10.1016/j.jfa.2004.12.009

Blömker, D., Kamrani, M., Hosseini, S.M.: Full discretization of the stochastic Burgers equation with correlated noise. IMA J. Numer. Anal. 33(3), 825 (2013). https://doi.org/10.1093/imanum/drs035

Hayot, F., Jayaprakash, C.: Structure functions in the stochastic Burgers equation. Phys. Rev. E 56, 227 (1997). https://doi.org/10.1103/PhysRevE.56.227

McComb, W.: The Physics of Fluid Turbulence. Oxford Engineering Science Series (Clarendon Press, 1990). https://books.google.de/books?id=iF3jaZlMFP8C

Chekhlov, A., Yakhot, V.: Kolmogorov turbulence in a random-force-driven Burgers equation: anomalous scaling and probability density functions. Phys. Rev. E 52, 5681 (1995). https://doi.org/10.1103/PhysRevE.52.5681

Meerson, B., Sasorov, P.V., Vilenkin, A.: Nonequilibrium steady state of a weakly-driven Kardar-Parisi-Zhang equation. J. Stat. Mech. 2018(5), 053201 (2018). https://doi.org/10.1088/1742-5468/aabbcc

Fogedby, H.C.: Soliton approach to the noisy Burgers equation: steepest descent method. Phys. Rev. E 57, 4943 (1998). https://doi.org/10.1103/PhysRevE.57.4943

Fogedby, H.C.: Nonequilibrium dynamics of a growing interface. J. Phys. Cond. Matter 14(7), 1557 (2002). https://doi.org/10.1088/0953-8984/14/7/313

Fogedby, H.C.: Kardar-Parisi-Zhang equation in the weak noise limit: pattern formation and upper critical dimension. Phys. Rev. E 73, 031104 (2006). https://doi.org/10.1103/PhysRevE.73.031104

Fogedby, H.C.: Patterns in the Kardar-Parisi-Zhang equation. Pramana 71(2), 253 (2008). https://doi.org/10.1007/s12043-008-0158-1

Forster, D., Nelson, D.R., Stephen, M.J.: Large-distance and long-time properties of a randomly stirred fluid. Phys. Rev. A 16, 732 (1977). https://doi.org/10.1103/PhysRevA.16.732

Frey, E., Täuber, U.C.: Two-loop renormalization-group analysis of the Burgers-Kardar-Parisi-Zhang equation. Phys. Rev. E 50, 1024 (1994). https://doi.org/10.1103/PhysRevE.50.1024

Medina, E., Hwa, T., Kardar, M., Zhang, Y.C.: Burgers equation with correlated noise: renormalization-group analysis and applications to directed polymers and interface growth. Phys. Rev. A 39, 3053 (1989). https://doi.org/10.1103/PhysRevA.39.3053

Halpin-Healy, T., Zhang, Y.C.: Kinetic roughening phenomena, stochastic growth, directed polymers and all that. Aspects of multidisciplinary statistical mechanics. Phys. Rep. 254(4), 215 (1995). https://doi.org/10.1016/0370-1573(94)00087-J

Frusawa, H.: Stochastic dynamics and thermodynamics around a metastable state based on the linear Dean-Kawasaki equation. J. Phys. A 52(6), 065003 (2019). https://doi.org/10.1088/1751-8121/aaf65c

Krug, J.: Origins of scale invariance in growth processes. Adv. Phys. 46(2), 139 (1997). https://doi.org/10.1080/00018739700101498

Wang, F.Y., Xu, L.: Derivative formula and applications for hyperdissipative stochastic Navier-Stokes/Burgers equations. Infin. Dimens. Anal. Quantum Probab. Relat. Top. (2010). https://doi.org/10.1142/S0219025712500208

Wolf, D.E., Villain, J.: Growth with surface diffusion. Europhys. Lett. (EPL) 13(5), 389 (1990). https://doi.org/10.1209/0295-5075/13/5/002

Blömker, D., Jentzen, A.: Galerkin approximations for the stochastic Burgers equation. SIAM J. Numer. Anal. 51(1), 694 (2013). https://doi.org/10.1137/110845756

Blömker, D., Kamrani, M.: arXiv e-prints arXiv:1311.2207 (2013)

Hairer, M.: Solving the KPZ equation. Ann. Math. (2011). https://doi.org/10.4007/annals.2013.178.2.4

Gubinelli, M., Perkowski, N.: KPZ reloaded. Commun. Math. Phys. 349(1), 165 (2017). https://doi.org/10.1007/s00220-016-2788-3

Cannizzaro, G., Matetski, K.: Space-time discrete KPZ equation. Commun. Math. Phys. 358(2), 521 (2018). https://doi.org/10.1007/s00220-018-3089-9

Hairer, M.: A theory of regularity structures. Invent. Math. 198(2), 269 (2014). https://doi.org/10.1007/s00222-014-0505-4

Yakhot, V., She, Z.S.: Long-time, large-scale properties of the random-force-driven Burgers equation. Phys. Rev. Lett. 60, 1840 (1988). https://doi.org/10.1103/PhysRevLett.60.1840

Acknowledgements

Open Access funding provided by Projekt DEAL.

Additional information

Communicated by Abhishek Dhar.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Evaluation of (81)

Using (73), the first term in (81) reads

Note that the case \(k=0\) is treated as in (77). The second term in (81) is given by

where we used Wick's theorem, (73) and (69). Note that the two Kronecker deltas in the last term of (124) can also be written as \(\delta _{0,k}\delta _{0,l}\delta _{k,-l}\), such that the whole expression is multiplied by \(\delta _{k,-l}\). Again with Wick's theorem, (73) and (70), we can calculate the third and fourth terms of (81) accordingly and find

As can be seen from (123) to (125), all four terms in (81) contain a \(\delta _{k,-l}\) and thus (81) reduces to

The first term of (126) is readily evaluated with (123) as

The second term of (126) reads with (124):

Hence, with \(\left\langle h_{k}^{(1)}(t)\right\rangle \) from (77), the expression in (128) becomes

Here the choice of which argument realizes the minimum \((t^\prime \wedge r)\) is arbitrary: the case \((t^\prime \wedge r)=t^\prime \) is obtained from the case \((t^\prime \wedge r)=r\) by interchanging \(r\leftrightarrow t^\prime \) under the integral, and vice versa, so both choices give the same result. In the following \((t^\prime \wedge r)=r\) is chosen. Hence, the integral expression in (129) can be evaluated as

Thus, with (129) and (130), the long time behavior of \(\left\langle h_{k}^{(1)}(t)h_{-k}^{(1)}(t)\right\rangle \) is given by

where we changed \(m\rightarrow l\). To save computational effort, we rewrite the last two terms of (126) in the following way

Hence, it suffices to calculate one of the two expectation values. With (125) we see that

where we substituted \(m\rightarrow k-m\) and used the symmetry of \(\varPi _{k,l}(t,t^\prime )\) from (72). Note that for \(k=0\), the above expression in (133) vanishes. Thus in the following calculations \(k\ne 0\) is assumed. In this setting, (133) reads with (73)

where we changed summation index \(m\rightarrow l\). Thus, with the results from (127), (131), (132) and (134), the expectation value of (81) reads in the long-time asymptotics
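The symmetry argument used for the time integrals in (129), namely that fixing \((t^\prime \wedge r)=r\) and doubling the contribution of the region \(r<t^\prime \) reproduces the integral over the full square, can be checked numerically. The sketch below is only an illustration: the integrand `kernel` is a hypothetical Ornstein-Uhlenbeck-type expression chosen because it depends on \(\min (t^\prime ,r)\) and is symmetric in its arguments; it is not the integrand of (129), and the decay rate `mu` is an assumed parameter.

```python
import math

def kernel(tp, r, mu=1.0):
    """Hypothetical symmetric integrand that depends on min(tp, r)."""
    m = min(tp, r)
    return math.exp(-mu * (tp + r)) * (math.exp(2.0 * mu * m) - 1.0)

def kernel_r_is_min(tp, r, mu=1.0):
    """Same integrand with the choice (tp ^ r) = r hard-wired; valid for r <= tp."""
    return math.exp(-mu * (tp + r)) * (math.exp(2.0 * mu * r) - 1.0)

def square_integral(t, n=300):
    """Midpoint rule over the full square [0, t] x [0, t]."""
    h = t / n
    pts = [(i + 0.5) * h for i in range(n)]
    return sum(kernel(tp, r) for tp in pts for r in pts) * h * h

def doubled_triangle_integral(t, n=300):
    """Midpoint rule over r < tp only, counted twice, plus the diagonal cells once."""
    h = t / n
    pts = [(i + 0.5) * h for i in range(n)]
    total = 0.0
    for i, tp in enumerate(pts):
        for j in range(i):  # strictly r < tp
            total += 2.0 * kernel_r_is_min(tp, pts[j])
        total += kernel_r_is_min(tp, tp)  # diagonal cell, where tp == r
    return total * h * h

def exact(t):
    """Closed form of the double integral of the hypothetical kernel for mu = 1."""
    return 2.0 * (t - 1.0 + math.exp(-t)) - (1.0 - math.exp(-t)) ** 2

if __name__ == "__main__":
    t = 2.0
    print(square_integral(t), doubled_triangle_integral(t), exact(t))
```

Both quadratures agree up to rounding, and both approach the closed form as `n` grows. For this particular kernel the leading term of the closed form grows linearly in t, which is the kind of long-time behavior extracted in the appendix.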

Appendix B: Expectation of the Total Entropy Production

The Fokker–Planck equation for the KPZ equation from (55) reads, as in Sect. 4.4.1,

Due to the conservation of probability, there is a current j[h] given by

Following [36], expectation values of expressions like \(\left\langle \dot{h}\mathcal {G}[h]\right\rangle \) are interpreted as

Since the goal is to find an expression for the expectation value of the total entropy production in the stationary state, \(\varDelta s_\text {tot}\), it is useful to choose p[h] as being the stationary solution \(p^s[h]\) of the one-dimensional Fokker–Planck equation, which is given by

Inserting this in (137) yields for the stationary probability current

where it was used that

Using the result from (140) and (142) leads to

Here it is understood that \(\left\langle \cdot \right\rangle \) now denotes the expectation value with regard to the stationary distribution \(p^s[h]\).

The total stationary entropy production \(\varDelta s_\text {tot}\) is given by (see (102))

Hence its expectation value reads

which is evaluated with the aid of (144):

Appendix C: Regularity Results for the one-dimensional KPZ Equation

Dealing with the one-dimensional KPZ equation allows us to make use of the equivalence to the stochastic Burgers equation and adapt the regularity results for the latter from [53, 55, 56, 73, 74]. In Sect. 3.1 and Sect. 3.2, we found that our operators \(\hat{L}\) and \(\hat{K}\) share the same set of eigenfunctions, which simplifies the results obtained by the authors of [55, 56] to the following. Under the assumption that

it is guaranteed almost surely that the mild solution u(x, t) of the one-dimensional noisy Burgers equation satisfies \(u\in \mathcal {C}([0,T],H)\), \(T>0\), with \(H=\mathcal {L}_2([0,1])\) or even \(H=\mathcal {C}([0,1])\), and that the spectral Galerkin approximation converges in H to the solution u. We utilize the mapping from KPZ to Burgers via \(u(x,t)\equiv -\partial _xh(x,t)\), with h a solution to the KPZ equation, which implies

and therefore

we get the following result for the 1d-KPZ equation:

Here \(H^1([0,1])\) denotes the Sobolev space of order one on [0, 1], i.e. \(f\in H^1([0,1])\) \(\Leftrightarrow \) \(\Vert f\Vert _{\mathcal {L}_2([0,1])}<\infty \) and \(\Vert f^\prime \Vert _{\mathcal {L}_2([0,1])}<\infty \), where \(f^\prime \) is understood as the weak derivative of f. It holds that \(H^1([0,1])\subset \mathcal {L}_2([0,1])\), which is what we used in Sect. 3.2.
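The mapping from KPZ to Burgers used in this appendix can be made explicit. The following is a sketch under the assumption that the dimensionless KPZ equation (55) has the standard form with unit diffusion coefficient, coupling \(\lambda _\text {eff}\) and noise \(\eta \); the normalization is assumed for illustration only. Applying \(-\partial _x\) to the KPZ equation and setting \(u=-\partial _xh\), so that \((\partial _xh)^2=u^2\), gives

\[
\partial _th=\partial _x^2h+\frac{\lambda _\text {eff}}{2}\,(\partial _xh)^2+\eta \quad \Longrightarrow \quad \partial _tu=\partial _x^2u-\frac{\lambda _\text {eff}}{2}\,\partial _x\!\left( u^2\right) -\partial _x\eta ,
\]

or equivalently \(\partial _tu+\lambda _\text {eff}\,u\,\partial _xu=\partial _x^2u-\partial _x\eta \), which is a noisy Burgers equation driven by \(-\partial _x\eta \). Since \(\Vert u\Vert _{\mathcal {L}_2([0,1])}=\Vert \partial _xh\Vert _{\mathcal {L}_2([0,1])}\), control of u in \(\mathcal {L}_2([0,1])\) is control of the weak derivative of h, which is how a regularity statement for the Burgers solution translates into an \(H^1\)-type statement for h.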

As spatial white noise is excluded by (151), we introduced a finite cutoff \(\varLambda \) of the Fourier spectrum instead of using our approximation to the KPZ solution as a spectral Galerkin scheme and letting \(\varLambda \rightarrow \infty \). However, for the KPZ equation driven by spatially colored noise satisfying (151) or even an adapted version of (151) to a higher order diffusion operator as defined in Sect. 3.1 (see e.g. [71, 72]), in future work we want to derive a TUR taking the full Fourier spectrum into account.

We would like to conclude with the following remark. For a few years now, there has been a complete existence and regularity theory for the KPZ equation driven by space-time white noise, introduced by Hairer [75] (see also [76, 77]; for further reading on the so-called regularity structures developed in [75], see [78]). In [75] it is shown that, when the regularization is removed, the solutions of the KPZ equation with mollified noise converge after a suitable renormalization to the solution of the renormalized KPZ equation with space-time white noise. Because of this renormalization procedure (in which a divergent quantity needs to be subtracted) and the poor regularity of the solution, it is at present not obvious to us how the method developed in [75] can be of use for constructing a TUR.

Appendix D: Long-Time Behavior of \(\left\langle h\right\rangle \), \(\varDelta s_\text {tot}\) and \(\left\langle (h-\left\langle h\right\rangle )^2\right\rangle \)

We present a more detailed reasoning for the claim made in the text that \(\left\langle h\right\rangle \), \(\varDelta s_\text {tot}\), \(\left\langle (h-\left\langle h\right\rangle )^2\right\rangle \sim t\) for \(t\gg 1\) to arbitrary order in the perturbation expansion with respect to \(\lambda _\text {eff}\) (see eqs. (78), (83) and (108)). Our argument uses the relation between the KPZ and the Burgers equation in one spatial dimension: setting \(u(x,t)=-\partial _xh(x,t)\) turns the KPZ equation into the Burgers equation for the velocity field u(x, t), driven by the noise \(\eta ^\text {B}=-\partial _x\eta ^\text {KPZ}\). It is known from e.g. [79] that the two-point velocity correlation function in Fourier space, \(C(q,\omega )\), is given by

where a, b denote positive constants that are calculated explicitly in [79]; their exact form is irrelevant for our argument. With (152) the energy spectrum E(q) can be calculated via an integration over \(\omega \), which yields a momentum-independent constant [79]. Thus, the velocity–velocity correlation function becomes a constant, namely

with K essentially the kinetic energy [65, 79]. We use this result to find a solution to

i.e. to the averaged, dimensionless KPZ equation from (55). Taking the long-time limit of (154) and employing (153), again with \(u(x,t)=-\partial _xh(x,t)\), it follows that

The PDE in (155) is readily solved for \(\left\langle h(x,0)\right\rangle =0\) (i.e. flat initial condition) and yields

Hence, it is to be expected that in the perturbation expansion

\(\left\langle h_{k}^{(n)}(t)\right\rangle \sim t\) for \(t\gg 1\) holds for all non-vanishing \(\left\langle h_{k}^{(n)}(t)\right\rangle \). Thus, we factored out the time t in (78) and (79). A similar argument explains the form of the total entropy production in (108) and (109).
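The step from the averaged equation to linear growth in time can be spelled out as follows. This is a sketch under the assumption that the dimensionless KPZ equation (55) has the standard form with unit diffusion coefficient and coupling \(\lambda _\text {eff}\), and that the noise has zero mean; the prefactors are therefore illustrative. With \((\partial _xh)^2=u^2\) and the constant velocity correlation \(\left\langle u^2\right\rangle \rightarrow K\) at long times, the averaged equation becomes a heat equation with a constant source,

\[
\partial _t\left\langle h(x,t)\right\rangle =\partial _x^2\left\langle h(x,t)\right\rangle +\frac{\lambda _\text {eff}}{2}\,K .
\]

For the flat initial condition \(\left\langle h(x,0)\right\rangle =0\) the solution stays spatially constant, so the diffusion term vanishes and

\[
\left\langle h(x,t)\right\rangle =\frac{\lambda _\text {eff}}{2}\,K\,t ,
\]

which is linear in t. Under these assumptions the growth rate is set entirely by the non-linearity, in line with the vanishing of the mean at zeroth order in \(\lambda _\text {eff}\).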

For the case of the variance of h(x, t) we use the shorthand notation \(\widetilde{h}=h-\left\langle h\right\rangle \), so \(\text {var}[h]=\left\langle (h-\left\langle h\right\rangle )^2\right\rangle =\left\langle \widetilde{h}^2\right\rangle \) and, again, the velocity correlation function (152). Thus, with \(u=-\partial _xh\) we get

Let us now introduce a small wavenumber \(0<q_0\ll 1\) and split up the momentum integral according to

with \(c_1>0\) a constant. Using now that \(|q|^3\rightarrow 0\) in the momentum interval \([0,q_0]\), we neglect the second term in the denominator of the integrand in the last line of (159), which yields

where it was used that \(\omega _0\sim t_0^{-1}\), and where \(\widetilde{c}_2\) and \(c_2\) are positive constants in frequency and time, respectively. Hence, in the limit of large times we get

The finding in (161) explains the form of (83) and (84) in the text above. Finally, let us remark that the general form in (160) is also found in the calculations in Appendix A. Specifically, the constant \(c_1\) in (160) corresponds to the contributions from modes with \(k\ne 0\) in Appendix A, and the constant \(c_2\) in (160) corresponds to the \(k=0\) mode, which is the only mode yielding contributions linear in t.
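The split into a constant part from the modes with \(k\ne 0\) and a part linear in t from the \(k=0\) mode can be illustrated at lowest order in \(\lambda _\text {eff}\). The sketch below assumes that each Fourier mode then obeys a linear Langevin equation started at zero with decay rate \(4\pi ^2k^2\) on [0, 1] and unit noise strength; the function names, the normalization and the cutoff value are hypothetical and only serve the illustration.

```python
import math

def mode_variance(k, t, noise_strength=1.0):
    """Variance at time t of a mode obeying dh_k = -mu_k h_k dt + sqrt(noise_strength) dW,
    started at h_k(0) = 0, with the assumed decay rate mu_k = 4 pi^2 k^2."""
    mu = 4.0 * math.pi ** 2 * k ** 2
    if mu == 0.0:
        # k = 0: free Brownian motion, variance grows linearly in t
        return noise_strength * t
    # k != 0: Ornstein-Uhlenbeck mode, variance saturates at noise_strength / (2 mu)
    return noise_strength * (1.0 - math.exp(-2.0 * mu * t)) / (2.0 * mu)

def total_variance(t, cutoff=5):
    """Sum of the mode variances over |k| <= cutoff (finite Fourier cutoff)."""
    return sum(mode_variance(k, t) for k in range(-cutoff, cutoff + 1))

if __name__ == "__main__":
    for t in (1.0, 10.0, 100.0):
        print(t, mode_variance(0, t), total_variance(t) - mode_variance(0, t))
```

For large t the summed contribution of the modes with \(k\ne 0\) no longer changes, while the \(k=0\) contribution keeps growing in proportion to t, so the total variance takes the form of a constant plus a term linear in t.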

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Niggemann, O., Seifert, U. Field-Theoretic Thermodynamic Uncertainty Relation. J Stat Phys 178, 1142–1174 (2020). https://doi.org/10.1007/s10955-019-02479-x

DOI: https://doi.org/10.1007/s10955-019-02479-x