Abstract

Data assessment tools designed to improve data quality and real-time delivery of seismic and infrasound data produced by six seismoacoustic research arrays in South Korea are documented and illustrated. Three distinct types of tools are used including the following: (1) data quality monitoring; (2) real-time station state of health (SOH) monitoring; and (3) data telemetry and archive monitoring. The data quality tools quantify data gaps, seismometer orientation, infrasound polarity, digitizer timing errors, absolute noise levels, and coherence between co-located sensors and instrument-generated signals. Some of the tools take advantage of co-located or closely spaced instruments in the arrays. Digitizer timing errors are identified by continuous estimates of the relative orientation of closely spaced horizontal seismic components based on the root-mean-square error between a reference seismometer and each seismometer in the array. Noise level estimates for seismic and infrasound data are used to assess local environmental effects, seasonal noise variations, and instrumentation changes for maintenance purposes. The SOH monitoring system includes the status of individual ancillary equipment (battery, solar power, or components associated with communication) and provides the operator the capability to compare the current status to the historical data and possibly make remote changes to the system. Finally, monitoring data telemetry and overall data archival provide an assessment of network performance. This collection of tools enables array operators to assess operational issues in near real-time associated with individual instruments or components of the system in order to improve data quality of each seismoacoustic array.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

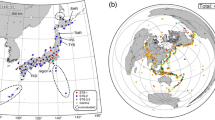

Southern Methodist University (SMU) and Korea Institute of Geoscience and Mineral Resources (KIGAM) have cooperatively operated a network of seismoacoustic research arrays (SARAs) composed of six arrays, BRDAR, CHNAR, KSGAR, KMPAR, TJIAR, and YPDAR in South Korea beginning in 1999 (Fig. 1). The goal of the SARAs is to provide state-of-the-art seismoacoustic data in real-time for the purposes of detection and characterization of interesting local and regional seismoacoustic events. The initial array configuration and subsequent installation were designed to record seismic and infrasound waves from natural and artificial sources in order to not only improve the characterization of these sources but also contribute to the understanding of both seismic and infrasound propagation path effects across the Korean Peninsula as a function of time (Stump et al. 2004). Studies based on data from the SARA network have provided an assessment of signals from large earthquakes (Kim et al. 2004; Che et al. 2013), infrasound sensor calibration (Kim et al. 2010), documentation of infrasound propagation effects (McKenna et al. 2008; Che et al. 2011), evidence of infrasound environmental effects (Park et al. 2016), infrasound signal detector performance (Park et al. 2017), network detection capability (Che et al. 2017), atmospheric change that is used in model inversions (Park et al. 2023), seismic and infrasound signals from surface explosions (Che et al. 2002, 2009a), and nuclear explosions (Che et al. 2009b, 2014, 2022; Park et al. 2018, 2022). Details of these SARA based studies can be found in Che et al. (2019). Recently, Stump et al. (2022) documented the public release of the regional seismic and infrasound waveforms recorded across the SARA network for the six Democratic People’s Republic of Korea nuclear tests including an assessment of data quality at each array for the explosion dataset. This paper further illustrates, documents, and discusses the data quality tools for the network as they may be applicable to other array operations.

High data quality is an operational goal for these seismoacoustic arrays, similar to operations of others. A data quality analyzer was introduced by Ringler et al. (2015) designed to evaluate seismic data and document instrument quality at the Albuquerque Seismological Laboratory. Casey et al. (2018) introduced an automated program, Modular Utility for Statistical Knowledge Gathering (MUSTANG), to assess the quality of seismic data archived at the Incorporated Research Institutions for Seismology Data Management Center. Recently, Aur et al. (2021) developed a Python-based library for quality control of seismic waveform data (Pycheron), which includes more functionality for MUSTANG. Building on this previous work, this paper discusses new tools designed to monitor seismic and infrasound array data quality in a quantitative and automated manner. This paper also documents the implementation of these tools on the data recorded by the six SARAs over the last 20 years.

Three types of monitoring tools were developed to improve network operations: (1) data quality tools based on analysis of the seismic and infrasound data; (2) a system designed to quantify and archive the state of health (SOH) of equipment in near, real-time; and (3) monitoring of data telemetry and archival processes. We, first, summarize the background and development of the SARAs. Next, we document the current data monitoring tools that quantify data gaps, relative seismometer orientations across the arrays, infrasound polarity, noise estimates for seismometers and infrasound sensors at the arrays, and a characterization of the instrument-generated signals. These monitoring tools are used to evaluate overall data quality in order to identify technical issues at the arrays. The second set of tools focuses on real-time station SOH monitoring of the SARAs. In the subsequent section, we document a tool designed to track the overall status of the data archiving system and network performance. Finally, we summarize the tools in the conclusions section.

2 Seismoacoustic research arrays

Since 1999, the SARAs have evolved from a single prototype research array with vertical short-period seismometers and custom infrasound sensors into the existing network of multiple arrays with broadband three-component (3C) seismometers and up to 16 infrasound sensors per array (Stump et al. 2022). The arrays are aligned approximately east–west across the far north of South Korea with a spacing of about 100 km, except for TJIAR, which is located near the center of South Korea (Fig. 1a). To better characterize the infrasound wavefield from sources across the Korean Peninsula, the infrasound arrays have multiple sensor spacings varying from 0.2 to 1.0 km with co-located seismometers at some locations (Fig. 1b).

CHNAR and KSGAR each have four primary seismoacoustic elements (~ 1 km spacing), consisting of one broadband 3C seismometer (Guralp CMG-3TD) in a shallow borehole (~ 10 m depth) and an infrasound subarray with sensor spacings of 60–70 m. Each of these arrays has a total of 13 infrasound sensors and 4 seismometers. BRDAR has one additional element (16 infrasound sensors and 5 seismometers). KMPAR, YPDAR, and TJIAR have 7, 5, and 4 infrasound sensors, respectively, configured with sensor spacings from 0.2 to 1 km. KMPAR and YPDAR have 1 and 2 broadband 3C seismometers, respectively, while TJIAR has no seismometer within the array. KMPAR is sampled at 100 samples/s, while all other arrays are sampled at 40 samples/s. Chaparral Physics Model 2.3 microphones are used for all infrasound sensors and are recorded on 24-bit digitizers. The arrays have weather sensors that measure horizontal wind speed, wind direction, and temperature (4 sample/s), installed 2 m above the surface. Each acoustic sensor is attached to 10, 8-m porous hoses in a star-like configuration to reduce background noise from winds at the boundary layer. Data telemetry relies on a hybrid real-time design including radios, internet, or cell modems to KIGAM in South Korea and SMU in TX, USA. The tools described in this paper were developed to provide rapid assessment of these instruments and their associated data quality and telemetry in order to promptly identify needed maintenance or repair.

3 Data quality monitoring tools

Data gaps, seismometer orientation, infrasound polarity, noise levels, and characterization of the signals from instrument activities are assessed for the SARAs on a regular basis with weekly and monthly reporting. This paper uses recent data examples to illustrate the tools as well as performance differences across the arrays.

3.1 Data gaps

Near, real-time data telemetry includes inter-array and long-haul communication to SMU and KIGAM which makes data gap monitoring critical to the rapid identification and assessment of failures for maintenance purposes. Data gaps are summarized by element and array in order to help identify possible causes of the outages. The tool remotely identifies a variety of network communication problems as well as power failures related to severe weather conditions and ongoing maintenance work. Under some situations, these outage reports can be used to trigger manual backfill of data when the CD 1.1 telemetry protocol fails to fill transmission outages automatically. Summaries are reported weekly after building the full database. Weekly data gap summaries typically lag real-time by 2 days in order to capture any data automatically back-filled following a communication lapse. The subsequent monthly data gap summary is used to check whether issues identified during the short term are addressed.

A graphical data gap summary for all SARAs for 2018 is included in Fig. 2. Note that some elements of BRDAR, CHNAR, and KSGAR have similar data stream issues indicative of radio telemetry issues that affect multiple sensors or array elements. Data gaps are sorted into 1-min increments in order to track problems and document temporal trends. Most data gaps are associated with communication problems during severe weather including lightning, fieldwork, or server maintenance. Although many individual gaps are included in Fig. 2, the overall data acquisition rates are high, with an average rate of 99.4% (BRDAR; 98.9%, CHNAR; 99.8%, KSGAR; 99.9%, KMPAR; 99.9%, TJIAR; 99.6%, and YPDAR; 98.4%) as a result of prompt triggered by the monitoring and include either remote access to the array to solve a problem or a physical visit to the array. A physical visit is relatively easy for TJIAR, which is located at KIGAM facility in Daejeon, while visit to other arrays requires more effort and time due to the great distance from KIGAM.

A summary of data gaps associated with individual seismic and infrasound data streams for 2018. Blue diamonds indicate gaps of less than 1 min, while red diamonds are gaps extending beyond 1 min (red lines depict the time period of longer gaps, i.e., YPDAR 21 in Jan–Feb). Average data acquisition rates for each seismoacoustic array are summarized to the left. Data gaps as percentages for each element are summarized to the right

3.2 Seismometer orientation

Accurate horizontal orientation of the 3C seismometers is important for data quality control as it contributes to a variety of different types of seismological studies such as seismic source characterization, anisotropy quantification, receiver function estimates, and surface wave dispersion or polarization (Zha et al. 2013; Xie et al. 2018). Many of the seismometers in the SARAs are deployed in shallow boreholes (~ 10 m) without hole locks, so orientation may change whenever an instrument is replaced and thus must be documented if absolute orientations are required for subsequent analysis. Optimum emplacement of a seismometer requires identification and orientation along true north or some other agreed standard. However, there are often significant errors in seismometer orientation as a result of the field environments, such as strong magnetic anomalies near the seismometer, human error in declination calibration (Wang et al. 2016), or other errors associated with emplacement. In order to track seismometer orientation, numerous studies use ambient noise data (Xu et al. 2018), synthetic experimental data from the instrument (Xie et al. 2018), and P-wave particle motions (Wang et al. 2016).

As part of routine quality control assessment, it has been found useful to continuously estimate seismometer orientation differences across array elements. A reference seismometer is identified at each array. Usually, the reference is the center array element (BRD01, CHN01, and KSG01), except for YPDAR (YPD21) where only two seismometers are installed. Seismometers at BRDAR and YPDAR are deployed in near-surface vaults, while CHNAR and KSGAR use postholes for the seismometers (~ 10 m depth from the surface). As a result of the vaults, the orientation of seismometers at BRDAR and YPDAR can be readily set to true north, but those at CHNAR and KSGAR cannot and require comparison to a seismometer of known orientation. The absolute and relative orientation angles between seismometers as a function of time are tracked, assuming tilt effects can be ignored.

Horizontal component waveforms are filtered from 0.10 to 0.35 Hz in order to emphasize the microseisms which are used to estimate the relative orientation angle. The horizontal components (EW and NS) of waveform \(x(t)\) at station, \(k,\) are reformatted to a complex coordinate defined as follows:

We estimate the \(\mathrm{semblance}, S\left(t\right)\), which is the ratio of the power of average absolute \({z}_{k}\left(t\right)\) and average power of absolute \({z}_{k}\left(t\right)\), at each time point:

where N is the number of stations. We extract points with \(S\left(t\right)>0.8\) and amplitude (displacement) < 30 nm as a random set of samples. A total of 1000 data points are extracted from segments of each array element.

The extracted points \(\left({t}_{e}\right)\) are used to calculate the root mean square (rms) amplitude between a known reference station, \({z}_{\mathrm{ref}}\left({t}_{e}\right)\), and the comparing station, \({z}_{k}\left({t}_{e}\right)\) at each point (t) while rotating the angle (\(\theta\)) from 0 to 360 \(^\circ\).

The minimum \({Z}_{rms}\left({t}_{e,\theta }\right)\) provides the orientation angle estimate between the reference, \({z}_{\mathrm{ref}}\left({t}_{e}\right)\), and the comparing station, \({z}_{k}\left({t}_{e}\right)\).

An example seismometer orientation plot as a function of time is given in Fig. 3, documenting maintenance issues at several seismic stations for the time period from June 2020 to March 2021. Calculations can fail due to missing data from one of the seismometers which can be confirmed by comparison with the data gap characterization. When the orientation between seismometers is expected to be stable (i.e., no fieldwork or a change in calibration), sudden changes in orientation can be indicative of either a GPS timing problem or a sensor problem (Fig. 3). The monitoring is also useful to confirm times and associated orientation changes that occur during field maintenance. BRDAR and YPDAR both have vault installations where it is easier to assess the relative orientation of instruments and compare to the automated estimates. The relative orientations of BRDAR and YPDAR are close to 0°. The tool identified seismometers that diverged from 0° following initial installation at BRDAR, motivating a separate reorientation of seismometers (in September 2020 in Fig. 3).

Seismometer orientation values as a function of time document maintenances issues associated with particular seismometers from June 2020 to March 2021. Note that the scale of the y-axis is different for vault (BRDAR and YPDAR) and posthole (CHNAR and KSGAR) seismometers. The orientation estimate fails during data gaps. Instrument issues or human actions are also noted with yellow arrows in the plot

The relative orientation estimates for CHNAR and KSGAR were corrected to absolute values using two vault seismometers of known orientation at these two sites, CHNB and KSA operated by KIGAM (not part of the SARAs). These seismometers are each located close to the center of CHNAR (CHN01) and KSGAR (KSG11). Several orientation changes are documented at CHN31 due to seismometer replacements. All orientations for CHNAR were re-adjusted in September 2020 when the standard seismometer, CHNB, was identified to have had an error in its estimate that was confirmed during fieldwork. Orientation estimates for KSGAR are relatively stable, reflecting a lack of instrumental issues (except for small data gaps) for the documented time period.

Although the 1-h orientation estimates produce scatter that can be reduced using longer windows, the shorter windows provide the ability to promptly estimate changes in seismometer orientation that can be incorporated into weekly and monthly reporting. Table 1 summarizes the average and standard deviation of orientation estimates at BRDAR, YPDAR, CHNAR, and KSGAR for the time periods of February to March 2021 shown in Fig. 3. Under healthy and stable seismometer conditions, standard deviation estimates for orientation at all arrays are less than 2.5°.

3.3 Infrasound polarity

An incorrect polarity of a single infrasound waveform can degrade signal detection that relies on array processing using waveform similarity. Strong impulsive signals with inverted waveforms can easily be recognized by eye, but when signals are obscured by high background noise, it can be challenging to detect an inverted channel. Prompt monitoring for inverted infrasound polarities allows problems to be quickly corrected in the field or documented for later research, especially for data used in array processing. The importance of array to infrasound data motivated the development of an automated polarization algorithm.

This quality control tool like the others is closely related to maintenance operations where the replacement of an infrasound sensor can result in a change in polarity due to incorrect wiring or cabling. Polarity assessment relies on cross-correlation between pairs of closely spaced elements at low frequencies. Based upon an initial review of infrasound data, a filter band of 0.5–1.0 Hz was used to identify inverted waveforms relative to others with consistent polarity. Normalized correlation coefficients (CC) between the reference element and each near-by sensor are computed using 60-s windows and averaged into 1-h blocks, under the assumption that the reference element is operating with normal polarity. CC values range from − 1 to 1 (correlation + 1 = perfect match, 0 = no linear relation, and − 1 = perfect match, but inverted polarity) with negative CC values indicative of an inverted waveform.

The automatic polarity monitoring tool is illustrated using KMPAR infrasound array data (1–30 November 2018), which had a polarity issue at the time. CC values between the reference array element (KMP01) and other array elements (KMP02, KMP03, KMP11, KMP12, and KMP13) were estimated and displayed (Fig. 4). The results show that the CC value between KMP01 and KMP02 is higher than other pairs because this pair of elements are closest together (~ 20 m) relative to the other pairs (~ 60–110 m). The KMP03 sensor was inverted, and KMP13 was broken at the time of these estimates, as reflected in the automatic monitoring results (minus and zero CC values, respectively). These maintenance issues were fixed during fieldwork on 15 November 2018 (positive CC values from 15 November in Fig. 4) documenting the effectiveness of this automated tool. The sudden dip (CC values ~ 0) in the correlation coefficient across all channels on 8–9 November was caused by heavy rain at the site producing incoherent noise across the array elements.

Automatic infrasound polarity estimates at KMPAR for 1–30 November 2018. Correlation coefficients between the reference element (KMP01) and other elements, KMP02, KMP03, KMP11, KMP12, and KMP13 are displayed. KMP03 had an inverted waveform, and KMP13 was broken at the beginning of month which were subsequently fixed during fieldwork on 15 November 2018 as documented by the output of the automated tool. Distances between KMP01 and other infrasound array elements are summarized at the bottom right

3.4 Seismometer and infrasound noise evaluation

Noise generated by weather systems, ocean waves, local environment, and cultural activities can be either coherent or incoherent. During detection processing, this noise can impact the separation and interpretation of signals of interest and thus need quantification. This section summarizes the different noise assessment tools and documents results from their application to seismic and infrasound noise spectra for the SARAs on a monthly basis. These noise estimates are also used to identify technical issues with the instrumentation. There is no seismic data at TJIAR, so infrasound data is only analyzed from this array. Two spectral methods are used, one designed for single sensor data, and a temporal change method that compares multiple closely spaced sensors.

3.4.1 Noise characteristics using single sensor data

The first noise characterization is based on a standard spectral estimation procedure that estimates the power spectral density (PSD) function and is commonly used to specify instrument performance (Hedlin et al. 2002; Ringler et al. 2010). In this study, spectral estimates use Welch’s method (Welch 1967), producing a single power spectral estimate from an average of spectra taken over regular time intervals. These spectral estimates are based on an average of four spectra, each taken from consecutive 204.8 s time intervals, following a procedure described by Hedlin et al. (2002). This analysis quantifies the range of noise conditions at each array as a function of time. The 5th and 90th percentile (low and high) noise levels observed at all arrays for example seismic and infrasound sensors based on 1 month of data are displayed in Fig. 5. Data for June and December 2018 are compared to illustrate seasonal variations. Noise levels also vary between individual array elements as discussed in the next section. The array element with the lowest noise in each array was used in the array comparisons.

The 5th (dashed lines) and 90th (solid lines) percentile noise spectrum from seismic (a and b) and infrasound data (c and d) during June and December 2018. Global low and high noise models (HNM and LNM) for seismic (Peterson 1993) and infrasound (Marty et al. 2021) data are compared to the noise spectra

Seismic noise associated with ocean wave interactions consists of two types of microseisms: primary (0.05–0.1 Hz) and secondary (0.1–0.2 Hz) (Sheen 2014). These noise sources are observed in Fig. 5a and b. The seismic noise spectra are compared to the global low and high noise models (HNM and LNM) by Peterson (1993) based on the Global Seismographic Network (GSN) data. The 5th percentile seismic noise spectra from all seismometers at frequencies below 0.2 Hz are similar to the LNM during summer (June) and winter (December). At frequencies > 0.4 Hz, noise levels rise above the LNM, indicative of increased cultural noise at local distances. KMPAR has relatively high noise levels due to its proximity to both a metropolitan and an estuary environment. BRDAR also has high noise levels in the 0.5–3 Hz range, caused by ocean wave interactions with the island where the array is deployed. During the wintertime, the noise level increases by up to 10 dB over a broad range of frequencies (0.2 to 20 Hz) for all arrays close to ocean environments (BRDAR, YPDAR, and KSGAR). Additional contributions from near-surface winds to the noise estimates are discussed in subsequent sections. The noise levels at arrays located far inland (CHNAR) have reduced seasonal effects. BRDAR and YPDAR seismometers are installed near the surface (1-m depth) in vaults, while all other seismometers are deployed in shallow postholes (~ 10-m depth). The noise estimates indicate that noise levels are affected by the local environmental conditions as well as the installation depth. The 90th percentile noise spectra are similar in shape to the 5th spectra but are increased by 25 dB.

The 5th and 90th percentile infrasonic noise levels are compared to the global HNM and LNM by Marty et al. (2021) based on infrasound station data from the International Monitoring System (IMS) operated by the Comprehensive Nuclear-Test-Ban Treaty Organization (CTBTO) (Fig. 5c and d). The microbarom peak is dominant around 0.2 Hz in the 5th percentile noise estimates for all elements at all arrays. Overall, the 5th percentile spectra are closest to the LNM, while the 90th noise spectra fall between the LNM and HNM. Arrays located on islands such as BRDAR and YPDAR are noisier (difference of about 25 dB at 0.1 Hz) than the inland arrays, CHNAR and TJIAR. KSGAR produces intermediate noise levels indicative of the effect from the ocean which is nearby (Park et al. 2016).

When seismic and infrasound sensors are damaged or degrade with age, their spectra are observed to depart from the typical spectral shapes, providing a basis to trigger maintenance actions. Therefore, spectra that depart from the long-term estimates are highlighted each month. Abnormalities are quantified by comparing closely spaced instruments within array, as discussed in the next section. These evaluations are also used to verify calibration values following essential fieldwork. The current monthly monitoring tool documents noise power densities at the 5th percentile for all seismic and infrasound sensors in order to evaluate data quality under low noise conditions.

3.4.2 Temporal change noise method using multiple sensor data

Tools designed to highlight temporal changes in seismic data provide an additional quality control tool as illustrated by Ringler et al. (2010). Temporal analysis produces a time-dependent relative spectral comparison between a reference instrument and all other instruments in an array under the assumption that the reference element is operational and stable. We utilize the fact that many infrasound sensors and seismometers are nearly co-located. Spectral power levels from individual three-component seismic instruments are compared with data from a nearby neighbor in frequency as a function of time.

Seismic spectral estimates are compared at BRDAR (sensor 01 to 11/21/31/41), CHNAR (01 to 11/21/31), KSGAR (01 to 11/21/31), and YPDAR (21 to 31), similar to the seismometer orientation analysis. In each case, the center element of the array is the reference, except for YPDAR. KMPAR has only one seismometer, so the three components are compared to each other (HHZ to HH1, HH1 to HH2, and HHZ to HH2, where HH1 and HH2 are horizontal components). In the case of infrasound data, spectral estimates from small-aperture arrays at BRDAR, CHNAR, and KSGAR are compared (for example, sites 0, 1, 2, 3, and 4 shown in Fig. 1 are separately evaluated) under the assumption that closely spaced sensors should exhibit similar noise conditions. Infrasound spectral estimates for KMPAR, TJIAR, and YPDAR are compared using the center array element as a reference. Information summarizing the comparisons of pairs of instruments is found in Table 2. In these temporal comparisons, hourly PSDs are estimated using Welch’s method and averaged in discrete frequency bands followed by the calculation of the difference in the hourly band-averaged power levels between sensors.

Spectral comparisons of seismic data (BHZ) at BRDAR from the time period 2016 to 2018 are displayed in Fig. 6. The 5th percentile spectral differences indicate that BRDAR seismometer noise at the different array elements has similar spectral shapes, except for BRD21, which had a sensor issue at the time of the estimates (Fig. 6a). The seismic spectral difference using BRD01 to compare to all other seismometers (spacing of 1 km) documents nearly identical levels in the frequency band of 0.05 to 1 Hz, which includes the two microseism peaks (Fig. 6b). Cultural noise effects (i.e., vehicle movement on the road) are documented above 1 Hz as BRD01 has higher noise levels above 8 Hz. Data gaps during the 3 years produce noise comparison failures as indicated by the blue vertical lines in Fig. 6b. There are several gradual changes in the relative comparisons as a function of frequency and time for the BRD01-BRD21 comparison. These changes are indicative of the slow failure of BRD21 beginning in January 2017 which culminated in the sensor replacement in June 2018 (Fig. 6b). In this case, the sensor failure began with abnormal low-frequency amplitudes that gradually spread across the entire frequency band.

Infrasound data is more sensitive to wind and temperature than seismic data, and the resulting infrasonic noise differences are less consistent and more scattered in frequency and time than the seismic data. A similar assessment as shown in Fig. 6 using infrasound data at KSGAR is described in Text S1 and Fig. S1. It was found that the spectral comparison monitoring captures the infrasound sensor or hose (water-filled) issues that were resolved by prompt field work. These assessments highlight issues related to the instruments, identify starting and ending times of problems (usually, ending time is correlated with the completion of fieldwork or remote action), and document the frequency extent of sensor problems. These data assessments can also be used to track ongoing fieldwork over short time periods, documenting when problematic issues have been resolved or if additional actions are needed before the field team leaves the site.

Temporal change methods are used to routinely identify possible technical problems with a sensor or hose. These types of issues are typically captured by abnormal power differences in the frequency band around 0.1 Hz (empirically observed) for both seismic and infrasound data. Therefore, we quantify temporal noise changes in this limited frequency band to provide a simple measure to identify such technical issues promptly, automatically, and effectively.

Figure 7 displays seismic noise comparison on the north component (BHN) between BRD01 and BRD21 for 6 months (July to December 2018). The BRD21 seismometer exhibited abnormal noise during this time period, as shown in the red or blue area in Fig. 7a. Extracting noise level differences centered at 0.1 Hz captured the sensor problem as indicated by the dashed line in Fig. 7a with absolute levels plotted in Fig. 7b. The area in red at the end of August (in Fig. 7a) is correlated with a data gap at BRD21 due to a broken sensor. The broken sensor at BRD21 also produced a gradual change in noise in the time and frequency plot from October as captured in the 0.1 Hz noise difference plot until the end of December. The average absolute noise level Differences (ANLDs) at 0.1 Hz are summarized weekly as a result (Fig. 7c). This example illustrates that high average ANLDs in a week are often correlated with technical issues (sensor, hose, connection, and network, etc.) providing a simple weekly monitoring tool.

a Differences in noise estimates between BRD01 and BRD21 (BHN) as a function of time and frequency for the time period from July to December 2018. b Noise level differences (NLDs) between these two array elements at 0.1 Hz for the same time period. The black dashed line in figure (a) denotes the frequency that noise differences are extracted and plotted in (b). c Average of absolute noise level differences (ANLDs) are plotted at 1-week increments

Figure 8a displays ANLDs for two array elements at all pairs of seismic and infrasound elements for 3 years (2016–2018). Table 3 summarizes the average values of all ANLDs (in log-scale) over the 3-year time period. Seismic noise differences are lower than infrasound noise differences, reflecting the fact that infrasound differences are more sensitive to environmental effects such as wind or ocean waves near the arrays. In the case of BRDAR, the average ANLD for infrasound (13.6 dB) is nearly ten times larger than the estimate for the seismic data (1.3 dB). CHNAR has the smallest scatter in ANLD values, consistent with lower noise levels across the array (Park et al. 2016). Similar to the seismic data, CHNAR infrasound noise is the quietest with the least scatter in ANLD values. TJIAR also has noise estimates that are similar between sensors. CHNAR, YPDAR, and TJIAR display seasonal variations in microbarom energy with increased noise in the summer and decreased noise in the winter. This effect might be due to the variations in wind speed and direction across the Korean Peninsula.

a Average noise level difference (ANLD; dB) at 0.1 Hz for two array elements of infrasound (top) and seismic (bottom) data for 3 years (2016–2018). Gray points represent the instrument pairs used for temporal comparison. The average (black line) and standard deviation (blue line) for ANLD values at all arrays are plotted. Thresholds (red line) summarized in Table 3 were set to identify technical issues. b ANLD for seismic and infrasound array data (top) plotted against average wind speed measured at each site from 2016 to 2018

We set a statistical monitoring threshold (\(MT\)) to identify abnormal features at 0.1 Hz, as defined by:

where \(\mu\) is the average ANLD (in log-scale) for the entire time period; \(\sigma\) is a standard deviation (in log-scale); and \(c\) is a scaling factor. We set \(c\) to 3 and 5 for seismic and infrasound data, respectively, with infrasound \(MT\) set at a higher value as a result of the greater noise variation between sites. In the case of YPDAR infrasound, we set c to 7 as this array has a higher ANLD than others due to both its proximity to the ocean and larger sensor spacing than other array configurations in Table 2. Based on these criteria, the \(MT\) of all arrays is summarized in Table 3 and displayed in Fig. 8a (red lines). Both seismic and infrasound array comparisons with low ANLDs below \(MT\) are consistent with healthy operating conditions. Points above the appropriate \(MT\) correlate with sensor, cabling, and network issues.

Average ANLDs for seismic and infrasound array data are compared in the top of Fig. 8b. Infrasound ANLDs are larger than those for the seismic one, due to several factors. In order to aid the physical interpretation of the variations in noise levels, weather data from co-installed weather sensor at each site are plotted (bottom of Fig. 8b). These results indicate that (1) surface winds (higher wind speeds at arrays near ocean, BRDAR and YPDAR) may have a bigger effect on infrasound ANLD than for the seismic data; (2) KMPAR has high ANLDs attributable to frequent instrument issues or cultural noise near the site; (3) increased wind speed at YPDAR, CHNAR, and TJIAR during the wintertime reduces ANLD, as a result of a propagation effects (high surface wind increases the spectral similarity between sensors); and (4) there may be seasonal variations in strength or detection of microbaroms and microseisms that need further analysis, including the assessment of the impact of regional and global atmospheric propagation effects on the observations. Variations in infrasound noise between two array elements are also dependent upon the distance between elements. Larger sensor spacings (YPDAR) produce higher ANLD estimates consistent with different site and atmosphere effects, as a result of the spatial scale length of the noise. On the other hand, TJIAR, which has the largest spacing (1 km) and the smallest scatter in the trend (Fig. 8a), suggesting that the noise levels between array elements are similar.

ANLD analysis was applied to illustrate weekly ANLD results for seismic and infrasound data (December 25–31, 2018) (Fig. 9). Note that ANLD values for the horizontal components are larger than those for the vertical components (except for KSG01-31). The long-period noise levels on the horizontal components are higher than those for the vertical component noise due to noise associated with the seismometer tilt and the effects of local atmospheric pressure fluctuations (Beauduin et al. 1996). These effects also produce noise levels that are more variable in time (Webb 1998; Berger et al. 2004). At BRDAR and YPDAR (vault seismometers), the horizontal ANLD values exhibit more scatter than for the vertical estimates which may reflect variable atmospheric conditions. As documented in Fig. 7, a high ANLD at BRD21 is a result of a sensor problem. ANLD values for pairs of infrasound sensors are quite different. There is a significantly higher ANLD (~ 200 dB) at BRD30-32 as a result of a broken sensor at BRD32. Other ANLDs above 30 were observed at KSG00-02 and KSG00-04 again due to sensor issues (KSG02 can no longer be physically accessed and is failing and a broken sensor at KSG04, as shown in Fig. S1). These results suggest that this simple automated assessment can be used to interpret data quality in a timely manner.

3.5 Signal detection with analyst review for automated instrument activity

An additional monitoring tool was developed to detect and identify signals associated with instrument calibration, seismometer re-centering, and seismometer lock/unlock activities. A record of these activities is typically kept as they relate to seismometer health. The tool automatically identifies these activities based on waveform analysis and is used to confirm field work and document its success or failure. The tool is illustrated using instrument-generated signals that occurred in a 10-day period from July 22 to 31, 2020. A total of 48 segments (seismometers; BRDAR/CHNAR/KMPAR/KSGAR/YPDAR and channels; BH1/BH2/BHE/BHN/BHZ/HHE/HHN/HHZ) were evaluated. In order to identify lock/unlock and re-centering signals, waveforms are filtered above 0.2 Hz with a window length of 20 s.

Each seismometer is calibrated once a week with a relatively high amplitude sine wave signal. Sine wave frequencies are varied each week so that over multiple weeks the full instrument bandwidth is covered. An automatic analysis routine locates the calibration signals then fits a synthetic sine wave to measure the amplitude and phase at the determined calibration frequency. These values are compared to the factory calibrations to determine if the seismometer is within a 5% tolerance in amplitude and phase. Since the sine wave signals have a high spectral density, their estimates are little affected by seismic noise. Figure 10a displays the segment time frame (HHC/BHC channels) that we feed for the 10 days. An example calibration signal recorded on CHN01 BHZ has a duration of ~ 7 min (left figure of Fig. 10a).

Automatic instrument activity signal detections for calibration (a), and lock/unlock and re-centering signals (b) for 10 days of data (July 22–31, 2020). Example signals are displayed in the left plots. The red boxes indicate the day (Julian date) including re-centering signal that has high cepstral values (> threshold, 0.23). The color bar indicates the cepstral value

To detect a repeated step signal associated with mass-lock-unlock, the convolution of the continuous waveform and the input signal (30-s boxcar function) is calculated. And then, we find the time where the convolution output rises to a value greater than 0.5. An example signal is shown in the top left of Fig. 10b. In order to detect a re-centering signal (bottom left of Fig. 10b), we use a Cepstogram, the inverse of the Fourier transform of the estimated signal spectrum (called the spectrum of a spectrum). This tool identifies signals that are periodic in frequency. Maximum cepstral values are empirically picked in the quefrency domain (a measure of time from a cepstral graph) from 1.9 to 2.15. The peak in the cepstral plot indicates harmonics in the spectrum. A cepstral threshold of 0.23 is used. The analyzed time period includes known lock/unlock and re-centering operations performed by KIGAM and SMU as documented below. The outputs from the automatic procedures (below) identify these activities (Fig. 10b).

-

KIGAM performed a mass-lock-unlock on BRD11 on 28-July-2020 at about 00:15–00:30UTC, then re-centered BRD11 at 00:33UTC and again at 03:01UTC.

-

SMU re-centered KSG11 on 29-July-2020 at 16:57UTC.

4 Real-time station state of health (SOH) monitoring

Operation and maintenance of the network of arrays are improved by a continuous, integrative monitoring system. There are various points of hardware failure that can impact the network, related to power, GPS, communication links, batteries, solar panels, and equipment temperature, among others. An additional integrated monitoring system has been developed and implemented to quickly check the status and fault points of these hardware systems. The SARAs currently use Guralp equipment, which provide state of health (SOH) information related to the equipment in the extensible markup language (XML). This XML information is reformulated and integrated into a single integrated monitoring tool using InfluxDB (see Code Availability section), which is a time-series database. The integrated database is displayed using Grafana (see Code availability section), a data visualization program.

The system monitors the SOH of all elements of the arrays in real-time and can quickly compare current conditions to historical SOH data. Currently, collected data includes digitizer’s internal and external disk usage rates, ratio received signal strength indicator, GPS status and time error, sensor input voltage, seismometer mass-position, network packet delays and loss, system temperature, data reception rate, and digitizer operation (Fig. S2) as well as various system characteristics (Fig. S3). Data availability and data delay statistics for real time CD1.1 data stream are evaluated by using the Standard Station Interface provided by the CTBTO. A threshold value based on empirical monitoring experience is set in the software so that if the threshold for any monitored process is exceeded an alert is sent to the operation manager or the field team using Telegram (see Code availability section). As an example, Fig. 11 displays information from CHN11_14 including serial number, reboot information, log entry, and measurements such as root file system usage, storage disk usage, GPS status, network time protocol (NTP), estimated error, network delay, and temperature.

The SOH monitoring contributes to diagnosing a failure, sometimes before any loss or degradation of data, and triggers a prompt problem response as well as documents actions in real-time during maintenance fieldwork. The monitoring of the system voltages for each array element is especially critical but complicated by the fact that some stations rely on AC power and others on solar power. As cloud cover reduces sun light, aging batteries can suffer from decreased charging efficiency or an AC power charger malfunction can lead to a failure. The tool provides the data needed to assess whether an outage is due to a failing battery, solar power, or a charging abnormally at a site. The example display includes system voltages for BRDAR from 16 to 21 May 2020 (Fig. 12). When the voltage of BRD10_13 and BRD11_14 dropped below 11.7 V around 12:00 UTC (17 May 2020), an alert was generated using Telegram. As a result of the alert, AC chargers at these sites were subsequently repaired on 20 May 2020 without any loss of data as the batteries had not yet fully discharged.

5 Data telemetry and archive monitoring

Finally, it is important to monitor the data archiving system that receives and stores the data telemetered from the field. This aspect of monitoring is designed to check that the field data is complete, document any data latency, and assure that the data is available for post processing systems. To track the overall communications network status and alert operators to general network and computer problems, a NAGIOS (Code availability section) type status tracking system, familiar to many system administrators, runs at the datacenter. It relies on monitoring tasks including data latency, expected data volumes from each site, communications link status, and various computer hardware status indicators, such as power, or CPU load. It also monitors periodic data quality checks, such as the automatic calibration routines. Again, out-of-range conditions trigger email and text messages to appropriate personnel and automatically escalate the warnings if the condition persists.

6 Conclusions

Tools have been designed, implemented, and integrated to monitor the quality of both seismic and infrasound data collected by six SARAs in South Korea as well as provide data to support maintenance and repair operations. Three types of monitoring tools were developed and are reviewed: (1) data quality tools that rely on the analysis of seismic and infrasound data; (2) a monitoring system that integrates state of health (SOH) information from the equipment in real-time; and (3) a system designed to quantify data archival and availability.

Data quality tools document data gaps, seismometer orientation, infrasound polarity, noise levels and coherence between co-located sensors, seismic calibration, and instrument-generated signals. Data gap monitoring contributes to solving problems associated with either data delivery or database construction and is used to track network issues or system power failures related to weather or server maintenance. The seismometer orientation monitoring is used to identify the problems associated with GPS timing as well as document the history of seismometer maintenance or replacement that can be accompanied by a change in sensor orientation, especially for borehole seismometers. The infrasound polarity tool, based on the correlation between two array elements waveforms, is used to identify inverted waveforms in order to improve array processing. Two spectral methods, single sensor data and a temporal change method between two closely spaced sensors, are used to quantify the range of noise conditions at each array and assess longer term data quality changes. The 5th and 90th percentile noise levels for both seismic and infrasound data from all arrays are regularly compared to the global noise models by Peterson (1993) and Marty et al. (2021), respectively. These noise assessments are used to highlight issues related to instruments, identifying the frequency band of the sensor issue and quantifying the start and end times of these abnormalities (end times often correlates with completion of fieldwork or a remote action). The noise comparison tool is also used to track ongoing fieldwork and can in some cases identify additional actions needed to resolve problems while the field team is at the site. Noise level differences between individual sensors are found to depend on local winds, instrument health, site environment (near ocean or inland), and spacing between array elements, especially for infrasound data.

The integrated monitoring system provides the state of health of the equipment, including disk usage rates in the digitizer, power, GPS status, sensor input voltage, seismometer mass-position, communication statistics, battery status, and temperature. Alerts are sent when abnormal features are automatically identified. The operations manager and field team use this information to diagnose problems and develop solutions. This analysis is critical to effective field maintenance and repair.

The overall status of the data archiving system, communications, network, and computer hardware are monitored by a NAGIOS type tracking system. This third component of the system is designed to assure the timely delivery of data into the database and access to this data for various processing tasks. Similar to the SOH system, out-of-range conditions trigger email and text messages to appropriate personnel and automatically escalate the issue if the condition persists.

This collection of monitoring tools including the data quality measurements, the equipment SOH and data transmittal, and archival measures enables us to automatically assess technical issues and promptly take corrective measures to ensure high-quality data. Our analysis provides feedback to operations on daily, weekly, and monthly basis. There is value in further research and development into data quality control methods. A few of the comparative techniques in the waveform-based analysis are based on the assumption that reference sensor is healthy. Therefore, this comparative method can fail if the reference sensor itself has problems unless we switch the reference to another healthy element data. To effectively monitor anomalies across all pairwise comparisons, all elements pairwise can be simultaneously utilized in a comparative analysis. Other techniques possibly including machine learning tools can be developed to target specific uses such as identifying a slow failure of pieces of equipment previously identified.

Data availability

The datasets generated during and analyzed during the current study are not publicly available.

Code availability

The codes used in the Data Quality Monitoring Tools section are available from the corresponding author on the request. Programs introduced in the Real-Time Station State of Health (SOH) Monitoring section can be obtained from the websites (InfluxDB, https://www.influxdata.com/; Grafana, http://grafana.org/; Telegram, https://telegram.org/; and NAGIOS, https://www.nagios.com/, last accessed on July 2022).

References

Aur KA, Bobeck J, Albert A, Kay P (2021) Pycheron: a python-based seismic waveform data quality control software package. Bull Seismol Soc Am 92(5):3165–3178

Beauduin R, Lognonne P, Montagner JP, Cacho S, Karczewski JF, Morand J (1996) The effects of the atmospheric pressure changes on seismic signals or how to improve the quality of a station. Bull Seismol Soc Am 86:1760–1769

Berger J, Davis P, Ekström G (2004) Ambient Earth noise: a survey of the global seismographic network. J Geophys Res 109:B11307. https://doi.org/10.1029/2004JB003408

Casey R, Templeton ME, Sharer G, Keyson L, Weertman BR, Ahern T (2018) Assuring the quality of IRIS data with MUSTANG. Seismol Res Lett 89(2A):630–639. https://doi.org/10.1785/0220170191

Che IY, Jun MS, Jeon JS (2002) Analysis of local seismo-acoustic events in the Korean Peninsula. Geophys Res Lett 29(12):1589. https://doi.org/10.1029/2001GL014060

Che IY, Shin JS, Kang IB (2009a) Seismo-acoustic location method for small-magnitude surface explosions. Earth Planet Space 61:e1–e4

Che IY, Kim TS, Jeon JS, Lee HI (2009b) Infrasound observation of the apparent North Korean nuclear test of 25May 2009. Geophys Res Lett 36:L22802

Che IY, Stump B, Lee HI (2011) Experimental characterization of seasonal variations in infrasonic travel times on the Korean Peninsula with implications for infrasound event location. Geophys J Int 185(1):190–200

Che IY, Kim G, Pichon AL (2013) Infrasound associated with the deep M7.3 northeastern China earthquake of June 28, 2002. Earth Planet Space 65(2):109–113. https://doi.org/10.5047/eps.2012.07.010

Che IY, Park J, Kim I, Kim TS, Lee HI (2014) Infrasound signals from the underground nuclear explosions of North Korea. Geophys J Int 198:495–503

Che IY, Pichon AL, Kim K, Shin IC (2017) Assessing the detection capability of a dense infrasound network in the southern Korean Peninsula. Geophys J Int 210:1105–1114

Che IY, Park J, Kim TS, Hayward C, Stump B (2019) On the use of dense seismo-acoustic arrays for near-regional environmental monitoring. In: Le Pichon A, Blanc E, Hauchecorne A (eds) Infrasound Monitoring for Atmospheric Studies (challenges in middle atmosphere dynamics and societal benefits), 2nd edn. Springer Nature, Switzerland, pp 409–448

Che IY, Kim K, Le Pichon A, Park J, Arrowsmith S, Stump B (2022) Illuminating the North Korean nuclear explosion test in 2017 using remote infrasound observations. Geophys J Int 228(1):308–315. https://doi.org/10.1093/gji/ggab338

Hedlin MAH, Berger J, Vernon FL (2002) Surveying infrasonic noise on Oceanic islands. Pure Appl Geophys 159:1127–1152

Kim TS, Hayward C, Stump B (2004) Local infrasound signals from the Tokachi-Oki earthquake. Geophys Res Lett 31:L20605

Kim TS, Hayward C, Stump B (2010) Calibration of acoustic gauges in the field using Lg phases and coupled high frequency local infrasound. Bull Seismol Soc Am 100(4):1806–1815

Marty J, Doury B, Kramer A (2021) Low and high broadband spectral models of atmospheric pressure fluctuation. J Atmos Ocean Technol. 38:1813–1822. https://doi.org/10.1175/JTECH-D-21-0006.1

McKenna MH, Stump BW, Hayward C (2008) Effect of time-varying tropospheric models on near-regional and regional infrasound propagation as constrained by observational data. J Geophys Res 113:D11111. https://doi.org/10.1029/2007JD009130

Park J, Stump BW, Hayward C, Arrowsmith SJ, Che IY, Drob DP (2016) Detection of regional infrasound signals using array data: testing, tuning, and physical interpretation. J Acoust Soc Am 140(1):239–259

Park J, Hayward CT, Zeiler CP, Arrowsmith SJ, Stump BW (2017) Assessment of infrasound detectors based on analyst review, environmental effects, and detection characteristics. Bull Seismol Soc Am 107(2):674–690. https://doi.org/10.1785/0120160125

Park J, Che IY, Stump B, Hayward C, Dannemann F, Jeong S, Kwong K, McComas S, Oldham HR, Scales MM, Wright V (2018) Characteristics of infrasound signals from North Korean underground nuclear explosions on 2016 January 6 and September 9. Geophys J Int 214(3):1865–1885. https://doi.org/10.1093/gji/ggy252

Park J, Stump B, Che IY, Hayward C, Yang X (2022) Relative seismic source scaling based on pn observations from the North Korean underground nuclear explosions. Bull Seismol Soc Am XX 1–19. https://doi.org/10.1785/0120220003

Park J, Assink J, Stump B, Hayward C, Arrowsmith S, Che IY (2023) Atmospheric model inversion using infrasound signals from the North Korean underground nuclear explosion and the subsequent collapse event in 2017. Geophys J Int 232:902–922. https://doi.org/10.1093/gji/ggac366

Peterson J (1993) Observation and modeling of seismic background noise. US Geol Surv Tech Rept 93–322:1–95. https://doi.org/10.3133/ofr93322

Ringler AT, Gee LS, Hutt CR, McNamara DE (2010) Temporal variations in global seismic station ambient noise power levels. Seismol Res Lett 81(4):605–613. https://doi.org/10.1785/gssrl.81.4.605

Ringler AT, Hagerty MT, Holland J, Gonzales A, Gee LS, Edwards JD (2015) The data quality analyzer: a quality control program for seismic data. Comput Geosci 76:96–111. https://doi.org/10.1016/j.cageo.2014.12.006

Stump B, Jun MS, Hayward C, Jeon JS, Che IY, Thomason K, House SM, McKenna J (2004) Small-aperture seismo-acoustic arrays: design, implementation, and utilization. Bull Seismol Soc Am 94:220–236

Stump B, Hayward C, Golden P, Park J, Kubacki R, Cain C, Arrowsmith S, McKenna Taylor MH, Jeong SJ, Ivey T et al (2022) Seismic and infrasound data recorded at regional seismoacoustic research arrays in South Korea from the six DPRK underground nuclear explosions. Seismol Res Lett XX: 1–12. https://doi.org/10.1785/0220220009

Wang X, Chen QF, Li J, Wei S (2016) Seismic sensor misorientation measurement using P-Wave particle motion: an application to the NECsaids array. Seismol Res Lett 87(4):901–911. https://doi.org/10.1785/0220160005

Webb SC (1998) Broadband seismology and noise under the ocean. Rev Geophys 36:105–142. https://doi.org/10.1029/97RG02287

Welch PD (1967) The use of fast Fourier transforms for the estimation of power spectra: a method based on time averaging over short modified periodograms. Trans IEEE AU 70–73. https://doi.org/10.1109/TAU.1967.1161901

Xie J, Yang D, Ding L, Yuan S, Chen J, Lu Z, Ye S, Ma J, Zhao J, Li X (2018) A comparison of azimuth, misalignment, and orthogonality estimation methods in seismometer testing. Bull Seismol Soc Am 108(2):1004–1017. https://doi.org/10.1785/0120170055

Xu H, Luo Y, Tang CC, Zhao K, Xie J, Yang X (2018) Systemic comparison of seismometer horizontal orientations based on teleseismic earthquakes and ambient-noise data. Bull Seismol Soc Am 108(6):3576–3589. https://doi.org/10.1785/0120180087

Zha Y, Webb SC, Menke W (2013) Determining the orientations of ocean bottom seismometers using ambient noise correlation. Geophys Res Lett 40:3585–3590. https://doi.org/10.1002/grl.50698

Acknowledgements

This research was in part supported by the Basic Research Project of the Korea Institute of Geoscience and Mineral Resources (KIGAM) GP2020-017 funded by the Ministry of Science and Information & Communications Technology of Korea. The authors are grateful for two anonymous reviewers whose comments greatly improved the manuscript.

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium This research was in part supported by the Basic Research Project of the Korea Institute of Geoscience and Mineral Resources (KIGAM) GP2020-017 funded by the Ministry of Science and Information & Communications Technology of Korea.

Author information

Authors and Affiliations

Contributions

Junghyun Park wrote the main manuscript text and prepared Figs. 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 and S1 with inputs from Brain Stump, Il-Young Che, and Stephen Arrowsmith. Junghyun Park and Chris Hayward developed the tools in the Data Quality Monitoring Tools section, and Byung-Il Kim developed the tools and prepared Figs. 11–12, S2-S3 in the Real-Time Station State of Health Monitoring section. Byung-Il Kim and Kwangsu Kim provided detailed information regarding maintenance fieldwork and data issue. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Highlights

• We document data quality control tools developed for seismic and infrasound data recorded at multiple co-located seismoacoustic arrays.

• Data assessment tools quantify data gaps, seismometer orientation, noise levels, infrasound polarity, and equipment conditions.

• Monitoring real-time station state of health, data telemetry, and archival provides analyst additional capability to assess operational issues and network performance.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Park, J., Hayward, C., Kim, BI. et al. Data quality control tools used to monitor seismoacoustic research arrays in South Korea. J Seismol 27, 659–679 (2023). https://doi.org/10.1007/s10950-023-10164-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10950-023-10164-6