Abstract

Objectives

Questionable research practices (QRPs) lead to incorrect research results and contribute to irreproducibility in science. Researchers and institutions have proposed open science practices (OSPs) to improve the detectability of QRPs and the credibility of science. We examine the prevalence of QRPs and OSPs in criminology, and researchers’ opinions of those practices.

Methods

We administered an anonymous survey to authors of articles published in criminology journals. Respondents self-reported their own use of 10 QRPs and 5 OSPs. They also estimated the prevalence of use by others, and reported their attitudes toward the practices.

Results

QRPs and OSPs are both common in quantitative criminology, about as common as they are in other fields. Criminologists who responded to our survey support using QRPs in some circumstances, but are even more supportive of using OSPs. We did not detect a significant relationship between methodological training and either QRP or OSP use. Support for QRPs is negatively and significantly associated with support for OSPs. Perceived prevalence estimates for some practices resembled a uniform distribution, suggesting criminologists have little knowledge of the proportion of researchers who engage in certain questionable practices.

Conclusions

Most quantitative criminologists in our sample have used QRPs, and many have used multiple QRPs. Moreover, there was substantial support for QRPs, raising questions about the validity and reproducibility of published criminological research. We found promising levels of OSP use, albeit lagging behind the level of support researchers expressed for these practices. The findings thus suggest that additional reforms are needed to decrease QRP use and increase the use of OSPs.

Notes

Randomization of question ordering (see below) meant that breakoffs affected all practices equally in expectation, but it also meant that item nonresponse was not concentrated at the end of the survey. As a result, many respondents answered questions about only one randomly presented QRP or OSP.
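The sketch below illustrates why randomized ordering spreads breakoffs evenly across practices. It rests on assumptions: the 15 practice items (10 QRPs, 5 OSPs) are treated as one block that is shuffled independently for each respondent, the item labels are hypothetical placeholders rather than the survey's wording, and the seeding scheme is illustrative and not the authors' survey software.

```python
import random
from collections import Counter

# Hypothetical placeholder labels for the 10 QRPs and 5 OSPs (not the survey wording).
QRPS = [f"QRP{i}" for i in range(1, 11)]
OSPS = [f"OSP{i}" for i in range(1, 6)]
ITEMS = QRPS + OSPS


def question_order(respondent_id: int, seed: int = 12345) -> list[str]:
    """Return an independent random ordering of all practice items for one respondent."""
    # A per-respondent RNG makes each ordering reproducible from (seed, respondent_id).
    rng = random.Random(f"{seed}-{respondent_id}")
    order = ITEMS.copy()
    rng.shuffle(order)
    return order


def answered_items(respondent_id: int, breakoff_after: int) -> list[str]:
    """Items a respondent answered before breaking off after `breakoff_after` items."""
    return question_order(respondent_id)[:breakoff_after]


def first_item_shares(n_respondents: int) -> dict[str, float]:
    """Share of respondents shown each item first (about 1/15 each in expectation)."""
    counts = Counter(question_order(r)[0] for r in range(n_respondents))
    return {item: counts[item] / n_respondents for item in ITEMS}
```

For example, `answered_items(42, 1)` returns a single randomly chosen practice. This mirrors respondents who broke off after answering about only one QRP or OSP. Because every item is equally likely to appear first, such breakoffs do not systematically favor any one practice.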

In using the 0–100% response scale, our assumption was that criminologists would be as able to use it as laypeople, who regularly respond on this scale in major surveys (Manski 2004).

Because this may bias the coefficients toward zero (that is, produce underestimates), we also estimated supplementary models using only those respondents with complete data on the indices. The findings were the same.

The findings are the same when negative binomial regression is used for the outcome variables measuring usage.

We define support as any answer other than “never.”
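The note's coding rule can be sketched as follows. Only the category "never" comes from the note. The other response labels in the example are hypothetical, and excluding missing answers (item nonresponse) from the denominator is an assumption.

```python
from typing import Optional, Sequence


def supports(answer: str) -> bool:
    """Support = any answer other than "never" (case- and whitespace-insensitive)."""
    return answer.strip().lower() != "never"


def support_share(answers: Sequence[Optional[str]]) -> float:
    """Share of non-missing answers coded as support.

    Assumption: None marks item nonresponse and is dropped from the denominator.
    """
    valid = [a for a in answers if a is not None]
    if not valid:
        raise ValueError("no valid answers")
    return sum(supports(a) for a in valid) / len(valid)
```

With the hypothetical labels `["never", "rarely", None, "always", "Never"]`, four answers are valid and two of them count as support, so the share is 0.5.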

CIs were calculated as the point estimate ± 1.96 × (sd/√n).
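A minimal sketch of this normal-approximation interval follows. It assumes the interval is centered on the sample mean and that sd is the sample standard deviation (n − 1 denominator). The note does not state either assumption explicitly.

```python
import math
from statistics import mean, stdev


def normal_ci(values, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation CI: mean ± z * (sd / sqrt(n)).

    Assumes sd is the sample standard deviation (n - 1 denominator).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    m = mean(values)
    half_width = z * (stdev(values) / math.sqrt(n))
    return m - half_width, m + half_width
```

For the values 1 through 5, the mean is 3 and sd/√n equals √0.5. The interval is therefore 3 ± 1.96 × √0.5, or roughly (1.61, 4.39).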

References

Agnoli F, Wicherts JM, Veldkamp CLS, Albiero P, Cubelli R (2017) Questionable research practices among Italian research psychologists. PLoS ONE 12(3):e0172792

Allen C, Mehler DMA (2019) Open science challenges, benefits and tips in early career and beyond. PLoS Biol 17(5):e3000246

American Association for the Advancement of Science (2019) Retraction of the Research Article: Police Violence and the Health of Black Infants

Anderson MS, Martinson BC, De Vries R (2007) Normative dissonance in science: Results from a national survey of US scientists. J Empir Res Hum Res Ethics 2(4):3–14

Apel R (2013) Sanctions, perceptions, and crime: implications for criminal deterrence. J Quant Criminol 29:67–101

Ashby MPJ (2020) The open-access availability of criminological research to practitioners and policy makers. J Crim Justice Educ 32:1–21

Bakker M, Wicherts JM (2011) The (mis)reporting of statistical results in psychology journals. Behav Res Methods 43(3):666–678

Bakker BN, Jaidka K, Dörr T, Fasching N, Lelkes Y (2020) Questionable and open research practices: attitudes and perceptions among quantitative communication researchers. https://doi.org/10.31234/osf.io/7uyn5

Beerdsen E (2021) Litigation science after the knowledge crisis. Cornell Law Rev 106:529–590

Bem DJ (2011) Feeling the future: experimental evidence for anomalous retroactive influences on cognition and affect. J Personal Soc Psychol 100(3):435

Bishop D (2019) Rein in the four horsemen of irreproducibility. Nature 568(7753)

Braga AA, Papachristos AV, Hureau DM (2014) The effects of hot spots policing on crime: An updated systematic review and meta-analysis. Justice Q 31(4):633–663

Braga AA, Weisburd D, Turchan B (2018) Focused deterrence strategies and crime control: an updated systematic review and meta-analysis of the empirical evidence. Criminol Public Policy 17(1):205–250

Brauer JR, Tittle CR (2017) When crime is not an option: inspecting the moral filtering of criminal action alternatives. Justice Q 34(5):818–846

Brodeur A, Cook N, Heyes A (2020) Methods matter: P-hacking and publication bias in causal analysis in economics. Am Econ Rev 110(11):3634–3660

Burt C (2020) Doing better science: improving review & publication protocols to enhance the quality of criminological evidence. Criminologist 45(4):1–6

Cairo AH, Green JD, Forsyth DR, Behler AMC, Raldiris TL (2020) Gray (literature) matters: evidence of selective hypothesis reporting in social psychological research. Personal Soc Psychol Bull 46(9):1344–1362

Camerer CF, Dreber A, Forsell E, Ho T, Huber J, Johannesson M, Kirchler M, Almenberg J, Altmejd A, Chan T, Heikensten E, Holzmeister F, Imai T, Isaksson S, Nave G, Pfeiffer T, Razen M, Wu H (2016) Evaluating replicability of laboratory experiments in economics. Science 351(6280):1433–1436

Camerer CF et al. (2018) Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nat Hum Behav 2:637–644

Carney D (n.d.) My position on “Power Poses”. https://faculty.haas.berkeley.edu/dana_carney/pdf_my%20position%20on%20power%20poses.pdf

Carpenter J, Kenward M (2012) Multiple imputation and its application. Wiley

Chin JM (2018) Abbey road: the (ongoing) journey to reliable expert evidence. Can Bar Rev 96(3):422–459

Chin JM, Growns B, Mellor DT (2019) Improving expert evidence: the role of open science and transparency. Ott Law Rev 50:365–410

Christensen G, Wang Z, Paluck EL, Swanson N, Birke DJ, Miguel E, Littman R (2019) Open science practices are on the rise: the state of social science (3S) survey. https://doi.org/10.31222/osf.io/5rksu

Dahlgaard JO, Hansen JH, Hansen KM, Bhatti Y (2019) Bias in self-reported voting and how it distorts turnout models: disentangling nonresponse bias and overreporting among Danish voters. Polit Anal 27(4):590–598

de Bruin A, Treccani B, Sala SD (2015) Cognitive advantage in bilingualism: an example of publication bias? Psychol Sci 26(1):90–107

DeJong C, St. George S (2018) Measuring journal prestige in criminal justice and criminology. J Crim Justice Educ 29(2):290–309

Ebersole CR et al. (2016) Many Labs 3: evaluating participant pool quality across the academic semester via replication. J Exp Soc Psychol 67:68–82

Efron B, Tibshirani RJ (1994) An introduction to the bootstrap. CRC Press

Fanelli D (2012) Negative results are disappearing from most disciplines and countries. Scientometrics 90(3):891–904

Fidler F, Wilcox J (2018) Reproducibility of scientific results. In Zalta EN (ed) The Stanford Encyclopedia of Philosophy, Stanford University

Franco A, Malhotra N, Simonovits G (2014) Publication bias in the social sciences: unlocking the file drawer. Science 345(6203):1502–1505

Franco A, Malhotra N, Simonovits G (2015) Underreporting in political science survey experiments: comparing questionnaires to published results. Polit Anal 23:306–312

Fraser H, Parker T, Nakagawa S, Barnett A, Fidler F (2018) Questionable research practices in ecology and evolution. PLoS ONE 13(7):e0200303

Gelman A, Loken E (2014) The statistical crisis in science: data-dependent analysis – a “garden of forking paths” – explains why many statistically significant comparisons don’t hold up. Am Sci 102(6):460–466

Gelman A, Skardhamar T, Aaltonen M (2020) Type M error might explain Weisburd’s paradox. J Quant Criminol 36(2):395–604

Hardwicke TE, Mathur MB, MacDonald K, Nilsonne G, Banks GC, Kidwell MC, Mohr AH, Clayton E, Yoon EJ, Tessler MH, Lenne RL, Altman S, Long B, Frank MC (2018) Data availability, reusability, and analytic reproducibility: evaluating the impact of a mandatory open data policy at the journal Cognition. R Soc Open Sci 5(8):180448

Hopp C, Hoover GA (2017) How prevalent is academic misconduct in management research? J Bus Res 80:73–81. https://doi.org/10.1016/j.jbusres.2017.07.003

Horbach SP, Halffman W (2020) Journal peer review and editorial evaluation: cautious innovator or sleepy giant? Minerva 58(2):139–161

John LK, Loewenstein G, Prelec D (2012) Measuring the prevalence of questionable research practices with incentives for truth telling. Psychol Sci 23(5):524–532

Keeter S, Hatley N, Kennedy C, Lau A (2017) What Low Response Rates Mean for Telephone Surveys. Pew Research Center. Retrieved from: https://www.pewresearch.org/methods/2017/05/15/what-low-response-rates-mean-for-telephone-surveys/.

Kidwell MC, Lazarević LB, Baranski E, Hardwicke TE, Piechowski S, Falkenberg LS, Kennett C, Slowik A, Sonnleitner C, Hess-Holden C, Errington TM, Fiedler S, Nosek BA (2016) Badges to acknowledge open practices: a simple, low-cost, effective method for increasing transparency. PLoS Biol 14(5):e1002456

Klein RA et al. (2014) Investigating variation in replicability. Soc Psychol 45(3):142–152

Klein O et al. (2018a) A practical guide for transparency in psychological science. Collabra: Psychol 4(1). https://online.ucpress.edu/collabra/article/4/1/20/112998/A-Practical-Guidefor-Transparency-in

Klein RA et al. (2018b) Many Labs 2: investigating variation in replicability across samples and settings. Adv Methods Pract Psychol Sci 1(4):443–490

Krosnick JA, Presser S, Fealing KH, Ruggles S (2015) The Future of Survey Research: Challenges and Opportunities. The National Science Foundation Advisory Committee for the Social, Behavioral and Economic Sciences Subcommittee on Advancing SBE Survey Research. Available online at: http://www.nsf.gov/sbe/AC_Materials/The_Future_of_Survey_Research.pdf

Kvarven A, Strømland E, Johannesson M (2020) Comparing meta-analyses and preregistered multiple-laboratory replication projects. Nat Hum Behav 4:423–434

Levine T, Asada KJ, Carpenter C (2009) Sample sizes and effect sizes are negatively correlated in meta-analyses: evidence and implications of a publication bias against nonsignificant findings. Commun Monogr 76(3):286–302

Makel MC, Hodges J, Cook BG, Plucker J (2021) Both questionable and open research practices are prevalent in education research. Educ Res 1–12. https://journals.sagepub.com/doi/full/10.3102/0013189X211001356

Manski C (2004) Measuring expectations. Econometrica 72:1329–1376

McNeeley S, Warner JJ (2015) Replication in criminology: a necessary practice. Eur J Criminol 12(5):581–597

Meyer MN (2018) Practical tips for ethical data sharing. Adv Methods Pract Psychol Sci 1(1):131–144

Moher D et al. (2020) The Hong Kong Principles for assessing researchers: fostering research integrity. PLoS Biol 18(7):e3000737

Munafò MR et al. (2017) A manifesto for reproducible science. Nat Hum Behav 1(1):1–9

Nelson MS, Wooditch A, Dario LM (2015) Sample size, effect size, and statistical power: a replication study of Weisburd’s paradox. J Exp Criminol 11:141–163

Nelson LD, Simmons J, Simonsohn U (2018) Psychology’s renaissance. Annu Rev Psychol 69:511–534

Nuijten MB, Hartgerink CH, van Assen MA, Epskamp S, Wicherts JM (2016) The prevalence of statistical reporting errors in psychology (1985–2013). Behav Res Methods 48(4):1205–1226

O’Boyle EH Jr, Banks GC, Gonzalez-Mulé E (2017) The chrysalis effect: how ugly initial results metamorphosize into beautiful articles. J Manag 43(2):376–399

Open Science Collaboration (2015) Estimating the reproducibility of psychological science. Science 349(6251):943

Parsons S, Azevedo F, FORRT (2019) Introducing a Framework for Open and Reproducible Research Training (FORRT). https://osf.io/bnh7p/

Pickett JT (2020) The Stewart retractions: a quantitative and qualitative analysis. Econ J Watch 17(1):152

Pridemore WA, Makel MC, Plucker JA (2018) Replication in criminology and the social sciences. Annu Rev Criminol 1:19–38

Rabelo ALA, Farias JEM, Sarmet MM, Joaquim TCR, Hoersting RC, Victorino L, Modesto JGN, Pilati R (2020) Questionable research practices among Brazilian psychological researchers: results from a replication study and an international comparison. Int J Psychol 55(4):674–683

Ritchie S (2020) Science fictions: how fraud, bias, negligence, and hype undermine the search for truth. Metropolitan Books, New York

Rohrer JM et al. (2018) Putting the self in self-correction: findings from the loss-of-confidence project. https://doi.org/10.31234/osf.io/exmb2

Rowhani-Farid A, Barnett AG (2018) Badges for sharing data and code at Biostatistics: an observational study. F1000Research 7

Scheel AM, Schijen M, Lakens D (2020) An excess of positive results: comparing the standard Psychology literature with Registered Reports. https://doi.org/10.31234/osf.io/p6e9c

Schumann S, van der Vegt I, Gill P, Schuurman B (2019) Towards open and reproducible terrorism studies: current trends and next steps. Perspect Terror 13(15):61–73

Silver JR, Silver E (2020) The nature and role of morality in offending: a moral foundations approach. J Res Crime Delinq 56(3):343–380

Simmons JP, Nelson LD, Simonsohn U (2011) False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol Sci 22(11):1359–1366

Simonsohn U, Nelson LD, Simmons JP (2014) P-curve: a key to the file-drawer. J Exp Psychol Gen 143(2):534

Sorensen JR (2009) An assessment of the relative impact of criminal justice and criminology journals. J Crim Justice 37(5):505–511

Spellman BA (2015) A short (personal) future history of revolution 2.0. Perspect Psychol Sci 10(6):886–899

Sweeten G (2020) Standard errors in quantitative criminology: taking stock and looking forward. J Quant Criminol 36(2):263–272

Thomas KJ, Nguyen H (2020) Status gains versus status losses: loss aversion and deviance. Justice Q. Advance online publication. https://doi.org/10.1080/07418825.2020.1856400

Tourangeau R, Conrad FG, Couper MP (2013) The science of web surveys. Oxford University Press, New York

Uggen C, Inderbitzin M (2010) Public criminologies. Criminol Public Policy 9(4):725–749

van Assen MALM, van Aert RCM, Wicherts JM (2015) Meta-analysis using effect size distributions of only statistically significant studies. Psychol Methods 20(3):293–309

Vazire S (2018) Implications of the credibility revolution for productivity, creativity, and progress. Perspect Psychol Sci 13(4):411–417

Vazire S, Holcombe AO (2020) Where are the self-correcting mechanisms in science? https://doi.org/10.31234/osf.io/kgqzt

Vazire S, Schiavone SR, Bottesini JG (2020) Credibility beyond replicability: improving the four validities in psychological science. https://doi.org/10.31234/osf.io/bu4d3

Weisburd D, Lum CM, Petrosino A (2001) Does research design affect study outcomes in criminal justice? Ann Am Acad Pol Soc Sci 578:50–70

Welsh B, Peel M, Farrington D, Elffers H, Braga A (2011) Research design influence on study outcomes in crime and justice: a partial replication with public area surveillance. J Exp Criminol 7:183–198

Wolfe SE, Lawson SG (2020) The organizational justice effect among criminal justice employees: a meta-analysis. Criminology 58(4):619–644

Wooditch A, Sloan LB, Wu X, Key A (2020) Outcome reporting bias in randomized experiments on substance abuse disorders. J Quant Criminol 36(2):273–293

Young JTN, Barnes JC, Meldrum RC, Weerman FW (2011) Assessing and explaining misperceptions of peer delinquency. Criminology 49(2):599–630

Ethics declarations

Conflict of interest

Jason Chin is the president of the Association for Interdisciplinary Meta-research and Open Science (AIMOS), a charitable organization. This is an unpaid position.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

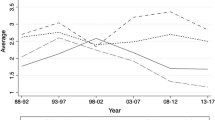

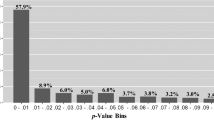

Appendix: Distribution of Outcomes Used in the Regression Models

About this article

Cite this article

Chin, J.M., Pickett, J.T., Vazire, S. et al. Questionable Research Practices and Open Science in Quantitative Criminology. J Quant Criminol 39, 21–51 (2023). https://doi.org/10.1007/s10940-021-09525-6

DOI: https://doi.org/10.1007/s10940-021-09525-6