Abstract

The mobility of the nodes and their limited energy supply in mobile ad hoc networks (MANETs) complicates network conditions. Having an efficient topology control mechanism in the MANET is very important and can reduce the interference and energy consumption in the network. Indeed, since current networks are highly complex, an efficient topology control is expected to be able to adapt itself to the changes in the environment drawing upon a preventive approach and without human intervention. To accomplish this purpose, the present paper proposes a learning automata-based topology control method within a cognitive approach. This approach deals with adding cognition to the entire network protocol stack to achieve stack-wide and network-wide performance goals. In this protocol, two cognitive elements are embedded at each node: one for transmission power control, and the other for channel control. The first element estimates the probability of link connectivity, and then, in a non-cooperative game of learning automata, it sets the proper power for the corresponding node. Subsequently, the second element allocates efficient channel to the corresponding node, again using learning automata. Having a cognitive network perspective to control the topology of the network brings about many benefits, including a self-aware and self-adaptive topology control method and the ability of nodes to self-adjust dynamically. The experimental results of the study show that the proposed method yields more improvement in the quality of service (QoS) parameters of throughput and end-to-end delay more than do the other methods.

Similar content being viewed by others

References

Siripongwutikorn, P., Thipakorn, B.: Mobility-aware topology control in mobile ad hoc networks. Comput. Commun. 31(14), 3521–3532 (2008)

Thomas, R.W., Friend, D.H., DaSilva, L.A., MacKenzie, A.B.: Cognitive networks. In: Cognitive radio, Software Defined Radio, and Adaptive Wireless Systems, pp. 17–41. Springer (2007)

Thomas, R.W., Friend, D.H., Dasilva, L.A., Mackenzie, A.B.: Cognitive networks: adaptation and learning to achieve end-to-end performance objectives. IEEE Commun. Mag. 44(12), 51–57 (2006)

Thomas, R.W.: Cognitive Networks. Ph.D. thesis, Electrical and Computer Engineering Department, Virginia Tech University, Blacksburg, Virginia (2007)

Friend, D.H.: Cognitive Networks: Foundations to Applications. Virginia Polytechnic Institute and State University, Blacksburg (2009)

Meshkova, E., Riihijarvi, J., Achtzehn, A., Mahonen, P.: Exploring simulated annealing and graphical models for optimization in cognitive wireless networks. In: Global Telecommunications Conference, 2009. GLOBECOM 2009. IEEE, 2009, pp. 1–8. IEEE (2009)

Gheisari, S., Meybodi, M.R.: LA-CWSN: a learning automata-based cognitive wireless sensor networks. Comput. Commun. 94, 46–56 (2016)

Zhang, X., Granmo, O.-C., Oommen, B.J.: The Bayesian pursuit algorithm: a new family of estimator learning automata. In: Proceedings of the 24th International Conference on Industrial Engineering and Other Applications of Applied Intelligent Systems Conference on Modern Approaches in Applied Intelligence-Volume Part II 2011, pp. 522–531. Springer (2011)

Zhang, X., Granmo, O.-C., Oommen, B.J.: On incorporating the paradigms of discretization and Bayesian estimation to create a new family of pursuit learning automata. Appl. Intell. 39(4), 782–792 (2013)

Li, N., Hou, J.C., Sha, L.: Design and analysis of an MST-based topology control algorithm. IEEE Trans. Wirel. Commun. 4(3), 1195–1206 (2005)

Miyao, K., Nakayama, H., Ansari, N., Kato, N.: LTRT: an efficient and reliable topology control algorithm for ad-hoc networks. IEEE Trans. Wirel. Commun. 8(12), 6050–6058 (2009)

Nishiyama, H., Ngo, T., Ansari, N., Kato, N.: On minimizing the impact of mobility on topology control in mobile ad hoc networks. IEEE Trans. Wirel. Commun. 11(3), 1158–1166 (2012)

Gui, J., Zhou, K.: Flexible adjustments between energy and capacity for topology control in heterogeneous wireless multi-hop networks. J. Netw. Syst. Manag. 24(4), 789–812 (2016)

Shirali, N., Jabbedari, S.: Topology control in the mobile ad hoc networks in order to intensify energy conservation. Appl. Math. Model. 37(24), 10107–10122 (2013)

Shirali, M., Shirali, N., Meybodi, M.R.: Sleep-based topology control in the Ad Hoc networks by using fitness aware learning automata. Comput. Math Appl. 64(2), 137–146 (2012)

Jeng, A.A.-K., Jan, R.-H.: Adaptive topology control for mobile ad hoc networks. IEEE Trans. Parallel Distrib. Syst. 22(12), 1953–1960 (2011)

Zhang, X.M., Zhang, Y., Yan, F., Vasilakos, A.V.: Interference-based topology control algorithm for delay-constrained mobile ad hoc networks. IEEE Trans. Mob. Comput. 14(4), 742–754 (2015)

Guan, Q., Yu, F.R., Jiang, S., Wei, G.: Prediction-based topology control and routing in cognitive radio mobile ad hoc networks. IEEE Trans. Veh. Technol. 59(9), 4443–4452 (2010)

Zarifzadeh, S., Yazdani, N., Nayyeri, A.: Energy-efficient topology control in wireless ad hoc networks with selfish nodes. Comput. Netw. 56(2), 902–914 (2012)

Beheshtifard, Z., Meybodi, M.R.: Learning automata based channel assignment with power control in multi-radio multi-channel wireless mesh networks. J. Telecommun. Syst. Manag. 5(3), 139 (2016). doi:10.4172/2167-0919.1000139

Raniwala, A., Chiueh, T.-C.: Architecture and algorithms for an IEEE 802.11-based multi-channel wireless mesh network. In: INFOCOM 2005. 24th Annual Joint Conference of the IEEE Computer and Communications Societies. Proceedings IEEE 2005, pp. 2223–2234. IEEE (2005)

Khan, S., Loo, K.-K., Mast, N., Naeem, T.: SRPM: secure routing protocol for IEEE 802.11 infrastructure based wireless mesh networks. J. Netw. Syst. Manag. 18(2), 190–209 (2010)

Komali, R.S., Thomas, R.W., DaSilva, L.A., MacKenzie, A.B.: The price of ignorance: distributed topology control in cognitive networks. IEEE Trans. Wirel. Commun. 9(4), 1434–1445 (2010)

Singh, V., Kumar, K.: Literature survey on power control algorithms for mobile ad-hoc network. Wirel. Pers. Commun. 60(4), 679–685 (2011)

Hong, Z., Wang, R., Wang, N.: A tree-based topology construction algorithm with probability distribution and competition in the same layer for wireless sensor network. Peer Peer Netw. Appl. 10(3), 658–669 (2017)

Deniz, F., Bagci, H., Korpeoglu, I., Yazıcı, A.: An adaptive, energy-aware and distributed fault-tolerant topology-control algorithm for heterogeneous wireless sensor networks. Ad Hoc Netw. 44, 104–117 (2016)

Blough, D.M., Leoncini, M., Resta, G., Santi, P.: The k-neigh protocol for symmetric topology control in ad hoc networks. In: Proceedings of the 4th ACM International Symposium on Mobile Ad Hoc Networking & Computing, 2003. vol., pp. 141–152. ACM (2003)

Marina, M.K., Das, S.R., Subramanian, A.P.: A topology control approach for utilizing multiple channels in multi-radio wireless mesh networks. Comput. Netw. 54(2), 241–256 (2010)

Subramanian, A.P., Gupta, H., Das, S.R., Cao, J.: Minimum interference channel assignment in multiradio wireless mesh networks. IEEE Trans. Mob. Comput. 7(12), 1459–1473 (2008)

Granmo, O.-C., Oommen, B.J., Myrer, S.A., Olsen, M.G.: Learning automata-based solutions to the nonlinear fractional knapsack problem with applications to optimal resource allocation. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 37(1), 166–175 (2007)

Beigy, H., Meybodi, M.R.: Utilizing distributed learning automata to solve stochastic shortest path problems. Int. J. Uncertain. Fuzz. Knowl. Based Syst. 14(05), 591–615 (2006)

Torkestani, J.A.: Mobility prediction in mobile wireless networks. J. Netw. Comput. Appl. 35(5), 1633–1645 (2012)

Torkestani, J.A., Meybodi, M.R.: A mobility-based cluster formation algorithm for wireless mobile ad-hoc networks. Clust. Comput. 14(4), 311–324 (2011)

Narendra, K.S., Thathachar, M.A.: Learning automata: an introduction. Courier Corporation, North Chelmsford (2012)

Thathachar, M.A., Sastry, P.S.: Varieties of learning automata: an overview. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 32(6), 711–722 (2002)

Haas, Z.J., Pearlman, M.R.: The performance of query control schemes for the zone routing protocol. IEEE/ACM Trans. Netw. (TON) 9(4), 427–438 (2001)

Thathachar, M.A., Sastry, P.S.: Networks of Learning Automata: Techniques for Online Stochastic Optimization. Springer, Berlin (2011)

Song, Q., Ning, Z., Wang, S., Jamalipour, A.: Link stability estimation based on link connectivity changes in mobile ad-hoc networks. J. Netw. Comput. Appl. 35(6), 2051–2058 (2012)

Kubale, M.: Graph Colorings, vol. 352. American Mathematical Society, New York (2004)

Lim, C., Choi, C.-H., Lim, H., Park, K.-J.: Optimization approach for throughput analysis of multi-hop wireless networks. In: Telecommunications Network Strategy and Planning Symposium (Networks), 2014 16th International, 2014. vol., pp. 1–7. IEEE (2014)

Acknowledgements

"Funding was provided by Islamic Azad University, Pardis Branch.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix

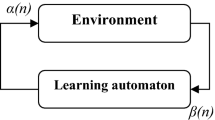

The proposed protocol employs the learning automata (\({\text{L}}_{R - I}\)) for power and channel adjustment. First, there will be an explanation of how a learning automaton performs and converges to the best action (i.e., best power or best channel). Then, there will be a discussion of how a team of learning automata of the \({\text{L}}_{R - I}\) type reaches the Nash equilibrium.

Operation of the Learning Automata Algorithm

In all models of learning automata, interaction with the environment and hence the evolution of action probabilities proceed at discrete time steps. At each instant, t, t = 0,1,2,…, the automaton chooses an action \(\alpha \left( t \right)\) from an action list \(A = \{ \alpha_{1} ,\alpha_{2} , \ldots ,\alpha_{r} \}\) at random based on its current action probability distribution \(p = \{ p_{1} ,p_{2} , \ldots ,p_{r} \}\). This action is input to the environment which responds with a reinforcement signal, \(\beta (t)\). This reinforcement from the environment is the input to the automaton. It is important to note that in the beginning, all the actions have the same probability value, which is as follows:

\(p_{1..r} = \frac{1}{r}\) where r is number of actions.

The finite action-set learning automata \({\text{L}}_{R - I}\) (linear reward-inaction) algorithm is known to be very effective in many applications. The \({\text{L}}_{R - I}\) algorithm updates the action probabilities as Eq. (22). Let us assume that the learning automaton chooses the action \(\alpha \left( t \right) = \alpha_{j}\) at time t. Then, the action probability vector p(t) is updated as below:

The above algorithm can be rewritten in vector notation as fallows (Eq. (23)):

Here, e j is the unit vector with the j th component unity, where the index j corresponds to the action selected at t, (that is, \(\alpha \left( t \right) = \alpha_{j}\)), and \(\lambda\) is the learning parameter satisfying 0 < \(\lambda\) < 1.

In the L R-I algorithm, we have seen that the action probability vector converges to a unit vector. In other words, in the action probability vector, the probability value of the best action converges to 1, and the probability value of the other actions in the vector converges to zero. Thus, each automaton has a set of actions. At each iteration, the automaton chooses an action \(\alpha_{j}\) according to the probability value of the action and receives its feedback \(\beta_{j}\) from the environment. Each automaton has a payoff function which is calculated as follows [34]:

F is the payoff function is calculated using Eq. (24):

where \(\beta_{j} \in [0,1]\) is the payoff or reinforcement signal received by \({\text{L}}_{R - I}\) from the environment.

NB: In the propounded method, there is a team of learning automata of the LR-I type which participate in the game of learning automata in an attempt to adjust power control and channel control. As for power control, there is one automaton at each node i to adjust power. The action set of this node is a finite set of power values \(A = \left\{ { tpow_{i1} ,tpow_{i2} , \ldots ,tpow_{imax} } \right\}\). Likewise, for channel adjustment, the action set of node i is a finite set of channels \(A = \left\{ { ch_{i1} ,ch_{i2} , \ldots ,ch_{im} } \right\}\). The function \(F\left( \alpha \right)\) is \(F\left( \alpha \right) = E [ Upow_{i} | Lr - i chose \alpha_{i} \in A]\) in the power control algorithm and \(F\left( \alpha \right) = E [ Uch_{i} | Lr - i chose \alpha_{i} \in A]\) in the channel control algorithm. Both automata seek to converge to the optimum action and to maximize the function \(F\left( \alpha \right)\).

Analysis of the Automata Game Algorithm

Now, let us assume that there is a team of N learning automata (LR-I). The i th automaton LR-I chooses an action α i (t) independently of the other automata according to p i (t), 1 ≤ i ≤ N. This set of N selected actions (N is the number of LA) is input to the environment which responds with N random payoffs, which are supplied as reinforcements to the corresponding learning automata.

\(F^{i}\) is the payoff function of Player i and is calculated using Eq. (25): [37]

where \(\beta_{i} \in [0,1]\) is the payoff or reinforcement signal received by the i th player from the environment. Each player only receives the reinforcement signal pertinent to its chosen action and has no knowledge of the payoff functions of the other players [37].

NB In a game of learning automata for power adjustment, the feedback (\(\beta_{i}\)) received from the environment is the utility function \(Upow_{i}\). Similarly, in the game of learning automata for channel adjustment, the feedback (\(\beta_{i}\)) obtained from the environment is the utility function \(Uch_{i}\).

Definition 1

\(\left( {\alpha^{*} } \right), \alpha^{*} = (\alpha_{1}^{*} , \ldots ,\alpha_{N}^{*} )\) is an optimal point of the game if

for each i, \(1 \le i \le N\quad F^{i} \left( {\alpha^{*} } \right) \ge F^{i} (\alpha )\)

for all \(\alpha = (\alpha_{1}^{*} ,\alpha_{2}^{*} , \ldots ,\alpha_{i - 1}^{*} ,\alpha_{i} ,\alpha_{i + 1}^{*} , \ldots ,\alpha_{N}^{*} )\) such that \(\alpha_{i} \ne \alpha_{i}^{* } , \alpha_{i} \in A_{i}\).

In this definition, \(\alpha^{*}\) is the Nash equilibrium of the game, and N is the number of LA on the team. [34]

NB In a game of learning automata for power adjustment, the optimal point \(\alpha^{*} = (tpow_{1}^{*} , \ldots ,tpow_{N}^{*} )\) of the game is indeed the best power value that is chosen by each node (each learning automata). Likewise, in the game of learning automata for channel adjustment, the optimal point \(\alpha^{*} = \left( {ch_{1}^{*} , \ldots ,ch_{N}^{*} } \right)\) of the game is the best channel that is chosen by each node to reach the Nash equilibrium.

The optimal point of the game corresponds to the local maxima of the payoff function (F) and is the Nash equilibrium. The game presented allows for a more general setting of multiple payoff functions. Identifying these optimal points or reaching the Nash equilibrium can be regarded as the goal of the learning algorithm.

In this case, if \(\alpha^{*}\) is the vector of the optimal action for N automata, then \(\alpha^{*} = (\alpha_{1}^{*} , \ldots ,\alpha_{N}^{*} )\) is an optimum point in the game. In this equation, \(\alpha_{1}^{*}\) is the best action of the first automaton. Based on the discussion appearing in the second paragraph after Eq. (23) earlier in in the paper, the probability value of each of these optimum actions will be 1 as below:

where \(P^{*}\) is the probability vector of all the learning automata for the optimal action, and indeed \(\alpha^{*}\) is the optimum point of the game. Now, it should be proven that the team of learning automata reaches the Nash equilibrium, whether the team is used for power adjustment (that is, when the actions are power values) or for channel adjustment (i.e., when the actions are channels).

3.1 Analysis of the Game of Learning Automata L R-I and Reaching the Nash Equilibrium

In this section, we demonstrate that the team of learning automata converges to optimum points, also referred to as the equilibrium points. The convergence of the learning automata is directly related to the reinforcement plan L R-I used in this study. In the learning automata L R-I , the probability of the actions (i.e., channels and power values) converges to unity [37].

The state of the team of learning automata at instant t is denoted by P(t), with \(P\left( t \right) = [P_{1} ,P_{2} , \ldots ,P_{N} ]\) being the probability vector all of the automata. The proposed learning algorithm determines a random differential equation, Eq. (26), which governs P(t).

where \(\psi\left( t \right) = (\alpha \left( t \right),\beta \left( t \right))\), G(·, ·) would represent the updating scheme or the updating function for the i th LR-I algorithm, and \(b = \lambda\) is the learning parameter.

In Eq. (27), \(1 \le i \le N\) is the number of automata, \(\alpha \left( t \right) = \alpha_{j}\) is the chosen action in the i th automaton at instant t, and \(e_{j}\) is a vector with dimension r, and its jth element is unit.

At the first step of the analysis, we obtain an Ordinary Differential Equation (ODE) that approximates this difference (Eq. (26)). The second step and main part of our analysis here concentrates on characterizing the asymptotic solutions of the ODE. We show that all maximal points are asymptotically stable for the ODE. Further, if there is any equilibrium point of the ODE that is not a maximal point, then this equilibrium point is unstable.

3.1.1 First Step: Approximating P(t) Resulting from the L R-I Algorithm Using the ODE

We can define a function, \(\omega :R^{N} \to R^{N} ,\) by

We are assuming here that the expectation in the RHS of the above equation is independent of the time step t, which is true for all our algorithms. Using (Eq. (28)), we rewrite Eq. (26) as

where \(\theta \left( t \right) = G\left( {P\left( t \right),\psi \left( t \right)} \right) - \omega \left( {P\left( t \right)} \right)\).

We want to prove that Eq. (29) can be approximated using the ODE in Eq. (30):

where \(P = [P_{1} ,P_{2} , \ldots ,P_{N} ]\).

In order to prove that Eq. (29) can be approximated using the ODE, we should prove that the following four assumptions (A1–A4) hold in Eq. (29) [37]:

A1: \(\left\{ {P_{t}^{b} , \psi_{t}^{b} } \right\}\) is a Marcov process.

Clearly, this assumption holds in Eq. (29) because this algorithm is simple and repetitive.

A2: For any appropriate Borel set B, \(prob [ \psi_{t}^{b} \in B | P_{t}^{b} , \psi_{t - 1}^{b} ] = prob [\psi_{t}^{b} \in B | P_{t}^{b} ]\).

The value of \(\psi_{t}\), which is independent of \(\psi_{t - 1}\), is determined completely and randomly by \(P_{n}\). In other words, the probability distribution \(\left( {\alpha \left( t \right),\beta \left( t \right)} \right)\) is independent of \(\left( {\alpha \left( {t - 1} \right),\beta \left( {t - 1} \right)} \right)\).

A3: \(G\left( P \right): R^{N} \to R^{N}\) is independent of k and is globally Lipchitz.

A function such as \(\omega\) is Lipchitz if \(\left| {\omega \left( x \right) - \omega \left( y \right)} \right| \le |x - y|\). Study [37] demonstrates that \(\omega\) is Lipchitz.

A4: \(\theta_{t}^{b} = G\left( {P_{t}^{b} ,\psi_{t}^{b} } \right) - \omega \left( {P_{t}^{b} } \right)\)

\(\theta_{t}^{b}\) is a random variable with a mean of zero. In order to approximate Eq. (29) using the ODE, we should prove that the variance \(\theta\) is bounded. On the other hand, since the elements P and \(\psi\) are finite in the learning automata, the variance \(\theta\) is bounded, and the fourth assumption holds.

3.1.2 Second Step: Proving that ODE Stable Points are Algorithm Equilibrium Points

Now that we proved that the learning algorithm of the team of automata can be approximated using the ODE, we want to prove that if the ODE has local asymptotic stable points, \(P_{t}^{\lambda }\) converges to one of these equilibrium points at high values of t, which is the maximum point.

Here, we focus on ODE solutions. As we saw, the ODE corresponding to the algorithm used in the study is as follows (Eq. (31)):

where \(\omega_{ij}^{p} \left( P \right)\) is calculated using Eqs. (27) and (29), reproduced in a simplified form as Eq. (32):

where e i is a vector with dimension r, and its j th selected action is unit. e i is zero for other actions such as k.

Now, Eq. (32) can be simplified as Eq. (33):

Definition 4

According to Eq. (34), every point in \({\text{K}}\) indicates a probability distribution for the actions chosen by the team of automata.

where \(p_{ri}\) is the r th action probability of the i th automaton.

Definition 5

According to Eq. (35), every corner point in \({\text{K}}\) is where the probability vector of each automaton \((P_{i} )\) converges to \((P_{i}^{*} )\).

Definition 6

We know that in each automaton, the probability vector converges to a unit vector (such as [0, 0, … 1, 0]). As a result, \(P^{*} = \left[ {1,1, \ldots ,1} \right]\).

Theorem 3

If \(\chi\) is defined as follows (Eq. (36)):

Prove that all corners of \({\text{K}}\) belong to \(\chi\), where \(\chi\) is the equilibrium point of the ODE.

Proof

Let \(P^{*} = \left[ {P_{1}^{*} ,P_{2}^{*} , \ldots ,P_{N}^{*} } \right]\) be the corner points in \({\text{K}}\). Now, we should prove that \(\omega_{ij}^{p} \left( {P^{*} } \right) = 0\),□

Substituting \(P^{*} = \left[ {P_{1}^{*} ,P_{2}^{*} , \ldots ,P_{N}^{*} } \right]\) in Eq. (33), we have

According to Definition (6), in Eq. (37), either \(p_{ij}^{*} = 0\) or \(p_{ik}^{*} = 0\), \(k \ne j,\) here \(P^{*} \in \chi\).

As a result, \(\omega_{iq}^{p}\) is equal to zero \((\omega_{ij}^{p} \left( {P^{*} } \right) = 0)\) for the corner points in \({\text{K}}\). In other words, these points are the equilibrium points of the ODE. Moreover, as the function is zero for these points, the team of learning automata will converge to one of these points.

Rights and permissions

About this article

Cite this article

Rahmani, P., Javadi, H.H.S., Bakhshi, H. et al. TCLAB: A New Topology Control Protocol in Cognitive MANETs Based on Learning Automata. J Netw Syst Manage 26, 426–462 (2018). https://doi.org/10.1007/s10922-017-9422-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10922-017-9422-3