Abstract

Impaired facial affect recognition is common after traumatic brain injury (TBI) and linked to poor social outcomes. We explored whether perception of emotions depicted by emoji is also impaired after TBI. Fifty participants with TBI and 50 non-injured peers generated free-text labels to describe emotions depicted by emoji and rated their levels of valence and arousal on nine-point rating scales. We compared how the two groups’ valence and arousal ratings were clustered and examined agreement in the words participants used to describe emoji. Hierarchical clustering of affect ratings produced four emoji clusters in the non-injured group and three emoji clusters in the TBI group. Whereas the non-injured group had a strongly positive and a moderately positive cluster, the TBI group had a single positive valence cluster, undifferentiated by arousal. Despite differences in cluster numbers, hierarchical structures of the two groups’ emoji ratings were significantly correlated. Most emoji had high agreement in the words participants with and without TBI used to describe them. Participants with TBI perceived emoji similarly to non-injured peers, used similar words to describe emoji, and rated emoji similarly on the valence dimension. Individuals with TBI showed small differences in perceived arousal for a minority of emoji. Overall, results suggest that basic recognition processes do not explain challenges in computer-mediated communication reported by adults with TBI. Examining perception of emoji in context by people with TBI is an essential next step for advancing our understanding of functional communication in computer-mediated contexts after brain injury.



Introduction

Every year, an estimated 69 million people worldwide sustain a traumatic brain injury (TBI) (Dewan et al., 2019). TBI is a leading cause of injury-related death and disability, and it frequently results in chronic deficits across social-cognitive domains such as attention, learning, memory, executive function, and emotion (Centers for Disease Control & Prevention, 2015; Maas et al., 2017). Cognitive and communication impairments have been linked to negative outcomes such as reduced social and community participation (Kersey et al., 2020), difficulty maintaining friends, and reduced opportunities for positive social interactions (Salas et al., 2018; Shorland & Douglas, 2010; Ylvisaker & Feeney, 1998).

In an increasingly technological world, social media and other computer-mediated communication (CMC) methods are essential for social participation and could benefit users with TBI who are seeking social contacts. People with TBI, however, report cognitive and technical barriers to their CMC use (Ahmadi et al., 2022; Brunner et al., 2015, 2019), and a subset report changes in their social media use after brain injury (Morrow et al., 2021). One potential barrier to CMC use is impaired comprehension of representations of nonverbal cues, such as emoji, an impairment that might be expected given common emotion recognition deficits in people with TBI (Babbage et al., 2011). In the current study, we examined whether people with TBI perceived emoji similarly to their non-brain injured peers by asking adults with and without TBI to rate the valence and arousal of 33 frequently-used facial emoji and comparing the free-text labels participants from the two groups generated to describe them.

Emotion Representation in Emoji

Emoji are ubiquitous graphical icons that accompany written text in CMC contexts. Emoji depicting facial expressions are among the most frequently used emoji and serve multiple communicative functions such as expressing the sender’s emotions, reducing ambiguity, enhancing context appropriateness, promoting interaction, and intensifying or softening verbal communication (Bai et al., 2019). Facial emoji convey a variety of moods and emotions. Indeed, a single emoji can represent multiple emotions (Jaeger & Ares, 2017; Jaeger et al., 2019). Emoji are a rich communication modality that can be flexibly and creatively used and combined in digital communication contexts. Although this flexibility can be viewed as a strength, it creates opportunities for message ambiguity and miscommunication.

To characterize ambiguities in emoji interpretation, Jaeger and Ares (2017) asked participants to select all relevant labels for the emotions depicted by 33 frequently-used emoji. Fifteen emoji had strong associations with a single emotional meaning, endorsed by more than 50% of respondents. Another 10 were associated with multiple emotions, with endorsement frequencies ranging from 20–40%. The remaining eight emoji lacked strong associations and were labeled with multiple and often unrelated meanings. For example, Downcast Face with Sweat 😓 was associated with “embarrassed,” “depressed,” and “frustrated.” In another study (Jaeger et al., 2019), participants generated their own free-text labels for emoji. As in the prior study, whereas some emoji depicted specific emotions with high agreement across raters (e.g., Angry Face 😠), others did not clearly map onto a specific emotion (e.g., Smirking Face 😏), and many spanned multiple emotions. Differences in perceived meaning of emoji can be compounded by cross-platform differences in how emoji are drawn (e.g., the same emoji looks different on Android vs. Apple devices), increasing the potential for miscommunication (Miller et al., 2016; Tigwell & Flatla, 2016).

Recently, the study of emotion recognition in emoji has been extended to individuals with clinical conditions known to affect emotion recognition, including individuals with autism spectrum disorder (ASD). Hand et al. (2022a) found that compared to neurotypical peers, participants diagnosed with ASD had significantly reduced labeling accuracy for fearful, sad, and surprised emoji. Individuals with ASD also rated sentences paired with sad emoji as significantly more negative than did their neurotypical peers (Hand et al., 2022a, 2022b). Taylor et al. (2022) found that participants with ASD who had reduced labeling accuracy for emoji depicting surprise, fear, and disgust, also had higher scores on both autism trait and alexithymia questionnaires, suggesting that emoji recognition errors may be a manifestation of underlying differences in social cognition that extend across media.

Emotion Recognition in TBI

The possibility that emoji recognition is linked to affect recognition in other media raises questions about social perception in adults with TBI. There is strong evidence of affect recognition impairments in as many as 40% of adults with moderate-severe TBI (Babbage et al., 2011). Many studies have shown impairments in recognition of basic emotions (i.e., happiness, surprise, anger, fear, disgust, sadness) (Byom et al., 2019; Radice-Neumann et al., 2007; Rigon et al., 2017, 2018b), with some evidence that recognition of negative emotions is more impaired than recognition of positive emotions (Croker & McDonald, 2005; Green et al., 2004; Rigon et al., 2016; Rosenberg et al., 2014). Difficulty recognizing social emotions that require mentalizing (e.g., embarrassment, sarcasm) is even more common in people with TBI (Turkstra et al., 2018). These deficits have been linked to social communication problems (McDonald & Flanagan, 2004; Turkstra et al., 2018; Rigon et al., 2018a). Deficits in recognizing basic and social emotions could be particularly penalizing in CMC, where emoji are ubiquitous (Cramer et al., 2016; Subramanian et al., 2019).

In a prior study (Clough et al., 2023), we examined whether facial affect recognition deficits in adults with TBI extended to emotion recognition of facial emoji. Participants with and without moderate-severe TBI viewed photographs of human faces and facial emoji depicting basic and social emotions and selected the best-matching emotion label from a list of seven. Adults with TBI did not differ from non-brain-injured peers in overall emotion labeling accuracy, and both groups demonstrated lower emotion recognition for emoji than human faces. However, a significant interaction between group and emotion type revealed that participants with TBI, and not non-brain-injured peers, showed reduced accuracy labeling social emotions depicted by emoji compared to basic emotions depicted by emoji. These results suggested that emoji may depict emotions more ambiguously than human faces and that people with TBI may be at increased risk for misinterpretation of emoji depicting social emotions in particular. A limitation of this prior study, and most emotion assessment tools in general, was the use of a forced-choice response method, which arguably tests the participant’s ability to match their perception to a limited list of options, rather than their ability to generate a label that reflects their true perception (Turkstra et al., 2017; Zupan et al., 2022). The current study extended this work by using an open-ended response format, asking adults with and without TBI to generate their own labels for emotions depicted by emoji.

Research Questions

Valence and arousal ratings: The first aim of the study was to compare valence and arousal ratings that participants with and without TBI assigned to common facial emoji. We used the set of 33 emoji used by Jaeger et al. (2019), who clustered ratings of these emoji from a large sample of adult participants (ages 18–60) into three groups on the valence dimension: a positive sentiment group, a neutral/dispersed sentiment group, and a negative sentiment group. Kutsuzawa et al. (2022b) expanded on this work, increasing the number of emoji to 74 and performing cluster analysis on both valence and arousal ratings by adult participants (ages 20–39). When considering valence and arousal dimensions together, the larger set of 74 emoji clustered into six groups: strong negative sentiment, moderately negative sentiment, neutral sentiment with a negative bias, neutral sentiment with a positive bias, moderately positive sentiment, and strong positive sentiment. In a subsequent study, Kutsuzawa et al. (2022a) found that these same 74 emoji also grouped into six clusters when comparing young adults (ages 20–39) and middle-aged adults (ages 40–59); however, middle-aged adults rated the arousal of emoji in the strong negative and moderately negative clusters as having significantly higher levels of arousal than young adult participants did.

We compared the valence and arousal ratings of 33 emoji by participants with and without TBI using hierarchical clustering analysis. Given the prevalence of emotion recognition deficits in TBI (Babbage et al., 2011) and initial findings from our group showing decreased accuracy labeling social emotions in emoji by individuals with TBI (Clough et al., 2023), we predicted that participants with TBI would differ from non-injured peers in their valence and arousal ratings of emoji. We had no specific predictions about the directions of these differences.

Free-text labels: Our second aim was to characterize the frequency and variety of emotion words participants with and without TBI generated for the 33 emoji and to identify particular emoji that might be at increased risk of being misinterpreted both within and between participant groups. We used a mixed-methods approach. In a quantitative analysis, we used a bag of words approach to vectorize participants’ free-text responses and calculate cosine similarity scores for each emoji by group. In a qualitative analysis, we coded responses into pre-defined emotion categories. We predicted that the TBI group would have greater variability in emotion labels than the non-injured group, resulting in poorer agreement in emotion labels between groups.

Methods

Participants

Participants were 50 individuals with moderate-severe TBI and 50 non-brain injured comparison (NC) participants. TBI and NC groups were matched on sex, age, and education. Participants with TBI were recruited from the Vanderbilt Brain Injury Patient Registry (Duff et al., 2022). All participants with TBI sustained their injuries in adulthood and were in the chronic phase of injury (at least six months post-onset); thus, they were out of post-traumatic amnesia and had stable neuropsychological profiles. All participants with TBI met inclusion criteria for a history of moderate-severe TBI using the Mayo Classification System (Malec et al., 2007) together with health history information collected through medical records and intake interviews. Participants were classified as having sustained a moderate-severe TBI if at least one of the following criteria was met: (1) Glasgow Coma Scale (Teasdale & Jennett, 1974) < 13 within 24 h of acute care admission (i.e., moderate or severe injury according to the Glasgow Coma Scale), (2) positive neuroimaging findings (acute CT findings or lesions visible on a chronic MRI), (3) loss of consciousness (LOC) > 30 min, or (4) post-traumatic amnesia (PTA) > 24 h. Common injury etiologies included motor vehicle accidents, motorcycle crashes, non-motorized vehicle accidents, and falls. Demographic information is summarized by group in Table 1. A full summary of demographic information for individuals with TBI, including age, education, injury etiology, time since onset, and Mayo Classification System criteria findings, can be found in supplementary materials (SI Table 1).
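Because classification required only one of the four listed criteria, the inclusion rule is a simple disjunction. The sketch below encodes just those four criteria as stated above; it is not an implementation of the full Mayo Classification System, and the record type and field names (`InjuryIndicators`, `gcs_within_24h`, and so on) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InjuryIndicators:
    """Hypothetical per-participant record; field names are illustrative only."""
    gcs_within_24h: Optional[int] = None   # Glasgow Coma Scale score within 24 h of admission
    positive_imaging: bool = False         # acute CT findings or lesions on a chronic MRI
    loc_minutes: Optional[float] = None    # duration of loss of consciousness (minutes)
    pta_hours: Optional[float] = None      # duration of post-traumatic amnesia (hours)


def meets_moderate_severe(rec: InjuryIndicators) -> bool:
    """True if at least one of the four criteria listed in the text is met.

    Missing values (None) simply do not count toward a criterion.
    """
    criteria = (
        rec.gcs_within_24h is not None and rec.gcs_within_24h < 13,
        rec.positive_imaging,
        rec.loc_minutes is not None and rec.loc_minutes > 30,
        rec.pta_hours is not None and rec.pta_hours > 24,
    )
    return any(criteria)
```

For example, a record with a GCS of 14 but 45 minutes of LOC qualifies through criterion (3), whereas a GCS of 14 alone does not.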

Stimuli

Participants provided perceptual judgments for 33 of the most frequently used facial expression emoji, following the protocol developed by Jaeger and colleagues (2019). All emoji were depicted using the Apple (iOS 14.2) renderings. An index of the emoji used in the study can be found in supplementary materials (SI Table 2).

Procedure

Participants completed the study remotely using Gorilla Experiment Builder (www.gorilla.sc; Anwyl-Irvine et al., 2020). Participants were not restricted by device type. The majority used laptop or desktop computers in both groups (n = 44 in the NC group; n = 42 in the TBI group), and the remaining participants used a tablet or a mobile device. Prior to beginning the experiment, participants read instructions on a screen simultaneously narrated by an experimenter via pre-recorded audio. Participants were not allowed to advance until the instructions finished playing. Participants were shown the “Thinking Face” emoji (🤔) on the screen while receiving the following instructions:

“For this task, you will see pictures of emoji like the one on this screen. Emoji are digital images that can accompany written text and are often used in text messages or on social media. Many emoji are intended to represent emotions. For each emoji, you will be asked to describe in one or two words the emotion or mood you think it conveys. For example, for this emoji, you might say something like, ‘puzzled’ or ‘skeptical.’ Try to avoid using labels that are just physical descriptions of the emoji. For example, you would not say, ‘scratching chin.’ You will also be asked to rate how positive or negative the emoji is and how calm or activated the emoji is. At the end of the task, there will be a brief survey. Please note: We want only your responses for this task. Please do not consult anyone else in your household or search engines like Google when making your responses.”

Each trial also had a prompt that read, “In one or two words, describe the emotion or mood you think the emoji conveys.” On each trial screen, a single emoji was presented. Participants were provided a text box to type their emotion label. Below the text box, participants rated the emotional valence of each emoji on a scale of 1 (negative) to 9 (positive) and the emotional arousal of each emoji on a scale of 1 (calm) to 9 (activated), following methods from Jaeger et al. (2019) (Fig. 1). All participants completed all study items. Although there was variability in how much time participants spent on each trial, it did not vary systematically by group, and there were no implausibly fast response times (minimum response time was 5.22 s in the NC group and 4.84 s in the TBI group). See supplementary materials for additional descriptive statistics of participant response times (Supplemental Methods and SI Table 3).

After providing perceptual judgments for all 33 emoji, participants answered questions about their emoji use, rating their confidence in the labels they provided for the emoji (1 = not confident at all, 5 = extremely confident), their frequency of emoji use (1 = Never, 5 = Always), and their attitudes and motives for using emoji, following methods from Prada et al. (2018). Participants rated their attitudes toward emoji on a scale of 1–7 across six bipolar scales (1 = Useful, 7 = Useless; 1 = Uninteresting, 7 = Interesting; 1 = Fun, 7 = Boring; 1 = Hard, 7 = Easy; 1 = Informal, 7 = Formal; 1 = Good, 7 = Bad). Similarly, participants rated their motives for using emoji on a scale of 1–7 (1 = completely disagree, 7 = completely agree), including the motive to “express how I feel to others,” “strengthen the content of my message,” “soften the content of my message,” “make the content of my message more ironic/sarcastic,” “make the content of my message more fun/comic,” “make the content of my message more serious,” “make the content of my message more positive,” “make the content of my message more negative,” and “express through images what I can’t express using words.” Following Prada et al. (2018), we used these responses to create an emoji attitude and emoji motive index, reverse scoring items when appropriate.
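Reverse scoring on a 1–7 scale maps a score s to 8 − s, so that every item points in the same direction before the items are combined. The sketch below shows this for the six attitude items, under two assumptions not stated in the text: that the index is the mean of the scored items, and that "Informal/Formal" is left unreversed because the text does not say which pole counts as favourable. The item keys are hypothetical.

```python
from statistics import fmean
from typing import Dict

SCALE_MIN, SCALE_MAX = 1, 7

# Bipolar items whose LOW anchor is the favourable pole (e.g., 1 = Useful, 7 = Useless),
# so they are reversed before averaging. ASSUMPTION: "informal_formal" is left as-is
# because the text does not state which pole counts as favourable.
REVERSED_ITEMS = {"useful_useless", "fun_boring", "good_bad"}


def reverse_score(score: int) -> int:
    """Map 1<->7, 2<->6, 3<->5 (4 stays 4) on a 1-7 scale."""
    return SCALE_MIN + SCALE_MAX - score


def attitude_index(responses: Dict[str, int]) -> float:
    """ASSUMED index: mean of the attitude items after reverse scoring; higher = more positive."""
    scored = [reverse_score(v) if item in REVERSED_ITEMS else v
              for item, v in responses.items()]
    return fmean(scored)
```

A motive index could be built the same way from the nine motive items, with whatever reverse-keyed items the original scoring scheme specifies.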

Analyses

We used the following approaches to compare ratings and emotion labels generated by NC and TBI groups:

Hierarchical Clustering: We examined whether emoji form distinct clusters in the NC and TBI groups based on mean valence and arousal ratings. To do so, we performed agglomerative hierarchical clustering, which successively combines items (in this case, emoji) based on their proximity until they form compact clusters, minimizing the distance within clusters and maximizing the distance between clusters (Everitt et al., 2010; Yim & Ramdeen, 2015). See supplementary materials (Supplemental Methods) for detailed procedure. To follow up on this analysis, we conducted Mann–Whitney-Wilcoxon tests for each of the 33 emoji by group.
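The agglomerative procedure can be illustrated on two-dimensional points (mean valence, mean arousal per emoji). The linkage criterion and the rule for choosing the number of clusters are described only in the supplementary materials, so this sketch assumes Ward's criterion (merge the pair of clusters whose union least increases the within-cluster sum of squares) and takes the target number of clusters `k` as given. The function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (mean valence, mean arousal) for one emoji


def ward_cost(a: List[Point], b: List[Point]) -> float:
    """Increase in within-cluster sum of squares if clusters a and b are merged."""
    ca = (sum(p[0] for p in a) / len(a), sum(p[1] for p in a) / len(a))
    cb = (sum(p[0] for p in b) / len(b), sum(p[1] for p in b) / len(b))
    sq_dist = (ca[0] - cb[0]) ** 2 + (ca[1] - cb[1]) ** 2
    return len(a) * len(b) / (len(a) + len(b)) * sq_dist


def agglomerate(points: Dict[str, Point], k: int) -> List[List[str]]:
    """Start with one cluster per emoji and merge the cheapest pair until k clusters remain."""
    clusters = [[name] for name in points]
    while len(clusters) > k:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: ward_cost([points[n] for n in clusters[ij[0]]],
                                     [points[n] for n in clusters[ij[1]]]),
        )
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)] + [merged]
    return clusters
```

With six hypothetical points forming three tight pairs, `agglomerate(points, 3)` recovers the three pairs. This naive version recomputes every pairwise cost at each step, which is fine for 33 emoji; library implementations use update formulas instead.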

Bag of words: To analyze differences in the labels that participants with and without TBI generated to describe the emoji, we used a bag-of-words approach, an approach to modeling text data that sums occurrences of words and converts these frequencies to numerical vectors. We calculated cosine similarity between the two vectors representing the words generated by the NC and TBI groups for each of the 33 emoji using the cosine function of the lsa package in R (Wild, 2022). See supplementary materials (Supplemental Methods) for detailed analysis procedure.
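The same computation can be sketched in Python: each group's responses to one emoji become a word-count vector, and cosine similarity is the dot product of the two vectors divided by the product of their lengths. The study itself used the `cosine` function of the R lsa package; the tokenization here (lower-casing and splitting on letters and apostrophes) is an assumption, since the preprocessing steps are given only in the supplementary materials.

```python
import math
import re
from collections import Counter
from typing import Iterable


def bag_of_words(responses: Iterable[str]) -> Counter:
    """Count lower-cased word tokens across all of one group's responses to one emoji."""
    counts: Counter = Counter()
    for text in responses:
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts


def cosine_similarity(a: Counter, b: Counter) -> float:
    """a.b / (|a| |b|) over the shared vocabulary; 0.0 if either vector is empty."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)
```

For instance, NC responses "sleepy", "tired", "sleepy" and TBI responses "sleepy", "bored" give vectors sharing only "sleepy", so the similarity is 2 / √10 ≈ 0.63.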

Qualitative Coding of Emotion Categories: Following Jaeger et al. (2019), we coded each free-text label response using 44 predetermined emotion codes to compare the type and frequency of emotion categories generated by NC and TBI groups. Surveying the range of responses generated by participants, two authors established consensus to create a detailed coding guide of words that fit each of the 44 content codes (See Supplementary Materials, SI Table 4). Authors were blind to participant group and to which emoji were being described when developing the coding guide. Two coders independently used the coding guide to assign emotion codes to each participant’s responses. Although participants were encouraged to describe each emoji in one or two words, they often used multiple words. A single word could only be assigned one emotion code, but responses with multiple words could receive up to four emotion codes (e.g., “funny, flirtatious, pleased” was coded into fun, flirty, and pleasure categories). Agreement between the two coders was 95%.

Emoji Use Survey: We conducted Mann-Whitney-Wilcoxon tests to compare participants’ confidence in emoji labels, frequency of emoji use, emoji attitude index, and emoji motive index between groups (NC, TBI).

Results

Hierarchical Clustering of Affect Ratings

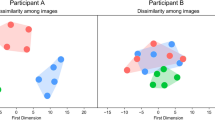

We examined mean valence and arousal ratings of the 33 emoji by group. Descriptive statistics of ratings for each emoji by group can be found in supplementary materials (SI Table 5). We performed an agglomerative hierarchical clustering analysis to explore how participants with and without TBI grouped emoji on the dimensions of valence and arousal, making up a two-dimensional affective space. Visual inspection of the scatterplot (Fig. 2) suggested that Sleeping Face 😴 had no near neighbors in affective space, particularly given its low arousal rating, and was determined to be an outlier. Therefore, we excluded it from the hierarchical clustering analysis. The analysis determined that the optimal number of clusters was four in the NC group and three in the TBI group (Fig. 3).

To qualitatively describe clusters, we examined the location of cluster centroids (see Fig. 3) within four quadrants divided by axes at arousal = 0 and valence = 0. Both the NC and TBI groups produced two negative valence clusters, one characterized by low arousal and the other characterized by higher arousal. Whereas the TBI group produced a single large positive valence cluster undifferentiated by arousal, the NC group produced two positive valence clusters, one with low-moderate arousal and one with high arousal. Table 2 displays descriptive statistics for each of the clusters, including mean valence and arousal ratings. Figure 4 shows the dendrograms for both NC and TBI groups, depicting the hierarchical relationship of the emoji clusters in both groups. The structure of the dendrograms shows how emoji were successively combined based on Euclidean distance between emoji in two-dimensional valence-arousal space. The Baker’s Gamma Index suggests the two dendrograms are highly and significantly correlated, γ = 0.61, p < 0.001, though not perfectly so (i.e., γ < +1). In an exploratory analysis, we compared the valence and arousal ratings for each of the 33 emoji by group (Table 3). Given the non-normality of the data, we used the Mann–Whitney-Wilcoxon test. We used Bonferroni correction for multiple comparisons (alpha level: 0.05/33 = 0.0015); however, due to the exploratory nature of this analysis, we also highlight emoji that differed between groups at α < 0.05. Future confirmatory studies should verify any group differences.
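Baker's Gamma Index compares two dendrograms built on the same items. For every pair of items, one finds the largest number of clusters k at which the pair still shares a cluster in each tree, then correlates those values across all pairs. The sketch below assumes each tree is given as its complete merge history and uses Spearman's rank correlation, which we believe is the choice made by common R implementations; treat that, and the helper names, as assumptions. The significance test reported above (typically a permutation test) is not shown.

```python
from itertools import combinations
from typing import FrozenSet, List, Sequence, Tuple

Merge = Tuple[FrozenSet[str], FrozenSet[str]]  # the two clusters joined at one step


def highest_shared_k(merges: Sequence[Merge], labels: Sequence[str]) -> List[int]:
    """For every pair of labels, the largest k at which the pair is still in one cluster.

    Assumes a complete merge history (n - 1 merges), so every pair is joined exactly once.
    """
    n = len(labels)
    ks = []
    for x, y in combinations(labels, 2):
        for step, (left, right) in enumerate(merges):
            if (x in left and y in right) or (y in left and x in right):
                ks.append(n - step - 1)  # after step + 1 merges, n - step - 1 clusters remain
                break
    return ks


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of the average ranks."""
    ra, rb = average_ranks(a), average_ranks(b)
    ma, mb = sum(ra) / len(ra), sum(rb) / len(rb)
    cov = sum((p - ma) * (q - mb) for p, q in zip(ra, rb))
    va = sum((p - ma) ** 2 for p in ra)
    vb = sum((q - mb) ** 2 for q in rb)
    return cov / (va * vb) ** 0.5


def bakers_gamma(merges_a: Sequence[Merge], merges_b: Sequence[Merge],
                 labels: Sequence[str]) -> float:
    return spearman(highest_shared_k(merges_a, labels), highest_shared_k(merges_b, labels))
```

Identical trees give γ = 1; trees that pair the items differently give a value below 1, which is how γ = 0.61 above signals a related but not identical hierarchy.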

Fig. 4. Dendrograms depicting the hierarchical structure of emoji clusters in NC and TBI groups. The structure of the clusters was determined by successively combining emoji based on Euclidean distance in 2D valence-arousal space. Individual clusters are identified by different colors in the branches of the tree (four clusters in the NC group and three clusters in the TBI group). Lines are drawn connecting the same emoji between groups to facilitate comparison. Colored lines connecting emoji indicate subclusters of two or three emoji that have the same underlying substructure in both groups, emphasizing between-group similarities in relative locations of emoji in 2D space. For connecting lines, the different colors indicate different subclusters shared by the two groups at the lowest levels of the hierarchy. Grey lines indicate emoji that do not share a common substructure between groups.

Along the valence dimension, the Neutral Face 😐 emoji was rated as more positive by TBI than NC participants (p = 0.003, r = 0.30), but this difference did not survive multiple comparison correction. Along the arousal dimension, only the Winking Face with Tongue 😜 emoji showed a significant, moderate-sized difference by group (p = 0.001, effect size r = 0.33), with the TBI group rating the emoji as significantly lower in arousal than NC peers did. Three additional emoji showed uncorrected group differences (p’s < 0.03): Crying Face 😢, Loudly Crying Face 😭, and Flushed Face 😳. However, all effect sizes were small (range r = 0.22 to r = 0.23), and these differences did not survive multiple comparison correction. Numerically, TBI participants rated all four emoji as lower in arousal than NC peers did.
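The per-emoji comparisons pair a Mann–Whitney test with an effect size r. A common definition is r = |z| / √N, with z from the normal approximation and N the total sample size; with N = 100, a two-sided p = 0.001 corresponds to |z| ≈ 3.29 and hence r ≈ 0.33, which appears consistent with the values reported above. The sketch below assumes that definition, a tie-corrected variance, and no continuity correction; statistical packages may differ in these details.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (U for x, two-sided normal-approximation p, effect size r = |z| / sqrt(N))."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    ties = sum(t ** 3 - t for t in Counter(pooled).values())  # tie correction term
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    z = (u - n1 * n2 / 2) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, p, abs(z) / math.sqrt(n)


ALPHA_BONFERRONI = 0.05 / 33  # about 0.0015 for 33 emoji
```

Under this threshold, the Winking Face with Tongue difference (p = 0.001) survives correction, while the Neutral Face difference (p = 0.003) does not.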

Emotion Labels

Bag of Words

We calculated cosine similarity scores to compare the words participants with and without TBI used to describe the 33 emoji (Fig. 5). Because word counts cannot be negative, the cosine of the angle between the two groups’ word-count vectors is a similarity value between 0 and 1, where 0 is complete dissimilarity (i.e., no common words used to describe an emoji) and 1 is perfect similarity. For the 33 emoji, cosine similarity values ranged from 0.983 (Sleeping Face 😴) to 0.384 (Expressionless Face 😑). The mean cosine similarity was 0.828. The high cosine value for Sleeping Face 😴 indicates high agreement in the words participants with and without TBI used to describe the emoji. For example, of the 50 participants in each group, 37 participants with TBI and 38 NC participants used the word “sleepy,” with a smaller number in both groups using the words “tired,” “bored,” and “asleep.” In general, agreement tended to be lower for emoji with negative valence. Expressionless Face 😑 had the poorest between-group agreement in the words used to describe it. The most frequent responses in the NC group were “bored” (n = 5), “annoyed” (n = 5), and “neutral” (n = 5), while the most frequent response in the TBI group was “neutral” (n = 4). All other responses in the TBI group had frequencies of two or less. Note that there also appears to be much less consensus within each group for the Expressionless Face emoji 😑. We suspected that other emoji with low cosine similarity scores might have larger within-group variability as well. To explore this possibility, we conducted a follow-up analysis in which we calculated van der Eijk’s measure of agreement (van der Eijk, 2001) using the agrmt package in R (Ruedin, 2021). The pattern was very similar between and within groups: emoji that had lower between-group agreement also tended to have lower within-group agreement in both participant groups.
See supplementary materials (Supplemental Analysis, SI Table 6) for more detailed within-group agreement results. SI Fig. 2 compares the variability of labels given within and between groups for each of the 33 emoji.

Qualitative Content Codes

To supplement the bag-of-words analysis, we also qualitatively coded participants’ emoji labels into one or more of 44 emotion content codes (see SI Table 4 for detailed coding guide). Unlike the bag-of-words approach, which counts occurrences of words but does not consider grammar, word order, or semantics, this approach allowed us to interpret each response in context and categorize it into predefined emotion labels (Jaeger et al., 2019). For example, labels such as “enraged,” “furious,” and “mad” were all categorized as “angry.” Table 4 describes the frequency of categories assigned to each of the emoji for words generated by ≥ 10% of participants in the NC and TBI groups. The qualitative coding replicated findings from the bag-of-words approach: emoji such as Sleeping Face 😴, Smiling Face with Heart Eyes 😍, and Angry Face 😠 all had high agreement in both groups for the emotion categories they represent, whereas emoji such as Persevering Face 😣, Smirking Face 😏, and Winking Face 😉 had much lower agreement.

Emoji Use and Attitudes

We explored group differences in emoji use and attitudes using Mann-Whitney-Wilcoxon tests given non-normality of the data. Participants rated their confidence in their emoji free-text labels and frequency of emoji use on five-point scales. Participants with TBI rated their confidence as significantly lower than non-brain injured peers, although the effect was small (MTBI = 2.16, MNC = 2.44; p = 0.02, effect size r = 0.23). Participants with and without TBI did not differ in their reported frequency of emoji use (MTBI = 2.58, MNC = 2.52; p = 0.64, effect size r = 0.05).

Participants rated their attitudes and motives toward using emoji on seven-point scales. These responses were summarized by attitude and motive index scores (Prada et al., 2018), where higher numbers indicate more positive attitudes and stronger endorsed motives. Participants with and without TBI did not differ in their overall attitudes toward (MTBI = 5.51, MNC = 5.77; p = 0.11, effect size r = 0.16) or motives for using emoji (MTBI = 2.58, MNC = 2.52; p = 0.64, effect size r = 0.05).

Discussion

We compared the perception of emoji in isolation by participants with and without moderate-severe TBI. Participants generated free-text emotion labels for 33 emoji and rated their valence and arousal on nine-point scales. Participants with TBI shared many similarities with the NC group in their ratings and labeling of emoji; both groups produced two negative valence emoji clusters, one with lower arousal and one with high arousal, and participants in both groups generated similar labels to describe most emoji, as indicated by both high cosine similarity scores and between-group agreement in qualitative coding of emotion categories. Some differences also emerged. Valence and arousal ratings produced four clusters in the NC group but only three clusters in the TBI group. This difference stemmed from the positive valence emoji, for which the NC group produced both a moderate and high arousal cluster, whereas the TBI group produced one single positive valence cluster, undifferentiated by arousal.

Although participant groups did differ in the number of clusters produced by the hierarchical clustering analysis, the general pattern of valence and arousal ratings was strikingly similar between the two groups. At the individual emoji level, group differences in average valence and arousal ratings were within one point on the nine-point scale for all emoji, and only one emoji (Winking Face with Tongue 😜) had a significant between-group difference in arousal ratings after correction for multiple comparisons. Thus, in the current sample of TBI participants, these differences are unlikely to result in functional impacts in emoji perception in isolation. This finding of intact emoji perception in individuals with TBI suggests that emoji could potentially be leveraged to support computer-mediated communication. However, it is also possible that the slight differences in perceived arousal we identified in isolation might be amplified in more complex communication contexts. For example, although people with TBI may be able to easily interpret the distinction between sentences framed by emoji with large differences in arousal levels (e.g., “My date was 😁” vs. “My date was 😌”), they may have more difficulty interpreting distinctions that span a smaller arousal range (e.g., “My date was 😁” vs. “My date was 😊”). Thus, future studies should examine how people with TBI perceive and use emoji in context.

Like the TBI group in the current study, the 33 emoji in Jaeger et al. (2019) formed three clusters; however, Jaeger et al. clustered on the valence dimension only, whereas we clustered on both valence and arousal dimensions. Thus, differences between our NC group clusters and those reported previously in the literature may reflect differences in the number of dimensions used, differences in the number of emoji examined (e.g., 74 emoji as in Kutsuzawa et al., 2022a, 2022b), or our relatively small sample size, which was restricted by careful demographic matching with the TBI clinical sample. Although a handful of emoji were rated lower in arousal by the TBI group, these emoji did not also show poorer agreement in the words the groups used to describe them (all had cosine similarity scores > 0.85), suggesting that emotion perception is multifaceted and that a group difference on a single affective dimension (i.e., arousal) had minimal impact on overall emoji labeling. Finally, although some emoji had low cosine similarity scores, indicating poor between-group agreement in the words participants used to describe them, supplementary analyses revealed that these emoji tended to have lower within-group agreement as well. The poor between- and within-group agreement for these emoji also indicates a potential need to redesign them or for CMC platforms to provide guidelines on their use. That emoji are designed by platform developers, unlike facial expressions, which emerge from biological and cultural antecedents, points to another avenue for future research on how emoji can be designed to better reflect human affective states.

Studying emoji perception in clinical groups with differences in emotion processing may uniquely inform our understanding of the emotionality of emoji. Participants with TBI show reduced accuracy in identifying basic emotions in human faces compared to non-injured peers when emotion intensity is manipulated (Rigon et al., 2016; Rosenberg et al., 2014), and emotional blunting, flat affect, and apathy are also common in adults with moderate-severe TBI (Arnould et al., 2013, 2015; Lane-Brown & Tate, 2011). Importantly, not all people with TBI have emotion recognition deficits. Heterogeneity is a hallmark characteristic of TBI (Covington & Duff, 2021), and more work is needed to determine whether individuals with emotion expression or recognition deficits are at greater risk for differences in emoji perception. Indeed, previous work in autism has shown that higher alexithymia scores, which reflect greater emotional processing difficulties, are associated with poorer recognition of affect in both faces (Bothe et al., 2019) and emoji (Taylor et al., 2022). Whereas the current study found evidence of dampened arousal ratings for some emoji by people with TBI relative to non-brain injured peers, Kutsuzawa et al. (2022a) found that middle-aged adults rated negative-valence emoji as significantly higher in arousal than young adults did. Variability in affective ratings across age or clinical groups could have several sources, such as true differences in emoji perception, differences in emoji experience and in social learning of emoji use in context, or differences in participants’ understanding of subjective rating scales.
Like the studies mentioned above (Jaeger et al., 2019; Kutsuzawa et al., 2022a, 2022b), the current study used a nine-point scale with positive integers only; however, scales with both negative and positive integers around a neutral zero midpoint may more accurately reflect verbal-spatial processing (see Hand et al., 2022a, for a discussion). Future replications or extensions of this work should consider these factors to establish the stability of emotion perception within and across age or clinical groups and to identify factors that predict differences in emoji perception.

In line with other studies (Jaeger & Ares, 2017; Jaeger et al., 2019), we found that some emoji lend themselves to multiple emotion representations; for such emoji, correct interpretation depends on context. Emoji with lower agreement scores in the current study (e.g., Expressionless Face 😑, Neutral Face 😐, Unamused Face 😒, Confounded Face 😖) may be more likely to contribute to misinterpretations of intended messages, and people with TBI, who commonly have social inference deficits (Bibby & McDonald, 2005; McDonald, 2013; McDonald & Flanagan, 2004), might be particularly at risk for such misinterpretations. This is an avenue for future research. Similarly, our group previously found (Clough et al., 2023) that people with TBI were impaired relative to non-brain injured peers in assigning emotion labels to emoji depicting social emotions (e.g., embarrassed, anxious) but performed similarly to their peers in assigning labels to emoji depicting basic emotions (e.g., angry, happy). Thus, emotional ambiguity in emoji may disproportionately affect people with TBI.

Our findings suggest there are many similarities in the way people with TBI and non-brain injured peers perceive emoji in isolation, with small differences in arousal ratings for only a minority of emoji. However, despite this evidence of intact emoji perception, participants with TBI rated their confidence in emoji labels as significantly lower than non-injured peers. This has important clinical implications both for helping participants with TBI recognize areas of competence and for potentially leveraging areas of strength to support functional communication after brain injury. Future research should examine emoji perception and use in more ecologically valid contexts to identify barriers or supports to improving social participation in computer-mediated communication contexts in this population. As emoji become increasingly ubiquitous across technological platforms and social and vocational contexts, studying emoji perception and use by people with TBI is essential for advancing our understanding of functional communication and social participation in TBI.

References

Ahmadi, R., Lim, H., Mutlu, B., Duff, M., Toma, C., & Turkstra, L. (2022). Facebook experiences of users with traumatic brain injury: A think-aloud study. JMIR Rehabilitation and Assistive Technologies, 9(4), e39984. https://doi.org/10.2196/39984

Anwyl-Irvine, A. L., Massonnié, J., Flitton, A., Kirkham, N., & Evershed, J. K. (2020). Gorilla in our midst: An online behavioral experiment builder. Behavior Research Methods, 52(1), 388–407. https://doi.org/10.3758/s13428-019-01237-x

Arnould, A., Rochat, L., Azouvi, P., & van der Linden, M. (2013). A multidimensional approach to apathy after traumatic brain injury. Neuropsychology Review, 23(3), 210–233. https://doi.org/10.1007/s11065-013-9236-3

Arnould, A., Rochat, L., Azouvi, P., & van der Linden, M. (2015). Apathetic symptom presentations in patients with severe traumatic brain injury: Assessment, heterogeneity and relationships with psychosocial functioning and caregivers’ burden. Brain Injury, 29(13–14), 1597–1603. https://doi.org/10.3109/02699052.2015.1075156

Babbage, D. R., Yim, J., Zupan, B., Neumann, D., Tomita, M. R., & Willer, B. (2011). Meta-analysis of facial affect recognition difficulties after traumatic brain injury. Neuropsychology, 25(3), 277–285. https://doi.org/10.1037/a0021908

Bai, Q., Dan, Q., Mu, Z., & Yang, M. (2019). A systematic review of emoji: Current research and future perspectives. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2019.02221

Bibby, H., & McDonald, S. (2005). Theory of mind after traumatic brain injury. Neuropsychologia, 43(1), 99–114. https://doi.org/10.1016/j.neuropsychologia.2004.04.027

Bothe, E., Palermo, R., Rhodes, G., Burton, N., & Jeffery, L. (2019). Expression recognition difficulty is associated with social but not attention-to-detail autistic traits and reflects both alexithymia and perceptual difficulty. Journal of Autism and Developmental Disorders, 49(11), 4559–4571. https://doi.org/10.1007/s10803-019-04158-y

Brunner, M., Hemsley, B., Palmer, S., Dann, S., & Togher, L. (2015). Review of the literature on the use of social media by people with traumatic brain injury (TBI). Disability and Rehabilitation, 37(17), 1511–1521. https://doi.org/10.3109/09638288.2015.1045992

Brunner, M., Palmer, S., Togher, L., & Hemsley, B. (2019). ‘I kind of figured it out’: The views and experiences of people with traumatic brain injury (TBI) in using social media—self-determination for participation and inclusion online. International Journal of Language and Communication Disorders, 54(2), 221–233. https://doi.org/10.1111/1460-6984.12405

Byom, L., Duff, M., Mutlu, B., & Turkstra, L. (2019). Facial emotion recognition of older adults with traumatic brain injury. Brain Injury, 33(3), 322–332. https://doi.org/10.1080/02699052.2018.1553066

Centers for Disease Control and Prevention. (2015). Report to Congress on Traumatic Brain Injury in the United States: Epidemiology and Rehabilitation.

Clough, S., Morrow, E., Mutlu, B., Turkstra, L., & Duff, M. C. (2023). Emotion recognition of faces and emoji in individuals with moderate-severe traumatic brain injury. Brain Injury. https://doi.org/10.1080/02699052.2023.2181401

Covington, N. V., & Duff, M. C. (2021). Heterogeneity is a hallmark of traumatic brain injury, not a limitation: A new perspective on study design in rehabilitation research. American Journal of Speech-Language Pathology, 30(2S), 974–985. https://doi.org/10.1044/2020_AJSLP-20-00081

Cramer, H., de Juan, P., & Tetreault, J. (2016). Sender-intended functions of emojis in US messaging. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services (pp. 504–509). https://doi.org/10.1145/2935334.2935370

Croker, V., & McDonald, S. (2005). Recognition of emotion from facial expression following traumatic brain injury. Brain Injury, 19(10), 787–799. https://doi.org/10.1080/02699050500110033

Dewan, M. C., Rattani, A., Gupta, S., Baticulon, R. E., Hung, Y.-C., Punchak, M., Agrawal, A., Adeleye, A. O., Shrime, M. G., Rubiano, A. M., Rosenfeld, J. V., & Park, K. B. (2019). Estimating the global incidence of traumatic brain injury. Journal of Neurosurgery, 130(4), 1080–1097. https://doi.org/10.3171/2017.10.JNS17352

Duff, M. C., Morrow, E. L., Edwards, M., McCurdy, R., Clough, S., Patel, N., Walsh, K., & Covington, N. V. (2022). The value of patient registries to advance basic and translational research in the area of traumatic brain injury. Frontiers in Behavioral Neuroscience, 16, 846919. https://doi.org/10.3389/fnbeh.2022.846919

Everitt, B. S., Landau, S., Leese, M., & Stahl, D. (2010). Cluster analysis. Wiley.

Green, R. E. A., Turner, G. R., & Thompson, W. F. (2004). Deficits in facial emotion perception in adults with recent traumatic brain injury. Neuropsychologia, 42, 133–141. https://doi.org/10.1016/j.neuropsychologia.2003.07.005

Hand, C. J., Burd, K., Oliver, A., & Robus, C. M. (2022a). Interactions between text content and emoji types determine perceptions of both messages and senders. Computers in Human Behavior Reports, 8, 100242. https://doi.org/10.1016/j.chbr.2022.100242

Hand, C. J., Kennedy, A., Filik, R., Pitchford, M., & Robus, C. M. (2022b). Emoji identification and emoji effects on sentence emotionality in ASD-diagnosed adults and neurotypical controls. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-022-05557-4

Jaeger, S. R., & Ares, G. (2017). Dominant meanings of facial emoji: Insights from Chinese consumers and comparison with meanings from internet resources. Food Quality and Preference, 62, 275–283.

Jaeger, S. R., Roigard, C. M., Jin, D., Vidal, L., & Ares, G. (2019). Valence, arousal and sentiment meanings of 33 facial emoji: Insights for the use of emoji in consumer research. Food Research International, 119, 895–907. https://doi.org/10.1016/j.foodres.2018.10.074

Kersey, J., McCue, M., & Skidmore, E. (2020). Domains and dimensions of community participation following traumatic brain injury. Brain Injury, 34(6), 708–712. https://doi.org/10.1080/02699052.2020.1757153

Kutsuzawa, G., Umemura, H., Eto, K., & Kobayashi, Y. (2022a). Age differences in the interpretation of facial emojis: Classification on the arousal-valence space. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2022.915550

Kutsuzawa, G., Umemura, H., Eto, K., & Kobayashi, Y. (2022b). Classification of 74 facial emoji’s emotional states on the valence-arousal axes. Scientific Reports, 12(1), 398. https://doi.org/10.1038/s41598-021-04357-7

Lane-Brown, A. T., & Tate, R. L. (2011). Apathy after traumatic brain injury: An overview of the current state of play. Brain Impairment, 12(1), 43–53. https://doi.org/10.1375/brim.12.1.43

Maas, A. I. R., Menon, D. K., Adelson, P. D., Andelic, N., Bell, M. J., Belli, A., Bragge, P., Brazinova, A., Büki, A., Chesnut, R. M., Citerio, G., Coburn, M., Cooper, D. J., Crowder, A. T., Czeiter, E., Czosnyka, M., Diaz-Arrastia, R., Dreier, J. P., Duhaime, A.-C., & Zumbo, F. (2017). Traumatic brain injury: Integrated approaches to improve prevention, clinical care, and research. The Lancet Neurology, 16(12), 987–1048. https://doi.org/10.1016/S1474-4422(17)30371-X

Malec, J. F., Brown, A. W., Leibson, C. L., Flaada, J. T., Mandrekar, J. N., Diehl, N. N., & Perkins, P. K. (2007). The Mayo classification system for traumatic brain injury severity. Journal of Neurotrauma, 24, 1417–1424. https://doi.org/10.1089/neu.2006.0245

McDonald, S. (2013). Impairments in social cognition following severe traumatic brain injury. Journal of the International Neuropsychological Society, 19(3), 231–246. https://doi.org/10.1017/S1355617712001506

McDonald, S., & Flanagan, S. (2004). Social perception deficits after traumatic brain injury: Interaction between emotion recognition, mentalizing ability, and social communication. Neuropsychology, 18(3), 572–579. https://doi.org/10.1037/0894-4105.18.3.572

Miller, H., Thebault-Spieker, J., Chang, S., Johnson, I., Terveen, L., & Hecht, B. (2016). “Blissfully happy” or “ready to fight”: Varying interpretations of emoji. In Proceedings of the 10th International Conference on Web and Social Media (ICWSM 2016) (pp. 259–268).

Morrow, E. L., Zhao, F., Turkstra, L., Toma, C., Mutlu, B., & Duff, M. C. (2021). Computer-mediated communication in adults with and without moderate-to-severe traumatic brain injury: Survey of social media use. JMIR Rehabilitation and Assistive Technologies, 8(3), e26586. https://doi.org/10.2196/26586

Prada, M., Rodrigues, D. L., Garrido, M. V., Lopes, D., Cavalheiro, B., & Gaspar, R. (2018). Motives, frequency and attitudes toward emoji and emoticon use. Telematics and Informatics, 35(7), 1925–1934. https://doi.org/10.1016/j.tele.2018.06.005

Radice-Neumann, D., Zupan, B., Babbage, D. R., & Willer, B. (2007). Overview of impaired facial affect recognition in persons with traumatic brain injury. Brain Injury, 21(8), 807–816. https://doi.org/10.1080/02699050701504281

Rigon, A., Turkstra, L., Mutlu, B., & Duff, M. (2016). The female advantage: Sex as a possible protective factor against emotion recognition impairment following traumatic brain injury. Cognitive, Affective and Behavioral Neuroscience, 16(5), 866–875. https://doi.org/10.3758/s13415-016-0437-0

Rigon, A., Turkstra, L. S., Mutlu, B., & Duff, M. C. (2018a). Facial-affect recognition deficit as a predictor of different aspects of social-communication impairment in traumatic brain injury. Neuropsychology, 32(4), 476–483. https://doi.org/10.1037/neu0000368

Rigon, A., Voss, M. W., Turkstra, L. S., Mutlu, B., & Duff, M. C. (2017). Relationship between individual differences in functional connectivity and facial-emotion recognition abilities in adults with traumatic brain injury. NeuroImage: Clinical, 13, 370–377. https://doi.org/10.1016/j.nicl.2016.12.010

Rigon, A., Voss, M. W., Turkstra, L. S., Mutlu, B., & Duff, M. C. (2018b). Different aspects of facial affect recognition impairment following traumatic brain injury: The role of perceptual and interpretative abilities. Journal of Clinical and Experimental Neuropsychology. https://doi.org/10.1080/13803395.2018.1437120

Rosenberg, H., McDonald, S., Dethier, M., Kessels, R. P. C., & Westbrook, R. F. (2014). Facial emotion recognition deficits following moderate-severe traumatic brain injury (TBI): Re-examining the valence effect and the role of emotion intensity. Journal of the International Neuropsychological Society, 20(10), 994–1003. https://doi.org/10.1017/S1355617714000940

Ruedin, D. (2021). agrmt: Calculate concentration and dispersion in ordered rating scales (R package version 1.42.8). https://CRAN.R-project.org/package=agrmt

Salas, C. E., Casassus, M., Rowlands, L., Pimm, S., & Flanagan, D. A. (2018). “Relating through sameness”: A qualitative study of friendship and social isolation in chronic traumatic brain injury. Neuropsychological Rehabilitation, 28(7), 1161–1178.

Shorland, J., & Douglas, J. M. (2010). Understanding the role of communication in maintaining and forming friendships following traumatic brain injury. Brain Injury, 24(4), 569–580. https://doi.org/10.3109/02699051003610441

Subramanian, J., Sridharan, V., Shu, K., & Liu, H. (2019). Exploiting emojis for sarcasm detection. In R. Thomson, H. Bisgin, C. Dancy, & A. Hyder (Eds.), Social, Cultural, and Behavioral Modeling (pp. 70–80). Springer.

Taylor, H., Hand, C. J., Howman, H., & Filik, R. (2022). Autism, attachment, and alexithymia: Investigating emoji comprehension. International Journal of Human-Computer Interaction. https://doi.org/10.1080/10447318.2022.2154890

Teasdale, G., & Jennett, B. (1974). Assessment of coma and impaired consciousness: A practical scale. The Lancet, 304(7872), 81–84. https://doi.org/10.1016/S0140-6736(74)91639-0

Tigwell, G. W., & Flatla, D. R. (2016). Oh that’s what you meant! In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct (pp. 859–866). https://doi.org/10.1145/2957265.2961844

Turkstra, L. S., Kraning, S. G., Riedeman, S. K., Mutlu, B., Duff, M., & VanDenHeuvel, S. (2017). Labelling facial affect in context in adults with and without TBI. Brain Impairment, 18(1), 49–61. https://doi.org/10.1017/BrImp.2016.29

Turkstra, L. S., Norman, R. S., Mutlu, B., & Duff, M. C. (2018). Impaired theory of mind in adults with traumatic brain injury: A replication and extension of findings. Neuropsychologia, 111, 117–122. https://doi.org/10.1016/j.neuropsychologia.2018.01.016

van der Eijk, C. (2001). Measuring agreement in ordered rating scales. Quality & Quantity. https://doi.org/10.1023/A:1010374114305

Wild, F. (2022). lsa: Latent semantic analysis (R package version 0.73.3). https://CRAN.R-project.org/package=lsa

Yim, O., & Ramdeen, K. T. (2015). Hierarchical cluster analysis: Comparison of three linkage measures and application to psychological data. The Quantitative Methods for Psychology, 11(1), 8–21. https://doi.org/10.20982/tqmp.11.1.p008

Ylvisaker, M., & Feeney, T. J. (1998). Collaborative brain injury intervention: Positive everyday routines. Singular Publishing Group.

Zupan, B., Dempsey, L., & Hartwell, K. (2022). Categorising emotion words: The influence of response options. Language and Cognition. https://doi.org/10.1017/langcog.2022.24

Acknowledgements

This work was supported by NIDCD grant R01 HD071089 to M.C.D., L.S.T., & B.M. Thank you to Nirav Patel and Kim Walsh for help with participant recruitment and data collection and to Emma Montesi for help with qualitative coding.

Funding

National Institute on Deafness and Other Communication Disorders, R01 HD071089

Author information

Contributions

Conceptualization, S.C., M.D., L.T., and B.M.; methodology, S.C., A.T., M.D., L.T., and B.M.; formal analysis, S.C. and A.T.; resources, M.D.; writing – original draft preparation, S.C.; writing – review and editing, A.T., M.D., L.T., and B.M.; visualization, S.C.; supervision, M.D.; funding acquisition, M.D., L.T., and B.M. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Clough, S., Tanguay, A.F.N., Mutlu, B. et al. How do Individuals With and Without Traumatic Brain Injury Interpret Emoji? Similarities and Differences in Perceived Valence, Arousal, and Emotion Representation. J Nonverbal Behav 47, 489–511 (2023). https://doi.org/10.1007/s10919-023-00433-w

DOI: https://doi.org/10.1007/s10919-023-00433-w