Abstract

Behavioural coding is time-intensive and laborious. Thin slice sampling provides an alternative approach, aiming to alleviate the coding burden. However, little is understood about whether different behaviours coded over thin slices are comparable to those same behaviours over entire interactions. We aimed to provide quantitative evidence for the value of thin slice sampling for a variety of behaviours. We used data from three populations of parent-infant interactions: mother-infant dyads from the Grown in Wales (GiW) cohort (n = 31), mother-infant dyads from the Avon Longitudinal Study of Parents and Children (ALSPAC) cohort (n = 14), and father-infant dyads from the ALSPAC cohort (n = 11). Mean infant ages were 13.8, 6.8, and 7.1 months, respectively. Interactions were coded using a comprehensive coding scheme comprising 11–14 behavioural groups, with each group comprising 3–13 mutually exclusive behaviours. We calculated frequencies of verbal and non-verbal behaviours, transition matrices (probability of transitioning between behaviours, e.g., from looking at the infant to looking at a distraction) and stationary distributions (long-term proportion of time spent within behavioural states) for 15 thin slices of full, 5-min interactions. Measures drawn from the full sessions were compared to those from 1-, 2-, 3- and 4-min slices. We identified many instances where thin slice sampling (i.e., < 5 min) was an appropriate coding method, although we observed significant variation across different behaviours. We used this information to provide detailed guidance to researchers regarding how long to code for each behaviour depending on their objectives.

Introduction

Microanalytic behavioural coding describes the categorisation of overt behaviours at high temporal resolution, capturing detailed information from observational data. This approach can be applied to a variety of interactions, and is effective for identifying subtle behaviours (Beebe et al., 2010). Parent-infant interaction studies have previously used microanalysis to improve understanding of behaviours across a range of contexts, e.g., associations between maternal behaviour and postpartum depression (Beebe et al., 2008) or infant behaviour and attachment outcomes (Koulomzin et al., 2002). Others have used behavioural coding to investigate many aspects of parent-infant interactions, including behaviour regulation (Feldman & Eidelman, 2007; Northrup & Iverson, 2020), synchrony and communication (Cote & Bornstein, 2021; Galligan et al., 2018; Papaligoura & Trevarthen, 2001) and impacts for infant cognitive and neuro-development (Feldman & Eidelman, 2003). Studies in this field have coded observations of varying lengths of time, including 2.5 min (Beebe et al., 2011), 5 min (Northrup & Iverson, 2020), and 15 min (Feldman & Eidelman, 2007).

Behavioural coding is a rigorous, iterative, and time-intensive process. Pesch and Lumeng (2017) outlined several factors that may increase the amount of time that researchers spend coding, including using coding schemes with complex behavioural categories, implementing novel coding schemes, and measuring contingencies between behaviours. Consequently, it is accepted practice to only code short portions of longer interactions (James et al., 2012), although these decisions are not always well justified or supported by evidence. James et al. (2012) examined researchers’ justifications for the length of the coded timeframe in 18 mother-infant interaction studies. The most common of these were “follows previous research” or “pilot study”, with only one study citing scientific evidence for their choice.

This evidence was a meta-analysis conducted by Ambady and Rosenthal (1992), which suggested that “thin slices”—defined as brief (< 5 min) observations of expressive behaviours—are predictive of a number of outcomes, such as deception, trustworthiness, and satisfaction. Many works have since investigated the use of thin slice sampling to predict outcome variables and have reproduced similar results. For example, Murphy (2007) demonstrated that 1-min slices were sufficient to predict participant intelligence, Hirschmann et al. (2018) found that 10- and 40-min slices were comparable for predicting maternal sensitivity, and Roter et al. (2011) showed that 1-min slices were adequate for predicting communication over sessions longer than 10 min. However, other studies have identified losses in predictive validity of various outcomes whilst using the thin slice sampling approach (e.g., Murphy et al., 2019; Wang et al., 2020).

Whilst there are many examples of studies investigating the suitability of thin slice sampling for outcome prediction, less is known about whether thin slice sampling of individual behaviours can accurately predict the same behaviour across the total interaction (or in the long-term). Few studies have compared behaviour proportions, frequencies and durations over thin slices and full session interactions, and those that have done so demonstrate conflicting findings. For example, Murphy (2005) found strong evidence for a moderate to high correlation between coding 1-, 2- and 3-min slices of non-verbal behaviours and full 15-min interactions, and Carcone et al. (2015) found comparable proportions of verbalisations in 1- and 2-min slices compared to the full 30-min session. Both studies found higher correlation as the lengths of slices increased. Findings from these studies indicate that behavioural features over thin slices may be predictive of those same behaviours over longer timeframes. Conversely, James et al. (2012) found evidence that contingency measures of visual and vocal behaviours over 3- and 6-min slices differed significantly from those drawn from the full 18-min interactions. There is a clear need for further guidance or quantitative evidence to outline the value of varying lengths of coding, and how the accuracy of predictions may vary between different behaviours (e.g., verbal, visual).

Further, whilst thin slice sampling has been applied to mother-infant interactions in a small handful of studies (Hirschmann et al., 2018; James et al., 2012), the approach has not yet been applied to father-infant interactions; generating new insight in this area would therefore benefit future parent-infant interaction studies.

The aims of this work were two-fold: (1) to provide quantitative evidence to inform researchers’ decisions regarding the length of interaction needed for coding different behavioural groups, and (2) to supplement existing understanding of using thin slices in parent-infant interactions (particularly as analyses of this kind have not yet been applied to fathers). We hypothesised that measures drawn from parent and infant behaviours over longer slices (i.e. 3-, 4-min), as opposed to shorter slices (i.e. 1-, 2-min), would be most representative of measures drawn from fully coded, 5-min interactions. To address our aims, we compared behavioural measures over various slices of interactions using three distinct populations of parent-infant interactions, building upon previous works that have compared behavioural counts over thin slices and full sessions (e.g., Carcone et al., 2015; Murphy, 2005). Following each section of analysis (frequencies, transition matrices and stationary distributions), we have provided detailed guidance for interpreting how many minutes of coding may be necessary to capture the characteristics of each behaviour and measure.

Methodology

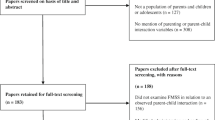

Participants

The datasets used in this research are comprised of videos of parent-infant interactions, taken from two cohort studies: Avon Longitudinal Study of Parents and Children (ALSPAC) and Grown in Wales (GiW). From these cohorts, we selected three distinct populations of participants: from GiW we used “Cardiff Mums” (n = 31 dyads), and from ALSPAC we used “Bristol Mums” (n = 14) and “Bristol Dads” (n = 11). For the three populations, we analysed 15 distinct, cumulative thin slices of lengths 1-, 2-, 3-, 4- and 5-min (described in more detail later). Further demographic information for each population is provided below.

Cardiff Mums (n = 31): Mothers and infants in the Cardiff population are participants from the Grown in Wales (GiW) cohort study, previously described in Janssen et al. (2018) and Savory et al. (2020). Briefly, the GiW study aimed to examine the relationship between prenatal mood symptoms, placental genomic characteristics, and offspring outcomes. The GiW cohort is based in South Wales (UK), consisting of women recruited between 1 September 2015 and 31 November 2016 at a presurgical appointment prior to a booked elective caesarean section. Mothers were recruited if they met the criteria of a singleton term pregnancy without fetal anomalies and infectious diseases. At the time of recruitment, the cohort consisted of 355 women between the ages of 18 and 45.

One year after birth, all GiW participants were invited for an in-person assessment, where the video interactions were recorded. In total, 85 dyads attended the assessment between September 2016 and December 2017, and we selected 31 video interactions based on video quality for our work. We refer to these participants as “Cardiff Mums” and “Cardiff Infants”. The mean maternal age at the time of assessment was 35.4 years (SD = 0.33), and the mean infant age was 13.8 months (SD = 0.98). Sixteen infants were male; fifteen infants were female. In terms of parity, 16.1% were nulliparous and 83.9% were multiparous. For the mother's education, 16.1% were educated to GCSE level, 12.9% to A-Levels, 31% had an undergraduate degree, and 38.7% held a postgraduate degree.

Bristol Mums (n = 14): Mothers and infants in the Bristol Mums population are participants from the Avon Longitudinal Study of Parents and Children (ALSPAC). The study website (http://www.bristol.ac.uk/alspac/researchers/our-data/) contains details of all ALSPAC data that is available through a fully searchable data dictionary and variable search tool. ALSPAC study data were collected and managed using REDCap electronic data capture tools hosted at the University of Bristol (Harris et al., 2009). REDCap (Research Electronic Data Capture) is a secure, web-based software platform designed to support data capture for research studies.

Full cohort demographics and recruitment details have been provided previously elsewhere (Boyd et al., 2013; Fraser et al., 2013; Lawlor et al., 2019; Northstone et al., 2019). Briefly, ALSPAC is an ongoing longitudinal, population-based study based in Bristol, UK. The original cohort was recruited via a set of 14 541 pregnancies with expected delivery dates between 1 April 1991 and 31 December 1992. The children born to the original cohort (ALSPAC-G0) in 1992 are referred to as generation 1, or ALSPAC-G1, and the children born to those children over two decades later are referred to as generation 2, or ALSPAC-G2. Our work comprises mothers from ALSPAC-G1 (born in 1992), and their corresponding infants from ALSPAC-G2 (born over two decades later). We refer to these participants as “Bristol Mums” and “Bristol Infants (1)”.

Bristol Mums were recruited via a research clinic at the University of Bristol, which they attended approximately 6 months after the birth of their infant (mothers at the clinic were invited to take part in the headcam project). The videos used in this work were collected between July 2016 and July 2018. There were no selection criteria for mothers other than being part of the original ALSPAC cohort. Mean maternal age was 24.4 years (SD = 0.9), and mean age of infants was 29.5 weeks (SD = 1.4). Nine infants were male; five infants were female. In terms of parity, 78.6% were primiparous and 21.4% were multiparous. For the mother's education, 7.1% were educated to GCSE level, 71.4% to A-levels, and 7.1% held a higher education degree (14.3% unknown).

Bristol Dads (n = 11): Fathers and infants in the Bristol Dads population are also participants from ALSPAC-G1 and ALSPAC-G2, as described above. Explicitly, the fathers belong to generation 1, and were born to the original ALSPAC-G0 mothers in 1992, and the infants in these dyads were born over two decades later, comprising generation 2. We refer to these participants as “Bristol Dads” and “Bristol Infants (2)”. Bristol Dads were recruited via a father-specific research clinic (“Focus on Fathers”), which invited fathers to attend multiple assessments when their infant was 6 months old. Data were collected between July 2019 and 2020, and there were no specific selection criteria other than being a partner of an original ALSPAC participant. Mean paternal age was 27.5 years (SD = 3.5), and mean age of infants was 30.8 weeks (SD = 3.8). Six infants were male; five infants were female. In terms of parity, 54.5% were primiparous and 45.5% were multiparous. For the father’s education, 63.6% were educated to GCSE level, and 18.2% to A-level (18.2% unknown).

Video Recording Procedures

Cardiff Mums (n = 31): Interactions were recorded by a tripod-mounted video recorder. Mothers and infants were recorded during an unstructured, “free play” session in a research laboratory at Cardiff University. Free play means that mothers and infants were not provided with a set of play instructions, and were free to move about the room.

Two researchers were present at the time of video recording, one of whom was responsible for operating the video recorder (with the aim of keeping as much of the infant and mother in shot as possible, but with a preferential focus on the infant). The room was set up with a soft play pen (that the infant was able to climb in and out of) placed in the middle of the room, and a number of toys placed inside it. There was also a bookcase displaying a number of toys at the back of the room. In the left-hand corner of the room there was a chair for the mother to sit on. The mother was instructed to play as she normally would at home with the infant for 5 min, using any of the toys available in the room.

Bristol Mums (n = 15 videos) and Bristol Dads (n = 17 videos): Videos for both populations were recorded by cameras worn on headbands by both the parent and the infant, capturing two separate videos for each interaction. First-person headcams have previously been shown to be reliable for capturing mother and infant behaviours (Lee et al., 2017). Separate headcam footage from the parent and infant cameras was synced by researchers for coding purposes.

Bristol Mums and Dads were given the fully-charged wearable headcams, and asked to use them at home during different types of interactions. For the mothers, the interactions analysed in this study were classed as: “mealtime” (infant engages in eating; n = 11) and “stacking task” (mother and infant engage with stacking toy; n = 4). For the fathers, the interactions were classed as: “mealtime” (n = 10), “stacking task” (n = 3), “reading” (parent reads book to infant; n = 1), “bedtime” (parent puts infant to bed; n = 1), and “mixed” (combination of interaction types; n = 2). Due to the videos being taken in the home, it was possible for siblings/other caregivers/pets to be present during the interactions.

Our analyses feature 15 videos from the Bristol Mums population. These videos were provided by 14 individual mother-infant dyads, as one dyad provided two videos to the analyses. Explicitly, this dyad provided both a “feeding” and a “stacking task” video, whereas the other thirteen dyads each provided a single video representing one of the following activities: “feeding”, “stacking task”, “bedtime”, “mealtime”, or “reading”. Our analyses also feature 17 videos from the Bristol Dads population, provided by 11 individual father-infant dyads. In this case, seven dyads provided one video, three dyads provided two videos, and one dyad provided four videos.

We ran the analyses twice: once using only one video from each dyad, and once using all the videos, including the second/third/fourth videos from some dyads. We sought to identify whether correlations were inflated by using multiple videos from the same subjects, and whether the corresponding trends differed drastically in any way. For this, we carried out chi-squared analyses on the two separate datasets: one dataset comprising the correlations in Tables 4 and 5 (including multiple videos per subject), and the equivalent data calculated using one video per subject only. The findings from this analysis are presented in the “Appendix” (“Analysis of Multiple Videos per Subject” section); the results show that the two datasets were very similar, and there was no evidence to indicate that including multiple videos inflated our correlations. So, in the interest of including all available observations and maximising the statistical power of the analyses, we made the decision to include the multiple videos per subject where these existed.

Behavioural Coding

All interactions were coded on an event-basis using Noldus Observer XT 14.0 (Noldus, 2021). The full micro-coding manual used for this research is available online (Costantini et al., 2021). The behavioural coding scheme, including overarching codes and individual subcodes, is summarised in Table 1. All populations used the same coding scheme, although the Cardiff population applied a reduced subset of behavioural categories (see Table 1).

Within each behavioural group (e.g., Caregiver Posture), behaviours (e.g., Lying down, Lie on side) are mutually exclusive and exhaustive; this means that at each point in time, exactly one behaviour from each behavioural group must be coded. It follows that at any given moment, Cardiff Mums must have 8 codes applied, Cardiff Infants must have 7, Bristol Mums and Dads must have 11, and Bristol Infants (1) and (2) must have 10.

The original Bristol videos varied in length (ranging from 5 to 20 min). This is because the parents were advised to record a “typical” interaction, so the length of the video was dependent on how long the infant naturally took to be fed, how long the parent took to put the infant to bed, etc. A 5-min portion of each interaction was chosen for coding, a choice that was made for a previous, unrelated study involving the Bristol videos. This choice is consistent with previous studies in thin slice sampling that have also used 5-min slices to represent entire sessions (e.g., Murphy, 2007; Murphy et al., 2019). At the beginning of the Bristol Mums and Bristol Dads videos, the parent would typically spend a few moments setting up the infant headcam and reading the study information sheet provided, before starting the interaction. In line with guidance from an information sheet, the parent would announce the “official” start of the interaction by stating “Today it is X date, and we are doing X activity”. It is from this vocalisation that the 5-min coded segment began. For the Cardiff videos, the recording began immediately once the participant ID had been shown to the camera. Each interaction lasted for 5 min, and the entire interaction was coded.

The three populations were coded by separate researchers, all of whom had been independently trained in using the coding scheme. Cardiff Mums were coded by one researcher (HT), Bristol Mums were coded by three researchers (IC, AC, RP), and Bristol Dads were coded by four researchers (MK, PC, JS, LM). For each of the three populations, 20% of videos were double coded for reliability purposes: 6 from the Cardiff Mums population, 3 from the Bristol Mums and 4 from the Bristol Dads. Two additional researchers were recruited for double coding (HD, RB). Inter-rater reliability was assessed using Cohen’s kappa, separated according to behavioural group. All reliability analyses were conducted using the Observer XT 14.0 (Noldus, 2021). These analyses are provided in Table 2.

Data Analysis

Each parent-infant interaction was split into 15 distinct thin slices, representing various cumulative minute combinations of the full, 5-min session. For clarity, we named these slices according to the minutes of the interaction that they include. For example, slice One represents the coded data from the first minute of the interaction, slice OneTwo represents the coded data from the first two minutes of the interaction, etc. This gives five 1-min slices (One, Two, Three, Four, Five), four 2-min slices (OneTwo, TwoThree, ThreeFour, FourFive), three 3-min slices (OneTwoThree, TwoThreeFour, ThreeFourFive), two 4-min slices (OneTwoThreeFour, TwoThreeFourFive) and the full 5-min slice (OneTwoThreeFourFive) (i.e. the fully coded session).
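The slicing scheme can be illustrated with a minimal Python sketch that enumerates every run of consecutive minutes within a 5-min session. The function name `contiguous_slices` and the representation of each slice as a (name, start second, end second) tuple are assumptions made for illustration, not the authors' implementation.

```python
# Illustrative sketch only: names and the tuple layout are hypothetical.
MINUTES = ["One", "Two", "Three", "Four", "Five"]

def contiguous_slices(minutes=MINUTES):
    """Return (name, start_second, end_second) for each run of consecutive minutes."""
    slices = []
    for length in range(1, len(minutes) + 1):            # slice length in minutes
        for start in range(len(minutes) - length + 1):   # index of the first minute
            name = "".join(minutes[start:start + length])
            slices.append((name, start * 60, (start + length) * 60))
    return slices

slices = contiguous_slices()
for name, start_s, end_s in slices:
    print(f"{name}: seconds {start_s} to {end_s}")
```

Enumerating runs of consecutive minutes gives 5 + 4 + 3 + 2 + 1 = 15 slices, matching the count described above.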

Our work applies Markov Chain analysis—specifically, transition matrices and stationary distributions—to the parent-infant interactions. We outline these methodologies below, and how we applied them to our work. However, a more thorough walkthrough with a representative example can be found in “Markov Chain Analyses Walkthrough with Example” section of the “Appendix”. The walkthrough details the mathematics involved in deriving transition matrices and stationary distributions, and also how to interpret them.

In brief, Markov chains are probabilistic models describing processes of events over time. The Markov chains in this work are discrete-time—as behaviours are analysed on a second-by-second basis—and finite state—as there are a finite number of behavioural states. An important feature of Markov chains is that in order to predict the state (or behaviour) at time n, we only need to know the state (behaviour) at time n − 1.

Transition matrices contain the probabilities of transitioning between states (Gagniuc, 2017), in our case, transitioning between behaviours within the same behavioural group. An example transition matrix is given in Fig. 1a, showing the probabilities of a mother transitioning between Visual Attention behaviours over a 60 s period. In our work, transitions were calculated on a second-by-second basis, meaning that 60 transitions between behaviours were recorded for a 60 s period. In order to derive the transition matrices, we recorded the behaviours that occurred at second 0, second 1, second 2, etc., then calculated the number of transitions between all behavioural states. The example matrix in Fig. 1a shows that 28 s were spent looking at the infant, 20 s were spent looking at the focus object, and 12 s were spent looking at a distraction. Additionally, the example shows that the probability of transitioning from “Look at infant” to “Look at infant” is 0.64, to “Look at focus object” is 0.25, and to “Look at distraction” is 0.11. It is important to note that rows must sum to 1.
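As a rough illustration of the counting and row-normalisation described above, the following Python sketch builds a transition matrix from a second-by-second sequence of states. The state labels, the toy sequence, and the choice to leave an all-zero row for a state that is never observed as a starting point are assumptions for illustration; they are not taken from the study data.

```python
from collections import Counter

def transition_matrix(sequence, states):
    """Row-normalised transition probabilities from a second-by-second state sequence."""
    # Count each (current state, next state) pair of adjacent seconds.
    counts = Counter(zip(sequence[:-1], sequence[1:]))
    matrix = []
    for src in states:
        row_total = sum(counts[(src, dst)] for dst in states)
        if row_total == 0:
            # Assumption: a state never observed as a source keeps an all-zero row.
            matrix.append([0.0] * len(states))
        else:
            matrix.append([counts[(src, dst)] / row_total for dst in states])
    return matrix

# Hypothetical labels and a short toy sequence (7 coded seconds, 6 transitions).
STATES = ["infant", "object", "distraction"]
toy = ["infant", "infant", "object", "infant", "distraction", "infant", "infant"]
T = transition_matrix(toy, STATES)
for label, row in zip(STATES, T):
    print(label, row)
```

In this toy sequence, four transitions start from "infant" (two stay, one moves to "object", one to "distraction"), so the first row is 0.5, 0.25, 0.25 and sums to 1.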

A stationary distribution is a vector representing the long-term probabilities of being within behavioural states (Gagniuc, 2017). The full derivation of a stationary distribution is detailed in “Markov Chain Analyses Walkthrough with Example” section of the “Appendix”, but in brief, it is derived from the equation s = sT, where T is the n × n transition matrix and s is the 1 × n stationary distribution row vector (Ross, 2014). An example stationary distribution is given in Fig. 1b, derived from the transition matrix in Fig. 1a. This may be interpreted as the long-run proportion of time that the mother spends within each visual attention state. Here, over 100 s, we would expect the mother to be in the state “Look at infant” for 52 s, “Look at focus object” for 32 s, and “Look at distraction” for 16 s. In this way, we can use the values within stationary distributions as measures for duration of behaviours (for this reason, we have not included specific behaviour duration measures within our analyses, in order to avoid repetition).
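One way to solve s = sT numerically is power iteration: repeatedly multiply a starting row vector by T until it stops changing. The sketch below assumes the chain is irreducible and aperiodic so that the iteration converges. Only the first row of the example matrix is taken from the text (the “Look at infant” row of Fig. 1a); the other two rows are made-up values, so the result is not expected to reproduce Fig. 1b.

```python
def stationary_distribution(T, tol=1e-12, max_iter=10_000):
    """Approximate the row vector s satisfying s = sT by power iteration."""
    n = len(T)
    s = [1.0 / n] * n  # start from a uniform distribution
    for _ in range(max_iter):
        # One step of s <- sT (row vector times matrix).
        nxt = [sum(s[i] * T[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, s)) < tol:
            return nxt
        s = nxt
    raise RuntimeError("power iteration did not converge")

# First row as quoted in the text; second and third rows are hypothetical.
T_example = [
    [0.64, 0.25, 0.11],
    [0.30, 0.65, 0.05],
    [0.25, 0.10, 0.65],
]
s = stationary_distribution(T_example)
print([round(p, 3) for p in s])
```

The entries of the returned vector sum to 1 and can be read as long-run proportions of time in each state. An eigenvector solver would be an alternative to power iteration.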

As the length of thin slice increases, the transition matrices become populated with more data, and the stationary distributions become more precise. Therefore, we expect that transition matrices and stationary distributions generated from the longest thin slices will be most similar to those generated by the full interactions.

The earliest application of Markov processes to the mother-infant dyad was performed by Freedle and Lewis (1971), who illustrated how these methods could be used to identify sequences of infant vocalisation behaviours. This work outlined the value of Markov modelling for interaction studies; specifically, how current behavioural states within transition matrices influence the conditional probability of the subsequent behavioural states, and how the diagonal probabilities within the matrices can be used to estimate the likelihood of the subject remaining within a given state (a large probability on the diagonal indicates persistence of one behavioural state over time). Further applications of Markov Chain analysis to mother-infant dyads include: investigating differences in vocal affect in depressed and non-depressed mothers (Friedman et al., 2010), differentiating secure vs. avoidant attachment (Koulomzin et al., 2002), quantifying vocal reciprocity (Anderson et al., 1977), and understanding the process of soothing distressed infants (Stifter & Rovine, 2015). To our knowledge, no existing studies have compared transition matrices and stationary distributions over thin slices and full session interactions, in any context.

These three measures of behaviour—frequencies, transition matrices and stationary distributions—were calculated at each of the 15 slices, for both subjects within each population. We used Pearson correlations to measure the strength of the relationships between behavioural frequencies over thin slices and fully coded interactions. We also calculated the absolute differences between: (1) the rows within the transition matrices, and (2) the full stationary distribution vectors. These were calculated over each thin slice and full 5-min session. Finally, chi-squared analyses were employed to evaluate whether these measures, calculated over 1-, 2-, 3- and 4-min slices, were comparable to the full session counterparts. All calculations were conducted using Python 3.8.
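The two simplest comparisons above can be sketched in plain Python. The Pearson function follows the standard formula. The text does not specify exactly how the absolute differences were aggregated, so summing element-wise absolute differences between two probability vectors is an assumption here. The chi-squared step is omitted because its exact set-up is not described in this section. All numbers are made up for illustration.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def absolute_difference(p, q):
    """Assumed aggregation: sum of element-wise absolute differences."""
    return sum(abs(a - b) for a, b in zip(p, q))

# Hypothetical per-dyad frequencies of one behaviour: a 1-min slice vs the full session.
slice_counts = [3, 5, 2, 8, 6]
full_counts = [14, 22, 9, 35, 30]
r = pearson_r(slice_counts, full_counts)

# Hypothetical stationary distributions from a thin slice and from the full session.
diff = absolute_difference([0.50, 0.30, 0.20], [0.52, 0.32, 0.16])
print(round(r, 3), round(diff, 3))
```

The same `absolute_difference` helper could be applied row by row to two transition matrices, again under the assumption stated above.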

Following each of the three analyses, we chose some example “level of agreement” between the thin slice and full session measures, which would indicate that the behavioural measure over the thin slice was adequately representative of that same behavioural measure over the full session (e.g., a “very strong” Pearson correlation, or p < 0.05 for a chi-squared test between two transition matrices). We used this information to provide guidance at the end of each section, outlining how to interpret an appropriate thin slice for each specific behaviour.

Results

Frequencies of Parent and Infant Behaviours

A total of 19 309 behaviours were coded in the Cardiff Mums population, 7 296 in Bristol Mums, and 8 964 in Bristol Dads; parent behaviours accounted for 57.3%, 53.9% and 54.9% of data, respectively. The mean frequencies of all behaviours over each thin slice are provided within Tables 7, 8, 9, 10, 11 and 12 of the “Appendix” (a separate table is given for each population).

These data show that frequencies of behaviours were almost always higher in the Cardiff population than in both Bristol Mums and Bristol Dads. To illustrate this, note that for the 1-min slice One, the mean frequency for Cardiff Mum Proximity (2.90) was higher than the equivalent values for both Bristol Mums (1.87) and Bristol Dads (2.24). Similarly, the mean frequency for Cardiff Infants Visual Attention for the 2-min slice OneTwo (26.16) was higher than the equivalent values for both Bristol Infants (1) and (2) (15.33 and 16.24, respectively). These differences could be attributed to the difference in setting between the Cardiff and Bristol populations (i.e., in-lab vs. at home).

We can also compare frequencies of parent and infant behaviours. For example, across all populations and slices, parent vocalisation occurred more frequently than infant vocalisation (unsurprising, as the infants in this work were not of speaking age). Other behaviours—such as Touch and Physical Play—also often occurred more frequently in parents than infants. Conversely, Head Orientation and Body Orientation were both more frequent in infants than in parents for all three populations. Visual Attention, Facial Expressions and Hand Movements occurred with similar frequencies throughout all parents and infants.

A key finding from these analyses is that many behaviours were most frequent in the earliest slices of the interaction, and least frequent in the latest slices. These patterns were most prevalent in the parents’ behaviours, much more so than in the infants’ (although similar patterns did emerge to a lesser degree in Bristol Infants (1) and (2)). There are many examples to illustrate how slice Five shows lower frequencies than other 1-min slices: Cardiff Mums Touch R (One 14.68, Two 14.77, Three 13.71, Four 14.65 and Five 9.94), Bristol Mums Vocalisation (One 17.80, Two 18.00, Three 19.60, Four 15.87, Five 12.73), and Bristol Dads Head Orientation (One 7.59, Two 6.29, Three 5.53, Four 5.06 and Five 4.65). This pattern also extends into the other, longer slices that occur latest in the interaction, for example: Cardiff Mums Visual Attention (OneTwo 31.90, TwoThree 29.48, ThreeFour 26.58 and FourFive 24.68), Bristol Mums Facial Expressions (OneTwoThree 27.27, TwoThreeFour 28.40 and ThreeFourFive 26.67) and Bristol Dads Posture (OneTwoThreeFour 5.76, TwoThreeFourFive 4.88).

Likewise, many behaviours were most frequent in the earliest slices, for example: Bristol Dads Body Orientation (One 2.82, Two 2.65, Three 1.53, Four 1.47 and Five 1.29), Bristol Mums Touch L (OneTwo 8.93, TwoThree 8.07, ThreeFour 8.27, FourFive 7.07), and Cardiff Mums Proximity (OneTwoThree 7.45, TwoThreeFour 7.06, ThreeFourFive 7.16). This pattern is strongest in the Cardiff Mums population: slice One has the highest frequency of behaviours compared to all other 1-min slices for all behaviours except Touch R, and slices OneTwo, OneTwoThree, and OneTwoThreeFour have the highest frequency of all behaviours compared to other 2-, 3- and 4-min slices, respectively. These findings should be interpreted with caution in many cases, however, as the standard deviations are often large and overlap across slices. We provide our interpretations of these findings within the discussion.

Pearson correlations: Using the frequency data, we calculated Pearson correlations to evaluate the relationship between the frequency of behaviours extracted from the 1-, 2-, 3- and 4-min slices and the fully coded interactions (shown in Tables 3, 4, 5). Schober et al. (2018) suggest that correlations from 0.10 to 0.39 may be interpreted as “weak”, those from 0.40 to 0.69 are “moderate”, those from 0.70 to 0.89 are “strong”, and those higher than 0.90 may be classed as “very strong”.
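The interpretation bands quoted above can be written as a small lookup, sketched here in Python. The bands are quoted to two decimal places, so treating them as half-open intervals (for example, 0.895 counts as “strong”) is an assumption, as are treating exactly 0.90 as “very strong” and the label “negligible” for values below 0.10.

```python
def correlation_strength(r):
    """Map a correlation coefficient to the descriptive bands quoted in the text."""
    magnitude = abs(r)
    if magnitude >= 0.90:
        return "very strong"
    if magnitude >= 0.70:
        return "strong"
    if magnitude >= 0.40:
        return "moderate"
    if magnitude >= 0.10:
        return "weak"
    # Assumption: the text gives no label below 0.10; "negligible" is used here.
    return "negligible"

# Correlation values that appear elsewhere in this section.
for value in (0.38, 0.66, 0.81, 0.97):
    print(value, correlation_strength(value))
```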

Most correlations were found to be either “strong” or “very strong”, with three examples demonstrating “very strong” correlations between all thin slices and full sessions (i.e., Bristol Mums Body Orientation, Bristol Dads Hand Movements and Bristol Infants (2) Physical Play). There were several instances of “weak” correlation between a 1- or 2-min slice and the full session (e.g., Cardiff Mums Physical Play, slice Five; Cardiff Mums Posture, slice Four; Bristol Dads Posture, slices Three, Four and ThreeFour), which may in part be explained by the frequencies of these behaviours being very low (see Tables 7 and 11 of the “Appendix”). There were also many examples of “moderate” correlations between 1-, 2- and 3-min slices and full sessions (e.g., Cardiff Infants Touch R, slice One; Bristol Mums Visual Attention, slices One, Two and OneTwo; Cardiff Mums Posture, slice ThreeFourFive).

Within Tables 3, 4 and 5 below, we have also selected an example “criterion” to indicate a very strong correlation between frequencies of a given thin slice and the full interaction; specifically, where r > 0.9, as shown in bold. We have chosen to highlight correlations of this magnitude as the goal of this analysis is to understand which slice pairings yield the greatest agreement, and a strength of association of this magnitude indicates that slices are closely related. However, this criterion is provided as an example baseline for our own interpretations, and we recognise that researchers with other aims may find it more appropriate to select their own criterion.

These data lend support to our hypothesis that longer slices would demonstrate the highest correlation with fully coded sessions. The lowest correlations were generally found between the full sessions and slices of length 1-min (mean = 0.81, range = 0.00–0.99), followed by those of length 2-min (mean = 0.90, range = 0.38–0.99), 3-min (mean = 0.94, range = 0.66–0.99) and 4-min (mean = 0.97, range = 0.82–0.99). Specifically, as the slice length increased, behavioural frequencies showed stronger correlations with the fully coded interactions. This was shown to be true across all behaviours, populations, and subjects. As an example, for Cardiff Infant Body Orientation, the correlations for 1-min slices (r(31) = [0.57, 0.61, 0.74, 0.72, 0.81]) were generally lower than for 2-min slices (r(31) = [0.75, 0.82, 0.85, 0.93]); these were generally lower than the 3-min slice correlations (r(31) = [0.92, 0.89, 0.94]); and these were lower than the 4-min slice correlations (r(31) = [0.96, 0.97]).
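To make the slice naming concrete, the following sketch enumerates every run of consecutive minutes in a 5-min session (15 slices in total, including the full session) and pairs the names with the Cardiff Infant Body Orientation correlations quoted above. The function name is our own and the code is illustrative only.

```python
MINUTE_NAMES = ["One", "Two", "Three", "Four", "Five"]

def contiguous_slices(names=MINUTE_NAMES):
    """Return {length in minutes: [slice names]} for all runs of consecutive minutes."""
    slices = {}
    for length in range(1, len(names) + 1):
        slices[length] = [
            "".join(names[start:start + length])
            for start in range(len(names) - length + 1)
        ]
    return slices

slices = contiguous_slices()

# Correlations quoted in the text for Cardiff Infant Body Orientation.
body_orientation_r = {
    1: [0.57, 0.61, 0.74, 0.72, 0.81],
    2: [0.75, 0.82, 0.85, 0.93],
    3: [0.92, 0.89, 0.94],
    4: [0.96, 0.97],
}

for length, values in body_orientation_r.items():
    labelled = dict(zip(slices[length], values))
    mean_r = sum(values) / len(values)
    print(f"{length}-min: mean r = {mean_r:.2f}  {labelled}")
```

The mean correlation rises with slice length for this behaviour, even though individual slices (e.g., the 2-min slice FourFive) can exceed a single longer slice.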

Correlations varied in strength depending on behavioural group. For example, across all slice lengths and populations, Caregiver Touch R correlations were greater than 0.69, Caregiver Vocalisation correlations were greater than 0.75, and Caregiver Head Orientation correlations were greater than 0.80. However, Posture, for example, showed generally weaker correlations both over shorter slices (e.g., Bristol Dads slice Three, r = 0.38), and longer slices (e.g., Cardiff Mums slice ThreeFourFive, r = 0.66).

Additionally, by providing correlations for individual slices, we can begin to see in which portion of the interaction the behaviours best correlate with the full sessions. For the Cardiff Mums population, the original interactions and recordings were all of duration 5 min, so we can fully interpret which portion of the session (the beginning, middle or end) was most (and least) representative of the full session. We cannot speculate in this way for the Bristol Mums and Dads populations, given that many of the original videos were longer than 5 min, and these interactions were cut short so as not to include the original “ending” of the interaction. For these two populations, we can however compare the slices at the beginning of the interaction with those that occur later.

Looking first at 1-min slices, our findings reveal that the middlemost slices best correlated with the full session. This is evident from the bold text in Tables 3, 4 and 5, as most bold values are within columns Two, Three and Four for 1-min slices (particularly for the Bristol Mums and Dads populations). A good illustration of this pattern is Bristol Dads Visual Attention (r(17) = [0.84, 0.90, 0.93, 0.94, 0.85]). Here, slices Two, Three and Four have very strong correlations, whereas slices One and Five have only strong correlations. Another example is Cardiff Infants Touch R (r(31) = [0.62, 0.76, 0.85, 0.82, 0.73]). In this instance, the highest correlations are in slices Three and Four, followed by slice Two, then slice Five, then slice One.

For 2-min slices, it is less clear whether any specific slice correlated better than another. In some instances, slice OneTwo had the highest correlation (e.g., Bristol Dads Touch R), in others, slice TwoThree (e.g., Bristol Mums Vocalisation), slice ThreeFour (e.g., Bristol Infants (2) Hand Movements), or FourFive (e.g., Cardiff Infants Body Orientation). We observed variation by both behaviour and population. For 3-min slices, we observed that highest correlations most commonly arose for the earliest slice, OneTwoThree, whilst the latest slice, ThreeFourFive, contained the lowest correlations. These trends were particularly evident within Cardiff Mums, Cardiff Infants and Bristol Mums. A similar result was found for 4-min slices, where slice OneTwoThreeFour generally outperformed slice TwoThreeFourFive (although values are very similar, TwoThreeFourFive was the only 4-min slice to contain r < 0.9).

It is worth mentioning that these values may be inflated, given that the thin slices are themselves contained within the full session (and therefore represent a higher portion of the full session as the slice lengths increase). This could be addressed by evaluating correlations between non-overlapping slices. We have provided a brief example of such an analysis within the “Appendix” (see “Additional Non-overlapping Slices Analyses” section). However, there are a very large number of potential thin slice/full session combinations, and so on account of paper length constraints we have not provided a comprehensive analysis here. Future work could therefore aim to address this limitation, by evaluating patterns between various combinations of non-overlapping slices.
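The part-whole overlap described above can be illustrated with a small sketch. This is not the analysis reported in the “Appendix”; it assumes one simple way of removing the overlap, namely correlating a slice with the remainder of the session (full session minus that slice). All counts are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical per-minute counts of one behaviour; one row per dyad.
per_minute = [
    [2, 3, 1, 2, 2],
    [5, 4, 6, 5, 3],
    [0, 1, 0, 2, 1],
    [3, 3, 4, 2, 4],
]

slice_one = [row[0] for row in per_minute]            # 1-min slice One
full = [sum(row) for row in per_minute]               # full 5-min session
rest = [f - s for f, s in zip(full, slice_one)]       # minutes Two to Five only

r_overlap = pearson_r(slice_one, full)   # slice is contained in the comparison
r_rest = pearson_r(slice_one, rest)      # slice is excluded from the comparison
print(f"slice vs full: {r_overlap:.3f}, slice vs remainder: {r_rest:.3f}")
```

For these hypothetical counts the overlapping correlation is the larger of the two, which is the direction of inflation the paragraph above cautions about.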

Selecting Thin Slices Based on Frequencies: Here we exemplify how researchers could use our analysis to determine how long to code for a given behaviour. In brief, researchers would choose a behaviour of interest, use Tables 3, 4 and 5 to evaluate correlations between thin slices and full-sessions for that behaviour, and use the example criterion provided to determine the appropriate thin slice length to code.

The bold values in Tables 3, 4 and 5 above provide an example “criterion” for a sufficient correlation between frequencies over thin slices and full interactions, i.e., r > 0.9 for all slices of the specified length. This criterion suggests that it is only necessary to code a specific behaviour up until the first fully-bold region within the corresponding table row, where all slices of a given length are suitably correlated with the full-session. For example, data for Cardiff Mums in Table 3 suggests the following coding durations: 2 min for Vocalisation, 3 min for Visual Attention, Touch R and Touch L, 4 min for Proximity, Body Orientation and Physical Play, and the full 5 min for Posture. Similarly, with this criterion, data for Cardiff Infants in Table 3 suggests coding: 3 min for Visual Attention, Posture, Touch R, Touch L and Vocalisation, and 4 min for Body Orientation and Physical Play. However, it is important to note that the threshold in this criterion is arbitrary and has been provided only as guidance for the purposes of interpreting these data. What constitutes an acceptable correlation is subjective, and researchers may wish to adjust the criterion according to their own research aims.
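The example criterion can be expressed as a short procedure: find the shortest slice length at which every slice of that length exceeds the threshold, and fall back to the full session otherwise. The sketch below is illustrative; the function name and the `threshold` and `full_length` parameters are our own, and the input values are the Cardiff Infant Body Orientation correlations quoted earlier.

```python
def shortest_sufficient_length(corr_by_length, threshold=0.9, full_length=5):
    """Shortest slice length (min) for which all slices have r > threshold.

    corr_by_length maps slice length in minutes to the list of correlations
    for every slice of that length. Returns full_length if no length qualifies.
    """
    for length in sorted(corr_by_length):
        if all(r > threshold for r in corr_by_length[length]):
            return length
    return full_length

body_orientation_r = {
    1: [0.57, 0.61, 0.74, 0.72, 0.81],
    2: [0.75, 0.82, 0.85, 0.93],
    3: [0.92, 0.89, 0.94],
    4: [0.96, 0.97],
}

print(shortest_sufficient_length(body_orientation_r))  # 4
```

Raising or lowering `threshold` is how a researcher would adapt the criterion to their own aims; for instance, a threshold of 0.7 would be met at 2 min for these values.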

Given that we have identified correlations for individual slices, it would also be reasonable in some cases to select a specific slice for coding based on the correlations above. However, this would only be viable if the video procedures within the research followed the same process as ours (i.e., the 5-min session begins when the participant/researcher announces the beginning of an interaction, and any remaining video past these 5 min is not included in the full session). Otherwise, if the video procedures differed from our methodology (for example, if a 5-min video segment was chosen randomly out of a longer video), it would not make sense to choose to code a specific slice based on the correlations in Tables 3, 4 and 5, because the beginning, middle and end portions of the video would not be representative of our beginning, middle and end portions. In this case, we would recommend using a criterion similar to our example above.

Transition Matrices

For all behaviours and populations, transition matrices were calculated over the 1-, 2-, 3-, 4- and 5-min slices (where the 5-min slice OneTwoThreeFourFive is equivalent to the full session). The absolute differences between thin slice and full session transition matrices were calculated and averaged across all mothers/fathers/infants within the population. Then we plotted the absolute agreement between transition matrices at 1-, 2-, 3- and 4-min slices and those from the 5-min full session, separated by subject. Due to paper length constraints, this plot has been included in the “Transition Matrices” section of the “Appendix” (Fig. 2). From Fig. 2 we can interpret which coded slices generate transition matrices that are most similar to the full session transition matrices (i.e., the slices with a “left-most” distribution, with a median absolute difference close to 0). Note that absolute differences may only be between 0 and 1, as transitions are represented by probabilities.
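A minimal sketch of this comparison is given below. It assumes that a coded interaction is available as a sequence of behavioural states sampled at regular intervals, that rows with no observed transitions are left as zeros, and that the absolute difference is averaged over all cells of the matrix; these are illustrative assumptions rather than a description of our exact pipeline, and the sequence is hypothetical.

```python
def transition_counts(sequence, states):
    """Count transitions between consecutive states in a coded sequence."""
    index = {state: i for i, state in enumerate(states)}
    counts = [[0] * len(states) for _ in states]
    for current, following in zip(sequence, sequence[1:]):
        counts[index[current]][index[following]] += 1
    return counts

def transition_matrix(sequence, states):
    """Row-normalise transition counts into transition probabilities."""
    matrix = []
    for row in transition_counts(sequence, states):
        total = sum(row)
        # Assumption: a state never left within the slice keeps an all-zero row.
        matrix.append([count / total if total else 0.0 for count in row])
    return matrix

def mean_absolute_difference(a, b):
    """Mean of |a_ij - b_ij| over all cells; lies between 0 and 1."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

states = ["infant", "distraction"]
full = ["infant", "infant", "distraction", "infant", "infant",
        "infant", "distraction", "distraction", "infant", "infant"]
thin = full[:4]  # hypothetical thin slice: the first four samples

full_matrix = transition_matrix(full, states)
thin_matrix = transition_matrix(thin, states)
print(mean_absolute_difference(thin_matrix, full_matrix))
```

In this form, a value near 0 means that the thin slice reproduces the full-session transition probabilities closely.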

Across all populations, Fig. 2 (of the “Appendix”) highlights a negative relationship between slice length and the absolute difference between transition matrices over thin slices and full sessions: as slice length increases, the absolute difference decreases. To illustrate this, consider Cardiff Mums: the absolute difference between transition matrices over the fully coded interaction and the 1-min slice One is 0.131, the 2-min slice OneTwo is 0.071, the 3-min slice OneTwoThree is 0.037, and the 4-min slice OneTwoThreeFour is 0.018. Consistent with our hypothesis, these data suggest that transition matrices extracted from thin slices of coded data become more similar to those from fully coded interactions as slice length increases.

We can use these data to identify for which specific slices of a given length transition matrices were most similar to the full session equivalents. This gives us an idea of which slices are most representative of full sessions in terms of transitions between behaviours, and can help us to identify which slices to target (or not to target) for coding. For example, for the Cardiff Mums slices of length 1-min (slices One, Two, Three, Four and Five), we see that the smallest absolute difference between any 1-min slice and the full session (or the “left-most” median for 1-min slices) occurs in slice One. This means that the transitions between behaviours within the first minute (One) were most similar to behavioural transitions in the fully coded session, compared to the middlemost (Two, Three, Four) and final (Five) 1-min slices. Similarly for Cardiff Mums, we see that the most representative 2-min slice is slice OneTwo, the most representative 3-min slice is slice OneTwoThree, and the most representative 4-min slice was slice OneTwoThreeFour (these are the slices with the smallest median absolute difference). As such, this indicates that the earliest slices, regardless of length, were more representative of full-session transitions compared to the middlemost and later slices.

This pattern persists across many subplots within Fig. 2: slices taken from early in the interaction show lower absolute differences in comparison to later slices. One specific example is the 3-min slices for Bristol Mums: the mean absolute difference between the full session and slice OneTwoThree is 0.046, for slice TwoThreeFour is 0.055, and for slice ThreeFourFive is 0.096. The slice including the first minute (OneTwoThree) showed the lowest absolute difference. Conversely, we also observe that behavioural transitions within the latest slices (e.g., Five, FourFive, ThreeFourFive) are least representative of those within the full interaction. This pattern is almost consistent across samples (except for Cardiff Infants), but is particularly strong for Bristol Infants (1) and (2).

Chi-squared Analyses: Chi-squared analyses were performed to test the hypothesis that, as slice length increased, transition matrices would become more similar to those obtained by full sessions, for all behaviour categories. For these tests, transition frequencies were used as opposed to transition probabilities (similar to Friedman et al., 2010), and the degrees of freedom (df) were equal to the number of unique behaviours within each behavioural group.

The complete findings from these analyses are very large, so are provided in detail in the “Appendix” (Tables 13, 14, 15, 16, 17, 18). Similarly to within our frequency analyses, values within Tables 13, 14, 15, 16, 17 and 18 have also been made bold appropriately to highlight an example “criterion”, indicating “sufficient” similarity between transitions over a given thin slice and full session; specifically, where p < 0.05 for all slices of a given length. This criterion was chosen as the low p value indicates that the transitions within the given slice pairings are closely related.

In brief, these data indicate that transition matrices drawn from slices of the shortest length (1-min) are the least similar to the full session transition matrices, whilst transition matrices drawn from the longest slices (4-min) are the most similar. This is consistent with our hypothesis and is shown to be true across all populations and subjects. As an example, consider Bristol Mums transitions from Out of Reach (Table 15). Comparing the full, 5-min session and the 1-min slice One, we have χ2 (4, N = 15) = 33.64, p < 0.001; for the full session and the 2-min slice OneTwo, χ2 (4, N = 15) = 30.38, p < 0.001; for the full session and the 3-min slice OneTwoThree, χ2 (4, N = 15) = 19.93, p < 0.001; and for the full session and the 4-min slice OneTwoThreeFour, χ2 (4, N = 15) = 6.85, p > 0.05. In this example, we see that the χ2 value decreases as slice length increases, indicating the increased similarity between thin slice and full session transition matrices.
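To show how a χ2 statistic of this kind shrinks as a slice comes to resemble the full session, the sketch below computes a Pearson goodness-of-fit statistic for the transitions out of a single state. It assumes that expected frequencies are obtained by scaling full-session transition proportions to the slice total, which is one plausible construction and not necessarily the one used for Tables 13 to 18. All counts are hypothetical.

```python
def chi_squared_statistic(observed, expected_probs):
    """Pearson chi-squared statistic: sum of (O - E)^2 / E over categories.

    observed: transition frequencies from one state within a thin slice.
    expected_probs: full-session transition proportions from the same state.
    Categories with zero expected frequency are skipped (an assumption).
    """
    total = sum(observed)
    statistic = 0.0
    for obs, prob in zip(observed, expected_probs):
        expected = total * prob
        if expected > 0:
            statistic += (obs - expected) ** 2 / expected
    return statistic

# Hypothetical full-session transition frequencies from one state.
full_counts = [40, 10, 10]
full_probs = [count / sum(full_counts) for count in full_counts]

short_slice = [6, 3, 3]     # hypothetical 1-min slice
longer_slice = [26, 7, 7]   # hypothetical 4-min slice

print(chi_squared_statistic(short_slice, full_probs))
print(chi_squared_statistic(longer_slice, full_probs))
```

A p value would then follow from the chi-squared distribution with the chosen degrees of freedom, for example via the survival function `scipy.stats.chi2.sf` if SciPy is available.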

Additionally, comparing chi-squared analyses for individual slices allows us to again interpret which specific slices (or which portions of an interaction) contain transitions which best represent full interaction transitions. In terms of how we interpret this from the given tables (Tables 13, 14, 15, 16, 17, 18), we look for the lowest χ2 value for a behaviour at a given slice length (this signifies the slice containing transitions most similar to full-session counterparts).

To illustrate this, consider Bristol Infants (1) Head Orientation behaviours over 1-min slices (rows 55–11, Table 16). For “vis-à-vis—infant and caregiver”, for example, we have that: for One, χ2 (4, N = 15) = 75.93, for Two, χ2 (4, N = 15) = 61.03, for Three, χ2 (4, N = 15) = 53.74, for Four, χ2 (4, N = 15) = 65.18 and for Five, χ2 (4, N = 15) = 69.36. In this case, the lowest χ2 value for 1-min slices is for slice Three. This indicates that transitions from vis-à-vis in slice Three are most similar to transitions from vis-à-vis across the full interaction, compared to the equivalent measure in slices One, Two, Four and Five. We can find similar results for the other head orientation behaviours: for “Slight (30°–90°) aversion right”, slice Four is most similar, for “Slight (30°–90°) aversion left”, slice Two is most similar, etc. Looking at all of the Head Orientation behaviours together, we see that slices Two and Five most frequently have the lowest χ2 value. So if we wanted to look at transitions between Head Orientation behaviours and were only able to code 1-min of the interaction, then either of these would be the optimum choice. By the same argument (selecting the slice with lowest χ2 values), if we were to choose to code 2-min of Head Orientation then we should choose either slice OneTwo or ThreeFour, if coding 3-min we should choose either slice TwoThreeFour or ThreeFourFive, or if coding 4-min we should choose slice TwoThreeFourFive.
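The selection rule used in this illustration (choose the slice with the lowest χ2, then count how often each slice "wins" across the behaviours in a group) can be sketched as follows. The function names are our own; the values are those quoted above for vis-à-vis over 1-min slices.

```python
from collections import Counter

def best_slice(chi2_by_slice):
    """Name of the slice with the lowest chi-squared value."""
    return min(chi2_by_slice, key=chi2_by_slice.get)

def tally_best_slices(chi2_by_behaviour):
    """Count how often each slice has the lowest value across behaviours."""
    return Counter(best_slice(values) for values in chi2_by_behaviour.values())

# Values quoted in the text for "vis-à-vis" over the five 1-min slices.
vis_a_vis = {"One": 75.93, "Two": 61.03, "Three": 53.74, "Four": 65.18, "Five": 69.36}

print(best_slice(vis_a_vis))  # Three
```

Passing a mapping of every behaviour in a group to `tally_best_slices` gives the counts on which a group-level choice of slice would be based.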

Similar interpretations can be drawn across all behaviours and slice lengths. We can observe that the specific slices that were most representative of full session transitions varied considerably depending on behaviour, and that even within the same behaviour, there was variation across population (we consider the reasons for this in more detail in the Discussion).

Selecting Thin Slices Based on Transition Matrices: For ease of translating these data into an applicable coding framework, appropriate values within Tables 13, 14, 15, 16, 17 and 18 have been made bold to exemplify a potential “criterion” for sufficient similarity between transitions over thin slices and full sessions, i.e. where p < 0.05 for all slices of a given length. Using this criterion, fully-boldened regions within these tables indicate the suitable coding timeframe for each behavioural group. For example, if we want to understand transitions between behaviours within a category, Table 15 suggests that coding 4 min is suitable for all caregiver behavioural groups except for Posture and Hand Movements, where the full 5-min of coding (or longer) is required. Similarly for Bristol Infants (1), Table 16 suggests coding 4 min for Body Orientation, 4 min for Visual Attention, and the full 5 min (or longer) for Head Orientation. However, it is important to note that whilst this criterion has been provided as guidance, an acceptable p value is subjective, and researchers may wish to adjust this criterion according to their own research aims.

Given that we have carried out chi-squared analyses for individual slices, it would also be reasonable in some cases to select a specific slice for coding based on the χ2 (and p) values provided. This would be done by choosing the slice containing the lowest χ2 values, compared to other slices of the same length. However, as before, this would only be viable if the 5-min coding segment was selected in the same way as within our work. Else, choosing a specific slice based on the lowest χ2 values would not make sense, as the beginning, middle and end portions of the video would not be representative of our beginning, middle and end portions. In this case, we would recommend using a criterion similar to our example above (p < 0.05 for all slices of a given length).

Stationary Distributions

Using the transition matrices, corresponding stationary distributions were calculated for all slices over each population and behaviour. The absolute differences between thin slice and full session stationary distributions were calculated, and averaged across all mothers/fathers/infants within the population. Then we plotted the absolute agreement between stationary distributions at 1-, 2-, 3- and 4-min slices and those from the 5-min full session, separated by subject. Due to size constraints, this plot has been included in the “Stationary Distributions” section of the “Appendix” (Fig. 3). From Fig. 3, we can interpret which coded slices obtain stationary distributions that are most similar to the full session stationary distributions (i.e., the slices with a “left-most” median absolute difference, close to 0). Note that absolute differences range between 0 and 1, as stationary distribution values are probabilities.
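For readers unfamiliar with stationary distributions, the sketch below derives one from a transition matrix by repeatedly applying the matrix to a starting distribution (power iteration). This is one standard approach and is offered as an assumption-laden illustration: it presumes each row sums to 1 and that the chain settles to a single long-run distribution. The matrix values are hypothetical.

```python
def stationary_distribution(matrix, max_iterations=1000, tolerance=1e-12):
    """Approximate the stationary distribution of a row-stochastic matrix.

    Starts from a uniform distribution and applies the transition matrix
    until successive distributions differ by less than the tolerance.
    """
    n = len(matrix)
    dist = [1.0 / n] * n
    for _ in range(max_iterations):
        updated = [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(updated, dist)) < tolerance:
            return updated
        dist = updated
    return dist

def mean_absolute_difference(p, q):
    """Mean of |p_i - q_i| across behavioural states."""
    return sum(abs(a - b) for a, b in zip(p, q)) / len(p)

# Hypothetical two-state transition matrices (rows sum to 1).
full_session = [[0.9, 0.1], [0.5, 0.5]]
thin_slice = [[0.8, 0.2], [0.5, 0.5]]

pi_full = stationary_distribution(full_session)
pi_thin = stationary_distribution(thin_slice)
print(pi_full, pi_thin, mean_absolute_difference(pi_thin, pi_full))
```

For the hypothetical full-session matrix, the long-run proportion of time in the first state is 5/6, which can be checked by hand from the balance condition between the two states.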

Across all populations, this plot highlights a negative relationship between slice length and the absolute difference between stationary distributions at thin slices and full sessions: as slice length increases, the absolute difference decreases. As an example, consider Cardiff Infants: the absolute difference between stationary distributions over the fully coded interaction (OneTwoThreeFourFive) and the 1-min slice One is 0.044, the 2-min slice OneTwo is 0.033, the 3-min slice OneTwoThree is 0.032, and the 4-min slice OneTwoThreeFour is 0.016. Consistent with our hypothesis, these data suggest that stationary distributions extracted from thin slices become more similar to those from fully coded sessions as slice length increases.

Figure 3 (of the “Appendix”) emphasizes that absolute differences in the Cardiff Mums population showed higher variation compared to the Bristol populations. This is likely due to differences in frequencies; particularly, Tables 7, 8 and 9 above showed how behavioural frequencies were highest in Cardiff Mums and Infants compared to the other subjects. These higher frequencies may have caused higher absolute differences between thin slices and full sessions.

We can also use these data to compare results between slices of the same length, in order to identify which slices to target (or not to target) for coding. For each slice length, we can identify within which specific slice the stationary distributions were most similar to the full session equivalents. For example, for the Bristol Mums slices of length 1-min (slices One, Two, Three, Four and Five), we can see that the lowest absolute difference between any 1-min slice and the full session (or the “left-most” median for 1-min slices) occurs in slice Four. This means that the proportion of time spent within each behavioural state during the fourth minute was most similar to that in the fully coded session, compared to the other 1-min slices. As slice length increases, it becomes harder to distinguish which slice shows the lowest absolute difference, as all differences are very small and similar in value. This is true for many of the populations for slices longer than 2 min, and suggests that as slice length increases, it is less important which specific slice is chosen for coding.

Chi-Squared Analyses: Chi-squared analyses were performed to test the hypothesis that, as slice length increased, stationary distributions would become more similar to those obtained by full sessions, for all behaviour categories. In these tests, the degrees of freedom (df) were equal to the number of unique behaviours within each behavioural group.

The complete findings from these analyses are very large, so are provided in the “Appendix” (Tables 19, 20, 21, 22, 23, 24). Similarly to above, appropriate values within Tables 19, 20, 21, 22, 23 and 24 have also been made bold to highlight an example “criterion”, indicating “sufficient” similarity between stationary distributions over the thin slice and full session; specifically, where p < 0.05 for all slices of a given length. This criterion was chosen as the low p value indicates that the stationary distributions within the given slice pairings are closely related.

In sum, these data demonstrate that stationary distributions from slices of the shortest length (e.g., One, Three) were least similar compared to those from the fully coded interactions, whilst stationary distributions from the longest slices (e.g., OneTwoThreeFour) were most similar. Consistent with our hypothesis, this means that the probabilities of remaining within behavioural states as predicted by thin slices become more similar to full session probabilities as slice length increases. As an example, consider the Bristol Infants (2) stationary distribution for Touch left Hand (Table 24). Comparing distributions for the full 5-min session and the 1-min slice One, we have χ2 (3, N = 15) = 36.27, p < 0.001; for the full session and the 2-min slice OneTwo, χ2 (3, N = 15) = 9.19, p < 0.05; for the full session and the 3-min slice OneTwoThree, χ2 (3, N = 15) = 1.75, p > 0.05; and for the full session and the 4-min slice OneTwoThreeFour, χ2 (3, N = 15) = 0.54, p > 0.05. Here we see that the χ2 value decreases as slice length increases, indicating the increased similarity between thin slice and full session stationary distributions.

Additionally, comparing chi-squared analyses for slices of a given length allows us to identify which specific slice (or which portion of an interaction) should be targeted for coding. In terms of how we interpret this from the given tables (Tables 19, 20, 21, 22, 23, 24), we look for the lowest χ2 value for a behaviour at a given slice length (this signifies the slice containing stationary distributions most similar to full-session counterparts).

To illustrate this, consider Bristol Dads Body Orientation behaviours over 1-min slices (Table 23 of the “Appendix”). We have that: for slice One, χ2 (4, N = 17) = 9.14, for Two, χ2 (4, N = 17) = 44.92, for Three, χ2 (4, N = 17) = 1.04, for Four, χ2 (4, N = 17) = 4.55, and for Five, χ2 (4, N = 17) = 31.09. The lowest χ2 value for 1-min slices is for slice Three. This means that the long-run proportions of time spent within Proximity behaviours are best approximated by slice Three, as opposed to slices One, Two, Four and Five. So if we wanted to analyse the durations of proximity behaviours, and were only able to code 1-min of the interaction, then slice Three would be the optimum choice. By the same argument (selecting the slice with lowest χ2 value), if we were to choose to code 2-min of Proximity then we should choose slice FourFive, if coding 3-min we should choose slice ThreeFourFive, or if coding 4-min we should choose slice TwoThreeFourFive.

Similar interpretations can be drawn across all behaviours and slice lengths. Comparing results across populations, we see that the lowest χ2 values are most commonly derived from the middlemost and end slices, as opposed to the first slices of the interaction (particularly true as slice length increases). Potential reasons for this trend are detailed within the discussion.

Selecting Thin Slices Based on Stationary Distributions: For ease of translating these data into an interpretable coding framework, appropriate values within Tables 19, 20, 21, 22, 23 and 24 have been made bold to exemplify a potential “criterion” for sufficient similarity between stationary distributions over thin slices and full interactions, i.e. where p < 0.05 for all slices of a given length. Particularly, the bold values within these tables indicate the suitable coding timeframe for each behavioural group. For example, if our aim is to understand the prediction of long-term behavioural states, Table 23 (Bristol Dads) suggests coding 1 min for Posture, 2 min for Visual Attention, and 3 min for Vocalisations. Similarly, Table 24 (Bristol Infants (2)) suggests coding 2 min for Head Orientation, 3 min for Physical Play, and the full 5 min (or longer) for Facial Expressions. However, it is important to note that this criterion has been suggested only as guidance, and choosing an acceptable p value is subjective, so researchers may wish to adjust this criterion according to their own research aims.

As before, the chi-squared analyses for individual slices mean that specific slices could be selected for coding based on the χ2 (and p) values provided, but only if video recording and coding followed the same procedures as ours.

Discussion

Summary of Results

Behavioural coding can be challenging, time intensive and laborious. Researchers’ struggles with this process can be intensified by many factors, including long coding intervals, complex coding schemes with multiple behaviour categories, or the implementation of novel coding schemes (Pesch & Lumeng, 2017). Thin slice coding offers an alternative approach which aims to alleviate the coding burden. However, there is not yet a strong conclusion on whether behavioural features coded over thin slices of an interaction are representative of the full session, particularly for parents and infants. By comparing frequencies, transition matrices and stationary distributions for a range of behaviours over thin slices and fully coded observations, this work aimed to provide quantitative evidence for the value of thin slice coding in parent-infant interactions.

We hypothesised that as slice length increased, behavioural frequencies, transition matrices, and stationary distributions would become more similar to the full session equivalents. Our results were consistent with this hypothesis, across all populations, subjects, and behaviours. Particularly, we found stronger correlations between frequencies of behaviours over thin slices and fully coded interactions as the length of the slice increased; the strongest correlations occurred between 4-min slices and full sessions, and the weakest correlations occurred between 1-min slices and full sessions. Some behaviours demonstrated worse correlations than others. Additionally, we used an example criterion of r > 0.9 to indicate a “standard” strength of association, highlighting the most closely related slices.

Further, using absolute differences and chi-squared analyses, we showed that transition matrices drawn from thin slices became more similar to those from full sessions as slice length increased, i.e., transitions from one behaviour to another were most accurately predicted by longer slices. Similarly, we showed that stationary distributions drawn from thin slices became more similar to those from fully coded interactions as slice length increased, i.e., long term behavioural states were most accurately predicted by longer slices. For both transition matrices and stationary distributions, we used an example criterion of p < 0.05 for all slices of a given length to indicate a “standard” sufficient similarity between slices, highlighting those that were most closely related.

These findings are consistent with those of both Murphy (2005) and Carcone et al. (2015), who found higher correlations between frequencies of coded behaviours over thin slices and fully coded interactions as the lengths of slices increased. Whilst these studies utilised full sessions of length 15- and 30-min, respectively, we have reproduced similar results for full sessions at a shorter length of 5-min. Our results are also consistent with those of James et al. (2012), who found that behavioural measures over short portions of interactions (3- and 6-min) were significantly different from full sessions (18-min). We have found this to be true for transitions between behaviours and the prediction of long-term states, specifically when comparing 1- and 2-min slices to the full, 5-min interactions (Tables 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24 of the “Appendix”). This is in line with Holden and Miller (1999), who emphasized that brief assessments of parenting practices can reflect characteristics that endure over time.

Suitability of Thin Slice Sampling

This work contributes further understanding to the instances in which thin slice sampling is an effective, suitable practice for reducing the coding burden. The detail we have provided on specific behaviours, populations and measures means that results can be tailored to individual studies, and researchers can use the correlations and agreements provided to choose for themselves the level of accuracy that they deem acceptable for their own research aims. As such, we separate the following discussion into points (1)–(5) below, each addressing a different factor that may affect the suitability of thin slice sampling in practice.

1. Behaviours of Interest: Murphy et al. (2019) suggested that future work should examine what kinds of behaviours are more and less suitable for thin slice analysis. We have aimed to do this by incorporating as many behaviours as possible into our coding methodology. In comparison to previous literature in thin slice sampling, we coded a large number of behavioural groups and individual behaviours. Previously, some have focused on a single type of behaviour (e.g., Carcone et al. (2015) and Roter et al. (2011) both focused only on verbal communication behaviours). Others have coded behaviours across multiple behavioural groups (e.g., Murphy (2005) and Wang et al. (2020) each focused on a combination of five, distinct non-verbal and vocal behaviours, such as ‘lean in’ or ‘nod’). In a coding scheme most similar to ours, Hirschmann et al. (2018) coded multiple behavioural groups that were each comprised of several mutually exclusive behaviours. By including as many behaviours as possible in our analysis, we believe that our behaviour-specific findings may inform future studies across a variety of contexts.

However, whilst including a large range of behaviours ultimately allowed us to assess many different contexts for thin slice sampling, we acknowledge that this has led to the analyses including some specific scenarios which may not address the research question well. For example, knowing infant posture is very useful for providing both physical and social context to a parent-infant interaction (e.g., infant posture is linked to gazing at the mother’s face (Fogel et al., 1999)), yet, for infants aged 6–7 months (as within this work), there is unlikely to be much self-led variation in this behaviour. Of course, parents will likely adjust the infant’s position throughout the interaction, but this is likely to vary significantly depending on the interaction type and physical setting. Another example is the presence of “Physical Play” within bedtime, reading or feeding interactions, where there is likely to be very little of this behaviour. The inclusion of this scenario is likely to have led to inflated correlations for “Physical Play” in Tables 8 and 9 (only for Bristol Mums and Dads).

Within the “Results” section, we have demonstrated how to extract suitable coding timeframes for specific behaviours from the tables provided, according to individual behavioural measures. Here we give a full example of this process, encompassing all extracted measures for one behavioural group: Caregiver Vocalisation. For simplicity, we will only consider measures obtained from the Cardiff Mums population. Firstly, Table 7 (of the “Appendix”) shows the frequency of Caregiver Vocalisation behaviours across all slices. We can see that the most vocalisations occurred in the early slices One and Two, and the fewest in the later slices Four and Five. Table 7 (in the “Results” section) shows the correlations between Vocalisation frequencies over 1-, 2-, 3- and 4-min slices compared to the full session. For each slice length, we can see for which specific slice the vocalisations best correlate with the full session (for 1-min slices, slice One; for 2-min slices, slices TwoThree and ThreeFour; for 3-min slices, slice TwoThreeFour; for 4-min slices, slice TwoThreeFourFive). We can also see that r > 0.9 for all slices of length 2 min or longer; using our criterion of r > 0.9, this suggests that coding 2 min of Vocalisations would be sufficient for representing full session frequencies.
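The frequency comparison described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the analysis code: the function names (`pearson_r`, `slice_correlations`) and the per-dyad counts are hypothetical, and we assume that each slice is summarised by one frequency per dyad, which is then correlated with the corresponding full-session frequency.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        # The correlation is undefined when one variable never varies.
        return float("nan")
    return cov / math.sqrt(var_x * var_y)

def slice_correlations(slice_freqs, full_freqs):
    """Correlate each slice's per-dyad frequencies with the full-session ones."""
    return {name: pearson_r(values, full_freqs)
            for name, values in slice_freqs.items()}

# Hypothetical vocalisation counts for four dyads (NOT data from the study).
full_session = [10, 20, 30, 40]
slices = {
    "One": [1, 5, 2, 6],
    "OneTwo": [4, 8, 12, 17],
    "TwoThree": [5, 9, 12, 16],
}

correlations = slice_correlations(slices, full_session)
for name, r in correlations.items():
    print(f"{name}: r = {r:.3f}, meets r > 0.9: {r > 0.9}")
```

In this made-up example the 1-min slice falls below the r > 0.9 criterion whereas both 2-min slices exceed it, mirroring the kind of reading taken from the correlation tables.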

In terms of transitions between individual vocalisation behaviours, using our example criterion of p < 0.05 for sufficient similarity, the bold values within Table 13 (of the “Appendix”) show that transitions from multiple vocal states (e.g., Musical Sounds, Nervous Laugh) predicted by 1-min slices met this criterion. By 3 min, transitions from Speech met the criterion, and by 4-min slices, all transitions from any vocal state met the criterion. This suggests that 4 min is a suitable coding timeframe for representing full session transitions between vocalisation behaviours (as indicated by the fully-boldened region in Table 13).

Finally, using our example criterion of p < 0.05, the bold values in Table 19 (of the “Appendix”) show that Vocalisation stationary distributions from 3- and 4-min slices met the criterion, i.e., distributions from these slices were suitably similar to the distribution from the full 5-min session. This suggests that, in terms of long-term behaviour prediction, 3 min of coding is sufficient to represent the full 5-min interaction.

We have outlined many examples of where our findings indicate that coding time of a behavioural group could be largely reduced; however, this was not always the case. To illustrate this, consider Caregiver Posture for Bristol Mums. The frequencies of this behaviour were extremely low compared to other behaviours (for 1-min slices, frequencies ranged from 0.73 to 1.27, see Table 9 of the “Appendix”). Consequently, correlations between frequencies over thin slices and full sessions were low compared to other behavioural groups (e.g., Posture was the only behaviour to have r < 0.9 for any 4-min slice, see Table 3 in the “Results” section), and transition matrices from many thin slices—even over the longest, 4-min slices (e.g., see Sit on object, and Sit on floor, Table 15 of the “Appendix”)—did not meet our example criterion (p < 0.05). Together, these findings suggest that the full 5-min session should be coded for Caregiver Posture, as coding for a reduced amount of time did not generate the same outcome measures as the fully coded interaction. In this case, thin slice sampling was not a suitable practice.

Roter et al. (2011) suggested that further understanding of the efficacy of thin slice sampling would “…help researchers decide on the trade-offs entailed in adopting this methodology in their own studies”. We believe that our contribution addresses this question. By incorporating a large variety of behavioural groups into our coding scheme, our findings have provided new knowledge regarding specific instances in which thin slice sampling is an effective, suitable practice for reducing the coding burden. We have shown this suitability to be dependent on the behavioural group of interest.

2. Type of Behavioural Measure: Our analyses have employed three separate behavioural measures (frequencies, transition matrices and stationary distributions), and each of these measures represents a different aspect of behaviour. Frequencies describe how often a behaviour occurs within a period of time; transition matrices describe the probability that a parent/infant transitions from one behaviour to another; and stationary distributions tell us about the long-run proportion of time spent engaging in (or duration of) a given behaviour. It is therefore unsurprising that the efficacy of thin slice sampling is linked to the chosen measure.
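To make the distinction between the three measures concrete, the sketch below derives each of them from a single coded sequence. It is a simplified illustration under stated assumptions rather than the analysis pipeline: we assume the behaviour is coded at equal time steps, that a "frequency" counts bouts (runs of the same code), and that transitions follow a first-order Markov chain. The function names and the example gaze codes are hypothetical.

```python
from collections import Counter

def bout_frequencies(codes):
    """Count bouts: consecutive repeats of a code count as one occurrence."""
    counts = Counter()
    previous = None
    for code in codes:
        if code != previous:
            counts[code] += 1
        previous = code
    return dict(counts)

def transition_matrix(codes, states):
    """Row-normalised probabilities of moving from each state to each state."""
    counts = {a: {b: 0 for b in states} for a in states}
    for current, following in zip(codes, codes[1:]):
        counts[current][following] += 1
    matrix = {}
    for a in states:
        total = sum(counts[a].values())
        if total == 0:
            # Assumption: a state with no observed exits is treated as staying put.
            matrix[a] = {b: 1.0 if a == b else 0.0 for b in states}
        else:
            matrix[a] = {b: counts[a][b] / total for b in states}
    return matrix

def stationary_distribution(matrix, states, iterations=1000):
    """Approximate the long-run state proportions by power iteration."""
    pi = {s: 1.0 / len(states) for s in states}
    for _ in range(iterations):
        pi = {b: sum(pi[a] * matrix[a][b] for a in states) for b in states}
    return pi

# Hypothetical gaze codes at equal time steps (NOT data from the study).
codes = ["infant", "infant", "toy", "infant", "toy", "toy", "infant", "infant"]
states = ["infant", "toy"]

frequencies = bout_frequencies(codes)
matrix = transition_matrix(codes, states)
pi = stationary_distribution(matrix, states)
print(frequencies)
print(matrix)
print(pi)
```

Power iteration is used here for transparency; it converges only when the chain is irreducible and aperiodic, which holds in this toy example because both states are visited repeatedly and self-transitions occur.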

For example, we have seen that correlations between thin slice frequencies and full sessions were “strong” or “very strong” for most behaviours, even at short slices, suggesting that thin slice sampling of 1- or 2-min slices may be suitable for some behaviours (e.g., Bristol Mums Body Orientation and Proximity, Bristol Infants (2) Physical Play and Hand Movements). However, chi-squared analyses for many behavioural groups revealed that transitions predicted by thin slices did not meet the example criterion, and were therefore not considered representative of transitions within the fully coded sessions. This suggests that thin slice sampling of 1- or 2-min slices may not always be suitable for accurately calculating transitions between behaviours. In this way, it is up to researchers to make their own decisions regarding the suitability of this practice, dependent on the type of behavioural measures applied in their methodologies.

3. Subjectivity of Interpretation: It is important to note that implications of our findings are also dependent on researchers’ personal perceptions of what level of agreement they deem “acceptable”, for example, the necessity of a “very strong” (r > 0.9) or “strong” (r > 0.7) Pearson correlation.

Throughout our work, however, we have provided detailed guidance for how researchers may choose an appropriate thin slice, depending on the behaviour and measure. We have chosen an “acceptable” correlation of frequencies of r > 0.9, and an “acceptable” similarity between transitions/stationary distributions indicated by p < 0.05 for all slices of a given length. With these criteria, we have found many examples where 2, 3 or 4 min of coding is an acceptable proxy for 5 min, depending on the behaviour, population and measure. However, whilst we have used these criteria as a baseline for our own data interpretations, we recognise that the levels of agreement chosen here may not be suitable for all research aims, and that other researchers may wish to adapt these criteria accordingly.
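The effect of adapting these criteria can be illustrated with a short sketch. The helper below (`shortest_acceptable_length`, a hypothetical name) takes a statistic for every slice of every length together with a user-supplied criterion, and returns the shortest slice length for which all slices of that length, and of every longer length, satisfy the criterion. The correlation values are invented for illustration, and the "this length or longer" rule is one possible reading of the criteria above, not a prescribed procedure.

```python
def shortest_acceptable_length(values_by_length, is_acceptable):
    """Shortest slice length whose slices, and all longer slices, pass the criterion.

    values_by_length maps a slice length in minutes to the statistic computed
    for each slice of that length. Returns None if even the longest slices
    fail, i.e. the full session should be coded.
    """
    best = None
    for length in sorted(values_by_length, reverse=True):
        if all(is_acceptable(value) for value in values_by_length[length]):
            best = length
        else:
            break
    return best

# Hypothetical correlations with the full session (NOT values from the study):
# five 1-min slices, four 2-min slices, three 3-min slices, two 4-min slices.
r_by_length = {
    1: [0.74, 0.81, 0.69, 0.78, 0.72],
    2: [0.91, 0.93, 0.92, 0.88],
    3: [0.95, 0.96, 0.94],
    4: [0.98, 0.97],
}

very_strong = shortest_acceptable_length(r_by_length, lambda r: r > 0.9)
strong = shortest_acceptable_length(r_by_length, lambda r: r > 0.7)
strictest = shortest_acceptable_length(r_by_length, lambda r: r > 0.99)
print(very_strong, strong, strictest)
```

With these invented values, requiring a "very strong" correlation points to 3 min of coding, relaxing the criterion to "strong" shortens this to 2 min, and a stricter threshold leaves no acceptable thin slice. The same helper could be applied to the transition or stationary distribution results by passing a criterion defined on the relevant test statistic.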

4. Physical Context of an Interaction: The three populations used within our work encompass some differences in physical context for the interactions. Namely, some videos were collected in-lab (Cardiff Mums), and others at home (Bristol Mums and Dads). This key difference has, unsurprisingly, been reflected in our findings, and is an important factor to consider as it demonstrates that videos recorded at home vs. in-lab may require different thin slice sampling decisions to be made.

One such difference between these populations is that the Bristol interactions varied in type (e.g., eating, playing, reading), whereas all Cardiff interactions were classed as “free play”. This is likely to have contributed to greater variation in behaviours within the Bristol populations (as reflected in higher standard deviations within Table 3 of the “Results” section). Also, the use of headcams for recording mother-infant interactions at home has been linked to less socially desirable behaviours occurring in mothers (Lee et al., 2017), which may account for any differences between Bristol and Cardiff Mums. The Cardiff Mums population also has an unusually high proportion of mothers with postgraduate degrees (38.7%), and mothers are around 8–11 years older than the Bristol Mums and Dads (35.4 years compared to 24.4 and 27.5 years, respectively). Finally, for the Bristol Dads videos, fathers often started the video recording by introducing the activity and vocalising the time and date. These introductions took place within the first minute of coding, which may account for some differences between at-home/in-lab findings (and also the differences between slices including minute One and those that do not, in Fig. 2 of the “Appendix”).

Given the multiple potential reasons for variation of behaviours captured within in-lab and at-home data collection, it is not surprising that these differences have been reflected in our findings. As an example, comparing Cardiff findings to those from both Bristol Mums and Dads in correlation Table 3 (in the “Results” section), we observe differences in the correlations between behavioural frequencies over thin slices and full sessions. Correlations in the Cardiff population were generally not classed as “very strong” (r > 0.9) for the earlier slices, and correlations remained much lower than the equivalent values for the Bristol Mums and Dads populations. Comparatively, correlations in both Bristol populations were often “very strong” at 1- or 2-min slices. Another example of differences between Cardiff and Bristol findings is the “cut off” for accurately predicting behavioural transitions from a thin slice (Tables 13, 14, 15, 16, 17, 18 of the “Appendix”); these data suggest that 4 min is suitable for Bristol Mums and Dads, whereas a decreased slice length of 3 min is suitable for Cardiff Mums.

Therefore, we can see how the suitability of thin slice sampling varies between populations, depending on whether video recordings were taken in-lab or at home. We recommend that researchers consider these differences in the future if choosing to implement a thin slice sampling methodology.

5. Portion of the Interaction: Given the choice to code a specific number of minutes of an interaction, researchers will likely want to choose to code a slice from the most representative portion—the beginning, middle or end.

The findings for the Cardiff Mums population can be used to interpret which portion of an interaction—the beginning, middle, or end—is most representative of the full interaction, in terms of frequency and duration of behaviours, and the transitions between them. Whilst these exact interpretations cannot be drawn from the Bristol populations, given the methods of video coding, we can however observe differences between earlier slices (equivalent to the beginning portion of the interaction) and the remaining slices (equivalent to the “rest” of the interaction, including middle and end portions).

Looking first at our frequency correlation results in Table 3 in the “Results” section, for all populations and across different slice lengths, the prevalent pattern was that the largest correlations were found for the middlemost slices (e.g., TwoThreeFour), compared to the earliest (e.g., OneTwo) and final slices (e.g., FourFive). Examples of this include: Bristol Dads Proximity and Facial Expression; Bristol Mums Touch R and Posture; and Cardiff Mums Touch L and Visual Attention. Importantly, this trend was stronger in the parent samples than in the infant samples. As slice length increased, we found that correlations were most often highest in the earliest slices, and this was true regardless of population and sample. In contrast, correlations were often lowest in the latest slices.

For transitions between behaviours, we found that the earliest slices, regardless of length, were most representative of full-session transitions compared to the middlemost and later slices (this is shown in Fig. 2 of the “Appendix”). Further, the end-most slices were often the least representative. For stationary distributions, there was no clear pattern linking slice position to how representative it was of the full session (this is shown in Fig. 3 of the “Appendix”).

There are many potential reasons for these observed differences between behaviours within the beginning, middle and end of the interactions. From the parent perspective, it could be that the parents were aware of being filmed, so acted more “performatively” for the camera as the recording started (but dropped this behaviour as the interaction persisted). The parents may also have been more fidgety early on, if they were feeling nervous about the recorded task, or if they were taking time to set up a toy/food for the session (something we did observe in the home-based videos). Finally, parents may have been more engaged in the beginning of the interaction, but lost interest over time. All of these example scenarios would contribute to a higher frequency of behaviours early in the interaction, compared to the middle and end, affecting how reflective the beginning slices are of the full interaction, compared to other slices.

It is not surprising that the differences between early and later slices are more pronounced in the parent data than in the infant data. As infants are too young to comprehend that they are being recorded, they would not consciously (or subconsciously) adapt their behaviour in any way. This could partly explain why this pattern is not strongly present in the infant behaviour frequency correlations (Tables 3, 4, 5 in the “Results” section). However, it is likely that infant behaviour is reflective of parents’ behaviour in some regard; for example, infants may become more fidgety (i.e., a higher frequency of behaviours) when the parent is taking some time to start feeding or set up a toy. This would explain why differences between early and later slices still occur within the infant data, but are less pronounced. At this stage we are only speculating about potential correlations in dyadic behaviours; however, this is something we intend to explore in detail in our future work.