Abstract

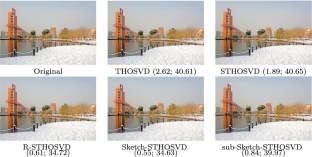

Low-rank approximation of tensors is widely used in high-dimensional data analysis. It usually involves the singular value decomposition (SVD) of large-scale matrices, which has high computational complexity. Sketching is an effective data compression and dimensionality reduction technique for the low-rank approximation of large matrices. This paper presents two practical randomized algorithms for low-rank Tucker approximation of large tensors based on sketching and the power scheme, together with a rigorous error-bound analysis. Numerical experiments on synthetic and real-world tensor data demonstrate the competitive performance of the proposed algorithms.
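To make the combination of sketching and a power scheme concrete, the following is a minimal NumPy sketch of a generic randomized Tucker (HOSVD-style) approximation. It is not the paper's proposed algorithms, which are not reproduced in this section: each mode unfolding is compressed with a Gaussian test matrix, the range estimate is refined by a few subspace power iterations, and the core is formed by projection. All function names (`unfold`, `mode_product`, `randomized_range`, `randomized_tucker`, `reconstruct`) and the parameter `n_power` are illustrative assumptions.

```python
import numpy as np

def unfold(T, mode):
    """Mode-n unfolding: bring `mode` to the front and flatten the other axes."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def mode_product(T, M, mode):
    """Mode-n product T x_n M, where M has shape (new_dim, T.shape[mode])."""
    out = np.tensordot(M, T, axes=(1, mode))  # contracted axis is replaced, new axis is first
    return np.moveaxis(out, 0, mode)

def randomized_range(A, rank, n_power=1, rng=None):
    """Orthonormal basis approximating range(A) from a Gaussian sketch.

    Assumption: a plain randomized range finder with re-orthogonalized
    power iterations, used here only for illustration.
    """
    rng = np.random.default_rng(rng)
    Omega = rng.standard_normal((A.shape[1], rank))  # Gaussian test matrix
    Q, _ = np.linalg.qr(A @ Omega)                   # sketch, then orthonormalize
    for _ in range(n_power):                         # subspace power iteration
        Z, _ = np.linalg.qr(A.T @ Q)
        Q, _ = np.linalg.qr(A @ Z)
    return Q

def randomized_tucker(T, ranks, n_power=1, seed=0):
    """Return (core, factors) with T approximately core x_1 Q_1 ... x_N Q_N."""
    rng = np.random.default_rng(seed)
    factors = [randomized_range(unfold(T, n), r, n_power, rng)
               for n, r in enumerate(ranks)]
    core = T
    for n, Q in enumerate(factors):
        core = mode_product(core, Q.T, n)            # project onto each factor basis
    return core, factors

def reconstruct(core, factors):
    """Expand a Tucker representation back into a full tensor."""
    T = core
    for n, Q in enumerate(factors):
        T = mode_product(T, Q, n)
    return T
```

Calling `randomized_tucker(T, ranks)` on a tensor whose multilinear rank is at most `ranks` should recover it up to rounding error. For general tensors, the error depends on the decay of the singular values of each unfolding and on the number of power iterations.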

Data Availability

Enquiries about data availability should be directed to the authors.

References

1. Comon, P.: Tensors: a brief introduction. IEEE Signal Process. Mag. 31(3), 44–53 (2014)

2. Hitchcock, F.L.: Multiple invariants and generalized rank of a p-way matrix or tensor. J. Math. Phys. 7(1–4), 39–79 (1928)

3. Kiers, H.A.L.: Towards a standardized notation and terminology in multiway analysis. J. Chemom. 14(3), 105–122 (2000)

4. Tucker, L.R.: Implications of factor analysis of three-way matrices for measurement of change. Probl. Meas. Change 15, 122–137 (1963)

5. Tucker, L.R.: Some mathematical notes on three-mode factor analysis. Psychometrika 31(3), 279–311 (1966)

6. De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21(4), 1253–1278 (2000)

7. Hackbusch, W., Kühn, S.: A new scheme for the tensor representation. J. Fourier Anal. Appl. 15(5), 706–722 (2009)

8. Grasedyck, L.: Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31(4), 2029–2054 (2010)

9. Oseledets, I.V.: Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

10. De Lathauwer, L., De Moor, B., Vandewalle, J.: On the best rank-1 and rank-\((R_1, R_2, \ldots, R_N)\) approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 21(4), 1324–1342 (2000)

11. Vannieuwenhoven, N., Vandebril, R., Meerbergen, K.: A new truncation strategy for the higher-order singular value decomposition. SIAM J. Sci. Comput. 34(2), A1027–A1052 (2012)

12. Zhou, G., Cichocki, A., Xie, S.: Decomposition of big tensors with low multilinear rank. arXiv preprint, arXiv:1412.1885 (2014)

13. Che, M., Wei, Y.: Randomized algorithms for the approximations of Tucker and the tensor train decompositions. Adv. Comput. Math. 45(1), 395–428 (2019)

14. Minster, R., Saibaba, A.K., Kilmer, M.E.: Randomized algorithms for low-rank tensor decompositions in the Tucker format. SIAM J. Math. Data Sci. 2(1), 189–215 (2020)

15. Che, M., Wei, Y., Yan, H.: The computation of low multilinear rank approximations of tensors via power scheme and random projection. SIAM J. Matrix Anal. Appl. 41(2), 605–636 (2020)

16. Che, M., Wei, Y., Yan, H.: Randomized algorithms for the low multilinear rank approximations of tensors. J. Comput. Appl. Math. 390(2), 113380 (2021)

17. Sun, Y., Guo, Y., Luo, C., Tropp, J., Udell, M.: Low-rank Tucker approximation of a tensor from streaming data. SIAM J. Math. Data Sci. 2(4), 1123–1150 (2020)

18. Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Streaming low-rank matrix approximation with an application to scientific simulation. SIAM J. Sci. Comput. 41(4), A2430–A2463 (2019)

19. Malik, O.A., Becker, S.: Low-rank Tucker decomposition of large tensors using TensorSketch. Adv. Neural Inf. Process. Syst. 31, 10116–10126 (2018)

20. Ahmadi-Asl, S., Abukhovich, S., Asante-Mensah, M.G., Cichocki, A., Phan, A.H., Tanaka, T.: Randomized algorithms for computation of Tucker decomposition and higher order SVD (HOSVD). IEEE Access 9, 28684–28706 (2021)

21. Halko, N., Martinsson, P.-G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011)

22. Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Practical sketching algorithms for low-rank matrix approximation. SIAM J. Matrix Anal. Appl. 38(4), 1454–1485 (2017)

23. Rokhlin, V., Szlam, A., Tygert, M.: A randomized algorithm for principal component analysis. SIAM J. Matrix Anal. Appl. 31(3), 1100–1124 (2009)

24. Xiao, C., Yang, C., Li, M.: Efficient alternating least squares algorithms for low multilinear rank approximation of tensors. J. Sci. Comput. 87(3), 1–25 (2021)

25. Zhang, J., Saibaba, A.K., Kilmer, M.E., Aeron, S.: A randomized tensor singular value decomposition based on the t-product. Numer. Linear Algebra Appl. 25(5), e2179 (2018)

Acknowledgements

We would like to thank the anonymous referees for their comments and suggestions on our paper, which led to great improvements in the presentation.

Funding

G. Yu’s work was supported in part by the National Natural Science Foundation of China (No. 12071104) and the Natural Science Foundation of Zhejiang Province (No. LD19A010002).

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Lemma 1

([25], Theorem 2) Let \(\varrho < k-1\) be a positive natural number and \({\varvec{\Omega }}\in \mathbb {R}^{k\times n}\) be a Gaussian random matrix. Suppose \(\textbf{Q}\) is obtained from Algorithm 7. Then \(\forall \textbf{A}\in \mathbb {R}^{m\times n}\), we have

Lemma 2

([22], Lemma A.3) Let \(\textbf{A}\in \mathbb {R}^{m\times n}\) be an input matrix and \(\hat{\textbf{A}}=\textbf{QX}\) be the approximation obtained from Algorithm 7. The approximation error can be decomposed as

Lemma 3

([22], Lemma A.5) Assume \({\varvec{\Psi }}\in \mathbb {R}^{l\times n}\) is a standard normal matrix independent from \({\varvec{\Omega }}\). Then

The error bound for Algorithm 7 is given in Lemma 4 below.

Lemma 4

Assume the sketch size parameter satisfies \(l>k+1\). Draw random test matrices \(\varvec{\Omega }\in \mathbb {R}^{n\times k}\) and \(\varvec{\Psi }{\in \mathbb {R}}^{l\times m}\) independently from the standard normal distribution. Then the rank-k approximation \(\hat{\textbf{A}}\) obtained from Algorithm 7 satisfies

Proof

Using Eqs. (23), (24) and (25), we have

Minimizing over all eligible indices \(\varrho <k-1\) completes the proof. \(\square \)

We are now in a position to prove Theorem 5. Combining Theorem 2 and Lemma 4, we have

which completes the proof of Theorem 5.
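The setting of Lemma 4 can be illustrated numerically. Algorithm 7 is not reproduced in this appendix, so the NumPy sketch below is an assumption: it implements only the basic two-sided sketch of Tropp et al. (2017), without any power scheme, using test matrices \(\varvec{\Omega }\in \mathbb {R}^{n\times k}\) and \(\varvec{\Psi }\in \mathbb {R}^{l\times m}\) with \(l>k+1\) and returning \(\hat{\textbf{A}}=\textbf{QX}\). The function name `two_sided_sketch` is hypothetical.

```python
import numpy as np

def two_sided_sketch(A, k, l, seed=0):
    """Rank-k approximation A_hat = Q @ X built from two Gaussian sketches.

    Assumption: this is the basic two-sketch construction (range sketch
    Y = A @ Omega, co-range sketch W = Psi @ A), not the paper's Algorithm 7.
    """
    if l <= k + 1:
        raise ValueError("sketch sizes must satisfy l > k + 1")
    rng = np.random.default_rng(seed)
    m, n = A.shape
    Omega = rng.standard_normal((n, k))   # right test matrix, n x k
    Psi = rng.standard_normal((l, m))     # left test matrix, l x m
    Y = A @ Omega                         # captures the range of A
    W = Psi @ A                           # captures the co-range of A
    Q, _ = np.linalg.qr(Y)                # orthonormal basis, m x k
    # Least-squares solve of (Psi Q) X = W, i.e. X = pinv(Psi Q) @ W
    X, *_ = np.linalg.lstsq(Psi @ Q, W, rcond=None)
    return Q, X
```

Because \(\textbf{Q}\) has orthonormal columns, the residual splits orthogonally for any \(\textbf{X}\): \(\Vert \textbf{A}-\textbf{QX}\Vert _F^2=\Vert \textbf{A}-\textbf{Q}\textbf{Q}^{\top }\textbf{A}\Vert _F^2+\Vert \textbf{X}-\textbf{Q}^{\top }\textbf{A}\Vert _F^2\). This can be checked directly on the output of `two_sided_sketch`. It is the kind of error decomposition Lemma 2 refers to, although whether it matches the paper's equation term by term is an assumption.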

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Dong, W., Yu, G., Qi, L. et al. Practical Sketching Algorithms for Low-Rank Tucker Approximation of Large Tensors. J Sci Comput 95, 52 (2023). https://doi.org/10.1007/s10915-023-02172-y

Keywords

- Tensor sketching

- Randomized algorithm

- Tucker decomposition

- Subspace power iteration

- High-dimensional data