Abstract

This paper studies distributionally robust optimization (DRO) when the ambiguity set is given by moments of the distributions. The objective and constraints are given by polynomials in the decision variables. We reformulate the DRO with equivalent moment conic constraints. Under some general assumptions, we prove that the DRO is equivalent to a linear optimization problem with moment and psd polynomial cones. A Moment-SOS relaxation method is proposed to solve it, and its asymptotic and finite convergence are shown under certain assumptions. Numerical examples are presented to show how to solve DRO problems.


1 Introduction

Many decision problems involve uncertainties, and one often wants a decision that works well with uncertain data. Distributionally robust optimization (DRO) is a frequently used model for this kind of decision problem. A typical DRO problem is

$$ \min _{x\in X} \ f(x) \quad \text{s.t.} \quad \inf _{\mu \in {\mathcal {M}}} {\mathbb {E}}_{\mu }[h(x,\xi )] \,\ge \, 0, \tag{1.1}$$

where \(f:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\), \(h:{\mathbb {R}}^n\times {\mathbb {R}}^p\rightarrow {\mathbb {R}}\), \(x := (x_1, \ldots , x_n)\) is the decision variable constrained in a set \(X\subseteq {\mathbb {R}}^n\) and \(\xi := (\xi _1,\ldots , \xi _p) \in {\mathbb {R}}^p\) is the random variable obeying the distribution of a measure \(\mu \in {\mathcal {M}}\). The notation \({\mathbb {E}}_{\mu }[h(x,\xi )]\) stands for the expectation of the random function \(h(x,\xi )\) with respect to the distribution of \(\xi \). The set \({\mathcal {M}}\) is called the ambiguity set, which is used to describe the uncertainty of the measure \(\mu \).

The ambiguity set \({\mathcal {M}}\) is often moment-based or discrepancy-based. For moment-based ambiguity, the set \({\mathcal {M}}\) is usually specified by the first and second moments [11, 17, 50]. Higher-order moments have also been used recently [8, 15, 28], especially in applications related to machine learning. For discrepancy-based ambiguity sets, popular examples are the \(\phi \)-divergence ambiguity sets [2, 31] and the Wasserstein ambiguity sets [40]. There are also other types of ambiguity sets. For instance, [22] assumes \({\mathcal {M}}\) is given by distributions with sum-of-squares (SOS) polynomial density functions of known degrees.

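We are mostly interested in Borel measures whose supports and moments, up to a given degree d, are contained in given sets \(S \subseteq {\mathbb {R}}^p\) and \(Y\subseteq {\mathbb {R}}^{\left( {\begin{array}{c}p+d\\ d\end{array}}\right) }\), respectively. Let \({\mathcal {B}}(S)\) denote the set of Borel measures supported in S. We assume the ambiguity set is given as

$$ {\mathcal {M}} \,=\, \Big\{ \mu \in {\mathcal {B}}(S) : \int [\xi ]_d \, {\texttt{d}} \mu \in Y \Big\}, \tag{1.2}$$

where \([\xi ]_d\) is the monomial vector

$$ [\xi ]_d \,:=\, (1, \xi _1, \ldots , \xi _p, \xi _1^2, \xi _1\xi _2, \ldots , \xi _p^d)^T. $$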
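The vector \([\xi ]_d\) has \(\left( {\begin{array}{c}p+d\\ d\end{array}}\right) \) entries, one for each exponent \(\alpha \in {\mathbb {N}}^p\) with \(|\alpha | \le d\). A minimal Python sketch (the helper names are ours, not the paper's) that lists these exponents in the graded order used above and evaluates \([\xi ]_d\) at a point:

```python
import math
from itertools import combinations_with_replacement

def monomial_exponents(p, d):
    """Exponents alpha with |alpha| <= d, ordered by degree, then lexicographically.

    This reproduces the ordering 1, xi_1, ..., xi_p, xi_1^2, xi_1*xi_2, ..., xi_p^d.
    """
    exps = []
    for deg in range(d + 1):
        # Each multiset of variable indices of size deg gives one monomial of degree deg.
        for idx in combinations_with_replacement(range(p), deg):
            alpha = [0] * p
            for i in idx:
                alpha[i] += 1
            exps.append(tuple(alpha))
    return exps

def monomial_vector(xi, d):
    """Evaluate [xi]_d at a point xi in R^p."""
    return [math.prod(x ** a for x, a in zip(xi, alpha))
            for alpha in monomial_exponents(len(xi), d)]

print(monomial_exponents(2, 2))        # [(0, 0), (1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]
print(monomial_vector([2.0, 3.0], 2))  # [1.0, 2.0, 3.0, 4.0, 6.0, 9.0]
```

Enumerating multisets of variable indices degree by degree keeps monomials of equal degree in lexicographic order, which matches \(\xi _1^2, \xi _1\xi _2, \ldots \) in the definition.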

The problem (1.1) equipped with the above ambiguity set is called the distributionally robust optimization of moment (DROM). When all the defining functions are polynomials, DROM is an important class of distributionally robust optimization with broad applications, and solving it has attracted much recent interest; for instance, it is studied in [22, 31] when the density functions are given by polynomials. Polynomial and moment optimization are studied extensively [25, 29, 34, 38]. This paper studies how to solve DROM in the form (1.1) by using Moment-SOS relaxations (see Sect. 2 for a brief review of them). Currently, there is relatively little work on this topic.

We remark that the distributionally robust min-max optimization

is a special case of the distributionally robust optimization in the form (1.1). Assume each \(\mu \in {\mathcal {M}}\) is a probability measure (i.e., \({\mathbb {E}}_{\mu }[1] = 1\)). Then the min-max optimization (1.3) is equivalent to

This is a distributionally robust optimization problem in the form (1.1).
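The equivalence is an epigraph argument. Writing \(F(x,\xi )\) for the function inside the min-max (a placeholder name of ours) and using \({\mathbb {E}}_{\mu }[1] = 1\), one can introduce an auxiliary variable t:

```latex
\begin{aligned}
\inf_{x\in X}\ \sup_{\mu\in\mathcal{M}}\ \mathbb{E}_{\mu}[F(x,\xi)]
  &= \inf_{x\in X,\ t\in\mathbb{R}} \big\{\, t : \mathbb{E}_{\mu}[F(x,\xi)] \le t \ \ \forall\, \mu\in\mathcal{M} \,\big\} \\
  &= \inf_{x\in X,\ t\in\mathbb{R}} \big\{\, t : \inf_{\mu\in\mathcal{M}} \mathbb{E}_{\mu}[\,t - F(x,\xi)\,] \ge 0 \,\big\}.
\end{aligned}
```

The new objective t is linear, and \(t - F(x,\xi )\) is affine in \((x,t)\) whenever F is affine in x (an assumption we state here), so the last problem has the form (1.1) in the variables \((x,t)\).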

Distributionally robust optimization is frequently used to model uncertainties in various applications. It is closely related to stochastic optimization and robust optimization, and under certain conditions the DRO can be transformed into these two kinds of problems. In stochastic optimization (see [6, 14, 24, 45, 47]), one often needs to solve decision problems in which the true distributions can be well approximated by sampling, so the performance of computed solutions relies heavily on the quality of the sampling. To get more stable solutions, regularization terms can be added to the optimization (see [39, 43, 49]). In robust optimization (see [1, 4]), the uncertainty is often assumed to be freely distributed in some sets. This approach is often computationally tractable and suitable for large-scale data, but it may produce overly pessimistic decisions for certain applications. Combining these two approaches can sometimes give more reasonable decisions. Some information about the random variables may be well estimated, or even exactly obtained, from samples or historical data; for instance, one may know the support of the measure, its discrepancy from a reference distribution, or its descriptive statistics. The ambiguity set can then be given as the collection of measures satisfying such properties. It contains some exact information about the distributions, as well as some uncertainties. For decision problems with ambiguity sets, it is natural to seek optimal decisions that work well under these uncertainties. This gives rise to distributionally robust optimization like (1.1).

We refer to [7, 22, 41, 51, 53, 54, 56] for recent work on distributionally robust optimization. For the min-max robust optimization (1.3), we refer to [11, 46, 50]. Distributionally robust optimization has broad applications, e.g., portfolio management [11, 13, 55], network design [31, 52], inventory problems [5, 50] and machine learning [12, 16, 32]. For more general work on distributionally robust optimization, we refer to the survey [44] and the references therein.

1.1 Contributions

This article studies the distributionally robust optimization (1.1) with a moment ambiguity set \({\mathcal {M}}\) as in (1.2). Assume the measure support set S is a semi-algebraic set given by a tuple \(g := (g_1,\ldots ,g_{m_1})\) of polynomials in \(\xi \). Similarly, assume the feasible set \(X \subseteq {\mathbb {R}}^n\) is given by a polynomial tuple \(c := (c_1,\ldots ,c_{m_2})\) in x. We consider the case where the objective f(x) is a polynomial in x and the function \(h(x,\xi )\) is polynomial in the random variable \(\xi \) and affine in x. Then \(h(x,\xi )\) can be written as

$$ h(x,\xi ) \,=\, \sum _{\alpha \in {\mathbb {N}}^p_d} h_\alpha (x)\, \xi ^\alpha , $$

where each coefficient \(h_\alpha (x)\) is an affine function of x, and the total degree in \(\xi := (\xi _1, \ldots , \xi _p)\) is at most d. For neatness, we also write

$$ h(x,\xi ) \,=\, (Ax+b)^T [\xi ]_d $$

for a given matrix A and vector b. Recall that \({\mathcal {M}}\) has the expression (1.2), so it consists of the Borel measures \(\mu \) supported in S whose truncated moment sequences (tms) \(y = \int [\xi ]_d {\texttt{d}} \mu \) are contained in the given set Y. In this paper, we focus on the case that S is compact and Y is a set whose conic hull \({ cone}({Y})\) can be represented by linear, second order or semidefinite conic inequalities. For convenience, define the conic hull of moments

$$ K \,:=\, { cone}\Big( \Big\{ \int [\xi ]_d \, {\texttt{d}} \mu : \mu \in {\mathcal {M}} \Big\} \Big). $$

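Note that K can also be expressed with cone(Y); see (3.6). Since \({\mathbb {E}}_{\mu }[h(x,\xi )] = (Ax+b)^T \int [\xi ]_d {\texttt{d}} \mu \), the constraint in (1.1) is the same as

$$ (Ax+b)^T y \,\ge \, 0 \quad \forall \, y \in K. $$

Let \(K^*\) denote the dual cone of K; then the above is equivalent to \(Ax+b \in K^*\). Therefore, the problem (1.1) can be equivalently reformulated as

$$ \min _{x\in X} \ f(x) \quad \text{s.t.} \quad Ax+b \in K^*. \tag{1.8}$$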
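The reformulation rests on the fact that \({\mathbb {E}}_{\mu }[h(x,\xi )]\) depends on \(\mu \) only through its moment vector \(y = \int [\xi ]_d {\texttt{d}} \mu \). A small numerical check of this, on a made-up instance of our own (n = 2, p = 1, d = 2, and a two-atom measure; none of the data comes from the paper):

```python
import numpy as np

# Hypothetical instance: [xi]_2 = (1, xi, xi^2) and
# h(x, xi) = (x1 + 1) + 2*x2*xi + (x1 - x2 - 3)*xi^2; row alpha of (A, b) holds h_alpha(x).
A = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [1.0, -1.0]])
b = np.array([1.0, 0.0, -3.0])

def h(x, xi):
    """h(x, xi) = (A x + b)^T [xi]_d for univariate xi."""
    return (A @ x + b) @ (xi ** np.arange(len(b)))

def moment_vector(weights, atoms, d):
    """y = int [xi]_d dmu for the atomic measure mu = sum_i weights[i] * delta_{atoms[i]}."""
    V = np.asarray(atoms, dtype=float)[:, None] ** np.arange(d + 1)  # row i is [u_i]_d
    return np.asarray(weights, dtype=float) @ V

x = np.array([0.5, -1.2])
weights, atoms = [0.25, 0.75], [-0.4, 1.1]
expectation = sum(w * h(x, u) for w, u in zip(weights, atoms))  # E_mu[h(x, xi)]
y = moment_vector(weights, atoms, d=2)
print(np.isclose(expectation, (A @ x + b) @ y))  # True: only y matters
```

Because the expectation is the linear form \((Ax+b)^T y\), requiring it to be nonnegative over all admissible moment vectors is exactly a dual-cone condition on \(Ax+b\).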

The moment constraining cone K and its dual cone \(K^*\) are typically difficult to describe computationally. However, optimization problems with such conic constraints can be solved by Moment-SOS relaxations (see [35, 38]).

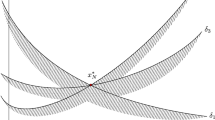

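A particularly interesting case is when \(\xi \) is a univariate random variable, i.e., \(p=1\). In this case, the dual cone \(K^*\) can be exactly represented by semidefinite programming constraints. For instance, if \(d=4\), Y is the hypercube \([0,1]^5\) and \(S=[a_1,a_2]\), then cone(Y) is the nonnegative orthant and the cone K can be expressed, for \(y = (y_0, \ldots , y_4)\), by the constraints

$$\begin{aligned} y \ge 0, \quad \begin{bmatrix} y_0 & y_1 & y_2\\ y_1 & y_2 & y_3\\ y_2 & y_3 & y_4 \end{bmatrix} \succeq 0, \quad (a_1+a_2) \begin{bmatrix} y_1 & y_2\\ y_2 & y_3 \end{bmatrix} \succeq a_1a_2 \begin{bmatrix} y_0 & y_1\\ y_1 & y_2 \end{bmatrix} + \begin{bmatrix} y_2 & y_3\\ y_3 & y_4 \end{bmatrix}. \end{aligned}$$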

In the above, \(X_1 \succeq X_2\) means that \(X_1-X_2\) is a positive semidefinite (psd) matrix. The dual cone \(K^*\) can be given by semidefinite programming constraints dual to the above. This representation is proved in Theorem 4.6.
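For this univariate example, membership in K can be tested numerically. The sketch below assumes the classical Hankel-matrix description of moments on an interval (the moment matrix \(M_2[y]\) and the localizing matrix of \((\xi -a_1)(a_2-\xi )\) are psd), written in the \(X_1 \succeq X_2\) form described above; the function names and the tolerance `tol` are our own choices.

```python
import numpy as np

def in_moment_cone_interval(y, a1, a2, tol=1e-9):
    """Test y = (y_0, ..., y_4) for membership in R_4([a1, a2]) (p = 1, d = 4)."""
    y = np.asarray(y, dtype=float)
    # Moment matrix M_2[y] with entries y_{i+j}, i, j = 0, 1, 2.
    M = np.array([[y[i + j] for j in range(3)] for i in range(3)])
    # Localizing matrix of q = (xi - a1)(a2 - xi) = -xi^2 + (a1 + a2) xi - a1 a2,
    # with entries (a1 + a2) y_{i+j+1} - a1 a2 y_{i+j} - y_{i+j+2}, i, j = 0, 1.
    L = np.array([[(a1 + a2) * y[i + j + 1] - a1 * a2 * y[i + j] - y[i + j + 2]
                   for j in range(2)] for i in range(2)])
    return bool(np.linalg.eigvalsh(M).min() >= -tol
                and np.linalg.eigvalsh(L).min() >= -tol)

def in_K(y, a1, a2, tol=1e-9):
    """K = R_4([a1, a2]) intersected with cone([0, 1]^5), the nonnegative orthant."""
    return bool(np.min(y) >= -tol) and in_moment_cone_interval(y, a1, a2, tol)

print(in_K(0.5 ** np.arange(5), 0.0, 1.0))  # moments of delta_{0.5}: True
print(in_K(2.0 ** np.arange(5), 0.0, 1.0))  # moments of delta_{2}, 2 not in [0, 1]: False
```

The tolerance absorbs rounding in the eigenvalue computation: for a Dirac measure both matrices are rank one, so their smallest eigenvalues are zero only up to floating-point error.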

For the case that \(\xi \) is multi-variate, i.e., \(p>1\), there typically do not exist explicit semidefinite programming representations for the cone K and its dual cone \(K^*\). However, they can be approximated efficiently by Moment-SOS relaxations (see [35, 38]).

This paper studies how to solve the equivalent optimization problem (1.8) by Moment-SOS relaxations. In computation, the conic hull of Y is usually expressed as a Cartesian product of linear, second order, or semidefinite cones. A hierarchy of Moment-SOS relaxations is proposed to solve (1.8), and hence the distributionally robust optimization (1.1), globally. It is worth noting that our convex relaxations use both “moment” and “SOS” relaxation techniques, which differs from classical work on polynomial optimization and DROM problems: most prior work uses either moment or SOS relaxations, but rarely both simultaneously. Under some general assumptions (e.g., compactness or archimedeanness), we prove the asymptotic and finite convergence of the proposed Moment-SOS method. The finite convergence property makes our method very attractive for solving DROM. To check whether a Moment-SOS relaxation is tight, one can solve an \({\mathcal {A}}\)-truncated moment problem with the method in [35]. By doing so, we not only compute the optimal values and optimizers of (1.8), but also obtain a measure \(\mu \) that achieves the worst-case expectation constraint, which most other methods do not provide. In summary, our main contributions are:

-

We consider a new class of distributionally robust optimization problems in the form (1.1), given by polynomial functions and moment ambiguity sets. A Moment-SOS relaxation method is proposed to solve them globally. It has more attractive properties than existing methods. Numerical examples are given to show its efficiency.

-

When the objective f(x) and the constraining set X are given by SOS-convex polynomials, we prove the DROM is equivalent to a linear conic optimization problem.

-

Under some general assumptions, we prove the asymptotic and finite convergence of the proposed method. There is little prior work on finite convergence for solving DROM. In particular, when the random variable \(\xi \) is univariate, we show that the lowest order Moment-SOS relaxation is sufficient for solving (1.8) exactly.

-

We also show how to obtain the measure \(\mu ^*\) that achieves the worst case expectation constraint.

The rest of the paper is organized as follows. Section 2 reviews some preliminary results about moment and polynomial optimization. In Sect. 3, we give an equivalent reformulation of the distributionally robust optimization, expressing it as a linear conic optimization problem. In Sect. 4, we give a Moment-SOS relaxation algorithm to solve (1.8). Some numerical experiments and applications are given in Sect. 5. Finally, conclusions and discussions are given in Sect. 6.

2 Preliminaries

2.1 Notation

The symbol \({\mathbb {R}}\) (resp., \({\mathbb {R}}_+\), \({\mathbb {N}}\)) denotes the set of real numbers (resp., nonnegative real numbers, nonnegative integers). For \(t\in {\mathbb {R}}\), \(\lceil t\rceil \) denotes the smallest integer that is greater than or equal to t. For an integer \(k>0\), \([k] := \{1,\cdots ,k\}\). The symbol \({\mathbb {N}}^n\) (resp., \({\mathbb {R}}^n\)) stands for the set of n-dimensional vectors with entries in \({\mathbb {N}}\) (resp., \({\mathbb {R}}\)). For a vector v, we use \(\Vert v\Vert \) to denote its Euclidean norm. The superscript \(^T\) denotes the transpose of a matrix or vector. For a set S, the notation \({\mathcal {B}}(S)\) denotes the set of Borel measures whose supports are contained in S. For two sets \(S_1,S_2\), the operation

$$ S_1 + S_2 \,:=\, \{ s_1 + s_2 : s_1 \in S_1, \ s_2 \in S_2 \} $$

is the Minkowski sum. The symbol e stands for the vector of all ones and \(e_i\) stands for the ith standard unit vector, i.e., its ith entry is 1 and all other entries are zeros. We use \(I_n\) to denote the n-by-n identity matrix. A symmetric matrix W is positive semidefinite (psd) if \(v^TWv\ge 0\) for all \(v\in {\mathbb {R}}^n\). We write \(W\succeq 0\) to mean that W is psd. The strict inequality \(W \succ 0\) means that W is positive definite.

The symbol \({\mathbb {R}}[x] := {\mathbb {R}}[x_1,\cdots ,x_n]\) denotes the ring of polynomials in x with real coefficients, and \({\mathbb {R}}[x]_d\) is the subset of \({\mathbb {R}}[x]\) with polynomials of degrees at most d. For a polynomial \(f\in {\mathbb {R}}[x]\), we use \(\deg (f)\) to denote its degree. For a tuple \(f = (f_1,\ldots ,f_r)\) of polynomials, \(\deg (f)\) denotes the highest degree of the \(f_i\). For a polynomial p(x), \({ vec}({p})\) is the coefficient vector of p. For \(\alpha := (\alpha _1, \ldots , \alpha _n)\) and \(x := (x_1, \ldots , x_n)\), we denote

$$ x^\alpha \,:=\, x_1^{\alpha _1} \cdots x_n^{\alpha _n}, \qquad |\alpha | \,:=\, \alpha _1 + \cdots + \alpha _n . $$

For a degree d, denote the set of exponents

$$ {\mathbb {N}}^n_d \,:=\, \{ \alpha \in {\mathbb {N}}^n : |\alpha | \le d \}. $$

Let \([x]_d\) denote the vector of all monomials in x that have degrees at most d, i.e.,

$$ [x]_d \,:=\, (1, x_1, \ldots , x_n, x_1^2, x_1x_2, \ldots , x_n^d)^T. $$

The notations \(\xi ^\alpha \) and \([\xi ]_d\) are defined similarly for \(\xi := (\xi _1, \ldots , \xi _p)\). The notation \({\mathbb {E}}_{\mu }[h(\xi )]\) denotes the expectation of the random function \(h(\xi )\) with respect to \(\mu \) for the random variable \(\xi \). The Dirac measure supported at a point u is denoted by \(\delta _u\).

Let V be a vector space over the real field \({\mathbb {R}}\). A set \(C \subseteq V\) is a cone if \(a x\in C\) for all \(x\in C\) and \(a>0\). For a set \(X\subseteq V\), we denote its closure in the Euclidean topology by \(\overline{X}\). Its conic hull, which is the smallest convex cone containing X, is denoted as \({ cone}({X})\). The dual cone of the set X is

$$ X^* \,:=\, \{ \ell \in V^* : \ell (x) \ge 0 \ \ \forall \, x \in X \}, $$

where \(V^*\) is the dual space of V (i.e., the space of linear functionals on V). Note that \(X^*\) is a closed convex cone for every X. For two nonempty cones \(X_1, X_2 \subseteq V\), we have \((X_1+X_2)^*=X_1^*\cap X_2^*\). When \(X_1+X_2\) is a closed convex cone, we also have \(( X_1^*\cap X_2^* )^* = X_1 + X_2\).

In the following, we review some basics in optimization about polynomials and moments. We refer to [21, 25, 27, 29, 36, 37] for more details about this topic.

2.2 SOS and Nonnegative Polynomials

A polynomial \(f\in {\mathbb {R}}[x]\) is said to be SOS if \( f = f_1^2+\cdots +f_k^2\) for some real polynomials \(f_i \in {\mathbb {R}}[x]\). We use \(\varSigma [x]\) to denote the cone of all SOS polynomials in x. The dth degree truncation of the SOS cone \(\varSigma [x]\) is

$$ \varSigma [x]_d \,:=\, \varSigma [x] \cap {\mathbb {R}}[x]_d . $$

It is a closed convex cone for each degree d. For a polynomial \(f\in {\mathbb {R}}[x]\), the membership \(f\in \varSigma [x]\) can be checked by solving semidefinite programs [25, 29]. Moreover, f is said to be SOS-convex [18] if its Hessian matrix \(\nabla ^2f(x)\) is SOS, i.e., \(\nabla ^2 f=V(x)^T V(x)\) for a matrix polynomial V(x).
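Deciding \(f \in \varSigma [x]\) amounts to finding a psd Gram matrix Q with \(f = [x]_k^T Q [x]_k\), which is a semidefinite feasibility problem. Searching for Q requires an SDP solver; the univariate sketch below (function name ours) only verifies a candidate Q, i.e., the certificate such a solver would return.

```python
import numpy as np

def check_gram_certificate(coeffs, Q, tol=1e-9):
    """Return True if Q is psd and f(x) = [x]_k^T Q [x]_k, where coeffs[j] is the
    coefficient of x^j in the univariate polynomial f (len(coeffs) == 2k + 1)."""
    Q = np.asarray(Q, dtype=float)
    k = Q.shape[0] - 1
    # The coefficient of x^j in [x]_k^T Q [x]_k is the sum of Q[i, l] over i + l = j.
    recon = np.zeros(2 * k + 1)
    for i in range(k + 1):
        for l in range(k + 1):
            recon[i + l] += Q[i, l]
    is_psd = np.linalg.eigvalsh((Q + Q.T) / 2).min() >= -tol
    return bool(is_psd and np.allclose(recon, coeffs, atol=tol))

# f = (1 + x + x^2)^2 = 1 + 2x + 3x^2 + 2x^3 + x^4 has the rank-one Gram matrix v v^T, v = (1, 1, 1).
print(check_gram_certificate([1, 2, 3, 2, 1], np.outer(np.ones(3), np.ones(3))))  # True
```

A matching but indefinite Q (for example, diag(-1, 1) for \(x^2 - 1\)) is rejected, since coefficient matching alone does not certify nonnegativity.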

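In this paper, we also need to work with polynomials in \(\xi := (\xi _1, \ldots , \xi _p)\). For a tuple \(g := (g_1,\ldots ,g_{m_1})\) of polynomials in \(\xi \), its quadratic module is the set

$$ \text{ QM }[{g}] \,:=\, \varSigma [\xi ] + g_1 \cdot \varSigma [\xi ] + \cdots + g_{m_1} \cdot \varSigma [\xi ]. $$

The dth degree truncation of \(\text{ QM }[{g}]\) is

$$ \text{ QM }[{g}]_d \,:=\, \varSigma [\xi ]_d + g_1 \cdot \varSigma [\xi ]_{d-\deg (g_1)} + \cdots + g_{m_1} \cdot \varSigma [\xi ]_{d-\deg (g_{m_1})}. $$

Let \(S =\{ \xi \in {\mathbb {R}}^p : g(\xi )\ge 0\}\) be the set determined by g and let \(\mathscr {P}(S)\) denote the set of polynomials that are nonnegative on S. We also frequently use the dth degree truncation

$$ \mathscr {P}_d(S) \,:=\, \mathscr {P}(S) \cap {\mathbb {R}}[\xi ]_d . $$

Then, for every degree d, it holds that

$$ \text{ QM }[{g}]_d \,\subseteq \, \mathscr {P}_d(S). $$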

The quadratic module \(\text{ QM }[{g}]\) is said to be archimedean if there exists a polynomial \(\phi \in \text{ QM }[{g}]\) such that \(\{\xi \in {\mathbb {R}}^p: \phi (\xi ) \ge 0\}\) is compact. If \(\text{ QM }[{g}]\) is archimedean, then S must be a compact set, but the converse is not necessarily true. However, for compact S, the quadratic module \(\text{ QM }[{\tilde{g}}]\) is archimedean if g is replaced by \(\tilde{g} := (g, N-\Vert \xi \Vert ^2)\) for N sufficiently large; this does not change S, since \(N-\Vert \xi \Vert ^2 \ge 0\) on S for such N. When \(\text{ QM }[{g}]\) is archimedean, every polynomial h that is positive on S belongs to \(\text{ QM }[{g}]\) (see [42]). Furthermore, under some classical optimality conditions, we have \(h \in \text{ QM }[{g}]\) even if h is only nonnegative on S (see [36]).

2.3 Truncated Moment Problems

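For the variable \(\xi \in {\mathbb {R}}^p\), the space of truncated multi-sequences (tms) of degree d is

$$ {\mathbb {R}}^{{\mathbb {N}}_d^p} \,:=\, \big\{ z = (z_\alpha )_{\alpha \in {\mathbb {N}}_d^p} : z_\alpha \in {\mathbb {R}} \big\}. $$

Each \(z \in {\mathbb {R}}^{{\mathbb {N}}_d^p}\) determines the linear Riesz functional \(\mathscr {L}_z\) on \({\mathbb {R}}[\xi ]_d\) such that

$$ \mathscr {L}_z \Big( \sum _{\alpha \in {\mathbb {N}}_d^p} q_\alpha \xi ^\alpha \Big) \,:=\, \sum _{\alpha \in {\mathbb {N}}_d^p} q_\alpha z_\alpha . $$

For convenience of notation, we also write

$$ \langle q, z \rangle \,:=\, \mathscr {L}_z(q). $$

For a polynomial \(q \in {\mathbb {R}}[\xi ]_{2k}\) and a tms \(z \in {\mathbb {R}}^{{\mathbb {N}}_{2k}^p}\), the kth order localizing matrix \(L_q^{(k)}[z]\) is the symmetric matrix such that

$$ { vec}({a})^T \big( L_q^{(k)}[z] \big) { vec}({b}) \,=\, \langle q a b, z \rangle $$

for all \(a,b\in {\mathbb {R}}[\xi ]_s\), where \(s = k-\lceil \deg (q)/2\rceil \). In particular, for \(q=1\) (the constant one polynomial), \(L_1^{(k)}[z]\) becomes the so-called moment matrix

$$ M_k[z] \,:=\, L_1^{(k)}[z]. $$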
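The moment and localizing matrices can be assembled directly from these definitions. A minimal Python sketch, assuming a tms stored as a dictionary from exponent tuples to values (a representation chosen here for illustration only), applied to the moments of a Dirac measure:

```python
import math
from itertools import product
import numpy as np

def exponents(p, d):
    """All alpha in N^p with |alpha| <= d, sorted by total degree."""
    return sorted((a for a in product(range(d + 1), repeat=p) if sum(a) <= d), key=sum)

def shift(*alphas):
    """Componentwise sum of exponent tuples."""
    return tuple(map(sum, zip(*alphas)))

def localizing_matrix(z, q, p, k):
    """L_q^{(k)}[z] with entries <q * xi^(alpha + beta), z> for |alpha|, |beta| <= s.

    z maps exponents of degree <= 2k to moments; q maps exponents to coefficients.
    """
    s = k - math.ceil(max(sum(g) for g in q) / 2)
    basis = exponents(p, s)
    return np.array([[sum(c * z[shift(a, b, g)] for g, c in q.items()) for b in basis]
                     for a in basis])

def moment_matrix(z, p, k):
    """M_k[z] = L_1^{(k)}[z]."""
    return localizing_matrix(z, {(0,) * p: 1.0}, p, k)

# Moments of the Dirac measure at u = (0.5, -1) up to degree 4: z_alpha = u^alpha.
u = (0.5, -1.0)
z = {a: math.prod(ui ** ai for ui, ai in zip(u, a)) for a in exponents(2, 4)}
M2 = moment_matrix(z, p=2, k=2)                # 6 x 6, equal to [u]_2 [u]_2^T
q = {(0, 0): 1.0, (2, 0): -1.0, (0, 2): -1.0}  # q = 1 - xi_1^2 - xi_2^2, q(u) = -0.25
L = localizing_matrix(z, q, p=2, k=2)          # 3 x 3, equal to q(u) [u]_1 [u]_1^T
print(np.linalg.matrix_rank(M2), np.linalg.eigvalsh(L).min())
```

For \(\delta _u\) the moment matrix has rank one, and the localizing matrix equals \(q(u)[u]_1[u]_1^T\). Here \(q(u) < 0\), so \(L_q^{(2)}[z]\) has a negative eigenvalue (about \(-0.5625\)), reflecting that u lies outside \(\{\xi : q(\xi ) \ge 0\}\).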

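We can use the moment matrix and localizing matrices to describe dual cones of quadratic modules. For a polynomial tuple \(g=(g_1,\ldots , g_{m_1})\) with \(\deg (g) \le 2k\), define the tms cone

$$ \mathscr {S}[g]_{2k} \,:=\, \big\{ z \in {\mathbb {R}}^{{\mathbb {N}}_{2k}^p} : M_k[z] \succeq 0, \ L_{g_1}^{(k)}[z] \succeq 0, \ldots , L_{g_{m_1}}^{(k)}[z] \succeq 0 \big\}. \tag{2.6}$$

It can be verified that (see [38])

$$ \big( \text{ QM }[{g}]_{2k} \big)^* \,=\, \mathscr {S}[g]_{2k}. \tag{2.7}$$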

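A tms \(z = (z_\alpha ) \in {\mathbb {R}}^{{\mathbb {N}}_d^p}\) is said to admit a representing measure \(\mu \) supported in a set \(S \subseteq {\mathbb {R}}^p\) if \(z_{\alpha } = \int \xi ^{\alpha } \texttt{d} \mu \) for all \(\alpha \in {\mathbb {N}}_d^p\). Such a measure \(\mu \) is called an S-representing measure for z. In particular, the zero tms \(z=0\) admits the identically zero measure. Denote by meas(z, S) the set of S-representing measures for z. This gives the moment cone

$$ \mathscr {R}_d(S) \,:=\, \big\{ z \in {\mathbb {R}}^{{\mathbb {N}}_d^p} : meas(z,S) \ne \emptyset \big\}. \tag{2.8}$$

Note that \(\mathscr {R}_d(S)\) can also be written as the conic hull

$$ \mathscr {R}_d(S) \,=\, { cone}\big( \{ [u]_d : u \in S \} \big). $$

Recall that \(\mathscr {P}_d(S)\) denotes the cone of polynomials in \({\mathbb {R}}[\xi ]_d\) that are nonnegative on S. It is a closed convex cone. For all \(h \in \mathscr {P}_d(S)\) and \(z\in \mathscr {R}_d(S)\), it holds that, for every \(\mu \in meas(z,S)\),

$$ \langle h, z \rangle \,=\, \int h \, {\texttt{d}} \mu \,\ge \, 0. $$

This shows \(\mathscr {P}_d(S) \subseteq \mathscr {R}_d(S)^*\). Conversely, since \([u]_d \in \mathscr {R}_d(S)\) for every \(u \in S\) (it is represented by \(\delta _u\)), every \(h \in \mathscr {R}_d(S)^*\) satisfies \(h(u) = \langle h, [u]_d \rangle \ge 0\) on S. Hence \(\mathscr {R}_d(S)^* = \mathscr {P}_d(S)\). When S is compact, we also have \(\mathscr {P}_d(S)^* = \mathscr {R}_d(S)\). If S is not compact, then

$$ \mathscr {P}_d(S)^* \,=\, \overline{ \mathscr {R}_d(S) }. $$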

We refer to [29, Sect. 5.2] and [38] for this fact.

A frequent case is that \(S = \{ \xi : g(\xi ) \ge 0\}\) is determined by a polynomial tuple \(g= (g_1,\ldots , g_{m_1})\). For an integer \(k\ge \deg (g)/2\), a tms \(z\in {\mathbb {R}}^{{\mathbb {N}}_{2k}^p}\) admits an S-representing measure \(\mu \) if \(z\in \mathscr {S}[g]_{2k}\) and

$$ \text{ rank }\, M_{k-d_0}[z] \,=\, \text{ rank }\, M_k[z], $$

where \(d_0 = \lceil \deg (g)/2\rceil \). Moreover, the measure \(\mu \) is unique and is r-atomic, i.e., \(|\text{ supp }({\mu })| = r\), where \(r = \text{ rank }\,M_k[z]\). The above rank condition is called flat extension or flat truncation [9, 34]. When it holds, the tms z is said to be a flat tms. When z is flat, one can obtain the unique representing measure \(\mu \) for z by computing Schur decompositions and eigenvalues (see [19]).
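For \(p = 1\), a flat tms can be decoded without the general procedure of [19]. The sketch below swaps in a simpler Prony-type method (our choice, valid only for univariate measures, and assuming flatness has already been verified): the atoms are the roots of a monic polynomial whose coefficients solve a Hankel system, and the weights solve a Vandermonde system.

```python
import numpy as np

def extract_atoms_univariate(y, tol=1e-8):
    """Weights and atoms of a finitely atomic measure on R from its moments y_0, ..., y_{2k}.

    Assumes M_k[y] is flat, so its rank r satisfies r <= k and its leading r x r
    block is nonsingular. Prony-type method, not the Schur-decomposition method of [19].
    """
    y = np.asarray(y, dtype=float)
    k = (len(y) - 1) // 2
    M = np.array([[y[i + j] for j in range(k + 1)] for i in range(k + 1)])  # M_k[y]
    r = np.linalg.matrix_rank(M, tol=tol)
    # The monic polynomial xi^r + c_{r-1} xi^{r-1} + ... + c_0 vanishing at the r atoms
    # satisfies sum_l c_l y_{j+l} = -y_{j+r} for j = 0, ..., r-1.
    c = np.linalg.solve(M[:r, :r], -y[r:2 * r])
    atoms = np.sort(np.roots(np.concatenate(([1.0], c[::-1]))).real)
    # Weights from the Vandermonde system sum_i theta_i * u_i^j = y_j, j = 0, ..., r-1.
    V = atoms[None, :] ** np.arange(r)[:, None]
    weights = np.linalg.solve(V, y[:r])
    return weights, atoms

# Moments of 0.3*delta_{0.2} + 0.7*delta_{0.9} up to degree 4 (so k = 2 and r = 2).
y = [0.3 * 0.2**j + 0.7 * 0.9**j for j in range(5)]
weights, atoms = extract_atoms_univariate(y)
print(weights, atoms)  # approximately [0.3 0.7] [0.2 0.9]
```

The rank tolerance matters in practice: moments computed by an SDP solver are only approximately flat, so the numerical rank decides how many atoms are recovered.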

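To obtain a representing measure for a tms \(y\in {\mathbb {R}}^{ {\mathbb {N}}_d^p }\) that is not flat, a semidefinite relaxation method is proposed in [35]. Suppose S is compact and the quadratic module \(\text{ QM }[{g}]\) is archimedean. Select a generic polynomial \(R \in \varSigma [\xi ]_{2k}\), with \(2k > \deg (g)\), and then solve the moment optimization

$$\begin{aligned} \left\{ \begin{array}{cl} \min \limits _{\omega } &{} \langle R, \omega \rangle \\ { s.t.}&{} \omega |_d = y , \, \omega \in \mathscr {S}[g]_{2k}, \, \omega \in {\mathbb {R}}^{ {\mathbb {N}}^p_{2k} }. \end{array}\right. \end{aligned} \tag{2.12}$$

In the above, \(\omega |_d\) denotes the dth degree truncation of \(\omega \), i.e.,

$$ \omega |_d \,:=\, (\omega _\alpha )_{\alpha \in {\mathbb {N}}^p_d}. \tag{2.13}$$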

As k increases, by solving (2.12), one can either get a flat extension of y, or a certificate that y does not have any representing measure. We refer to [35] for more details about solving truncated moment problems.

3 Moment Optimization Reformulation

In this section, we reformulate the distributionally robust optimization equivalently as a linear conic optimization problem with moment constraints. We consider the DROM problem

$$ \min _{x\in X} \ f(x) \quad \text{s.t.} \quad \inf _{\mu \in {\mathcal {M}}} {\mathbb {E}}_{\mu }[h(x,\xi )] \,\ge \, 0, \tag{3.1}$$

where x is the decision variable constrained in a set \(X \subseteq {\mathbb {R}}^n\) and \(\xi \in {\mathbb {R}}^p\) is the random variable obeying the distribution of the measure \(\mu \) that belongs to the moment ambiguity set \({\mathcal {M}}\). We assume that the objective f(x) is a polynomial in x and \(h(x,\xi )\) is a polynomial in \(\xi \) whose coefficients are affine in x. Equivalently, one can write

$$ h(x,\xi ) \,=\, (Ax+b)^T [\xi ]_d \tag{3.2}$$

for a matrix A and a vector b. Suppose measures in the ambiguity set \({\mathcal {M}}\) have supports contained in the set

$$ S \,:=\, \{ \xi \in {\mathbb {R}}^p : g_1(\xi ) \ge 0, \ldots , g_{m_1}(\xi ) \ge 0 \} \tag{3.3}$$

for a given tuple \(g := (g_1,\ldots ,g_{m_1})\) of polynomials in \(\xi \). The ambiguity set \({\mathcal {M}}\) can be expressed as

$$ {\mathcal {M}} \,=\, \Big\{ \mu \in {\mathcal {B}}(S) : \int [\xi ]_d \, {\texttt{d}} \mu \in Y \Big\}, \tag{3.4}$$

where Y is the constraining set for moments of \(\mu \). The set Y is not necessarily closed or convex. The closure of its conic hull is denoted by \(\overline{ { cone}({Y}) }\); in computation, it is often a Cartesian product of linear, second order or semidefinite cones. The constraining set X for x is assumed to be

$$ X \,:=\, \{ x \in {\mathbb {R}}^n : c_1(x) \ge 0, \ldots , c_{m_2}(x) \ge 0 \} \tag{3.5}$$

for a tuple \(c=(c_1,\ldots , c_{m_2})\) of polynomials in x.

The DROM (3.1) can be equivalently reformulated as polynomial optimization with moment conic conditions. Observe that

The set \(\mathscr {R}_d(S)\) is the moment cone defined as in (2.8). It consists of degree-d tms admitting S-measures. For convenience, we denote the intersection

$$ K \,:=\, \mathscr {R}_d(S) \cap { cone}({Y}). \tag{3.6}$$

Therefore, we get that

where \(K^*\) denotes the dual cone of K. In view of (2.1) and (2.3), the dual cone \(Y^*\) is the following polynomial cone

Observe the dual cone relations

When both \(\mathscr {R}_d(S)\) and \({ cone}({Y})\) are closed, we have

If one of them is not closed, the above may or may not be true. Note that \(C^{**} = C\) if C is a closed convex cone. When (3.9) holds and the sum \(\mathscr {P}_d(S)+Y^*\) is a closed cone, we can express the dual cone \(K^*\) as

$$ K^* \,=\, \mathscr {P}_d(S) + Y^* . \tag{3.10}$$

As shown in [3, Proposition B.2.7], the above equality holds if \(\mathscr {R}_d(S)\) and cone(Y) are closed and their interiors have a nonempty intersection. These conditions are satisfied in many applications. Recall that \(h(x,\xi ) = (Ax+b)^T[\xi ]_d\). Identifying a polynomial in \({\mathbb {R}}[\xi ]_d\) with its coefficient vector, the membership \(Ax+b \in K^*\) means that \(h(x,\xi ) \in K^*\). Therefore, we get the following result.

Theorem 3.1

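Assume the set X is given as in (3.5). If the equality (3.10) holds, then (3.1) is equivalent to the following optimization

$$\begin{aligned} \left\{ \begin{array}{cl} \min \limits _{x} &{} f(x)\\ { s.t.}&{} h(x,\xi ) \in \mathscr {P}_d(S) + Y^*, \\ &{} c_1(x) \ge 0, \ldots , c_{m_2}(x) \ge 0. \end{array}\right. \end{aligned} \tag{3.11}$$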

The membership constraint in (3.11) means that \(h(x,\xi )\), as a polynomial in \(\xi \), is the sum of a polynomial in \(\mathscr {P}_d(S)\) and a polynomial in \(Y^*\). When \(f, c_1, \ldots , c_{m_2}\) are all linear functions, (3.11) is a linear conic optimization problem. When f and every \(c_i\) are polynomials, we can apply Moment-SOS relaxations to solve it.

Recall that X is the set given as in (3.5). Denote the degree

Observe that for all \(x\in X\) and \(w = [x]_{2d_1}\), it holds that

We refer to Subsection 2.3 for the above notation. For convenience, define the projection map \(\pi : {\mathbb {R}}^{ {\mathbb {N}}^n_{2d_1}} \, \rightarrow \, {\mathbb {R}}^n\) such that

$$ \pi (w) \,:=\, (w_{e_1}, \ldots , w_{e_n}). $$

So, the optimization (3.11) can be relaxed to

The relaxation (3.13) is said to be tight if it has the same optimal value as (3.11). Under an SOS-convexity assumption, the relaxation (3.13) is equivalent to (3.11), as shown in the following result.

Theorem 3.2

Suppose the ambiguity set \({\mathcal {M}}\) is given as in (3.4) and the set X is given as in (3.5). Assume the polynomials \(f, -c_1, \ldots , -c_{m_2}\) are SOS-convex. Then, the optimization problems (3.13) and (3.11) are equivalent in the following sense: they have the same optimal value, and \(w^*\) is a minimizer of (3.13) if and only if \(x^* := \pi (w^*)\) is a minimizer of (3.11).

Proof

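Let w be a feasible point for (3.13) and \(x = \pi (w)\); then \(Ax + b \in K^*\). Since \(f, -c_1, \ldots , -c_{m_2}\) are SOS-convex, by Jensen’s inequality (see [26]), we have the inequalities

$$ f(\pi (w)) \,\le \, \langle f, w \rangle , \qquad c_i(\pi (w)) \,\ge \, \langle c_i, w \rangle , \quad i = 1, \ldots , m_2. $$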

The (1, 1)-entry of \(L_{c_i}^{(d_1)}[w]\) is \(\langle c_i, w \rangle \), so \(L_{c_i}^{(d_1)}[w] \succeq 0\) implies that \(\langle c_i, w \rangle \ge 0\), and hence \(c_i(\pi (w)) \ge \langle c_i, w \rangle \ge 0\) by Jensen’s inequality. This means that \(x = \pi (w) \in X\) for every w that is feasible for (3.13). Let \(f_0, f_1\) denote the optimal values of (3.11) and (3.13), respectively. Since the latter is a relaxation of the former, it is clear that \(f_0 \ge f_1\). For every \(\epsilon >0\), there exists a feasible w such that \(\langle f, w \rangle \le f_1 + \epsilon \), which implies that \(f(\pi (w)) \le \langle f, w \rangle \le f_1 + \epsilon \). Since \(\pi (w)\) is feasible for (3.11), we get \(f_0 \le f_1 + \epsilon \) for every \(\epsilon > 0\). Therefore, \(f_0 = f_1\), i.e., (3.13) and (3.11) have the same optimal value.

If \(w^*\) is a minimizer of (3.13), we also have \(x^* = \pi (w^*) \in X\) and

$$ f(x^*) \,\le \, \langle f, w^* \rangle \,=\, f_1 \,=\, f_0 . $$

Since \(x^*\) is feasible for (3.11) and \(f_0\) is the optimal value of (3.11), equality holds throughout, so \(x^*\) is a minimizer of (3.11). For the converse, if \(x^*\) is a minimizer of (3.11), then \(w^* := [x^*]_{2d_1}\) is feasible for (3.13) and \(f(x^*) = \langle f, w^* \rangle \). So \(w^*\) must also be a minimizer of (3.13), since (3.13) and (3.11) have the same optimal value. \(\square \)
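The proof above uses two facts: for \(w = [x]_{2d_1}\) one has \(\pi (w) = x\) and \(\langle f, w \rangle = f(x)\), while for a general feasible w only Jensen’s inequality \(f(\pi (w)) \le \langle f, w \rangle \) is available. A small numerical illustration, assuming a hypothetical SOS-convex objective \(f(x) = x_1^2 + x_2^2 - 3x_2 + 2\) (its Hessian is the constant matrix 2I) and a dictionary representation of tms chosen for illustration:

```python
import math
from itertools import product

def exponents(n, d):
    """All alpha in N^n with |alpha| <= d."""
    return [a for a in product(range(d + 1), repeat=n) if sum(a) <= d]

def lift(x, d):
    """The tms w = [x]_d of the Dirac measure at x, as a dict alpha -> x^alpha."""
    return {a: math.prod(xi ** ai for xi, ai in zip(x, a)) for a in exponents(len(x), d)}

def proj(w, n):
    """pi(w) = (w_{e_1}, ..., w_{e_n}): the degree-one entries of w."""
    return [w[tuple(int(i == j) for i in range(n))] for j in range(n)]

def pair(f, w):
    """<f, w> = sum_alpha f_alpha * w_alpha, for f given as a dict alpha -> coefficient."""
    return sum(c * w[a] for a, c in f.items())

def evaluate(f, x):
    """f(x), computed as <f, [x]_deg(f)>."""
    return pair(f, lift(x, max(sum(a) for a in f)))

f = {(2, 0): 1.0, (0, 2): 1.0, (0, 1): -3.0, (0, 0): 2.0}  # x1^2 + x2^2 - 3*x2 + 2
wa, wb = lift((1.5, -0.5), 2), lift((-0.5, 2.0), 2)
print(proj(wa, 2), pair(f, wa))  # [1.5, -0.5] 6.0

# A mixture of two lifted points is a feasible tms but not of the form [x]_2;
# for this convex f, Jensen's inequality gives f(pi(w_mix)) <= <f, w_mix>.
w_mix = {a: 0.5 * wa[a] + 0.5 * wb[a] for a in wa}
print(evaluate(f, proj(w_mix, 2)), pair(f, w_mix))  # 0.5625 3.125
```

The gap between 0.5625 and 3.125 is why the proof only obtains \(f(\pi (w)) \le \langle f, w \rangle \) for general w, and why SOS-convexity is needed to pass from the relaxation back to (3.11).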

In the following, we derive the dual optimization of (3.13). As in Subsection 2.3, we have

where \(\mathscr {S}[c]_{2d_1}\) is given similarly as in (2.6). Recall the dual relationship

$$ \big( \text{ QM }[{c}]_{2d_1} \big)^* \,=\, \mathscr {S}[c]_{2d_1}, $$

as shown in (2.7). The Lagrange function for (3.13) is

for \(\gamma \in {\mathbb {R}}, q\in \text{ QM }[{c}]_{2d_1}, y \in \overline{K}\). (Note that the cone K is not necessarily closed.) To make \({\mathcal {L}}(w;\gamma ,q,y)\) have a finite infimum over \(w \in {\mathbb {R}}^{ {\mathbb {N}}_{2d_1}^n }\), we need the constraint

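Therefore, the dual optimization of (3.13) is

$$\begin{aligned} \left\{ \begin{array}{cl} \max \limits _{\gamma ,\, y} &{} \gamma - b^Ty \\ { s.t.}&{} f(x) - y^TAx - \gamma \in \text{ QM }[{c}]_{2d_1}, \\ &{} y \in \overline{K}. \end{array}\right. \end{aligned} \tag{3.14}$$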

The first membership in (3.14) means that \(f(x) - y^TAx-\gamma \), as a polynomial in x, belongs to the truncated quadratic module \(\text{ QM }[{c}]_{2d_1}\). So it gives a constraint for both \(\gamma \) and y.

4 The Moment-SOS Relaxation Method

In this section, we give a Moment-SOS relaxation method for solving the distributionally robust optimization and prove its convergence.

In Sect. 3, we have seen that the DROM (3.1) is equivalent to the linear conic optimization (3.13) under certain assumptions. It is still hard to solve (3.13) directly, due to the membership constraint \(h(x,\xi ) \in \mathscr {P}_d(S)+Y^*\). This is because the nonnegative polynomial cone \(\mathscr {P}_d(S)\) typically does not have an explicit computational representation. For its dual problem (3.14), it is similarly difficult to deal with the conic membership \(y \in \overline{K}\). However, both (3.13) and (3.14) can be solved efficiently by Moment-SOS relaxations.

Recall that S is a semi-algebraic set given as in (3.3). For every integer \(k \ge d/2\), the following nested containment holds:

We thus consider the following restriction of (3.13):

The integer k is called the relaxation order. Since \((\text{ QM }[{g}]_{2k})^*=\mathscr {S}[g]_{2k}\), the dual optimization of (4.1) is

We remark that \(\text{ QM }[{g}]\) is a quadratic module in the polynomial ring \({\mathbb {R}}[\xi ]\), while \(\text{ QM }[{c}]\) is a quadratic module in the polynomial ring \({\mathbb {R}}[x]\). The notation \(z|_d\) denotes the degree-d truncation of z; see (2.13). The optimization (4.2) is a relaxation of (3.14), since it has a larger feasible set. Both quadratic module and moment constraints appear in (4.2). The primal-dual pair (4.1)-(4.2) can be solved as semidefinite programs. The following is a basic property of the above optimization.

Theorem 4.1

Assume (3.9) holds. Suppose \((\gamma ^*,y^*,z^*)\) is an optimizer of (4.2) for the relaxation order k. Then \((\gamma ^*,y^*)\) is a maximizer of (3.14) if and only if it holds that \(y^* \in \overline{ \mathscr {R}_d(S)}\).

Proof

If \((\gamma ^*,y^*)\) is a maximizer of (3.14), then \(y^* \in \overline{K} \subseteq \overline{ \mathscr {R}_d(S) }\). Conversely, if \(y^* \in \overline{ \mathscr {R}_d(S) }\), then \((\gamma ^*, y^*)\) is feasible for (3.14), since (3.9) holds. Since (4.2) is a relaxation of (3.14), its optimal value \(\gamma ^* - b^Ty^*\) is an upper bound for the optimal value of (3.14); hence the feasible point \((\gamma ^*,y^*)\) must be a maximizer of (3.14). \(\square \)

If \(\mathscr {R}_d(S)\) is a closed cone, then we only need to check \(y^* \in \mathscr {R}_d(S)\) in the above. In particular, when S is compact, the moment cone \(\mathscr {R}_d(S)\) is closed [27, 29, 38]. As introduced in Subsection 2.3, the membership \(y^* \in \mathscr {R}_d(S)\) can be checked by solving a truncated moment problem, i.e., by solving the optimization (2.12) for a generically selected objective. Once \((\gamma ^*,y^*)\) is confirmed to be a maximizer of (3.14), a minimizer of (3.1) can be obtained as follows.

Theorem 4.2

Assume (3.9) holds. For a relaxation order k, suppose \((x^*,w^*)\) is a minimizer of (4.1) and \((\gamma ^*,y^*,z^*)\) is a maximizer of (4.2) such that \(y^* \in \overline{ \mathscr {R}_d(S) }\). Assume there is no duality gap between (4.1) and (4.2), i.e., they have the same optimal value. If the point \(x^*\) belongs to the set X and \(f(x^*)= \langle f, w^* \rangle \), then \(x^*\) is a minimizer of (3.11). Moreover, if in addition the dual cone \(K^*\) can be expressed as in (3.10), then \(x^*\) is also a minimizer of (3.1).

Proof

Let \(f_1, f_2\) be the optimal values of the optimization problems (3.13) and (3.14), respectively. Then, by weak duality, it holds that

The membership \(y^* \in \overline{ \mathscr {R}_d(S) }\) implies that \((\gamma ^*,y^*)\) is a maximizer of (3.14), by Theorem 4.1. So \(f_2 = \gamma ^*-b^Ty^*\). By the assumption, the primal-dual pair (4.1)-(4.2) have the same optimal value, so

The constraint \(h(x^*, \xi ) \in \text{ QM }[{g}]_{2k} + Y^*\) implies that \(h(x^*, \xi ) \in \mathscr {P}_d(S) + Y^*\). Since \(x^* \in X\), we know \(x^*\) is a feasible point of (3.11). The optimal value of (3.11) is greater than or equal to that of (3.13), hence

So \(f(x^*) = f_1\). This implies that \(x^*\) is a minimizer of (3.11). Moreover, if in addition \(K^*\) can be expressed as in (3.10), the optimization (3.1) is equivalent to (3.11), by Theorem 3.1. So \(x^*\) is also a minimizer of (3.1). \(\square \)

In the above theorem, the assumptions that \(x^* \in X\) and \(f(x^*)= \langle f, w^* \rangle \) must hold if \(f,-c_1, \ldots , -c_{m_2}\) are SOS-convex polynomials. We have the following theorem.

Theorem 4.3

Assume (3.9) holds. For a relaxation order k, suppose \((x^*,w^*)\) is a minimizer of (4.1) and \((\gamma ^*,y^*,z^*)\) is a maximizer of (4.2) such that \(y^* \in \overline{ \mathscr {R}_d(S) }\). Assume there is no duality gap between (4.1) and (4.2), i.e., they have the same optimal value. If \(f,-c_1, \ldots , -c_{m_2}\) are SOS-convex polynomials, then \(x^* := \pi (w^*)\) is a minimizer of (3.11). Moreover, if in addition \(K^*\) can be expressed as in (3.10), then \(x^*\) is also a minimizer of (3.1).

Proof

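Since f and \(-c_1, \ldots , -c_{m_2}\) are SOS-convex polynomials, by Jensen’s inequality (see [26]), it holds that

$$ f(x^*) \,\le \, \langle f, w^* \rangle , \qquad c_i(x^*) \,\ge \, \langle c_i, w^* \rangle , \quad i = 1, \ldots , m_2. $$

As in the proof of Theorem 3.2, the constraint \(L_{c_i}^{(d_1)}[w^*] \succeq 0\) implies that \(\langle c_i, w^* \rangle \ge 0\), so \(c_i(x^*) \ge \langle c_i, w^* \rangle \ge 0\). So \(x^* \in X\) is a feasible point of (3.11). As in the proof of Theorem 4.2, we can show that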

so \(f(x^*)= \langle f, w^* \rangle \). The conclusions follow from Theorem 4.2. \(\square \)

4.1 An Algorithm for Solving the DROM

Based on the above discussions, we now give the algorithm for solving the optimization problem (3.13) and its dual (3.14), as well as the DROM (3.1).

Algorithm 4.4

For given \(f, h, {\mathcal {M}}, S, X, Y\) and the defining polynomial tuples g and c, do the following:

- Step 0: Get a computational representation for \(\overline{ { cone}({Y}) }\) and the dual cone \(Y^*\). Initialize

$$\begin{aligned} d_0 := \lceil \deg (g)/2\rceil , \quad t_0 := \lceil d/2 \rceil ,\quad k := \lceil d/2 \rceil , \quad \ell := t_0+1. \end{aligned}$$

Choose a generic polynomial \(R \in \varSigma [\xi ]_{2t_0+2}\).

- Step 1: Solve (4.1) for a minimizer \((x^*, w^*)\) and solve (4.2) for a maximizer \((\gamma ^*,y^*,z^*)\).

- Step 2: Solve the moment optimization

$$\begin{aligned} \left\{ \begin{array}{cl} \min \limits _{\omega } &{} \langle R, \omega \rangle \\ { s.t.}&{} \omega |_d =y^* , \, \omega \in \mathscr {S}[g]_{2\ell }, \, \omega \in {\mathbb {R}}^{ {\mathbb {N}}^p_{2\ell } }. \end{array}\right. \end{aligned}$$ (4.3)

If (4.3) is infeasible, then \(y^*\) admits no S-measure; update \(k := k+1\) and go back to Step 1. Otherwise, solve (4.3) for a minimizer \(\omega ^*\) and go to Step 3.

- Step 3: Check whether there exists an integer \(s\in [\max (d_0,t_0), \ell ]\) such that

$$\begin{aligned} \text{ rank }\, M_{s-d_0}[\omega ^*] \,= \, \text{ rank }\, M_{s}[\omega ^*]. \end{aligned}$$

If no such s exists, update \(\ell := \ell +1\) and go back to Step 2. If such an s exists, then \(y^* = \int [\xi ]_d {\texttt{d}} \mu \) for the measure

$$\begin{aligned} \mu \, = \, \theta _1 \delta _{u_1}+\cdots + \theta _r \delta _{u_r} . \end{aligned}$$

In the above, the scalars \(\theta _1,\ldots ,\theta _r>0\), \(u_1, \ldots , u_r \in S\) are distinct points, \(r =\text{ rank }\, M_{s}[\omega ^*]\), and \(\delta _{u_i}\) denotes the Dirac measure supported at \(u_i\). Up to scaling, a measure \(\mu ^*\in {\mathcal {M}}\) that achieves the worst-case expectation constraint can be recovered as a multiple of \(\mu \).
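For a univariate random variable (p = 1), the moment matrix \(M_t[\omega ]\) is the Hankel matrix \((\omega _{i+j})_{0\le i,j\le t}\), so the rank test in Step 3 can be sketched in a few lines. The Python code below is a minimal illustration under our own assumptions: it relies on NumPy's `matrix_rank` with a user-chosen tolerance `tol`, the helper names `moment_matrix` and `find_flat_order` are hypothetical, and it is not the implementation used in GloptiPoly3. The test vector holds the moments of \(0.3\delta _{0.2}+0.7\delta _{0.9}\), with \(d_0=1\) (as for a quadratic g) and \(t_0=2\) (as for d = 4).

```python
import numpy as np


def moment_matrix(w, t):
    """Univariate moment matrix M_t[w] = (w_{i+j})_{0<=i,j<=t}; needs len(w) >= 2t+1."""
    return np.array([[w[i + j] for j in range(t + 1)] for i in range(t + 1)], dtype=float)


def find_flat_order(w, d0, t0, ell, tol=1e-6):
    """Smallest s in [max(d0, t0), ell] with rank M_{s-d0}[w] == rank M_s[w],
    or None if there is none (Step 3 would then increase ell)."""
    for s in range(max(d0, t0), ell + 1):
        r_low = np.linalg.matrix_rank(moment_matrix(w, s - d0), tol=tol)
        r_high = np.linalg.matrix_rank(moment_matrix(w, s), tol=tol)
        if r_low == r_high:
            return s
    return None


ell = 3
# Moments w_0, ..., w_{2*ell} of the two-atomic measure 0.3*delta_{0.2} + 0.7*delta_{0.9}.
w = [0.3 * 0.2**j + 0.7 * 0.9**j for j in range(2 * ell + 1)]
s = find_flat_order(w, d0=1, t0=2, ell=ell)
r = np.linalg.matrix_rank(moment_matrix(w, s), tol=1e-6)
print(s, r)  # prints: 2 2
```

The rank r at the flat order equals the number of atoms, matching \(r = \text{rank}\, M_s[\omega ^*]\) in Step 3. In the multivariate case the same test applies, but \(M_t\) is indexed by all monomials of degree at most t.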

Remark 4.5

All optimization problems in Algorithm 4.4 can be solved numerically with the software GloptiPoly3 [20], YALMIP [30] and SeDuMi [48]. In Step 0, we assume \(\overline{ { cone}({Y}) }\) can be expressed by linear, second order or semidefinite cones; see Sect. 5 for more details. In Step 1, if (4.1) is unbounded from below, then (3.11) must also be unbounded from below. If (4.2) is unbounded from above, this may be because (3.14) is unbounded from above (and hence (3.11) is infeasible), or because the relaxation order k is not large enough. We refer to [38] for how to verify unboundedness of (3.14). In general, one can assume that (4.1) and (4.2) have optimizers. In Step 3, the finitely atomic measure \(\mu \) can be obtained by computing Schur decompositions and eigenvalues; we refer to [19] for the method, which is also implemented in GloptiPoly3. Note that the measure \(\mu \) associated with \(y^*\) may not belong to \({\mathcal {M}}\), because (4.2) has the conic constraint \(y\in \overline{cone(Y)}\) instead of \(y\in Y\). Once the atomic measure \(\mu \) is extracted, we can choose a scalar \(\beta >0\) such that \(\beta \mu \in {\mathcal {M}}\).
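Reference [19] extracts atoms in the multivariate case through echelon forms and Schur decompositions. For a univariate random variable there is a simpler route, which we substitute here purely as an illustration: if \(\mu =\theta _1\delta _{u_1}+\cdots +\theta _r\delta _{u_r}\) has distinct atoms, the Hankel matrices \(H_0=(w_{i+j})\) and \(H_1=(w_{i+j+1})\), \(0\le i,j<r\), satisfy \(H_0^{-1}H_1 = V^{-1}\,\mathrm{diag}(u)\,V\) with V the Vandermonde matrix of the atoms, so the atoms are the eigenvalues of \(H_0^{-1}H_1\) and the weights solve a Vandermonde system. The sketch then applies the scaling \(\beta = 1/(\theta _1+\cdots +\theta _r)\), which assumes that \({\mathcal {M}}\) consists of probability measures (for instance, when Y fixes \(y_0=1\)). The function name `extract_atoms` is hypothetical.

```python
import numpy as np


def extract_atoms(w, r):
    """Atoms u_k and weights theta_k of an r-atomic measure on R from moments w_0..w_{2r-1}.

    Univariate shortcut (not the method of [19]): the eigenvalues of H0^{-1} H1 are the atoms.
    """
    H0 = np.array([[w[i + j] for j in range(r)] for i in range(r)], dtype=float)
    H1 = np.array([[w[i + j + 1] for j in range(r)] for i in range(r)], dtype=float)
    atoms = np.sort(np.linalg.eigvals(np.linalg.solve(H0, H1)).real)
    # Vandermonde system: sum_k theta_k * atoms[k]**j = w_j for j = 0, ..., r-1.
    V = np.vander(atoms, r, increasing=True).T
    weights = np.linalg.solve(V, np.asarray(w[:r], dtype=float))
    return atoms, weights


# Moments of the unnormalized measure 0.6*delta_{0.25} + 1.4*delta_{0.75} (total mass 2).
w = [0.6 * 0.25**j + 1.4 * 0.75**j for j in range(4)]
atoms, theta = extract_atoms(w, r=2)
beta = 1.0 / theta.sum()  # scale to a probability measure
print(atoms, beta * theta)
```

In practice r would be taken from the rank test of Step 3, and the moments from the flat truncation of \(\omega ^*\).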

4.2 Convergence of Algorithm 4.4

In this subsection, we prove the convergence of Algorithm 4.4. The main results here are based on the work [35, 38].

First, we consider the relatively simple but still interesting case that \(\xi \) is a univariate random variable (i.e., \(p=1\)) and the support set \(S=[a_1, a_2]\) is an interval. For this case, Algorithm 4.4 must terminate in the initial loop \(k := \lceil d/2 \rceil \) with \(y^* \in \mathscr {R}_d(S)\), if \((\gamma ^*, y^*, z^*)\) is a maximizer of (4.2).

Theorem 4.6

Suppose the random variable \(\xi \) is univariate and the set \(S=[a_1,a_2]\), for scalars \(a_1 < a_2\), is an interval with the constraint \(g(\xi ) := (\xi -a_1)(a_2-\xi ) \ge 0\). If \((\gamma ^*, y^*, z^*)\) is a maximizer of (4.2) for \(k = \lceil d/2 \rceil \), then we must have \(z^* \in \mathscr {R}_{2k}(S)\) and hence \(y^* \in \mathscr {R}_d(S)\).

Proof

In the relaxation (4.2), the tms z has the even degree 2k. We label the entries of z as \(z = (z_0, z_1, \ldots , z_{2k} ).\) The condition \(z \in \mathscr {S}[g]_{2k}\) implies that

Since \(g = (\xi -a_1)(a_2-\xi )\), one can verify that \(L_{g}^{(k)}[z] \succeq 0\) is equivalent to

As shown in [9, 23], the conditions in (4.4) are necessary and sufficient for \(z \in \mathscr {R}_{2k}(S)\). So, if \((\gamma ^*, y^*, z^*)\) is a maximizer of (4.2), then \(M_k[z^*]\succeq 0\) and \(L_{g}^{(k)}[z^*] \succeq 0\). Therefore \(z^* \in \mathscr {R}_{2k}(S)\), and consequently \(y^* = z^*|_d \in \mathscr {R}_d(S)\). \(\square \)
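For an interval \(S=[a_1,a_2]\), the two matrix conditions \(M_k[z]\succeq 0\) and \(L_{g}^{(k)}[z]\succeq 0\) used in the proof are Hankel-type and easy to form explicitly. Since \(g(\xi )=-\xi ^2+(a_1+a_2)\xi -a_1a_2\), the localizing matrix has entries \(-z_{i+j+2}+(a_1+a_2)z_{i+j+1}-a_1a_2z_{i+j}\) for \(0\le i,j\le k-1\). The Python sketch below checks both conditions numerically through eigenvalues; the helper names and the tolerance `tol` are our own choices, and this is a floating-point test rather than a certificate.

```python
import numpy as np


def hankel(z, size, shift=0):
    """Matrix (z_{i+j+shift})_{0<=i,j<size}."""
    return np.array([[z[i + j + shift] for j in range(size)] for i in range(size)], dtype=float)


def in_interval_moment_cone(z, a1, a2, tol=1e-9):
    """Numerically test M_k[z] >= 0 and L_g^{(k)}[z] >= 0 for g = (xi - a1)(a2 - xi),
    where z = (z_0, ..., z_{2k})."""
    k = (len(z) - 1) // 2
    M = hankel(z, k + 1)
    L = -hankel(z, k, 2) + (a1 + a2) * hankel(z, k, 1) - a1 * a2 * hankel(z, k)
    return bool(np.linalg.eigvalsh(M).min() >= -tol and np.linalg.eigvalsh(L).min() >= -tol)


# Moments up to degree 4 (k = 2) of 0.5*delta_{0.3} + 0.5*delta_{0.8}, supported in [0, 1].
z_in = [0.5 * 0.3**j + 0.5 * 0.8**j for j in range(5)]
# Moments of delta_{1.5}: a genuine measure, but supported outside [0, 1].
z_out = [1.5**j for j in range(5)]
print(in_interval_moment_cone(z_in, 0.0, 1.0), in_interval_moment_cone(z_out, 0.0, 1.0))  # prints: True False
```

For z_out the moment matrix is positive semidefinite, but the localizing matrix equals \(g(1.5)\,vv^T\) with \(g(1.5)<0\), which is exactly what the second condition detects.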

Second, we prove the asymptotic convergence of Algorithm 4.4 when the random variable \(\xi \) is multivariate. This requires that the quadratic module \(\text{ QM }[{g}]\) is archimedean and that (3.13) has interior points.

Theorem 4.7

Assume that \(\text{ QM }[{g}]\) is archimedean and there exists a point \(\hat{x} \in X\) such that \(h(\hat{x}, \xi ) = a_1(\xi ) + a_2(\xi )\) with \(a_1 > 0\) on S and \(a_2 \in Y^*\). Suppose \((\gamma ^{(k)}, y^{(k)},z^{(k)})\) is an optimal triple of (4.2) when its relaxation order is k. Then, the sequence \(\{ y^{(k)} \}_{k=1}^\infty \) is bounded and every accumulation point of \(\{ y^{(k)} \}_{k=1}^\infty \) belongs to the cone \(\mathscr {R}_d(S)\). Therefore, every accumulation point of \(\{ (\gamma ^{(k)}, y^{(k)}) \}_{k=1}^\infty \) is a maximizer of (3.14).

Proof

For every \((\gamma , y, z)\) that is feasible for (4.2) and for \(\hat{w} := [\hat{x}]_{2d_1}\), it holds that

There exists \(\epsilon >0\) such that \(a_1(\xi ) - \epsilon \in \text{ QM }[{g}]_{2k_0}\), for some \(k_0 \in {\mathbb {N}}\), since \(\text{ QM }[{g}]\) is archimedean. Noting \(a_2 \in Y^*\), one can see that

For all \(k \ge k_0\), it holds that

(Note \(\langle 1, y \rangle = y_0\).) Let \(f_2\) be the optimal value of (3.14), then

because \((\gamma ^{(k)}, y^{(k)},z^{(k)})\) is an optimizer of (4.2), and (4.2) is a relaxation of the maximization (3.14). So (4.5) implies that

Hence, we can get that

The sequence \(\big \{ (y^{(k)})_0 \big \}_{k=1}^\infty \) is bounded.

Since \(\text{ QM }[{g}]\) is archimedean, there exists \(N>0\) such that \(N - \Vert \xi \Vert ^2 \in \text{ QM }[{g}]_{2k_1}\) for some \(k_1 \ge k_0\). For all \(k \ge k_1\), the membership \(z^{(k)} \in \mathscr {S}[g]_{2k}\) implies that

Note that \(y^{(k)}=z^{(k)}|_d\), hence \((y^{(k)})_0 = (z^{(k)})_0\). Since \(z^{(k)} \in \mathscr {S}[g]_{2k}\) and the sequence \(\big \{ (z^{(k)})_0 \big \}_{k=1}^\infty \) is bounded, one can further show that the set

is bounded. We refer to [38, Theorem 4.3] for more details about the proof. Therefore, the sequence \(\{ y^{(k)} \}_{k=1}^\infty \) is bounded. Since \(\text{ QM }[{g}]\) is archimedean, we also have

This is shown in Proposition 3.3 of [38]. So, if \(\hat{y}\) is an accumulation point of \(\{ y^{(k)} \}_{k=1}^\infty \), then we must have \(\hat{y} \in \mathscr {R}_d(S)\). Similarly, if \((\hat{\gamma }, \hat{y}, \hat{z})\) is an accumulation point of \(\{ (\gamma ^{(k)}, y^{(k)},z^{(k)}) \}_{k=1}^\infty \), then \(\hat{y} \in \mathscr {R}_d(S)\). As in the proof of Theorem 4.1, one can similarly show that \((\hat{\gamma }, \hat{y})\) is a maximizer of (3.14). \(\square \)

Last, we prove that Algorithm 4.4 terminates within finitely many steps under certain assumptions. As in Theorem 4.7, we assume that \(\text{ QM }[{g}]\) is archimedean. When \(\text{ QM }[{g}]\) is not archimedean but the set \(S = \{\xi \in {\mathbb {R}}^p: g(\xi )\ge 0\}\) is bounded, we can replace g by \(\tilde{g} = (g, N-\Vert \xi \Vert ^2)\), where N is such that \(S \subseteq \{ \Vert \xi \Vert ^2 \le N\}\). Then \(\text{ QM }[{\tilde{g}}]\) is archimedean. Moreover, we need to assume strong duality between (3.13) and (3.14), which is guaranteed under Slater’s condition for (3.14). These assumptions typically hold in polynomial optimization.

Theorem 4.8

Assume \(\text{ QM }[{g}]\) is archimedean and there is no duality gap between (3.13) and (3.14). Suppose \((x^*, w^*)\) is a minimizer of (3.13) and \((\gamma ^*, y^*)\) is a maximizer of (3.14) satisfying:

- (i) There exists \(k_1\in {\mathbb {N}}\) such that \(h(x^*, \xi ) = h_1(\xi ) + h_2(\xi )\), with \(h_1\in \text{ QM }[{g}]_{2k_1}\) and \(h_2 \in Y^*\).

- (ii) The polynomial optimization problem in \(\xi \)

$$\begin{aligned} \left\{ \begin{array}{cl} \min \limits _{\xi \in {\mathbb {R}}^p} &{} h_1(\xi ) \\ { s.t.}&{} g_1(\xi ) \ge 0, \ldots , g_{m_1}(\xi ) \ge 0 \end{array} \right. \end{aligned}$$ (4.6)

has finitely many critical points u such that \(h_1(u) = 0\).

Then, when k is large enough, for every optimizer \((\gamma ^{(k)}, y^{(k)}, z^{(k)})\) of (4.2), we must have \(y^{(k)} \in \mathscr {R}_d(S)\).

Proof

Since there is no duality gap between (3.13) and (3.14),

Due to the feasibility constraints, we further have

Therefore, it holds that

The conic membership \(y^* \in \overline{K}\) implies that

We consider the polynomial optimization problem (4.6) in the variable \(\xi \). For each order \(k \ge k_1\), the kth order Moment-SOS relaxation pair for solving (4.6) is

The archimedeanness of \(\text{ QM }[{g}]\) implies that S is compact, so

The membership \(y^* \in \overline{K}\) implies that \(y^* \in \mathscr {R}_d(S)\). Since

the polynomial \(h_1(\xi )\) vanishes on the support of each S-representing measure for \(y^*\), so the optimal value of (4.6) is zero. By the given assumption, the sequence \(\{\nu _k\}\) has finite convergence to the optimal value 0 and the relaxation (4.8) achieves its optimal value for all \(k \ge k_1\). The optimization (4.6) has only finitely many critical points that are global optimizers. So, Assumption 2.1 of [34] for the optimization (4.6) is satisfied. Moreover, the given assumption also implies that \((x^*, w^*)\) is an optimizer of (4.1) and \((\gamma ^*, y^*, z^*)\) is an optimizer of (4.2) for all \(k \ge k_1\). Suppose \((x^{(k)}, w^{(k)})\) is an arbitrary optimizer of (4.1) and \((\gamma ^{(k)}, y^{(k)}, z^{(k)})\) is an arbitrary optimizer of (4.2), for the relaxation order k.

When \((z^{(k)})_{0}=0\), we have \(vec(1)^T M_k [z^{(k)}] vec(1) =0\). Since \(M_k[z^{(k)}] \succeq 0\),

Consequently, we further have \(M_k[z^{(k)}] vec(\xi ^\alpha )=0\) for all \(|\alpha | \le k-1\) (see Lemma 5.7 of [29]). Then, for each power \(\alpha = \beta + \eta \) with \(|\beta |,|\eta | \le k-1\), one can get

This means that \(z^{(k)}|_{2k-2}\) is the zero vector and hence \(y^{(k)} \in \mathscr {R}_d(S)\).

For the case \((z^{(k)})_{0}>0\), let \(\hat{z} := z^{(k)}/(z^{(k)})_0\). The given assumption implies that \((x^*, w^*)\) is also a minimizer of (4.1) and \((\gamma ^*, y^*,z^*)\) is optimal for (4.2), for all \(k \ge k_1\). So there is no duality gap between (4.1) and (4.2). Since \((\gamma ^{(k)}, y^{(k)}, z^{(k)})\) is optimal for (4.2), we have \(\langle h_1(\xi ), z^{(k)} \rangle = 0\), and hence \(\hat{z}\) is a minimizer of (4.7) for all \(k\ge k_1\). By Theorem 2.2 of [35], the minimizer \(z^{(k)}\) must have a flat truncation \(z^{(k)}|_{2t}\) for some t, when k is sufficiently large. This means that the truncation \(z^{(k)}|_{2t}\), as well as \(y^{(k)}\), has a representing measure supported in S. Therefore, we have \(y^{(k)} \in \mathscr {R}_d(S)\). \(\square \)

The conclusion of Theorem 4.8 is guaranteed to hold under conditions (i) and (ii), which depend on the constraints g and the set Y. These two conditions are not convenient to verify computationally. However, when Algorithm 4.4 is applied in practice, there is no need to verify them: the correctness of its computational results does not depend on conditions (i) and (ii). In other words, conditions (i) and (ii) are sufficient for Algorithm 4.4 to have finite convergence, but they may not be necessary; finite convergence may occur even if some of them fail. In our numerical experiments, finite convergence was observed in every case. We also remark that conditions (i) and (ii) generally hold, which is a main topic of the work [36]. In particular, when \(h_1\) has generic coefficients, the optimization (4.6) has finitely many critical points, so condition (ii) holds; this is shown in [34].

5 Numerical Experiments

In this section, we present numerical experiments using Algorithm 4.4 to solve distributionally robust optimization problems. The computation is implemented in MATLAB R2018a on a laptop with an 8th Generation Intel\(\circledR \) Core\(^{\textrm{TM}}\) i5-8250U CPU and 16 GB of RAM. The software GloptiPoly3 [20], YALMIP [30] and SeDuMi [48] are used for the implementation. For neatness of presentation, we only display four decimal digits.

To implement Algorithm 4.4, we need a computational representation for the cone \(\overline{cone(Y)}\). For a given set Y, it may be mathematically hard to get a computationally efficient description of the closure of its conic hull. However, in most applications, the set Y is convex and there usually exist convenient representations for \(\overline{cone(Y)}\). For instance, \(\overline{cone(Y)}\) is often a polyhedral, second order, or semidefinite cone, or a Cartesian product of them. The following are some frequently appearing cases.

- If \(Y=\{y : T y + u \ge 0\}\) is a nonempty polyhedron, given by some matrix T and vector u, then

$$\begin{aligned} \overline{ cone(Y)} \, = \, \{y: Ty+s u \ge 0,\, s \in {\mathbb {R}}_+ \}. \end{aligned}$$ (5.1)

It is also a polyhedron and is closed.

- Suppose that \(Y =\{y : {\mathcal {A}}(y) + B \succeq 0 \}\) is given by a linear matrix inequality, for a homogeneous linear symmetric-matrix-valued function \({\mathcal {A}}\) and a symmetric matrix B. If Y is nonempty and bounded, then

$$\begin{aligned} \overline{ cone(Y) } \, = \, \left\{ y : {\mathcal {A}}(y) + s B \succeq 0,\, s \in {\mathbb {R}}_+ \right\} . \end{aligned}$$ (5.2)

When Y is unbounded, \({ cone}({Y})\) may not be closed and its closure \(\overline{ { cone}({Y}) }\) may be difficult to describe. We refer to the work [33] for such cases. When Y is given by second order conic conditions, \(\overline{ { cone}({Y}) }\) can be obtained in a similar way.
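For the polyhedral case (5.1), membership in \(\overline{cone(Y)}\) reduces to a one-dimensional feasibility question in the scalar s: each row of \(Ty+su\ge 0\) gives a lower bound on s, an upper bound on s, or (when \(u_i=0\)) a sign condition on \((Ty)_i\). A minimal Python sketch follows; the function name is hypothetical, and the set \(Y=\{y\in {\mathbb {R}}^2: y_0=1,\ 0.2\le y_1\le 0.5\}\) is an illustrative box-type moment set chosen by us, not one taken from the examples.

```python
import numpy as np


def in_closed_cone_of_polyhedron(T, u, y, tol=1e-12):
    """Test y in cl cone(Y) for Y = {y : T y + u >= 0}, using (5.1):
    y belongs to it iff some scalar s >= 0 satisfies T y + s u >= 0 row by row."""
    Ty = np.asarray(T, dtype=float) @ np.asarray(y, dtype=float)
    lo, hi = 0.0, np.inf  # current feasible interval for s
    for a, b in zip(Ty, np.asarray(u, dtype=float)):
        if b > 0:
            lo = max(lo, -a / b)  # a + s*b >= 0  <=>  s >= -a/b
        elif b < 0:
            hi = min(hi, -a / b)  # a + s*b >= 0  <=>  s <= -a/b  (dividing by b < 0)
        elif a < -tol:
            return False  # a row with u_i = 0 requires (T y)_i >= 0
    return bool(lo <= hi + tol)


# Y = {y : y_0 = 1, 0.2 <= y_1 <= 0.5} written as T y + u >= 0.
T = [[1, 0], [-1, 0], [0, 1], [0, -1]]
u = [-1, 1, -0.2, 0.5]
print(in_closed_cone_of_polyhedron(T, u, [2.0, 0.6]),   # 2 * (1, 0.3): inside
      in_closed_cone_of_polyhedron(T, u, [2.0, 1.2]))   # 2 * (1, 0.6): outside
```

The origin is always a member (take s = 0), consistent with \(\overline{cone(Y)}\) being a closed cone. The semidefinite case (5.2) would instead require a search over s subject to an LMI, which is usually left to an SDP solver.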

Example 5.1

Consider the DROM problem

where (the random variable \(\xi \) is univariate, i.e., \(p=1\))

The \(\overline{cone(Y)}\) is given as in (5.1). The objective f and constraints \(c_1,c_2\) are all linear. We start with \(k=3\), and Algorithm 4.4 terminates in the initial loop. The optimal value \(F^*\) and the optimizer \(x^*\) for (3.11) are respectively

The optimizer for (4.2) is

The measure \(\mu \) for achieving \(y^* = \int [\xi ]_5 {\texttt{d}} \mu \) is supported at the points

By a proper scaling, we get the measure \(\mu ^* = 0.9957 \delta _{u_1} + 0.0043 \delta _{u_2}\) that achieves the worst case expectation constraint.

Example 5.2

Consider the DROM problem

where (the random variable \(\xi \) is bivariate, i.e., \(p=2\))

The \(\overline{cone(Y)}\) is given as in (5.2). One can verify that f and all \(-c_i\) are SOS-convex. We start with \(k=2\), and Algorithm 4.4 terminates in the initial loop. The optimal value \(F^*\) and optimizer \(x^*\) of (3.11) are respectively

The optimizer for (4.2) is

The measure \(\mu \) for achieving \(y^* = \int [\xi ]_4 {\texttt{d}} \mu \) is supported at the points

By a proper scaling, we get the measure \(\mu ^* = 0.2527 \delta _{u_1}+ 0.7473 \delta _{u_2}\) that achieves the worst case expectation constraint.

Example 5.3

Consider the DROM problem

where (the random variable \(\xi \) is bivariate, i.e., \(p=2\))

In the above, \(\overline{cone(Y)}\) is given as in (5.1). The objective f and \(-c_1\) are not convex. We start with \(k=2\), while the algorithm terminates at \(k=3\). In the last loop, the optimizers for (4.1) and (4.2) are

The optimal value is \(F^* \approx -7.0017\) for both of them. The measure for achieving \(y^* = \int [\xi ]_4 {\texttt{d}} \mu \) is supported at the points

By a proper scaling, we get the measure \(\mu ^* = 0.0877\delta _{u_1}+0.9123\delta _{u_2}\) that achieves the worst case expectation constraint. The point

is feasible for (5.5) as \(c_1(x^*)\approx 2.1822\) and \(c_2(x^*)\approx 3.9919\cdot 10^{-8}\). Moreover, \(F^*-f(x^*)\approx 1.2204\cdot 10^{-7}.\) By Theorem 4.2, we know \(F^*\) is the optimal value and \(x^*\) is an optimizer for (5.5).

Example 5.4

Consider the DROM problem

where (the random variable \(\xi \) is bivariate, i.e., \(p=2\))

The set Y is not convex. Its convex hull is \(\Vert y \Vert \le \sqrt{37}\) with \(y_{00} = 1\). Hence,

The functions f and \(-c_1,-c_2\) are not convex. We begin with \(k=2\). The optimizers for (4.1) and (4.2) are respectively

The optimal value is \(F^* \approx -12.6420\) for both of them. The measure for achieving \(y^* = \int [\xi ]_4 {\texttt{d}} \mu \) is \(\mu = 1.2272 \delta _{ u }\), with \(u \approx (0.2438,-0.9698)\in S\). So \(\mu ^*=\delta _u\). For the point

one can verify that \(x^*\) is feasible for (5.6), since

By Theorem 4.2, we know \(x^*\) is the optimizer for (5.6).

Example 5.5

(Portfolio selection [11, 22]) Suppose there are n risky assets that an investor can choose from in the financial market. The uncertain loss \(r_i\) of each asset depends on the random risk variable \(\xi \), which admits a probability measure supported in \(S=[0,1]^p\). Assume the moments of \(\mu \in {\mathcal {M}}\) are constrained in the set

The cone \(\overline{cone(Y)}\) can be given as in (5.1). Minimizing the portfolio loss over the ambiguity set \({\mathcal {M}}\) is equivalent to solving the following min-max optimization problem

for the simplex \(\varDelta _n := \left\{ x\in {\mathbb {R}}^n\left| e^Tx=1,\,x\ge 0\right. \right\} \). The functions \(r_i(\xi )\) are

Then (5.7) can be equivalently reformulated as

Applying Algorithm 4.4 to solve (5.9), we get the optimal value \(F^*\) and the optimizer \((x_0^*,x^*)\) in the initial loop \(k=2\):

The optimizer for (4.2) is

The measure for achieving \(y^*= \int [\xi ]_4 {\texttt{d}} \mu \) is

with the following two points in S:

Since \(\mu \) belongs to \({\mathcal {M}}\), it is also the measure that achieves the worst case expectation constraint. Therefore, the optimizer for (5.7) is \(x^*\) and the optimal value is \(-1.0136\).

Example 5.6

(Newsvendor problem [50]) Consider a newsvendor who trades a product with an uncertain daily demand. Assume the demand quantity \(D(\xi )\) is affected by a random variable \(\xi \in {\mathbb {R}}^2\) such that

Each day, the newsvendor orders x units of the product at the wholesale price \(P_1\), sells the quantity \(\min \{x,D(\xi )\}\) at the retail price \(P_2\) and clears the unsold stock at the salvage price \(P_0\). Assuming \(P_0<P_1<P_2\), the newsvendor’s daily loss is given as

Intuitively, the newsvendor earns the most by ordering the largest quantity that is guaranteed to sell out. Suppose \(\xi \) admits a probability measure supported in S whose true distribution is contained in the ambiguity set \({\mathcal {M}}\). Then the best order decision can be obtained from the following DROM problem

Suppose \(P_0 = 0.25, P_1 = 0.5, P_2=1\), and

The cone \(\overline{cone(Y)}\) can be given as in (5.1). Applying Algorithm 4.4 to solve (5.10), we get the optimal value \(F^*\) and the optimizer \(x^*\), respectively,

The optimizer of (4.2) is

The measure for achieving \(y^* = \int [\xi ]_4 {\texttt{d}} \mu \) is \(\mu = 0.5 \delta _{ u }\) with

So \(\mu ^*=\delta _u\) achieves the worst case expectation constraint.
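The daily loss in Example 5.6, as we read its verbal description (purchase cost \(P_1x\), minus revenue \(P_2\min \{x,D(\xi )\}\), minus salvage value \(P_0\max \{x-D(\xi ),0\}\)), can be evaluated with the short Python sketch below. The demand model \(D(\xi )\) is not reproduced in this text, so the sketch takes the realized demand as an input; the function name is hypothetical, and the default prices are those of the example. For a fixed demand, the loss is piecewise linear in x and smallest at x = D, consistent with the intuition that the best order is the largest quantity that sells out.

```python
def newsvendor_loss(x, demand, p0=0.25, p1=0.5, p2=1.0):
    """Daily loss: buy x units at p1, sell min(x, demand) at p2, salvage the rest at p0."""
    sold = min(x, demand)
    unsold = max(x - demand, 0.0)
    return p1 * x - p2 * sold - p0 * unsold


demand = 3.0
losses = {x: newsvendor_loss(x, demand) for x in range(7)}
best = min(losses, key=losses.get)
print(best, losses[best])  # prints: 3 -1.5
```

With uncertain demand, the DROM replaces this single-scenario minimization by the worst-case expectation over \({\mathcal {M}}\).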

We remark that the ambiguity set \({\mathcal {M}}\) can be constructed from samples or historical data, and it can be updated as the sample size increases. Assume the support set S is given and each \(\mu \in {\mathcal {M}}\) is a probability measure. The moment ambiguity set Y can then be estimated by statistical sampling. Suppose \(T = \{\xi ^{(1)},\ldots , \xi ^{(N)}\}\) is a given sample set for \(\xi \). One can randomly choose \(T_1,\ldots ,T_s\subseteq T\) such that each \(T_i\) contains \(\lceil N/2\rceil \) samples, where the number s of subsamples is small, say, \(s = 5\). For a given degree d, choose the moment vectors \(l,\, u\in {\mathbb {R}}^{{\mathbb {N}}_d^n}\) such that

for every power \(\alpha \in {\mathbb {N}}_d^n\). The moment constraining set Y, e.g., as in Example 5.5, can be estimated as

Other types of moment constraining set Y can be estimated similarly. Suppose each \(\xi ^{(i)}\) independently follows the distribution of \(\xi \). As the sample size N increases, the moment ambiguity set \({\mathcal {M}}\) with Y in (5.11) is expected to give a better approximation of the true distribution of \(\xi \). This is indicated by the Law of Large Numbers and the convergence results of sample average approximations. The following is an example for how to do this.

Example 5.7

Consider the portfolio selection optimization problem as in Example 5.5. The DROM is (5.7), or equivalently (5.9). Assume each \(r_i(\xi )\) is given as in (5.8). Suppose \(\xi = (\xi _1,\xi _2,\xi _3)\) is the random variable, where each \(\xi _i\) is independently distributed. Assume \(\xi _1\) follows the uniform distribution on [0, 1], \(\xi _2\) follows the truncated standard normal distribution on [0, 1] and \(\xi _3\) follows the truncated exponential distribution with the mean value 0.5 on [0, 1]. We use the MATLAB commands makedist and truncate to generate samples of \(\xi \) with the sample size \(N\in \{50,100,200\}\), and then construct Y as in (5.11) with \(s = 5\) and \(d = n = 3\).

- (i) When \(N = 50\), we get that

$$\begin{aligned} l= & {} (1.0000,0.4354,0.3779,0.3873,0.2757,0.1916,0.1872, \\{} & {} 0.1975,0.1549,0.2018, 0.2027,0.1299,0.1161,0.1111, \\{} & {} 0.0848,0.1025,0.1193,0.0801,0.0866,0.1207), \\ u= & {} (1.0000,0.5803,0.4606,0.4808,0.3938, 0.2696,0.2579, \\{} & {} 0.2838,0.2109,0.3293,0.2913,0.1870,0.1821,0.1662, \\{} & {} 0.1091,0.1793, 0.2027,0.1235,0.1361,0.2560). \end{aligned}$$

- (ii) For \(N = 100\), we get that

$$\begin{aligned} l= & {} (1.0000,0.4935,0.3799,0.4135,0.3150,0.1828,0.2065, \\{} & {} 0.1975,0.1745,0.2459,0.2261,0.1061,0.1268,0.0924, \\{} & {} 0.0837,0.1280,0.1195,0.0926,0.1102,0.1709),\\ u= & {} (1.0000,0.5882,0.4545,0.5182,0.4156,0.2529,0.2838, \\{} & {} 0.2833,0.2294,0.3545,0.3178,0.1768,0.1941,0.1565, \\{} & {} 0.1242,0.1844, 0.2035,0.1451,0.1570,0.2716). \end{aligned}$$

- (iii) For \(N = 200\), we get that

$$\begin{aligned} l= & {} (1.0000,0.4803,0.4177,0.4157,0.3170, 0.1957,0.2253, \\{} & {} 0.2508,0.1784,0.2580,0.2310,0.1274,0.1459,0.1170, \\{} & {} 0.0875,0.1348,0.1719,0.0998,0.1048,0.1886), \\ u= & {} (1.0000,0.5647,0.4698,0.5137,0.3939, 0.2712,0.2738, \\{} & {} 0.2883,0.2250,0.3387,0.3097,0.1904,0.1950,0.1662, \\{} & {} 0.1300, 0.1889,0.2062,0.1396,0.1510,0.2470). \end{aligned}$$

Applying Algorithm 4.4, we get the optimal value \(F^*\) and the optimizer \((x_0^*,x^*)\) in the initial loop \(k=2\) for each case. The computational results are given in Table . Since \(x_0^*=F^*\) and \(y^*\) admits a measure \(\mu =\theta _1\delta _{u_1}+\theta _2\delta _{u_2}+\theta _3\delta _{u_3}\), we only list \(F^*\), \(\theta = (\theta _1,\theta _2,\theta _3)\) and \(u_1,u_2,u_3\) for convenience. As the sample size increases, the optimal value \(F^*\) improves. This suggests that the ambiguity set can be estimated by sample averages, with accuracy increasing as the sample size grows.
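The subsampling construction of l and u used in Example 5.7 can be sketched in Python as follows. The defining inequalities for l and u and the set (5.11) are not reproduced in this text, so the sketch makes an explicit assumption: \(l_\alpha \) and \(u_\alpha \) are taken as the smallest and largest of the s subsample averages of \(\xi ^\alpha \) over \(T_1,\ldots ,T_s\). It uses NumPy in place of the MATLAB commands makedist and truncate, draws the truncated normal component by rejection and the truncated exponential component (mean 0.5 before truncation, our reading of the example) by inverting its CDF, and orders monomials by total degree. All function names are hypothetical, and the random numbers will not reproduce the values listed above.

```python
import itertools
import math

import numpy as np


def monomial_exponents(p, d):
    """All alpha in N^p with |alpha| <= d, graded by total degree."""
    return [a for deg in range(d + 1)
            for a in itertools.product(range(deg + 1), repeat=p) if sum(a) == deg]


def sample_xi(N, rng):
    """N samples of xi = (xi_1, xi_2, xi_3) with independent components on [0, 1]."""
    x1 = rng.uniform(0.0, 1.0, N)                       # uniform on [0, 1]
    x2 = np.empty(0)
    while x2.size < N:                                  # standard normal truncated to [0, 1]
        z = rng.standard_normal(4 * N)
        x2 = np.concatenate([x2, z[(z >= 0.0) & (z <= 1.0)]])
    # exponential with mean 0.5, truncated to [0, 1], sampled by inverting its CDF
    x3 = -0.5 * np.log1p(-rng.uniform(0.0, 1.0, N) * (1.0 - np.exp(-2.0)))
    return np.column_stack([x1, x2[:N], x3])


def moment_bounds(samples, d, s=5, rng=None):
    """Assumed rule: l_alpha / u_alpha = min / max over s random subsets T_i
    (each of size ceil(N/2)) of the subsample mean of xi^alpha."""
    rng = np.random.default_rng(rng)
    N, p = samples.shape
    exps = np.array(monomial_exponents(p, d))                        # (m, p)
    mono = np.prod(samples[:, None, :] ** exps[None, :, :], axis=2)  # (N, m) values of xi^alpha
    means = np.array([mono[rng.choice(N, size=math.ceil(N / 2), replace=False)].mean(axis=0)
                      for _ in range(s)])
    return exps, means.min(axis=0), means.max(axis=0)


rng = np.random.default_rng(0)
xi = sample_xi(100, rng)
exps, l, u = moment_bounds(xi, d=3, s=5, rng=rng)
print(len(exps), l[0], u[0])  # 20 monomials; the degree-0 bounds both equal 1
```

With p = d = 3 there are 20 monomials of degree at most 3, matching the length of the vectors l and u listed above, and the first entries equal 1 because every subsample average of \(\xi ^0\) is 1.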

6 Conclusions and Discussions

This paper studies distributionally robust optimization when the ambiguity set is given by moment constraints. The DROM has a deterministic objective, some constraints on the decision variable and a worst case expectation constraint. The distributionally robust min-max optimization is a special case of DROM. The objective and constraints are assumed to be polynomial functions in the decision variable. Under the SOS-convexity assumption, we show that the DROM is equivalent to a linear conic optimization problem with moment constraints, as well as the psd polynomial conic condition. The Moment-SOS relaxation method (i.e., Algorithm 4.4) is proposed to solve the linear conic optimization. The method can deal with moments of any order. Moreover, it not only returns the optimal value and optimizers for the original DROM, but also gives the measure that achieves the worst case expectation constraint. Under some general assumptions (e.g., the archimedeanness), we proved the asymptotic and finite convergence of the proposed method (see Theorems 4.6, 4.7 and 4.8). Numerical examples, as well as some applications, are given to show how it solves DROM problems.

Distributionally robust optimization is attracting broad interest in various applications, and much future work remains. In this paper, we assumed the random function \(h(x, \xi )\) is linear in the decision variable x. How can we solve the DROM if \(h(x, \xi )\) is not linear in x? To prove the DROM (3.1) is equivalent to the linear conic optimization (3.13), we assumed the objective and constraints are SOS-convex. When they are not SOS-convex, how can we get an equivalent linear conic optimization for (3.1)? These are important questions for future work.

Data Availability

The paper does not analyse or generate any datasets, because the work proceeds within a theoretical and mathematical approach.

References

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization, vol. 28. Princeton University Press, New Jersey (2009)

Ben-Tal, A., Den Hertog, D., et al.: Robust solutions of optimization problems affected by uncertain probabilities. Manag. Sci. 59(2), 341–357 (2013)

Ben-Tal, A., Nemirovski, A.: Lectures on modern convex optimization. SIAM, Philadelphia, PA (2012)

Bertsimas, D., Brown, D., Caramanis, C.: Theory and applications of robust optimization. SIAM Rev. 53(3), 464–501 (2011)

Bertsimas, D., Sim, M., Zhang, M.: Adaptive distributionally robust optimization. Manag. Sci. 65(2), 604–618 (2019)

Birge, J., Louveaux, F.: Introduction to stochastic programming. Springer, New York (2011)

Chen, Y., Sun, H., Xu, H.: Decomposition and discrete approximation methods for solving two-stage distributionally robust optimization problems. Comput. Optim. Appl. 78(1), 205–238 (2021)

Chen, Z., Sim, M., Xu, H.: Distributionally robust optimization with infinitely constrained ambiguity sets. Oper. Res. 67(5), 1328–1344 (2019)

Curto, R., Fialkow, L.: Recursiveness, positivity, and truncated moment problems. Houston J. Math. 17, 603–635 (1991)

Curto, R., Fialkow, L.: Truncated \(K\)-moment problems in several variables. J. Oper. Theory 54, 189–226 (2005)

Delage, E., Ye, Y.: Distributionally robust optimization under moment uncertainty with application to data-driven problems. Oper. Res. 58(3), 595–612 (2010)

Duchi, J., Namkoong, H.: Learning models with uniform performance via distributionally robust optimization. Preprint (2018). arXiv:1810.08750

Esfahani, P., Kuhn, D.: Data-driven distributionally robust optimization using the Wasserstein metric: performance guarantees and tractable reformulations. Math. Program. 171(1–2), 115–166 (2018)

Ghadimi, S., Lan, G.: Stochastic first-and zeroth-order methods for nonconvex stochastic programming. SIAM J. Optim. 23(4), 2341–2368 (2013)

Guo, B., Nie, J., Yang, Z.: Learning diagonal gaussian mixture models and incomplete tensor decompositions. Vietnam J. Math. 50(2), 421–446 (2022)

Gürbüzbalaban, M., Ruszczyński, A., Zhu, L.: A stochastic subgradient method for distributionally robust non-convex learning. Preprint (2020). arXiv:2006.04873

Hanasusanto, G., Roitch, V., et al.: A distributionally robust perspective on uncertainty quantification and chance constrained programming. Math. Program. 151(1), 35–62 (2015)

Helton, J.W., Nie, J.: Semidefinite representation of convex sets. Math. Program. 122(1), 21–64 (2010)

Henrion, D., Lasserre, J.: Detecting global optimality and extracting solutions in GloptiPoly. In: Positive Polynomials in Control, pp. 293–310. Springer, Berlin, Heidelberg (2005)

Henrion, D., Lasserre, J., Loefberg, J.: GloptiPoly3: moments, optimization and semidefinite programming. Optim. Methods Softw. 24(4–5), 761–779 (2009)

Henrion, D., Korda, M., Lasserre, J.: The moment-SOS hierarchy. World Scientific, Singapore (2020)

de Klerk, E., Kuhn, D., Postek, K.: Distributionally robust optimization with polynomial densities: theory, models and algorithms. Math. Program. 181, 265–296 (2020)

Krein, M., Louvish, D.: The Markov moment problem and extremal problems. American Mathematical Society, Washington, D.C (1977)

Lan, G.: First-order and stochastic optimization methods for machine learning. Springer, New York (2020)

Lasserre, J.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11, 796–817 (2001)

Lasserre, J.: Convexity in semi-algebraic geometry and polynomial optimization. SIAM J. Optim. 19, 1995–2014 (2009)

Lasserre, J.: Moment, Positive Polynomials and Their Applications. Imperial College Press, London (2009)

Lasserre, J., Weisser, T.: Distributionally robust polynomial chance-constraints under mixture ambiguity sets. Math. Program. 185(1), 409–453 (2021)

Laurent, M.: Sums of squares, moment matrices and optimization over polynomials. In: Emerging Applications of Algebraic Geometry, IMA Volumes in Mathematics and its Applications, vol. 149, pp. 157–270. Springer (2009)

Lofberg, J.: YALMIP: A toolbox for modeling and optimization in MATLAB. In: IEEE International Conference on Robotics and Automation (IEEE Cat. No. 04CH37508). IEEE (2004)

Mevissen, M., Ragnoli, E., Yu, J.: Data-driven distributionally robust polynomial optimization. Adv. Neural Inf. Process. Syst. 26, 37–45 (2013)

Mohri, M., Sivek, G., Suresh, A.: Agnostic federated learning. Preprint (2019). arXiv:1902.00146

Netzer, T.: On semidefinite representations of non-closed sets. Linear Algebra Appl. 432, 3072–3078 (2010)

Nie, J.: Certifying convergence of Lasserre’s hierarchy via flat truncation. Math. Program. 142(1–2), 485–510 (2013)

Nie, J.: The \({\cal{A} }\)-truncated \(K\)-moment problem. Found. Comput. Math. 14(6), 1243–1276 (2014)

Nie, J.: Optimality conditions and finite convergence of Lasserre’s hierarchy. Math. Program. 146(1–2), 97–121 (2014)

Nie, J.: The hierarchy of local minimums in polynomial optimization. Math. Program. 151(2), 555–583 (2015)

Nie, J.: Linear optimization with cones of moments and nonnegative polynomials. Math. Program. 153(1), 247–274 (2015)

Nie, J., Yang, L., Zhong, S.: Stochastic polynomial optimization. Optim. Methods Softw. 35(2), 329–347 (2020)

Pflug, G., Wozabal, D.: Ambiguity in portfolio selection. Quantitative Finance 7(4), 435–442 (2007)

Postek, K., Ben-Tal, A., et al.: Robust optimization with ambiguous stochastic constraints under mean and dispersion information. Oper. Res. 66(3), 814–833 (2018)

Putinar, M.: Positive polynomials on compact semi-algebraic sets. Indiana Univ. Math. J. 42(3), 969–984 (1993)

Rachev, S., Römisch, W.: Quantitative stability in stochastic programming: the method of probability metrics. Math. Oper. Res. 27(4), 792–818 (2002)

Rahimian, H., Mehrotra, S.: Distributionally robust optimization: A review. Preprint (2019). arXiv:1908.05659

Ruszczyński, A., Shapiro, A. (eds.): Stochastic programming models. Handbooks in Operations Research and Management Science. Elsevier Science, Amsterdam (2003)

Shapiro, A., Ahmed, S.: On a class of minimax stochastic programs. SIAM J. Optim. 14(4), 1237–1249 (2004)

Shapiro, A., Dentcheva, D., Ruszczyński, A.: Lectures on stochastic programming: modeling and theory. SIAM, Philadelphia (2014)

Sturm, J.: Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 11(1–4), 625–653 (1999)

Sun, H., Shapiro, A., Chen, X.: Distributionally robust stochastic variational inequalities. Math. Program. (2022). https://doi.org/10.1007/s10107-022-01889-2

Wiesemann, W., Kuhn, D., Sim, M.: Distributionally robust convex optimization. Oper. Res. 62(6), 1358–1376 (2014)

Xu, H., Liu, Y., Sun, H.: Distributionally robust optimization with matrix moment constraints: Lagrange duality and cutting plane methods. Math. Program. 169(2), 489–529 (2018)

Yang, Y., Wu, W.: A distributionally robust optimization model for real-time power dispatch in distribution networks. IEEE Trans. Smart Grid 10(4), 3743–3752 (2018)

Zhang, J., Xu, H., Zhang, L.: Quantitative stability analysis for distributionally robust optimization with moment constraints. SIAM J. Optim. 26(3), 1855–1862 (2016)

Zhang, Z., Ahmed, S., Lan, G.: Efficient algorithms for distributionally robust stochastic optimization with discrete scenario support. Preprint (2019). arXiv:1909.11216

Zhu, S., Fukushima, M.: Worst-case conditional value-at-risk with application to robust portfolio management. Oper. Res. 57(5), 1155–1168 (2009)

Zymler, S., Kuhn, D., Rustem, B.: Distributionally robust joint chance constraints with second-order moment information. Math. Program. 137(1–2), 167–198 (2013)

Author information

Contributions

The authors carried out the analysis and the computational experiments.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nie, J., Yang, L., Zhong, S. et al. Distributionally Robust Optimization with Moment Ambiguity Sets. J Sci Comput 94, 12 (2023). https://doi.org/10.1007/s10915-022-02063-8

DOI: https://doi.org/10.1007/s10915-022-02063-8