Abstract

The computation of lower bounds via the solution of convex lower bounding problems depicts current state-of-the-art in deterministic global optimization. Typically, the nonlinear convex relaxations are further underestimated through linearizations of the convex underestimators at one or several points resulting in a lower bounding linear optimization problem. The selection of linearization points substantially affects the tightness of the lower bounding linear problem. Established methods for the computation of such linearization points, e.g., the sandwich algorithm, are already available for the auxiliary variable method used in state-of-the-art deterministic global optimization solvers. In contrast, no such methods have been proposed for the (multivariate) McCormick relaxations. The difficulty of determining a good set of linearization points for the McCormick technique lies in the fact that no auxiliary variables are introduced and thus, the linearization points have to be determined in the space of original optimization variables. We propose algorithms for the computation of linearization points for convex relaxations constructed via the (multivariate) McCormick theorems. We discuss alternative approaches based on an adaptation of Kelley’s algorithm; computation of all vertices of an n-simplex; a combination of the two; and random selection. All algorithms provide substantial speed ups when compared to the single point strategy used in our previous works. Moreover, we provide first results on the hybridization of the auxiliary variable method with the McCormick technique benefiting from the presented linearization strategies resulting in additional computational advantages.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

State-of-the-art algorithms for deterministic global optimization of nonconvex problems are mainly based on adaptations of the spatial branch-and-bound algorithm [17, 25, 35], where lower and upper bounds are computed in each node of the branch-and-bound tree. In most cases, established nonlinear local solvers supported by several heuristics are used to compute valid upper bounds. The computation of lower bounds is performed through the application of a general method for the construction of valid convex and concave relaxations of the original functions participating in the optimization problem. Due to numerical reliability and computational costs, established deterministic global solvers such as, e.g., ANTIGONE [27], BARON [22] or SCIP [38], construct a linear outer approximation of the nonlinear convex relaxation and use well-developed linear programming methods for the determination of valid lower bounds instead of applying local convex techniques directly. The selection of points for linearization affects the tightness of the linear relaxation and consequently the lower bound obtained through the solution of the resulting linear program.

The auxiliary variable method (AVM) [33, 35, 36] is a general method for the construction of convex and concave relaxations of factorable functions, i.e., functions representable as a finite recursion of addition, multiplication and composition of intrinsic functions. The AVM introduces an auxiliary variable together with a corresponding auxiliary equality constraint for every intermediate nonlinear factor of a given function. Then, the convex and concave envelopes of each factor are constructed providing a convex relaxation and a concave relaxation of the original function. To linearize the convex and concave envelopes of each factor provided by the AVM, the so-called sandwich algorithm has been developed (Section 4.2 in [35]). The algorithm starts with a small set of linearization points and computes additional points based on a particular rule, e.g., the maximum error rule or the chord rule, for every auxiliary factor until a pre-defined maximum of linearization points has been computed. This method works very well for the AVM, since each introduced auxiliary factor is defined by a low dimensional function for which the convex and concave envelopes are known analytically allowing for an efficient computation of maximum distances and/or angles used in the sandwich procedure. Moreover, all computations are conducted in the low dimensional domain space of the intermediate factor further lowering the computational effort. However, the resulting linear program suffers from an increased dimensionality and a large number of constraints because of the auxiliary variables and equality constraints added.

The method of McCormick [26], extended to multivariate compositions in [37], is a different method for the construction of valid convex and concave relaxations of factorable functions. In contrast to the AVM, the McCormick technique does not introduce any auxiliary variables when constructing convex and concave relaxations of a given function, thus always preserving the original dimension of the underlying function. Since the resulting McCormick relaxations may be nonsmooth, we use subgradient propagation [28] in order to construct valid affine under- and overestimators for the convex and concave McCormick relaxations. It is also possible to use the recently developed differentiable McCormick relaxations [23] in order to replace the computation of subgradients by the computation of derivatives, but they are not as tight as the nonsmooth McCormick relaxations and are computationally more costly to calculate. Furthermore, Cao et al. [6] have shown that affine subtangents used for the linearization of the convex relaxation of the lower bounding problem benefit from the favorable convergence order of McCormick relaxations. Thus, we stick to the nonsmooth McCormick relaxations and its subgradients throughout this article. As currently no algorithms exist for the selection of suitable linearization points for the McCormick relaxations, the goal of this work lies in the development of algorithms for the computation of a set of linearization points. We briefly discuss the idea of direct propagation and combination of all subgradients in each intermediate factor. However, this approach results in the computation of a combinatorial number of affine functions and thus, we focus on methods for computing linearization points in the original variable space either iteratively or a priori. All methods developed for the linearization of multivariate McCormick relaxations are implemented on the basis of the open-source MC++ library [7] and are available in the open-source deterministic global optimization solver MAiNGO [4].

Tawarmalani and Sahinidis [36] showed that for a specific set of linearization points, the linear outer approximation obtained via the AVM can be tighter than the linear outer approximation computed with McCormick relaxations. Later Tsoukalas and Mitsos demonstrated that the McCormick method extended to multivariate outer functions provides the same convex and concave relaxations if no reoccurring intermediate factors of a given function are recognized by the AVM. However, if the AVM recognizes reoccurring intermediate factors, it provides tighter convex relaxations than the multivariate McCormick approach. This problem, can, at least in princible, be solved by the addition of only a sufficient number of auxiliary optimization variables when applying the McCormick method, thus providing equally tight relaxations as the AVM. The authors thus suggest a combination of the AVM and the multivariate McCormick technique (Section 4 in [37]), but do not provide any computational insights or results. Modern computational representations of factorable functions through directed acyclic graphs (DAGs) allow for the recognition of factors occurring multiple times in a given optimization problem [7, 32]. This allows for the development of a method that introduces common factors as auxiliary optimization variables together with the corresponding auxiliary constraints. Herein, we first briefly summarize the theory discussed in [37], showing that the AVM and the McCormick relaxations provide equally tight relaxations if common factors occurring at least twice are recognized. Then, we present a heuristic for the addition of intermediate factors occurring most often in a given DAG as auxiliary optimization variables in the lower bounding procedure and show that this hybridization of the AVM and the McCormick propagation technique results in large computational speed ups. We add only a limited number of auxiliary optimization variables in order get the advantage of tighter relaxations when common factors are recognized and keep the benefit of lower dimensional optimization problems when using the McCormick method. Last, we apply the linearization algorithms developed herein to the hybridization of the AVM and multivariate McCormick relaxations to come up for the increased dimensionality and resulting complexity of the lower bounding problems. Again, the implemented heuristic is available in the open-source solver MAiNGO [4].

The remainder of this article is structured as follows. Section 2 introduces the mathematical notation used throughout the manuscript. In Sect. 3 we present alternative algorithms for the computation of linearization points for McCormick relaxations a priori, iteratively and a combination of both supported by numerical results. Section 4 provides results on the application of the presented linearization algorithms to the hybridization of the McCormick technique and the auxiliary variable method. We summarize and discuss future work in Sect. 5.

2 Preliminaries

If not stated otherwise, we consider continuous functions \(f:Z\rightarrow \mathbb {R}\) with \(Z \in \mathbb {I}\mathbb {R}^n\), where \(\mathbb {I}\mathbb {R}\) denotes the set of closed bounded intervals in \(\mathbb {R}\). \(Z\in \mathbb {I}\mathbb {R}^n\), also called box, is defined as \(Z\equiv [\mathbf {z}^L,\mathbf {z}^U]=[z^L_1,z^U_1]\times \dots \times [z^L_n,z^U_n]\) with \(\mathbf {z}^L,\mathbf {z}^U \in \mathbb {R}^n\) where the superscripts L and U always denote a lower and upper bound, respectively. We denote the range of f over Z by \(f(Z)\in \mathbb {I}\mathbb {R}\).

We call a convex function \(f^{cv}:Z\rightarrow \mathbb {R}\) a convex relaxation (or convex underestimator) of f on Z if \(f^{cv}(\mathbf {z})\le f(\mathbf {z})\) for every \(\mathbf {z}\in Z\). Similarly, we call a concave function \(f^{cc}:Z\rightarrow \mathbb {R}\) a concave relaxation (or concave overestimator) of f on Z if \(f^{cc}(\mathbf {z})\ge f(\mathbf {z})\) for every \(\mathbf {z}\in Z\). We call the tightest convex and concave relaxations of f the convex and concave envelopes \(f^{cv}_e, f^{cc}_e\) of f on Z, respectively, i.e., it holds \(f^{cv}(\mathbf {z})\le f^{cv}_e(\mathbf {z})\le f(\mathbf {z})\) and \(f(\mathbf {z})\le f^{cc}_e(\mathbf {z})\le f^{cc}(\mathbf {z})\) for all \(\mathbf {z}\in Z\) and all convex relaxations \(f^{cv}\) and concave relaxations \(f^{cc}\) of f on Z, respectively.

Definition 1

(Subgradients and linearization points). For a convex and concave function \(f^{cv},f^{cc}:Z\rightarrow \mathbb {R}\), we call \(\mathbf {s}^{cv}(\bar{\mathbf {z}}),\mathbf {s}^{cc}(\bar{\mathbf {z}})\in \mathbb {R}^n\) a convex and a concave subgradient of \(f^{cv},f^{cc}\) at \(\bar{\mathbf {z}}\in Z\), respectively, if

We denote the affine functions on the right-hand side of inequalities (A1), (A2) constructed with the convex and concave subgradient \(\mathbf {s}^{cv}(\bar{\mathbf {z}}),\mathbf {s}^{cc}(\bar{\mathbf {z}})\) by \(f^{cv,sub}(\bar{\mathbf {z}},\mathbf {z})\) and \(f^{cc,sub}(\bar{\mathbf {z}},\mathbf {z})\), respectively.

Note that \(f^{cv,sub}\) and \(f^{cc,sub}\) are valid under- and overestimators of f on Z, respectively. We call the point \(\bar{\mathbf {z}}\) in Definition 1 a linearization point of f.

2.1 McCormick relaxations and subgradient propagation

All relaxations considered herein are constructed with the use of the McCormick propagation rules originally developed by McCormick (Section 3 in [26]) and extended to multivariate compositions of functions by Tsoukalas and Mitsos (Theorem 2 in [37]). The corresponding subgradients are constructed as described by Mitsos et al. (Sections 2.4-3.1 in [28]) and Tsoukalas and Mitsos (Theorem 4 in [37]). We use the open-source library MC++ [7] for the automatic computation of convex and concave McCormick relaxations and its subgradients at a single linearization point. Moreover, we extend the library to handle a set of linearization points directly by adapting the original implementation of McCormick propagation to vector valued calculations. This extension is available in the MC++ library used in the open-source deterministic global solver MAiNGO [4].

3 Computation of linearization points for McCormick relaxations

Before describing the methods and algorithms used in this work, we briefly discuss the computation of linearization points for the AVM, its relationship with McCormick relaxations and the associated difficulties. We then begin the presentation of methods with an adaptation of the iterative Kelley’s cutting plane algorithm for convex optimization [21]. Subsequently, we develop a method for a priori computation of linearization points (before calculating relaxations). As a last point of this section, we present numerical results comparing the described methods and the combination of the two methods to a computationally cheap single-point approach and random selection.

3.1 Auxiliary variable method and McCormick relaxations

For the sake of demonstration, let us consider a simple abstract box-constrained minimization of \(f:\mathbb {I}\mathbb {R}^n\ni X\rightarrow \mathbb {R}\)

When applying the AVM to a given optimization problem, each intermediate factor is replaced by an auxiliary variable together with an appropriate equality constraint. For now, let us assume that the AVM introduces exactly one auxiliary variable \(z_1\) for the intermediate factor g with range \(g(X)=[g^L,g^U]\) providing the equivalent optimization problem

with \(\tilde{f}:[g^L,g^U]\rightarrow \mathbb {R}, g:X\rightarrow [g^L,g^U]\) and \(\tilde{f}(g(\mathbf {x}))=f(\mathbf {x})\) for all \(\mathbf {x}\in X\). Then, convex and concave relaxations \(\tilde{f}^{cv},g^{cv},g^{cc}\) of \(\tilde{f}\) and g over \([g^L,g^U]\) and X, respectively, are constructed. Note that in general the range set of g overestimates the true image of g under X, but to keep this example simple, we assume that the exact image of g under X is known. Finally, a set of linearization points \(\mathscr {L}_{AVM}\) with \(|\mathscr {L}_{AVM}| = N_\mathscr {L}\) is computed, e.g., with the sandwich algorithm and one of its different rules (Section 4.2 in [35]), and all convex and concave relaxations are replaced by their affine under- and overestimators computed at points in \(\mathscr {L}_{AVM}\). For the above optimization problem (AVM), when assuming that every function is linearized the same number of times, we end up with \(N_\mathscr {L}\) affine inequalities consisting of \(\frac{N_\mathscr {L}}{3}\) for the convex relaxation of the objective, \(\frac{N_\mathscr {L}}{3}\) for the convex relaxation, and \(\frac{N_\mathscr {L}}{3}\) for the concave relaxation of the auxiliary function g. The linearized lower bounding problem is given as

It is desirable to achieve the same tightness of the lower bounding linear optimization problem when using McCormick relaxations. Since no auxiliary variables are introduced when McCormick’s theorems are applied, this amounts to computing the projection of (\(\hbox {AVM}_{\text {LP}}\)) to the original variable space X. This is done by combining each of the affine under- or overestimators of function g with each of the affine relaxations of f. The choice whether the affine under- or overestimators of g have to be used, depends on the monotonicity of the affine relaxations of f (Corollary 3 in [37]). This combination results in a linear optimization problem with \((N_\mathscr {L})^{2}\) linear inequalities in the original variables \(\mathbf {x}\) and only one (free) auxiliary variable \(\eta \). Generalizing, if \(F>1\) auxiliary variables are introduced in the AVM, we end up with \(\mathscr {O}\left( (N_\mathscr {L})^{(F+1)}\right) \) affine inequalities for the McCormick method. The estimation of number of inequalities is not exact since some of the resulting affine inequalities may be redundant. Thus, propagating all affine inequalities with the McCormick method is not a promising idea as solely the computation of all affine functions would require an exponentional computational time.

For the AVM, we can say that the method pays for the lower number of affine inequalities with the increase of the dimension of the final linear problem (\(\hbox {AVM}_{\text {LP}}\)). This increase of number of variables however seems acceptable. The resulting LP is typically solved with (variants of the) simplex method [10]. In practice, such methods perform very well for large problems, and does not require anywhere near the worst-case exponential complexity. When propagating all combinations with the McCormick method, we have to compute all facets explicitly and thus, also all vertices of the underlying polytope. In contrast to the computation of vertices in the linear programming simplex algorithm, this propagation thus inevitably results in exponential computational runtime.

In order to achieve a similar tightness without the necessity of propagating all combinations of the affine functions and avoiding the combinatorial complexity, the following possibility can be considered. We choose only a limited number of what we think are promising linearizations in each factor and propagate only a small part of all possible combinations. The difficulty emerging with this approach is the decision making in a given factor \(f_j\) over domain \(X_{f_j}\) of the original function f, i.e., telling what is a promising linearization point, since only local information about the factor \(f_j\) on its domain \(X_{f_j}\) and not on the final function f over \(X_f\) is available. This local information is unsatisfactory because it is not possible to know which affine under- and overestimator of \(f_j\) is the best to be used for further propagation, since f may have many nonlinear factors following \(f_j\). Still, several heuristics to overcome this issue can be applied to the concave and convex relaxations. First, we can choose the affine underestimator whose minimum value is the closest to the minimum value of the convex factor \(f_j\) can be considered. As an alternative, we can compute the value at the minimum point of the factor \(f_j\) over \(X_{f_j}\) among all propagated affine underestimators and save the tightest underestimator for further propagation. Finally, we can choose the affine underestimators within each intermediate factor randomly. Unfortunately, in preliminary experiments none of the above methods provides results which could compete with the algorithms and ideas described throughout the rest of this manuscript. Thus, we focus the scope of this paper on the iterative and direct computation of the set of linearization points \(\mathscr {L}_{MC}\) providing the linear lower bounding problem in the space of original variables

3.2 Adaptation of Kelley’s algorithm

Cutting plane or localization methods are commonly used in continuous convex optimization [16] and are often also called bundle methods. The probably best known methods are the algorithm by Kelley [21] and the method by Cheney and Goldstein [8]. These algorithms may be inefficient in the sense that they may require the solution of multiple small linear programs until they stabilize but are computationally very cheap. To improve the efficiency, extensions of the methods by Kelley and Cheney and Goldstein have been developed during the last decade. The center of gravity method proposed by Newman [30] and Levin [24] computes the center of gravity of the given polyhedron approximating the convex set of interest. Although it is proven to decrease the volume of the polyhedron by a large part in each iteration, it is computationally not practicable. The maximum volume inscribed ellipsoid method [34] and the analytic center method [13] both require the solution of subsequent convex optimization problems in order to obtain a set of linearization points. As we have to compute possibly multiple linearization points in each node of the B&B algorithm, it is intuitive to avoid this additional computational overhead. The Chebyshev center cutting-plane method [11] requires the solution of a linear program to compute a linearization point (Chapter 8 in [5]) but it is prone to scaling and coordinate transformations. Since we have to compute multiple linearization points in each B&B node, the two algorithms by Kelley and Cheney and Goldstein seem acceptable in terms of computational time and resulting tightness of the lower bounding problem. Next, we present the method we use in this work and stick to the notion of Kelley’s algorithm. We adapt the original algorithm to be able to directly use McCormick relaxations and the corresponding subgradients instead of computing tangents of (smooth) convex functions.

The idea of bundle methods is to approximate a given convex function by a set or a so-called bundle of affine underestimators. The bundle is constructed by iteratively generating and adding new affine functions. The pseudo-code of the procedure can be found in Algorithm 1. In particular, we start with an initial set of linearization points \(\mathscr {L}\), e.g., the mid-point of the underlying interval domain (Line 4). We construct the lower bounding linear optimization problem L (\(\hbox {MC}_{\text {LP}}\)) with the use of McCormick relaxations by using subgradient propagation [28] with reference points defined in \(\mathscr {L}\) (Line 5). We solve L to obtain the optimal solution point \(\mathbf {x}^*\) and a corresponding solution value \(f^*\) (Line 9). We add the solution point \(\mathbf {x}^*\) to the set of linearization points \(\mathscr {L}\). We now extend the linear problem L with the inequalities which we get by the computation of subgradients of the McCormick relaxations at the new point \(\mathbf {x}^*\) of every function participating in the original problem (Lines 16-17). We then re-solve L to get a new solution point \(\mathbf {x}^*_{new}\) and its corresponding solution value \(f^*_{new}\) and repeat the procedure until either a maximum number \(N_{K}\) of linearizations has been reached (Line 8) or the difference of the solution values \(f^*\) and \(f^*_{new}\) reach a given threshold (Line 13). The implementation of the described method is available in the open-source solver MAiNGO [4].

3.3 Computation of linearization points as vertices of an n-simplex

We have shown how to construct lower bounding linear problems iteratively with a simple adaptation of well-known bundle methods in the previous section. In this section we discuss how to compute a promising set of linearization points a priori, i.e., before the first lower bounding linear optimization problem is solved.

First, we need to clarify what we denote as a promising set of linearization points. A promising set of linearization points consists of points, that are well-distributed among the n-dimensional interval domain of the optimization variables and at the same time is not too large. Intuitively, since the nonlinear n-dimensional function \(f^{cv}:X\in \mathbb {I}\mathbb {R}^n\rightarrow \mathbb {R}\), which we want to approximate through affine estimators, is convex, it should be enough to approximate the shape of f with the use of \(n+1\) affine underestimators. This intuition comes from the fact that a set \(\mathscr {L}\) of \(n+1\) points is enough to encapsulate any interior point \(\mathbf {x}^* \in X\) within the convex hull of the points \(\mathscr {L}\) in the domain space X. Moreover, since the function we aim to underestimate is convex, a set of points which is well-distributed among the domain appears to be more favorable when computing the lower bound rather than a possibly clustered set of linearization points. In practice, we cannot expect the linearization points based on this set to be exact at the minimum of f, but we hope that the affine linearizations defined by the set of linearization points, which is well-distributed among the domain of the optimization variables, can approximate f well enough. Additionally, the computation of only a limited number of affine underestimators does not increase the computational cost too much allowing for a possible trade-off between accuracy of the affine approximation and computational time.

In order to obtain a well-distributed set of linearization points, it is also possible to use well-known space covering algorithms such as Monte-Carlo methods [15] or quasi-random sequences such as the Sobol or the Halton sequence [9, 19]. These methods cover the given space well for a large number of samples. However, we desire only a very limited number of linearization points when constructing lower bounding problems, making these methods unsuitable for our procedure. Additionally, Monte-Carlo methods and quasi-random sequences obviously posses the randomness factor, whereas the methods presented herein do not. Thus, we propose a different approach based on the computation of all vertices of an n-simplex. An n-simplex is defined as an n-dimensional polytope which also is the convex hull of its \(n+1\) vertices. The idea is to compute all \(n+1\) vertices of an n-simplex, with all vertices lying on an n-dimensional Ball centered at \(\mathbf {0}\) in \([-1,1]^n\) with radius \(r \in (0,1]\). These points can then be rescaled to the original interval domains of the optimization variables and then used for the computation of subgradients and McCormick relaxations. The \(n+1\) points are well-distributed in a sense that each vertex has the same distance to every other vertex in the \([-1,1]^n\) box, namely \(2\cdot r \frac{\sqrt{3}}{2}\). Since subgradients of McCormick relaxations are steeper at the boundary of the domain if the convex relaxation has its minimum in the interior of the domain and thus, tend to contribute less to the tightness of the resulting linear program it is favorable to choose \(r<1\). It is also reasonable to choose the radius larger than 0 to avoid a possible clustering of the linearization set resulting in possibly many similar subgradients of the affine underestimators and finally redundant constraints. Thus, we recommend to choose \(r\in (0.7,0.9)\). The pseudo-code of the described procedure can be found in Algorithm 2. In the pseudo-code, the index (i, j) of a vertex \(\mathbf {v}\) denotes the ith row of the jth vertex in the set \(\mathscr {V}\). Throughout the algorithm we make use of the fact that all edges of the n-simplex have the same length, meaning that the sum \(\sum _{i=1}^n v_{i,j}\) has to equal 0 for a fixed j. After the computation of the \(n+1\) vertices of the n-simplex, it is advisable to rotate the polytope by a given angle \(\gamma \) with the help of a standard rotation matrix to avoid the computation of points with having multiple equal coordinates. It is not recommended to rotate the matrix with respect to each axis due to high computational effort in high dimensions, e.g., \(n>100\), but rather rotate with respect to every kth axis to avoid a large number of calculations. The case \(n=1\) is a special case, where we always use 3 points equally spread among the 1-dimensional interval \(X=[x^L,x^U]\), being

We also experienced that using all \(n+1\) points is not optimal if each function in the optimization problem only depends on a subset of the optimization variables. If the maximum number of variables participating non-linearly in any function of the optimization problem is \(p<n\), we filter the points further and choose only p out of \(n+1\) vertices. Note that when a p-simplex is computed, we obtain \(p+1\) p-dimensional points. Just because there are at most p variables participating nonlinearly in some function \(f_i\), it is not excluded that subsets of these variables and some additional variables participate nonlinearly in some other function \(f_j\) with \(i\ne j\). It is possible to rescale these p vertices to the n variables, but then the same simplex vertices for multiple variables are used which could intuitively lead to possibly very similar and often redundant affine underestimators. Moreover, since the computation of the n-simplex is performed only once in the global optimization procedure, the computation of a larger simplex is negligible. We choose only p points instead of \(p+1\) and one of these points is always the mid point of the domain, since this choice has shown the best results in the numerical case studies performed in this work. Since the mid point is always used, we choose the \(p-1\) points with the highest absolute improvement in interval tightness when applying the subgradient interval heuristic presented in our previous work [29] for computation of McCormick relaxations of the given optimization problem.

3.4 Illustrative example

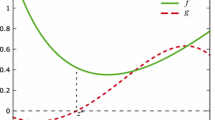

Example of the n-simplex algorithm described in Sect. 3.3. 2-simplex vertices on the 2 dimensional ball with radius r. The original points computed by Algorithm 2 are depicted as blue circles. The rotated original points by an angle of \(30^\circ \) are depicted as red crosses

Figures showing the affine underestimators of the three-hump camel function over \([-0.75,1]\times [-1,0.25]\) at linearization points obtained with the adaptation of Kelley’s algorithm (Figure (a)) described in Sect. 3.2 and the n-simplex algorithm (Figure (b)) described in Sect. 3.3. a The linearizations are conctructed starting at the middle point \(\mathbf {m}\) and conducting one iteration \(it_1\) of the adapted Kelley’s algorithm. b The linearizations are constructed at the middle point \(\mathbf {m}\) and the point \(\mathbf {v}_1\) obtained with the n-simplex algorithm

The following example illustrates application of Algorithm 2 in practice. Consider the three-hump camel function

First, we compute the vertices of the n-simplex lying on the 2 dimensional ball \(\mathscr {B}\) with radius \(r=0.725\). The points of the 2-simplex on \(\mathscr {B}\) are

Then, we rotate the points by \(30^\circ \) counterclockwise and finally obtain

Note that the similarity of the rotated points with respect to the original points is a special case of the 2 dimensional case. Figure 1 shows the (not) rotated points of the 2-simplex computed on the ball with radius \(r=0.725\).

Next, we scale the points to the domain \([-0.75,1]\times [-1,0.25]\) of f and get

As final linearization points, we choose the mid point \(\mathbf {m}=(0.125,-0.375)\) and the point \(\mathbf {v}_i\) providing the most improvement when using the subgradient interval heuristic presented in our previous work [29], given by \(\mathbf {v}_1\) in this example. Finally, we compute the linearization of convex McCormick relaxations of f at \(\mathscr {L} = \{\mathbf {m},\mathbf {v}_1\}\) (cf. Fig. 2a). Figure 2b shows the resulting affine underestimators of f when using the adaptation of Kelley’s algorithm described in the previous subsection. The difference between the linearizations with respect to the resulting lower bound of the respective linear program is only marginal in this example although different linearization points are used.

3.5 Numerical results

We compare the presented linearization strategies by the solution of various problems with the deterministic global optimization solver MAiNGO [4] using CPLEX v12.8 [18] for the solution of linear lower bounding problems, IPOPT v3.12.0 [39] for local optimization in the pre-processing step, and the SLSQP solver found in the NLOPT package v2.5.0 [20] for local optimization within the B&B algorithm. For range reduction, we use optimization-based bound tightening with the trivial filtering algorithm with filtering value 0.001 [12], duality-based bound tightening using dual values obtained from CPLEX [31], and basic constraint propagation. All calculations are conducted on an Intel\(^{\textregistered }\) Core™ i5-3570 CPU with 3.4 GHz running Fedora Linux 30.

We solve 9 problems of varying sizes with up to 57 variables chosen from the MINLPLIB2 library; 4 case studies of a combined-cycle power plant presented in Sections 5.2 and 5.2 of [1] where we either maximize the power output of the net or minimize the levelized cost of electricity; 2 case studies of the William-Otto process with 4 and 5 degrees of freedom, respectively [2]; and 3 case studies of chemical processes presented in [3], where we consider the example of a flash modeled with the NRTL mixture model as well as the optimization of a methanol production process from \(\hbox {H}_2\) and \(\hbox {CO}_2\), once with original degrees of freedom (case c) and once additionally using the \(\hbox {CO}_2\) inlet flowrate as degree of freedom making the case study somewhat more complex. All problem statistics can be found in Table 6 in Appendix A.

In the 9 problems found in the MINLPLIB2 library, we set the absolute and relative tolerances to \(\epsilon =10^{-4}\) and for the process engineering case studies, we set both optimality tolerances to \(\epsilon =10^{-2}\). We allow for a maximum of 8 hours. We consider the computational performance of the B&B algorithm for five cases:– Mid, where every convex and/or concave relaxation is linearized at the middle point of the optimization variable domain. This is the setting used in all of our previous work.– Kelley, where every convex and/or concave relaxation is linearized iteratively using the adaptation of Kelley’s algorithm described in Sect. 3.2. We allow for at most p linearizations, where p is the maximum number of variables participating nonlinearly in any function of the optimization problem.– Simplex, where every convex and/or concave relaxation is linearized at points determined through the application of the n-simplex algorithm described in Sect. 3.3. We choose p linearizations, where p is the maximum number of variables participating nonlinearly in any function of the optimization problem. We choose the p points with the highest absolute improvement in interval tightness when applying the subgradient interval heuristic presented in our previous work [29] for computation of McCormick relaxations of the given optimization problem. – S+K, where every convex and/or concave relaxation is linearized iteratively using the adaptation of Kelley’s algorithm with the initial set of linearization points \(\mathscr {L}\) being determined with the use of the n-simplex algorithm. We add at most 5 additional linearizations with Kelley’s algorithm.– Random, where every convex and/or concave relaxation is linearized at p points determined randomly, where p is the maximum number of optimization variables participating nonlinearly in any function found in the optimization problem. Here we report the results for the best random seed among 5 different random seeds we used in C++.

In all numerical experiments, we set the maximum number of iterations in the Kelley’s Algorithm 1 to p denoting the maximum number of optimization variables participating non-linearly in any function found in the optimization problem and the minimum difference thresholds to \(\mu ^a=\epsilon ^a \cdot 10\) and \(\mu ^r=10^{-2}\). For the determination of n-simplex vertices, we set the ball radius to \(r=0.725\) and rotate the n-simplex by \(\gamma =30^\circ \) counterclockwise. Furthermore, in any algorithm, in addition to the at most p or \(n+1\) linearization points, we always use the mid point of the interval domain as linearization point. The detailed CPU times and number of iterations can be found in Table 7 in Appendix A. Since performance plots can be easily misinterpreted [14], we present the computational results with the use of the shifted geometric mean defined as

where n is the number of instances, \(t_i\) the reported computational time and s a constant shift set to \(s=10\) in this work. We also report the shifted geometric mean with \(t_i\) denoting the number of iterations needed. If a method reaches the maximum computational time of 8 hours, we use \(t_i = 28 800~\hbox {s}\) or \(t_i= 2\cdot 10^6\) iterations (this is the maximum iterations needed among all runs that converged within the time limit of 8 hours). Table 1 shows the speed up differences among the considered methods. We see that the simple method using a single linearization point performs worst with respect to the number of iterations. It also performs worst with respect to the computational time in all but the smallest case studies. All methods are at least \(50\%\) faster than the single middle point linearization and need up to 5 times less iterations. The n-simplex method seems to perform best on the considered test set with respect to computational time, but all other presented method are only slightly weaker. Indeed the n-simplex method solves more instances than the other considered methods in the given time frame. The hybrid method has the lowest number of iterations but performs slightly worse in terms of computational time. Kelley’s algorithm performs best regarding computational time for the methanol production processes but performs worse than the n-simplex algorithm on the other process engineering case studies. It also almost always needed strictly less than p iterations to converge to the preset tolerances. This may be an indicator that Kelley’s algorithm is already good enough for our purposes but obviously this cannot be guaranteed. Note that for the minimization of the levelized cost of electricity (LCOE) in case 3, the methods which did not converge in time have a very small remaining gap. This may be misleading as for this particular problem, the last few percent needed to close the optimality gap are the most time consuming, meaning that the remaining \(\approx 1\%\) would most likely need more than 1 additional hour to converge. It is also interesting that the random generation of points performed slightly better than the adaptation of Kelley’s algorithm and the hybrid of n-simplex and Kelley’s. This can be easily explained with the fact that the random seed we chose in C++ seems to work well for the instances considered. Unfortunately, this behavior cannot be guaranteed if a different machine or a different random seed is chosen, making the random generation of linearization points undesirable in general. We can conclude that except for the easiest instances or the instances with already very tight relaxations, the computation of additional linearization points seems favorable.

4 Hybridization of the auxiliary variable method and McCormick relaxations

Tsoukalas and Mitsos already discussed the relationship between the auxiliary variable method and multivariate McCormick relaxations (Section 4 in [37]). Their conclusion was that McCormick relaxations can be modified to provide equally tight relaxations as the AVM. The modification consists of the introduction of auxiliary optimization variables and corresponding equality constraints for common intermediate factors. We first briefly present the main result again herein. Since the introduction of additional optimization variables together with the corresponding equality constraints increases the complexity of the lower bounding problems, in a B&B framework it is favorable to use the linearization algorithms presented in the previous section. Then, we conduct additional numerical experiments to check the impact of the introduction of common factors as auxiliary optimization variables when using multivariate McCormick relaxations.

4.1 Tightness of McCormick relaxations and the auxiliary variable method

Consider the functions

and the composition

with \(X\subset X_3, X\subset X_4, f_3(X_3)\times f_4(X_4) \subset X_1\) and \(f_3(X_3)\subset X_2\). When minimizing g, the AVM could formulate the problem in two different ways, depending on whether the intermediate factor \(f_3\) is recognized as a common term (Formulation 2) or not (Formulation 1).

with the corresponding convex relaxations used for the lower bounding problem

Formulation 1 is in general a relaxation of Formulation 2 and results in a worse lower bound if the nonlinear convex problem is solved. Although, theoretically the better lower bound cannot be guaranteed when the formulations are linearized, in practice it is still expected that the linearization of Formulation 2 will result in a tighter bound.

When using multivariate McCormick relaxations we obtain the same bound for Formulation 1

Note that in the above multivariate McCormick formulation, we have only one optimization variable x. Now, if the intermediate factor \(f_3(x)\) is recognized and treated as a common variable, it is necessary to introduce one additional optimization variable \(z_3\). The alternative formulation we get then is

providing the same bound as Formulation 2 in the AVM by only introducing a single additional auxiliary optimization variable. This also means that the tightness of the linear lower bounding problem obtained through linearization of the convex multivariate McCormick relaxation is equal to the bound obtained by the linearized AVM Formulation 2 if the same linearization points are used (which in general we do not recommend due to the combinatorial complexity (Sect. 3.1)). Although not explicitly mentioned in the original publication [37], the above formulation already suggests that a hybridization of the AVM and the multivariate McCormick relaxations may result in computational advantages. In this work, instead of trying to achieve the tightness of the AVM, we experiment with the addition of only a few auxiliary optimization variables in order to achieve best computational results.

4.2 Numerical results

Modern implementations of directed acyclic graphs such as the one in MC++ [7] allow for the recognition of common factors. The recognition is done automatically and has a quadratic complexity, since in the worst-case all previous factors have to be checked whenever a new factor is introduced. When comparing the AVM with McCormick relaxations, it is of interest to investigate the impact of the intermediate common factors on computational time in a B&B algorithm. Note that the addition of all intermediate factors, also those occurring only once, results in the AVM.

In our experiments, we always choose the intermediate nonlinear factors participating most often in the optimization problems and replace them by an auxiliary optimization variable and a corresponding equality constraint in the lower bounding problem. We use the same optimization problems as in Sect. 3.5 with the exact same computational setup. In our experiments we add up to 3 auxiliary variables and solve the problems with the same 5 presented linearization strategies as in Sect. 3.5. However, we do not branch on these auxiliary variables but rather only apply bound tightening heuristics to them. Initial valid bounds for the introduced variables are obtained through interval arithmetic and constraint propagation. We report only the problems where at least one common intermediate factor has been found. Again, we use the shifted geometric mean (cf. Sect. 3.5) to compare the different linearization strategies for a fixed number of additional auxiliary optimization variables and also to compare the same linearization strategies for the different numbers of introduced auxiliary optimization variables (cf. Tables 2, 4). Table 3 reports the shifted geometric mean comparing the different linearization strategies over all numbers of additional auxiliary variables (1–3). Table 5 reports the shifted geometric mean comparing the different linearization strategies and all different number of auxiliary variables (0–3).

In the cases where 1 or 2 auxiliary optimization variables are added, the n-simplex method outperforms all other considered procedures and that it is the only one to solve all considered numerical examples in the time frame of 8 hours (cf. Tables 8, 9, 10 found in “Appendix A”) with up to 2 auxiliary variables. The adaptation of Kelley’s algorithm performs best when 3 auxiliary variables are added, but in general all procedures perform worse than when less auxiliaries are used. Overall, Kelley’s and the n-simplex algorithm perform very similar when auxiliary variables are added (cf. Tables 3, 5). The introduction of auxiliary variables allows an improvement of up to \(\approx 20\%\) with respect to solution times for all procedures (cf. Table 4). It is also comprehensible that linearization strategies using multiple linearizations achieve computational advantages when introducing auxiliary optimization variables when compared to the simple single point linearization due to the increased dimensionality and thus also an increased complexity of the optimization problem. Unfortunately, we cannot say that introducing more auxiliary variables always results in best performance as, e.g., when introducing auxiliary variables for the minimization of the levelized cost of electricity in case 3 [1], we achieve the best computational performance when adding only 1 auxiliary variable. However, the number of iterations needed decreases as more auxiliary optimization variables are added, confirming the improved tightness of the resulting lower bounding relaxations. This may be an indicator that replacing the common factors occurring the most in the optimization problem is not always the best heuristic but rather different properties are crucial for the computational advantage. It is also possible that it is required to branch on the auxiliary variables to possibly further improve the computational performance. The improvement of the choice of intermediate factors and the development of specialized heuristics for the hybridization of McCormick relaxations with the AVM requires further investigation and remains an active research topic of the authors.

The present results for the NRTL flash case (NRTL_RS_IdealGasFlash) also provide an explanation for the differences in performance of the model formulations considered by Bongartz and Mitsos [3]. In the version of the case study used herein, only the liquid mole fractions of the flash are optimization variables while the vapor mole fractions are calculated from the isofugacity equations. Bongartz and Mitsos [3] observed that adding the vapor mole fractions as optimization variables, thus turning the isofugacity equations into constraints, improved computational performance. When allowing the addition of auxiliary variables, the variables added herein correspond exactly to these vapor mole fractions, showing that their addition to the problem results in tighter relaxations because they are re-occurring factors.

5 Conclusion

Algorithms for the computation of linearization points for the auxiliary variable method are available and are widely used in state-of-the-art deterministic global optimization solvers [35]. No such methods have been proposed for the (multivariate) McCormick relaxations. We discuss difficulties and limits of methods for the computation of affine underestimators of McCormick relaxations when the idea of subgradient propagation is considered [28]. An iterative method based on well-known cutting plane algorithms from the field of convex optimization [8, 21] and an own algorithm based on the computation of all vertices of an n-simplex for the computation of linearization points a priori are developed. We combine both methods and compare all procedures in numerical experiments. The n-simplex algorithms performs best on the considered test set, but all method perform up to \(\approx 60\%\) better than the single point linearization strategy used in our previous works. Furthermore we tie on the discussion on the relaxation tightness and introduction of auxiliary variables in the AVM and McCormick relaxations presented in [37]. The theoretical results are applied in the numerical experiments by recognizing common intermediate factors in the directed acyclic graph and introducing additional optimization variables while still using the McCormick propagation rules. The introduction of a limited number of auxiliary variables provides large improvements in the computational times. We deduce that it is not always best (w.r.t. computational time) to introduce all commonly occurring factors as auxiliary optimization variables. The development of methods for the determination of when to replace a common factor by an auxiliary optimization variable remains an active field of research of the authors.

References

Bongartz, D., Mitsos, A.: Deterministic global optimization of process flowsheets in a reduced space using mccormick relaxations. J. Global Optim. 69(4), 761–796 (2017)

Bongartz, D., Mitsos, A.: Infeasible Path Global Flowsheet Optimization Using McCormick Relaxations. In: A. Espuña, M. Graells, L. Puigjaner (eds.) Computer Aided Chemical Engineering: 27th European Symposium on Computer Aided Chemical Engineering (ESCAPE 27), pp. 631–636 (2017). https://doi.org/10.1016/B978-0-444-63965-3.50107-0

Bongartz, D., Mitsos, A.: Deterministic global flowsheet optimization: between equation-oriented and sequential-modular methods. AIChE J. 65(3), 1022–1034 (2019)

Bongartz, D., Najman, J., Sass, S., Mitsos, A.: MAiNGO - McCormick-based Algorithm for mixed-integer Nonlinear Global Optimization. Tech. rep., Process Systems Engineering (AVT.SVT), RWTH Aachen University (2018). http://permalink.avt.rwth-aachen.de/?id=729717, MAiNGO Git: https://git.rwth-aachen.de/avt.svt/public/maingo

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Cao, H., Song, Y., Khan, K.A.: Convergence of subtangent-based relaxations of nonlinear programs. Processes 7(4), 221 (2019). https://doi.org/10.3390/pr7040221

Chachuat, B., Houska, B., Paulen, R., Perić, N., Rajyaguru, J., Villanueva, M.: Set-theoretic approaches in analysis, estimation and control of nonlinear systems. IFAC-PapersOnLine 48(8), 981–995 (2015)

Cheney, E.W., Goldstein, A.A.: Newton’s method for convex programming and Tchebycheff approximation. Numer. Math. 1(1), 253–268 (1959)

Chi, H., Mascagni, M., Warnock, T.: On the optimal Halton sequence. Math. Comput. Simul. 70(1), 9–21 (2005)

Dantzig, G.B., Orden, A., Wolfe, P., et al.: The generalized simplex method for minimizing a linear form under linear inequality restraints. Pac. J. Math. 5(2), 183–195 (1955)

Elzinga, J., Moore, T.G.: A central cutting plane algorithm for the convex programming problem. Math. Prog. 8(1), 134–145 (1975)

Gleixner, A., Berthold, T., Müller, B., Weltge, S.: Three enhancements for optimization-based bound tightening. J. Global Optim. 67, 731–757 (2017)

Goffin, J.L., Vial, J.P.: On the computation of weighted analytic centers and dual ellipsoids with the projective algorithm. Math. Prog. 60(1–3), 81–92 (1993)

Gould, N., Scott, J.: A note on performance profiles for benchmarking software. ACM Trans. Math. Softw. 43(2), 15:1–15:5 (2016)

Hastings, W.K.: Monte carlo sampling methods using markov chains and their applications. Biometrika 57(1), 97–109 (1970)

Hiriart-Urruty, J.B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms II Advanced Theory and Bundle Methods, vol. 306. Springer, Berlin (1993). https://doi.org/10.1007/978-3-662-06409-2

Horst, R., Tuy, H.: Global Optimization: Deterministic Approaches. Springer, Berlin (2013)

International Business Machines Corporation: IBM ILOG CPLEX v12.8. Armonk, NY (2017)

Joe, S., Kuo, F.Y.: Constructing Sobol sequences with better two-dimensional projections. SIAM J. Sci. Comput. 30(5), 2635–2654 (2008)

Johnson, S.: The NLopt nonlinear-optimization package (2016). http://ab-initio.mit.edu/nlopt. Last Accessed 13 Sept 2018

Kelley Jr., J.E.: The cutting-plane method for solving convex programs. J. Soc. Ind. Appl. Math. 8(4), 703–712 (1960)

Khajavirad, A., Sahinidis, N.V.: A hybrid LP/NLP paradigm for global optimization relaxations. Math. Prog. Comput. 10(3), 383–421 (2018)

Khan, K., Watson, H., Barton, P.: Differentiable McCormick relaxations. J. Global Optim. 67(4), 687–729 (2017)

Levin, A.Y.: An algorithm for minimizing convex functions. In: Doklady Akademii Nauk, vol. 160, pp. 1244–1247. Russian Academy of Sciences (1965)

Locatelli, M., Schoen, F.: Global optimization: theory, algorithms, and applications, vol. 15. SIAM (2013)

McCormick, G.: Computability of global solutions to factorable nonconvex programs: part I-convex underestimating problems. Math. Prog. 10, 147–175 (1976)

Misener, R., Floudas, C.: ANTIGONE: algorithms for coNTinuous/integer global optimization of nonlinear equations. J. Global Optim. 59, 503–526 (2014)

Mitsos, A., Chachuat, B., Barton, P.: McCormick-based relaxations of algorithms. SIAM J. Optim. 20(2), 573–601 (2009)

Najman, J., Mitsos, A.: Tighter McCormick relaxations through subgradient propagation. J. Global Optim. (2019). https://doi.org/10.1007/s10898-019-00791-0

Newman, D.J.: Location of the maximum on unimodal surfaces. JACM 12(3), 395–398 (1965)

Ryoo, H., Sahinidis, N.: Global optimization of nonconvex NLPs and MINLPs with applications in process design. Comput. Chem. Eng. 19(5), 551–566 (1995)

Schichl, H., Neumaier, A.: Interval analysis on directed acyclic graphs for global optimization. J. Global Optim. 33(4), 541–562 (2005)

Smith, E.M., Pantelides, C.C.: Global optimisation of nonconvex MINLPs. Comput. Chem. Eng. 21, 791–796 (1997)

Tarasov, S.: The method of inscribed ellipsoids. Soviet Math. Doklady 37, 226–230 (1988)

Tawarmalani, M., Sahinidis, N.: Convexification and Global Optimization in Continuous and Mixed-Integer Nonlinear Programming. Kluwer Academic Publishers, Dordrecht (2002)

Tawarmalani, M., Sahinidis, N.V.: A polyhedral branch-and-cut approach to global optimization. Math. Prog. 103(2), 225–249 (2005)

Tsoukalas, A., Mitsos, A.: Multivariate McCormick relaxations. J. Global Optim. 59, 633–662 (2014)

Vigerske, S., Gleixner, A.: SCIP: global optimization of mixed-integer nonlinear programs in a branch-and-cut framework. Optim. Methods Softw. 33(3), 563–593 (2018)

Wächter, A., Biegler, L.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Prog. 106(1), 25–57 (2006)

Acknowledgements

We would like to thank Dr. Benoît Chachuat for providing MC++ and for discussions on polyhedral relaxations. We would like to thank Prof. Angelos Tsoukalas for discussions on localization methods. This project has received funding from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) Improved McCormick Relaxations for the efficient Global Optimization in the Space of Degrees of Freedom MA 1851/4-1. We gratefully acknowledge additional funding by the German Federal Ministry of Education and Research (BMBF) within the “Kopernikus Project P2X: Flexible use of renewable resources exploration, validation and implementation of ‘Power-to-X’ concepts”.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Appendix

A Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Najman, J., Bongartz, D. & Mitsos, A. Linearization of McCormick relaxations and hybridization with the auxiliary variable method. J Glob Optim 80, 731–756 (2021). https://doi.org/10.1007/s10898-020-00977-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-020-00977-x