Abstract

We present a composite design methodology for the simulation and optimization of solar cell performance. Our method is based on the synergy of different computational techniques and is especially designed for thin-film cell technology. In particular, we aim to efficiently simulate light trapping and plasmonic effects that enhance the light harvesting of the cell. The methodology is based on the sequential application of a hierarchy of approaches: (a) full Maxwell simulations are applied to derive the photon scattering probability in systems presenting textured interfaces; (b) calibrated Photonic Monte Carlo is used in conjunction with the scattering matrix method to evaluate coherent and scattered photon absorption in the full cell architecture; (c) the results of these advanced optical simulations are used as pair generation terms in a model implemented in an effective Technology Computer Aided Design tool to derive the cell performance; (d) the models are investigated by qualitative and quantitative sensitivity analysis algorithms, to evaluate the importance of the design parameters on the model output and to obtain a first-order description of the objective space; (e) sensitivity analysis results are used to guide and simplify the optimization of the model, achieved through both Single Objective Optimization (to fully maximize device efficiency) and Multi Objective Optimization (to balance efficiency and cost); (f) the Local, Global and “Glocal” robustness of the optimal solutions found by the optimization algorithms is statistically evaluated; (g) data-based Identifiability Analysis is used to study the relationship between parameters. The results show a noteworthy improvement with respect to the quantum efficiency of the reference cell, demonstrating that the presented methodology is suitable for the effective optimization of solar cell devices.



1 Introduction

In the last decades a great deal of effort has been devoted to finding optimal solar cell designs, creating a very broad research field [1,2,3]. Three main kinds of solar cell devices have captured most of the attention: (I) photovoltaic (PV) devices, which convert the energy carried by light directly into electrical energy using semiconductor materials (the kind analysed in this work); (II) thermophotovoltaic (TPV) devices [4], which convert heat into electricity by radiating photons that are then converted into electron-hole pairs by a photovoltaic medium; (III) nanophotonic thermophotovoltaic devices, recently developed in [5], which combine the best features of PV and TPV. However, the optimization and analysis of solar cells have proved to be hard tasks, mainly because of the high number of (microscopic and conflicting) parameters to be set and the difficulty of finding an accurate yet computationally competitive model.

In this work we present a combination of numerical algorithms especially designed for the analysis and optimization of thin-film cells. Precisely, our approach is composed of four distinct steps: Sensitivity Analysis (SA) of the model parameters, to study the effect that each parameter has on the model; Single Objective Optimization (SOO) and Multi Objective Optimization (MOO) applied to the most sensitive parameters (we call these “SA + SOO” and “SA + MOO”), to find designs that maximize the efficiency of the cell as well as trade-offs balancing the efficiency of the cell against its production cost; local, global and glocal robustness analysis of the best designs found in the optimization cycle, to fully evaluate a device's resistance against perturbations (e.g. usage, production imprecision); and identifiability analysis, used to search the model for functional relationships between parameters. We demonstrate the capabilities of our approach on the optimization and analysis of tandem thin-film silicon devices, using different Transparent Conductive Oxide (TCO) and Back Reflector (BR) materials. We show that we obtain remarkable efficiency-cost improvements, up to \(6.71\%\) with respect to the reference cell considered.

The choice of the tandem thin-film silicon solar cell in this study is justified by the considerable amount of recent work devoted to optimizing the efficiency of these promising devices. In fact, the second generation of thin-film cells has recently arisen as a valuable alternative to the more expensive devices previously built from thick polycrystalline silicon wafers. However, as will be shown in the next sections, the reduced thickness of the absorbing layer inevitably reduces the device efficiency. Hence, several light trapping techniques have been introduced in thin-film cells over the years to compensate for this effect. Examples are the introduction of a metallic back reflector layer and, mainly, the chemical introduction of random nano-texturing at the interfaces separating the cell layers [6, 7]. The former has been exhaustively investigated in this work: part of our study concerns the exploration of different doping doses (four dose levels have been considered), together with two levels of back reflector smoothness. We found that, among the materials analysed here, the best choices are ZnO for the TCO layer and smooth Ag for the back reflector layer. However, different doping doses of ZnO should be applied to obtain different trade-offs between the efficiency and the cost of the cell.

The presence of random nano-texturing, on the other hand, makes these devices computationally intractable for Maxwell solvers [8]; thus, considerable effort has recently been spent on developing accurate yet computationally tractable models. The model we have implemented is based on a balanced combination and generalization of the approaches presented in [9, 10].

Finally, we compare the results obtained with the methodology presented here against those obtained by applying OptIA [11, 12], an immune-system-based algorithm for single and multi objective optimization.

The remainder of the paper is organized as follows. The second section details the algorithms composing our methodology. The third section discusses the optical model implemented, along with the experimental results obtained using different materials. The simulation results obtained with our methodology are presented and discussed in the fourth section. Finally, the last section presents our conclusions and directions for future work.

2 Building blocks and literature review

In this section we introduce the problem addressed in this paper, the algorithms we used and the main reasons for our choices. The general form of a constrained global optimization problem is:

\[ \min _{x \in T} F(x) \qquad (1) \]

where \(F:\mathbb {R}^k \rightarrow \mathbb {R}^n\) is the n-component vector of objective functions, i.e. \(F(x) \equiv (f_1 (x), f_2 (x), \ldots , f_n (x))\) for some scalar functions \(f_i (x), i = 1, 2, \ldots , n\) (assuming that F is to be minimized is not restrictive), k is the dimension of the domain space, n is the number of objective functions (if \(n=1\) we speak of Single Objective Optimization (SOO), otherwise of Multi-Objective Optimization (MOO)), and T is a subset of \(\mathbb {R}^k\). A point \(x =(x_1, \ldots , x_k) \in \mathbb {R}^k\) is said to be feasible (respectively infeasible) with respect to problem (1) if \( x \in T\) (respectively \( x \notin T\)). The presence of constraints in an optimization problem may greatly increase its complexity [13].

In Fig. 1 we summarise the composite design methodology presented in this work. Initially, the model is investigated by means of Sensitivity Analysis, which evaluates the magnitude of the effect that each parameter has on the solar cell efficiency and, where possible, removes from the model the input parameters that have only a weak influence on it. The reduced model is then fed into black-box Single and Multi Objective Optimization solvers, respectively for the full optimization of the optical efficiency of the solar cell and for the trade-off analysis of efficiency and production cost. Finally, the optimal and Pareto-optimal designs are evaluated by means of Robustness Analysis, and Identifiability Analysis is used to investigate the relationship between model parameters and Pareto optimality.

2.1 Single-objective optimization

Almost all non-trivial engineering design problems involve the optimization of an objective function with a complex domain-codomain behaviour, to which traditional techniques cannot be applied [14,15,16]. No algorithm performs better than all others on every possible engineering problem [17], which is why new algorithms are continuously being designed. In this work we use the Multilevel Coordinate Search (MCS) algorithm to solve the single objective optimization problem associated with thin-film silicon solar cell efficiency. In some particular cases MCS is strongly competitive and outperforms several state-of-the-art algorithms [18]. Here we give only a brief explanation of the working principle of the method; see [19] for details.

The MCS algorithm combines heuristics and calculus-based approximations and is composed of two main sub-routines: a global routine and a local one. The global part is a branching algorithm: the domain is repeatedly split along a coordinate direction, usually into three sub-boxes, each containing a base point (a promising point for the optimization problem) and an opposite point, which together determine the sub-box. The splitting process is iterated up to a user-defined number of times. The local search, in turn, is based on quadratic approximations of the function in the neighbourhood of previously selected promising points. The interpolation points used in the quadratic models are selected using a combination of triple search and coordinate search.
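As a rough illustration of the two ingredients just described, the Python sketch below splits a box into three equal sub-boxes along one coordinate and computes the minimizer of a one-dimensional quadratic model through three points. Both helpers (`trisect`, `quadratic_step`) are hypothetical simplifications: real MCS places its split points using base and opposite points and builds multidimensional quadratic models, so this is not the MCS implementation of [19].

```python
def trisect(lower, upper, coord):
    """Split the box [lower, upper] into three equal sub-boxes along `coord`.

    Simplification: MCS chooses split positions from base/opposite points,
    not necessarily at equal thirds.
    """
    a, b = lower[coord], upper[coord]
    cuts = [a, a + (b - a) / 3.0, a + 2.0 * (b - a) / 3.0, b]
    boxes = []
    for lo_c, hi_c in zip(cuts[:-1], cuts[1:]):
        lo, hi = list(lower), list(upper)
        lo[coord], hi[coord] = lo_c, hi_c
        boxes.append((lo, hi))
    return boxes


def quadratic_step(x0, x1, x2, f0, f1, f2):
    """Minimizer of the parabola through (x0, f0), (x1, f1), (x2, f2).

    The abscissae must be distinct; raises if the parabola is not convex.
    """
    d01 = (f1 - f0) / (x1 - x0)          # first divided differences
    d12 = (f2 - f1) / (x2 - x1)
    a = (d12 - d01) / (x2 - x0)          # second divided difference = leading coefficient
    if a <= 0:
        raise ValueError("quadratic model is not convex")
    # p(x) = f0 + d01 (x - x0) + a (x - x0)(x - x1); setting p'(x) = 0 gives:
    return 0.5 * (x0 + x1) - d01 / (2.0 * a)


# Usage: three samples of f(x) = (x - 2)**2 recover the minimizer x = 2.
print(trisect([0.0, 0.0], [3.0, 1.0], 0))
print(quadratic_step(0.0, 1.0, 3.0, 4.0, 1.0, 1.0))  # 2.0
```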

2.2 Multi-objective optimization

SOO may prove restrictive for the majority of real-world problems: mathematical models often present more than one objective function to be optimized, and these objectives usually conflict with each other (e.g., generally the more efficient a device is, the more expensive it is). We thus introduce the well known definition of Pareto optimality and the partial order deriving from it. A point \(x \in T\) of the domain space is said to dominate a point \(y \in T\), with respect to problem (1), if \(f_i(x)\le f_i(y) \; \forall \; i = 1, \ldots , n \; \wedge \exists \; j \; s.t. \; f_j(x) < f_j(y)\). The Pareto set is then defined as the set of all non-dominated feasible points, and the Pareto front as its image under F.
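A minimal sketch of the dominance test defined above, assuming every objective is minimized (a maximized objective such as \(Q_e\) would be entered as \(-Q_e\)). The function names are illustrative and not taken from any library.

```python
from typing import List, Sequence, Tuple


def dominates(fx: Sequence[float], fy: Sequence[float]) -> bool:
    """True if fx Pareto-dominates fy: no worse in every objective, strictly better in one."""
    no_worse = all(a <= b for a, b in zip(fx, fy))
    strictly_better = any(a < b for a, b in zip(fx, fy))
    return no_worse and strictly_better


def pareto_front(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep the points not dominated by any other point (O(m^2) pairwise check)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# (3, 4) is dominated by (2, 3); the other three points are mutually non-dominated.
print(pareto_front([(1, 5), (2, 3), (3, 4), (4, 1)]))  # [(1, 5), (2, 3), (4, 1)]
```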

Evolutionary algorithms (EAs) are a class of meta-heuristic methods that operate on a population of candidate solutions and iteratively modify it using biologically inspired operators (e.g. mutation, crossover). Because of their intrinsic nature, this class of algorithms can easily be adapted to MOO problems [20]. In contrast, exact algorithms may prove unsuitable for most real-world problems, such as thin-film solar cell optimization, because of their high dimensionality. Similarly, to avoid increasing the computational complexity of the optimization process, we do not use robust MOO [21,22,23] as the core of our optimization; instead, we tackle robustness with post-processing robustness analysis techniques (see Sect. 2.2.2). Among the vast literature on MOO algorithms [24,25,26,27], in this work we have used the NSGA-II algorithm, which we briefly explain in the following.

NSGA-II [28] is a multi-objective evolutionary algorithm based on non-domination, elitism and crowding distance. At each iteration the algorithm assigns two attributes to each point (candidate solution) of the current population, namely rank and crowding distance, as follows. The fast non-dominated sort implemented in NSGA-II sorts the points of the current population P into different fronts \(\mathcal {F}_1, \mathcal {F}_2, \ldots , \mathcal {F}_s\), such that \(\mathcal {F}_1\) contains all non-dominated points of the current population, \(\mathcal {F}_2\) contains the non-dominated points of the current population minus those in \(\mathcal {F}_1\), and so on. We then say that a point x has rank p if \(x \in \mathcal {F}_p\). The rank is thus a measure of a point's quality (the lower the better). The crowding distance of x estimates the density of points near x, i.e. how isolated x is in the objective space: it is high if F(x) lies in a sparsely explored region of the objective space (thus the higher the better). The points are then sorted according to the order induced by rank and crowding distance. The crowding distance is calculated by selecting, for each objective, the closest point on each side of x, calculating the distance between these two neighbours, and finally summing over all the objective functions. Namely, it is defined front-wise as follows: let \(x^j\), \(j=1,\ldots , l \) be all the points in the pth front \(\mathcal {F}_p\). Let \(f_i\), \(i=1,\ldots , n \), be the ith objective function; assume that the \(x^j\)s are indexed according to their ith objective function values, and define:

Finally the crowding distance of x is defined as: \(c_{dist}(x)= \sum _{i=1}^{n}m_i(x).\)
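The following Python sketch implements a fast non-dominated sort and a crowding distance in the style of NSGA-II [28]. It assumes all objectives are minimized and, as an assumption not stated above, normalizes each objective's contribution by that objective's range within the front, as in the original NSGA-II; boundary points receive an infinite distance. It illustrates the technique and is not the implementation used in this work.

```python
import math


def dominates(fx, fy):
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(fx, fy)) and any(a < b for a, b in zip(fx, fy))


def non_dominated_sort(F):
    """Fast non-dominated sort: return fronts F_1, F_2, ... as lists of indices into F."""
    n = len(F)
    dominated = [[] for _ in range(n)]  # indices each point dominates
    counts = [0] * n                    # how many points dominate each point
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(F[p], F[q]):
                dominated[p].append(q)
            elif dominates(F[q], F[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)          # rank 1
    while fronts[-1]:
        nxt = []
        for p in fronts[-1]:
            for q in dominated[p]:
                counts[q] -= 1
                if counts[q] == 0:       # dominated only by points in earlier fronts
                    nxt.append(q)
        fronts.append(nxt)
    return fronts[:-1]                   # drop the trailing empty front


def crowding_distance(F, front):
    """Crowding distance of each index in `front`; boundary points get infinity."""
    if len(front) <= 2:
        return {i: math.inf for i in front}
    dist = {i: 0.0 for i in front}
    for m in range(len(F[front[0]])):
        order = sorted(front, key=lambda i: F[i][m])
        lo, hi = F[order[0]][m], F[order[-1]][m]
        dist[order[0]] = dist[order[-1]] = math.inf
        if hi == lo:
            continue
        for j in range(1, len(order) - 1):
            # gap between the nearest neighbours on either side, normalized by the range
            dist[order[j]] += (F[order[j + 1]][m] - F[order[j - 1]][m]) / (hi - lo)
    return dist


F = [(1, 5), (2, 3), (3, 4), (4, 1)]
fronts = non_dominated_sort(F)
print(fronts)                           # [[0, 1, 3], [2]]
print(crowding_distance(F, fronts[0]))  # {0: inf, 1: 2.0, 3: inf}
```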

2.2.1 Sensitivity analysis

Sensitivity analysis (SA) may be loosely defined as the study of how the uncertainty in the output of a model can be apportioned to the uncertainty in its input parameters [29]. We use SA both to give an accurate mathematical description of the model parameters considered in the optimization problems and to reduce the dimension of the domain space, thereby lowering the computational cost. To accomplish this task we implement two different SA algorithms, namely the Morris method and the Sobol’s indices based approach. Methods such as Morris’s are usually referred to in the literature as screening or qualitative methods, because they give a qualitative description of the parameters’ influence rather than a quantitative one [30, 31], ranking the factors in order of importance (Morris’s method is a one-at-a-time design). The Sobol’s indices approach, in contrast, is a variance-based quantitative SA method, which gives quantitative information about the portion of output variance that can be explained by each parameter or even by groups of parameters [29]. The price of this information is, of course, a significantly higher computational burden. A review of the working principles of both methods follows.

The basic idea behind the Morris method is that, given the model function and the ith parameter of the domain, low absolute values of \(\frac{\partial F}{\partial x_i}\) may be interpreted as a low influence of the ith parameter on the output. Hence the method consists of random sampling of the domain T (assumed to be a k-dimensional equally spaced grid) and an approximation of the effect of every parameter. Namely, given \(x=(x_1,\ldots , x_k)\) in the domain and \(\varDelta \in \mathbb {R}\), a multiple of the grid step, such that \(x+e_i \,\varDelta =(x_1, x_2, \ldots , x_i + \varDelta , \ldots , x_k)\) still belongs to the domain, where \(e_i\) is the vector of zeros with a one as its ith component, the algorithm computes the elementary effect:

\[ EE_i(x) = \frac{F(x + e_i \varDelta ) - F(x)}{\varDelta }. \]

As x spans the domain we obtain the set:

\[ \mathcal {EE}_i = \{ EE_i(x) : x \in T, \; x + e_i \varDelta \in T \}. \]

The overall influence of the ith parameter is then estimated through the mean \( \mu ^*_i\) and the standard deviation \( \sigma _i\) of the absolute values of the elements of \(\mathcal {EE}_i\) [32]. Specifically, a high mean indicates a strong influence of the ith parameter on the output, and a high standard deviation indicates strongly non-linear effects and/or interactions with other parameters.
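A minimal Python sketch of the elementary-effect screening described above, assuming a scalar model on the unit hypercube, a `levels`-level grid and a simplified one-at-a-time design (each random base point is perturbed once per coordinate, rather than Morris’s trajectory design). Following the text, both \(\mu ^*_i\) and \(\sigma _i\) are computed from the absolute elementary effects. The function name `morris_screening` and its defaults are hypothetical.

```python
import numpy as np


def morris_screening(f, k, n_base=200, levels=4, seed=0):
    """Morris-style screening of a scalar model f on [0, 1]^k.

    Returns (mu_star, sigma): mean and standard deviation of the absolute
    elementary effects |EE_i| of each input, as described in the text.
    """
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, levels)          # equally spaced grid levels
    delta = levels / (2.0 * (levels - 1))         # a multiple of the grid step
    effects = [[] for _ in range(k)]
    for _ in range(n_base):
        x = rng.choice(grid, size=k)              # random grid point
        fx = f(x)
        for i in range(k):
            # step forward if x + e_i*delta stays in the domain, otherwise backward
            step = delta if x[i] + delta <= 1.0 else -delta
            x_pert = x.copy()
            x_pert[i] += step
            effects[i].append((f(x_pert) - fx) / step)   # elementary effect EE_i(x)
    abs_ee = np.abs(np.array(effects))            # shape (k, n_base)
    return abs_ee.mean(axis=1), abs_ee.std(axis=1)


# Hypothetical test model: linear in x0, quadratic in x1, independent of x2.
mu_star, sigma = morris_screening(lambda x: 5.0 * x[0] + x[1] ** 2, k=3)
print(mu_star, sigma)
```

For the linear input every elementary effect is the same, so its \(\sigma \) is zero, while the quadratic input shows a non-zero spread, which is exactly the non-linearity signal discussed above.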

The computation of Sobol’s indices [33] is based on a high-dimensional model representation of the objective function F (known as the ANOVA decomposition). It can be proved [34] that:

\[ V = \sum _{i=1}^{k} V_i + \sum _{1 \le i < j \le k} V_{ij} + \cdots + V_{1,2,\ldots ,k}, \]

where V is the total variance. The terms on the right-hand side represent the contributions of each parameter and of each group of parameters to the total variance. The first-order sensitivity index of the ith parameter is thus defined as \(S_i=\frac{V_i}{V}\); it measures the contribution of each factor, taken alone, to the output. The total effect index \(S_{T_i}\), instead, is defined as \( S_{T_i} = 1 - \frac{V_{-i}}{V}\), where \(V_{-i}\) is the variance of the conditional expectation of the output given all parameters except \(x_i\) [31], i.e. the first-order effect of all the other parameters together; \(S_{T_i}\) therefore also accounts for every interaction involving \(x_i\).

To efficiently approximate the Sobol’s indices we implemented a Monte Carlo approach [29]. The complete procedure is illustrated in Algorithm 1. Let \(A=(A_1, A_2, \ldots , A_k) \in \mathbb {R}^{N,k}\) and \( B=(B_1 , B_2, \ldots , B_k)\in \mathbb {R}^{N,k}\) (where k is the dimension of the domain space and N is the number of samples) be two input sample matrices randomly generated in the domain space, and let \(C_i=(B_1, \ldots , B_{i-1}, A_i, B_{i+1}, \ldots , B_{k})\), \(\forall \; i=1,\ldots ,k\), i.e. the matrix B with its ith column taken from A. Let \(Y\equiv F(x_1, \ldots , x_k) \) be the model output (in our case an n-dimensional vector); the first-order indices \(S_i\) and the total effects \(S_{T_i}\) can then be computed as:

where \(y_A,y_B\) and \(y_{C_i}\) are the evaluations of the objective function on the rows of the matrices A, B and \(C_i\), and \(f_0\) is the mean output, which can be estimated using either sample A or sample B; e.g., using sample A,

\[ f_0 = \frac{1}{N} \sum _{j=1}^{N} y_A^{(j)}, \]

where \(y_A^{(j)}\) is the output on the jth row of A.
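The sketch below estimates first-order and total-effect indices with the sample matrices A, B and \(C_i\) defined above, for one scalar objective with inputs assumed uniform on \([0,1]^k\). Because the estimator formulas themselves are not reproduced in this section, it uses one common Saltelli-type choice (an assumption): \(S_i\) from the products \(y_A y_{C_i}\), which share only \(x_i\), and \(S_{T_i}\) from \(y_B y_{C_i}\), which share every input except \(x_i\), both corrected by \(f_0^2\) and divided by the variance estimated from sample A. For an n-objective model it would be applied to each objective separately.

```python
import numpy as np


def sobol_indices(f, k, N=100_000, seed=0):
    """Monte Carlo first-order (S) and total-effect (ST) Sobol' indices.

    f maps an (N, k) array of inputs, assumed uniform on [0, 1]^k, to N scalar outputs.
    """
    rng = np.random.default_rng(seed)
    A = rng.random((N, k))
    B = rng.random((N, k))
    yA, yB = f(A), f(B)
    f0 = yA.mean()                          # mean estimated from sample A
    V = np.mean(yA ** 2) - f0 ** 2          # total variance estimated from sample A
    S, ST = np.empty(k), np.empty(k)
    for i in range(k):
        C = B.copy()
        C[:, i] = A[:, i]                   # C_i: B with its ith column taken from A
        yC = f(C)
        S[i] = (np.mean(yA * yC) - f0 ** 2) / V          # y_A, y_C share only x_i
        ST[i] = 1.0 - (np.mean(yB * yC) - f0 ** 2) / V   # y_B, y_C share all but x_i
    return S, ST


# Additive test model: exact indices are S = ST = (0.2, 0.8, 0.0).
S, ST = sobol_indices(lambda X: (X[:, 0] - 0.5) + 2.0 * (X[:, 1] - 0.5), k=3)
print(S.round(2), ST.round(2))
```

For an additive model the first-order and total indices coincide; a gap between \(S_{T_i}\) and \(S_i\) would signal interactions involving \(x_i\).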

2.2.2 Robustness analysis

In this section we introduce the concept of robust design and the three different indices we have used to evaluate the robustness of thin-film devices. Clearly, the quality of a solar cell cannot be evaluated by considering only its Pareto optimality with respect to the objective functions. We argue that an important factor to take into account, especially in a design optimization problem such as this one, is the robustness of the device. Intuitively, a device (more generally, a point of the domain) is said to be robust with respect to problem (1) if small changes in the design parameters produce small changes in the objective function computed on the “perturbed design” [35,36,37]. To make this intuitive definition precise we introduce three robustness indices, which prove essential for decision making.

The Local robustness (LR) and Global robustness (GR) indices are based on the idea that perturbations, interpreted as production errors or usage effects, can be assumed to be Gaussian distributed. We set the mean of the distribution to zero and its standard deviation to \(1\%\) of the corresponding parameter value. The Local index is computed as follows: for \(i=1,\ldots ,k\), the ith Local robustness index of x (\(LR_i(x)\)) is obtained via a Monte Carlo approach by perturbing the ith parameter of x N times with Gaussian noise (set as explained above), denoting each perturbed point by \(x+ e_i \varDelta \), where \(e_i\) is the k-vector of zeros with a one as its ith component, and then computing the fraction of perturbed points for which \(F(x + e_i \varDelta )\) does not deviate by more than \(1\%\) from F(x). In other words:

Analogously, the Global robustness index of x (GR(x)) is computed by perturbing all the parameters of x N times with Gaussian noise (denoting each perturbed point by \(x+\varDelta \)) and calculating the fraction of perturbed points for which \(F(x+\varDelta )\) does not deviate by more than \(1\%\) from F(x); in short:

Since the Local robustness index considers only perturbations along coordinate directions, it works well as a screening of a point's robustness and quantifies the robustness with respect to each parameter of the point. The Global robustness index, on the other hand, quantifies the point's resistance against random perturbations of all parameters at once. However, both methods work with a fixed perturbation strength (\(\sigma \)), which bounds the region in which perturbed points are explored.
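A minimal Monte Carlo sketch of the LR and GR indices, using the Gaussian noise settings stated above (zero mean, standard deviation equal to \(1\%\) of each parameter value). Since F is vector-valued and the text does not specify how the \(1\%\) deviation is measured, the sketch assumes (hypothetically) that every objective must stay within \(1\%\) of its nominal value in relative terms. The function names are illustrative.

```python
import numpy as np


def _within_tolerance(F_nominal, F_perturbed, tol):
    # Assumption: every objective must stay within a relative deviation tol of its nominal value.
    return bool(np.all(np.abs(F_perturbed - F_nominal) <= tol * np.abs(F_nominal)))


def local_robustness(F, x, N=1000, rel_sigma=0.01, tol=0.01, seed=0):
    """LR_i(x): fraction of N Gaussian perturbations of x_i alone that keep F within tol."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    Fx = np.asarray(F(x), dtype=float)
    lr = np.empty(x.size)
    for i in range(x.size):
        ok = 0
        for _ in range(N):
            xp = x.copy()
            xp[i] += rng.normal(0.0, rel_sigma * abs(x[i]))   # zero mean, sd = 1% of x_i
            ok += _within_tolerance(Fx, np.asarray(F(xp), dtype=float), tol)
        lr[i] = ok / N
    return lr


def global_robustness(F, x, N=1000, rel_sigma=0.01, tol=0.01, seed=0):
    """GR(x): fraction of N joint Gaussian perturbations of all parameters that keep F within tol."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    Fx = np.asarray(F(x), dtype=float)
    ok = 0
    for _ in range(N):
        xp = x + rng.normal(0.0, rel_sigma * np.abs(x))        # sd = 1% of each parameter
        ok += _within_tolerance(Fx, np.asarray(F(xp), dtype=float), tol)
    return ok / N


# Hypothetical model: insensitive to x0, extremely sensitive to x1.
model = lambda x: np.array([1.0 + 1000.0 * (x[1] - 1.0)])
print(local_robustness(model, [1.0, 1.0]), global_robustness(model, [1.0, 1.0]))
```

The per-coordinate LR values pinpoint which parameter makes the design fragile, whereas GR only reports the overall fraction.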

The “Glocal” robustness index (proposed in [38]) is a hybrid method for evaluating a point's robustness. Given an initial point \(x\in T\), it iteratively searches for the largest viable region in a neighbourhood of x, i.e. a region in which \(F(x+\varDelta )\) does not deviate from F(x) by more than a given tolerance; Monte Carlo integration is then used to approximate the volume of the viable region, and finally the volume is normalized to obtain a robustness index that is independent of the number of model parameters. Hence the “Glocal” index depends on the largest “stable” region around x. An obvious drawback of this method is its heavy computational burden. To reduce the computational effort of the viable region search, the search is guided by Principal Component Analysis (PCA), a standard statistical tool for non-parametrically extracting relevant information from complex data sets (see for example [39]).
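The Glocal procedure of [38] involves an iterative, PCA-guided search that is beyond a short example; the Python sketch below only illustrates its Monte Carlo integration step. For a fixed axis-aligned box around x (an assumption, since the viable region of [38] is found iteratively), it estimates the fraction of the box that is viable and the corresponding volume, treating a sampled point as viable when every objective stays within a relative tolerance `tol` of F(x) (also an assumption). The normalization step of [38] is not reproduced, and `viable_fraction` is a hypothetical name.

```python
import numpy as np


def viable_fraction(F, x, half_widths, N=5000, tol=0.01, seed=0):
    """Monte Carlo estimate of the viable fraction and volume of the box x +/- half_widths.

    A sampled point counts as viable if every objective stays within a
    relative deviation tol of F(x) (an assumption for this sketch).
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    h = np.asarray(half_widths, dtype=float)
    Fx = np.asarray(F(x), dtype=float)
    samples = x + rng.uniform(-1.0, 1.0, size=(N, x.size)) * h   # uniform in the box
    viable = sum(
        bool(np.all(np.abs(np.asarray(F(s), dtype=float) - Fx) <= tol * np.abs(Fx)))
        for s in samples
    )
    fraction = viable / N
    volume = fraction * np.prod(2.0 * h)     # Monte Carlo integral of the viable indicator
    return fraction, volume


# Hypothetical model: viable only for |x0| <= 0.01, i.e. half of the x0 range of the box.
frac, vol = viable_fraction(lambda x: np.array([1.0 + x[0]]), [0.0, 0.0], [0.02, 1.0])
print(frac, vol)   # roughly 0.5 and 0.04
```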

2.2.3 Identifiability analysis

The last step of the methodology we present is Identifiability Analysis (IA). In its most general form (see [40] for further details on the definition), consider a system governed by a set of (possibly differential) equations. Let z be the state variable of the model, with \(z_0\) the initial value of z. Let t be the time variable and x the parameter vector of the model (i.e. the design parameters of the solar cell in our case). Identifiability analysis is applied to a model of the form

where \(\varphi \) is a function of the state variable z, its derivatives, the k-dimensional parameter vector x, and time t. Equation (3) enforces the initial condition of the model. The observation variable y is a function of z, t and x. A parameter \(x_i\) is said to be identifiable (with respect to the model considered) if, for given values of \( z_0\) and y, equations (2)–(4) admit a unique solution for \(x_i\); otherwise it is said to be (structurally) non-identifiable. Non-identifiability manifests itself through functional relations between the non-identifiable parameters, so most IA algorithms search the model for such relations.

In this work we use the MOTA (mean optimal transformation approach) algorithm, a data-based Identifiability Analysis algorithm; data-based methods form a class of a posteriori, model-independent methods and are hence well suited to our application. The MOTA algorithm [40] is based on repeated runs of the ACE (alternating conditional expectation) algorithm [41], which estimates optimal transformations that maximize the linear correlation between parameters. Briefly, choosing one parameter, say \(x_j\), as the response and the others as predictors, and assuming that there exist transformations such that

\[ \psi (x_j) = \sum _{i \ne j} \phi _i(x_i) + \epsilon , \qquad (5) \]

\(\epsilon \) being Gaussian noise, the ACE algorithm estimates, via an iterative process, optimal transformations \(\overline{\psi }\) and \(\overline{\phi _i}\), \(i \ne j\), such that:

However, the ACE algorithm alone cannot be used for IA: if, say, \(\phi _i(x_i)=0\) (i.e. \(x_i\) is not correlated with \(x_j\)), it may still happen that \(\overline{\phi _i}(x_i) \ne 0\), because the algorithm uses \(x_i\) to explain the noise in Eq. (5). The idea behind the MOTA algorithm is then easy to grasp: the ACE algorithm is run several times, and if the estimated ith transformation \(\overline{\phi _i}(x_i)\) remains sufficiently stable across runs (the authors introduced a precise index for this), parameter \(x_i\) is considered to be correlated with \(x_j\). Finally, parameters that are found to be correlated with each other are considered non-identifiable.
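To make the ACE step concrete, here is a minimal Python sketch of alternating conditional expectations for one response \(x_j\) (passed as `y`) and its predictors (the columns of `X`). As a simplifying assumption, conditional expectations are estimated by averaging within quantile bins rather than with the smoother used in [41], and the repeated-run stability test that MOTA adds on top is not implemented. The function names are hypothetical.

```python
import numpy as np


def _cond_mean(target, x, n_bins=20):
    """Estimate E[target | x] by averaging target within quantile bins of x."""
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1))
    idx = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, n_bins - 1)
    out = np.empty(target.shape, dtype=float)
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            out[mask] = target[mask].mean()
    return out


def ace(y, X, n_iter=30, n_bins=20):
    """Alternating conditional expectations: estimate theta(y) and phi_i(X[:, i])
    so that theta(y) is approximated by sum_i phi_i(X[:, i])."""
    n, p = X.shape
    theta = (y - y.mean()) / y.std()              # start from the standardized response
    phi = np.zeros((n, p))
    for _ in range(n_iter):
        for i in range(p):
            # backfitting: fit phi_i to the partial residual of the other transformations
            partial = theta - phi.sum(axis=1) + phi[:, i]
            phi[:, i] = _cond_mean(partial, X[:, i], n_bins)
        fitted = _cond_mean(phi.sum(axis=1), y, n_bins)   # E[sum phi | y]
        theta = (fitted - fitted.mean()) / fitted.std()   # keep unit variance
    return theta, phi


rng = np.random.default_rng(1)
x1, x2 = rng.uniform(-1.0, 1.0, 2000), rng.uniform(-1.0, 1.0, 2000)
y = x1 ** 2 + 0.01 * rng.normal(size=2000)       # response depends on x1 only
theta, phi = ace(y, np.column_stack([x1, x2]))
print(np.corrcoef(phi[:, 0], x1 ** 2)[0, 1], phi[:, 1].std())
```

In this example the estimated transformation of \(x_1\) should track \(x_1^2\), while that of the unrelated predictor stays small but not exactly zero, which is precisely the residual noise fitting that motivates MOTA's repeated runs.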

In the next section we introduce the optical model we have used to evaluate the optical efficiency of tandem thin-film silicon solar cells. To conclude this methodology section, Algorithm 2 presents the pseudo-code of our methodology in the MOO case.

3 Thin-film silicon solar cell

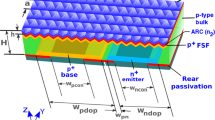

The structure of the thin-film silicon solar cell used as a case study is shown in Fig. 2. The cell is based on two coupled p-i-n devices, one made of amorphous Si (\(a-Si\)) and the other of micro-crystalline Si (\(\mu c-Si\)), separated by a thin ZnO layer. The two p-i-n photodiodes are sequentially grown on a thick \(SiO_2 + ZnO\) substrate, and a metallic electrode (Ag in our case) completes the structure. Light enters through the glass. The tandem solar cell takes advantage of the different regions of the solar spectrum in which each of the two materials harvests light efficiently, owing to their different band gaps: \(\sim 1.1\)eV for \(\mu c-Si\) and \(\sim 1.7\)eV for \(a-Si\). Thin-film technology makes it possible to build cheaper PV devices: instead of cutting thick wafers of polycrystalline Si and then using them to build the device, the layers are grown one after another by plasma-assisted chemical vapour deposition [42]. This makes it possible to design devices with a Si layer (more generally, an absorber layer) thickness of the order of a \(\mu m\), two orders of magnitude less than in previously used devices. However, a thinner layer clearly absorbs less, so light trapping techniques have been used to lengthen the photon optical path in the layers. Examples are the introduction of a back reflector layer in the device architecture and nano-texturing of the cell layer interfaces. The latter is usually obtained using alkaline solutions, such as KOH or NaOH, which etch the silicon, forming randomly positioned square pyramids. Their depth can be accurately tuned by controlling the temperature and duration of the etching process (an overview of recent advances may be found in [43]).

The optimization of the cell geometry in terms of light harvesting can be performed by modifying meso-scale features (the thicknesses of the different layers) and nano-scale features (the nano-texturing of the interfaces). However, the meso-scale features cannot be optimized without considering the processing time (and the related cost), which is a monotonic function of the layer thickness (especially that of the \(\mu c-Si:i\) layer [1]). It is worth noting that there are alternatives to random nano-texturing: the work in [44] demonstrates that periodic nano-texturing may strongly improve the efficiency of the solar device. Understanding the trade-off between the different effects in the cell design is, of course, a complex problem that requires a suitable numerical approach. We now give a brief overview of the two methods [9, 10] that we have combined to compute the quantum efficiency of the devices.

3.1 Electromagnetic fields computation

Let us assume that the electromagnetic radiation is normally incident on the device. First, we define:

- I, R, T as the amplitudes of the incident, reflected and transmitted electric fields;
- \(\sigma _i\), \(i=1, \ldots , 11\), as the roughness of the interface between the \((i-1)\)th and the ith layer (where the 0th layer is the external ambient);
- \(d_i\), \(i=1, \ldots , 11\), as the thickness of the ith layer;
- \(N_i = n_i - \imath \; k_i\) as the complex refractive index of the ith layer;
- \(S_{r,i}=e^{-\frac{1}{2}(\frac{2 \pi n_{i-1} \sigma _i}{\lambda })^2}\;\) and \(\;S_{t,i}=e^{-\frac{1}{2}(\frac{2 \pi [n_{i-1} - n_i]\sigma _i}{\lambda })^2}\), \(\lambda \) being the wavelength;
- \(E^{LF}_i\), \(E^{RF}_i\), \(E^{LB}_i\), \(E^{RB}_i\), \(i = 1, \ldots , 12\), as the amplitudes at the ith interface of the forward-travelling (F) and backward-travelling (B) waves on its left (L) and right (R) sides.

According to Fresnel’s equations for nano-rough interfaces, the complex reflection and transmission coefficients (forward and backward) are given by

The second factors in (7)–(8) account for the roughness of the interfaces. Hence, the relationship between the incident, reflected and transmitted amplitudes is

where

where \(i = 1, 2, \ldots , 11, 12 \) and \(\beta _i \equiv \frac{2 \pi n_i}{\lambda }- \frac{2 \pi k_i}{\lambda }\imath \).
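As a sketch of how the roughness factors could enter the interface coefficients, the Python snippet below computes \(S_{r,i}\) and \(S_{t,i}\) exactly as defined above and combines them with the standard normal-incidence Fresnel amplitude coefficients of a flat interface. Since (7)–(8) are not reproduced in this section, the multiplicative combination (forward direction only) is an assumption based on the remark that their second factors are due to roughness; the helper names are hypothetical.

```python
import numpy as np


def roughness_factors(n_prev, n_next, sigma, wavelength):
    """S_r and S_t of an interface, as defined in the text (real refractive indices)."""
    s_r = np.exp(-0.5 * (2.0 * np.pi * n_prev * sigma / wavelength) ** 2)
    s_t = np.exp(-0.5 * (2.0 * np.pi * (n_prev - n_next) * sigma / wavelength) ** 2)
    return s_r, s_t


def rough_fresnel(N_prev, N_next, sigma, wavelength):
    """Forward reflection/transmission amplitude coefficients at normal incidence.

    Assumption: flat-interface Fresnel coefficients multiplied by S_r and S_t.
    N_prev, N_next are complex indices n - i k; sigma and wavelength share units.
    """
    r_flat = (N_prev - N_next) / (N_prev + N_next)
    t_flat = 2.0 * N_prev / (N_prev + N_next)
    s_r, s_t = roughness_factors(N_prev.real, N_next.real, sigma, wavelength)
    return r_flat * s_r, t_flat * s_t


# Interface n = 1.0 -> 1.5 at 500 nm: smooth vs. 50 nm roughness.
print(rough_fresnel(1.0 + 0j, 1.5 + 0j, 0.0, 500.0))   # flat: r = -0.2, t = 0.8
print(rough_fresnel(1.0 + 0j, 1.5 + 0j, 50.0, 500.0))  # |r| reduced by S_r
```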

Having calculated the amplitudes, the corresponding intensities can easily be computed. Using (9) we can determine \(E^{RF}_i\) and \(E^{RB}_i\), and hence the resultant forward-going wave \(E_m^F(x)\) and the resultant backward-going wave \(E_m^B(x)\). Finally, we obtain the absorption profile in the ith layer and the corresponding integrated absorption, and sum them over all wavelengths to obtain the total absorption profile and the related integrated absorption. As explained in [10], the scattered light in the ith layer can then be approximated by

Refer to the original work for details on the computation of \(\alpha \).

3.1.1 Scattered light computation

To evaluate the total absorption profile of scattered light we have used the Monte Carlo approach presented in [10]: a number of photons are traced until each is either absorbed in a layer or reflected back to the air. The possible behaviours of a photon depend on its position within the cell:

- A photon in a layer may be absorbed with a probability that depends on \(k_i\), \(d_i\) and \(\theta \), the latter being the angle between the photon direction and the normal to the layer.
- A photon incident on a rough interface may be (i) transmitted and scattered, (ii) reflected and scattered, or (iii) specularly transmitted (refracted) or specularly reflected; the probabilities of these events depend on \(\theta _i\), \(\theta _{i+1}\) (the refraction angles, which can be calculated using Snell’s law), \(n_i\) and \(\sigma _i\).
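A minimal Python sketch of two ingredients of such a photon tracer, under stated assumptions: the single-pass absorption probability is taken from the Beer-Lambert law with absorption coefficient \(\alpha = 4\pi k_i/\lambda \) along the oblique path \(d_i/\cos \theta \) (the exact probabilities of [10] are not reproduced here), and refraction angles follow Snell’s law. The Monte Carlo estimate samples exponential free paths and should agree with the closed-form probability. All function names are hypothetical.

```python
import numpy as np


def absorption_probability(k, d, theta, wavelength):
    """Single-pass absorption probability through a layer of thickness d at angle theta,
    from Beer-Lambert with alpha = 4*pi*k/wavelength (assumed model)."""
    alpha = 4.0 * np.pi * k / wavelength
    return 1.0 - np.exp(-alpha * d / np.cos(theta))


def mc_absorbed_fraction(k, d, theta, wavelength, n_photons=100_000, seed=0):
    """Monte Carlo counterpart: sample each photon's free path from an exponential
    distribution and count it as absorbed if the path ends inside the layer."""
    rng = np.random.default_rng(seed)
    alpha = 4.0 * np.pi * k / wavelength
    free_paths = rng.exponential(1.0 / alpha, size=n_photons)
    return np.mean(free_paths < d / np.cos(theta))


def snell(theta_in, n_in, n_out):
    """Refraction angle from n_in sin(theta_in) = n_out sin(theta_out); None on total internal reflection."""
    s = n_in * np.sin(theta_in) / n_out
    return None if abs(s) > 1.0 else float(np.arcsin(s))


# Weakly absorbing layer: k = 0.01, d = 300 nm, theta = 0.5 rad, wavelength = 600 nm.
print(absorption_probability(0.01, 300.0, 0.5, 600.0), mc_absorbed_fraction(0.01, 300.0, 0.5, 600.0))
```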

3.2 Thin-film silicon solar cell problem

As previously explained, the parameters we consider are: (i) the roughness of each interface, \(\sigma _i\), \(i=1, \ldots , 11\); and (ii) the thickness of the micro-crystalline silicon layer, \(d_{\mu c -Si}\). The multi-objective optimization problem can be summarized as:

in which \(Q_e\) is the average overall quantum efficiency of the cell under ideal charge collection conditions (100 % collection efficiency, independent of frequency), calculated as the average absorption in the two intrinsic layers \(\mu c-Si\):i and \(a-Si\):i, \(d_{\mu c -Si}\) is the thickness of the intrinsic \(\mu c-Si\) layer, and \(\overline{d}_i\) is the thickness of the ith layer of the reference cell (Table 1). As already mentioned, minimizing the thickness of the micro-crystalline layer is justified by the high fabrication cost of this particular layer. The constraints in (10) are introduced to mitigate the scattering weight for films much thinner than the roughness of the first ZnO layer. In these cases the approximation made by the modelling approach used in this paper no longer holds; an improved description can be obtained using the effective medium approximation [45], which will be included in the next version of the algorithm.

Fig. 3 Results of the Morris analysis applied to the solar cell optical model with \(N =10{,}000\) trials. The most important parameters are \(d_{\mu c -Si}\), as could easily be expected, \(\sigma _2\) and \(\sigma _4\); hence these three parameters are the ones to be considered during analysis and design. Parameters such as \(\sigma _5, \; \sigma _8, \; \sigma _9\) have a weak effect on the model output.

4 Results

In this section we present, step by step, the results obtained with our methodology.

4.1 Sensitivity analysis

The results of the Morris analysis with \(N =10{,}000\) trials, plotted in Fig. 3, give a qualitative ranking in which the most relevant factors (those with high values of \(\mu ^*\)) are: (i) the thickness of the intrinsic layer, confirming what was stated above; (ii) the roughness of the interface above the ZnO layer, the first textured material encountered by an incident photon, which should thus have a relevant effect in terms of scattering events; and (iii) the roughness of the intrinsic amorphous silicon layer, the first absorbing layer encountered by an incident photon. It is worth noting that the corresponding Morris standard deviations \(\sigma \) are high too, i.e. these three parameters act on the model objectives through strongly non-linear effects and/or interactions with other parameters. Parameters \(\sigma _5, \; \sigma _8, \; \sigma _9\) have a weak effect on the model output (less than \(1\%\) of the maximum effect).

The Morris analysis results are confirmed, extended and quantified by the Sobol’ indices for the first-order effects and the total effects, listed in Table 2 along with their relative errors (\(50\%\) probability). Once again, parameters such as \(\sigma _3\), \(\sigma _5\), \(\sigma _6\), \(\sigma _8\) and \(\sigma _9\) are ranked as non-influential (less than \(1\%\) of the maximum \(S_{T_i}\) value), confirming the preliminary qualitative ranking of the Morris analysis, whereas \(d_{\mu c -Si}\), \(\sigma _2\) and \(\sigma _4\) are the most influential parameters (the total effects of the other parameters are smaller by at least a factor of 10). Notice, however, that the results for \(\sigma _{11}\) and \(\sigma _{7}\) differ between the two sensitivity analyses performed here: they are ranked as non-influential by the Sobol analysis, whereas the Morris analysis ranks them as the fifth and sixth most important parameters of the model. We make a conservative choice here, treating as non-influential only the parameters on which both analyses agree. We hence discard the non-influential parameters (i.e. fix them to predefined values). However, in order to demonstrate the power of the “SA + Optimization” approach, we ran the optimization algorithms twice: once on the fully parametrized model and once on the reduced one. We find that the computational effort of “SA + Optimization” is remarkably lower than that of the fully parametrized model; a small loss in the optimal point is found in the SOO case, whereas a significant improvement is found in the MOO case.
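The quantitative step can be sketched with standard Monte Carlo estimators for Sobol’ indices built on two independent sample matrices A and B: the first-order estimator of Saltelli et al. (2010) and Jansen's total-effect estimator. This is an illustrative sketch, not necessarily the estimator behind Table 2; inputs are assumed independent and uniform on \([0,1]\), and `sobol_indices` and `agreed_insensitive` are hypothetical names. The second helper encodes the conservative rule described above: a parameter is fixed only if both the Morris \(\mu^*\) and the Sobol’ \(S_{T_i}\) fall below \(1\%\) of their respective maxima.

```python
import numpy as np


def sobol_indices(f, k, n=20000, seed=0):
    """First-order (S1) and total-effect (ST) Sobol' indices on [0, 1]^k.

    `f` must be vectorised: it maps an (n, k) array to an (n,) array.
    Cost: n * (k + 2) model evaluations.
    """
    rng = np.random.default_rng(seed)
    A = rng.random((n, k))
    B = rng.random((n, k))
    fA, fB = f(A), f(B)
    var = np.var(np.concatenate([fA, fB]), ddof=1)
    S1, ST = np.empty(k), np.empty(k)
    for i in range(k):
        AB_i = A.copy()
        AB_i[:, i] = B[:, i]  # A with column i taken from B
        fAB = f(AB_i)
        S1[i] = np.mean(fB * (fAB - fA)) / var        # Saltelli et al. (2010)
        ST[i] = 0.5 * np.mean((fA - fAB) ** 2) / var  # Jansen (1999)
    return S1, ST


def agreed_insensitive(mu_star, st, frac=0.01):
    """Indices of inputs that BOTH screenings rank below frac * maximum."""
    mu_star, st = np.asarray(mu_star, float), np.asarray(st, float)
    weak = (mu_star < frac * mu_star.max()) & (st < frac * st.max())
    return np.flatnonzero(weak)


# Additive toy model: analytic S1 = ST = (0.2, 0.8, 0.0)
S1, ST = sobol_indices(lambda X: X[:, 0] + 2.0 * X[:, 1], k=3)
print(np.round(S1, 2), np.round(ST, 2))
```

For an additive model the first-order and total indices coincide; a gap \(S_{T_i} - S_{1,i}\) signals interactions, which is the quantitative counterpart of a large Morris \(\sigma\).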

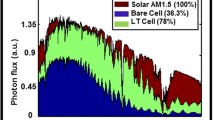

4.2 Single objective optimization

The termination criterion we used in the single objective optimizations is either an upper bound on the number of function evaluations (\(50k^2\), k being the dimension of the domain space) or the stagnation of the algorithm. Notice that in our simulations the algorithm always terminated due to stagnation of the solution. The efficiency gain of the best design found, compared to the reference cell, is remarkable, with a relative improvement in \(Q_e\) of about \(6.71\%\). The best total absorption profile obtained is plotted in Fig. 4; the improvement compared to the reference cell is \(5.88\%\) (the quantum efficiency and the thickness of the intrinsic layer are 0.604169 and 2210, respectively). However, notice that almost all parameter values are maximized (with respect to the constraints in (10)), which leads to a fragile design. To overcome this important issue, we ran the algorithm again, tightening the constraint on \(d_{\mu c -Si}\) to \(d_{\mu c -Si}\le 1.1 \, \overline{d}_{\mu c -Si}\). Three notable designs found are: (i) the one maximizing \(Q_e\) (objective function values: \(Q_e=0.5928\), \(d_{\mu c -Si}=1814.45\)); (ii) the one minimizing \(d_{\mu c -Si}\) (\(Q_e=0.5721\), \(d_{\mu c -Si}=1190\)); and (iii) the best trade-off obtained, i.e. the design closest to the ideal point [26] (\(Q_e=0.5758\), \(d_{\mu c -Si}=1305\)). Note that the design minimizing the micro-crystalline intrinsic layer thickness (\(30\%\) less than the reference cell) still achieves a \(1.27\%\) absorption improvement and a higher \(Q_e\) than the reference cell.
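The “closest to the ideal point” selection used for trade-off (iii) can be sketched as follows. Both objectives are cast as minimisation (\(-Q_e\) and \(d_{\mu c-Si}\)), min-max normalised over the candidate set so that the ideal point maps to the origin, and the design with the smallest Euclidean distance is returned. The normalisation and the Euclidean norm are assumptions (other scalings are common), and `closest_to_ideal` is a hypothetical name. As a toy input we reuse only the three designs quoted above; on that reduced set the sketch picks the third one, while the selection reported above was made over the full set of designs found.

```python
import numpy as np


def closest_to_ideal(qe, thickness):
    """Index of the design nearest to the ideal point (max Qe, min d).

    Objectives are min-max normalised over the given set (an assumption),
    so the ideal point is the origin of the normalised objective space.
    """
    F = np.column_stack([-np.asarray(qe, float), np.asarray(thickness, float)])
    lo, hi = F.min(axis=0), F.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    F_norm = (F - lo) / span
    return int(np.argmin(np.linalg.norm(F_norm, axis=1)))


qe = [0.5928, 0.5721, 0.5758]
d = [1814.45, 1190.0, 1305.0]
print(closest_to_ideal(qe, d))  # 2
```

Normalising first matters: without it, the thickness (order \(10^3\)) would swamp \(Q_e\) (order \(10^{-1}\)) and the “trade-off” would simply be the thinnest design.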

Finally, the results obtained through the “SA + MCS” optimization reach a \(5.55\%\) total absorption improvement. Using this type of optimization therefore entails a small loss in performance with respect to the “full optimization” MCS; yet we want to underline that the computational burden of the “SA + SOO” optimizations is significantly reduced: 24.3% on average.

Pareto-front approximations obtained and feasible points explored using NSGA-II in the multi objective optimization of the optical model of the tandem thin-film silicon solar cell, with the “full parametrized” model (a) and with the reduced “SA + MOO” model (b). Notice how the SA + MOO approach seems to perform surprisingly better than the simple MOO one (c). a Full parameterized model. b Reduced order model. c Comparison of the Pareto-front approximations obtained using NSGA-II

4.3 Multi objective optimization

The Pareto-front approximation obtained by applying NSGA-II to the multi-objective, fully parametrized problem is plotted in Fig. 5a (the total number of generations is 700 and the population size is 100). The \(Q_e\) of the non-dominated designs found by the algorithm spans from \(\approx 0.567\) to \(\approx 0.6\), whereas \(d_{\mu c-Si}\) spans from 1190 to \(\approx 2210\) (the maximum range allowed by the constraints). We have thus obtained a widely spread Pareto-front approximation.
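The notion of a non-dominated design used throughout this section can be made concrete with a brute-force filter for the two objectives (maximise \(Q_e\), minimise \(d_{\mu c-Si}\)). This quadratic-time sketch only illustrates Pareto dominance; NSGA-II itself uses fast non-dominated sorting and a crowding-distance operator, which are not reproduced here, and `non_dominated` is a hypothetical helper. The toy values are invented for illustration.

```python
import numpy as np


def non_dominated(qe, thickness):
    """Indices of designs not dominated by any other (max Qe, min d)."""
    F = np.column_stack([-np.asarray(qe, float), np.asarray(thickness, float)])
    keep = np.ones(len(F), dtype=bool)
    for i in range(len(F)):
        # Design j dominates i if it is no worse in every objective
        # and strictly better in at least one.
        dominators = np.all(F <= F[i], axis=1) & np.any(F < F[i], axis=1)
        keep[i] = not dominators.any()
    return np.flatnonzero(keep)


qe = [0.600, 0.580, 0.567, 0.590]
d = [2200.0, 1300.0, 1190.0, 2300.0]
print(non_dominated(qe, d))  # [0 1 2]
```

The last toy design is discarded because the first one has both a higher \(Q_e\) and a thinner intrinsic layer.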

Total absorption profile (in red) of the cell with the maximum \(Q_e\) value obtained using NSGA-II. The reference cell total absorption profile is plotted in blue for comparison. The improvement, compared to the reference cell absorption profile, is 5.083%. The \(Q_e\) and \(d_{\mu c -Si}\) values for this cell are 0.599967 and 2209.97876, respectively. Table 3 (fifth column) lists all the parameter values of the cell along with the robustness indexes. (Color figure online)

We may notice that the designs obtained using MOO are definitely more robust than those obtained previously. The total absorption profile of the cell with the maximum quantum efficiency (\(Q_e=0.6000\), \(d_{\mu c -Si}=2209\)) obtained in these runs is plotted, along with that of the reference cell, in Fig. 6: the improvement, which mainly lies in the \( \left[ 700 , 900\right] \) wavelength region, is \(5.083 \%\). The best absorption improvement obtained in these runs is \(5.17\%\).

The Pareto-front approximation obtained in the “SA + MOO” optimization (with NSGA-II set as above), along with the feasible designs explored, is plotted in Fig. 5b, whereas the Pareto-front approximations obtained with the “MOO” and the “SA + MOO” approaches are compared in Fig. 5c. The improvement with respect to the “full-parameterized” Pareto-front approximation is remarkable: Fig. 5c clearly shows that the “SA + MOO” Pareto front dominates the one obtained using the simple MOO approach. This significant improvement may be due to the particular way in which EAs explore the objective space; a reduced domain space seems to significantly enhance the search. This result demonstrates the consistency of our combined algorithmic approach, which in complex problems (such as the one associated with thin-film cell design) should enhance the capability of a heuristic optimization approach. In particular, SA proves to be a fundamental pre-processing tool for investigating parameter effects on the model. The convergence rate of the algorithm is analysed graphically in Fig. 7, where we plot the non-dominated fronts computed during the iterations of NSGA-II.
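The visual comparison in Fig. 5c could be complemented by a scalar indicator such as Zitzler and Thiele's set-coverage metric \(C(A,B)\): the fraction of points of front B that are weakly dominated by at least one point of front A. This metric is not used in the text; the sketch below is an optional check that assumes both fronts are given as arrays of minimisation objectives (\(-Q_e\), \(d_{\mu c-Si}\)), and `coverage` is a hypothetical name. The toy fronts are invented. \(C(A,B)=1\) together with \(C(B,A)=0\) means every point of B is covered by A while no point of A is covered by B.

```python
import numpy as np


def coverage(front_a, front_b):
    """Set coverage C(A, B): share of B weakly dominated by some point of A.

    Each front is an (n, m) array of objectives to be minimised,
    e.g. columns (-Qe, d).
    """
    A = np.asarray(front_a, float)
    B = np.asarray(front_b, float)
    covered = [bool(np.any(np.all(A <= b, axis=1))) for b in B]
    return float(np.mean(covered))


front_sa = [[-0.60, 1200.0], [-0.58, 1100.0]]    # toy "SA + MOO" front
front_full = [[-0.59, 1300.0], [-0.57, 1150.0]]  # toy "full MOO" front
print(coverage(front_sa, front_full), coverage(front_full, front_sa))  # 1.0 0.0
```

The metric is not symmetric, so both directions must be reported.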

The Pareto orientation of the optimization allows us to perform a parametric analysis of the absorption profiles of optimal trade-offs. Figure 8 shows the absorption profile of Pareto-optimal solutions as a function of the quantum efficiency. This plot demonstrates that most of the gain in the optical efficiency of Pareto-optimal cells is due to improved absorption at long wavelengths. Indeed, the effect is reflected by a shift in the figure, which is appreciable even for quantum efficiency variations of the order of \(10^{-2}\).

Finally, Fig. 9 depicts the absorption profile associated with the closest-to-ideal design found by OptIA for this optimization problem. It has a \(Q_e\) of 0.5719 and a \(d_{\mu c -Si}\) of 1190, i.e. variations of \(-\,2.29\%\) and \(-\,25.63 \%\), respectively, compared to the closest-to-ideal cell design found by NSGA-II.

4.3.1 Robustness analysis of notable designs

The Local and Global robustness indexes of the trade-offs found by NSGA-II are listed in Table 3. Even though the Local indexes are very high, the Global robustness indexes do not even reach \(70\%\). Thus, the designs analysed are very resistant to perturbations along coordinate directions, but become non-robust (fragile) when the perturbations are combined. This means that, at least locally for the points analysed, the objective functions of the model are strongly non-linear, depending mostly on combinations of parameters rather than on the separate effects of single parameters. The only Local indexes that are not maximal are those associated with \(d_{\mu c -Si}\), \(\sigma _2\) and \(\sigma _4\), which locally confirms the global results given by the SA. Figure 10 highlights the knee points of the Pareto front, along with their respective Global robustness indexes. The highest index found is that of Trade-off 3, namely \(67.37\%\), which makes it the most robust design analysed with respect to the optical model. Its “Glocal” index is 1.8035, which, compared to the total volume of the feasible region, indicates a relatively large viable region, confirming and strengthening our hypothesis on the robustness of this device.
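The Local and Global indexes can be read as Monte Carlo yield estimates. The sketch below assumes a common definition from the robustness literature: a perturbed design counts as robust if its objective stays within a relative tolerance \(\epsilon\) of the nominal value, and the index is the fraction of robust trials. The Gaussian relative perturbations, the values of `sigma`, `eps` and `trials`, and the name `robustness_index` are illustrative assumptions, not the settings behind Table 3. Passing `coord` perturbs a single coordinate (Local index); leaving it as `None` perturbs all coordinates jointly (Global index).

```python
import numpy as np


def robustness_index(f, x, sigma=0.05, eps=0.01, trials=1000, coord=None, seed=0):
    """Fraction of random perturbations that keep f within eps (relative).

    coord=None -> all coordinates perturbed jointly (Global index);
    coord=i    -> only coordinate i is perturbed (Local index for i).
    Perturbations are Gaussian with standard deviation sigma * |x_j|.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, float)
    f0 = f(x)
    robust = 0
    for _ in range(trials):
        noise = rng.normal(0.0, sigma, size=x.size) * np.abs(x)
        if coord is not None:
            only_i = np.zeros(x.size)
            only_i[coord] = 1.0
            noise *= only_i
        robust += int(abs(f(x + noise) - f0) <= eps * abs(f0))
    return robust / trials


# Toy objective that ignores x[1]: perturbing x[1] alone never breaks it
toy = lambda x: 10.0 * x[0]
x_nom = [1.0, 1.0]
print(robustness_index(toy, x_nom, coord=1), robustness_index(toy, x_nom))
```

The toy case reproduces, in miniature, the pattern discussed above: a coordinate the objective does not depend on yields a Local index of 1, while joint perturbations can still make the design fragile.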

4.4 Identifiability analysis

Table 4 lists the results of MOTA applied to \(10^{-5}\) non-dominated points. The important relationships found using these points are between p4 and p5 and between p7 and p9, plotted in Fig. 11a, b, respectively. Notice that these hidden functional relationships cause complex behaviour of the model output with respect to the domain space, thus making the task of an optimization algorithm harder. However, none of them appears in the “SA + MOO” optimization (at least one parameter involved in each of these relationships is fixed in the latter), and this may be one of the reasons for the improved behaviour of the optimization algorithms.
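MOTA itself relies on the alternating conditional expectations (ACE) algorithm and is not reproduced here. As a much cruder and clearly different first look for pairwise relationships among the parameters of non-dominated designs, one can compute Spearman rank correlations between parameter columns and flag strongly correlated pairs. This only detects monotone pairwise dependencies and misses the non-monotone or multi-parameter relationships MOTA is designed for; the threshold, the toy data and the name `rank_correlation_pairs` are assumptions.

```python
import numpy as np
from itertools import combinations


def rank_correlation_pairs(X, threshold=0.8):
    """Parameter pairs whose |Spearman rank correlation| >= threshold.

    X is an (n_designs, n_params) array, e.g. parameters of non-dominated
    designs. Ties are ranked arbitrarily (fine for continuous samples).
    """
    X = np.asarray(X, float)
    ranks = np.argsort(np.argsort(X, axis=0), axis=0).astype(float)
    R = np.corrcoef(ranks, rowvar=False)  # Pearson on ranks = Spearman
    return [(i, j, float(R[i, j]))
            for i, j in combinations(range(X.shape[1]), 2)
            if abs(R[i, j]) >= threshold]


rng = np.random.default_rng(0)
p = rng.random(500)
X = np.column_stack([p, p ** 3, rng.random(500)])  # col 1 is monotone in col 0
print(rank_correlation_pairs(X))
```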

Pareto fronts obtained in the 8 NSGA-II simulations (population size set to 100, total number of generations 200). Each simulation is performed with a different combination of the doping dosage (optimal, normal, low, none) of the ZnO used for the TCO layer and the roughness (smooth, rough) of the Ag back reflector

4.5 TCO and back reflector materials

In the previous sections we have discussed the results of our optimization and the analysis of the most common structure of tandem solar cells: highly doped ZnO used as TCO and smooth Ag used as BR. Of course, this is not the only possible choice: there is a large number of different possible materials (with prices comparable to Si) and different doping dosages that may be used in solar cell design. In this section we discuss the results we have obtained exploring some alternatives: (i) varying the doping dosage of the ZnO layers among four conditions: non-doped, low doped (resistivity larger than 100 m\(\varOmega \times \) cm), normally doped (resistivity of the order of 1 m\(\varOmega \times \) cm) and optimally doped (resistivity less than 1 m\(\varOmega \times \) cm); (ii) considering rough Ag instead of smooth Ag.

Varying the ZnO layer doping dosage and the roughness of the Ag, we have obtained a considerable gain in quantum efficiency. We have considered eight different cell architectures (see Sect. 4.5 for the details on the materials), which arise from the combination of: (i) non-doped, lowly, normally or optimally doped ZnO; (ii) smooth or rough Ag. The combination of optimally doped ZnO with smooth Ag is the device analysed in Sect. 3.2. We applied the NSGA-II algorithm, setting the population size to 100 and the maximum number of generations to 200, in order to find which of these devices performs better. The eight Pareto-front approximations obtained are shown in Fig. 12. The results clearly show that, for low efficiency, the best combination of materials is given by normally doped ZnO and smooth Ag. For high efficiency, the devices seem to perform in a very similar way. In Tables 5 and 6 we compare the results obtained using NSGA-II and OptIA in terms of the optimality of the closest-to-ideal trade-off for each of the eight optimizations performed in this section (Table 7).

5 Conclusions

In this work we have given a complete analysis of a tandem thin-film silicon solar cell model. The combination of the matrix method and calibrated photonic Monte Carlo simulations allowed us to obtain an accurate and fairly fast optical model that could then be analysed systematically.

The results obtained in all the different optimizations performed not only widely demonstrate the applicability of our methodology but also show the importance of every step. The understanding of a high-dimensional model would not have been possible without a preliminary sensitivity analysis. The MCS algorithm was used to find both extremely efficient designs, with up to a \(6.71\%\) Quantum Efficiency improvement and a \(5.88\%\) gain in the total absorption profile, and fairly efficient designs, with up to a \(3.70\%\) gain in the total absorption profile, under cost-saving bounds. NSGA-II was used to find Pareto-optimal points of the problem: maximize Quantum Efficiency, minimize Intrinsic Layer Thickness, hence balancing efficiency and cost savings. An interesting fact about the points found in the NSGA-II runs is that they hold higher Global Robustness indexes than the SOO results, even exceeding \(80\%\). Hence the MCS results behave better in terms of efficiency, while the NSGA-II results show better behaviour in terms of stability. Of great importance was the improved behaviour of the NSGA-II algorithm when used in combination with the SA results: the “SA + MOO” NSGA-II optimization performed significantly better than the full-parameterized optimization, in terms of both the optimality of the results obtained and the reduced computational effort. Finally, Identifiability Analysis gave us higher-order information on the domain parameters and their behaviour with respect to the model outputs.

It was also demonstrated that our approach can be systematically applied to the design of thin-film solar cell devices, ensuring remarkable improvements in terms of efficiency, cost savings and robustness. In particular, the robust designs found in our optimization process might be useful for a decision maker and should be taken into consideration in a design process. Future work will concern the exploration of alternative materials and solar cell types. An interesting direction of work lies in the experimental validation of the optical efficiency of several Pareto-optimal designs obtained by our methodology, which could lead to new prototypes in collaboration with industrial partners.

References

Shah, A.V., Schade, H., Vanecek, M., Meier, J., Vallat-Sauvain, E., Wyrsch, N., Kroll, U., Droz, C., Bailat, J.: Thin-film silicon solar cell technology. Prog. Photovolt. Res. Appl. 12, 113–142 (2004)

Geisz, J.F., Friedman, D.J., Ward, J.S., Duda, A., Olavarria, W.J., Moriarty, T.E., Kiehl, J.T., Romero, M.J., Norman, A.G., Jones, K.M.: 40.8% efficient inverted triple-junction solar cell with two independently metamorphic junctions. Appl. Phys. Lett. 93(12), 123505 (2008)

Atwater, H.A., Polman, A.: Plasmonics for improved photovoltaic devices. Nat. Mater. 9, 205–213 (2010)

Bermel, P., Ghebrebrhan, M., Chan, W., Yeng, Y.X., Araghchini, M., Hamam, R., Marton, C.H., Jensen, K.F., Soljačić, M., Joannopoulos, J.D., Johnson, S.G., Celanovic, I.: Design and global optimization of high-efficiency thermophotovoltaic systems. Opt. Express 18(S3), A314–A334 (2010)

Lenert, A., Bierman, D.M., Nam, Y., Chan, W.R., Celanovic, I., Soljacic, M., Wang, E.N.: A nanophotonic solar thermophotovoltaic device. Nat. Nanotechnol. 9, 126–130 (2014)

Sheng, X., Johnson, S.G., Michel, J., Kimerling, L.C.: Optimization-based design of surface textures for thin-film Si solar cells. Opt. Express 19(S4), A841–A850 (2011)

Dimitrova, D.Z., Dua, C.H.: Crystalline silicon solar cells with micro/nano texture. Appl. Surf. Sci. 266, 1–4 (2013)

Zaidi, S.H., Marquadt, R., Minhas, B., Tringe, J.W.: Deeply etched grating structures for enhanced absorption. In: 29th IEEE PVSC, pp. 1290–1293 (2002)

Prentice, J.: Coherent, partially coherent and incoherent light absorption in thin-film multilayer structures. J. Phys. D Appl. Phys. 33, 3139–3145 (2000)

Springer, J., Poruba, A., Vanecek, M.: Improved three-dimensional optical model for thin-film silicon solar cells. J. Appl. Phys. 96, 5329–5337 (2004)

Castrogiovanni, M., Nicosia, G., Rascunà, R.: Experimental analysis of the aging operator for static and dynamic optimisation problems. In: 11th International Conference on Knowledge-Based and Intelligent Information and Engineering Systems (KES 2007), 12–14 September 2007, Vietri sul Mare, Italy. Springer, LNCS, vol. 4694, pp. 804–811 (2007)

Nicosia, G.: Immune Algorithms for Optimization and Protein Structure Prediction. Ph.D. Thesis (2005)

Pardalos, P.M., Resende, M.G.C.: Handbook of Applied Optimization. Oxford University Press, Oxford (2002)

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

Powell, M.J.D.: Developments of NEWUOA for minimization without derivatives. IMA J. Numer. Anal. 28, 649–664 (2008)

Conca, P., Nicosia, G., Stracquadanio, G., Timmis, J.: Nominal-yield-area tradeoff in automatic synthesis of analog circuits: a genetic programming approach using immune-inspired operators. In: NASA/ESA Conference on Adaptive Hardware and Systems (AHS-2009), July 29–August 1, San Francisco, CA, USA, IEEE Computer Society Press, pp. 399–406 (2009)

Rios, L.M., Sahinidis, N.V.: Derivative-free optimization: a review of algorithms and comparison of software implementations. J. Glob. Optim. 56, 1247–1293 (2013)

Wang, X., Haynes, R.D., Feng, Q.: A multilevel coordinate search algorithm for well placement, control and joint optimization. Comput. Chem. Eng. 95, 75–96 (2016)

Huyer, W., Neumaier, A.: Global optimization by multilevel coordinate search. J. Glob. Optim. 14(4), 331–355 (1999)

Deb, K.: Multi-objective Optimization Using Evolutionary Algorithms. Wiley, New York (2001)

Deb, K., Gupta, H.: Introducing robustness in multi-objective optimization. Evolut. Comput. 14(4), 463–494 (2006)

Beyer, H.G., Sendhoff, B.: Robust optimization: a comprehensive survey. Comput. Methods Appl. Mech. Eng. 196(33–34), 3190–3218 (2007)

Iancu, D.A., Trichakis, N.: Pareto efficiency in robust optimization. Manag. Sci. 60(1), 130–147 (2014)

Reyes-Sierra, M., Coello Coello, C.A.: Multi-objective particle swarm optimizers: a survey of the state-of-the-art. Int. J. Comput. Intell. Res. 2(3), 287–308 (2006)

Nebro, A.J., Durillo, J.J., García-Nieto, J., Coello Coello, C.A., Luna, F., Alba, E.: SMPSO: a new PSO-based metaheuristic for multi-objective optimization. In: Proceedings of IEEE Symposium Computational Intelligence MCDM, pp. 66–73 (2009)

Deb, K.: Multi-objective optimization. In: Search Methodologies, Springer, New York, pp. 403–449 (2014)

Deb, K., Jain, H.: An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans. Evolut. Comput. 18(4), 577–601 (2014)

Deb, K., Pratap, A., Agarwal, S., Meyarivan, T.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evolut. Comput. 6(2), 182–197 (2002)

Saltelli, A., Ratto, M., Andres, T., Campolongo, F., Cariboni, J., Gatelli, D., Saisana, M., Tarantola, S.: Global Sensitivity Analysis: The Primer. Wiley, New York (2008)

Saltelli, A., Tarantola, S., Chan, K.P.S.: A quantitative model-independent method for global sensitivity analysis of model output. Technometrics 41, 39–56 (1999)

Homma, T., Saltelli, A.: Importance measures in global sensitivity analysis of nonlinear models. Reliab. Eng. Syst. Saf. 52(1), 1–17 (1996)

Campolongo, F., Cariboni, J., Saltelli, A.: An effective screening design for sensitivity analysis of large models. Environ. Model. Softw. 22(10), 1509–1518 (2007)

Sobol, I.M.: Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math. Comput. Simul. 55(1–3), 271–280 (2001)

Sobol, I.M.: On sensitivity estimation for nonlinear mathematical models. Mat. Mod. 2(1), 112–118 (1990)

Shinar, G., Alon, U., Feinberg, M.: Sensitivity and robustness in chemical reaction networks. SIAM J. Appl. Math. 69, 977–998 (2009)

Patanè, A., Santoro, A., Costanza, J., Nicosia, G.: Pareto optimal design for synthetic biology. IEEE Trans. Biomed. Circ. Syst. 9(4), 555–571 (2015)

Kitano, H.: Towards a theory of biological robustness. Mol. Syst. Biol. 3(137), 1–7 (2007)

Hafner, M., Koeppl, H., Hasler, M., Wagner, A.: Glocal robustness analysis and model discrimination for circadian oscillators. PLoS Comput. Biol. 5(10), 1–10 (2009)

Jolliffe, I.T.: Principal Component Analysis. Springer, Berlin (1986)

Hengl, S., Kreutz, C., Timmer, J., Maiwald, T.: Data-based identifiability analysis of non-linear dynamical models. Bioinformatics 23(19), 2612–2618 (2007)

Breiman, L., Friedman, J.H.: Estimating optimal transformations for multiple regression and correlation. J. Am. Stat. Assoc. 80, 580–598 (1985)

Klein, S., Repmann, T., Brammer, T.: Microcrystalline silicon films and solar cells deposited by PECVD and HWCVD. Solar Energy 77(6), 893–908 (2004)

Rech, B., Kluth, O., Repmann, T., Roschek, T., Springer, J., Muller, J., Finger, F., Stiebig, H., Wagner, H.: New materials and deposition techniques for highly efficient silicon thin film solar cells. Solar Energy Mater. Solar Cells 74, 439–447 (2002)

Mailoa, J.P., Lee, Y.S., Buonassisi, T., Kozinsky, I.: Textured conducting glass by nanosphere lithography for increased light absorption in thin-film solar cells. J. Phys. D Appl. Phys. 47(8), 085105 (2014)

Ward, L.: The Optical Constants of Bulk Materials and Films. IOP Publishing, Bristol (1994)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Patanè, A., Santoro, A., Romano, V. et al. Enhancing quantum efficiency of thin-film silicon solar cells by Pareto optimality. J Glob Optim 72, 491–515 (2018). https://doi.org/10.1007/s10898-018-0639-9
