Abstract

Gas electron multiplier (GEM) detectors (Sauli in Nucl Instrum Methods Phys Res A 805:2–24, 2016. https://doi.org/10.1016/j.nima.2015.07.060 (special issue in memory of Glenn F. Knoll); Buzulutskov in Instrum Exp Tech 50(3):287–310, 2007. https://doi.org/10.1134/S0020441207030013) are widely used for detection of ionizing radiation. When used in the proportional mode, they provide information about the time, location, and energy of a detected particle (Chernyshova et al. in Fusion Eng Design, 2017. https://doi.org/10.1016/j.fusengdes.2017.03.107; Altunbas et al. in Nucl Instrum Methods Phys Res A 490(1–2):177–203, 2002. https://doi.org/10.1016/S0168-9002(02)00910-5. http://linkinghub.elsevier.com/retrieve/pii/S0168900202009105). Modern technologies allow full utilization of the detector properties by acquiring the waveforms of the output current pulses and processing them with sophisticated digital signal processing (DSP) algorithms. The current pulses must be digitized at high speed (up to 125 MHz) with high resolution (up to 12 bits). Because of the high volume of the produced data, a high-performance data acquisition system (DAQ) is needed to transmit the data to the processing units. Efficient processing of the GEM data requires a distributed parallel processing system that performs multiple tasks (Czarski et al. in Rev Sci Instrum 87(11), 11E336, 2016. https://doi.org/10.1063/1.4961559): (1) Filter out the background and transmit only hit-related data. (2) Extract the parameters of a hit, describing the time and charge (related to energy). (3) Estimate the hit position by combining information from multiple anode pads. (4) In the case of 2D GEM detectors, correlate pulses received from X and Y pads (pixels) or W, U and V pads (pixels). (5) Separate the hits overlapping in space or in time (if possible) to support detector operation at higher rates. The above functionalities may be achieved in different hardware architectures. 
The typical hardware platforms include FPGA chips and standard or embedded computer systems with different computation accelerators (Wojenski et al. in J Instrum 11(11):C11035, 2016. http://stacks.iop.org/1748-0221/11/i=11/a=C11035; Nowak et al. in J Phys Conf Ser 513(5):052024, 2014. https://doi.org/10.1088/1742-6596/513/5/052024. http://stacks.iop.org/1742-6596/513/i=5/a=052024?key=crossref.c5912cfa72c30b309821e14c4384948f). The paper shows possible solutions and their feasibility for particular applications.

Introduction

Gas Electron Multiplier (GEM) detectors are widely used for detection of X-ray, gamma radiation or ionizing particles. Detection of fast neutrons is also possible after conversion via scattering on hydrogen (e.g., in a polyethylene foil). Detection of thermal neutrons may be achieved by using a borated aluminum cathode [1]. To fully utilize the potential of GEM detectors, including their energy resolution in proportional mode, it is necessary to provide a readout system able to extract the information about the location, time and energy of a detected particle. Additionally, for diagnostic and debugging activities, it is desirable that such a system should optionally provide access to raw GEM signals.

GEM Detectors

GEM detectors were invented in 1997 by Fabio Sauli [2] in the Gas Detector Development Group at CERN, and are under continuous development [3]. The structure and operating principle of a GEM detector are shown in Fig. 1. A GEM detector consists of a few GEM foils placed between the cathode (located on the inner side of the detector’s window) and the anode, which is formed by readout strips placed on the inner side of a readout board. The space inside the detector is divided into the conversion and drift area (between the cathode and the first GEM foil), the transfer areas (between each pair of adjacent GEM foils) and the induction area (between the last GEM foil and the anode pads). Voltages are applied to the whole structure, usually via a voltage divider, so that an electric field is created in each area and also between both sides of each GEM foil. The GEM foil is a thin (typically 50 \({\upmu }{\hbox {m}}\)) insulating Kapton film, covered with conducting planes on both sides. The foil is perforated with regular circular holes. When a voltage is applied between both sides of the foil, a strong electric field is created in the holes. Electrons produced by the ionizing particle in the conversion area, after reaching the first GEM foil, are accelerated in the strong electric field in the holes and produce secondary electrons. The process repeats in each GEM foil. The charge gain in each foil may be between 10 and 100, giving a final gain for the triple GEM structure between 1000 and \(10^5\) [4]. The cloud of electrons finally reaches the readout board and produces an electrical current pulse in the readout pads. An important feature of GEM detectors is that, in properly chosen operating conditions, the charge delivered to the readout pads is proportional to the energy of the ionizing particle. The shape of the current pulse may vary [5, 6] depending on the gas used in the detector and on the operating conditions. 
The current pulses from each readout pad are received by a shaping charge amplifier with a low-frequency cut-off, which forms voltage pulses of a standard shape. The properties of that amplifier are tuned according to the requirements of the further signal processing stages. For example, lowering the upper cut-off frequency eliminates high-frequency noise. The standard pulse shape makes it possible to separate overlapping pulses, as described later.

Application of GEM Detectors

Depending on the application, GEM detectors may be used either in one-dimensional (1-D) or in two-dimensional (2-D) configurations. The 1-D version is used, for example, for X-ray spectroscopy, where collimated X-ray radiation is reflected from a Bragg crystal. In that configuration, the readout pads have the form of strips. The position (the strip number) of the charge pulse indicates the reflection angle and hence the energy of the photon. The total charge of the pulse provides redundant information about the energy of the photon and may be used to separate signals generated by different orders of reflection.

The 2-D version may be used for X-ray tomography. In that application, the radiation enters the detecting system via a pinhole and creates a 2-D image of the radiation source on the GEM detector. In that configuration, the readout pads should be arranged in a 2-D structure creating image pixels. Unfortunately, the number of pixels quickly increases with the increase of resolution. For rectangular pixels organized in a \(16 \times 16\) matrix, the number of required readout channels is equal to 256. For higher resolutions, it is not possible to read each pad with a separate readout channel. Therefore, other readout systems must be used. The basic one uses two perpendicular systems of readout strips X and Y, or interlaced pads connected to X and Y lines (see Fig. 2). The more complex readout system uses pads organized in three sets of readout lines U, V, W (see Fig. 3) [7] and provides a better possibility to separate simultaneous pulses. Of course, finding the correlation between pulses in pads associated with different coordinates is a complex task at high rates, and poses significant requirements for a data processing part of the system.
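The channel-count argument above can be made concrete with a few lines of arithmetic. This is a minimal sketch: the function names are hypothetical, and the U, V, W readout is left out because its line count depends on the pad geometry, which the text does not specify.

```python
def channels_per_pixel_readout(n: int) -> int:
    """Every pixel of an n x n matrix gets its own readout channel."""
    return n * n


def channels_xy_readout(n: int) -> int:
    """n X lines plus n Y lines cover the same n x n grid."""
    return 2 * n


if __name__ == "__main__":
    for n in (16, 64, 256):
        print(n, channels_per_pixel_readout(n), channels_xy_readout(n))
```

The pixel readout grows quadratically with the resolution while the X/Y readout grows linearly, at the price of having to correlate pulses between the two coordinates.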

Reception and Processing of GEM Pulses

The proper readout of GEM detectors depends on the operation mode of the detector. For triggered High Energy Physics (HEP) experiments, there are ASIC-based solutions [8] that allow reading the data collected from a GEM detector after a global trigger has been generated. However, the spectrography and tomography systems do not use a global trigger. They require continuous acquisition of data over a longer period of time (several seconds or even a few tens of minutes, depending on the experiment). Therefore, another data acquisition system was developed that allows continuous acquisition of digitized data from a GEM detector. First experiments and analyses [9] have shown that the shaped GEM signal may be digitized at a relatively low frequency, even 40 MHz, and successfully processed to calculate the time and the charge of a single event. Therefore, the system was built with direct simultaneous digitization of all GEM channels with analog-to-digital converters (ADCs). The sampling frequencies achievable with reasonably priced components were between 77.7 and 125 MHz. The ADCs are directly controlled by FPGA chips, which also preprocess the data, detecting the events. Of course, the FPGAs must also perform zero suppression to avoid transmitting a huge volume of empty data containing only noise. Therefore, the trigger that was avoided when reading analog data from the GEM detector is introduced here. It is based on digitized data, which allows flexible solutions such as compensation of the low-frequency drift. The trigger used depends on the algorithms applied for further data processing. It may be a global trigger, where a sufficiently strong signal in any channel triggers transmission of data from all channels. The opposite is a local trigger, where each channel individually detects the threshold crossing in its own data. 
There is also an intermediate solution, where a trigger generated in one channel is also distributed to its neighbors, as each pulse is usually distributed between the adjacent channels.
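The three trigger modes described above can be sketched in software as follows. This is only an illustration under simplifying assumptions: one block of samples per channel, a single fixed threshold, and hypothetical function names. The real trigger runs in FPGA logic on streaming data.

```python
from typing import List, Set


def local_trigger(samples: List[List[int]], threshold: int) -> Set[int]:
    """Channels whose own samples reach the threshold."""
    return {ch for ch, s in enumerate(samples) if s and max(s) >= threshold}


def neighbour_trigger(samples: List[List[int]], threshold: int,
                      reach: int = 1) -> Set[int]:
    """Triggered channels plus `reach` adjacent channels on each side."""
    selected: Set[int] = set()
    for ch in local_trigger(samples, threshold):
        for k in range(ch - reach, ch + reach + 1):
            if 0 <= k < len(samples):
                selected.add(k)
    return selected


def global_trigger(samples: List[List[int]], threshold: int) -> Set[int]:
    """All channels are read out if any single channel triggers."""
    if local_trigger(samples, threshold):
        return set(range(len(samples)))
    return set()


if __name__ == "__main__":
    # Five channels; the pulse sits in channel 2 and leaks weakly into channel 3.
    block = [[0, 1, 0], [1, 0, 2], [3, 40, 12], [2, 15, 5], [0, 1, 1]]
    print(sorted(local_trigger(block, 20)))      # channel 2 only
    print(sorted(neighbour_trigger(block, 20)))  # channels 1, 2, 3
    print(sorted(global_trigger(block, 20)))     # all channels
```

The neighbour mode keeps the sub-threshold charge shared with channel 3, which the purely local mode would discard.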

The acquired data are then processed, and the useful information for each event is extracted: the time (T), position (P) and charge (Q). If the pulse is distributed between multiple readout pads, the charge centroid may be calculated to achieve a spatial resolution finer than the distance between pads.
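A charge centroid is simply the charge-weighted mean of the pad positions. The sketch below assumes equally spaced pads identified by integer indices and a hypothetical `pitch` parameter giving the pad spacing; it is not taken from the described firmware.

```python
from typing import Dict


def charge_centroid(charges: Dict[int, float], pitch: float = 1.0) -> float:
    """Charge-weighted mean position of a pulse shared between pads.

    `charges` maps pad index to collected charge; `pitch` is the pad
    spacing (an illustrative parameter, in arbitrary length units).
    """
    total = sum(charges.values())
    if total <= 0:
        raise ValueError("total charge must be positive")
    return pitch * sum(pad * q for pad, q in charges.items()) / total


if __name__ == "__main__":
    # Charge shared between pads 4, 5 and 6: the centroid falls between
    # pad 5 and pad 6, i.e. with sub-pad resolution.
    print(charge_centroid({4: 10.0, 5: 30.0, 6: 20.0}))
```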

In both mentioned applications (spectrography and imaging), the extracted data are further used for histogramming. Histogram bins are defined by the energy range and by the position of an event.
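Histogramming by position and energy can be illustrated with a small sketch. It assumes that each event has already been reduced to a position index and a charge, that the charge bins are uniform, and that out-of-range events are merely counted; all names and parameters are hypothetical.

```python
from typing import Iterable, List, Tuple


def histogram_events(events: Iterable[Tuple[int, float]], n_pos: int,
                     q_min: float, q_max: float,
                     n_q: int) -> Tuple[List[List[int]], int]:
    """Fill a 2-D histogram indexed as hist[position][charge_bin].

    Returns the histogram and the number of events that fell outside
    the position or charge range.
    """
    hist = [[0] * n_q for _ in range(n_pos)]
    width = (q_max - q_min) / n_q
    rejected = 0
    for pos, q in events:
        if not (0 <= pos < n_pos) or not (q_min <= q < q_max):
            rejected += 1
            continue
        hist[pos][int((q - q_min) / width)] += 1
    return hist, rejected


if __name__ == "__main__":
    events = [(0, 1.0), (0, 1.5), (1, 9.9), (2, 12.0)]
    hist, rejected = histogram_events(events, n_pos=3,
                                      q_min=0.0, q_max=10.0, n_q=5)
    print(hist, rejected)
```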

The final data must be transmitted to an experiment data acquisition system (DAQ) for further processing and archiving. The communication with DAQ is performed by the embedded computer system (ES) because it usually involves complex communication protocols and handling of big data volumes.

As we can see, in the GEM data processing system we have four representations of data:

- Raw GEM signal: a continuous stream of samples from all channels.
- Triggered GEM signal: the separated samples with detected events.
- Aggregated event data TPQ (time, position, charge).
- Calculated histograms.

The boundary between the processing implemented in the FPGA and the processing implemented in the ES depends on the particular application and its requirements.

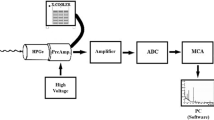

The general structure of the GEM data processing system is shown in Fig. 4.

Example Implementations of the FPGA Readout Systems

The concept of the FPGA and ES based DAQ for GEM detectors described above may be best explained by showing a few implementations developed by our team. Due to different requirements, different implementations have been used.

GEM System for KX1 Diagnostics at JET in Culham

The KX1 GEM system was built for the upgrade of KX1 diagnostics [10]. It is a high-resolution X-ray spectroscope able to detect and measure X-ray radiation emitted by different plasma impurities. The implemented system uses two detectors tuned for detection of the tungsten (\(W^{46+}\)) 2.4 keV radiation and nickel (\(Ni^{26+}\)) 7.8 keV radiation. High-resolution spectroscopy allows measuring the impurity concentration, the ion temperature (from the line broadening), and the plasma rotation velocity profile.

The system uses an additional \(^{55}\)Fe source to calibrate the gain of the GEM detector. The system detects events and calculates histograms of soft X-ray (SXR) photons using both energy and position bins. All calculations are performed in FPGAs [11]. The histogramming algorithm detects and counts the signals that are corrupted (i.e., saturated or overlapped in time or in space).

The JET experiment uses relatively short plasma pulses (shots), and therefore all data could be stored in an FPGA-connected DDR RAM of the GEM system. Transmission of data to the ES is performed later, after the shot. The architecture of the system is shown in Fig. 5. Due to the long pauses between plasma pulses at JET, it was possible to use a relatively slow, Python-based software system to control the detector and to transfer the data [13], so the data transfer from the GEM system DDR memory to the ES RAM could be done without using DMA. The object-oriented features of the Python language made it possible to create a reliable framework for configuration and monitoring of the hardware, which is also very important in measurement systems.

Architecture of the GEM readout system for KX1 diagnostics [12]. FEB: front-end boards, ADC: analog-to-digital converters, DAQ: data acquisition system

The GEM readout system built for KX1 was also able to detect and transfer extremely short sequences of raw pulses.

An important part of the histogramming algorithm was the detection of signals corrupted due to overlap or saturation. Such signals were not included in the histograms but were counted as erroneous signals.

System Based on Serial Data Acquisition for ASDEX Upgrade

A hardware system similar to the one used for KX1 was also used for tests in the ASDEX Upgrade experiment in Germany [14]. The purpose of those tests was to study the detector’s operation at high radiation intensity. In that application, access to the histograms alone was not sufficient. Investigating fast changes of the detector gain occurring at high radiation intensities requires delivery of the information about each detected pulse (the T, P, Q data) to the ES. In this approach, events are detected in each channel individually, with detection of erroneous (overlapping or saturated) pulses. Data of the correct pulses are transferred to the new “serial data acquisition” system [15, 16], which consists of a binary tree of registers. Each node accepts data from the two preceding nodes. If both preceding nodes provide data, the data with the lower timestamp has priority. If the timestamps are equal, the data with the lower channel number has priority. Finally, data streams from different channels are concentrated into a single register. Therefore, if the overall event rate is too high, it may not be possible to transfer new data from a certain channel because the associated register is still occupied with old data. In such a case the data is lost, and the appropriate flag is set in the data kept in the register. Such a system ensures “best effort” delivery of data from all channels and guarantees that each loss of data is noticed. This system also ensures that the output data are correctly sorted in time. The data are buffered in a DDR memory connected to the FPGA. The ES part of the system receives the data from that DDR memory via a PCIe interface with the appropriate DMA engine.
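The priority rule of the binary tree described above can be modelled in software. The sketch below is a behavioural illustration only: each node is a generator with unbounded input, so the loss of data caused by an occupied register (and the associated flag) is deliberately not modelled. The `Event` type and the function names are hypothetical.

```python
from typing import Iterable, Iterator, List, NamedTuple


class Event(NamedTuple):
    timestamp: int
    channel: int
    charge: int


def merge_node(left: Iterator[Event], right: Iterator[Event]) -> Iterator[Event]:
    """One tree node: forward the event with the lower timestamp;
    on equal timestamps the lower channel number wins."""
    a = next(left, None)
    b = next(right, None)
    while a is not None or b is not None:
        take_left = b is None or (
            a is not None
            and (a.timestamp, a.channel) <= (b.timestamp, b.channel)
        )
        if take_left:
            yield a
            a = next(left, None)
        else:
            yield b
            b = next(right, None)


def merge_tree(streams: List[Iterable[Event]]) -> Iterator[Event]:
    """Concentrate per-channel streams into one stream, level by level."""
    nodes: List[Iterator[Event]] = [iter(s) for s in streams]
    if not nodes:
        return iter(())
    while len(nodes) > 1:
        merged = [merge_node(nodes[i], nodes[i + 1])
                  for i in range(0, len(nodes) - 1, 2)]
        if len(nodes) % 2:
            merged.append(nodes[-1])  # odd node passes to the next level
        nodes = merged
    return nodes[0]


if __name__ == "__main__":
    per_channel = [
        [Event(1, 0, 5), Event(7, 0, 6)],
        [Event(1, 1, 9)],
        [Event(3, 2, 4)],
        [],
    ]
    for ev in merge_tree(per_channel):
        print(ev)
```

Because each per-channel stream is already ordered in time, applying the same rule at every node yields a single stream sorted by timestamp and then by channel.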

Example of separation of multiple overlapped pulses [17]

One of the findings from the ASDEX Upgrade tests was that at higher radiation intensity, the probability of overlapping pulses is not negligible. Further work aimed at separating overlapping pulses was therefore performed [17, 18] (see Fig. 6). Even though it is possible to split the overlapping pulses in the FPGA, such a hardware implementation consumes too many resources. Therefore, it should be done in software in the ES part, which requires yet another change of the GEM readout architecture.
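To illustrate why a standard pulse shape helps with separation, the sketch below fits the amplitudes of two overlapping pulses by linear least squares. It is not the algorithm of [17, 18]: it assumes that the unit pulse template and both start positions are already known, and all names are hypothetical.

```python
from typing import List, Sequence, Tuple


def shifted(template: Sequence[float], start: int, length: int) -> List[float]:
    """Template placed at `start` inside a zero-filled record of `length`."""
    out = [0.0] * length
    for i, v in enumerate(template):
        if 0 <= start + i < length:
            out[start + i] = v
    return out


def fit_two_pulses(signal: Sequence[float], template: Sequence[float],
                   start1: int, start2: int) -> Tuple[float, float]:
    """Least-squares amplitudes a1, a2 for signal ~ a1*h1 + a2*h2."""
    n = len(signal)
    h1 = shifted(template, start1, n)
    h2 = shifted(template, start2, n)
    s11 = sum(x * x for x in h1)
    s22 = sum(x * x for x in h2)
    s12 = sum(x * y for x, y in zip(h1, h2))
    b1 = sum(x * y for x, y in zip(h1, signal))
    b2 = sum(x * y for x, y in zip(h2, signal))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("pulses are not separable (identical positions?)")
    # Solve the 2 x 2 normal equations by Cramer's rule.
    return (b1 * s22 - b2 * s12) / det, (b2 * s11 - b1 * s12) / det


if __name__ == "__main__":
    template = [0.2, 0.8, 1.0, 0.7, 0.4, 0.2, 0.1]
    h1 = shifted(template, 2, 16)
    h2 = shifted(template, 5, 16)
    record = [3.0 * x + 5.0 * y for x, y in zip(h1, h2)]
    print(fit_two_pulses(record, template, 2, 5))
```

In a real system the start positions and the template would themselves have to be estimated, and noise would make the fit approximate.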

System Prepared for WEST Tokamak

The system designed for the WEST tokamak is intended to provide plasma SXR tomography [19]. The system is supposed to provide plasma imaging with 1 ms time resolution. Raw GEM signals will be shaped using a second-order low-pass filter with an attenuation of 50 dB at 35 MHz. That shaping of the signal should allow correct splitting of overlapped signals in software. The sampling frequency may be selected between 80 and 125 MHz. The transmission of data used during the preliminary tests is described in [19]. The final transmission system will support the transmission of overlapped signals and is described below. The events are detected in each channel independently. When a crossing of the threshold level is detected, a frame of samples is recorded and transmitted to the ES part. Each frame is 32 samples long and contains up to 10 samples from before the threshold crossing. If the signal does not fall below the threshold level before the end of the frame, it is assumed that a pulse overlap has occurred, and a second frame with the next 32 samples is transmitted.
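The framing rule described above can be sketched for a single channel as follows. Two points are assumptions of this sketch rather than statements from the text: "does not fall below the threshold before the end of the frame" is interpreted as "the last sample of the frame is still at or above the threshold", and continuation frames are repeated for as long as that condition holds. The function and constant names are hypothetical.

```python
from typing import List, Sequence, Tuple

FRAME_LEN = 32    # samples per frame (from the text)
PRE_SAMPLES = 10  # maximum number of samples kept before the crossing


def extract_frames(samples: Sequence[int],
                   threshold: int) -> List[Tuple[int, List[int]]]:
    """Return (start_index, frame) pairs for one channel."""
    frames: List[Tuple[int, List[int]]] = []
    prev_end = 0
    i = 0
    n = len(samples)
    while i < n:
        if samples[i] < threshold:
            i += 1
            continue
        # Keep up to PRE_SAMPLES earlier samples, never re-sending old ones.
        start = max(prev_end, i - PRE_SAMPLES)
        end = start + FRAME_LEN
        frames.append((start, list(samples[start:end])))
        # Assumed overlap test: frame ends while still above threshold.
        while end < n and samples[end - 1] >= threshold:
            frames.append((end, list(samples[end:end + FRAME_LEN])))
            end += FRAME_LEN
        prev_end = end
        i = end
    return frames


if __name__ == "__main__":
    short_pulse = [0] * 20 + [5, 9, 7, 3] + [0] * 40
    long_pulse = [0] * 5 + [10] * 40 + [0] * 30
    print([start for start, _ in extract_frames(short_pulse, 4)])
    print([start for start, _ in extract_frames(long_pulse, 4)])
```

The short pulse fits into one frame starting 10 samples before the crossing, while the long pulse produces a continuation frame.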

Transmission of raw signal samples instead of the extracted event data results in a significant increase of the data volume that must be transmitted from the FPGA part to the ES part. Therefore, it is essential that the most efficient DMA engine is used to transfer the data from the FPGA part to the ES RAM [20]. The efficiency of data transfer and further data processing also depends on the implementation of the device driver in the ES. The data should be delivered directly to a memory buffer accessible to the data processing application, but memory caching should remain active. Careful configuration of the operating system is needed to maximize the throughput and minimize the latency [21]. This is especially important because the system is also supposed to deliver real-time plasma imaging for control of the experiment: histograms with a counting period of 1 ms, delivered with a latency not higher than 1 ms.

The GEM system for the WEST tokamak delivers a huge stream of data. For 128 channels, an 80 MHz sampling rate and 2 bytes per sample we get 20.48 GB/s. Transmitting and processing such a stream is beyond the capabilities of standard PCs or embedded systems. Possible hardware solutions for acceleration of computations are discussed in the next section.
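The raw data rate quoted above follows from a one-line product, reproduced here as a small check. The function name is hypothetical, and 1 GB is taken as \(10^9\) bytes.

```python
def raw_rate_bytes_per_s(channels: int, sampling_hz: int,
                         bytes_per_sample: int) -> int:
    """Continuous raw data rate with no zero suppression."""
    return channels * sampling_hz * bytes_per_sample


if __name__ == "__main__":
    for fs in (80_000_000, 125_000_000):
        rate = raw_rate_bytes_per_s(128, fs, 2)
        print(f"{fs / 1e6:.0f} MHz: {rate / 1e9:.2f} GB/s")
```

At the upper sampling frequency of 125 MHz the same product gives 32 GB/s.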

Possibilities to Accelerate Computations in the GEM Readout System

Processing of raw GEM data to produce histograms or a 2D image requires a huge number of computations that must be divided between the FPGA part and the ES part of the system.

FPGA as a Computational Platform

The data processing algorithms for FPGAs are usually written in hardware description languages (HDLs) like VHDL or Verilog. The description itself is similar to a program written in a standard programming language, but after synthesis and implementation, the algorithms are executed in hardware, without an intermediate software layer. This is advantageous from the performance point of view, as it is possible to implement highly parallel, hard real-time and time-deterministic data processing. That is why FPGAs are well suited to interfacing with ADCs. Unfortunately, there are also certain drawbacks, because the similarities between standard programming languages and HDLs are often misleading. A software engineer who starts to write code for FPGAs often does not understand how the truly parallel continuous assignment instructions work. Another error-prone construct is the sequential signal assignment in a VHDL process, which modifies the value of the signal only when the process suspends (usually at the end of the process). Those two are just the first pitfalls faced by beginners, but there are many more. Therefore, porting algorithms from computer languages like C or C++ to HDLs is rather difficult [22].

There are some attempts to allow software engineers to enter the world of FPGAs [23, 24]. The first of them was SystemC [25], introduced in 2002 and still being developed. In recent years, the major FPGA vendors have made it possible to automatically translate algorithms described in C or C++ into HDL, using so-called High-Level Synthesis (HLS). Xilinx offers the Vivado HLS technology [26], while Altera (currently acquired by Intel) provides the Intel FPGA SDK for OpenCL [27] and the Intel HLS Compiler [28]. Those approaches are worth investigating and may be used for further development. However, there is a risk of vendor lock-in, as the solutions of different vendors are not fully compatible.

Apart from the problems related to algorithm development, FPGAs also have certain hardware-related limitations. First of all, the scalability of FPGA-based designs is much worse than that of software solutions. If the algorithm fully utilizes the resources provided by the FPGA chip, the only way to add new functionality is to modify the hardware, either replacing the FPGA with a bigger (and more expensive) one or splitting the functionality between two FPGAs. Unfortunately, dividing the processing between a few FPGAs is also somewhat complicated, because the communication between different chips usually becomes a bottleneck. Therefore, FPGAs are well suited to local processing of data, like filtering and triggering of data in individual GEM channels or concentrating the data from multiple channels into a single stream transmitted to the ES. Their performance is usually much worse when they are used for processing huge volumes of data (like correlating pulses in X and Y strips to reconstruct the 2D image).

Communication Between the FPGA and the ES Parts

Transmission of data from the FPGA part to the ES part of the system significantly affects the performance of the whole system. The communication channel should provide high throughput and (especially in case of real-time processing) low latency. The optimal technology may depend on the mechanical structure of the system. If the FPGA part is directly connected to the ES part, the optimal solution is the PCIe bus. However, if the FPGA part must be separated from the ES part, it may be reasonable to utilize the network interfaces.

PCIe as the FPGA-ES Communication Bus

Current FPGA chips offer high-speed PCIe interfaces. For example, the chips from the newest Xilinx UltraScale+ family [29] offer either x8 Gen4 or x16 Gen3 PCIe interfaces with throughput up to 16 GB/s. Even older chips, like the Artix-7 used in the current version of the GEM readout for WEST, offer x4 Gen2 PCIe with throughput up to 2 GB/s. Those interfaces are supported by dedicated IP cores equipped with bus-mastering DMA functionality. An excellent example of such an IP core is the DMA for PCI Express Subsystem [30].
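The throughput figures above can be reproduced from the per-lane signalling rate and the line encoding of each PCIe generation (8b/10b for Gen1 and Gen2, 128b/130b for Gen3 and Gen4). The sketch below gives the theoretical one-direction limit only; packet and protocol overhead are ignored, and the function name is hypothetical.

```python
# (transfer rate in GT/s per lane, line-encoding efficiency)
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def pcie_gbytes_per_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in GB/s (10^9 bytes/s)."""
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt * efficiency * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen, lanes in ((2, 4), (3, 16), (4, 8)):
        print(f"Gen{gen} x{lanes}: {pcie_gbytes_per_s(gen, lanes):.2f} GB/s")
```

Both the x16 Gen3 and the x8 Gen4 configurations come out just under 16 GB/s, and x4 Gen2 gives 2 GB/s, in line with the values quoted in the text.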

However, current experiences show that even though PCIe may be the main interface for the high-performance data transmission, it should be supported with additional highly-reliable interfaces for FPGA configuration.

Network Interfaces for FPGA-ES Communication

The maximum length of the PCIe cable is 7 m, but for high-speed versions like Gen3 or Gen4, it is advisable to avoid cables longer than 1 m. Therefore, for longer connections, it is necessary to use either proprietary communication interfaces (which may be expensive) or standard network interfaces (see Notes). The network connection may be a perfect solution if, due to the high required computational power, the ES part must be implemented as a computer farm. Such a solution is hardly needed for currently built GEM detectors but is often used for big detectors in HEP experiments. The perfect solution for that purpose may be the InfiniBand interface, which offers low-latency transfer with RDMA. Unfortunately, there are no widely available, reasonably priced implementations of an InfiniBand IP core for FPGAs. A full implementation of the standard TCP/IP protocol consumes a lot of resources in the FPGA. Therefore, other non-standard or simplified protocols are used [31, 32]. The network interface may also be used to control the FPGA part, for example via the IPbus [33] protocol.

Acceleration of Computations in the ES Part

High-Performance Computing (HPC) is widely used in modern information technology. Therefore, different accelerating technologies are available, at prices significantly reduced thanks to mass production. Our team has performed a thorough review of possible solutions, and some of them have also been tested with the GEM processing algorithms. In [34, 35], the following approaches were investigated:

- Using an additional Intel PCIe card with a Xeon Phi MIC
- Using a CUDA-compatible NVIDIA graphics card (GPU)
- Using a multicore Intel Xeon CPU

Extension of the ES part with additional cards (the first two points above) provides a certain amount of flexibility and scalability, because additional cards may be added as long as free extension slots (e.g., PCIe) are available. Unfortunately, this approach requires transmitting the data to be processed to the accelerator card and receiving the results. This may significantly load the communication bus. Therefore, that approach is applicable only if the data may be split into blocks that are processed independently. The newest version of the Xeon Phi technology, code-named “Knights Landing”, may be used as a standard CPU, avoiding the problems related to the limited PCIe bandwidth.

The tests [34,35,36] have shown that to fully utilize the acceleration potential offered by the GPU or by the vector instructions in the CPU, the algorithm must process regularly distributed data and certain data must be reused multiple times. All irregularities in the processing algorithm (e.g., branches) or in the data location impair the acceleration. In the case of GPUs with SIMT (Single Instruction, Multiple Threads) execution, it is advisable that multiple (e.g., 32) threads process data in parallel. Therefore, acceleration may be easily achieved in histogramming but is much more difficult in other parts of the algorithms, like the separation of pulses. Additionally, implementation of the GEM signal processing on GPUs requires a special programming approach.

In summary, if the aim is not only maximal performance but also ease of development and maintenance, a multicore server processor like the Intel Xeon may be the preferred choice.

Current Results for the WEST System

In the current version of the GEM system for WEST, two Artix-7 FPGA chips are connected to the PCIe switch. According to the information from Sect. 6.2.1, they should provide a throughput of 4 GB/s. However, due to protocol overhead and internal bus inefficiencies, the measured throughput was 2.36 GB/s. This is 8.6 times less than the 20.48 GB/s data bandwidth produced by the detector (see Sect. 5.3). The Monte Carlo simulations have shown that the optimized trigger system implemented in the FPGA may reduce the data stream by a factor of 8.6 for photon rates below 220 thousand photons per detector strip per second. That gives a limit of 28.16 million photons per second for the whole detector. Further limitations are introduced by the ES part. The latest analyses [37, 38] have shown that a system based on a Xeon e5 S2600 v4 CPU with 10 cores is able to process up to 6.9 million photons per second. However, the system is still under development, and further improvement of both the PCIe bandwidth and the processing throughput is expected.
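The throughput budget above can be re-derived with a few lines of arithmetic. The sketch assumes that the detector has 128 strips (taken from the 128 channels mentioned earlier) and that each Artix-7 link nominally provides 2 GB/s; the variable names are hypothetical.

```python
LINKS = 2
NOMINAL_PER_LINK_GBS = 2.0     # x4 Gen2 PCIe per FPGA
MEASURED_GBS = 2.36            # measured total throughput
RAW_DETECTOR_GBS = 20.48       # 128 channels x 80 MHz x 2 bytes
STRIPS = 128                   # assumption: one strip per channel
MAX_RATE_PER_STRIP = 220_000   # photons per strip per second
CPU_LIMIT = 6_900_000          # photons per second processed by the ES

nominal_gbs = LINKS * NOMINAL_PER_LINK_GBS
# About 8.68; the text quotes this ratio as 8.6.
required_reduction = RAW_DETECTOR_GBS / MEASURED_GBS
detector_limit = STRIPS * MAX_RATE_PER_STRIP
# The slower of the two stages bounds the whole system.
system_limit = min(detector_limit, CPU_LIMIT)

if __name__ == "__main__":
    print(f"nominal PCIe throughput: {nominal_gbs:.1f} GB/s")
    print(f"required data reduction: {required_reduction:.2f}x")
    print(f"transmission-limited rate: {detector_limit / 1e6:.2f} Mphotons/s")
    print(f"overall limit: {system_limit / 1e6:.1f} Mphotons/s")
```

With these numbers the ES processing, not the PCIe link, is the tighter constraint.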

Conclusion

Modern electronics provides technologies suitable for high-performance digital readout of GEM detectors. Data may be digitized at a high sampling frequency and delivered to the FPGA-based preprocessing system. That allows both efficient data filtering in the standard mode of operation and access to raw detector signals for diagnostic purposes.

The system architecture may be selected depending on the requirements (e.g., pulsed or continuous operation, a possibility of off-line processing or the necessity of soft real-time or even hard real-time processing).

The processing tasks may be divided between the FPGA part (for time-deterministic tasks or simple and highly parallel tasks) and the processor-based ES part of the system (for more complex tasks using the huge volumes of data).

With the high-speed processors available today, it is possible to increase the acceptable event rate of the detector by splitting overlapping events.

The use of FPGA and ES technologies makes it possible to create a highly flexible system that may be tuned to particular needs by modifying the software and firmware according to the specific requirements. [39,40,41,42,43]

Notes

Use of proprietary communication interfaces usually requires FPGA-based boards at both sides of the link, which increases the cost. In the case of standard network interfaces, typical network interface cards may be used at the ES side of the link.

References

G. Albani, G. Croci, C. Cazzaniga, M. Cavenago, G. Claps, A. Muraro, F. Murtas, R. Pasqualotto, E.P. Cippo, M. Rebai, M. Tardocchi, G. Gorini, Neutron beam imaging with GEM detectors. J. Instrum. 10(04), C04040 (2015). https://doi.org/10.1088/1748-0221/10/04/C04040

F. Sauli, GEM: a new concept for electron amplification in gas detectors. Nucl. Instrum. Methods Phys. Res. A 386(2–3), 531–534 (1997). https://doi.org/10.1016/S0168-9002(96)01172-2

F. Sauli, The gas electron multiplier (GEM): operating principles and applications. Nucl. Instrum. Methods Phys. Res. A 805, 2–24 (2016). https://doi.org/10.1016/j.nima.2015.07.060. (special issue in memory of Glenn F. Knoll)

S. Bachmann, A. Bressan, M. Capeáns, M. Deutel, S. Kappler, B. Ketzer, A. Polouektov, L. Ropelewski, F. Sauli, E. Schulte, L. Shekhtman, A. Sokolov, Discharge studies and prevention in the gas electron multiplier (GEM). Nucl. Instrum. Methods Phys. Res. A 479(2–3), 294–308 (2002). https://doi.org/10.1016/S0168-9002(01)00931-7

Y. Takeuchi, T. Tamagawa, F. Asami, A. Yoshikawa, T. Iwahashi, S. Konami, W. Iwakiri, Signal shape and charge sharing between electrodes of GEM in dimethyl ether. J. Instrum. 7(03), C03042 (2012). https://doi.org/10.1088/1748-0221/7/03/C03042

G. Guedes, A. Breskin, R. Chechik, D. Mörmann, Effects of the induction-gap parameters on the signal in a double-GEM detector. Nucl. Instrum. Methods Phys. Res. A 497(2–3), 305–313 (2003). https://doi.org/10.1016/S0168-9002(02)01924-1

F. Sauli, T. Meinschad, L. Musa, L. Ropelewski, Photon detection and localization with GEM. IEEE Nucl. Sci. Symp. 1, 12–15 (2004). https://doi.org/10.1109/NSSMIC.2004.1462059

A. Wojenski, G. Kasprowicz, K.T. Poźniak, R. Romaniuk, Data acquisition methods for GEM detectors. Proc. SPIE 9290, 9290–9290 (2014). https://doi.org/10.1117/12.2074970

W.M. Zabołotny, T. Czarski, M. Chernyshova, H. Czyrkowski, R. Dąbrowski, W. Dominik, K. Jakubowska, L. Karpiński, G. Kasprowicz, K. Kierzkowski, I.M. Kudła, K. Poźniak, J. Rzadkiewicz, Z. Sałapa, M. Scholz, Optimization of FPGA processing of GEM detector signal. Proc. SPIE 8008, 8008–8008 (2011). https://doi.org/10.1117/12.905427

J. Rzadkiewicz, W. Dominik, M. Scholz, M. Chernyshova, T. Czarski, H. Czyrkowski, R. Dabrowski, K. Jakubowska, L. Karpinski, G. Kasprowicz, K. Kierzkowski, K. Pozniak, Z. Salapa, W. Zabolotny, P. Blanchard, S. Tyrrell, K.D. Zastrow, Design of T-GEM detectors for X-ray diagnostics on JET. Nucl. Instrum. Methods Phys. Res. A 720, 36–38 (2013). https://doi.org/10.1016/j.nima.2012.12.041

K.T. Poźniak, A. Byszuk, M. Chernyshova, R. Cieszewski, T. Czarski, W. Dominik, K. Jakubowska, G. Kasprowicz, J. Rzadkiewicz, M. Scholz, W. Zabolotny, FPGA based charge fast histogramming for GEM detector. In: R.S. Romaniuk (ed.) Proceedings of SPIE, vol. 8903 (Wilga, Poland, 2013), p. 89032F. https://doi.org/10.1117/12.2037047

W.M. Zabołotny, A. Byszuk, M. Chernyshova, R. Cieszewski, T. Czarski, W. Dominik, K.L. Jakubowska, G. Kasprowicz, K. Poźniak, J. Rzadkiewicz, M. Scholz, Embedded controller for GEM detector readout system. Proc. SPIE 8903, 8903–8903 (2013). https://doi.org/10.1117/12.2033281

W.M. Zabołotny, A. Byszuk, M. Chernyshova, R. Cieszewski, T. Czarski, S. Dalley, C. Hogben, K.L. Jakubowska, G. Kasprowicz, K. Poźniak, J. Rzadkiewicz, M. Scholz, A. Shumack, Python based integration of GEM detector electronics with JET data acquisition system. Proc. SPIE 9290, 929024 (2014). https://doi.org/10.1117/12.2073379

M. Chernyshova, K. Malinowski, T. Czarski, A. Wojeński, D. Vezinet, K.T. Poźniak, G. Kasprowicz, D. Mazon, A. Jardin, A. Herrmann, E. Kowalska-Strzęciwilk, R. Krawczyk, P. Kolasiński, W. Zabołotny, P. Zienkiewicz, ASDEX Upgrade Team, Eurofusion MST1 Team, Gaseous electron multiplier-based soft X-ray plasma diagnostics development: preliminary tests at ASDEX Upgrade. Rev. Sci. Instrum. 87(11), 11E325 (2016). https://doi.org/10.1063/1.4960305

P. Kolasinski, K.T. Pozniak, T. Czarski, M. Linczuk, A. Byszuk, M. Chernyshova, B. Juszczyk, G. Kasprowicz, A. Wojenski, W. Zabolotny, P. Zienkiewicz, D. Mazon, P. Malard, A. Herrmann, D. Vezinet, Serial data acquisition for GEM-2D detector. Proc. SPIE 9290 (2014). https://doi.org/10.1117/12.2075911

P. Kolasinski, K.T. Pozniak, T. Czarski, A. Byszuk, M. Chernyshova, G. Kasprowicz, R.D. Krawczyk, A. Wojenski, W. Zabolotny, Fast data transmission from serial data acquisition for the GEM detector system. In: R.S. Romaniuk (ed.) Proc. SPIE, vol. 9662 (Wilga, Poland, 2015), p. 96622J. https://doi.org/10.1117/12.2205264

T. Czarski, M. Chernyshova, K. Pozniak, G. Kasprowicz, W. Zabolotny, P. Kolasinski, R. Krawczyk, A. Wojenski, P. Zienkiewicz, Serial data acquisition for the X-ray plasma diagnostics with selected GEM detector structures. J. Instrum. 10(10), P10013 (2015). https://doi.org/10.1088/1748-0221/10/10/P10013

T. Czarski, K.T. Pozniak, M. Chernyshova, K. Malinowski, G. Kasprowicz, P. Kolasinski, R. Krawczyk, A. Wojenski, W. Zabolotny, On line separation of overlapped signals from multi-time photons for the GEM-based detection system. In: R.S. Romaniuk (ed.) Proceedings of SPIE, vol. 9662 (Wilga, Poland, 2015), p. 96622W. https://doi.org/10.1117/12.2205804

M. Chernyshova, T. Czarski, K. Malinowski, E. Kowalska-Strzęciwilk, J. Król, K.T. Poźniak, G. Kasprowicz, W. Zabołotny, A. Wojeński, R.D. Krawczyk, P. Kolasiński, I.N. Demchenko, Y. Melikhov, Development of GEM detector for tokamak SXR tomography system: preliminary laboratory tests. Fusion Eng. Design (2017). https://doi.org/10.1016/j.fusengdes.2017.03.107

W.M. Zabołotny, DMA implementations for FPGA-based data acquisition systems. p. 1044548 (2017). https://doi.org/10.1117/12.2280937

P. Linczuk, W.M. Zabolotny, A. Wojenski, R.D. Krawczyk, K.T. Pozniak, M. Chernyshova, T. Czarski, M. Gaska, G. Kasprowicz, E. Kowalska-Strzeciwilk, K. Malinowski, Evaluation of FPGA to PC feedback loop. p. 104454B (2017). https://doi.org/10.1117/12.2280947

What every software programmer needs to understand about hardware design. https://www.nandland.com/articles/what-software-programmers-need-to-understand.html. Accessed 10 June 2018

D. Koch, F. Hannig, D. Ziener (eds.), FPGAs for Software Programmers (Springer, Cham, 2016). https://doi.org/10.1007/978-3-319-26408-0

International workshop on FPGAs for software programmers. http://www.fsp-workshop.org. Accessed 10 June 2018

SystemC. http://www.accellera.org/downloads/standards/systemc. Accessed 10 June 2018

Vivado High-Level Synthesis. https://www.xilinx.com/products/design-tools/vivado/integration/esl-design.html. Accessed 10 June 2018

Intel FPGA SDK for OpenCL - Overview. https://www.altera.com/products/design-software/embedded-software-developers/opencl/overview.html. Accessed 10 June 2018

Intel® HLS Compiler, Accelerate FPGA development with C++ using Intel HLS Compiler. https://www.altera.com/products/design-software/high-level-design/intel-hls-compiler/overview.html. Accessed 10 June 2018

PCI Express (PCIe). https://www.xilinx.com/products/technology/pci-express.html. Accessed 10 June 2018

DMA for PCI express (PCIe) subsystem. https://www.xilinx.com/products/intellectual-property/pcie-dma.html. Accessed 10 June 2018

G. Bauer, T. Bawej, U. Behrens, J. Branson, 10 Gbps TCP/IP streams from the FPGA for high energy physics. J. Phys. Conf. Ser. 513(1), 012042 (2014)

W. Zabolotny, Low latency protocol for transmission of measurement data from FPGA to Linux computer via 10 Gbps Ethernet link. J. Instrum. 10(07), T07005 (2015)

IPbus. https://ipbus.web.cern.ch/ipbus/. Accessed 10 June 2018

R. Krawczyk, P. Linczuk, P. Kolasinski, A. Wojenski, G. Kasprowicz, K. Pozniak, R. Romaniuk, W. Zabolotny, P. Zienkiewicz, T. Czarski, M. Chernyshova, The speedup analysis in GEM detector based acquisition system algorithms with CPU and PCIe cards. Acta Phys. Pol. B Proc. Suppl. 9(2), 257 (2016). https://doi.org/10.5506/APhysPolBSupp.9.257

P. Linczuk, R.D. Krawczyk, K.T. Poźniak, G. Kasprowicz, A. Wojeński, M. Chernyshova, T. Czarski, Algorithm for fast event parameters estimation on GEM acquired data. p. 100313M (2016). https://doi.org/10.1117/12.2249058

P. Linczuk, R.D. Krawczyk, W. Zabolotny, A. Wojenski, P. Kolasinski, K.T. Pozniak, G. Kasprowicz, M. Chernyshova, T. Czarski, Feasibility of FPGA to HPC computation migration of plasma impurities diagnostic algorithms. Int. J. Electron. Telecommun. 63(3), 323–328 (2017). https://doi.org/10.1515/eletel-2017-0043

R.D. Krawczyk, T. Czarski, P. Linczuk, A. Wojeński, P. Kolasiński, M. Gąska, M. Chernyshova, D. Mazon, A. Jardin, P. Malard, K. Poźniak, G. Kasprowicz, W. Zabołotny, E. Kowalska-Strzęciwilk, K. Malinowski, The software-defined fast post-processing for GEM soft X-ray diagnostics in the tungsten environment in steady-state tokamak thermal fusion reactor. Rev. Sci. Instrum. 89(6), 063504 (2018). https://doi.org/10.1063/1.5022131

R. Krawczyk, P. Linczuk, A. Wojeński, K. Poźniak, G. Kasprowicz, W. Zabołotny, M. Gaska, D. Mazon, A. Jardin, T. Czarski, P. Kolasiński, M. Chernyshova, E. Kowalska-Strzęciwilk, K. Malinowski, Novel application of parallel computing techniques in soft X-rays plasma measurement systems for the WEST experimental thermal fusion reactor. In: 2018 26th Euromicro International Conference on Parallel, Distributed and Network-based Processing (PDP) (IEEE, 2018), pp. 118–125. https://doi.org/10.1109/PDP2018.2018.00024. https://ieeexplore.ieee.org/document/8374447/

A.F. Buzulutskov, Radiation detectors based on gas electron multipliers (review). Instrum. Exp. Tech. 50(3), 287–310 (2007). https://doi.org/10.1134/S0020441207030013

C. Altunbas, M. Capéans, K. Dehmelt, J. Ehlers, J. Friedrich, I. Konorov, A. Gandi, S. Kappler, B. Ketzer, R. De Oliveira, S. Paul, A. Placci, L. Ropelewski, F. Sauli, F. Simon, M. van Stenis, Construction, test and commissioning of the triple-GEM tracking detector for COMPASS. Nucl. Instrum. Methods Phys. Res. A 490(1–2), 177–203 (2002). https://doi.org/10.1016/S0168-9002(02)00910-5

T. Czarski, M. Chernyshova, K. Malinowski, K.T. Pozniak, G. Kasprowicz, P. Kolasinski, R. Krawczyk, A. Wojenski, W. Zabolotny, The cluster charge identification in the GEM detector for fusion plasma imaging by soft X-ray diagnostics. Rev. Sci. Instrum. 87(11), 11E336 (2016). https://doi.org/10.1063/1.4961559

A. Wojenski, K.T. Pozniak, G. Kasprowicz, P. Kolasinski, R. Krawczyk, W. Zabolotny, M. Chernyshova, T. Czarski, K. Malinowski, FPGA-based GEM detector signal acquisition for SXR spectroscopy system. J. Instrum. 11(11), C11035 (2016)

A. Nowak, G. Bitzes, A. Dotti, A. Lazzaro, S. Jarp, P. Szostek, L. Valsan, M. Botezatu, J. Leduc, Does the Intel Xeon Phi processor fit HEP workloads? J. Phys. Conf. Ser. 513(5), 052024 (2014). https://doi.org/10.1088/1742-6596/513/5/052024

Acknowledgements

This scientific work was partly supported by the Polish Ministry of Science and Higher Education within the framework of the scientific financial resources allocated in the years 2016 and 2017 for the realization of the international co-financed project (Agreement Nos. 332380/PnH/2016 and 3814/H2020-Euratom/2017/2).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Zabołotny, W.M., Kasprowicz, G., Poźniak, K. et al. FPGA and Embedded Systems Based Fast Data Acquisition and Processing for GEM Detectors. J Fusion Energ 38, 480–489 (2019). https://doi.org/10.1007/s10894-018-0181-2

DOI: https://doi.org/10.1007/s10894-018-0181-2