Abstract

If the semigroup is slowly non-dissipative, i.e., its solutions can diverge to infinity as time tends to infinity, one can still study its dynamics via unbounded attractors, the counterpart of the classical notion of global attractors. We continue the development of this theory, started by Chepyzhov and Goritskii (Unbounded attractors of evolution equations. Advances in Soviet mathematics, American Mathematical Society, Providence, 1992). We provide abstract results on the existence of unbounded attractors, and we study the properties of these attractors, as well as of unbounded \(\omega \)-limit sets, in the slowly non-dissipative setting. We also develop the pullback non-autonomous counterpart of the unbounded attractor theory. The abstract theory is illustrated by the analysis of the autonomous problem governed by the equation \(u_t = Au + f(u)\). In particular, using the inertial manifold approach, we provide criteria under which the unbounded attractor coincides with the graph of a Lipschitz function, or becomes close to the graph of a Lipschitz function for large arguments.

1 Introduction and Summary of Concepts

Typically, to build the theory of global attractors (and their non-autonomous counterparts) one needs the dynamical system to be dissipative, i.e. there should exist a bounded absorbing set [2, 7, 12, 19, 30]. However, starting from the work of Chepyzhov and Goritskii [9], the concept of the global attractor has been generalized to the case of slowly non-dissipative systems, i.e. systems whose orbits are not absorbed by one given bounded set and possibly diverge to infinity as time tends to infinity, but which exhibit no blow-up in finite time. We remark that in recent years, stemming from [9], a significant body of work on unbounded attractors has appeared, cf. [3, 6, 13, 18, 21, 27]. An abstract approach to autonomous and non-autonomous unbounded attractors has very recently been proposed in [4].

To illustrate the underlying concept we start from two simple motivating examples.

Example 1

Consider the ODE

The point (0, 0) is an equilibrium. Solutions starting from (x, 0) with \(x\ne 0\) tend to plus or minus infinity in x as time tends to infinity, and tend to (0, 0) as time tends to \(-\infty \). Any solution starting from (0, y) tends to (0, 0) as time tends to infinity. All other solutions are unbounded both in the past and in the future. The set \(\{ (x,0)\,:\ x\in {{\mathbb {R}}}\}\) is invariant, attracting, and unbounded. Moreover, every solution in this set is bounded in the past.
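To make the description concrete, here is a minimal sketch assuming the hypothetical system \(x' = x\), \(y' = -y\). This is only one ODE consistent with the behavior described above, not necessarily the system of the original text. Its flow is explicit, so the listed properties can be checked directly; the function names are illustrative.

```python
import math

# Hypothetical instance of Example 1 (assumption): x' = x, y' = -y.
# Its flow is explicit: S(t)(x0, y0) = (x0 e^t, y0 e^{-t}).
def flow(t, x0, y0):
    return x0 * math.exp(t), y0 * math.exp(-t)

# Distance from S(t)(x0, y0) to the invariant set {(x, 0) : x in R}.
def dist_to_x_axis(t, x0, y0):
    return abs(flow(t, x0, y0)[1])

# A point on the x-axis: unbounded forward in time, tends to (0, 0) backward.
print(flow(10.0, 1.0, 0.0), flow(-10.0, 1.0, 0.0))
# A point on the y-axis: tends to (0, 0) forward in time.
print(flow(10.0, 0.0, 1.0))
# A generic point is unbounded in the past (the y component blows up backward).
print(flow(-10.0, 1.0, 1.0))
# Every point approaches the x-axis at rate e^{-t}.
print([dist_to_x_axis(t, 3.0, 2.0) for t in (0.0, 1.0, 5.0)])
```

In this model every solution on the x-axis is bounded in the past, while generic solutions are unbounded in both time directions, matching the qualitative picture of Example 1.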

Example 2

Consider another ODE

Again, the point (0, 0, 0) is an equilibrium. All other solutions with \(z=0\) are periodic: their orbits are circles in the (x, y) plane centered at zero, and every such circle is a periodic orbit. The set \(\{ (x,y,0)\, :\ (x,y)\in {{\mathbb {R}}}^2 \}\) is invariant, unbounded and attracting. Every solution in this set is bounded both in the past and in the future. All other solutions are unbounded in the past and bounded in the future.
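A minimal sketch, assuming the hypothetical system \(x'=-y\), \(y'=x\), \(z'=-z\). This is one ODE consistent with the description of Example 2, not necessarily the one in the original text. The \((x,y)\)-component rotates rigidly, so circles centered at zero are periodic orbits, while \(z\) decays forward and grows backward in time.

```python
import math

# Hypothetical instance of Example 2 (assumption): x' = -y, y' = x, z' = -z.
# The (x, y) part is a rotation by angle t; the z part decays like e^{-t}.
def flow(t, x0, y0, z0):
    c, s = math.cos(t), math.sin(t)
    return c * x0 - s * y0, s * x0 + c * y0, z0 * math.exp(-t)

def radius(p):
    # Distance of the (x, y) component from the origin.
    return math.hypot(p[0], p[1])

start = (1.0, 2.0, 0.0)
print(flow(2 * math.pi, *start))       # returns to the start: a periodic orbit
print(radius(flow(3.7, *start)))       # the radius of the circle is preserved
print(flow(5.0, 1.0, 0.0, 0.5)[2])     # z component small in the future
print(flow(-5.0, 1.0, 0.0, 0.5)[2])    # z component large in the past
```

Points in the plane \(z=0\) thus have solutions bounded in both time directions, while off this plane the solutions are bounded forward and unbounded backward.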

The above two examples show that it is natural to consider the points in the phase space through which there exists a solution, defined for all \(t\in {\mathbb {R}}\), which is bounded in the past, and one can expect that the set of such points should be invariant and, in an appropriate sense, attracting. This observation allowed Chepyzhov and Goritskii [9] to define the concept of the unbounded attractor for the problem governed by the PDE \(u'=Au + f(u)\) as the set of initial conditions of solutions which are bounded in the past. In this paper we follow the same concept.

The key contribution of the present article is the refinement and extension of the results of [9] in several directions. We now describe in detail the contribution and novelty of our work in comparison with previous research on unbounded attractors. First of all, we provide new criteria for a semigroup of operators \(\{S(t)\}_{t\ge 0}\) on a Banach space X to have an unbounded attractor. This semigroup does not necessarily have to be governed by a differential equation, be it a PDE or an ODE. Hence, the result on the existence of the unbounded attractor, Theorem 3, the first important theorem of the present article, is formulated and proved for an abstract semigroup. We stress that while the argument to prove this theorem mostly follows the lines of the corresponding proofs in [9], the present contribution lies in finding the abstract criteria, cf. (H1)–(H3) in the sequel, which guarantee its existence.

A key property needed in the proof is the possibility to split the phase space X of the problem as the direct sum of two spaces, \(X=E^+\oplus E^-\), such that the projection P of the solution on \(E^+\) can possibly diverge to infinity, while its projection \(I-P\) on \(E^-\) enjoys dissipative properties. For general semigroups satisfying our criteria, following [9], the unbounded attractor \({\mathcal {J}}\) attracts bounded sets B only in bounded sets in \(E^+\), that is

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}\big (S(t)B \cap \{ \Vert Px\Vert \le R\}, {\mathcal {J}}\big ) = 0, \qquad (1) \end{aligned}$$

as long as the sets \(S(t)B \cap \{ \Vert Px\Vert \le R\}\) stay nonempty. A natural question is when one can remove the set \(\{ \Vert Px\Vert \le R\}\) from the above attracting property. While in [9] the authors prove such convergence if the linear manifold \(E^+\) is attracting for the solutions that diverge to infinity, we provide a more general criterion [see condition (A1) in Sect. 2.3], and show such attraction in Theorem 4. The criterion says that attraction in the whole space occurs if there exists an attracting set whose thickness in the space \(E^-\) tends to zero as \(\Vert Pu\Vert \) tends to infinity. This result later becomes useful in the part on inertial manifolds for problems governed by PDEs, see Sect. 4.4.3.

We compare the results of our article with those of the very recent paper of Bortolan and Fernandes [4], where another abstract framework, also stemming from the work [9], has been proposed. The authors there provide abstract conditions which guarantee the existence of an unbounded attractor, which they define as the smallest closed set \({\mathcal {U}}\) which is at the same time invariant and attracting in the sense that

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}\big (S(t)B, {\mathcal {U}}\big ) = 0 \quad \text {for every bounded set } B\subset X. \qquad (2) \end{aligned}$$

Similarly to us, and following the approach of Chepyzhov and Goritskii [9], the authors of [4] consider the maximal invariant set \({\mathcal {I}}\) of a semigroup and find conditions which guarantee that it is an unbounded attractor. The crucial difference between [4] and our approach is the way to obtain the key property of this attractor, namely that it attracts. In [4] it is assumed that the maximal invariant set attracts the absorbing set, which allows the authors to get the attraction (2). On the one hand this assumption appears strong, but on the other hand it allows the authors to build an abstract framework without the need of the spaces \(E^+\) and \(E^-\). Our approach is based on these spaces, which, in principle, makes it less general; at the same time, however, we do not need to assume any attraction: we recover it from the existence of an appropriate absorbing set and generalized asymptotic compactness. Moreover, in our approach the attraction is considered in bounded sets, in the sense (1). Once we obtain it, we provide an additional criterion on the thickness of the absorbing set under which the attraction occurs in the standard sense (2). The same comparison extends to the non-autonomous theory built in [4] and in the present paper.

The next series of our results is inspired by the works [6, 18], later extended and refined in [3, 21, 27]. Namely, for the case of the semigroup governed by the following PDE in one space dimension

on a space interval, with appropriate (typically Neumann) boundary data, and a sufficiently large constant \(b>0\), the authors there study the detailed structure of the unbounded attractor, which consists of equilibria, their heteroclinic connections, appropriately understood ‘equilibria at infinity’ and their connections, as well as heteroclinic connections from the equilibria to the ‘equilibria at infinity’. We present new abstract results on the structure of the attractor in the general, not necessarily gradient, case. Clearly, in such a general case it is not possible to fully describe the structure of the attractor. However, we prove in Sect. 2.4 that the unbounded attractor must consist of an invariant set \({\mathcal {J}}_b\), built from the points through which there passes a solution bounded both in the past and in the future, and the remainder \({\mathcal {J}}\setminus {\mathcal {J}}_b\), which consists of the heteroclinics to infinity, namely of the points whose \(\alpha \)-limits belong to \({\mathcal {J}}_b\) and whose dynamics diverges to infinity as time tends to infinity. We study the properties of these sets. In particular, we show that \({\mathcal {J}}_b\) enjoys the properties of a global attractor: it is compact under an additional natural assumption (H4), which, however, excludes cases like Example 2 above, cf. Theorem 8; moreover, the set of points in the phase space which upon evolution converge to \({\mathcal {J}}_b\) is a complete metric space on which \({\mathcal {J}}_b\) is a classical global attractor, cf. Lemma 8.

We conclude the abstract results on slowly non-dissipative autonomous problems with several observations which are, to our knowledge, new. The first one is that the unbounded attractor coincides with a multivalued graph over \(E^+\), which we call a multivalued inertial manifold in the spirit of [15, 29], cf. Sect. 2.5. The second observation concerns unbounded \(\omega \)-limit sets, whose properties we study in Sect. 2.6 and which, to our knowledge, have not been studied before. Interestingly, while we prove the unbounded counterparts of the classical properties of \(\omega \)-limit sets, i.e. invariance, compactness, and attraction, we were not able to prove their connectedness, and we leave this question open for now. Finally, in Sect. 2.7, we discuss, on an abstract level, the dynamics at infinity, which corresponds to the equilibria at infinity and their connections in [3, 6, 18, 21, 27], and we prove, using the Hopf lemma and the homotopy invariance of the degree, that the attractor at infinity always coincides with the whole unit sphere in \(E^+\).

The study of unbounded non-autonomous attractors was initiated by Carvalho and Pimentel in [13]. The authors study there the non-autonomous problem governed by the equation

For this problem they formulate the definition of the unbounded pullback attractor, the natural extension of the autonomous definition, and provide a result on its existence. We continue the study of pullback unbounded attractors: we give the general definition of such an object and prove a result on its existence in the abstract framework for slowly non-dissipative processes, cf. Theorem 11. The unbounded pullback attractor is a family of sets \(\{{\mathcal {J}}(t) \}_{t\in {\mathbb {R}}}\), each of them unbounded. We obtain a result on its structure similar to the autonomous one: \({\mathcal {J}}(t) = {\mathcal {J}}_b(t) \cup ({\mathcal {J}}(t)\setminus {\mathcal {J}}_b(t))\), where \({\mathcal {J}}_b(t)\) shares the properties of classical pullback attractors, and the solutions through points of \({\mathcal {J}}(t)\setminus {\mathcal {J}}_b(t)\) (at time t) diverge to infinity forward in time. Note that the construction of the sets \({\mathcal {J}}_b(t)\) is not a simple transfer of the autonomous construction to the non-autonomous case. In the autonomous case the corresponding set \({\mathcal {J}}_b\) is constructed using the concept of \(\alpha \)-limit sets, while in the non-autonomous situation we need to study the forward behavior of the points from the unbounded pullback attractor. Finally, we provide the construction of pullback \(\omega \)-limit sets in the unbounded non-autonomous framework. As we prove, there naturally appear two universes of sets for which one can define such \(\omega \)-limits: the backward bounded ones, and the ones whose forward solutions stay bounded. We prove that for the first universe the non-autonomous \(\omega \)-limit sets are attracting and invariant in \({\mathcal {J}}(t)\), while for the second one they are attracting and invariant in \({\mathcal {J}}_b(t)\).

The second part of this paper is devoted to the study of the unbounded attractors for the dynamical system governed by the following differential equation in the Banach space X

where A is a linear, closed and densely defined operator which is sectorial, has compact resolvent, and has no eigenvalues on the imaginary axis. Denoting by P its spectral projection onto the space associated with \(\{ \text {Re}\, \lambda > 0 \}\), which is our space \(E^+\), we have

where \(\gamma _0, \gamma _1, \gamma _2, M\) are positive constants. Thus, our analysis is based on the general concept of mild solutions and the Duhamel formula, rather than on weak solutions and energy estimates as in [9]. Our first goal is to show, in Theorem 15, that if f(u) tends to zero with an appropriate rate as \(\Vert Pu\Vert \) tends to infinity, then the thickness of the unbounded attractor also tends to zero. In such a case one can remove from the definition of the unbounded attractor the restriction that the attraction takes place in balls. A similar result has already been obtained in [9], where, however, polynomial decay of f is assumed, namely \(\Vert f(u)\Vert \le \frac{C}{\Vert Pu\Vert ^\alpha }\). We recover and improve this result in the framework of mild solutions by allowing for very slow decay, such as \(\Vert f(u)\Vert \le \frac{C}{\ln (\Vert Pu\Vert )}\) for large \(\Vert Pu\Vert \).

We continue the analysis by addressing the question whether the thickness of the unbounded attractor can tend to zero (and thus whether the attraction by the unbounded attractor can occur in the whole space) without the decay of f. We answer this question positively with the help of the theory of inertial manifolds. Inertial manifolds in the framework of unbounded attractors have already been studied by Ben-Gal [6]. In this classical approach the spectral gap can be taken between any two (sufficiently large) eigenvalues of \(-A\), and this leads to an inertial manifold which contains the unbounded attractor. We follow a different path: we choose the spectral gap exactly between the spaces \(E^+\) and \(E^-\). While this imposes a strong restriction on the Lipschitz constant of the nonlinearity, interestingly, as we show, this approach leads to an inertial manifold which coincides exactly with the unbounded attractor. We construct this manifold by two methods: the Hadamard graph transform method, where we base our approach on the classical article [24] (note that the non-autonomous version of the construction of inertial manifolds by the graph transform method, valid in the context of unbounded attractors but based on energy estimates rather than on the Duhamel formula, has been realized in [17]), and the Lyapunov–Perron method, based on its modern non-autonomous rendition in the framework of inertial manifolds [11]. We find that, although the graph transform method works only for the case \(M=1\), a numerical comparison of the restrictions on \(L_f\), the Lipschitz constant of f, obtained by the two methods shows that the graph transform approach gives a better (i.e. larger, although still expected to be non-sharp, cf. [31, 32]) bound on \(L_f\) than the Lyapunov–Perron method.
Both of the above restrictions are likely due to the non-optimality of our approach (see, for example, [28], where the author, for a dissipative and self-adjoint principal operator, reduces a version of the Lyapunov–Perron method to the verification of the cone condition, and thus shows that the restriction on the Lipschitz constant is the same in both approaches), but we cannot entirely exclude the possibility that, for the problem under consideration, both approaches have some inherent limitations. The question of how to optimize the constant in the Lyapunov–Perron method and how to realize the graph transform for \(M>1\), in our opinion, deserves further study.

In our further analysis we demonstrate that it is in fact sufficient to assume that the nonlinearity has a small Lipschitz constant only for large \(\Vert Pu\Vert \), while for small \(\Vert Pu\Vert \) it does not have to be Lipschitz at all. While in such a case the unbounded attractor does not have to be a Lipschitz manifold, we demonstrate, by an appropriate modification of the nonlinearity f, that for large \(\Vert Pu\Vert \) it has to stay close to a Lipschitz manifold, whence its thickness, although it may be nonzero (contrary to the case of a small global Lipschitz constant), tends to zero with increasing \(\Vert Pu\Vert \).

In the last result of this article we discuss the asymptotic behavior at infinity, which generalizes the results of [13, 18]. Namely, we show that the rescaled problem at infinity is asymptotically autonomous, and hence its \(\omega \)-limits coincide with the \(\omega \)-limits of the finite dimensional autonomous problem at infinity, whose dynamics can be explicitly constructed.

We stress that while we endeavor to build a comprehensive theory of unbounded attractors, our approach still has limitations. While we consider only the possibility of grow-up solutions, there are other possible asymptotic behaviors which our theory could encompass (see [8] for a recent and comprehensive overview). Moreover, in most of the results we consider only directions that are dissipative (those in \(E^-\)) and expanding (those in \(E^+\)). It would be interesting to extend the analysis to the case of neutral directions, in which the solutions are not absorbed in finite time by a given bounded set, but still stay bounded, such as in Example 2, especially since such problems have recently attracted significant interest in fluid mechanics, cf. [5].

The plan of this article is the following: Sect. 2 is devoted to the autonomous abstract theory of unbounded attractors. In Sect. 3 we study non-autonomous unbounded attractors in the abstract framework. Finally, in Sect. 4 we present the results on unbounded attractors for the problem governed by the equation \(u_t = Au + f(u)\).

2 Unbounded Attractors and \(\omega \)-Limit Sets: Autonomous Case

2.1 Non-dissipative Semigroups and Their Invariant Sets

If X is a metric space, then \({\mathcal {P}}(X)\) denotes the family of subsets of X, \({\mathcal {P}}_0(X)\) the family of its nonempty subsets, and \({\mathcal {B}}(X)\) the family of its nonempty and bounded subsets.

Definition 1

Let X be a metric space. A family of mappings \(\{ S(t):D(S(t)) \subset X \rightarrow X \}_{t\ge 0}\) is a solution operator family if

-

\(D(S(0))=X\) and \(S(0) = I\) (identity on X),

-

For each \(x\in X\) there exists a \(\tau _x\in (0,\infty ]\) such that \(x\in D(S(t))\) for all \(t\in [0,\tau _x)\).

-

For every \(x\in X\) and \(t,s\in {{\mathbb {R}}}^+\) such that \(t+s\in [0,\tau _x)\) we have \(S(t+s)x = S(t)S(s)x\),

-

If \(E=\{(t,x)\in {{\mathbb {R}}}^+\times X: t\in [0,\tau _x)\}\), the mapping \(E \ni (t,x)\mapsto S(t)x\in X\) is continuous.

If \(\tau _x=+\infty \) for all \(x\in X\), then \(D(S(t))=X\) for all \(t\ge 0\) and \(E={{\mathbb {R}}}^+\times X\). In this case we say that the family \(\{ S(t)\}_{t\ge 0}\) is a continuous semigroup.

When we apply the above definition to differential equations, the mapping S(t) assigns to the initial data the value of the solution at time t.
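The identities \(S(0)=I\) and \(S(t+s)=S(t)S(s)\) in Definition 1 can be spot-checked numerically when a flow is known in closed form. The sketch below is illustrative only: the helper `check_semigroup` and the linear flow of the hypothetical system \(x'=x\), \(y'=-y\) are assumptions, not part of the text, and a finite random sample of course does not prove the identity.

```python
import math
import random

def linear_flow(t, state):
    # Hypothetical flow of x' = x, y' = -y, defined for all t >= 0 (tau_x = +inf).
    x, y = state
    return (x * math.exp(t), y * math.exp(-t))

def check_semigroup(flow, samples=1000, seed=1, rtol=1e-9):
    """Spot-check S(0) = I and S(t + s) x = S(t) S(s) x on random data."""
    rng = random.Random(seed)
    for _ in range(samples):
        x = (rng.uniform(-5, 5), rng.uniform(-5, 5))
        t, s = rng.uniform(0, 3), rng.uniform(0, 3)
        if flow(0.0, x) != x:          # S(0) must be the identity
            return False
        lhs = flow(t + s, x)           # S(t + s) x
        rhs = flow(t, flow(s, x))      # S(t) S(s) x
        for a, b in zip(lhs, rhs):
            if abs(a - b) > rtol * max(1.0, abs(a)):
                return False
    return True

print(check_semigroup(linear_flow))
```

A map such as \((t,(x,y))\mapsto (x+t^2, y)\) satisfies \(S(0)=I\) but fails the composition rule, and the check reports this.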

Definition 2

The function \([0,\tau _x)\ni t \mapsto S(t)x\in X\) is called a solution.

Definition 3

The function \(\gamma :{\mathbb {R}}\rightarrow X\) is a global solution if \(S(t)\gamma (s) = \gamma (s+t)\) for every \(s\in {\mathbb {R}}\) and \(t\ge 0\).

Definition 4

The global solution \(\gamma \) is bounded in the past if the set \(\gamma ({\mathbb {R}}^-)=\{\gamma (t)\in X:t\le 0\}\) is bounded.

Definition 5

The global solution \(\gamma \) is bounded if the set \(\gamma ({\mathbb {R}})=\{\gamma (t)\in X:t\in {\mathbb {R}}\}\) is bounded. If \(x=\gamma (0)\) we say that \(\gamma :{{\mathbb {R}}}\rightarrow X\) is a global solution through x.

The non-dissipativity of the problem can be manifested threefold:

-

(A)

solutions may cease to exist (e.g. blow up to infinity) in finite time, that is, for some \(x\!\in \! X\), \(\tau _x\!<\!\infty \).

-

(B)

solutions may grow up, that is, it may happen that \(\tau _x=\infty \) for some \(x\in X\) while the set \(\{ S(t)x: t\ge 0\}\) is unbounded (this is the case in Example 1),

-

(C)

all solutions may stay bounded but, even so, there may not exist a bounded set which absorbs all of them (this is the case in Example 2).

We will assume that \(\tau _x=\infty \) for all \(x\in X\), that is, that \(\{S(t)\}_{t\ge 0}\) is a semigroup. Hence we exclude the situation of item (A) above.

Systems which exhibit behavior (B) or (C) for some solutions are called slowly non-dissipative, cf. [6]. In the case of grow-up, i.e. in case (B), it is still possible to distinguish between two situations: either for some \(x\in X\) we have \(\lim _{t\rightarrow \infty }d(S(t)x,x) = \infty \) (the solution diverges), or \(\limsup _{t\rightarrow \infty }d(S(t)x,x)=+\infty \) but the solution does not diverge (it oscillates). To see that the latter situation is possible, consider the simple non-autonomous example

then, if \(x(0) = 0\), the solution is \(x(t) = t\sin (t)\): it returns to zero at every \(t=k\pi \), while the amplitude of its oscillations grows to infinity as \(t\rightarrow \infty \). Later we will introduce an assumption on the semigroup which excludes such situations.
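The differential equation itself is not reproduced in the text. Differentiating \(x(t)=t\sin(t)\) suggests the right-hand side \(x'(t)=\sin(t)+t\cos(t)\), which we assume below. The sketch integrates this assumed equation with a hand-written classical fourth-order Runge–Kutta (RK4) scheme and checks numerically that the solution returns to zero at \(t=k\pi\), while its values at \(t=2k\pi+\pi/2\) grow without bound.

```python
import math

def rhs(t, x):
    # Assumed right-hand side: d/dt [t sin t]; it does not depend on x.
    return math.sin(t) + t * math.cos(t)

def rk4(f, t0, x0, t1, steps=4000):
    """Classical fourth-order Runge-Kutta from (t0, x0) to time t1."""
    h = (t1 - t0) / steps
    t, x = t0, x0
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x

for k in range(1, 5):
    zero_time = k * math.pi                     # t sin t vanishes here
    peak_time = 2 * k * math.pi + math.pi / 2   # t sin t equals t here
    print(k, rk4(rhs, 0.0, 0.0, zero_time), rk4(rhs, 0.0, 0.0, peak_time))
```

The second column stays near zero, while the third one grows linearly in k. This is the oscillating grow-up that the later assumption rules out.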

We define two notions of invariant sets for non-dissipative semigroups. The first definition follows [9], where the set is called the maximal invariant set.

Definition 6

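Let \(\{ S(t) \}_{t\ge 0} \) be a continuous semigroup. The set \({\mathcal {I}}\) is the maximal invariant set if

$$\begin{aligned} {\mathcal {I}} = \{ u\in X\,:\ \text {there exists a global solution } \gamma \text { bounded in the past such that } \gamma (0)=u \}. \end{aligned}$$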

It is also possible to define another notion, which coincides with the classical concept in the theory of global attractors.

Definition 7

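Let \(\{ S(t) \}_{t\ge 0} \) be a continuous semigroup. The set \({\mathcal {I}}_b\) is the maximal invariant set of bounded solutions if

$$\begin{aligned} {\mathcal {I}}_b = \{ u\in X\,:\ \text {there exists a bounded global solution } \gamma \text { such that } \gamma (0)=u \}. \end{aligned}$$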

We make a simple observation.

Observation 1

We have \({\mathcal {I}}_b \subset {\mathcal {I}}\) with the possibility of strict inclusion. \({\mathcal {I}}\) is unbounded and \({\mathcal {I}}_b\) may be unbounded. Both sets are invariant, i.e. \(S(t) {\mathcal {I}} = {\mathcal {I}}\) and \(S(t) {\mathcal {I}}_b = {\mathcal {I}}_b\) for all \(t\ge 0\). Furthermore,

In Example 2 above \({\mathcal {I}} = {\mathcal {I}}_b = {\mathbb {R}}^2\times \{0\}\). In Example 1 above \({\mathcal {I}} = {\mathbb {R}}\times \{0\}\) and \({\mathcal {I}}_b =\{ (0,0) \}\). Note that in Example 1 the set \({\mathcal {I}}\setminus {\mathcal {I}}_b\) consists of heteroclinics to infinity as defined in [6], see also [18] and the articles [3, 13, 21, 27].

2.2 Existence of Unbounded Attractors

This section transfers to the abstract setting the results of Chepyzhov and Goritskii [9], obtained there for the particular initial and boundary value problem governed by the PDE \(u' = \Delta u + \beta u + f(u)\) on a bounded domain with homogeneous Dirichlet boundary data. In the proofs we will frequently use the following result, which appears in [9, Lemma 2.1].

Lemma 1

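Let \(\{L_n\}\) be a sequence of nested sets in a Banach space X, i.e.

$$\begin{aligned} L_1\supset L_2\supset \cdots \supset L_n\supset L_{n+1}\supset \cdots , \end{aligned}$$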

such that every set \(L_n\) lies in the \(\epsilon _n\)-neighborhood of some compact subset \(K_n\), namely \(L_n\subset {\mathcal {O}}_{\epsilon _n}(K_n)\), with \(\lim _{n\rightarrow \infty }\epsilon _n= 0\). Then from any sequence of points \(\{y_n\}\), where \(y_n\in L_n\), we can choose a convergent subsequence. Moreover the set \(L = \bigcap _{n=1}^\infty {\overline{L}}_n\) is compact.

Definition 8

The semigroup \(\{S(t)\}_{t\ge 0}\) is generalized asymptotically compact if for every \(B\in {\mathcal {B}}(X)\) and every \(t>0\) there exists a compact set \(K(t,B)\subset X\) and \(\varepsilon (t,B)\rightarrow 0\) as \(t\rightarrow \infty \) such that

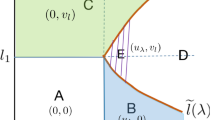

Now let X be a Banach space and let \(E^+\) and \(E^-\) be its subspaces. We assume that \(E^+\) is finite dimensional and that \(E^-\) is closed. Moreover, \(X= E^+ \oplus E^-\), i.e. we can uniquely represent any \(x\in X\) as \(x=p+q\) with \(p\in E^+\) and \(q\in E^-\). We denote \(p = Px\) and \(q=(I-P)x\). The closed graph theorem implies that the projections P and \(I-P\) are continuous. We will use the shorthand notation \(\{ \Vert Px\Vert \le R\} = \{ x\in X\;:\ \Vert Px\Vert \le R \}\) and \(\{ \Vert (I-P)x\Vert \le D\} = \{ x\in X\;:\ \Vert (I-P)x\Vert \le D \}\). We impose the following assumptions on the semigroup \(\{S(t)\}_{t\ge 0}\) in order to prove the existence of an unbounded attractor:

-

(H1)

There exist \(D_1, D_2 > 0\) and a closed set Q with \(\{ \Vert (I-P)x\Vert \le D_1\} \subset Q \subset \{ \Vert (I-P)x\Vert \le D_2\}\) such that Q absorbs bounded sets, and is positively invariant, i.e. for every \(B\in {\mathcal {B}}(X)\) there exists \(t_0(B) > 0\) such that \(\bigcup _{t\ge t_0}S(t)B \subset Q\) and \(S(t)Q \subset Q\) for every \(t\ge 0\).

-

(H2)

There exist constants \(R_0\) and \(R_1\) with \(0<R_0\le R_1\) and an ascending family of closed and bounded sets \(\{H_R\}_{R\ge R_0}\) with \(H_R \subset Q\), such that

-

(1)

for every \(R\ge R_1\) we can find \(S(R)\ge R_0\) such that \(\{ \Vert Px\Vert \le S(R)\} \cap Q \subset H_R\) and moreover \(\lim _{R\rightarrow \infty } S(R) = \infty \),

-

(2)

for every \(R\ge R_1\) we have \( H_{R} \subset \{ \Vert Px\Vert \le R\}\),

-

(3)

and \(S(t)(Q \setminus H_R) \subset Q \setminus H_R\) for every \(t\ge 0\).

-

(H3)

The semigroup \(\{S(t)\}_{t\ge 0}\) is generalized asymptotically compact.
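As a sanity check of how (H1) and (H2) read in a concrete case, take the hypothetical system \(x'=x\), \(y'=-y\) (an assumption, used only for illustration), with \(E^+\) the x-axis, \(E^-\) the y-axis, \(Q=\{|y|\le D\}\) and \(H_R=\{|x|\le R\}\cap Q\). In this model item 1 of (H2) holds with \(S(R)=R\), and item 2 is immediate. The sketch samples points and checks the positive invariance of Q and of \(Q\setminus H_R\), and it computes the absorbing time of a ball. The constants D and R are illustrative choices.

```python
import math
import random

D, R = 1.0, 5.0  # illustrative constants (assumptions)

def flow(t, x, y):
    # Hypothetical flow of x' = x, y' = -y.
    return x * math.exp(t), y * math.exp(-t)

def in_Q(x, y):
    return abs(y) <= D

def in_H(x, y):
    return abs(x) <= R and in_Q(x, y)

def absorbing_time(r):
    # A ball of radius r is mapped into Q for all t >= max(0, ln(r / D)).
    return max(0.0, math.log(r / D))

def check_invariance(samples=20000, seed=0):
    """Check S(t)Q subset Q (H1) and S(t)(Q minus H_R) subset Q minus H_R (item 3 of (H2))."""
    rng = random.Random(seed)
    for _ in range(samples):
        x, y = rng.uniform(-20.0, 20.0), rng.uniform(-D, D)
        t = rng.uniform(0.0, 5.0)
        xt, yt = flow(t, x, y)
        if not in_Q(xt, yt):
            return False
        if not in_H(x, y) and in_H(xt, yt):
            return False
    return True

print(check_invariance(), absorbing_time(10.0))
```

In this model, generalized asymptotic compactness (H3) is not at issue, since the phase space is finite dimensional and images of bounded sets under S(t) are bounded.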

Define the unbounded attractor as

$$\begin{aligned} {\mathcal {J}} = \bigcap _{t\ge 0} \overline{S(t)Q}. \end{aligned}$$

It is clear that the unbounded attractor is a closed set. We begin by establishing the relation between the maximal invariant set \({\mathcal {I}}\) and the unbounded attractor \({\mathcal {J}}\). The argument follows the lines of the proofs of [9, Proposition 2.7 and Proposition 2.10 c)].

Theorem 1

If (H1)–(H3) hold, then \({\mathcal {I}} = {\mathcal {J}}\).

Proof

We first prove that \({\mathcal {I}}\subset {\mathcal {J}}\). To this end assume that \(u \in {\mathcal {I}}\) and denote by \(\gamma \) a global solution, bounded in the past, such that \(u = \gamma (0)\). Since the set \(\{ \gamma (s)\,:\ s\le 0 \}\) is bounded, by (H1) there exists T such that \(S(T)\{ \gamma (s)\,:\ s\le 0 \} \subset Q\). If \(t\ge 0\), then \(S(T+t)\{ \gamma (s)\,:\ s\le 0 \} \subset S(t)Q\). But, since \(u = S(T+t)\gamma (-T-t)\), we get \(u\in S(t)Q\) for every \(t\ge 0\) and, consequently, \(u\in {\mathcal {J}}\).

We establish the converse inclusion. To this end take \(u\in {\mathcal {J}}\). Then there exists a sequence \(\{y_n\} \subset Q\) such that \(S(n)y_n \rightarrow u\) as \(n\rightarrow \infty \). Since \(\{ S(n)y_n \}\) is a convergent sequence, it is bounded, and as it is contained in Q by (H1), item 1 of (H2) implies that \(\{ S(n)y_n \} \subset H_R\) for some \(R\ge R_0\); as \(H_R\) is closed, also \(u\in H_R\). By item 3 of (H2) this means that for every n and for every \(t\in [0,n]\) we have \(S(t)y_n \in H_R\). As \(S(n)y_n = S(1)S(n-1)y_n\), we deduce that \(S(n-1)y_n \in H_R\cap S(n-1)H_R = H_R \cap S(n-1)Q\). By (H3) and Lemma 1 it follows that, for a subsequence, \(S(n-1)y_n \rightarrow z\), whence \(S(1)z = u\) and \(z\in H_R\). Picking \(t\ge 0\), for n large enough we have \(S(t)Q \ni S(t)S(n-1-t)y_n = S(n-1)y_n \rightarrow z\), and hence \(z\in {\mathcal {J}}\). Proceeding recursively, we can construct a solution \(\{ \gamma (t)\,:\ t\le 0\} \subset Q\) such that \(\gamma (0) = u\). As \(u\in H_R\), by item 3 of (H2) it must be that \(\gamma (t) \in H_R\) for every \(t\le 0\); thus \(\gamma \) is bounded in the past, \(u\in {\mathcal {I}}\), and the proof is complete. \(\square \)

We formulate a simple lemma on the possible behavior of bounded sets upon evolution.

Lemma 2

Assume (H1)–(H2) and let \(R\ge R_0\). If \(B\in {\mathcal {B}}(X)\), then exactly one of the following three cases holds:

-

(1)

there exists \(t_1 > 0\) such that for every \(t\ge t_1\)

$$\begin{aligned} S(t)B \subset Q \setminus H_R. \end{aligned}$$

-

(2)

there exists \(t_1 > 0\) such that for every \(t\ge t_1\)

$$\begin{aligned} S(t)B \cap H_R \ne \emptyset \ \ \text {and}\ \ S(t)B \cap (Q \setminus H_R) \ne \emptyset . \end{aligned}$$

-

(3)

there exists \(t_1 > 0\) such that for every \(t\ge t_1\)

$$\begin{aligned} S(t)B \subset H_R . \end{aligned}$$

Proof

Suppose that (3) does not hold. Then there exists \(t_1 > t_0(B)\) such that \(S(t_1)B \cap (Q\setminus H_R) \ne \emptyset \). By item 3 of (H2) we deduce that \(S(t)B \cap (Q\setminus H_R) \ne \emptyset \) for every \(t\ge t_1\). If for some \(t_2\ge t_1\) there holds \(S(t_2) B \cap H_R = \emptyset \), then \(S(t_2)B\subset Q\setminus H_R\), and item 3 of (H2) implies that \(S(t)B\subset Q\setminus H_R\) for every \(t\ge t_2\), i.e. (1) holds. Otherwise \(S(t)B \cap H_R \ne \emptyset \) for every \(t\ge t_1\), i.e. (2) holds. Clearly the three cases are mutually exclusive, which completes the proof. \(\square \)
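For a finite sample of a bounded set B, the trichotomy of Lemma 2 can be observed numerically. The sketch below assumes the hypothetical flow of \(x'=x\), \(y'=-y\) with \(H_R=\{|x|\le R,\ |y|\le D\}\). It inspects a single large time, assumed to be past the absorbing time, rather than all \(t\ge t_1\), so it is only a heuristic illustration of the lemma, not a test of it. The function `lemma2_case` is a hypothetical helper.

```python
import math

D, R = 1.0, 5.0  # illustrative constants (assumptions)

def flow(t, x, y):
    # Hypothetical flow of x' = x, y' = -y.
    return x * math.exp(t), y * math.exp(-t)

def in_H(x, y):
    return abs(x) <= R and abs(y) <= D

def lemma2_case(points, t):
    """Return 1, 2 or 3 according to which case of Lemma 2 S(t)B exhibits,
    where B is represented by a finite list of sample points and t is
    assumed to be large enough that S(t)B already lies in Q."""
    inside = [in_H(*flow(t, x, y)) for x, y in points]
    if not any(inside):
        return 1  # S(t)B contained in Q \ H_R
    if all(inside):
        return 3  # S(t)B contained in H_R
    return 2      # S(t)B meets both H_R and Q \ H_R

print(lemma2_case([(0.1, 0.0), (-2.0, 1.0)], 10.0))  # -> 1
print(lemma2_case([(0.0, 1.0), (1.0, 0.0)], 10.0))   # -> 2
print(lemma2_case([(0.0, 1.0), (0.0, -3.0)], 10.0))  # -> 3
```

Points with \(x\ne 0\) leave \(H_R\) through the expanding direction, while points on the y-axis are drawn into \(H_R\). This is why a set meeting both kinds of points falls into case (2).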

The following result is proved in [9, Lemma 2.8]; here we present a proof that makes explicit use of the Brouwer degree. This result, in particular, implies that the set \({\mathcal {I}} = {\mathcal {J}}\) is nonempty.

Lemma 3

Assume (H1)–(H3). For every \(p \in E^+\) there exists \(q\in E^-\) such that \(p+q \in {\mathcal {J}}\).

Proof

Let \(p\in E^+\). By item 1 of (H2) there exists \(R\ge R_1\) with \(S(R)\ge \Vert p\Vert +1\), whence \(\{ \Vert Px\Vert \le \Vert p\Vert +1\}\cap Q \subset H_R\); in particular, as \(E^+\subset Q\) by (H1), \(\{x\in E^+ \,:\ \Vert x\Vert \le \Vert p\Vert +1 \} \subset H_R\). Define \(B = \{ x\in E^+\, :\ \Vert x\Vert < R + 1\}\). Since, by item 2 of (H2), \(\Vert p\Vert \le R\), we have \(p \in B\), and it is clear that the Brouwer degree \(\text {deg}(I,B,p)\) is equal to one, cf. [25, Theorem 1.2.6 (1)]. Pick \(t>0\) and define the mapping \([0,1]\times {\overline{B}} \ni (\theta ,x) \mapsto P S(\theta t) x \in E^+\). This mapping is continuous. Moreover, by item 2 of (H2), \(\partial B = \{ x\in E^+\, :\ \Vert x\Vert = R + 1 \} \subset Q\setminus H_R\), so, by item 3 of (H2), \(S(\theta t)\partial B \subset Q\setminus H_R\) for every \(\theta \in [0,1]\). Since every point \(y\in Q\) with \(Py=p\) belongs to \(H_R\), we deduce that \(p \not \in PS(\theta t)\partial B\) for every \(\theta \in [0,1]\). Hence, by the homotopy invariance of the Brouwer degree, cf. [25, Theorem 1.2.6 (3)], we deduce that \(\text {deg}(P S(t),B,p) = 1\), whence, cf. [25, Theorem 1.2.6 (2)], there exists \(p_t\in B\) such that \(PS(t) p_t = p\). Let \(t_n\rightarrow \infty \) be increasing. There exists a sequence \(p_n \in B\) such that \(PS(t_n)p_n = p\). Hence we can find \(q_n \in E^-\) such that \(y_n = p+q_n = S(t_n)p_n \in S(t_n) Q\). Since \(y_n\in Q\) and \(\Vert Py_n\Vert = \Vert p\Vert \), item 1 of (H2) yields \(R' \ge R_1\) such that \(y_n \in H_{R'}\) for every n, and we may take \(R'\) so large that also \({\overline{B}}\subset H_{R'}\). This means that \(y_n \in S(t_n)H_{R'} \cap H_{R'}\). As \(H_{R'}\) is bounded and the sequence of sets \(S(t_n)H_{R'} \cap H_{R'}\) is nested, we can use (H3) and Lemma 1 to deduce that, for a subsequence, \(y_n \rightarrow y\). Since for every \(t\ge 0\) we have \(y_n \in S(t_n)Q \subset S(t)Q\) for every n such that \(t\le t_n\), it follows that \(y\in {\mathcal {J}}\). The continuity of P implies that \(Py = p\), and the proof is complete. \(\square \)
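When \(\dim E^+=1\), the degree argument in the proof reduces to the intermediate value theorem. For a continuous map on an interval, degree one at p means that the map lies below p at the left endpoint of \(\overline{B}\) and above p at the right one, so a preimage \(p_t\) exists and can be located by bisection. A minimal sketch, assuming the hypothetical flow of \(x'=x\), \(y'=-y\) with \(E^+\) the x-axis, where the exact answer is \(p_t=pe^{-t}\). The helper `preimage` is illustrative.

```python
import math

def flow(t, x, y):
    # Hypothetical flow of x' = x, y' = -y; E+ is the x-axis.
    return x * math.exp(t), y * math.exp(-t)

def preimage(p, t, R, tol=1e-12):
    """Find x in B = (-(R+1), R+1) on E+ with P S(t) x = p by bisection."""
    g = lambda x: flow(t, x, 0.0)[0] - p  # P S(t) x - p for x in E+
    lo, hi = -(R + 1.0), R + 1.0
    # One-dimensional degree one: g < 0 at the left end, g > 0 at the right end.
    if not (g(lo) < 0.0 < g(hi)):
        raise ValueError("boundary sign condition fails")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

p, t, R = 2.0, 3.0, 5.0
p_t = preimage(p, t, R)
print(p_t, p * math.exp(-t))  # numerical versus exact preimage
print(flow(t, p_t, 0.0))      # S(t) p_t has P-component (approximately) p
```

In higher dimensions no such bracketing is available, which is why the proof appeals to the homotopy invariance of the Brouwer degree instead.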

The proof of the following result uses the argument of [9, Proposition 3.4].

Theorem 2

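Let (H1)–(H3) hold. Let \(R\ge R_1\) and let \(B\in {\mathcal {B}}(X)\). Then either there exists \(t_1>0\) such that for every \(t\ge t_1\) we have \(S(t)B\subset Q\setminus H_{R}\), or

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}\big (S(t)B \cap \{ \Vert Px\Vert \le R\}, {\mathcal {J}}\big ) = 0. \end{aligned}$$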

Proof

We can assume that there exists \(t_1>0\) such that \(S(t)B \cap H_{R}\ne \emptyset \) for every \(t\ge t_1\) (i.e. case (2) or case (3) of Lemma 2 holds). Then for \(t\ge t_1\) the sets \(S(t)B \cap \{ \Vert Px\Vert \le R\}\), which contain \(S(t)B \cap H_{R}\) by item 2 of (H2), are nonempty. For a proof by contradiction, let us take sequences \(\{x_n\}\subset B\) and \(t_n \rightarrow \infty \) such that \(S(t_n)x_n \in S(t_n)B \cap \{ \Vert Px\Vert \le R \}\) for every n, and

$$\begin{aligned} \textrm{dist}(S(t_n)x_n, {\mathcal {J}}) \ge \varepsilon \end{aligned}$$

for some \(\varepsilon >0\). By (H1) we can assume without loss of generality that \(B\subset Q\). Denote \(y_n=S(t_n)x_n\). Then by item 1 of (H2) we can find \({\overline{R}}\ge R_0\) such that \(\{y_n\}\subset H_{{\overline{R}}}\) and it is a bounded sequence. By item 3 of assumption (H2) we know that \(\{x_n\}\subset H_{{\overline{R}}}\). We define the nested sequence of sets \(L_n=S(t_n)Q\cap H_{{\overline{R}}}=S(t_n)H_{{\overline{R}}}\cap H_{{\overline{R}}}\), and by assumption (H3) we know that for every n there exist a compact set \(K_n\) such that \(L_n\subset {\mathcal {O}}_{\epsilon _n}(K_n)\), and \(\varepsilon _n\rightarrow 0\) when \(n\rightarrow \infty \). By Lemma 1 there exists y such that \(y_n\rightarrow y\), so by definition, \(y\in {\mathcal {J}}\), and then, we arrive to a contradiction. \(\square \)

The above result guarantees attraction only within the sets \(\{\Vert Px\Vert \le R\}\), i.e. in bounded sets in \(E^+\). The next example of a planar ODE shows that, under our assumptions, the 'classical' notion of attraction, in the sense \(\lim _{t\rightarrow \infty }\textrm{dist}(S(t)B,{\mathcal {J}}) = 0\), need not hold.

Example 3

The vector field for the next system of two ODEs is defined only in the quadrant \(\{ x\ge 0, y\ge 0\}\); in the remaining three quadrants it can be extended by symmetry.

Then

-

the maximal invariant set is given by \({\mathcal {J}} = \{(x,0)\,:\ x\in {\mathbb {R}}\}\),

-

the set \(Q = \{ (x,y)\in {\mathbb {R}}^2\,:\ |y|\le 2 \}\) is absorbing and positively invariant,

-

the sets \(\{ (x,y)\in {\mathbb {R}}^2\,:\ |y|\le 2,|x|>a \}\) are positively invariant for every \(a>0\),

-

the image of a bounded set under S(t) is relatively compact, and the solutions depend continuously on the initial data,

-

the set \({\mathcal {J}}\) is not attracting, since for every \(c\in (0,1)\) one can construct solutions satisfying \(x(t)\rightarrow \infty \) and \(y(t) \rightarrow c\).

Finally, the following result on \(\sigma \)-compactness is a counterpart of [9, Proposition 2.6].

Lemma 4

For every bounded and closed set B the set \({\mathcal {J}}\cap B\) is compact. Hence, \({\mathcal {J}}\) is a countable union of compact sets.

Proof

Since \({\mathcal {J}}\cap B\) is closed, it is enough to show that it is relatively compact. As \({\mathcal {J}}\subset Q\) and B is bounded, item 1 of (H2) gives \({\mathcal {J}}\cap B\subset {\mathcal {J}}\cap H_R\) for some \(R\ge R_0\), so it is sufficient to prove that for every \(R\ge R_0\) the set \({\mathcal {J}}\cap H_R \) is relatively compact. Let \(\{ u_n\} \subset {\mathcal {J}}\cap H_R\) be a sequence. By invariance of \({\mathcal {J}}\) there exist \(x_n\in {\mathcal {J}}\) such that \(u_n = S(n) x_n\). By item 3 of (H2) it must be that \(x_n\in H_R\), and hence \(u_n\in S(n)H_R \cap H_R = S(n)Q \cap H_R\). Using (H3) and Lemma 1 we obtain the assertion. The second statement follows by taking as B the closed balls of radius \(k\in {\mathbb {N}}\). \(\square \)

The next result concerns the minimality of the unbounded attractor.

Lemma 5

Assume (H1)–(H3). Let C be a closed set such that \(\lim _{t\rightarrow \infty }\textrm{dist}(S(t)B\cap \{\Vert Px\Vert \le R\},C) = 0\) for every \(B\in {\mathcal {B}}(X)\) and every \(R\ge R_1\) for which there exists \(t_1>0\) such that \(S(t)B\cap \{\Vert Px\Vert \le R\}\) is nonempty for \(t\ge t_1\). Then \({\mathcal {J}}\subset C\).

Proof

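Suppose, for contradiction, that \(v \in {\mathcal {J}} \setminus C\). There exists a global solution \(\gamma \) with \(\gamma (0) = v\) such that \(\gamma ((-\infty ,0])\) is a bounded set. Moreover, there exists \(R\ge R_1\) such that \(v = S(t)\gamma (-t) \in S(t) \gamma ((-\infty ,0]) \cap \{\Vert Px\Vert \le R \}\) for every \(t\ge 0\); in particular, these sets are nonempty. Hence, taking \(B = \gamma ((-\infty ,0])\),

$$\begin{aligned} \textrm{dist}(v,C) \le \textrm{dist}(S(t)\gamma ((-\infty ,0])\cap \{\Vert Px\Vert \le R\},C) \rightarrow 0 \ \text { as } \ t\rightarrow \infty , \end{aligned}$$

whence, as C is closed, \(v\in C\), a contradiction. \(\square \)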

We summarize the results of this section in the following theorem.

Theorem 3

Assume (H1)–(H3). Then the set \({\mathcal {J}}\) given by (4) is the unbounded attractor for the semiflow \(\{ S(t) \}_{t\ge 0}\), that is:

-

\({\mathcal {J}}\) is nonempty, closed, and invariant,

-

intersection of \({\mathcal {J}}\) with any bounded set is relatively compact,

-

if for some \(R\ge R_1\), \(B\in {\mathcal {B}}(X)\), and \(t_1>0\) the sets \(S(t)B \cap \{ \Vert Px\Vert \le R \}\) are nonempty for every \(t\ge t_1\) then

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}(S(t)B\cap \{\Vert Px\Vert \le R\},{\mathcal {J}}) = 0, \end{aligned}$$and \({\mathcal {J}}\) is the minimal closed set with the above property.

Moreover, \(P{\mathcal {J}}=E^+\), and \({\mathcal {J}}\) is the maximal invariant set for \(\{ S(t) \}_{t\ge 0}\), that is, it consists of those points through which there exists a global solution bounded in the past.
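As a hypothetical sanity check (this example is ours, not one from the paper), consider the planar saddle \(x' = x\), \(y' = -y\) with \(E^+\) the x-axis and \(E^-\) the y-axis, so that \(S(t)(x,y) = (e^t x, e^{-t}y)\). One can check that (H1)–(H3) hold with \(Q = \{|y|\le 2\}\) and \(H_R = Q\cap \{|x|\le R\}\), and that \({\mathcal {J}} = \{y = 0\}\), since only these points have a global solution bounded in the past. The Python sketch samples a bounded set B on a grid and evaluates the truncated semidistance \(\textrm{dist}(S(t)B\cap \{\Vert Px\Vert \le R\},{\mathcal {J}})\) from Theorem 3, which here reduces to the largest \(|y|\) over the truncated image.

```python
import math

def semidistance(A, B):
    """Hausdorff semidistance dist(A, B) = max_{a in A} min_{b in B} |a - b|
    for finite nonempty point sets in R^2; note that it is not symmetric."""
    return max(min(math.dist(a, b) for b in B) for a in A)

def flow(t, point):
    """Exact flow S(t) of the toy saddle x' = x, y' = -y."""
    x, y = point
    return (math.exp(t) * x, math.exp(-t) * y)

def truncated_dist_to_J(t, B, R):
    """dist(S(t)B ∩ {|x| <= R}, J) for J = {y = 0}, using dist((x, y), J) = |y|.
    Returns None when the truncated image is empty."""
    inside = [q for q in (flow(t, b) for b in B) if abs(q[0]) <= R]
    if not inside:
        return None
    return max(abs(q[1]) for q in inside)

# grid sample of the bounded set B = [-1, 1] x [-1, 1]
B = [(i / 10, j / 10) for i in range(-10, 11) for j in range(-10, 11)]
values = [truncated_dist_to_J(t, B, R=2.0) for t in range(6)]  # decays like e^{-t}
```

The truncated image stays nonempty because the sample points on the y-axis remain there. The helper `semidistance` also shows why the order of arguments matters: `semidistance([(0, 0)], [(0, 0), (5, 0)])` is 0, while swapping the arguments gives 5.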

2.3 Attraction of All Bounded Sets

We impose an additional assumption.

-

(A1)

There exists a closed set \(Q_1\subset Q\) such that:

-

(a)

For every \(\varepsilon >0\) there exists \(r>0\) such that for every \(p\in E^+\) with \(\left\| {p}\right\| \ge r\) there holds \(\textrm{diam }( Q_1\cap \{x\in X: Px=p\} )\le \varepsilon \).

-

(b)

The set \(Q_1\) is attracting, that is, for every \(B\in {\mathcal {B}}(X)\) we have \(\lim _{t\rightarrow \infty }\textrm{dist}(S(t) B, Q_1) = 0.\)


Before proving the main result of this subsection, we make the following observation.

Remark 1

If conditions (H1)–(H3) and (A1) hold, then \({\mathcal {J}}\subset Q_1.\) Indeed, if \(u\in {\mathcal {J}}={\mathcal {I}}\), there is a global solution \(\gamma :{\mathbb {R}}\rightarrow X\) through u (\(\gamma (0)=u\)) which is bounded in the past. As \(\gamma ((-\infty ,0]) \subset S(t)\gamma ((-\infty ,0])\) for every \(t\ge 0\), item (b) of (A1) gives \(\text {dist}(\gamma ((-\infty ,0]),Q_1) \le \text {dist}(S(t)\gamma ((-\infty ,0]),Q_1)\rightarrow 0\) as \(t\rightarrow \infty \). Hence, as \(Q_1\) is closed, \(u\in Q_1\).

Theorem 4

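Assume that (H1)–(H3) and (A1) hold. Then for every bounded set B we have

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}(S(t)B,{\mathcal {J}}) = 0. \end{aligned}$$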

Proof

Assume, for contradiction, that there are sequences \(t_n\rightarrow \infty \) and \(u_n\in B\) such that \(\inf _{j\in {\mathcal {J}}}\left\| { S(t_n)u_n- j}\right\| >\varepsilon \) for some \(\varepsilon \in (0,1)\). By Theorem 3 it follows that \(\left\| {P S(t_n)u_n}\right\| \rightarrow \infty .\) By item (a) of (A1) we can pick \(r>0\) such that for every \(x_0\in X\) for which \(\left\| {Px_0}\right\| >r\) we have \(\textrm{diam }(Q_1 \cap \{x\in X: Px= Px_0\}) \le \frac{\varepsilon }{4}.\) Then, by item (b) of (A1), we pick \(n_0\) such that \(S(t_{n_0})u_{n_0} \in S(t_{n_0}) B \subset {\mathcal {O}}_{\frac{\varepsilon }{8}}(Q_1)\) and \(\left\| { PS(t_{n_0})u_{n_0}}\right\| \ge r+1.\) So we find \(v\in Q_1 \) such that \(\left\| { S(t_{n_0})u_{n_0}-v}\right\| \le \frac{\varepsilon }{4}.\) Observe that \(\left\| {Pv}\right\| \ge r+\frac{1}{2}.\) Indeed, if \(\left\| {Pv}\right\| \) were less than \(r+\frac{1}{2}\), then we would have \(\left\| { S(t_{n_0})u_{n_0}-v}\right\| \ge \left\| {P(S(t_{n_0})u_{n_0}-v)}\right\| \ge \frac{1}{2} > \frac{\varepsilon }{4}\). By Lemma 3 we can find \(w\in {\mathcal {J}}\) such that \(Pv = Pw\), and \(w\in Q_1\) by Remark 1. Observe that \(w,v\in Q_1 \cap \{x\in X: Pw = Px\}\) and \(\left\| {Pv}\right\| >r.\) We deduce that \(\left\| {w-v}\right\| \le \frac{\varepsilon }{4}.\) We observe that

$$\begin{aligned} \inf _{j\in {\mathcal {J}}}\left\| { S(t_{n_0})u_{n_0}- j}\right\| \le \left\| { S(t_{n_0})u_{n_0}- v}\right\| + \left\| {v- w}\right\| \le \frac{\varepsilon }{2} < \varepsilon , \end{aligned}$$

which is a contradiction. \(\square \)

2.4 Structure of the Unbounded Attractor

Since the unbounded attractor is an invariant set, through each of its points there exists a global (eternal) solution in the attractor which is bounded in the past. Some of these solutions may be bounded and others may be unbounded in the future; due to forward uniqueness, for a given point in \({\mathcal {J}}\) only one of these two possibilities may occur. Recall that the bounded invariant set \({\mathcal {I}}_b\) consists of those points through which there exist complete bounded solutions. This gives the decomposition of the unbounded attractor into two disjoint sets, \({\mathcal {J}} = {\mathcal {I}}_b \cup ({\mathcal {J}} \setminus {\mathcal {I}}_b)\), whose properties we now study. Observe that \({\mathcal {J}}\), as a nonempty and closed subset of a Banach space, is a complete metric space. We denote by \({\mathcal {B}}({\mathcal {J}})\) the family of nonempty and bounded subsets of \({\mathcal {J}}\). We start with the definition of the \(\alpha \)-limit set.

Definition 9

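Let \(B\in {\mathcal {B}}({\mathcal {J}})\). We define the \(\alpha \)-limit set of B as

$$\begin{aligned} \alpha (B) = \{ u\in {\mathcal {J}}\,:\ \text {there exist } t_n\rightarrow \infty \text { and } u_n\in {\mathcal {J}} \text { such that } S(t_n)u_n\in B \text { and } u_n\rightarrow u \}. \end{aligned}$$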

In the next result we establish the properties of the \(\alpha \)-limit set.

Theorem 5

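Let \(B\in {\mathcal {B}}({\mathcal {J}})\) and assume (H1)–(H3). Then \(\alpha (B)\) is nonempty, compact, invariant, and attracts B in the past, i.e.

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}(\{ u\in {\mathcal {J}}\,:\ S(t)u\in B\},\alpha (B)) = 0. \end{aligned}$$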

Proof

Pick \(B\in {\mathcal {B}}({\mathcal {J}})\). Let \(u_n \in {\mathcal {J}}\) and \(t_n\rightarrow \infty \) be such that \(S(t_n)u_n \in B\) (such sequences exist by the invariance of \({\mathcal {J}}\)). As B is bounded, \(B\subset H_R\) for some R, and, by item 3 of (H2), it must be that \(u_n \in H_R\). As \(H_R\cap {\mathcal {J}}\) is a compact set by Lemma 4, for a subsequence \(u_n \rightarrow u\) for some \(u\in {\mathcal {J}}\cap H_R\), and hence \(\alpha (B)\) is nonempty. Moreover, \(\alpha (B) \subset {\mathcal {J}} \cap H_R\), so it is relatively compact. To prove that it is closed, pick a sequence \(\{v_n\} \subset \alpha (B)\) with \(v_n\rightarrow v\). There exist sequences \(t_k^n\rightarrow \infty \) as \(k\rightarrow \infty \) and \(\{ v^n_k\}_{k=1}^\infty \subset {\mathcal {J}}\) with \(S(t^n_k)v^n_k\in B\) and \(v^n_k \rightarrow v_n\) as \(k\rightarrow \infty \). A diagonal argument gives \(v\in \alpha (B)\). Hence \(\alpha (B)\) is a compact set. To establish invariance, let us first prove that \(S(t)\alpha (B) \subset \alpha (B)\). To this end let \(u\in \alpha (B)\) and \(t>0\), and let \(u_n\in {\mathcal {J}}\) and \(t_n\rightarrow \infty \) be such that \(u_n\rightarrow u\) and \(S(t_n)u_n\in B\). By continuity, \(S(t)u_n \rightarrow S(t)u\), and \(S(t_n-t)S(t)u_n = S(t_n)u_n \in B\), whence \(S(t)u \in \alpha (B)\). To establish negative invariance, again take \(u\in \alpha (B)\), \(t>0\), and \(u_n\), \(t_n\) as above. By the invariance of \({\mathcal {J}}\) and item 3 of (H2) there exist \(v_n \in {\mathcal {J}}\cap H_R\) such that \(S(t)v_n = u_n\). By compactness, for a subsequence, \(v_n\rightarrow v\), and by continuity \(S(t)v_n \rightarrow S(t)v\), whence \(S(t)v = u\). Moreover, \(S(t_n+t)v_n = S(t_n)u_n \in B\), whence \(v\in \alpha (B)\), which ends the proof of invariance. The proof of attraction is also standard. For contradiction assume that there exist \(t_n\rightarrow \infty \) and \(u_n\in {\mathcal {J}}\) with \(S(t_n)u_n\in B\) such that \(\textrm{dist}(u_n,\alpha (B)) \ge \varepsilon \) for every n and some \(\varepsilon > 0\). As \(u_n \in {\mathcal {J}}\cap H_R\), by compactness we obtain that, for a subsequence, \(u_n\rightarrow u\), and it must be that \(u\in \alpha (B)\), which is a contradiction. \(\square \)

An alternative approach to the proof of the above result would be to reverse time, which is possible on the unbounded attractor, and to consider the \(\omega \)-limit set of the time-reversed dynamical system, which is possibly multivalued, as we do not assume backward uniqueness.

As a consequence of the above result we obtain the following corollary.

Corollary 1

For every \(B \in {\mathcal {B}}({\mathcal {J}})\) there holds \(\alpha (B)\subset {\mathcal {I}}_b\). In consequence, \({\mathcal {I}}_b\) is nonempty.

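Define the set

$$\begin{aligned} {\mathcal {J}}_b = \bigcup _{R\ge R_0} \alpha ({\mathcal {J}}\cap H_R). \end{aligned}$$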

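For elements of \({\mathcal {J}}\) we define \(S(t)^{-1}\) as the inverse in \({\mathcal {J}}\), i.e. \(S(t)^{-1}B = \{ u\in {\mathcal {J}}\,:\ S(t)u\in B\}\) for \(B\subset {\mathcal {J}}\); since we do not assume backward uniqueness, this inverse is possibly multivalued.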

Theorem 6

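There holds \({\mathcal {J}}_b = {\mathcal {I}}_b\). Moreover, for every \(B\in {\mathcal {B}}({\mathcal {J}})\) there holds

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}(S(t)^{-1}B,{\mathcal {J}}_b) = 0. \end{aligned}$$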

Proof

If \(u \in {\mathcal {J}}_b\), then \(u\in \alpha ({\mathcal {J}}\cap H_R)\) for some R, and this set is invariant and compact. Hence there exists a bounded global solution through u in \({\mathcal {J}}\), and thus \(u\in {\mathcal {I}}_b\). To get the opposite inclusion, assume that \(u\in {\mathcal {I}}_b\), whence \(\{S(t)u\}_{t\ge 0}\) is bounded and there exists \(R>0\) such that \(S(t)u \in {\mathcal {J}}\cap H_R\) for every \(t\ge 0\). It means that \(u\in \alpha ({\mathcal {J}}\cap H_R)\).

To get the backward attraction, observe that \(B\subset {\mathcal {J}}\cap H_R\) for some R, and hence \(\alpha (B) \subset \alpha ({\mathcal {J}}\cap H_R)\subset {\mathcal {J}}_b\); the assertion now follows from the attraction property in Theorem 5. \(\square \)

It is easy to see that if \({\mathcal {J}}_b\) is bounded, then it is closed. In general, however, \({\mathcal {J}}_b\) can be unbounded, and then it need not be closed, as the following example shows.

Example 4

The vector field is defined only in the quadrant \(\{ x\ge 0, y\ge 0 \}\) and can be extended to the other quadrants by symmetry. Namely, we consider the following system of two ODEs,

The unbounded attractor is given by \({\mathcal {J}} = {{\mathbb {R}}}\times [-1,1]\), and the bounded invariant set is given by

We have proved that \({\mathcal {J}}_b\) attracts, in the past, the bounded sets in \({\mathcal {J}}\). We next show that it also attracts, in the future, those bounded sets of X which remain in a bounded set under the evolution; namely, we prove the following result.

Theorem 7

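If \(B\in {\mathcal {B}}(X)\) is such that there exist \(t_1>0\) and \(R\ge R_0\) with \(S(t)B\subset H_R\) for \(t\ge t_1\), i.e. case (3) of Lemma 2 holds, then

$$\begin{aligned} \lim _{t\rightarrow \infty } \textrm{dist}(S(t)B,{\mathcal {J}}_b) = 0. \end{aligned}$$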

Proof

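Define the \(\omega \)-limit set \(\omega (B)=\bigcap _{s\ge t_1}\overline{\bigcup _{t\ge s}S(t)B}\). We will show that this set is nonempty, compact, invariant, and that it attracts B, whence it must be that \(\omega (B) \subset {\mathcal {J}}_b\) and the assertion will follow. Observe that the family of sets

$$\begin{aligned} \left\{ \overline{\bigcup _{t\ge s}S(t)B}\right\} _{s\ge t_1} \end{aligned}$$

is decreasing. Moreover, since \(S(t-s+t_1)B\subset H_R\subset Q\) for \(t\ge s\ge t_1\),

$$\begin{aligned} \bigcup _{t\ge s}S(t)B = \bigcup _{t\ge s}S(s-t_1)S(t-s+t_1)B\subset S(s-t_1)Q\cap H_R. \end{aligned}$$

Hence, as \(H_R\) is closed,

$$\begin{aligned} \overline{\bigcup _{t\ge s}S(t)B}\subset \overline{S(s-t_1)Q}\cap H_R \quad \text {for every } s\ge t_1. \end{aligned}$$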

If \(x\in \overline{S(s-t_1)Q} \cap H_R\), then \(x\in H_R\) and \(x = \lim _{n\rightarrow \infty } x_n\), where \(x_n \in S(s-t_1)Q\). We deduce that, for sufficiently large n, there holds \(x_n \in H_{{\overline{R}}}\), where \({\overline{R}}\) depends only on R. By item 3 of (H2), this means that \(x_n\in S(s-t_1)H_{{\overline{R}}} \cap H_{{\overline{R}}}\), whence \(x\in \overline{S(s-t_1)H_{{\overline{R}}}} \cap H_{{\overline{R}}}\). We are in a position to use (H3) and Lemma 1 to deduce that \(\omega (B)\) is nonempty and compact. The proof that \(\omega (B)\) attracts B is standard and follows the lines of the argument in Theorem 2. The proof of invariance of \(\omega (B)\) is also classical, once we have compactness, and follows the lines of the proof of Theorem 5. \(\square \)

In a straightforward way, by taking as B the sets \(\alpha ({\mathcal {J}}\cap H_R)\), we obtain the following characterization of \({\mathcal {J}}_b\):

$$\begin{aligned} {\mathcal {J}}_b = \bigcup _{B} \omega (B), \end{aligned}$$

where the union is taken over all bounded sets B which, for some \(R\ge R_0\) and some \(t_1 > 0\), satisfy assertion 3 of Lemma 2.

Recalling the decomposition \({\mathcal {J}} = {\mathcal {J}}_b \cup ({\mathcal {J}}\setminus {\mathcal {J}}_b)\), we know from Corollary 1 and Theorem 6 that the set \({\mathcal {J}}_b\) is nonempty. The set \({\mathcal {J}}\setminus {\mathcal {J}}_b\) can, however, be empty, as the following example shows.

Example 5

Consider the following system of two ODEs

Clearly \({\mathcal {J}} = {\mathcal {J}}_b = {\mathbb {R}}\times [-1,1]\).

We can, however, make the following easy observation: if \(u\in {\mathcal {J}}\setminus {\mathcal {J}}_b\), then \(\lim _{t\rightarrow \infty }\Vert S(t)u\Vert = \infty \). This excludes the situation in which \(\lim _{t\rightarrow \infty }\Vert S(t)u\Vert \) does not exist but \(\Vert S(t)u\Vert \) is unbounded. Thus the unbounded attractor consists of the set \({\mathcal {J}}_b\), a global-attractor-like object, and of the solutions which are attracted to \({\mathcal {J}}_b\) backward in time and whose norm, forward in time, has to go to infinity.

Lemma 6

If \(u \in {\mathcal {J}}\setminus {\mathcal {J}}_b\) then \(\lim _{t\rightarrow \infty } \Vert S(t)u\Vert = \infty \).

Proof

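We know that \(\{S(t)u\}_{t\ge 0}\) is unbounded, since otherwise \(u\in {\mathcal {I}}_b = {\mathcal {J}}_b\). If for some sequence \(t_n\rightarrow \infty \) there holds \(S(t_n)u\in H_R\) for some \(R\ge R_0\), then, by item 3 of (H2), it has to be \(S(t)u\in H_R\) for every \(t\ge 0\), and we have a contradiction. Hence, for every \(R\ge R_0\), \(S(t)u\notin H_R\) for all sufficiently large t, and item 1 of (H2) yields \(\Vert S(t)u\Vert \rightarrow \infty \). \(\square \)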

A similar simple argument allows us to split all points of X into two sets: those points whose \(\omega \)-limit sets are nonempty, compact, and invariant subsets of \({\mathcal {J}}_b\), and those points whose solutions are unbounded in the future.

Lemma 7

If \(u \in X\), then either there exist \(t_1>0\) and \(R\ge R_1\) such that \(S(t)u \in H_R\) for \(t\ge t_1\), and then \(\omega (u) \subset {\mathcal {J}}_b\) is a compact and invariant set which attracts u, or \(\Vert S(t)u\Vert \rightarrow \infty \) as \(t\rightarrow \infty \).

Proof

If there exist \(R\ge R_0\) and \(t_1>0\) such that \(S(t)u \in H_R\) for every \(t\ge t_1\), then the result holds by Theorem 7 applied to \(B=\{u\}\). Otherwise, since for a single point case 2 of Lemma 2 cannot occur, Lemma 2 implies that for every \(R\ge R_0\) there exists \(t_1\) such that \(S(t)u\in Q\setminus H_R\) for \(t\ge t_1\), and item 1 of (H2) gives \(\Vert S(t)u\Vert \rightarrow \infty \). \(\square \)
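For the hypothetical planar saddle \(x'=x\), \(y'=-y\) (our illustration, not an example from the paper), the dichotomy of Lemma 7 is explicit: \({\mathcal {J}}_b\) is the origin, an orbit starting with \(x_0=0\) converges to it, and otherwise \(\Vert S(t)u\Vert \ge e^{t}|x_0|\rightarrow \infty \). The Python sketch below uses the exact flow to report which alternative occurs; the names `alternative`, `t_check`, and `radius` are our own, and since the check inspects a single finite time it only illustrates the lemma and proves nothing.

```python
import math

def flow(t, point):
    """Exact flow S(t) of the toy saddle x' = x, y' = -y."""
    x, y = point
    return (math.exp(t) * x, math.exp(-t) * y)

def alternative(point, t_check=30.0, radius=10.0):
    """Finite-time heuristic for Lemma 7 on the toy saddle: report
    'omega-limit in J_b' if S(t_check)u lies in the ball of the given radius,
    and 'diverges' otherwise."""
    if math.hypot(*flow(t_check, point)) <= radius:
        return "omega-limit in J_b"
    return "diverges"
```

For example, `alternative((0.0, 1.5))` reports convergence to \({\mathcal {J}}_b\), while `alternative((1e-6, 0.0))` reports divergence, because \(e^{30}\cdot 10^{-6}\) is far larger than the radius 10.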

If we strengthen item 3 of assumption (H2) so that the sets \(Q\setminus H_R\) are not only positively invariant but the evolution in them expands to infinity, then we can guarantee compactness (and hence closedness) and a simpler characterization of \({\mathcal {J}}_b\). We make the following assumption.

-

(H4)

For every \( R \ge R_0\) there exists \(t=t(R)>0\) such that \(S(t)(Q\setminus H_{R_0}) \subset Q\setminus H_{R}\).
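For instance, in the hypothetical planar saddle \(x'=x\), \(y'=-y\) (our illustration, not an example from the paper), with \(E^+\) the x-axis, \(Q=\{|y|\le 2\}\), and \(H_R = Q\cap \{|x|\le R\}\), the expansion time in (H4) can be computed explicitly:

$$\begin{aligned} S(t)(Q\setminus H_{R_0}) = \{ (x,y)\,:\ |x| > e^{t}R_0,\ |y|\le 2e^{-t} \} \subset Q\setminus H_R \quad \text {whenever } \ t\ge \ln \frac{R}{R_0}, \end{aligned}$$

so any \(t(R) > 0\) with \(t(R)\ge \ln (R/R_0)\) satisfies (H4) for this toy system.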

Theorem 8

Assuming (H1)–(H4), the set \({\mathcal {J}}_b = \alpha ({\mathcal {J}}\cap H_{R_0})\) is compact. Moreover the set \({\mathcal {J}}\setminus {\mathcal {J}}_b\) in the decomposition of the unbounded attractor \({\mathcal {J}}_b \cup ({\mathcal {J}}\setminus {\mathcal {J}}_b)\) is nonempty.

Proof

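By Theorem 5, it is sufficient to prove that if \(R\ge R_0\) then \(\alpha ({\mathcal {J}}\cap H_R) = \alpha ({\mathcal {J}}\cap H_{R_0})\). The inclusion \(\supset \) is clear, as the family \(H_R\) is ascending. To prove the opposite inclusion, take \(u\in \alpha ({\mathcal {J}}\cap H_R)\). This means that there exist \(u_n\rightarrow u\) in \({\mathcal {J}}\) and \(t_n\rightarrow \infty \) such that \(S(t_n)u_n \in {\mathcal {J}}\cap H_R\). Now (H4), together with item 3 of (H2), implies that \(S(t_n-t(R)-1)u_n \in {\mathcal {J}}\cap H_{R_0}\), and the assertion follows. Finally, \({\mathcal {J}}_b\) is bounded while \(P{\mathcal {J}} = E^+\) by Theorem 3, so the set \({\mathcal {J}}\setminus {\mathcal {J}}_b\) is nonempty. \(\square \)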

Assumption (H4) also implies that the set of those points of X whose \(\omega \)-limit sets are nonempty and compact is a closed subset of X. Hence this set, with the metric of X, is a complete metric space, and \({\mathcal {J}}_b\) is the global attractor of \(\{ S(t) \}_{t\ge 0}\) on that space.

Lemma 8

The set of points \(u\in X\) which have the compact and invariant \(\omega \)-limit set \(\omega (u)\subset {\mathcal {J}}_b\) is a closed set in X.

Proof

Assume, for contradiction, that \(u_n\rightarrow u\) with \(\lim _{t\rightarrow \infty }\textrm{dist}(S(t)u_n,{\mathcal {J}}_b) = 0\) and \(\lim _{t\rightarrow \infty }\Vert S(t)u\Vert = \infty \). By continuity, for every large R there exists \(t_0>0\) such that for every \(t\ge t_0\) we can find \(n_0\) such that \(\Vert S(t)u_n\Vert \ge R\) for every \(n\ge n_0\). Choosing R and \(t_0\) sufficiently large, we deduce that for some t and all sufficiently large n there holds \(S(t)u_n\in Q\setminus H_{R_0}\), which, by (H4), means that \(\Vert S(\tau )u_n\Vert \rightarrow \infty \) as \(\tau \rightarrow \infty \), a contradiction. \(\square \)

2.5 Multivalued Inertial Manifold

The concept of multivalued inertial manifolds was introduced in [15] and further developed in [29]. While classical inertial manifolds require the so-called spectral gap condition to exist, their multivalued counterpart, as shown in [15, 29], does not require such a condition, at the price of 'multivaluedness'. Motivated by Lemma 3, we show that the concept of multivalued inertial manifold is compatible with unbounded attractors. Namely, Lemma 3 states that for every \(p\in E^+\) there exists at least one \(q\in E^-\) such that \(p+q\in {\mathcal {J}}\). This makes it possible to define the multivalued mapping \(\Phi :E^+ \rightarrow {\mathcal {P}}(E^-)\) by

$$\begin{aligned} \Phi (p) = \{ q\in E^-\,:\ p+q\in {\mathcal {J}} \}. \end{aligned}$$

Then Lemma 3 states that for every \(p\in E^+\) the set \(\Phi (p)\) is nonempty. We continue by the analysis of \(\Phi \).

Lemma 9

Under assumptions (H1)–(H3) the multifunction \(\Phi \) has nonempty, compact values, closed graph, and is upper-semicontinuous.

Proof

The graph of \(\Phi \) is \({\mathcal {J}}\), a closed set. Moreover, \(\Phi (p)\) is closed for every \(p\in E^+\), and since by Lemma 4 it is relatively compact, it is also compact. Since, by the same Lemma, for every bounded set \(B \in {\mathcal {B}}(E^+)\) the set \(\overline{\Phi (B)}\) is compact, Proposition 4.1.16 from [14] implies that \(\Phi \) is upper-semicontinuous, and hence also Hausdorff upper-semicontinuous, i.e. if \(p_n\rightarrow p\) in \(E^+\), then \(\lim _{n\rightarrow \infty }\text {dist}(\Phi (p_n),\Phi (p)) = 0\). \(\square \)

If, in addition to (H1)–(H3), we assume (A1), we arrive at the next result which follows directly from Remark 1.

Remark 2

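Assume (H1)–(H3) and (A1). Then

$$\begin{aligned} \lim _{\Vert p\Vert \rightarrow \infty } \textrm{diam}\, \Phi (p) = 0. \end{aligned}$$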

2.6 Unbounded \(\omega \)-Limit Sets and Their Properties

Following the classical definition, we can define the \(\omega \)-limit sets for slowly non-dissipative dynamical systems.

Definition 10

An \(\omega \)-limit set of the set \(B\in {\mathcal {B}}(X)\) is the set

$$\begin{aligned} \omega (B) = \bigcap _{s\ge 0}\overline{\bigcup _{t\ge s}S(t)B}. \end{aligned}$$

Using Lemma 2 and Theorem 7 we can formulate the following result on \(\omega \)-limit sets.

Corollary 2

Assume (H1)–(H3). Let \(B\in {\mathcal {B}}(X)\). If there exist \(R\ge R_0\) and \(t_1>0\) such that for every \(t\ge t_1\) we have \(S(t)B \subset H_{R}\), then \(\omega (B) \subset {\mathcal {J}}_b\) is a nonempty, compact and invariant set which attracts B. If for every \(R\ge R_0\) there exists \(t_1>0\) such that \(S(t)B \subset Q\setminus H_{R}\) for every \(t\ge t_1\), then \(\omega (B)\) is empty.

We continue by the analysis of the situation when both \(S(t)B \cap H_R\) and \(S(t)B \cap (Q\setminus H_R)\) are nonempty. The next result is a complement to Theorem 7.

Lemma 10

Assume (H1)–(H3) and let \(B\in {\mathcal {B}}(X)\). If there exists \(R\ge R_0\) and \(t_1 > 0\) such that for every \(t\ge t_1\) both sets \(S(t)B \cap H_R\) and \(S(t)B \cap (Q\setminus H_R)\) are nonempty (i.e. case (2) of Lemma 2 holds), then \(\omega (B) \subset {\mathcal {J}}\) is a nonempty, closed, and invariant set which attracts B in the bounded sets in \(E^+\), i.e.

Proof

Take \(t_n\rightarrow \infty \). There exist \(u_n \in B\) such that \(S(t_n)u_n \in H_R\), whence, arguing as in the proof of Theorem 2, the sequence \(\{S(t_n)u_n\}\) is relatively compact and hence \(\omega (B)\) is nonempty. Its closedness follows by a diagonal argument, while the fact that \(\omega (B) \subset {\mathcal {J}}\) follows from the definition of \({\mathcal {J}}\) and the fact that Q is absorbing and positively invariant. The proof of invariance of \(\omega (B)\) is straightforward and follows from the fact that we can enclose the convergent sequence \(S(t_n)u_n\) in some \(H_{{\overline{R}}}\). Finally, the attraction in bounded sets is obtained in the same way as in Theorem 2. \(\square \)

2.7 The Dynamics at Infinity

In [6, 13, 27] the authors define the dynamics at infinity by means of the Poincaré projection. Observe, however, that for the solutions u(t) that diverge to infinity, the projection \((I-P)u(t)\) remains bounded (as u(t) eventually enters Q), while \(\Vert Pu(t)\Vert \) tends to infinity. Hence, when we rescale those solutions by \(\Vert P u(t)\Vert \), i.e. we consider

$$\begin{aligned} \frac{u(t)}{\Vert Pu(t)\Vert } = \frac{Pu(t)}{\Vert Pu(t)\Vert } + \frac{(I-P)u(t)}{\Vert Pu(t)\Vert }, \end{aligned}$$

the infinite-dimensional component, which belongs to \(E^-\), tends to zero, while the term \(\frac{Pu(t)}{\Vert Pu(t)\Vert }\) evolves on the unit sphere. We recover the dynamics at infinity by analyzing the asymptotic behavior of this rescaled projection. Denoting the unit sphere in \(E^+\) by \({\mathbb {S}}_{E^+} = \{ x\in E^+\; :\ \Vert x\Vert = 1\}\), we have the next result. Note that this result actually describes the dynamics at infinity if we assume (H4) in addition to (H1)–(H3), but it is valid in the more general case.
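To make the rescaling concrete, the Python sketch below uses a hypothetical linear toy system (ours, not from the paper) with \(E^+ = {\mathbb {R}}^2\) and \(E^- = {\mathbb {R}}\): \(p' = p + \omega Gp\) and \(q' = -q\), where G is the generator of planar rotations. Its exact solution is \(p(t) = e^{t}R(\omega t)p_0\) and \(q(t) = e^{-t}q_0\), with \(R(\cdot )\) the rotation matrix, so the direction \(Pu(t)/\Vert Pu(t)\Vert \) rotates on the unit circle \({\mathbb {S}}_{E^+}\) while the rescaled \(E^-\) component equals \(e^{-2t}q_0/\Vert p_0\Vert \) and tends to zero.

```python
import math

def toy_solution(t, p0, q0, omega=1.0):
    """Exact solution of the hypothetical system p' = p + omega*G p, q' = -q,
    with p in E^+ = R^2 (G the rotation generator) and q in E^- = R."""
    c, s = math.cos(omega * t), math.sin(omega * t)
    grow = math.exp(t)
    p = (grow * (c * p0[0] - s * p0[1]), grow * (s * p0[0] + c * p0[1]))
    q = math.exp(-t) * q0
    return p, q

def rescaled(t, p0, q0, omega=1.0):
    """Return the pair (Pu/|Pu|, (I-P)u/|Pu|) at time t."""
    p, q = toy_solution(t, p0, q0, omega)
    norm_p = math.hypot(*p)
    return (p[0] / norm_p, p[1] / norm_p), q / norm_p

direction, tail = rescaled(10.0, (1.0, 0.0), 2.0)
```

With \(p_0 = (1,0)\) and \(\omega = 1\), `direction` is the point \((\cos 10, \sin 10)\) of the unit circle and `tail` equals \(2e^{-20}\) up to rounding.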

Lemma 11

Assume (H1)–(H3). Then for every \(R\ge R_0\)

Moreover \({\mathbb {S}}_{E^+}\) is the smallest closed set such that for every \(B\in {\mathcal {B}}(X)\) satisfying 1. of Lemma 2 for sufficiently large R, there holds

Proof

We will prove that

By Lemma 3, the fact that \({\mathcal {J}}\subset Q\) and the invariance of \({\mathcal {J}}\) it follows that \( \{ Px: x\in S(t)Q\} = E^+. \) Now

Since by (H3) the set \(PS(t) H_R\) is bounded, there exists \(R>0\) such that \(\{ x\in E^+\,:\ \Vert x\Vert =R \} \subset PS(t)(Q\setminus H_R)\) and the assertion follows.

To get the second assertion, pick \(R\ge R_0\) and choose \(B = \{ x\in E^+ \, :\ \Vert x\Vert = {\overline{R}} \}\), where \({\overline{R}}\) is sufficiently large to get \(S(t) B \subset Q\setminus H_R\) for every \(t\ge 0\) (and hence B satisfies 1. of Lemma 2). Such a choice of \({\overline{R}}\) is possible by (H2). We will show that

To this end choose \(t > 0\) and consider the mapping \(H:[0,1]\times {\mathbb {S}}_{E^+}\rightarrow {\mathbb {S}}_{E^+}\) defined as

Clearly \(H(0,\cdot )\) is the identity, and H is continuous. Hence, by the Hopf lemma, the degree of the mapping \(H(1,\cdot )\) is equal to the degree of the identity of the sphere, that is, one. On the other hand, assume for contradiction that the image of \(H(1,\cdot )\) is not the whole \({\mathbb {S}}_{E^+}\). Then it omits some point \(a\in {\mathbb {S}}_{E^+}\), so \(H(1,\cdot )\) maps into \({\mathbb {S}}_{E^+} \setminus \{a\}\). This set is contractible to one point (via the stereographic projection it is homeomorphic to a Euclidean space), which in turn means that \(H(1,\cdot )\) must be homotopic to a constant map, which has degree zero, a contradiction. \(\square \)

We can also define \(\omega \)-limits in \({\mathbb {S}}_{E^+}\) of nonempty bounded sets \(B \subset Q\setminus H_{R_0}\).

Lemma 12

Let \(B \subset Q\setminus H_{R_0}\) be bounded. Then \(\omega _\infty (B)\) is nonempty, compact, and attracts B in the sense

Proof

Non-emptiness follows from the compactness of \({\mathbb {S}}_{E^+}\), and closedness (and hence compactness) of \(\omega _\infty (B)\) follows from a diagonal argument which closely follows the classical proof of closedness of \(\omega \)-limit sets. The proof of attraction is also standard: for contradiction, assume that for some B there exist a sequence \(\{x_n \} \subset B\) and \(t_n\rightarrow \infty \) such that

But for a subsequence, still denoted by n

where, by definition \(y\in \omega _\infty (B),\) a contradiction. \(\square \)

For particular slowly non-dissipative semigroups it is possible to construct a dynamical system on \(E^+\) such that the sets \(\omega _\infty (B)\) are invariant and the whole \({\mathbb {S}}_{E^+}\) is the global attractor. This dynamical system reflects the dynamics at infinity of the original problem. We will present such a construction in the part of the paper devoted to the example of a slowly non-dissipative problem governed by a PDE.

3 Non-autonomous Unbounded Attractors and Their Properties

The non-autonomous version of the definition of the pullback attractor was proposed in [13, Definition 3.1] together with a result on its existence for the problem governed by the following PDE

where the spatial domain is one-dimensional (an interval) and homogeneous Dirichlet boundary conditions are imposed. The function b(t) is allowed to oscillate between two gaps of the spectrum of the leading elliptic operator. In this section we propose a systematic abstract approach to non-autonomous unbounded pullback attractors.

3.1 Definition of Pullback Unbounded Attractor

First, if X is a normed space, then any family of nonempty sets \(\{ {\mathcal {A}}(t) \}_{t\in {\mathbb {R}}}\) in X will be called a non-autonomous set. We will also use the notation \({\mathcal {A}}(\cdot )\) for such sets. Exactly as in the autonomous case, we assume that the phase space X is a Banach space which can be represented as \(X=E^+\oplus E^-\), with \(E^+\) finite dimensional. Using the same notation as in Sect. 2, we represent any \(x\in X\) as \(x=p+q\). We recall some definitions concerning dissipative non-autonomous dynamical systems, beginning with the definition of a process and its pullback attractor; see [12] for a systematic treatment of the theory. As in the autonomous case, we require the attraction to take place only in bounded sets in \(E^+\).

Definition 11

Let X be a metric space. A family of mappings \(\{ S(t,s)\}_{t\ge s}\), where \(S(t,s):X\rightarrow X\), is a continuous process if

-

\(S(t,t) = I\) (identity on X) for every \(t\in {\mathbb {R}}\),

-

for every \(t\ge \tau \ge s\), and for every \(x\in X\) we have \(S(t,s)x = S(t,\tau )S(\tau ,s)x\),

-

the mapping \((t,s,x)\mapsto S(t,s)x\) is continuous on the set \(\{(t,s,x)\,:\ t\ge s,\ x\in X\}\).
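As a minimal, hypothetical illustration of Definition 11 (the coefficient is our choice and is not taken from the paper, though it loosely mirrors the time-dependent coefficient b(t) mentioned above), the scalar ODE \(u' = b(t)u\) with \(b(t) = 1 + \frac{1}{2}\sin t\) generates the process \(S(t,s)x = \exp \left( \int _s^t b(r)\,dr\right) x\). The Python sketch evaluates it through the antiderivative \(\beta (t) = t - \frac{1}{2}\cos t\) and checks numerically the identity and composition properties of a process.

```python
import math

def beta(t):
    """Antiderivative of the hypothetical coefficient b(t) = 1 + 0.5*sin(t)."""
    return t - 0.5 * math.cos(t)

def S(t, s, x):
    """Process of u' = b(t) u: S(t, s)x = exp(beta(t) - beta(s)) * x for t >= s."""
    if t < s:
        raise ValueError("S(t, s) is only defined for t >= s")
    return math.exp(beta(t) - beta(s)) * x

# S(t, t) = I and S(t, s) = S(t, tau) S(tau, s) for t >= tau >= s
identity_ok = S(3.0, 3.0, 1.7) == 1.7
composition_ok = math.isclose(S(5.0, -2.0, 0.3),
                              S(5.0, 1.0, S(1.0, -2.0, 0.3)), rel_tol=1e-12)
```

Continuity of \((t,s,x)\mapsto S(t,s)x\) is evident from the closed-form expression; the guard on \(t<s\) reflects that a process is only defined forward in time.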

Definition 12

A family of sets \(\{{\mathcal {A}}(t)\}_{t\in {\mathbb {R}}}\) is the unbounded pullback attractor for a process \(S(\cdot ,\cdot )\) if

-

(1)

the sets \({\mathcal {A}}(t)\) are nonempty and closed for every \(t\in {\mathbb {R}}\),

-

(2)

\({\mathcal {A}}(\cdot )\) is invariant with respect to \(S(\cdot ,\cdot )\), that is

$$\begin{aligned} S(t,\tau ){\mathcal {A}}(\tau )={\mathcal {A}}(t) \ \text { for every } \ t,\tau \in {\mathbb {R}} \ \text { with } \ t\ge \tau . \end{aligned}$$

-

(3)

for every \(t\in {\mathbb {R}}\) the set \({\mathcal {A}}(t)\) is pullback attracting in the bounded sets in \(E^+\) at time t, that is

$$\begin{aligned} \lim _{s\rightarrow -\infty } \textrm{dist} (S(t,s)B\cap \{ \Vert Px\Vert \le R \},{\mathcal {A}}(t))=0 \end{aligned}$$for every \(B\in {\mathcal {B}}(X)\) and every \(R>0\) for which there exists \(t_1\le t\) such that \(S(t,s)B\cap \{ \Vert Px\Vert \le R \}\) is nonempty for every \(s\le t_1\).

-

(4)

\({\mathcal {A}}(\cdot )\) is the minimal family of closed sets with property (3).

We will also need the notions of pullback absorption and pullback positive invariance.

Definition 13

A set \(B\subset X\) pullback absorbs bounded sets at time \(t\in {\mathbb {R}}\) if, for each bounded subset D of X, there exists \(T=T(t,D)\le t\) such that

$$\begin{aligned} S(t,s)D\subset B \ \text { for every } \ s\le T. \end{aligned}$$

Definition 14

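A non-autonomous set \(\{Q(t)\}_{t\in {\mathbb {R}}}\) is positively invariant if

$$\begin{aligned} S(t,s)Q(s)\subset Q(t) \ \text { for every } \ t,s\in {\mathbb {R}} \ \text { with } \ t\ge s. \end{aligned}$$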

Finally, we propose the concept of generalized pullback asymptotic compactness, a non-autonomous variant of the notion given in Definition 8.

Definition 15

A process \(S(\cdot ,\cdot )\) is generalized pullback asymptotically compact if for every \(B\in {\mathcal {B}}(X)\) and for every \(t,s\in {\mathbb {R}}\), \(s\le t,\) there exists a \(K(t,s,B)\subset X\) and \(\varepsilon (t,s,B)\rightarrow 0\) when \(s\rightarrow -\infty \) such that

3.2 Existence of Unbounded Pullback Attractors

The following assumptions, which are the non-autonomous versions of (H1)–(H3) from Sect. 2, will guarantee the existence of the unbounded pullback attractor.

-

(H1)\(_{\textrm{NA}}\) There exist \(D_1,D_2>0\) and closed sets \(\{Q(t)\}_{t\in {\mathbb {R}}}\) such that for every \(t\in {\mathbb {R}}\)

$$\begin{aligned} \{ \Vert (I-P)x\Vert \le D_1 \} \subset Q(t) \subset \{ \Vert (I-P)x\Vert \le D_2 \}. \end{aligned}$$Moreover, Q(t) pullback absorbs bounded sets at time t, and the family \(Q(\cdot )\) is positively invariant.

-

(H2)\(_{\textrm{NA}}\) There exist constants \(R_0\) and \(R_1\) with \(0< R_0\le R_1\) and an ascending family of closed and bounded sets \(\{\{H_R(t)\}_{t\in {\mathbb {R}}}\}_{R\ge R_0}\) with \(H_R(t)\subset Q(t)\) for every \(t\in {\mathbb {R}}\) such that

-

1.

for every \(R\ge R_1\) we can find \(S(R)\ge R_0\) such that \(\{\Vert Px\Vert \le S(R)\}\cap Q(t)\subset H_R(t)\) for every \(t\in {\mathbb {R}}\), and moreover \(\lim _{R\rightarrow \infty } S(R)=\infty \),

-

2.

for every \(R\ge R_1\) we have \(H_R(t)\subset \{\Vert Px\Vert \le R\}\) for every \(t\in {\mathbb {R}},\)

-

3.

for every \(R\ge R_0\) and \(t\in {\mathbb {R}}\), \(S(t,s)(Q(s)\setminus H_R(s))\subset Q(t)\setminus H_R(t)\) for every \(s\le t\).

-

(H3)\(_{\textrm{NA}}\) The process \(S(\cdot ,\cdot )\) is generalized pullback asymptotically compact.

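The candidate for the unbounded pullback attractor is the non-autonomous set \({\mathcal {J}}(\cdot )\) given by

$$\begin{aligned} {\mathcal {J}}(t) = \bigcap _{s\le t}\overline{S(t,s)Q(s)}, \quad t\in {\mathbb {R}}. \end{aligned}$$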

The rest of this section is devoted to the proof that, under (H1)\(_{\textrm{NA}}\)–(H3)\(_{\textrm{NA}}\), this set satisfies the requirements of Definition 12. We also establish its relation with the time-dependent maximal kernel and its section. To this end we first define the time-dependent maximal kernel as the family of those complete non-autonomous solutions \(u:{\mathbb {R}}\rightarrow X\) which are bounded in the past.

Definition 16

The set \({\mathcal {K}}\) is called a maximal kernel if

Clearly, if the condition in the above definition holds for some \(T\in {\mathbb {R}}\), then it also holds for every \({\overline{T}} < T\). The continuity of the process S with respect to time implies that it holds for every \({\overline{T}} > T\) as well. We continue with the definition of the maximal kernel section.

Definition 17

The non-autonomous set \(\{{\mathcal {K}}(t) \}_{t\in {\mathbb {R}}}\) is called a maximal kernel section if

Clearly, for a continuous process this is an invariant set, namely

Observation 2

If \(S(\cdot ,\cdot )\) is a continuous process, then \({\mathcal {K}}(t) = S(t,s){\mathcal {K}}(s)\) for every \(s\in {\mathbb {R}}\) and \(t\ge s\).

At this point we do not yet know whether the sets \({\mathcal {J}}(t)\) and \({\mathcal {K}}(t)\) are nonempty. We establish, however, that they must coincide. This is a non-autonomous version of Theorem 1.

Theorem 9

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). Then \({\mathcal {J}}(t)={\mathcal {K}}(t)\) for every \(t\in {\mathbb {R}}\).

Proof

The proof is analogous to that of Theorem 1, its autonomous counterpart. Fix \(t\in {\mathbb {R}}\). First we prove the inclusion \({\mathcal {K}}(t)\subset {\mathcal {J}}(t)\). Suppose that \(u(t)\in {\mathcal {K}}(t)\). Then we can find \(C\in {\mathbb {R}}^+\) such that \(\Vert u(s)\Vert \le C\) for every \(s\le t\). Let \(B=\{x\in X\, : \ \Vert x\Vert \le C\}\). By \((H1)_{\textrm{NA}}\), for every \(\tau \in {\mathbb {R}}\) there exists \(s\le \tau \) such that \(S(\tau ,s)B\subset Q(\tau )\). Then, taking any \(\tau \le t\) and using \(u(s)\in B\), we obtain

$$\begin{aligned} u(t)=S(t,\tau )S(\tau ,s)u(s)\in S(t,\tau )S(\tau ,s)B\subset S(t,\tau )Q(\tau ). \end{aligned}$$

So, for every \(\tau \le t\), \(u(t)\in S(t,\tau )Q(\tau )\), and hence \(u(t)\in {\mathcal {J}}(t)\).

To prove the opposite inclusion, take \(u_0\in {\mathcal {J}}(t)\). Then there exists a sequence \(\{y_n\}\) such that \(y_n\in Q(t-n)\) and \(S(t,t-n)y_n \rightarrow u_0\) as \(n\rightarrow \infty \). By the positive invariance of \(Q(\cdot )\), the convergent sequence \(\{S(t,t-n)y_n\}\) is contained in \(Q(t)\); being bounded, by item 1 of \((H2)_{\textrm{NA}}\) it is contained in \(H_R(t)\) for some \(R\ge R_0\).

Notice that for \(s\in [t-n,t]\) we also have \(S(s,t-n)y_n\in H_R(s)\). Indeed, \(S(s,t-n)y_n\in Q(s)\) by positive invariance, and if \(S(s,t-n)y_n\in Q(s)\setminus H_R(s)\), then item 3 of \((H2)_{\textrm{NA}}\) would give \(S(t,t-n)y_n=S(t,s)S(s,t-n)y_n\in Q(t)\setminus H_R(t)\), a contradiction. In particular, \(y_n\in H_R(t-n)\). Hence \(S(s,t-n)y_n\in H_R(s)\cap S(s,t-n)H_R(t-n)=L_n(s)\), and for each \(s\le t\) this sequence of sets is nested. By Lemma 1 and \((H3)_{\textrm{NA}}\), for each s one can extract a subsequence, still indexed by n, such that \(S(s,t-n)y_n\) converges; since \(H_R(s)\) is closed, the limit lies in \(H_R(s)\). In particular, for \(s=t-1\) we have \(S(t-1,t-n)y_n \rightarrow u_1\) and \(S(t,t-n)y_n = S(t,t-1)S(t-1,t-n)y_n \rightarrow S(t,t - 1)u_1\), hence \(S(t,t-1)u_1 = u_0\) with \(u_1\in H_R(t-1)\). Passing to a further subsequence at each step of this iterative procedure, we construct a sequence \(u_n \in H_R(t-n)\) such that \(S(t-n+1,t-n)u_n = u_{n-1}\), which allows us to define the solution \(u(\cdot )\) by \(u(r) = S(r,t-n)u_n\) for \(r\in [t-n,t-n+1]\). Since \(u(r) \in H_R(r)\) for every \(r\le t\), and, the family being ascending, \(H_R(r)\subset H_{R'}(r)\subset \{\Vert Px\Vert \le R'\}\cap \{\Vert (I-P)x\Vert \le D_2\}\) with \(R'=\max \{R,R_1\}\), there exists a constant C such that \(\sup _{s\in (-\infty , t]}\Vert u(s)\Vert \le C\), and the proof is complete. \(\square \)
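The iterative construction at the end of this proof can be traced explicitly on a hypothetical toy process (an illustration, not part of the paper): on \(X={\mathbb R}^2\) with \(P(x,y)=(x,0)\), take \(x'=ax\), \(y'=-y+\sin t\) with an assumed rate \(a=1\). One can check that here \({\mathcal J}(t)=\{(p,y^*(t)) : p\in {\mathbb R}\}\) with \(y^*(t)=(\sin t-\cos t)/2\), and that the points \(u_n=(e^{-an}p,\,y^*(t-n))\) satisfy \(S(t-n+1,t-n)u_n=u_{n-1}\). The sketch builds this chain, glues it into \(u(r)=S(r,t-n)u_n\), and checks that the resulting solution is bounded on \((-\infty ,t]\). All function names are illustrative.

```python
import math

A = 1.0  # assumed growth rate of the unstable mode x (hypothetical toy model)


def y_star(t: float) -> float:
    """Unique bounded complete solution of y' = -y + sin t."""
    return (math.sin(t) - math.cos(t)) / 2.0


def process(t: float, s: float, point: tuple[float, float]) -> tuple[float, float]:
    """Closed-form S(t, s), s <= t, for x' = A x, y' = -y + sin t."""
    x0, y0 = point
    return (math.exp(A * (t - s)) * x0,
            y_star(t) + math.exp(-(t - s)) * (y0 - y_star(s)))


def backward_chain(p: float, t: float, n_steps: int) -> list[tuple[float, float]]:
    """Points u_n at times t - n with S(t-n+1, t-n) u_n = u_{n-1} and u_0 = (p, y*(t))."""
    return [(math.exp(-A * n) * p, y_star(t - n)) for n in range(n_steps + 1)]


def solution(p: float, t: float, r: float) -> tuple[float, float]:
    """Glued solution u(r) = S(r, t - n) u_n for r in [t - n, t - n + 1], r <= t."""
    n = math.ceil(t - r)  # smallest n with t - n <= r
    u_n = (math.exp(-A * n) * p, y_star(t - n))
    return process(r, t - n, u_n)
```

In this toy model the glued solution is simply \(u(r)=(e^{a(r-t)}p,\,y^*(r))\), so its norm never exceeds \(|p|+1/\sqrt{2}\) in the past.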

We now state the non-autonomous version of Lemma 3. This result also implies that \(P{\mathcal {J}}(t) = E^+\) for every \(t\in {\mathbb {R}}\), and hence the sets \({\mathcal {J}}(t)\) are nonempty.

Lemma 13

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). Then for every \(t\in {\mathbb {R}}\) and every \(p\in E^+\) there exists \(q\in E^-\) such that \(p+q\in {\mathcal {J}}(t)\).

Proof

Analogously to the autonomous case, we pick \(p\in E^+\) and define \(B=\{x\in E^+\, :\, \Vert x\Vert <R+1\}\) with R large enough that \(p\in H_R(\tau )\) and \(PH_R(\tau ) \subset B\) for every \(\tau \in {\mathbb {R}}\), which is possible by items 1 and 2 of \((H2)_{NA}\). Then \(\text {deg}(I,B,p)=1\). Now we fix \(t\in {\mathbb {R}}\) and \(s>0\), and define the continuous mapping

By \((H1)_{NA}\) and item 2 of \((H2)_{NA}\), \(\partial B=\{x\in E^+\,:\ \Vert x\Vert =R+1\} \subset Q(\tau )\setminus H_R(\tau )\) for every \(\tau \in {\mathbb {R}}\), so by item 3 of \((H2)_{NA}\), \(S(t,t-\theta s)\partial B\subset Q(t)\setminus H_R(t)\), and hence \(p\notin P(S(t,t-\theta s)\partial B)\) for every \(\theta \in [0,1]\). By the homotopy invariance of the degree, \(\text {deg}(PS(t,t-s),B,p)=1\), whence for every sequence \(s_n\rightarrow \infty \) we can find \(p_n \in E^+\) and \(q_n\in E^-\) such that \(y_n=p+q_n=S(t,t-s_n)p_n\in S(t,t-s_n)Q(t-s_n).\) As in the autonomous case, we can find \(R'\) such that \(y_n \in L_n:=S(t,t-s_n)H_{R'}(t-s_n)\cap H_{R'}(t)\), where \(\{L_n\}\) is a decreasing sequence of sets. Using Lemma 1 and \((H3)_{\text {NA}}\), we deduce that \(y_n\rightarrow y\) for a subsequence, with \(Py=p\). Since \(y_n\in S(t,t-s_n)Q(t-s_n)\), it follows that \(y\in {\mathcal {J}}(t).\) \(\square \)
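For a hypothetical toy process (not from the paper: \(x'=ax\), \(y'=-y+\sin t\) on \({\mathbb R}^2\), with \(E^+\) the x-axis and an assumed \(a=1\)), \(PS(t,t-s)\) acts on \(E^+\) as multiplication by \(e^{as}\), so the degree argument collapses to the explicit choice \(p_n=e^{-as_n}p\). The sketch computes \(y_n=S(t,t-s_n)(p_n,q_0)\) for an arbitrary starting value \(q_0\) in the strip \(|y|\le 1\) and checks that \(Py_n=p\) while the \(E^-\) components converge to \(y^*(t)=(\sin t-\cos t)/2\), so the limit \((p,y^*(t))\) lies in \({\mathcal J}(t)\). The function names and \(q_0=0.9\) are illustrative assumptions.

```python
import math

A = 1.0  # assumed growth rate on E+ = span{(1, 0)} (hypothetical toy model)


def y_star(t: float) -> float:
    """Unique bounded complete solution of y' = -y + sin t."""
    return (math.sin(t) - math.cos(t)) / 2.0


def process(t: float, s: float, point: tuple[float, float]) -> tuple[float, float]:
    """Closed-form S(t, s), s <= t, for x' = A x, y' = -y + sin t."""
    x0, y0 = point
    return (math.exp(A * (t - s)) * x0,
            y_star(t) + math.exp(-(t - s)) * (y0 - y_star(s)))


def pullback_images(p: float, t: float, s_values: list[float], q0: float = 0.9):
    """For each s_n, pick p_n with P S(t, t - s_n)(p_n, q0) = p and return y_n."""
    images = []
    for s_n in s_values:
        p_n = math.exp(-A * s_n) * p  # explicit inverse of the E+ dynamics
        images.append(process(t, t - s_n, (p_n, q0)))
    return images
```

In the general setting no such explicit inverse is available, which is why the proof relies on the Brouwer degree instead.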

We now show that the non-autonomous set \({\mathcal {J}}(\cdot )\) is pullback attracting in every ball.

Theorem 10

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). Let \(t\in {\mathbb {R}}\) and \(R\ge R_1\), and let \(B\in {\mathcal {B}}(X)\) be such that there exists \(t_1\le t\) with \(S(t,s)B\cap \{\Vert Px\Vert \le R\} \not = \emptyset \) for every \(s\le t_1\). Then we have

Proof

To prove the attraction we argue by contradiction in the standard way: suppose there exist sequences \(\{x_n\}\subset B\) and \(s_n\rightarrow -\infty \) such that \(S(t,s_n)x_n\in S(t,s_n)B\cap \{\Vert Px\Vert \le R\}\) and \(\textrm{dist}(S(t,s_n)x_n,{\mathcal {J}}(t))>\varepsilon \) for every n and some \(\varepsilon >0.\)

By \((H1)_{\textrm{NA}}\), for every \(n\in {\mathbb {N}}\) there exists \(k(n) \ge n\) such that \(S(s_n,s_{k(n)})x_{k(n)} \in Q(s_n)\), and then \(y_{k(n)}=S(t,s_{k(n)})x_{k(n)}\in S(t,s_n)Q(s_n)\subset Q(t)\). Since \(\Vert P(S(t,s_k)x_k)\Vert \le R\) for every k, by item 1 of \((H2)_{\textrm{NA}}\) there exists \({\overline{R}}\ge R_0\) such that \(\{y_{k(n)}\}_{n\in {\mathbb {N}}}\subset H_{{\overline{R}}}(t)\). We define the sets \(L_n=S(t,s_n)Q(s_n) \cap H_{{\overline{R}}}(t)\); these sets are nested, and \(y_{k(n)}\in L_n\) for every n. By Lemma 1 and \((H3)_{\textrm{NA}}\), a subsequence of \(\{y_{k(n)}\}\) converges to some y, and by the definition of \({\mathcal {J}}(t)\) we have \(y\in {\mathcal {J}}(t)\). This contradicts \(\textrm{dist}(y_{k(n)},{\mathcal {J}}(t))>\varepsilon \) for every n. \(\square \)
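The attraction can be quantified on a hypothetical toy process (illustrative, not from the paper): \(x'=ax\), \(y'=-y+\sin t\) on \({\mathbb R}^2\) with an assumed \(a=1\). There \({\mathcal J}(t)\) is the horizontal line \(\{y=y^*(t)\}\), \(y^*(t)=(\sin t-\cos t)/2\), so \(\textrm{dist}(S(t,s)x,{\mathcal J}(t))=e^{-(t-s)}|y_0-y^*(s)|\). The sketch samples a bounded set B, keeps the images that stay in the cylinder \(\{|x|\le R\}\), and checks that the largest distance to \({\mathcal J}(t)\) decays like \(e^{-(t-s)}\) as \(s\rightarrow -\infty \). The names `dist_to_J` and `hausdorff_semidistance` are illustrative.

```python
import math

A = 1.0  # assumed growth rate of the unstable mode x (hypothetical toy model)


def y_star(t: float) -> float:
    """Unique bounded complete solution of y' = -y + sin t."""
    return (math.sin(t) - math.cos(t)) / 2.0


def process(t: float, s: float, point: tuple[float, float]) -> tuple[float, float]:
    """Closed-form S(t, s), s <= t, for x' = A x, y' = -y + sin t."""
    x0, y0 = point
    return (math.exp(A * (t - s)) * x0,
            y_star(t) + math.exp(-(t - s)) * (y0 - y_star(s)))


def dist_to_J(t: float, point: tuple[float, float]) -> float:
    """In this toy model J(t) = {(x, y_star(t)) : x real}, so the distance is vertical."""
    return abs(point[1] - y_star(t))


def hausdorff_semidistance(t: float, s: float, sample_B, R: float) -> float:
    """Largest dist(S(t, s)b, J(t)) over sampled b whose image lies in {|x| <= R}."""
    images = (process(t, s, b) for b in sample_B)
    return max((dist_to_J(t, u) for u in images if abs(u[0]) <= R), default=0.0)
```

Restricting to the cylinder mirrors the hypothesis of Theorem 10: most images escape to large \(|x|\), and only the part that remains in \(\{\Vert Px\Vert \le R\}\) is claimed to approach \({\mathcal J}(t)\).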

We next establish the minimality of \(\{ {\mathcal {J}}(t) \}_{t\in {\mathbb {R}}}\), the last property of the unbounded pullback attractor that remains to be verified.

Theorem 11

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). Then the non-autonomous set \(\{ {\mathcal {J}}(t) \}_{t\in {\mathbb {R}}}\) is the unbounded pullback attractor of the process \(S(\cdot ,\cdot )\).

Proof

It only remains to verify the minimality in Definition 12. To this end, suppose that there exists a non-autonomous closed set \(\{ {\mathcal {M}}(t) \}_{t\in {\mathbb {R}}}\) which satisfies property (3) of Definition 12 and that, for some \(t\in {\mathbb {R}}\), there exists \(u\in {\mathcal {J}}(t)\) such that \(u\notin {\mathcal {M}}(t)\). By Theorem 9, there is a solution \(u(\cdot )\) with \(u(t)=u\) whose past orbit \(\bigcup _{s\le t} \{ u(s) \}\) is bounded. Since \(u = u(t) = S(t,r) u(r) \in S(t,r) (\bigcup _{s\le t} \{ u(s) \})\) for every \(r\le t\), taking \(R\ge R_1\) with \(\Vert Pu\Vert \le R\) we get \(u(t) \in S(t,r) (\bigcup _{s\le t} \{ u(s) \}) \cap \{ \Vert Px\Vert \le R \}\), so the latter sets are nonempty for every \(r\le t\). Then

whence \(\text {dist}(u(t),{\mathcal {M}}(t))=0\). Since \({\mathcal {M}}(t)\) is closed, \(u=u(t)\in {\mathcal {M}}(t)\), a contradiction, which completes the proof of minimality. \(\square \)

We conclude the section with the result on the \(\sigma \)-compactness of \({\mathcal {J}}(t)\).

Lemma 14

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). For every \(t\in {\mathbb {R}}\) and for every closed and bounded set B the set \(B \cap {\mathcal {J}}(t)\) is compact.

Proof

Since \(B \cap {\mathcal {J}}(t)\subset H_R(t) \cap {\mathcal {J}}(t)\) for some \(R\ge R_0\), it is enough to prove that \(H_R(t) \cap {\mathcal {J}}(t)\) is relatively compact. The proof follows the lines of that of Theorem 4, the corresponding result for the autonomous case. Take a sequence \(\{u_n\}\subset H_R(t)\cap {\mathcal {J}}(t)\). By invariance, for a sequence \(t_n\rightarrow -\infty \) we can find \(x_n\in {\mathcal {J}}(t_n)\) with \(u_n=S(t,t_n)x_n\). Then \(x_n\in H_R(t_n)\) by item 3 of \((H2)_{\textrm{NA}}\), so \(u_n\in S(t,t_n)H_R(t_n)\cap H_R(t)\). By Lemma 1 and \((H3)_{\text {NA}}\) we obtain the desired result. \(\square \)

3.3 Structure of the Unbounded Pullback Attractor

We begin with the definition of the kernel section in the non-autonomous case.

Clearly \({\mathcal {I}}_b(t) \subset {\mathcal {J}}(t) = {\mathcal {K}}(t)\), i.e. the kernel section is a subset of the maximal kernel section (which consists of those points through which pass solutions that are bounded in the past but possibly unbounded in the future). Moreover, \(S(s,t){\mathcal {I}}_b(t) = {\mathcal {I}}_b(s)\) for every \(s\ge t\), i.e. kernel sections are invariant. In the autonomous case the corresponding set \({\mathcal {I}}_b = {\mathcal {J}}_b\) was constructed as the \(\alpha \)-limit of bounded sets in the unbounded attractor. Since such a construction does not seem to carry over to the non-autonomous case, we provide an alternative way to obtain \({\mathcal {I}}_b(t)\) from \({\mathcal {J}}(t)\). Since the non-autonomous set \(\{{\mathcal {J}}(t)\}_{t\in {\mathbb {R}}}\) is invariant, we can define the inverse mapping \((S(t,s))^{-1}:{\mathcal {J}}(t)\rightarrow 2^{{\mathcal {J}}(s)}\) for \(t>s\), for which we use the notation \(S(s,t) = (S(t,s))^{-1}\). Note that, as we do not assume backward uniqueness, if we consider S(s, t) on the whole space X, its image can go beyond \({\mathcal {J}}(s)\); moreover, S(s, t) (even as a mapping from \({\mathcal {J}}(t)\) to \({\mathcal {J}}(s)\)) can be multivalued. Invariance guarantees, however, that for every \(u\in {\mathcal {J}}(t)\) the set S(s, t)u is nonempty. We define the following non-autonomous set.
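The failure of backward uniqueness mentioned above already occurs for scalar equations. As an assumed example, not taken from the paper, the autonomous equation \(x'=-\textrm{sign}(x)\sqrt{|x|}\) has forward unique solutions \(x(\tau )=\textrm{sign}(x_0)\max \{\sqrt{|x_0|}-\tau /2,0\}^2\), so every \(x_0\) with \(|x_0|\le T^2/4\) reaches 0 by time \(T=t-s\). The preimage of 0 under \(S(t,s)\) is therefore the whole interval \([-T^2/4,T^2/4]\), i.e. the inverse \(S(s,t)\) is multivalued at 0. The sketch checks this with the closed-form flow; `flow` and `preimage_of_zero` are illustrative names.

```python
import math


def flow(T: float, x0: float) -> float:
    """S(s + T, s)x0 for the autonomous ODE x' = -sign(x) * sqrt(|x|), with T >= 0."""
    r = max(math.sqrt(abs(x0)) - T / 2.0, 0.0)  # sqrt|x| decreases at rate 1/2 until it hits 0
    return math.copysign(r * r, x0)


def preimage_of_zero(T: float) -> tuple[float, float]:
    """Endpoints of {x0 : S(s + T, s)x0 = 0}, which is the interval [-T^2/4, T^2/4]."""
    return (-T * T / 4.0, T * T / 4.0)
```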

In the next results we prove that \({\mathcal {J}}_b = {\mathcal {I}}_b\) and that this set is nonempty.

Lemma 15

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). For every \(t\in {\mathbb {R}}\), the set \({\mathcal {J}}_b(t)\) is nonempty.

Proof

We prove the result using the Cantor intersection theorem. First, the inverse image \(S(\tau ,t)^{-1}\) of the closed set \(H_R(\tau )\cap {\mathcal {J}}(\tau )\) is closed, since the process \(S(\cdot ,\cdot )\) is continuous. Moreover, for every \(\tau \ge t\) we have \(S(t,\tau )(H_R(\tau )\cap {\mathcal {J}}(\tau ))\subset H_R(t)\cap {\mathcal {J}}(t)\), which is compact by Lemma 14, so the sets \(S(t,\tau )(H_R(\tau )\cap {\mathcal {J}}(\tau ))\) are compact. They are also nested: indeed, if \(\tau _2>\tau _1\ge t\) and \(x\in S(t,\tau _2)(H_R(\tau _2)\cap {\mathcal {J}}(\tau _2))\), then \(S(\tau _1,t)x\in H_R(\tau _1)\) by item 3 of \((H2)_{\textrm{NA}}\), and, as we take inverse images within \({\mathcal {J}}(\cdot )\), also \(S(\tau _1,t)x\in {\mathcal {J}}(\tau _1).\) Finally, by Lemma 13 (with \(p=0\)) and item 1 of \((H2)_{\textrm{NA}}\), these sets are nonempty for \(R\ge R_1\), so the Cantor intersection theorem yields that their intersection over \(\tau \ge t\) is nonempty, whence \({\mathcal {J}}_b(t)\ne \emptyset \). \(\square \)
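On a hypothetical toy process (not from the paper: \(x'=ax\), \(y'=-y+\sin t\) on \({\mathbb R}^2\) with an assumed \(a=1\), where one can check that \({\mathcal J}(\tau )=\{(x,y^*(\tau ))\}\) with \(y^*(\tau )=(\sin \tau -\cos \tau )/2\), and with the assumed choice \(H_R(\tau )=\{|x|\le R,\ |y|\le 1\}\)), the compact sets in this proof are explicit: \(S(t,\tau )(H_R(\tau )\cap {\mathcal J}(\tau ))\) is the segment \(\{(x,y^*(t)) : |x|\le Re^{-a(\tau -t)}\}\). The sketch checks that these segments are nested and shrink to the single point \((0,y^*(t))\), which is \({\mathcal J}_b(t)\) in this example. `pulled_back_segment` is an illustrative name.

```python
import math

A = 1.0  # assumed growth rate of the unstable mode x (hypothetical toy model)


def y_star(t: float) -> float:
    """Unique bounded complete solution of y' = -y + sin t."""
    return (math.sin(t) - math.cos(t)) / 2.0


def pulled_back_segment(R: float, t: float, tau: float):
    """S(t, tau)(H_R(tau) ∩ J(tau)) as ((-w, w), y_star(t)), meaning {(x, y_star(t)) : |x| <= w}.

    On J the second coordinate is pinned to y_star, so only the x-coordinate is
    inverted: x(tau) = exp(A (tau - t)) x(t) forces |x(t)| <= R exp(-A (tau - t)).
    """
    half_width = R * math.exp(-A * (tau - t))
    return (-half_width, half_width), y_star(t)
```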

Lemma 16

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\). Then for every \(t\in {\mathbb {R}}\), \({\mathcal {J}}_b(t)={\mathcal {I}}_b(t).\)

Proof

First take \(u\in \bigcup _{R\ge R_0} \bigcap _{\tau \ge t}S(t,\tau )(H_R(\tau ) \cap {\mathcal {J}}(\tau ))\); then there exists \(R\ge R_0\) such that \(S(\tau ,t)u\in H_R(\tau ) \cap {\mathcal {J}}(\tau )\) for every \(\tau \ge t\). Since \(u\in {\mathcal {J}}(t)={\mathcal {K}}(t)\) by Theorem 9, there exists a solution through u at time t which is bounded in the past. Its forward continuation \(S(\tau ,t)u\in H_R(\tau )\), \(\tau \ge t\), is bounded as well. Thus there exists a global bounded solution through u at time t, i.e. \(u\in {\mathcal {I}}_b(t)\).

To prove the opposite inclusion, let \(u(\cdot )\) be a global bounded solution with \(u(t)=u\). By Theorem 9, \(u(\tau )\in {\mathcal {J}}(\tau )\) for every \(\tau \in {\mathbb {R}}\), and, since \(u(\cdot )\) is bounded, by item 1 of \((H2)_{\textrm{NA}}\) there exists \(R\ge R_0\) such that \(u(\tau )\in H_R(\tau )\) for every \(\tau \in {\mathbb {R}}\). Since u is an orbit, for \(\tau \ge t\) we have \(u(\tau )=S(\tau ,t)u(t)\in H_R(\tau )\cap {\mathcal {J}}(\tau ),\) so \(u(t)\in S(t,\tau )(H_R(\tau )\cap {\mathcal {J}}(\tau ))\) for every \(\tau \ge t\). \(\square \)

As a consequence of the above results we obtain the following corollary.

Corollary 3

For any \(R\ge R_0\) there holds

As in the autonomous case, if \(u\in {\mathcal {J}}(t)\setminus {\mathcal {J}}_b(t)\) for some \(t\in {\mathbb {R}}\), then \(\lim _{\tau \rightarrow \infty }\Vert S(\tau ,t)u\Vert =\infty \). The proof is similar to that of Lemma 6 in the autonomous case.

Lemma 17

Assume \((H1)_{\textrm{NA}}-(H3)_{\textrm{NA}}\), and let \(u\in {\mathcal {J}}(t)\setminus {\mathcal {J}}_b(t)\) for a certain \(t\in {\mathbb {R}}\). Then \(\lim _{\tau \rightarrow \infty }\Vert S(\tau ,t)u\Vert =\infty \).
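Lemma 17 can be observed on a hypothetical toy process (not from the paper: \(x'=ax\), \(y'=-y+\sin t\) on \({\mathbb R}^2\) with an assumed \(a=1\)), where one can check that \({\mathcal J}_b(t)=\{(0,y^*(t))\}\) with \(y^*(t)=(\sin t-\cos t)/2\). For \(p\ne 0\) the point \((p,y^*(t))\in {\mathcal J}(t)\setminus {\mathcal J}_b(t)\) satisfies \(\Vert S(\tau ,t)(p,y^*(t))\Vert \ge e^{a(\tau -t)}|p|\rightarrow \infty \), while the orbit through \((0,y^*(t))\) stays bounded. The sketch evaluates both with the closed-form process; `forward_norm` is an illustrative name.

```python
import math

A = 1.0  # assumed growth rate of the unstable mode x (hypothetical toy model)


def y_star(t: float) -> float:
    """Unique bounded complete solution of y' = -y + sin t."""
    return (math.sin(t) - math.cos(t)) / 2.0


def process(t: float, s: float, point: tuple[float, float]) -> tuple[float, float]:
    """Closed-form S(t, s), s <= t, for x' = A x, y' = -y + sin t."""
    x0, y0 = point
    return (math.exp(A * (t - s)) * x0,
            y_star(t) + math.exp(-(t - s)) * (y0 - y_star(s)))


def forward_norm(p: float, t: float, tau: float) -> float:
    """Euclidean norm of S(tau, t)(p, y_star(t)) for tau >= t."""
    return math.hypot(*process(tau, t, (p, y_star(t))))
```

Even a tiny \(p\) eventually dominates: the \(E^+\) component is multiplied by \(e^{a(\tau -t)}\), while the \(E^-\) component remains on the bounded curve \(y^*\).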

Remark 3

In the above results we decompose the unbounded pullback attractor into the kernel section \({\mathcal {J}}_b(t)\), through which pass globally bounded solutions, and the remaining points, whose forward orbits diverge to infinity. It would be worthwhile to study unbounded uniform attractors and their decomposition into fibers (which could be backward bounded or unbounded), as well as their relation with unbounded pullback attractors, as has been done for their classical counterparts in [1, 12, 20]. In particular, it would be interesting to extend the theory of uniform attractors to the case when the hull of the non-autonomous part can be unbounded.

3.4 Pullback Behavior of Bounded Sets