Abstract

In a widely-studied class of multi-parametric optimization problems, the objective value of each solution is an affine function of real-valued parameters. Then, the goal is to provide an optimal solution set, i.e., a set containing an optimal solution for each non-parametric problem obtained by fixing a parameter vector. For many multi-parametric optimization problems, however, an optimal solution set of minimum cardinality can contain super-polynomially many solutions. Consequently, no polynomial-time exact algorithms can exist for these problems even if \(\textsf {P}=\textsf {NP}\). We propose an approximation method that is applicable to a general class of multi-parametric optimization problems and outputs a set of solutions with cardinality polynomial in the instance size and the inverse of the approximation guarantee. This method lifts approximation algorithms for non-parametric optimization problems to their parametric version and provides an approximation guarantee that is arbitrarily close to the approximation guarantee of the approximation algorithm for the non-parametric problem. If the non-parametric problem can be solved exactly in polynomial time or if an FPTAS is available, our algorithm is an FPTAS. Further, we show that, for any given approximation guarantee, the minimum cardinality of an approximation set is, in general, not \(\ell \)-approximable for any natural number \(\ell \) less or equal to the number of parameters, and we discuss applications of our results to classical multi-parametric combinatorial optimizations problems. In particular, we obtain an FPTAS for the multi-parametric minimum s-t-cut problem, an FPTAS for the multi-parametric knapsack problem, as well as an approximation algorithm for the multi-parametric maximization of independence systems problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many optimization problems depend on parameters whose values are unknown or can only be estimated. Changes in the parameters may alter the set of optimal solutions or even affect feasibility of solutions. Multi-parametric optimization models describe the dependencies of the objective function and/or the constraints on the values of the parameters. That is, for any possible combination of parameter values, multi-parametric optimization problems ask for an optimal solution and its objective value.

In this article, we consider linear multi-parametric optimization problems in which the objective depends affine-linearly on each parameter. For simplicity, we focus on minimization problems, but all our reasoning and results can be applied to maximization problems as well. Formally, for \(K \in {\mathbb {N}}\setminus \{0\}\), (an instance of) a linear K-parametric optimization problem \(\Pi \) is given by a nonempty (finite or infinite) set X of feasible solutions, functions \(a, b_k : X \rightarrow {\mathbb {R}}\), \(k = 1, \dots , K\), and a parameter set \(\Lambda \subseteq {\mathbb {R}}^K\). Then, the optimization problem is typically formulated [cf. Oberdieck et al. (2016) and Pistikopoulos et al. (2012)] as

Fixing a parameter vector \(\lambda \in \Lambda \) yields (an instance of) the non-parametric version \(\Pi (\lambda )\) of the linear K-parametric optimization problem. Moreover, the function \(f: \Lambda \rightarrow {\mathbb {R}}\cup \{-\infty \}, \lambda \mapsto f(\lambda ) :=\inf _{x \in X} f(x, \lambda )\) that assigns the optimal objective value of \(\Pi (\lambda )\) to each parameter vector \(\lambda \in \Lambda \), is called the optimal cost curve. The goal is to find a set \(S'\subseteq X\) of feasible solutions that contains an optimal solution for \(\Pi (\lambda )\) for each \(\lambda \in \Lambda \) for which \(\inf _{x \in X} f(x,\lambda )\) is attained. Such a set \(S'\) is called an optimal solution set of the multi-parametric problem and induces a decomposition of the parameter set \(\Lambda \): For each solution \(x \in S'\), the associated critical region \(\Lambda (x)\) subsumes all parameter vectors \(\lambda \in \Lambda \) such that x is optimal for \(\Pi (\lambda )\).

For many linear multi-parametric optimization problems, however, the cardinality of any optimal solution set can be super-polynomially large, even if \(K=1\) [see, for example, Allman et al. (2022), Carstensen (1983a), Gassner and Klinz (2010), Nikolova et al. (2006) and Ruhe (1988)]. In general, this rules out per se the existence of polynomial-time exact algorithms even if \(\textsf {P}=\textsf {NP}\). Approximation provides a concept to substantially reduce the number of required solutions while still obtaining provable solution quality. For the non-parametric version \(\Pi (\lambda )\), approximation is defined as follows [cf. Williamson and Shmoys (2011)]:

Definition 1.1

For \(\beta \ge 1\) and a parameter vector \(\lambda \in \Lambda \) such that \(f(\lambda ) \ge 0\), a feasible solution \(x \in X\) is called \(\beta \)-approximate (or a \(\beta \)-approximation) for the non-parametric version \(\Pi (\lambda )\) if \(f(x, \lambda ) \le \beta \cdot f(x',\lambda )\) for all \(x' \in X\).

This concept can be adapted to linear multi-parametric optimization problems. There, the task is then to find a set of solutions that contains a \(\beta \)-approximate solution for each non-parametric problem \(\Pi (\lambda )\). Formally, this is captured in the following definition [cf. Bazgan et al. (2022) and Giudici et al. (2017)]:

Definition 1.2

For \(\beta \ge 1\), a finite set \(S \subseteq X\) is called a \(\beta \)-approximation set for \(\Pi \) if it contains a \(\beta \)-approximate solution \(x \in S\) for \(\Pi (\lambda )\) for any \(\lambda \in \Lambda \) for which \(f(\lambda )\ge 0\). An algorithm \({\mathcal {A}}\) that computes a \(\beta \)-approximation set for any instance \(\Pi \) in time polynomially bounded in the instance size is called a \(\beta \)-approximation algorithm.Footnote 1 A polynomial-time approximation scheme (PTAS) is a family \(({\mathcal {A}}_{\varepsilon })_{\varepsilon >0}\) of algorithms such that, for every \(\varepsilon >0\), algorithm \({\mathcal {A}}_{\varepsilon }\) is a \((1 + \varepsilon )\)-approximation algorithm. A PTAS \(({\mathcal {A}}_{\varepsilon })_{\varepsilon >0}\) is a fully polynomial-time approximation scheme (FPTAS) if the running time of \({\mathcal {A}}_\varepsilon \) is in addition polynomial in \(\frac{1}{\varepsilon }\).

Next, we discuss some assumptions that are necessary in order to ensure a well-defined notion of approximation and to allow for the existence of efficient approximation algorithms. Note that the outlined (technical) assumptions are rather mild and they are satisfied for multi-parametric formulations of a large variety of well-known optimization problems. This includes well-known problems such as the knapsack problem, the minimum s-t-cut problem, and the maximization of independence systems problem (see Sect. 6), as well as the assignment problem, the minimum cost flow problem, the shortest path problem, and the metric traveling salesman problem [see Section 5 in Bazgan et al. (2022)].

Similar to the case of non-parametric problems, where non-negativity of the optimal objective value is usually required in order to define approximation [cf. Williamson and Shmoys (2011) and Definition 1.1 above], we require that the optimal objective value \(f(\lambda )\) is non-negative for any \(\lambda \in \Lambda \). To ensure this, assumptions on the parameter set and the functions \(a,b_k\), \(k=1,\dots ,K\), are necessary. An initial approach would be to assume nonnegativity of the parameter vectors as well as nonnegativity of the functions \(a,b_k\), \(k=1,\dots ,K\). A natural generalization also allows for negative parameter vectors. To this end, we consider a lower bound \(\lambda ^{\min }\in {\mathbb {R}}^K\) on the parameter set, i.e.,  (Assumption 1.3.1). Then, assuming \(f(x,\lambda ^{\min })\) and \(b_k(x)\), \(k=1,\dots ,K\), to be nonnegative for all \(x\in X\) (Assumption 1.3.3) guarantees nonnegativity of the optimal objective value for any \(\lambda \in \Lambda \).

(Assumption 1.3.1). Then, assuming \(f(x,\lambda ^{\min })\) and \(b_k(x)\), \(k=1,\dots ,K\), to be nonnegative for all \(x\in X\) (Assumption 1.3.3) guarantees nonnegativity of the optimal objective value for any \(\lambda \in \Lambda \).

Moreover, solutions must be polynomially encodableFootnote 2 and the values a(x) and \(b_k(x)\), \(k=1,\dots ,K\), must be efficiently computable for any \(x \in X\) in order for the problem to admit any polynomial-time approximation algorithm. Hence, we assume that any solution \(x \in X\) is of polynomial encoding length and the values a(x) and \(b_k(x)\), \(k=1,\dots ,K\), can be computed in time polynomial in the instance size and the encoding length of x (Assumption 1.3.2). This implies that the values a(x) and \(b_k(x)\), \(k=1,\dots ,K\), are rationals of polynomial encoding length. Consequently, the assumptions made so far imply the existence of positive rational bounds \(\text{ LB }\) and \(\text{ UB }\) such that \(b_k(x), f(x,\lambda ^{\min }) \in \{0\} \cup [\text{ LB }, \text{ UB}]\) for all \(x \in X\) and all \(k = 1,\dots ,K\). It is further assumed that \(\text{ LB }\) and \(\text{ UB }\) can be computed polynomially in the instance size (Assumption 1.3.3). Note that the numerical values of \(\text{ LB }\) and \(\text{ UB }\) may still be exponential in the instance size.

Extending the results for 1-parametric optimization problems from Bazgan et al. (2022), we study how an exact or approximate algorithm \(\mathtt {ALG}\) for the non-parametric version can be used in order to approximate the multi-parametric problem and which approximation guarantee can be achieved when relying on polynomially many calls to \(\mathtt {ALG}\). Hence, the last assumption is the existence of an exact algorithm or an approximation algorithm for the non-parametric version (Assumption 1.3.4). In summary, the following assumptions are made:

Assumption 1.3

-

1.

For some given \(\lambda ^{\min }= (\lambda ^{\min }_1,\dots ,\lambda ^{\min }_K)^\top \in {\mathbb {R}}^K\), the parameter set is of the form

.

. -

2.

Any \(x \in X\) can be encoded by a number of bits polynomial in the instance size and the values a(x) and \(b_k(x)\), \(k =1,\dots ,K\), can be computed in time polynomial in the instance size and the encoding length of x.

-

3.

Positive rational bounds \(\text{ LB }\) and \(\text{ UB }\) such that \(b_k(x), f(x,\lambda ^{\min }) \in \{0\} \cup [\text{ LB }, \text{ UB}]\) for all \(x \in X\) and all \(k = 1, \dots , K\) can be computed in time polynomial in the instance size.

-

4.

For some \(\alpha \ge 1\), there exists an algorithm \(\mathtt {ALG}_{\alpha }\) that returns, for any parameter vector \(\lambda \in \Lambda \), a solution \(x'\) such that \(f(x',\lambda ) \le \alpha \cdot f(x,\lambda )\) for all \(x \in X\).Footnote 3 The running time is denoted by \(T_{\mathtt {ALG}_{\alpha }}\).

2 Related literature

Linear 1-parametric problems are widely-studied in the literature. Under the assumption that there exists an optimal solution for any non-parametric version, the parameter set can be decomposed into critical regions consisting of finitely many intervals \((-\infty , \lambda ^1],[\lambda ^1, \lambda ^2], \dots , [\lambda ^L, \infty )\) with the property that, for each interval, one feasible solution is optimal for all parameters within the interval. Assuming that L is chosen as small as possible, the parameter values \(\lambda ^1,\dots , \lambda ^L\) are exactly the points of slope change (the breakpoints) of the piecewise-linear optimal cost curve. A general solution approach for obtaining the optimal cost curve is presented by Eisner and Severance (1976). Exact solution methods for specific optimization problems exist for the linear 1-parametric shortest path problem (Karp and Orlin 1981), the linear 1-parametric assignment problem (Gassner and Klinz 2010), and the linear 1-parametric knapsack problem (Eben-Chaime 1996). Note that linear 1-parametric optimization problems also appear in the context of some well-known combinatorial problems. For example, Karp and Orlin (1981) observe that the minimum mean cycle problem can be reduced to a linear 1-parametric shortest path problem (Carstensen 1983a; Mulmuley and Shah 2001), and Young et al. (2006) note that linear 1-parametric programming problems arise in the process of solving the minimum balance problem, the minimum concave-cost dynamic network flow problem (Graves and Orlin 1985), and matrix scaling (Orlin and Rothblum 1985; Schneider and Schneider 1991).

These and many other problems share an inherent difficulty [see, e.g., Carstensen (1983a)]: The optimal cost curve may have super-polynomially many breakpoints in general. This precludes the existence of polynomial-time exact algorithms even if \(\textsf {P} = \textsf {NP}\). Nevertheless, there exist 1-parametric optimization problems for which the number of breakpoints is polynomial in the instance size. For example, this is known for linear 1-parametric minimum spanning tree problems (Fernández-Baca et al. 1996) as well as for special cases of (1) linear 1-parametric binary integer programs (Carstensen 1983a, b), (2) linear 1-parametric maximum flow problems (Gallo et al. 1989; McCormick 1999), and (3) linear 1-parametric shortest path problems (Erickson 2010; Karp and Orlin 1981; Young et al. 2006).

Exact solution methods for general linear multi-parametric optimization problems are studied by Gass and Saaty (Gass and Saaty 1955; Saaty and Gass 1954) and Gal and Nedoma (Gal and Nedoma 1972). The minimum number of solutions needed to decompose the parameter set into critical regions, called the parametric complexity,Footnote 4 is a natural criterion to measure the complexity. As for the 1-parametric case, the parametric complexity of a variety of problems is super-polynomial in the instance size. This even holds true for the special cases of minimum s-t-cut problems whose 1-parametric versions are tractable (Allman et al. 2022). Known exceptions are certain linear K-parametric binary integer programs (Carstensen 1983a), various linear K-parametric multiple alignment problems (Fernández-Baca et al. 2000), linear K-parametric global minimum cut problems (Aissi et al. 2015; Karger 2016), and the linear K-parametric minimum spanning tree problem (Seipp 2013).

As outlined above, many linear K-parametric optimization problems do not admit polynomial-time algorithms in general, even if \(\textsf {P}=\textsf {NP}\) and \(K = 1\). This fact strongly motivates the design of approximation algorithms for K-parametric optimization problems. So far, approximation schemes exist only for linear 1-parametric optimization problems. A general algorithm, which can be interpreted as an approximate version of the method of Eisner and Severance, is presented in Bazgan et al. (2022). The approximation of the linear 1-parametric 0-1-knapsack problem is considered in Giudici et al. (2017), Halman et al. (2018) and Holzhauser and Krumke (2017).

We conclude this section by expounding the relationship between multi-parametric optimization and multi-objective optimization. We first mention similarities and then discuss differences between (the approximation concepts for) both types of problems. In a multi-objective optimization problem, the \(K+1\) objective functions \(a,b_k\), \(k=1,\dots ,K\), are to be optimized over the feasible set X simultaneously, and a \(\beta \)-approximation set is a set \(S'\subseteq X\) of feasible solutions such that, for each solution \(x \in X\), there exists a solution \(x' \in S'\) that is at most a factor of \(\beta \) worse than x in each objective function \(a,b_k\), \(k=1,\dots ,K\). We refer to the seminal work of Papadimitriou and Yannakakis (2000) for further details.

When restricting to nonnegative parameter sets \(\Lambda \), linear multi-parametric problems can be solved exactly by methods that compute so-called (extreme) supported solutions of multi-objective problems. Moreover, since the functions \(a,b_k\), \(k=1,\dots ,K\), are linearly combined by a nonnegative parameter vector, multi-objective approximation sets are also multi-parametric approximation sets in this case. Surveys on exact methods and on the approximation of multi-objective optimization problems are provided by Ehrgott et al. (2016) and Herzel et al. (2021), respectively. Using techniques from multi-objective optimization with the restriction that the functions \(a,b_k\) are assumed to be strictly positive, multi-parametric optimization problems with nonnegative parameter sets are approximated in Daskalakis et al. (2016), Diakonikolas (2011) and Diakonikolas and Yannakakis (2008). We note that the proposed concepts heavily rely on scaling of the objectives such that, for each solution \(x \in X\), all the pair-wise ratios of \(a(x),b_k(x)\), \(k=1,\dots ,K\), are bounded by two. This clearly cannot be done if (strict subsets of) the function values of solutions \(x\in X\) are equal to zero.

Despite these connections, there are significant differences between the approximation of multi-parametric and multi-objective problems: (1) As already pointed out in Diakonikolas (2011), the class of problems admitting an efficient multi-parametric approximation algorithm is larger than the class of problems admitting an efficient multi-objective approximation algorithm. For example, the multi-parametric minimum s-t-cut problem with positive parameter set can be approximated efficiently (Diakonikolas 2011), whereas it is shown in Papadimitriou and Yannakakis (2000) that there is no FPTAS for constructing a multi-objective approximation set for the bi-objective minimum s-t-cut problem unless \(\textsf {P}=\textsf {NP}\). This is also highlighted by the simple fact that (2) multi-objective approximation is not well-defined for negative objectives, whereas multi-parametric approximation allows the functions \(a,b_k\) to be negative as long as the parameter set \(\Lambda \) is restricted such that \(f(x,\lambda ) \ge 0\) for all solutions \(x \in X\) and all parameter vectors \(\lambda \in \Lambda \). (3) For nonnegative parameter sets, Helfrich et al. (2021) show that, in the case of minimization, a multi-parametric \(\beta \)-approximation set is only a multi-objective \(((K+1) \cdot \beta )\)-approximation set. In the case of maximization, they even show that no multi-objective approximation guarantee can be achieved by a multi-parametric approximation in general. (4) There also exist substantial differences with respect to the minimum cardinality of approximation sets: For some \(M \in {\mathbb {N}}\), consider a 2-parametric maximization problem with feasible solutions \(x^0, \dots , x^M\) such that \(a(x^i) = \beta ^{2i}\), \(b_1(x^1) = \beta ^{2(M-i)}\) for \(i = 0,\dots , M\). Then, any multi-objective \(\beta \)-approximation set must contain all solutions, whereas \(\{x^0,x^M\}\) is a multi-parametric \(\beta \)-approximation set.

Consequently, existing approximation algorithms for 1-parametric and/or multi-objective optimization problems are not sufficient for obtaining efficient and broadly-applicable approximation methods for general multi-parametric optimization problems. This motivates the article at hand, in which we establish a theory of and provide an efficient method for the approximation of general linear multi-parametric problems.

3 Our contribution

We provide a general approximation method for a large class of multi-parametric optimization problems by extending the ideas of both the approximation algorithm of Diakonikolas (2011) and Diakonikolas and Yannakakis (2008) and the 1-parametric approximation algorithm of Bazgan et al. (2022) to linear multi-parametric problems. Note that, in Bazgan et al. (2022), only 1-parametric problems are considered, which leads to an easier structure of the optimal cost curve due to the one-dimensional parameter set, which allows for a bisection-based approximation algorithm. This bisection-based approach cannot be generalized to multi-dimensional parameter sets as considered here.

For any \(0< \varepsilon < 1\), we show that, if the non-parametric version can be approximated within a factor of \(\alpha \ge 1\), then the linear multi-parametric problem can be approximated within a factor of \((1 + \varepsilon ) \cdot \alpha \) in running time polynomially bounded by the size of the instance and \(\frac{1}{\varepsilon }\). That is, the algorithm outputs a set of solutions that, for any feasible vector of parameter values, contains a solution that \(((1+ \varepsilon ) \cdot \alpha )\)-approximates all feasible solutions in the corresponding non-parametric problem. Consequently, the availability of a polynomial-time exact algorithm or an (F)PTAS for the non-parametric problem implies the existence of an (F)PTAS for the multi-parametric problem.

In Sect. 4, we show basic properties of the parameter set with respect to approximation. These results allow a decomposition of the parameter set by means of assigning each vector of parameter values to the approximating solution. We state our polynomial-time (multi-parametric) approximation method for the general class of linear multi-parametric optimization problems.

Furthermore, we discuss the task of finding a set of solutions with minimum cardinality that approximates the linear K-parametric optimization problem in Section 5. We adapt the impossibility result of Diakonikolas (2011) and Diakonikolas and Yannakakis (2008), which states that there does not exist an efficient approximation algorithm that provides any constant approximation factor on the minimum cardinality if the non-parametric problem can be approximated within a factor of \(1+ \delta \) for some \(\delta >0\). We extend this to the case that an exact non-parametric algorithm is available. Here, we show that there cannot exist an efficient approximation algorithm that yields an approximation set with cardinality less than or equal to K times the minimum cardinality.

Section 6 discusses applications of our general approximation algorithm to multi-parametric versions of several well-known optimization problems. In particular, we obtain fully polynomial-time approximation schemes for the linear multi-parametric minimum s-t-cut problem and the multi-parametric knapsack problem [where approximation schemes for the linear 1-parametric version have been presented in Giudici et al. (2017) and Holzhauser and Krumke (2017)]. We also obtain an approximation algorithm for the multi-parametric maximization problem of independence systems, a class of problems where the well-known greedy method is an approximation algorithm for the non-parametric version.

4 A general approximation algorithm

We now present our approximation method for linear multi-parametric optimization problems satisfying Assumption 1.3. We first sketch the general idea and then discuss the details. In the following, given some \(\beta \ge 1\), we simply say that x is a \(\beta \)-approximation for \(\lambda \) instead of x is a \(\beta \)-approximation for \(\Pi (\lambda )\) if this does not cause any confusion. Clearly, for each solution \(x \in X\), there is a (possibly empty) subset of parameter vectors \(\Lambda ' \subseteq \Lambda \) such that x is a \(\beta \)-approximation for all parameter vectors \(\lambda ' \in \Lambda '\). Hence, the notion of \(\beta \)-approximation (sets) is relaxed as follows: A solution x is a \(\beta \)-approximation for \(\Lambda ' \subseteq \Lambda \) if it is a \(\beta \)-approximation for every \(\lambda ' \in \Lambda '\). Analogously, a set \(S \subseteq X\) is a \(\beta \)-approximation set for \(\Lambda ' \subseteq \Lambda \) if, for any \(\lambda ' \in \Lambda '\), there exists a solution \(x \in S\) that is a \(\beta \)-approximation for \(\lambda '\).

Let \(0< \varepsilon < 1\) be given and let \(\alpha \ge 1\) be the approximation guarantee obtained by the algorithm \(\mathtt {ALG}_\alpha \) for the non-parametric version as in Assumption 1.3.4. The general idea of our multi-parametric approximation method can be described as follows: We show that there exists a compact subset \(\Lambda ^{\text{ compact }}\subseteq \Lambda \) with \(\lambda _i > \lambda ^{\min }_i\) for any \(\lambda \in \Lambda ^{\text{ compact }}\) and all \(i = 1, \ldots ,K\) that satisfies the following property:

-

A.

For each parameter vector \(\lambda \in \Lambda \setminus \Lambda ^{\text{ compact }}\), there exists a parameter vector \(\lambda ' \in \Lambda ^{\text{ compact }}\) such that any \(((1 + \frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for \(\lambda '\) is also a \(((1+ \frac{\varepsilon }{2}) \cdot \alpha + \frac{\varepsilon }{2})\)-approximation for \(\lambda \) (see Proposition 4.7 and Corollary 4.8). Thus, since \(((1+ \frac{\varepsilon }{2}) \cdot \alpha + \frac{\varepsilon }{2}) \le (1 + 2 \cdot \frac{\varepsilon }{2}) \cdot \alpha ) = (1+\varepsilon )\cdot \alpha \), any \(((1 + \frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for \(\lambda '\) is, in particular, a \(((1+\varepsilon )\cdot \alpha )\)-approximation for \(\lambda \).

Then, a grid \(\Lambda ^{\text{ Grid }}\subseteq \Lambda \) is constructed, where each \(\lambda ' \in \Lambda ^{\text{ Grid }}\) is computed as \(\lambda '_k = \lambda ^{\min }_k + (1 + \frac{\varepsilon }{2})^{l_k}\) for some \(l_k \in {\mathbb {Z}}\) and \(k = 1, \dots , K\), such that the following holds:

-

B.

The cardinality of \(\Lambda ^{\text{ Grid }}\) is polynomially bounded in the encoding length of the instance and \(\frac{1}{\varepsilon }\) (but exponential in K),Footnote 5 see Proposition 4.12.

-

C.

For each parameter vector \(\lambda ' \in \Lambda ^{\text{ compact }}\), there exits a grid vector \({\bar{\lambda }} \in \Lambda ^{\text{ Grid }}\) such that

$$\begin{aligned} {\bar{\lambda }}_k - \lambda ^{\min }_k \le \lambda '_k - \lambda ^{\min }_k \le \left( 1 + \frac{\varepsilon }{2}\right) ({\bar{\lambda }}_k - \lambda ^{\min }_k) \quad \text { for } k=1,\dots ,K. \end{aligned}$$Then, any \(\alpha \)-approximation for \({\bar{\lambda }}\) is a \(((1 + \frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for \(\lambda '\) (see Proposition 4.13).

It follows that, for each parameter vector \(\lambda \in \Lambda \setminus \Lambda ^{\text{ compact }}\), there exist a parameter vector \({\bar{\lambda }} \in \Lambda ^{\text{ compact }}\) and a grid vector \(\lambda ' \in \Lambda ^{\text{ Grid }}\) such that any \(\alpha \)-approximation for \(\lambda '\) is a \(((1 + \frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for \({\bar{\lambda }}\) and, thus, a \(((1 + \varepsilon ) \cdot \alpha )\)-approximation for \(\lambda \). Hence, algorithm \(\mathtt {ALG}_{\alpha }\) can be applied for the polynomially many parameter vectors in \(\Lambda ^{\text{ Grid }}\), and collecting all solutions results in a \(((1+ \varepsilon ) \cdot \alpha )\)-approximation set S for \(\Lambda \). This method yields a multi-parametric (F)PTAS if either a polynomial-time exact algorithm \(\mathtt {ALG}_{1}\) or an (F)PTAS for the non-parametric version is available. Moreover, this approximation algorithm allows to easily assign the corresponding approximate solution \(x \in S\) to each parameter vector \(\lambda \in \Lambda \).

We now present the details of the algorithm and start with Property A. To this end, it is helpful to also allow parameter dependencies in the constant term. Hence, we define \(F_0(x) :=f(x,\lambda ^{\min })\) and \(F_k(x) :=b_k(x)\), \(k =1, \dots , K\), for all \(x \in X\). Further, we let \({\mathbb {R}}^{K+1}_\geqq :=\{ w \in {\mathbb {R}}^{K+1} : w_i \ge 0, i = 0, \dots , K\}\) denote the \((K+1)\)-dimensional nonnegative orthant. Using this notation, the augmented multi-parametric problem reads

and the goal is to provide an optimal solution for any \(w \in {\mathbb {R}}^{K+1}_\geqq \). The vectors \(w \in {\mathbb {R}}^{K+1}_\geqq \) are called weights, and the set of all weights is called the weight set in order to distinguish it from the parameter set of the non-augmented problem. The terms \(\beta \)-approximate solution and \(\beta \)-approximation set for the augmented problem are defined analogously to Definitions 1.1 and 1.2, respectively. Note that the non-parametric version \(\Pi (\lambda )\) of \(\Pi \) for some \(\lambda = (\lambda _1, \dots , \lambda _K) \in \Lambda \) coincides with the non-parametric version of the augmented problem for the weight \(w = (1, \lambda _1 - \lambda ^{\min }_1,\dots ,\lambda _K - \lambda ^{\min }_K)\).

A solution \(x^*\) is optimal for some weight \(w \in {\mathbb {R}}^{K+1}_\geqq \) if and only if \(x^*\) is optimal for \(t \cdot w\) for any positive scalar \(t>0\). An analogous result holds in the approximate sense:

Observation 4.1

Let \(x,x^* \in X\) be two feasible solutions. Then, for any positive scalar \(t >0\) and \(\beta \ge 1\), it holds that \(\sum _{i = 0}^K w_i F_i(x^*) \le \beta \cdot \sum _{i = 0}^K w_i F_i(x)\) if and only if \(t \cdot \sum _{i = 0}^K w_i F_i(x^*) \le t \cdot \beta \cdot \sum _{i = 0}^K w_i F_i(x).\)

The conclusion of this observation is twofold: On the one hand, any \(\beta \)-approximation set for the augmented multi-parametric problem (1) is also a \(\beta \)-approximation set for \(\Pi \). On the other hand, restricting the weight set to the bounded K-dimensional simplex \(W_1 :=\{w \in {\mathbb {R}}^{K+1}_\geqq : \sum _{i = 0}^K w_i= 1\}\) again yields an equivalent problem.

The compact set \(\Lambda ^{\text{ compact }}\subseteq \Lambda \) satisfying Property A can now be derived as follows: For \(\beta \ge 1\) and \(0< \varepsilon ' < 1\), a closed cone \(W^{\text{ cone }}\subseteq {\mathbb {R}}^{K+1}_{\geqq }\) is constructed such that any \(\beta \)-approximation set for \(W^{\text{ cone }}\) is a \((\beta + \varepsilon ')\)-approximation set for \({\mathbb {R}}^{K+1}_\geqq \), \(W_1\), and \(\Pi \). Then, by Observation 4.1, a \(\beta \)-approximation set for the intersection \(W^{\text{ compact }}:=W^{\text{ cone }}\cap W_1\) is also a \((\beta + \varepsilon ')\)-approximation set for \({\mathbb {R}}^{K+1}_\geqq \), \(W_1\), and \(\Pi \). Since \(W_1\) is compact, any closed subset of \(W_1\) is also compact. Thus, denoting the Minkowski sum of two sets \(A,B \subseteq \Lambda \) of parameter vectors by \(A+B\), the (continuous) function

can be defined to obtain a compact subset \(\Lambda ^{\text{ compact }}:=\phi (W^{\text{ compact }})\) of \(\Lambda \). By choosing \(\varepsilon ' = \frac{\varepsilon }{2}\) and \(\beta = (1 + \varepsilon ')\), a compact subset \(\Lambda ^{\text{ compact }}\) that satisfies Property A is obtained.Footnote 6

The next results formalize this outline. Initially, an auxiliary result about convexity and approximation is given: For \(\gamma \ge 1\), if a solution x is a \(\gamma \)-approximation for several weights \(w^1, \dots , w^L \in {\mathbb {R}}^{K +1}_\geqq \), the same solution x is a \(\gamma \)-approximation for any weight in their convex hull.

Lemma 4.2

Let \(\gamma \ge 1\) and a subset \(W' \subseteq {\mathbb {R}}^{K+1}_\geqq \) be given. Then, any \(\gamma \)-approximation \(x \in X\) for \(W'\) is also a \(\gamma \)-approximation for the convex hull \({{\,\mathrm{conv}\,}}(W')\).

Proof

Let w be some weight in the convex hull of \(W'\). Then, \(w = \sum _{l=1}^L \theta _l w^l\) for some \(L \in {\mathbb {N}}\), \(w^1, \dots , w^L \in W'\), and \(\theta _1, \dots , \theta _L \in [0,1]\) with \(\sum _{l=1}^L \theta _l = 1\). Thus, for any \(x ' \in X\),

which implies that x is a \(\gamma \)-approximation for w. \(\square \)

The next results establish the compact set \(\Lambda ^{\text{ compact }}\subseteq \Lambda \) of parameter vectors such that any \(\beta \)-approximation set for \(\Lambda ^{\text{ compact }}\) is a \((\beta + \varepsilon ')\)-approximation set for \(\Lambda \).

Let w be a strictly positive weight whose components \(w_i\) for i in some index set \(\emptyset \ne I \subseteq \{0,\dots , K\}\) sum up to a small threshold. The next lemma states that, instead of computing an approximate solution for w, one can compute an approximate solution for the weight obtained by projecting all components \(w_i\), \(i \in I\), to zero, and still obtain a ‘sufficiently good’ approximation guarantee for w.

To this end, for a set \(I \subseteq \{0, \dots , K\}\) of parameter indices, the projection \(\text{ proj}^I:{\mathbb {R}}^{K+1} \rightarrow {\mathbb {R}}^{K+1}\) that maps all components \(w_i\) of a vector \(w \in {\mathbb {R}}^{K+1}\) with indices \(i\in I\) to zero is defined by

Lemma 4.3

Let \(0< \varepsilon ' < 1\) and \(\beta \ge 1\). Further, let \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\) be an index set and let \(w \in {\mathbb {R}}^{K+1}_\geqq \) be a weight for which

Then, any \(\beta \)-approximation for w is a \((\beta + \varepsilon ')\)-approximation for \(\text{ proj}^{I}(w)\).

Proof

Let \(x \in X\) be a \(\beta \)-approximate solution for w. We have to show that, for any \(x' \in X\),

Since x is a \(\beta \)-approximation for w, we know that, for any solution \(x' \in X\),

which implies that

Note that, for any solution \(x'' \in X\), it holds that

If \(\sum _{j \notin I} w_j F_j(x') = 0\) and \(\sum _{j \notin I} w_j > 0\), we obtain

and, therefore, \(\sum _{j \notin I} w_j F_j(x) = 0\). If \(\sum _{j \notin I} w_j F_j(x') = 0\) and \(\sum _{j \notin I} w_j = 0\), it follows that \(w_j = 0\) for all \(j \notin I\) and, therefore, \(\sum _{j \notin I} w_j F_j(x) = 0\) as well. Hence, in these cases, the claim holds since

If \(\sum _{j \notin I} w_j F_j(x') \ge \text{ LB }\cdot \min _{j \notin I} w_j\), it holds that

which proves the claim. \(\square \)

Let \(w \in {\mathbb {R}}^{K+1}_\geqq \) and \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\) be given as in Lemma 4.3. By the convexity property from Lemma 4.2, every \(\beta \)-approximation for w is not only a \((\beta + \varepsilon ')\)-approximation for \(\text{ proj}^{I}(w)\), but also a \((\beta + \varepsilon ')\)-approximation for all weights in \({{\,\mathrm{conv}\,}}(\{w,\text{ proj}^{I}(w)\})\). This suggests the following definition:

Definition 4.4

Given \(0< \varepsilon ' < 1\) and \(\beta \ge 1\), the factor used in Lemma 4.3 is denoted by

Additionally, for any index set \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\), we define

The set \(P_\le (I)\) is defined analogously by replacing “<” by “\(\le \)” in (3). Note that \(P_\le (I)\) is a polyhedron. Moreover, we define

and, finally,

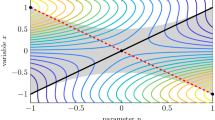

Figure 1 provides a visualization of the sets defined in Definition 4.4.

Illustration of the sets \(P_=(I)\), \(P_\le (I)\), and \(W^{\text{ cone }}\) for a linear multi-parametric problem with \(K =2\). Top left: Visualization of \(P_{=}(\{0,1\})\) and \(P_{=}(\{2\})\). Top right: Visualization of the corresponding full-dimensional sets \(P_\le (\{0,1\})\) and \(P_\le (\{2\})\). Bottom: Visualization of all sets \(P_{\le }(I)\). The set \(W^{\text{ cone }}\) is the complement of the union of the sets \(P_{<}(I) = P_{\le }(I) \setminus P_{=}(I)\) for \(\emptyset \ne I \subsetneq \{0,\dots , K\}\). Note that none of the visualized sets are bounded from above

Let \({\bar{w}} \in W^{\text{ cone }}\) be such that \({\bar{w}} \in P_=(I)\) for some index set \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\). Lemma 4.3 implies that a \(\beta \)-approximation for \({\bar{w}}\) is a \((\beta + \varepsilon ')\)-approximation for \({{\,\mathrm{conv}\,}}( {\bar{w}}, \text{ proj}^I({\bar{w}}))\). However, the reverse statement is needed: For \(w \in {\mathbb {R}}^{K+1}_\geqq \setminus W^{\text{ cone }}\), does there exist a weight \({\bar{w}} \in W^{\text{ cone }}\) such that a \(\beta \)-approximation for \({\bar{w}}\) is a \((\beta + \varepsilon ')\)-approximation for w? Proposition 4.7 will show that this holds true. In fact, the corresponding proof is constructive and relies on the lifting procedure described in the following.

Consider some weight \(w \in {\mathbb {R}}^{K+1}_\geqq \setminus W^{\text{ cone }}\), i.e., \(w \in P_\le (I)\) for some index set \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\). Instead of computing an approximate solution for w, a \(\beta \)-approximation for the corresponding lifted weight \({\bar{w}} \in P_=(I)\) (satisfying \(w \in {{\,\mathrm{conv}\,}}(\{{\bar{w}}, \text{ proj}^I({\bar{w}})\})\)) can be computed, which is then a \((\beta + \varepsilon ')\)-approximation for w. The next lemma formalizes the lifting.

Lemma 4.5

Let \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\) be an index set and let \(w \in P_<(I)\). Define

Then, \({\bar{w}} \in P_=(I)\) and \(w \in {{\,\mathrm{conv}\,}}(\{{\bar{w}}, \text{ proj}^I({\bar{w}})\})\). In particular, \({\bar{w}}_i \ge w_i\) for all \(i \in I\).

Proof

First consider the case that \(w_j = 0\) for all \(j \in I\). Then it holds that

which yields that \({\bar{w}} \in P_=(I)\). Moreover, \(w = \text{ proj}^I({\bar{w}}) \in {{\,\mathrm{conv}\,}}(\{{\bar{w}}, \text{ proj}^I({\bar{w}})\})\). Now consider the case that \(w_j \ne 0\) for some \(j \in I\). Here, it holds that

which again yields that \({\bar{w}} \in P_=(I)\). Note that, since \(w \in P_<(I)\), we must have \(c \cdot \displaystyle \min _{j \notin I} w_j > \sum _{j \in I} w_j \ge 0\). Thus, the weight w can be written as a convex combination of \({\bar{w}}\) and \(\text{ proj}^I({\bar{w}})\) by

which concludes the proof. \(\square \)

When given a weight \(w \in P_<(I)\) for some index set I, a lifted weight \({\bar{w}} \in P_=(I)\) can be constructed using Lemma 4.5. A \(\beta \)-approximation for \({\bar{w}}\) is then a \((\beta + \varepsilon ')\)-approximation for w due to Lemmas 4.2 and 4.3. Next, it is shown that this idea generalizes to the set \(W^{\text{ cone }}\) in the following way: For each weight \(w \notin W^{\text{ cone }}\), a weight \({\bar{w}} \in W^{\text{ cone }}\) can be found such that any \(\beta \)-approximation for \({\bar{w}}\) is a \((\beta + \varepsilon ')\)-approximation for w. The remaining task is to prove that this holds true for weights contained in \(P_<(I) \cap P_<(I')\) for two (or more) different index sets I and \(I'\), since using the previous construction for I might result in a lifted weight that is still contained in \(P_<(I')\) and vice versa. Notwithstanding, such weights can inductively be lifted with respect to different index sets and, if this is done in a particular order, a weight is obtained that is contained in \(W^{\text{ cone }}\) after at most K lifting steps.

The following lemma states that, for a weight w that is not contained in \(P_<(I)\) for some index set I, increasing any of its components \(w_i\) with indices \(i \in I\) preserves the fact that the weight is not contained in \(P_<(I)\).

Lemma 4.6

Let \(w \in {\mathbb {R}}^{K+1}_\geqq \setminus P_<(I)\) for some index set \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\). Let \({\bar{w}} \in {\mathbb {R}}^{K+1}_\geqq \) be a weight such that \({\bar{w}}_i \ge w_i\) for all \(i \in I\) and \({\bar{w}}_j = w_j\) for all \(j \notin I\). Then, \({\bar{w}} \notin P_<(I)\).

Proof

Since \(w \in P_<(I)\), it holds that

which proves the claim. \(\square \)

Now, we can prove the central result for Property A. Note that the proof is constructive.

Proposition 4.7

Let \(0< \varepsilon ' < 1\) and \(\beta \ge 1\) be given. Then, for any weight \(w \in {\mathbb {R}}^{K+1}_\geqq \setminus W^{\text{ cone }}\), there exists a weight \({\bar{w}} \in W^{\text{ cone }}\) such that any \(\beta \)-approximation for \({\bar{w}}\) is a \((\beta + \varepsilon ')\)-approximation for w.

Proof

Let \(w \in {\mathbb {R}}^{K+1}_\geqq \). Without loss of generality, assume that \(w_0 \le w_1 \le \dots \le w_K\) holds (otherwise, the ordering of the indices can be changed due to symmetry of \(W^{\text{ cone }}\)). First, it is shown that, in this case, all index sets I such that \(w \in P_<(I)\) are of the form \(I =\{0,\dots ,k\}\) for some \(k \in \{0,\dots ,K-1\}\): Let \(i \in I\) and \(j \notin I\) for some index set \(\emptyset \ne I \subsetneq \{0,\dots ,K\}\) for which \(w \in P_<(I)\). Then,

which implies that \(i < j\) and, thus, \(I = \{0,\dots ,k\}\) for some \(k \in \{0,\dots ,K-1\}\). To shorten the notation, we use the abbreviations \([K] :=\{0, \dots , K\}\) and \([{\bar{k}}] :=\{0, \dots , {\bar{k}}\}\) for \({\bar{k}} \in [K]\) in the remainder of this proof.

Since \(w \notin W^{\text{ cone }}\), we have \(w \in P_<(I)\) for at least one index set \(\emptyset \ne I \subsetneq [K]\). Hence, we choose \(k^{\max } \in [K-1]\) to be the largest index such that \(w \in P_<([k^{\max }])\) holds. Similarly, choose \(k^0 \in [K-1]\) to be the smallest index such that \(w \in P_<([k^0])\) holds. This means that \(w \notin P_<([k])\) for all \(k \in [K-1]\) with \(0 \le k < k^0\) and \(k^{\max }< k < K\). Further, set \(w^0 :=w\) and construct a (finite) sequence \(w^0,w^1, \dots , w^L\) of weights and a corresponding sequence \(k^0< k^1< \dots < k^{L-1}\) of indices such that, for each \(\ell \in \{1, \dots , L\}\), the following statements hold:

-

1.

\(w^{\ell }_i \ge w^{\ell -1}_i\) for \(i=0, \dots , k^{\ell -1}\) and \(w^{\ell }_j = w^{\ell -1}_j\) for \(j = k^{\ell -1} +1 ,\dots , K\).

-

2.

\(0 < w^\ell _0 \le w^\ell _1 \le \ldots \le w^\ell _K \)

-

3.

\(w^{\ell -1} \notin P_<([k])\) for \(k \in [K-1]\) with \(0\le k < k^{\ell -1}\) or \(k^{\max }< k < K\).

-

4.

\(w^{\ell } \in P_=([k^{\ell '}])\) for \(\ell ' = 0,\dots , \ell -1\).

-

5.

\(w \in {{\,\mathrm{conv}\,}}(\{w^{\ell }\} \cup \{\text{ proj}^{[k^0]}(w^\ell ), \dots , \text{ proj}^{[k^{\ell -1}]}(w^{\ell })\})\).

The construction, which is illustrated in Fig. 2, is as follows: Given a weight \(w^\ell \in {\mathbb {R}}^{K+1}_\geqq \setminus W^{\text{ cone }}\) with \(w^\ell _0 \le w^\ell _1 \le \ldots \le w^\ell _K\), we set \(k^{\ell }\) to be the smallest index such that \(w^\ell \in P_<([k^\ell ])\) and, analogously to Lemma 4.5, define

We repeat this construction until, for some \(L \in {\mathbb {N}}\), the weight \(w^L\) is not contained in \(P_<([k])\) for any \(k \in [K-1]\). Note that Statement 2 implies that, for any \(\ell \in {\mathbb {N}}\), the weight \(w^\ell \) cannot be contained in \(P_<(I)\) for any index set I that is not of the form \(I = [k]\) for some \(k \in [K-1]\). Moreover, Statement 3 implies that \(k^{\max } \ge k^\ell \) and that, for \(\ell \ge 1\) and \(k \in [k^{\ell -1} - 1]\), it holds that \(\sum _{i = 0}^k w^{\ell -1}_i \ge c \cdot w^{\ell -1}_{k+1}\). Therefore, if \(\sum _{j=0}^{k^{\ell -1}} w^{\ell -1}_j >0\), it holds that

Similarly, if \(w^{\ell -1}_j = 0\) for all \(j \in [k^{\ell -1}]\), it holds that

Thus, in both cases, we obtain that \(w^{\ell }\notin P_<([k])\) for \(k = 0,\dots , k^{\ell -1}-1\). Since Statement 4 implies that \(w^{\ell } \notin P_<([k^{\ell -1}])\), this yields that \(k^{\ell } > k^{\ell -1}\) (for \(\ell \ge 1\)) and, hence, the construction indeed terminates after at most \(k^{\max } - k^0<K\) steps with \(w^L \in W^{\text{ cone }}\).

Furthermore, Statement 4 and Lemma 4.3 imply that any \(\beta \)-approximation for \(w^L\) is a \((\beta + \varepsilon ')\)-approximation for \(\text{ proj}^{[k^{\ell '}]}(w^L)\) for each \(l' \in 0,\dots , L-1\) and, thus, also for w using Statement 5 and the convexity Lemma 4.2.

It remains to show that Statements 1–5 hold for each \(\ell \in \{1,\dots ,L\}\). Statement 1 holds due Lemma 4.5. Statements 2–5 are proven by induction over \(\ell \):

For \(\ell = 1\), in order to prove Statement 2, first consider the case that \(w^0_0 > 0\). In this case, \(w^1_0>0\) by Statement 1. Next, consider the case that \(w^0_0 =\dots , w^0_k = 0\) and \(w^0_{k+1} >0\) for some \(k \in [K-1]\) (note that w cannot be the zero vector since \(w \notin W^{\text{ cone }}\)). In this case, we must have \(k = k^0\) by definition of \(k^0\), and, therefore,

The inequality \(w^1_{k^0} \le w^1_{k^0 + 1}\) even holds with strict inequality since, in both cases, it holds that \(w^1_{k^0} \le c \cdot w^0_{k^0 +1} < w^0_{k^0 +1} = w^1_{k^0 + 1}\). All other inequalities of Statement 2 follow from the corresponding inequalities for \(\ell = 0\) (or trivially hold for \(i = 1,\dots ,k^1\) if \(w^0_0 = \dots = w^0_{k^0} = 0\)). Statement 3 is a direct consequence of our choice of \(k^0\) and \(k^{\max }\), and Statements 4 and 5 immediately follow from Lemma 4.5.

Now assume that Statements 2–5 hold for some \(\ell \in \{1,\dots , L-1\}\). Then, Statements 2–5 hold for \(\ell +1\): The inequality \(w^{\ell +1}_0 > 0\) holds since \(w^{\ell +1}_0 \ge w^{\ell }_0 >0\) due to Statements 1 and 2. Again, the inequality \(w^{\ell +1}_{k^\ell } \le w^{\ell +1}_{k^\ell +1}\) holds with strict inequality since

and all other inequalities of Statement 2 immediately follow from the corresponding inequalities for \(\ell \). In order to prove Statement 3, note that, for \(k \in [k^\ell -1]\), it holds that \(w^\ell \notin P_<([k])\) by the choice of \(k^\ell \). For \(k = k^{\max } +1 , \dots , K\), we have \(w^\ell \notin P_<([k])\) by Statement 1 and Lemma 4.6. For Statement 4, we have \(w^\ell \in P_=([k^{\ell '}])\), i.e.,

for \(\ell ' = 0, \dots , \ell -1\). Thus,

for \(\ell ' = 0,\dots , \ell -1\). Moreover, by Lemma 4.5, it holds that \(w^{\ell +1} \in P_=([k^\ell ])\), which concludes the proof of Statement 4. Finally, Statement 5 holds for \(\ell +1\) since, by induction hypothesis, we know that \(w \in {{\,\mathrm{conv}\,}}\left( \{w^\ell \} \cup \{ \text{ proj}^{[k^0]}(w^\ell ), \dots , \text{ proj}^{[k^{\ell -1}]}(w^\ell )\} \right) \), which means that there exist coefficients \(\theta _0,\dots , \theta _\ell \in [0,1]\) such that

Lemma 4.5 implies that \(w^\ell \in {{\,\mathrm{conv}\,}}\left( \{w^{\ell +1},\text{ proj}^{[k^{\ell }]}(w^{\ell +1})\}\right) \), i.e., there exists some \(\mu \in [0,1]\) such that

Note that, since \([k^{\ell '}] \subseteq [k^{\ell }]\) for \(\ell ' = 0, \dots , \ell -1\), it holds that

and, thus,

with

i.e., \(w \in {{\,\mathrm{conv}\,}}\left( \{w^{\ell +1} \} \cup \{ \text{ proj}^{[k^0]}(w^{\ell +1}), \dots , \text{ proj}^{[k^{\ell }]}(w^{\ell +1}) \}\right) \), which completes the induction and the proof. \(\square \)

Illustration of the sequence of (lifted) weights constructed in the proof of Proposition 4.7 for a weight \(w \notin W^{\text{ cone }}\) with \(w_0< w_1 < w_2 = 1\). Left: Embedding of \(\{ w \in {\mathbb {R}}^3_\geqq : w_2 = 1 \}\) into \({\mathbb {R}}^3_\geqq \). Right: Cross-section at \(w_2 = 1\). Note that \(w^2 \in W^{\text{ cone }}\) and \(w = w^0 \in {{\,\mathrm{conv}\,}}\left( \{w^2,\text{ proj}^{\{0\}}(w^2),\text{ proj}^{\{0,1\}}(w^2)\} \right) \)

The following corollary states that the same result holds true for the K-dimensional simplex \(W_1 = \{ w \in {\mathbb {R}}^{K+1}_\geqq : \sum _{i = 0}^K w_i = 1\}\), see Figure 3 for an illustration.

Corollary 4.8

For \(0< \varepsilon ' <1\) and \(\beta \ge 1\), define

For each weight \(w \in W_1 \setminus W^{\text{ compact }}\), there exists a weight \(w' \in W^{\text{ compact }}\) such that any \(\beta \)-approximation for \(w'\) is a \((\beta + \varepsilon ')\)-approximation for w.

Proof

Note that \(W^{\text{ cone }}\) is a cone, i.e., \(w \in W^{\text{ cone }}\) if and only if \(t \cdot w \in W^{\text{ cone }}\) for each \(t >0\). In particular, for each weight \(w \in {\mathbb {R}}^{K+1}_\geqq \setminus \{0\}\), it holds that

Thus, the claim follows immediately from Observation 4.1 and Lemma 4.3. \(\square \)

Illustration of the set \(W^{\text{ compact }}\) for a linear multi-parametric optimization problem (\(K = 2\)). Left: \(W^{\text{ compact }}\) as a subset of \({\mathbb {R}}^3_\geqq \). Right: Schematic view of \(W^{\text{ compact }}\). The dashed lines indicate the boundary of \({\bar{W}}^{\text{ compact }}\) defined in Lemma 4.9

The following lemma provides a lower bound on the components of weights \(w \in W^{\text{ compact }}\). This allows us to derive lower and upper bounds on \(\Lambda ^{\text{ compact }}\), which will be useful when proving the polynomial cardinality of the grid \(\Lambda ^{\text{ Grid }}\).

Lemma 4.9

Let \(0< \varepsilon ' < 1\) and \(\beta \ge 1\). Define

Then, \(W^{\text{ compact }}\subseteq {\bar{W}}^{\text{ compact }}\subseteq W_1\).

Proof

Let \(w \in W^{\text{ compact }}\). By symmetry of \(W_1\), \(W^{\text{ compact }}\), and \({\bar{W}}^{\text{ compact }}\), we can assume without loss of generality that \(w_0 \le w_1 \le \dots \le w_K\) holds. Since \(w \in W^{\text{ cone }}\), w satisfies \(w \notin P_{<}(I)\) for all \( \emptyset \ne I \subsetneq \{0,\dots ,K\}\). In particular, this holds for all \(I = \{0,\dots ,k\}\) with \(0 \le k \le K-1\). Hence,

With \((K +1) \cdot w_K \ge \sum _{i = 0}^K w_i = 1\), it follows that \(w_i \ge \frac{1}{ ( K +1)!}\cdot c^K\) for all \(i \in \{0,\dots , K\}\). \(\square \)

Next, \(W^{\text{ compact }}\) is transformed to \(\Lambda ^{\text{ compact }}\), see Fig. 4 for an illustration. Recall that

Corollary 4.10

For \( 0< \varepsilon ' <1\) and \(\beta \ge 1\), define \(\Lambda ^{\text{ compact }}:=\phi (W^{\text{ compact }})\). Then, for each parameter vector \(\lambda \in \Lambda \setminus \Lambda ^{\text{ compact }}\), there exists a parameter vector \(\lambda ' \in \Lambda ^{\text{ compact }}\) such that any \(\beta \)-approximation for \(\lambda '\) is a \((\beta +\varepsilon ')\)-approximation for \(\lambda \).

Proof

Let \(\lambda \in \Lambda \setminus \Lambda ^{\text{ compact }}\). Define \(w=(w_0,\dots ,w_k)\) by

Then, \(w \in W_1\) and, thus, there exists a weight \(w' \in W^{\text{ compact }}\) such that any \(\beta \)-approximation for \(w'\) is a \((\beta +\varepsilon ')\)-approximation for w by Corollary 4.8. Observation 4.1 implies that any \(\beta \)-approximation for \(\phi (w') \in \Lambda ^{\text{ compact }}\) is a \(\beta \)-approximation for \(w'\), which in turn is a \((\beta +\varepsilon ')\)-approximation for w. Applying Observation 4.6 again yields that any \((\beta + \varepsilon ')\)-approximation for w is also a \((\beta +\varepsilon ')\)-approximation for \(\lambda = \phi (w)\). \(\square \)

Illustration of the set \(\Lambda ^{\text{ compact }}\) (white region). Left: Full schematic view. Right: Focus on \( \{\lambda ^{\min }\} + [0,1]^K\). The dashed lines indicate the boundary of the set \({\bar{\Lambda }}^{\text{ compact }}= \phi ({\bar{W}}^{\text{ compact }})\) considered in the proof of Lemma 4.11

With \(\varepsilon ' = \frac{\varepsilon }{2}\) and \(\beta = (1 + \varepsilon ')\), Corollary 4.10 states that the set \(\Lambda ^{\text{ compact }}\) indeed satisfies Property A.

Now, to prove Property B, the following lemma provides useful upper and lower bounds on \(\Lambda ^{\text{ compact }}\).

Lemma 4.11

For \(0< \varepsilon ' <1\), \(\beta \ge 1\), and c defined as in (2), it holds that

In particular, \(\Lambda ^{\text{ compact }}\) is compact.

Proof

Let \({\bar{W}}^{\text{ compact }}\subseteq W_1\) be defined as in Corollary 4.8. Then, since \(W^{\text{ compact }}\subseteq {\bar{W}}^{\text{ compact }}\), we have

Also, note that \({\bar{W}}^{\text{ compact }}= {{\,\mathrm{conv}\,}}\left( \{ {\bar{w}}^0, \dots , {\bar{w}}^K \} \right) \), where, for \(i,k \in \{0,\dots ,K\}\),

Thus, for any parameter \(\lambda \in \Lambda ^{\text{ compact }}\), there exist scalars \(\theta _{0}, \theta _1 \dots , \theta _K \in [0,1]\) with \(\sum _{k = 0}^K \theta _k = 1\) such that \(\lambda = \phi \left( \sum _{k=0}^K \theta _k {\bar{w}}^k\right) \). Consequently, for \(i=1,\dots , K\), both

and

hold, which shows the claim. \(\square \)

Next, we construct a grid \(\Lambda ^{\text{ Grid }}\subseteq \Lambda \) possessing Properties B and C. That is, the cardinality is polynomially bounded in the encoding length of the instance and \(\frac{1}{\varepsilon }\), and computing a \(((1 + \frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for any \(\lambda \in \Lambda ^{\text{ compact }}\) is possible by computing an \(\alpha \)-approximation for each grid point \(\lambda ' \in \Lambda ^{\text{ Grid }}\).

Let \(c = \frac{\varepsilon \cdot \text{ LB }}{2 \cdot (1+\varepsilon ') \cdot \alpha \cdot \text{ UB }}\) be defined as in (2) with \(\varepsilon ' = \frac{\varepsilon }{2}\) and \(\beta = (1 + \varepsilon ') \cdot \alpha \). We employ the bounds on \(\Lambda ^{\text{ compact }}\) given by Lemma 4.11, and define a lower bound as well as an upper bound by

We then set

Now, Property B can be shown using the construction of \(\Lambda ^{\text{ Grid }}\):

Proposition 4.12

Let \(\Lambda ^{\text{ Grid }}\) be defined as in (5). Then,

Proof

We have \(|\Lambda ^{\text{ Grid }}|= (\text{ ub }- \text{ lb }+1)^K\), where

since \(0< \varepsilon < 1\). Here, note that \(a^{\frac{\varepsilon }{2}} = a^{(1 - \frac{\varepsilon }{2}) \cdot 0 + \frac{\varepsilon }{2} \cdot 1} \le (1 - \frac{\varepsilon }{2}) \cdot a^0 + \frac{\varepsilon }{2} \cdot a^1 = 1 + \frac{\varepsilon }{2}\) by convexity of exponential functions with base \(a >0\), which implies \(\frac{\varepsilon }{2} \le \log (1 + \frac{\varepsilon }{2})\). \(\square \)

It remains to prove that \(\Lambda ^{\text{ Grid }}\) indeed satisfies Property C, for which the main idea is motivated by the approximation of multi-objective optimization problems, cf. Papadimitriou and Yannakakis (2000).

Proposition 4.13

Let \({\bar{\lambda }} \in \Lambda \) such that \({\bar{\lambda }}_i > \lambda ^{\min }_i\) for \(i = 1, \dots ,K\). If \(x \in X\) is an \(\alpha \)-approximation for \({\bar{\lambda }}\), then x is a \(((1+\frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for all parameter vectors

Proof

First let \(\lambda \in {\mathbb {R}}^K\) such that \({\bar{\lambda }}_k - \lambda ^{\min }_k \le \lambda _k -\lambda ^{\min }_k \le (1+\frac{\varepsilon }{2}) \cdot ({\bar{\lambda }}_k - \lambda ^{\min }_k)\) for \(k=1,\dots , K\). Then, for any \(x' \in X\), it holds that

\(\square \)

Note that \(\Lambda ^{\text{ Grid }}\) is constructed in a way such that, for any parameter vector \(\lambda ' \in \Lambda ^{\text{ compact }}\), there exists a parameter vector \({\bar{\lambda }} \in \Lambda ^{\text{ Grid }}\) satisfying \({\bar{\lambda }}_k - \lambda ^{\min }_k \le \lambda '_k - \lambda ^{\min }_k \le (1 + \frac{1}{\varepsilon }) ({\bar{\lambda }}_k - \lambda ^{\min }_k)\) for \(k=1,\dots ,K\). Hence, Property C follows immediately by Proposition 4.13. This concludes the discussion of the details.

Our general approximation method for multi-parametric optimization problems is now obtained as follows: Given an instance \(\Pi \), an \(\alpha \)-approximation algorithm \(\mathtt {ALG}_\alpha \) for the non-parametric version, and \(\varepsilon >0\), we construct the grid \(\Lambda ^{\text{ Grid }}\) defined in (5), apply \(\mathtt {ALG}_\alpha \) for each parameter vector \(\lambda \in \Lambda ^{\text{ Grid }}\), and collect all solutions in a set S. Since, as shown before, Properties A–C hold true, the set S is indeed a \(((1+\varepsilon )\cdot \alpha )\)-approximation set. Algorithm 1 summarizes the method.

Theorem 4.14

Algorithm 1 returns a \(((1 + \varepsilon ) \cdot \alpha )\)-approximation set S in time

where \(T_{\text{ LB }/ \text{ UB }}\) denotes the time needed for computing the bounds \(\text{ LB }\) and \(\text{ UB }\), and \(T_{\mathtt {ALG}_{\alpha }}\) denotes the running time of \(\mathtt {ALG}_{\alpha }\).

Proof

By Lemma 4.12, the number of iterations and, thus, the number of calls to \(\mathtt {ALG}_{\alpha }\) is asymptotically bounded by

Now, it remains to show that the set S returned by the algorithm is a \(((1+ \varepsilon ) \cdot \alpha )\)-approximation set, i.e., that, for each parameter vector \(\lambda \in \Lambda \), there exists a parameter vector \({\bar{\lambda }} \in \Lambda ^{\text{ Grid }}\) such that any \(\alpha \)-approximation for \({\bar{\lambda }}\) is a \(((1+\varepsilon ) \cdot \alpha )\)-approximation for \(\lambda \). Let \(\lambda \in \Lambda \) be a parameter vector. By Corollary 4.10, there exists a parameter vector \(\lambda ' \in \Lambda ^{\text{ compact }}\) such that any \(((1+\frac{\varepsilon }{2}) \cdot \alpha )\)-approximation for \(\lambda '\) is a \(((1 + \frac{\varepsilon }{2})\cdot \alpha + \frac{\varepsilon }{2})\)-approximation, and thus a \(((1+\varepsilon )\cdot \alpha )\)-approximation for \(\lambda \). We set

Then, by Lemma 4.11, we have \(\text{ lb }\le m_i \le \text{ ub }\) for \(i = 1, \dots , K\) and, thus, \({\bar{\lambda }} \in \Lambda ^{\text{ Grid }}\). Moreover, \({\bar{\lambda }}_i -\lambda ^{\min }_i \le \lambda '_i - \lambda ^{\min }_i \le (1+\frac{\varepsilon }{2}) \cdot ({\bar{\lambda }}_i - \lambda ^{\min }_i)\) and, hence, any \(\alpha \)-approximation for \({\bar{\lambda }}\) is a \(((1+\frac{\varepsilon }{2})\cdot \alpha )\)-approximation for \(\lambda '\) by Proposition 4.13. This concludes the proof. \(\square \)

In particular, Theorem 4.14 yields:

Corollary 4.15

Algorithm 1 yields a FPTAS if either an exact algorithm \(\mathtt {ALG}_{1}\) or an FPTAS is available for the non-parametric version of \(\Pi \). If a PTAS is available for the non-parametric version, Algorithm 1 yields a PTAS.

Proof

If an exact algorithm \(\mathtt {ALG}_{1}\) is available, the statement directly follows from Theorem 4.14. Otherwise, for any \(\varepsilon >0\), set \(\delta :=\sqrt{1+ \varepsilon } -1\). Then, by Theorem 4.14, we can compute a \((1+\varepsilon )\)-approximation set in time

\(\square \)

5 Minimum-cardinality approximation sets

In this section, the task of finding a \(\beta \)-approximation set \(S^*\) with minimum cardinality is investigated. It is stated in Vassilvitskii and Yannakakis (2005) that no constant approximation factor on the cardinality of \(S^*\) can be achieved in general for multi-parametric optimization problems with positive parameter set and positive, polynomial-time computable functions \(a, b_k\) if only \((1+\delta )\)-approximation algorithms for \(\delta >0\) are available for the non-parametric problem. Thus, the negative result also holds in the more general case considered here.

Theorem 5.1

For any \(\beta > 1\) and any integer \(L \in {\mathbb {N}}\), there does not exist an algorithm that computes a \(\beta \)-approximation set S such that \(|S|< L \cdot |S^*|\) for every 3-parametric minimization problem and generates feasible solutions only by calling \(\mathtt {ALG}_{1 + \delta }\) for values of \(\delta >0\) such that \(\frac{1}{\delta }\) is polynomially bounded in the encoding length of the input.

We remark that the corresponding proof (published in Diakonikolas (2011), Theorem 5.4.12) is imprecise, but the idea remains valid with a more careful construction. We provide a counterexample and a correction of the proof in the appendix.

Note that this result does not rule out the existence of a method that achieves a constant factor if a polynomial-time exact algorithm \(\mathtt {ALG}_{1}\) is available. We now show that, in this case, there cannot exist a method that yields an approximation factor smaller than \(K+1\) on the cardinality of \(S^*\).

Theorem 5.2

For any \(\beta >1\) and \(K \in {\mathbb {N}}\), there does not exist an algorithm that computes a \(\beta \)-approximation set S with \(|S|< (K+1) \cdot |S^*|\) for every K-parametric minimization problem and generates feasible solutions only by calling \(\mathtt {ALG}_{1}\).

Proof

Let \(\beta > 1\). In the following, an instance of the augmented multi-parametric optimization problem with parameter set given by the bounded K-dimensional simplex \(W_1\) is constructed such that the minimum-cardinality \(\beta \)-approximation set \(S^*\) has cardinality one, but the unique solution \(x \in S^*\) cannot be obtained by \(\mathtt {ALG}_{1}\), and any other \(\beta \)-approximation set must have cardinality greater than or equal to \(K+1\). Consider an instance with \(X = \{x, x^0,\dots ,x^K\}\) such that

and, for \(i = 0,\dots ,K\),

We show that the solution x cannot be obtained via \(\mathtt {ALG}_{1}\), the set \(\{x\}\) a \(\beta \)-approximation set, and the only \(\beta \)-approximation set that does not contain x is \(\{x^0, \dots , x^K\}\) with cardinality \(K+1\).

First, we show that x cannot be obtained via \(\mathtt {ALG}_{1}\). For any \(w \in W_1\), there exists an index \(i \in \{0,\dots ,K\}\) such that \(w_i \ge \frac{1}{K+1}\) and, thus,

Hence, the solution x cannot be obtained via \(\mathtt {ALG}_{1}\). Next, we show that the set \(\{x\}\) is a \(\beta \)-approximation set. For any \(w \in W_1\) and any \(i = 0, \dots , K\), it holds that

Hence, the solution x is a \(\beta \)-approximation for any \(w \in W_1\). Finally, we show that the only \(\beta \)-approximation set that does not contain x is \(\{x^0, \dots , x^K\}\). Let \(e^i \in W_1\) be the ith unit vector. Then, for any \(j \in \{0, \dots ,K\} \setminus \{i\}\), we have

Note that, by continuity of \(w^\top F(x)\) in \(w_i\) for \(i = 0,\dots , K\), there exists a small \(t > 0\) such that, for each \(i \in \{0, \dots , K\}\), the weight \(w^i\) defined by \(w^i_i :=1 - \frac{K \cdot t}{K+1}\) and \(w^i_j = \frac{t}{K+1}\), \(j \ne i\), satisfies \(\beta \cdot (w^i)^\top F(x^i) < (w^i)^\top F(x^j)\) for all \(j \ne i\). Hence, the above arguments also hold for weights \(w \in W_1 \cap {\mathbb {R}}^{K+1}_>\). Therefore, the above instance shows that no \(\beta \)-approximation set with cardinality less than \(K+1\) times the size of the smallest \(\beta \)-approximation set can be obtained using \(\mathtt {ALG}_{1}\) for linear multi-parametric optimization problems in general.

\(\square \)

6 Applications

In this section, the established results are applied to linear multi-parametric versions of important optimization problems. The 1-parametric versions of the shortest path problem, the assignment problem, linear mixed-integer programs, the minimum cost flow problem, and the metric traveling salesman problem have previously been covered in Bazgan et al. (2022). By employing Theorem 4.14, it is easy to see that the stated results generalize to the multi-parametric case in a straightforward manner. We now apply Theorem 4.14 to several other well-known problems. Note that, for a maximization problem and some \(\beta \ge 1\), a \(\beta \)-approximate solution for the non-parametric version \(\Pi (\lambda )\) is a feasible solution \(x \in X\) such that \(f(x,\lambda ) \ge \frac{1}{\beta } \cdot f(x',\lambda )\) for all \(x' \in X\).

Multi-parametric minimum s-t-cut problem Given a directed graph \(G=(V,R)\) with \(|V|=n\) and \(|R|= m\), a multi-parametric cost function \(a_r + \sum _{k=1}^K b_{k,r}\) for each \(r \in R\), where \(a_r, b_{k,r} \in {\mathbb {N}}_{0}\), and two vertices \(s,t \in V\) with \(s \ne t\), the multi-parametric minimum s-t-cut problem asks to compute an s-t-cut \((S_{\lambda },T_{\lambda })\), \(s \in S_{\lambda }\) and \(t \in T_{\lambda }\), of minimum total cost \(\sum _{r:\alpha (r)\in S_{\lambda },\omega (r)\in T_{\lambda }} a_r + \sum _{k=1}^K \lambda _k b_r\) for each \(\lambda \in \Lambda \) (where \(\alpha (r)\) denotes the start vertex and \(\omega (r)\) the end vertex of an arc \(r\in R\)). Here, \(\lambda ^{\min }\) can be defined by setting \(\lambda ^{\min }_k :=\max _{r \in R} \{ - \frac{a_r}{K \cdot b_{k,r}}: b_{k,r} \ne 0\}\) such that, for each parameter vector greater than or equal to \(\lambda ^{\min }\), the cost of each s-t-cut is nonnegative.

A positive rational upper bound \(\text{ UB }\) as in Assumption 1.3 can be obtained by summing up the m cost components \(a_r\) and summing up the m cost components \(b_{k,r}\) for each k, and taking the maximum of these \(K+1\) sums. The lower bound \(\text{ LB }\) can be chosen as \(\text{ LB }:=1\). The non-parametric problem can be solved in \({\mathcal {O}} \left( n \cdot m \right) \) for any fixed \(\lambda \) [cf. Orlin (2013)]. Hence, an FPTAS for the multi-parametric minimum s-t-cut problem with running time \({\mathcal {O}}\left( (n \cdot m)(\frac{1}{\varepsilon } \log \frac{1}{\varepsilon } + \frac{1}{\varepsilon } \log (mC) )^{K} \right) \) is obtained, where C denotes the maximum value among all \(a_r,b_{k,r}\).

The number of required solutions in an optimal solution set can be super-polynomial even for \(K=1\) (Carstensen 1983b). Remarkably, a recent result shows that the number of required solutions in an optimal solution set of the K-parametric minimum s-t-cut problem with \(K >1\) can be exponential even for instances that satisfy the so-called source-sink-monotonicity (Allman et al. 2022), whereas instances of the 1-parametric minimum s-t-cut problem satisfying source-sink-monotonicity can be solved exactly in polynomial time (Gallo et al. 1989; McCormick 1999). Consequently, in the multi-parametric case, an FPTAS is the best-possible approximation result even for instances satisfying source-sink-monotonicity.

Multi-parametric maximization of independence systems Let a finite set \(E=\{1,\dots ,n\}\) of elements and a nonempty family \({\mathcal {F}} \subseteq 2^E\) be given. The pair \((E,{\mathcal {F}})\) is called an independence system if \(\emptyset \in {\mathcal {F}}\) and, for each set \(x \in {\mathcal {F}}\), it follows that all its subsets \(x' \subseteq x\) are also contained in \({\mathcal {F}}\). The elements of F are then called independent sets. The lower rank l(F) and the upper rank r(F) of a subset \(F \subseteq E\) of elements are defined by \(l(F) :=\min \{|B|:B \subseteq E, B \in {\mathcal {F}} \text { and } B \cup \{e\} \notin {\mathcal {F}} \text { for all } e \in E \setminus F \}\) and \(r(F) :=\max \{|B|:B \subseteq E, B \in {\mathcal {F}} \}\), respectively. The rank quotient \(q(E,{\mathcal {F}})\) of the independence system \((E, {\mathcal {F}})\) is then defined as \(q(E,{\mathcal {F}}) :=\min _{F \subseteq E, l(F) \ne 0} \frac{r(F)}{l(F)}\). Moreover, let a multi-parametric cost of the form \(a_e + \sum _{k=1}^K \lambda _k b_{k,e}\), where \(a_e,b_{k,e} \in {\mathbb {N}}_0\), \(k=1,\dots ,K\), be given for each element \(e \in E\). Then, with \(\lambda ^{\min }\) defined by \(\lambda ^{\min }_k :=\max _{e \in E} \{ - \frac{a_e}{K \cdot b_{k,e}}: b_{k,e} \ne 0 \}\), \(k=1,\dots ,K\), the multi-parametric maximization of independence systems problem asks to compute, for each parameter vector \(\lambda \) greater than or equal to \(\lambda ^{\min }\), an independent set \(x_\lambda \in {\mathcal {F}}\) of maximum cost \(\sum _{e \in E} a_e + \sum _{k=1}^K \lambda _k b_{k,e}\).

Here, a positive rational upper bound \(\text{ UB }\) as in Assumption 1.3 can be obtained by summing up the n profit components \(a_e\) and summing up the n profit components \(b_{k,e}\) for each k, and taking the maximum of these \(K+1\) sums. The lower bound \(\text{ LB }\) can again be chosen as \(\text{ LB }:=1\). For independence systems \((E,{\mathcal {F}})\) with rank quotient \(q(E,{\mathcal {F}})\), it is known that the greedy algorithm is a \(q(E,{\mathcal {F}})\)-approximation algorithm for the non-parametric problem obtained by fixing any parameter vector \(\lambda \) (Korte and Hausmann 1978). Hence, for any \(\epsilon >0\), the maximization version of Theorem 4.14 yields a \(((1+\epsilon )\cdot q(E,{\mathcal {F}}))\)-approximation algorithm with running time \({\mathcal {O}} \left( T_{\text {Greedy}} \cdot (\frac{1}{\varepsilon } \log \frac{1}{\varepsilon } + \frac{1}{\varepsilon } \log (nC) )^{K} \right) \), where C denotes the maximum profit component among all \(a_e, b_{k,e}\), and \(T_{\text {Greedy}}\) denotes the running time of the greedy algorithm (which can often be seen to be in \(\mathcal {O}(n\log (n))\) times the running time of deciding whether \(F \in {\mathcal {F}}\) holds for any set \(F \subseteq E\) of elements). Since the maximum matching problem (with the assignment problem as a special case) in an undirected graph \(G = (V,E)\) constitutes a special case of the maximization of independence systems problem (Korte and Hausmann 1978), the number of required solutions in an optimal solution set for the multi-parametric maximization of independence systems problem can be super-polynomial in n even for \(K=1\) (Carstensen 1983a, b).

For example, our result yields a \(((1 + \varepsilon ) \cdot 2)\)-approximation algorithm for the multi-parametric b-matching problem and a \(((1 + \varepsilon ) \cdot 3)\)-approximation algorithm for the multi-parametric maximum asymmetric TSP [cf. Mestre (2006)]. Note that the knapsack problem can also be formulated using independence systems. Here, an approximation scheme for the non-parametric version is known:

Multi-parametric knapsack problem Let a set \(E = \{1,\dots ,n\}\) of items and a budget \(W \in {\mathbb {N}}_0\) be given. Each item \(e \in E\) has a multi-parametric profit of the form \(a_e + \sum _{k=1}^K \lambda _k \cdot b_{k,e}\), where \(a_e, b_{k,e} \in {\mathbb {N}}_0\), \(k=1,\dots ,K\), are nonnegative integers, and a weight \(w_e \in {\mathbb {N}}_0\). The parameter vector \(\lambda ^{\min }\) is chosen by \(\lambda ^{\min }_k :=\max _{e \in E} - \frac{a_{e}}{K \cdot b_{k,e}}: b_{k,e} \ne 0 \}\), \(K=1, \dots ,K\), such that, for each set \(x \subseteq \{1,\dots ,n\}\) of items, the profit components \(\sum _{e \in x} a_e\) and \(\sum _{e\in x} b_{k,e}\), \(k = 1,\dots ,K\), are nonnegative. Then, the multi-parametric knapsack problem asks to compute a subset \(x \subseteq E\) satisfying \(\sum _{e \in x} w_e \le W\) of maximum profit for each parameter vector \(\lambda \) greater than or equal to \(\lambda ^{\min }\).

For this problem, a positive rational upper bound \(\text{ UB }\) as in Assumption 1.3 can again be obtained by summing up the n profit components \(a_e\) and summing up the n profit components \(b_{k,e}\) for each k, and taking the maximum of these \(K+1\) sums. The lower bound \(\text{ LB }\) can again be chosen as \(\text{ LB }:=1\). The currently best approximation scheme for the non-parametric problem is given in Kellerer and Pferschy (1999, 2004), which computes, for any \(\varepsilon ' > 0\), a feasible solution whose profit is no worse than \((1-\varepsilon ')\) times the profit of any other feasible solution in time \( {\mathcal {O}}\left( n \cdot \min \left\{ \log n, \log \frac{1}{\varepsilon '} \right\} + \frac{1}{\varepsilon '}\log \frac{1}{\varepsilon '}\cdot \min \left\{ n , \frac{1}{\varepsilon '} \log \frac{1}{\varepsilon '} \right\} \right) \). Assuming that n is much larger than \(\frac{1}{\varepsilon '}\) [cf. Kellerer et al. (2004)], choosing \(\varepsilon '= \frac{1}{2} \cdot \varepsilon \) and applying the maximization version of Theorem 4.14 with \(\frac{1}{2} \cdot \varepsilon \) yields an FPTAS for the multi-parametric knapsack problem with running time \({\mathcal {O}}\left( (n \log \frac{1}{\varepsilon } + \frac{1}{\varepsilon ^3} \log ^2 \frac{1}{\varepsilon } ) (\frac{1}{\varepsilon } \log \frac{1}{\varepsilon } + \frac{1}{\varepsilon } \log (nC) )^{K} \right) \), where C denotes the maximum profit component among all \(a_e, b_{k,e}\).

Again, the number of required solutions in an optimal solution set can be super-polynomial in n even for \(K=1\) (Carstensen 1983a).

7 Conclusion

Exact solution methods, complexity results, and approximation methods for multi-parametric optimization problems are of major interest in recent research. In this paper, we establish that approximation algorithms for many important non-parametric optimization problems can be lifted to approximation algorithms for the multi-parametric version of such problems. The provided approximation guarantee is arbitrarily close to the approximation guarantee of the non-parametric approximation algorithm. This implies the existence of a multi-parametric FPTAS for many important multi-parametric optimization problems for which optimal solution sets require super-polynomially many solutions in general.

Moreover, our results show that computing an approximation set containing the smallest-possible number of solutions is not possible in general. However, practical routines to reduce the number of solutions in the approximation set, based for example on the convexity property of Lemma 4.2 and the approximation method in Bazgan et al. (2022), might be of interest. Another direction of future research could be the approximation of multi-parametric MILPs with parameter dependencies in the constraints. Here, relaxation methods or a multi-objective multi-parametric formulation of the problems may provide a suitable approach.

Data availability

Not applicable.

Notes

Note that any \(\beta \)-approximation algorithm returns a \(\beta \)-approximation set of polynomial cardinality. Under the assumptions discussed next, given such a polynomial-size \(\beta \)-approximation set S and some parameter vector \(\lambda \in \Lambda \), it is possible to compute a \(\beta \)-approximate solution for \(\Pi (\lambda )\) in time polynomial in the instance size and the encoding length of \(\lambda \) by iterating over S and choosing a solution \(x \in S\) with minimum value \(f(x,\lambda )\).

This is a typical assumption in approximation [see, e.g., Papadimitriou and Yannakakis (2000)]. Nevertheless, our method can also be applied to problems where only the ‘relevant solutions’ can be encoded polynomially in the instance size. For example, not all feasible solutions of linear programs can be encoded polynomially in the instance size since they are implicitly determined by finitely many inequalities. However, it is sufficient to restrict to basic feasible solutions, which have encoding length polynomially bounded in the instance size (Grötschel et al. 1993).

The approximation guarantee \(\alpha \) is assumed to be independent of \(\lambda \). However, it is allowed that \(\alpha \) depends on the instance (such that the encoding length of \(\alpha \) is polynomially bounded in the encoding length of the instance).

Also referred to as combinatorial facet complexity or facet complexity (Aissi et al. 2015).

Note that compactness of \(\Lambda ^{\text{ compact }}\) and that \(\lambda _i > \lambda ^{\min }_i \) for any \(\lambda \in \Lambda ^{\text{ compact }}\) and all \(i = 1, \ldots ,K\) is necessary to achieve a finite cardinality of \(\Lambda ^{\text{ Grid }}\).

Note that \(\Lambda ^{\text{ compact }}\) could also be defined by means of the intersection \(W^{\text{ cone }}\cap \{w \in {\mathbb {R}}^{K+1}_\ge : w_0 = 1\}\). However, structural insights into the geometry of \(\Lambda ^{\text{ compact }}\) would then be missed. Moreover, the presented construction allows to easily derive lower and upper bounds on \(\Lambda ^{\text{ compact }}\), which are necessary for proving the polynomial bound on the cardinality of the grid \(\Lambda ^{\text{ Grid }}\).

For example \(F_1(x^*) :=F_2(x^*) :=(2 z_0 \cdot \beta )^{-2-n}\) and \(F_1(x^\ell ) :=(2 z_0 \cdot \beta )^{\ell - L}\), \(F_2(x^\ell ) :=(2 z_0 \cdot \beta )^{1 - \ell }\) with weights \(w^\ell _1 :=(2 z_0 \cdot \beta )^{L-\ell }\) and \(w^\ell _2 :=(2 z_0 \cdot \beta )^{\ell -1}\).

References

Aissi H, Mahjoub AR, McCormick ST, Queyranne M (2015) Strongly polynomial bounds for multiobjective and parametric global minimum cuts in graphs and hypergraphs. Math Program 154(1–2):3–28

Allman M, Lo V, McCormick ST (2022) Complexity of source-sink monotone 2-parameter min cut. Oper Res Lett 50(1):84–90

Bazgan C, Herzel A, Ruzika S, Thielen C, Vanderpooten D (2022) An approximation algorithm for a general class of parametric optimization problems. J Comb Optim 43:1328–1358

Carstensen PJ (1983a) The complexity of some problems in parametric, linear, and combinatorial programming. PhD thesis, University of Michigan

Carstensen PJ (1983b) Complexity of some parametric integer and network programming problems. Math Program 26(1):64–75

Daskalakis C, Diakonikolas I, Yannakakis M (2016) How good is the chord algorithm? SIAM J Comput 45(3):811–858

Diakonikolas I (2011) Approximation of multiobjective optimization problems. PhD thesis, Columbia University

Diakonikolas I, Yannakakis M (2008) Succinct approximate convex Pareto curves. In: Proceedings of the nineteenth annual ACM-SIAM symposium on Discrete algorithms, pp 74–83

Eben-Chaime M (1996) Parametric solution for linear bicriteria knapsack models. Manag Sci 42(11):1565–1575

Ehrgott M, Gandibleux X, Przybylski A (2016) Exact methods for multi-objective combinatorial optimisation. Springer, New York, pp 817–850

Eisner MJ, Severance DG (1976) Mathematical techniques for efficient record segmentation in large shared databases. J ACM 23(4):619–635

Erickson J (2010) Maximum flows and parametric shortest paths in planar graphs. In: Proceedings of the 21st ACM-SIAM symposium on discrete algorithms (SODA), pp 794–804

Fernández-Baca D, Slutzki G, Eppstein D (1996) Using sparsification for parametric minimum spanning tree problems. In: Proceedings of the 5th Scandinavian workshop on algorithm theory (SWAT), pp 149–160

Fernández-Baca D, Seppäläinen T, Slutzki G (2000) Parametric multiple sequence alignment and phylogeny construction. In: Combinatorial pattern matching. Springer Berlin Heidelberg, pp 69–83

Gal T, Nedoma J (1972) Multiparametric linear programming. Manag Sci 18(7):406–422

Gallo G, Grigoriadis MD, Tarjan RE (1989) A fast parametric maximum flow algorithm and applications. SIAM J Comput 18(1):30–55

Gass S, Saaty T (1955) Parametric objective function (part 2)—generalization. J Oper Res Soc Am 3(4):395–401

Gassner E, Klinz B (2010) A fast parametric assignment algorithm with applications in max-algebra. Networks 55(2):61–77

Giudici A, Halffmann P, Ruzika S, Thielen C (2017) Approximation schemes for the parametric knapsack problem. Inf Process Lett 120:11–15

Graves SC, Orlin JB (1985) A minimum concave-cost dynamic network flow problem with an application to lot-sizing. Networks 15:59–71

Grötschel M, Lovász L, Schrijver A (1993) Geometric algorithms and combinatorial optimization. Springer

Halman N, Holzhauser M, Krumke SO (2018) An FPTAS for the knapsack problem with parametric weights. Oper Res Lett 46(5):487–491