Abstract

This paper develops an in-depth treatment of the problem of approximating the Gaussian smoothing and the Gaussian derivative computations in scale-space theory for application on discrete data. With close connections to previous axiomatic treatments of continuous and discrete scale-space theory, we consider three main ways of discretizing these scale-space operations in terms of explicit discrete convolutions, based on either (i) sampling the Gaussian kernels and the Gaussian derivative kernels, (ii) locally integrating the Gaussian kernels and the Gaussian derivative kernels over each pixel support region, with the aim of suppressing some of the severe artefacts of sampled Gaussian kernels and sampled Gaussian derivatives at very fine scales, or (iii) basing the scale-space analysis on the discrete analogue of the Gaussian kernel, and then computing derivative approximations by applying small-support central difference operators to the spatially smoothed image data.

We study the properties of these three main discretization methods both theoretically and experimentally and characterize their performance by quantitative measures, including the results they give rise to with respect to the task of scale selection, investigated for four different use cases, and with emphasis on the behaviour at fine scales. The results show that the sampled Gaussian kernels and the sampled Gaussian derivatives as well as the integrated Gaussian kernels and the integrated Gaussian derivatives perform very poorly at very fine scales. At very fine scales, the discrete analogue of the Gaussian kernel with its corresponding discrete derivative approximations performs substantially better. The sampled Gaussian kernel and the sampled Gaussian derivatives do, on the other hand, lead to numerically very good approximations of the corresponding continuous results when the scale parameter is sufficiently large, which in most of the experiments presented in the paper means a scale parameter greater than a value of about 1, in units of the grid spacing. Below a standard deviation of about 0.75, the derivative estimates obtained from convolutions with the sampled Gaussian derivative kernels are, however, not numerically accurate or consistent, and the discrete analogue of the Gaussian kernel, with its associated central difference operators applied to the spatially smoothed image data, is then a much better choice.

1 Introduction

When operating on image data, the earliest layers of image operations are usually expressed in terms of receptive fields, which means that the image information is integrated over local support regions in image space. For modelling such operations, the notion of scale-space theory [24, 31, 34, 35, 36, 44, 46, 50, 84, 86, 96, 97] stands out as a principled theory, by which the shapes of the receptive fields can be determined from axiomatic derivations that reflect desirable theoretical properties of the first stages of visual operations.

In summary, this theory states that convolutions with Gaussian kernels and Gaussian derivatives constitute a canonical class of image operations as a first layer of visual processing. Such spatial receptive fields, or approximations thereof, can, in turn, be used as a basis for expressing a large variety of image operations, both in classical computer vision [8, 12, 15, 42, 48, 49, 51, 53, 62, 63, 79, 89] and more recently in deep learning [26, 32, 57, 58, 69, 72, 78].

The theory for the notion of scale-space representation does, however, mainly concern continuous image data, while implementations of this theory on digital computers require a discretization over image space. The subject of this article is to describe and compare a number of basic approaches for discretizing the Gaussian convolution operation, as well as convolutions with Gaussian derivatives.

While one could possibly argue that at sufficiently coarse scales, where sampling effects ought to be small, the influence of choosing one form of discrete implementation compared to some other ought to be negligible, or at least of minor effect, there are situations where it is desirable to apply scale-space operations at rather fine scales, and then also to be reasonably sure that one would obtain desirable response properties of the receptive fields.

One such domain, which motivates the present deeper study of discretization effects for Gaussian smoothing operations and Gaussian derivative computations at fine scales, is the application of Gaussian derivative operations in deep networks, as done in a recently developed subdomain of deep learning [26, 32, 57, 58, 69, 72, 78].

A practical observation that one may make when working with deep learning is that deep networks may tend to prefer computing image representations at very fine scale levels. For example, empirical results indicate that deep networks often tend to perform image classification based on very fine-scale image information, corresponding to the local image texture on the surfaces of objects in the world. Indirect support for such a view may also be taken from the now well-established fact that deep networks may be very sensitive to adversarial perturbations, based on adding deliberately designed noise patterns of very low amplitude to the image data [3, 5, 30, 65, 85]. That observation demonstrates that deep networks may be very strongly influenced by fine-scale structures in the input image. Another observation may be taken from working with deep networks based on using Gaussian derivative kernels as the filter weights. If one designs such a network with complementary training of the scale levels for the Gaussian derivatives, then a common result is that the network will prefer to base its decisions on receptive fields at rather fine scale levels.

When implementing such Gaussian derivative networks in practice, one hence faces the need to go below the rule of thumb for classical computer vision of not attempting to operate below a certain scale threshold, according to which the standard deviation of the Gaussian derivative kernel should not be below a value of, say, \(1/\sqrt{2}\) or 1, in units of the grid spacing.

From the viewpoint of theoretical signal processing, one may argue that one should use the sampling theorem to express a lower bound on the scale level, which one should then never go below. For regular images, as obtained from digital cameras, or already acquired data sets as compiled by the computer vision community, such an approach based on the sampling theorem is, however, not fully possible in practice. First of all, we almost never, or at least very rarely, have explicit information about the sensor characteristics of the image sensor. Secondly, it would hardly be possible to model the imaging process in terms of an ideal bandlimited filter with frequency characteristics near the spatial sampling density of the image sensor. Applying an ideal bandpass filter to an already given digital image may lead to ringing phenomena near the discontinuities in the image data, which will lead to far worse artefacts for spatial image data than for e.g. signal transmission over information carriers in terms of sine waves.

Thus, the practical problem that one faces when designing and applying a Gaussian derivative network to image data is to express, in a practically feasible manner, a spatial smoothing process that can smooth a given digital input image for any fine scale of the discrete approximation to a Gaussian derivative filter. A theoretical problem that then arises concerns how to design such a process, so that it can operate from very fine scale levels, possibly starting even at scale level zero corresponding to the original input data, without leading to severe discretization artefacts.

A further technical problem arises even if one takes the a priori view of basing the implementation on the purely discrete theory for scale-space smoothing and scale-space derivatives developed in [43, 45], as we have done in our previous work on Gaussian derivative networks [57, 58]. One then faces the problem of handling the special mathematical functions used as smoothing primitives in this theory (the modified Bessel functions of integer order) when propagating gradients backwards by automatic differentiation for training deep networks, when performing learning of the scale levels in the network. These necessary mathematical primitives do not exist as built-in functions in e.g. PyTorch [67], which implies that users would then have to implement a PyTorch interface for these functions themselves, or choose some other type of discretization method, if aiming to learn the scale levels in the Gaussian derivative networks by backpropagation.

There are a few related studies of discretizations of scale-space operations [41, 73, 83, 87, 94]. These do not, however, answer the questions that need to be addressed for the intended use cases for our developments.

Wang [94] proposed pyramid-like algorithms for computing multi-scale differential operators using a spline technique, however, then taking rather coarse steps in the scale direction. Lim and Stiehl [41] studied properties of discrete scale-space representations under discrete iterations in the scale direction, based on Euler’s forward method. For our purpose, we do, however, need to consider the scale direction as a continuum. Tschirsich and Kuijper [87] investigated the compatibility of topological image descriptors with discrete scale-space representations and did also derive an eigenvalue decomposition in relation to the semi-discrete diffusion equation that determines the evolution properties over scale, to enable efficient computations of discrete scale-space representations of the same image at multiple scales. With respect to our target application area, we are, however, more interested in computing image features based on Gaussian derivative responses, and then mostly also computing the discrete scale-space representation at a single scale only, for each input image. Slavík and Stehlík [83] developed a theory for more general evolution equations over semi-discrete domains, which incorporates the 1-D discrete scale-space evolution family that we consider here, corresponding to convolutions with the discrete analogue of the Gaussian kernel, as a special case. For our purposes, we are, however, more interested in performing an in-depth study of different discrete approximations of the axiomatically determined class of Gaussian smoothing operations and Gaussian derivative operators, than in expanding the treatment to other possible evolution equations over discrete spatial domains.

Rey-Otero and Delbracio [73] assumed that the image data can be regarded as bandlimited, and did then use a Fourier-based approach for performing closed-form Gaussian convolution over a reconstructed Fourier basis, which in that way constitutes a way to eliminate the discretization errors, provided that a correct reconstruction of an underlying continuous image, notably before the image acquisition step, can be performed (see Footnote 1). A very closely related approach, for computing Gaussian convolutions, based on a reconstruction of an assumed-to-be bandlimited signal, has also been previously outlined by Åström and Heyden [2].

As argued earlier in this introduction, the image data that are processed in computer vision are, however, not generally accompanied by characteristic information regarding the image acquisition process, specifically not with regard to what extent the image data could be regarded as bandlimited. Furthermore, one could question if the image data obtained from a modern camera sensor could at all be modelled as bandlimited, for a cutoff frequency very near the resolution of the image. Additionally, with regard to our target application domain of deep learning, one could also question if it would be manageable to invoke a Fourier-based image reconstruction step for each convolution operation in a deep network. In the work to be developed here, we are, on the other hand, more interested in developing a theory for discretizing the Gaussian smoothing and the Gaussian derivative operations at very fine levels of scale, in terms of explicit convolution operations, and based on as few assumptions as possible regarding the nature of the image data.

The purpose of this article is thus to perform a detailed theoretical analysis of the properties of different discretizations of the Gaussian smoothing operation and the Gaussian derivative computations at any scale, and with emphasis on reaching as near as possible to the desirable theoretical properties of the underlying scale-space representation, to hold also at very fine scales for the discrete implementation.

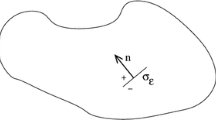

For performing such analysis, we will consider basic approaches for discretizing the Gaussian kernel in terms of either pure spatial sampling (the sampled Gaussian kernel) or local integration over each pixel support region (the integrated Gaussian kernel) and compare to the results of a genuinely discrete scale-space theory (the discrete analogue of the Gaussian kernel), see Fig. 1 for graphs of such kernels. After analysing and numerically quantifying the properties of these basic types of discretizations, we will then extend the analysis to discretizations of Gaussian derivatives in terms of either sampled Gaussian derivatives or integrated Gaussian derivatives, and compare to the results of a genuinely discrete theory based on convolutions with the discrete analogue of the Gaussian kernel followed by discrete derivative approximations computed by applying small-support central difference operators to the discrete scale-space representation. We will also extend the analysis to the computation of local directional derivatives, as a basis for filter-bank approaches for receptive fields, based on either the scale-space representation generated by convolution with rotationally symmetric Gaussian kernels, or the affine Gaussian scale space.

It will be shown that, with regard to the topic of raw Gaussian smoothing, the discrete analogue of the Gaussian kernel has the best theoretical properties, out of the discretization methods considered. For scale values when the standard deviation of the continuous Gaussian kernel is above 0.75 or 1, the sampled Gaussian kernel does also have very good properties, and leads to very good approximations of the corresponding fully continuous results. The integrated Gaussian kernel is better at handling fine scale levels than the sampled Gaussian kernel, but does, however, comprise a scale offset that hampers its accuracy in approximating the underlying continuous theory.

Concerning the topic of approximating the computation of Gaussian derivative responses, it will be shown that the approach based on convolution with the discrete analogue of the Gaussian kernel followed by central difference operations clearly has the best properties at fine scales, out of the three main approaches studied. In fact, when the standard deviation of the underlying continuous Gaussian kernel is below about 0.75, the sampled Gaussian derivative kernels and the integrated Gaussian derivative kernels do not lead to accurate numerical estimates of derivatives, when applied to monomials of the same order as the order of spatial differentiation, or lower. Over an intermediate scale range in the upper part of this scale interval, the integrated Gaussian derivative kernels do, however, have somewhat better properties than the sampled Gaussian derivative kernels. For the discrete approximations of Gaussian derivatives defined from convolutions with the discrete analogue of the Gaussian kernel followed by central differences, applying this approach to monomials of the same order as the order of spatial differentiation does, on the other hand, lead to derivative estimates exactly equal to their continuous counterparts, over the entire scale range.

For larger scale values, for standard deviations greater than about 1, relative to the grid spacing, in the experiments to be reported in the paper, the discrete approximations of Gaussian derivatives obtained from convolutions with sampled Gaussian derivatives do on the other hand lead to numerically very accurate approximations of the corresponding results obtained from the purely continuous scale-space theory. For the discrete derivative approximations obtained by convolutions with the integrated Gaussian derivatives, the box integration introduces a scale offset, that hampers the accuracy of the approximation of the corresponding expressions obtained from the fully continuous scale-space theory. The integrated Gaussian derivative kernels do, however, degenerate less seriously than the sampled Gaussian derivative kernels within a certain range of very fine scales. Therefore, they may constitute an interesting alternative, if the mathematical primitives needed for the discrete analogues of the Gaussian derivative are not fully available within a given system for programming deep networks.

For simplicity, we do in this treatment restrict ourselves to image operations that operate in terms of discrete convolutions only. In this respect, we do not consider implementations in terms of Fourier transforms, which are also possible, while less straightforward in the context of deep learning. We do furthermore not consider extensions to spatial interpolation operations, which operate between the positions of the image pixels, and which can be highly useful, for example, for locating the positions of image features with subpixel accuracy [10, 11, 91, 92, 95, 104]. We do additionally not consider representations that perform subsamplings at coarser scales, which can be useful for reducing the amount of computational work [13, 16, 17, 59, 62, 81, 82], or representations that aim at speeding up the spatial convolutions on serial computers based on performing the computations in terms of spatial recursive filters [14, 19, 22, 27, 93, 99]. For simplicity, we develop the theory for the special cases of 1-D signals or 2-D images, while extensions to higher-dimensional volumetric images are straightforward, as implied by separable convolutions for the scale-space concept based on convolutions with rotationally symmetric Gaussian kernels.

Concerning experimental evaluations, we do in this paper deliberately focus on and restrict ourselves to the theoretical properties of different discretization methods, and only report performance measures based on such theoretical properties. One motivation for this approach is that the integration with different types of visual modules may call for different relative properties of the discretization methods. We therefore want this treatment to be timeless, and not biased to the integration with particular computer vision methods or algorithms that operate on the output from Gaussian smoothing operations or Gaussian derivatives. Experimental evaluations with regard to Gaussian derivative networks will be reported in follow-up work. The results from this theoretical analysis should therefore be more generally applicable to a larger variety of approaches in classical computer vision, as well as to other deep learning approaches that involve Gaussian derivative operators.

2 Discrete Approximations of Gaussian Smoothing

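The Gaussian scale-space representation \(L(x, y;\; s)\) of a 2-D spatial image \(f(x, y)\) is defined by convolution with 2-D Gaussian kernels of different sizes

$$\begin{aligned} g_{2D}(x, y;\; s) = \frac{1}{2 \pi s} \, e^{-(x^2 + y^2)/2s} \end{aligned} \tag{1}$$

according to [24, 31, 34, 44, 50, 86, 96, 97]

$$\begin{aligned} L(x, y;\; s) = \int _{\xi \in {{\mathbb {R}}}} \int _{\eta \in {{\mathbb {R}}}} g_{2D}(\xi , \eta ;\; s) \, f(x - \xi , y - \eta ) \, d\xi \, d\eta . \end{aligned} \tag{2}$$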

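Equivalently, this scale-space representation can be seen as defined by the solution of the 2-D diffusion equation

$$\begin{aligned} \partial _s L = \frac{1}{2} \left( \partial _{xx} L + \partial _{yy} L \right) \end{aligned} \tag{3}$$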

with initial condition \(L(x, y;\; 0) = f(x, y)\).

2.1 Theoretical Properties of Gaussian Scale-Space Representation

2.1.1 Non-Creation of New Structure with Increasing Scale

The Gaussian scale space, generated by convolving an image with Gaussian kernels, obeys a number of special properties, that ensure that the transformation from any finer scale level to any coarser scale level is guaranteed to always correspond to a simplification of the image information:

- Non-creation of local extrema: For any one-dimensional signal f, it can be shown that the number of local extrema in the 1-D Gaussian scale-space representation at any coarser scale \(s_2\) is guaranteed to not be higher than the number of local extrema at any finer scale \(s_1 < s_2\).
- Non-enhancement of local extrema: For any N-dimensional signal, it can be shown that the derivative of the scale-space representation with respect to the scale parameter \(\partial _s L\) is guaranteed to obey \(\partial _s L \le 0\) at any local spatial maximum point and \(\partial _s L \ge 0\) at any local spatial minimum point. In this respect, the Gaussian convolution operation has a strong smoothing effect.

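In fact, the Gaussian kernel can be singled out from axiomatic derivations as the unique choice of smoothing kernel having these properties, if combined with the requirement of a semi-group property over scales

$$\begin{aligned} g_{2D}(\cdot , \cdot ;\; s_1) * g_{2D}(\cdot , \cdot ;\; s_2) = g_{2D}(\cdot , \cdot ;\; s_1 + s_2) \end{aligned} \tag{4}$$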

and certain regularity assumptions, see Theorem 5 in [43], Theorem 3.25 in [44] and Theorem 5 in [50] for more specific statements.

For related treatments about theoretically principled scale-space axiomatics, see also Koenderink [34], Babaud et al. [4], Yuille and Poggio [103], Koenderink and van Doorn [36], Pauwels et al. [68], Lindeberg [47], Weickert et al. [96] and Duits et al. [20].

2.1.2 Cascade Smoothing Property

Due to the semi-group property, it follows that the scale-space representation at any coarser scale \(L(x, y;\; s_2)\) can be obtained by convolving the scale-space representation at any finer scale \(L(x, y;\; s_1)\) with a Gaussian kernel parameterized by the scale difference \(s_2 - s_1\):

$$\begin{aligned} L(\cdot , \cdot ;\; s_2) = g_{2D}(\cdot , \cdot ;\; s_2 - s_1) * L(\cdot , \cdot ;\; s_1). \end{aligned} \tag{5}$$

This form of cascade smoothing property is an essential property of a scale-space representation, since it implies that the transformation from any finer scale level \(s_1\) to any coarser scale level \(s_2\) will always be a simplifying transformation, provided that the convolution kernel used for the cascade smoothing operation corresponds to a simplifying transformation.

2.1.3 Spatial Averaging

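The Gaussian kernel is non-negative

$$\begin{aligned} g_{2D}(x, y;\; s) \ge 0 \end{aligned} \tag{6}$$

and normalized to unit \(L_1\)-norm

$$\begin{aligned} \int _{(x, y) \in {{\mathbb {R}}}^2} g_{2D}(x, y;\; s) \, dx \, dy = 1. \end{aligned} \tag{7}$$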

In these respects, Gaussian smoothing corresponds to a spatial averaging process, which constitutes one of the desirable attributes of a smoothing process intended to reflect different spatial scales in image data.

2.1.4 Separable Gaussian Convolution

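Due to the separability of the 2-D Gaussian kernel

$$\begin{aligned} g_{2D}(x, y;\; s) = g(x;\; s) \, g(y;\; s), \end{aligned} \tag{8}$$

where the 1-D Gaussian kernel is of the form

$$\begin{aligned} g(x;\; s) = \frac{1}{\sqrt{2 \pi s}} \, e^{-x^2/2s}, \end{aligned} \tag{9}$$

the 2-D Gaussian convolution operation (2) can also be written as two separable 1-D convolutions of the form

$$\begin{aligned} L(x, y;\; s) = \int _{\xi \in {{\mathbb {R}}}} g(\xi ;\; s) \left( \int _{\eta \in {{\mathbb {R}}}} g(\eta ;\; s) \, f(x - \xi , y - \eta ) \, d\eta \right) d\xi . \end{aligned} \tag{10}$$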

Methods that implement Gaussian convolution in terms of explicit discrete convolutions usually exploit this separability property, since if the Gaussian kernel is truncated (see Footnote 2) at the tails for \(x = \pm N\), the computational work for separable convolution will be of the order

$$\begin{aligned} W_{\text{sep}} = 2 \, (2 N + 1) \end{aligned} \tag{11}$$

per image pixel, whereas it would be of order

$$\begin{aligned} W_{\text{non-sep}} = (2 N + 1)^2 \end{aligned} \tag{12}$$

for non-separable 2-D convolution.
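The difference in computational work can be illustrated by a minimal sketch, assuming an image stored as a plain Python list of rows, zero padding outside the image domain and a sampled Gaussian kernel truncated at \(\pm N\). The helper names `truncated_kernel`, `convolve_rows`, `separable_smooth` and `work_per_pixel` are hypothetical and introduced only for this illustration:

```python
import math

def gaussian_1d(x, s):
    """Continuous 1-D Gaussian with variance s, evaluated at x."""
    return math.exp(-x * x / (2.0 * s)) / math.sqrt(2.0 * math.pi * s)

def truncated_kernel(s, N):
    """Sampled 1-D Gaussian truncated at |n| <= N (illustrative choice)."""
    return [gaussian_1d(n, s) for n in range(-N, N + 1)]

def convolve_rows(image, kernel):
    """Convolve every row with a 1-D kernel, assuming zeros outside the image."""
    N = len(kernel) // 2
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for k in range(-N, N + 1):
                if 0 <= x - k < width:
                    acc += kernel[k + N] * image[y][x - k]
            out[y][x] = acc
    return out

def transpose(image):
    return [list(column) for column in zip(*image)]

def separable_smooth(image, s, N):
    """Two 1-D passes: first along the rows, then along the columns."""
    kernel = truncated_kernel(s, N)
    rows_done = convolve_rows(image, kernel)
    return transpose(convolve_rows(transpose(rows_done), kernel))

def work_per_pixel(N):
    """Multiplications per pixel: (separable, non-separable)."""
    return 2 * (2 * N + 1), (2 * N + 1) ** 2

# Impulse response: smoothing a unit impulse returns the 2-D kernel itself.
impulse = [[0.0] * 9 for _ in range(9)]
impulse[4][4] = 1.0
smoothed = separable_smooth(impulse, s=1.0, N=4)
print(smoothed[4][4], work_per_pixel(4))
```

For a truncation bound of \(N = 4\), the separable implementation needs 18 multiplications per pixel, compared to 81 for a non-separable 2-D convolution.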

2.2 Modelling Situation for Theoretical Analysis of Different Approaches for Implementing Gaussian Smoothing Discretely

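From now on, we will, for simplicity, only consider the case with 1-D Gaussian convolutions of the form

$$\begin{aligned} L(x;\; s) = \int _{\xi \in {{\mathbb {R}}}} g(\xi ;\; s) \, f(x - \xi ) \, d\xi , \end{aligned} \tag{13}$$

which are to be implemented in terms of discrete convolutions of the form

$$\begin{aligned} L(x;\; s) = \sum _{n \in {{\mathbb {Z}}}} T(n;\; s) \, f(x - n) \end{aligned} \tag{14}$$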

for some family of discrete filter kernels \(T(n;\; s)\).

2.2.1 Measures of the Spatial Extent of Smoothing Kernels

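The spatial extent of these 1-D kernels can be described by the scale parameter s, which represents the spatial variance of the convolution kernel

$$\begin{aligned} V(g(\cdot ;\; s)) = \frac{\int _{x \in {{\mathbb {R}}}} x^2 \, g(x;\; s) \, dx}{\int _{x \in {{\mathbb {R}}}} g(x;\; s) \, dx} - \left( \frac{\int _{x \in {{\mathbb {R}}}} x \, g(x;\; s) \, dx}{\int _{x \in {{\mathbb {R}}}} g(x;\; s) \, dx} \right) ^2 = s, \end{aligned} \tag{15}$$

and which can also be parameterized in terms of the standard deviation

$$\begin{aligned} \sigma = \sqrt{s}. \end{aligned} \tag{16}$$

For the discrete kernels, the spatial variance is correspondingly measured as

$$\begin{aligned} V(T(\cdot ;\; s)) = \frac{\sum _{n \in {{\mathbb {Z}}}} n^2 \, T(n;\; s)}{\sum _{n \in {{\mathbb {Z}}}} T(n;\; s)} - \left( \frac{\sum _{n \in {{\mathbb {Z}}}} n \, T(n;\; s)}{\sum _{n \in {{\mathbb {Z}}}} T(n;\; s)} \right) ^2. \end{aligned} \tag{17}$$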
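A minimal sketch of such a discrete variance measure is given below, assuming that the kernel is passed as a finite list of weights together with the integer positions at which they apply, and that the variance is normalized by the sum of the weights. The function name `kernel_variance` is hypothetical:

```python
def kernel_variance(weights, positions):
    """Spatial variance of a discrete kernel, normalized by the sum of its weights."""
    total = sum(weights)
    mean = sum(n * w for n, w in zip(positions, weights)) / total
    second_moment = sum(n * n * w for n, w in zip(positions, weights)) / total
    return second_moment - mean * mean

# The 3-tap binomial kernel (1/4, 1/2, 1/4) has variance 1/2.
binomial = [0.25, 0.5, 0.25]
print(kernel_variance(binomial, [-1, 0, 1]))
```

Because of the normalization by the sum of the weights, this measure is unaffected by a uniform rescaling of the kernel amplitude.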

Fig. 1 Graphs of the main types of Gaussian smoothing kernels and Gaussian derivative kernels considered in this paper, here at the scale \(\sigma = 1\), with the raw smoothing kernels in the top row and the order of spatial differentiation increasing downwards up to order 4: (left column) continuous Gaussian kernels and continuous Gaussian derivatives, (middle left column) sampled Gaussian kernels and sampled Gaussian derivatives, (middle right column) integrated Gaussian kernels and integrated Gaussian derivatives, and (right column) discrete Gaussian kernels and discrete analogues of Gaussian derivatives. Note that the scaling of the vertical axis may vary between the different subfigures. (Horizontal axis: the 1-D spatial coordinate \(x \in [-5, 5]\))

2.3 The Sampled Gaussian Kernel

The presumably simplest approach for discretizing the 1-D Gaussian convolution integral (13) in terms of a discrete convolution of the form (14) is by choosing the discrete kernel \(T(n;\; s)\) as the sampled Gaussian kernel

$$\begin{aligned} T_{\text{sampl}}(n;\; s) = g(n;\; s). \end{aligned} \tag{18}$$

While this choice is easy to implement in practice, there are, however, three major conceptual problems with using such a discretization at very fine scales:

- the filter coefficients may not be limited to the interval [0, 1],
- the sum of the filter coefficients may become substantially greater than 1, and
- the resulting filter kernel may have too narrow a shape, in the sense that the spatial variance of the discrete kernel \(V(T_{\text{ sampl }}(\cdot ;\; s))\) is substantially smaller than the spatial variance \(V(g(\cdot ;\; s))\) of the continuous Gaussian kernel.

The first two problems imply that the resulting discrete spatial smoothing kernel is no longer a spatial weighted averaging kernel in the sense of Sect. 2.1.3, which implies problems, if attempting to interpret the result of convolutions with the sampled Gaussian kernels as reflecting different spatial scales. The third problem implies that there will not be a direct match between the value of the scale parameter provided as argument to the sampled Gaussian kernel and the scales that the discrete kernel would reflect in the image data.

Figures 2 and 3 show numerical characterizations of these entities for a range of small values of the scale parameter.
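These three effects can be reproduced with a minimal sketch, assuming a unit grid spacing, a kernel truncated at \(|n| \le 10\) and the variance-based parameterization \(s = \sigma ^2\). The function names `sampled_gaussian` and `spatial_variance` are hypothetical:

```python
import math

def sampled_gaussian(s, N):
    """Sampled Gaussian kernel g(n; s) for n = -N..N, with variance parameter s."""
    norm = 1.0 / math.sqrt(2.0 * math.pi * s)
    return [norm * math.exp(-n * n / (2.0 * s)) for n in range(-N, N + 1)]

def spatial_variance(kernel):
    """Variance of a kernel centred at index len(kernel) // 2, normalized by its sum."""
    N = len(kernel) // 2
    positions = range(-N, N + 1)
    total = sum(kernel)
    mean = sum(n * w for n, w in zip(positions, kernel)) / total
    second = sum(n * n * w for n, w in zip(positions, kernel)) / total
    return second - mean * mean

for sigma in (0.3, 0.5, 0.75, 1.0):
    s = sigma * sigma
    kernel = sampled_gaussian(s, N=10)
    print(f"sigma={sigma}: max={max(kernel):.4f} "
          f"sum={sum(kernel):.4f} variance={spatial_variance(kernel):.4f} (s={s:.4f})")
```

At \(\sigma = 0.3\), the central coefficient and the sum of the coefficients both exceed 1, while the measured variance is far below \(s = 0.09\). At \(\sigma = 1\), all three quantities are very close to their continuous counterparts.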

More fundamentally, it can be shown (see Section VII.A in Lindeberg [43]) that convolution with the sampled Gaussian kernel is guaranteed to not increase the number of local extrema (or zero-crossings) in the signal from the input signal to any coarser level of scale. The transformation from an arbitrary scale level to some other arbitrary coarser scale level is, however, not guaranteed to obey such a simplification property between any pair of scale levels. In this sense, convolutions with sampled Gaussian kernels do not truly obey non-creation of local extrema from finer to coarser levels of scale, in the sense described in Sect. 2.1.1.

2.4 The Normalized Sampled Gaussian Kernel

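A straightforward, but ad hoc, way of avoiding the problem that the sum of the discrete filter coefficients may exceed 1 for small values of the scale parameter is by normalizing the sampled Gaussian kernel with its discrete \(l_1\)-norm:

$$\begin{aligned} T_{\text{normsampl}}(n;\; s) = \frac{g(n;\; s)}{\sum _{m \in {{\mathbb {Z}}}} g(m;\; s)}. \end{aligned} \tag{19}$$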

By definition, we in this way avoid the problem that the regular sampled Gaussian kernel is not a spatial weighted averaging kernel in the sense of Sect. 2.1.3.

The problem that the spatial variance of the discrete kernel \(V(T_{\text{ normsampl }}(\cdot ;\; s))\) is substantially smaller than the spatial variance \(V(g(\cdot ;\; s))\) of the continuous Gaussian kernel will, however, persist, since the variance of a kernel is not affected by a uniform scaling of its amplitude values. In this sense, the resulting discrete kernels will not, for small scale values, accurately reflect the spatial scale corresponding to the scale parameter s provided as argument.
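As a brief illustration, the following sketch normalizes a truncated sampled Gaussian kernel by its discrete \(l_1\)-norm and measures its variance, assuming a unit grid spacing and truncation at \(|n| \le 10\). The function names are hypothetical:

```python
import math

def normalized_sampled_gaussian(s, N):
    """Sampled Gaussian for n = -N..N, divided by the sum of its coefficients."""
    # The constant factor 1/sqrt(2 pi s) cancels in the normalization.
    raw = [math.exp(-n * n / (2.0 * s)) for n in range(-N, N + 1)]
    total = sum(raw)
    return [w / total for w in raw]

def spatial_variance(kernel):
    """Variance of a symmetric kernel centred at index len(kernel) // 2."""
    N = len(kernel) // 2
    total = sum(kernel)
    return sum(n * n * w for n, w in zip(range(-N, N + 1), kernel)) / total

s = 0.3 ** 2
kernel = normalized_sampled_gaussian(s, N=10)
print(sum(kernel), max(kernel), spatial_variance(kernel), s)
```

The coefficients now sum to 1 and lie in the interval [0, 1], but the measured variance at \(\sigma = 0.3\) is still more than an order of magnitude smaller than \(s = 0.09\).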

2.5 The Integrated Gaussian Kernel

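A possibly better way of enforcing the weights of the filter kernels to sum up to 1 is by instead letting the discrete kernel be determined by the integral of the continuous Gaussian kernel over each pixel support region [44, Equation (3.89)]

$$\begin{aligned} T_{\text{int}}(n;\; s) = \int _{x = n - 1/2}^{n + 1/2} g(x;\; s) \, dx, \end{aligned} \tag{20}$$

which in terms of the scaled error function \({\text {erg}}(x;\; s)\) can be expressed as

$$\begin{aligned} T_{\text{int}}(n;\; s) = {\text {erg}}\left( n + \tfrac{1}{2};\; s\right) - {\text {erg}}\left( n - \tfrac{1}{2};\; s\right) \end{aligned} \tag{21}$$

with

$$\begin{aligned} {\text {erg}}(x;\; s) = \frac{1}{2} \left( 1 + {\text {erf}}\left( \frac{x}{\sqrt{2 s}} \right) \right) , \end{aligned} \tag{22}$$

where \({\text {erf}}(x)\) denotes the regular error function according to

$$\begin{aligned} {\text {erf}}(x) = \frac{2}{\sqrt{\pi }} \int _{t = 0}^{x} e^{-t^2} \, dt. \end{aligned} \tag{23}$$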

A conceptual argument for defining the integrated Gaussian kernel model is that we may, given a discrete signal f(n), define a continuous signal \({\tilde{f}}(x)\) by letting the values of the signal in each pixel support region be equal to the value of the corresponding discrete signal, see Appendix A.2 for an explicit derivation. In this sense, there is a possible physical motivation for using this form of scale-space discretization.

By the continuous Gaussian kernel having its integral equal to 1, it follows that the sum of the discrete filter coefficients will over an infinite spatial domain also be exactly equal to 1. Furthermore, the discrete filter coefficients are also guaranteed to be in the interval [0, 1]. In these respects, the resulting discrete kernels will represent a true spatial weighting process, in the sense of Sect. 2.1.3.

Concerning the spatial variances \(V(T_{\text{ int }}(\cdot ;\; s))\) of the resulting discrete kernels, they will also for smaller scale values be closer to the spatial variances \(V(g(\cdot ;\; s))\) of the continuous Gaussian kernel, than for the sampled Gaussian kernel or the normalized sampled Gaussian kernel, as shown in Figs. 3 and 4. For larger scale values, the box integration over each pixel support region will, on the other hand, introduce a scale offset, which for larger values of the scale parameter s approaches

$$\begin{aligned} \Delta s_{\text{int}} = \frac{1}{12} \approx 0.0833, \end{aligned} \tag{24}$$

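which, in turn, corresponds to the spatial variance of a continuous box filter over each pixel support region, defined by

$$\begin{aligned} w_{\text{box}}(x) = \left\{ \begin{array}{ll} 1 &{} \text{if } |x| \le \frac{1}{2}, \\ 0 &{} \text{otherwise,} \end{array} \right. \end{aligned} \tag{25}$$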

and which is used for defining the integrated Gaussian kernel from the continuous Gaussian kernel in (20).
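As a brief check of this value, assuming a unit grid spacing and writing \(w_{\text{box}}\) for the box filter over the pixel support region \([-1/2, 1/2]\) (a notation assumed here), the variance of this zero-mean box filter can be worked out as

$$\begin{aligned} V(w_{\text{box}}) = \int _{x = -1/2}^{1/2} x^2 \, dx = \left[ \frac{x^3}{3} \right] _{-1/2}^{1/2} = \frac{1}{24} + \frac{1}{24} = \frac{1}{12} \approx 0.0833. \end{aligned}$$

Since variances add under convolution of non-negative kernels with unit integral, the continuous function \(g(\cdot ;\; s) * w_{\text{box}}\), whose samples at the integer positions constitute the integrated Gaussian kernel, has variance \(s + 1/12\), which is consistent with the offset stated above.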

Figure 3 shows a numerical characterization of the difference in scale values between the variance \(V(T_{\text{ int }}(n;\; s))\) of the discrete integrated Gaussian kernel and the scale parameter s provided as argument to this function.

In terms of theoretical scale-space properties, it can be shown that the transformation from the input signal to any coarser scale always implies a simplification, in the sense that the number of local extrema (or zero-crossings) at any coarser level of scale is guaranteed to not exceed the number of local extrema (or zero-crossings) in the input signal (see Section 3.6.3 in Lindeberg [44]). The transformation from any finer scale level to any coarser scale level will, however, not be guaranteed to obey such a simplification property. In this respect, the integrated Gaussian kernel does not fully represent a discrete scale-space transformation, in the sense of Sect. 2.1.1.
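The numerical properties of the integrated Gaussian kernel described in this section can be explored with a minimal sketch based on the error function `math.erf` from the Python standard library, assuming a unit grid spacing and a pixel support region of \([n - 1/2, n + 1/2]\). The function names `erg`, `integrated_gaussian` and `spatial_variance` are hypothetical helper names:

```python
import math

def erg(x, s):
    """Scaled error function: the integral of the Gaussian g(.; s) from -inf to x."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0 * s)))

def integrated_gaussian(s, N):
    """Integral of g(.; s) over each pixel support region [n - 1/2, n + 1/2]."""
    return [erg(n + 0.5, s) - erg(n - 0.5, s) for n in range(-N, N + 1)]

def spatial_variance(kernel):
    """Variance of a symmetric kernel centred at index len(kernel) // 2."""
    N = len(kernel) // 2
    total = sum(kernel)
    return sum(n * n * w for n, w in zip(range(-N, N + 1), kernel)) / total

for sigma in (0.3, 1.0, 2.0):
    s = sigma * sigma
    kernel = integrated_gaussian(s, N=30)
    offset = spatial_variance(kernel) - s
    print(f"sigma={sigma}: sum={sum(kernel):.12f} scale offset={offset:.6f}")
```

In this sketch, the coefficients sum to 1 up to rounding errors at all scales, and the scale offset approaches \(1/12\) already at \(\sigma = 2\), while being much smaller at \(\sigma = 0.3\).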

2.6 The Discrete Analogue of the Gaussian Kernel

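According to a genuinely discrete theory for spatial scale-space representation in Lindeberg [43], the discrete scale space is defined from discrete kernels of the form

$$\begin{aligned} T_{\text{disc}}(n;\; s) = e^{-s} I_n(s), \end{aligned} \tag{26}$$

where \(I_n(s)\) denote the modified Bessel functions of integer order (see [1]), which are related to the regular Bessel functions \(J_n(z)\) of the first kind according to

$$\begin{aligned} I_n(x) = i^{-n} \, J_n(i x) = e^{-\frac{n \pi i}{2}} \, J_n(e^{\frac{i \pi }{2}} x), \end{aligned} \tag{27}$$

and which for integer values of n, as we will restrict ourselves to here, can be expressed as

$$\begin{aligned} I_n(x) = \frac{1}{\pi } \int _{\theta = 0}^{\pi } e^{x \cos \theta } \, \cos (n \, \theta ) \, d\theta . \end{aligned} \tag{28}$$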

The discrete analogue of the Gaussian kernel \(T_{\text{ disc }}(n;\; s)\) does specifically have the practically useful properties that:

- the filter coefficients are guaranteed to be in the interval [0, 1],
- the filter coefficients sum up to 1 (see Equation (3.43) in [44])

  $$\begin{aligned} \sum _{n \in {{\mathbb {Z}}}} T_{\text{ disc }}(n;\; s) = 1, \end{aligned} \tag{29}$$

- the spatial variance of the discrete kernel is exactly equal to the scale parameter (see Equation (3.53) in [44])

  $$\begin{aligned} V(T_{\text{ disc }}(\cdot ;\; s)) = s. \end{aligned} \tag{30}$$

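These kernels do also exactly obey a semi-group property over spatial scales (see Equation (3.41) in [44])

$$\begin{aligned} T_{\text{ disc }}(\cdot ;\; s_1) * T_{\text{ disc }}(\cdot ;\; s_2) = T_{\text{ disc }}(\cdot ;\; s_1 + s_2), \end{aligned}$$(31)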

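which implies that the resulting discrete scale-space representation also obeys an exact cascade smoothing property

$$\begin{aligned} L(\cdot ;\; s_2) = T_{\text{ disc }}(\cdot ;\; s_2 - s_1) * L(\cdot ;\; s_1). \end{aligned}$$(32)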

More fundamentally, these discrete kernels do furthermore preserve scale-space properties to the discrete domain, in the sense that:

- the number of local extrema (or zero-crossings) at a coarser scale is guaranteed to not exceed the number of local extrema (or zero-crossings) at any finer scale,

- the resulting discrete scale-space representation is guaranteed to obey non-enhancement of local extrema, in the sense that the value at any local maximum is guaranteed to not increase with increasing scale, and that the value at any local minimum is guaranteed to not decrease with increasing scale.

In these respects, the discrete analogue of the Gaussian kernel obeys all the desirable theoretical properties of a discrete scale-space representation, corresponding to discrete analogues of the theoretical properties of the Gaussian scale-space representation stated in Sect. 2.1.

Specifically, the theoretical properties of the discrete analogue of the Gaussian kernel are better than the theoretical properties of the sampled Gaussian kernel, the normalized sampled Gaussian kernel or the integrated Gaussian kernel.
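The properties listed above can be checked numerically. The following is a minimal sketch in Python, assuming the standard form \(T_{\text{ disc }}(n;\; s) = e^{-s} I_n(s)\) of the discrete analogue of the Gaussian kernel and evaluating \(I_n(s)\) from its power series, which is adequate for moderate values of s. The helper names `bessel_i`, `discrete_gaussian`, `variance` and `convolve`, as well as the truncation radius, are illustrative choices and are not taken from the paper.

```python
import math

def bessel_i(n, s, max_terms=500):
    """Modified Bessel function I_n(s) of integer order, from its power series."""
    n = abs(n)                                # I_{-n}(s) = I_n(s) for integer n
    half = s / 2.0
    term = half ** n / math.factorial(n)      # k = 0 term of the series
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + n))   # ratio between consecutive terms
        total += term
        if term <= 1e-17 * total:
            break
    return total

def discrete_gaussian(s, radius):
    """T_disc(n; s) = exp(-s) I_n(s) for n = -radius, ..., radius."""
    return [math.exp(-s) * bessel_i(n, s) for n in range(-radius, radius + 1)]

def variance(kernel):
    """Spatial variance of a kernel stored symmetrically around n = 0."""
    radius = len(kernel) // 2
    ns = range(-radius, radius + 1)
    total = sum(kernel)
    mean = sum(n * t for n, t in zip(ns, kernel)) / total
    return sum((n - mean) ** 2 * t for n, t in zip(ns, kernel)) / total

def convolve(a, b):
    """Full discrete convolution of two finite kernels."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

# Illustrative scale values (assumptions, not values from the paper).
s1, s2, radius = 1.0, 3.0, 20
k1 = discrete_gaussian(s1, radius)
k2 = discrete_gaussian(s2, radius)
k12 = discrete_gaussian(s1 + s2, 2 * radius)

# Largest deviation from the semi-group property T(s1) * T(s2) = T(s1 + s2).
semigroup_gap = max(abs(u - v) for u, v in zip(convolve(k1, k2), k12))
print(sum(k12), variance(k12), semigroup_gap)
```

Up to truncation and rounding errors, the printed sum should be close to 1, the variance close to s1 + s2, and the semi-group gap close to zero. For large values of s, a numerically more robust evaluation of the Bessel functions would be preferable to the plain power series used here.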

2.6.1 Diffusion Equation Interpretation of the Genuinely Discrete Scale-Space Representation Concept

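In terms of diffusion equations, the discrete scale-space representation generated by convolving a 1-D discrete signal f by the discrete analogue of the Gaussian kernel according to (26)

$$\begin{aligned} L(x;\; s) = \sum _{n \in {{\mathbb {Z}}}} T_{\text{ disc }}(n;\; s) \, f(x - n) \end{aligned}$$(33)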

satisfies the semi-discrete 1-D diffusion equation (see Theorem 3.28 in [44])

$$\begin{aligned} \partial _s L = \frac{1}{2} \, \delta _{xx} L \end{aligned}$$(34)

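with initial condition \(L(x;\; 0) = f(x)\), where \(\delta _{xx}\) denotes the second-order discrete difference operator

$$\begin{aligned} (\delta _{xx} L)(x;\; s) = L(x + 1;\; s) - 2 L(x;\; s) + L(x - 1;\; s). \end{aligned}$$(35)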

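Over a 2-D discrete spatial domain, the discrete scale-space representation of an image f(x, y), generated by separable convolution with the discrete analogue of the Gaussian kernel

$$\begin{aligned} L(x, y;\; s) = \sum _{m \in {{\mathbb {Z}}}} T_{\text{ disc }}(m;\; s) \sum _{n \in {{\mathbb {Z}}}} T_{\text{ disc }}(n;\; s) \, f(x - m, y - n), \end{aligned}$$(36)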

satisfies the semi-discrete 2-D diffusion equation (see Proposition 4.14 in [44])

$$\begin{aligned} \partial _s L = \frac{1}{2} \, \nabla _5^2 L \end{aligned}$$(37)

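with initial condition \(L(x, y;\; 0) = f(x, y)\), where \(\nabla _5^2\) denotes the following discrete approximation of the Laplacian operator

$$\begin{aligned} (\nabla _5^2 L)(x, y;\; s) = L(x + 1, y;\; s) + L(x - 1, y;\; s) + L(x, y + 1;\; s) + L(x, y - 1;\; s) - 4 L(x, y;\; s). \end{aligned}$$(38)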

In this respect, the discrete scale-space representation generated by convolution with the discrete analogue of the Gaussian kernel can be seen as a purely spatial discretization of the continuous diffusion equation (3), which can serve as an equivalent way of defining the continuous scale-space representation.
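As a numerical illustration of the 1-D case, the discrete analogue of the Gaussian kernel is itself the solution for a discrete delta function as input, so it should satisfy the semi-discrete diffusion equation. The sketch below assumes the form \(\partial _s L = \frac{1}{2} \delta _{xx} L\) with \(\delta _{xx}\) the three-point operator (1, -2, 1), and approximates the derivative with respect to s by a central difference with a hypothetical step size `h`. The function names are illustrative only.

```python
import math

def bessel_i(n, s, max_terms=500):
    """Modified Bessel function I_n(s) of integer order, from its power series."""
    n = abs(n)
    half = s / 2.0
    term = half ** n / math.factorial(n)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + n))
        total += term
        if term <= 1e-17 * total:
            break
    return total

def t_disc(n, s):
    """Discrete analogue of the Gaussian kernel, assumed as exp(-s) I_n(s)."""
    return math.exp(-s) * bessel_i(n, s)

def diffusion_residual(n, s, h=1e-4):
    """Residual of d/ds T = (1/2) delta_xx T at position n and scale s."""
    # Central difference approximation of the derivative with respect to s.
    d_s = (t_disc(n, s + h) - t_disc(n, s - h)) / (2.0 * h)
    # Second-order spatial difference operator (1, -2, 1).
    d_xx = t_disc(n + 1, s) - 2.0 * t_disc(n, s) + t_disc(n - 1, s)
    return d_s - 0.5 * d_xx

# Largest residual over a few positions at the illustrative scale s = 1.
worst = max(abs(diffusion_residual(n, 1.0)) for n in range(-5, 6))
print(worst)
```

The residual is limited by the finite step size in s, not by the kernel itself, so it should be small but not exactly zero.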

2.7 Performance Measures for Quantifying Deviations from Theoretical Properties of Discretizations of Gaussian Kernels

To quantify the deviations between properties of the discrete kernels, and desirable properties of the discrete kernels that are to transfer the desirable properties of a continuous scale-space representation to a corresponding discrete implementation, we will in this section quantify such deviations in terms of the following error measures:

- Normalization error: The difference between the \(l_1\)-norm of the discrete kernels and the desirable unit \(l_1\)-norm normalization will be measured by (see Footnote 3)

$$\begin{aligned} E_{\text{ norm }}(T(\cdot ;\; s)) = \sum _{n \in {{\mathbb {Z}}}} T(n;\; s) - 1. \end{aligned}$$(39)

- Absolute scale difference: The difference between the variance of the discrete kernel and the argument of the scale parameter will be measured by

$$\begin{aligned} E_{\varDelta s}(T(\cdot ;\; s)) = V(T(\cdot ;\; s)) - s. \end{aligned}$$(40)

This error measure is expressed in absolute units of the scale parameter. The reason why we express this measure in units of the variance of the discretizations of the Gaussian kernel is that variances are additive under convolutions of non-negative kernels.

- Relative scale difference: The relative scale difference, between the actual standard deviation of the discrete kernel and the argument of the scale parameter, will be measured by

$$\begin{aligned} E_{\text{ relscale }}(T(\cdot ;\; s)) = \sqrt{\frac{V(T(\cdot ;\; s))}{s}} - 1. \end{aligned}$$(41)

This error measure is expressed in relative units of the scale parameter (see Footnote 4). The reason why we express this entity in units of the standard deviations of the discretizations of the Gaussian kernels is that these standard deviations correspond to interpretations of the scale parameter in units of \([\text{ length}]\), in a way that is thus proportional to the scale level.

- Cascade smoothing error: The deviation from the cascade smoothing property of a scale-space kernel according to (5) and the actual result of convolving a discrete approximation of the scale-space representation at a given scale s, with its corresponding discretization of the Gaussian kernel, will be measured by

$$\begin{aligned} E_{\text{ cascade }}(T(\cdot ;\; s)) = \frac{\Vert T(\cdot ;\; s) * T(\cdot ;\; s) - T(\cdot ;\; 2s) \Vert _1}{\Vert T(\cdot ;\; 2s) \Vert _1}. \end{aligned}$$(42)

While this measure of the cascade smoothing error could in principle instead be formulated for arbitrary relations between the scale level of the discrete approximation of the scale-space representation and the amount of additive spatial smoothing, we fix these scale levels to be equal for the purpose of conceptual simplicity (see Footnote 5).

In the ideal theoretical case, all of these error measures should be equal to zero (up to numerical errors in the discrete computations). Any deviations from zero of these error measures do therefore represent a quantification of deviations from desirable theoretical properties in a discrete approximation of the Gaussian smoothing operation.
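The four error measures (39)-(42) can be evaluated directly for a truncated kernel. The following minimal sketch in Python does so for the sampled Gaussian kernel, assuming that the variance \(V\) is normalized by the sum of the kernel values. The function names, the truncation radii and the two test scales are illustrative assumptions, not choices made in the paper.

```python
import math

def sampled_gaussian(s, radius):
    """Sampled Gaussian kernel g(n; s) with variance s, n = -radius..radius."""
    return [math.exp(-n * n / (2.0 * s)) / math.sqrt(2.0 * math.pi * s)
            for n in range(-radius, radius + 1)]

def variance(kernel):
    """Spatial variance of a kernel stored symmetrically around n = 0."""
    radius = len(kernel) // 2
    ns = range(-radius, radius + 1)
    total = sum(kernel)
    mean = sum(n * t for n, t in zip(ns, kernel)) / total
    return sum((n - mean) ** 2 * t for n, t in zip(ns, kernel)) / total

def convolve(a, b):
    """Full discrete convolution of two finite kernels."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def error_measures(kernel_fn, s, radius):
    """Return (E_norm, E_delta_s, E_relscale, E_cascade) at scale s."""
    k = kernel_fn(s, radius)
    v = variance(k)
    e_norm = sum(k) - 1.0                      # Eq. (39)
    e_delta_s = v - s                          # Eq. (40)
    e_relscale = math.sqrt(v / s) - 1.0        # Eq. (41)
    k2 = kernel_fn(2.0 * s, 2 * radius)        # same support as k * k
    kk = convolve(k, k)
    e_cascade = (sum(abs(u - w) for u, w in zip(kk, k2))
                 / sum(abs(w) for w in k2))    # Eq. (42)
    return e_norm, e_delta_s, e_relscale, e_cascade

fine = error_measures(sampled_gaussian, 0.3 ** 2, 10)    # sigma = 0.3
coarse = error_measures(sampled_gaussian, 2.0 ** 2, 30)  # sigma = 2.0
print(fine)
print(coarse)
```

Any other discretization can be passed as `kernel_fn`, provided that it returns a kernel sampled symmetrically on the integers from `-radius` to `radius`.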

Fig. 2 Graphs of the \(l_1\)-norm-based normalization error \(E_{\text{ norm }}(T(\cdot ;\; s))\), according to (39), for the discrete analogue of the Gaussian kernel, the sampled Gaussian kernel and the integrated Gaussian kernel. Note that this error measure is equal to zero for all of the discrete analogue of the Gaussian kernel, the normalized sampled Gaussian kernel and the integrated Gaussian kernel. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

Fig. 3 Graphs of the spatial standard deviations \(\sqrt{V(T(\cdot ;\; s))}\) for the discrete analogue of the Gaussian kernel, the sampled Gaussian kernel and the integrated Gaussian kernel. The standard deviation is exactly equal to the scale parameter \(\sigma = \sqrt{s}\) for the discrete analogue of the Gaussian kernel. The standard deviation of the normalized sampled Gaussian kernel is equal to the standard deviation of the regular sampled Gaussian kernel. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

Fig. 4 Graphs of the absolute scale difference \(E_{\varDelta s}(T(\cdot ;\; s))\), according to (40) and in units of the spatial variance \(V(T(\cdot ;\; s))\), for the discrete analogue of the Gaussian kernel, the sampled Gaussian kernel and the integrated Gaussian kernel. This scale difference is exactly equal to zero for the discrete analogue of the Gaussian kernel. For scale values \(\sigma < 0.75\), the absolute scale difference is substantial for the sampled Gaussian kernel, and then rapidly tends to zero for larger scales. For the integrated Gaussian kernel, the absolute scale difference does, however, not approach zero with increasing scale. Instead, it approaches the numerical value \(\varDelta s \approx 0.0833\), close to the spatial variance 1/12 of a box filter over each pixel support region. The spatial variance-based absolute scale difference for the normalized sampled Gaussian kernel is equal to the spatial variance-based absolute scale difference for the regular sampled Gaussian kernel. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

Fig. 5 Graphs of the relative scale difference \(E_{\text{ relscale }}(T(\cdot ;\; s))\), according to (41) and in units of the spatial standard deviation of the discrete kernels, for the discrete analogue of the Gaussian kernel, the sampled Gaussian kernel and the integrated Gaussian kernel. This relative scale error is exactly equal to zero for the discrete analogue of the Gaussian kernel. For scale values \(\sigma < 0.75\), the relative scale difference is substantial for the sampled Gaussian kernel, and then rapidly tends to zero for larger scales. For the integrated Gaussian kernel, the relative scale difference is significantly larger, while approaching zero with increasing scale. The relative scale difference for the normalized sampled Gaussian kernel is equal to the relative scale difference for the regular sampled Gaussian kernel. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

Fig. 6 Graphs of the cascade smoothing error \(E_{\text{ cascade }}(T(\cdot ;\; s))\), according to (42), for the discrete analogue of the Gaussian kernel, the sampled Gaussian kernel, the integrated Gaussian kernel, as well as the normalized sampled Gaussian kernel. For exact numerical computations, this cascade smoothing error would be identically equal to zero for the discrete analogue of the Gaussian kernel. In the numerical implementation underlying these computations, there are, however, numerical errors of a low amplitude. For the sampled Gaussian kernel, the cascade smoothing error is very large for \(\sigma \le 0.5\), notable for \(\sigma < 0.75\), and then rapidly decreases with increasing scale. For the normalized sampled Gaussian kernel, the cascade smoothing error is for \(\sigma \le 0.5\) significantly lower than for the regular sampled Gaussian kernel. For the integrated Gaussian kernel, the cascade smoothing error is lower than for the sampled Gaussian kernel for \(\sigma \le 0.5\), while then decreasing substantially slower to zero than for the sampled Gaussian kernel. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

2.8 Numerical Quantifications of Performance Measures

In the following, we will show results of computing the above measures concerning desirable properties of discretizations of scale-space kernels for the cases of (i) the sampled Gaussian kernel, (ii) the integrated Gaussian kernel and (iii) the discrete analogue of the Gaussian kernel. Since the discretization effects are largest for small scale values, we will focus on the scale interval \(\sigma \in [0.1, 2.0]\), however, in a few cases extended to the scale interval \(\sigma \in [0.1, 4.0]\). (The reason for delimiting the scale parameter to the lower bound of \(\sigma \ge 0.1\) is to avoid the singularity at \(\sigma = 0\).)

2.8.1 Normalization Error

Figure 2 shows graphs of the \(l_1\)-norm-based normalization error \(E_{\text{ norm }}(T(\cdot ;\; s))\) according to (39) for the main classes of discretizations of Gaussian kernels. For the integrated Gaussian kernel, the discrete analogue of the Gaussian kernel and the normalized sampled Gaussian kernel, the normalization error is identically equal to zero. For \(\sigma \le 0.5\), the normalization error is, however, substantial for the regular sampled Gaussian kernel.

2.8.2 Standard Deviations of the Discrete Kernels

Figure 3 shows graphs of the standard deviations \(\sqrt{V(T(\cdot ;\; s))}\) for the different main types of discretizations of the Gaussian kernels, which constitutes a natural measure of their spatial extent. For the discrete analogue of the Gaussian kernel, the standard deviation of the discrete kernel is exactly equal to the value of the scale parameter in units of \(\sigma = \sqrt{s}\). For the sampled Gaussian kernel, the standard deviation is substantially lower than the value of the scale parameter in units of \(\sigma = \sqrt{s}\) for \(\sigma \le 0.5\). For the integrated Gaussian kernel, the standard deviation is for smaller values of the scale parameter closer to the desirable linear trend. For larger values of the scale parameter, the standard deviation of the discrete kernel is, however, notably higher than \(\sigma \).

2.8.3 Spatial Variance Offset of the Discrete Kernels

To quantify in a more detailed manner how the scale offset of the discrete approximations of Gaussian kernels depends upon the scale parameter, Fig. 4 shows graphs of the spatial variance-based scale difference measure \(E_{\varDelta s}(T(\cdot ;\; s))\) according to (40) for the different discretization methods. For the discrete analogue of the Gaussian kernel, the scale difference is exactly equal to zero. For the sampled Gaussian kernel, the scale difference measure differs significantly from zero for \(\sigma < 0.75\), while then rapidly approaching zero for larger scales. For the integrated Gaussian kernel, the variance-based scale difference measure does, however, not approach zero for larger scales. Instead, it approaches the numerical value \(\varDelta s \approx 0.0833\), close to the spatial variance 1/12 of a box filter over each pixel support region. The spatial variance-based scale difference for the normalized sampled Gaussian kernel is equal to the spatial variance-based scale difference for the regular sampled Gaussian kernel.
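The limiting offset of 1/12 for the integrated Gaussian kernel can be reproduced with a few lines of code. The sketch below, in Python, assumes that the integrated Gaussian kernel is the integral of the continuous Gaussian over each pixel support region \([n - 1/2, n + 1/2]\), evaluated with the error function. The function names and the default truncation radius are illustrative assumptions.

```python
import math

def integrated_gaussian(s, radius):
    """Integral of the continuous Gaussian over each pixel [n - 1/2, n + 1/2]."""
    scale = math.sqrt(2.0 * s)

    def cdf(x):
        # Cumulative distribution function of a Gaussian with variance s.
        return 0.5 * (1.0 + math.erf(x / scale))

    return [cdf(n + 0.5) - cdf(n - 0.5) for n in range(-radius, radius + 1)]

def variance(kernel):
    """Spatial variance of a kernel stored symmetrically around n = 0."""
    radius = len(kernel) // 2
    ns = range(-radius, radius + 1)
    total = sum(kernel)
    mean = sum(n * t for n, t in zip(ns, kernel)) / total
    return sum((n - mean) ** 2 * t for n, t in zip(ns, kernel)) / total

def scale_offset(s, radius=None):
    """Absolute scale difference V(T_int(.; s)) - s according to Eq. (40)."""
    if radius is None:
        radius = int(math.ceil(10.0 * math.sqrt(s))) + 5
    return variance(integrated_gaussian(s, radius)) - s

for sigma in (0.5, 1.0, 2.0, 4.0):
    print(sigma, scale_offset(sigma ** 2))
```

The offset arises because integrating over each pixel is equivalent to convolving the continuous Gaussian with a box function of unit width, whose variance 1/12 adds to the variance of the Gaussian.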

2.8.4 Spatial Standard-Deviation-Based Relative Scale Difference

Figure 5 shows the spatial standard-deviation-based relative scale difference \(E_{\text{ relscale }}(T(\cdot ;\; s))\) according to (41) for the main classes of discretizations of Gaussian kernels. This relative scale difference is exactly equal to zero for the discrete analogue of the Gaussian kernel. For scale values \(\sigma < 0.75\), the relative scale difference is substantial for the sampled Gaussian kernel, and then rapidly tends to zero for larger scales. For the integrated Gaussian kernel, the relative scale difference is significantly larger, while approaching zero with increasing scale. The relative scale difference for the normalized sampled Gaussian kernel is equal to the relative scale difference for the regular sampled Gaussian kernel.

2.8.5 Cascade Smoothing Error

Figure 6 shows the cascade smoothing error \(E_{\text{ cascade }}(T(\cdot ;\; s))\) according to (42) for the main classes of discretizations of Gaussian kernels, while here complemented also with results for the normalized sampled Gaussian kernel, since the results for the latter kernel are different than for the regular sampled Gaussian kernel.

For exact numerical computations, this cascade smoothing error should be identically equal to zero for the discrete analogue of the Gaussian kernel. In the numerical implementation underlying these computations, there are, however, numerical errors of a low amplitude. For the sampled Gaussian kernel, the cascade smoothing error is very large for \(\sigma \le 0.5\), notable for \(\sigma < 0.75\), and then rapidly decreases with increasing scale. For the normalized sampled Gaussian kernel, the cascade smoothing error is for \(\sigma \le 0.5\) significantly lower than for the regular sampled Gaussian kernel. For the integrated Gaussian kernel, the cascade smoothing error is lower than for the sampled Gaussian kernel for \(\sigma \le 0.5\), while then decreasing much slower than for the sampled Gaussian kernel.

2.9 Summary of the Characterization Results from the Theoretical Analysis and the Quantitative Performance Measures

To summarize the theoretical and the experimental results presented in this section, the discrete analogue of the Gaussian kernel stands out as having the best theoretical properties in the stated respects, out of the set of treated discretization methods for the Gaussian smoothing operation.

Which of the sampled Gaussian kernel and the integrated Gaussian kernel is preferable depends on whether one prioritizes the behaviour at very fine scales or at coarse scales. The integrated Gaussian kernel has a significantly better approximation of the theoretical properties at fine scales, whereas its variance-based scale offset implies significantly larger deviations from the desirable theoretical properties at coarser scales, compared to either the sampled Gaussian kernel or the normalized sampled Gaussian kernel. The normalized sampled Gaussian kernel has properties closer to the desirable properties than the regular sampled Gaussian kernel. If one would introduce complementary mechanisms to compensate for the scale offset of the integrated Gaussian kernel, that kernel could, however, also constitute a viable solution at coarser scales.

3 Discrete Approximations of Gaussian Derivative Operators

According to the theory by Koenderink and van Doorn [35, 36], Gaussian derivatives constitute a canonical family of operators to derive from a Gaussian scale-space representation. Such Gaussian derivative operators can be equivalently defined by either differentiating the Gaussian scale-space representation

$$\begin{aligned} L_{x^{\alpha } y^{\beta }}(x, y;\; s) = \partial _{x^{\alpha } y^{\beta }} L(x, y;\; s) \end{aligned}$$(43)

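or by convolving the input image by Gaussian derivative kernels

$$\begin{aligned} L_{x^{\alpha } y^{\beta }}(x, y;\; s) = \int _{\xi \in {{\mathbb {R}}}} \int _{\eta \in {{\mathbb {R}}}} g_{x^{\alpha } y^{\beta }}(\xi , \eta ;\; s) \, f(x - \xi , y - \eta ) \, d\xi \, d\eta , \end{aligned}$$(44)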

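where

$$\begin{aligned} g_{x^{\alpha } y^{\beta }}(x, y;\; s) = \partial _{x^{\alpha } y^{\beta }} g(x, y;\; s) \end{aligned}$$(45)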

and \(\alpha \) and \(\beta \) are non-negative integers.

3.1 Theoretical Properties of Gaussian Derivatives

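Due to the cascade smoothing property of the Gaussian smoothing operation, in combination with the commutative property of differentiation under convolution operations, it follows that the Gaussian derivative operators also satisfy a cascade smoothing property over scales:

$$\begin{aligned} L_{x^{\alpha } y^{\beta }}(\cdot , \cdot ;\; s_2) = g(\cdot , \cdot ;\; s_2 - s_1) * L_{x^{\alpha } y^{\beta }}(\cdot , \cdot ;\; s_1). \end{aligned}$$(46)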

Combined with the simplification property of the Gaussian kernel under increasing values of the scale parameter, it follows that the Gaussian derivative responses also obey such a simplifying property from finer to coarser levels of scale, in terms of (i) non-creation of new local extrema from finer to coarser levels of scale for 1-D signals, or (ii) non-enhancement of local extrema for image data over any number of spatial dimensions.

3.2 Separable Gaussian Derivative Operators

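By the separability of the Gaussian derivative kernels

$$\begin{aligned} g_{x^{\alpha } y^{\beta }}(x, y;\; s) = g_{x^{\alpha }}(x;\; s) \, g_{y^{\beta }}(y;\; s), \end{aligned}$$(47)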

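the 2-D Gaussian derivative response can also be written as a separable convolution of the form

$$\begin{aligned} L_{x^{\alpha } y^{\beta }}(x, y;\; s) = \int _{\xi \in {{\mathbb {R}}}} g_{x^{\alpha }}(\xi ;\; s) \left( \int _{\eta \in {{\mathbb {R}}}} g_{y^{\beta }}(\eta ;\; s) \, f(x - \xi , y - \eta ) \, d\eta \right) d\xi . \end{aligned}$$(48)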

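In analogy with the previous treatment of purely Gaussian convolution operations, we will henceforth, for simplicity, consider the case with 1-D Gaussian derivative convolutions of the form

$$\begin{aligned} L_{x^{\alpha }}(x;\; s) = \int _{\xi \in {{\mathbb {R}}}} g_{x^{\alpha }}(\xi ;\; s) \, f(x - \xi ) \, d\xi , \end{aligned}$$(49)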

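which are to be implemented in terms of discrete convolutions of the form

$$\begin{aligned} L_{x^{\alpha }}(x;\; s) = \sum _{n \in {{\mathbb {Z}}}} T_{x^{\alpha }}(n;\; s) \, f(x - n) \end{aligned}$$(50)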

for some family of discrete filter kernels \(T_{x^{\alpha }}(n;\; s)\).

3.2.1 Measures of the Spatial Extent of Gaussian Derivative or Derivative Approximation Kernels

The spatial extent (spread) of a Gaussian derivative operator \(g_{x^{\alpha }}(\xi ;\, s)\) of the form (49) will be measured by the variance of its absolute value

Explicit expressions for these spread measures computed for continuous Gaussian derivative kernels up to order 4 are given in Appendix A.4.

Correspondingly, the spatial extent of a discrete kernel \(T_{x^{\alpha }}(n;\; s)\) designed to approximate a Gaussian derivative operator will be measured by the entity

3.3 Sampled Gaussian Derivative Kernels

In analogy with the previous treatment for the sampled Gaussian kernel in Sect. 2.3, the presumably simplest way to discretize the Gaussian derivative convolution integral (49) is by letting the discrete filter coefficients in the discrete convolution operation (50) be determined as sampled Gaussian derivatives

$$\begin{aligned} T_{\text{ sampl },x^{\alpha }}(n;\; s) = g_{x^{\alpha }}(n;\; s). \end{aligned}$$(53)

Appendix A.1 describes how the Gaussian derivative kernels are related to the probabilistic Hermite polynomials and does also give explicit expressions for the 1-D Gaussian derivative kernels up to order 4.

For small values of the scale parameter, the resulting discrete kernels may, however, suffer from the following problems:

- the \(l_1\)-norms of the discrete kernels may deviate substantially from the \(L_1\)-norms of the corresponding continuous Gaussian derivative kernels (with explicit expressions for the \(L_1\)-norms of the continuous Gaussian derivative kernels up to order 4 given in Appendix A.3),

- the resulting filters may have a too narrow shape, in the sense that the spatial variance of the absolute value of the discrete kernel \(V(|T_{\text{ sampl },x^{\alpha }}(\cdot ;\, s)|)\) may differ substantially from the spatial variance of the absolute value of the corresponding continuous Gaussian derivative kernel \(V(|g_{x^{\alpha }}(\cdot ;\, s)|)\) (see Appendix A.4 for explicit expressions for these spatial spread measures for the continuous Gaussian derivatives up to order 4).

Figures 9 and 10 show how the \(l_1\)-norms as well as the spatial spread measures vary as function of the scale parameter, with comparisons to the scale dependencies for the corresponding fully continuous measures.
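To illustrate the first of these problems, the following minimal Python sketch builds sampled Gaussian derivative kernels from the probabilists' Hermite polynomials, using the relation \(g_{x^{\alpha }}(x;\, s) = (-1)^{\alpha } \sigma ^{-\alpha } He_{\alpha }(x/\sigma ) \, g(x;\, s)\) with \(\sigma = \sqrt{s}\), and compares the \(l_1\)-norm of the sampled first-order kernel with the \(L_1\)-norm \(\sqrt{2/(\pi s)}\) of the continuous first-order Gaussian derivative. The function names, truncation radii and test scales are illustrative assumptions and are not taken from the paper.

```python
import math

def hermite_prob(order, x):
    """Probabilists' Hermite polynomial He_order(x), by three-term recurrence."""
    if order == 0:
        return 1.0
    h_prev, h = 1.0, x
    for k in range(1, order):
        h_prev, h = h, x * h - k * h_prev   # He_{k+1} = x He_k - k He_{k-1}
    return h

def gaussian_derivative(order, x, s):
    """Continuous Gaussian derivative g_{x^order}(x; s) with variance s."""
    sigma = math.sqrt(s)
    g = math.exp(-x * x / (2.0 * s)) / math.sqrt(2.0 * math.pi * s)
    return (-1.0 / sigma) ** order * hermite_prob(order, x / sigma) * g

def sampled_derivative_kernel(order, s, radius):
    """Sampled Gaussian derivative kernel for n = -radius, ..., radius."""
    return [gaussian_derivative(order, n, s) for n in range(-radius, radius + 1)]

def l1_norm_ratio_first_order(s, radius):
    """Ratio of the discrete l1-norm to the continuous L1-norm for order 1."""
    kernel = sampled_derivative_kernel(1, s, radius)
    continuous_l1 = math.sqrt(2.0 / (math.pi * s))   # equals 2 g(0; s)
    return sum(abs(t) for t in kernel) / continuous_l1

for sigma in (0.3, 0.5, 1.0, 2.0):
    print(sigma, l1_norm_ratio_first_order(sigma ** 2, 30))
```

A ratio far below 1 at fine scales reflects that most of the mass of the continuous kernel falls between the grid points and is missed by the sampling.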

3.4 Integrated Gaussian Derivative Kernels

In analogy with the treatment of the integrated Gaussian kernel in Sect. 2.5, a possible way of making the \(l_1\)-norm of the discrete approximation of a Gaussian derivative kernel closer to the \(L_1\)-norm of its continuous counterpart is by defining the discrete kernel as the integral of the continuous Gaussian derivative kernel over each pixel support region

$$\begin{aligned} T_{\text{ int },x^{\alpha }}(n;\; s) = \int _{x = n - 1/2}^{n + 1/2} g_{x^{\alpha }}(x;\; s) \, dx, \end{aligned}$$(54)

again with a physical motivation of extending the discrete input signal f(n) to a continuous input signal \(f_c(x)\), defined to be equal to the discrete value within each pixel support region, and then integrating that continuous input signal with a continuous Gaussian kernel, which does then correspond to convolving the discrete input signal with the corresponding integrated Gaussian derivative kernel (see Appendix A.2 for an explicit derivation).

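Given that \(g_{x^{\alpha - 1}}(x;\; s)\) is a primitive function of \(g_{x^{\alpha }}(x;\; s)\), we can furthermore, for \(\alpha \ge 1\), write the relationship (54) as

$$\begin{aligned} T_{\text{ int },x^{\alpha }}(n;\; s) = g_{x^{\alpha - 1}}(n + 1/2;\; s) - g_{x^{\alpha - 1}}(n - 1/2;\; s). \end{aligned}$$(55)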

With this definition, it follows immediately that the contributions to the \(l_1\)-norm of the discrete kernel \(T_{\text{ int },x^{\alpha }}(n;\; s)\) will be equal to the contributions to the \(L_1\)-norm of \(g_{x^{\alpha }}(n;\; s)\) over those pixels where the continuous kernel has the same sign over the entire pixel support region. For those pixels where the continuous kernel changes its sign within the support region of the pixel, however, the contributions will be different, thus implying that the contributions to the \(l_1\)-norm of the discrete kernel may be lower than the contributions to the \(L_1\)-norm of the corresponding continuous Gaussian derivative kernel, see Fig. 1 for an illustration of such graphs of integrated Gaussian derivative kernels.

Similarly to the previously treated case with the integrated Gaussian kernel, the integrated Gaussian derivative kernels will also imply a certain scale offset, as shown in Figs. 10 and 11.
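The loss of \(l_1\)-norm at pixels where the continuous kernel changes sign can be made concrete for the first-order case. Since the Gaussian itself is a primitive function of its first-order derivative, the pixel integral reduces to a difference of Gaussian values at the pixel boundaries. The minimal Python sketch below, with illustrative function names and test scales, compares the resulting \(l_1\)-norm with the continuous \(L_1\)-norm \(2 \, g(0;\; s)\). Because the differences telescope on each side of the origin, the discrete norm equals \(2 \, g(1/2;\; s)\) up to truncation.

```python
import math

def gaussian(x, s):
    """Continuous Gaussian kernel g(x; s) with variance s."""
    return math.exp(-x * x / (2.0 * s)) / math.sqrt(2.0 * math.pi * s)

def integrated_first_derivative_kernel(s, radius):
    """Pixel integrals of g_x: T(n; s) = g(n + 1/2; s) - g(n - 1/2; s)."""
    return [gaussian(n + 0.5, s) - gaussian(n - 0.5, s)
            for n in range(-radius, radius + 1)]

def l1_norms(s, radius):
    """Return (discrete l1-norm, continuous L1-norm) for the first order."""
    kernel = integrated_first_derivative_kernel(s, radius)
    discrete = sum(abs(t) for t in kernel)
    continuous = 2.0 * gaussian(0.0, s)     # integral of |g_x| over the line
    return discrete, continuous

for sigma in (0.3, 0.5, 1.0, 2.0):
    print(sigma, l1_norms(sigma ** 2, 30))
```

The central pixel contributes zero to the discrete kernel, since the positive and negative lobes of the first-order derivative cancel within its support region, whereas both lobes contribute to the continuous \(L_1\)-norm.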

3.5 Discrete Analogues of Gaussian Derivative Kernels

Common characteristics of the approximation methods for computing discrete Gaussian derivative responses considered so far are that the computation of each Gaussian derivative operator of a given order will imply a spatial convolution with a large-support kernel. Thus, the amount of necessary computational work will increase with the number of Gaussian derivative responses, that are to be used when constructing visual operations that base their processing steps on using Gaussian derivative responses as input.

A characteristic property of the theory for discrete derivative approximations with scale-space properties in Lindeberg [44, 45], however, is that discrete derivative approximations can instead be computed by applying small-support central difference operators to the discrete scale-space representation, and with preserved scale-space properties in terms of either (i) non-creation of local extrema with increasing scale for 1-D signals, or (ii) non-enhancement of local extrema towards increasing scales in arbitrary dimensions. With regard to the amount of computational work, this property specifically means that the amount of additive computational work needed, to add more Gaussian derivative responses as input to a visual module, will be substantially lower than for the previously treated discrete approximations, based on computing each Gaussian derivative response using convolutions with large-support spatial filters.

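According to the genuinely discrete theory for defining discrete analogues of Gaussian derivative operators, discrete derivative approximations are, from the discrete scale-space representation, generated by convolution with the discrete analogue of the Gaussian kernel according to (26)

$$\begin{aligned} L(\cdot ;\; s) = T_{\text{ disc }}(\cdot ;\; s) * f(\cdot ), \end{aligned}$$(56)

computed as

$$\begin{aligned} L_{x^{\alpha }}(x;\; s) = (\delta _{x^{\alpha }} L)(x;\; s), \end{aligned}$$(57)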

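where \(\delta _{x^{\alpha }}\) are small-support difference operators of the following forms in the special cases when \(\alpha = 1\) or \(\alpha = 2\)

$$\begin{aligned} \delta _x = (-\tfrac{1}{2}, 0, +\tfrac{1}{2}), \end{aligned}$$(58)

$$\begin{aligned} \delta _{xx} = (+1, -2, +1), \end{aligned}$$(59)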

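to ensure that the estimates of the first- and second-order derivatives are located at the pixel values, and not in between, and of the following forms for higher values of \(\alpha \):

$$\begin{aligned} \delta _{x^{\alpha }} = {\left\{ \begin{array}{ll} \delta _x (\delta _{xx})^i &amp; \text{ if } \alpha = 1 + 2 i, \\ (\delta _{xx})^i &amp; \text{ if } \alpha = 2 i, \end{array}\right. } \end{aligned}$$(60)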

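for integer i, where the special cases \(\alpha = 3\) and \(\alpha = 4\) then correspond to the difference operators

$$\begin{aligned} \delta _{xxx} = (-\tfrac{1}{2}, +1, 0, -1, +\tfrac{1}{2}), \end{aligned}$$(61)

$$\begin{aligned} \delta _{xxxx} = (+1, -4, +6, -4, +1). \end{aligned}$$(62)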

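For 2-D images, corresponding discrete derivative approximations are then computed as straightforward extensions of the 1-D discrete derivative approximation operators

$$\begin{aligned} L_{x^{\alpha } y^{\beta }}(x, y;\; s) = (\delta _{x^{\alpha }} \delta _{y^{\beta }} L)(x, y;\; s), \end{aligned}$$(63)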

where \(L(x, y;\; s)\) here denotes the discrete scale-space representation (36) computed using separable convolution with the discrete analogue of the Gaussian kernel (26) along each dimension.

In terms of explicit convolution kernels, computation of these types of discrete derivative approximations corresponds to applying discrete derivative approximation kernels of the form

$$\begin{aligned} T_{\text{ disc },x^{\alpha }}(n;\; s) = (\delta _{x^{\alpha }} T_{\text{ disc }})(n;\; s) \end{aligned}$$(64)

to the input data. In practice, such explicit derivative approximation kernels should, however, never be applied for actual computations of discrete Gaussian derivative responses, since those operations can be carried out much more efficiently by computations of the forms (57) or (63), provided that the computations are carried out with sufficiently high numerical accuracy, so that the numerical errors do not grow too much because of cancellation of digits.
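The composition of the small-support difference operators can be sketched in a few lines of Python. The example below assumes the standard central difference masks \((-1/2, 0, +1/2)\) for the first order and \((+1, -2, +1)\) for the second order, and builds the higher-order masks by repeated convolution with the second-order mask. The names `difference_mask` and `apply_mask` are hypothetical, and the handling of the signal boundaries (interior samples only) is a simplifying assumption.

```python
def convolve(a, b):
    """Full discrete convolution of two finite masks."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

DELTA_X = [-0.5, 0.0, 0.5]     # first-order central difference
DELTA_XX = [1.0, -2.0, 1.0]    # second-order central difference

def difference_mask(order):
    """Mask of delta_{x^order}: delta_x (delta_xx)^i for order = 1 + 2i,
    and (delta_xx)^i for order = 2i."""
    if order < 1:
        raise ValueError("order must be a positive integer")
    mask = DELTA_X if order % 2 == 1 else [1.0]
    for _ in range(order // 2):
        mask = convolve(mask, DELTA_XX)
    return mask

def apply_mask(signal, mask):
    """Apply a centred mask at the interior samples where it fits entirely."""
    r = len(mask) // 2
    return [sum(m * signal[x + j - r] for j, m in enumerate(mask))
            for x in range(r, len(signal) - r)]

print(difference_mask(3))
print(difference_mask(4))
```

In an actual implementation, `apply_mask` would be applied to the signal already smoothed with the discrete analogue of the Gaussian kernel, so that each additional derivative order costs only a small-support operation on top of a single large-support smoothing step.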

3.5.1 Cascade Smoothing Property

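A theoretically attractive property of these types of discrete approximations of Gaussian derivative operators is that they exactly obey a cascade smoothing property over scales, in 1-D of the form

$$\begin{aligned} L_{x^{\alpha }}(\cdot ;\; s_2) = T_{\text{ disc }}(\cdot ;\; s_2 - s_1) * L_{x^{\alpha }}(\cdot ;\; s_1), \end{aligned}$$(65)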

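and in 2-D of the form

$$\begin{aligned} L_{x^{\alpha } y^{\beta }}(\cdot , \cdot ;\; s_2) = T_{\text{ disc }}(\cdot , \cdot ;\; s_2 - s_1) * L_{x^{\alpha } y^{\beta }}(\cdot , \cdot ;\; s_1), \end{aligned}$$(66)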

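where \(T_{\text{ disc }}(\cdot , \cdot ;\; s)\) here denotes the 2-D extension of the 1-D discrete analogue of the Gaussian kernel by separable convolution

$$\begin{aligned} T_{\text{ disc }}(m, n;\; s) = T_{\text{ disc }}(m;\; s) \, T_{\text{ disc }}(n;\; s). \end{aligned}$$(67)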

In practice, this cascade smoothing property implies that the transformation from any finer level of scale to any coarser level of scale is always a simplifying transformation, implying that this transformation always ensures: (i) non-creation of new local extrema (or zero-crossings) from finer to coarser levels of scale for 1-D signals, and (ii) non-enhancement of local extrema, in the sense that the derivative of the scale-space representation with respect to the scale parameter, always satisfies \(\partial _{s} L \le 0\) at any local spatial maximum point and \(\partial _{s} L \ge 0\) at any local spatial minimum point.

Fig. 7 The responses to M:th-order monomials \(f(x) = x^M\) for different discrete approximations of M:th-order Gaussian derivative kernels, for orders up to \(M = 4\), for either discrete analogues of Gaussian derivative kernels \(T_{\text{ disc },x^{\alpha }}(n;\; s)\) according to (64), sampled Gaussian derivative kernels \(T_{\text{ sampl },x^{\alpha }}(n;\, s)\) according to (53) or integrated Gaussian derivative kernels \(T_{\text{ int },x^{\alpha }}(n;\, s)\) according to (54). In the ideal continuous case, the resulting value should be equal to M!. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

Fig. 8 The responses to different N:th-order monomials \(f(x) = x^N\) for different discrete approximations of M:th-order Gaussian derivative kernels, for \(M > N\), for either discrete analogues of Gaussian derivative kernels \(T_{\text{ disc },x^{\alpha }}(n;\; s)\) according to (64), sampled Gaussian derivative kernels \(T_{\text{ sampl },x^{\alpha }}(n;\, s)\) according to (53) or integrated Gaussian derivative kernels \(T_{\text{ int },x^{\alpha }}(n;\, s)\) according to (54). In the ideal continuous case, the resulting value should be equal to 0, whenever the order M of differentiation is higher than the order N of the monomial. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

3.6 Numerical Correctness of the Derivative Estimates

To measure how well a discrete approximation of a Gaussian derivative operator reflects a differentiation operator, one can study the response properties to polynomials (see Footnote 6). Specifically, in the 1-D case, the M:th-order derivative of an M:th-order monomial should be:

$$\begin{aligned} \partial _{x^M} (x^M) = M!. \end{aligned}$$(68)

Additionally, the derivative of any lower-order polynomial should be zero:

$$\begin{aligned} \partial _{x^M} (x^N) = 0 \quad \text{ if } \quad M > N. \end{aligned}$$(69)

With respect to Gaussian derivative responses to monomials of the form

$$\begin{aligned} p_k(x) = x^k, \end{aligned}$$(70)

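the commutative property between continuous Gaussian smoothing and the computation of continuous derivatives then specifically implies that

$$\begin{aligned} g_{x^M}(\cdot ;\; s) * p_M(\cdot ) = g(\cdot ;\; s) * (\partial _{x^M} p_M(\cdot )) = M! \end{aligned}$$(71)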

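and

$$\begin{aligned} g_{x^M}(\cdot ;\; s) * p_N(\cdot ) = g(\cdot ;\; s) * (\partial _{x^M} p_N(\cdot )) = 0 \quad \text{ if } \quad M > N. \end{aligned}$$(72)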

If these relationships are not sufficiently well satisfied for the corresponding result of replacing a continuous Gaussian derivative operator by a numerical approximation of a Gaussian derivative, then the corresponding discrete approximation cannot be regarded as a valid approximation of the Gaussian derivative operator, that in turn is intended to reflect the differential structures in the image data.

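It is therefore of interest to consider entities of the following type

$$\begin{aligned} P_{\alpha ,k}(s) = \left. T_{x^{\alpha }}(\cdot ;\; s) * p_k(\cdot ) \right| _{x = 0} \end{aligned}$$(73)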

to characterize how well a discrete approximation \(T_{x^{\alpha }}(n;\; s)\) of a Gaussian derivative operator of order \(\alpha \) serves as a differentiation operator on a monomial of order k.

Figures 7 and 8 show the results of computing the responses of the discrete approximations of Gaussian derivative operators to different monomials in this way, up to order 4. Specifically, Fig. 7a shows the entity \(P_{1,1}(s)\), which in the continuous case should be equal to 1. Figure 7b shows the entity \(P_{2,2}(s)\), which in the continuous case should be equal to 2. Figure 7c shows the entity \(P_{3,3}(s)\), which in the continuous case should be equal to 6. Figure 7d shows the entity \(P_{4,4}(s)\), which in the continuous case should be equal to 24. Figure 8a and b show the entities \(P_{3,1}(s)\) and \(P_{4,2}(s)\), respectively, which in the continuous case should be equal to zero.

As can be seen from the graphs, the responses of the derivative approximation kernels to monomials of the same order as the order of differentiation do for the sampled Gaussian derivative kernels deviate notably from the corresponding ideal results obtained for continuous Gaussian derivatives, when the scale parameter is a bit below 0.75. For the integrated Gaussian derivative kernels, the responses of the derivative approximation kernels do also deviate when the scale parameter is a bit below 0.75. Within a narrow range of scale values in intervals of the order of [0.5, 0.75], the integrated Gaussian derivative kernels do, however, lead to somewhat lower deviations in the derivative estimates than for the sampled Gaussian derivative kernels. Also the responses of the third-order sampled Gaussian and integrated Gaussian derivative approximation kernels to a first-order monomial as well as the response of the fourth-order sampled Gaussian and integrated Gaussian derivative approximation kernels to a second-order monomial differ substantially from the ideal continuous values when the scale parameter is a bit below 0.75.

For the discrete analogues of the Gaussian derivative kernels, the results are, on the other hand, equal to the corresponding continuous counterparts, in fact, in the case of exact computations, exactly equal. This property can be shown by studying the responses of the central difference operators to the monomials, which are given by

$$\begin{aligned} \delta _{x^M} (p_M(x)) = M! \end{aligned}$$(74)

and

$$\begin{aligned} \delta _{x^M} (p_N(x)) = 0 \quad \text{ if } \quad M > N. \end{aligned}$$(75)

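Since the central difference operators commute with the spatial smoothing step with the discrete analogue of the Gaussian kernel, the responses of the discrete analogues of the Gaussian derivatives to the monomials are then obtained as

$$\begin{aligned} T_{\text{ disc },x^{\alpha }}(\cdot ;\; s) * p_k(\cdot ) = T_{\text{ disc }}(\cdot ;\; s) * (\delta _{x^{\alpha }} p_k(\cdot )), \end{aligned}$$(76)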

implying that

and

In this respect, there is a fundamental difference between the discrete analogues of Gaussian derivatives and the sampled or the integrated Gaussian derivatives. At very fine scales, the discrete analogues of Gaussian derivatives produce much better estimates of differentiation operators than the sampled or the integrated Gaussian derivatives.

The requirement that the Gaussian derivative operators and their discrete approximations should lead to numerically accurate derivative estimates for monomials of the same order as the order of differentiation is a natural consistency requirement for non-infinitesimal derivative approximation operators. The use of monomials as test functions, as used here, is particularly suitable in a multi-scale context, since the monomials are essentially scale-free and are not associated with any particular intrinsic scales.
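The exactness of the central difference operators on monomials can be checked directly. The sketch below assumes that the higher-order operators are compositions of the first-order difference \((f(x+1) - f(x-1))/2\) and the second-order difference \(f(x+1) - 2 f(x) + f(x-1)\). The helper names are hypothetical.

```python
import numpy as np
from math import factorial

def central_difference_kernel(order):
    """Kernel of the order-th central difference operator.

    Assumed composition: floor(order / 2) second-order differences and,
    for odd order, one additional first-order difference.
    """
    dx = np.array([0.5, 0.0, -0.5])    # taps at n = -1, 0, 1: (f(x+1) - f(x-1)) / 2
    dxx = np.array([1.0, -2.0, 1.0])   # f(x+1) - 2 f(x) + f(x-1)
    kernel = np.array([1.0])
    for _ in range(order // 2):
        kernel = np.convolve(kernel, dxx)
    if order % 2 == 1:
        kernel = np.convolve(kernel, dx)
    return kernel

def monomial_response(kernel, k, x=0.0):
    """(kernel * p_k)(x) = sum_n kernel(n) * (x - n)**k for p_k(x) = x**k."""
    radius = (len(kernel) - 1) // 2
    n = np.arange(-radius, radius + 1, dtype=float)
    return float(np.sum(kernel * (x - n) ** k))

for order in range(1, 5):
    kernel = central_difference_kernel(order)
    responses = [monomial_response(kernel, k) for k in range(order + 1)]
    print(order, responses, factorial(order))
```

For each order, the response to the monomial of the same order equals the factorial of the order, and the responses to all lower-order monomials vanish. Smoothing with a unit-sum kernel does not change these constant responses.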

3.7 Additional Performance Measures for Quantifying Deviations from Theoretical Properties of Discretizations of Gaussian Derivative Kernels

To further quantify how the discrete kernels designed to approximate Gaussian derivative operators deviate from the desirable properties that a discrete implementation should inherit from the continuous Gaussian derivatives, we will in this section use the following complementary error measures:

- Normalization error: The difference between the \(l_1\)-norm of the discrete kernel and the \(L_1\)-norm of the continuous Gaussian derivative kernel, to which the discrete kernel should ideally be normalized, will be measured by

  $$\begin{aligned} E_{\text{ norm }}(T_{x^{\alpha }}(\cdot ;\; s)) = \frac{\Vert T_{x^{\alpha }}(\cdot ;\; s) \Vert _1}{\Vert g_{x^{\alpha }}(\cdot ;\; s) \Vert _1} - 1. \end{aligned}$$(79)

- Spatial spread measure: The spatial extent of the discrete derivative approximation kernel will be measured by the entity

  $$\begin{aligned} \sqrt{V(|T_{x^{\alpha }}(\cdot ;\; s)|)} \end{aligned}$$(80)

  and will be graphically compared to the spread measure \(S_{\alpha }(s) = \sqrt{V(|g_{x^{\alpha }}(\cdot ;\; s)|)}\) for a corresponding continuous Gaussian derivative kernel. Explicit expressions for the latter spread measures \(S_{\alpha }(s)\) computed from continuous Gaussian derivative kernels are given in Appendix A.4.

- Spatial spread measure offset: To quantify the absolute deviation between the above spatial spread measure \(\sqrt{V(|T_{x^{\alpha }}(\cdot ;\; s)|)}\) and the corresponding ideal value \(\sqrt{V(|g_{x^{\alpha }}(\cdot ;\; s)|)}\) for a continuous Gaussian derivative kernel, we will measure this offset in terms of the entity

  $$\begin{aligned} O_{\alpha }(s) = \sqrt{V(|T_{x^{\alpha }}(\cdot ;\; s)|)} - \sqrt{V(|g_{x^{\alpha }}(\cdot ;\; s)|)}. \end{aligned}$$(81)

- Cascade smoothing error: The deviation between the cascade smoothing property of continuous Gaussian derivatives according to (46) and the actual result of convolving a discrete approximation of a Gaussian derivative response at a given scale with its corresponding discretization of the Gaussian kernel will be measured by

  $$\begin{aligned} E_{\text{ cascade }}(T_{x^{\alpha }}(\cdot ;\; s)) = \frac{\Vert T_{x^{\alpha }}(\cdot ;\; 2s) - T(\cdot ;\; s) * T_{x^{\alpha }}(\cdot ;\; s) \Vert _1}{\Vert T_{x^{\alpha }}(\cdot ;\; 2s) \Vert _1}. \end{aligned}$$(82)

  For simplicity, we here restrict ourselves to the special case when the scale parameter for the amount of incremental smoothing with a discrete approximation of the Gaussian kernel is equal to the scale parameter for the finer scale approximation of the Gaussian derivative response (see Footnote 7).

Similarly to the previous treatment about error measures in Sect. 2.7, the normalization error and the cascade smoothing error should also be equal to zero in the ideal theoretical case. Any deviations from zero of these error measures do therefore represent a quantification of deviations from desirable theoretical properties in a discrete approximation of Gaussian derivative computations.
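As an illustration of the measures (79)–(81), the following sketch evaluates them for the first-order sampled Gaussian derivative kernel. It assumes that \(V(\cdot )\) denotes the variance of a non-negative kernel after normalization to unit mass. The continuous reference values \(\Vert g_x \Vert _1 = \sqrt{2/\pi }/\sigma \) and \(\sqrt{V(|g_x|)} = \sqrt{2}\, \sigma \) are obtained here by direct integration for the first-order case only, rather than quoted from Appendix A.4. All function names are hypothetical.

```python
import numpy as np

def sampled_gx(sigma):
    """First-order sampled Gaussian derivative kernel, with s = sigma**2."""
    s = sigma ** 2
    radius = int(np.ceil(10 * sigma)) + 4   # assumed truncation radius
    n = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-n ** 2 / (2 * s)) / np.sqrt(2 * np.pi * s)
    return n, -n / s * g

def spread(n, h):
    """sqrt(V(|h|)), with V the variance of |h| normalized to unit mass (assumed)."""
    w = np.abs(h) / np.sum(np.abs(h))
    mean = np.sum(n * w)
    return float(np.sqrt(np.sum(n ** 2 * w) - mean ** 2))

def first_order_measures(sigma):
    """Return (normalization error, spread measure, spread offset) for order 1."""
    n, h = sampled_gx(sigma)
    continuous_l1 = np.sqrt(2 / np.pi) / sigma    # ||g_x||_1 = 2 g(0; s)
    continuous_spread = np.sqrt(2) * sigma        # sqrt(V(|g_x|)) for order 1
    e_norm = float(np.sum(np.abs(h)) / continuous_l1 - 1)
    measured = spread(n, h)
    return e_norm, measured, measured - continuous_spread

for sigma in (0.3, 0.5, 1.0, 2.0):
    print(sigma, first_order_measures(sigma))
```

At \(\sigma = 0.3\) the kernel is dominated by its two taps at \(n = \pm 1\), so the spread measure is close to 1 and the \(l_1\)-norm is far below its continuous counterpart.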

Fig. 9 Graphs of the \(l_1\)-norms \(\Vert T_{x^{\alpha }}(\cdot ;\, s) \Vert _1\) of different discrete approximations of Gaussian derivative kernels of order \(\alpha \), for either discrete analogues of Gaussian derivative kernels \(T_{\text{ disc },x^{\alpha }}(n;\; s)\) according to (64), sampled Gaussian derivative kernels \(T_{\text{ sampl },x^{\alpha }}(n;\, s)\) according to (53) or integrated Gaussian derivative kernels \(T_{\text{ int },x^{\alpha }}(n;\, s)\) according to (54), together with the graph of the \(L_1\)-norms \(\Vert g_{x^{\alpha }}(\cdot ;\, s) \Vert _1\) of the corresponding continuous Gaussian derivative kernels. (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

Fig. 10 Graphs of the spatial spread measure \(\sqrt{V(|T_{x^{\alpha }}(\cdot ;\; s)|)}\), according to (80), for different discrete approximations of Gaussian derivative kernels of order \(\alpha \), for either discrete analogues of Gaussian derivative kernels \(T_{\text{ disc },x^{\alpha }}(n;\; s)\) according to (64), sampled Gaussian derivative kernels \(T_{\text{ sampl },x^{\alpha }}(n;\, s)\) according to (53) or integrated Gaussian derivative kernels \(T_{\text{ int },x^{\alpha }}(n;\, s)\) according to (54). (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 4]\))

Fig. 11 Graphs of the spatial spread measure offset \(O_{\alpha }(s)\), relative to the spatial spread of a continuous Gaussian derivative kernel, according to (81), for different discrete approximations of Gaussian derivative kernels of order \(\alpha \), for either discrete analogues of Gaussian derivative kernels \(T_{\text{ disc },x^{\alpha }}(n;\; s)\) according to (64), sampled Gaussian derivative kernels \(T_{\text{ sampl },x^{\alpha }}(n;\, s)\) according to (53) or integrated Gaussian derivative kernels \(T_{\text{ int },x^{\alpha }}(n;\, s)\) according to (54). (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 4]\))

Fig. 12 Graphs of the cascade smoothing error \(E_{\text{ cascade }}(T_{x^{\alpha }}(\cdot ;\; s))\), according to (82), for different discrete approximations of Gaussian derivative kernels of order \(\alpha \), for either discrete analogues of Gaussian derivative kernels \(T_{\text{ disc },x^{\alpha }}(n;\; s)\) according to (64), sampled Gaussian derivative kernels \(T_{\text{ sampl },x^{\alpha }}(n;\, s)\) according to (53) or integrated Gaussian derivative kernels \(T_{\text{ int },x^{\alpha }}(n;\, s)\) according to (54). (Horizontal axis: Scale parameter in units of \(\sigma = \sqrt{s} \in [0.1, 2]\))

3.8 Numerical Quantification of Deviations from Theoretical Properties of Discretizations of Gaussian Derivative Kernels

3.8.1 \(l_1\)-Norms of Discrete Approximations of Gaussian Derivative Approximation Kernels

Figure 9 shows the \(l_1\)-norms \(\Vert T_{x^{\alpha }}(\cdot ;\, s) \Vert _1\) for the different methods for approximating Gaussian derivative kernels with corresponding discrete approximations for differentiation orders up to 4, together with graphs of the \(L_1\)-norms \(\Vert g_{x^{\alpha }}(\cdot ;\, s) \Vert _1\) of the corresponding continuous Gaussian derivative kernels.

From these graphs, we can first observe that the behaviour of the different methods differs significantly for values of the scale parameter \(\sigma \) up to about 0.75, 1.25 or 1.5, depending on the order of differentiation.

For the sampled Gaussian derivatives, the \(l_1\)-norms tend to zero as the scale parameter \(\sigma \) approaches zero for the kernels of odd order, whereas the \(l_1\)-norms tend to infinity for the kernels of even order. For the kernels of even order, the behaviour of the sampled Gaussian derivative kernels is thus the most similar to the behaviour of the corresponding continuous Gaussian derivatives, whose \(L_1\)-norms also grow without bound as \(\sigma \rightarrow 0\). For the kernels of odd order, the deviation from the continuous behaviour is, on the other hand, the largest.
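The different limits for odd and even orders can be seen from a direct computation of the \(l_1\)-norms of the first- and second-order sampled kernels. This is a minimal sketch under the same sampling assumption as in the text, with a hypothetical function name and truncation radius.

```python
import numpy as np

def sampled_l1_norm(order, sigma):
    """l1-norm of the sampled g_x (order 1) or g_xx (order 2), with s = sigma**2."""
    s = sigma ** 2
    radius = int(np.ceil(10 * sigma)) + 4   # assumed truncation radius
    n = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-n ** 2 / (2 * s)) / np.sqrt(2 * np.pi * s)
    factor = -n / s if order == 1 else (n ** 2 - s) / s ** 2
    return float(np.sum(np.abs(factor * g)))

for sigma in (1.0, 0.5, 0.25, 0.1):
    print(sigma, sampled_l1_norm(1, sigma), sampled_l1_norm(2, sigma))
```

For the odd order, the central tap is zero and the remaining taps decay exponentially as \(\sigma \rightarrow 0\). For the even order, the central tap \(-g(0;\, s)/s\) grows without bound.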

For the integrated Gaussian derivatives, the behaviour for the kernels of odd order is markedly less singular as the scale parameter \(\sigma \) tends to zero than for the sampled Gaussian derivatives. For the kernels of even order, the behaviour does, on the other hand, differ more. There is also some jaggedness at fine scales for the third- and fourth-order derivatives, caused by positive and negative values of the kernels cancelling each other within the support regions of single pixels.

For the discrete analogues of Gaussian derivatives, the behaviour is qualitatively different at finer scales, in that the discrete analogues of the Gaussian derivatives tend to the basic central difference operators as the scale parameter \(\sigma \) tends to zero, and therefore show a much smoother behaviour as \(\sigma \rightarrow 0\).
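This limit behaviour can be checked numerically. The sketch below assumes the discrete analogue of the Gaussian kernel in the form \(T(n;\, s) = e^{-s} I_n(s)\), and computes it from its Fourier transform \(e^{s(\cos \theta - 1)}\) with an inverse FFT, which is an implementation choice made here to stay within NumPy (`scipy.special.ive` would be an alternative). The function names, the kernel radius and the FFT length are hypothetical.

```python
import numpy as np

def discrete_gaussian(s, radius=32, n_fft=256):
    """T(n; s) = exp(-s) I_n(s) for |n| <= radius, via its Fourier transform.

    The inverse FFT returns a periodized kernel; n_fft is assumed large
    enough for the wrap-around terms to be negligible.
    """
    theta = 2 * np.pi * np.arange(n_fft) / n_fft
    periodic = np.real(np.fft.ifft(np.exp(s * (np.cos(theta) - 1.0))))
    return np.concatenate((periodic[-radius:], periodic[:radius + 1]))

DX = np.array([0.5, 0.0, -0.5])    # first-order central difference
DXX = np.array([1.0, -2.0, 1.0])   # second-order central difference

def discrete_derivative_l1_norm(order, sigma):
    """l1-norm of the first- or second-order discrete analogue kernel."""
    kernel = np.convolve(discrete_gaussian(sigma ** 2), DX if order == 1 else DXX)
    return float(np.sum(np.abs(kernel)))

for sigma in (1.0, 0.5, 0.1, 0.01):
    print(sigma, discrete_derivative_l1_norm(1, sigma), discrete_derivative_l1_norm(2, sigma))
```

As \(\sigma \rightarrow 0\), the norms approach the \(l_1\)-norms of the central difference operators themselves, namely 1 for the first order and 4 for the second order.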

3.8.2 Spatial Spread Measures

Figure 10 shows graphs of the standard-deviation-based spatial spread measure \(\sqrt{V(|T_{x^{\alpha }}(\cdot ;\; s)|)}\) according to (80), for the main classes of discretizations of Gaussian derivative kernels, together with graphs of the corresponding spatial spread measures computed for continuous Gaussian derivative kernels.

As can be seen from these graphs, the spatial spread measures differ significantly from the corresponding continuous measures for smaller values of the scale parameter, for \(\sigma \) less than about 1 or 1.5, depending on the order of differentiation. This is caused by the fact that too fine scales in the data cannot be appropriately resolved after a spatial discretization. For the sampled and the integrated Gaussian derivative kernels, there is a certain jaggedness in some of the curves at fine scales, caused by interactions between the grid and the lobes of the continuous Gaussian derivative kernels from which the discrete kernels are defined. For the discrete analogues of the Gaussian derivative kernels, these spatial spread measures are notably bounded from below by the corresponding measures for the central difference operators, which they approach with decreasing scale parameter.

Figure 11 shows more detailed visualizations of the deviations between these spatial spread measures and their corresponding ideal values for continuous Gaussian derivative kernels in terms of the spatial spread measure offset \(O_{\alpha }(s)\) according to (81), for the different orders of spatial differentiation. The jaggedness of these curves for orders of differentiation greater than one is due to interactions between the lobes in the derivative approximation kernels and the grid. As can be seen from these graphs, the relative properties of the spatial spread measure offsets for the different discrete approximations to the Gaussian derivative operators differ somewhat, depending on the order of spatial differentiation. We can, however, note that the spatial spread measure offset for the integrated Gaussian derivative kernels is mostly somewhat higher than the spatial spread measure offset for the sampled Gaussian derivative kernels, consistent with the previous observation that the spatial box integration used for defining the integrated Gaussian derivative kernel introduces an additional amount of spatial smoothing in the spatial discretization.
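The additional smoothing introduced by the box integration can be made concrete for the zero-order kernel. The sketch below assumes that the integrated Gaussian kernel is the integral of the continuous Gaussian over each unit-width pixel support region, so that it equals a sampling of the Gaussian convolved with a unit box, for which the variance increases by 1/12. The function names are hypothetical.

```python
import math
import numpy as np

def integrated_gaussian(sigma, radius=None):
    """Integral of g(x; sigma**2) over [n - 1/2, n + 1/2] for |n| <= radius."""
    if radius is None:
        radius = int(math.ceil(10 * sigma)) + 4   # assumed truncation radius
    def cdf(x):
        return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))
    n = np.arange(-radius, radius + 1)
    kernel = np.array([cdf(k + 0.5) - cdf(k - 0.5) for k in n])
    return n.astype(float), kernel

def variance(n, h):
    """Variance of a non-negative kernel after normalization to unit mass."""
    w = h / np.sum(h)
    mean = np.sum(n * w)
    return float(np.sum(n ** 2 * w) - mean ** 2)

sigma = 1.5
n, kernel = integrated_gaussian(sigma)
print(variance(n, kernel), sigma ** 2 + 1.0 / 12.0)
```

For scale values that are not too fine, the measured variance agrees closely with \(s + 1/12\), which is in line with the higher spread offsets observed for the integrated kernels.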

3.8.3 Cascade Smoothing Errors

Figure 12 shows graphs of the cascade smoothing error \(E_{\text{ cascade }}(T_{x^{\alpha }}(\cdot ;\; s))\) according to (82) for the main classes of methods for discretizing Gaussian derivative operators.

For the sampled Gaussian kernels, the cascade smoothing error is substantial for \(\sigma < 0.75\) or \(\sigma < 1.0\), depending on the order of differentiation. Then, for larger scale values, this error measure decreases rapidly.
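A minimal sketch of the cascade smoothing error (82) for the first-order sampled Gaussian derivative kernel is given below. It assumes the same sampling of the continuous expressions as before, and uses kernel supports chosen so that the cascaded and the directly sampled kernels align. The function names and the truncation radius are hypothetical.

```python
import numpy as np

def sampled(order, s, radius):
    """Sampled Gaussian (order 0) or first-order derivative (order 1) at scale s."""
    n = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-n ** 2 / (2 * s)) / np.sqrt(2 * np.pi * s)
    return g if order == 0 else -n / s * g

def cascade_error_sampled(sigma, radius=None):
    """E_cascade according to (82) for the first-order sampled kernel."""
    s = sigma ** 2
    if radius is None:
        radius = int(np.ceil(10 * sigma)) + 4   # assumed truncation radius
    # T(.; s) * T_x(.; s), with support [-2 radius, 2 radius]
    cascaded = np.convolve(sampled(0, s, radius), sampled(1, s, radius))
    # T_x(.; 2 s) sampled directly on the same support
    target = sampled(1, 2 * s, 2 * radius)
    return float(np.sum(np.abs(target - cascaded)) / np.sum(np.abs(target)))

for sigma in (0.3, 0.5, 0.75, 1.0, 2.0):
    print(sigma, cascade_error_sampled(sigma))
```

Under these assumptions, the error is large at \(\sigma = 0.3\) and drops to the level of rounding errors at \(\sigma = 2\).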

For the integrated Gaussian kernels, the cascade smoothing error is lower than the cascade smoothing error for the sampled Gaussian kernels for \(\sigma < 0.5\), \(\sigma < 0.75\) or \(\sigma < 1.0\), depending on the order of differentiation. For larger scale values, the cascade smoothing error for the integrated Gaussian kernels does, on the other hand, decrease much less rapidly with increasing scale than for the sampled Gaussian kernels, due to the additional spatial variance in the filters caused by the box integration underlying the definition of the integrated Gaussian derivative kernels.

For the discrete analogues of the Gaussian derivatives, the cascade smoothing error should be zero in the ideal case of exact computations. In the graphs of these errors, we do, however, see a jaggedness at a very low level, caused by numerical errors.
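The same error measure can be evaluated for the first-order discrete analogue. The sketch below assumes the kernel \(T(n;\, s) = e^{-s} I_n(s)\), computed here from its Fourier transform \(e^{s(\cos \theta - 1)}\) with an inverse FFT as an implementation choice, followed by the first-order central difference. The function names, the radius and the FFT length are hypothetical.

```python
import numpy as np

def discrete_gaussian(s, radius, n_fft=1024):
    """T(n; s) = exp(-s) I_n(s) for |n| <= radius, via its Fourier transform."""
    theta = 2 * np.pi * np.arange(n_fft) / n_fft
    periodic = np.real(np.fft.ifft(np.exp(s * (np.cos(theta) - 1.0))))
    return np.concatenate((periodic[-radius:], periodic[:radius + 1]))

DX = np.array([0.5, 0.0, -0.5])   # first-order central difference

def cascade_error_discrete(sigma, radius=40):
    """E_cascade according to (82) for the first-order discrete analogue."""
    s = sigma ** 2
    smooth = discrete_gaussian(s, radius)
    deriv = np.convolve(smooth, DX)                              # T_disc,x(.; s)
    cascaded = np.convolve(smooth, deriv)                        # T(.; s) * T_disc,x(.; s)
    target = np.convolve(discrete_gaussian(2 * s, 2 * radius), DX)   # T_disc,x(.; 2 s)
    return float(np.sum(np.abs(target - cascaded)) / np.sum(np.abs(target)))

for sigma in (0.3, 1.0, 2.0):
    print(sigma, cascade_error_discrete(sigma))
```

The printed values are non-zero only at the level of floating-point rounding, which corresponds to the low-level jaggedness described above.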

3.9 Summary of the Characterization Results from the Theoretical Analysis and the Quantitative Performance Measures

To summarize the theoretical and the experimental results presented in this section, there is a substantial difference in the quality of the discrete approximations of Gaussian derivative kernels at fine scales:

For values of the scale parameter \(\sigma \) a bit below 0.75, the sampled Gaussian kernels and the integrated Gaussian kernels do not produce numerically accurate or consistent estimates of the derivatives of monomials. In this respect, these discrete approximations of Gaussian derivatives do not serve as good approximations of derivative operations at very fine scales. Within a narrow scale interval below about 0.75, the integrated Gaussian derivative kernels do, however, degenerate in a somewhat less serious manner than the sampled Gaussian derivative kernels.

For the discrete analogues of Gaussian derivatives, obtained by convolution with the discrete analogue of the Gaussian kernel followed by central difference operators, the corresponding derivative approximations are, on the other hand, exactly equal to their continuous counterparts. This property does, furthermore, hold over the entire scale range.

For larger values of the scale parameter, the sampled Gaussian kernel and the integrated Gaussian kernel do, on the other hand, lead to successively better numerical approximations of the corresponding continuous counterparts. In fact, when the value of the scale parameter is above about 1, the sampled Gaussian kernel leads to the numerically most accurate approximations of the corresponding continuous results, out of the studied three methods.

Hence, the choice of which discrete approximation to use for approximating the Gaussian derivatives depends upon which scale ranges are important for the analysis in which the Gaussian derivatives are to be used.