Abstract

Manufacturing industries are eager to replace traditional robot manipulators with collaborative robots due to their cost-effectiveness, safety, smaller footprint and intuitive user interfaces. With industrial advancement, cobots are required to be more independent and intelligent to do more complex tasks in collaboration with humans. Therefore, to effectively detect the presence of humans/obstacles in the surroundings, cobots must use different sensing modalities, both internal and external. This paper presents a detailed review of sensor technologies used for detecting a human operator in the robotic manipulator environment. An overview of different sensors installed locations, the manipulator details and the main algorithms used to detect the human in the cobot workspace are presented. We summarize existing literature in three categories related to the environment for evaluating sensor performance: entirely simulated, partially simulated and hardware implementation focusing on the ‘hardware implementation’ category where the data and experimental environment are physical rather than virtual. We present how the sensor systems have been used in various use cases and scenarios to aid human–robot collaboration and discuss challenges for future work.

Introduction

Digitisation and the increasing demand for unique and customised products have motivated manufacturing industries to move beyond the mass production paradigm and develop smarter production systems by involving humans as their core parameter (Bragança et al., 2019; Sherwani et al., 2020). This has led to a concept named Industry 5.0 (I5.0) (Industry 5.0: Towards More Sustainable, Resilient and Human-Centric Industry, 2021) where humans work in parallel with robots. Although smart machines, a key development in Industry 4.0, are more precise and accurate than humans, robot-centric manufacturing systems suffer from limitations in a more custom production environment as robots lack the flexibility and adaptability of human workers. Therefore, by combining humans’ coordination, proficiency and cognitive potential with the accuracy, efficiency and dexterity of robots, I5.0 brings together the best of both to enable a mass customization production environment.

Human–robot interaction (HRI) can generally be classified into three categories, as illustrated in Fig. 1b–d. Figure 1a shows the traditional industry setting, where robots are totally isolated from the human operator. The first HRI category is human–robot coexistence, in which humans and robots work alongside each other but have different tasks and never overlap each other’s workspace. The second is human–robot cooperation, in which both the human operator and the robot have some common goals or tasks to perform. In this category, both agents work in a shared space but do not come in direct contact. The third category is human–robot collaboration (HRC), in which both agents interact with each other in one of two ways:

- Physical collaboration, in which robots are enabled to identify and predict human interactions using force or torque signals.

- Non-physical collaboration, in which the information between human and robot is exchanged using either direct (gestures, speech etc.) or indirect communication (facial expression, human presence etc.) (Hentout et al., 2019).

Multiple robotic systems and architectures are present in industry, including serial robot manipulators (either with fixed base or mounted on mobile platforms), and parallel systems (for example delta robots and gantry or Cartesian systems). A robotic manipulator is an arm-like structure that is mainly used to handle materials without direct contact with the operator. A manipulator can be mounted on a fixed base, and carry out specific tasks by moving its end-effector [e.g. a PUMA robot (Jin et al., 2017)], or it can have a mobile platform to move around. Robots have been utilised in the manufacturing industry for decades to enhance production speed and accuracy. Industrial robots traditionally operate inside cages, isolated from humans for safety reasons. The ability to have robots sharing the workspace and working in parallel with humans is a key factor within the I5.0 concept and is at the core of the smart, flexible factory.

In HRC, a robot may help its human co-operator carry and manipulate sensitive and heavy objects safely (Solanes et al., 2018) or position these precisely by hand-guiding (Safeea et al., 2019a, 2019b). Moreover, as robots become cheaper, more flexible, and more self-governing through the incorporation of artificial intelligence, some may replace human workers, while others, which work alongside workers and complement them, are called collaborative robots or cobots.

Cobots have now been implemented in many fields and sectors. In production, they are used in manufacturing, transportation (autonomous guided vehicles or logistics) and construction (bricks or material transfers). Moreover, there has also been a rise in cobot applications in the medical field for various operations, including robotic surgery (Sefati et al., 2021) (manipulation of needles or surgical grippers), assistance (autonomous wheelchairs or walking aid) or diagnosis [automatic positioning of endoscopes or ultrasound probes (Zhang et al., 2020a, 2020b)] etc. The use of cobots in the service sector is also rapidly increasing. Cobots have potential for growth in the coming years for various applications like companionship, domestic cleaning, object retrieving or as chat partners (Nam et al., 2021; Zhong et al., 2021). Sectors like military and international space exploration are also taking advantage of collaborative robots (Roque et al., 2016). For instance, the Space Rider (a planned uncrewed orbital lifting body spaceplane) spent months on international space missions where it often encountered debris. Therefore, for the inspection and thorough cleaning of the plane, a cobot was used (Bernelin et al., 2019). Moreover, recently cobots are also seen working actively as front-line workers during the global pandemic of COVID-19 (Deniz & Gökmen, 2021).

Manufacturing industries are eager to replace traditional robot manipulators with cobots due to their cost-effectiveness, safety and intuitive user interfaces (Ma et al., 2020). Cobots are especially affordable for Small and Medium Enterprises (SMEs), which face difficulties automating their manufacturing using traditional industrial robots (Collaborative Robotics for Assembly and Kitting in Smart Manufacturing, 2019). On the other hand, Multi-National Corporations (MNCs) are equally interested in deploying cobots to maintain competitiveness and ensure their factories adapt to the next level of advancement in manufacturing. For example, cobots have been introduced in the Spartanburg site of BMW Group to improve the worker’s efficiency by taking over the repetitive and precise tasks like equipping the car doors from inside with sound or moisture insulation etc. (Innovative Human–robot Cooperation in BMW Group Production., 2013).

An industrial cobot is developed for direct interaction with human co-workers to provide an efficient manufacturing work environment to complete tasks. Cobots can assist humans in various industrial tasks on a manufacturing line, like co-manipulation (Ibarguren & Daelman, 2021), handover of objects during assembly (Raessa et al., 2020), picking and placing materials (Borrell et al., 2020), soldering (Mejia et al., 2022), inspection (Trujillo et al., 2019), drilling (Ayyad et al., 2023), screwing (Koç & Doğan, 2022), packaging etc. They can also relieve human operators (Li et al., 2022) and precisely and quickly lift and place loads (Javaid et al., 2021). To perform these tasks, cobots need to actively perceive human actions.

Perception of human actions and intentions is critical to have a safe and efficient collaboration. This is achieved in cobots in different ways. In some scenarios, the human operator guides the cobot manually using hand or facial gestures and sometimes using voice commands (Neto et al., 2018). In other cases, the cobots are equipped with their own sensing modalities to establish awareness of their environment (Han et al., 2019; Siva & Zhang, 2020), recognise objects (Juel et al., 2019) and behavior of things (Berg et al., 2019; Ragaglia et al., 2018; Sakr et al., 2020), or detect and avoid collisions (Su et al., 2020; Zabalza et al., 2019) to ensure their own safety and that of their co-workers. As in HRC human workers work in very close proximity to cobots, the safety of the human operators is of utmost importance.

To equip the cobot with perception to keep track of its working space and the entities present in it, sensors are used. Sensors are an essential part of robotics for accomplishing any task. In robotics, sensors are generally categorized in two groups. First, internal or proprioceptive sensors come fixed within the robot, for example, position sensors, motors, and torque sensors at joints. These obtain information about the robot itself. Second, external or exteroceptive sensors, for example, cameras, laser scanners, and IMUs, gather information about the environment. The data acquired from both types of sensors can be used to analyse the circumstances of the workspace and judge the state of the robot, which, in turn, helps to control and regulate the defined tasks. In a collaborative environment, where humans are working side by side with robots, one of the vital tasks is to enable the robot to detect the presence of humans in its workspace.

Recent review studies in robotics focus on applications of cobots (Afsari et al., 2018; Hentout et al., 2019; Knudsen & Kaivo-oja, 2020; Wang et al., 2020a, 2020b), programming methods used in collaborative environments for various purposes (De Pace et al., 2020; Villani et al., 2018; Wang et al., 2019; Zaatari et al., 2019), specific tasks like gesture recognition (Liu & Wang, 2018), path planning (Manoharan & Kumaraguru, 2018), the methods used for human–robot collaboration (Halme et al., 2018; Martínez-Villaseñor & Ponce, 2019; Wang et al., 2019, 2020a), or tools/medium-specific systems like vision or inertial etc. (Majumder & Kehtarnavaz, 2021; Mohammed et al., 2016).

Li and Liu (2019) reviewed the standard sensors used in industrial robots and briefly described their working principles. They further discussed the applications in which these sensors have been used, such as HRI, Automated Guided Vehicle (AGV) navigation, manipulator control, object grasping etc. Ogenyi et al. (2021) presented a survey on robotic systems, sensors, actuators and collaborative strategies for a cobot workspace. The robotic systems are also discussed in detail under categories including collaborative arms, wearable robotic arms and robot assistive devices. Ding et al. (2022) present an overview of state-of-the-art perception technologies used with collaborative robots, focusing on algorithms for fusing heterogeneous data from different sensors. The existing sensor-based control methods for various applications in a human–robot environment have been discussed in a recently published work by Cherubini and Navarro-Alarcon (2021). The authors surveyed the sensor types, their integration methods and application domains.

The existing literature, discussed above, reviews various aspects of human robot collaborative environment including application of cobots, programming methods to detect collision, specific tasks such as gesture recognition or path planning, tools/medium-specific systems like vision or inertial sensors etc. In terms of sensor specific literature, reviews on standard sensors used in industrial robots or their applications in HRI are documented. In contrast to the existing literature, this paper provides an in-depth review of sensors and methods that have been utilised specifically in detection and tracking of humans in an industrial collaborative environment (with both fixed and mobile base cobots). This study provides a complete overview of sensor types, models, and locations (where they were mounted in the workspace) when detecting the obstacle. Moreover, we discuss in detail the pros and cons of each sensing approach and the emerging technologies for human detection and tracking. Due to the versatile nature of the obstacle detection module, we limit our review to manipulator workspaces in this study.

The rest of the manuscript is organised as follows: section “Obstacle detection and collision avoidance in cobots” describes the cobot systems available in the industrial domain and also the importance of external sensors in completing cobot tasks. Section “Sensors used for detecting obstacles” discusses in detail the types of sensors presented in the relevant literature. Section “Methods used for detecting the human in a human–robot collaboration environment” discusses and highlights the various algorithms used for detecting humans in industrial collaborative environments. Section “Discussion” discusses the limitations and advantages of the various sensor modalities and briefly discusses the research on sensor fusion and its benefits in this context.

Obstacle detection and collision avoidance in cobots

The interest in HRC has increased vastly in recent years from both the research and the industrial perspective. One of the main reasons for that is the advancement in technology that has made robot systems safer around human co-workers, typically using geometric design to limit pinching risks and force calculation or estimation to detect collisions. With respect to the robot itself, there are several ‘safe’ generations of robots which allow collaborative work between the robot and human workers. Rethink Robotics (https://www.rethinkrobotics.com/) offers a 7 degree of freedom robot arm, Sawyer. Its key feature is that the joint motors are placed in series with an elastic element (a mechanical spring) to ensure that the robot arm remains soft (flexible) to external contact even in case of software failure. The collaborative robots from Universal Robots (https://www.universalrobots.com/) look like traditional industrial robots but are certifiable for most HRC tasks according to ISO/TS 15066 (ISO/TS 15066:2016, 2016). They include several features such as force detection and speed reduction if a human is detected by external sensors. Kuka robotics (https://www.kuka.com/) has introduced the LBR iiwa (KUKA AG, 2021), which is a powerful but lightweight robot with extremely sensitive torque sensors in the joints. The torque sensors provide immediate information regarding contact with the environment, which can be used for avoiding unsafe collision. More recently, Kuka robotics has complemented the LBR iiwa with the LBR iisy system (KUKA AG, 2024).

Moreover, almost every robot manufacturer now includes cobots in their portfolio. For example, Fanuc launched its CRX Collaborative Robot Series (Franka https://www.franka.de/), ABB group (https://global.abb/group/en) offers a dual-arm robot, Yaskawa has the Motoman HC10 (YASKAWA (https://www.yaskawa.eu.com/)), and COMAU has its cobot AURA (COMAU (https://www.comau.com/en/)). Most of these new generations of robots offer maximum flexibility to be programmed even by those without specialised robotics training, having intuitive methods to teach the robot about its environment and surrounding obstacles (De Gea Fernández et al., 2017).

However, it should be noted that cobots cannot be considered intrinsically safe because of their design features; indeed, prior to deployment the cobot is considered a partially completed machine. For instance, a cobot will decelerate and stop when the force limit due to collision with an object is exceeded. However, the definition of the force limit is application dependent. To ensure the system is safe, time-consuming mechanical testing must be carried out to demonstrate that any collision at any configuration in the reachable workspace does not exceed the force and pressure limits defined per body part and per collision type in ISO/TS 15066. Additionally, these tests must consider any potential tool and/or environmental changes. Thus, to improve system productivity it is often imperative to implement efficient collision avoidance in a human–robot collaborative environment, i.e., the obstacles need to be detected, and their motion needs to be predicted to prevent contact occurring.

For this study, we have categorised the previous research work into three categories, entirely simulated (simulated or generated data and an augmented environment), partially simulated (real sensor data but augmented environment) and hardware implementation (real sensor data and real physical environment). Moreover, in a robot workspace the obstacles can be static/fixed (e.g. machine equipment) or these can be dynamic (moving objects like human co-workers) (Majeed et al., 2021). The three defined categories are detailed below and shown in Fig. 2.

- Entirely Simulated (ES): a simulated version of the cobot is used, in different scenarios, using pre-defined or recorded data. For example, Safeea et al. (2020) presented a work in which they applied Newton’s formula for collision avoidance. The method was tested in a simulated environment where the obstacles and targets were represented only by their coordinate positions. Moreover, in another work, Safeea et al. (2019a) calculated the minimum distance between obstacles and human operators using the QR factorization method. The minimum distance evaluations thus help in efficient collision avoidance. In another work, Flacco and De Luca (2010) presented a novel approach to avoid collision by formulating a probabilistic framework of cell decomposition using both single and multiple depth sensors. The work was implemented in a purely augmented environment using simulated data.

- Partially Simulated (PS): In this category, the data is collected from real sensors but processed in a simulated environment. For example, Yang et al. (2018) collected multi-source data using Kinect and Leap Motion sensors. The comprehensive surrounding information was collected by fusing the vision data, using a point cloud, and the operator’s current movement data (captured using Leap Motion sensors attached to the worker’s hand). In this category, the developed models are not tested on real manufacturing robots, so they would require further tuning and testing before deployment in a real-time environment.

- Hardware Implementation (HI): In this category, for efficient collision avoidance, both dynamic and static obstacles are detected using real data, and the experimentation is also physically performed on a real robot. For example, Safeea and Neto (2019) investigated the use of laser scanners along with inertial measurement units (IMUs). Data from both kinds of sensors was fused to find the position of the human worker. Further, the potential field method was used to avoid collision between a worker and a KUKA iiwa robot.
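The potential field idea mentioned in the last category can be illustrated with a minimal sketch. This is a generic textbook-style repulsive field, not the formulation used by Safeea and Neto (2019); the function name, the gain and the influence radius are hypothetical values chosen only for illustration.

```python
import numpy as np

def repulsive_velocity(robot_point, obstacle_point, influence_radius=1.0, gain=0.5):
    """Velocity pushing robot_point away from obstacle_point.

    Uses the negative gradient of U = 0.5 * gain * (1/d - 1/d0)^2,
    where d is the robot-obstacle distance and d0 the influence radius.
    All parameter values are illustrative assumptions.
    """
    robot_point = np.asarray(robot_point, dtype=float)
    obstacle_point = np.asarray(obstacle_point, dtype=float)
    offset = robot_point - obstacle_point
    distance = np.linalg.norm(offset)
    # Outside the influence radius the obstacle has no effect; at zero
    # distance the direction is undefined, so no command is produced here.
    if distance >= influence_radius or distance == 0.0:
        return np.zeros_like(robot_point)
    magnitude = gain * (1.0 / distance - 1.0 / influence_radius) / distance**2
    return magnitude * offset / distance

def attractive_velocity(robot_point, goal_point, gain=1.0):
    """Velocity pulling robot_point towards goal_point (linear attraction)."""
    return gain * (np.asarray(goal_point, dtype=float) - np.asarray(robot_point, dtype=float))

if __name__ == "__main__":
    robot = [0.5, 0.0, 0.0]
    human = [0.0, 0.0, 0.0]   # e.g. a position estimated from fused sensor data
    goal = [1.0, 0.5, 0.0]
    command = attractive_velocity(robot, goal) + repulsive_velocity(robot, human)
    print("commanded velocity:", command)
```

The repulsive term grows quickly as the distance shrinks and vanishes beyond the influence radius, so the robot is only deflected when the detected human is close.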

The use of external sensors monitoring the work-space environment can facilitate the adaptation of classical robot systems to collaborative environments by providing extra layers of safety for human co-workers. Therefore, the main focus of this paper is to review the third category listed above, where real-time data is captured using different types of sensing modalities, and the system is implemented in a real industrial work-space. However, the second category (i.e., PS) is also discussed to some extent.

Sensors used for detecting obstacles

Sensors are of utmost importance for collaborative industrial robots to complete their operations. In particular, when the robot cannot be considered safe, due to for instance a dangerous end-effector, the use of external sensors and monitoring of the shared work-space, can enable the system to be used in a coexistence environment. Even as the manufacturing industry increasingly introduces new safe cobot technologies, the use of additional sensing modalities in the workspace provides additional information, thus providing an extra layer of safety for human operators. For example, if the cobot has a sharp object at its end effector, any collision with a human operator will be very dangerous, likely exceeding the pressure threshold even if contact force is minimal. In this case, it is necessary to guarantee that no collision with a human can possibly occur. Furthermore, even in scenarios where contact below the threshold is allowable, the robot must be stopped on contact. This leads to reduced productivity as there is usually a delay as the system must be manually restarted. Hence, external sensors not only provide safety by avoiding collision, but can also help to improve productivity.

Sensors acquire information from the shared human–robot work-space on the state of the robot and the environment. With the help of this information, the controller issues instructions for the robot to complete the appointed tasks. Sensor-based obstacle detection strongly resembles our central nervous system (The Brain’s Sense of Movement, 2002) and its origin can be related back to the servomechanism problem (Davison & Goldenberg, 1975). For instance, in image-based environment servoing (Cherubini & Chaumette, 2012; Perdereau et al., 2002) vision sensors are used to obtain visual feedback to control the motion of the robot. Shared work-space information can be acquired using different kinds of sensing modalities in an industrial environment. In a shared workspace there are two phases: pre-impact/collision and post-impact/collision. Hence, the type of sensors also can be divided into these two categories. In this section, the type of sensors used in pre-impact/collision and post-impact/collision are discussed. Examples of sensors used for obstacle detection in a human–robot collaboration environment are shown in Fig. 3.

Sensors used in pre-impact/collision phase

Visual sensors

Visual sensors have evolved rapidly over the past few years. They are now being used in many fields, such as autonomous vehicles (Guang et al., 2018), enhancing security using face recognition (Kortli et al., 2020), detecting abnormal behavior in scenes (Fang et al., 2021), and human arm motion tracking in robotics (Palmieri et al., 2020). Visual sensing technology includes various camera types, such as RGB cameras, hyper-spectral and multi-spectral cameras and depth cameras (Li & Liu, 2019). Different types of cameras provide diverse data. RGB cameras, the most common in daily life, are designed to create two-dimensional images that simulate the human vision system, capturing light information in three color wavelengths, i.e. red, green and blue, and their combination (INFINITI ELECTRO-OPTICS (https://www.infinitioptics.com/)). Depth cameras add distance information to simple 2D RGB images, thus creating stereo imaging. According to their operating principle, these can be categorised as RGB binocular (stereo), Time of Flight, or structured light sensors. Even though processing the data captured from visual sensors can be time consuming and complicated, these sensors remain very popular because they are economical, convenient, and supply a vast amount of information.

The majority of the research literature on obstacle detection and avoidance in a robotic environment is based on vision sensors (Melchiorre et al., 2019; Mohammed et al., 2016; Perdereau et al., 2002; Schmidt & Wang, 2014; Wang et al., 2013). The camera may be fixed somewhere in the work-space or may be mounted on some moving part of the robot. Khatib et al. (2017) used a depth camera (Microsoft Kinect) mounted on the end-effector (EEF, eye-in-hand) or on the worker’s head. They achieved a coordinated motion between a KUKA LWR IV robot and a human in a ROS environment. However, in this work three markers were placed around the robot for continuous camera localization and the detection was not real time. In another work, Flacco et al. (2012) proposed a real-time collision avoidance approach by calculating the distance between the robot and possible moving objects. A Microsoft Kinect depth sensor, mounted above the robot workspace, was used in this work. Moreover, Indri et al. (2020a, 2020b) and Rashid et al. (2020) used IP and simple RGB cameras to acquire imaging data. However, in most of the literature 3D or depth cameras have been used (De Luca & Flacco, 2012; Wang et al., 2016). The work done in the industrial field of human detection using vision sensors is presented in Table 1. Moreover, the work is categorized in terms of the main method used to detect the human/obstacle from the sensor data. Some of the methods, particularly skeleton/joint detection, are used not only with vision sensors but also with inertial sensors (section “Inertial sensors”), hence those works are also presented in the table. The algorithms are discussed further in section “Methods used for detecting the human in a human–robot collaboration environment”.
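To make the idea of computing robot-to-obstacle distance from a depth camera more concrete, the following sketch back-projects a depth image to 3D points with a pinhole camera model and takes the brute-force minimum distance to a set of robot control points. It is a simplified illustration only and does not reproduce the depth-space method of Flacco et al. (2012); the intrinsics `fx`, `fy`, `cx`, `cy` and the function names are assumptions, and the robot points are assumed to be already expressed in the camera frame.

```python
import numpy as np

def depth_to_points(depth_m, fx, fy, cx, cy):
    """Back-project a depth image (metres) to an (N, 3) array of camera-frame points.

    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    depth_m = np.asarray(depth_m, dtype=float)
    rows, cols = np.indices(depth_m.shape)
    valid = depth_m > 0
    z = depth_m[valid]
    x = (cols[valid] - cx) * z / fx
    y = (rows[valid] - cy) * z / fy
    return np.column_stack((x, y, z))

def min_distance(robot_points, obstacle_points):
    """Smallest Euclidean distance between two point sets (inf if either is empty)."""
    robot_points = np.asarray(robot_points, dtype=float).reshape(-1, 3)
    obstacle_points = np.asarray(obstacle_points, dtype=float).reshape(-1, 3)
    if len(robot_points) == 0 or len(obstacle_points) == 0:
        return float("inf")
    diffs = robot_points[:, None, :] - obstacle_points[None, :, :]
    return float(np.min(np.linalg.norm(diffs, axis=2)))

if __name__ == "__main__":
    depth = np.zeros((4, 4))
    depth[1, 2] = 1.5                               # a single obstacle pixel
    obstacle = depth_to_points(depth, fx=500.0, fy=500.0, cx=2.0, cy=2.0)
    robot = np.array([[0.0, 0.0, 1.0], [0.1, 0.0, 1.2]])   # hypothetical control points
    print("minimum distance [m]:", min_distance(robot, obstacle))
```

The pairwise computation scales with the product of the two point counts, so a practical system would usually downsample the point cloud or remove the robot's own pixels first.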

Laser sensors

Due to their homogeneity, directionality and brightness, lasers are widely used in many fields for various applications (Dubey & Yadava, 2008). Laser sensors usually consist of an emitter, a detector, and a measuring circuit. They are mainly used to measure physical quantities like distance, velocity and vibration. The main types are laser displacement sensors, laser trackers, and laser scanners. The main principles of laser range measurement are triangulation, time of flight (TOF) and optical interference (Bosch, 2001). TOF refers to the time between emitting the laser and receiving its reflection. TOF laser sensors are among the most widely used range finders, especially for objects at long distances. The triangulation concept, primarily implemented in laser displacement sensors, uses trigonometric functions and similar (homothetic) triangles to compute the distance to objects. Optical interference works on the principle that the superposition of two light beams with distinct phases will generate fringes with different brightness. It is mainly employed in laser tracker sensors.
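The two most common ranging principles above reduce to short formulas, sketched below. The TOF relation halves the round-trip path travelled at the speed of light. The triangulation relation assumes an idealised sensor in which the emitter axis is parallel to the optical axis of the detector lens, so that similar triangles give distance = baseline × focal length / spot offset. The function and parameter names are illustrative assumptions, not taken from any specific sensor.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def tof_distance(round_trip_time_s):
    """Distance from a time-of-flight measurement: the pulse travels out and back."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0

def triangulation_distance(baseline_m, focal_length_m, spot_offset_m):
    """Distance from idealised laser triangulation using similar triangles.

    baseline_m: separation between the laser emitter and the detector lens.
    focal_length_m: focal length of the detector lens.
    spot_offset_m: lateral position of the imaged laser spot on the detector.
    """
    if spot_offset_m <= 0:
        raise ValueError("spot offset must be positive")
    return baseline_m * focal_length_m / spot_offset_m

if __name__ == "__main__":
    print("TOF distance [m]:", tof_distance(20e-9))
    print("triangulation distance [m]:", triangulation_distance(0.05, 0.02, 0.001))
```

Because the triangulated distance is inversely proportional to the spot offset, resolution degrades as the target moves away, which is consistent with triangulation being used mainly in short-range displacement sensors and TOF at longer distances.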

Huang et al. (2021) present a robotic disassembly cell comprising two cobots and a human operator. A safety laser scanner along with active compliance control was used to achieve complicated disassembly operations with safe human–robot interaction. In another safe human–robot collaborative work, Safeea and Neto (2019) used a 2D LIDAR along with IMUs to actively avoid the collision of human workers with the KUKA iiwa robotic manipulator arm. Only a few examples are discussed here; more details of laser scanners used in industrial environments, especially for obstacle detection around manipulators, are summarized in Table 2.

Inertial sensors

Inertial sensors are among the most widely used motion tracking sensors. These sensors comprise an accelerometer, a gyroscope and a magnetometer, and the combination of these three is generally known as an inertial measurement unit (IMU). IMU sensors are mostly placed on the body of the human operator, generally close to the joints (Safeea & Neto, 2019). In the literature, IMU sensors are widely used for obstacle detection in industrial environments, as they are cheap (compared to other sensors) and fast. Moreover, as IMU sensors can be attached to every joint or part of interest, they can increase the overall effectiveness and performance of the obstacle detection system (Glonek & Wojciechowski, 2017).

IMUs are mostly used in combination with other sensors or technologies to provide an extra layer of information. For example, Corrales et al. (2008) used a GypsyGyro-18 IMU sensor with a Ubisense tag to track a human operator in the workspace of a PA-10 robotic manipulator arm. Digo et al. (2020) used MTx IMUs with spatial (V120: Trio tracking bar with 17 passive reflective markers) sensors to acquire data from the upper limbs of a human operator for typical pick and place movements in an industrial environment. Amorim et al. (2021) used 2 IMUs with 6 FLEX3 cameras for the pose estimation of a human operator working in a robotic cell. Further details on the usage of IMU sensors in robot obstacle detection are outlined in Table 3 and also in Table 4, which summarizes multi-modal sensor systems.

Other sensors: proximity, ultrasonic, radar and acoustic

Apart from the aforementioned types of sensors, some other sensors have been utilized to some extent in the literature to detect obstacles/humans in robot workspaces, namely proximity sensors, ultrasonic sensors, acoustic sensors, and magnetic sensors.

Proximity sensors detect an object’s presence without coming in contact with it. Based on the fundamental operation of the sensor, they can be categorized as capacitive or inductive. Capacitive proximity sensors can detect any object, conductive or not, that changes the capacitance of the sensing field, while inductive ones can only detect metallic (electrically conductive) targets. The use of these types of sensors in dynamic obstacle avoidance is very limited in the literature. Another common type of proximity sensor is the distance proximity sensor. These sensors are used to detect the presence of objects within the sensing area. Most of them operate using ultrasonic, infrared or radar waves. Sahu et al. (2014) developed a customised sensor base comprising a force sensor, two capacitive proximity sensors, one inductive proximity sensor, an ultrasonic sensor, and a tactile sensor. The sensors are interfaced using a micro-controller and work as an integral part of the robotic arm.

Ultrasonic sensors detect an obstacle by sending ultrasonic sound waves towards it and estimating its distance from the sensor base from the return time of the echo. Although they are small and cheap, they can only be used over short ranges, so in a human–robot collaborative environment they are mostly used in combination with other sensors. Dániel et al. (2012) used the combination of an ultrasonic sensor and an infrared proximity sensor to avoid joint-level collision in a NACHI MR-20 robot.

Similarly, radar sensors detect the presence of objects by sending electromagnetic waves, and infrared sensors do the same by emitting energy at infrared wavelengths (Stetco et al., 2020). One thing to note is that proximity distance sensors (ultrasonic, infrared and radar proximity sensors) and general distance sensors (ultrasonic, infrared and radar sensors) work in a similar manner but provide different output. The main difference is that proximity sensors sense the presence of an object within a specific range, but do not necessarily provide distance information, whereas distance sensors detect the object and also provide distance information. However, some sensors on the market, like the HC-SR04 ultrasonic sensor (Ultrasonic Distance Sensor - HC-SR04 (5V). https://www.sparkfun.com/products/15569), can be categorised as both. A summary of the usage of these sensors to detect obstacles in a robotic environment is outlined in Table 3.
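The echo-time principle and the difference between a distance output and a proximity output can be sketched in a few lines. The speed of sound of 343 m/s (dry air at roughly 20 °C) and the function names are assumptions for illustration; a real sensor driver would also handle timeouts and temperature compensation.

```python
def echo_to_distance_m(echo_time_s, speed_of_sound_m_s=343.0):
    """Distance output: the ultrasonic pulse travels to the object and back."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    return speed_of_sound_m_s * echo_time_s / 2.0

def proximity_output(distance_m, sensing_range_m):
    """Proximity output: only reports whether an object is inside the sensing range."""
    return distance_m <= sensing_range_m

if __name__ == "__main__":
    distance = echo_to_distance_m(0.010)            # 10 ms round trip
    print("distance [m]:", distance)
    print("object within 2 m:", proximity_output(distance, 2.0))
```

A sensor that exposes the echo time, and therefore allows both functions to be applied, falls into both categories in the sense described above.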

Multiple sensors

Just as humans use different sensing capabilities, robots also benefit from multiple artificial senses to acquire information about the environment. Acquisition of information from multiple sensors is achieved in two ways:

-

Multiple sensors of the same modality: such as multiple cameras or laser scanners to cover a wider area. Research shows that when two or more sensors scan a workspace and one of them is unable to cover a region (for example, because its placement leaves blind spots or because it fails for any reason), the other sensor can compensate (Schmidt & Wang, 2014).

-

Data from multiple sensors of different modality: such as a camera and a LIDAR installed in a workspace (Kousi et al., 2018). This is usually known as a multi-modal sensor system. A typical obstacle detection system works on a single modality (discussed in previous sections). However, in complex environments, no single sensor modality can handle different situations in real-time. For example, if a system contains both a laser scanner and a camera and the obstacle is out of the laser scanner range, the camera can still detect it. Therefore, the use of various kinds of sensors for the same operation increases the chance of the task being done successfully. For example, Safeea and Neto (2019) used laser and inertial sensors to calculate the minimum distance between the human operator and KUKA iiwa robot.
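The redundancy argument in the two cases above can be illustrated with a deliberately simple fusion rule: each sensor either reports a distance to the nearest obstacle or reports nothing (out of range, blind spot or failure), and the fused estimate is the smallest distance reported by any sensor. This is only a sketch under those assumptions; the sensor ranges and function names are hypothetical, and published systems typically use more elaborate fusion.

```python
from typing import Optional, Sequence

def read_sensor(true_distance_m: float, max_range_m: float) -> Optional[float]:
    """Toy sensor model: returns the distance, or None when the obstacle is out of range."""
    return true_distance_m if true_distance_m <= max_range_m else None

def fuse_min_distance(readings: Sequence[Optional[float]]) -> Optional[float]:
    """Conservative fusion: smallest distance among the sensors that produced a reading.

    Returns None when no sensor sees the obstacle, so the caller must decide
    how to treat the 'nothing detected' case.
    """
    valid = [r for r in readings if r is not None]
    return min(valid) if valid else None

if __name__ == "__main__":
    obstacle_distance = 3.5                                    # metres, hypothetical scene
    laser = read_sensor(obstacle_distance, max_range_m=2.0)    # out of laser range
    camera = read_sensor(obstacle_distance, max_range_m=5.0)   # still visible to the camera
    print("laser:", laser, "camera:", camera)
    print("fused distance [m]:", fuse_min_distance([laser, camera]))
```

Taking the minimum is a cautious choice for safety applications, since an obstacle seen by only one sensor still triggers a reaction.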

Studies that incorporate multi-sensor or multi-modal systems to detect obstacles are summarised in Table 4.

Sensors used in post-impact/collision phase

Tactile (touch) and torque/force sensors

In most human–robot collaborative environments, when a robot approaches a human, it reduces its speed. However, many kinematic concepts should also be considered. For example, when a robot is about to reach its singular configuration, the angular velocity of its joints is exceptionally high even though the tip is often hardly moving (Frigola et al., 2006). In these situations, the robot can be extremely dangerous. Therefore, in this case, it is always better to reduce the speed of every part of the robot (joints as well as end-effectors), and in case of contact, a robot must minimise its contact pressure and force.
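The behaviour near a singular configuration can be made concrete with a small worked example. The sketch below assumes a planar two-link arm with hypothetical link lengths of 0.4 m, which is not the manipulator studied in the cited work, and solves the Jacobian relation for the joint velocities required to produce a given tip velocity.

```python
import math


def joint_velocities(q1, q2, vx, vy, l1=0.4, l2=0.4):
    """Joint rates (rad/s) of a planar 2R arm for a desired tip velocity (m/s)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    # Jacobian of the tip position with respect to the joint angles (q1, q2).
    a, b = -l1 * s1 - l2 * s12, -l2 * s12
    c, d = l1 * c1 + l2 * c12, l2 * c12
    det = a * d - b * c  # equals l1 * l2 * sin(q2); zero when the arm is straight
    if abs(det) < 1e-12:
        raise ZeroDivisionError("arm is exactly singular")
    # Closed-form inverse of the 2x2 Jacobian applied to (vx, vy).
    dq1 = (d * vx - b * vy) / det
    dq2 = (-c * vx + a * vy) / det
    return dq1, dq2


if __name__ == "__main__":
    tip_speed = 0.1  # same slow tip motion in both cases
    for elbow_deg in (90.0, 1.0):
        dq = joint_velocities(0.0, math.radians(elbow_deg), tip_speed, 0.0)
        print(f"elbow at {elbow_deg:4.1f} deg -> joint rates {dq[0]:8.3f}, {dq[1]:8.3f} rad/s")
```

With the elbow at 90 degrees the required joint rates stay small, whereas with the elbow almost straight the same tip velocity demands joint rates that are larger by roughly two orders of magnitude.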

Tactile sensors allow a robot to recognize contact, while force/torque sensors measure the force involved. These two types of sensors are discussed together in this section because both come into contact with the obstacle/human operator in the event of a collision. A summary of these sensors used in an industrial environment is given in Table 5.

Tactile sensors equip collaborative robots with touch sensation and thus help enhance the intelligence of the robot. There are several types of these sensors, including capacitive, piezo-electric, piezo-resistive, and optical (Girão et al., 2013). In an industrial environment, tactile sensing technologies are mainly used for object exploration or recognition. However, in the literature, these have also been used for safe human collaboration. For example, Cho et al. (2017) used a custom-made tactile sensor to grasp an irregularly shaped object while avoiding collision with the cup holding the object. O’Neill et al. (2015) also developed a custom stretchable smart skin, made of tactile sensors and wrapped around the robot arm, so that it can intelligently interact with its environment, particularly by sensing and localizing physical contact around its link surfaces. In another work, Vogel et al. (2016) used a floor with 1536 tactile sensors in the workspace of a KUKA KR60 L45 to detect dynamic obstacles/human workers as soon as they step on the sensing floor.

A torque sensor converts a torque applied to a mechanical axis into an electrical signal. Most cobots currently being developed come with built-in torque sensors in their arm joints. The DLR-III (Burger et al., 2010), KUKA LBR iiwa [57], UR5e Lightweight (https://www.universalrobots.com/products/ur5-robot/) and many more use built-in joint torque sensors to detect collisions. In the literature, some work is reported on detecting and avoiding collisions using these built-in sensors. Popov et al. (2017) used the joint torque sensors of the KUKA LBR iiwa 14 R820 to detect an obstacle. Besides detecting the collision, they also calculated the point of contact where the collision happened. To evaluate their point-of-contact results, they used data from a 3D LIDAR and a camera as ground truth for the true position of the collision.

Similarly, Likar and Žlajpah (2014) and Hur et al. (2014) also reported work on detecting obstacles using the robot’s joint torque sensors. However, the use of external force/torque sensors for obstacle detection in a collaborative environment is less common. In one study, Li et al. (2020) used a torque sensor at the JAKA ZU7 robot’s bedplate to detect collision. A novel method was proposed based on the dynamic model that measures the force reaction caused by the robot’s dynamics at its bedplate. In another work, Lu et al. (2006) used a torque sensor on a wristband of the robot to detect collision.
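A common generic way to turn joint torque readings into a collision flag is to compare each measured torque with the torque predicted by a dynamic model and to threshold the difference. The sketch below illustrates only this generic idea; it is not the method of any study cited above, and the function name, torque values and thresholds are hypothetical.

```python
def detect_collision(measured_torques, expected_torques, thresholds):
    """Return the indices of joints whose torque residual exceeds its threshold."""
    if not (len(measured_torques) == len(expected_torques) == len(thresholds)):
        raise ValueError("all inputs must have one entry per joint")
    flagged = []
    for joint, (tau_measured, tau_model, limit) in enumerate(
        zip(measured_torques, expected_torques, thresholds)
    ):
        # Residual: torque that the (assumed) dynamic model cannot explain.
        residual = abs(tau_measured - tau_model)
        if residual > limit:
            flagged.append(joint)
    return flagged


if __name__ == "__main__":
    measured = [1.0, 5.2, 0.3]   # N m, hypothetical sensor readings
    expected = [1.1, 2.0, 0.2]   # N m, hypothetical model prediction
    limits = [0.5, 0.5, 0.5]     # N m, hypothetical per-joint thresholds
    print("joints in collision:", detect_collision(measured, expected, limits))
```

In practice the thresholds trade sensitivity against false alarms caused by model error and sensor noise.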

Methods used for detecting the human in a human–robot collaboration environment

In this section, the main algorithms used to detect/highlight the human in a collaborative workspace are discussed. Where a human and a robot are working in close proximity, there are two general phases: pre-impact/collision and post-impact/collision.

Pre-impact/collision methods

In the pre-collision phase, collision avoidance is the primary task and at least local knowledge of the workspace and the location of obstacles is required. Therefore, like the sensing modalities, the methods that are used in this phase also focus on detecting the object of interest before it comes in contact with the robot.

Visual background/foreground isolation

Background/foreground isolation is a generic process to differentiate the background (workspace including the robot) and the foreground (objects and humans). It can be done in several ways. For example, Cefalo et al. (2017) use a virtual depth image of the workspace, including the robot. The robot kinematics is used to move the virtual model to match the real robot configuration, and a sequence of linear transformations is defined to obtain a depth image with the same viewpoint and field of view as the Kinect camera. The virtual depth image is then subtracted from the real camera image to cancel the robot and the fixed space and build a map containing only the obstacles.
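The subtraction step can be sketched as follows. This is a simplified illustration and not the implementation of Cefalo et al. (2017): depth images are plain nested lists in metres, a depth of 0 is assumed to mean "no reading", and the 0.05 m tolerance is a hypothetical value.

```python
def obstacle_mask(real_depth, virtual_depth, tolerance_m=0.05):
    """Mark pixels where the real scene is closer to the camera than the virtual model."""
    mask = []
    for real_row, virtual_row in zip(real_depth, virtual_depth):
        mask_row = []
        for real, virtual in zip(real_row, virtual_row):
            # A depth of 0 is treated as a missing reading (assumed convention).
            is_obstacle = real > 0 and (virtual - real) > tolerance_m
            mask_row.append(is_obstacle)
        mask.append(mask_row)
    return mask


if __name__ == "__main__":
    virtual = [[2.0, 2.0], [2.0, 2.0]]  # rendered robot plus fixed workspace
    real = [[2.0, 2.0], [1.2, 0.0]]     # camera image with something at 1.2 m
    for row in obstacle_mask(real, virtual):
        print(row)
```

Pixels that match the virtual model within the tolerance cancel out, so only unmodelled objects in front of the known scene remain in the map.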

Another way to isolate the background from the foreground is to use a reference frame. A reference frame can be any image without the unknown objects that need to be detected. Rashid et al. (2020) use a reference frame to detect the humans in the workspace. Henrich and Gecks (2008) utilize multiple images to create a reference image. That reference image is then used to calculate a difference image by evenly subdividing the currently captured image into non-overlapping tiles of a grid, where each tile contains some pixels.
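A tile-based difference image of this kind can be sketched as below. The per-tile statistic (mean absolute intensity difference), the tile size and the threshold are assumptions made for illustration; the cited work may use a different measure.

```python
def changed_tiles(reference, current, tile_size=2, threshold=10.0):
    """Return (tile_row, tile_col) pairs whose mean absolute difference exceeds threshold."""
    height, width = len(reference), len(reference[0])
    changed = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            total, count = 0.0, 0
            # Accumulate the absolute difference over one non-overlapping tile.
            for y in range(top, min(top + tile_size, height)):
                for x in range(left, min(left + tile_size, width)):
                    total += abs(current[y][x] - reference[y][x])
                    count += 1
            if total / count > threshold:
                changed.append((top // tile_size, left // tile_size))
    return changed


if __name__ == "__main__":
    reference = [[0] * 4 for _ in range(4)]
    current = [row[:] for row in reference]
    current[0][0] = 8                      # isolated noise, averaged away
    for y in (2, 3):
        for x in (2, 3):
            current[y][x] = 200            # a new object in the lower-right tile
    print(changed_tiles(reference, current))
```

Working on tiles rather than single pixels makes the comparison less sensitive to isolated pixel noise, at the cost of spatial resolution.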

Instead of using a fixed reference image, an alternative is to use the previous frame or a number of previous frames as a reference and to detect the changed pixels/regions as objects. Kahlouche and Ali (2007) and Rea et al. (2019) used optical flow to identify the dynamic instants. Furthermore, Bascetta et al. (2011) used a static camera to produce one image as a reference for each time step.

This method is easy and straightforward in the scenario where exact information about the obstacle is not required, and the workspace remains static. Also, it is more suitable when the focus is on detecting not only the human but also other static objects that may come in the way of the robot. However, one of the main limitations of this method occurs when a frame contains overlapping objects. For instance, Kühn et al. (2006) used the difference image method to detect obstacles. However, when the objects are lined up behind each other, the algorithm treats these as one object and they are projected in the same area in a difference image.

Visual object detection

Trained neural networks are used to detect and locate objects of interest within an image. Convolutional Neural Networks (CNN) have been widely used as they are highly successful in object detection due to their ability to learn features automatically. Indri et al. (2020a) and Kenk et al. (2019) used a You Only Look Once (YOLO) based object detector to detect and track humans.

Furthermore, objects can be detected using distance-based sensing modalities such as laser, ultrasonic and proximity sensors. With these types of sensors, only the distance to the object can be known, without identification of the object itself. For example, Huang et al. (2021) used a safety laser scanner to configure protection zones so that the human and robot do not come close to each other.
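The idea of protection zones can be illustrated with a short sketch that maps the closest range reading to a robot behaviour. The zone radii, the three behaviour labels and the fail-safe handling of an empty scan are hypothetical choices made for this example and do not reproduce the configuration used by Huang et al. (2021).

```python
def zone_for_distance(distance_m, stop_radius_m=0.5, slow_radius_m=1.5):
    """Map a single distance to a behaviour label using two nested zones."""
    if distance_m <= stop_radius_m:
        return "stop"
    if distance_m <= slow_radius_m:
        return "slow"
    return "normal"


def robot_command(scan_ranges_m, stop_radius_m=0.5, slow_radius_m=1.5):
    """Choose the behaviour from the closest valid reading of one scan."""
    # Readings of 0 or below are treated as invalid (assumed convention).
    valid = [r for r in scan_ranges_m if r > 0]
    if not valid:
        # Assumed fail-safe design choice: no usable data means stop.
        return "stop"
    return zone_for_distance(min(valid), stop_radius_m, slow_radius_m)


if __name__ == "__main__":
    print(robot_command([3.0, 1.2, 2.5]))
    print(robot_command([3.0, 0.4]))
```

Because only distances are used, the logic reacts identically to a person and to any other object entering a zone.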

Object detection models like YOLO are popular for their speed and accuracy. Additionally, new objects outside the training set can easily be added to the network using transfer learning. Using YOLO with a depth camera enhances its application, beyond object detection, to determination of the object’s distance. However, the object detection models only register the global position and orientation of a person. In a collaborative environment it is also necessary to have knowledge of the location of the human joints and especially how near the hands are to the system. Existing networks such as YOLO are not currently useful in this respect.
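Combining a detector with a depth camera can be sketched as follows. The detector itself is not invoked here: the bounding box is assumed to come from a model such as YOLO, the depth image is a nested list in metres aligned with the colour image, and a depth of 0 is assumed to mean "no reading". Using the median over the box is an illustrative choice.

```python
from statistics import median


def distance_to_detection(depth_image, box):
    """Median depth in metres inside a box (x_min, y_min, x_max, y_max), max exclusive."""
    x_min, y_min, x_max, y_max = box
    samples = [
        depth_image[y][x]
        for y in range(y_min, y_max)
        for x in range(x_min, x_max)
        if depth_image[y][x] > 0  # skip pixels without a depth reading (assumed convention)
    ]
    if not samples:
        return None  # no valid depth inside the box
    return median(samples)


if __name__ == "__main__":
    depth = [
        [3.0, 3.0, 3.0, 3.0],
        [3.0, 1.5, 1.6, 3.0],
        [3.0, 0.0, 1.4, 3.0],
        [3.0, 3.0, 3.0, 3.0],
    ]
    person_box = (1, 1, 3, 3)  # hypothetical detector output
    print(distance_to_detection(depth, person_box))
```

The result is a single distance per detected person, which is consistent with the limitation noted above: the location of individual joints or hands is not recovered.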

Skeleton/joints detection

Human–robot interaction in the industrial environment may be dangerous, especially when human operators are working in close proximity to robots. Therefore, not only the global position and orientation of humans is required, but also the precise localisation of joints/body parts. To detect human joints, different sensors can be used, such as vision, IMU and motion capture systems. In terms of vision, mainly the OpenNI library from Kinect is used for human skeleton estimation (Zanchettin et al., 2016; Zhang et al., 2020a, 2020b). There are also numerous vision-based pose estimation neural networks like OpenPose. OpenPose is an open-source system for 2D multi-person body detection, including joints, that uses deep learning and part affinity fields, which enable this type of method to achieve high accuracy while detecting pose in real-time. Antão et al. (2019) used OpenPose with the COCO dataset library to detect the body of a human operator working with a UR5 robot.

As well as vision systems, inertial sensors are widely used to detect human body joints. The IMUs are placed on the desired joints, which can then be tracked (Uzunović et al., 2018; Zhang et al., 2020a, 2020b). For example, Neto et al. (2018) placed five IMUs on the upper body of a human operator and kept track of joint motion using an extended Kalman filter. Another way to capture human joints information is by using Motion Capture (MoCap) systems. In MoCap systems, users wear tags or sensors near each joint of the body and the system calculates each joint movement by comparing the positions and angles between the worn tags/sensors. Tuli et al. (2022) used an Xsens motion capturing system with eight tags on human upper body to locate and track the human position.

Skeleton/joints detection is the safest solution when human and robot are working very closely together, as it can detect and track the various pertinent parts of the human body such as the head, hands, arms and torso.
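Once joint positions are available from any of the sources above, one quantity that can be derived from them is the distance between the robot and the nearest body part. The following is a minimal sketch under the assumption that the joints and the robot tool point are expressed in the same coordinate frame; the joint names and coordinates are hypothetical.

```python
import math


def nearest_joint(joints, tool_point):
    """Return (joint_name, distance_m) for the tracked joint closest to the tool point."""
    if not joints:
        raise ValueError("at least one tracked joint is required")
    # math.dist computes the Euclidean distance between two points.
    name = min(joints, key=lambda joint: math.dist(joints[joint], tool_point))
    return name, math.dist(joints[name], tool_point)


if __name__ == "__main__":
    skeleton = {
        "head": (0.0, 0.0, 1.7),
        "torso": (0.0, 0.0, 1.2),
        "right_hand": (0.6, 0.1, 1.0),
    }
    tool = (0.9, 0.1, 1.0)  # hypothetical robot tool point in the same frame
    joint, distance = nearest_joint(skeleton, tool)
    print(f"closest joint: {joint} at {distance:.2f} m")
```

A whole-body position estimate would report the torso distance here, whereas the joint-level view shows that the hand is considerably closer to the robot.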

Marker based detection

Another method that has been used in an industrial environment to detect a human operator is marker based detection. In this method, human operators wear clothing of a specific color or markers on their body, which are detected and tracked by receivers such as cameras or Radio Frequency systems. Tan and Arai (2011) attached sewn colored patches to the operator’s clothes to represent the shoulders, elbows and head. Diab et al. (2020) attached RFID tags to the target object. Hawkins et al. (2013) created brightly colored gloves to allow the detection of human hands by the vision system. Marker based detection is quick and easy; however, it requires the human to wear the tags, which leads to dangerous scenarios if someone enters the workspace without wearing dedicated markers.

Discussion

Sensors constitute a vital part of an industrial workspace where humans and robots work together. In particular, relying simply on force-power limitation to ensure operator safety has stifled the deployment of fenceless collaborative robots in manufacturing. From Tables 1, 2, 3, 4, 5, it may be noted that several research works involve a combination of different sensors. The primary reason is that a combination of sensors of different modalities (or even of the same modality) provides more reliable detection, because the sensors compensate for each other’s limitations. Moreover, as different types of sensors are useful for different purposes in each application, in Table 6 we summarise some useful characteristics of sensors specifically for the scenario of human detection in a collaborative environment.

One of the main advantages of pre-impact/collision methods is that these are capable of detecting the human before any contact takes place. Below we discuss pros and cons of each sensor type outlined in section “Sensors used in pre-impact/collision phase”.

-

Vision sensors: the significant advantage of using vision sensors is that they are non-intrusive and allow a robot to perform various tasks using only one sensor. However, like any other safety related system, implementing redundancy through multiple or multimodal sensors is imperative to ensure safety in case of any sensor failure. Moreover, vision sensing modalities not only have difficulty producing robust information when facing cluttered environments, as light conditions can easily interfere with the visual results, but they also place a high computational burden on the system when using advanced vision algorithms for stereo matching and depth estimation.

-

Laser sensors: laser scanners are fast, accurate in scanning, and can detect objects at a significant distance, but mostly these sensors, especially for 3D scanning, are quite expensive and require high computational power. These also provide a low number of images per second.

-

Inertial sensors: inertial sensors provide data with good precision if used for a short time, but as time goes by, the error accumulates. Moreover, they only work if the human is wearing them and therefore cannot be used in detecting humans who may accidentally enter the robot manipulator workspace without being fitted with sensors.

-

Proximity sensors: proximity sensors are low cost, have low power consumption, and operate at high speed. However, they cover a limited range and can be easily affected by environmental conditions such as temperature.
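The error accumulation noted for inertial sensors in the list above can be illustrated numerically. The sketch assumes that position is obtained by integrating accelerometer readings twice and that the only error source is a small constant bias; the bias value and time step are hypothetical.

```python
def position_error_from_bias(bias_m_s2, duration_s, dt_s=0.01):
    """Double-integrate a constant accelerometer bias and return the position error in metres."""
    velocity_error = 0.0
    position_error = 0.0
    steps = int(round(duration_s / dt_s))
    for _ in range(steps):
        # First integration: the bias becomes a growing velocity error.
        velocity_error += bias_m_s2 * dt_s
        # Second integration: the velocity error becomes a position error.
        position_error += velocity_error * dt_s
    return position_error


if __name__ == "__main__":
    bias = 0.05  # m/s^2, hypothetical uncorrected bias
    for seconds in (1.0, 10.0):
        error = position_error_from_bias(bias, seconds)
        print(f"after {seconds:4.1f} s: {error:.4f} m")
```

Under these assumptions the position error grows approximately with the square of the elapsed time, which is why the data are precise over short intervals but degrade without correction from another source.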

Post-collision sensors are used to ensure minimal injury to a human operator if a collision occurs. Cobots equipped with only these types of sensors must operate at low speeds and with low force when a human is present. Additionally, reliance on force-power limitation requires assurances that the limits are respected throughout the workspace, a process that typically requires testing. The pros and cons of these sensors are discussed below:

-

Tactile sensors: tactile sensors can detect an object even when a vision sensor cannot due to an occluded surface. Although tactile sensors are getting more and more attention, their performance is not yet very reliable. The development of these sensors requires advancement in various technological fields like electronics, materials etc. Therefore, despite these sensors having significant potential, there is a long way to go for these to be successfully used in obstacle detection modules of cobots.

-

Force sensors: force sensors only trigger when an object comes in contact; therefore, these are not ideal for avoiding an obstacle.

Along with using multiple kinds of sensors in a system, it is also important to note where these sensors will be installed in the workspace. A sensor must be placed in such a way that it covers the maximum area/angle of the robot workspace. There is no defined location or position to install each kind of sensor. In the literature, researchers have proposed different locations to enhance the system’s efficiency. For example, Perdereau et al. (2002) used 5 IMU sensors and placed these on the upper body of a human (two at each forearm, two at each upper arm and one at the chest), while Digo et al. (2020) used 7 IMU sensors, one at the table for reference and six on the human body (one at the right forearm, one at the right upper arm, two at both shoulders, one at the sternum and the last one at the pelvis). Therefore, in Table 4 we also list the location of installed sensors in each study.

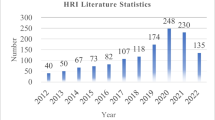

This review of the literature was conducted up to January 2023 and 171 papers were identified as relevant to the topic of external sensors for object detection in the robotic manipulator environment. Of these, 76 papers satisfied the criteria of hardware implementation as described in section “Obstacle detection and collision avoidance in cobots”. Overall, from this review, we can summarize that the use of vision sensors (either single or in combination) dominates with about 41.3% usage in the literature. The use of other sensors discussed in this article, whether used alone or in a multi-sensor system, is presented in Fig. 5. Moreover, comparing systems that use a single sensor with those that use a combination, we can conclude that the use of multiple sensors (51%) is fairly equal to the use of a single sensor system (48.7%), as shown in Fig. 4. In multi-sensor systems, multiple vision sensors are mainly used, but when it comes to different modalities, vision with laser and vision with inertial sensors are used most compared to other combinations. Further, if we focus on single sensor systems, again the vision sensor dominates, having been used in 40% of the relevant studies.

Multi-modal sensing systems aim to get the best out of different sensors. However, using external sensors in a system means increasing the price and computational cost; thus, adding more sensors adds extra levels of complexity (Wang et al., 2020a, 2020b). The best solution is to utilise optimal mathematical and statistical methods to achieve the best possible accuracy with minimal cost. This requires efficient coding and algorithms that can make optimal use of noisy data from low cost sensors without a high computational burden. A recent review (Ding et al., 2022) indicates that the main algorithms which have been used so far in the literature for robot perception are stochastic algorithms such as the Kalman filter and particle filters. Researchers have also explored artificial intelligence based algorithms, including fuzzy logic and neural networks; however, to date the AI based approaches are not as mature in terms of accuracy or computational cost.
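As an illustration of how a stochastic filter extracts a usable estimate from noisy low cost sensor data at small computational cost, the sketch below implements a scalar Kalman filter. It is a deliberately simplified linear case in which the tracked quantity is modelled as nearly constant; the noise variances and initial values are hypothetical, and the sketch is not taken from any of the reviewed systems.

```python
def kalman_filter_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a nearly constant state; returns the estimate after each sample."""
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the state is modelled as constant, so only the uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement according to their variances.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    # Hypothetical noisy distance readings (metres) around a true value of 1.0.
    readings = [1.08, 0.93, 1.02, 0.97, 1.05, 0.99, 1.01, 0.96]
    for z, x in zip(readings, kalman_filter_1d(readings, meas_var=0.04, process_var=1e-4, x0=readings[0])):
        print(f"measured {z:.2f} -> estimate {x:.3f}")
```

Each update needs only a handful of arithmetic operations, which is one reason filters of this family suit perception pipelines with limited computational budgets.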

For the specific task of detecting a human in the workspace, various kinds of algorithms are used in the literature, mainly with vision sensors. From the review, we can summarize that the background/foreground method is used most often (41.0%) to isolate the human operator or any dynamic moving obstacle from the static environment. The second most used method is skeleton/joints detection (37.2%), using both vision and inertial sensors. Figure 5 illustrates the percentage of research works using each method to detect humans. Moreover, Tables 2, 3, and 5 detail the methods and algorithms used when vision sensors are excluded.

Conclusions

In this study, a comprehensive review of sensors for human detection in a robotic manipulator industrial environment is presented. We have grouped the literature review into three categories: entirely simulated (both the data and the environment are simulated), partially simulated (real data but simulated environment), and hardware implementation (both data and environment are real). Focusing on hardware implementation, a range of different sensor modalities have been implemented on a wide range of industry-standard manipulators. From the review, it can be concluded that the most commonly used sensor technology for obstacle detection is the vision sensor, and specifically depth cameras, due to the rich information they provide about the environment. However, a major drawback of using a vision sensor is that it has difficulty in producing robust information when facing cluttered environments and it also places a high computational burden on the system. To overcome this limitation of a vision sensor and to get more accurate information (especially distance) in any kind of environmental condition, laser sensors have been proposed. Laser sensors provide fast and accurate information but tend to be expensive, especially 3D laser sensors. Tactile sensors can be useful in aiding the robot in grasping tasks but are of limited application in obstacle detection and avoidance. Inertial sensors are particularly useful for detection and tracking of humans in the robot workspace, but their major limitation is that they have to be worn by human operators and therefore cannot protect a human who unintentionally enters the workspace. Many works have used multiple sensors for enhanced environment perception, but this also increases the complexity and the cost. Therefore, future research should also focus on methods to not only improve the reliability and accuracy of the system but also to reduce the cost and computational burden of the perception system.

Data availability

The complete search history and findings supporting this study are available from the corresponding author upon request.

References

Abb Group. Leading Digital Technologies for Industry—Abb Group. (n.d.). ABB. Retrieved January 29, 2024, from https://global.abb/group/en

Adamides, O. A., Modur, A. S., Kumar, S., & Sahin, F. (2019). A time-of-flight on-robot proximity sensing system to achieve human detection for collaborative robots. In IEEE 15th international conference on automation science and engineering (CASE), 2019 (pp. 1230–1236). https://doi.org/10.1109/coase.2019.8842875

Ahmad, R., & Plapper, P. (2015). Human-robot collaboration: Twofold strategy algorithm to avoid collisions using TOF sensor. International Journal of Materials, Mechanics and Manufacturing, 4(2), 144–147. https://doi.org/10.7763/ijmmm.2016.v4.243

Amorim, A., Guimarães, D., Mendonça, T., Neto, P., Costa, P., & Moreira, A. P. (2021). Robust human position estimation in cooperative robotic cells. Robotics and Computer-Integrated Manufacturing, 67, 102035. https://doi.org/10.1016/j.rcim.2020.102035

Antão, L., Reis, J., & Gonçalves, G. (2019). Voxel-based space monitoring in human-robot collaboration environments. In IEEE international conference on emerging technologies and factory automation (ETFA), 2019 (pp. 552–559). https://doi.org/10.1109/etfa.2019.8869240

Afsari, K., Gupta, S., Afkhamiaghda, M., & Lu, Z. (2018, April). Applications of collaborative industrial robots in building construction. In 54th ASC Annual International Conference Proceedings (pp. 472–479).

Avanzini, G. B., Ceriani, N. M., Zanchettin, A. M., Rocco, P., & Bascetta, L. (2014). Safety control of industrial robots based on a distributed distance sensor. IEEE Transactions on Control Systems Technology, 22(6), 2127–2140. https://doi.org/10.1109/tcst.2014.2300696

Ayyad, A., Halwani, M., Swart, D., Muthusamy, R., Almaskari, F., & Zweiri, Y. (2023). Neuromorphic vision based control for the precise positioning of robotic drilling systems. Robotics and Computer-Integrated Manufacturing, 79, 102419. https://doi.org/10.1016/j.rcim.2022.102419

Bascetta, L., Ferretti, G., Rocco, P., Ardö, H., Bruyninckx, H., Demeester, E., & Di Lello, E. (2011). Towards safe human-robot interaction in robotic cells: An approach based on visual tracking and intention estimation. In IEEE/RSJ international conference on intelligent robots and systems, 2011 (pp. 2971–2978). https://doi.org/10.1109/iros.2011.6048287

Berg, J., Lottermoser, A., Richter, C., & Reinhart, G. (2019). Human-robot-interaction for mobile industrial robot teams. Procedia CIRP, 79, 614–619. https://doi.org/10.1016/j.procir.2019.02.080

Borrell, J., Pérez-Vidal, C., Heras, J. V. S., & Perez-Hernandez, J. J. (2020). Robotic pick-and-place time optimization: Application to footwear production. IEEE Access, 8, 209428–209440. https://doi.org/10.1109/access.2020.3037145

Bosch, T. (2001). Laser ranging: A critical review of usual techniques for distance measurement. Optical Engineering, 40(1), 10. https://doi.org/10.1117/1.1330700

Bragança, S., Costa, E., Castellucci, I., & Arezes, P. (2019). A brief overview of the use of collaborative robots in industry 4.0: Human role and safety. In Studies in systems, decision and control (pp. 641–650). https://doi.org/10.1007/978-3-030-14730-3_68

Cefalo, M., Magrini, E., & Oriolo, G. (2017). Parallel collision check for sensor based real-time motion planning. In IEEE international conference on robotics and automation (ICRA), 2017 (pp. 1936–1943). https://doi.org/10.1109/icra.2017.7989225

Ceriani, N. M., Zanchettin, A. M., Rocco, P., Stolt, A., & Robertsson, A. (2015). Reactive task adaptation based on hierarchical constraints classification for safe industrial robots. IEEE-ASME Transactions on Mechatronics, 20(6), 2935–2949. https://doi.org/10.1109/tmech.2015.2415462

Cherubini, A., & Chaumette, F. (2012). Visual navigation of a mobile robot with laser-based collision avoidance. The International Journal of Robotics Research, 32(2), 189–205. https://doi.org/10.1177/0278364912460413

Cherubini, A., & Navarro-Alarcon, D. (2021). Sensor-based control for collaborative robots: Fundamentals, challenges, and opportunities. Frontiers in Neurorobotics. https://doi.org/10.3389/fnbot.2020.576846

Cherubini, A., Passama, R., Crosnier, A., Lasnier, A., & Fraisse, P. (2016). Collaborative manufacturing with physical human–robot interaction. Robotics and Computer-Integrated Manufacturing, 40, 1–13. https://doi.org/10.1016/j.rcim.2015.12.007

Cho, I., Lee, H. K., Chang, S., & Yoon, E. (2017). Compliant ultrasound proximity sensor for the safe operation of human friendly robots integrated with tactile sensing capability. Journal of Electrical Engineering & Technology, 12(1), 310–316. https://doi.org/10.5370/jeet.2017.12.1.310

Collaborative Robotic Automation—Cobots from Universal Robots. (n.d.). Universal robots. Retrieved January 29, 2024, from https://www.universal-robots.com/

Collaborative Robotics for Assembly and Kitting in Smart Manufacturing. (n.d.). European Commission. Retrieved January 29, 2024, from https://cordis.europa.eu/project/id/688807

Corrales, J. A., Candelas, F. A., & Torres, F. (2008, March). Hybrid tracking of human operators using IMU/UWB data fusion by a Kalman filter. In Proceedings of the 3rd ACM/IEEE international conference on Human robot interaction (pp. 193–200).

Costanzo, M., De Maria, G., Lettera, G., & Natale, C. (2022). A multimodal approach to human safety in collaborative robotic workcells. IEEE Transactions on Automation Science and Engineering, 19(2), 1202–1216. https://doi.org/10.1109/tase.2020.3043286

Dániel, B., Korondi, P., & Thomessen, T. (2012). Joint level collision avoidance for industrial robots. IFAC Proceedings Volumes, 45(22), 655–658. https://doi.org/10.3182/20120905-3-hr-2030.00068

Davison, E., & Goldenberg, A. (1975). Robust control of a general servomechanism problem: The servo compensator. Automatica, 11(5), 461–471. https://doi.org/10.1016/0005-1098(75)90022-9

De Luca, A., Albu‐Schäffer, A., Haddadin, S., & Hirzinger, G. (2006). Collision detection and safe reaction with the DLR-III lightweight manipulator arm. In IEEE/RSJ international conference on intelligent robots and systems, 2006 (pp. 1623–1630). https://doi.org/10.1109/iros.2006.282053

De Luca, A., & Flacco, F. (2012). Integrated control for pHRI: Collision avoidance, detection, reaction and collaboration. In 4th IEEE RAS & EMBS international conference on biomedical robotics and biomechatronics (BioRob), 2012 (pp. 288–295). https://doi.org/10.1109/biorob.2012.6290917

De Gea Fernández, J., Mronga, D., Günther, M., Knobloch, T., Wirkus, M., Schröer, M., Trampler, M., Stiene, S., Kirchner, E. A., Bargsten, V., Bänziger, T., Teiwes, J., Krüger, T., & Kirchner, F. (2017). Multimodal sensor-based whole-body control for human–robot collaboration in industrial settings. Robotics and Autonomous Systems, 94, 102–119. https://doi.org/10.1016/j.robot.2017.04.007

De Pace, F., Manuri, F., Sanna, A., & Fornaro, C. (2020). A systematic review of augmented reality interfaces for collaborative industrial robots. Computers & Industrial Engineering, 149, 106806. https://doi.org/10.1016/j.cie.2020.106806

Deniz, C., & Gökmen, G. (2021). A new robotic application for COVID-19 specimen collection process. Journal of Robotics and Control (JRC), 3(1), 73–77. https://doi.org/10.18196/jrc.v3i1.11659

Diab, M., Pomarlan, M., Beßler, D., Akbari, A., Rosell, J., Bateman, J. A., & Beetz, M. (2020). SkillMaN—A skill-based robotic manipulation framework based on perception and reasoning. Robotics and Autonomous Systems, 134, 103653. https://doi.org/10.1016/j.robot.2020.103653

Digo, E., Antonelli, M., Cornagliotto, V., Pastorelli, S. P., & Gastaldi, L. (2020). Collection and analysis of human upper limbs motion features for collaborative robotic applications. Robotics, 9(2), 33. https://doi.org/10.3390/robotics9020033

Ding, H., Schipper, M., & Matthias, B. (2013). Collaborative behavior design of industrial robots for multiple human-robot collaboration. In IEEE ISR 2013 (pp. 1–6). https://doi.org/10.1109/isr.2013.6695707

Ding, X., Guo, J., Ren, Z., & Deng, P. (2022). State-of-the-art in perception technologies for collaborative robots. IEEE Sensors Journal, 22(18), 17635–17645. https://doi.org/10.1109/jsen.2021.3064588

Ding, Y., Wilhelm, F., Faulhammer, L., & Thomas, U. (2019). With proximity servoing towards safe human-robot-interaction. In IEEE/RSJ international conference on intelligent robots and systems (IROS), 2019 (pp. 4907–4912). https://doi.org/10.1109/iros40897.2019.8968438

Burger, R., Haddadin, S., Plank, G., Parusel, S., & Hirzinger, G. (2010, October). The driver concept for the DLR lightweight robot III. In 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (pp. 5453–5459). IEEE.

Du, G., Long, S., Li, F., & Huang, X. (2018). Active collision avoidance for human-robot interaction with UKF, expert system, and artificial potential field method. Frontiers in Robotics and AI. https://doi.org/10.3389/frobt.2018.00125

Du, G., & Zhang, P. (2016). A novel human–manipulators interface using hybrid sensors with Kalman filter and particle filter. Robotics and Computer-Integrated Manufacturing, 38, 93–101. https://doi.org/10.1016/j.rcim.2015.10.007

Dubey, A. K., & Yadava, V. (2008). Laser beam machining—A review. International Journal of Machine Tools & Manufacture, 48(6), 609–628. https://doi.org/10.1016/j.ijmachtools.2007.10.017

Kumar, S., Savur, C., & Sahin, F. (2018). Dynamic awareness of an industrial robotic arm using time-of-flight laser-ranging sensors. In 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, (pp. 2850–2857)

Elshafie, M., & Bone, G. M. (2008). Markerless human tracking for industrial environments. In Conference proceedings. https://doi.org/10.1109/ccece.2008.4564716

Escobedo, C., Strong, M., West, M. E., Aramburu, A., & Roncone, A. (2021). Contact anticipation for physical human–robot interaction with robotic manipulators using onboard proximity sensors. In 2021 IEEE/RSJ international conference on intelligent robots and systems (IROS). https://doi.org/10.1109/iros51168.2021.9636130

Fang, M., Chen, Z., Przystupa, K., Li, T., Majka, M., & Kochan, O. (2021). Examination of abnormal behavior detection based on improved YOLOV3. Electronics, 10(2), 197. https://doi.org/10.3390/electronics10020197

Fischer, M., & Henrich, D. (2009a). 3D collision detection for industrial robots and unknown obstacles using multiple depth images. In Springer eBooks (pp. 111–122). https://doi.org/10.1007/978-3-642-01213-6_11

Fischer, M., & Henrich, D. (2009b). Surveillance of robots using multiple colour or depth cameras with distributed processing. In Third ACM/IEEE international conference on distributed smart cameras (ICDSC), 2009 (pp. 1–8). https://doi.org/10.1109/icdsc.2009.5289381

Flacco, F., & De Luca, A. (2010). Multiple depth/presence sensors: Integration and optimal placement for human/robot coexistence. In IEEE international conference on robotics and automation, 2010 (pp. 3916–3923). https://doi.org/10.1109/robot.2010.5509125

Flacco, F., Kröger, T., De Luca, A., & Khatib, O. (2012). A depth space approach to human-robot collision avoidance. In IEEE international conference on robotics and automation, 2012 (pp. 338–345). https://doi.org/10.1109/icra.2012.6225245

Franka Emika - The Robotics Company. (n.d.). Franka robotics. Retrieved January 29, 2024, from https://www.franka.de/

Frigola, M., Casals, A., & Amat, J. (2006). Human-robot interaction based on a sensitive bumper skin. In IEEE/RSJ international conference on intelligent robots and systems, 2006 (pp. 283–287). https://doi.org/10.1109/iros.2006.282139

Fritzsche, M., Saenz, J., & Penzlin, F. (2016). A large scale tactile sensor for safe mobile robot manipulation. In 2016 11th ACM/IEEE international conference on human-robot interaction (HRI). https://doi.org/10.1109/hri.2016.7451789

Gabler, V., Stahl, T., Huber, G., Oguz, O. S., & Wollherr, D. (2017). A game-theoretic approach for adaptive action selection in close proximity human-robot-collaboration. In IEEE international conference on robotics and automation (ICRA), 2017 (pp. 2897–2903). https://doi.org/10.1109/icra.2017.7989336

Girão, P. S., Ramos, P. M., Postolache, O., & Pereira, M. (2013). Tactile sensors for robotic applications. Measurement, 46(3), 1257–1271. https://doi.org/10.1016/j.measurement.2012.11.015

Glonek, G., & Wojciechowski, A. (2017). Hybrid orientation based human limbs motion tracking method. Sensors, 17(12), 2857. https://doi.org/10.3390/s17122857

Guang, X., Gao, Y., Leung, H., Liu, P., & Li, G. (2018). An autonomous vehicle navigation system based on inertial and visual sensors. Sensors, 18(9), 2952. https://doi.org/10.3390/s18092952

Halme, R., Lanz, M., Kämäräinen, J., Pieters, R., Latokartano, J., & Hietanen, A. (2018). Review of vision-based safety systems for human-robot collaboration. Procedia CIRP, 72, 111–116. https://doi.org/10.1016/j.procir.2018.03.043

Han, D., Nie, H., Chen, J., & Chen, M. (2018). Dynamic obstacle avoidance for manipulators using distance calculation and discrete detection. Robotics and Computer-Integrated Manufacturing, 49, 98–104. https://doi.org/10.1016/j.rcim.2017.05.013

Han, F., Siva, S., & Zhang, H. (2019). Scalable representation learning for long-term augmented reality-based information delivery in collaborative human-robot perception. In Lecture notes in computer science (pp. 47–62). https://doi.org/10.1007/978-3-030-21565-1_4

Hawkins, K. P., Vo, N., Bansal, S., & Bobick, A. F. (2013). Probabilistic human action prediction and wait-sensitive planning for responsive human-robot collaboration. In 13th IEEE-RAS international conference on humanoid robots (humanoids), 2013 (pp. 499–506). https://doi.org/10.1109/humanoids.2013.7030020

Henrich, D., & Gecks, T. (2008). Multi-camera collision detection between known and unknown objects. In Second ACM/IEEE international conference on distributed smart cameras, 2008 (pp. 1–10). https://doi.org/10.1109/icdsc.2008.4635717

Hentout, A., Mustapha, A., Maoudj, A., & Akli, I. (2019). Human–robot interaction in industrial collaborative robotics: A literature review of the decade 2008–2017. Advanced Robotics, 33(15–16), 764–799. https://doi.org/10.1080/01691864.2019.1636714

Hietanen, A., Pieters, R., Lanz, M., Latokartano, J., & Kämäräinen, J. (2020). AR-based interaction for human-robot collaborative manufacturing. Robotics and Computer-Integrated Manufacturing, 63, 101891. https://doi.org/10.1016/j.rcim.2019.101891

Hoffmann, A., Poeppel, A., Schierl, A., & Reif, W. (2016). Environment-aware proximity detection with capacitive sensors for human-robot-interaction. In IEEE/RSJ international conference on intelligent robots and systems (IROS), 2016 (pp. 145–150). https://doi.org/10.1109/iros.2016.7759047

Huang, J., Pham, D. T., Li, R., Qu, M., Wang, Y., Kerin, M., Su, S., Ji, C., Mahomed, O., Khalil, R. A., Stockton, D., Xu, W., Liu, Q., & Zhou, Z. (2021). An experimental human-robot collaborative disassembly cell. Computers & Industrial Engineering, 155, 107189. https://doi.org/10.1016/j.cie.2021.107189

Hur, S., Oh, S., & Oh, Y. (2014). Joint space torque controller based on time-delay control with collision detection. In IEEE/RSJ international conference on intelligent robots and systems, 2014 (pp. 4710–4715). https://doi.org/10.1109/iros.2014.6943232

Ibarguren, A., & Daelman, P. (2021). Path driven dual arm mobile co-manipulation architecture for large part manipulation in industrial environments. Sensors, 21(19), 6620. https://doi.org/10.3390/s21196620

Indri, M., Sibona, F., & Cheng, P. D. C. (2020a). Sen3Bot Net: a meta-sensors network to enable smart factories implementation. In 25th IEEE international conference on emerging technologies and factory automation (ETFA), 2020 (pp. 719–726). https://doi.org/10.1109/etfa46521.2020.9212125

Indri, M., Sibona, F., Cheng, P. D. C., & Possieri, C. (2020b). Online supervised global path planning for AMRs with human-obstacle avoidance. In 25th IEEE international conference on emerging technologies and factory automation (ETFA), 2020 (pp. 1473–1479). https://doi.org/10.1109/etfa46521.2020.9212151

Industrial Automation and Robotics - Comau. (n.d.). COMAU. Retrieved January 29, 2024, from https://www.comau.com/en/

Industrial Intelligence 4.0 Beyond Automation—KUKA AG. (n.d.). KUKA. Retrieved January 29, 2024, from https://www.kuka.com/

Industry 5.0: Towards More Sustainable, Resilient and Human-Centric Industry. (2021, January 7). Research and innovation. https://research-and-innovation.ec.europa.eu/news/all-research-and-innovation-news/industry-50-towards-more-sustainable-resilient-and-human-centric-industry-2021-01-07_en

Innovative Human-Robot Cooperation in BMW Group Production. (n.d.). BMW Group. Retrieved January 29, 2024, from https://www.press.bmwgroup.com/global/article/detail/T0209722EN/innovative-human-robot-cooperation-in-bmw-group-production?language=en

Su, H. et al. (2020, May). Internet of things (IoT)-based collaborative control of a redundant manipulator for teleoperated minimally invasive surgeries. In 2020 IEEE international conference on robotics and automation (ICRA) (pp. 9737–9742). IEEE.

ISO/TS 15066:2016. (2016, March 8). ISO. https://www.iso.org/standard/62996.html

Javaid, M., Haleem, A., Singh, R. P., & Suman, R. (2021). Substantial capabilities of robotics in enhancing industry 4.0 implementation. Cognitive Robotics, 1, 58–75. https://doi.org/10.1016/j.cogr.2021.06.001

Jin, M., Kang, S. H., Chang, P. H., & Lee, J. (2017). Robust control of robot manipulators using inclusive and enhanced time delay control. IEEE/ASME Transactions on Mechatronics, 22(5), 2141–2152. https://doi.org/10.1109/tmech.2017.2718108

Juel, W. K., Haarslev, F., Ramírez, E. R., Marchetti, E., Fischer, K., Shaikh, D., Manoonpong, P., Hauch, C., & Krüger, N. (2019). SMOOTH robot: Design for a novel modular welfare robot. Journal of Intelligent and Robotic Systems, 98(1), 19–37. https://doi.org/10.1007/s10846-019-01104-z

Kahlouche, S., & Ali, K. (2007). Optical flow based robot obstacle avoidance. International Journal of Advanced Robotic Systems, 4(1), 2. https://doi.org/10.5772/5715

Kaldestad, K. B., Haddadin, S., Belder, R., Hovland, G., & Anisi, D. A. (2014). Collision avoidance with potential fields based on parallel processing of 3D-point cloud data on the GPU. In IEEE international conference on robotics and automation (ICRA), 2014 (pp. 3250–3257). https://doi.org/10.1109/icra.2014.6907326

Kallweit, S., Walenta, R., & Gottschalk, M. B. (2015). ROS based safety concept for collaborative robots in industrial applications. In Advances in robot design and intelligent control (pp. 27–35). Springer, 2016. https://doi.org/10.1007/978-3-319-21290-6_3

Kenk, M. A., Hassaballah, M., & Brethé, J. (2019). Human-aware robot navigation in logistics warehouses. In ICINCO (2), 2019 (pp. 371–378). https://doi.org/10.5220/0007920903710378

Khatib, M., Khudir, K. A., & De Luca, A. (2017). Visual coordination task for human-robot collaboration. In IEEE/RSJ international conference on intelligent robots and systems (IROS), 2017 (pp. 3762–3768). https://doi.org/10.1109/iros.2017.8206225

Knudsen, M. S., & Kaivo-oja, J. (2020). Collaborative robots: Frontiers of current literature. Journal of Intelligent Systems: Theory and Applications, 3(2), 13–20. https://doi.org/10.38016/jista.682479

Koç, S., & Doğan, C. (2022). Manufacturing and controlling 5-axis ball screw driven industrial robot moving through G codes. Gümüşhane Üniversitesi Fen Bilimleri Enstitüsü Dergisi. https://doi.org/10.17714/gumusfenbil.990175

Kortli, Y., Jridi, M., Falou, A. A., & Atri, M. (2020). Face recognition systems: A survey. Sensors, 20(2), 342. https://doi.org/10.3390/s20020342

Kousi, N., Michalos, G., Aivaliotis, S., & Makris, S. (2018). An outlook on future assembly systems introducing robotic mobile dual arm workers. Procedia CIRP, 72, 33–38. https://doi.org/10.1016/j.procir.2018.03.130

Kühn, S., Gecks, T., & Henrich, D. (2006). Velocity control for safe robot guidance based on fused vision and force/torque data. In IEEE international conference on multisensor fusion and integration for intelligent systems, 2006 (pp. 485–492). https://doi.org/10.1109/mfi.2006.265623

KUKA AG. (2021, October 6). LBR iiwa. https://www.kuka.com/en-de/products/robot-systems/industrial-robots/lbr-iiwa

KUKA AG. (2024, January 19). LBR iisy cobot. https://www.kuka.com/en-de/products/robot-systems/industrial-robots/lbr-iisy-cobot

Lačević, B., Rocco, P., & Zanchettin, A. M. (2013). Safety assessment and control of robotic manipulators using danger field. IEEE Transactions on Robotics, 29(5), 1257–1270. https://doi.org/10.1109/tro.2013.2271097

Li, C., Hansen, A. K., Chrysostomou, D., Bøgh, S., & Madsen, O. (2022). Bringing a natural language-enabled virtual assistant to industrial mobile robots for learning, training and assistance of manufacturing tasks. In 2022 IEEE/SICE international symposium on system integration (SII). https://doi.org/10.1109/sii52469.2022.9708757

Li, P., & Liu, X. (2019). Common sensors in industrial robots: A review. Journal of Physics: Conference Series, 1267(1), 012036. https://doi.org/10.1088/1742-6596/1267/1/012036

Li, W., Han, Y., Wu, J., & Xiong, Z. (2020). Collision detection of robots based on a force/torque sensor at the bedplate. IEEE/ASME Transactions on Mechatronics, 25(5), 2565–2573. https://doi.org/10.1109/tmech.2020.2995904

Likar, N., & Žlajpah, L. (2014). External joint torque-based estimation of contact information. International Journal of Advanced Robotic Systems, 11(7), 107. https://doi.org/10.5772/58834

Lim, G. H., Pedrosa, E., Amaral, F., Dias, R., Pereira, A., Lau, N., Azevedo, J. L., Cunha, B., & Reis, L. P. (2017). Human-robot collaboration and safety management for logistics and manipulation tasks. In Advances in intelligent systems and computing (pp. 15–27). https://doi.org/10.1007/978-3-319-70836-2_2

Limoyo, O., Ablett, T., Marić, F., Volpatti, L., & Kelly, J. (2018). Self-calibration of mobile manipulator kinematic and sensor extrinsic parameters through contact-based interaction. In IEEE international conference on robotics and automation (ICRA), 2018 (pp. 4913–4920). https://doi.org/10.1109/icra.2018.8460658

Liu, H., & Wang, L. (2018). Gesture recognition for human-robot collaboration: A review. International Journal of Industrial Ergonomics, 68, 355–367. https://doi.org/10.1016/j.ergon.2017.02.004

Long, P., Chevallereau, C., Chablat, D., & Girin, A. (2017). An industrial security system for human-robot coexistence. Industrial Robot: An International Journal, 45(2), 220–226. https://doi.org/10.1108/ir-09-2017-0165

Lu, S., Chung, J., & Velinsky, S. A. (2006). Human-robot collision detection and identification based on wrist and base force/torque sensors. In Proceedings of the 2005 IEEE international conference on robotics and automation, 2005 (pp. 3796–3801). https://doi.org/10.1109/robot.2005.1570699

Ma, R., Chen, J., & Oyekan, J. (2020). A review of manufacturing systems for introducing collaborative robots. Journal of Robotics & Autonomous Systems. https://doi.org/10.31256/zb5dy3b

Majumder, S., & Kehtarnavaz, N. (2021). Vision and inertial sensing fusion for human action recognition: A review. IEEE Sensors Journal, 21(3), 2454–2467. https://doi.org/10.1109/jsen.2020.3022326

Makris, S., & Aivaliotis, P. (2022). AI-based vision system for collision detection in HRC applications. Procedia CIRP, 106, 156–161. https://doi.org/10.1016/j.procir.2022.02.171

Manoharan, M., & Kumaraguru, S. (2018). Path planning for direct energy deposition with collaborative robots: A review. In 2018 conference on information and communication technology (CICT), 2018 (pp. 1–6). https://doi.org/10.1109/infocomtech.2018.8722362

Mariotti, E., Magrini, E., & De Luca, A. (2019). Admittance control for human-robot interaction using an industrial robot equipped with a F/T sensor. In International conference on robotics and automation (ICRA), 2019 (pp. 6130–6136). https://doi.org/10.1109/icra.2019.8793657

Martínez-Villaseñor, L., & Ponce, H. (2019). A concise review on sensor signal acquisition and transformation applied to human activity recognition and human–robot interaction. International Journal of Distributed Sensor Networks, 15(6), 155014771985398. https://doi.org/10.1177/1550147719853987

Mejia, O., Nuñez, D., Razuri, J., Cornejo, J., & Palomares, R. (2022). Mechatronics design and kinematic simulation of 5 DOF serial robot manipulator for soldering THT electronic components in printed circuit boards. In 2022 first international conference on electrical, electronics, information and communication technologies (ICEEICT). https://doi.org/10.1109/iceeict53079.2022.9768447

Melchiorre, M., Scimmi, L. S., Pastorelli, S. P., & Mauro, S. (2019). Collision avoidance using point cloud data fusion from multiple depth sensors: A practical approach. In 23rd international conference on mechatronics technology (ICMT), 2019 (pp. 1–6). https://doi.org/10.1109/icmect.2019.8932143

Mohammed, A., Schmidt, B., & Wang, L. (2016). Active collision avoidance for human–robot collaboration driven by vision sensors. International Journal of Computer Integrated Manufacturing, 30(9), 970–980. https://doi.org/10.1080/0951192x.2016.1268269

Moon, S., Kim, J., Yim, H., Kim, Y., & Choi, H. R. (2021). Real-time obstacle avoidance using dual-type proximity sensor for safe human-robot interaction. IEEE Robotics and Automation Letters, 6(4), 8021–8028. https://doi.org/10.1109/lra.2021.3102318