Abstract

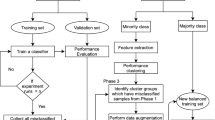

The problem of imbalanced regression is widely prevalent in various intelligent manufacturing systems, significantly constraining the industrial application of machine learning models. Existing research has overlooked the impact of redundant data and has lost valuable information within unlabeled data, therefore, the effectiveness of the models is limited. To this end, we propose a novel model framework (sNN-ST, similarity-based nearest neighbor and Self-Training fusion) to address imbalanced regression in industrial big data. This approach comprises two main steps: first, we identify and remove redundant samples by analyzing the redundancy relationships among samples. Then, we perform pseudo-labeling on unlabeled data, selectively incorporating reliable and non-redundant samples into the labeled dataset. We validate the proposed method on two imbalanced regression datasets. Removing redundant data and effectively utilizing unlabeled data optimize the dataset's distribution and enhance its information entropy. Consequently, the processed dataset significantly improves the overall model performance. We used this model to conduct a Multi-Parameter Global Relative Sensitivity Analysis within a production system. This analysis optimized existing process parameters and improved product quality consistency. This research presents a promising approach to addressing imbalanced regression problems.

Similar content being viewed by others

Data availability

The data that support the findings of this study are openly available at https://gitee.com/superfeif/imbalanced-regression-datasets.git.

References

Branco, P., Torgo, L., & Ribeiro, R. P. (2019). Pre-processing approaches for imbalanced distributions in regression. Neurocomputing, 343, 76–99.

Camacho, L., Douzas, G., & Bacao, F. (2022). Geometric SMOTE for regression. Expert Systems with Applications, 193, 116387.

Chen, B., Jiang, J., Wang, X., Wan, P., Wang, J., & Long, M. (2022). Debiased self-training for semi-supervised learning. Advances in Neural Information Processing Systems, 35, 32424–32437.

Demšar, J. (2006). Statistical comparisons of classifiers over multiple data sets. The Journal of Machine Learning Research, 7, 1–30.

Gharehchopogh, F. S. (2023). Quantum-inspired metaheuristic algorithms: Comprehensive survey and classification. Artificial Intelligence Review, 56(6), 5479–5543.

Gharehchopogh, F. S., & Khargoush, A. A. (2023). A chaotic-based interactive autodidactic school algorithm for data clustering problems and its application on COVID-19 disease detection. Symmetry, 15(4), 894.

Gharehchopogh, F. S., Namazi, M., Ebrahimi, L., & Abdollahzadeh, B. (2023a). Advances in sparrow search algorithm: A comprehensive survey. Archives of Computational Methods in Engineering, 30(1), 427–455.

Gharehchopogh, F. S., Ucan, A., Ibrikci, T., Arasteh, B., & Isik, G. (2023b). Slime mould algorithm: A comprehensive survey of its variants and applications. Archives of Computational Methods in Engineering, 30(4), 2683–2723.

Haixiang, G., Yijing, L., Shang, J., Mingyun, G., Yuanyue, H., & Bing, G. (2017). Learning from class-imbalanced data: Review of methods and applications. Expert Systems with Applications, 73, 220–239.

Haliduola, H. N., Bretz, F., & Mansmann, U. (2022). Missing data imputation using utility-based regression and sampling approaches. Computer Methods and Programs in Biomedicine, 226, 107172.

Herbold, S., Trautsch, A., & Grabowski, J. (2018, May). A comparative study to benchmark cross-project defect prediction approaches. In Proceedings of the 40th international conference on software engineering (p. 1063).

Li, D., Liu, Y., Huang, D., & Xu, C. (2022a). A semi-supervised soft-sensor of just-in-time learning with structure entropy clustering and applications for industrial processes monitoring. IEEE Transactions on Artificial Intelligence, 4(4), 722–733.

Li, F. F., He, A. R., Song, Y., Xu, X. Q., Zhang, S. W., Qiang, Y., & Liu, C. (2023). MDA-JITL model for on-line mechanical property prediction. Journal of Iron and Steel Research International, 30(3), 504–515.

Li, F., Song, Y., Liu, C., Li, B., & Zhang, S. (2021). Ensemble learning model for mechanical performance prediction of strip and its reliability evaluation. Journal of Mechanical Engineering, 57(2), 239–246.

Li, J., Savarese, S., & Hoi, S. (2022, September). Masked unsupervised self-training for label-free image classification. In The 11th international conference on learning representations.

Li, R. L., & Hu, Y. F. (2004). A density-based method for reducing the amount of training data in KNN text classification. Journal of Computer Research and Development, 41(4), 539–545.

Liu, H., Wang, J., & Long, M. (2021). Cycle self-training for domain adaptation. Advances in Neural Information Processing Systems, 34, 22968–22981.

Liu, J., Li, X., & Yang, G. (2018, September). Cross-class sample synthesis for zero-shot learning. In BMVC (p. 113).

Liu, W., Xu, W., Yan, S., Wang, L., Li, H., & Yang, H. (2022). Combining self-training and hybrid architecture for semi-supervised abdominal organ segmentation. In MICCAI challenge on fast and low-resource semi-supervised abdominal organ segmentation (pp. 281–292). Springer.

Maharana, K., Mondal, S., & Nemade, B. (2022). A review: Data pre-processing and data augmentation techniques. Global Transitions Proceedings, 3(1), 91–99.

Maurya, J., Ranipa, K. R., Yamaguchi, O., Shibata, T., & Kobayashi, D. (2023, January). Domain adaptation using self-training with Mixup for one-stage object detection. In 2023 IEEE/CVF winter conference on applications of computer vision (WACV) (pp. 4178–4187). IEEE.

Meng, W., & Yolwas, N. (2023). A study of speech recognition for Kazakh based on unsupervised pre-training. Sensors, 23(2), 870.

Mukherjee, S., & Awadallah, A. (2020). Uncertainty-aware self-training for few-shot text classification. Advances in Neural Information Processing Systems, 33, 21199–21212.

Okazaki, Y., Okazaki, S., Kajitani, Y., & Ishizuka, M. (2020). Regression of imbalanced river discharge data using resampling technique. Journal of Japan Society of Civil Engineers, Series B1 (Hydraulic Engineering), 76(2), I_133-I_138.

Sahid, M. A., Hasan, M., Akter, N., & Tareq, M. M. R. (2022, July). Effect of imbalance data handling techniques to improve the accuracy of heart disease prediction using machine learning and deep learning. In 2022 IEEE Region 10 symposium (TENSYMP) (pp. 1–6). IEEE.

Scheepens, D. R., Schicker, I., Hlaváčková-Schindler, K., & Plant, C. (2023). Adapting a deep convolutional RNN model with imbalanced regression loss for improved spatio-temporal forecasting of extreme wind speed events in the short to medium range. Geoscientific Model Development, 16(1), 251–270.

Shishavan, S. T., & Gharehchopogh, F. S. (2022). An improved cuckoo search optimization algorithm with genetic algorithm for community detection in complex networks. Multimedia Tools and Applications, 81(18), 25205–25231.

Steininger, M., Kobs, K., Davidson, P., Krause, A., & Hotho, A. (2021). Density-based weighting for imbalanced regression. Machine Learning, 110, 2187–2211.

Sun, S., Hu, X., & Liu, Y. (2022). An imbalanced data learning method for tool breakage detection based on generative adversarial networks. Journal of Intelligent Manufacturing, 33(8), 2441–2455.

Temraz, M., & Keane, M. T. (2022). Solving the class imbalance problem using a counterfactual method for data augmentation. Machine Learning with Applications, 9, 100375.

Torgo, L., Branco, P., Ribeiro, R. P., & Pfahringer, B. (2015). Resampling strategies for regression. Expert Systems, 32(3), 465–476.

Torgo, L., & Ribeiro, R. (2007). Utility-based regression. In Knowledge discovery in databases: PKDD 2007: 11th European conference on principles and practice of knowledge discovery in databases, Warsaw, Poland, 17–21 September 2007. Proceedings 11 (pp. 597–604). Springer.

Torgo, L., Ribeiro, R. P., Pfahringer, B., & Branco, P. (2013, September). Smote for regression. In Portuguese conference on artificial intelligence (pp. 378–389). Springer.

Wang, K., Guo, B., Yang, H., Li, M., Zhang, F., & Wang, P. (2022). A semi-supervised co-training model for predicting passenger flow change in expanding subways. Expert Systems with Applications, 209, 118310.

Wei, C., Sohn, K., Mellina, C., Yuille, A., & Yang, F. (2021). Crest: A class-rebalancing self-training framework for imbalanced semi-supervised learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10857–10866).

Wei, G., Mu, W., Song, Y., & Dou, J. (2022). An improved and random synthetic minority oversampling technique for imbalanced data. Knowledge-Based Systems, 248, 108839.

Xianli, L. I. U., Qingzhen, S. U. N., Caixu, Y. U. E., & Hengshuai, L. I. (2022). Optimization of milling process parameters of titanium alloy based on data mining technology. Computer Integrated Manufacturing System, 28(8), 2440–2448.

Xie, Q., Luong, M. T., Hovy, E., & Le, Q. V. (2020). Self-training with noisy student improves ImageNet classification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10687–10698).

Yang, L., Zhuo, W., Qi, L., Shi, Y., & Gao, Y. (2022). St++: Make self-training work better for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4268–4277).

Yang, G., Song, C., Yang, Z., & Cui, S. (2023). Bubble detection in photoresist with small samples based on GAN augmentations and modified YOLO. Engineering Applications of Artificial Intelligence, 123, 106224.

Yang, W., Li, W. G., Zhao, Y. T., Yan, B. K., & Wang, W. B. (2018). Mechanical property prediction of steel and influence factors selection based on random forests. Iron and Steel, 3, 44–49.

Yang, Y., & Xu, Z. (2020). Rethinking the value of labels for improving class-imbalanced learning. Advances in Neural Information Processing Systems, 33, 19290–19301.

Zhang, Y., Li, X., Gao, L., Wang, L., & Wen, L. (2018). Imbalanced data fault diagnosis of rotating machinery using synthetic oversampling and feature learning. Journal of Manufacturing Systems, 48, 34–50.

Zhao, Y. B., Song, Y., Li, F. F., & Yan, X. L. (2023). Prediction of mechanical properties of cold rolled strip based on improved extreme random tree. Journal of Iron and Steel Research International, 30(2), 293–304.

Zhao, Z., Zhou, L., Wang, L., Shi, Y., & Gao, Y. (2022, June). Lassl: Label-guided self-training for semi-supervised learning. In Proceedings of the AAAI conference on artificial intelligence (Vol. 36, No. 8, pp. 9208–9216).

Ziqi, W., Jinwen, H. E., & Liangxiao, J. (2019). New redundancy-based algorithm for reducing amount of training examples in KNN. Computer Engineering and Applications., 55(22), 40–45.

Zoph, B., Ghiasi, G., Lin, T. Y., Cui, Y., Liu, H., Cubuk, E. D., & Le, Q. (2020). Rethinking pre-training and self-training. Advances in Neural Information Processing Systems, 33, 3833–3845.

Zou, Y., Yu, Z., Liu, X., Kumar, B. V. K., & Wang, J. (2019). Confidence regularized self-training. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 5982–5991).

Acknowledgements

This research supported by the National Natural Science Foundation of China (No.52004029), Fundamental Research Funds for the Central Universities (FRF-TT-20-06) and division 8, Xinjiang Production and Construction Corps Science and Technology Program (2022ZD02).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, F., He, A., Song, Y. et al. Improving imbalanced industrial datasets to enhance the accuracy of mechanical property prediction and process optimization for strip steel. J Intell Manuf (2023). https://doi.org/10.1007/s10845-023-02275-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10845-023-02275-1