Abstract

Constraint solving is applied in different application contexts. Examples thereof are the configuration of complex products and services, the determination of production schedules, and the determination of recommendations in online sales scenarios. Constraint solvers apply, for example, search heuristics to assure adequate runtime performance and prediction quality. Several approaches have already been developed showing that machine learning (ML) can be used to optimize search processes in constraint solving. In this article, we provide an overview of the state of the art in applying ML approaches to constraint solving problems including constraint satisfaction, SAT solving, answer set programming (ASP) and applications thereof such as configuration, constraint-based recommendation, and model-based diagnosis. We compare and discuss the advantages and disadvantages of these approaches and point out relevant directions for future work.


1 Introduction

Over several decades, constraint solving (we use this as a general term referring to specific problem solving approaches such as constraint satisfaction (Freuder, 1997), SAT solving (Gu et al., 1996), and answer set programming (Brewka et al., 2011)) has shown to be a core technology of Artificial Intelligence (Apt, 2003; Brailsford et al., 1999; Rossi et al., 2006). Its widespread use led to the development of algorithms and tools that help to tackle a variety of combinatorial problems. Basic examples thereof are the n-queens problem and car sequencing (Adorf & Johnston, 1990; Tsang, 1993). The n-queens problem is related to the task of placing n queens on n distinct squares of an n × n chessboard in such a way that no pair of queens attacks each other. This problem has been extensively used to illustrate the modeling of constraint solving tasks and related search algorithms. The car sequencing problem is another problem that can be tackled on the basis of constraint solving techniques. This problem is related to the task of scheduling a car production sequence in such a way that capacity constraints of the assembly line are satisfied. Cars can be of different type and thus need different features to be installed and, as a consequence, also different production steps need to be taken into account. For example, a type-A car might need a sunroof installed but no skibag. An assembly line is often composed of different sections, each specialized in installing one or more specific features. Each section, however, has a limited capacity which has to be taken into account by the solver. Another application area of constraint-based knowledge representations and reasoning is knowledge-based configuration (Felfernig et al., 2014). Configuration has become a core technology for businesses depending on the mass customization paradigm (Felfernig et al., 2014). 
Configuration technologies help to tackle important challenges triggered by mass customization. For example, because the correctness of configurations is assured, errors in order processing and the follow-up costs of producing faulty configurations can be avoided. Beyond configuration, constraint-based technologies are applied in a variety of further scenarios such as scheduling, vehicle routing, and recommender systems, to mention just a few (Burke, 2000; Felfernig & Burke, 2008; Rossi et al., 2006).

As indicated by a couple of research results, combinatorial problems such as configuration problems have no universal best solving approach (Bengio et al., 2021; Kotthoff, 2014; Loreggia et al., 2016; O’Mahony et al., 2013; Spieker & Gotlieb, 2018; Xu et al., 2008; Xu et al., 2009). To solve such problems in an efficient fashion, state of the art techniques exploit search heuristics (Arbelaez et al., 2010; Beck et al., 2004; Da Col & Teppan, 2017; Erdeniz et al., 2017; Jannach, 2013; Johnston & Minton, 1994; Mouhoub & Jafari, 2011; Pearl, 1984; Sadeh & Fox, 1996) or try to find the best solver (algorithm) for the problem instance at hand. Algorithm selection approaches have shown to be applicable in various scenarios – related approaches to predict a solver’s performance become an increasingly active field of research (Kotthoff, 2014). Furthermore, the development of machine learning methods that are capable of learning search heuristics in a domain-dependent fashion is relevant in the context of minimizing search efforts and optimizing the prediction quality (Raedt et al., 2011). Machine learning can also be used to predict the satisfiability of a specific problem and thus can help to significantly reduce the overall search effort. We have identified the following main application areas of machine learning techniques in the context of constraint solving: (1) direct solution search, (2) constraint learning, (3) learning search heuristics (and/or parameters) (e.g., related to possible variable and value orderings), (4) satisfiability prediction (by learning relevant problem features that help to correctly classify problems as (un-)satisfiable), and (5) algorithm selection (based on problem properties/features).

There exist different surveys related to the topics of constraint satisfaction and SAT solving (Apt, 2003; Rossi et al., 2006; Tsang, 1993), algorithm portfolios (Kotthoff, 2014), and also interactions between data mining and constraint programming (Bessiere et al., 2016). A survey of different algorithmic approaches to solve constraint satisfaction problems is provided, for example, by Kumar (1992). An overview of different approaches to SAT solving is provided, for example, by Gu et al. (1996). Compared to the literature study presented in this article, commonalities exist with the overviews provided by Kotthoff (2014), Bischl et al. (2015), and Bessiere et al. (2016). Kotthoff (2014) provides a survey of algorithm portfolios that allow the selection of the “best” algorithm for a specific problem instance. Bischl et al. (2015) propose an algorithm benchmark including different datasets for evaluation purposes. Finally, Bessiere et al. (2016) focus on synergies between data mining and constraint satisfaction, covering related aspects such as approaches to algorithm selection, constraint learning, and representing data mining problems as constraint satisfaction problems. In this line of research, Alves Pereira et al. (2019) motivate the application of machine learning methods for the purpose of configuration space learning, specifically for predicting non-functional system properties such as response time or system failure probabilities. Furthermore, overviews of the state of the art in algorithm runtime prediction and selection can be found in Hutter et al. (2014) and Lombardi and Milano (2018). An overview of recent research related to the application of machine learning methods in the context of combinatorial optimization can be found in Bengio et al. (2021).

The major contribution of this article is the following. There does not exist an in-depth overview of machine learning methods aiming at improving constraint solving (in areas such as constraint satisfaction, SAT solving, and answer set programming). Although the interest in the field of applying machine learning to the solution of combinatorial problems is growing (Bengio et al., 2021), an overview specifically focusing on the application of machine learning approaches to constraint solving is still missing. With this article, we provide such an overview and also include an in-depth discussion of challenges and open issues for future work.

The remainder of this article is organized as follows. In Section 2, we present our methodological approach to the literature analysis on machine learning and constraint solving. Section 3 provides an introduction to major relevant concepts from the areas of constraint solving and machine learning that are regarded as a basis for being able to understand the discussions in Section 4. In Section 4, we summarize the results of our literature analysis on the integration of machine learning techniques into different types of constraint solving and provide selected examples to increase understandability. Specifically, we focus on related work in constraint satisfaction, SAT solving, answer set programming, and related applications in configuration, diagnosis, and constraint-based recommendation. In Section 5, we provide an overview of relevant issues for future research. The article is concluded in Section 6.

2 Research methodology

Our goal is to provide a comprehensive overview of the state of the art in the application of machine learning methods in constraint solving. We want to provide an in-depth overview of the field and thus also contribute to further bridge building between the two research fields of machine learning and constraint solving. This article is based on a literature review comprised of paper selection, review, and a discussion of reviewed approaches. Specifically, our work involved an initial step of querying leading research portals with relevant keywords, an intermediary phase consisting of classifying approaches based on their contribution to Constraint Solving and used machine learning methods, and a final phase with a discussion of current challenges and relevant future research directions.

We performed queries on well-known research platforms such as Google Scholar, ResearchGate, ScienceDirect, SpringerLink, and Elsevier with the initial keywords “machine learning” + “constraint satisfaction”. Taking into account the initial query results regarded as relevant, we established further search criteria (in terms of keywords) that combined in a systematic fashion “machine learning” and “constraint satisfaction” with related terms such as “deep learning”, “data mining”, “matrix factorization” and “constraint solving”, “SAT solving”, “Answer Set Programming”, and related meta-criteria such as “overview”, “review”, and “survey”. In addition, we analyzed topic-related work from the last 15 years published in the International Joint Conference on Artificial Intelligence (IJCAI), the AAAI Conference on Artificial Intelligence, the European Conference on Artificial Intelligence (ECAI), the Conference on Theory and Applications of Satisfiability Testing (SAT), and the Constraint Programming (CP) conference. Following the snowballing technique (Wohlin, 2014), we further analyzed the reference sections of the identified papers with the goal to find further topic-related work. The major criterion for regarding a reference as relevant was a topic-wise match, i.e., the reference needed to cover the topic of integrating machine learning (ML) techniques with constraint solving. We have identified 41 publications as a basis for our overview. We did not include contributions focusing on the integration of constraint solving into machine learning (see, e.g., Lallouet and Legtchenko, 2005). The majority of the identified publications is based on supervised ML (see Table 1).

3 Preliminaries

In the following, we present relevant constraint solving paradigms, such as constraint satisfaction (Freuder, 1997), SAT solving (Gu et al., 1996) and answer set programming (Brewka et al., 2011), and key concepts from machine learning which constitute the basic knowledge required to be able to understand the discussions in this overview.

3.1 Constraint satisfaction problems (CSPs)

A constraint on a set of variables defines a restriction on possible combinations of variable assignments (Apt, 2003). Constraint Satisfaction Problems (CSPs) specify a problem in terms of relevant variables and corresponding constraints on possible value combinations (Apt, 2003). Ideally, an assignment should instantiate each variable and satisfy all the constraints defining the problem. Examples of CSPs are the configuration of telecommunication switches (Fleischanderl et al., 1998), the recommendation of financial services (Felfernig et al., 2006), and reconfiguration (Felfernig et al., 2018). As a basis for the following discussions, we now introduce a definition of a constraint satisfaction problem (CSP) (Rossi et al., 2006; Tsang, 1993).

Definition (CSP and Solution). A CSP is defined as a triple \((V, D, C)\) where \(V = \{v_{1}, \ldots, v_{n}\}\) is a set of variables, \(D = \{domain(v_{1}), \ldots, domain(v_{n})\}\) are the corresponding domain definitions, and \(C = \{c_{1}, \ldots, c_{k}\}\) is a set of constraints. A solution to a CSP is a variable assignment \(A = \{v_{1} = val_{v_{1}}, \ldots, v_{n} = val_{v_{n}}\}\) where \(val_{v_{j}}\) denotes the value of variable \(v_{j}\) with \(val_{v_{j}} \in domain(v_{j})\) and \(consistent(C \cup A)\).
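As a minimal illustration of this definition, the following Python sketch encodes a small CSP as a triple (V, D, C) and checks whether an assignment A is a solution. The map-coloring instance and the names `variables`, `domains`, `constraints`, and `is_solution` are illustrative assumptions and are not taken from the cited works.

```python
# Hypothetical toy CSP (V, D, C): color three mutually adjacent regions.
variables = ["v1", "v2", "v3"]
domains = {v: {"red", "green", "blue"} for v in variables}

# Each constraint is a (scope, predicate) pair; the predicate receives
# the values of the scope variables in the order given by the scope.
constraints = [
    (("v1", "v2"), lambda a, b: a != b),
    (("v2", "v3"), lambda a, b: a != b),
    (("v1", "v3"), lambda a, b: a != b),
]

def is_solution(assignment):
    """True if A assigns every variable a domain value and C together with A is consistent."""
    if set(assignment) != set(variables):
        return False  # not every variable is instantiated
    if any(assignment[v] not in domains[v] for v in variables):
        return False  # a value lies outside its domain
    return all(pred(*(assignment[v] for v in scope)) for scope, pred in constraints)
```

Representing constraints as predicates over a scope keeps the sketch close to the definition; extensional (tuple-based) representations would work equally well.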

A basic approach to solve a CSP is to apply backtracking search combined with forward checking (analyzing whether a variable value assignment has an impact on the set of possible values of other variables) and different approaches assuring consistency properties such as node consistency (each value of a variable domain has to be consistent with all unary constraints defined on that variable) and arc consistency (for each binary constraint ca connecting two variables, e.g., x1 and x2, each value of domain(x1) must have a corresponding value in domain(x2) such that the corresponding assignment is consistent with ca). For a general overview of different approaches to solve constraint satisfaction problems we refer to Apt (2003). Example evaluation criteria that can be used for evaluating the performance of solvers are runtime (how long does it take to find a solution), optimality (solution that achieves an optimum with regard to a predefined optimization function), accuracy (share of correct classifications), and completeness (degree to which existing solutions can be found).
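Backtracking search with forward checking can be sketched on the n-queens problem mentioned in the introduction. The following Python code is a simplified illustration under our own modeling assumptions (one variable per row, column indices as values); the function name `solve_n_queens` is hypothetical and the sketch is not an implementation from the cited literature.

```python
def solve_n_queens(n):
    """Backtracking search with forward checking; returns one solution or None.

    Variable i is the queen in row i; its value is the column index.
    """
    assignment = []

    def backtrack(row, domains):
        if row == n:
            return list(assignment)
        for col in sorted(domains[row]):
            # Forward checking: remove values of future rows attacked by (row, col).
            pruned = list(domains)
            wiped_out = False
            for later in range(row + 1, n):
                dist = later - row
                pruned[later] = domains[later] - {col, col - dist, col + dist}
                if not pruned[later]:
                    wiped_out = True  # a future variable has no value left
                    break
            if wiped_out:
                continue  # reject this value without descending further
            assignment.append(col)
            result = backtrack(row + 1, pruned)
            if result is not None:
                return result
            assignment.pop()  # undo the assignment and try the next value
        return None

    return backtrack(0, [set(range(n)) for _ in range(n)])
```

For example, `solve_n_queens(4)` yields the column placement `[1, 3, 0, 2]`, whereas `solve_n_queens(3)` returns `None` because no solution exists.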

3.2 Boolean satisfiability problems (SAT & ASP problems)

A Boolean Satisfiability (SAT) problem is a specific type of CSP where variables have Boolean domains and constraints are represented as Boolean formulas (Rossi et al., 2006). As a consequence, CSPs and SAT problems are closely related, which allows for a reformulation of CSPs into corresponding SAT representations (Gu et al., 1996). In line with the definition of a CSP, a SAT problem is defined by a set of variables V = {v1..vn} where each variable represents a specific domain value. For example, if V = {x1} and domain(x1) = {a,b} in the CSP representation, the corresponding SAT representation would be V = {xa,xb} with domain(xa) = domain(xb) = {true,false}. Constraints in SAT solving are represented in terms of clauses where each clause is represented by a disjunction of literals (a positive literal represents the assumption that the variable value is “true”, a negative literal represents the assumption that the variable value is “false”) (Gu et al., 1996). A solution to a SAT problem is a consistent variable assignment for the equivalent conjunctive normal form (CNF) which represents a conjunction of the mentioned clauses. For an overview of different approaches to solve SAT problems, we refer to Gu et al. (1996). Answer set programming (ASP) (Brewka et al., 2011) is an expressive declarative knowledge representation that allows complex domains to be modeled in a predicate logic fashion. On the reasoning level, answer set programs are translated into a SAT representation, i.e., ASP problems can be solved on the basis of SAT algorithms. It is worth mentioning that one of the first real-world applications of answer set programming was product configuration – for details we refer to Myllärniemi et al. (2014) and Simons et al. (2002).
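The reformulation of the example variable x1 with domain {a,b} can be made concrete with a few lines of Python. The sketch below assumes a standard direct encoding with an "at least one value" and an "at most one value" clause; these two clauses, the DIMACS-style signed integer literals, and the helper names `satisfies` and `all_models` are our own illustrative additions.

```python
from itertools import product

# Direct encoding of the CSP variable x1 with domain {a, b}:
# one Boolean variable per domain value (1 stands for xa, 2 for xb).
# A positive integer is a positive literal, a negative integer a negated one.
cnf = [
    [1, 2],    # at least one value: xa or xb
    [-1, -2],  # at most one value: not xa or not xb
]

def satisfies(model, cnf):
    """model maps variable index -> bool; a CNF holds if every clause has a true literal."""
    return all(any(model[abs(lit)] == (lit > 0) for lit in clause) for clause in cnf)

def all_models(cnf, num_vars):
    """Enumerate satisfying assignments by brute force (only suitable for tiny examples)."""
    models = []
    for values in product([False, True], repeat=num_vars):
        model = {i + 1: v for i, v in enumerate(values)}
        if satisfies(model, cnf):
            models.append(model)
    return models

models = all_models(cnf, num_vars=2)
```

The two resulting models correspond exactly to the CSP assignments x1 = b and x1 = a. Practical SAT solvers replace the brute-force enumeration with far more efficient search procedures.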

3.3 Machine learning

The basic idea of machine learning is to exploit data from past events (represented in terms of training data) to build a (hopefully) reliable model that is able to generalize from the given data. On a high level, machine learning is used to solve two basic tasks. First, classification is used to figure out for a given test case if it falls under a given category or not. For example, in the context of a constraint-based configuration scenario, an estimation whether a user will be interested in a specific potentially new product component (or a new service) can be regarded as a classification task, i.e., to figure out whether an item is relevant or irrelevant. Second, prediction is used to estimate a specific value. For example, the estimation of the upper price limit of a user can be regarded as a prediction task (predicting user-individual price limits). Depending on the machine learning task to be solved (classification or prediction), corresponding evaluation metrics can be applied, i.e., classification metrics such as precision and prediction metrics such as mean squared error (MSE) (Gunawardana and Shani, 2015; Uta et al., 2021). In addition to the basic differentiation between classification and prediction, machine learning algorithms can be further differentiated as follows. First, supervised learning is based on the idea of learning from labeled data (often denoted as “datasets”) where datasets are divided into a training dataset (used for learning purposes) and a test dataset (used for evaluation purposes). Examples of machine learning algorithms implementing supervised learning are linear regression, decision tree based classification, matrix factorization, and neural networks (Bishop, 2006). Second, unsupervised learning focuses on the identification of patterns in unlabeled data. A basic example of an algorithmic approach supporting unsupervised learning is clustering. Third, reinforcement learning learns from its past experience by trying to maximise a reward function with respect to the next state and actions available for selection (Sutton & Barto, 1998).
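The two evaluation metrics named above can be stated in a few lines of Python. This is a plain sketch of the textbook definitions; the function names and the example values in the usage note are hypothetical.

```python
def precision(predicted, actual):
    """Share of items predicted as relevant (True) that are actually relevant."""
    true_pos = sum(1 for p, a in zip(predicted, actual) if p and a)
    pred_pos = sum(1 for p in predicted if p)
    return true_pos / pred_pos if pred_pos else 0.0

def mean_squared_error(predicted, actual):
    """Average squared difference between predicted and actual values."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)
```

For instance, if three components are predicted as relevant and two of them are indeed relevant, the precision is 2/3. If predicted price limits of 100 and 200 are compared with actual limits of 110 and 180, the MSE is (100 + 400) / 2 = 250.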

4 Machine learning in constraint solving

The majority of research contributions related to the integration of machine learning with constraint solving are based on supervised machine learning. In the following, we will discuss these approaches in detail and – where applied – also discuss the corresponding unsupervised learning and reinforcement learning approaches. Table 1 provides an overview of relevant research contributions related to the topic of machine learning and constraint solving. In the line of the basic classification of machine learning methods as discussed in Carbonell et al. (1983), we categorize the identified research contributions according to the used machine learning method/algorithm (e.g., decision trees and neural networks), the application in the constraint solving context (e.g., algorithm selection and learning of heuristics), and the used evaluation metrics (e.g., runtime and accuracy).

4.1 Constraint satisfaction

Research contributions related to the integration of machine learning and constraint satisfaction problem solving (Freuder, 1997; Tsang, 1993) support different goals. In the context of solution search, constraint learning supports the learning of constraints that will help to avoid redundant search and thus improve the search performance. Solution prediction is related to the goal of directly applying machine learning to derive a solution for a given constraint satisfaction problem (CSP). Furthermore, satisfiability prediction focuses on predicting the satisfiability of a CSP without the need of activating a solver. Heuristics learning focuses on the learning of search heuristics that improve the performance of a constraint solver with regard to criteria such as runtime and prediction quality. Finally, constraint learning in the context of knowledge acquisition supports the derivation of constraints from a given dataset or on the basis of the feedback from expert users. Research contributions related to these aspects will be discussed in the following paragraphs.

The idea of constraint learning is to identify previously unknown constraints of a CSP and – through the inclusion of these constraints – speed up the search process. Gent et al. (2010) introduce a classification model that helps to identify CSP instances on which constraint learning is likely to improve a solver’s performance. The motivation behind this work is that not every CSP instance is amenable to constraint learning, i.e., it cannot be guaranteed that constraint learning improves performance in every case. In order to better understand the utility of constraint learning, Gent et al. (2010) introduce a decision tree based approach that helps to evaluate a given CSP instance. Specifically, the authors use lazy learning in the context of nogood learning where a nogood can be interpreted as a combination of attribute values that does not allow the determination of a solution. Such nogoods can be interpreted as additional constraints to be taken into account by the solver. Examples of features used in the decision tree based approach of Gent et al. (2010) are number of problem constraints, number of variables, number of attributes, number of constraints that refer to a specific variable, and the proportion of tuples not allowed by a specific constraint (constraint tightness).
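To make such instance features more tangible, the following Python sketch computes a few of them for a CSP whose constraints are given extensionally as sets of allowed tuples. The representation, the function names, and the selection and aggregation of features (`max_degree`, `mean_tightness`) are our own assumptions and do not reproduce the feature set of Gent et al. (2010).

```python
def tightness(scope, allowed, domains):
    """Proportion of tuples over the scope's domains that the constraint disallows."""
    total = 1
    for v in scope:
        total *= len(domains[v])
    return 1 - len(allowed) / total

def instance_features(domains, constraints):
    """constraints: list of (scope, allowed_tuples) pairs in extensional form."""
    degree = {v: 0 for v in domains}  # number of constraints referring to each variable
    for scope, _ in constraints:
        for v in scope:
            degree[v] += 1
    tight = [tightness(scope, allowed, domains) for scope, allowed in constraints]
    return {
        "num_variables": len(domains),
        "num_constraints": len(constraints),
        "max_degree": max(degree.values()),
        "mean_tightness": sum(tight) / len(tight),
    }
```

A feature vector of this kind could then serve as one row of the training data for a classifier such as a decision tree.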

A neural network architecture that helps to solve constraint satisfaction problems is proposed in Wang and Tsang (1991). CSP variables and potential values are encoded as nodes of the neural network. Based on the results of their performance evaluation, the authors point out that CSP solution search based on neural network approaches is feasible also for CSPs with up to 170 variables. As a working example, the authors present the domain of car scheduling. A neural network can learn relevant variable assignments on the basis of a training data set which is represented by a collection of already solved CSPs. In order to escape local minima (e.g., in terms of the number of still violated constraints), the authors propose a so-called heuristic repair method. More in-depth evaluations are needed to have a clearer view in which contexts neural network based approaches can outperform standard constraint solving. A multi neural network architecture for solving binary CSPs is proposed by Adorf and Johnston (1990) where the problem of escaping local minima is solved by the integration of so-called auxiliary guard networks.
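The general idea of repairing a complete but inconsistent assignment step by step can be illustrated with a generic min-conflicts style loop on the n-queens problem. The Python sketch below is a plain local search under our own assumptions (random initial assignment, random tie-breaking, a fixed step limit); it is not the neural network architecture of Wang and Tsang (1991), and the function names are hypothetical.

```python
import random

def conflicts(cols, row, col):
    """Number of queens in other rows that attack square (row, col)."""
    return sum(
        1
        for r, c in enumerate(cols)
        if r != row and (c == col or abs(c - col) == abs(r - row))
    )

def min_conflicts(n, max_steps=10_000, seed=0):
    """Iteratively repair a random complete assignment; returns None if the step limit is hit."""
    rng = random.Random(seed)
    cols = [rng.randrange(n) for _ in range(n)]  # cols[r] = column of the queen in row r
    for _ in range(max_steps):
        conflicted = [r for r in range(n) if conflicts(cols, r, cols[r]) > 0]
        if not conflicted:
            return cols  # no constraint is violated any more
        row = rng.choice(conflicted)
        scores = [conflicts(cols, row, c) for c in range(n)]
        best = min(scores)
        # Random tie-breaking helps the search to move across plateaus.
        cols[row] = rng.choice([c for c in range(n) if scores[c] == best])
    return None
```

Since the search is incomplete, a result of `None` does not prove unsatisfiability; it only indicates that no solution was found within the step limit.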

A deep learning based approach to predict the satisfiability of constraint satisfaction problems is presented by Xu et al. (2018). Their approach exploits basic properties of CSPs (e.g., individual variable values) as input for the neural network which has to decide whether the given CSP is satisfiable or not. Besides satisfiability, further labels of data points are k-consistency, best algorithm to solve, and required time to solve the problem. The input for the neural network is the CSP represented in matrix form where each entry indicates whether the corresponding value combination is allowed or not. The rationale behind this representation is its similarity to a 2-D representation of a grayscale image which is also used as a basis for pattern recognition in image analysis. The major motivation behind the work of Xu et al. (2018) is to accurately predict the satisfiability of CSPs to achieve the goal of minimizing the need of backtracking in solution search. In the context of the presented evaluation settings (synthesized dataset that includes data points labeled in terms of satisfiability or unsatisfiability), the authors report to have achieved an accuracy rate of higher than 99.99%. A similar line of research is followed by Wen et al. (2020) who apply deep learning for improving the performance of constraint solving in the context of symbolic execution. Symbolic execution is used, for example, in software testing and test case generation with the goal to identify in an efficient fashion executable paths in a program.
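The matrix view of a constraint can be illustrated as follows. The Python sketch builds the 0/1 matrix of a single binary constraint, where an entry of 1 marks an allowed value combination. How Xu et al. (2018) arrange all constraints of a CSP into one input matrix is not reproduced here; the function name and the example constraint are assumptions.

```python
def constraint_matrix(dom_x, dom_y, allowed):
    """0/1 matrix: entry [i][j] is 1 if the pair (dom_x[i], dom_y[j]) is allowed."""
    return [[1 if allowed(a, b) else 0 for b in dom_y] for a in dom_x]

# Hypothetical example: x != y over the domains {0, 1, 2}.
matrix = constraint_matrix([0, 1, 2], [0, 1, 2], lambda a, b: a != b)
# matrix is [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
```

Read as pixel intensities, such a matrix resembles a small grayscale image, which is the analogy that motivates the use of image-oriented network architectures.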

The objective of continuous search is to permanently improve the search behavior of a solver (Arbelaez et al., 2010). Related approaches include two basic modes: the so-called functioning mode, which is used to solve concrete user problems (a kind of productive environment), and the exploration mode, which focuses on continuously improving the search performance by reusing the information from already completed solver sessions for training the heuristics model. As mentioned by Arbelaez et al. (2010), the proposed approach follows the idea of lifelong learning which means to successively become an expert in the application domain. Based on a set of static features (e.g., #variables, variable domain sizes, and constraint degrees) and dynamic features (e.g., used heuristics for variable selection, variable domain size during search, and involvement degree of variables in failed constraints), the underlying machine learning task is defined as a binary classification problem. A related neural network approach to the training of heuristics is introduced in Galassi et al. (2018).

Permanently improving the search performance is also the idea of reinforcement learning which follows the idea of continuously exploring new problem solving approaches with the overall goal of continuously improving problem solving behavior and corresponding outputs (Bello et al., 2017; Lagoudakis and Littman, 2001; Lederman et al., 2020). Xu et al. (2009) and Spieker and Gotlieb (2018) introduce reinforcement learning approaches for efficiently solving constraint satisfaction problems. Adaptive behavior is achieved, for example, by the continuous adaptation of individual variable ordering heuristics with the goal to improve the performance of the problem solving behavior. Reinforcement learning does not primarily rely on datasets used for evaluation purposes but focuses more on an instance by instance training with adaptations based on the feedback of reward functions. A simple example of such a reward function is the validity of a solution or the share of violated constraints in a proposed solution. Consequently, two major roles can be observed in reinforcement learning based approaches: first, actors are responsible for the selection of the next actions (e.g., adaptation of the domain ordering heuristic for a specific variable), second, critiquing agents are responsible for estimating the reward after an action has been performed (Nareyek, 2004; Spieker & Gotlieb, 2018).
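A reward function based on the share of violated constraints, together with a simple value estimate per heuristic, can be sketched in Python as follows. The incremental averaging update is a basic bandit-style rule chosen for illustration; it is an assumption on our side and not the learning scheme of the cited approaches. All names are hypothetical.

```python
def reward(assignment, constraints):
    """1.0 for a valid solution; lower values for a higher share of violated constraints."""
    violated = sum(
        1
        for scope, pred in constraints
        if not pred(*(assignment[v] for v in scope))
    )
    return 1.0 - violated / len(constraints)

def update_estimate(estimates, counts, heuristic, r):
    """Incremental average of the rewards observed for a heuristic (critic-like role)."""
    counts[heuristic] = counts.get(heuristic, 0) + 1
    old = estimates.get(heuristic, 0.0)
    estimates[heuristic] = old + (r - old) / counts[heuristic]
```

An actor could then prefer the heuristic with the highest current estimate while occasionally trying other heuristics to keep exploring.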

Combinatorial optimization is related to the task of finding an optimal solution for a given (constrained) problem (Cappart et al., 2020; Korte & Vygen, 2000). Example algorithmic approaches to solve such problems range from solving integer linear programs to basic formulations as constraint satisfaction problems. Guerri and Milano (2004) apply decision tree learning in the context of the Bid Evaluation Problem (BEP) which is regarded as a combinatorial optimization problem. They propose the application of a decision tree to decide about the solving approach to be used for a problem instance. There are two basic problem solving approaches taken into account in the reported evaluation scenario which are constraint programming (CP) and integer programming (IP). Each entry in the machine learning training set consists of 25 features and – as an annotation – the best algorithm to solve the problem.

In the context of combinatorial optimization, Bonfietti et al. (2015) propose an approach to the integration of Decision Trees (DTs) and Random Forests (RFs) in a constraint programming model. The major motivation for this integration is that in many domains constraints and heuristics part of the model are not completely clear. The overall idea is to learn additional constraints that are then integrated into the constraint model. This approach can be regarded as a kind of empirical model learning where data from past solutions can be used to infer the mentioned classification models. In the work of Bonfietti et al. (2015), different embedding techniques are used which basically represent different ways of integrating the knowledge from decision trees and random forests into the constraint-based representation. An example thereof is a rule-based embedding where rules become part of the constraint-based representation.

Runtime prediction and optimization is important in different contexts. For example, predicting the performance of a solver given a set of solver settings and a corresponding problem instance can help to avoid suboptimal solver parametrizations but also support the optimization of algorithm parametrization, i.e., parameter tuning. Furthermore, runtime predictions in the context of solution space learning can help to efficiently estimate the applicability of a specific (constraint-based) knowledge base in interactive settings. With the goal to support the prediction of solver runtimes, Hutter et al. (2006) propose a regression-based prediction model where empirical hardness aspects derived from a problem instance are used as basic indicators for runtime estimation.

Gent et al. (2010) introduce a classification based approach to select the optimal implementation of the alldifferent constraint with the overall goal of solver performance optimization. The semantics of the alldifferent constraint associated with a set of variables \(V = \{x_{1}, x_{2}, \ldots, x_{n}\}\) can be defined as \(\forall x_{i}, x_{j} \in V, i \neq j: val(x_{i}) \neq val(x_{j})\). The features used by the classification approach are constraint and variable statistics directly derived from each given CSP instance. The training data was automatically labeled on the basis of the performance of a specific alldifferent implementation given a set of problem-specific constraint and variable statistics (features). Examples of such features are average constraint arity, proportion of constraint pairs that share more than one variable, and constraint tightness in terms of the proportion of disallowed tuples. The approach was shown to clearly outperform CSP implementations with one specific alldifferent variant.
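The semantics of alldifferent can be checked in two equivalent ways, which hints at why different implementations of the same constraint exist. The following Python sketch contrasts a pairwise check with a set-based check; both functions are illustrative assumptions and do not correspond to the propagator variants compared by Gent et al. (2010).

```python
from itertools import combinations

def all_different_pairwise(assignment, scope):
    """Literal reading of the definition: every pair of variables takes different values."""
    return all(assignment[x] != assignment[y] for x, y in combinations(scope, 2))

def all_different_set(assignment, scope):
    """Equivalent check: the number of distinct values equals the number of variables."""
    values = [assignment[v] for v in scope]
    return len(set(values)) == len(values)
```

Both functions return the same result for every complete assignment; they differ only in how the check is carried out, just as solver implementations differ in the strength and cost of their propagation.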

In contrast to the previously discussed work, Erdeniz and Felfernig (2018) show how to make the learning of CSP heuristics more applicable to interactive settings. In their approach, a dataset of already collected user requirements is forwarded to a k-means clustering process (Burkardt, 2009) which is used to group similar sets of user requirements. Thereafter, in the line of the work of Da Col and Teppan (2017), a genetic algorithm is used to determine cluster-specific heuristics that cause the best runtime behavior for the problem instances (user requirements) in the individual cluster. After having determined the cluster-specific heuristics, the resulting models (clusters) can be applied in interactive settings in the context of new user sessions: if a user specifies a set of preferences, the system can determine the most similar cluster and use the cluster-specific heuristics for determining a solution. Based on example map coloring problems (Fritsch et al., 1998; Leighton, 1979), Erdeniz and Felfernig (2018) show that their cluster-based approach outperforms settings with globally defined variable and value ordering heuristics. For visualization purposes, a simple example of learning of variable value orderings in the context of constraint-based recommendation scenarios is depicted in Tables 6, 7, 8 and 9.
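The online part of such a cluster-based approach, i.e., selecting the heuristic of the most similar cluster for a new user session, can be sketched in Python. The centroids, the numeric encoding of user requirements, and the variable orderings below are hypothetical placeholders for the output of the offline clustering and genetic algorithm phases, which are not implemented here.

```python
import math

# Hypothetical result of the offline phase: cluster centroids over encoded
# user requirements and the variable ordering found best for each cluster.
centroids = {"c1": (1.0, 0.0, 0.0), "c2": (0.0, 1.0, 1.0)}
cluster_heuristics = {
    "c1": ["price", "brand", "color"],
    "c2": ["color", "price", "brand"],
}

def nearest_cluster(requirements):
    """Cluster whose centroid has the smallest Euclidean distance to the requirements."""
    return min(centroids, key=lambda c: math.dist(centroids[c], requirements))

def heuristic_for(requirements):
    """Variable ordering heuristic of the most similar cluster."""
    return cluster_heuristics[nearest_cluster(requirements)]
```

A solver would then process the variables in the returned order when searching for a solution for the new session.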

An application of case-based reasoning (CBR) for the selection of well-performing constraint solvers is introduced by O’Mahony et al. (2013). CBR is based on the idea of exploiting existing problem solving knowledge from previous cases for solving new problems. In this context, old cases are retrieved that are similar to the new problem and the problem solving practices of the old cases are reused to solve the new one. In the approach proposed by O’Mahony et al. (2013), solvers are recommended which have a high probability of efficiently solving the given problem. The similarity between old cases and the new ones is determined on the basis of similarity metrics defined on features such as maximum constraint arity, maximum variable domain size, number of variables, number of constraints, and ratio of different types of constraints (e.g., extensional and intensional). Table 2 depicts a simple solver recommendation scenario where individual sessions reflect stored data about already completed constraint solving sessions and the task is to find an appropriate solver for the current session (scurrent).

The nearest neighbor of the current session in terms of the problem features pi is session s2; the second nearest neighbor is session s3. Since the solver chosen for session s3 showed significantly better performance, solver2 (used in session s3) should be recommended for the current session.
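The nearest-neighbor reasoning above can be sketched in a few lines. This is only an illustration under stated assumptions: the case base, the feature values, the runtimes, and the function name `recommend_solver` are hypothetical and do not reproduce Table 2 or the implementation of O’Mahony et al. (2013). In practice, features would also be normalized before distances are computed.

```python
from math import dist

# Hypothetical case base of completed solving sessions.
# Features (illustrative): max arity, max domain size, #variables, #constraints.
CASES = [
    {"id": "s1", "features": (5, 20, 200, 500), "solver": "solver1", "runtime": 30.0},
    {"id": "s2", "features": (3, 8, 42, 100), "solver": "solver1", "runtime": 9.0},
    {"id": "s3", "features": (3, 9, 44, 103), "solver": "solver2", "runtime": 1.5},
]

# Hypothetical features of the current session.
QUERY = (3, 8, 40, 100)


def recommend_solver(cases, query, k=2):
    """Retrieve the k most similar cases and reuse the solver of the fastest one."""
    nearest = sorted(cases, key=lambda case: dist(case["features"], query))[:k]
    best = min(nearest, key=lambda case: case["runtime"])
    return best["solver"]


print(recommend_solver(CASES, QUERY, k=2))
```

With k = 2, the two retrieved cases are s2 and s3, and the solver of the faster case (s3) is reused.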

Constraint learning

Constraint learning (Raedt et al., 2018) in the context of knowledge acquisition scenarios (i.e., not during solution search) can be interpreted as the inductive learning task of identifying a constraint set that supports a training/test dataset. This problem is different from learning processes within the scope of a solver session where the learned constraints are primarily used to improve solver performance (Gent et al., 2010). Learning constraints from data is a relevant approach to tackle the knowledge acquisition bottleneck, which can be a major obstacle when deploying constraint-based systems in industrial contexts. Another example task of constraint learning is the learning of preferences which is used, for example, when learning search heuristics for improving the prediction quality of a solver (Erdeniz et al., 2019). Constraints can also be learned in an interactive fashion within the scope of so-called active learning processes where the machine learning component is not limited to the analysis of a given dataset but also interacts with expert users who give feedback on the relevance of learned constraints. In this line of research, human computation based approaches to constraint learning (Ulz et al., 2016) are also used to exploit the feedback of expert users.

4.2 SAT solving

In the SAT solving literature, there are two basic categories of methods for integrating machine learning for the purpose of problem solving (Zhang et al., 2020). First, machine learning can be used directly to solve individual SAT instances, i.e., given a SAT problem as input, the machine learning algorithm learns to solve the problem instance itself (see, for example, Galassi et al., 2018). Second, so-called heuristic methods use machine learning for the purpose of predicting relevant heuristics which are then used by solvers to find a solution for a given instance (Zhang et al., 2020). Beyond solution search, there are further applications of machine learning techniques in SAT solving, which include predicting satisfiability and selecting the best algorithm for solving a given problem instance. Related scientific contributions will be discussed in the following paragraphs.

The task of algorithm selection is to select the best algorithm to solve a specific problem instance. An in-depth survey of algorithm selection techniques is given in Kotthoff (2014). One of the most prominent environments supporting algorithm selection is SATzilla (Xu et al., 2008). It can be regarded as one of the first systems promoting the idea of algorithm portfolios as a reasonable alternative to the optimization of individual algorithms. The application context of SATzilla is SAT solving scenarios where runtime per problem instance is used as the evaluation criterion (see also Haim and Walsh, 2009, where machine learning is applied to determine solver restart strategies). Algorithm portfolios can be static (a portfolio is comprised of a fixed set of algorithms, which comes along with limitations regarding flexibility) or dynamic (algorithms in a portfolio can be modified, e.g., on the basis of parametrization). A dynamic approach proposed in the context of constraint solving is the Adaptive Constraint Engine (ACE) (Epstein & Freuder, 2001) which supports the adaptive selection of variable orderings based on a multi-tier adaptive voting architecture with the goal to optimize solution search. Related approaches to heuristic selection in SAT solving are proposed in Ansótegui et al. (2009) and Samulowitz and Memisevic (2007). Finally, Da Col and Teppan (2017) show how to apply genetic algorithms to automatically figure out optimal search heuristics (in terms of variable orderings, value orderings, and pruning strategies) for a set of predefined benchmark problems.

An approach to the application of neural networks for classifying SAT problems in terms of satisfiability is introduced by Selsam et al. (2019). In this context, a SAT problem is encoded as an undirected graph where each node represents one literal of the SAT problem. Furthermore, each clause is represented as a node of the neural network which is connected to those literals that are part of the clause. In this scenario, the neural network can be applied for two purposes: first, it can be applied for predicting the satisfiability of a given SAT problem. Second, it can be applied for determining a solution. If one exists, it can be directly derived from the individual activation level of the nodes in the neural network. The proposed approach is also flexible in terms of solving SAT problems in different domains such as graph coloring (e.g., in map coloring, no two adjacent map areas have the same color) and clique detection (e.g., in social network analysis, the goal could be to identify subgraphs of people where everybody knows each other). Another classification approach for estimating the satisfiability of a given SAT problem is discussed in Bünz and Lamm (2017). Regarding the used structural properties of SAT formulae, the authors point out that neural networks fail to produce a different output given certain highly particular symmetries between two different inputs (SAT problems).
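The graph encoding described above (literal nodes connected to clause nodes) can be illustrated as follows. This is a minimal sketch of the data structure only, not of the neural network of Selsam et al. (2019). The DIMACS-style integer encoding of literals, the node naming scheme, and the function name `literal_clause_graph` are assumptions made for illustration.

```python
def literal_clause_graph(clauses):
    """Build an undirected graph connecting literal nodes and clause nodes.

    clauses: list of clauses, each a list of non-zero integers
             (3 stands for x3, -3 for the negated literal of x3).
    Returns a dict mapping each node name to the set of its neighbors.
    """
    graph = {}
    for index, clause in enumerate(clauses):
        clause_node = f"c{index}"
        graph.setdefault(clause_node, set())
        for literal in clause:
            literal_node = f"x{literal}" if literal > 0 else f"~x{-literal}"
            # Undirected edge: store it in both directions.
            graph.setdefault(literal_node, set()).add(clause_node)
            graph[clause_node].add(literal_node)
    return graph


# (x1 or not x2) and (x2 or x3)
GRAPH = literal_clause_graph([[1, -2], [2, 3]])
for node in sorted(GRAPH):
    print(node, sorted(GRAPH[node]))
```

A message-passing network would then exchange information along these edges; that part is omitted here.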

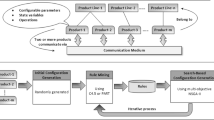

In many application contexts, there is no single solver that provides the optimal performance for a range of different problem settings (Loreggia et al., 2016). The goal of algorithm selection is to identify the best problem solving approach (algorithm) for a given problem at hand. Such an algorithm selection is often based on a manually defined set of features which are then used by different machine learning techniques to predict the optimal solver for a given problem. The deep learning approach presented in Loreggia et al. (2016) supports the automated determination of an informative set of machine learning features thus making the algorithm selection a completely automated process. Interestingly, the deep learning approach is based on the idea of transforming input text files representing problem instances into corresponding grayscale square images that serve as an input for the training of the neural network. The presented approach outperforms single solvers. A basic example of a related neural network architecture supporting the selection of solvers on the basis of a given set of problem properties (features) is depicted in Fig. 2.

The term phase transition denotes the transition from a region of search spaces where the large majority of problems have many solutions (the problems are under-constrained) to a region where the large majority of problems have no solution (the problems become increasingly over-constrained). Xu et al. (2012) introduce a decision tree based classification approach that supports the classification of SAT problems with regard to satisfiability. The classification approach is based on a set of features derived from a given SAT problem. An example of such a feature is the average imbalance of the non-negated and negated occurrences of SAT variables. Following a similar idea, Yolcu and Pó (2019), Kurin et al. (2019), and Liang et al. (2016) introduce reinforcement learning approaches with the goal of improving the performance of SAT solving through the repeated adaptation of search heuristics.
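As an illustration of the kind of feature mentioned above, the following sketch computes an average imbalance between non-negated and negated variable occurrences. The exact definition is an assumption made for illustration (per variable, |pos − neg| / (pos + neg), averaged over all occurring variables); the feature used by Xu et al. (2012) may be defined differently.

```python
from collections import Counter


def average_imbalance(clauses):
    """Average per-variable imbalance of positive vs. negative occurrences.

    clauses: list of clauses, each a list of non-zero integers
             (positive = non-negated literal, negative = negated literal).
    Returns a value in [0, 1]; 0 means perfectly balanced occurrences.
    """
    positive, negative = Counter(), Counter()
    for clause in clauses:
        for literal in clause:
            (positive if literal > 0 else negative)[abs(literal)] += 1
    variables = set(positive) | set(negative)
    if not variables:
        return 0.0
    total = sum(
        abs(positive[v] - negative[v]) / (positive[v] + negative[v])
        for v in variables
    )
    return total / len(variables)


# x1 occurs only non-negated (imbalance 1), x2 is balanced (imbalance 0).
print(average_imbalance([[1, 2], [1, -2]]))
```

Features of this kind would be computed per instance and passed to a classifier such as a decision tree.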

With the focus of predicting the satisfiability of individual 3-SAT problems (maximum three literals per clause), Cameron et al. (2020) introduce a deep neural network based approach based on the concept of end-to-end learning (all model parameters are learned at the same time). In this context, the authors introduce two different network architectures, one focusing on the prediction of satisfiability, the other one supporting the prediction of satisfiable variable assignments. These variants were evaluated on SAT instances ranging from 100 to 600 variables and showed an increased prediction accuracy compared to related work (Xu et al., 2012). For visualization purposes, we provide a simple example of a neural network architecture (see Fig. 1).

4.3 Answer set programming (ASP)

An approach to solver selection in the context of ASP scenarios is introduced by Gebser et al. (2011). More precisely, the authors show how to select a specific solver configuration given a specific answer set program (ASP). The problem features used by the machine learning approach (support vector regression) are, for example, number of constraints, number of variables, average backjump length, and length of learned clauses. These features are used to map a given problem to the most promising solver configuration.

Yang et al. (2020) present a framework (NeurASP) for the integration of neural networks into answer set programs. The underlying idea is to exploit the output of a neural network for the purpose of ranking atomic facts in answer set programs. As mentioned by the authors, this integration is a good example of approaches combining symbolic AI (ASP) and sub-symbolic AI (neural networks). It helps to exploit synergy effects by combining the strengths of both worlds. For example, in the context of solving Sudoku, neural networks can be used to recognize the digits on the game board whereas ASP logic can be used to represent the constraints of the game and to find solutions. The approach of Yang et al. (2020) easily generalizes to different Sudoku instances.

As mentioned, there is rarely one specific problem solving approach/algorithm that performs best on all instances of a given set of problem instances. More often, the best-performing algorithm differs depending on the problem setting. In the line of the research of Gebser et al. (2011), Maratea et al. (2012) present a classification-based (among others, nearest neighbor based classification) machine learning approach to per-instance solver selection in a multi ASP solver environment. Examples of features used by the classification approach are the following: number of rules, number of atoms, share of unary/binary/ternary rules, and number of true facts. For visualization purposes, we provide a simple example of a related neural network architecture (see Fig. 2).

Fig. 2 A simple neural network architecture with one hidden layer that can be used for learning solver selection (solveri) based on basic properties (propj) of a given ASP. Examples of such properties (features) are: number of rules in the ASP, number of atoms, share of unary/binary/ternary rules, and number of true facts.
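To make this kind of architecture concrete, the following sketch shows the forward pass of a network with one hidden layer that maps problem properties to a probability per solver. All weights are untrained placeholder values, and the names `SOLVERS`, `solver_probabilities`, and `select_solver` are hypothetical. Learning the weights from solved instances is omitted.

```python
import math

SOLVERS = ["solver1", "solver2"]

# Placeholder (untrained) weights: 4 input properties -> 3 hidden units -> 2 solvers.
W_HIDDEN = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
B_HIDDEN = [0.0, 0.0, 0.0]
W_OUT = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
B_OUT = [0.0, 0.0]


def dense(weights, biases, inputs):
    """One fully connected layer: weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def solver_probabilities(props):
    """Forward pass: ReLU hidden layer followed by a softmax output layer."""
    hidden = [max(0.0, h) for h in dense(W_HIDDEN, B_HIDDEN, props)]
    logits = dense(W_OUT, B_OUT, hidden)
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_solver(props):
    """Pick the solver with the highest predicted probability."""
    probs = solver_probabilities(props)
    return SOLVERS[probs.index(max(probs))]


# Hypothetical normalized properties (e.g., #rules, #atoms, share of binary rules, #facts).
print(select_solver([0.5, 0.2, 0.8, 0.1]))
```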

4.4 Configuration

Highly-variant products, services, and systems allow for a potentially huge number of possible configurations (Felfernig et al., 2014; Felfernig et al., 2021). The corresponding configuration space could also allow faulty configurations simply due to the fact that some relevant constraints have not been integrated into the configuration model. Examples of such faulty configurations are non-compilable software components and non-producible products. Configuration problems are typically represented on the basis of the already discussed approaches of constraint satisfaction, SAT solving, and answer set programming (ASP). In the context of feature model development, Temple et al. (2016) introduce a classification-based (decision trees) learning approach that supports the derivation of additional constraints that help to reduce the space of possible configurations. In their approach, an oracle is used to estimate the correctness of generated configurations. These annotated configurations are then used to build a decision tree that classifies correct and faulty configurations depending on the configuration-individual attribute values. This decision tree is then used for the derivation of the mentioned additional constraints. Intuitively, new relevant constraints can be extracted by negating those decisions that led to faulty configurations (Temple et al., 2016). In a related work, Temple et al. (2017) propose a machine learning approach that helps to relate context information (represented in terms of feature selections) with corresponding parts of a software and thus replaces manual modeling efforts. Based on the criteria of runtime performance of generated configurations, these problem instances (configurations) can be evaluated as acceptable or unacceptable. On the basis of this annotated dataset, decision trees can be learned which again serve as a basis for the derivation of additional constraints that reduce the search space.
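The derivation of additional constraints from a learned decision tree, as described above for Temple et al. (2016), can be sketched as follows. The tree, the feature names f1 and f2, and the string representation of constraints are hypothetical. The sketch assumes the tree has already been learned and only shows how paths to faulty leaves are negated.

```python
# Hypothetical learned tree over Boolean features.
# Inner node: (feature, subtree_if_deselected, subtree_if_selected); leaf: "ok" or "faulty".
TREE = ("f1", "ok", ("f2", "ok", "faulty"))


def faulty_paths(tree, path=()):
    """Collect all root-to-leaf decision paths that end in a 'faulty' leaf."""
    if isinstance(tree, str):
        return [path] if tree == "faulty" else []
    feature, if_deselected, if_selected = tree
    return (faulty_paths(if_deselected, path + ((feature, False),))
            + faulty_paths(if_selected, path + ((feature, True),)))


def to_constraints(paths):
    """Negate each faulty path: not (l1 and l2 ...) becomes (not l1 or not l2 ...)."""
    constraints = []
    for path in paths:
        literals = [f"not {feature}" if selected else feature
                    for feature, selected in path]
        constraints.append(" or ".join(literals))
    return constraints


print(to_constraints(faulty_paths(TREE)))
```

Here the only faulty leaf is reached when both f1 and f2 are selected, so the derived constraint excludes exactly this combination.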

In the context of configuring highly-variant products with around 40 different product properties, Uta and Felfernig (2020) introduce a machine learning approach that helps to predict variable values a user would regard as relevant. The learning approach is based on a three-layer neural network with one input, output, and hidden layer. In the presented approach, variable value predictions do not take into account domain constraints which can result in situations where the recommendations can become inconsistent with the underlying product model. Related approaches based on recommendation techniques are summarized a.o. in Falkner et al. (2011) where techniques such as collaborative filtering (Konstan et al., 1997) are applied to predict variable values of relevance for a user. A basic method to avoid inconsistencies between recommended variable values and the underlying product domain knowledge is to avoid direct variable value predictions but forward value selection probabilities to a configurator (e.g., a constraint solver) which can integrate this information, for example, in terms of variable value ordering heuristics.

Writing a paper, a project proposal, or a curriculum vitae that meets different criteria such as obligatory contents, maximum and minimum sizes of paragraphs, and upper and lower bounds in terms of pages and words used in a document is often a challenging task. Reasons for making such tasks challenging are time pressure when submitting the document or simply cognitive overheads that are triggered by complex rules regarding the correct format of a submitted document. In order to tackle these challenges, Acher et al. (2018) introduce VaryLaTeX which enriches LaTeX document editing tasks with the concepts of variability modeling (in terms of feature models) and binary classification which classifies a configured document as acceptable or unacceptable. Features used by the classification are all variability properties defined for the document under consideration.

For an overview of the application of machine learning and recommender systems techniques in configuration scenarios (especially in feature-modeling related scenarios), we refer to Felfernig et al. (2021). The example depicted in Table 3 sketches a basic approach for value prediction in configuration scenarios. For example, a user interacting with a configurator has already specified some parameters and the task is to recommend values for parameters that have not been specified up to now. Following the principles of collaborative filtering (Konstan et al., 1997), the nearest neighbor of the current user (ucurrent) is the user u1. His/her preference with regard to the variable x5 is 3. Consequently, 3 is taken as recommendation for variable x5 for user ucurrent.
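The nearest-neighbor value prediction described above can be sketched as follows. The session data are hypothetical and do not reproduce Table 3. The similarity measure (share of identical values on commonly specified variables) and the names `similarity` and `recommend` are assumptions made for illustration.

```python
# Hypothetical completed configuration sessions: variable -> selected value.
SESSIONS = {
    "u1": {"x1": 1, "x2": 2, "x3": 1, "x4": 2, "x5": 3},
    "u2": {"x1": 2, "x2": 1, "x3": 3, "x4": 1, "x5": 1},
    "u3": {"x1": 1, "x2": 3, "x3": 2, "x4": 3, "x5": 2},
}

# The current user has specified only some of the variables.
CURRENT = {"x1": 1, "x2": 2, "x3": 1}


def similarity(a, b):
    """Share of commonly specified variables on which both sessions agree."""
    shared = [variable for variable in a if variable in b]
    if not shared:
        return 0.0
    return sum(a[v] == b[v] for v in shared) / len(shared)


def recommend(sessions, current, variable):
    """Return the nearest neighbor and its value for the requested variable."""
    candidates = {user: s for user, s in sessions.items() if variable in s}
    nearest = max(candidates, key=lambda user: similarity(current, candidates[user]))
    return nearest, candidates[nearest][variable]


print(recommend(SESSIONS, CURRENT, "x5"))
```

As discussed in the text, such a prediction ignores domain constraints, so a recommended value may still have to be checked by a configurator.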

4.5 Diagnosis

If no solution can be identified for a given constraint set C = {c1,c2,..,cn}, one or more conflicts exist in C. In this context, a minimal conflict (set) \(CS \subseteq C\) represents a minimal set of constraints which is inconsistent (Junker, 2004), i.e., inconsistent(CS). If CS is minimal, only one constraint has to be deleted from CS to restore consistency. If C entails more than one conflict, each individual conflict has to be resolved to restore consistency in C. A minimal set of constraints that needs to be deleted from C to restore consistency is also denoted as minimal hitting set (for the given conflict sets) or minimal diagnosis (Felfernig et al., 2012; Reiter, 1987). A diagnosis (often denoted as Δ) is minimal if \(\neg \exists {\varDelta }^{\prime }:{\varDelta }^{\prime } \subset {\varDelta }\) and \({\varDelta }^{\prime }\) is still a diagnosis.
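The relationship between conflict sets and minimal diagnoses (minimal hitting sets) can be illustrated with a brute-force enumeration. This sketch is exponential in the number of constraints and is meant only to make the definitions above concrete; it is not one of the diagnosis algorithms from the cited work, and the function name `minimal_diagnoses` is hypothetical.

```python
from itertools import combinations


def minimal_diagnoses(conflicts):
    """Enumerate all minimal hitting sets for the given conflict sets.

    conflicts: list of sets of constraint names.
    Candidates are tested in order of increasing size, so any candidate that
    contains an already found diagnosis is non-minimal and is skipped.
    """
    constraints = sorted(set().union(*conflicts))
    found = []
    for size in range(len(constraints) + 1):
        for candidate in combinations(constraints, size):
            candidate = set(candidate)
            hits_all = all(candidate & conflict for conflict in conflicts)
            if hits_all and not any(d <= candidate for d in found):
                found.append(candidate)
    return found


# Two conflicts sharing constraint c2.
print(minimal_diagnoses([{"c1", "c2"}, {"c2", "c3"}]))
```

For these two conflicts, deleting c2 alone resolves both, while {c1, c3} is a second minimal diagnosis that resolves one conflict per deleted constraint.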

In most scenarios, there exists more than one diagnosis for a given inconsistent constraint set C (e.g., a CSP). In such cases, we need to find the most relevant diagnosis, for example, a diagnosis that is relevant for a user with high probability. Erdeniz et al. (2018) introduce an approach based on clustering and genetic algorithms that helps to optimize the prediction quality for diagnoses, which is especially relevant in interactive settings. Assuming the existence of a dataset comprised of sets of inconsistent user requirements, clustering can be applied to form individual groups of similar (inconsistent) user requirements. On each of the individual clusters, a genetic algorithm can determine a cluster-specific importance ranking of constraints (user requirements) that helps to maximize the diagnosis prediction quality.

For diagnosis purposes, Erdeniz et al. (2018) apply a specific variant of FastDiag (Felfernig et al., 2012) which is a divide-and-conquer based direct diagnosis algorithm. FastDiag is based on a constraint ordering that has an impact on the determined diagnosis. For example, if we assume C = [c1,c2,c3] (ordered set notation) and there exists a conflict CS = {c1,c3}, the resulting diagnosis would be Δ = {c1}. If we assume C = [c3,c2,c1], the corresponding diagnosis determined by FastDiag would be Δ = {c3}. In the approach of Erdeniz et al. (2018), optimal cluster-specific constraint orderings are determined by a genetic algorithm. The optimization criterion in this context is prediction quality, for example, in terms of precision which is \(\frac{|\text{correctly predicted diagnoses}|}{|\text{predictions}|}\). In a new user session where user requirements become inconsistent, the cluster most similar to the current set of user requirements has to be identified. Using the cluster-specific constraint ordering, FastDiag can be activated with the current set of inconsistent user requirements. More details about the used diagnostic approach can be found in Felfernig et al. (2012) and Felfernig et al. (2018). A further development of the approach of Erdeniz et al. (2018) is introduced by Erdeniz et al. (2019) where the task of adaptive constraint ordering (in diagnosis contexts) is represented as a matrix factorization task (Koren et al., 2009).
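The influence of the constraint ordering can be reproduced with a small divide-and-conquer sketch in the spirit of FastDiag. It follows our reading of the scheme in Felfernig et al. (2012) and is not the specific variant used by Erdeniz et al. (2018). The consistency check is a hypothetical stand-in for a solver call: a constraint set counts as consistent if it contains no known conflict. The initial guard for an already consistent constraint set is an addition for robustness.

```python
def make_consistency_check(conflicts):
    """Hypothetical oracle replacing a solver: consistent iff no known conflict is contained."""
    def consistent(constraints):
        present = set(constraints)
        return not any(conflict <= present for conflict in conflicts)
    return consistent


def fastdiag(c, ac, consistent):
    """c: ordered list of diagnosable constraints; ac: list of all constraints."""
    remaining = [x for x in ac if x not in c]
    if not c or consistent(ac) or not consistent(remaining):
        return []
    return _fd([], c, ac, consistent)


def _fd(d, c, ac, consistent):
    # If removing d already restored consistency, nothing in c must be deleted.
    if d and consistent(ac):
        return []
    if len(c) == 1:
        return list(c)
    k = len(c) // 2
    c1, c2 = c[:k], c[k:]
    d1 = _fd(c1, c2, [x for x in ac if x not in c1], consistent)
    d2 = _fd(d1, c1, [x for x in ac if x not in d1], consistent)
    return d1 + d2


check = make_consistency_check([{"c1", "c3"}])
print(fastdiag(["c1", "c2", "c3"], ["c1", "c2", "c3"], check))
print(fastdiag(["c3", "c2", "c1"], ["c3", "c2", "c1"], check))
```

With the conflict CS = {c1,c3}, the two orderings from the example in the text lead to the diagnoses {c1} and {c3}, respectively.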

A simple example of the impact of different constraint orderings on the outcome of a diagnosis task is depicted in Tables 4 and 5. In this example, we assume a knowledge base consisting of the constraints C = {c1 : x1≠x2,c2 : x2≠x3} and the additional constraints {c3,c4,c5} representing, for example, user requirements. In Table 4, the constraint with the lowest importance is assumed to be c3 whereas in Table 5, the constraint with the lowest importance is assumed to be c5. The different rankings result in different corresponding diagnoses (Δ1,Δ2). In Tables 4 and 5, \(\underline {\times }\) indicates that the corresponding constraint has been selected for conflict resolution, i.e., is part of a diagnosis Δi.

4.6 Constraint-based recommendation

Constraint-based recommendation (Felfernig and Burke, 2008; Grasch et al., 2013; Zanker et al., 2007) is based on the idea of encoding recommendation knowledge into a set of constraints or rules which decide about the acceptability of individual items. Consequently, in their pure form, constraint-based recommender systems are quite similar to knowledge-based configuration systems. A major difference is the underlying solution space which is typically quite large in the context of configurator applications (Alves Pereira et al., 2019) but rather small in recommendation scenarios where the different items (products) are enumerated in terms of constraints describing a product catalog (Felfernig & Burke, 2008). Constraint-based recommender systems in their basic form do not provide a means of ranking individual recommendation candidates. In order to support the ranking of recommendation candidates, Felfernig et al. (2006) show how to integrate the concepts of utility-based recommendation with constraint-based reasoning by adding an additional evaluation/ranking phase that follows an initial candidate item selection process performed, for example, by a constraint solver. Following a similar goal, Zanker (2008) proposes an approach to derive preference knowledge from a collaborative filtering process (preferences are derived from a user's nearest neighbors) and exploit the learned preferences in a semantic-level recommender system.

More advanced approaches are able to rank individual solutions where recommendation knowledge is encoded in the search heuristics of the used constraint solver. Erdeniz et al. (2019) introduce a novel approach to preference learning in the context of constraint-based recommendation scenarios. In this work, value ordering heuristics are learned on the basis of previous user interactions. The underlying idea is to apply the concepts of matrix factorization (Koren et al., 2009) to support the determination of individual attribute value recommendations for the current user. Matrix factorization (Koren et al., 2009) is based on the idea of approximating the original (potentially sparse) matrix R by two lower-rank matrices U and V where \(U\times V = R^{\prime }\) and \(R^{\prime }\) (the resulting dense matrix) approximates R. The idea introduced by Erdeniz et al. (2019) is to learn individual user preferences on the basis of historical data and to apply the acquired knowledge for determining recommendations for a new user. After a new user has specified a couple of preferences, the nearest neighbors of the current user can be determined by comparing the preferences of the current user with the corresponding attribute value selections of the nearest neighbors.

In the following, we will explain the approach of learning search heuristics proposed by Erdeniz et al. (2019) on the basis of a simple example. Table 6 represents the history of already completed user sessions where xi.vj = 1 denotes the fact that a user selected the attribute value vj of variable xi (in machine learning, also denoted as one-hot encoding).

We are now interested in performing a factorization between two low-rank matrices U and V that can approximate R. The approximation of R is \(R^{\prime }=U\times V\) (see Tables 7 and 8).

Finally, the approximation of R which is \(R^{\prime }\) is depicted in Table 9. Given a new user (ucurrent in Table 9) who has already specified a set of attribute values, a nearest neighbor (or a set of nearest neighbors) NN can be identified in \(R^{\prime }\) (in our example, NN = {u1}) and the corresponding table entries (matrix factorization predictions) can be used for specifying the value orderings for determining a solution for the current user (see Table 9). Note that this approach does not directly predict values of relevance for a user but is used to derive a variable domain ordering that can be used by a constraint solver to derive a preferred solution.
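The step from the approximated matrix \(R^{\prime }=U\times V\) to value orderings can be sketched as follows. The factor matrices and column names are hypothetical and do not reproduce Tables 7, 8, and 9. Learning U and V (for example, by gradient descent on the known entries of R) is omitted, and the function names are assumptions made for illustration.

```python
# Hypothetical one-hot columns of R and R': attribute value v_j of variable x_i.
COLUMNS = ["x1.v1", "x1.v2", "x2.v1", "x2.v2"]

# Hypothetical low-rank factors (2 users x 2 factors, 2 factors x 4 columns).
U = [[0.9, 0.1],
     [0.2, 0.8]]
V = [[1.0, 0.0, 0.8, 0.2],
     [0.1, 0.9, 0.3, 0.7]]


def matmul(u, v):
    """Plain matrix product U x V, yielding the dense approximation R'."""
    return [[sum(u[i][k] * v[k][j] for k in range(len(v)))
             for j in range(len(v[0]))]
            for i in range(len(u))]


def value_orderings(columns, scores):
    """Per variable, order its values by descending predicted score."""
    per_variable = {}
    for column, score in zip(columns, scores):
        variable, value = column.split(".")
        per_variable.setdefault(variable, []).append((score, value))
    return {variable: [value for _, value in sorted(pairs, key=lambda p: -p[0])]
            for variable, pairs in per_variable.items()}


R_APPROX = matmul(U, V)
# Use the row of the nearest neighbor (here the first user) as value ordering heuristic.
print(value_orderings(COLUMNS, R_APPROX[0]))
```

The resulting orderings are handed to the constraint solver as value ordering heuristics, so the solver still guarantees consistency of the final solution.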

5 Research issues

The integration of high-level reasoning in terms of different symbolic AI based approaches with sub-symbolic reasoning is not new but remains an open challenge in AI-related research areas (Manhaeve et al., 2018). In the following, we summarize major issues for future research specifically related to the topic of integrating machine learning with constraint solving.

Algorithm Selection

In constraint solving scenarios, machine learning has the potential to significantly improve the overall performance of solvers, for example, in terms of lower runtimes, but also in terms of an increased prediction quality. Important to mention is that machine learning techniques helped to change the focus from optimizing individual solvers (algorithms) to the development of algorithm selection architectures that flexibly select the best fitting algorithm (or algorithm bundle) – this approach also helps to improve flexibility and applicability in the context of new problem settings. Quite in line with this, constraint solving benchmarks and competitions help to foster new developments on the level of individual constraint solvers as well as on the level of algorithm selection and parametrization. A research issue in the context of algorithm selection is to take into account criteria beyond solver runtime performance, for example, solvers should also be able to predict user preferences – well-established techniques that can serve as a starting point can be found in recommender systems related research (Falkner et al., 2011).

Evaluation Metrics for Constraint-based Systems

Predominant evaluation criteria used in the constraint solving literature are performance-related, for example, the time needed to find a solution, and accuracy-related, for example, classifying the satisfiability of a given SAT problem. Only a few approaches focus on aspects that go beyond basic performance analyses and classification accuracy (Erdeniz et al., 2019). Especially in the context of interactive constraint-based applications (e.g., configurators), prediction quality also has to be measured in terms of the fit of a proposed solution with the wishes and needs of a user. Such evaluations are typically conducted on the basis of datasets that provide a so-called ground truth or gold standard. A major issue for future work is to define evaluation metrics that cover a broad range of different scenarios and thus form the basis of a more detailed analysis of the quality of constraint-based systems. Compared to algorithm evaluation techniques existent, for example, in recommender systems (Gunawardana and Shani, 2015), evaluation metrics for constraint-based systems still need to be developed or at least adapted and/or extended. For example, prediction quality can be measured in terms of the share of correct item predictions. In constraint-based scenarios, there are different types of prediction tasks that need to be covered by evaluation metrics. For example, constraint solvers can be used to predict/propose values for single variables but also values for groups of variables and in the “extreme” case propose completions, i.e., value settings for variables that have not been instantiated up to now. Diagnosis scenarios are in a similar situation in that they need standard evaluation metrics that help to increase comparability. For example, diagnosis in constraint-based applications is often used in interactive settings to proactively support users in finding a way out of the “no solution could be found” dilemma (Felfernig et al., 2012). Evaluation metrics needed in such contexts go beyond standard metrics and should enable, for example, the measurement of diagnosis minimality.

Learning Configuration Spaces

Learning configuration/solution spaces is an important aspect to be taken into account in the context of developing constraint-based systems (Alves Pereira et al., 2019). For example, in the context of software engineering scenarios, variability aspects are often represented in terms of feature models which specify functional aspects in terms of how individual components can be combined with each other (e.g., operating system packages or source components in the compilation phase). In the sampling phase of such systems, solutions have to be identified that (1) satisfy the constraints specified in the feature model but (2) also take into account non-functional criteria (often not covered by the feature model) such as runtime and stability of the generated solutions. Since it is not possible to test the whole configuration space (specifically for reasons of time limitations), a major research challenge is to optimize individual sampling strategies in order to provide a stable dataset that allows machine learning to build a generalized model. In this context, research questions such as what is the optimal sample size? and how can we measure the overall quality of a given sample? have to be answered. For an in-depth overview of applications and open research issues in configuration space learning we refer to Alves Pereira et al. (2019).

Testing and Debugging Constraint-based Systems

Similar to Software Engineering scenarios, testing and debugging also plays a major role in the development of constraint-based systems (Felfernig et al., 2004). For example, before deploying a configuration system in a productive environment, knowledge engineers have to assure the correctness of the underlying configuration knowledge base. Faulty configurations contradicting the product domain knowledge can lead to situations where even faulty products are delivered to a customer. In order to avoid such situations, intelligent testing and debugging mechanisms are needed to help to automatically identify faulty parts in a knowledge base. Existing approaches to the automated testing and debugging of constraint-based systems focus on a consistency-based approach where predefined test cases derived, for example, from already completed configuration sessions, are used for inducing conflicts in a knowledge base. Corresponding diagnoses indicate potential sources of the faulty behavior in the knowledge base (Felfernig et al., 2004). A research issue in this context is how to automatically generate test cases. Machine learning concepts can support the automated generation of test cases by learning to predict faulty regions in a knowledge base on the basis of insights from already existing development and maintenance iterations. In a similar fashion, such concepts should help to indicate potentially faulty regions of a knowledge base that should be tested more intensively compared to other parts of the knowledge base. Features of relevance for machine learning in this context can come from different sources such as development process information (e.g., who worked how long on the adaptation of which constraints and which constraints have not been tested for quite a long time) and basic complexity metrics for knowledge bases (Felfernig, 2004).

Constraint-aware Machine Learning

Typical machine learning approaches focus on building a prediction model on the basis of a given set of training data. Given a new user, for example, the user of a recommender system, predictions are based on the model derived from a dataset. Especially in the context of constraint solving, the defined constraints must be taken into account and – as a consequence – machine learning must be combined with the constraint solver in one way or another. More generally, there are different ways to take into account constraints in scenarios in which we want to combine constraint solving with machine learning techniques. First, constraint solving can be regarded as its own layer accepting the input of an upstream machine learning phase. An example thereof is that a neural network determines context-specific search heuristics and the solver applies these heuristics in a problem-dependent fashion. Second, constraint violations can be used as a penalty function in machine learning processes. For example, if we want to predict attribute settings that will be of relevance for a user, we could do this even without activating the constraint solver. Including constraint violations as penalties in machine learning optimization functions helps to build prediction models that implicitly take into account (explicitly mentioned) constraints, however, there is no absolute guarantee that with such an approach predictions are always correct. Third, constraint enforcing approaches go one step further and encode domain constraints directly into the machine learning model. In neural networks, dependencies (constraints) can be defined between individual node activations. For example, the constraint \(a \leftrightarrow b\) can be directly translated into constraints referring to node activations \(highactivation(a) \leftrightarrow highactivation(b)\). 
Fourth, data sampling and augmentation can be used to increase the “quality” of a dataset and thus help to increase the prediction quality of the learned model (Alves Pereira et al., 2019). In this context, important issues for future research include efficient ways to encode constraints into machine learning models in such a way that constraint solving can be (partially) replaced by machine learning (and vice versa). A related research question is to what extent reasoning on top of a constraint solver, for example, in the form of a model-based diagnosis task, can be directly encoded into a corresponding machine learning model.
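The second option above, using constraint violations as a penalty, can be sketched as follows. This is a minimal illustration under stated assumptions and not an implementation taken from the cited work: the predicted activations \(p_a, p_b \in [0,1]\), the soft violation measure \(|p_a - p_b|\) for the constraint \(a \leftrightarrow b\), and the names `bce`, `equivalence_violation`, `penalized_loss`, and `weight` are all hypothetical choices made for this example.

```python
import math

def bce(p, y):
    """Binary cross-entropy of a predicted probability p against a label y in {0, 1}."""
    eps = 1e-12  # avoids log(0)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))

def equivalence_violation(p_a, p_b):
    """Soft violation of a <-> b: 0 if both activations agree, 1 if they fully disagree."""
    return abs(p_a - p_b)

def penalized_loss(p_a, p_b, y_a, y_b, weight=1.0):
    """Prediction loss plus a weighted penalty for violating a <-> b (assumed form)."""
    return bce(p_a, y_a) + bce(p_b, y_b) + weight * equivalence_violation(p_a, p_b)

# Training labels that contradict a <-> b: the penalty pushes against fitting them.
print(penalized_loss(0.9, 0.1, 1, 0, weight=0.0))
print(penalized_loss(0.9, 0.1, 1, 0, weight=2.0))
```

The penalty only makes violations more expensive during training; a prediction with \(p_a\) high and \(p_b\) low remains possible, which is why a penalty-based approach cannot guarantee constraint-consistent predictions.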

Explanations

Combining constraint solving with machine learning allows both areas to profit from each other. On the one hand, constraint solving has to deal with situations where different explanations are possible and it is important to figure out the most relevant ones for a user (Felfernig et al., 2009). For example, in interactive constraint-based systems such as configurators, it is important to provide mechanisms that help users to get out of “no solution could be found” dilemmas. Typically, there are many explanation candidates, and learning from past user interactions can help to significantly improve the prediction quality of explanations. On the other hand, machine learning approaches such as neural networks lack explanation capabilities (Darwiche & Hirth, 2020), i.e., they are not able to provide deep explanations that help to understand the proposed solutions. Combining deep learning with knowledge-based approaches can help to make machine learning based solutions more accessible for users. Consequently, explanation-related research in both areas can profit from each other in terms of (1) exploiting machine learning for predicting relevant explanations and (2) exploiting symbolic AI based approaches to support semantic reasoning about explanations.
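How past user interactions might be used to order explanation candidates can be sketched in a few lines. The following is a deliberately simple frequency-based stand-in for a learned model and not the approach of Felfernig et al. (2009). The session data, the requirement names, and the functions `learn_relaxation_counts` and `rank_explanations` are hypothetical and serve only as an illustration.

```python
from collections import Counter

def learn_relaxation_counts(past_choices):
    """Count how often each requirement was relaxed by users in past sessions."""
    return Counter(req for chosen in past_choices for req in chosen)

def rank_explanations(candidates, counts):
    """Order non-empty candidates by the average past relaxation count of their requirements."""
    def score(candidate):
        return sum(counts[r] for r in candidate) / len(candidate)
    return sorted(candidates, key=score, reverse=True)

# Hypothetical log: sets of requirements that users agreed to relax in earlier sessions.
past = [{"price"}, {"price"}, {"color"}, {"price", "brand"}]
counts = learn_relaxation_counts(past)

# Explanation candidates for a new "no solution could be found" situation.
candidates = [frozenset({"color"}), frozenset({"price"}), frozenset({"brand", "color"})]
for candidate in rank_explanations(candidates, counts):
    print(sorted(candidate))
```

A more realistic setting would replace the counts with a model that also takes the current user and session context into account; the ranking step itself would stay the same.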

6 Conclusions

In this article, we have analyzed and summarized research contributions related to the integration of machine learning with constraint solving including (1) the basic approaches of constraint satisfaction, SAT solving, and answer set programming, and (2) applications thereof including configuration, diagnosis, and constraint-based recommendation. In this context, we have summarized research contributions that solve basic tasks such as algorithm selection, parametrization, selection and parametrization of search heuristics, performance prediction, and the prediction of satisfiability. In the majority of cases, supervised machine learning approaches have been applied for problem solving, whereas unsupervised and reinforcement learning approaches are in the minority and are often applied in combination with corresponding supervised approaches (Bengio et al., 2021). The integration of machine learning with constraint solving can be regarded as a thriving research area that requires research focusing on the combination of symbolic and sub-symbolic AI. In order to stimulate further related research, we have discussed relevant research issues comprising the topics of algorithm selection, evaluation metrics for constraint-based systems, learning configuration (solution) spaces, machine learning aspects in the testing and debugging of constraint-based systems, constraint-aware machine learning, and explanations.

Notes

See, for example, www.minizinc.org.

References

Acher, M., Temple, P., Jézéquel, J.-M., Galindo, J., Martinez, J., & Ziadi, T. (2018). VaryLATEX: learning paper variants that meet constraints. In 12th International Workshop on Variability Modelling of Software-Intensive Systems (pp. 83–88).

Adorf, H. -M., & Johnston, M. (1990). A discrete stochastic neural network algorithm for constraint satisfaction problems. In International Joint Conference on Neural Networks (pp. 917–924).

Alves Pereira, J., Martin, H., Acher, M., Jézéquel, J.-M., Botterweck, G., & Ventresque, A. (2019). Learning software configuration spaces: a systematic literature review. Research report, 1–44, Univ Rennes, Inria, CNRS, IRISA.

Ansótegui, C., Sellmann, M., & Tierney, K. (2009). A gender-based genetic algorithm for the automatic configuration of algorithms. In I.P. Gent (Ed.) Principles and Practice of Constraint Programming - CP 2009 (pp. 142–157). Berlin: Springer.

Apt, K. (2003). Principles of constraint programming. Cambridge: Cambridge University Press.

Arbelaez, A., Hamadi, Y., & Sebag, M. (2010). Continuous search in constraint programming. Autonomous Search, 1, 53–60.

Beck, J.C., Prosser, P., & Wallace, R.J. (2004). Variable Ordering Heuristics Show Promise. In M. Wallace (Ed.) Principles and Practice of Constraint Programming – CP 2004 (pp. 711–715). Berlin: Springer.

Bello, I., Pham, H., Le, Q.V., Norouzi, M., & Bengio, S. (2017). Neural combinatorial optimization with reinforcement learning.

Bengio, Y., Lodi, A., & Prouvost, A. (2021). Machine learning for combinatorial optimization: a methodological tour d’horizon. European Journal of Operational Research, 290(2), 405–421.

Bessiere, C., De Raedt, L., Kotthoff, L., Nijssen, S., O’Sullivan, B., & Pedreschi, D. (Eds.) (2016). Data Mining and Constraint Programming: Foundations of a Cross-Disciplinary Approach, volume 10101 of Lecture Notes in Artificial Intelligence. Berlin: Springer.

Bischl, B., Kerschke, P., Kotthoff, L., Lindauer, M., Malitsky, Y., Frechette, A., Hoos, H., Hutter, F., Leyton-Brown, K., Tierney, K., & Vanschoren, J. (2015). ASLib: A Benchmark Library for Algorithm Selection. Artificial Intelligence, 237, 41–58.

Bishop, C.M. (2006). Pattern recognition and machine learning (information science and statistics). Berlin: Springer.

Bonfietti, A., Lombardi, M., & Milano, M. (2015). Embedding decision trees and random forests in constraint programming. In L. Michel (Ed.) Integration of AI and OR Techniques in Constraint Programming (pp. 74–90). Cham: Springer International Publishing.

Brailsford, S., Potts, C., & Smith, B. (1999). Constraint satisfaction problems: Algorithms and applications. European Journal of Operational Research, 119(3), 557–581.

Brewka, G., Eiter, T., & Truszczynski, M. (2011). Answer set programming at a glance. Communications of the ACM, 54, 92–103.

Bünz, B., & Lamm, M. (2017). Graph neural networks and boolean satisfiability. arXiv:1702.03592.

Burkardt, J. (2009). Virginia Tech, Advanced Research Computing, Interdisciplinary Center for Applied Mathematics.

Burke, R. (2000). Knowledge-based recommender systems. In Encyclopedia of library and information systems (p. 2000). Marcel Dekker.

Cameron, C., Chen, R., Hartford, J., & Leyton-Brown, K. (2020). Predicting propositional satisfiability via end-to-end learning. AAAI Conference on Artificial Intelligence, 34, 3324–3331.

Cappart, Q., Moisan, T., Rousseau, L.-M., Prémont-Schwarz, I, & Cire, A. (2020). Combining reinforcement learning and constraint programming for combinatorial optimization.

Carbonell, J.G., Michalski, R.S., & Mitchell, T.M. (1983). Machine learning: a historical and methodological analysis. AI Magazine, 4(3), 69.

Da Col, G., & Teppan, E.C. (2017). Learning constraint satisfaction heuristics for configuration problems. In 19th International Configuration Workshop (pp. 8–11).

Darwiche, A., & Hirth, A. (2020). On the reasons behind decisions. In 24th European Conference on Artificial Intelligence (ECAI 2020) (pp. 712–720).

Epstein, S., & Freuder, E. (2001). Collaborative learning for constraint solving. In Intl. Conference on principles and practice of constraint programming, volume 2239 of LNCS (pp. 46–60).

Erdeniz, S.P., & Felfernig, A. (2018). Cluster and learn: Cluster-specific heuristics for graph coloring. In Intl. Conference on the practice and theory of automated timetabling (pp. 401–404). Elsevier.

Erdeniz, S.P., Felfernig, A., & Atas, M. (2018). Learndiag: A Direct Diagnosis Algorithm Based On Learned Heuristics. In Joint German/Austrian Conference on Artificial Intelligence (Künstliche Intelligenz) (pp. 190–197). Springer.

Erdeniz, S.P., Felfernig, A., & Atas, M. (2019). Matrix factorization based heuristics for direct diagnosis. In Intl. Conference on industrial, engineering and other applications of applied intelligent systems (IEA/AIE’19). Springer.

Erdeniz, S.P., Felfernig, A., Atas, M., Tran, T.N.T., Jeran, M., & Stettinger, M. (2017). Cluster-Specific Heuristics for constraint solving. In Intl. Conference on industrial, engineering and other applications of applied intelligent systems (pp. 21–30). Springer.

Erdeniz, S.P., Felfernig, A., Samer, R., & Atas, M. (2019). Matrix Factorization based Heuristics for Constraint-based Recommenders. In 34th ACM/SIGAPP Symposium on Applied Computing (SAC’19) (pp. 1655–1662). ACM.

Falkner, A., Felfernig, A., & Haag, A. (2011). Recommendation technologies for configurable products. AI Magazine, 32(3), 99–108.

Felfernig, A. (2004). Effort estimation for knowledge-based configuration systems. In 16th Intl. Conference on Software Engineering and Knowledge Engineering (SEKE2004) (pp. 148–155). Banff, Canada.

Felfernig, A., & Burke, S. R. (2008). Constraint-based recommender systems: technologies and research issues. In 10th Intl. Conference on Electronic commerce, ICEC ’08, New York, NY USA. Association for Computing Machinery.

Felfernig, A., Friedrich, G., Jannach, D., & Stumptner, M. (2004). Consistency-based diagnosis of configuration knowledge bases. Artificial Intelligence, 152(2), 213–234.

Felfernig, A., Friedrich, G., Jannach, D., & Zanker, M. (2006). An integrated environment for the development of knowledge-based recommender applications. International Journal of Electronic Commerce (IJEC), 11(2), 11–34.

Felfernig, A., Friedrich, G., Schubert, M., Mandl, M., Mairitsch, M., & Teppan, E. (2009). Plausible repairs for inconsistent requirements. In 21st International Joint Conference on Artificial Intelligence (IJCAI’09) (pp. 791–796). Pasadena, California.

Felfernig, A., Hotz, L., Bagley, C., & Tiihonen, J. (2014). Knowledge-Based Configuration: From Research to Business Cases. Burlington: Morgan Kaufmann.

Felfernig, A., Le, V., Popescu, A., Uta, M., Tran, T., & Atas, M. (2021). An overview of recommender systems and machine learning in feature modeling and configuration, (pp. 1–8). Austria: ACM.

Felfernig, A., Schubert, M., & Zehentner, C. (2012). An efficient diagnosis algorithm for inconsistent constraint sets. Artificial Intelligence for Engineering Design, Analysis and Manufacturing (AIEDAM), 26(1), 53–62.

Felfernig, A., Walter, R., Galindo, J.A., Benavides, D., Erdeniz, S.P., Atas, M., & Reiterer, S. (2018). Anytime diagnosis for reconfiguration. Journal of Intelligent Information Systems, 51(1), 161–182.

Fleischanderl, G., Friedrich, G., Haselböck, A., Schreiner, H., & Stumptner, M. (1998). Configuring large systems using generative constraint satisfaction. IEEE Intelligent Systems, 13(4), 59–68.

Freuder, E. (1997). In pursuit of the holy grail. Constraints, 2, 57–61.

Fritsch, R., & Fritsch, G. (1998). Four-Color Theorem. Berlin: Springer.

Galassi, A., Lombardi, M., Mello, P., & Milano, M. (2018). Model Agnostic Solution of CSPs via Deep Learning: A Preliminary Study. In W.-J. van Hoeve (Ed.) Integration of constraint programming, artificial intelligence, and operations research (pp. 254–262). Springer.

Gebser, M., Kaminski, R., Kaufmann, B., Schaub, T., Schneider, M.T., & Ziller, S. (2011). A portfolio solver for answer set programming: Preliminary report. In J.P. Delgrande W. Faber (Eds.) Logic Programming and Nonmonotonic Reasoning (pp. 352–357). Berlin: Springer.

Gent, I., Jefferson, C., Kotthoff, L., Miguel, I., Moore, N., Nightingale, P., & Petrie, K. (2010). Learning when to use lazy learning in constraint solving. Frontiers in Artificial Intelligence and Applications, 215, 873–878.

Gent, I.P., Kotthoff, L., Miguel, I., & Nightingale, P. (2010). Machine learning for constraint solver design – a case study for the alldifferent constraint. In 3rd Workshop on Techniques for Implementing Constraint Programming Systems (TRICS) (pp. 13–25).

Grasch, P., Felfernig, A., & Reinfrank, F. (2013). Recomment: Towards Critiquing-based Recommendation with Speech Interaction. In 7th ACM conference on Recommender systems (RecSys ’13) (pp. 157–164). China: ACM.

Gu, J., Purdom, P.W., Franco, J., & Wah, B.W. (1996). Algorithms for the satisfiability (SAT) problem: a survey. In DIMACS Series in discrete mathematics and theoretical computer science (pp. 19–152). American Mathematical Society.

Guerri, A., & Milano, M. (2004). Learning techniques for automatic algorithm portfolio selection. In 16th European Conference on Artificial Intelligence, ECAI’04 (pp. 475–479). NLD: IOS Press.

Gunawardana, A., & Shani, G. (2015). Evaluating Recommender Systems. In F. Ricci, L. Rokach, & B. Shapira (Eds.) Recommender Systems Handbook (pp. 265–308). Springer.

Haim, S., & Walsh, T. (2009). Restart strategy selection using machine learning techniques. In O. Kullmann (Ed.) Theory and Applications of Satisfiability Testing - SAT 2009 (pp. 312–325). Berlin: Springer.

Hutter, F., Hamadi, Y., Hoos, H., & Leyton-Brown, K. (2006). Performance prediction and automated tuning of randomized and parametric algorithms. In Intl. Conference on principles and practice of constraint programming (CP 2006), (Vol. 4204 pp. 213–228).

Hutter, F., Xu, L., Hoos, H.H., & Leyton-Brown, K. (2014). Algorithm runtime prediction: Methods & evaluation. Artificial Intelligence, 206, 79–111.

Jannach, D. (2013). Toward Automatically Learned Search Heuristics for CSP-encoded Configuration Problems - Results from an Initial Experimental Analysis. In 15th international configuration workshop (pp. 9–13).

Johnston, M., & Minton, S. (1994). Analyzing a heuristic strategy for constraint satisfaction and scheduling. Intelligent Scheduling, 257–289.

Junker, U. (2004). QuickXPlain: preferred explanations and relaxations for over-constrained problems. In AAAI 2004 (pp. 167–172). AAAI.

Konstan, J., Miller, B., Herlocker, J., Gordon, L., & Riedl, J. (1997). GroupLens: Applying Collaborative Filtering to Usenet News. Communications of the ACM, 40(3), 77–87.

Koren, Y., Bell, R., & Volinsky, C. (2009). Matrix factorization techniques for recommender systems. IEEE Computer, 42(8), 30–37.